
# Precipitation exercises

***

## <font color=steelblue>Exercise 3 - Double-mass curve</font>

<font color=steelblue>Perform a double-mass curve analysis with the data in sheet *Exercise_003* from file *RainfallData.xlsx*.</font>

```

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

sns.set()

sns.set_context('notebook')

from scipy.optimize import curve_fit

```

### Import data

```

# Import the data

data3 = pd.read_excel('../data/RainfallData.xlsx', sheet_name='Exercise_003',

skiprows=0, index_col=0)

# name of the gages

gages = data3.columns

# calculate the mean across stations

data3['AVG'] = data3.mean(axis=1)

data3.head()

```

### Double-mass curves

We are going to plot the double-mass curves of all the stations at once, so we can start identifying the stations that may have problems.

To draw several plots in the same figure, we will use the function `subplots` from `Matplotlib`.

```

fig, axes = plt.subplots(nrows=2, ncols=3, figsize=(12, 8), sharex=True, sharey=True)
for (gage, ax) in zip(gages, axes.flatten()):
    # line of slope 1
    ax.plot((0, 800), (0, 800), ':k', label='1:1 line')
    # double-mass curve
    ax.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='data')
    ax.set_title('gage ' + gage)
    ax.legend()
axes[1, 2].axis('off');

```

From the plot, the series in gage C seems to be correct, but there might be problems in the rest of the gages.

### Identify errors

If a gage is consistent with the regional mean, its double-mass curve should be a straight line through the origin, i.e., a linear regression with no intercept. We will create a function representing this linear regression, which we will use in the following steps.

```

def linear_reg(x, m):
    """Linear regression with no intercept

    y = m * x

    Input:
    ------
    x: float. Independent value
    m: float. Slope of the linear regression

    Output:
    -------
    y: float. Regressed value"""

    y = m * x
    return y

```
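Because the model has a single parameter, its least-squares slope also has a closed form, $m = \sum x_i y_i / \sum x_i^2$. This should match what `curve_fit` returns for `linear_reg`, up to numerical tolerance. The minimal NumPy sketch below uses hypothetical annual totals (not data from *RainfallData.xlsx*). The helper `slope_through_origin` is an illustration and is not part of the original notebook.

```python
import numpy as np


def slope_through_origin(x, y):
    """Least-squares slope m of y = m * x (regression with no intercept).

    Setting d/dm sum((y - m * x)**2) = 0 gives m = sum(x * y) / sum(x**2).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.sum(x * y) / np.sum(x * x)


# Hypothetical annual totals: the regional mean and a gage that always
# records 10 % more than the mean
avg = np.array([30.0, 42.0, 35.0, 50.0, 28.0])
gage = 1.1 * avg

# Double-mass curve: cumulative gage totals against cumulative mean totals
m = slope_through_origin(np.cumsum(avg), np.cumsum(gage))
print(round(m, 3))  # 1.1
```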

#### Gage A

To identify errors, we fit the linear regression with no intercept to the series both before and after a specific year. If the difference between the two fitted slopes exceeds an error threshold, we identify that year as a break point in the double-mass curve. We repeat this process for every year (except the first and last three), using a given error threshold (or tolerance), to find all the possible break points in the series.

```

# define the gage
gage = 'A'
# define the error threshold
error = .2

for year in data3.index[3:-3]:
    # fit the regression up to (and including) `year`
    m1 = curve_fit(linear_reg, data3.loc[:year, 'AVG'].cumsum(), data3.loc[:year, gage].cumsum())[0][0]
    # fit the regression from `year` onwards
    m2 = curve_fit(linear_reg, data3.loc[year:, 'AVG'].cumsum(), data3.loc[year:, gage].cumsum())[0][0]
    # (alternative criterion based on the ratio of slopes:
    #  factor = m1 / m2; flag if factor < 1 - error or factor > 1 + error)
    if abs(m1 - m2) > error:
        print('{0} m1 = {1:.3f} m2 = {2:.3f}'.format(year, m1, m2))

```

There are no errors in the series of gage A.
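The break-point search can also be reproduced without the spreadsheet. The sketch below is a NumPy-only version of the loop above, run on a synthetic record that has a single, known break. The helpers `find_breaks` and `slope_through_origin` and the data are hypothetical, and the sketch uses the closed-form slope instead of `curve_fit`.

```python
import numpy as np


def slope_through_origin(x, y):
    # closed-form least-squares slope of y = m * x
    return np.sum(x * y) / np.sum(x * x)


def find_breaks(gage, avg, error=0.1, margin=3):
    """Return (k, m1, m2) for every position k where |m1 - m2| > error.

    Same idea as the loop above: m1 is fitted on positions 0..k (inclusive),
    m2 on positions k..end, each with its own cumulative sums, and the first
    and last `margin` positions are skipped.
    """
    hits = []
    for k in range(margin, len(gage) - margin):
        m1 = slope_through_origin(np.cumsum(avg[:k + 1]), np.cumsum(gage[:k + 1]))
        m2 = slope_through_origin(np.cumsum(avg[k:]), np.cumsum(gage[k:]))
        if abs(m1 - m2) > error:
            hits.append((k, m1, m2))
    return hits


# Synthetic 20-year record: the gage matches the mean for 10 years and then
# reads 50 % high (e.g. after a hypothetical relocation of the gage)
avg = np.full(20, 10.0)
gage = np.where(np.arange(20) < 10, avg, 1.5 * avg)

hits = find_breaks(gage, avg)
for k, m1, m2 in hits:
    print('{0:2d} m1 = {1:.3f} m2 = {2:.3f}'.format(k, m1, m2))

# the largest slope difference points at the first year of the new regime
best = max(hits, key=lambda h: abs(h[1] - h[2]))[0]
print('largest difference at position', best)  # 10
```

Many consecutive positions exceed the threshold, not just the true break, because each fit mixes years from both regimes. For this reason the flagged years are only a starting point, and the double-mass curve still has to be inspected, as is done below for gages B, D and E.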

#### All gages

By simply changing the name of the gage in the previous cell, we could repeat the process. Instead, let's create a function and then run it in a loop over all the gages.

```

def identify_errors(dataGage, dataAVG, error=.1):
    """Identify possible break points in the double-mass curve

    Parameters:
    -----------
    dataGage: series. Annual series for the gage to be checked
    dataAVG: series. Annual series of the mean across gages in a region
    error: float. Error threshold

    Output:
    -------
    It will print the years with a difference in slopes higher than 'error', along with the values of the slopes.
    """

    for year in dataGage.index[3:-3]:
        # fit the regression up to (and including) `year`
        m1 = curve_fit(linear_reg, dataAVG.loc[:year].cumsum(), dataGage.loc[:year].cumsum())[0][0]
        # fit the regression from `year` onwards
        m2 = curve_fit(linear_reg, dataAVG.loc[year:].cumsum(), dataGage.loc[year:].cumsum())[0][0]
        if abs(m1 - m2) > error:
            print('{0} m1 = {1:.3f} m2 = {2:.3f}'.format(year, m1, m2))

for gage in gages:
    print('Gage ', gage)
    # the gage series goes first, the regional mean second
    identify_errors(data3[gage], data3['AVG'], error=.1)
    print()

```

We have identified possible errors in gages B, D and E. This automatic search only serves to discard the stations that are correct. Now we have to analyse these three stations one by one, because they might have errors.

### Correct errors

#### Gage B

##### Analyse the series

We have identified anomalies in the years between 1929 and 1939, which probably means that there are two break points in the double-mass curve. Let's look at the double-mass curve and at the specific points representing those two years.

```

# set gage and year corresponding to the break in the line

gage = 'B'

breaks = [1929, 1939]

# visualize

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum().loc[breaks], data3[gage].cumsum().loc[breaks], '.', label='breaks')

plt.legend();

```

At a glance, we can identify three periods. There is a period at the beginning of the series with a higher than usual slope; this period seems to extend until 1930 (not 1929, as we had identified). There is a period at the end of the series with a lower than usual slope; this period seems to start in 1938 (not 1939, as we had identified).

We will reset the break points and calculate the slope of the regression to check it.

```

# reset the break points

breaks = [1930, 1938]

# fit the regression until the first break

m1 = curve_fit(linear_reg, data3.loc[:breaks[0], 'AVG'].cumsum(), data3.loc[:breaks[0], gage].cumsum())[0][0]

# fit the regression from the first to the second break

m2 = curve_fit(linear_reg, data3.loc[breaks[0]:breaks[1], 'AVG'].cumsum(), data3.loc[breaks[0]:breaks[1], gage].cumsum())[0][0]

# fit the regression from the second break on

m3 = curve_fit(linear_reg, data3.loc[breaks[1]:, 'AVG'].cumsum(), data3.loc[breaks[1]:, gage].cumsum())[0][0]

print('m1 = {0:.3f} m2 = {1:.3f} m3 = {2:.3f}'.format(m1, m2, m3))

```

As expected, there are three different slopes in the series. We will assume that the correct data is the data from 1930 to 1937, because it is the longest of the three periods and its slope is the closest to 1. Therefore, we have to calculate correction factors for two periods, before 1930 and after 1937, and use them to correct the series.

##### Correct the series

```

# correction factors

factor12 = m2 / m1

factor23 = m2 / m3

print(factor12, factor23)

# copy of the original series

data3['B_'] = data3[gage].copy()

# correct period before the first break

data3.loc[:breaks[0], 'B_'] *= factor12

# correct period after the second break

data3.loc[breaks[1]:, 'B_'] *= factor23

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum(), data3['B_'].cumsum(), '.-', label='corrected')

plt.legend();

```
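To see why multiplying a period by a ratio of slopes works, consider a synthetic series (hypothetical numbers) in which the gage reads 30 % high for the first five years and 10 % low afterwards. Scaling the first period by $m_2/m_1$, which is the same kind of factor as `factor12` in the correction above, puts that period on the slope of the reference period. The whole double-mass curve then becomes a single straight line. Below is a minimal NumPy sketch, with the helper `slope_through_origin` defined here for illustration.

```python
import numpy as np


def slope_through_origin(x, y):
    # closed-form least-squares slope of y = m * x
    return np.sum(x * y) / np.sum(x * x)


avg = np.array([31.0, 25.0, 40.0, 36.0, 29.0, 33.0, 45.0, 27.0, 38.0, 30.0])
gage = np.concatenate([1.3 * avg[:5], 0.9 * avg[5:]])

# slopes of the two periods (each fitted on its own cumulative sums)
m1 = slope_through_origin(np.cumsum(avg[:5]), np.cumsum(gage[:5]))
m2 = slope_through_origin(np.cumsum(avg[5:]), np.cumsum(gage[5:]))

# treat the second period as correct and rescale the first one
factor = m2 / m1
corrected = gage.copy()
corrected[:5] *= factor

m_all = slope_through_origin(np.cumsum(avg), np.cumsum(corrected))
print(round(m1, 3), round(m2, 3), round(factor, 3), round(m_all, 3))  # 1.3 0.9 0.692 0.9
```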

Now we can check the corrected series for errors again.

```

# check again for errors

identify_errors(data3.B_, data3.AVG)

```

There are no more errors, so we are done correcting the data from gage B.

#### Gage D

##### Analyse the series

We found a break point in year 1930.

```

# set gage and year corresponding to the break in the line

gage = 'D'

breaks = [1930]

# visualize

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum().loc[breaks], data3[gage].cumsum().loc[breaks], '.', label='breaks')

plt.legend();

# fit the regression until the break

m1 = curve_fit(linear_reg, data3.loc[:breaks[0], 'AVG'].cumsum(), data3.loc[:breaks[0], gage].cumsum())[0][0]

# fit the regression after the break

m2 = curve_fit(linear_reg, data3.loc[breaks[0]:, 'AVG'].cumsum(), data3.loc[breaks[0]:, gage].cumsum())[0][0]

print('m1 = {0:.3f} m2 = {1:.3f}'.format(m1, m2))

```

This case is simpler than the previous one, and we can easily spot the break point in 1930. The period before 1930 has a slope closer to 1, so we will assume that this is the correct part of the series.

##### Correct the series

```

# correction factor

factor = m1 / m2

print(factor)

# copy of the original series

data3[gage + '_'] = data3[gage].copy()

# correct period after the break

data3.loc[breaks[0]:, gage + '_'] *= factor

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum(), data3[gage + '_'].cumsum(), '.-', label='corrected')

plt.legend();

# check again for errors

identify_errors(data3[gage + '_'], data3.AVG, error=.1)

```

We identify two more possible break points in the corrected series. Both might indicate that the last section of the series has a higher slope than the initial one. Let's correct the series from 1935 on; this may also solve the second break point in 1937.

```

gage = 'D_'

breaks = [1935]

# fit the regression until the break

m1 = curve_fit(linear_reg, data3.loc[:breaks[0], 'AVG'].cumsum(), data3.loc[:breaks[0], gage].cumsum())[0][0]

# fit the regression after the break

m2 = curve_fit(linear_reg, data3.loc[breaks[0]:, 'AVG'].cumsum(), data3.loc[breaks[0]:, gage].cumsum())[0][0]

print('m1 = {0:.3f} m2 = {1:.3f}'.format(m1, m2))

# correction factor

factor = m1 / m2

print(factor)

# copy of the series corrected in the previous step (D_), stored as D__

data3[gage + '_'] = data3[gage].copy()

# correct period after the break

data3.loc[breaks[0]:, gage + '_'] *= factor

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum(), data3[gage + '_'].cumsum(), '.-', label='corrected')

plt.legend();

# check again for errors

identify_errors(data3[gage + '_'], data3.AVG, error=.1)

```

#### Gage E

##### Analyse the series

The series in gage E behaves similarly to series B. There is an anomaly in the series between 1929 and 1938, indicating that there might be two break points in the double-mass curve.

```

# set gage and year corresponding to the break in the line

gage = 'E'

breaks = [1929, 1938]

# visualize

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum().loc[breaks], data3[gage].cumsum().loc[breaks], '.', label='breaks')

plt.legend();

# fit the regression until the first break

m1 = curve_fit(linear_reg, data3.loc[:breaks[0], 'AVG'].cumsum(), data3.loc[:breaks[0], gage].cumsum())[0][0]

# fit the regression from the first to the second break

m2 = curve_fit(linear_reg, data3.loc[breaks[0]:breaks[1], 'AVG'].cumsum(), data3.loc[breaks[0]:breaks[1], gage].cumsum())[0][0]

# fit the regression from the second break on

m3 = curve_fit(linear_reg, data3.loc[breaks[1]:, 'AVG'].cumsum(), data3.loc[breaks[1]:, gage].cumsum())[0][0]

print('m1 = {0:.3f} m2 = {1:.3f} m3 = {2:.3f}'.format(m1, m2, m3))

```

There seems to be only one break in the line, between the first and the second period. The slopes in the second and third periods are so close that, most probably, there is no change from 1938 on. Apart from that, the break in the line seems to be stronger in 1930 than in 1929, so we will change the breaks to include only 1930. We will assume that the period to be corrected is the one before 1930.

```

breaks = [1930]

# fit the regression until the first break

m1 = curve_fit(linear_reg, data3.loc[:breaks[0], 'AVG'].cumsum(), data3.loc[:breaks[0], gage].cumsum())[0][0]

# fit the regression from the first break

m2 = curve_fit(linear_reg, data3.loc[breaks[0]:, 'AVG'].cumsum(), data3.loc[breaks[0]:, gage].cumsum())[0][0]

m1, m2

```

##### Correct the series

```

# correction factor

factor = m2 / m1

print(factor)

# copy of the original series

data3['E_'] = data3[gage].copy()

# correct period before the first break

data3.loc[:breaks[0], 'E_'] *= factor

plt.figure(figsize=(5, 5))

plt.axis('equal')

plt.plot((0, 800), (0, 800), '--k')

plt.plot(data3.AVG.cumsum(), data3[gage].cumsum(), '.-', label='original')

plt.plot(data3.AVG.cumsum(), data3[gage + '_'].cumsum(), '.-', label='corrected')

plt.legend();

# check again for errors

identify_errors(data3[gage + '_'], data3.AVG)

```

We don't identify any more errors, so the assumption that the slopes of the second and third periods were close enough was correct.

#### Redraw the double-mass plot

```

# recalculate the average
gages = ['A', 'B_', 'C', 'D__', 'E_']
data3['AVG_'] = data3[gages].mean(axis=1)

fig, axes = plt.subplots(nrows=2, ncols=3, figsize=(12, 8), sharex=True, sharey=True)
for (gage, ax) in zip(gages, axes.flatten()):
    ax.plot((0, 800), (0, 800), ':k')
    # double-mass curve
    ax.plot(data3.AVG_.cumsum(), data3[gage].cumsum(), '.-', label='corrected')
    ax.set_title('gage ' + gage)
axes[1, 2].axis('off');

# save figure
plt.savefig('../output/Ex3_double-mass curve.png', dpi=300)

# export corrected series
data3_ = data3.loc[:, gages]
data3_.columns = ['A', 'B', 'C', 'D', 'E']
data3_.to_csv('../output/Ex3_corrected series.csv', float_format='%.2f')

```


Originally taken from https://www.easy-tensorflow.com and adapted for the purpose of the course

# Imports

```

import tensorflow as tf  # the code below uses the TensorFlow 1.x API (tf.placeholder, tf.InteractiveSession)

import numpy as np

import matplotlib.pyplot as plt

```

# Load the MNIST dataset

## Data dimensions

```

from tensorflow.examples.tutorials.mnist import input_data

img_h = img_w = 28 # MNIST images are 28x28

img_size_flat = img_h * img_w # 28x28=784, the total number of pixels

n_classes = 10 # Number of classes, one class per digit

n_channels = 1

```

## Helper functions to load the MNIST data

```

def load_data(mode='train'):
    """
    Function to (download and) load the MNIST data
    :param mode: train or test
    :return: images and the corresponding labels
    """
    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
    if mode == 'train':
        x_train, y_train, x_valid, y_valid = mnist.train.images, mnist.train.labels, \
                                             mnist.validation.images, mnist.validation.labels
        x_train, _ = reformat(x_train, y_train)
        x_valid, _ = reformat(x_valid, y_valid)
        return x_train, y_train, x_valid, y_valid
    elif mode == 'test':
        x_test, y_test = mnist.test.images, mnist.test.labels
        x_test, _ = reformat(x_test, y_test)
        return x_test, y_test


def reformat(x, y):
    """
    Reformats the data to the format acceptable for convolutional layers
    :param x: input array
    :param y: corresponding labels (already one-hot encoded)
    :return: reshaped input and labels
    """
    img_size, num_ch = int(np.sqrt(x.shape[-1])), 1
    dataset = x.reshape((-1, img_size, img_size, num_ch)).astype(np.float32)
    # the labels come one-hot encoded from read_data_sets(one_hot=True), so only cast them
    labels = y.astype(np.float32)
    return dataset, labels


def randomize(x, y):
    """ Randomizes the order of data samples and their corresponding labels"""
    permutation = np.random.permutation(y.shape[0])
    shuffled_x = x[permutation, :, :, :]
    shuffled_y = y[permutation]
    return shuffled_x, shuffled_y


def get_next_batch(x, y, start, end):
    x_batch = x[start:end]
    y_batch = y[start:end]
    return x_batch, y_batch

```
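`randomize` applies one permutation to both arrays, so every image keeps its label. The training loop below takes `int(len(y_train) / batch_size)` consecutive slices per epoch, so a final partial batch, if there is one, is skipped in that epoch. The small NumPy check below demonstrates both points on toy arrays. The sizes and the seeded `np.random.default_rng` generator are assumptions for illustration; the notebook itself uses `np.random.permutation`.

```python
import numpy as np


def randomize(x, y, rng):
    """Shuffle samples and labels with the same permutation."""
    permutation = rng.permutation(y.shape[0])
    return x[permutation], y[permutation]


rng = np.random.default_rng(0)

# toy data: sample i is a 1x1x1 "image" holding the value i, labelled as class i
x = np.arange(10, dtype=np.float32).reshape(10, 1, 1, 1)
y = np.eye(10, dtype=np.float32)  # one-hot labels

xs, ys = randomize(x, y, rng)
# each image still sits next to its own label after shuffling
aligned = np.all(xs.reshape(-1) == np.argmax(ys, axis=1))

batch_size = 4
num_iter = int(len(ys) / batch_size)  # 2: the last 2 samples are not used this epoch
batches = [(xs[i * batch_size:(i + 1) * batch_size], ys[i * batch_size:(i + 1) * batch_size])
           for i in range(num_iter)]
print(aligned, num_iter, [b[0].shape[0] for b in batches])  # True 2 [4, 4]
```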

## Load the data and display the sizes

Now we can use the helper function defined above in `train` mode, which loads the training and validation images and their corresponding labels. We'll also display their sizes:

```

x_train, y_train, x_valid, y_valid = load_data(mode='train')

print("Size of:")

print("- Training-set:\t\t{}".format(len(y_train)))

print("- Validation-set:\t{}".format(len(y_valid)))

```

# Hyperparameters

```

logs_path = "./logs" # path to the folder that we want to save the logs for Tensorboard

lr = 0.001 # The optimization initial learning rate

epochs = 10 # Total number of training epochs

batch_size = 100 # Training batch size

display_freq = 100 # Frequency of displaying the training results

```

# Network configuration

```

# 1st Convolutional Layer

filter_size1 = 5 # Convolution filters are 5 x 5 pixels.

num_filters1 = 16 # There are 16 of these filters.

stride1 = 1 # The stride of the sliding window

# 2nd Convolutional Layer

filter_size2 = 5 # Convolution filters are 5 x 5 pixels.

num_filters2 = 32 # There are 32 of these filters.

stride2 = 1 # The stride of the sliding window

# Fully-connected layer.

h1 = 128 # Number of neurons in fully-connected layer.

```

# Create network helper functions

## Helper functions for creating new variables

```

# weight and bias wrappers
def weight_variable(shape):
    """
    Create a weight variable with appropriate initialization
    :param shape: weight shape
    :return: initialized weight variable
    """
    initer = tf.truncated_normal_initializer(stddev=0.01)
    # the fixed name 'W' becomes unique through the enclosing tf.variable_scope
    return tf.get_variable('W',
                           dtype=tf.float32,
                           shape=shape,
                           initializer=initer)


def bias_variable(shape):
    """
    Create a bias variable with appropriate initialization
    :param shape: bias variable shape
    :return: initialized bias variable
    """
    initial = tf.constant(0., shape=shape, dtype=tf.float32)
    return tf.get_variable('b',
                           dtype=tf.float32,
                           initializer=initial)

```

## Helper-function for creating a new Convolutional Layer

```

def conv_layer(x, filter_size, num_filters, stride, name):
    """
    Create a 2D convolution layer
    :param x: input from previous layer
    :param filter_size: size of each filter
    :param num_filters: number of filters (or output feature maps)
    :param stride: filter stride
    :param name: layer name
    :return: The output array
    """
    with tf.variable_scope(name):
        num_in_channel = x.get_shape().as_list()[-1]
        shape = [filter_size, filter_size, num_in_channel, num_filters]
        W = weight_variable(shape=shape)
        tf.summary.histogram('weight', W)
        b = bias_variable(shape=[num_filters])
        tf.summary.histogram('bias', b)
        layer = tf.nn.conv2d(x, W,
                             strides=[1, stride, stride, 1],
                             padding="SAME")
        layer += b
        return tf.nn.relu(layer)

```

## Helper-function for creating a new Max-pooling Layer

```

def max_pool(x, ksize, stride, name):
    """
    Create a max pooling layer
    :param x: input to max-pooling layer
    :param ksize: size of the max-pooling filter
    :param stride: stride of the max-pooling filter
    :param name: layer name
    :return: The output array
    """
    return tf.nn.max_pool(x,
                          ksize=[1, ksize, ksize, 1],
                          strides=[1, stride, stride, 1],
                          padding="SAME",
                          name=name)

```

## Helper-function for flattening a layer

```

def flatten_layer(layer):
    """
    Flattens the output of the convolutional layer to be fed into fully-connected layer
    :param layer: input array
    :return: flattened array
    """
    with tf.variable_scope('Flatten_layer'):
        layer_shape = layer.get_shape()
        num_features = layer_shape[1:4].num_elements()
        layer_flat = tf.reshape(layer, [-1, num_features])
    return layer_flat

```

## Helper-function for creating a new fully-connected Layer

```

def fc_layer(x, num_units, name, use_relu=True):
    """
    Create a fully-connected layer
    :param x: input from previous layer
    :param num_units: number of hidden units in the fully-connected layer
    :param name: layer name
    :param use_relu: boolean to add ReLU non-linearity (or not)
    :return: The output array
    """
    with tf.variable_scope(name):
        in_dim = x.get_shape()[1]
        W = weight_variable(shape=[in_dim, num_units])
        tf.summary.histogram('weight', W)
        b = bias_variable(shape=[num_units])
        tf.summary.histogram('bias', b)
        layer = tf.matmul(x, W)
        layer += b
        if use_relu:
            layer = tf.nn.relu(layer)
        return layer

```

# Network graph

## Placeholders for the inputs (x) and corresponding labels (y)

```

with tf.name_scope('Input'):
    x = tf.placeholder(tf.float32, shape=[None, img_h, img_w, n_channels], name='X')
    y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')

```

## Create the network layers

```

conv1 = conv_layer(x, filter_size1, num_filters1, stride1, name='conv1')

pool1 = max_pool(conv1, ksize=2, stride=2, name='pool1')

conv2 = conv_layer(pool1, filter_size2, num_filters2, stride2, name='conv2')

pool2 = max_pool(conv2, ksize=2, stride=2, name='pool2')

layer_flat = flatten_layer(pool2)

fc1 = fc_layer(layer_flat, h1, 'FC1', use_relu=True)

output_logits = fc_layer(fc1, n_classes, 'OUT', use_relu=False)

```
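With `padding="SAME"`, the output size of TensorFlow's convolution and pooling operations is `ceil(input_size / stride)`. This means the tensor shapes and the number of trainable parameters of this network can be checked by hand. The plain-Python sketch below mirrors the configuration above: 5 x 5 filters, 16 and 32 feature maps, 2 x 2 pooling with stride 2, and 128 hidden units. The helper name `same_out` is hypothetical.

```python
import math


def same_out(size, stride):
    # output size of a "SAME"-padded convolution or pooling op
    return math.ceil(size / stride)


img, channels, classes = 28, 1, 10
f1, n1 = 5, 16   # conv1: 5x5 filters, 16 maps, stride 1
f2, n2 = 5, 32   # conv2: 5x5 filters, 32 maps, stride 1
h1 = 128         # fully-connected units

s_conv1 = same_out(img, 1)       # 28
s_pool1 = same_out(s_conv1, 2)   # 14
s_conv2 = same_out(s_pool1, 1)   # 14
s_pool2 = same_out(s_conv2, 2)   # 7
num_features = s_pool2 * s_pool2 * n2   # inputs to FC1 after flatten_layer

# trainable parameters = weights + biases of each layer
params = {
    'conv1': f1 * f1 * channels * n1 + n1,
    'conv2': f2 * f2 * n1 * n2 + n2,
    'FC1': num_features * h1 + h1,
    'OUT': h1 * classes + classes,
}
print(num_features, params, sum(params.values()))
```

Almost all of the roughly 215k parameters sit in `FC1`. This is a common reason for pooling before the fully-connected layer.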

## Define the loss function, optimizer, accuracy, and predicted class

```

with tf.variable_scope('Train'):
    with tf.variable_scope('Loss'):
        loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=output_logits), name='loss')
    tf.summary.scalar('loss', loss)
    with tf.variable_scope('Optimizer'):
        optimizer = tf.train.AdamOptimizer(learning_rate=lr, name='Adam-op').minimize(loss)
    with tf.variable_scope('Accuracy'):
        correct_prediction = tf.equal(tf.argmax(output_logits, 1), tf.argmax(y, 1), name='correct_pred')
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32), name='accuracy')
    tf.summary.scalar('accuracy', accuracy)
    with tf.variable_scope('Prediction'):
        cls_prediction = tf.argmax(output_logits, axis=1, name='predictions')

```
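For reference, `tf.nn.softmax_cross_entropy_with_logits` computes $-\sum_k y_k \log \mathrm{softmax}(z)_k$ for each example, and the accuracy op compares arg-max indices. The NumPy sketch below computes the same quantities on made-up logits. The function name `softmax_cross_entropy` is ours, not TensorFlow's.

```python
import numpy as np


def softmax_cross_entropy(labels, logits):
    """Per-example cross-entropy between one-hot labels and softmax(logits)."""
    # subtracting the row maximum avoids overflow and leaves softmax unchanged
    z = logits - logits.max(axis=1, keepdims=True)
    log_softmax = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -(labels * log_softmax).sum(axis=1)


logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 2.5, 0.3]])
labels = np.array([[1.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0]])

loss = softmax_cross_entropy(labels, logits).mean()   # like tf.reduce_mean(...)
correct = np.argmax(logits, 1) == np.argmax(labels, 1)
accuracy = correct.astype(np.float32).mean()          # like the 'Accuracy' scope
print(round(float(loss), 4), accuracy)
```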

## Initialize all variables and merge the summaries

```

# Initialize the variables

init = tf.global_variables_initializer()

# Merge all summaries

merged = tf.summary.merge_all()

```

# Train

```

sess = tf.InteractiveSession()
sess.run(init)
global_step = 0
summary_writer = tf.summary.FileWriter(logs_path, sess.graph)
# Number of training iterations in each epoch
num_tr_iter = int(len(y_train) / batch_size)
for epoch in range(epochs):
    print('Training epoch: {}'.format(epoch + 1))
    x_train, y_train = randomize(x_train, y_train)
    for iteration in range(num_tr_iter):
        global_step += 1
        start = iteration * batch_size
        end = (iteration + 1) * batch_size
        x_batch, y_batch = get_next_batch(x_train, y_train, start, end)

        # Run optimization op (backprop)
        feed_dict_batch = {x: x_batch, y: y_batch}
        sess.run(optimizer, feed_dict=feed_dict_batch)

        if iteration % display_freq == 0:
            # Calculate and display the batch loss and accuracy
            loss_batch, acc_batch, summary_tr = sess.run([loss, accuracy, merged],
                                                         feed_dict=feed_dict_batch)
            summary_writer.add_summary(summary_tr, global_step)

            print("iter {0:3d}:\t Loss={1:.2f},\tTraining Accuracy={2:.01%}".
                  format(iteration, loss_batch, acc_batch))

    # Run validation after every epoch
    feed_dict_valid = {x: x_valid, y: y_valid}
    loss_valid, acc_valid = sess.run([loss, accuracy], feed_dict=feed_dict_valid)
    print('---------------------------------------------------------')
    print("Epoch: {0}, validation loss: {1:.2f}, validation accuracy: {2:.01%}".
          format(epoch + 1, loss_valid, acc_valid))
    print('---------------------------------------------------------')

```

# Test

```

def plot_images(images, cls_true, cls_pred=None, title=None):
    """
    Create figure with 3x3 sub-plots.
    :param images: array of images to be plotted, (9, img_h, img_w, n_channels)
    :param cls_true: corresponding true labels (9,)
    :param cls_pred: corresponding predicted labels (9,)
    :param title: optional figure title
    """
    fig, axes = plt.subplots(3, 3, figsize=(9, 9))
    fig.subplots_adjust(hspace=0.3, wspace=0.3)
    for i, ax in enumerate(axes.flat):
        # Plot image.
        ax.imshow(np.squeeze(images[i]), cmap='binary')

        # Show true and predicted classes.
        if cls_pred is None:
            ax_title = "True: {0}".format(cls_true[i])
        else:
            ax_title = "True: {0}, Pred: {1}".format(cls_true[i], cls_pred[i])

        ax.set_title(ax_title)

        # Remove ticks from the plot.
        ax.set_xticks([])
        ax.set_yticks([])

    if title:
        plt.suptitle(title, size=20)
    plt.show(block=False)


def plot_example_errors(images, cls_true, cls_pred, title=None):
    """
    Function for plotting examples of images that have been mis-classified
    :param images: array of all images, (#imgs, img_h, img_w, n_channels)
    :param cls_true: corresponding true labels, (#imgs,)
    :param cls_pred: corresponding predicted labels, (#imgs,)
    """
    # Negate the boolean array.
    incorrect = np.logical_not(np.equal(cls_pred, cls_true))

    # Get the images from the test-set that have been
    # incorrectly classified.
    incorrect_images = images[incorrect]

    # Get the true and predicted classes for those images.
    cls_pred = cls_pred[incorrect]
    cls_true = cls_true[incorrect]

    # Plot the first 9 images.
    plot_images(images=incorrect_images[0:9],
                cls_true=cls_true[0:9],
                cls_pred=cls_pred[0:9],
                title=title)


# Test the network when training is done
x_test, y_test = load_data(mode='test')
feed_dict_test = {x: x_test, y: y_test}
loss_test, acc_test = sess.run([loss, accuracy], feed_dict=feed_dict_test)
print('---------------------------------------------------------')
print("Test loss: {0:.2f}, test accuracy: {1:.01%}".format(loss_test, acc_test))
print('---------------------------------------------------------')

# Plot some of the correct and misclassified examples
cls_pred = sess.run(cls_prediction, feed_dict=feed_dict_test)
cls_true = np.argmax(y_test, axis=1)
# keep only the correctly classified images for the "Correct Examples" figure
correct = np.equal(cls_pred, cls_true)
plot_images(x_test[correct], cls_true[correct], cls_pred[correct], title='Correct Examples')
plot_example_errors(x_test, cls_true, cls_pred, title='Misclassified Examples')
plt.show()

# close the session after you are done with testing
sess.close()

```

At this point, the coding is done. We can inspect the network further with TensorBoard. Open a terminal and move to the notebooks folder (in my case *C:\Dev\UpdateConference2019\notebooks*), so that `--logdir=logs` points at the `./logs` folder set in `logs_path`. Then type:

```

tensorboard --logdir=logs --host localhost

```


# Calculating the Bilingual Evaluation Understudy (BLEU) score: Ungraded Lab

In this ungraded lab, we will implement a popular metric for evaluating the quality of machine-translated text: the BLEU score, proposed by Kishore Papineni et al. in their 2002 paper ["BLEU: a Method for Automatic Evaluation of Machine Translation"](https://www.aclweb.org/anthology/P02-1040.pdf). The BLEU score works by comparing "candidate" text to one or more "reference" translations; the closer the score is to 1, the better the translation. Let's see how to compute this value in the following sections.

# Part 1: BLEU Score

## 1.1 Importing the Libraries

We start by importing the Python libraries we will use in the first part of this lab. For learning purposes, we will implement our own version of the BLEU score using NumPy. To verify that our implementation is correct, we will compare our results with those generated by the [SacreBLEU library](https://github.com/mjpost/sacrebleu). This package provides hassle-free computation of shareable, comparable, and reproducible BLEU scores. It also knows all the standard test sets and handles downloading, processing, and tokenization.

```

# install SacreBLEU and download the WMT19 example files (-q keeps the output quiet)
!pip3 install -q sacrebleu
!wget -q https://raw.githubusercontent.com/martin-fabbri/colab-notebooks/master/deeplearning.ai/nlp/datasets/wmt19_can.txt
!wget -q https://raw.githubusercontent.com/martin-fabbri/colab-notebooks/master/deeplearning.ai/nlp/datasets/wmt19_ref.txt
!wget -q https://raw.githubusercontent.com/martin-fabbri/colab-notebooks/master/deeplearning.ai/nlp/datasets/wmt19_src.txt

import math
from collections import Counter

import matplotlib.pyplot as plt
import nltk
import numpy as np
import sacrebleu
from nltk.util import ngrams

nltk.download("punkt")

!pip list | grep "nltk\|sacrebleu"

```

## 1.2 Defining the BLEU Score

You have seen the formula for calculating the BLEU score in this week's lectures. More formally, we can express the BLEU score as:

$$BLEU = BP\Bigl(\prod_{i=1}^{4}precision_i\Bigr)^{(1/4)}$$

with the Brevity Penalty and precision defined as:

$$BP = min\Bigl(1, e^{(1-({ref}/{cand}))}\Bigr)$$

$$precision_i = \frac {\sum_{snt \in{cand}}\sum_{i\in{snt}}min\Bigl(m^{i}_{cand}, m^{i}_{ref}\Bigr)}{w^{i}_{t}}$$

where:

* $m^{i}_{cand}$ is the count of an i-gram in the candidate that matches the reference translation.
* $m^{i}_{ref}$ is the count of that i-gram in the reference translation.
* $w^{i}_{t}$ is the total number of i-grams in the candidate translation.
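The clipping in the numerator, and the sums over all candidate sentences, can be illustrated with the degenerate example from the BLEU paper: a candidate made only of "the", scored against "the cat is on the mat". Below is a pure-Python sketch. The helpers `ngram_counts` and `clipped_counts` are illustrative and are not NLTK or SacreBLEU functions.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Counter of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_counts(candidate, reference, n):
    """(sum of min(m_cand, m_ref) over the candidate's n-grams, total candidate n-grams)."""
    cand = ngram_counts(candidate, n)
    ref = ngram_counts(reference, n)
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched, sum(cand.values())


reference = "the cat is on the mat".split()
print(clipped_counts("the the the the the the the".split(), reference, 1))  # (2, 7)

# corpus level: add numerators and denominators over all sentences, then divide
pairs = [("the the the the the the the".split(), reference),
         ("the cat sat".split(), reference)]
matched = total = 0
for cand, ref in pairs:
    m, t = clipped_counts(cand, ref, 1)
    matched += m
    total += t
print(matched / total)  # 0.4
```

Without clipping, the first candidate would score a unigram precision of 7/7, even though it is clearly a bad translation.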

## 1.3 Explaining the BLEU score

### Brevity Penalty (example):

```

ref_length = np.ones(100)

can_length = np.linspace(1.5, 0.5, 100)

x = ref_length / can_length

y = 1 - x

y = np.exp(y)

y = np.minimum(np.ones(y.shape), y)

# Make the plot

fig, ax = plt.subplots(1)

lines = ax.plot(x, y)

ax.set(

xlabel="Ratio of the length of the reference to the candidate text",

ylabel="Brevity Penalty",

)

plt.show()

```

The brevity penalty penalizes generated translations that are too short compared to the closest reference length with an exponential decay. The brevity penalty compensates for the fact that the BLEU score has no recall term.
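As a worked example of $BP = \min(1, e^{1 - ref/cand})$, take a hypothetical 14-token reference. A 12-token candidate gets $e^{1 - 14/12} = e^{-1/6} \approx 0.846$, while any candidate of 14 tokens or more gets no penalty. The helper below works on lengths only, unlike the `brevity_penalty` function of STEP 1, which takes token lists.

```python
import math


def brevity_penalty_from_lengths(ref_len, cand_len):
    """BP = min(1, exp(1 - ref_len / cand_len))."""
    return min(1.0, math.exp(1 - ref_len / cand_len))


for cand_len in (7, 12, 14, 20):
    print(cand_len, round(brevity_penalty_from_lengths(14, cand_len), 3))
```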

### N-Gram Precision (example):

```

data = {"1-gram": 0.8, "2-gram": 0.7, "3-gram": 0.6, "4-gram": 0.5}

names = list(data.keys())

values = list(data.values())

fig, ax = plt.subplots(1)

bars = ax.bar(names, values)

ax.set(ylabel="N-gram precision")

plt.show()

```

The n-gram precision counts how many unigrams, bigrams, trigrams, and four-grams (i=1,...,4) match their n-gram counterparts in the reference translations. This term acts as a precision metric. Unigrams account for adequacy, while longer n-grams account for the fluency of the translation. To avoid overcounting, the n-gram counts are clipped to the maximal n-gram count occurring in the reference ($m^{i}_{ref}$). Typically, precision decays exponentially with the degree of the n-gram.

### N-gram BLEU score (example):

```

data = {"1-gram": 0.8, "2-gram": 0.77, "3-gram": 0.74, "4-gram": 0.71}

names = list(data.keys())

values = list(data.values())

fig, ax = plt.subplots(1)

bars = ax.bar(names, values)

ax.set(ylabel="Modified N-gram precision")

plt.show()

```

When the n-gram precision is multiplied by the BP, the exponential decay across n-gram orders is almost fully compensated. The BLEU score corresponds to a geometric average of this modified n-gram precision.
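With equal weights of 1/4, the geometric average equals the exponential of the mean log precision, which is how `clipped_precision` in STEP 2 computes it. Below is a quick check using the illustrative precisions from the first bar chart (0.8, 0.7, 0.6, 0.5).

```python
import math

precisions = [0.8, 0.7, 0.6, 0.5]
weights = [0.25] * 4

# fourth root of the product of the four precisions
geo_product = math.prod(precisions) ** 0.25
# same value computed in log space
geo_logs = math.exp(math.fsum(w * math.log(p) for w, p in zip(weights, precisions)))

print(round(geo_product, 4), round(geo_logs, 4))  # 0.6402 0.6402
```

The log form raises `ValueError` when any precision is 0, because `math.log(0)` is undefined. A candidate with no matching 4-grams therefore needs special handling, such as the smoothing options that some BLEU implementations provide.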

## 1.4 Example Calculations of the BLEU score

In this example we have one reference translation and two candidate translations. We will tokenize all sentences using the NLTK package introduced in Course 2 of this NLP specialization.

```

reference = "The NASA Opportunity rover is battling a massive dust storm on planet Mars."

candidate_1 = "The Opportunity rover is combating a big sandstorm on planet Mars."

candidate_2 = "A NASA rover is fighting a massive storm on planet Mars."

tokenized_ref = nltk.word_tokenize(reference.lower())

tokenized_cand_1 = nltk.word_tokenize(candidate_1.lower())

tokenized_cand_2 = nltk.word_tokenize(candidate_2.lower())

print(f"{reference} -> {tokenized_ref}")

print("\n")

print(f"{candidate_1} -> {tokenized_cand_1}")

print("\n")

print(f"{candidate_2} -> {tokenized_cand_2}")

```

### STEP 1: Computing the Brevity Penalty

```

def brevity_penalty(candidate, reference):
    ref_length = len(reference)
    can_length = len(candidate)

    # Brevity Penalty
    if ref_length < can_length:  # if reference length is less than candidate length
        BP = 1  # set BP = 1
    else:
        penalty = 1 - (ref_length / can_length)  # else set BP=exp(1-(ref_length/can_length))
        BP = np.exp(penalty)

    return BP

```

### STEP 2: Computing the Precision

```

def clipped_precision(candidate, reference):
    """
    Clipped precision given a reference and a machine-translated sentence;
    returns the geometric mean of the clipped 1- to 4-gram precisions
    """
    clipped_precision_score = []

    for i in range(1, 5):
        ref_n_gram = Counter(ngrams(reference, i))
        cand_n_gram = Counter(ngrams(candidate, i))

        c = sum(cand_n_gram.values())

        for j in cand_n_gram:  # for every i-gram in the candidate text
            if j in ref_n_gram:  # check if it is in the reference i-grams
                if cand_n_gram[j] > ref_n_gram[j]:  # if the candidate count is bigger than the reference count,
                    cand_n_gram[j] = ref_n_gram[j]  # clip it to the reference count
            else:
                cand_n_gram[j] = 0  # else set the candidate count to zero

        clipped_precision_score.append(sum(cand_n_gram.values()) / c)

    # equal weights: geometric mean of the four precisions, computed in log space
    weights = [0.25] * 4
    s = (w_i * math.log(p_i) for w_i, p_i in zip(weights, clipped_precision_score))
    s = math.exp(math.fsum(s))
    return s

```
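To see why clipping matters, here is a small standard-library sketch that uses a classic degenerate candidate. The helper `clipped_ngram_precision` is hypothetical and computes a single clipped precision for one value of n. Unlike `clipped_precision` above, it returns 0.0 when no n-gram matches. `math.log(0)` would raise a `ValueError` in the geometric-mean step above.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def clipped_ngram_precision(candidate, reference, n):
    """Each candidate n-gram is counted at most as often as it occurs in the reference."""
    cand = ngram_counts(candidate, n)
    ref = ngram_counts(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total

candidate = "the the the the the the the".split()
reference = "the cat is on the mat".split()
print(clipped_ngram_precision(candidate, reference, 1))  # 2/7 ≈ 0.2857
```

Without clipping, every "the" would match and the unigram precision would be 7/7. Clipping at the reference count of 2 gives 2/7.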

### STEP 3: Computing the BLEU score

```

def bleu_score(candidate, reference):
    BP = brevity_penalty(candidate, reference)
    precision = clipped_precision(candidate, reference)
    return BP * precision

```

### STEP 4: Testing with our Example Reference and Candidates Sentences

```

print(

"Results reference versus candidate 1 our own code BLEU: ",

round(bleu_score(tokenized_cand_1, tokenized_ref) * 100, 1),

)

print(

"Results reference versus candidate 2 our own code BLEU: ",

round(bleu_score(tokenized_cand_2, tokenized_ref) * 100, 1),

)

```

### STEP 5: Comparing the Results from our Code with the SacreBLEU Library

```

print(
    "Results reference versus candidate 1 sacrebleu library BLEU: ",
    # corpus_bleu expects a list of hypotheses and a list of reference streams
    round(sacrebleu.corpus_bleu([candidate_1], [[reference]]).score, 1),
)

print(
    "Results reference versus candidate 2 sacrebleu library BLEU: ",
    round(sacrebleu.corpus_bleu([candidate_2], [[reference]]).score, 1),
)

```

# Part 2: BLEU computation on a corpus

## Loading Data Sets for Evaluation Using the BLEU Score

In this section, we will show a simple pipeline for evaluating machine translated text. Due to storage and speed constraints, we will not be using our own model in this lab (you'll get to do that in the assignment!). Instead, we will be using [Google Translate](https://translate.google.com) to generate English to German translations and we will evaluate it against a known evaluation set. There are three files we will need:

1. A source text in English. In this lab, we will use the first 1671 words of the [wmt19](http://statmt.org/wmt19/translation-task.html) evaluation dataset downloaded via SacreBLEU. We just grabbed a subset because of limitations in the number of words that can be translated using Google Translate.

2. A reference translation to German of the corresponding first 1671 words from the original English text. This is also provided by SacreBLEU.

3. A candidate machine translation to German from the same 1671 words. This is generated by feeding the source text to a machine translation model. As mentioned above, we will use Google Translate to generate the translations in this file.

With that, we can now compare the reference and candidate translations to get the BLEU score.

```

# Loading the raw data ("rU" mode was removed in Python 3.11, so use plain text mode)
with open("wmt19_src.txt", "r", encoding="utf-8") as f:
    wmt19_src_1 = f.read()

with open("wmt19_ref.txt", "r", encoding="utf-8") as f:
    wmt19_ref_1 = f.read()

with open("wmt19_can.txt", "r", encoding="utf-8") as f:
    wmt19_can_1 = f.read()

tokenized_corpus_src = nltk.word_tokenize(wmt19_src_1.lower())

tokenized_corpus_ref = nltk.word_tokenize(wmt19_ref_1.lower())

tokenized_corpus_cand = nltk.word_tokenize(wmt19_can_1.lower())

print("English source text:")

print("\n")

print(f"{wmt19_src_1[0:170]} -> {tokenized_corpus_src[0:30]}")

print("\n")

print("German reference translation:")

print("\n")

print(f"{wmt19_ref_1[0:219]} -> {tokenized_corpus_ref[0:35]}")

print("\n")

print("German machine translation:")

print("\n")

print(f"{wmt19_can_1[0:199]} -> {tokenized_corpus_cand[0:29]}")

print(

"Results reference versus candidate 1 our own BLEU implementation: ",

round(bleu_score(tokenized_corpus_cand, tokenized_corpus_ref) * 100, 1),

)

print(

"Results reference versus candidate 1 sacrebleu library BLEU: ",

round(sacrebleu.corpus_bleu([wmt19_can_1], [[wmt19_ref_1]]).score, 1),

)

```

**BLEU Score Interpretation on a Corpus**

| Score | Interpretation |
|:---------:|:-------------------------------------------------------------:|
| < 10 | Almost useless |
| 10 - 19 | Hard to get the gist |
| 20 - 29 | The gist is clear, but has significant grammatical errors |
| 30 - 40 | Understandable to good translations |
| 40 - 50 | High quality translations |
| 50 - 60 | Very high quality, adequate, and fluent translations |
| > 60 | Quality often better than human |

From the table above (taken from [here](https://cloud.google.com/translate/automl/docs/evaluate)), we can see that the translation is of high quality (*if you see "Hard to get the gist", please open your workspace, delete `wmt19_can.txt` and get the latest version via the Lab Help button*). Moreover, the results of our own BLEU implementation are almost identical to those of the SacreBLEU package.
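To turn a score into the label from the table, a small lookup is enough. The table's ranges overlap (for example, 40 appears in two rows), so this sketch assumes half-open bands `[low, next_low)`. The names `BLEU_BANDS` and `interpret_bleu` are hypothetical.

```python
# Lower bound of each band from the table above, highest first.
# Assumption: half-open intervals, so a score of exactly 40 maps to the 40-50 band.
BLEU_BANDS = [
    (60, "Quality often better than human"),
    (50, "Very high quality, adequate, and fluent translations"),
    (40, "High quality translations"),
    (30, "Understandable to good translations"),
    (20, "The gist is clear, but has significant grammatical errors"),
    (10, "Hard to get the gist"),
    (0, "Almost useless"),
]

def interpret_bleu(score):
    """Return the interpretation band for a corpus BLEU score on the 0-100 scale."""
    for lower_bound, label in BLEU_BANDS:
        if score >= lower_bound:
            return label
    return BLEU_BANDS[-1][1]

print(interpret_bleu(45.2))  # High quality translations
```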


Avani Gupta <br>

Roll: 2019121004

# Exercise - Multi-class classification of MNIST using Perceptron

In the binary perceptron, where $\mathbf{y} \in \{-1, +1\}$, we update the weights only for misclassified examples.

The multi-class perceptron is a generalization of the binary perceptron. Learning proceeds iteratively, just as in the binary perceptron. Weighted inputs are passed through a multi-class signum activation function. If the predicted label equals the true label, the weights are not updated. However, when the predicted label $\neq$ true label, the misclassified input example is added to the weights of the correct label and subtracted from the weights of the incorrect (predicted) label. Effectively, this amounts to 'rewarding' the correct weight vector, 'punishing' the misleading, incorrect weight vector, and leaving all other weight vectors alone.
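The update rule described above can be sketched for a single example in NumPy. This is a minimal illustration, not the tutorial's `train` function. It assumes integer class labels and a weight matrix with one column per class, and the name `multiclass_perceptron_step` is hypothetical.

```python
import numpy as np

def multiclass_perceptron_step(W, x, y_true):
    """One multi-class perceptron update for a single example.

    W: (num_features, num_classes) weights, one column per class (modified in place).
    x: (num_features,) input vector with the bias feature already appended.
    y_true: integer index of the correct class.
    """
    scores = x @ W                    # one score per class
    y_pred = int(np.argmax(scores))   # predicted class = highest score
    if y_pred != y_true:
        W[:, y_true] += x             # reward the correct class
        W[:, y_pred] -= x             # punish the wrongly predicted class
    return W, y_pred                  # all other columns are left alone

W = np.zeros((3, 3))
x = np.array([1.0, 2.0, 1.0])
W, pred = multiclass_perceptron_step(W, x, y_true=2)
print(pred)  # 0: all scores tie at zero, argmax picks the first class
```

After this one update, the same example scores highest for class 2, so a second call leaves `W` unchanged.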

```

from sklearn import datasets

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

import random

import numpy as np

import seaborn as sns; sns.set();

import pandas as pd

import math

import gif

import warnings

warnings.filterwarnings('ignore')

# Setting the seed to ensure reproducibility of experiments

np.random.seed(11)

# One-hot encoding of target label, Y

def one_hot(a):
    # -1 for every other class (matching the perceptron's {-1, +1} labels), +1 for the true class
    b = -1 * np.ones((a.size, a.max() + 1))
    b[np.arange(a.size), a] = 1
    return b

# Loading digits datasets

digits = datasets.load_digits()

# One-hot encoding of target label, Y

Y = digits.target

Y = one_hot(Y)

# Adding column of ones to absorb bias b of the hyperplane into X

X = digits.data

bias_ones = np.ones((len(X), 1))

X = np.hstack((X, bias_ones))

# Train-val-test data

X_train_val, X_test, Y_train_val, Y_test = train_test_split(X, Y, shuffle=True, test_size = 0.2)

X_train, X_val, Y_train, Y_val = train_test_split(X_train_val, Y_train_val, test_size = 0.12517)

print("Training dataset: ", X_train.shape)

print("Validation dataset: ", X_val.shape)

print("Test dataset: ", X_test.shape)

sns.reset_orig();

plt.gray()

plt.matshow(digits.images[10])

plt.show();

```

#### Write your code below

Functions from the tutorial notebook:

```

# Defining signum activation function
def signum(vec_w_x):
    """ signum activation for perceptron

    Parameters
    ------------
    vec_w_x: ndarray
        Weighted inputs
    """
    vec_w_x[vec_w_x >= 0] = 1
    vec_w_x[vec_w_x < 0] = -1
    return vec_w_x

# multi-class signum
def multi_class_signum(vec_w_x):
    """ Multiclass signum activation.

    Parameters
    ------------
    vec_w_x: ndarray
        Weighted inputs
    """
    flag = np.all(vec_w_x == 0)
    if flag:
        return vec_w_x
    else:
        num_examples, num_outputs = np.shape(vec_w_x)
        range_examples = np.array(range(0, num_examples))
        # Rows that are entirely zero are left untouched
        zero_idxs = np.argwhere(np.all(vec_w_x == 0, axis=1))
        non_zero_examples = np.delete(range_examples, zero_idxs[:, 0])
        signum_vec_w_x = vec_w_x[non_zero_examples]
        # In each remaining row, set the first maximal entry to 1 and all others to -1.
        # (Searching row by row avoids matching a max value that appears in another row.)
        winners = np.zeros(signum_vec_w_x.shape, dtype=bool)
        winners[np.arange(len(non_zero_examples)), np.argmax(signum_vec_w_x, axis=1)] = True
        signum_vec_w_x[winners] = 1
        signum_vec_w_x[~winners] = -1
        vec_w_x[non_zero_examples] = signum_vec_w_x
        return vec_w_x

# Evaluation for train, val, and test set.
def get_accuracy(y_predicted, Y_input_set, num_datapoints):
    miscls_points = np.argwhere(np.any(y_predicted != Y_input_set, axis=1))
    miscls_points = np.unique(miscls_points)
    accuracy = (1 - len(miscls_points) / num_datapoints) * 100
    return accuracy

def get_prediction(X_input_set, Y_input_set, weights, get_acc=True, model_type='perceptron', predict='no'):
    if len(Y_input_set) != 0:
        num_datapoints, num_categories = np.shape(Y_input_set)
    vec_w_transpose_x = np.dot(X_input_set, weights)

    # softmax and sigmoid are not defined in this notebook; only the perceptron branches are used here
    if num_categories > 1:  # Multi-class
        if model_type == 'perceptron':
            y_pred_out = multi_class_signum(vec_w_transpose_x)
        elif model_type == 'logreg':
            y_pred_out = softmax(X_input_set, vec_w_transpose_x, predict=predict)
    else:  # Binary class
        if model_type == 'perceptron' or model_type == 'LinearDA':
            y_pred_out = signum(vec_w_transpose_x)
        elif model_type == 'logreg':
            y_pred_out = sigmoid(vec_w_transpose_x, predict=predict)

    # Both prediction and evaluation
    if get_acc:
        cls_acc = get_accuracy(y_pred_out, Y_input_set, num_datapoints)
        return cls_acc

    # Only prediction
    return y_pred_out

# Perceptron training algorithm
def train(X_train, Y_train, weights, learning_rate=1, total_epochs=100):
    """Training method for Perceptron.

    Parameters
    -----------
    X_train: ndarray (num_examples(rows) vs num_features(columns))
        Input dataset which perceptron will use to learn optimal weights
    Y_train: ndarray (num_examples(rows) vs class_labels(columns))
        Class labels for input data
    weights: ndarray (num_features vs n_output)
        Weights used to train the network and predict on test set
    learning_rate: int
        Learning rate used to learn and update weights
    total_epochs: int
        Max number of epochs to train the perceptron model
    """
    n_samples, _ = np.shape(X_train)
    history_weights = []
    epoch = 1

    # Number of misclassified points we would like to see in the train set.
    # While training, its value will change every epoch. If m == 0, our training
    # error will be zero.
    m = 1

    # If the most recent weights gave 0 misclassifications, break the loop.
    # Else continue until total_epochs is completed.
    while m != 0 and epoch <= total_epochs:
        m = 0
        # Compute weighted inputs and predict class labels on training set.
        weights_transpose_x = np.dot(X_train, weights)
        weights_transpose_x = signum(weights_transpose_x)
        y_train_out = np.multiply(Y_train, weights_transpose_x)
        epoch += 1

        # Collect misclassified indexes and count them
        y_miscls_idxs = np.argwhere(y_train_out <= 0)[:, 0]
        y_miscls_idxs = np.unique(y_miscls_idxs)
        m = len(y_miscls_idxs)

        # Calculate gradients and update weights
        dweights = np.dot((X_train[y_miscls_idxs]).T, Y_train[y_miscls_idxs])
        weights += (learning_rate / n_samples) * dweights
        weights = np.round(weights, decimals=4)

        # Append weights to visualize decision boundary later
        history_weights.append(weights)

    if m == 0 and epoch <= total_epochs:
        print("Training has stabilized with all points classified: ", epoch)
    else:
        print(f'Training completed at {epoch-1} epochs. {m} misclassified points remain.')

    return history_weights

```

My code

```

# Train one binary (one-vs-rest) perceptron per digit class
weights_arr = np.zeros((X_train.shape[1], Y_train.shape[1]))
for i in range(Y_train.shape[1]):
    weights = np.zeros((X_train.shape[1], 1))
    weights_arr[:, i:i+1] = train(X_train, Y_train[:, i].reshape((-1, 1)), weights, 1, 10000)[-1].copy()

def accuracy(X, Y, W):
    # Predicted class = column with the highest score (a tie with the true class counts as a match)
    pred = X @ W
    Class_value = np.max(pred, axis=1, keepdims=True)
    pred = (pred == Class_value)
    # Look up the prediction at each example's true class
    class1 = np.where(Y == 1, True, False)
    match = pred[class1]
    acc = np.mean(match) * 100
    return acc

train_acc = accuracy(X_train, Y_train, weights_arr)

print("Train accuracy: ",train_acc)

val_acc = accuracy(X_val, Y_val, weights_arr)

print("Validation accuracy: ", val_acc)

test_acc = accuracy(X_test, Y_test, weights_arr)

print("Test accuracy: ", test_acc)

```


<a href="https://colab.research.google.com/github/bs3537/dengueAI/blob/master/V5_San_Juan_XGB_all_environmental_features.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

```

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

#https://www.drivendata.org/competitions/44/dengai-predicting-disease-spread/page/80/

#Your goal is to predict the total_cases label for each (city, year, weekofyear) in the test set.

#Performance metric = mean absolute error

```
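Mean absolute error is the average absolute difference between predicted and true case counts. Below is a minimal standard-library sketch. The name `mean_absolute_error_sketch` is hypothetical; the later cells use scikit-learn's `mean_absolute_error`.

```python
def mean_absolute_error_sketch(y_true, y_pred):
    """Average of |y_true - y_pred| over all examples."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# A constant guess of 5 cases per week against three made-up weeks
print(mean_absolute_error_sketch([2, 5, 11], [5, 5, 5]))  # (3 + 0 + 6) / 3 = 3.0
```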

## LIST OF FEATURES:

You are provided the following set of information on a (year, weekofyear) timescale:

(Where appropriate, units are provided as a _unit suffix on the feature name.)

### City and date indicators

1. city – City abbreviations: sj for San Juan and iq for Iquitos

2. week_start_date – Date given in yyyy-mm-dd format

### NOAA's GHCN daily climate data weather station measurements

1. station_max_temp_c – Maximum temperature

2. station_min_temp_c – Minimum temperature

3. station_avg_temp_c – Average temperature

4. station_precip_mm – Total precipitation

5. station_diur_temp_rng_c – Diurnal temperature range

### PERSIANN satellite precipitation measurements (0.25x0.25 degree scale)

6. precipitation_amt_mm – Total precipitation

### NOAA's NCEP Climate Forecast System Reanalysis measurements (0.5x0.5 degree scale)

7. reanalysis_sat_precip_amt_mm – Total precipitation

8. reanalysis_dew_point_temp_k – Mean dew point temperature

9. reanalysis_air_temp_k – Mean air temperature

10. reanalysis_relative_humidity_percent – Mean relative humidity

11. reanalysis_specific_humidity_g_per_kg – Mean specific humidity

12. reanalysis_precip_amt_kg_per_m2 – Total precipitation

13. reanalysis_max_air_temp_k – Maximum air temperature

14. reanalysis_min_air_temp_k – Minimum air temperature

15. reanalysis_avg_temp_k – Average air temperature

16. reanalysis_tdtr_k – Diurnal temperature range

### Satellite vegetation - Normalized difference vegetation index (NDVI) - NOAA's CDR Normalized Difference Vegetation Index (0.5x0.5 degree scale) measurements

17. ndvi_se – Pixel southeast of city centroid

18. ndvi_sw – Pixel southwest of city centroid

19. ndvi_ne – Pixel northeast of city centroid

20. ndvi_nw – Pixel northwest of city centroid

#### TARGET VARIABLE = total_cases label for each (city, year, weekofyear)

```

import sys

#Load train features and labels datasets

train_features = pd.read_csv('https://s3.amazonaws.com/drivendata/data/44/public/dengue_features_train.csv')

train_features.head()

train_features.shape

train_labels = pd.read_csv('https://s3.amazonaws.com/drivendata/data/44/public/dengue_labels_train.csv')

train_labels.head()

train_labels.shape

#Merge train features and labels datasets

train = pd.merge(train_features, train_labels)

train.head()

train.shape

#pd.merge joins on the shared city, year and weekofyear columns, so only the total_cases column is added to the features dataset

train.dtypes

#Data rows for San Juan

train.city.value_counts()

#San Juan has 936 rows which we can isolate and analyze separately

train = train[train['city'].str.match('sj')]

train.head(5)

train.shape

#Thus, we have isolated the train dataset with only city data for San Juan

#Distribution of the target

import seaborn as sns

sns.histplot(train['total_cases'], kde=True)  # distplot is deprecated in recent seaborn versions

#The target distribution is skewed

#Find outliers

train['total_cases'].describe()

#Remove outliers

train = train[(train['total_cases'] >= np.percentile(train['total_cases'], 0.5)) &

(train['total_cases'] <= np.percentile(train['total_cases'], 99.5))]

train.shape

sns.histplot(train['total_cases'], kde=True)

#Do train, val split

from sklearn.model_selection import train_test_split

train, val = train_test_split(train, train_size=0.80, test_size=0.20,

random_state=42)

train.shape, val.shape

#Load test features dataset (for the competition)

test = pd.read_csv('https://s3.amazonaws.com/drivendata/data/44/public/dengue_features_test.csv')

#Pandas Profiling

```

##### Baseline statistics (mean and MAE) for the target variable total_cases in train dataset and baseline validation MAE

```

train['total_cases'].describe()

#Baseline mean and mean absolute error

guess = train['total_cases'].mean()

print(f'At the baseline, the mean number of dengue cases in a week is: {guess:.2f}')

#If we had just guessed that the number of dengue cases was 31.58 for a city in a particular week, how far off would we be?

from sklearn.metrics import mean_absolute_error

# Arrange y target vectors

target = 'total_cases'

y_train = train[target]

y_val = val[target]

# Get mean baseline

print('Mean Baseline (using 0 features)')

guess = y_train.mean()

# Train Error

y_pred = [guess] * len(y_train)

mae = mean_absolute_error(y_train, y_pred)

print(f'Train mean absolute error: {mae:.2f} dengue cases per week')

# Validation Error

y_pred = [guess] * len(y_val)

mae = mean_absolute_error(y_val, y_pred)

print(f'Validation mean absolute error: {mae:.2f} dengue cases per week')

#we need to convert week_start_date to a datetime using the pd.to_datetime function

#wrangle function

def wrangle(X):
    X = X.copy()

    # Convert week_start_date to datetime
    X['week_start_date'] = pd.to_datetime(X['week_start_date'])

    # Extract components from week_start_date, then drop the original column
    X['year_recorded'] = X['week_start_date'].dt.year
    X['month_recorded'] = X['week_start_date'].dt.month
    #X['day_recorded'] = X['week_start_date'].dt.day
    X = X.drop(columns='week_start_date')
    X = X.drop(columns='year')
    X = X.drop(columns='station_precip_mm')

    #I engineered a few features that represent standing water, a high-risk factor for mosquitoes
    #1. X['standing water feature 1'] = X['station_precip_mm'] / X['station_max_temp_c']

    # Total vegetation index: sum of the four NDVI pixels around the city centroid
    X['total satellite vegetation index of city'] = X['ndvi_se'] + X['ndvi_sw'] + X['ndvi_ne'] + X['ndvi_nw']

    # Standing water features (the reanalysis variables come from NOAA's NCEP CFSR)
    # Feature 1: reanalysis precipitation (kg/m2) * total vegetation index
    X['standing water feature 1'] = X['reanalysis_precip_amt_kg_per_m2'] * X['total satellite vegetation index of city']
    # Feature 2: reanalysis precipitation (kg/m2) * reanalysis mean relative humidity (%)
    X['standing water feature 2'] = X['reanalysis_precip_amt_kg_per_m2'] * X['reanalysis_relative_humidity_percent']
    # Feature 3: precipitation * relative humidity * total vegetation index
    X['standing water feature 3'] = X['reanalysis_precip_amt_kg_per_m2'] * X['reanalysis_relative_humidity_percent'] * X['total satellite vegetation index of city']
    # Feature 4: precipitation / reanalysis maximum air temperature (K)
    X['standing water feature 4'] = X['reanalysis_precip_amt_kg_per_m2'] / X['reanalysis_max_air_temp_k']
    # Feature 5: (precipitation * relative humidity * total vegetation index) / maximum air temperature
    X['standing water feature 5'] = X['reanalysis_precip_amt_kg_per_m2'] * X['reanalysis_relative_humidity_percent'] * X['total satellite vegetation index of city'] / X['reanalysis_max_air_temp_k']

    # Rename columns to readable names (keys missing from a dataframe, e.g. total_cases in test, are ignored)
    X = X.rename(columns={
        'reanalysis_air_temp_k': 'Mean air temperature in K',
        'reanalysis_min_air_temp_k': 'Minimum air temperature in K',
        'weekofyear': 'Week of Year',
        'station_diur_temp_rng_c': 'Diurnal temperature range in C',
        'reanalysis_precip_amt_kg_per_m2': 'Total precipitation kg/m2',
        'reanalysis_tdtr_k': 'Diurnal temperature range in K',
        'reanalysis_max_air_temp_k': 'Maximum air temperature in K',
        'year_recorded': 'Year recorded',
        'reanalysis_relative_humidity_percent': 'Mean relative humidity',
        'month_recorded': 'Month recorded',
        'reanalysis_dew_point_temp_k': 'Mean dew point temp in K',
        'precipitation_amt_mm': 'Total precipitation in mm',
        'station_min_temp_c': 'Minimum temp in C',
        'ndvi_se': 'Southeast vegetation index',
        'ndvi_ne': 'Northeast vegetation index',
        'ndvi_nw': 'Northwest vegetation index',
        'ndvi_sw': 'Southwest vegetation index',
        'reanalysis_avg_temp_k': 'Average air temperature in K',
        'reanalysis_sat_precip_amt_mm': 'Total precipitation in mm (2)',
        'reanalysis_specific_humidity_g_per_kg': 'Mean specific humidity',
        'station_avg_temp_c': 'Average temp in C',
        'station_max_temp_c': 'Maximum temp in C',
        'total_cases': 'Total dengue cases in the week',
    })

    # Drop columns
    X = X.drop(columns=['Year recorded', 'Week of Year', 'Month recorded',
                        'Total precipitation in mm (2)', 'Average temp in C',
                        'Maximum temp in C', 'Minimum temp in C',
                        'Diurnal temperature range in C', 'Average air temperature in K',
                        'city'])

    # return the wrangled dataframe
    return X

train = wrangle(train)

val = wrangle(val)

test = wrangle(test)

train.head().T

#Before we build the model to train on train dataset, log transform target variable due to skew

import numpy as np

target_log = np.log1p(train['Total dengue cases in the week'])

sns.histplot(target_log, kde=True)

plt.title('Log-transformed target');

target_log_series = pd.Series(target_log)

train = train.assign(log_total_cases = target_log_series)

#drop total_cases target column while training the model

train = train.drop(columns='Total dengue cases in the week')

#Do the same log transformation with validation dataset

target_log_val = np.log1p(val['Total dengue cases in the week'])

target_log_val_series = pd.Series(target_log_val)

val = val.assign(log_total_cases = target_log_val_series)

val = val.drop(columns='Total dengue cases in the week')

#Fitting XGBoost Regressor model

#Define target and features

# The log_total_cases column is the target

target = 'log_total_cases'

# Get a dataframe with all train columns except the target

train_features = train.drop(columns=[target])

# Get a list of the numeric features

numeric_features = train_features.select_dtypes(include='number').columns.tolist()

# Combine the lists

features = numeric_features

# Arrange data into X features matrix and y target vector

X_train = train[features]

y_train = train[target]

X_val = val[features]

y_val = val[target]

%pip install category_encoders

from sklearn.pipeline import make_pipeline

import category_encoders as ce

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import OneHotEncoder

import xgboost as xgb

from xgboost import XGBRegressor

from sklearn import model_selection, preprocessing

processor = make_pipeline(

SimpleImputer(strategy='mean')

)

X_train_processed = processor.fit_transform(X_train)

X_val_processed = processor.transform(X_val)

# Since XGBoost 1.6, eval_metric and early_stopping_rounds are set on the estimator;
# passing them to fit() is deprecated
model = XGBRegressor(n_estimators=200, objective='reg:squarederror', n_jobs=-1,
                     eval_metric='mae', early_stopping_rounds=10)

eval_set = [(X_train_processed, y_train),
            (X_val_processed, y_val)]

model.fit(X_train_processed, y_train, eval_set=eval_set)

results = model.evals_result()

train_error = results['validation_0']['mae']

val_error = results['validation_1']['mae']

iterations = range(1, len(train_error) + 1)

plt.figure(figsize=(10,7))

plt.plot(iterations, train_error, label='Train')

plt.plot(iterations, val_error, label='Validation')

plt.title('XGBoost Validation Curve')

plt.ylabel('Mean Absolute Error (log transformed)')

plt.xlabel('Model Complexity (n_estimators)')

plt.legend();

#predict on X_val

y_pred = model.predict(X_val_processed)

print('XGBoost Validation Mean Absolute Error (log transformed)', mean_absolute_error(y_val, y_pred))

#Transform y_pred back to original units from log transformed

y_pred_original = np.expm1(y_pred)

y_val_original = np.expm1(y_val)

print('XGBoost Validation Mean Absolute Error (non-log transformed)', mean_absolute_error(y_val_original, y_pred_original))

```
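The model is trained on `np.log1p` of the case counts, so its MAE on the log scale cannot be compared directly with the mean baseline, which is measured in cases per week. `np.expm1` undoes the transform first. The small NumPy illustration below uses made-up numbers, not the DengAI data.

```python
import numpy as np

# Made-up weekly case counts and predictions, for illustration only
y_true = np.array([0, 4, 12, 30, 150])
y_pred_log = np.log1p(np.array([1, 5, 10, 25, 100]))  # predictions on the log1p scale

# MAE computed on the log1p scale (what the validation curve above shows)
mae_log = np.mean(np.abs(np.log1p(y_true) - y_pred_log))

# expm1 inverts log1p, bringing predictions back to cases per week
y_pred = np.expm1(y_pred_log)
mae_cases = np.mean(np.abs(y_true - y_pred))

print(f"MAE on log1p scale: {mae_log:.3f}")
print(f"MAE in cases per week: {mae_cases:.1f}")  # (1 + 1 + 2 + 5 + 50) / 5 = 11.8
```

On the log scale, the 50-case miss in the busiest week counts for little. Back in original units, it dominates the error.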


# Neural networks with PyTorch

Deep learning networks tend to be massive, with dozens or hundreds of layers; that's where the term "deep" comes from. You can build one of these deep networks using only weight matrices, as we did in the previous notebook, but in general it's very cumbersome and difficult to implement. PyTorch has a module `nn` that provides a convenient way to efficiently build large neural networks.

```

# Import necessary packages

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

import numpy as np

import torch

import helper

import matplotlib.pyplot as plt

```

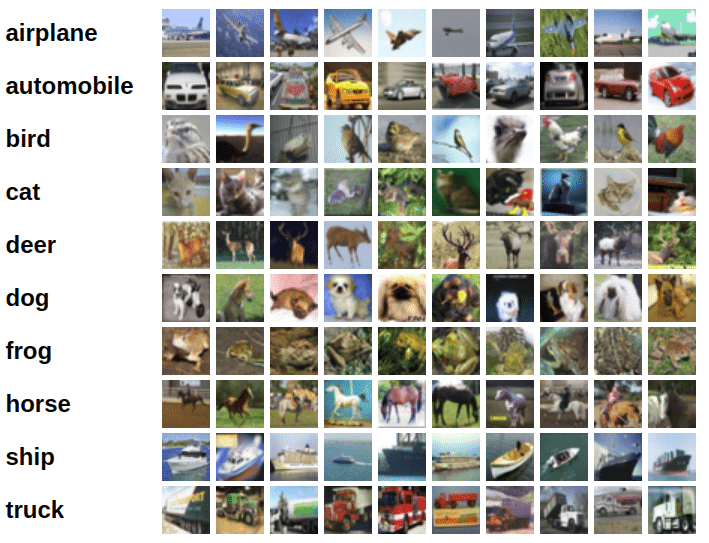

Now we're going to build a larger network that can solve a (formerly) difficult problem: identifying text in an image. Here we'll use the MNIST dataset, which consists of greyscale handwritten digits. Each image is 28x28 pixels; you can see a sample below.

<img src='assets/mnist.png'>

Our goal is to build a neural network that can take one of these images and predict the digit in the image.

First up, we need to get our dataset. This is provided through the `torchvision` package. The code below will download the MNIST dataset, then create training and test datasets for us. Don't worry too much about the details here, you'll learn more about this later.

```

### Run this cell

from torchvision import datasets, transforms

# Define a transform to normalize the data

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize((0.5,), (0.5,)),

])

# Download and load the training data

trainset = datasets.MNIST('~/.pytorch/MNIST_data/', download=True, train=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True)

```

We have the training data loaded into `trainloader` and we make that an iterator with `iter(trainloader)`. Later, we'll use this to loop through the dataset for training, like

```python

for image, label in trainloader:

## do things with images and labels

```

You'll notice I created the `trainloader` with a batch size of 64, and `shuffle=True`. The batch size is the number of images we get in one iteration from the data loader and pass through our network, often called a *batch*. And `shuffle=True` tells it to shuffle the dataset every time we start going through the data loader again. But here I'm just grabbing the first batch so we can check out the data. We can see below that `images` is just a tensor with size `(64, 1, 28, 28)`. So, 64 images per batch, 1 color channel, and 28x28 images.

```

dataiter = iter(trainloader)

images, labels = next(dataiter)  # the .next() method was removed from DataLoader iterators in recent PyTorch

print(type(images))

print(images.shape)

print(labels.shape)

```
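With `batch_size=64` and the default `drop_last=False`, the data loader yields full batches of 64 and one smaller final batch. Here is a quick standard-library check of the arithmetic for the 60,000 MNIST training images. The helper `batch_sizes` is hypothetical and not part of PyTorch.

```python
import math

def batch_sizes(num_examples, batch_size):
    """Sizes of the batches produced when the last partial batch is kept."""
    if num_examples == 0:
        return []
    num_batches = math.ceil(num_examples / batch_size)
    sizes = [batch_size] * (num_batches - 1)
    sizes.append(num_examples - batch_size * (num_batches - 1))
    return sizes

sizes = batch_sizes(60000, 64)
print(len(sizes), sizes[0], sizes[-1])  # 938 64 32
```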

This is what one of the images looks like.

```

plt.imshow(images[1].numpy().squeeze(), cmap='Greys_r');

```

First, let's try to build a simple network for this dataset using weight matrices and matrix multiplications. Then, we'll see how to do it using PyTorch's `nn` module which provides a much more convenient and powerful method for defining network architectures.

The networks you've seen so far are called *fully-connected* or *dense* networks. Each unit in one layer is connected to each unit in the next layer. In fully-connected networks, the input to each layer must be a one-dimensional vector (which can be stacked into a 2D tensor as a batch of multiple examples). However, our images are 28x28 2D tensors, so we need to convert them into 1D vectors. Thinking about sizes, we need to convert the batch of images with shape `(64, 1, 28, 28)` to have a shape of `(64, 784)`, since 784 is 28 times 28. This is typically called *flattening*: we flatten the 2D images into 1D vectors.
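The flattening step can be reproduced in NumPy. The sketch below is the NumPy analogue of `images.view(images.shape[0], -1)`, and random data stands in for real images.

```python
import numpy as np

# A stand-in batch with the same shape as `images`: 64 images, 1 channel, 28x28 pixels
batch = np.random.rand(64, 1, 28, 28).astype(np.float32)

# Keep the batch dimension and merge the rest: 1 * 28 * 28 = 784
flat = batch.reshape(batch.shape[0], -1)
print(flat.shape)  # (64, 784)
```

Each row of `flat` holds one image's pixels in row-major order, so pixel `(r, c)` of image `i` ends up at column `28 * r + c`.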

Previously you built a network with one output unit. Here we need 10 output units, one for each digit. We want our network to predict the digit shown in an image, so we'll calculate the probability that the image shows each digit (class). This ends up being a discrete probability distribution over the classes (digits) that tells us the most likely class for the image. We'll see how to convert the network output into a probability distribution next.

> **Exercise:** Flatten the batch of images `images`. Then build a multi-layer network with 784 input units, 256 hidden units, and 10 output units using random tensors for the weights and biases. For now, use a sigmoid activation for the hidden layer. Leave the output layer without an activation, we'll add one that gives us a probability distribution next.

```

## Solution

def activation(x):
    # Sigmoid activation
    return 1/(1+torch.exp(-x))

# Flatten the input images

inputs = images.view(images.shape[0], -1)

# Create parameters

w1 = torch.randn(784, 256)

b1 = torch.randn(256)

w2 = torch.randn(256, 10)

b2 = torch.randn(10)

h = activation(torch.mm(inputs, w1) + b1)

out = torch.mm(h, w2) + b2

```

Now we have 10 outputs for our network. We want to pass in an image to our network and get out a probability distribution over the classes that tells us the likely class(es) the image belongs to. Something that looks like this:

<img src='assets/image_distribution.png' width=500px>

Here we see that the probability for each class is roughly the same. This represents an untrained network: it hasn't seen any data yet, so it just returns a uniform distribution with equal probabilities for each class.

To calculate this probability distribution, we often use the [**softmax** function](https://en.wikipedia.org/wiki/Softmax_function). Mathematically this looks like

$$
\Large \sigma(x_i) = \cfrac{e^{x_i}}{\sum_{k=1}^{K}{e^{x_k}}}
$$

What this does is squish each input $x_i$ between 0 and 1 and normalize the values to give you a proper probability distribution where the probabilities sum to one.

> **Exercise:** Implement a function `softmax` that performs the softmax calculation and returns probability distributions for each example in the batch. Note that you'll need to pay attention to the shapes when doing this. If you have a tensor `a` with shape `(64, 10)` and a tensor `b` with shape `(64,)`, doing `a/b` will give you an error because PyTorch will try to do the division across the columns (called broadcasting) but you'll get a size mismatch. The way to think about this is for each of the 64 examples, you only want to divide by one value, the sum in the denominator. So you need `b` to have a shape of `(64, 1)`. This way PyTorch will divide the 10 values in each row of `a` by the one value in each row of `b`. Pay attention to how you take the sum as well. You'll need to define the `dim` keyword in `torch.sum`. Setting `dim=0` takes the sum across the rows while `dim=1` takes the sum across the columns.

```

## Solution

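def softmax(x):
    # Divide each row of exp(x) by its row sum; view(-1, 1) turns the sums into a column for broadcasting
    return torch.exp(x)/torch.sum(torch.exp(x), dim=1).view(-1, 1)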

probabilities = softmax(out)

# Does it have the right shape? Should be (64, 10)

print(probabilities.shape)

# Does it sum to 1?

print(probabilities.sum(dim=1))

```

## Building networks with PyTorch

PyTorch provides a module `nn` that makes building networks much simpler. Here I'll show you how to build the same network as above, with 784 inputs, 256 hidden units, 10 output units and a softmax output.

```
from torch import nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()

        # Inputs to hidden layer linear transformation
        self.hidden = nn.Linear(784, 256)
        # Output layer, 10 units - one for each digit
        self.output = nn.Linear(256, 10)

        # Define sigmoid activation and softmax output
        self.sigmoid = nn.Sigmoid()
        self.softmax = nn.Softmax(dim=1)

    def forward(self, x):
        # Pass the input tensor through each of our operations
        x = self.hidden(x)
        x = self.sigmoid(x)
        x = self.output(x)
        x = self.softmax(x)

        return x
```

Let's go through this bit by bit.

```python

class Network(nn.Module):

```

Here we're inheriting from `nn.Module`. Combined with `super().__init__()`, this creates a class that tracks the architecture and provides a lot of useful methods and attributes. It is mandatory to inherit from `nn.Module` when you're creating a class for your network. The name of the class itself can be anything.
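A small sketch of what the inheritance buys you, using the hypothetical class names `TinyNet` and `Broken`. Layers assigned as attributes after `super().__init__()` are registered as parameters automatically, while skipping the `super().__init__()` call makes the first layer assignment fail with an `AttributeError` (the exact message may vary between PyTorch versions).

```python
from torch import nn

class TinyNet(nn.Module):        # hypothetical name, same layers as Network above
    def __init__(self):
        super().__init__()       # sets up the internal registries nn.Module relies on
        self.hidden = nn.Linear(784, 256)
        self.output = nn.Linear(256, 10)

net = TinyNet()
names = [name for name, _ in net.named_parameters()]
n_params = sum(p.numel() for p in net.parameters())
print(names)     # ['hidden.weight', 'hidden.bias', 'output.weight', 'output.bias']
print(n_params)  # 784*256 + 256 + 256*10 + 10 = 203530

class Broken(nn.Module):         # hypothetical: forgets to call super().__init__()
    def __init__(self):
        self.hidden = nn.Linear(784, 256)

try:
    Broken()
    forgot_super_failed = False
except AttributeError as err:
    forgot_super_failed = True
    print("AttributeError:", err)
```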

```python

self.hidden = nn.Linear(784, 256)

```

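This line creates a module for a linear transformation with 784 inputs and 256 outputs and assigns it to `self.hidden`. The module automatically creates the weight and bias tensors, which we'll use in the `forward` method. Note that PyTorch stores the weight with shape `(out_features, in_features)`, here `(256, 784)`, and computes $x\mathbf{W}^T + b$. This is the $x\mathbf{W} + b$ from before, with $\mathbf{W}$ stored transposed. Once the network (`net`) is created, you can access the weight and bias tensors with `net.hidden.weight` and `net.hidden.bias`.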
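The sketch below checks the weight layout on a standalone layer, using an arbitrary random batch of 64 flattened inputs. It confirms that the weight is stored as `(out_features, in_features)` and that calling the layer matches a manual $x\mathbf{W}^T + b$. The tolerance `atol=1e-5` is an assumption chosen to absorb float32 rounding.

```python
import torch
from torch import nn

hidden = nn.Linear(784, 256)
print(hidden.weight.shape)  # torch.Size([256, 784]): (out_features, in_features)
print(hidden.bias.shape)    # torch.Size([256])

x = torch.randn(64, 784)                       # arbitrary batch of 64 flattened inputs
manual = x @ hidden.weight.T + hidden.bias     # x W^T + b written out by hand
print(torch.allclose(hidden(x), manual, atol=1e-5))  # should print True
```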

```python

self.output = nn.Linear(256, 10)

```

Similarly, this creates another linear transformation with 256 inputs and 10 outputs.

```python
self.sigmoid = nn.Sigmoid()
self.softmax = nn.Softmax(dim=1)
```

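Here I defined operations for the sigmoid activation and softmax output. Setting `dim=1` in `nn.Softmax(dim=1)` computes the softmax along dimension 1, that is, across the 10 columns of each row, so the output for every example in the batch sums to 1.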
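A minimal sketch of the difference `dim` makes, assuming random scores of shape `(64, 10)` as a stand-in for the output layer. With `dim=1` each example's 10 class probabilities sum to 1; with `dim=0` the normalization runs down the 64 rows instead, which is not what a classifier wants.

```python
import torch
from torch import nn

logits = torch.randn(64, 10)            # stand-in for the output layer's raw scores

probs = nn.Softmax(dim=1)(logits)       # normalizes across the 10 classes of each row
per_column = nn.Softmax(dim=0)(logits)  # normalizes down the 64 rows instead

print(probs.sum(dim=1)[:3])             # each example's probabilities sum to 1
print(per_column.sum(dim=0)[:3])        # with dim=0, the columns sum to 1 instead
```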

```python

def forward(self, x):

```

PyTorch networks created with `nn.Module` must have a `forward` method defined. It takes in a tensor `x` and passes it through the operations you defined in the `__init__` method.

```python
x = self.hidden(x)
x = self.sigmoid(x)
x = self.output(x)
x = self.softmax(x)
```

Here the input tensor `x` is passed through each operation and reassigned to `x`. We can see that the input tensor goes through the hidden layer, then a sigmoid function, then the output layer, and finally the softmax function. It doesn't matter what you name the variables here, as long as the inputs and outputs of the operations match the network architecture you want to build. The order in which you define things in the `__init__` method doesn't matter, but you'll need to sequence the operations correctly in the `forward` method.

Now we can create a `Network` object.

```
# Create the network and look at its text representation
model = Network()
model
```

You can define the network somewhat more concisely and clearly using the `torch.nn.functional` module. This is the most common way you'll see networks defined, since many operations are simple element-wise functions. We normally import this module as `F`: `import torch.nn.functional as F`.

```
import torch
import torch.nn.functional as F

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        # Inputs to hidden layer linear transformation
        self.hidden = nn.Linear(784, 256)
        # Output layer, 10 units - one for each digit
        self.output = nn.Linear(256, 10)

    def forward(self, x):
        # Hidden layer with sigmoid activation
        # (torch.sigmoid; F.sigmoid is deprecated in recent PyTorch releases)
        x = torch.sigmoid(self.hidden(x))
        # Output layer with softmax activation
        x = F.softmax(self.output(x), dim=1)

        return x
```
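A quick check on a random input that the module form and the functional form compute the same values. Here `torch.sigmoid` stands in for the deprecated `F.sigmoid`, and the batch of 4 examples is arbitrary.

```python
import torch
from torch import nn
import torch.nn.functional as F

x = torch.randn(4, 10)  # arbitrary batch of 4 examples with 10 features

relu_same = torch.equal(nn.ReLU()(x), F.relu(x))
softmax_same = torch.allclose(nn.Softmax(dim=1)(x), F.softmax(x, dim=1))
sigmoid_same = torch.allclose(nn.Sigmoid()(x), torch.sigmoid(x))
print(relu_same, softmax_same, sigmoid_same)
```

The choice between the two is mostly stylistic: modules show up in `print(model)`, while functional calls keep `__init__` limited to layers that actually have parameters.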

### Activation functions

So far we've only used the sigmoid activation in the hidden layer, but in general any function can be used as an activation function. The only requirement is that, for a network to approximate a non-linear function, the activation functions must be non-linear. Two more common examples are Tanh (hyperbolic tangent) and ReLU (rectified linear unit).
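Here is a plain-Python sketch of the three activations mentioned, plus a small check of why non-linearity matters. Composing two linear functions gives another linear function, so stacking linear layers without an activation in between adds no expressive power. The function names are illustrative, not PyTorch APIs.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))   # squashes to (0, 1)

def tanh(x):
    return math.tanh(x)                 # squashes to (-1, 1)

def relu(x):
    return max(0.0, x)                  # zero for negatives, identity otherwise

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}  relu={relu(x):.1f}")

# Without a non-linearity, two stacked linear maps collapse into one:
# g(f(x)) = 3 * (2x + 1) - 2 = 6x + 1
f = lambda x: 2 * x + 1
g = lambda x: 3 * x - 2
collapses = all(g(f(x)) == 6 * x + 1 for x in range(-5, 6))
print(collapses)
```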

<img src="assets/activation.png" width=700px>

In practice, ReLU is by far the most common choice of activation function for hidden layers.

### Your Turn to Build a Network

<img src="assets/mlp_mnist.png" width=600px>

> **Exercise:** Create a network with 784 input units, a hidden layer with 128 units and a ReLU activation, then a hidden layer with 64 units and a ReLU activation, and finally an output layer with a softmax activation as shown above. You can use a ReLU activation with the `nn.ReLU` module or `F.relu` function.

It's good practice to name your layers by their type, for instance 'fc' for a fully-connected layer. As you code your solution, use `fc1`, `fc2`, and `fc3` as your layer names.

```
## Solution
class Network(nn.Module):
    def __init__(self):
        super().__init__()
        # Defining the layers, 128, 64, 10 units each
        self.fc1 = nn.Linear(784, 128)
        self.fc2 = nn.Linear(128, 64)
        # Output layer, 10 units - one for each digit
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        ''' Forward pass through the network, returns class probabilities (softmax output) '''
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        x = F.relu(x)
        x = self.fc3(x)
        x = F.softmax(x, dim=1)

        return x

model = Network()
model
```
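As a worked check of the architecture's size, each fully-connected layer contributes `n_in * n_out` weights plus `n_out` biases. The arithmetic below should match `sum(p.numel() for p in model.parameters())` for the solution network, assuming the default `bias=True` on every layer.

```python
layer_sizes = [784, 128, 64, 10]

# weights + biases for each consecutive pair of layer sizes
params = [n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
print(params)       # → [100480, 8256, 650]
print(sum(params))  # → 109386
```

Almost all of the parameters sit in `fc1`, because it connects every one of the 784 input pixels to each hidden unit.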

### Initializing weights and biases

The weights and biases are initialized automatically for you, but it's possible to customize how they are initialized. They are tensors attached to the layer you defined; you can get them with `model.fc1.weight`, for instance.

```

print(model.fc1.weight)

print(model.fc1.bias)

```

For custom initialization, we want to modify these tensors in place. They are `nn.Parameter` objects (tensors that autograd tracks for gradient computation), so we modify the underlying tensor through `model.fc1.weight.data`, which keeps the change out of the autograd graph. Once we have the tensors, we can fill them with zeros (for biases) or random normal values.

```

# Set biases to all zeros

model.fc1.bias.data.fill_(0)

# sample from random normal with standard dev = 0.01

model.fc1.weight.data.normal_(std=0.01)

```
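An alternative sketch uses the `torch.nn.init` helpers, which modify a parameter in place without recording the operation for autograd, or an explicit `torch.no_grad()` block. A standalone `nn.Linear(784, 128)` stands in for `model.fc1` here so the snippet runs on its own.

```python
import torch
from torch import nn

fc1 = nn.Linear(784, 128)              # stand-in for model.fc1

# nn.init helpers modify the parameter in place without tracking gradients
nn.init.zeros_(fc1.bias)               # biases -> 0
nn.init.normal_(fc1.weight, std=0.01)  # weights ~ Normal(mean=0, std=0.01)

# Equivalent manual form: wrap in-place edits in torch.no_grad()
with torch.no_grad():
    fc1.bias.fill_(0)

print(fc1.bias.abs().sum().item())     # 0.0
print(fc1.weight.std().item())         # close to 0.01
```

Either way, the parameters still have `requires_grad=True` afterwards, so training works as usual.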

### Forward pass

Now that we have a network, let's see what happens when we pass in an image.

```
# Grab some data
dataiter = iter(trainloader)
images, labels = next(dataiter)

# Resize images into a 1D vector, new shape is (batch size, color channels, image pixels)
images.resize_(64, 1, 784)
# or images.resize_(images.shape[0], 1, 784) to automatically get batch size

# Forward pass through the network (calling the model runs its forward method)
img_idx = 0
ps = model(images[img_idx, :])

img = images[img_idx]
helper.view_classify(img.view(1, 28, 28), ps)
```

As you can see above, our network has basically no idea what this digit is. That's because we haven't trained it yet: all the weights are random!

### Using `nn.Sequential`

PyTorch provides a convenient way to build networks like this, where a tensor is passed sequentially through a series of operations: `nn.Sequential` ([documentation](https://pytorch.org/docs/master/nn.html#torch.nn.Sequential)). Here is the equivalent network built with it:

```
# Hyperparameters for our network
input_size = 784
hidden_sizes = [128, 64]
output_size = 10

# Build a feed-forward network
model = nn.Sequential(nn.Linear(input_size, hidden_sizes[0]),
                      nn.ReLU(),
                      nn.Linear(hidden_sizes[0], hidden_sizes[1]),
                      nn.ReLU(),
                      nn.Linear(hidden_sizes[1], output_size),
                      nn.Softmax(dim=1))
print(model)

# Forward pass through the network and display output
images, labels = next(iter(trainloader))
images.resize_(images.shape[0], 1, 784)
ps = model(images[0, :])
helper.view_classify(images[0].view(1, 28, 28), ps)
```
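`nn.Sequential` also accepts an `OrderedDict`, which gives each layer a name so you can reach it as an attribute, much like `model.fc1` in the class-based version. The layer names below are illustrative choices, and a dummy batch of 2 random inputs stands in for real images.

```python
from collections import OrderedDict

import torch
from torch import nn

model = nn.Sequential(OrderedDict([
    ("fc1", nn.Linear(784, 128)),
    ("relu1", nn.ReLU()),
    ("fc2", nn.Linear(128, 64)),
    ("relu2", nn.ReLU()),
    ("output", nn.Linear(64, 10)),
    ("softmax", nn.Softmax(dim=1)),
]))

print(model.fc1)              # layers are reachable by name...
print(model[0] is model.fc1)  # ...and still by position: True

ps = model(torch.randn(2, 784))  # dummy batch of 2 flattened "images"
print(ps.shape)                  # torch.Size([2, 10])
```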