prompt

stringlengths 501

4.98M

| target

stringclasses 1

value | chunk_prompt

bool 1

class | kind

stringclasses 2

values | prob

float64 0.2

0.97

⌀ | path

stringlengths 10

394

⌀ | quality_prob

float64 0.4

0.99

⌀ | learning_prob

float64 0.15

1

⌀ | filename

stringlengths 4

221

⌀ |

|---|---|---|---|---|---|---|---|---|

# Distributed Federated Learning using PySyft

```

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

import syft as sy

hook = sy.TorchHook(torch)

bob = sy.VirtualWorker(hook, id='bob')

alice = sy.VirtualWorker(hook, id='alice')

jane = sy.VirtualWorker(hook, id='jane')

federated_train_loader = sy.FederatedDataLoader(

datasets.MNIST('data', train=True, download=True,

transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))

]))

.federate((bob, alice, jane)),

batch_size=32, shuffle=True)

test_loader = torch.utils.data.DataLoader(

datasets.MNIST('data', train=False, transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))

])),

batch_size=32, shuffle=True)

class Classifier(nn.Module):

def __init__(self):

super().__init__()

self.fc1 = nn.Linear(784, 256)

self.fc2 = nn.Linear(256, 64)

self.fc3 = nn.Linear(64, 10)

def forward(self, x):

x = x.view(x.shape[0], -1)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return F.log_softmax(x, dim=1)

def train(model, federated_train_loader, optimizer, epochs):

model.train()

for epoch in range(epochs):

for batch_idx, (data, targets) in enumerate(federated_train_loader):

model.send(data.location)

optimizer.zero_grad()

output = model(data)

loss = F.nll_loss(output, targets)

loss.backward()

optimizer.step()

model.get()

if batch_idx % 2 == 0:

loss = loss.get()

print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

epoch, batch_idx * 32, len(federated_train_loader) * 32,

100. * batch_idx / len(federated_train_loader), loss.item()))

def test(model, test_loader):

model.eval()

test_loss = 0

correct = 0

with torch.no_grad():

for data, target in test_loader:

output = model(data)

test_loss += F.nll_loss(output, target, reduction='sum').item() # sum up batch loss

pred = output.argmax(1, keepdim=True) # get the index of the max log-probability

correct += pred.eq(target.view_as(pred)).sum().item()

test_loss /= len(test_loader.dataset)

print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

test_loss, correct, len(test_loader.dataset),

100. * correct / len(test_loader.dataset)))

model = Classifier()

optimizer = optim.SGD(model.parameters(), lr=0.005)

train(model, federated_train_loader, optimizer, 3)

test(model, test_loader)

```

| true |

code

| 0.881971 | null | null | null | null |

|

```

import pandas as pd

import numpy as np

import scipy.stats

from scipy.integrate import quad

from scipy.optimize import minimize

from scipy.special import expit, logit

from scipy.stats import norm

```

# Dataset

```

df = pd.read_csv("bank-note/bank-note/train.csv", header=None)

d = df.to_numpy()

X = d[:,:-1]

Y = d[:,-1]

X.shape, Y.shape

df = pd.read_csv("bank-note/bank-note/test.csv", header=None)

d = df.to_numpy()

Xtest = d[:,:-1]

Ytest = d[:,-1]

Xtest.shape, Ytest.shape

```

# Part 1

```

def initialise_w(initialise):

if(initialise == 'random'):

w = np.random.randn(d,1)

print("w is initialised from N[0,1]")

elif(initialise == 'zeros'):

w = np.zeros((d,1))

print("w is initialised as a zero vector")

else:

print("Method unknown")

return w

def compute_mu(X, w):

mu = expit(np.dot(X,w))

mu = mu.reshape(X.shape[0],1)

return mu

def first_derivative(w):

mu = compute_mu(X, w)

epsilon = 1e-12

grad = np.matmul(np.transpose(X), (mu-Y)) + w.reshape(d,1)

grad = grad.squeeze()

return(grad)

def second_deivative(w,X,y):

mu = compute_mu(X, w)

R = np.eye(n)

for i in range(n):

R[i,i] = mu[i,0] * (1-mu[i,0])

return(np.dot(np.dot(np.transpose(X),R),X) + np.eye(d))

def test(w, X, y):

n,d = X.shape

mu = compute_mu(X, w)

yhat = np.zeros((n,1)).astype(np.float64)

yhat[mu>0.5]=1

correct = np.sum(yhat==y)

return(correct,n)

def train(initialise):

np.random.seed(0)

w = initialise_w(initialise)

for j in range(100):

grad1 = first_derivative(w.squeeze()).reshape(d,1)

H = second_deivative(w, X, Y)

delta_w = np.dot(np.linalg.inv(H),grad1)

w = w - delta_w

diff = np.linalg.norm(delta_w)

correct,n = test(w, Xtest, Ytest)

print("Iteration : {} \t Accuracy : {}%".format(j,correct/n*100))

if(diff < 1e-5):

print("tolerance reached at the iteration : ",j)

break

print("Training done...")

print("Model weights : ", np.transpose(w))

n,d = X.shape

n1,d1 = Xtest.shape

Y = Y.reshape(n,1)

Ytest = Ytest.reshape(n1,1)

train('random')

```

# Part 2

```

# LBFGS

def compute_mu(X, w):

phi=np.dot(X,w)

mu = norm.cdf(phi)

mu = mu.reshape(X.shape[0],1)

return mu

def first_derivative(w):

mu = compute_mu(X, w)

epsilon = 1e-12

phi=np.dot(X,w)

grad_mu = X*(scipy.stats.norm.pdf(phi,0,1).reshape(-1,1))

return(np.sum((- Y*(1/(mu)) + (1-Y)*(1/(1+epsilon-mu)))*grad_mu,0) + w).squeeze()

def second_deivative(w,X,y):

mu = compute_mu(X, w)

R = np.eye(n)

phi=np.dot(X,w)

for i in range(n):

t1 = (y[i] - mu[i,0])/(mu[i,0] * (1-mu[i,0]))

t2 = scipy.stats.norm.pdf(phi[i,0],0,1)

t3 = (1-y[i])/np.power(1-mu[i,0],2) + y[i]/np.power(mu[i,0],2)

R[i,i] = t1*t2*np.dot(X[i],w) + t3*t2*t2

return(np.dot(np.dot(np.transpose(X),R),X) + np.eye(d))

def neg_log_posterior(w):

w=w.reshape(-1,1)

epsilon = 1e-12

mu = compute_mu(X, w)

prob_1 = Y*np.log(mu+epsilon)

prob_0 = (1-Y)*np.log(1-mu+epsilon)

log_like = np.sum(prob_1) + np.sum(prob_0)

w_norm = np.power(np.linalg.norm(w),2)

neg_log_pos = -log_like+w_norm/2

print("neg_log_posterior = {:.4f} \tlog_like = {:.4f} \tw_norm = {:.4f}".format(neg_log_pos, log_like, w_norm))

return(neg_log_pos)

def test(w, X, y):

n,d = X.shape

mu = compute_mu(X, w)

#print(mu.shape, n, d)

yhat = np.zeros((n,1)).astype(np.float64)

yhat[mu>0.5]=1

correct = np.sum(yhat==y)

return(correct,n)

res = minimize(neg_log_posterior, initialise_w('random'), method='BFGS', jac=first_derivative,

tol= 1e-5, options={'maxiter': 100})

correct,n = test(res.x, Xtest, Ytest)

print("\n_____________Model trained______________\n")

print("\nModel weights : ", res.x)

print("\n_____________Test Accuracy______________\n")

print("Accuracy : {}% ".format(correct/n*100))

```

# Part 3

```

def compute_mu(X, w):

phi=np.dot(X,w)

mu = norm.cdf(phi)

mu = mu.reshape(X.shape[0],1)

return mu

def first_derivative(w):

mu = compute_mu(X, w)

epsilon = 1e-12

phi=np.dot(X,w)

grad_mu = X*(scipy.stats.norm.pdf(phi,0,1).reshape(-1,1))

return(np.sum((- Y*(1/(mu)) + (1-Y)*(1/(1+epsilon-mu)))*grad_mu,0) + w).squeeze()

def second_deivative(w,X,y):

mu = compute_mu(X, w)

R = np.eye(n)

phi=np.dot(X,w)

for i in range(n):

t1 = (y[i] - mu[i,0])/(mu[i,0] * (1-mu[i,0]))

t2 = scipy.stats.norm.pdf(phi[i,0],0,1)

t3 = (1-y[i])/np.power(1-mu[i,0],2) + y[i]/np.power(mu[i,0],2)

R[i,i] = t1*t2*np.dot(X[i],w) + t3*t2*t2

return(np.dot(np.dot(np.transpose(X),R),X) + np.eye(d))

def neg_log_posterior(w):

w=w.reshape(-1,1)

epsilon = 1e-12

mu = compute_mu(X, w)

prob_1 = Y*np.log(mu+epsilon)

prob_0 = (1-Y)*np.log(1-mu+epsilon)

log_like = np.sum(prob_1) + np.sum(prob_0)

w_norm = np.power(np.linalg.norm(w),2)

neg_log_pos = -log_like+w_norm/2

print("neg_log_posterior = {:.4f} \tlog_like = {:.4f} \tw_norm = {:.4f}".format(neg_log_pos, log_like, w_norm))

return(neg_log_pos)

def test(w, X, y):

n,d = X.shape

mu = compute_mu(X, w)

#print(mu.shape, n, d)

yhat = np.zeros((n,1)).astype(np.float64)

yhat[mu>0.5]=1

correct = np.sum(yhat==y)

return(correct,n)

def train(initialise):

np.random.seed(0)

w = initialise_w(initialise)

for j in range(100):

grad1 = first_derivative(w.squeeze()).reshape(d,1)

H = second_deivative(w, X, Y)

delta_w = np.dot(np.linalg.inv(H),grad1)

w = w - delta_w

diff = np.linalg.norm(delta_w)

correct,n = test(w, Xtest, Ytest)

print("Iteration : {} \t Accuracy : {}%".format(j,correct/n*100))

if(diff < 1e-5):

print("tolerance reached at the iteration : ",j)

break

print("Training done...")

print("Model weights : ", np.transpose(w))

n,d = X.shape

n1,d1 = Xtest.shape

Y = Y.reshape(n,1)

Ytest = Ytest.reshape(n1,1)

train('zeros')

```

| true |

code

| 0.467453 | null | null | null | null |

|

### Clinical BCI Challenge-WCCI2020

- [website link](https://sites.google.com/view/bci-comp-wcci/?fbclid=IwAR37WLQ_xNd5qsZvktZCT8XJerHhmVb_bU5HDu69CnO85DE3iF0fs57vQ6M)

- [Dataset Link](https://github.com/5anirban9/Clinical-Brain-Computer-Interfaces-Challenge-WCCI-2020-Glasgow)

```

import mne

from scipy.io import loadmat

import scipy

import sklearn

import numpy as np

import pandas as pd

import glob

from mne.decoding import CSP

import os

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.svm import LinearSVC, SVC

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV, StratifiedShuffleSplit

from sklearn.preprocessing import StandardScaler

from sklearn.compose import make_column_transformer, make_column_selector

from sklearn.pipeline import make_pipeline

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as lda

import warnings

warnings.filterwarnings('ignore') # to ignore warnings

verbose = False # to universally just change it to true/false for different output display

mne.set_log_level(verbose=verbose) # to suppress large info outputs

# using kappa as evaluation metric

kappa = sklearn.metrics.make_scorer(sklearn.metrics.cohen_kappa_score) # kappa scorer

acc = sklearn.metrics.make_scorer(sklearn.metrics.accuracy_score) # accuracy scorer

scorer = kappa # just assign another scorer to replace kappa scorer

n_jobs = None # for multicore parallel processing, set it to 1 if cause memory issues, for full utilization set to -1

```

## Data Loading and Conversion to MNE Datatypes

[Mike Cohen Tutorials link for EEG Preprocessing](https://www.youtube.com/watch?v=uWB5tjhataY&list=PLn0OLiymPak2gDD-VDA90w9_iGDgOOb2o)

```

current_folder = globals()['_dh'][0] # a hack to get path of current folder in which juptyter file is located

data_path = os.path.join(current_folder, 'Data')

all_files = glob.glob(data_path + '/*.mat')

training_files = glob.glob(data_path + '/*T.mat')

evaluation_files = glob.glob(data_path + '/*E.mat')

len(all_files), len(training_files), len(evaluation_files) # if these return zero,then no file is loaded

def get_mne_epochs(filepath, verbose=verbose, t_start=2, fs=512, mode='train'):

'''

This function reads the EEG data from .mat file and convert it to MNE-Python Compatible epochs

data structure. It takes data from [0, 8] sec range and return it by setting t = 0 at cue onset

i.e. 3 seconds and dropping first two seconds so the output data is in [-1.0, 5.0] sec range. The

Details can be found in the preprocessing section of the attached document

'''

mat_data = loadmat(filepath) # read .mat file

eeg_data= mat_data['RawEEGData']

idx_start = fs*t_start

eeg_data = eeg_data[:, :, idx_start:]

event_id = {'left-hand': 1, 'right-hand': 2}

channel_names = ['F3', 'FC3', 'C3', 'CP3', 'P3', 'FCz', 'CPz', 'F4', 'FC4', 'C4', 'CP4', 'P4']

info = mne.create_info(ch_names=channel_names, sfreq=fs, ch_types='eeg')

epochs = mne.EpochsArray(eeg_data, info, verbose=verbose, tmin=t_start-3.0)

epochs.set_montage('standard_1020')

epochs.filter(1., None)

epochs.apply_baseline(baseline=(-.250, 0)) # linear baseline correction

if mode == 'train': # this in only applicable for training data

epochs.event_id = event_id

epochs.events[:,2] = mat_data['Labels'].ravel()

return epochs

def get_labels(filepath):

mat_data = loadmat(filepath) # read .mat file

return mat_data['Labels'].ravel()

epochs, labels = get_mne_epochs(training_files[0], verbose=verbose), get_labels(training_files[0])

data = epochs.get_data()

print('Shape of EEG Data: ', data.shape, '\t Shape of Labels: ', labels.shape)

```

### Training Data

```

# loading original data

epochs_list_train = []

for i in training_files:

epochs_list_train.append(get_mne_epochs(i, verbose=verbose))

```

### Evaluation Data

first 8 for single subject and last 2 are for cross subject

```

epochs_list_eval = []

for i in evaluation_files:

epochs_list_eval.append(get_mne_epochs(i, mode='test', verbose=verbose))

```

### Bandpass filtering of data

```

for epochs in epochs_list_train:

epochs.filter(7.0, 32.0)

for epochs in epochs_list_eval:

epochs.filter(7.0, 32.0)

```

## Lets try doing some classification

```

cv = StratifiedShuffleSplit(n_splits=5, random_state=0)

epochs = epochs_list_train[3]

psds, freqs = mne.time_frequency.psd_multitaper(epochs, tmin=0.5, tmax=4.5, fmin=8, fmax=30 ,n_jobs=1)

psds = 10 * np.log10(psds) # to convert powers to DB

labels = epochs.events[:,-1]

x_trainVal, x_test, y_trainVal, y_test = train_test_split(psds, labels.ravel(), shuffle=True, stratify=labels, random_state=0) to avoid confusing names and reusing x_trainVal

print('train set: features: ', x_trainVal.shape, 'labels: ', y_trainVal.shape)

print('Test set: features: ', x_test.shape, 'labels: ', y_test.shape)

y_train = y_trainVal

# using all channels

trials, channels, eeg = x_trainVal.shape

x_train = x_trainVal.reshape(trials, channels*eeg)

print('*'*10, 'Classification Scores Comparison with default Parameters' ,'*'*10)

print('#'*15, 'Using All Channels', '#'*15)

print('KNN : ', np.mean(cross_val_score(make_pipeline(StandardScaler(),KNeighborsClassifier()), x_train, y_train, cv=cv, scoring=scorer)))

print('Log-Regression: ', np.mean(cross_val_score(make_pipeline(StandardScaler(),LogisticRegression(max_iter=1000)), x_train, y_train, cv=cv, scoring=scorer)))

print('Linear SVM : ', np.mean(cross_val_score(make_pipeline(StandardScaler(),LinearSVC(random_state=0)), x_train, y_train, cv=cv, scoring=scorer)))

print('kernal SVM : ', np.mean(cross_val_score(make_pipeline(StandardScaler(), SVC(gamma='scale')), x_train, y_train, cv=cv, scoring=scorer)))

print('LDA : ', np.mean(cross_val_score(make_pipeline(StandardScaler(), lda()), x_train, y_train, cv=cv, scoring=scorer)))

```

## Grid Search

with [0.5, 4.5] seconds time interval and [8, 30] Hz freqs

```

cv = StratifiedShuffleSplit(10, random_state=0)

# for linear svm

param_grid_linear_svm = { 'linearsvc__C' : np.logspace(-4, 2, 15)}

# lda, auto shrinkage performs pretty well mostly

shrinkage = list(np.arange(0.1,1.01,0.1))

shrinkage.append('auto')

param_grid_lda = {'lineardiscriminantanalysis__shrinkage': shrinkage}

grids_linear_svm_list = [GridSearchCV(make_pipeline(StandardScaler(), LinearSVC(random_state=0)),

param_grid=param_grid_linear_svm, cv=cv, n_jobs=n_jobs, scoring=scorer)

for _ in range(len(training_files))]

grids_lda_list = [GridSearchCV(make_pipeline(StandardScaler(), lda(solver='eigen')),

param_grid=param_grid_lda, cv=cv, n_jobs=n_jobs, scoring=scorer)

for _ in range(len(training_files))]

def training_function(subject_index=0):

# this time training function trains on whole training set

print('-'*25, 'Training for Subject:', subject_index+1, '-'*25)

epochs = epochs_list_train[subject_index]

psds, freqs = mne.time_frequency.psd_multitaper(epochs, tmin=0.5, tmax=4.5, fmin=8, fmax=30 ,n_jobs=1)

psds = 10 * np.log10(psds)

psds = psds.reshape(psds.shape[0], -1)

labels = epochs.events[:,-1]

grids_linear_svm_list[subject_index].fit(psds, labels)

print('LinearSVM: Maximum Cross Validation Score = ', round(grids_linear_svm_list[subject_index].best_score_,3))

grids_lda_list[subject_index].fit(psds, labels)

print('LDA : Maximum Cross Validation Score = ', round(grids_lda_list[subject_index].best_score_,3))

print()

def evaluation_function(subject_index=0):

# prints the prediction counts for each class

epochs = epochs_list_eval[subject_index]

psds, freqs = mne.time_frequency.psd_multitaper(epochs, tmin=0.5, tmax=4.5, fmin=8, fmax=30 ,n_jobs=1)

psds = 10 * np.log10(psds)

psds = psds.reshape(psds.shape[0], -1)

preds_linear_svm = grids_linear_svm_list[subject_index].predict(psds)

preds_lda = grids_lda_list[subject_index].predict(psds)

print('-'*25, 'Predictions Counts Subject:', subject_index+1, '-'*25)

print('Linear SVM: Class 1 =', sum(preds_linear_svm==1), 'Class 2 =', sum(preds_linear_svm==2))

print('LDA : Class 1 =', sum(preds_lda==1), 'Class 2 =', sum(preds_lda==2))

print()

```

### It's Training Time

```

for subject in range(len(training_files)):

training_function(subject)

for subject in range(len(training_files)):

evaluation_function(subject)

```

### Results

svm always better except the last subject so only last entry for lda and all others for svm in excel file

| true |

code

| 0.557243 | null | null | null | null |

|

Lambda School Data Science

*Unit 4, Sprint 2, Module 4*

---

# Neural Network Frameworks (Prepare)

## Learning Objectives

* <a href="#p1">Part 1</a>: Implemenent Regularization Strategies

* <a href="#p2">Part 2</a>: Deploy a Keras Model

* <a href="#p3">Part 3</a>: Write a Custom Callback Function (Optional)

Today's class will also focus heavily on Callback objects. We will use a variety of callbacks to monitor and manipulate our models based on data that our model produces at the end of an epoch.

> A callback is an object that can perform actions at various stages of training (e.g. at the start or end of an epoch, before or after a single batch, etc). -- [Keras Documentation](https://keras.io/api/callbacks/)

# Regularization Strategies (Learn)

## Overview

Neural Networks are highly parameterized models and can be easily overfit to the training data. The most salient way to combat this problem is with regularization strategies.

There are four common ways of regularization in neural networks which we cover briefly. Here's a quick summary of how to apply them:

1. Always use EarlyStopping. This strategy will prevent your weights from being updated well past the point of their peak usefulness.

2. Use EarlyStopping, L1/L2 regularization and Dropout

3. Use EarlyStopping, Weight Constraint and Dropout

Weight Decay and Weigh Constraint accomplish similar purposes - preventing over fitting the parameters by regularizing the values. The mechanics are just slightly different. That's why you would not necessary want to apply them together.

## Follow Along

### Early Stopping

```

%load_ext tensorboard

from tensorflow.keras.datasets import fashion_mnist

(X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()

import matplotlib.pyplot as plt

plt.title(y_train[2])

plt.imshow(X_train[2]);

X_train, X_test = X_train / 255., X_test / 255.

from tensorflow.keras.callbacks import EarlyStopping, TensorBoard

from tensorflow.keras.layers import Flatten, Dense

from tensorflow.keras.layers import ReLU

import tensorflow as tf

import os

logdir = os.path.join("logs", "EarlyStopping-Loss")

tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)

stop = EarlyStopping(monitor='val_loss', min_delta=0.001, patience=3)

model = tf.keras.Sequential([

Flatten(input_shape=(28,28)),

Dense(128),

ReLU(negative_slope=.01),

Dense(128),

ReLU(negative_slope=.01),

Dense(128),

ReLU(negative_slope=.01),

Dense(10, activation='softmax')

])

model.compile(loss='sparse_categorical_crossentropy', optimizer='nadam', metrics=['accuracy'])

model.fit(X_train, y_train, epochs=99,

validation_data=(X_test,y_test),

callbacks=[tensorboard_callback, stop])

%tensorboard --logdir logs

```

### L1/L2 regularization

```python

Dense(64, input_dim=64, kernel_regularizer=regularizers.l2(0.01))

Dense(64, input_dim=64, kernel_regularizer=regularizers.l1(0.01))

```

Note:

The terms "L2 regularization" and "weight decay" are often used interchagebly, but they only mean the same thing for vanilla SGD optimization.

They mean different things for all other optimizers based on SGD (Adam, AdamW, RSMProp, etc).

See:

- https://www.fast.ai/2018/07/02/adam-weight-decay/

- https://arxiv.org/pdf/1711.05101.pdf

- https://bbabenko.github.io/weight-decay/

```

from tensorflow.keras import regularizers

```

### Weight Constraint

```python

tf.keras.constraints.MaxNorm(

max_value=2, axis=0

)

```

```

from tensorflow.keras.constraints import MaxNorm

```

### Dropout

```

from tensorflow.keras.layers import Dropout

%tensorboard --logdir logs

```

## Challenge

You will apply regularization strategies inside your neural network today, as you try to avoid overfitting it.

---

# Deploy (Learn)

## Overview

You've built a dope image classification model, but it's just sitting your Jupyter Notebook. What now? Well you deploy to some down stream application. TensorFlow supports three ways of deploying it's models:

- In-Browswer with TensorFlow.js

- API with TensorFlow Serving (TFX) or another Framework

- On-Device with TensorFlow Lite

You are already familiar with deploying a model as an API from Unit 3, so we will focus on deploying a model in browser. Both methods rely on the same core idea: save your weights and architecture information, load those parameters into application, and perform inference.

## Follow Along

### Train Your Model

### Save / Export Your Model

### Move Weights to Web Application

Not all models are small enough to work well in-browser. Many neural networks are deploy as micro-service APIs. Micro-service APIs are the architecture you studied during Unit 3.

## Challenge

You will be expected to be able to export your model weights and architecutre on the assignment.

# Custom Callbacks (Learn)

## Overview

Custom callbacks all you to access data at any point during the training: on batch end, on epoch end, on epoch start, on batch start. Our use case today is a simple one. Let's stop training once we reach a benchmark accuracy.

## Follow Along

## Challenge

Experiment with improving our custom callback function.

| true |

code

| 0.714952 | null | null | null | null |

|

# Video Super Resolution with OpenVINO

Super Resolution is the process of enhancing the quality of an image by increasing the pixel count using deep learning. This notebook applies Single Image Super Resolution (SISR) to frames in a 360p (480×360) video in 360p resolution. We use a model called [single-image-super-resolution-1032](https://docs.openvino.ai/latest/omz_models_model_single_image_super_resolution_1032.html) which is available from the Open Model Zoo. It is based on the research paper cited below.

Y. Liu et al., ["An Attention-Based Approach for Single Image Super Resolution,"](https://arxiv.org/abs/1807.06779) 2018 24th International Conference on Pattern Recognition (ICPR), 2018, pp. 2777-2784, doi: 10.1109/ICPR.2018.8545760.

**NOTE:** The Single Image Super Resolution (SISR) model used in this demo is not optimized for video. Results may vary depending on the video.

## Preparation

### Imports

```

import time

import urllib

from pathlib import Path

import cv2

import numpy as np

from IPython.display import HTML, FileLink, Pretty, ProgressBar, Video, clear_output, display

from openvino.inference_engine import IECore

from pytube import YouTube

```

### Settings

```

# Device to use for inference. For example, "CPU", or "GPU"

DEVICE = "CPU"

# 1032: 4x superresolution, 1033: 3x superresolution

MODEL_FILE = "model/single-image-super-resolution-1032.xml"

model_name = Path(MODEL_FILE).name

model_xml_path = Path(MODEL_FILE).with_suffix(".xml")

```

### Functions

```

def write_text_on_image(image: np.ndarray, text: str) -> np.ndarray:

"""

Write the specified text in the top left corner of the image

as white text with a black border.

:param image: image as numpy array with HWC shape, RGB or BGR

:param text: text to write

:return: image with written text, as numpy array

"""

font = cv2.FONT_HERSHEY_PLAIN

org = (20, 20)

font_scale = 4

font_color = (255, 255, 255)

line_type = 1

font_thickness = 2

text_color_bg = (0, 0, 0)

x, y = org

image = cv2.UMat(image)

(text_w, text_h), _ = cv2.getTextSize(

text=text, fontFace=font, fontScale=font_scale, thickness=font_thickness

)

result_im = cv2.rectangle(

img=image, pt1=org, pt2=(x + text_w, y + text_h), color=text_color_bg, thickness=-1

)

textim = cv2.putText(

img=result_im,

text=text,

org=(x, y + text_h + font_scale - 1),

fontFace=font,

fontScale=font_scale,

color=font_color,

thickness=font_thickness,

lineType=line_type,

)

return textim.get()

def load_image(path: str) -> np.ndarray:

"""

Loads an image from `path` and returns it as BGR numpy array.

:param path: path to an image filename or url

:return: image as numpy array, with BGR channel order

"""

if path.startswith("http"):

# Set User-Agent to Mozilla because some websites block requests

# with User-Agent Python

request = urllib.request.Request(url=path, headers={"User-Agent": "Mozilla/5.0"})

response = urllib.request.urlopen(url=request)

array = np.asarray(bytearray(response.read()), dtype="uint8")

image = cv2.imdecode(buf=array, flags=-1) # Loads the image as BGR

else:

image = cv2.imread(filename=path)

return image

def convert_result_to_image(result) -> np.ndarray:

"""

Convert network result of floating point numbers to image with integer

values from 0-255. Values outside this range are clipped to 0 and 255.

:param result: a single superresolution network result in N,C,H,W shape

"""

result = result.squeeze(0).transpose(1, 2, 0)

result *= 255

result[result < 0] = 0

result[result > 255] = 255

result = result.astype(np.uint8)

return result

```

## Load the Superresolution Model

Load the model in Inference Engine with `ie.read_network` and load it to the specified device with `ie.load_network`

```

ie = IECore()

net = ie.read_network(model=model_xml_path)

exec_net = ie.load_network(network=net, device_name=DEVICE)

```

Get information about network inputs and outputs. The Super Resolution model expects two inputs: 1) the input image, 2) a bicubic interpolation of the input image to the target size 1920x1080. It returns the super resolution version of the image in 1920x1800.

```

# Network inputs and outputs are dictionaries. Get the keys for the

# dictionaries.

original_image_key = list(exec_net.input_info)[0]

bicubic_image_key = list(exec_net.input_info)[1]

output_key = list(exec_net.outputs.keys())[0]

# Get the expected input and target shape. `.dims[2:]` returns the height

# and width. OpenCV's resize function expects the shape as (width, height),

# so we reverse the shape with `[::-1]` and convert it to a tuple

input_height, input_width = tuple(exec_net.input_info[original_image_key].tensor_desc.dims[2:])

target_height, target_width = tuple(exec_net.input_info[bicubic_image_key].tensor_desc.dims[2:])

upsample_factor = int(target_height / input_height)

print(f"The network expects inputs with a width of {input_width}, " f"height of {input_height}")

print(f"The network returns images with a width of {target_width}, " f"height of {target_height}")

print(

f"The image sides are upsampled by a factor {upsample_factor}. "

f"The new image is {upsample_factor**2} times as large as the "

"original image"

)

```

## Superresolution on Video

Download a YouTube\* video with PyTube and enhance the video quality with superresolution.

By default only the first 100 frames of the video are processed. Change NUM_FRAMES in the cell below to modify this.

**Note:**

- The resulting video does not contain audio.

- The input video should be a landscape video and have an input resolution of 360p (640x360) for the 1032 model, or 480p (720x480) for the 1033 model.

### Settings

```

VIDEO_DIR = "data"

OUTPUT_DIR = "output"

Path(OUTPUT_DIR).mkdir(exist_ok=True)

# Maximum number of frames to read from the input video. Set to 0 to read all frames.

NUM_FRAMES = 100

# The format for saving the result videos. vp09 is slow, but widely available.

# If you have FFMPEG installed, you can change FOURCC to `*"THEO"` to improve video writing speed.

FOURCC = cv2.VideoWriter_fourcc(*"vp09")

```

### Download and Prepare Video

```

# Use pytube to download a video. It downloads to the videos subdirectory.

# You can also place a local video there and comment out the following lines

VIDEO_URL = "https://www.youtube.com/watch?v=V8yS3WIkOrA"

yt = YouTube(VIDEO_URL)

# Use `yt.streams` to see all available streams. See the PyTube documentation

# https://python-pytube.readthedocs.io/en/latest/api.html for advanced

# filtering options

try:

Path(VIDEO_DIR).mkdir(exist_ok=True)

stream = yt.streams.filter(resolution="360p").first()

filename = Path(stream.default_filename.encode("ascii", "ignore").decode("ascii")).stem

stream.download(output_path=OUTPUT_DIR, filename=filename)

print(f"Video {filename} downloaded to {OUTPUT_DIR}")

# Create Path objects for the input video and the resulting videos

video_path = Path(stream.get_file_path(filename, OUTPUT_DIR))

except Exception:

# If PyTube fails, use a local video stored in the VIDEO_DIR directory

video_path = Path(rf"{VIDEO_DIR}/CEO Pat Gelsinger on Leading Intel.mp4")

# Path names for the result videos

superres_video_path = Path(f"{OUTPUT_DIR}/{video_path.stem}_superres.mp4")

bicubic_video_path = Path(f"{OUTPUT_DIR}/{video_path.stem}_bicubic.mp4")

comparison_video_path = Path(f"{OUTPUT_DIR}/{video_path.stem}_superres_comparison.mp4")

# Open the video and get the dimensions and the FPS

cap = cv2.VideoCapture(filename=str(video_path))

ret, image = cap.read()

if not ret:

raise ValueError(f"The video at '{video_path}' cannot be read.")

fps = cap.get(cv2.CAP_PROP_FPS)

frame_count = cap.get(cv2.CAP_PROP_FRAME_COUNT)

if NUM_FRAMES == 0:

total_frames = frame_count

else:

total_frames = min(frame_count, NUM_FRAMES)

original_frame_height, original_frame_width = image.shape[:2]

cap.release()

print(

f"The input video has a frame width of {original_frame_width}, "

f"frame height of {original_frame_height} and runs at {fps:.2f} fps"

)

```

Create superresolution video, bicubic video and comparison video. The superresolution video contains the enhanced video, upsampled with superresolution, the bicubic video is the input video upsampled with bicubic interpolation, the combination video sets the bicubic video and the superresolution side by side.

```

superres_video = cv2.VideoWriter(

filename=str(superres_video_path),

fourcc=FOURCC,

fps=fps,

frameSize=(target_width, target_height),

)

bicubic_video = cv2.VideoWriter(

filename=str(bicubic_video_path),

fourcc=FOURCC,

fps=fps,

frameSize=(target_width, target_height),

)

comparison_video = cv2.VideoWriter(

filename=str(comparison_video_path),

fourcc=FOURCC,

fps=fps,

frameSize=(target_width * 2, target_height),

)

```

### Do Inference

Read video frames and enhance them with superresolution. Save the superresolution video, the bicubic video and the comparison video to file.

The code in this cell reads the video frame by frame. Each frame is resized and reshaped to network input shape and upsampled with bicubic interpolation to target shape. Both the original and the bicubic image are propagated through the network. The network result is a numpy array with floating point values, with a shape of (1,3,1920,1080). This array is converted to an 8-bit image with shape (1080,1920,3) and written to `superres_video`. The bicubic image is written to `bicubic_video` for comparison. Lastly, the bicubic and result frames are combined side by side and written to `comparison_video`. A progress bar shows the progress of the process. Inference time is measured, as well as total time to process each frame, which includes inference time as well as the time it takes to process and write the video.

```

start_time = time.perf_counter()

frame_nr = 0

total_inference_duration = 0

progress_bar = ProgressBar(total=total_frames)

progress_bar.display()

cap = cv2.VideoCapture(filename=str(video_path))

try:

while cap.isOpened():

ret, image = cap.read()

if not ret:

cap.release()

break

if frame_nr >= total_frames:

break

# Resize the input image to network shape and convert from (H,W,C) to

# (N,C,H,W)

resized_image = cv2.resize(src=image, dsize=(input_width, input_height))

input_image_original = np.expand_dims(resized_image.transpose(2, 0, 1), axis=0)

# Resize and reshape the image to the target shape with bicubic

# interpolation

bicubic_image = cv2.resize(

src=image, dsize=(target_width, target_height), interpolation=cv2.INTER_CUBIC

)

input_image_bicubic = np.expand_dims(bicubic_image.transpose(2, 0, 1), axis=0)

# Do inference

inference_start_time = time.perf_counter()

result = exec_net.infer(

inputs={

original_image_key: input_image_original,

bicubic_image_key: input_image_bicubic,

}

)[output_key]

inference_stop_time = time.perf_counter()

inference_duration = inference_stop_time - inference_start_time

total_inference_duration += inference_duration

# Transform inference result into an image

result_frame = convert_result_to_image(result=result)

# Write resulting image and bicubic image to video

superres_video.write(image=result_frame)

bicubic_video.write(image=bicubic_image)

stacked_frame = np.hstack((bicubic_image, result_frame))

comparison_video.write(image=stacked_frame)

frame_nr = frame_nr + 1

# Update progress bar and status message

progress_bar.progress = frame_nr

progress_bar.update()

if frame_nr % 10 == 0 or frame_nr == total_frames:

clear_output(wait=True)

progress_bar.display()

display(

Pretty(

f"Processed frame {frame_nr}. Inference time: "

f"{inference_duration:.2f} seconds "

f"({1/inference_duration:.2f} FPS)"

)

)

except KeyboardInterrupt:

print("Processing interrupted.")

finally:

superres_video.release()

bicubic_video.release()

comparison_video.release()

end_time = time.perf_counter()

duration = end_time - start_time

print(f"Video's saved to {comparison_video_path.parent} directory.")

print(

f"Processed {frame_nr} frames in {duration:.2f} seconds. Total FPS "

f"(including video processing): {frame_nr/duration:.2f}. "

f"Inference FPS: {frame_nr/total_inference_duration:.2f}."

)

```

### Show Side-by-Side Video of Bicubic and Superresolution Version

```

if not comparison_video_path.exists():

raise ValueError("The comparison video does not exist.")

else:

video_link = FileLink(comparison_video_path)

video_link.html_link_str = "<a href='%s' download>%s</a>"

display(

HTML(

f"Showing side by side comparison. If you cannot see the video in "

"your browser, please click on the following link to download "

f"the video<br>{video_link._repr_html_()}"

)

)

display(Video(comparison_video_path, width=800, embed=True))

```

| true |

code

| 0.709114 | null | null | null | null |

|

# Notebook contents:

This notebook contains a lecture. The code for generating plots are found at the of the notebook. Links below.

- [presentation](#Session-1b:)

- [code for plots](#Code-for-plots)

# Session 2:

## Effective ML

*Andreas Bjerre-Nielsen*

## Vaaaamos

```

import warnings

from sklearn.exceptions import ConvergenceWarning

warnings.filterwarnings(action='ignore', category=ConvergenceWarning)

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

```

## Agenda

1. [model bias and variance](#Model-bias-and-variance)

1. [model building](#Model-building)

1. model selection

- [basic validation](#Model-selection)

- [cross validation](#Cross-validation)

- [tools for selection](#Tools-for-model-selection)

# Review

## Two agendas (1)

What are the objectives of empirical research?

1. *causation*: what is the effect of a particular variable on an outcome?

2. *prediction*: find some function that provides a good prediction of $y$ as a function of $x$

## Two agendas (2)

How might we express the agendas in a model?

$$ y = \alpha + \beta x + \varepsilon $$

- *causation*: interested in $\hat{\beta}$

- *prediction*: interested in $\hat{y}$

## Model fitting (1)

*How does over- and underfitting look like for regression?*

```

f_bias_var['regression'][2]

```

## Model fitting (2)

*What does underfitting and overfitting look like for classification?*

```

f_bias_var['classification'][2]

```

## What tools have seen?

- Supervised learning (having a target variable)

- Classification problems: Perceptron, Adaline, Logistic regression

- Regression problems: Linear regression

- We learned about optimization: gradient descent

- How can we say whether a model generalizes:

- We split data randomly into training and testing data.

## Fitting a polynomial (1)

Polyonomial: $f(x) = 2+8*x^4$

Try models of increasing order polynomials.

- Split data into train and test (50/50)

- For polynomial order 0 to 9:

- Iteration n: $y = \sum_{k=0}^{n}(\beta_k\cdot x^k)+\varepsilon$. (Taylor expansion)

- Estimate order n model on training data

- Evaluate with on test data with $\log RMSE$ ($= \log \sqrt{SSE/n}$)

## Fitting a polynomial (2)

We generate samples of data from true model (fourth order polynomial).

```

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LinearRegression

def true_fct(X):

return 2+X**4

n_samples = 25

np.random.seed(0)

X_train = np.random.normal(size=(n_samples,1))

y_train = true_fct(X_train).reshape(-1) + np.random.randn(n_samples)

X_test = np.random.normal(size=(n_samples,1))

y_test = true_fct(X_test).reshape(-1) + np.random.randn(n_samples)

```

## Fitting a polynomial (3)

We estimate the polynomials and store MSE for train and test:

```

from sklearn.metrics import mean_squared_error as mse

test_mse = []

train_mse = []

parameters = []

max_degree = 15

degrees = range(max_degree+1)

for p in degrees:

X_train_p = PolynomialFeatures(degree=p).fit_transform(X_train)

X_test_p = PolynomialFeatures(degree=p).fit_transform(X_test)

reg = LinearRegression().fit(X_train_p, y_train)

train_mse += [mse(reg.predict(X_train_p),y_train)]

test_mse += [mse(reg.predict(X_test_p),y_test)]

parameters.append(reg.coef_)

```

## Fitting a polynomial (4)

*So what happens to the model performance in- and out-of-sample?*

```

degree_index = pd.Index(degrees,name='Polynomial degree ~ model complexity')

ax = pd.DataFrame({'Train set':train_mse, 'Test set':test_mse})\

.set_index(degree_index).plot(figsize=(14,5), logy=True)

ax.set_ylabel('Mean squared error')

```

## Fitting a polynomial (5)

*Quiz: Why does it go wrong on the test data?*

- more spurious parameters

- (we include variables beyond those in true model, i.e. $x^4$ and the bias term)

- the coefficient size increases (next slide)

## Fitting a polynomial (6)

*What do you mean coefficient size increase?*

```

order_idx = pd.Index(range(len(degrees)),name='Polynomial order')

ax = pd.DataFrame(parameters,index=order_idx)\

.abs().mean(1).plot(figsize=(14,5),logy=True)

ax.set_ylabel('Mean parameter size')

```

## Fitting a polynomial (7)

*How else could we visualize this problem?*

```

f_bias_var['regression'][2]

```

# The curse of overfitting and regularization

## Looking for a remedy

*How might we solve the overfitting problem?*

- too many number of variables (spurious relations)

- excessive magnitude of the coefficient size of variables

Could we incorporate these two issues in our optimization problem?

## Regularization (1)

*Why do we regularize?*

- To mitigate overfitting > better model predictions

*How do we regularize?*

- We make models which are less complex:

- reducing the **number** of coefficient;

- reducing the **size** of the coefficients.

## Regularization (2)

*What does regularization look like?*

We add a penalty term our optimization procedure:

$$ \text{arg min}_\beta \, \underset{\text{MSE=SSE/n}}{\underbrace{E[(y_0 - \hat{f}(x_0))^2]}} + \underset{\text{penalty}}{\underbrace{\lambda \cdot R(\beta)}}$$

Introduction of penalties implies that increased model complexity has to be met with high increases precision of estimates.

## Regularization (3)

*What are some used penalty functions?*

The two most common penalty functions are L1 and L2 regularization.

- L1 regularization (***Lasso***): $R(\beta)=\sum_{j=1}^{p}|\beta_j|$

- Makes coefficients sparse, i.e. selects variables by removing some (if $\lambda$ is high)

- L2 regularization (***Ridge***): $R(\beta)=\sum_{j=1}^{p}\beta_j^2$

- Reduce coefficient size

- Fast due to analytical solution

*To note:* The *Elastic Net* uses a combination of L1 and L2 regularization.

## Regularization (4)

*How the Lasso (L1 reg.) deviates from OLS*

<center><img src='http://rasbt.github.io/mlxtend/user_guide/general_concepts/regularization-linear_files/l1.png' alt="Drawing" style="width: 800px;"/></center>

## Regularization (5)

*How the Ridge regression (L2 reg.) deviates from OLS*

<center><img src='http://rasbt.github.io/mlxtend/user_guide/general_concepts/regularization-linear_files/l2.png' alt="Drawing" style="width: 550px;"/></center>

## Regularization (6)

*How might we describe the $\lambda$ of Lasso and Ridge?*

These are hyperparameters that we can optimize over.

## Regularization (7)

*Is there a generalization of of Lasso and Ridge?*

Yes, the elastic net allows both types of regularization. Thererfore, it has two hyperparameters.

# Implementation details

## Underfitting remedies

*Is it possible to solve the underfitting problem?*

Yes, there are in general two ways.

- Using polynomial interactions of all features.

- This is known as Taylor expansion

- Note: we need to use regularization too curb impact of overfitting!

- Using non-linear models who can capture all patterns.

- These are called universal approximators

- Return to an overview of these in Session 14.

## Underfitting remedies (2)

*Some of the models we see here, e.g. Perceptrons, seem too simple - are they ever useful?*

- No, not for serious machine learning.

- But for exposition (your learning), yes.

- However, the perceptron and related models are building blocks for building neural networks.

## The devils in the details (1)

*So we just run regularization?*

We need to rescale our features:

- convert to zero mean:

- standardize to unit std:

Compute in Python:

- option 1: `StandardScaler` in `sklearn` (RECOMMENDED)

- option 2: `(X - np.mean(X)) / np.std(X)`

## The devils in the details (2)

*So we just scale our test and train?*

# NO

Fit to the distribution in the **training data first**, then rescale train and test! See more [here](https://stats.stackexchange.com/questions/174823/how-to-apply-standardization-normalization-to-train-and-testset-if-prediction-i).

## The devils in the details (3)

*So we just rescale before using polynomial features?*

# NO

Otherwise the interacted varaibles are not gaussian distributed.

## The devils in the details (4)

*Does sklearn's `PolynomialFeatures` work for more than variable?*

# Model bias and variance

## Bias and variance (1)

*How do we describe the modelling error?*

From [Wikipedia](https://en.wikipedia.org/wiki/Bias%E2%80%93variance_tradeoff) 2019:

- model **bias**: _an error from erroneous assumptions in the learning algorithm_

- high bias can cause an algorithm to miss the relevant relations between features and target outputs (**underfitting**)

- model **variance**: _an error from sensitivity to small fluctuations in the training set_

- high variance can cause an algorithm to model the random noise in the training data, rather than the intended outputs (**overfitting**).

## Bias and variance (2)

*So what is overfitting?*

Overfitting is: low bias / high variance

- traning our model captures all patterns but we also find some irrelevant

- reacts too much to training sample errors

- some errors are just noise, and thus we find too many spurious relations

- examples of causes:

- too much polynomial expansion of variables (`PolynomialFeatures`)

- non-linear/logistic without properly tuned hyperparameters:

- Decision Trees, Support Vector Machines or Neural Networks

## Bias and variance (3)

*So what is underfitting?*

Underfitting is: high bias / low variance

- oversimplification of models, cannot approximate all patterns found

- examples of causes:

- linear and logistic regression (without polynomial expansion)

## Bias and variance (4)

*Not so fast.. OLS is unbiased, right?*

Yes, OLS is unbiased. But...?

- But .. only by assumption..

- Requires we know the true form of the model.

- However, we never know do..

*What happens if we introduce regularization?*

- Then model is no longer unbiased.

- (if we assume the model is true)

# Model building

## Model pipelines (1)

*Is there a smart way to build ML models?*

Yes, we build a pipeline (input (tidy) -> target)

- Preprocessing data

- Standard: adding polynomials, imputation, rescaling

- Unsupervised learning

- Supervised learning

## Model pipelines (2)

*How does the pipeline look? Is there data leakage?*

<center><img src='https://github.com/rasbt/python-machine-learning-book-2nd-edition/raw/master/code/ch06/images/06_01.png' alt="Drawing" style="width: 700px;"/></center>

## Model pipelines (3)

*What are the advantages of using a pipeline?*

- Ensures good practice - we only fit on training data.

- No leakage of data from train to test!

- Much less code!

## Applying a model pipeline (1)

*What would this look like in Python?*

```

from sklearn.model_selection import train_test_split

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import PolynomialFeatures, StandardScaler

pipe_preproc = make_pipeline(PolynomialFeatures(),

StandardScaler())

print(pipe_preproc.steps[0])

print(pipe_preproc.steps[1])

```

## Applying a model pipeline (2)

*Does this remind you of something?*

# YES!

### Method chaining from Pandas

## Applying a model pipeline (3)

*Let's some load Boston house price data*

```

from sklearn.datasets import load_boston

boston = load_boston()

# print(boston['DESCR'])

# print('\n'.join(load_boston()['DESCR'].split('\n')[12:26]))

X = boston.data # features

y = boston.target # target

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

```

## Applying a model pipeline (4)

*And how do I apply the pipe on the data?*

```

pipe_preproc = make_pipeline(PolynomialFeatures(),

StandardScaler()) # apply preproc - fit on train

X_train_prep = pipe_preproc.fit_transform(X_train) # transform training data

X_test_prep = pipe_preproc.transform(X_test) # transform test data

```

## Applying a model pipeline (5)

*What would it like look if we did use the pipe..?*

The more steps we have, the more code we save.

```

poly_trans = PolynomialFeatures()

scaler = StandardScaler()

# we call both transformations twice on both test and train

X_train_poly = poly_trans.fit_transform(X_train)

X_test_poly = poly_trans.transform(X_test)

X_train_prep_alt = scaler.fit_transform(X_train_poly)

X_test_prep_alt = scaler.transform(X_test_poly)

```

# Model selection

## Measuring the problem

*Does machine learning work out of the box?*

- In some cases ML works quite well out of the box.

- Often ML requires making careful choices.

- Note that automated machine learning packages and services exist.

- E.g. AutoML - this a hot research topic

*Which choices are to be made?*

- We need to pick model building hyperparameters.

- E.g elastic net hyperparameters: $\lambda$ for L1 and L2 regularization

- i.e. $\lambda$ for Lasso, Ridge and Elastic Net

## Model validation (1)

*How do we measure our model's performance for different hyperparameters?*

- Remember we cannot use the test set.

*Could we somehow mimick what we do with test data?*

- Yes, we can split the remaining non-test data into training and validation data:

- we train model for various hyperparameters on training data;

- pick the hyperparameters which performs best on validation data.

## Model validation (2)

*The non-test data is split into training and validation*

<center><img src='https://github.com/rasbt/python-machine-learning-book-2nd-edition/raw/master/code/ch06/images/06_02.png' alt="Drawing" style="width: 500px;"/></center>

## Model validation (3)

*What would this look like in Python?*

```

# splitting into development (2/3) and test data (1/3)

X_dev, X_test, y_dev, y_test = train_test_split(X, y, test_size=1/3, random_state=1)

# splitting development into train (1/3) and validation (1/3)

X_train, X_val, y_train, y_val = train_test_split(X_dev, y_dev, test_size=1/2, random_state=1)

```

## Model validation (4)

Let's train a linear regression model

```

from sklearn.linear_model import Lasso, LinearRegression

pipe_lr = make_pipeline(PolynomialFeatures(include_bias=True),

StandardScaler(),

LinearRegression())

pipe_lr.fit(X_dev, y_dev)

```

## Model validation (5)

Let's find the Lasso model which performs best in the validation set

```

from sklearn.metrics import mean_squared_error as mse

perform = []

lambdas = np.logspace(-4, 4, 33)

for lambda_ in lambdas:

pipe_lasso = make_pipeline(PolynomialFeatures(include_bias=True),

StandardScaler(),

Lasso(alpha=lambda_, random_state=1))

pipe_lasso.fit(X_train, y_train)

y_pred = pipe_lasso.predict(X_val)

perform.append(mse(y_pred, y_val))

hyperparam_perform = pd.Series(perform,index=lambdas)

optimal = hyperparam_perform.nsmallest(1)

print('Optimal lambda:', optimal.index[0])

print('Validation MSE: %.3f' % optimal.values[0])

```

## Model validation (6)

Let's compare the performance of the Lasso vs. Linear Regression

```

# insert optimal lambda into new model

pipe_lasso = make_pipeline(PolynomialFeatures(include_bias=False),

StandardScaler(),

Lasso(alpha=optimal.index[0]))

# fit new model on all of the development (non-test) data

pipe_lasso.fit(X_dev, y_dev)

# compare model performance on test data

print('Lasso', round(mse(pipe_lasso.predict(X_test),y_test), 1))

print('LinReg', round(mse(pipe_lr.predict(X_test),y_test), 1))

```

## Smarter validation

*Is this approach the smartest way for deciding on choice of hyperparameters?*

# NO

Our model choice depends a lot on which sample we pick. Could we use more of the data?

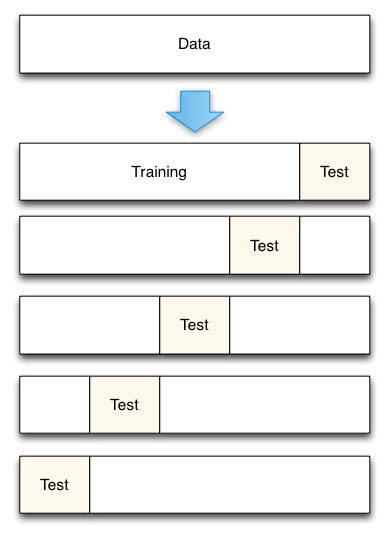

# Cross validation

## The holdout method

*How do we got the more out of the data?*

We reuse the data in the development set repeatedly

- We test on all the data

- Rotate which parts of data is used for test and train.

## Leave-one-out CV

*How do we got the most of the data?*

The most robust approach

- Each single observation in the training data we use the remaining data to train.

- Makes number of models equal to the number of observations

- Very computing intensive - does not scale!

LOOCV

## K fold method (1)

*How do balance computing time vs. overfitting?*

We split the sample into $K$ even sized test bins.

- For each test bin $k$ we use the remaining data for training.

Advantages:

- We use all our data for testing.

- Training is done with 100-(100/K) pct. of the data, i.e. 90 pct. for K=10.

## K fold method (2)

In K-fold cross validation we average the errors.

<center><img src='https://github.com/rasbt/python-machine-learning-book-2nd-edition/raw/master/code/ch06/images/06_03.png' alt="Drawing" style="width: 900px;"/></center>

## K fold method (3)

*How to do K-fold cross validation to select our model?*

We compute MSE for every lambda and every fold (nested for loop)

## K fold method (3)

Code for implementation

```

from sklearn.model_selection import KFold

kfolds = KFold(n_splits=10)

folds = list(kfolds.split(X_dev, y_dev))

# outer loop: lambdas

mseCV = []

for lambda_ in lambdas:

# inner loop: folds

mseCV_ = []

for train_idx, val_idx in folds:

# train model and compute MSE on test fold

pipe_lassoCV = make_pipeline(PolynomialFeatures(degree=2, include_bias=True),

StandardScaler(),

Lasso(alpha=lambda_, random_state=1))

X_train, y_train = X_dev[train_idx], y_dev[train_idx]

X_val, y_val = X_dev[val_idx], y_dev[val_idx]

pipe_lassoCV.fit(X_train, y_train)

mseCV_.append(mse(pipe_lassoCV.predict(X_val), y_val))

# store result

mseCV.append(mseCV_)

# convert to DataFrame

lambdaCV = pd.DataFrame(mseCV, index=lambdas)

```

# K fold method (4)

Training the model with optimal hyperparameters and compare MSE

```

# choose optimal hyperparameters

optimal_lambda = lambdaCV.mean(axis=1).nsmallest(1)

# retrain/re-estimate model using optimal hyperparameters

pipe_lassoCV = make_pipeline(PolynomialFeatures(include_bias=False),

StandardScaler(),

Lasso(alpha=optimal_lambda.index[0], random_state=1))

pipe_lassoCV.fit(X_dev,y_dev)

# compare performance

models = {'Lasso': pipe_lasso, 'Lasso CV': pipe_lassoCV, 'LinReg': pipe_lr}

for name, model in models.items():

score = mse(model.predict(X_test),y_test)

print(name, round(score, 1))

```

## K fold method (5)

*What else could we use cross-validation for?*

- Getting more evaluations of our model performance.

- We can cross validate at two levels:

- Outer: we make multiple splits of test and train/dev.

- Inner: within each train/dev. dataset we make cross validation to choose hyperparameters

# Tools for model selection

## Learning curves (1)

*What does a model that balances over- and underfitting look like?*

<center><img src='https://github.com/rasbt/python-machine-learning-book-2nd-edition/raw/master/code/ch06/images/06_04.png' alt="Drawing" style="width: 700px;"/></center>

## Learning curves (2)

*Is it easy to make learning curves in Python?*

```

from sklearn.model_selection import learning_curve

train_sizes, train_scores, test_scores = \

learning_curve(estimator=pipe_lassoCV,

X=X_dev,

y=y_dev,

train_sizes=np.arange(0.2, 1.05, .05),

scoring='neg_mean_squared_error',

cv=3)

mse_ = pd.DataFrame({'Train':-train_scores.mean(axis=1),

'Test':-test_scores.mean(axis=1)})\

.set_index(pd.Index(train_sizes,name='sample size'))

print(mse_.head(5))

```

## Learning curves (3)

```

f_learn, ax = plt.subplots(figsize=(10,4))

ax.plot(train_sizes,-test_scores.mean(1), alpha=0.25, linewidth=2, label ='Test', color='blue')

ax.plot(train_sizes,-train_scores.mean(1),alpha=0.25, linewidth=2, label='Train', color='orange')

ax.set_title('Mean performance')

ax.set_ylabel('Mean squared error')

ax.set_yscale('log')

ax.legend()

```

## Learning curves (4)

```

f_learn, ax = plt.subplots(figsize=(10,4))

plot_info = [(train_scores, 'Train','orange'), (test_scores, 'Test','blue')]

for scores, label, color in plot_info:

ax.fill_between(train_sizes, -scores.min(1), -scores.max(1),

alpha=0.25, label =label, color=color)

ax.set_title('Range of performance (min, max)')

ax.set_ylabel('Mean squared error')

ax.set_yscale('log')

ax.legend()

```

## Validation curves (1)

*Can we plot the optimal hyperparameters?*

```

from sklearn.model_selection import validation_curve

train_scores, test_scores = \

validation_curve(estimator=pipe_lasso,

X=X_dev,

y=y_dev,

param_name='lasso__alpha',

param_range=lambdas,

scoring='neg_mean_squared_error',

cv=3)

mse_score = pd.DataFrame({'Train':-train_scores.mean(axis=1),

'Validation':-test_scores.mean(axis=1),

'lambda':lambdas})\

.set_index('lambda')

print(mse_score.Validation.nsmallest(1))

```

## Validation curves (2)

```

f,ax = plt.subplots(figsize=(10,6))

mse_score.plot(logx=True, logy=True, ax=ax)

ax.axvline(mse_score.Validation.idxmin(), color='black',linestyle='--')

```

## Grid search (1)

*How do we search for two or more optimal parameters? (e.g. elastic net)*

- Goal: find the optimal parameter combination: $$\lambda_1^*,\lambda_2^*=\arg\min_{\lambda_1,\lambda_2}MSE^{CV}(X_{train},y_{train})$$

- Option 1: We can loop over the joint grid of parameters.

- One level for each parameter.

- Caveats: a lot of code / SLOW

- Option 2: sklearn has `GridSearchCV` has a tool which tests all parameter combinations.

## Grid search (2)

*How does this look in Python?*

```

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import ElasticNet

pipe_el = make_pipeline(PolynomialFeatures(include_bias=False),

StandardScaler(),

ElasticNet())

gs = GridSearchCV(estimator=pipe_el,

param_grid={'elasticnet__alpha':np.logspace(-4,4,10)*2,

'elasticnet__l1_ratio':np.linspace(0,1,10)},

scoring='neg_mean_squared_error',

n_jobs=4,

cv=10)

models['ElasicNetCV'] = gs.fit(X_dev, y_dev)

```

- Notation: double underscore between estimator and hyperparameter, e.g. 'est__hyperparam'

- Scoring: negative MSE as we're maximizing the score ~ minimize MSE.

## Grid search (3)

*What does the grid search yield?*

```

for name, model in models.items():

score = mse(model.predict(X_test),y_test)

print(name, round(score, 2))

print()

print('CV params:', gs.best_params_)

```

## Grid search (4)

*What if we have 10,000 parameter combinations?*

- Option 1: you buy a cluster on Amazon, learn how to parallelize across computers.

- Option 2: you drop some of the parameter values

- Option 3: `RandomizedSearchCV` searches a subset of the combinations.

## Miscellanous

*How do we get the coefficient from the models?*

```

lasso_model = pipe_lassoCV.steps[2][1] # extract model from pipe

lasso_model.coef_[0:13] # extract coeffiecients from model

```

# The end

[Return to agenda](#Agenda)

# Code for plots

```

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import requests

import seaborn as sns

plt.style.use('ggplot')

%matplotlib inline

SMALL_SIZE = 16

MEDIUM_SIZE = 18

BIGGER_SIZE = 20

plt.rc('font', size=SMALL_SIZE) # controls default text sizes

plt.rc('axes', titlesize=SMALL_SIZE) # fontsize of the axes title

plt.rc('axes', labelsize=MEDIUM_SIZE) # fontsize of the x and y labels

plt.rc('xtick', labelsize=SMALL_SIZE) # fontsize of the tick labels

plt.rc('ytick', labelsize=SMALL_SIZE) # fontsize of the tick labels

plt.rc('legend', fontsize=SMALL_SIZE) # legend fontsize

plt.rc('figure', titlesize=BIGGER_SIZE) # fontsize of the figure title

plt.rcParams['figure.figsize'] = 10, 4 # set default size of plots

```

### Plots of ML types

```

%run ../base/ML_plots.ipynb

```

| true |

code

| 0.58516 | null | null | null | null |

|

In [The Mean as Predictor](mean_meaning), we found that the mean had some good

properties as a single best predictor for a whole distribution.

* The mean gives a total prediction error of zero. Put otherwise, on average,

your prediction error is zero.

* The mean gives the lowest squared error. Put otherwise, the mean gives the

lowest average squared difference from the observed value.

Now we can consider what predictor we should use when predicting one set of values, from a different set of values.

We load our usual libraries.

```

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

# Make plots look a little bit more fancy

plt.style.use('fivethirtyeight')

# Print to 4 decimal places, show tiny values as 0

np.set_printoptions(precision=4, suppress=True)

import pandas as pd

```

We start with some [data on chronic kidney

disease]({{ site.baseurl }}/data/chronic_kidney_disease).

Download the data to your computer via this link: [ckd_clean.csv]({{

site.baseurl }}/data/ckd_clean.csv).

This is a data table with one row per patient and one column per test on that

patient. Many of columns are values from blood tests. Most of the patients

have chronic kidney disease.

To make things a bit easier this dataset is a version from which we have already dropped all missing values. See the dataset page linked above for more detail.

```

# Run this cell

ckd = pd.read_csv('ckd_clean.csv')

ckd.head()

```

We are interested in two columns from this data frame, "Packed Cell Volume" and "Hemoglobin".

[Packed Cell Volume](https://en.wikipedia.org/wiki/Hematocrit) (PCV) is a

measurement of the percentage of blood volume taken up by red blood cells. It

is a measurement of anemia, and anemia is a common consequence of chronic

kidney disease.

```

# Get the packed cell volume values as a Series.

pcv_series = ckd['Packed Cell Volume']

# Show the distribution.

pcv_series.hist()

```

"Hemoglobin" (HGB) is the concentration of the

[hemoglobin](https://en.wikipedia.org/wiki/Hemoglobin) molecule in blood, in

grams per deciliter. Hemoglobin is the iron-containing protein in red blood

cells that carries oxygen to the tissues.

```

# Get the hemoglobin concentration values as a Series.

hgb_series = ckd['Hemoglobin']

# Show the distribution.

hgb_series.hist()

```

We convert these Series into arrays, to make them simpler to work with. We do

this with the Numpy `array` function, that makes arrays from many other types

of object.

```

pcv = np.array(pcv_series)

hgb = np.array(hgb_series)

```

## Looking for straight lines

The [Wikipedia page for PCV](https://en.wikipedia.org/wiki/Hematocrit) says (at

the time of writing):

> An estimated hematocrit as a percentage may be derived by tripling the

> hemoglobin concentration in g/dL and dropping the units.

> [source](https://www.doctorslounge.com/hematology/labs/hematocrit.htm).

This rule-of-thumb suggests that the values for PCV will be roughly three times

the values for HGB.

Therefore, if we plot the HGB values on the x-axis of a plot, and the PCV

values on the y-axis, we should see something that is roughly compatible with a

straight line going through 0, 0, and with a slope of about 3.

Here is the plot. This time, for fun, we add a label to the X and Y axes with

`xlabel` and `ylabel`.

```

# Plot HGB on the x axis, PCV on the y axis

plt.plot(hgb, pcv, 'o')

plt.xlabel('Hemoglobin concentration')

plt.ylabel('Packed cell volume')

```

The `'o'` argument to the plot function above is a "plot marker". It tells

Matplotlib to plot the points as points, rather than joining them with lines.

The markers for the points will be filled circles, with `'o'`, but we can also

ask for other symbols such as plus marks (with `'+'`) and crosses (with `'x'`).

The line does look a bit like it has a slope of about 3. But - is that true?

Is the *best* slope 3? What slope would we find, if we looked for the *best*

slope? What could *best* mean, for *best slope*?

## Adjusting axes

We would like to see what this graph looks like in relation to the origin -

x=0, y=0. In order to this, we can add a `plt.axis` function call, like this:

```

# Plot HGB on the x axis, PCV on the y axis

plt.plot(hgb, pcv, 'o')

plt.xlabel('Hemoglobin concentration')

plt.ylabel('Packed cell volume')

# Set the x axis to go from 0 to 18, y axis from 0 to 55.

plt.axis([0, 18, 0, 55])

```

It does look plausible that this line goes through the origin, and that makes

sense. All hemoglobin is in red blood cells; we might expect the volume of red

blood cells to be zero when the hemoglobin concentration is zero.

## Putting points on plots

Before we go on, we will need some machinery to plot arbitrary points on plots.

In fact this works in exactly the same way as the points you have already seen

on plots. We use the `plot` function, with a suitable plot marker. The x

coordinates of the points go in the first argument, and the y coordinates go in

the second.

To plot a single point, pass a single x and y coordinate value:

```

plt.plot(hgb, pcv, 'o')

# A red point at x=5, y=40

plt.plot(5, 40, 'o', color='red')

```

To plot more than one point, pass multiple x and y coordinate values:

```

plt.plot(hgb, pcv, 'o')

# Two red points, one at [5, 40], the other at [10, 50]

plt.plot([5, 10], [40, 50], 'o', color='red')

```

## The mean as applied to plots

We want a straight line that fits these points.

The straight line should do the best job it can in *predicting* the PCV values from the HGB values.

We found that the mean was a good predictor for a distribution of values. We

could try and find a line or something similar that went through the mean of

the PCV values, at any given HGB value.

Let's split the HGB values up into bins centered on 7.5, 8.5, and so on. Then

we take the mean of all the PCV values corresponding to HGB values between 7

and 8, 8 and 9, and so on.

```

# The centers for our HGB bins

hgb_bin_centers = np.arange(7.5, 17.5)

hgb_bin_centers

# The number of bins

n_bins = len(hgb_bin_centers)

n_bins

```

Show the center of the bins on the x axis of the plot.

```

plt.plot(hgb, pcv, 'o')

plt.plot(hgb_bin_centers, np.zeros(n_bins), 'o', color='red')

```

Take the mean of the PCV values for each bin.

```

pcv_means = np.zeros(n_bins)

for i in np.arange(n_bins):

mid = hgb_bin_centers[i]

# Boolean identifing indices withing the HGB bin

fr_within_bin = (hgb >= mid - 0.5) & (hgb < mid + 0.5)

# Take the mean of the corresponding PCV values

pcv_means[i] = np.mean(pcv[fr_within_bin])

pcv_means

```

These means should be good predictors for PCV values, given an HGB value. We

check the bin of the HGB value and take the corresponding PCV mean as the

prediction.

Here is a plot of the means of PCV for every bin:

```

plt.plot(hgb, pcv, 'o')

plt.plot(hgb_bin_centers, pcv_means, 'o', color='red')

```

## Finding a predicting line

The means per bin give some prediction of the PCV values from the HGB. Can we

do better? Can we find a line that predicts the PCV data from the HGB data?

Remember, any line can be fully described by an *intercept* $c$ and a *slope*

$s$. A line predicts the $y$ values from the $x$ values, using the slope $s$

and the intercept $c$:

$$

y = c + x * s

$$

The *intercept* is the value of the line when x is equal to 0. It is therefore

where the line crosses the y axis.

In our case, let us assume the intercept is 0. We will assume PCV of 0 if

there is no hemoglobin.

Now we want to find a good *slope*. The *slope* is the amount that the y

values increase for a one unit increase in the x values. In our case, it is

the increase in the PCV for a 1 gram / deciliter increase in the HGB.

Let's guess the slope is 3, as Wikipedia told us it should be:

```

slope = 3

```

Remember our line prediction for y (PCV) is:

$$

y = c + x * s

$$

where x is the HGB. In our case we assume the intercept is 0, so:

```

pcv_predicted = hgb * slope

```

Plot the predictions in red on the original data in blue.

```

plt.plot(hgb, pcv, 'o')

plt.plot(hgb, pcv_predicted, 'o', color='red')

```

The red are the predictions, the blue are the original data. At each PCV value

we have a prediction, and therefore, an error in our prediction; the difference

between the predicted value and the actual values.

```

error = pcv - pcv_predicted

error[:10]

```

In this plot, for each point, we draw a thin dotted line between the prediction

of PCV for each point, and its actual value.

```

plt.plot(hgb, pcv, 'o')

plt.plot(hgb, pcv_predicted, 'o', color='red')

# Draw a line between predicted and actual

for i in np.arange(len(hgb)):

x = hgb[i]

y_0 = pcv_predicted[i]

y_1 = pcv[i]

plt.plot([x, x], [y_0, y_1], ':', color='black', linewidth=1)

```

## What is a good line?

We have guessed a slope, and so defined a line. We calculated the errors from

our guessed line.

How would we decide whether our slope was a good one? Put otherwise, how would

we decide when we have a good line?

A good line should have small prediction errors. That is, the line should give

a good prediction of the points. That is, the line should result in small

*errors*.

We would like a slope that gives us the smallest error.

## One metric for the line

[The Mean as Predictor](mean_meaning) section showed that the mean is the value

with the smallest squared distance from the other values in the distribution.

The mean is the predictor value that minimizes the sum of squared distances

from the other values.

We can use the same metric for our line. Instead of using a single vector as a

predictor, now we are using the values on the line as predictors. We want the

HGB slope, in our case, that gives the best predictors of the PCV values.

Specifically, we want the slope that gives the smallest sum of squares

difference between the line prediction and the actual values.

We have already calculated the prediction and error for our slope of 3, but

let's do it again, and then calculate the *sum of squares* of the error:

```

slope = 3

pcv_predicted = hgb * slope

error = pcv - pcv_predicted

# The sum of squared error

np.sum(error ** 2)

```

We are about to do this calculation many times, for many different slopes. We

need a *function*.

In the function below, we are using [function world](../07/functions)

to get the values of `hgb` and `pcv` defined here at the top level,

outside *function world*. The function can see these values, from

function world.

```

def sos_error(slope):

predicted = hgb * slope # 'hgb' comes from the top level

error = pcv - predicted # 'pcv' comes from the top level

return np.sum(error ** 2)

```

First check we get the same answer as the calculation above:

```

sos_error(3)

```

Does 3.5 give a higher or lower sum of squared error?

```

sos_error(3.5)

```

Now we can use the same strategy as we used in the [mean meaning](mean_meaning)

page, to try lots of slopes, and find the one that gives the smallest sum of

squared error.

```

# Slopes to try

some_slopes = np.arange(2, 4, 0.01)

n_slopes = len(some_slopes)

# Try all these slopes, calculate and record sum of squared error

sos_errors = np.zeros(n_slopes)

for i in np.arange(n_slopes):

slope = some_slopes[i]

sos_errors[i] = sos_error(slope)

# Show the first 10 values

sos_errors[:10]

```

We plot the slopes we have tried, on the x axis, against the sum of squared

error, on the y-axis.

```

plt.plot(some_slopes, sos_errors)

plt.xlabel('Candidate slopes')

plt.ylabel('Sum of squared error')

```

The minimum of the sum of squared error is:

```

np.min(sos_errors)

```

We want to find the slope that corresponds to this minimum. We can use

[argmin](where_and_argmin).

```

# Index of minumum value

i_of_min = np.argmin(sos_errors)

i_of_min

```

This is the index position of the minimum. We will therefore get the minimum

(again) if we index into the original array with the index we just found:

```

# Check we do in fact get the minimum at this index

sos_errors[i_of_min]

```

Now we can get and show the slope value that corresponds the minimum sum of

squared error:

```

best_slope = some_slopes[i_of_min]

best_slope

```