| prompt | target | chunk_prompt | kind | prob | path | quality_prob | learning_prob | filename |
|---|---|---|---|---|---|---|---|---|

```

%load_ext autoreload

%autoreload 2

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

import freqopttest.util as util

import freqopttest.data as data

import freqopttest.kernel as kernel

import freqopttest.tst as tst

import freqopttest.glo as glo

import sys

# sample source

m = 2000

dim = 200

n = m

seed = 11

#ss = data.SSGaussMeanDiff(dim, my=1.0)

ss = data.SSGaussVarDiff(dim)

#ss = data.SSBlobs()

dim = ss.dim()

tst_data = ss.sample(m, seed=seed+1)

tr, te = tst_data.split_tr_te(tr_proportion=0.5, seed=100)

#te = tst_data

```

## smooth CF test

```

J = 7

alpha = 0.01

smooth_cf = tst.SmoothCFTest.create_randn(te, J, alpha=alpha, seed=seed)

smooth_cf.perform_test(te)

```

## grid search to choose the best Gaussian width

```

def randn(J, d, seed):
    rand_state = np.random.get_state()
    np.random.seed(seed)
    M = np.random.randn(J, d)
    np.random.set_state(rand_state)
    return M

T_randn = randn(J, dim, seed)

mean_sd = tr.mean_std()

scales = 2.0**np.linspace(-4, 4, 30)

#list_gwidth = mean_sd*scales*(dim**0.5)

list_gwidth = np.hstack( (mean_sd*scales*(dim**0.5), 2**np.linspace(-8, 8, 20) ))

list_gwidth.sort()

besti, powers = tst.SmoothCFTest.grid_search_gwidth(tr, T_randn, list_gwidth, alpha)

# plot

plt.plot(list_gwidth, powers, 'o-')

plt.xscale('log', basex=2)

plt.xlabel('Gaussian width')

plt.ylabel('Test power')

plt.title('Mean std: %.3g. Best chosen: %.2g'%(mean_sd, list_gwidth[besti]) )

med = util.meddistance(tr.stack_xy())

print('med distance xy: %.3g'%med)

# actual test

best_width = list_gwidth[besti]

scf_grid = tst.SmoothCFTest(T_randn, best_width, alpha)

scf_grid.perform_test(te)

```

## optimize test frequencies

```

op = {'n_test_freqs': J, 'seed': seed, 'max_iter': 300,

'batch_proportion': 1.0, 'freqs_step_size': 0.1,

'gwidth_step_size': 0.01, 'tol_fun': 1e-4}

# optimize on the training set

test_freqs, gwidth, info = tst.SmoothCFTest.optimize_freqs_width(tr, alpha, **op)

scf_opt = tst.SmoothCFTest(test_freqs, gwidth, alpha=alpha)

scf_opt_test = scf_opt.perform_test(te)

scf_opt_test

# plot optimization results

# trajectories of the Gaussian width

gwidths = info['gwidths']

fig, axs = plt.subplots(2, 2, figsize=(10, 9))

axs[0, 0].plot(gwidths)

axs[0, 0].set_xlabel('iteration')

axs[0, 0].set_ylabel('Gaussian width')

axs[0, 0].set_title('Gaussian width evolution')

# evolution of objective values

objs = info['obj_values']

axs[0, 1].plot(objs)

axs[0, 1].set_title(r'Objective $\lambda(T)$')

# trajectories of the test locations

# iters x J. X Coordinates of all test locations

locs = info['test_freqs']

for coord in [0, 1]:
    locs_d0 = locs[:, :, coord]
    J = locs_d0.shape[1]
    axs[1, coord].plot(locs_d0)
    axs[1, coord].set_xlabel('iteration')
    axs[1, coord].set_ylabel('index %d of test_locs'%(coord))
    axs[1, coord].set_title('evolution of %d test locations'%J)

print('optimized width: %.3f'%gwidth)

```

## SCF: optimize just the Gaussian width

```

op_gwidth = {'max_iter': 300,'gwidth_step_size': 0.1,

'batch_proportion': 1.0, 'tol_fun': 1e-4}

# optimize on the training set

rand_state = np.random.get_state()

np.random.seed(seed=seed)

T0_randn = np.random.randn(J, dim)

np.random.set_state(rand_state)

med = util.meddistance(tr.stack_xy())

gwidth, info = tst.SmoothCFTest.optimize_gwidth(tr, T0_randn, med**2, **op_gwidth)

# trajectories of the Gaussian width

gwidths = info['gwidths']

fig, axs = plt.subplots(1, 2, figsize=(10, 4))

axs[0].plot(gwidths)

axs[0].set_xlabel('iteration')

axs[0].set_ylabel('Gaussian width')

axs[0].set_title('Gaussian width evolution')

# evolution of objective values

objs = info['obj_values']

axs[1].plot(objs)

axs[1].set_title(r'Objective $\lambda(T)$')

```

| true |

code

| 0.621512 | null | null | null | null |

|

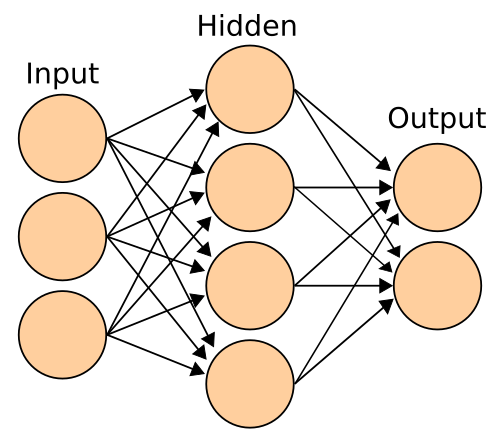

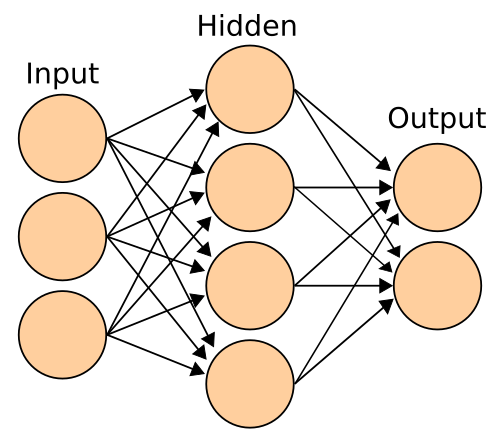

# T81-558: Applications of Deep Neural Networks

**Module 12: Deep Learning and Security**

* Instructor: [Jeff Heaton](https://sites.wustl.edu/jeffheaton/), McKelvey School of Engineering, [Washington University in St. Louis](https://engineering.wustl.edu/Programs/Pages/default.aspx)

* For more information visit the [class website](https://sites.wustl.edu/jeffheaton/t81-558/).

# Module Video Material

Main video lecture:

* [Part 12.1: Security and Information Assurance with Deep Learning](https://www.youtube.com/watch?v=UI8HX5GzpGQ&list=PLjy4p-07OYzulelvJ5KVaT2pDlxivl_BN&index=35)

* [Part 12.2: Programming KDD99 with Keras TensorFlow, Intrusion Detection System (IDS)](https://www.youtube.com/watch?v=2PAFVKA-OWY&list=PLjy4p-07OYzulelvJ5KVaT2pDlxivl_BN&index=36)

* Part 12.3: Security Project (coming soon)

# Helpful Functions

You will see these at the top of every module. They are a set of reusable functions that we will use throughout the course; each is explained in greater detail as the semester progresses. Class 4 contains a complete overview of these functions.

```

import base64

import os

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import requests

from sklearn import preprocessing

# Encode text values to dummy variables(i.e. [1,0,0],[0,1,0],[0,0,1] for red,green,blue)
def encode_text_dummy(df, name):
    dummies = pd.get_dummies(df[name])
    for x in dummies.columns:
        dummy_name = f"{name}-{x}"
        df[dummy_name] = dummies[x]
    df.drop(name, axis=1, inplace=True)

# Encode text values to a single dummy variable. The new columns (which do not replace the old) will have a 1
# at every location where the original column (name) matches each of the target_values. One column is added for
# each target value.
def encode_text_single_dummy(df, name, target_values):
    for tv in target_values:
        l = list(df[name].astype(str))
        l = [1 if str(x) == str(tv) else 0 for x in l]
        name2 = f"{name}-{tv}"
        df[name2] = l

# Encode text values to indexes(i.e. [0],[1],[2] for the three colors; LabelEncoder numbers classes from 0 in sorted order).
def encode_text_index(df, name):
    le = preprocessing.LabelEncoder()
    df[name] = le.fit_transform(df[name])
    return le.classes_

# Encode a numeric column as zscores
def encode_numeric_zscore(df, name, mean=None, sd=None):
    if mean is None:
        mean = df[name].mean()
    if sd is None:
        sd = df[name].std()
    df[name] = (df[name] - mean) / sd

# Convert all missing values in the specified column to the median
def missing_median(df, name):
    med = df[name].median()
    df[name] = df[name].fillna(med)

# Convert all missing values in the specified column to the default
def missing_default(df, name, default_value):
    df[name] = df[name].fillna(default_value)

# Convert a Pandas dataframe to the x,y inputs that TensorFlow needs
def to_xy(df, target):
    result = []
    for x in df.columns:
        if x != target:
            result.append(x)
    # find out the type of the target column. Is it really this hard? :(
    target_type = df[target].dtypes
    target_type = target_type[0] if hasattr(
        target_type, '__iter__') else target_type
    # Encode to int for classification, float otherwise. TensorFlow likes 32 bits.
    if target_type in (np.int64, np.int32):
        # Classification
        dummies = pd.get_dummies(df[target])
        return df[result].values.astype(np.float32), dummies.values.astype(np.float32)
    # Regression
    return df[result].values.astype(np.float32), df[[target]].values.astype(np.float32)

# Nicely formatted time string
def hms_string(sec_elapsed):
    h = int(sec_elapsed / (60 * 60))
    m = int((sec_elapsed % (60 * 60)) / 60)
    s = sec_elapsed % 60
    return f"{h}:{m:>02}:{s:>05.2f}"

# Regression chart.
def chart_regression(pred, y, sort=True):
    t = pd.DataFrame({'pred': pred, 'y': y.flatten()})
    if sort:
        t.sort_values(by=['y'], inplace=True)
    plt.plot(t['y'].tolist(), label='expected')
    plt.plot(t['pred'].tolist(), label='prediction')
    plt.ylabel('output')
    plt.legend()
    plt.show()

# Remove all rows where the specified column is +/- sd standard deviations
def remove_outliers(df, name, sd):
    drop_rows = df.index[(np.abs(df[name] - df[name].mean())
                          >= (sd * df[name].std()))]
    df.drop(drop_rows, axis=0, inplace=True)

# Encode a column to a range between normalized_low and normalized_high.
def encode_numeric_range(df, name, normalized_low=-1, normalized_high=1,
                         data_low=None, data_high=None):
    if data_low is None:
        data_low = min(df[name])
        data_high = max(df[name])
    df[name] = ((df[name] - data_low) / (data_high - data_low)) \
        * (normalized_high - normalized_low) + normalized_low

# This function submits an assignment. You can submit an assignment as much as you like, only the final
# submission counts. The parameters are as follows:
# data - Pandas dataframe output.
# key - Your student key that was emailed to you.
# no - The assignment class number, should be 1 through 1.
# source_file - The full path to your Python or IPYNB file. This must have "_class1" as part of its name.
# . The number must match your assignment number. For example "_class2" for class assignment #2.
def submit(data,key,no,source_file=None):
    if source_file is None and '__file__' not in globals(): raise Exception('Must specify a filename when running in a Jupyter notebook.')
    if source_file is None: source_file = __file__
    suffix = '_class{}'.format(no)
    if suffix not in source_file: raise Exception('{} must be part of the filename.'.format(suffix))
    with open(source_file, "rb") as image_file:
        encoded_python = base64.b64encode(image_file.read()).decode('ascii')
    ext = os.path.splitext(source_file)[-1].lower()
    if ext not in ['.ipynb','.py']: raise Exception("Source file is {} must be .py or .ipynb".format(ext))
    r = requests.post("https://api.heatonresearch.com/assignment-submit",
        headers={'x-api-key':key}, json={'csv':base64.b64encode(data.to_csv(index=False).encode('ascii')).decode("ascii"),
        'assignment': no, 'ext':ext, 'py':encoded_python})
    if r.status_code == 200:
        print("Success: {}".format(r.text))
    else: print("Failure: {}".format(r.text))

```
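As a quick illustration of what two of these helpers do, the sketch below applies a z-score encoding and a dummy-variable encoding to a tiny, made-up data frame. The column names and values are hypothetical, and the steps are written inline with plain pandas calls (mirroring the logic of `encode_numeric_zscore` and `encode_text_dummy`) so the block runs on its own.

```python
import pandas as pd

# Hypothetical toy data, used only to illustrate the two encodings
df = pd.DataFrame({
    "color": ["red", "green", "blue", "green"],
    "size": [1.0, 2.0, 3.0, 6.0],
})

# z-score: subtract the column mean, divide by the (sample) standard deviation
mean, sd = df["size"].mean(), df["size"].std()
df["size"] = (df["size"] - mean) / sd

# dummy variables: one 0/1 column per distinct text value, then drop the original
dummies = pd.get_dummies(df["color"])
for value in dummies.columns:
    df[f"color-{value}"] = dummies[value].astype(int)
df = df.drop(columns="color")

encoded_columns = list(df.columns)
print(df)
```

After encoding, the numeric column has zero mean and unit standard deviation, and the text column has been replaced by one indicator column per category.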

# The KDD-99 Dataset

The [KDD-99 dataset](http://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html) is very famous in the security field and almost a "hello world" of intrusion detection systems in machine learning.

# Read in Raw KDD-99 Dataset

```

from keras.utils.data_utils import get_file

try:
    path = get_file('kddcup.data_10_percent.gz', origin='http://kdd.ics.uci.edu/databases/kddcup99/kddcup.data_10_percent.gz')
except Exception:
    print('Error downloading')
    raise

print(path)

# This file is a CSV, just no CSV extension or headers

# Download from: http://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html

df = pd.read_csv(path, header=None)

print("Read {} rows.".format(len(df)))

# df = df.sample(frac=0.1, replace=False) # Uncomment this line to sample only 10% of the dataset

df.dropna(inplace=True,axis=1) # For now, just drop NA's (axis=1 drops columns that contain missing values)

# The CSV file has no column heads, so add them

df.columns = [

'duration',

'protocol_type',

'service',

'flag',

'src_bytes',

'dst_bytes',

'land',

'wrong_fragment',

'urgent',

'hot',

'num_failed_logins',

'logged_in',

'num_compromised',

'root_shell',

'su_attempted',

'num_root',

'num_file_creations',

'num_shells',

'num_access_files',

'num_outbound_cmds',

'is_host_login',

'is_guest_login',

'count',

'srv_count',

'serror_rate',

'srv_serror_rate',

'rerror_rate',

'srv_rerror_rate',

'same_srv_rate',

'diff_srv_rate',

'srv_diff_host_rate',

'dst_host_count',

'dst_host_srv_count',

'dst_host_same_srv_rate',

'dst_host_diff_srv_rate',

'dst_host_same_src_port_rate',

'dst_host_srv_diff_host_rate',

'dst_host_serror_rate',

'dst_host_srv_serror_rate',

'dst_host_rerror_rate',

'dst_host_srv_rerror_rate',

'outcome'

]

# display 5 rows

df[0:5]

```

# Analyzing a Dataset

The following script can be used to give a high-level overview of how a dataset appears.

```

ENCODING = 'utf-8'

def expand_categories(values):
    result = []
    s = values.value_counts()
    t = float(len(values))
    for v in s.index:
        result.append("{}:{}%".format(v,round(100*(s[v]/t),2)))
    return "[{}]".format(",".join(result))

def analyze(filename):
    print()
    print("Analyzing: {}".format(filename))
    df = pd.read_csv(filename,encoding=ENCODING)
    cols = df.columns.values
    total = float(len(df))
    print("{} rows".format(int(total)))
    for col in cols:
        uniques = df[col].unique()
        unique_count = len(uniques)
        if unique_count>100:
            print("** {}:{} ({}%)".format(col,unique_count,int(((unique_count)/total)*100)))
        else:
            print("** {}:{}".format(col,expand_categories(df[col])))

# Analyze KDD-99

import tensorflow.contrib.learn as skflow

import pandas as pd

import os

import numpy as np

from sklearn import metrics

from scipy.stats import zscore

path = "./data/"

filename_read = os.path.join(path,"auto-mpg.csv")

```
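The same kind of overview can be produced for a data frame that is already in memory. The sketch below is a simplified variant, not the script above: `summarize`, its `max_categories` parameter, and the toy data are all hypothetical names introduced for illustration. It returns a dictionary instead of printing, which makes the result easier to inspect.

```python
import pandas as pd

def summarize(df, max_categories=100):
    """Return {column: summary}: a unique-value count for high-cardinality
    columns, or the percentage share of each value otherwise."""
    total = float(len(df))
    summary = {}
    for col in df.columns:
        counts = df[col].value_counts()
        if len(counts) > max_categories:
            summary[col] = len(counts)
        else:
            summary[col] = {v: round(100 * c / total, 2) for v, c in counts.items()}
    return summary

# Hypothetical miniature of the KDD-99 layout
toy = pd.DataFrame({
    "protocol_type": ["tcp", "tcp", "udp", "icmp"],
    "outcome": ["normal.", "smurf.", "normal.", "normal."],
})
report = summarize(toy)
print(report)
```

Columns with few distinct values are reported as percentage breakdowns, which is usually the quickest way to spot a heavily imbalanced outcome column.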

# Encode the feature vector

Encode every row in the database. This is not instant!

```

# Now encode the feature vector

encode_numeric_zscore(df, 'duration')

encode_text_dummy(df, 'protocol_type')

encode_text_dummy(df, 'service')

encode_text_dummy(df, 'flag')

encode_numeric_zscore(df, 'src_bytes')

encode_numeric_zscore(df, 'dst_bytes')

encode_text_dummy(df, 'land')

encode_numeric_zscore(df, 'wrong_fragment')

encode_numeric_zscore(df, 'urgent')

encode_numeric_zscore(df, 'hot')

encode_numeric_zscore(df, 'num_failed_logins')

encode_text_dummy(df, 'logged_in')

encode_numeric_zscore(df, 'num_compromised')

encode_numeric_zscore(df, 'root_shell')

encode_numeric_zscore(df, 'su_attempted')

encode_numeric_zscore(df, 'num_root')

encode_numeric_zscore(df, 'num_file_creations')

encode_numeric_zscore(df, 'num_shells')

encode_numeric_zscore(df, 'num_access_files')

encode_numeric_zscore(df, 'num_outbound_cmds')

encode_text_dummy(df, 'is_host_login')

encode_text_dummy(df, 'is_guest_login')

encode_numeric_zscore(df, 'count')

encode_numeric_zscore(df, 'srv_count')

encode_numeric_zscore(df, 'serror_rate')

encode_numeric_zscore(df, 'srv_serror_rate')

encode_numeric_zscore(df, 'rerror_rate')

encode_numeric_zscore(df, 'srv_rerror_rate')

encode_numeric_zscore(df, 'same_srv_rate')

encode_numeric_zscore(df, 'diff_srv_rate')

encode_numeric_zscore(df, 'srv_diff_host_rate')

encode_numeric_zscore(df, 'dst_host_count')

encode_numeric_zscore(df, 'dst_host_srv_count')

encode_numeric_zscore(df, 'dst_host_same_srv_rate')

encode_numeric_zscore(df, 'dst_host_diff_srv_rate')

encode_numeric_zscore(df, 'dst_host_same_src_port_rate')

encode_numeric_zscore(df, 'dst_host_srv_diff_host_rate')

encode_numeric_zscore(df, 'dst_host_serror_rate')

encode_numeric_zscore(df, 'dst_host_srv_serror_rate')

encode_numeric_zscore(df, 'dst_host_rerror_rate')

encode_numeric_zscore(df, 'dst_host_srv_rerror_rate')

outcomes = encode_text_index(df, 'outcome')

num_classes = len(outcomes)

# display 5 rows

df.dropna(inplace=True,axis=1)

df[0:5]

# This is the numeric feature vector, as it goes to the neural net

```

# Train the Neural Network

```

import pandas as pd

import io

import requests

import numpy as np

import os

from sklearn.model_selection import train_test_split

from sklearn import metrics

from keras.models import Sequential

from keras.layers.core import Dense, Activation

from keras.callbacks import EarlyStopping

# Break into X (predictors) & y (prediction)

x, y = to_xy(df,'outcome')

# Create a test/train split. 25% test

# Split into train/test

x_train, x_test, y_train, y_test = train_test_split(

x, y, test_size=0.25, random_state=42)

# Create neural net

model = Sequential()

model.add(Dense(10, input_dim=x.shape[1], kernel_initializer='normal', activation='relu'))
model.add(Dense(50, kernel_initializer='normal', activation='relu'))
model.add(Dense(10, kernel_initializer='normal', activation='relu'))
# Output layer: one softmax unit per outcome class
model.add(Dense(y.shape[1],activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam')

monitor = EarlyStopping(monitor='val_loss', min_delta=1e-3, patience=5, verbose=1, mode='auto')

model.fit(x_train,y_train,validation_data=(x_test,y_test),callbacks=[monitor],verbose=2,epochs=1000)

# Measure accuracy

pred = model.predict(x_test)

pred = np.argmax(pred,axis=1)

y_eval = np.argmax(y_test,axis=1)

score = metrics.accuracy_score(y_eval, pred)

print("Validation score: {}".format(score))

```
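The accuracy measurement at the end of the cell relies on converting both the softmax output and the one-hot targets back to class indexes with `argmax`. A minimal NumPy sketch of that step, using made-up probabilities rather than real model output, looks like this:

```python
import numpy as np

# Hypothetical softmax outputs for 4 samples and 3 classes
pred_prob = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
# One-hot ground truth, in the layout produced by a dummy encoding
y_onehot = np.array([
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
    [0, 1, 0],
])

pred_class = np.argmax(pred_prob, axis=1)   # most probable class per row
true_class = np.argmax(y_onehot, axis=1)    # position of the 1 in each row
accuracy = np.mean(pred_class == true_class)
print(accuracy)
```

Here the last sample is misclassified, so three of the four predictions match.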

| true |

code

| 0.521837 | null | null | null | null |

|

<img src="https://raw.githubusercontent.com/Qiskit/qiskit-tutorials/master/images/qiskit-heading.png" alt="Note: In order for images to show up in this jupyter notebook you need to select File => Trusted Notebook" width="500 px" align="left">

## _*The Vaidman Detection Test: Interaction Free Measurement*_

The latest version of this notebook is available on https://github.com/Qiskit/qiskit-tutorial.

***

### Contributors

Alex Breitweiser

***

### Qiskit Package Versions

```

import qiskit

qiskit.__qiskit_version__

```

## Introduction

One surprising result of quantum mechanics is the ability to measure something without ever directly "observing" it. This interaction-free measurement cannot be reproduced in classical mechanics. The prototypical example is the [Elitzur–Vaidman Bomb Experiment](https://en.wikipedia.org/wiki/Elitzur%E2%80%93Vaidman_bomb_tester) - in which one wants to test whether bombs are active without detonating them. In this example we will test whether an unknown operation is null (the identity) or an X gate, corresponding to a dud or a live bomb.

### The Algorithm

The algorithm will use two qubits, $q_1$ and $q_2$, as well as a small parameter, $\epsilon = \frac{\pi}{n}$ for some integer $n$. Call the unknown gate, which is either the identity or an X gate, $G$, and assume we have it in a controlled form. The algorithm is then:

1. Start with both $q_1$ and $q_2$ in the $|0\rangle$ state

2. Rotate $q_1$ by $\epsilon$ about the Y axis

3. Apply a controlled $G$ on $q_2$, conditioned on $q_1$

4. Measure $q_2$

5. Repeat (2-4) $n$ times

6. Measure $q_1$

### Explanation and proof of correctness

There are two cases: Either the gate is the identity (a dud), or it is an X gate (a live bomb).

#### Case 1: Dud

After rotation, $q_1$ is now approximately

$$q_1 \approx |0\rangle + \frac{\epsilon}{2} |1\rangle$$

Since the unknown gate is the identity, the controlled gate leaves the two qubit state separable,

$$q_1 \times q_2 \approx (|0\rangle + \frac{\epsilon}{2} |1\rangle) \times |0\rangle$$

and measurement is trivial (we will always measure $|0\rangle$ for $q_2$).

Repetition will not change this result - we will always keep separability and $q_2$ will remain in $|0\rangle$.

After n steps, $q_1$ will flip by $\pi$ to $|1\rangle$, and so measuring it will certainly yield $1$. Therefore, the output register for a dud bomb will read:

$$000...01$$

#### Case 2: Live

Again, after rotation, $q_1$ is now approximately

$$q_1 \approx |0\rangle + \frac{\epsilon}{2} |1\rangle$$

But, since the unknown gate is now an X gate, the combined state after $G$ is now

$$q_1 \times q_2 \approx |00\rangle + \frac{\epsilon}{2} |11\rangle$$

Measuring $q_2$ now might yield $1$, in which case we have "measured" the live bomb (obtained a result which differs from that of a dud) and it explodes. However, this only happens with a probability proportional to $\epsilon^2$. In the vast majority of cases, we will measure $0$ and the entire system will collapse back to

$$q_1 \times q_2 = |00\rangle$$

After every step, the system will most likely return to the original state, and the final measurement of $q_1$ will yield $0$. Therefore, the most likely outcome of a live bomb is

$$000...00$$

which will identify a live bomb without ever "measuring" it. If we ever obtain a 1 in the bits preceding the final bit, we will have detonated the bomb, but this will only happen with probability of order

$$P \propto n \epsilon^2 \propto \epsilon$$

This probability may be made arbitrarily small at the cost of an arbitrarily long circuit.
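The two cases can be checked numerically without a quantum backend. The sketch below is a plain NumPy calculation under the idealized assumptions of the argument above (noise-free gates, and a collapse back to $|00\rangle$ whenever $q_2$ is measured as $0$); the function names are hypothetical. For a dud it composes the $n$ rotations of $q_1$; for a live bomb it uses the exact per-step survival probability $\cos^2(\epsilon/2)$, of which $1 - \epsilon^2/4$ is the small-angle approximation.

```python
import numpy as np

def ry(theta):
    """Matrix of a rotation by theta about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def dud_final_state(n):
    """State of q_1 after n rotations by eps = pi/n with no bomb interaction."""
    eps = np.pi / n
    return np.linalg.matrix_power(ry(eps), n) @ np.array([1.0, 0.0])

def detonation_probability(n):
    """Chance that a live bomb explodes in at least one of the n steps."""
    eps = np.pi / n
    survive_one_step = np.cos(eps / 2) ** 2   # probability of measuring 0 on q_2
    return 1.0 - survive_one_step ** n

for n in (5, 20, 100):
    print(n, round(detonation_probability(n), 4))
```

The detonation probability shrinks roughly like $\pi^2 / (4n)$ as the number of steps grows, in line with the $P \propto \epsilon$ scaling above.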

## Generating Random Bombs

A test set must be generated to experiment on - this can be done by classical (pseudo)random number generation, but as long as we have access to a quantum computer we might as well take advantage of the ability to generate true randomness.

```

# useful additional packages

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

from collections import Counter #Use this to convert results from list to dict for histogram

# importing QISKit

from qiskit import QuantumCircuit, QuantumRegister, ClassicalRegister

from qiskit import execute, Aer, IBMQ

from qiskit.providers.ibmq import least_busy

from qiskit.tools.visualization import plot_histogram

# To use IBMQ Quantum Experience

IBMQ.load_accounts()

```

We will generate a test set of 50 "bombs", and each "bomb" will be run through a 20-step measurement circuit. We set up the program as explained in previous examples.

```

# Use local qasm simulator

backend = Aer.get_backend('qasm_simulator')

# Use the IBMQ Quantum Experience

# backend = least_busy(IBMQ.backends())

N = 50 # Number of bombs

steps = 20 # Number of steps for the algorithm, limited by maximum circuit depth

eps = np.pi / steps # Algorithm parameter, small

# Prototype circuit for bomb generation

q_gen = QuantumRegister(1, name='q_gen')

c_gen = ClassicalRegister(1, name='c_gen')

IFM_gen = QuantumCircuit(q_gen, c_gen, name='IFM_gen')

# Prototype circuit for bomb measurement

q = QuantumRegister(2, name='q')

c = ClassicalRegister(steps+1, name='c')

IFM_meas = QuantumCircuit(q, c, name='IFM_meas')

```

Generating a random bomb is achieved by simply applying a Hadamard gate to a qubit, which starts in $|0\rangle$, and then measuring. This randomly gives a $0$ or $1$, each with equal probability. We run one such circuit for each bomb, since circuits are currently limited to a single measurement.

```

# Quantum circuits to generate bombs

qc = []

circuits = ["IFM_gen"+str(i) for i in range(N)]

# NB: Can't have more than one measurement per circuit

for circuit in circuits:
    IFM = QuantumCircuit(q_gen, c_gen, name=circuit)
    IFM.h(q_gen[0]) #Turn the qubit into |0> + |1>
    IFM.measure(q_gen[0], c_gen[0])
    qc.append(IFM)

_ = [i.qasm() for i in qc] # Suppress the output

```

Note that, since we want to measure several discrete instances, we do *not* want to average over multiple shots. Averaging would yield partial bombs, but we assume bombs are discretely either live or dead.

```

result = execute(qc, backend=backend, shots=1).result() # Note that we only want one shot

bombs = []

for circuit in qc:
    for key in result.get_counts(circuit): # Hack, there should only be one key, since there was only one shot
        bombs.append(int(key))

#print(', '.join(('Live' if bomb else 'Dud' for bomb in bombs))) # Uncomment to print out "truth" of bombs

plot_histogram(Counter(('Live' if bomb else 'Dud' for bomb in bombs))) #Plotting bomb generation results

```

## Testing the Bombs

Here we implement the algorithm described above to measure the bombs. As noted for the bomb generation, hardware circuits are currently limited to a single measurement, whereas this algorithm measures after every step; it must therefore be run on the simulator.

```

# Use local qasm simulator

backend = Aer.get_backend('qasm_simulator')

qc = []

circuits = ["IFM_meas"+str(i) for i in range(N)]

#Creating one measurement circuit for each bomb

for i in range(N):
    bomb = bombs[i]
    IFM = QuantumCircuit(q, c, name=circuits[i])
    for step in range(steps):
        IFM.ry(eps, q[0]) #First we rotate the control qubit by epsilon
        if bomb: #If the bomb is live, the gate is a controlled X gate
            IFM.cx(q[0],q[1])
        #If the bomb is a dud, the gate is a controlled identity gate, which does nothing
        IFM.measure(q[1], c[step]) #Now we measure to collapse the combined state
    IFM.measure(q[0], c[steps])
    qc.append(IFM)

_ = [i.qasm() for i in qc] # Suppress the output

result = execute(qc, backend=backend, shots=1, max_credits=5).result()

def get_status(counts):
    # Return whether a bomb was a dud, was live but detonated, or was live and undetonated
    # Note that registers are returned in reversed order
    for key in counts:
        if '1' in key[1:]:
            #If we ever measure a '1' from the measurement qubit (q1), the bomb was measured and will detonate
            return '!!BOOM!!'
        elif key[0] == '1':
            #If the control qubit (q0) was rotated to '1', the state never entangled because the bomb was a dud
            return 'Dud'
        else:
            #If we only measured '0' for both the control and measurement qubit, the bomb was live but never set off
            return 'Live'

results = {'Live': 0, 'Dud': 0, "!!BOOM!!": 0}

for circuit in qc:
    status = get_status(result.get_counts(circuit))
    results[status] += 1

plot_histogram(results)

```

| true |

code

| 0.488405 | null | null | null | null |

|

<!--BOOK_INFORMATION-->

<img align="left" style="padding-right:10px;" src="figures/PDSH-cover-small.png">

*This notebook contains an excerpt from the [Python Data Science Handbook](http://shop.oreilly.com/product/0636920034919.do) by Jake VanderPlas; the content is available [on GitHub](https://github.com/jakevdp/PythonDataScienceHandbook).*

*The text is released under the [CC-BY-NC-ND license](https://creativecommons.org/licenses/by-nc-nd/3.0/us/legalcode), and code is released under the [MIT license](https://opensource.org/licenses/MIT). If you find this content useful, please consider supporting the work by [buying the book](http://shop.oreilly.com/product/0636920034919.do)!*


# In Depth: Linear Regression

Just as naive Bayes (discussed earlier in [In Depth: Naive Bayes Classification](05.05-Naive-Bayes.ipynb)) is a good starting point for classification tasks, linear regression models are a good starting point for regression tasks.

Such models are popular because they can be fit very quickly, and are very interpretable.

You are probably familiar with the simplest form of a linear regression model (i.e., fitting a straight line to data) but such models can be extended to model more complicated data behavior.

In this section we will start with a quick intuitive walk-through of the mathematics behind this well-known problem, before moving on to see how linear models can be generalized to account for more complicated patterns in data.

We begin with the standard imports:

```

%matplotlib inline

import matplotlib.pyplot as plt

import seaborn as sns; sns.set()

import numpy as np

```

## Simple Linear Regression

We will start with the most familiar linear regression, a straight-line fit to data.

A straight-line fit is a model of the form

$$

y = ax + b

$$

where $a$ is commonly known as the *slope*, and $b$ is commonly known as the *intercept*.

Consider the following data, which is scattered about a line with a slope of 2 and an intercept of -5:

```

rng = np.random.RandomState(1)

x = 10 * rng.rand(50)

y = 2 * x - 5 + rng.randn(50)

plt.scatter(x, y);

```

We can use Scikit-Learn's ``LinearRegression`` estimator to fit this data and construct the best-fit line:

```

from sklearn.linear_model import LinearRegression

model = LinearRegression(fit_intercept=True)

model.fit(x[:, np.newaxis], y)

xfit = np.linspace(0, 10, 1000)

yfit = model.predict(xfit[:, np.newaxis])

plt.scatter(x, y)

plt.plot(xfit, yfit);

```

The slope and intercept of the data are contained in the model's fit parameters, which in Scikit-Learn are always marked by a trailing underscore.

Here the relevant parameters are ``coef_`` and ``intercept_``:

```

print("Model slope: ", model.coef_[0])

print("Model intercept:", model.intercept_)

```

We see that the results are very close to the inputs, as we might hope.
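For a cross-check, the same straight-line fit can be obtained as an ordinary least-squares solve in NumPy. The sketch below regenerates the data with the same seed and solves for the slope and intercept directly; it is meant as an illustration of the underlying computation, not as a description of how Scikit-Learn implements it.

```python
import numpy as np

rng = np.random.RandomState(1)
x = 10 * rng.rand(50)
y = 2 * x - 5 + rng.randn(50)

# Design matrix: a column of x values and a column of ones for the intercept
A = np.column_stack([x, np.ones_like(x)])
(slope, intercept), *_ = np.linalg.lstsq(A, y, rcond=None)
print("slope:", slope, "intercept:", intercept)
```

The recovered values should again sit close to the slope of 2 and intercept of -5 used to generate the data.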

The ``LinearRegression`` estimator is much more capable than this, however—in addition to simple straight-line fits, it can also handle multidimensional linear models of the form

$$

y = a_0 + a_1 x_1 + a_2 x_2 + \cdots

$$

where there are multiple $x$ values.

Geometrically, this is akin to fitting a plane to points in three dimensions, or fitting a hyper-plane to points in higher dimensions.

The multidimensional nature of such regressions makes them more difficult to visualize, but we can see one of these fits in action by building some example data, using NumPy's matrix product function ``np.dot``:

```

rng = np.random.RandomState(1)

X = 10 * rng.rand(100, 3)

y = 0.5 + np.dot(X, [1.5, -2., 1.])

model.fit(X, y)

print(model.intercept_)

print(model.coef_)

```

Here the $y$ data is constructed from three random $x$ values, and the linear regression recovers the coefficients used to construct the data.

In this way, we can use the single ``LinearRegression`` estimator to fit lines, planes, or hyperplanes to our data.

It still appears that this approach would be limited to strictly linear relationships between variables, but it turns out we can relax this as well.

## Basis Function Regression

One trick you can use to adapt linear regression to nonlinear relationships between variables is to transform the data according to *basis functions*.

We have seen one version of this before, in the ``PolynomialRegression`` pipeline used in [Hyperparameters and Model Validation](05.03-Hyperparameters-and-Model-Validation.ipynb) and [Feature Engineering](05.04-Feature-Engineering.ipynb).

The idea is to take our multidimensional linear model:

$$

y = a_0 + a_1 x_1 + a_2 x_2 + a_3 x_3 + \cdots

$$

and build the $x_1, x_2, x_3,$ and so on, from our single-dimensional input $x$.

That is, we let $x_n = f_n(x)$, where $f_n()$ is some function that transforms our data.

For example, if $f_n(x) = x^n$, our model becomes a polynomial regression:

$$

y = a_0 + a_1 x + a_2 x^2 + a_3 x^3 + \cdots

$$

Notice that this is *still a linear model*—the linearity refers to the fact that the coefficients $a_n$ never multiply or divide each other.

What we have effectively done is taken our one-dimensional $x$ values and projected them into a higher dimension, so that a linear fit can fit more complicated relationships between $x$ and $y$.
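To make the "still linear" point concrete, the following sketch builds the polynomial features by hand and solves for the coefficients with an ordinary linear least-squares routine. The coefficient values and sample points are hypothetical and noise-free, chosen only so that the recovered coefficients can be compared with the ones used to generate the data.

```python
import numpy as np

x = np.linspace(-1, 1, 30)
true_coeffs = np.array([1.0, -2.0, 0.5, 3.0])   # hypothetical a_0 .. a_3
y = sum(a * x**n for n, a in enumerate(true_coeffs))

# Each column is one basis function f_n(x) = x^n, for n = 0, 1, 2, 3
features = np.vander(x, N=4, increasing=True)

# The model is linear in the coefficients, so a linear solver recovers them
fitted, *_ = np.linalg.lstsq(features, y, rcond=None)
print(fitted)
```

The nonlinearity lives entirely in the feature columns; the fit itself is the same linear solve that would be used for a plane through four-dimensional points.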

### Polynomial basis functions

This polynomial projection is useful enough that it is built into Scikit-Learn, using the ``PolynomialFeatures`` transformer:

```

from sklearn.preprocessing import PolynomialFeatures

x = np.array([2, 3, 4])

poly = PolynomialFeatures(3, include_bias=False)

poly.fit_transform(x[:, None])

```

We see here that the transformer has converted our one-dimensional array into a two-dimensional array with three columns by raising each value to the powers 1, 2, and 3.

This new, higher-dimensional data representation can then be plugged into a linear regression.

As we saw in [Feature Engineering](05.04-Feature-Engineering.ipynb), the cleanest way to accomplish this is to use a pipeline.

Let's make a 7th-degree polynomial model in this way:

```

from sklearn.pipeline import make_pipeline

poly_model = make_pipeline(PolynomialFeatures(7),

LinearRegression())

```

With this transform in place, we can use the linear model to fit much more complicated relationships between $x$ and $y$.

For example, here is a sine wave with noise:

```

rng = np.random.RandomState(1)

x = 10 * rng.rand(50)

y = np.sin(x) + 0.1 * rng.randn(50)

poly_model.fit(x[:, np.newaxis], y)

yfit = poly_model.predict(xfit[:, np.newaxis])

plt.scatter(x, y)

plt.plot(xfit, yfit);

```

Our linear model, through the use of 7th-order polynomial basis functions, can provide an excellent fit to this non-linear data!

### Gaussian basis functions

Of course, other basis functions are possible.

For example, one useful pattern is to fit a model that is not a sum of polynomial bases, but a sum of Gaussian bases.

The result might look something like the following figure:

[figure source in Appendix](#Gaussian-Basis)

The shaded regions in the plot are the scaled basis functions, and when added together they reproduce the smooth curve through the data.

These Gaussian basis functions are not built into Scikit-Learn, but we can write a custom transformer that will create them, as shown here and illustrated in the following figure (Scikit-Learn transformers are implemented as Python classes; reading Scikit-Learn's source is a good way to see how they can be created):

```

from sklearn.base import BaseEstimator, TransformerMixin

class GaussianFeatures(BaseEstimator, TransformerMixin):
    """Uniformly spaced Gaussian features for one-dimensional input"""

    def __init__(self, N, width_factor=2.0):
        self.N = N
        self.width_factor = width_factor

    @staticmethod
    def _gauss_basis(x, y, width, axis=None):
        arg = (x - y) / width
        return np.exp(-0.5 * np.sum(arg ** 2, axis))

    def fit(self, X, y=None):
        # create N centers spread along the data range
        self.centers_ = np.linspace(X.min(), X.max(), self.N)
        self.width_ = self.width_factor * (self.centers_[1] - self.centers_[0])
        return self

    def transform(self, X):
        return self._gauss_basis(X[:, :, np.newaxis], self.centers_,
                                 self.width_, axis=1)

gauss_model = make_pipeline(GaussianFeatures(20),
                            LinearRegression())

gauss_model.fit(x[:, np.newaxis], y)

yfit = gauss_model.predict(xfit[:, np.newaxis])

plt.scatter(x, y)

plt.plot(xfit, yfit)

plt.xlim(0, 10);

```

We put this example here just to make clear that there is nothing magic about polynomial basis functions: if you have some sort of intuition into the generating process of your data that makes you think one basis or another might be appropriate, you can use them as well.
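To see what such a transformer produces, the following sketch builds the Gaussian design matrix by hand with NumPy broadcasting. The function name `gaussian_design_matrix` and the sample inputs are hypothetical, chosen only for illustration; the width rule mirrors `width_factor=2.0` above.

```python
import numpy as np

def gaussian_design_matrix(x, centers, width):
    """Return an array of shape (len(x), len(centers)) of Gaussian bumps."""
    x = np.asarray(x, dtype=float)
    centers = np.asarray(centers, dtype=float)
    # Broadcasting: (n, 1) - (1, k) -> (n, k)
    z = (x[:, None] - centers[None, :]) / width
    return np.exp(-0.5 * z**2)

centers = np.linspace(0, 10, 5)           # 5 evenly spaced centers: 0, 2.5, 5, 7.5, 10
width = 2.0 * (centers[1] - centers[0])   # same rule as width_factor=2.0
Phi = gaussian_design_matrix([0.0, 2.5, 7.0], centers, width)
print(Phi.shape)
```

Each row of `Phi` describes one input point by how close it lies to each center; a point sitting exactly on a center gets the value 1 in that column.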

## Regularization

The introduction of basis functions into our linear regression makes the model much more flexible, but it also can very quickly lead to over-fitting (refer back to [Hyperparameters and Model Validation](05.03-Hyperparameters-and-Model-Validation.ipynb) for a discussion of this).

For example, if we choose too many Gaussian basis functions, we end up with results that don't look so good:

```

model = make_pipeline(GaussianFeatures(30),
                      LinearRegression())

model.fit(x[:, np.newaxis], y)

plt.scatter(x, y)

plt.plot(xfit, model.predict(xfit[:, np.newaxis]))

plt.xlim(0, 10)

plt.ylim(-1.5, 1.5);

```

With the data projected to the 30-dimensional basis, the model has far too much flexibility and goes to extreme values between locations where it is constrained by data.

We can see the reason for this if we plot the coefficients of the Gaussian bases with respect to their locations:

```

def basis_plot(model, title=None):
    fig, ax = plt.subplots(2, sharex=True)
    model.fit(x[:, np.newaxis], y)
    ax[0].scatter(x, y)
    ax[0].plot(xfit, model.predict(xfit[:, np.newaxis]))
    ax[0].set(xlabel='x', ylabel='y', ylim=(-1.5, 1.5))

    if title:
        ax[0].set_title(title)

    ax[1].plot(model.steps[0][1].centers_,
               model.steps[1][1].coef_)
    ax[1].set(xlabel='basis location',
              ylabel='coefficient',
              xlim=(0, 10))

model = make_pipeline(GaussianFeatures(30), LinearRegression())

basis_plot(model)

```

The lower panel of this figure shows the amplitude of the basis function at each location.

This is typical over-fitting behavior when basis functions overlap: the coefficients of adjacent basis functions blow up and cancel each other out.

We know that such behavior is problematic, and it would be nice if we could limit such spikes explicitly in the model by penalizing large values of the model parameters.

Such a penalty is known as *regularization*, and comes in several forms.

### Ridge regression ($L_2$ Regularization)

Perhaps the most common form of regularization is known as *ridge regression* or $L_2$ *regularization*, sometimes also called *Tikhonov regularization*.

This proceeds by penalizing the sum of squares (2-norms) of the model coefficients; in this case, the penalty on the model fit would be

$$

P = \alpha\sum_{n=1}^N \theta_n^2

$$

where $\alpha$ is a free parameter that controls the strength of the penalty.

This type of penalized model is built into Scikit-Learn with the ``Ridge`` estimator:

```

from sklearn.linear_model import Ridge

model = make_pipeline(GaussianFeatures(30), Ridge(alpha=0.1))

basis_plot(model, title='Ridge Regression')

```

The $\alpha$ parameter is essentially a knob controlling the complexity of the resulting model.

In the limit $\alpha \to 0$, we recover the standard linear regression result; in the limit $\alpha \to \infty$, all model responses will be suppressed.

One advantage of ridge regression in particular is that it can be computed very efficiently—at hardly more computational cost than the original linear regression model.
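The efficiency claim can be illustrated with the textbook closed-form solution, $\theta = (X^T X + \alpha I)^{-1} X^T y$. This is a minimal sketch under simplifying assumptions (no intercept term, synthetic data, a hypothetical helper named `ridge_fit`); it is not meant to describe how any particular library solves the problem internally.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Solve (X^T X + alpha I) theta = X^T y; no intercept, for simplicity."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

# Synthetic data with known coefficients, for illustration only.
rng = np.random.RandomState(0)
X = rng.randn(50, 3)
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.randn(50)

theta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
theta_small = ridge_fit(X, y, alpha=1e-10)   # essentially ordinary least squares
theta_large = ridge_fit(X, y, alpha=1e6)     # coefficients shrunk toward zero
```

The only change relative to ordinary least squares is the extra `alpha * I` term on the diagonal, which is why the cost is nearly the same, and the two limits of $\alpha$ described above show up directly in `theta_small` and `theta_large`.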

### Lasso regression ($L_1$ regularization)

Another very common type of regularization is known as lasso, and involves penalizing the sum of absolute values (1-norms) of regression coefficients:

$$

P = \alpha\sum_{n=1}^N |\theta_n|

$$

Though this is conceptually very similar to ridge regression, the results can differ surprisingly: for example, due to geometric reasons lasso regression tends to favor *sparse models* where possible: that is, it preferentially sets model coefficients to exactly zero.

We can see this behavior by duplicating the ridge regression figure, but using L1-regularized coefficients:

```

from sklearn.linear_model import Lasso

model = make_pipeline(GaussianFeatures(30), Lasso(alpha=0.001))

basis_plot(model, title='Lasso Regression')

```

With the lasso regression penalty, the majority of the coefficients are exactly zero, with the functional behavior being modeled by a small subset of the available basis functions.
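One way to see where the exact zeros come from is the special case of a design matrix with orthonormal columns. Assuming the objective is the squared error plus the penalty $P$ as written above, the lasso solution is then a soft-thresholding of the unpenalized coefficients at $\alpha/2$, while the ridge solution merely rescales them by $1/(1+\alpha)$. The numbers below are hypothetical.

```python
import numpy as np

def soft_threshold(theta, threshold):
    """Shrink each coefficient toward zero; anything within `threshold` of zero becomes 0."""
    return np.sign(theta) * np.maximum(np.abs(theta) - threshold, 0.0)

alpha = 1.0
theta_ols = np.array([3.0, -0.2, 0.05, -1.5])   # hypothetical unpenalized coefficients

# Orthonormal-design special case (an assumption of this sketch):
theta_lasso = soft_threshold(theta_ols, alpha / 2)   # L1: small entries become exactly zero
theta_ridge = theta_ols / (1.0 + alpha)              # L2: uniform shrinkage, no exact zeros

print(theta_lasso)
print(theta_ridge)
```

For general, correlated features there is no such closed form, but the same qualitative behavior (exact zeros under L1, smooth shrinkage under L2) is what the figure above shows.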

As with ridge regularization, the $\alpha$ parameter tunes the strength of the penalty, and should be determined via, for example, cross-validation (refer back to [Hyperparameters and Model Validation](05.03-Hyperparameters-and-Model-Validation.ipynb) for a discussion of this).

## Example: Predicting Bicycle Traffic

As an example, let's take a look at whether we can predict the number of bicycle trips across Seattle's Fremont Bridge based on weather, season, and other factors.

We have seen this data already in [Working With Time Series](03.11-Working-with-Time-Series.ipynb).

In this section, we will join the bike data with another dataset, and try to determine the extent to which weather and seasonal factors—temperature, precipitation, and daylight hours—affect the volume of bicycle traffic through this corridor.

Fortunately, the NOAA makes available their daily [weather station data](http://www.ncdc.noaa.gov/cdo-web/search?datasetid=GHCND) (I used station ID USW00024233) and we can easily use Pandas to join the two data sources.

We will perform a simple linear regression to relate weather and other information to bicycle counts, in order to estimate how a change in any one of these parameters affects the number of riders on a given day.

In particular, this is an example of how the tools of Scikit-Learn can be used in a statistical modeling framework, in which the parameters of the model are assumed to have interpretable meaning.

As discussed previously, this is not a standard approach within machine learning, but such interpretation is possible for some models.

Let's start by loading the two datasets, indexing by date:

```

!sudo apt-get update

!apt-get -y install curl

!curl -o FremontBridge.csv https://data.seattle.gov/api/views/65db-xm6k/rows.csv?accessType=DOWNLOAD

# !wget -o FremontBridge.csv "https://data.seattle.gov/api/views/65db-xm6k/rows.csv?accessType=DOWNLOAD"

import pandas as pd

counts = pd.read_csv('FremontBridge.csv', index_col='Date', parse_dates=True)

weather = pd.read_csv('data/BicycleWeather.csv', index_col='DATE', parse_dates=True)

```

Next we will compute the total daily bicycle traffic, and put this in its own dataframe:

```

daily = counts.resample('d').sum()

daily['Total'] = daily.sum(axis=1)

daily = daily[['Total']] # remove other columns

```

We saw previously that the patterns of use generally vary from day to day; let's account for this in our data by adding binary columns that indicate the day of the week:

```

days = ['Mon', 'Tue', 'Wed', 'Thu', 'Fri', 'Sat', 'Sun']

for i in range(7):
    daily[days[i]] = (daily.index.dayofweek == i).astype(float)

```

Similarly, we might expect riders to behave differently on holidays; let's add an indicator of this as well:

```

from pandas.tseries.holiday import USFederalHolidayCalendar

cal = USFederalHolidayCalendar()

holidays = cal.holidays('2012', '2016')

daily = daily.join(pd.Series(1, index=holidays, name='holiday'))

daily['holiday'] = daily['holiday'].fillna(0)

```

We also might suspect that the hours of daylight would affect how many people ride; let's use the standard astronomical calculation to add this information:

```

from datetime import datetime

def hours_of_daylight(date, axis=23.44, latitude=47.61):
    """Compute the hours of daylight for the given date"""
    days = (date - datetime(2000, 12, 21)).days
    m = (1. - np.tan(np.radians(latitude))
         * np.tan(np.radians(axis) * np.cos(days * 2 * np.pi / 365.25)))
    return 24. * np.degrees(np.arccos(1 - np.clip(m, 0, 2))) / 180.

daily['daylight_hrs'] = list(map(hours_of_daylight, daily.index))

daily[['daylight_hrs']].plot()

plt.ylim(8, 17)

```
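As a quick sanity check of the formula, here is a scalar version written with only the standard library and evaluated at the two solstices. The function name `daylight_hours` and the chosen dates are assumptions for illustration; the arithmetic follows the function above.

```python
import math
from datetime import datetime

def daylight_hours(date, axis=23.44, latitude=47.61):
    """Scalar version of the daylight formula, using only the standard library."""
    days = (date - datetime(2000, 12, 21)).days
    m = 1.0 - math.tan(math.radians(latitude)) * math.tan(
        math.radians(axis) * math.cos(days * 2 * math.pi / 365.25))
    m = min(max(m, 0.0), 2.0)  # same clipping as np.clip(m, 0, 2)
    return 24.0 * math.degrees(math.acos(1.0 - m)) / 180.0

winter = daylight_hours(datetime(2014, 12, 21))  # near the shortest day
summer = daylight_hours(datetime(2014, 6, 21))   # near the longest day
print(round(winter, 2), round(summer, 2))
```

At Seattle's latitude the two values should land a little above 8 hours and a little below 16 hours, which is consistent with the y-axis limits used in the plot.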

We can also add the average temperature and total precipitation to the data.

In addition to the inches of precipitation, let's add a flag that indicates whether a day is dry (has zero precipitation):

```

# temperatures are in 1/10 deg C; convert to C

weather['TMIN'] /= 10

weather['TMAX'] /= 10

weather['Temp (C)'] = 0.5 * (weather['TMIN'] + weather['TMAX'])

# precip is in 1/10 mm; convert to inches

weather['PRCP'] /= 254

weather['dry day'] = (weather['PRCP'] == 0).astype(int)

daily = daily.join(weather[['PRCP', 'Temp (C)', 'dry day']],rsuffix='0')

```

Finally, let's add a counter that increases from day 1, and measures how many years have passed.

This will let us measure any observed annual increase or decrease in daily crossings:

```

daily['annual'] = (daily.index - daily.index[0]).days / 365.

```

Now our data is in order, and we can take a look at it:

```

daily.head()

```

With this in place, we can choose the columns to use, and fit a linear regression model to our data.

We will set ``fit_intercept = False``, because the daily flags essentially operate as their own day-specific intercepts:

```

# Drop any rows with null values

daily.dropna(axis=0, how='any', inplace=True)

column_names = ['Mon', 'Tue', 'Wed', 'Thu', 'Fri', 'Sat', 'Sun', 'holiday',

'daylight_hrs', 'PRCP', 'dry day', 'Temp (C)', 'annual']

X = daily[column_names]

y = daily['Total']

model = LinearRegression(fit_intercept=False)

model.fit(X, y)

daily['predicted'] = model.predict(X)

```
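The reason for dropping the intercept can be checked numerically: the seven day flags always sum to one, so a constant column adds no new information. A small sketch with a hypothetical two-week design matrix:

```python
import numpy as np

# Hypothetical two-week stretch: one indicator column per day of the week.
day_index = np.arange(14) % 7
day_flags = np.eye(7)[day_index]            # shape (14, 7); each row has a single 1

# Adding a constant column duplicates information: the seven flags already sum to 1.
with_intercept = np.column_stack([np.ones(14), day_flags])

rank_flags = np.linalg.matrix_rank(day_flags)
rank_with_intercept = np.linalg.matrix_rank(with_intercept)
print(rank_flags, rank_with_intercept)
```

Both matrices have the same rank even though the second has one more column, so with an intercept the individual day coefficients would no longer be uniquely determined.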

Finally, we can compare the total and predicted bicycle traffic visually:

```

daily[['Total', 'predicted']].plot(alpha=0.5);

```

It is evident that we have missed some key features, especially during the summer time.

Either our features are not complete (i.e., people decide whether to ride to work based on more than just these) or there are some nonlinear relationships that we have failed to take into account (e.g., perhaps people ride less at both high and low temperatures).

Nevertheless, our rough approximation is enough to give us some insights, and we can take a look at the coefficients of the linear model to estimate how much each feature contributes to the daily bicycle count:

```

params = pd.Series(model.coef_, index=X.columns)

params

```

These numbers are difficult to interpret without some measure of their uncertainty.

We can compute these uncertainties quickly using bootstrap resamplings of the data:

```

from sklearn.utils import resample

np.random.seed(1)

err = np.std([model.fit(*resample(X, y)).coef_

for i in range(1000)], 0)

```
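The same bootstrap idea, spelled out with NumPy on synthetic data, may make the one-liner above easier to follow. The data, the helper `fit_ols`, and the number of resamples are assumptions of this sketch.

```python
import numpy as np

rng = np.random.RandomState(1)

# Hypothetical data: two features with known effects 3.0 and -1.0, plus unit noise.
n = 200
X = rng.randn(n, 2)
y = X @ np.array([3.0, -1.0]) + rng.randn(n)

def fit_ols(X, y):
    """Least-squares coefficients without an intercept."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Refit on rows resampled with replacement and collect the coefficients.
boot_coefs = []
for _ in range(500):
    idx = rng.randint(0, n, size=n)
    boot_coefs.append(fit_ols(X[idx], y[idx]))

coef = fit_ols(X, y)
err = np.std(boot_coefs, axis=0)   # spread across resamples approximates the uncertainty
print(coef, err)
```

The standard deviation of each coefficient across the resampled fits serves as its error bar, which is what `err` holds in the bicycle model as well.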

With these errors estimated, let's again look at the results:

```

print(pd.DataFrame({'effect': params.round(0),

'error': err.round(0)}))

```

We first see that there is a relatively stable trend in the weekly baseline: there are many more riders on weekdays than on weekends and holidays.

We see that for each additional hour of daylight, 129 ± 9 more people choose to ride; a temperature increase of one degree Celsius encourages 65 ± 4 people to grab their bicycle; a dry day means an average of 548 ± 33 more riders, and each inch of precipitation means 665 ± 62 more people leave their bike at home.

Once all these effects are accounted for, we see a modest increase of 27 ± 18 new daily riders each year.

Our model is almost certainly missing some relevant information. For example, nonlinear effects (such as effects of precipitation *and* cold temperature) and nonlinear trends within each variable (such as disinclination to ride at very cold and very hot temperatures) cannot be accounted for in this model.

Additionally, we have thrown away some of the finer-grained information (such as the difference between a rainy morning and a rainy afternoon), and we have ignored correlations between days (such as the possible effect of a rainy Tuesday on Wednesday's numbers, or the effect of an unexpected sunny day after a streak of rainy days).

These are all potentially interesting effects, and you now have the tools to begin exploring them if you wish!


# Analytics and demand forecasting for a multi-national retail store

## Notebook by Edward Warothe

### Introduction

General information about this analysis is in the readme file.

There are five datasets in this analysis: stores, which has location, type, and cluster information about the 54 stores under review; items, which has family, class, and perishable columns; transactions, which has daily average transactions for each store; oil, which has the daily average oil price per barrel; and train, which has date, store number, item number, unit sales, and on-promotion columns. We'll analyze all of these to look for patterns and/or interesting features in the data.

At the time of writing, I'm using a Windows machine with a Core i7 and 8 GB of RAM. Why is this information important? As you'll see, the entire dataset takes around 5 GB in csv format, which is too much for my machine to handle. My solution was to split the train dataset into buckets by year (2013-2017). This method worked out especially well compared to several others I considered. I also have a short [Medium article](https://eddywarothe.medium.com/is-your-data-too-big-for-your-ram-b3ed17a74095) comparing the different solutions I tried, the results and compromises for each, and my final choice, which may be useful if you come across a similar problem.

The images and charts are rendered using Plotly's non-interactive SVG renderer (set through plotly.io). To get full Plotly interactivity in your notebook (several hover tool capabilities), and if you have an internet connection, delete the "import plotly.io as pio" and "pio.renderers.default = 'svg'" lines below.

```

# First, we load the required Python packages to use in the analyses.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import gc

import time

import pyarrow

from scipy.signal import savgol_filter

from fbprophet import Prophet

import plotly.graph_objects as go

import plotly.express as px

import plotly.io as pio

pio.renderers.default = 'svg'

import warnings

warnings.filterwarnings('ignore')

```

After loading the train dataset, I split it by year and persist each part to storage as a parquet file, which preserves the data types I have preset and has read times approximately 50% faster than csv. Before the split, I separate the negative unit sales values from the positive ones. These negative values come from item returns. Later on, we'll analyze the returns data.

```

dtype = {'store_nbr':np.int16, 'item_nbr':np.int32, 'unit_sales':np.float32, 'onpromotion':object}

chunks = pd.read_csv('C:/favorita2/train.csv', parse_dates=['date'], dtype=dtype,

chunksize=1000000, usecols=[1,2,3,4,5])

# the section below is commented out because running it consumes too many resources on my machine

'''

type(chunks)

train = pd.DataFrame()

count = 0

start = time.time()

for chunk in chunks:
    train = pd.concat([train, chunk])
    print('chunk', count, 'time taken', time.time()-start)
    count += 1

returns = train[train['unit_sales']<0]

returns.to_csv('C:/returns.csv')

# then get the symmetric difference of the two

train = train.merge(returns,indicator = True, how='left').loc[lambda x: x['_merge']=='left_only']

# get the years by splitting

year1 = train[train['date'].between('2013-01-01', '2013-12-31')]

year2 = train[train['date'].between('2014-01-01', '2014-12-31')]

year3 = train[train['date'].between('2015-01-01', '2015-12-31')]

year4 = train[train['date'].between('2016-01-01', '2016-12-31')]

year5 = train[train['date'].between('2017-01-01', '2017-12-31')]

# it's fairly easy to save each as a parquet file for later reference and analysis

year1.to_parquet('C:/year1.parquet', engine = 'pyarrow', index=False)

# to load the dataset

year1 = pd.read_parquet('C:/year1.parquet')

'''

```
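The separation of returns and the split by year can also be expressed more compactly. The sketch below is an alternative, not the code used to produce the files in this notebook: it runs on a tiny hypothetical table, uses a boolean mask instead of a merge to drop returns, and groups by calendar year instead of writing one date filter per year.

```python
import pandas as pd

# Hypothetical miniature of the train table.
train = pd.DataFrame({
    'date': pd.to_datetime(['2013-01-02', '2013-06-01', '2014-03-05', '2014-03-06']),
    'item_nbr': [101, 102, 101, 103],
    'unit_sales': [4.0, -1.0, 2.0, 7.0],
})

# A boolean mask separates returns from sales without a merge.
is_return = train['unit_sales'] < 0
returns = train[is_return]
sales = train[~is_return]

# Group by calendar year instead of writing one between() filter per year.
by_year = {year: frame for year, frame in sales.groupby(sales['date'].dt.year)}
print({year: len(frame) for year, frame in by_year.items()})
```

Each frame in `by_year` could then be written out with `to_parquet` in the same way as `year1` above.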

From this point, analysis becomes easier. Since our focus is on demand forecasting for certain items via time series analysis, we first answer some basic questions about our data :

1. Which items are getting more popular over time? (so as to stock more of these items at the times they're popular)

2. Which are getting less popular?

3. Which have consistently good sales?

4. What family do the preceding items belong to?

5. How does location affect sales?

6. How does oil price and transaction number affect sales?

7. Which items were returned most? How did returns affect sales?

8. What are the expected forecast sales for September 2017?

We answer these and many more in our quest to extract as much info as possible from the dataset.

Since loading the entire dataset takes up a lot of time and resources, we'll load the chunks, which have been split by year, from now on.

```

items = pd.read_csv('C:/favorita2/items.csv')

year1 = pd.read_parquet('C:/favorita2/year1.parquet')

year2 = pd.read_parquet('C:/favorita2/year2.parquet')

year3 = pd.read_parquet('C:/favorita2/year3.parquet')

year4 = pd.read_parquet('C:/favorita2/year4.parquet')

year5 = pd.read_parquet('C:/favorita2/year5.parquet')

```

#### 1. Which items had increasing demand over the years? (increasing number of sales counts)

```

def get_counts(data):
    # function to get item count in a particular year
    # rename_axis + reset_index(name=...) gives the columns 'item_nbr' and 'counts'
    # regardless of how the installed pandas version labels value_counts output
    item_count = (data['item_nbr'].value_counts()
                  .rename_axis('item_nbr')
                  .reset_index(name='counts'))
    return item_count

count1 = get_counts(year1)

count2 = get_counts(year2)

count3 = get_counts(year3)

count4 = get_counts(year4)

count5 = get_counts(year5)

count1.head()

```

Next we write a function to get the item count percentage difference, e.g. between year1 (2013) and year2 (2014), for items featured in both years.

```

# get difference in item count for 2 consecutive years

def get_diff(data1, data2):
    combined = data1.merge(data2, on = 'item_nbr', how = 'inner', suffixes = ('_a', '_b'))
    combined['diff'] = ((combined['counts_b'] - combined['counts_a']) / combined['counts_a']).round(2)
    return combined.sort_values(by='diff', ascending=False).merge(items, on='item_nbr', how='left')

diff = get_diff(count1, count2)

diff.head()

```
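To make the calculation concrete, here is the same relative-difference logic on a handful of hypothetical item numbers, using only the standard library. Items missing from either year drop out, which mirrors the inner merge.

```python
from collections import Counter

# Hypothetical item numbers, one entry per sales row.
sales_2013 = [101, 101, 101, 101, 102, 102, 103]
sales_2014 = [101, 101, 102, 102, 102, 104]

counts_a = Counter(sales_2013)
counts_b = Counter(sales_2014)

# Only items present in both years (the equivalent of how='inner').
diff = {
    item: round((counts_b[item] - counts_a[item]) / counts_a[item], 2)
    for item in counts_a.keys() & counts_b.keys()
}
print(diff)
```

Item 101 goes from 4 rows to 2, a change of -0.5, while item 102 goes from 2 rows to 3, a change of 0.5; items 103 and 104 appear in only one year and are excluded.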

We can use the percentage differences between consecutive years to answer question 1. But we'll have to filter out other columns except item nbr and diff from the result returned by the get_diff function.

```

# get difference in item count for 2 consecutive years

def get_diff(data1, data2):
    combined = data1.merge(data2, on = 'item_nbr', how = 'inner', suffixes = ('_a', '_b'))
    combined['diff'] = ((combined['counts_b'] - combined['counts_a']) / combined['counts_a']).round(2)
    return combined.sort_values(by='diff', ascending=False).merge(items, on='item_nbr', how='left')[['item_nbr', 'diff']]

diff1 = get_diff(count1,count2)

diff2 = get_diff(count2,count3)

diff3 = get_diff(count3,count4)

diff4 = get_diff(count4,count5)

dfs = [diff1, diff2, diff3, diff4]

from functools import reduce

df = reduce(lambda left,right: pd.merge(left,right, on='item_nbr'), dfs)

df.merge(items, on='item_nbr', how='inner').iloc[:,[0,1,2,3,4,5]].head(5)

```

The diff_x and diff_y names keep repeating because of how pandas merge applies suffixes to overlapping column names. In this case, the diff columns from left to right are the relative differences between item counts for consecutive years from 2013 to 2017. That is, the first diff_x = (2014 item count - 2013 item count) / 2013 item count, and so on. Note that we are limited in the number of items we can preview, since merge defaults to an inner join and keeps only item numbers common to all the years. This will be remedied later by restricting the comparison to the more recent years.
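One way to avoid the repeated suffixes is to give each difference column a unique name before merging. This is a sketch on small hypothetical tables shaped like the output of get_diff; the column labels are my own choice.

```python
from functools import reduce
import pandas as pd

# Hypothetical year-over-year tables shaped like the output of get_diff.
diff1 = pd.DataFrame({'item_nbr': [1, 2, 3], 'diff': [0.10, -0.20, 0.05]})
diff2 = pd.DataFrame({'item_nbr': [1, 2, 4], 'diff': [0.30, 0.10, 0.00]})
diff3 = pd.DataFrame({'item_nbr': [1, 2, 3], 'diff': [0.02, -0.40, 0.50]})

labels = ['diff_2013_2014', 'diff_2014_2015', 'diff_2015_2016']
named = [d.rename(columns={'diff': label})
         for d, label in zip([diff1, diff2, diff3], labels)]

# With unique column names the inner merges never need suffixes.
wide = reduce(lambda left, right: pd.merge(left, right, on='item_nbr'), named)
print(wide)
```

Only items 1 and 2 survive, because they are the only ones present in all three tables, and each column now states which pair of years it compares.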

#### 2. Which items were consistent performers? (those with consistent improvement in sales counts)

Note that since we're getting the differences between each year consecutively, positive values indicate an improvement from the previous year.

```

merged_counts = df.merge(items, on='item_nbr', how='inner').iloc[:,[0,1,2,3,4]]

merged_counts[(merged_counts > 0).all(1)].merge(items, on='item_nbr', how='left')

```

There are only three items that had increased transaction count over the years. The GroceryI item, 910928, is particularly interesting since it's constantly increasing in demand.

Let's have a look at the three years from 2014, since they had more items in common.

```

dfs = [diff2, diff3, diff4]

from functools import reduce

df = reduce(lambda left,right: pd.merge(left,right, on='item_nbr'), dfs)

df.merge(items, on='item_nbr', how='inner').iloc[:,[0,1,2,3,4]].head(5)

merged_counts = df.merge(items, on='item_nbr', how='inner').iloc[:,[0,1,2,3]]

merged_counts[(merged_counts > 0).all(1)].merge(items, on='item_nbr', how='inner').sort_values(by=['diff','diff_y'], ascending=False).head(5)

```

There are 22 items with increasing demand from 2014 through 2017. We see that 2016 saw some big jumps in demand for select items compared to the other years. Inventory and restocking for these items should be a priority for the company.

Using this method, we can just as easily get the worst-performing items across the last 4 years (2014 to 2017).

#### 3. Which items had consistently decreasing purchase counts? (which more or less equals demand)

```

merged_counts[(merged_counts.iloc[:,[1,2,3]] < 0).all(1)].merge(items, on='item_nbr', how='inner').family.value_counts()

```

There are 99 items which have had decreasing purchase counts over the last 4 years, with 64% of these items belonging to GroceryI and cleaning.

#### 4. Which items are in demand during the sales spikes?

The next question to answer in the demand planning process is when the spikes happen, and which items are in demand during these spikes. For this analysis, we'll make a time series object by averaging daily unit sales, and use the Savitzky-Golay filter to smooth our time series in order to get a view of the general trend and cyclical movements in the data.

```

items = pd.read_csv('C:/favorita2/items.csv')

year1 = pd.read_parquet('C:/favorita2/year1.parquet')

year2 = pd.read_parquet('C:/favorita2/year2.parquet')

year3 = pd.read_parquet('C:/favorita2/year3.parquet')

temp1 = pd.concat([data.groupby('date', as_index=False)['unit_sales'].mean() for data in [year1, year2, year3]])

del([year1, year2, year3])

import gc

gc.collect()

year4 = pd.read_parquet('C:/favorita2/year4.parquet')

year5 = pd.read_parquet('C:/favorita2/year5.parquet')

temp2 = pd.concat([data.groupby('date', as_index=False)['unit_sales'].mean() for data in [year4, year5]])

del([year4,year5])

df = pd.concat([temp1,temp2])

fig = go.Figure()

fig.add_trace(go.Scatter(x=df['date'], y=df['unit_sales']))

fig.add_trace(go.Scatter(x=df['date'],y=savgol_filter(df['unit_sales'],31,3)))

```
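For intuition about what the smoothing step does, here is a simplified sketch of the idea behind a Savitzky-Golay filter: fit a low-order polynomial to each centered window and keep the fitted value at the window's center. The function `local_poly_smooth` is hypothetical and leaves the edge points untouched, which is a simplification and differs from how scipy handles the boundaries.

```python
import numpy as np

def local_poly_smooth(y, window, order):
    """Fit a polynomial to each centered window and keep its value at the center.

    Edge points without a full window are left unchanged here; that is a
    simplification of this sketch, not what scipy does at the boundaries.
    """
    y = np.asarray(y, dtype=float)
    half = window // 2
    offsets = np.arange(-half, half + 1)
    out = y.copy()
    for i in range(half, len(y) - half):
        coeffs = np.polyfit(offsets, y[i - half:i + half + 1], order)
        out[i] = np.polyval(coeffs, 0.0)   # fitted value at the window center
    return out

# Synthetic noisy signal, for illustration only.
t = np.arange(100, dtype=float)
rng = np.random.RandomState(0)
clean = np.sin(t / 10.0)
noisy = clean + 0.3 * rng.randn(100)
smooth = local_poly_smooth(noisy, window=31, order=3)
```

With a window of 31 and a cubic polynomial, as in the call above, day-to-day noise is averaged out while slower movements in the series are largely preserved.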

There are two groups of spikes in the time series: one around the turn of each month (the 2nd or 3rd), the other mid-month (the 15th to 17th). We filter spikes with a relatively high daily average unit sales value (12 and above) and see which items featured prominently during these days.

```

df['date'] = pd.to_datetime(df['date'])

spikes = df[df['unit_sales']>12]

spikes.info()

# since loading the entire dataset is out of the question, we take 2013 and compare it to the spikes in 2016

y1_spikes = spikes[spikes['date'].dt.year == 2013].merge(year1, on='date', how='inner')

get_counts(y1_spikes).merge(items, on='item_nbr', how='left').iloc[:,[0,1,2]].head(200).family.value_counts()

```

Which stores were featured in these spikes?

```

y1_spikes.store_nbr.value_counts().head(5)

# lets compare to the spikes in 2016

y4_spikes = spikes[spikes['date'].dt.year == 2016].merge(year4, on='date', how='inner')

get_counts(y4_spikes).merge(items, on='item_nbr', how='left').iloc[:,[0,1,2]].head(200).family.value_counts()

y4_spikes.store_nbr.value_counts().head(5) # almost the same performance for stores compared

```

I also came across an interesting feature while looking at individual data spikes. During 2013 certain meat items were the most popular during these spikes as compared to beverage items during 2016.

```

topitems(year1, '2013-06-02').head(10)

topitems(year5, '2017-04-01').head(10)

```

#### 5. How does location affect sales? (What are the different sales trends in these locations)

The answer to this requires a visualization tool like Streamlit, Tableau or Power BI to distinctly represent the different geographies. This is done at the end of this notebook.

#### 6. How did oil and transaction number affect sales?

We graph out a time series for both and look for changes in trend.

```

oil = pd.read_csv('C:/favorita2/oil.csv', parse_dates = [0])

oil.info() # dcoilwtico is the daily oil price

px.line(oil, x='date', y='dcoilwtico', color_discrete_sequence=['#ffa500'])

```

Based on the oil price graph, we would expect an increase in unit sales starting from mid-2014, but the overall unit sales time series does not show a major increase in demand.

Although it would be better to look at each store individually, let's analyze the daily average transaction number.

```

transx = pd.read_csv('c:/favorita2/transactions.csv', parse_dates=[0])

transx.info()

grp_transx = transx.groupby('date', as_index=False)['transactions'].mean()

grp_transx.info()

fig = go.Figure()

fig.add_trace(go.Scatter(x=grp_transx['date'], y=grp_transx['transactions']))

fig.add_trace(go.Scatter(x=grp_transx['date'],y=savgol_filter(grp_transx['transactions'],29,3)))

```

Transactions rise markedly during the end-of-year holidays. Jan 1st 2014 shows an abnormal drop in average transactions and might warrant further analysis to ascertain the cause.

#### 7. How did returns affect sales?

Returns may signal problems with the items or delivery, and may cost the company in terms of lost revenue or, even worse, lost customers. Questions to be answered in this analysis include: Which items were returned most? When were they returned? Is there a pattern in the dates, or a common date? Are returns increasing or decreasing? Where (a particular store or cluster)?

```

# to load the returns file

returns = pd.read_parquet('C:/favorita2/returns.parquet', engine='pyarrow')

returns.drop(['onpromotion'], axis=1, inplace=True) # do away with onpromotion col since it has only 8 items

returns.unit_sales = returns.unit_sales * -1

returns.info()

items = pd.read_csv('C:/favorita2/items.csv')

# items returned often might have defects: supplier or delivery/storage problem
# rename_axis + reset_index(name=...) gives the columns 'item_nbr' and 'count' on any pandas version
items_returned = (returns['item_nbr'].value_counts()
                  .rename_axis('item_nbr')
                  .reset_index(name='count'))

items_returned.merge(items, on='item_nbr', how='left').head(15)

```

There were 2548 returns with 98% of those having been returned more than 10 times. The above table shows the top 15 returned items. Contrary to my expectation, most returns come not from the GroceryI or beverage item families but from Automotive and Home & Kitchen II. Since the specific items returned have been revealed, the next step for an analyst would be to find out the exact reason for the returns and avoid future errors if possible. Do the returns happen at a specific time? Let's find out:

```

import plotly.express as px

from scipy.signal import savgol_filter

return_date = returns.groupby('date', as_index=False)['item_nbr'].count()

px.line(return_date, x="date", y=savgol_filter(return_date.item_nbr, 29,3))

```

From the above plot, returns are certainly on the increase. This is not desirable, since the transactions and average unit sales time series are almost constant through the five years in question. The causes of the increasing trend should be checked and solutions delivered quickly to prevent lost revenue.

The days around April 23rd 2016 show an abnormally large increase in returns. These could be due to the effects of the earthquake on April 16th, as priorities changed for several people with regard to items needed from retail stores.

Which stores had most returned items?

```

store = pd.read_csv('C:/favorita2/stores.csv')

# rename_axis + reset_index(name=...) gives the columns 'store_nbr' and 'returns' on any pandas version
str_returns = (returns['store_nbr'].value_counts()
               .rename_axis('store_nbr')
               .reset_index(name='returns'))

merged = str_returns.merge(store, on='store_nbr', how='left')

merged.head(7)

merged.type.value_counts()

```

Store 18 (type B, cluster 16) leads with 483 returns among the 27 stores whose return counts exceed 100. Type D and type A stores make up the majority of the stores with the most returns. By location, Pichincha state and its capital Quito lead; this could be because the area has many stores per unit area compared to other states.

Which stores lost the greatest revenue for returned items?

```

avg_returns = returns.groupby('store_nbr', as_index=False)['unit_sales'].sum().sort_values(by='unit_sales', ascending=False)

avg_returns.reset_index(drop=True, inplace=True)

avg_returns.merge(store, on='store_nbr', how='left').head(10)

```

Let's have a look at the first 3 stores:

The stores that lead the table had returned items with a higher monetary value than the rest; e.g., a certain item could have been worth 10000 in unit sales, compared to most, which had a unit sales value of 4.

It would be prudent to find out why these high-value items are being returned, to avoid future loss of revenue.

The high value in unit sales could also be an indicator that the item is popular and has many returns in a given time period.

For example, for store 18, 8 cleaning items led the count of items returned; for store 2, it was GroceryI items.

#### 8. What are the forecast sales transactions for September 2017?

To answer this question we'll use Prophet, a time series forecasting library for Python developed by Facebook.

Why Prophet? It is largely automatic, lightweight, supports holiday effects in time series, and is relatively easy to understand because it explains the time series in terms of seasonality and trend.

We'll forecast sales transactions rather than individual item sales for a simple reason: computational power. The first week of January in the train dataset contains over 1600 items, several of which have over 1000 counts in that single week. My machine takes over 10 minutes to fit and predict that one week's worth of data. I'm working on better alternatives to Prophet to handle that data.

In the meantime, let's predict the transaction volumes for the stores:

```

transx = pd.read_csv('C:/favorita2/transactions.csv', parse_dates=[0])

transx.rename(columns={'date':'ds', 'transactions':'y'}, inplace=True)

transx.info()

model = Prophet().fit(transx)

future = model.make_future_dataframe(periods=30)

forecast = model.predict(future)

model.plot(forecast);

model.plot_components(forecast);

```

The graphs above show the forecast on each timescale: the trend over the whole dataset timeframe, plus the yearly and weekly components.

Let's break the forecast down by store number for more actionable information.

```

import logging

# Silence Prophet's per-fit log messages.
logging.getLogger('fbprophet').setLevel(logging.WARNING)

grouped = transx.groupby('store_nbr')
forecasts = []

for g in grouped.groups:
    group = grouped.get_group(g)
    m = Prophet(daily_seasonality=False)
    m.fit(group)
    future = m.make_future_dataframe(periods=30)
    forecast = m.predict(future)
    # Keep only the point forecast, named after the store, so that the
    # combined frame has no clashing column names.
    forecasts.append(forecast.set_index('ds')['yhat'].rename('store_' + str(g)))

# Outer-join the per-store forecasts on the date index.
final = pd.concat(forecasts, axis=1).rename_axis('ds').reset_index()

final.head()

# Dates with no forecast for a store are set to 0.
final = final.fillna(0)

stores = ['store_' + str(g) for g in grouped.groups]

# px is plotly.express, assumed to be imported earlier in the notebook.
px.line(final, x='ds', y=stores)

```

The graph above shows the daily transactions time series for each store, plus a 30-day forecast covering mid-August to mid-September. For better visibility, we'll use a smoothing function to get a glimpse of the trends for each store. I have created a [Power BI dashboard](https://github.com/Edwarothe/Demand-Planning-for-Corporacion-Favorita/blob/main/favorita.pbix) to show the trend dynamics of each store over the 5-year timeline. If you're not able to load the entire pbix file, [this](https://github.com/Edwarothe/Demand-Planning-for-Corporacion-Favorita/blob/main/favorita.pdf) is a PDF snapshot of the analysis.
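The smoothing step is not shown in the notebook, so the following is only a sketch of one common option: a 7-day rolling mean, which averages out the weekly cycle. The demo frame is made up, and `smooth` and its `window` parameter are hypothetical names.

```python
import numpy as np
import pandas as pd

# Made-up wide frame in the same shape as `final`: a date column plus one column per store.
dates = pd.date_range("2017-01-01", periods=28, freq="D")
weekly = np.tile([100, 110, 105, 108, 120, 160, 170], 4)
demo = pd.DataFrame({"ds": dates, "store_1": weekly, "store_2": weekly * 2})

def smooth(df, window=7):
    """Replace each store column with its rolling mean over `window` days."""
    value_cols = [c for c in df.columns if c != "ds"]
    out = df.copy()
    out[value_cols] = df[value_cols].rolling(window=window, min_periods=window).mean()
    return out

smoothed = smooth(demo)
print(smoothed.tail())
```

The first `window - 1` rows are NaN because a full window is not yet available; a longer window gives a smoother line at the cost of reacting more slowly to real changes.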


<a href="https://colab.research.google.com/github/pabloderen/SightlineStudy/blob/master/Sightline.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

```

#!/usr/bin/env python

# coding: utf-8

# # Collision analysis

import pandas as pd

import numpy as np

import itertools

import numba

from numba import vectorize

import os

import time

from numba import cuda

import multiprocessing as mp

from multiprocessing import Pool

from functools import partial

from numpy.lib import recfunctions as rfn

os.environ["OMP_NUM_THREADS"] = "10"

os.environ["OPENBLAS_MAIN_FREE"] = "10"

os.environ['NUMBAPRO_LIBDEVICE'] = "/usr/local/cuda-10.0/nvvm/libdevice"

os.environ['NUMBAPRO_NVVM'] = "/usr/local/cuda-10.0/nvvm/lib64/libnvvm.so"

```

# Main run:

Remember to upload the files

```

# Logger for the Python console
def log(message):
    print('{} , {}'.format(time.time(), message))

# numba.jit rejects forceobj=True combined with nopython=True, so only object mode is requested.
@numba.jit(forceobj=True)
def FilterByBBX(Faces, line):
    # line holds a bounding box as (maxX, maxY, maxZ, minX, minY, minZ)
    maxX = line[0]
    maxY = line[1]
    maxZ = line[2]
    minX = line[3]
    minY = line[4]
    minZ = line[5]

    # Midpoint of each face's bounding box
    midX = (Faces[:,0] + Faces[:,3])/2
    midY = (Faces[:,1] + Faces[:,4])/2
    midZ = (Faces[:,2] + Faces[:,5])/2

    # Keep the faces whose midpoint lies inside the box
    aa = np.where((midX >= minX) & (midY >= minY) & (midZ >= minZ) & (midX <= maxX) & (midY <= maxY) & (midZ <= maxZ))
    return Faces[aa]

class BoundingBoxCreate():
    def __init__(self, line):
        XMax = max(line[3],line[0])
        YMax = max(line[4],line[1])
        ZMax = max(line[5],line[2])
        XMin = min(line[3],line[0])
        YMin = min(line[4],line[1])
        ZMin = min(line[5],line[2])
        self.box = np.array([XMax,YMax,ZMax,XMin,YMin,ZMin])

@numba.jit(nopython=True)
def split(aabb):
    # aabb is (maxX, maxY, maxZ, minX, minY, minZ); returns its 8 octants in the same layout
    minX = aabb[3]
    maxX = aabb[0]
    minY = aabb[4]
    maxY = aabb[1]
    minZ = aabb[5]
    maxZ = aabb[2]

    centerX = (minX + maxX)/2
    centerY = (minY + maxY)/2
    centerZ = (minZ + maxZ)/2  # was (minZ + maxY)/2, which mixed up the axes

    bbox0 = (maxX, maxY, maxZ, centerX, centerY, centerZ)
    bbox1 = (centerX, maxY, maxZ, minX, centerY, centerZ)
    bbox2 = (maxX, centerY, maxZ, centerX, minY, centerZ)
    bbox3 = (centerX, centerY, maxZ, minX, minY, centerZ)
    bbox4 = (maxX, maxY, centerZ, centerX, centerY, minZ)
    bbox5 = (centerX, maxY, centerZ, minX, centerY, minZ)
    bbox6 = (maxX, centerY, centerZ, centerX, minY, minZ)
    bbox7 = (centerX, centerY, centerZ, minX, minY, minZ)

    return bbox0, bbox1, bbox2, bbox3, bbox4, bbox5, bbox6, bbox7

@numba.jit(nopython=True)
def XClipLine(d, vecMax, vecMin, v0, v1, f_low, f_high):
    # Method for AABB vs line taken from https://github.com/BSVino/MathForGameDevelopers/tree/line-box-intersection

    # Find the point of intersection in this dimension only, as a fraction of the total vector.
    # The small constant avoids division by zero when the line is parallel to this axis.
    f_dim_low = (vecMin[d] - v0[d])/(v1[d] - v0[d] + 0.0000001)
    f_dim_high = (vecMax[d] - v0[d])/(v1[d] - v0[d] + 0.0000001)

    # Make sure low is less than high
    if (f_dim_high < f_dim_low):
        f_dim_high, f_dim_low = f_dim_low, f_dim_high

    # If this dimension's high is less than the low we got, then we definitely missed.
    if (f_dim_high < f_low):
        return 0,0

    # Likewise if this dimension's low is greater than the high we got.
    if (f_dim_low > f_high):
        return 0,0

    # Add the clip from this dimension to the previous results
    f_low = max(f_dim_low, f_low)
    f_high = min(f_dim_high, f_high)

    if (f_low > f_high):
        return 0,0

    return f_low, f_high

@numba.jit(nopython=True)
def LineAABBIntersection(aabbBox, line):
    # Method for AABB vs line taken from https://github.com/BSVino/MathForGameDevelopers/tree/line-box-intersection
    f_low = 0
    f_high = 1

    v0, v1 = (line[0], line[1], line[2]), (line[3], line[4], line[5])
    vecMax = aabbBox[:3]
    vecMin = aabbBox[-3:]

    # XClipLine returns (0,0) on a miss. A non-empty tuple is always truthy,
    # so the result is compared with (0,0) instead of being tested with "not".
    x = XClipLine(0, vecMax, vecMin, v0, v1, f_low, f_high)
    if x == (0,0):
        return False

    x = XClipLine(1, vecMax, vecMin, v0, v1, x[0], x[1])
    if x == (0,0):
        return False

    x = XClipLine(2, vecMax, vecMin, v0, v1, x[0], x[1])
    if x == (0,0):
        return False

    return True

# @numba.jit(forceobj=True, parallel=True)
def checkFaces(Faces, line):
    # True as soon as the line hits the bounding box of any face
    for f in Faces:
        if LineAABBIntersection(f, line):
            return True
    return False

# @numba.jit(forceobj=True, parallel=True)
def checklines(meshes, line):
    global count
    global totalLines

    if (count % 10) == 0:
        # print("=", end ="")
        print("\r {} of {} total lines".format(str(count), totalLines), end="")
    count = count + 1

    for b in meshes:
        # check whether the line intersects the mesh's bounding box
        bbx = b.boundingBox
        if LineAABBIntersection(bbx, line):
            # if so, descend into the pre-split sub-boxes (split() yields 8 per level)
            splitted = b.parts
            for s in splitted:
                for ss in s.parts:
                    if LineAABBIntersection(ss.boundingBox, line):
                        for sss in ss.parts:
                            check = checkFaces(sss.faces, line)
                            if check:
                                return True
    return False

from google.colab import drive

drive.mount('/content/drive')

```
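For readers who want to check the clipping logic without numba, here is a minimal plain-NumPy sketch of the same slab idea: clip the segment against each axis in turn and report a miss as soon as the surviving interval becomes empty. It is an illustration rather than the notebook's implementation. The name `segment_hits_aabb` is hypothetical, it returns a plain boolean instead of the `(0,0)` sentinel, and it handles axis-parallel segments explicitly instead of adding a small constant to the denominator.

```python
import numpy as np

def segment_hits_aabb(box_max, box_min, p0, p1):
    """Return True if the segment p0 -> p1 touches the axis-aligned box."""
    p0 = np.asarray(p0, dtype=float)
    p1 = np.asarray(p1, dtype=float)
    direction = p1 - p0
    t_low, t_high = 0.0, 1.0  # surviving part of the segment, as fractions of its length

    for axis in range(3):
        if direction[axis] == 0.0:
            # Segment is parallel to this slab: it must already lie inside it.
            if p0[axis] < box_min[axis] or p0[axis] > box_max[axis]:
                return False
            continue
        t_a = (box_min[axis] - p0[axis]) / direction[axis]
        t_b = (box_max[axis] - p0[axis]) / direction[axis]
        t_near, t_far = min(t_a, t_b), max(t_a, t_b)
        # Intersect this axis's interval with what survived so far.
        t_low = max(t_low, t_near)
        t_high = min(t_high, t_far)
        if t_low > t_high:
            return False
    return True

unit_max, unit_min = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)
print(segment_hits_aabb(unit_max, unit_min, (-1, 0.5, 0.5), (2, 0.5, 0.5)))
print(segment_hits_aabb(unit_max, unit_min, (2, 2, 2), (3, 3, 3)))
```

A slow reference like this can be useful for spot-checking the jitted functions on a handful of lines before running the full analysis.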

# Mesh class

```

class Mesh():
    def __init__(self, mesh, faces):
        self.Id = mesh[6]
        self.boundingBox = BoundingBoxCreate(mesh).box
        self.parts = [MeshPart(self.Id, x, faces) for x in split(self.boundingBox)]

class MeshPart():
    def __init__(self, Id, boundingBox, faces):
        self.boundingBox = BoundingBoxCreate(boundingBox).box
        ff = faces[faces[:,6] == Id]
        filteredFaces = FilterByBBX(ff, boundingBox)
        drop = np.delete(filteredFaces, np.s_[6:], axis=1)
        self.parts = []
        if drop.any():
            self.parts = [MeshSubPart(Id, x, faces) for x in split(self.boundingBox)]

class MeshSubPart():
    def __init__(self, Id, boundingBox, faces):
        self.boundingBox = BoundingBoxCreate(boundingBox).box
        ff = faces[faces[:,6] == Id]
        filteredFaces = FilterByBBX(ff, boundingBox)
        drop = np.delete(filteredFaces, np.s_[6:], axis=1)
        self.parts = []
        if drop.any():
            self.parts = [MeshSubSubPart(Id, x, faces) for x in split(self.boundingBox)]

class MeshSubSubPart():
    def __init__(self, Id, boundingBox, faces):
        self.boundingBox = BoundingBoxCreate(boundingBox).box
        ff = faces[faces[:,6] == Id]
        filteredFaces = FilterByBBX(ff, boundingBox)