| repo_id (string, 4-110 chars) | author (string, 2-27 chars, nullable) | model_type (string, 2-29 chars, nullable) | files_per_repo (int64, 2-15.4k) | downloads_30d (int64, 0-19.9M) | library (string, 2-37 chars, nullable) | likes (int64, 0-4.34k) | pipeline (string, 5-30 chars, nullable) | pytorch (bool, 2 classes) | tensorflow (bool, 2 classes) | jax (bool, 2 classes) | license (string, 2-30 chars) | languages (string, 4-1.63k chars, nullable) | datasets (string, 2-2.58k chars, nullable) | co2 (string, 29 distinct values) | prs_count (int64, 0-125) | prs_open (int64, 0-120) | prs_merged (int64, 0-15) | prs_closed (int64, 0-28) | discussions_count (int64, 0-218) | discussions_open (int64, 0-148) | discussions_closed (int64, 0-70) | tags (string, 2-513 chars) | has_model_index (bool, 2 classes) | has_metadata (bool, 1 class) | has_text (bool, 1 class) | text_length (int64, 401-598k) | is_nc (bool, 1 class) | readme (string, 0-598k chars) | hash (string, 32 chars) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Satyamatury/wav2vec2-large-xls-r-300m-turkish-colab

|

Satyamatury

|

wav2vec2

| 19 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,066 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-turkish-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30
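The `total_train_batch_size` above is the number of examples behind each optimizer update. It equals the per-device batch size multiplied by the number of gradient-accumulation steps and by the number of devices. Here is a minimal sketch. It assumes a single device, because the card does not say how many were used:

```python
def effective_batch_size(per_device_batch: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Examples that contribute to one optimizer step."""
    # Gradients from `grad_accum_steps` forward/backward passes are summed before each update.
    return per_device_batch * grad_accum_steps * num_devices


print(effective_batch_size(16, 2))  # 32, matching total_train_batch_size
```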

### Training results

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

7dcd310acccd5cea3233102df87df749

|

jonaskoenig/xtremedistil-l6-h384-uncased-future-time-references

|

jonaskoenig

|

bert

| 8 | 3 |

transformers

| 0 |

text-classification

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,706 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# xtremedistil-l6-h384-uncased-future-time-references

This model is a fine-tuned version of [microsoft/xtremedistil-l6-h256-uncased](https://huggingface.co/microsoft/xtremedistil-l6-h256-uncased) on an unknown dataset.

It achieves the following results on the training set at the final epoch:

- Train Loss: 0.0279

- Train Binary Crossentropy: 0.4809

- Epoch: 9
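Under the standard definition, `Train Binary Crossentropy` is the mean negative log-likelihood of 0/1 labels given the predicted probabilities. The sketch below implements that formula in plain Python. The clipping epsilon mirrors the Keras default backend epsilon of `1e-7`; whether the metric here was computed in exactly this way is an assumption:

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy for 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Clip away from exactly 0 and 1 so that log() stays finite.
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


print(round(binary_crossentropy([1, 0], [0.9, 0.2]), 4))  # 0.1643
```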

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 3e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Train Binary Crossentropy | Epoch |

|:----------:|:-------------------------:|:-----:|

| 0.0487 | 0.6401 | 0 |

| 0.0348 | 0.5925 | 1 |

| 0.0319 | 0.5393 | 2 |

| 0.0306 | 0.5168 | 3 |

| 0.0298 | 0.5045 | 4 |

| 0.0292 | 0.4970 | 5 |

| 0.0288 | 0.4916 | 6 |

| 0.0284 | 0.4878 | 7 |

| 0.0282 | 0.4836 | 8 |

| 0.0279 | 0.4809 | 9 |

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.9.1

- Datasets 2.3.2

- Tokenizers 0.12.1

|

5997fb1cdff5b84a59c4465d736c5200

|

adsabs/astroBERT

|

adsabs

|

bert

| 12 | 140 |

transformers

| 0 |

fill-mask

| true | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 2,368 | false |

# ***astroBERT: a language model for astrophysics***

This public repository contains the work of [NASA/ADS](https://ui.adsabs.harvard.edu/) on building a language model tailored to astrophysics, along with tutorials and related files.

This model is **cased** (it treats `ads` and `ADS` differently).

## astroBERT models

0. **Base model**: Pretrained on English text using masked language modeling (MLM) and next sentence prediction (NSP) objectives. It was introduced in [this paper at ADASS 2021](https://arxiv.org/abs/2112.00590) and made public at ADASS 2022.

1. **NER-DEAL model**: This model adds a token classification head to the base model, fine-tuned on the [DEAL@WIESP2022 named entity recognition](https://ui.adsabs.harvard.edu/WIESP/2022/SharedTasks) task. It must be loaded from the `revision='NER-DEAL'` branch (see tutorial 2).
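The MLM objective of the base model can be illustrated with the masking recipe from the original BERT paper:

- Select about 15% of the tokens.
- Replace 80% of the selected tokens with `[MASK]`.
- Replace 10% with a random token.
- Leave the remaining 10% unchanged.

Whether astroBERT used exactly these ratios is an assumption. The token ids and vocabulary size below are hypothetical placeholders. For brevity, special tokens such as `[CLS]` and `[SEP]` are not excluded from masking:

```python
import random

MASK_ID = 4          # hypothetical id of the [MASK] token
VOCAB_SIZE = 30000   # hypothetical vocabulary size
IGNORE_INDEX = -100  # label value skipped by the loss (PyTorch cross-entropy convention)


def mask_tokens(token_ids, rng, mlm_prob=0.15):
    """BERT-style masking: returns (model inputs, labels) for one sequence."""
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mlm_prob:
            continue  # not selected: no prediction is made at this position
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(VOCAB_SIZE)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels


inputs, labels = mask_tokens(list(range(100, 120)), random.Random(0))
```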

### Tutorials

0. [generate text embedding (for downstream tasks)](https://nbviewer.org/urls/huggingface.co/adsabs/astroBERT/raw/main/Tutorials/0_Embeddings.ipynb)

1. [use astroBERT for the Fill-Mask task](https://nbviewer.org/urls/huggingface.co/adsabs/astroBERT/raw/main/Tutorials/1_Fill-Mask.ipynb)

2. [make NER-DEAL predictions](https://nbviewer.org/urls/huggingface.co/adsabs/astroBERT/raw/main/Tutorials/2_NER_DEAL.ipynb)

### BibTeX

```bibtex

@ARTICLE{2021arXiv211200590G,

author = {{Grezes}, Felix and {Blanco-Cuaresma}, Sergi and {Accomazzi}, Alberto and {Kurtz}, Michael J. and {Shapurian}, Golnaz and {Henneken}, Edwin and {Grant}, Carolyn S. and {Thompson}, Donna M. and {Chyla}, Roman and {McDonald}, Stephen and {Hostetler}, Timothy W. and {Templeton}, Matthew R. and {Lockhart}, Kelly E. and {Martinovic}, Nemanja and {Chen}, Shinyi and {Tanner}, Chris and {Protopapas}, Pavlos},

title = "{Building astroBERT, a language model for Astronomy \& Astrophysics}",

journal = {arXiv e-prints},

keywords = {Computer Science - Computation and Language, Astrophysics - Instrumentation and Methods for Astrophysics},

year = 2021,

month = dec,

eid = {arXiv:2112.00590},

pages = {arXiv:2112.00590},

archivePrefix = {arXiv},

eprint = {2112.00590},

primaryClass = {cs.CL},

adsurl = {https://ui.adsabs.harvard.edu/abs/2021arXiv211200590G},

adsnote = {Provided by the SAO/NASA Astrophysics Data System}

}

```

|

0a57a838e39f4a51be3ddf20a2d0c15d

|

Imene/vit-base-patch16-384-wi5

|

Imene

|

vit

| 6 | 2 |

transformers

| 0 |

image-classification

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 2,950 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Imene/vit-base-patch16-384-wi5

This model is a fine-tuned version of [google/vit-base-patch16-384](https://huggingface.co/google/vit-base-patch16-384) on an unknown dataset.

It achieves the following results at the final epoch:

- Train Loss: 0.4102

- Train Accuracy: 0.9755

- Train Top-3-accuracy: 0.9960

- Validation Loss: 1.9021

- Validation Accuracy: 0.4912

- Validation Top-3-accuracy: 0.7302

- Epoch: 8

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 3e-05, 'decay_steps': 3180, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16
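The optimizer config contains a `PolynomialDecay` schedule with `power: 1.0` and `cycle: False`. This decays the learning rate linearly from `3e-05` to `0.0` over `3180` steps and then holds it at the end value. The sketch below implements that schedule in plain Python. It reproduces the documented Keras formula, not the Keras object itself:

```python
def polynomial_decay(step, initial_lr=3e-5, decay_steps=3180, end_lr=0.0, power=1.0):
    """Learning rate at `step` under Keras-style PolynomialDecay with cycle=False."""
    step = min(step, decay_steps)  # after decay_steps the rate stays at end_lr
    return (initial_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


for s in (0, 1590, 3180, 4000):
    print(s, polynomial_decay(s))
```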

### Training results

| Train Loss | Train Accuracy | Train Top-3-accuracy | Validation Loss | Validation Accuracy | Validation Top-3-accuracy | Epoch |

|:----------:|:--------------:|:--------------------:|:---------------:|:-------------------:|:-------------------------:|:-----:|

| 4.2945 | 0.0568 | 0.1328 | 3.6233 | 0.1387 | 0.2916 | 0 |

| 3.1234 | 0.2437 | 0.4585 | 2.8657 | 0.3041 | 0.5330 | 1 |

| 2.4383 | 0.4182 | 0.6638 | 2.5499 | 0.3534 | 0.6048 | 2 |

| 1.9258 | 0.5698 | 0.7913 | 2.3046 | 0.4202 | 0.6583 | 3 |

| 1.4919 | 0.6963 | 0.8758 | 2.1349 | 0.4553 | 0.6784 | 4 |

| 1.1127 | 0.7992 | 0.9395 | 2.0878 | 0.4595 | 0.6809 | 5 |

| 0.8092 | 0.8889 | 0.9720 | 1.9460 | 0.4962 | 0.7210 | 6 |

| 0.5794 | 0.9419 | 0.9883 | 1.9478 | 0.4979 | 0.7201 | 7 |

| 0.4102 | 0.9755 | 0.9960 | 1.9021 | 0.4912 | 0.7302 | 8 |

### Framework versions

- Transformers 4.21.3

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

28acba3e9aa746ec209a0e1fef94c3cc

|

furusu/umamusume-classifier

|

furusu

|

vit

| 5 | 30 |

transformers

| 0 |

image-classification

| true | false | false |

apache-2.0

| null | null | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 695 | false |

Fine-tuned from [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k).

Dataset: 26k images (train: 21k, validation: 5k).

Accuracy on the validation set: 95%.

```python
import torch
from PIL import Image
from transformers import ViTFeatureExtractor, ViTForImageClassification

path = 'image_path'  # replace with the path to your image
image = Image.open(path).convert("RGB")  # ensure 3 channels (e.g. PNGs with an alpha channel)

feature_extractor = ViTFeatureExtractor.from_pretrained('furusu/umamusume-classifier')
model = ViTForImageClassification.from_pretrained('furusu/umamusume-classifier')

inputs = feature_extractor(images=image, return_tensors="pt")
with torch.no_grad():  # inference only, no gradients needed
    outputs = model(**inputs)

# the highest-scoring logit gives the predicted class index
predicted_class_idx = outputs.logits.argmax(-1).item()
print("Predicted class:", model.config.id2label[predicted_class_idx])
```

|

ebc17430d7c805b25267733abf2df9b8

|

lighteternal/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext-finetuned-mnli

|

lighteternal

|

bert

| 13 | 355 |

transformers

| 3 |

text-classification

| true | false | false |

mit

|

['en']

|

['mnli']

| null | 0 | 0 | 0 | 0 | 1 | 1 | 0 |

['textual-entailment', 'nli', 'pytorch']

| false | true | true | 2,047 | false |

# BiomedNLP-PubMedBERT finetuned on textual entailment (NLI)

This is [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext?text=%5BMASK%5D+is+a+tumor+suppressor+gene) fine-tuned on the MNLI dataset. It should be useful for textual entailment tasks involving biomedical corpora.

## Usage

Given two sentences (a premise and a hypothesis), the model outputs the logits of entailment, neutral or contradiction.

You can test the model using the HuggingFace model widget on the side:

- Input two sentences (premise and hypothesis) one after the other.

- The model returns the probabilities of 3 labels: entailment (LABEL:0), neutral (LABEL:1) and contradiction (LABEL:2), respectively.
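The three probabilities are a softmax over the model's three logits, read in the label order given above. Here is a minimal sketch in plain Python. The logits in the example are hypothetical, not actual model output:

```python
import math

LABELS = ["entailment", "neutral", "contradiction"]  # index order stated in this card


def nli_probabilities(logits):
    """Softmax over the three NLI logits, keyed by label name."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(LABELS, exps)}


probs = nli_probabilities([-2.1, -1.9, 4.6])  # hypothetical logits
print(max(probs, key=probs.get))  # contradiction
```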

To use the model locally on your machine:

```python
import numpy as np
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model_name = "lighteternal/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext-finetuned-mnli"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSequenceClassification.from_pretrained(model_name).to(device)

premise = 'EpCAM is overexpressed in breast cancer'
hypothesis = 'EpCAM is downregulated in breast cancer.'

# encode the (premise, hypothesis) pair; if too long, truncate only the premise
x = tokenizer.encode(premise, hypothesis, return_tensors='pt',
                     truncation='only_first').to(device)

# run through the model fine-tuned on MNLI
with torch.no_grad():
    logits = model(x)[0]
probs = logits.softmax(dim=1)

print('Probabilities for entailment, neutral, contradiction\n',
      np.around(probs.cpu().numpy(), 3))
# Probabilities for entailment, neutral, contradiction
# 0.001 0.001 0.998
```

## Metrics

Classification accuracy (entailment, neutral, contradiction) on the MNLI test set:

| Metric | Value |

| --- | --- |

| Accuracy | 0.8338|

See the Training Metrics tab for details.

|

95225240b0a8ca541daf60a08563fe52

|

heyyai/austinmichaelcraig0

|

heyyai

| null | 20 | 2 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 2 | 2 | 0 | 0 | 0 | 0 | 0 |

['text-to-image']

| false | true | true | 1,423 | false |

### austinmichaelcraig0 on Stable Diffusion via Dreambooth trained on the [fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

#### Model by cormacncheese

This is a Stable Diffusion model fine-tuned on the austinmichaelcraig0 concept with Dreambooth.

It can be used by modifying the `instance_prompt(s)`: **austinmichaelcraig0(0).jpg**

You can also train your own concepts and upload them to the library by using [the fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb).

You can run your new concept via the A1111 Colab: [Fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Sample pictures of this concept:

austinmichaelcraig0(0).jpg


|

a4778363d46e3f747429462a28744ff0

|

shripadbhat/whisper-tiny-hi-1000steps

|

shripadbhat

|

whisper

| 15 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['hi']

|

['mozilla-foundation/common_voice_11_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['whisper-event', 'generated_from_trainer']

| true | true | true | 1,904 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper tiny Hindi

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5538

- Wer: 41.5453
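`Wer` is the word error rate, reported here as a percentage. It counts the minimum number of word substitutions, deletions and insertions needed to turn the transcript into the reference, then divides by the number of reference words. The plain-Python sketch below computes WER at corpus level, summing edits and reference words over all utterances. Any text normalization applied before scoring in this run is not documented, so it is left out:

```python
def edit_distance(ref, hyp):
    """Minimum word-level substitutions + deletions + insertions (Levenshtein)."""
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (free if the words match)
            )
        prev = curr
    return prev[-1]


def corpus_wer(references, hypotheses):
    """Word error rate in percent, pooled over all utterances."""
    errors = sum(edit_distance(r.split(), h.split()) for r, h in zip(references, hypotheses))
    words = sum(len(r.split()) for r in references)
    return 100.0 * errors / words


print(round(corpus_wer(["the cat sat on the mat"], ["the cat sit on mat"]), 2))  # 33.33
```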

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 50

- training_steps: 1000

- mixed_precision_training: Native AMP
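With `lr_scheduler_type: linear`, the learning rate rises linearly from 0 to `1e-05` over the 50 warm-up steps. It then decays linearly to 0 at step 1000. The sketch below implements that schedule as a plain function. It is modeled on the formula behind `transformers.get_linear_schedule_with_warmup`:

```python
def linear_schedule_lr(step, peak_lr=1e-5, warmup_steps=50, total_steps=1000):
    """Learning rate after `step` optimizer steps: linear warm-up, then linear decay to 0."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, remaining)


for step in (0, 25, 50, 525, 1000):
    print(step, linear_schedule_lr(step))
```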

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.7718 | 0.73 | 100 | 0.8130 | 55.6890 |

| 0.5169 | 1.47 | 200 | 0.6515 | 48.2517 |

| 0.3986 | 2.21 | 300 | 0.6001 | 44.9931 |

| 0.3824 | 2.94 | 400 | 0.5720 | 43.5171 |

| 0.3328 | 3.67 | 500 | 0.5632 | 42.5112 |

| 0.2919 | 4.41 | 600 | 0.5594 | 42.7863 |

| 0.2654 | 5.15 | 700 | 0.5552 | 41.6428 |

| 0.2618 | 5.88 | 800 | 0.5530 | 41.8893 |

| 0.2442 | 6.62 | 900 | 0.5539 | 41.5740 |

| 0.238 | 7.35 | 1000 | 0.5538 | 41.5453 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

7c22c8009ba10ad8dbc348929a65a7ee

|

deepset/xlm-roberta-base-squad2-distilled

|

deepset

|

xlm-roberta

| 8 | 4,534 |

transformers

| 4 |

question-answering

| true | false | false |

mit

|

['multilingual']

|

['squad_v2']

| null | 3 | 2 | 0 | 1 | 1 | 1 | 0 |

['exbert']

| false | true | true | 4,241 | false |

# deepset/xlm-roberta-base-squad2-distilled

- Haystack's distillation feature was used for training, with deepset/xlm-roberta-large-squad2 as the teacher model.

## Overview

**Language model:** deepset/xlm-roberta-base-squad2-distilled

**Language:** Multilingual

**Downstream-task:** Extractive QA

**Training data:** SQuAD 2.0

**Eval data:** SQuAD 2.0

**Code:** See [an example QA pipeline on Haystack](https://haystack.deepset.ai/tutorials/first-qa-system)

**Infrastructure**: 1x Tesla V100

## Hyperparameters

```

batch_size = 56

n_epochs = 4

max_seq_len = 384

learning_rate = 3e-5

lr_schedule = LinearWarmup

embeds_dropout_prob = 0.1

temperature = 3

distillation_loss_weight = 0.75

```
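`temperature` and `distillation_loss_weight` control how the student's loss mixes two terms. One is a term against the teacher's temperature-softened outputs; the other is the usual loss against the gold labels. The sketch below uses the common knowledge-distillation formulation, with KL divergence scaled by T². That formulation is an assumption, and Haystack's exact implementation may differ. In extractive QA, the same loss would apply separately to the start-position and end-position logits:

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits divided by `temperature` (higher T gives a flatter distribution)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    s = sum(exps)
    return [e / s for e in exps]


def distillation_loss(student_logits, teacher_logits, true_index, temperature=3.0, weight=0.75):
    """Weighted sum of a soft (teacher) term and a hard (gold label) term."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) between the temperature-softened distributions
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # ordinary cross-entropy of the student against the gold label (temperature 1)
    ce = -math.log(softmax(student_logits)[true_index])
    # T**2 keeps the soft-target term on a scale comparable to the hard-label term
    return weight * kl * temperature ** 2 + (1 - weight) * ce
```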

## Usage

### In Haystack

Haystack is an NLP framework by deepset. You can use this model in a Haystack pipeline to do question answering at scale (over many documents). To load the model in [Haystack](https://github.com/deepset-ai/haystack/):

```python
from haystack.nodes import FARMReader, TransformersReader  # Haystack 1.x import path

reader = FARMReader(model_name_or_path="deepset/xlm-roberta-base-squad2-distilled")
# or
reader = TransformersReader(model_name_or_path="deepset/xlm-roberta-base-squad2-distilled", tokenizer="deepset/xlm-roberta-base-squad2-distilled")
```

For a complete example of `deepset/xlm-roberta-base-squad2-distilled` being used for question answering, check out the [Tutorials in Haystack Documentation](https://haystack.deepset.ai/tutorials/first-qa-system).

### In Transformers

```python

from transformers import AutoModelForQuestionAnswering, AutoTokenizer, pipeline

model_name = "deepset/xlm-roberta-base-squad2-distilled"

# a) Get predictions

nlp = pipeline('question-answering', model=model_name, tokenizer=model_name)

QA_input = {

'question': 'Why is model conversion important?',

'context': 'The option to convert models between FARM and transformers gives freedom to the user and let people easily switch between frameworks.'

}

res = nlp(QA_input)

# b) Load model & tokenizer

model = AutoModelForQuestionAnswering.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

```

## Performance

Evaluated on the SQuAD 2.0 dev set

```

"exact": 74.06721131980123%

"f1": 76.39919553344667%

```
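The `exact` and `f1` scores follow the SQuAD 2.0 evaluation convention. Answers are lowercased and stripped of punctuation, articles and extra whitespace. They are then compared as whole strings (exact match) or as bags of tokens (F1). Below is a condensed sketch of the two per-question metrics. The official script also takes the maximum over all gold answers and averages over questions; that part is omitted here:

```python
import collections
import re
import string

_PUNCT = set(string.punctuation)


def normalize_answer(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold):
    return float(normalize_answer(prediction) == normalize_answer(gold))


def f1_score(prediction, gold):
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(gold).split()
    if not pred_tokens or not gold_tokens:
        # Empty answers (the SQuAD 2.0 no-answer case): score 1 only if both are empty.
        return float(pred_tokens == gold_tokens)
    overlap = sum((collections.Counter(pred_tokens) & collections.Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower!", "eiffel tower"))  # 1.0
print(round(f1_score("Eiffel Tower in Paris", "the Eiffel Tower"), 4))  # 0.6667
```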

## Authors

**Timo Möller:** timo.moeller@deepset.ai

**Julian Risch:** julian.risch@deepset.ai

**Malte Pietsch:** malte.pietsch@deepset.ai

**Michel Bartels:** michel.bartels@deepset.ai

## About us

<div class="grid lg:grid-cols-2 gap-x-4 gap-y-3">

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://raw.githubusercontent.com/deepset-ai/.github/main/deepset-logo-colored.png" class="w-40"/>

</div>

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://raw.githubusercontent.com/deepset-ai/.github/main/haystack-logo-colored.png" class="w-40"/>

</div>

</div>

[deepset](http://deepset.ai/) is the company behind the open-source NLP framework [Haystack](https://haystack.deepset.ai/), which is designed to help you build production-ready NLP systems for question answering, summarization, ranking, etc.

Some of our other work:

- [Distilled roberta-base-squad2 (aka "tinyroberta-squad2")](https://huggingface.co/deepset/tinyroberta-squad2)

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

- [GermanQuAD and GermanDPR datasets and models (aka "gelectra-base-germanquad", "gbert-base-germandpr")](https://deepset.ai/germanquad)

## Get in touch and join the Haystack community

<p>For more info on Haystack, visit our <strong><a href="https://github.com/deepset-ai/haystack">GitHub</a></strong> repo and <strong><a href="https://haystack.deepset.ai">Documentation</a></strong>.

We also have a <strong><a class="h-7" href="https://haystack.deepset.ai/community/join">Discord community open to everyone!</a></strong></p>

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Discord](https://haystack.deepset.ai/community/join) | [GitHub Discussions](https://github.com/deepset-ai/haystack/discussions) | [Website](https://deepset.ai)

By the way: [we're hiring!](http://www.deepset.ai/jobs)

|

67ad8aa6f3251354a306fe2336fd20b8

|

paola-md/distil-is

|

paola-md

|

roberta

| 6 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,577 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distil-is

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6082

- Rmse: 0.7799

- Mse: 0.6082

- Mae: 0.6023
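RMSE is the square root of MSE, and MAE averages absolute rather than squared errors. The identical `Loss` and `Mse` values are consistent with a mean-squared-error training objective, though the card does not say so explicitly. Here is a plain-Python sketch of the three metrics, plus a check that the reported RMSE follows from the reported MSE:

```python
import math


def regression_metrics(y_true, y_pred):
    """Mean squared error, its square root, and mean absolute error."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    return {"mse": mse, "rmse": math.sqrt(mse), "mae": sum(abs(e) for e in errors) / len(errors)}


# Consistency check on the numbers above: sqrt(Mse) should equal Rmse.
print(round(math.sqrt(0.6082), 4))  # 0.7799
```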

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rmse | Mse | Mae |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|

| 0.6881 | 1.0 | 492 | 0.6534 | 0.8084 | 0.6534 | 0.5857 |

| 0.5923 | 2.0 | 984 | 0.6508 | 0.8067 | 0.6508 | 0.5852 |

| 0.5865 | 3.0 | 1476 | 0.6088 | 0.7803 | 0.6088 | 0.6096 |

| 0.5899 | 4.0 | 1968 | 0.6279 | 0.7924 | 0.6279 | 0.5853 |

| 0.5852 | 5.0 | 2460 | 0.6082 | 0.7799 | 0.6082 | 0.6023 |

### Framework versions

- Transformers 4.19.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 2.4.0

- Tokenizers 0.12.1

|

a8a4429344f2a845dd3caedb2d9f27e1

|

NickKolok/meryl-stryfe-20230101-0500-4800-steps_1

|

NickKolok

| null | 15 | 2 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['text-to-image']

| false | true | true | 7,619 | false |

### Meryl_Stryfe_20230101_0500_+4800_steps on Stable Diffusion via Dreambooth

#### Model by NickKolok

This is a Stable Diffusion model fine-tuned on the Meryl_Stryfe_20230101_0500_+4800_steps concept with Dreambooth.

It can be used by modifying the `instance_prompt`: **merylstryfetrigun**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

3e9855778807e68198a33f8c6e810b2b

|

ghatgetanuj/distilbert-base-uncased_cls_sst2

|

ghatgetanuj

|

distilbert

| 12 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,537 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased_cls_sst2

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5999

- Accuracy: 0.8933

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 5

- mixed_precision_training: Native AMP
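`lr_scheduler_type: cosine` combined with `lr_scheduler_warmup_ratio: 0.2` gives a linear warm-up over the first 20% of training steps, followed by a half-cosine decay to 0. The results table below shows 2165 total steps, so warm-up lasts 433 steps, which is exactly the first epoch. The sketch below is modeled on `transformers.get_cosine_schedule_with_warmup` with its default half cycle:

```python
import math


def cosine_schedule_lr(step, peak_lr=4e-5, total_steps=2165, warmup_ratio=0.2):
    """Learning rate after `step` optimizer steps: linear warm-up, then half-cosine decay."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)  # 433 for these values
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


for step in (0, 433, 1299, 2165):
    print(step, cosine_schedule_lr(step))
```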

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 433 | 0.2928 | 0.8773 |

| 0.4178 | 2.0 | 866 | 0.3301 | 0.8922 |

| 0.2046 | 3.0 | 1299 | 0.5088 | 0.8853 |

| 0.0805 | 4.0 | 1732 | 0.5780 | 0.8888 |

| 0.0159 | 5.0 | 2165 | 0.5999 | 0.8933 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

917a150a42b4fae0144a677bb024ce24

|

lmqg/mt5-small-ruquad-qag

|

lmqg

|

mt5

| 13 | 33 |

transformers

| 0 |

text2text-generation

| true | false | false |

cc-by-4.0

|

['ru']

|

['lmqg/qag_ruquad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['questions and answers generation']

| true | true | true | 4,038 | false |

# Model Card of `lmqg/mt5-small-ruquad-qag`

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) for the question & answer pair generation task on [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) (dataset_name: default) via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [google/mt5-small](https://huggingface.co/google/mt5-small)

- **Language:** ru

- **Training data:** [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="ru", model="lmqg/mt5-small-ruquad-qag")

# model prediction

question_answer_pairs = model.generate_qa("Нелишним будет отметить, что, развивая это направление, Д. И. Менделеев, поначалу априорно выдвинув идею о температуре, при которой высота мениска будет нулевой, в мае 1860 года провёл серию опытов.")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "lmqg/mt5-small-ruquad-qag")

output = pipe("Нелишним будет отметить, что, развивая это направление, Д. И. Менделеев, поначалу априорно выдвинув идею о температуре, при которой высота мениска будет нулевой, в мае 1860 года провёл серию опытов.")

```

## Evaluation

- ***Metric (Question & Answer Generation)***: [raw metric file](https://huggingface.co/lmqg/mt5-small-ruquad-qag/raw/main/eval/metric.first.answer.paragraph.questions_answers.lmqg_qag_ruquad.default.json)

| | Score | Type | Dataset |

|:--------------------------------|--------:|:--------|:-------------------------------------------------------------------|

| QAAlignedF1Score (BERTScore) | 52.95 | default | [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) |

| QAAlignedF1Score (MoverScore) | 38.59 | default | [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) |

| QAAlignedPrecision (BERTScore) | 52.86 | default | [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) |

| QAAlignedPrecision (MoverScore) | 38.57 | default | [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) |

| QAAlignedRecall (BERTScore) | 53.06 | default | [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) |

| QAAlignedRecall (MoverScore) | 38.62 | default | [lmqg/qag_ruquad](https://huggingface.co/datasets/lmqg/qag_ruquad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qag_ruquad

- dataset_name: default

- input_types: ['paragraph']

- output_types: ['questions_answers']

- prefix_types: None

- model: google/mt5-small

- max_length: 512

- max_length_output: 256

- epoch: 12

- batch: 8

- lr: 0.001

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 16

- label_smoothing: 0.15
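`label_smoothing: 0.15` replaces the one-hot target with a mixture: weight 0.85 on the gold token, and 0.15 spread uniformly over the whole vocabulary (gold token included). The sketch below computes the resulting per-token loss in the common fairseq/PyTorch formulation. Whether `lmqg` computes it exactly this way is an assumption:

```python
import math


def label_smoothed_nll(log_probs, target, epsilon=0.15):
    """Per-token cross-entropy against a label-smoothed target distribution."""
    nll = -log_probs[target]                    # usual loss on the gold token
    smooth = -sum(log_probs) / len(log_probs)   # mean negative log-prob over the vocabulary
    return (1.0 - epsilon) * nll + epsilon * smooth


log_probs = [math.log(p) for p in (0.7, 0.1, 0.1, 0.1)]  # toy 4-token vocabulary
print(label_smoothed_nll(log_probs, target=0))
```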

The full configuration can be found at [fine-tuning config file](https://huggingface.co/lmqg/mt5-small-ruquad-qag/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

f24db018a4880ea31c5abf065529a79b

|

hkoll2/distilbert-base-uncased-finetuned-ner

|

hkoll2

|

distilbert

| 13 | 3 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null |

['conll2003']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,555 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0629

- Precision: 0.9225

- Recall: 0.9340

- F1: 0.9282

- Accuracy: 0.9834
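F1 is the harmonic mean of precision and recall, so the reported value can be checked from the two numbers above. For CoNLL-2003, these scores are usually computed at the entity level (for example with `seqeval`); that this run did so is an assumption:

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_from_precision_recall(0.9225, 0.9340), 4))  # 0.9282
```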

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2356 | 1.0 | 878 | 0.0704 | 0.9138 | 0.9187 | 0.9162 | 0.9807 |

| 0.054 | 2.0 | 1756 | 0.0620 | 0.9209 | 0.9329 | 0.9269 | 0.9827 |

| 0.0306 | 3.0 | 2634 | 0.0629 | 0.9225 | 0.9340 | 0.9282 | 0.9834 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

27222624910123096bd0b63638a009b0

|

ConvLab/t5-small-goal2dialogue-multiwoz21

|

ConvLab

|

t5

| 7 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

|

['en']

|

['ConvLab/multiwoz21']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['t5-small', 'text2text-generation', 'dialogue generation', 'conversational system', 'task-oriented dialog']

| true | true | true | 745 | false |

# t5-small-goal2dialogue-multiwoz21

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on [MultiWOZ 2.1](https://huggingface.co/datasets/ConvLab/multiwoz21).

Refer to [ConvLab-3](https://github.com/ConvLab/ConvLab-3) for model description and usage.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 32

- eval_batch_size: 64

- seed: 42

- distributed_type: multi-GPU

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 10.0
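With gradient accumulation, the optimizer steps once every `gradient_accumulation_steps` batches. The effective batch is therefore 32 × 4 = 128, which matches `total_train_batch_size`.

The sketch below checks that claim in plain Python. It uses a toy one-parameter least-squares model, which is an illustration only and not ConvLab's training code. Averaging the gradients of four batches of 32 gives the same gradient as a single batch of 128.

```python
import random


def grad_mse(w, xs, ys):
    """Gradient w.r.t. w of mean((w * x - y) ** 2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


train_batch_size = 32
gradient_accumulation_steps = 4
total_train_batch_size = train_batch_size * gradient_accumulation_steps  # 128

random.seed(0)
xs = [random.uniform(-1, 1) for _ in range(total_train_batch_size)]
ys = [3.0 * x + random.gauss(0, 0.1) for x in xs]
w = 0.5

# Gradient of one step taken on the full batch of 128 examples
full_grad = grad_mse(w, xs, ys)

# Same step with accumulation: scale each batch's gradient by 1/4 and sum
accum_grad = 0.0
for i in range(gradient_accumulation_steps):
    lo, hi = i * train_batch_size, (i + 1) * train_batch_size
    accum_grad += grad_mse(w, xs[lo:hi], ys[lo:hi]) / gradient_accumulation_steps

print(total_train_batch_size, full_grad, accum_grad)
```

The equality holds because all batches have the same size. With unequal batches, the scaled sum would weight examples slightly differently.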

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.2+cu102

- Datasets 1.18.3

- Tokenizers 0.11.0

|

b54b28761bbdc3859100e6e02b14665a

|

yanaiela/roberta-base-epoch_2

|

yanaiela

|

roberta

| 9 | 3 |

transformers

| 0 |

fill-mask

| true | false | false |

mit

|

['en']

|

['wikipedia', 'bookcorpus']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['roberta-base', 'roberta-base-epoch_2']

| false | true | true | 2,100 | false |

# RoBERTa, Intermediate Checkpoint - Epoch 2

This model is part of our reimplementation of the [RoBERTa model](https://arxiv.org/abs/1907.11692),

trained on Wikipedia and the Book Corpus only.

We trained this model for almost 100K steps, corresponding to 83 epochs.

We provide 84 checkpoints (including the randomly initialized weights before training)

to enable the study of the training dynamics of such models, among other possible use cases.

These models were trained as part of a work that studies how simple statistics from data,

such as co-occurrences, affect model predictions, as described in the paper

[Measuring Causal Effects of Data Statistics on Language Model's `Factual' Predictions](https://arxiv.org/abs/2207.14251).

This is RoBERTa-base epoch_2.

## Model Description

This model was captured during a reproduction of

[RoBERTa-base](https://huggingface.co/roberta-base) for English: it

is a Transformers model pretrained on a large corpus of English data using the

Masked Language Modelling (MLM) objective.

The intended uses, limitations, training data and training procedure for the fully trained model are similar

to those of [RoBERTa-base](https://huggingface.co/roberta-base). There are two major

differences from the original model:

* We trained our model for 100K steps, instead of 500K

* We only use Wikipedia and the Book Corpus, both of which are publicly available.

### How to use

Using code from

[RoBERTa-base](https://huggingface.co/roberta-base), here is an example based on

PyTorch:

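```python

from transformers import pipeline

# Load this epoch-2 checkpoint on CPU (device=-1); return the 10 most likely fillers for <mask>
unmasker = pipeline("fill-mask", model="yanaiela/roberta-base-epoch_2", device=-1, top_k=10)

unmasker("Hello, I'm the <mask> RoBERTa-base language model")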

```
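The fill-mask call relies on the MLM pretraining objective. The standard BERT/RoBERTa recipe works as follows:

- About 15% of token positions are selected as prediction targets.
- Of the selected positions, 80% are replaced with the mask token, 10% with a random token, and 10% are left unchanged.
- RoBERTa re-samples this masking every time a sequence is seen, which the RoBERTa paper calls dynamic masking.

The plain-Python sketch below illustrates that recipe on integer token IDs. It is not the training code used for this checkpoint. The mask ID, vocabulary size and the `-100` "ignore" label are placeholder assumptions; `-100` is the convention Hugging Face losses use. Real implementations also skip special tokens.

```python
import random

IGNORE_INDEX = -100  # label for positions that are not predicted
MASK_ID = 50264      # assumed <mask> token id, for illustration only
VOCAB_SIZE = 50265   # assumed vocabulary size, for illustration only


def mask_tokens(token_ids, mask_id, vocab_size, mlm_probability=0.15, rng=random):
    """Return (inputs, labels) following the 80/10/10 masking recipe."""
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(inputs)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mlm_probability:
            continue  # not selected as a prediction target
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token
    return inputs, labels


rng = random.Random(42)
ids = list(range(5, 105))
inputs, labels = mask_tokens(ids, MASK_ID, VOCAB_SIZE, rng=rng)
n_targets = sum(label != IGNORE_INDEX for label in labels)
print(f"{n_targets} of {len(ids)} positions selected")
```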

## Citation info

```bibtex

@article{2207.14251,

Author = {Yanai Elazar and Nora Kassner and Shauli Ravfogel and Amir Feder and Abhilasha Ravichander and Marius Mosbach and Yonatan Belinkov and Hinrich Schütze and Yoav Goldberg},

Title = {Measuring Causal Effects of Data Statistics on Language Model's `Factual' Predictions},

Year = {2022},

Eprint = {arXiv:2207.14251},

}

```

|

6690a6fe0c54290683069c2712438f2a

|

weikunt/finetuned-ner

|

weikunt

|

deberta-v2

| 11 | 9 |

transformers

| 0 |

token-classification

| true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 4,215 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned-ner

This model is a fine-tuned version of [deepset/deberta-v3-base-squad2](https://huggingface.co/deepset/deberta-v3-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4783

- Precision: 0.3264

- Recall: 0.3591

- F1: 0.3420

- Accuracy: 0.8925

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 30

- mixed_precision_training: Native AMP

- label_smoothing_factor: 0.05
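The setting `label_smoothing_factor: 0.05` mixes the usual negative log-likelihood with a uniform-target term. As we understand it, the Transformers `Trainer` computes (1 − ε)·NLL + ε·(mean of −log p over all classes), averaged over non-ignored tokens.

The per-token sketch below follows that formula in plain Python. The helper names are ours, and the sketch omits the averaging over tokens and the handling of ignored labels.

```python
import math


def log_softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def label_smoothed_nll(logits, target, epsilon=0.05):
    """(1 - epsilon) * NLL of the target + epsilon * mean NLL over all classes."""
    log_probs = log_softmax(logits)
    nll = -log_probs[target]
    smooth = -sum(log_probs) / len(log_probs)
    return (1 - epsilon) * nll + epsilon * smooth


# Toy 3-class logits for one token whose gold label is class 0
print(label_smoothed_nll([2.0, 0.5, -1.0], target=0))
```

With ε = 0 this reduces to ordinary cross-entropy. For a confident correct prediction, smoothing slightly raises the loss, which discourages over-confident logits.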

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 39.8167 | 1.0 | 760 | 0.3957 | 0.1844 | 0.2909 | 0.2257 | 0.8499 |

| 21.7333 | 2.0 | 1520 | 0.3853 | 0.2118 | 0.3273 | 0.2571 | 0.8546 |

| 13.8859 | 3.0 | 2280 | 0.3631 | 0.2443 | 0.2909 | 0.2656 | 0.8789 |

| 20.6586 | 4.0 | 3040 | 0.3961 | 0.2946 | 0.3455 | 0.3180 | 0.8753 |

| 13.8654 | 5.0 | 3800 | 0.3821 | 0.2791 | 0.3273 | 0.3013 | 0.8877 |

| 12.6942 | 6.0 | 4560 | 0.4393 | 0.3122 | 0.3364 | 0.3239 | 0.8909 |

| 25.0549 | 7.0 | 5320 | 0.4542 | 0.3106 | 0.3727 | 0.3388 | 0.8824 |

| 5.6816 | 8.0 | 6080 | 0.4432 | 0.2820 | 0.3409 | 0.3086 | 0.8774 |

| 13.1296 | 9.0 | 6840 | 0.4509 | 0.2884 | 0.35 | 0.3162 | 0.8824 |

| 7.7173 | 10.0 | 7600 | 0.4265 | 0.3170 | 0.3818 | 0.3464 | 0.8919 |

| 6.7922 | 11.0 | 8360 | 0.4749 | 0.3320 | 0.3818 | 0.3552 | 0.8892 |

| 5.4287 | 12.0 | 9120 | 0.4564 | 0.2917 | 0.3818 | 0.3307 | 0.8805 |

| 7.4153 | 13.0 | 9880 | 0.4735 | 0.2963 | 0.3273 | 0.3110 | 0.8871 |

| 9.1154 | 14.0 | 10640 | 0.4553 | 0.3416 | 0.3773 | 0.3585 | 0.8894 |

| 5.999 | 15.0 | 11400 | 0.4489 | 0.3203 | 0.4091 | 0.3593 | 0.8880 |

| 9.5128 | 16.0 | 12160 | 0.4947 | 0.3164 | 0.3682 | 0.3403 | 0.8883 |

| 5.6713 | 17.0 | 12920 | 0.4705 | 0.3527 | 0.3864 | 0.3688 | 0.8919 |

| 12.2119 | 18.0 | 13680 | 0.4617 | 0.3123 | 0.3591 | 0.3340 | 0.8857 |

| 8.5658 | 19.0 | 14440 | 0.4764 | 0.3092 | 0.35 | 0.3284 | 0.8944 |

| 11.0664 | 20.0 | 15200 | 0.4557 | 0.3187 | 0.3636 | 0.3397 | 0.8905 |

| 6.7161 | 21.0 | 15960 | 0.4468 | 0.3210 | 0.3955 | 0.3544 | 0.8956 |

| 9.0448 | 22.0 | 16720 | 0.5120 | 0.2872 | 0.3682 | 0.3227 | 0.8792 |

| 6.573 | 23.0 | 17480 | 0.4990 | 0.3307 | 0.3773 | 0.3524 | 0.8869 |

| 5.0543 | 24.0 | 18240 | 0.4763 | 0.3028 | 0.3455 | 0.3227 | 0.8899 |

| 6.8797 | 25.0 | 19000 | 0.4814 | 0.2780 | 0.3273 | 0.3006 | 0.8913 |

| 7.7544 | 26.0 | 19760 | 0.4695 | 0.3024 | 0.3409 | 0.3205 | 0.8946 |

| 4.8346 | 27.0 | 20520 | 0.4849 | 0.3154 | 0.3455 | 0.3297 | 0.8931 |

| 4.4766 | 28.0 | 21280 | 0.4809 | 0.2925 | 0.3364 | 0.3129 | 0.8913 |

| 7.9149 | 29.0 | 22040 | 0.4756 | 0.3238 | 0.3591 | 0.3405 | 0.8930 |

| 7.3033 | 30.0 | 22800 | 0.4783 | 0.3264 | 0.3591 | 0.3420 | 0.8925 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.7.1

- Datasets 2.8.0

- Tokenizers 0.13.2

|

eaf80c8ab459bee2dc141dcd28f9f78e

|

fanzru/t5-small-finetuned-xlsum-10-epoch

|

fanzru

|

t5

| 9 | 1 |

transformers

| 1 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['xlsum']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,380 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-xlsum-10-epoch

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the xlsum dataset.

It achieves the following results on the evaluation set:

- Loss: 2.2204

- Rouge1: 31.6534

- Rouge2: 10.0563

- Rougel: 24.8104

- Rougelsum: 24.8732

- Gen Len: 18.7913
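ROUGE-1 and ROUGE-2 measure unigram and bigram overlap between a generated summary and the reference. The scores above are scaled by 100.

The sketch below computes the ROUGE-N F-measure with clipped n-gram counts on lowercased, whitespace-tokenized text. It is a simplification: the card's scores were presumably produced with a dedicated ROUGE implementation, whose tokenization and optional stemming can give somewhat different numbers.

```python
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """ROUGE-N F-measure with clipped n-gram counts."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # Counter intersection keeps the minimum count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


ref = "the cat sat on the mat"
cand = "the cat lay on the mat"
print(100 * rouge_n(cand, ref, 1), 100 * rouge_n(cand, ref, 2))
```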

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:------:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 2.6512 | 1.0 | 19158 | 2.3745 | 29.756 | 8.4006 | 22.9753 | 23.0287 | 18.8245 |

| 2.6012 | 2.0 | 38316 | 2.3183 | 30.5327 | 9.0206 | 23.7263 | 23.7805 | 18.813 |

| 2.5679 | 3.0 | 57474 | 2.2853 | 30.9771 | 9.4156 | 24.1555 | 24.2127 | 18.7905 |

| 2.5371 | 4.0 | 76632 | 2.2660 | 31.0578 | 9.5592 | 24.2983 | 24.3587 | 18.7941 |

| 2.5133 | 5.0 | 95790 | 2.2498 | 31.3756 | 9.7889 | 24.5317 | 24.5922 | 18.7971 |

| 2.4795 | 6.0 | 114948 | 2.2378 | 31.4961 | 9.8935 | 24.6648 | 24.7218 | 18.7929 |

| 2.4967 | 7.0 | 134106 | 2.2307 | 31.44 | 9.9125 | 24.6298 | 24.6824 | 18.8221 |

| 2.4678 | 8.0 | 153264 | 2.2250 | 31.5875 | 10.004 | 24.7581 | 24.8125 | 18.7809 |

| 2.46 | 9.0 | 172422 | 2.2217 | 31.6413 | 10.0311 | 24.8063 | 24.8641 | 18.7951 |

| 2.4494 | 10.0 | 191580 | 2.2204 | 31.6534 | 10.0563 | 24.8104 | 24.8732 | 18.7913 |

### Framework versions

- Transformers 4.13.0

- Pytorch 1.13.1+cpu

- Datasets 2.8.0

- Tokenizers 0.10.3

|

57a55ec9458b4ab81efe1ac9a287edd8

|

FOFer/distilbert-base-uncased-finetuned-squad

|

FOFer

|

distilbert

| 12 | 3 |

transformers

| 0 |

question-answering

| true | false | false |

apache-2.0

| null |

['squad_v2']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,288 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad_v2 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4306
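On SQuAD v2, an extractive QA model outputs a start logit and an end logit for every token. The answer may also be empty ("no answer"), which is conventionally scored at the first ([CLS]) position.

The plain-Python sketch below shows the usual post-processing idea:

1. Pick the highest-scoring valid span (start ≤ end, bounded length).
2. Return no answer if the [CLS] score beats that span by more than a threshold.

The function name, `max_answer_len` and `null_threshold` are illustrative assumptions. Real post-processing, such as the Transformers QA pipeline, also handles top-k candidates, mapping tokens back to character offsets, and restricting spans to the context.

```python
def best_span(start_logits, end_logits, max_answer_len=30, null_threshold=0.0):
    """Return (start, end) token indices of the best answer, or None for no answer.

    Index 0 is assumed to be the [CLS] token that scores the empty answer.
    """
    null_score = start_logits[0] + end_logits[0]
    best, best_score = None, float("-inf")
    for s in range(1, len(start_logits)):
        # Spans may cover at most max_answer_len tokens
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = start_logits[s] + end_logits[e]
            if score > best_score:
                best, best_score = (s, e), score
    if best is None or null_score > best_score + null_threshold:
        return None
    return best


start = [0.1, 0.2, 3.0, 0.5, 0.1]
end = [0.1, 0.1, 0.4, 2.5, 0.3]
print(best_span(start, end))  # (2, 3)
```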

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.2169 | 1.0 | 8235 | 1.1950 |

| 0.9396 | 2.0 | 16470 | 1.2540 |

| 0.7567 | 3.0 | 24705 | 1.4306 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.0

|

3407e41b67638c1762090980abbabe29

|

sd-concepts-library/chucky

|

sd-concepts-library

| null | 10 | 0 | null | 1 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,076 | false |

### Chucky on Stable Diffusion

This is the `<merc>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

f28de1ede5e71b17fb776d049eacc45f

|

NDugar/v3-Large-mnli

|

NDugar

|

deberta-v2

| 12 | 6 |

transformers

| 1 |

zero-shot-classification

| true | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['deberta-v1', 'deberta-mnli']

| false | true | true | 925 | false |

This model is a fine-tuned version of [microsoft/deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) on the GLUE MNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4103

- Accuracy: 0.9175
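The model is tagged for `zero-shot-classification`, so it helps to spell out how an MNLI model is typically turned into a zero-shot classifier:

1. Each candidate label is inserted into a hypothesis such as "This example is {label}.".
2. That hypothesis is paired with the input text, which serves as the premise.
3. In the single-label case, the entailment logits are softmaxed across labels.
4. In the multi-label case, each label gets its own softmax over its contradiction and entailment logits.

The plain-Python sketch below shows only that scoring step, on made-up logits. Running the NLI model itself is omitted. The card does not state this checkpoint's output label order, so mapping its logits to (contradiction, entailment) is left to the reader.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_scores(nli_logits, labels, multi_label=False):
    """nli_logits[i] = (contradiction, entailment) logits for the hypothesis built from labels[i]."""
    if multi_label:
        # Each label independently: P(entailment) vs. P(contradiction)
        return {lab: softmax([c, e])[1] for lab, (c, e) in zip(labels, nli_logits)}
    # Single label: the entailment logits compete across all candidate labels
    probs = softmax([e for _, e in nli_logits])
    return dict(zip(labels, probs))


labels = ["sports", "politics", "cooking"]
# Hypothetical logits for the premise "The match went to penalties."
logits = [(-2.0, 3.0), (1.5, -1.0), (2.0, -2.5)]
print(zero_shot_scores(logits, labels))
print(zero_shot_scores(logits, labels, multi_label=True))
```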

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 50

- num_epochs: 2.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.3631 | 1.0 | 49088 | 0.3129 | 0.9130 |

| 0.2267 | 2.0 | 98176 | 0.4157 | 0.9153 |

### Framework versions

- Transformers 4.13.0.dev0

- Pytorch 1.10.0

- Datasets 1.15.2.dev0

- Tokenizers 0.10.3

|

bdaf98efccd4798fa413019de34739d9

|

gokuls/mobilebert_add_GLUE_Experiment_logit_kd_qqp

|

gokuls

|

mobilebert

| 17 | 2 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,455 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_add_GLUE_Experiment_logit_kd_qqp

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE QQP dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8079

- Accuracy: 0.7570

- F1: 0.6049

- Combined Score: 0.6810
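The Combined Score here is the mean of accuracy and F1: (0.7570 + 0.6049) / 2 ≈ 0.6810. The plain-Python sketch below computes all three from binary predictions, treating 1 as "duplicate question pair". The helper name is ours.

The second call shows a degenerate case: a classifier that only predicts the negative class gets an F1 of 0, and its accuracy equals the share of negatives.

```python
def accuracy_f1_combined(labels, preds):
    """Accuracy, binary F1 (positive class = 1) and their mean."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    accuracy = sum(1 for y, p in zip(labels, preds) if y == p) / len(labels)
    f1 = 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)  # same as the harmonic mean of P and R
    return accuracy, f1, (accuracy + f1) / 2


labels = [1, 0, 0, 1, 0, 0, 1, 0]
print(accuracy_f1_combined(labels, [1, 0, 1, 1, 0, 0, 0, 0]))
print(accuracy_f1_combined(labels, [0] * len(labels)))  # always "not duplicate"
```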

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Combined Score |

|:--------------------------:|:-----:|:-----:|:---------------:|:--------:|:------:|:--------------:|

| 1.2837 | 1.0 | 2843 | 1.2201 | 0.6318 | 0.0 | 0.3159 |

| 1.076 | 2.0 | 5686 | 0.8477 | 0.7443 | 0.5855 | 0.6649 |

| 0.866 | 3.0 | 8529 | 0.8217 | 0.7518 | 0.5924 | 0.6721 |

| 0.8317 | 4.0 | 11372 | 0.8136 | 0.7565 | 0.6243 | 0.6904 |

| 0.8122 | 5.0 | 14215 | 0.8126 | 0.7588 | 0.6352 | 0.6970 |

| 0.799 | 6.0 | 17058 | 0.8079 | 0.7570 | 0.6049 | 0.6810 |

| 386581134871678353408.0000 | 7.0 | 19901 | nan | 0.6318 | 0.0 | 0.3159 |

| 0.0 | 8.0 | 22744 | nan | 0.6318 | 0.0 | 0.3159 |

| 0.0 | 9.0 | 25587 | nan | 0.6318 | 0.0 | 0.3159 |

| 0.0 | 10.0 | 28430 | nan | 0.6318 | 0.0 | 0.3159 |

| 0.0 | 11.0 | 31273 | nan | 0.6318 | 0.0 | 0.3159 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

|

05ffd594bb36c9bef20c9e70c363b544

|

4m1g0/wav2vec2-large-xls-r-53m-gl-jupyter7

|

4m1g0

|

wav2vec2

| 13 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,325 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-53m-gl-jupyter7

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1000

- Wer: 0.0639
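WER (word error rate) is the word-level edit distance between the hypothesis and the reference, divided by the number of reference words. The edit distance counts substitutions, deletions and insertions. A WER of 0.0639 therefore means roughly 6.4 errors per 100 reference words.

Below is a minimal plain-Python sketch. It applies no text normalization, although real evaluations usually do and the choice affects the score. It also assumes a non-empty reference.

```python
def wer(reference, hypothesis):
    """Word error rate via Levenshtein distance over words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the reference words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            cur[j] = min(prev[j] + 1,         # deletion
                         cur[j - 1] + 1,      # insertion
                         prev[j - 1] + cost)  # substitution or match
        prev = cur
    return prev[len(hyp)] / len(ref)


# One substitution (sat -> sit) and one deletion (the) over 6 reference words
print(wer("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions are counted, WER can exceed 1.0 when the hypothesis is much longer than the reference.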

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 60

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.8697 | 3.36 | 400 | 0.2631 | 0.2756 |

| 0.1569 | 6.72 | 800 | 0.1243 | 0.1300 |

| 0.0663 | 10.08 | 1200 | 0.1124 | 0.1153 |

| 0.0468 | 13.44 | 1600 | 0.1118 | 0.1037 |

| 0.0356 | 16.8 | 2000 | 0.1102 | 0.0978 |

| 0.0306 | 20.17 | 2400 | 0.1095 | 0.0935 |

| 0.0244 | 23.53 | 2800 | 0.1072 | 0.0844 |

| 0.0228 | 26.89 | 3200 | 0.1014 | 0.0874 |

| 0.0192 | 30.25 | 3600 | 0.1084 | 0.0831 |

| 0.0174 | 33.61 | 4000 | 0.1048 | 0.0772 |

| 0.0142 | 36.97 | 4400 | 0.1063 | 0.0764 |

| 0.0131 | 40.33 | 4800 | 0.1046 | 0.0770 |

| 0.0116 | 43.69 | 5200 | 0.0999 | 0.0716 |

| 0.0095 | 47.06 | 5600 | 0.1044 | 0.0729 |

| 0.0077 | 50.42 | 6000 | 0.1024 | 0.0670 |

| 0.0071 | 53.78 | 6400 | 0.0968 | 0.0631 |

| 0.0064 | 57.14 | 6800 | 0.1000 | 0.0639 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.1+cu111

- Datasets 1.18.3

- Tokenizers 0.10.3

|

31957f16d56213ef70c44aba2416abd3

|

sd-concepts-library/axe-tattoo

|

sd-concepts-library

| null | 14 | 0 |

transformers

| 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,226 | false |

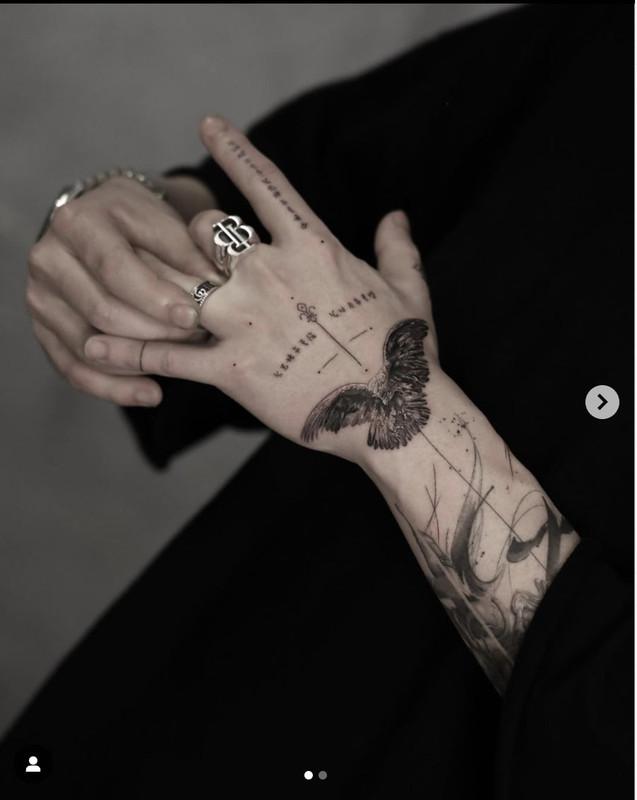

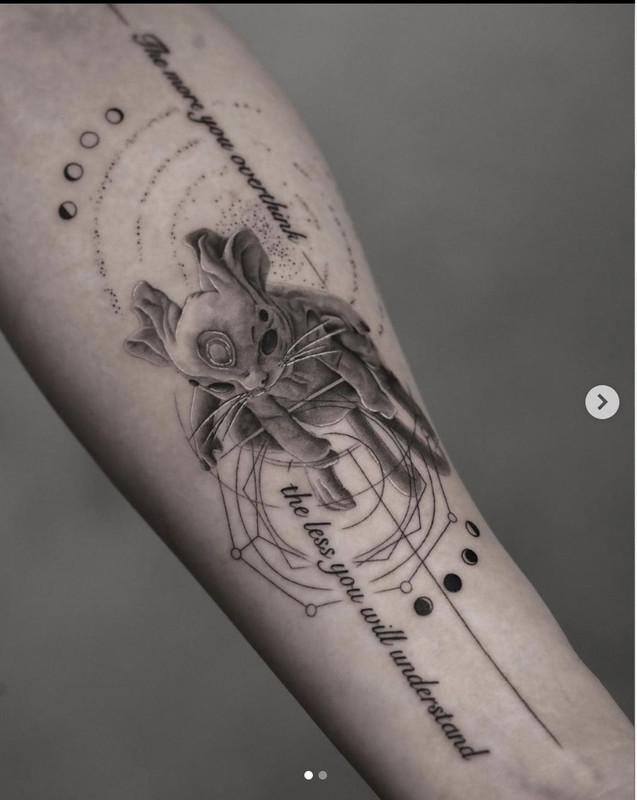

### axe_tattoo on Stable Diffusion

This is the `<axe-tattoo>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

9448fab08a6323d5520376d0ad20a9eb

|

Jeffsun/LSPV3

|

Jeffsun

| null | 30 | 0 |

diffusers

| 0 | null | false | false | false |

openrail

|

['en']

|

['Gustavosta/Stable-Diffusion-Prompts']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 952 | false |

Prompts should contain: best quality, masterpiece, highres, 1girl, beautiful face

Recommended sampler: DPM++ 2M Karras

Negative prompt (simple is better): (((simple background))),monochrome ,lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, lowres, bad anatomy, bad hands, text, error, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, ugly,pregnant,vore,duplicate,morbid,mut ilated,tran nsexual, hermaphrodite,long neck,mutated hands,poorly drawn hands,poorly drawn face,mutation,deformed,blurry,bad anatomy,bad proportions,malformed limbs,extra limbs,cloned face,disfigured,gross proportions, (((missing arms))),(((missing legs))), (((extra arms))),(((extra legs))),pubic hair, plump,bad legs,error legs,username,blurry,bad feet

|

be2f6753eddf2a47b42eb6ca8897543d

|

gokuls/bert-base-uncased-stsb

|

gokuls

|

bert

| 17 | 72 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,719 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-stsb

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the GLUE STSB dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4676

- Pearson: 0.8901

- Spearmanr: 0.8872

- Combined Score: 0.8887
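For STS-B, the model predicts a similarity score. Evaluation compares predictions with gold scores using Pearson (linear) and Spearman (rank) correlation. The Combined Score is their mean: (0.8901 + 0.8872) / 2 ≈ 0.8887.

Below is a plain-Python sketch of both correlations. Tied values receive average ranks, as in SciPy's `spearmanr`. The example scores are made up.

```python
import math


def pearson(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(xs, ys):
    # Spearman correlation = Pearson correlation of the ranks
    return pearson(average_ranks(xs), average_ranks(ys))


gold = [0.0, 1.2, 2.5, 3.8, 5.0]
pred = [0.3, 1.0, 2.9, 3.5, 4.6]
p, s = pearson(gold, pred), spearman(gold, pred)
print(p, s, (p + s) / 2)  # combined score = mean of the two correlations
```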

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson | Spearmanr | Combined Score |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:---------:|:--------------:|

| 2.3939 | 1.0 | 45 | 0.7358 | 0.8686 | 0.8653 | 0.8669 |

| 0.5084 | 2.0 | 90 | 0.4959 | 0.8835 | 0.8799 | 0.8817 |

| 0.3332 | 3.0 | 135 | 0.5002 | 0.8846 | 0.8815 | 0.8830 |

| 0.2202 | 4.0 | 180 | 0.4962 | 0.8854 | 0.8827 | 0.8840 |

| 0.1642 | 5.0 | 225 | 0.4848 | 0.8864 | 0.8839 | 0.8852 |

| 0.1312 | 6.0 | 270 | 0.4987 | 0.8872 | 0.8866 | 0.8869 |

| 0.1057 | 7.0 | 315 | 0.4840 | 0.8895 | 0.8848 | 0.8871 |

| 0.0935 | 8.0 | 360 | 0.4753 | 0.8887 | 0.8840 | 0.8863 |

| 0.0835 | 9.0 | 405 | 0.4676 | 0.8901 | 0.8872 | 0.8887 |

| 0.0749 | 10.0 | 450 | 0.4808 | 0.8901 | 0.8867 | 0.8884 |

| 0.0625 | 11.0 | 495 | 0.4760 | 0.8893 | 0.8857 | 0.8875 |

| 0.0607 | 12.0 | 540 | 0.5113 | 0.8899 | 0.8859 | 0.8879 |

| 0.0564 | 13.0 | 585 | 0.4918 | 0.8900 | 0.8860 | 0.8880 |

| 0.0495 | 14.0 | 630 | 0.4749 | 0.8905 | 0.8868 | 0.8887 |

| 0.0446 | 15.0 | 675 | 0.4889 | 0.8888 | 0.8856 | 0.8872 |

| 0.045 | 16.0 | 720 | 0.4680 | 0.8918 | 0.8889 | 0.8904 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

|

e6474110bd8d039ef1c1d52f532f430e

|

jonatasgrosman/exp_w2v2t_pl_vp-it_s474

|

jonatasgrosman

|

wav2vec2

| 10 | 6 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['pl']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'pl']

| false | true | true | 469 | false |

# exp_w2v2t_pl_vp-it_s474

Fine-tuned [facebook/wav2vec2-large-it-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-it-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (pl)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
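The 16 kHz requirement can be checked before transcription. Below is a minimal sketch that uses only Python's standard `wave` module, so it assumes uncompressed PCM WAV input. The helper names are ours.

Audio recorded at another rate must be resampled with a proper audio library. Simply relabeling the rate is not enough.

```python
import os
import tempfile
import wave

EXPECTED_RATE = 16_000  # this model expects 16 kHz input


def check_sample_rate(path, expected=EXPECTED_RATE):
    """Return the sample rate of a PCM WAV file, or raise ValueError if it differs from `expected`."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
    if rate != expected:
        raise ValueError(f"{path}: sampled at {rate} Hz, expected {expected} Hz; resample first")
    return rate


def write_silence(path, rate, seconds=1.0):
    """Write mono 16-bit silence, only so the example has a file to check."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(rate * seconds))


demo_path = os.path.join(tempfile.gettempdir(), "demo_16k.wav")
write_silence(demo_path, 16_000)
print(check_sample_rate(demo_path))  # 16000
```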

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

b06ab4a11a893416724ba819cf07f3f9

|

marccgrau/whisper-small-allSNR-v8

|

marccgrau

|

whisper

| 13 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['de']

|

['marccgrau/sbbdata_allSNR']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['sbb-asr', 'generated_from_trainer']

| true | true | true | 1,599 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small German SBB all SNR - v8

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the SBB Dataset 05.01.2023.

It achieves the following results on the evaluation set:

- Loss: 0.0246

- Wer: 0.0235

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- training_steps: 600

- mixed_precision_training: Native AMP
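With `lr_scheduler_type: linear` and `lr_scheduler_warmup_steps: 100`, the learning rate rises linearly from 0 to 1e-05 over the first 100 steps. It then decays linearly to 0 at step 600.

The sketch below reproduces that schedule in plain Python. It mirrors the formula used by the Transformers `get_linear_schedule_with_warmup` helper as we understand it, but it is a standalone illustration, not that function.

```python
def linear_warmup_lr(step, base_lr, warmup_steps, total_steps):
    """Learning rate after `step` optimizer steps: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Values from this card: learning_rate 1e-05, 100 warmup steps, 600 training steps
for step in (0, 50, 100, 350, 600):
    print(step, linear_warmup_lr(step, 1e-05, 100, 600))
```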

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 1.3694 | 0.36 | 100 | 0.2304 | 0.0495 |

| 0.0696 | 0.71 | 200 | 0.0311 | 0.0209 |

| 0.0324 | 1.07 | 300 | 0.0337 | 0.0298 |

| 0.0215 | 1.42 | 400 | 0.0254 | 0.0184 |

| 0.016 | 1.78 | 500 | 0.0279 | 0.0209 |

| 0.0113 | 2.14 | 600 | 0.0246 | 0.0235 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1

- Datasets 2.8.0

- Tokenizers 0.12.1

|

198d46b0949ab678ad9f87f27625dfd0

|

dbmdz/bert-base-german-europeana-uncased

|

dbmdz

|

bert

| 8 | 22 |

transformers

| 0 | null | true | true | true |

mit

|

['de']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['historic german']

| false | true | true | 2,334 | false |

# 🤗 + 📚 dbmdz BERT models

In this repository the MDZ Digital Library team (dbmdz) at the Bavarian State

Library open sources German Europeana BERT models 🎉

# German Europeana BERT

We use the open source [Europeana newspapers](http://www.europeana-newspapers.eu/)

that were provided by *The European Library*. The final

training corpus has a size of 51GB and consists of 8,035,986,369 tokens.

Detailed information about the data and pretraining steps can be found in

[this repository](https://github.com/stefan-it/europeana-bert).

## Model weights

Currently only PyTorch-[Transformers](https://github.com/huggingface/transformers)

compatible weights are available. If you need access to TensorFlow checkpoints,

please raise an issue!

| Model | Downloads

| ------------------------------------------ | ---------------------------------------------------------------------------------------------------------------

| `dbmdz/bert-base-german-europeana-uncased` | [`config.json`](https://cdn.huggingface.co/dbmdz/bert-base-german-europeana-uncased/config.json) • [`pytorch_model.bin`](https://cdn.huggingface.co/dbmdz/bert-base-german-europeana-uncased/pytorch_model.bin) • [`vocab.txt`](https://cdn.huggingface.co/dbmdz/bert-base-german-europeana-uncased/vocab.txt)

## Results

For results on Historic NER, please refer to [this repository](https://github.com/stefan-it/europeana-bert).

## Usage

With Transformers >= 2.3, our German Europeana BERT models can be loaded as follows:

```python

from transformers import AutoModel, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("dbmdz/bert-base-german-europeana-uncased")

model = AutoModel.from_pretrained("dbmdz/bert-base-german-europeana-uncased")

```

# Huggingface model hub

All models are available on the [Huggingface model hub](https://huggingface.co/dbmdz).

# Contact (Bugs, Feedback, Contribution and more)

For questions about our BERT models just open an issue

[here](https://github.com/dbmdz/berts/issues/new) 🤗

# Acknowledgments

Research supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC).

Thanks for providing access to the TFRC ❤️

Thanks to the generous support from the [Hugging Face](https://huggingface.co/) team,

it is possible to download both cased and uncased models from their S3 storage 🤗

|

d0a4f951f0e70942ef7e38a75f083280

|

liyijing024/swin-base-patch4-window7-224-in22k-Chinese-finetuned

|

liyijing024

|

swin

| 9 | 13 |

transformers

| 0 |

image-classification

| true | false | false |

apache-2.0

| null |

['imagefolder']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,513 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-base-patch4-window7-224-in22k-Chinese-finetuned

This model is a fine-tuned version of [microsoft/swin-base-patch4-window7-224-in22k](https://huggingface.co/microsoft/swin-base-patch4-window7-224-in22k) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0000

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 512

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0121 | 0.99 | 140 | 0.0001 | 1.0 |

| 0.0103 | 1.99 | 280 | 0.0001 | 1.0 |

| 0.0049 | 2.99 | 420 | 0.0000 | 1.0 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.8.0+cu111

- Datasets 2.3.3.dev0

- Tokenizers 0.12.1

|

0b8799d352c6bd138d5e37e419af2a58

|

husnu/xtremedistil-l6-h256-uncased-TQUAD-finetuned_lr-2e-05_epochs-9

|

husnu

|

bert

| 12 | 5 |

transformers

| 0 |

question-answering

| true | false | false |

mit

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,647 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xtremedistil-l6-h256-uncased-TQUAD-finetuned_lr-2e-05_epochs-9

This model is a fine-tuned version of [microsoft/xtremedistil-l6-h256-uncased](https://huggingface.co/microsoft/xtremedistil-l6-h256-uncased) on the Turkish SQuAD dataset.

It achieves the following results on the evaluation set:

- Loss: 2.2340

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 9

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 3.5236 | 1.0 | 1050 | 3.0042 |

| 2.8489 | 2.0 | 2100 | 2.5866 |

| 2.5485 | 3.0 | 3150 | 2.3526 |

| 2.4067 | 4.0 | 4200 | 2.3535 |

| 2.3091 | 5.0 | 5250 | 2.2862 |

| 2.2401 | 6.0 | 6300 | 2.3989 |

| 2.1715 | 7.0 | 7350 | 2.2284 |

| 2.1414 | 8.0 | 8400 | 2.2298 |

| 2.1221 | 9.0 | 9450 | 2.2340 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

eb15609aca5593c9cfb92aae5c8c58e5

|

kejian/final-mle

|

kejian

|

gpt2

| 49 | 4 |

transformers

| 0 | null | true | false | false |

apache-2.0

|

['en']

|

['kejian/codeparrot-train-more-filter-3.3b-cleaned']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 4,159 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# kejian/final-mle

This model was trained from scratch on the kejian/codeparrot-train-more-filter-3.3b-cleaned dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0008

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 50354

- mixed_precision_training: Native AMP
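
The batch-size and step counts in this card fit together arithmetically. The sketch below works through that arithmetic under two assumptions. First, the device count is not given anywhere, so it is inferred from `effective_batch_size` (64), the full config's `per_device_train_batch_size` (16) and `gradient_accumulation_steps` (2). Second, every training sequence is assumed to be 1,024 tokens long (the GPT-2 context length; the card does not state it). Under those assumptions the `num_tokens` budget from the full config reproduces the listed `training_steps` of 50354, which is consistent with, but does not prove, the 1,024-token assumption. The warmup count uses `ceil(warmup_ratio * steps)`, which is how recent Transformers versions convert `warmup_ratio` into a step count.

```python
import math

# Values taken from the card's hyperparameter list and its full config.
per_device_train_batch_size = 16  # 'per_device_train_batch_size' in the config
gradient_accumulation_steps = 2
effective_batch_size = 64         # 'effective_batch_size' / total_train_batch_size
warmup_ratio = 0.01
num_tokens = 3_300_000_000        # 'num_tokens' in the config

# ASSUMPTION: the device count is not stated; infer it from
# effective = per_device * n_devices * grad_accum.
n_devices = effective_batch_size // (per_device_train_batch_size * gradient_accumulation_steps)
# The Trainer reports train_batch_size as per-device batch size times device count.
train_batch_size = per_device_train_batch_size * n_devices

# ASSUMPTION: each training sequence is 1024 tokens (GPT-2 context size; not in the card).
seq_len = 1024
training_steps = num_tokens // (effective_batch_size * seq_len)

# Warmup steps derived from warmup_ratio: ceil(ratio * total training steps).
warmup_steps = math.ceil(warmup_ratio * training_steps)

print(n_devices, train_batch_size, training_steps, warmup_steps)
```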

### Framework versions

- Transformers 4.23.0

- Pytorch 1.13.0+cu116

- Datasets 2.0.0

- Tokenizers 0.12.1

# Full config

    {'dataset': {'datasets': ['kejian/codeparrot-train-more-filter-3.3b-cleaned'],
                 'is_split_by_sentences': True},
     'generation': {'batch_size': 64,
                    'metrics_configs': [{}, {'n': 1}, {}],
                    'scenario_configs': [{'display_as_html': True,
                                          'generate_kwargs': {'do_sample': True,
                                                              'eos_token_id': 0,
                                                              'max_length': 640,
                                                              'min_length': 10,
                                                              'temperature': 0.7,
                                                              'top_k': 0,
                                                              'top_p': 0.9},
                                          'name': 'unconditional',
                                          'num_samples': 512},
                                         {'display_as_html': True,
                                          'generate_kwargs': {'do_sample': True,
                                                              'eos_token_id': 0,
                                                              'max_length': 272,
                                                              'min_length': 10,
                                                              'temperature': 0.7,
                                                              'top_k': 0,
                                                              'top_p': 0.9},
                                          'name': 'functions',
                                          'num_samples': 512,
                                          'prompts_path': 'resources/functions_csnet.jsonl',
                                          'use_prompt_for_scoring': True}],
                    'scorer_config': {}},
     'kl_gpt3_callback': {'gpt3_kwargs': {'model_name': 'code-cushman-001'},
                          'max_tokens': 64,
                          'num_samples': 4096},
     'model': {'from_scratch': True,
               'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,
                                      'scale_attn_by': True},
               'path_or_name': 'codeparrot/codeparrot-small'},
     'objective': {'name': 'MLE'},
     'tokenizer': {'path_or_name': 'codeparrot/codeparrot-small'},
     'training': {'dataloader_num_workers': 0,
                  'effective_batch_size': 64,
                  'evaluation_strategy': 'no',
                  'fp16': True,
                  'hub_model_id': 'kejian/final-mle',
                  'hub_strategy': 'all_checkpoints',
                  'learning_rate': 0.0008,
                  'logging_first_step': True,
                  'logging_steps': 1,
                  'num_tokens': 3300000000.0,
                  'output_dir': 'training_output',
                  'per_device_train_batch_size': 16,
                  'push_to_hub': True,
                  'remove_unused_columns': False,
                  'save_steps': 5000,
                  'save_strategy': 'steps',
                  'seed': 42,
                  'warmup_ratio': 0.01,
                  'weight_decay': 0.1}}
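
Both generation scenarios sample with `do_sample: True`, `temperature: 0.7`, `top_k: 0` (which disables top-k filtering) and `top_p: 0.9`. Below is a minimal pure-Python sketch of what those settings do to a single next-token distribution: temperature scaling, then nucleus (top-p) filtering, then sampling from the surviving tokens. It is an illustration, not the Transformers implementation, whose boundary handling can differ slightly; `sample_top_p` is a hypothetical name and the example logits are made up.

```python
import math
import random


def sample_top_p(logits, temperature=0.7, top_p=0.9, rng=random):
    """Sample one token index: temperature scaling, then nucleus (top-p) filtering."""
    # Temperature: divide logits before the softmax; values below 1.0 sharpen the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Nucleus: keep the smallest set of most likely tokens whose cumulative probability reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Sample from the kept tokens; random.choices normalizes the weights itself.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


# Example: one draw from a made-up four-token distribution with the card's settings.
print(sample_top_p([2.0, 1.0, 0.1, -3.0], rng=random.Random(0)))
```

Because a temperature below 1 concentrates probability on the top tokens before the nucleus is cut, combining 0.7 with `top_p: 0.9` prunes the low-probability tail more aggressively than either setting would alone.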

# Wandb URL:

https://wandb.ai/kejian/uncategorized/runs/1oqdxrdb

|

9de0a29b9059262a3f37aeffb45bb1e3

|

ashesicsis1/xlsr-english

|

ashesicsis1

|

wav2vec2

| 13 | 6 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['librispeech_asr']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,006 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlsr-english

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the librispeech_asr dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3098

- Wer: 0.1451
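
The Wer figure is the word error rate: the word-level edit distance (substitutions, deletions and insertions) between the model's transcript and the reference, divided by the number of reference words, so 0.1451 means roughly 14.5 errors per 100 reference words. Below is a minimal pure-Python sketch of that definition for one utterance, assuming a non-empty reference. The card does not say which implementation produced its score; a common choice is the `wer` metric loaded through Datasets or Evaluate, which wraps the `jiwer` package and pools edits over the whole evaluation set rather than averaging per-utterance rates. Text normalization (casing, punctuation) can also shift the number.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the previous reference prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of a hypothesis word
                          prev[j - 1] + cost)  # substitution, or match when cost is 0
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Here one deleted word out of six reference words gives a WER of 1/6. Because insertions count as errors, WER can exceed 1.0.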

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
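
With `gradient_accumulation_steps: 2`, the optimizer steps once every two forward/backward passes of 8 examples, so each update sees 16 examples (`total_train_batch_size`). The toy pure-Python check below shows why this matches a single batch of 16 when each micro-batch loss is divided by the number of accumulation steps, which is what the Trainer does. The one-parameter linear model and its data are hypothetical and only illustrate the arithmetic.

```python
def grad_mean_squared_error(w, xs, ys):
    """d/dw of mean((w*x - y)^2) for a hypothetical one-parameter model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: 16 examples, i.e. one total_train_batch_size worth.
xs = [float(i) for i in range(16)]
ys = [3.0 * x + 1.0 for x in xs]
w = 0.5

# Reference: a single gradient computed on the full batch of 16.
full = grad_mean_squared_error(w, xs, ys)

# Accumulation: 2 micro-batches of 8 (train_batch_size), each gradient scaled by
# 1 / gradient_accumulation_steps and summed before the optimizer would step.
accumulation_steps, micro_batch = 2, 8
accumulated = 0.0
for k in range(accumulation_steps):
    batch = slice(k * micro_batch, (k + 1) * micro_batch)
    accumulated += grad_mean_squared_error(w, xs[batch], ys[batch]) / accumulation_steps

print(full, accumulated)
```

The equality is exact only for equal-sized micro-batches and a per-example mean loss; when a loss is normalized by quantities that vary between micro-batches (for example, sequence lengths), the accumulated update is close to, but not necessarily identical to, a true batch of 16.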

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 3.2453 | 2.37 | 400 | 0.5789 | 0.4447 |
| 0.3736 | 4.73 | 800 | 0.3737 | 0.2850 |
| 0.1712 | 7.1 | 1200 | 0.3038 | 0.2136 |
| 0.117 | 9.47 | 1600 | 0.3016 | 0.2072 |
| 0.0897 | 11.83 | 2000 | 0.3158 | 0.1920 |
| 0.074 | 14.2 | 2400 | 0.3137 | 0.1831 |
| 0.0595 | 16.57 | 2800 | 0.2967 | 0.1745 |
| 0.0493 | 18.93 | 3200 | 0.3192 | 0.1670 |
| 0.0413 | 21.3 | 3600 | 0.3176 | 0.1644 |
| 0.0322 | 23.67 | 4000 | 0.3079 | 0.1598 |
| 0.0296 | 26.04 | 4400 | 0.2978 | 0.1511 |
| 0.0235 | 28.4 | 4800 | 0.3098 | 0.1451 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

0cdbd6b5460b8087e04537fcefd94239

|

sd-concepts-library/party-girl

|

sd-concepts-library

| null | 11 | 0 | null | 6 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,246 | false |

### Party girl on Stable Diffusion