repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

sarahmiller137/distilbert-base-uncased-ft-m3-lc

|

sarahmiller137

|

distilbert

| 8 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

cc

|

['en']

|

['MIMIC-III']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['text classification']

| false | true | true | 1,459 | false |

## Model information:

This model is [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) fine-tuned on radiology report texts from the MIMIC-III database. The task was binary text classification, carried out to benchmark this model against a selection of other BERT variants on the classification of MIMIC-III radiology report texts. Labels of [0,1] were assigned as follows: radiology reports in MIMIC-III linked to an ICD9 diagnosis code for lung cancer = 1, and a random sample of reports not linked to any type of cancer diagnosis code = 0.

## Intended uses:

This model is intended to classify texts to identify the presence of lung cancer. The model predicts labels of [0,1].

## Limitations:

Note that the dataset and model may not be fully representative or suitable for all needs. It is recommended to review the dataset paper and the base model card before use:

- [MIMIC-III](https://www.nature.com/articles/sdata201635.pdf)

- [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased)

## How to use:

Load the model from the library using the following checkpoints:

```python

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("sarahmiller137/distilbert-base-uncased-ft-m3-lc")

model = AutoModel.from_pretrained("sarahmiller137/distilbert-base-uncased-ft-m3-lc")

```
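`AutoModel` returns hidden states rather than class scores. Assuming the checkpoint is instead loaded with a sequence-classification head (for example via `AutoModelForSequenceClassification`; this is an assumption, the card only shows `AutoModel`) and yields two logits per report, turning those logits into one of the [0,1] labels could be sketched as follows. The logit values are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return (label, probability) for the highest-scoring class."""
    probs = softmax(logits)
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, probs[label]


# Hypothetical logits for one report:
# index 0 = no cancer code, index 1 = lung cancer code.
label, prob = predict_label([-1.2, 2.3])
```

Reporting the probability next to the label makes it easier to apply a stricter threshold than a plain argmax if the use case calls for it.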

|

57d75d1b672ccd42b98de073a93e9c49

|

jonatasgrosman/exp_w2v2r_de_vp-100k_gender_male-2_female-8_s364

|

jonatasgrosman

|

wav2vec2

| 10 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['de']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'de']

| false | true | true | 498 | false |

# exp_w2v2r_de_vp-100k_gender_male-2_female-8_s364

Fine-tuned [facebook/wav2vec2-large-100k-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-100k-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
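A minimal sketch of checking the 16 kHz requirement before inference, assuming the input is an uncompressed WAV file and using only Python's standard `wave` module. The helper names are hypothetical and not part of HuggingSound.

```python
import wave

TARGET_RATE = 16_000  # sampling rate the card asks for


def wav_sample_rate(path):
    """Read the sampling rate from a WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(path, target_rate=TARGET_RATE):
    """True when the file's rate differs from the rate the model expects."""
    return wav_sample_rate(path) != target_rate
```

Files for which `needs_resampling` returns `True` should be resampled with an audio library before being passed to the model.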

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

d1c78de5a18fa342c65e4652359158ac

|

pfluo/k2fsa-zipformer-chinese-english-mixed

|

pfluo

| null | 25 | 0 | null | 1 | null | false | false | false |

apache-2.0

| null | null | null | 2 | 0 | 2 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 3,425 | false |

## Chinese-English ASR model using k2-zipformer-streaming

### AIShell-1 and Wenetspeech testset results with modified-beam-search streaming decode using epoch-14.pt

| decode_chunk_len | AIShell-1 | TEST_NET | TEST_MEETING |

|------------------|-----------|----------|--------------|

| 32 | 3.19 | 9.58 | 9.51 |

| 64 | 3.04 | 8.97 | 8.83 |

### Training and decoding commands

```

nohup ./pruned_transducer_stateless7_streaming/train.py --world-size 8 --num-epochs 30 --start-epoch 1 --feedforward-dims "1024,1024,1536,1536,1024" --exp-dir pruned_transducer_stateless7_streaming/exp --max-duration 360 > pruned_transducer_stateless7_streaming/exp/nohup.zipformer &

nohup ./pruned_transducer_stateless7_streaming/decode.py --epoch 6 --avg 1 --exp-dir ./pruned_transducer_stateless7_streaming/exp --max-duration 600 --decode-chunk-len 32 --decoding-method modified_beam_search --beam-size 4 > nohup.zipformer.deocode &

```

### Model unit is char+bpe as `data/lang_char_bpe/tokens.txt`

### Tips

The k2-fsa versions and training parameters used are:

```

{'best_train_loss': inf, 'best_valid_loss': inf, 'best_train_epoch': -1, 'best_valid_epoch': -1, 'batch_idx_train': 0, 'log_interval': 50, 'reset_interval': 200, 'valid_interval': 3000, 'feature_dim': 80, 'subsampling_factor': 4, 'warm_step': 2000, 'env_info': {'k2-version': '1.23.2', 'k2-build-type': 'Release', 'k2-with-cuda': True, 'k2-git-sha1': 'a74f59dba1863cd9386ba4d8815850421260eee7', 'k2-git-date': 'Fri Dec 2 08:32:22 2022', 'lhotse-version': '1.5.0.dev+git.8ce38fc.dirty', 'torch-version': '1.11.0+cu113', 'torch-cuda-available': True, 'torch-cuda-version': '11.3', 'python-version': '3.7', 'icefall-git-branch': 'master', 'icefall-git-sha1': '11b08db-dirty', 'icefall-git-date': 'Thu Jan 12 10:19:21 2023', 'icefall-path': '/opt/conda/lib/python3.7/site-packages', 'k2-path': '/opt/conda/lib/python3.7/site-packages/k2/__init__.py', 'lhotse-path': '/opt/conda/lib/python3.7/site-packages/lhotse/__init__.py', 'hostname': 'xxx', 'IP address': 'x.x.x.x'}, 'world_size': 8, 'master_port': 12354, 'tensorboard': True, 'num_epochs': 30, 'start_epoch': 1, 'start_batch': 0, 'exp_dir': PosixPath('pruned_transducer_stateless7_streaming/exp'), 'lang_dir': 'data/lang_char_bpe', 'base_lr': 0.01, 'lr_batches': 5000, 'lr_epochs': 3.5, 'context_size': 2, 'prune_range': 5, 'lm_scale': 0.25, 'am_scale': 0.0, 'simple_loss_scale': 0.5, 'seed': 42, 'print_diagnostics': False, 'inf_check': False, 'save_every_n': 2000, 'keep_last_k': 30, 'average_period': 200, 'use_fp16': False, 'num_encoder_layers': '2,4,3,2,4', 'feedforward_dims': '1024,1024,1536,1536,1024', 'nhead': '8,8,8,8,8', 'encoder_dims': '384,384,384,384,384', 'attention_dims': '192,192,192,192,192', 'encoder_unmasked_dims': '256,256,256,256,256', 'zipformer_downsampling_factors': '1,2,4,8,2', 'cnn_module_kernels': '31,31,31,31,31', 'decoder_dim': 512, 'joiner_dim': 512, 'short_chunk_size': 50, 'num_left_chunks': 4, 'decode_chunk_len': 32, 'manifest_dir': PosixPath('data/fbank'), 'max_duration': 360, 'bucketing_sampler': True, 'num_buckets': 300, 'concatenate_cuts': False, 'duration_factor': 1.0, 'gap': 1.0, 'on_the_fly_feats': False, 'shuffle': True, 'return_cuts': True, 'num_workers': 8, 'enable_spec_aug': True, 'spec_aug_time_warp_factor': 80, 'enable_musan': True, 'training_subset': '12k_hour', 'blank_id': 0, 'vocab_size': 6254}

```

|

fa5e48f9551d52ca2422b614da4070a6

|

hugogolastico/finetuning-sentiment-model-3000-samples

|

hugogolastico

|

distilbert

| 13 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,040 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3185

- Accuracy: 0.8667

- F1: 0.8675

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Tokenizers 0.13.2

|

e56c2df2a6a05469de0931dea9931408

|

sd-concepts-library/guttestreker

|

sd-concepts-library

| null | 18 | 0 | null | 8 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 2,062 | false |

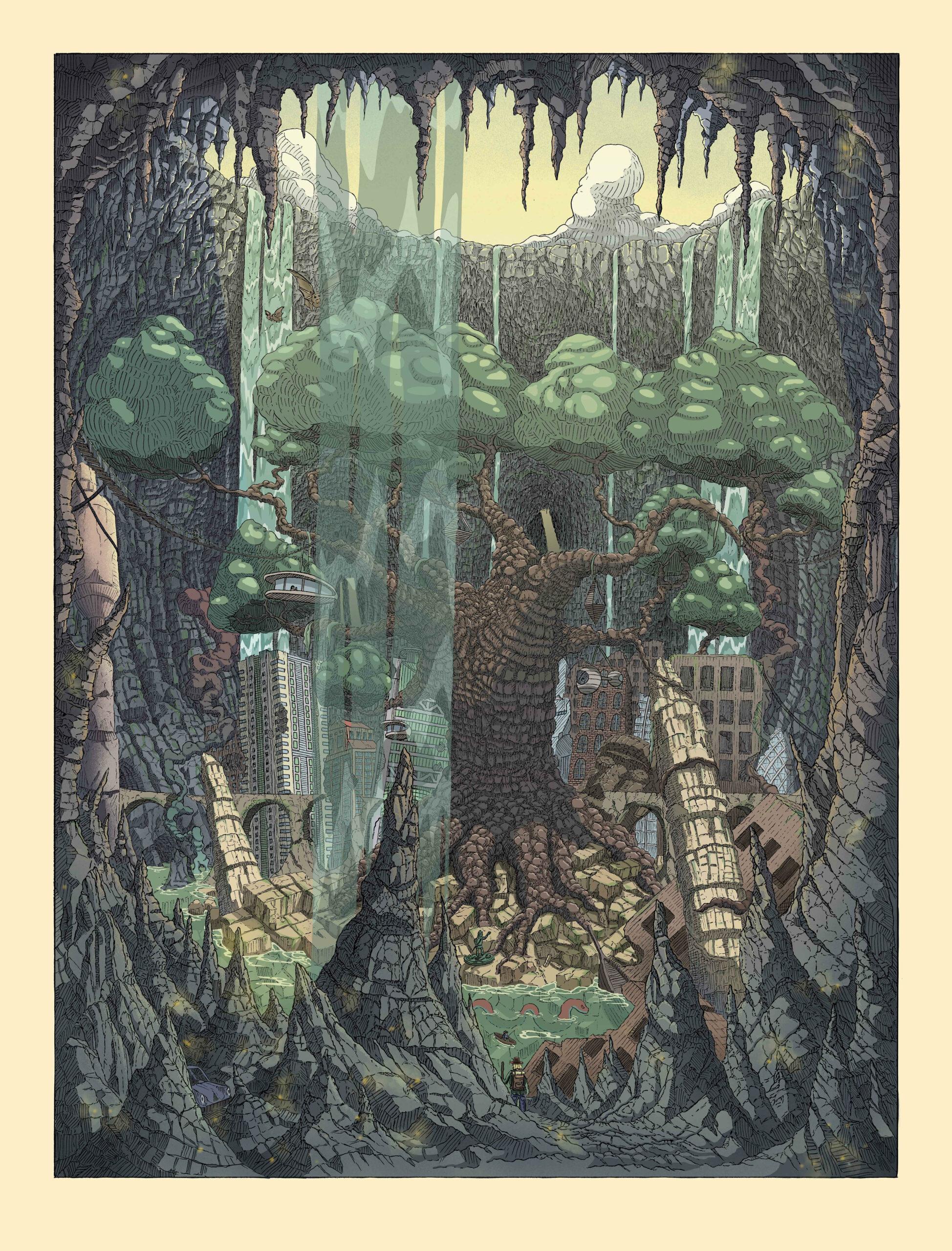

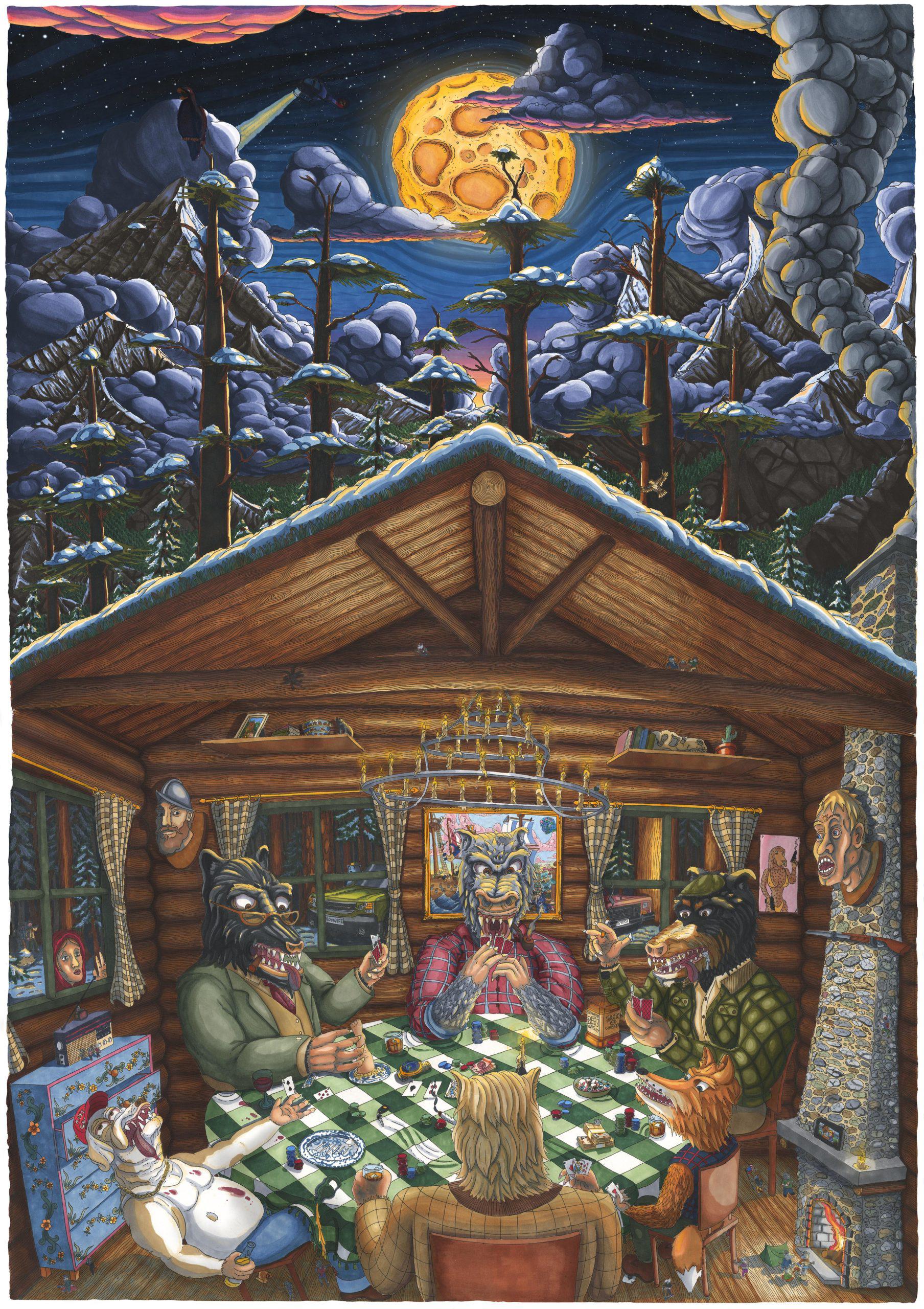

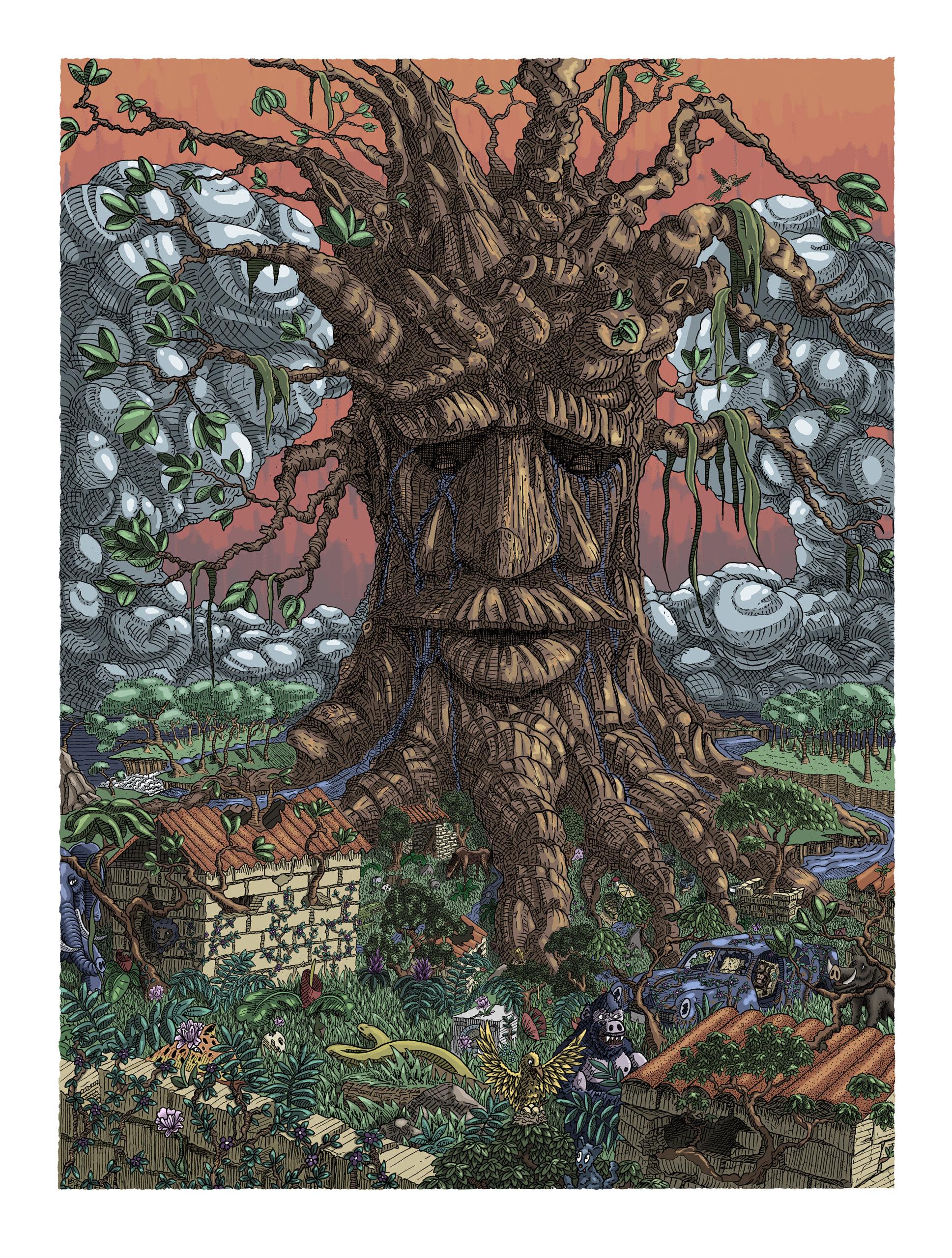

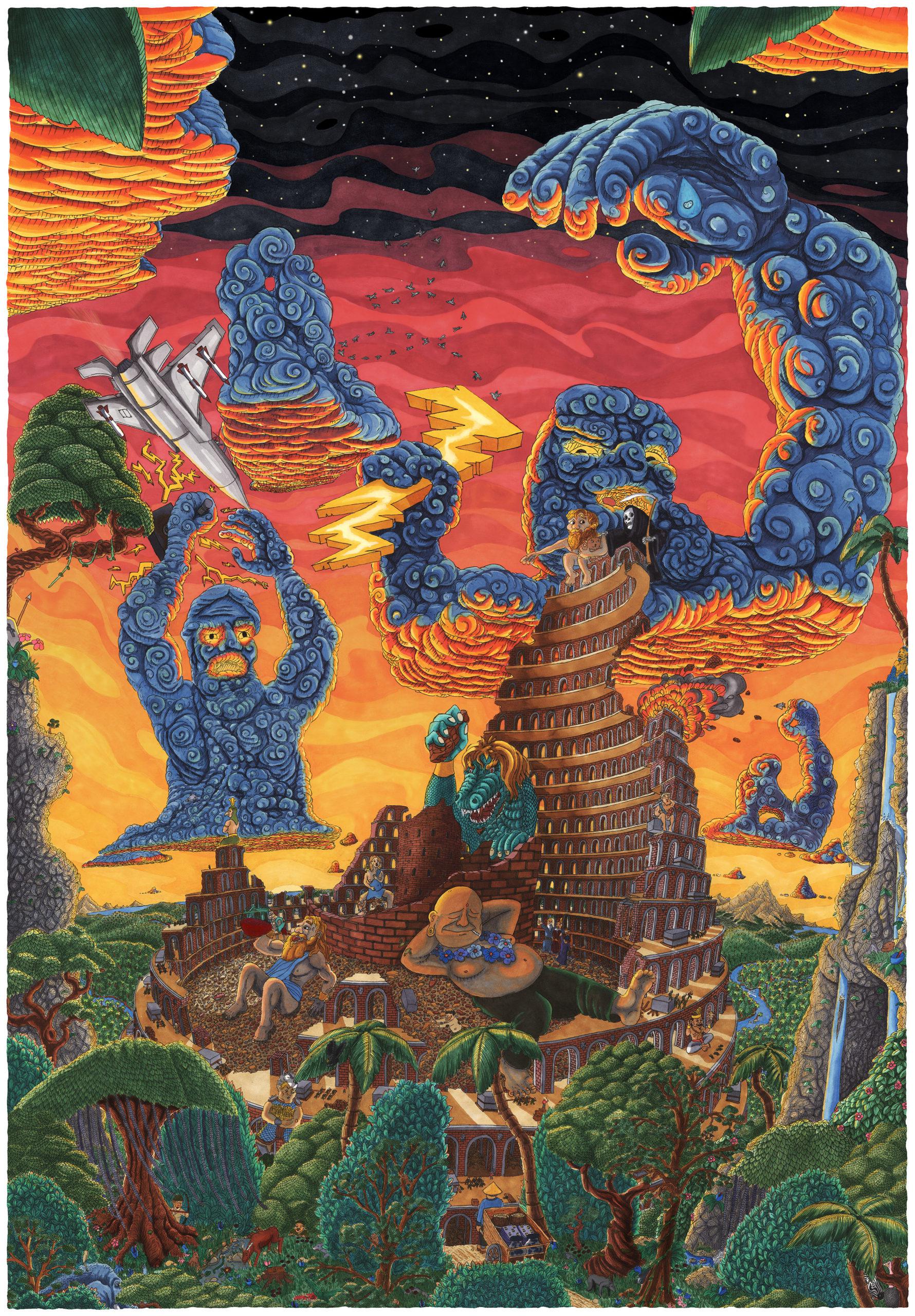

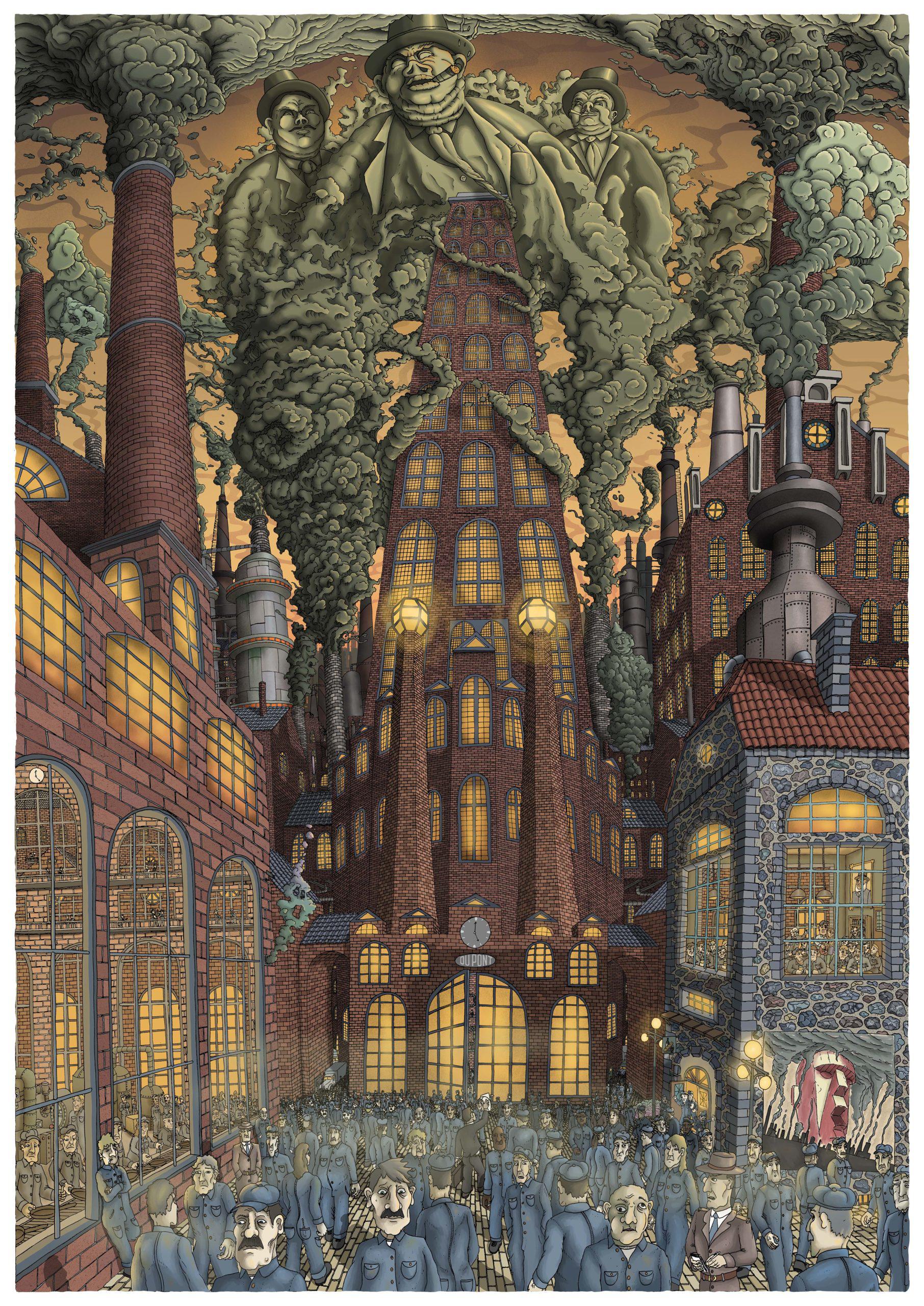

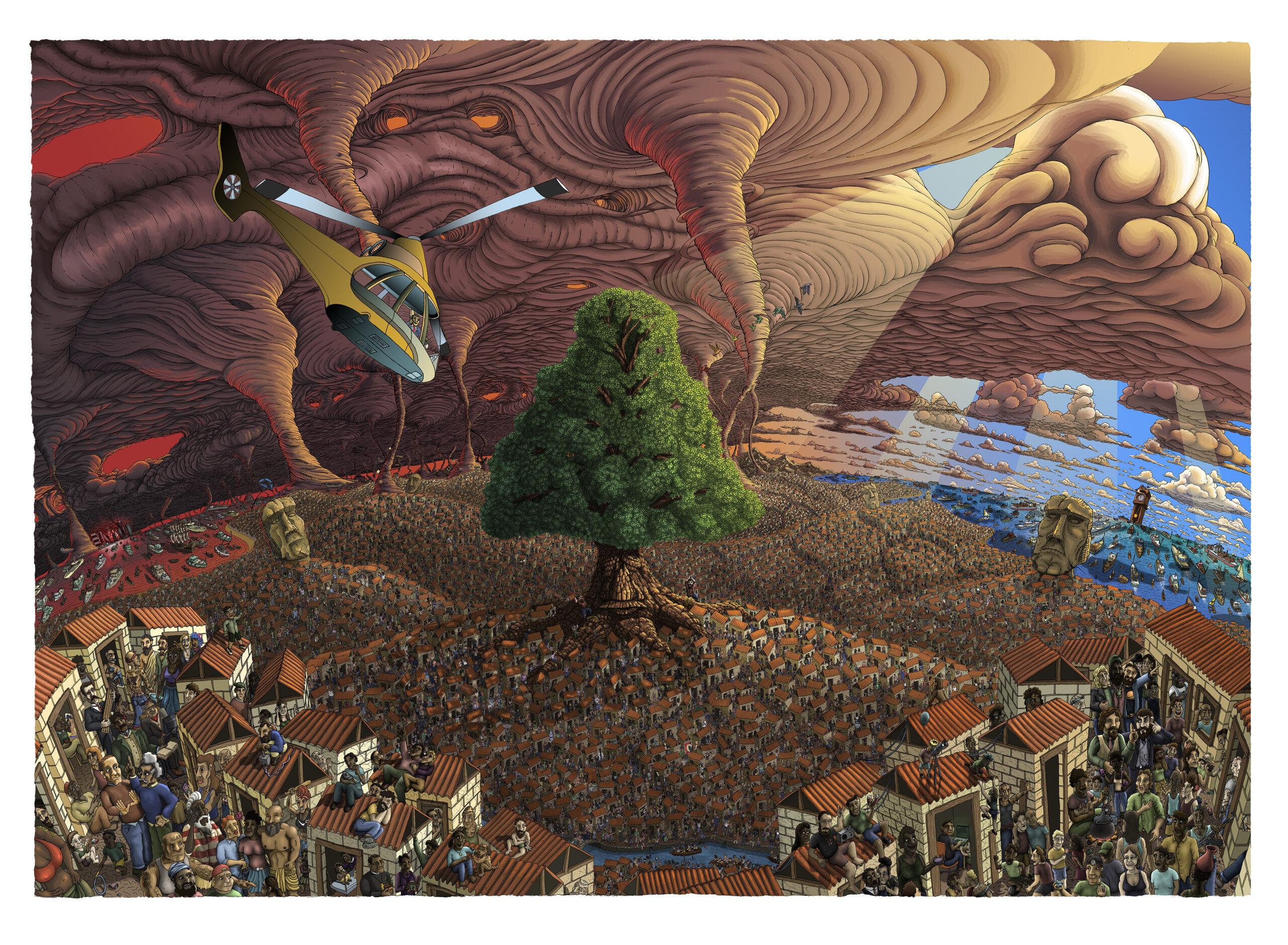

### guttestreker on Stable Diffusion

This is the `<guttestreker>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

d23ed7998bf843adf276e43e8dc76686

|

MaryaAI/opus-mt-ar-en-finetunedTanzil-v5-ar-to-en

|

MaryaAI

|

marian

| 9 | 5 |

transformers

| 0 |

text2text-generation

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,907 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# opus-mt-ar-en-finetunedTanzil-v5-ar-to-en

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-ar-en](https://huggingface.co/Helsinki-NLP/opus-mt-ar-en) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.8101

- Validation Loss: 0.9477

- Train Bleu: 9.3241

- Train Gen Len: 88.73

- Train Rouge1: 56.4906

- Train Rouge2: 34.2668

- Train Rougel: 53.2279

- Train Rougelsum: 53.7836

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Bleu | Train Gen Len | Train Rouge1 | Train Rouge2 | Train Rougel | Train Rougelsum | Epoch |

|:----------:|:---------------:|:----------:|:-------------:|:------------:|:------------:|:------------:|:---------------:|:-----:|

| 0.8735 | 0.9809 | 11.0863 | 78.68 | 56.4557 | 33.3673 | 53.4828 | 54.1197 | 0 |

| 0.8408 | 0.9647 | 9.8543 | 88.955 | 57.3797 | 34.3539 | 53.8783 | 54.3714 | 1 |

| 0.8101 | 0.9477 | 9.3241 | 88.73 | 56.4906 | 34.2668 | 53.2279 | 53.7836 | 2 |

### Framework versions

- Transformers 4.17.0.dev0

- TensorFlow 2.7.0

- Datasets 1.18.4.dev0

- Tokenizers 0.10.3

|

33f7f642d95f93003020bd8ab0888dac

|

kingabzpro/wav2vec2-large-xls-r-1b-Indonesian

|

kingabzpro

|

wav2vec2

| 11 | 7 |

transformers

| 1 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['id']

|

['mozilla-foundation/common_voice_8_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'hf-asr-leaderboard', 'robust-speech-event']

| true | true | true | 1,576 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-1b-Indonesian

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-1b](https://huggingface.co/facebook/wav2vec2-xls-r-1b) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9550

- Wer: 0.4551

- Cer: 0.1643

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 400

- num_epochs: 50

- mixed_precision_training: Native AMP
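The hyperparameters above imply an effective batch of 64 × 2 = 128 and a linear schedule with 400 warmup steps. The sketch below assumes the usual shape for such a schedule (a linear ramp from 0 to the base learning rate, then a linear decay to 0) and a total of 1300 optimizer steps. That total is an inference from the results table (200 steps at epoch 7.69, about 26 steps per epoch, times 50 epochs); the card does not state it.

```python
def linear_schedule_lr(step, base_lr=3e-4, warmup_steps=400, total_steps=1300):
    """Learning rate at a given optimizer step: linear warmup, then linear decay."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Effective batch size when gradients are accumulated before each update
# (assuming a single device).
train_batch_size = 64
gradient_accumulation_steps = 2
total_train_batch_size = train_batch_size * gradient_accumulation_steps
```

Under these assumptions the learning rate peaks at step 400 and reaches zero at step 1300.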

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|

| 3.663 | 7.69 | 200 | 0.7898 | 0.6039 | 0.1848 |

| 0.7424 | 15.38 | 400 | 1.0215 | 0.5615 | 0.1924 |

| 0.4494 | 23.08 | 600 | 1.0901 | 0.5249 | 0.1932 |

| 0.5075 | 30.77 | 800 | 1.1013 | 0.5079 | 0.1935 |

| 0.4671 | 38.46 | 1000 | 1.1034 | 0.4916 | 0.1827 |

| 0.1928 | 46.15 | 1200 | 0.9550 | 0.4551 | 0.1643 |
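For readers unfamiliar with the Wer and Cer columns: both are edit-distance ratios, computed over words and over characters respectively. The sketch below is a plain-Python illustration of that definition, not the evaluation code used for this model, and it assumes a non-empty reference.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitute, insert, delete)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edits divided by reference word count."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edits divided by reference length."""
    return edit_distance(list(reference), list(hypothesis)) / len(reference)
```

A Wer of 0.4551 therefore means roughly 45 word-level edits per 100 reference words.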

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

|

be9baf1ef1e20b45880f9c5ba79775f9

|

helloway/lenet

|

helloway

| null | 5 | 22 |

mindspore

| 0 |

image-classification

| false | false | false |

apache-2.0

| null |

['mnist']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['image-classification']

| false | true | true | 888 | false |

## MindSpore Image Classification models with MNIST on the 🤗Hub!

This repository contains the model from [this notebook on image classification with MNIST dataset using LeNet architecture](https://gitee.com/mindspore/mindspore/blob/r1.2/model_zoo/official/cv/lenet/README.md#).

## LeNet Description

LeNet-5 is one of the earliest convolutional neural networks, proposed by Yann LeCun and others in 1998 in the research paper Gradient-Based Learning Applied to Document Recognition. They used this architecture to recognize handwritten and machine-printed characters.

The main reason behind the popularity of this model was its simple and straightforward architecture. It is a multi-layer convolutional neural network for image classification.

[source](https://www.analyticsvidhya.com/blog/2021/03/the-architecture-of-lenet-5/)

|

e1c796dc1c1d4c96216577041344ff70

|

elopezlopez/Bio_ClinicalBERT_fold_6_ternary_v1

|

elopezlopez

|

bert

| 13 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,668 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Bio_ClinicalBERT_fold_6_ternary_v1

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7302

- F1: 0.8128

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| No log | 1.0 | 292 | 0.5359 | 0.7833 |

| 0.5585 | 2.0 | 584 | 0.5376 | 0.8026 |

| 0.5585 | 3.0 | 876 | 0.6117 | 0.8038 |

| 0.2314 | 4.0 | 1168 | 0.8036 | 0.7974 |

| 0.2314 | 5.0 | 1460 | 0.9467 | 0.8179 |

| 0.1093 | 6.0 | 1752 | 1.2957 | 0.7923 |

| 0.0384 | 7.0 | 2044 | 1.3423 | 0.8026 |

| 0.0384 | 8.0 | 2336 | 1.2644 | 0.8218 |

| 0.021 | 9.0 | 2628 | 1.3093 | 0.8231 |

| 0.021 | 10.0 | 2920 | 1.3282 | 0.8179 |

| 0.0129 | 11.0 | 3212 | 1.3853 | 0.8295 |

| 0.0078 | 12.0 | 3504 | 1.4705 | 0.8154 |

| 0.0078 | 13.0 | 3796 | 1.5063 | 0.8167 |

| 0.0064 | 14.0 | 4088 | 1.5293 | 0.8179 |

| 0.0064 | 15.0 | 4380 | 1.6303 | 0.8128 |

| 0.0085 | 16.0 | 4672 | 1.5945 | 0.8115 |

| 0.0085 | 17.0 | 4964 | 1.6899 | 0.8103 |

| 0.0056 | 18.0 | 5256 | 1.6952 | 0.8064 |

| 0.0055 | 19.0 | 5548 | 1.7550 | 0.7936 |

| 0.0055 | 20.0 | 5840 | 1.6779 | 0.8141 |

| 0.003 | 21.0 | 6132 | 1.7064 | 0.8128 |

| 0.003 | 22.0 | 6424 | 1.7192 | 0.8154 |

| 0.0013 | 23.0 | 6716 | 1.8188 | 0.7974 |

| 0.0014 | 24.0 | 7008 | 1.7273 | 0.8128 |

| 0.0014 | 25.0 | 7300 | 1.7302 | 0.8128 |

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

dd6bff728f506563faa19306a8719591

|

weijiahaha/t5-small-summarization

|

weijiahaha

|

t5

| 28 | 0 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['cnn_dailymail']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 796 | false |

# t5-small-summarization

This model is a fine-tuned version of t5-small (https://huggingface.co/t5-small) on the cnn_dailymail dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6477

## Model description

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.9195 | 1.0 | 718 | 1.6477 |

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

0289993ea0c0311b4367e58b503c83f5

|

anas-awadalla/bart-base-few-shot-k-128-finetuned-squad-seq2seq-seed-0

|

anas-awadalla

|

bart

| 23 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 963 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-base-few-shot-k-128-finetuned-squad-seq2seq-seed-0

This model is a fine-tuned version of [facebook/bart-base](https://huggingface.co/facebook/bart-base) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 0

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 35.0

### Training results

### Framework versions

- Transformers 4.20.0.dev0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.11.6

|

86d6373a9103891a7d9b72b71cbf1bfb

|

anas-awadalla/bert-base-uncased-few-shot-k-256-finetuned-squad-seed-6

|

anas-awadalla

|

bert

| 16 | 5 |

transformers

| 0 |

question-answering

| true | false | false |

apache-2.0

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 998 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-few-shot-k-256-finetuned-squad-seed-6

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10.0

### Training results

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.17.0

- Tokenizers 0.10.3

|

ba3391316ba44d4532f8249e820c207e

|

fathyshalab/massive_general-roberta-large-v1-5-95

|

fathyshalab

|

roberta

| 14 | 22 |

sentence-transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['setfit', 'sentence-transformers', 'text-classification']

| false | true | true | 1,464 | false |

# fathyshalab/massive_general-roberta-large-v1-5-95

This is a [SetFit model](https://github.com/huggingface/setfit) that can be used for text classification. The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.

## Usage

To use this model for inference, first install the SetFit library:

```bash

python -m pip install setfit

```

You can then run inference as follows:

```python

from setfit import SetFitModel

# Download from Hub and run inference

model = SetFitModel.from_pretrained("fathyshalab/massive_general-roberta-large-v1-5-95")

# Run inference

preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

```

## BibTeX entry and citation info

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

|

1acf2c8135ba6251bef14d014b8aea7a

|

redfoo/stable-diffusion-2-inpainting-endpoint-foo

|

redfoo

| null | 21 | 4 |

diffusers

| 0 | null | false | false | false |

openrail++

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['stable-diffusion', 'stable-diffusion-diffusers', 'text-guided-to-image-inpainting', 'endpoints-template']

| false | true | true | 2,570 | false |

# Fork of [stabilityai/stable-diffusion-2-inpainting](https://huggingface.co/stabilityai/stable-diffusion-2-inpainting)

> Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input.

> For more information about how Stable Diffusion functions, please have a look at [🤗's Stable Diffusion with 🧨Diffusers blog](https://huggingface.co/blog/stable_diffusion).

For more information about the model, license and limitations check the original model card at [stabilityai/stable-diffusion-2-inpainting](https://huggingface.co/stabilityai/stable-diffusion-2-inpainting).

---

This repository implements a custom `handler` task for `text-guided-to-image-inpainting` for 🤗 Inference Endpoints. The code for the customized pipeline is in the [handler.py](https://huggingface.co/philschmid/stable-diffusion-2-inpainting-endpoint/blob/main/handler.py).

There is also a [notebook](https://huggingface.co/philschmid/stable-diffusion-2-inpainting-endpoint/blob/main/create_handler.ipynb) included, on how to create the `handler.py`

### Expected request payload

```json

{
  "inputs": "A prompt used for image generation",
  "image": "iVBORw0KGgoAAAANSUhEUgAAAgAAAAIACAIAAAB7GkOtAAAABGdBTUEAALGPC",
  "mask_image": "iVBORw0KGgoAAAANSUhEUgAAAgAAAAIACAIAAAB7GkOtAAAABGdBTUEAALGPC"
}

```
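The payload can be assembled and sanity-checked offline before any request is sent. The following is a minimal sketch using only the standard library; `build_payload` is a hypothetical helper and the byte strings are placeholders standing in for real PNG file contents.

```python
import base64
import json


def build_payload(prompt, image_bytes, mask_bytes):
    """Build the request body: prompt plus base64-encoded image and mask."""
    return {
        "inputs": prompt,
        "image": base64.b64encode(image_bytes).decode("utf-8"),
        "mask_image": base64.b64encode(mask_bytes).decode("utf-8"),
    }


# Placeholder bytes; in practice these come from reading the image files.
payload = build_payload(
    "A prompt used for image generation", b"\x89PNG-image", b"\x89PNG-mask"
)

# All values are plain strings, so the payload serializes to JSON directly,
# and the image survives the encode/decode round trip unchanged.
body = json.dumps(payload)
decoded = base64.b64decode(json.loads(body)["image"])
```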

Below is an example of how to run a request using Python and `requests`.

## Run Request

```python

import base64
from io import BytesIO

import requests as r
from PIL import Image

ENDPOINT_URL = ""
HF_TOKEN = ""


# helper image utils
def encode_image(image_path):
    # read the file and return its contents as a base64 string
    with open(image_path, "rb") as i:
        b64 = base64.b64encode(i.read())
    return b64.decode("utf-8")


def predict(prompt, image, mask_image):
    image = encode_image(image)
    mask_image = encode_image(mask_image)

    # prepare sample payload
    payload = {"inputs": prompt, "image": image, "mask_image": mask_image}
    # headers
    headers = {
        "Authorization": f"Bearer {HF_TOKEN}",
        "Content-Type": "application/json",
        "Accept": "image/png",  # important to get an image back
    }

    response = r.post(ENDPOINT_URL, headers=headers, json=payload)
    img = Image.open(BytesIO(response.content))
    return img


prediction = predict(
    prompt="Face of a bengal cat, high resolution, sitting on a park bench",
    image="dog.png",
    mask_image="mask_dog.png",
)

```

expected output

|

b3ff7a97f01a88587144d8f2d271b7ff

|

buruzaemon/test-minilm-finetuned-emotion

|

buruzaemon

|

bert

| 13 | 9 |

transformers

| 0 |

text-classification

| true | false | false |

bsd-3-clause

|

['en']

|

['SetFit/emotion']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['microsoft/MiniLM-L12-H384-uncased']

| false | true | true | 603 | false |

# test-minilm-finetuned-emotion fine-tuned model (uncased)

This model is a fine-tuned extension of the [Microsoft MiniLM distilled model](https://huggingface.co/microsoft/MiniLM-L12-H384-uncased). This is the result of the learning exercise for [Simple Training with the 🤗 Transformers Trainer](https://www.youtube.com/watch?v=u--UVvH-LIQ&t=198s) and also going through Chapter 2, Text Classification in [Natural Language Processing with Transformers](https://transformersbook.com/), Revised Color Edition, May 2022.

This model is uncased: it does not make a difference between english and English.

|

b188bd8502ec108a1b1af9c2e5f6bf2b

|

sd-concepts-library/anime-background-style-v2

|

sd-concepts-library

| null | 19 | 0 | null | 12 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 3,049 | false |

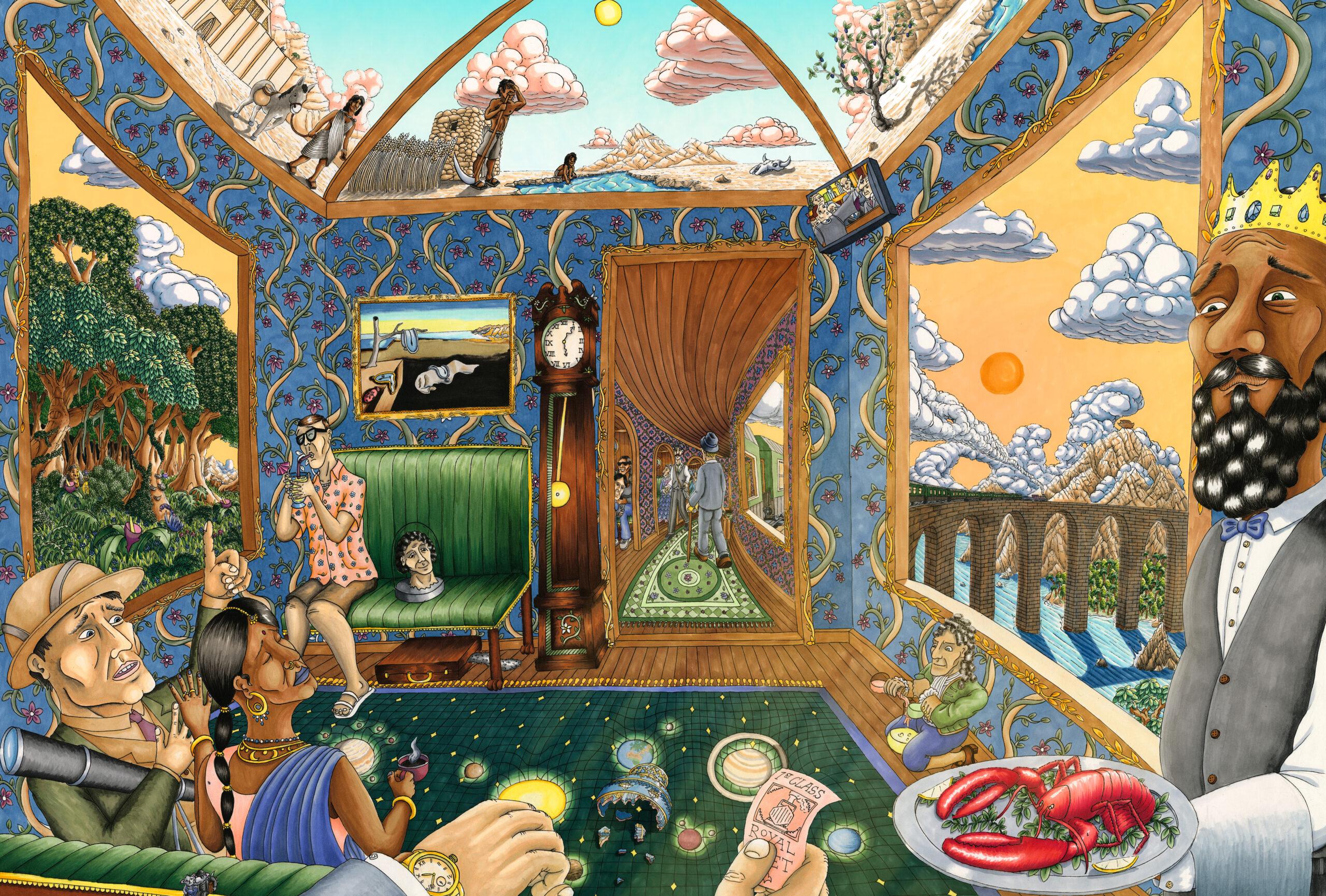

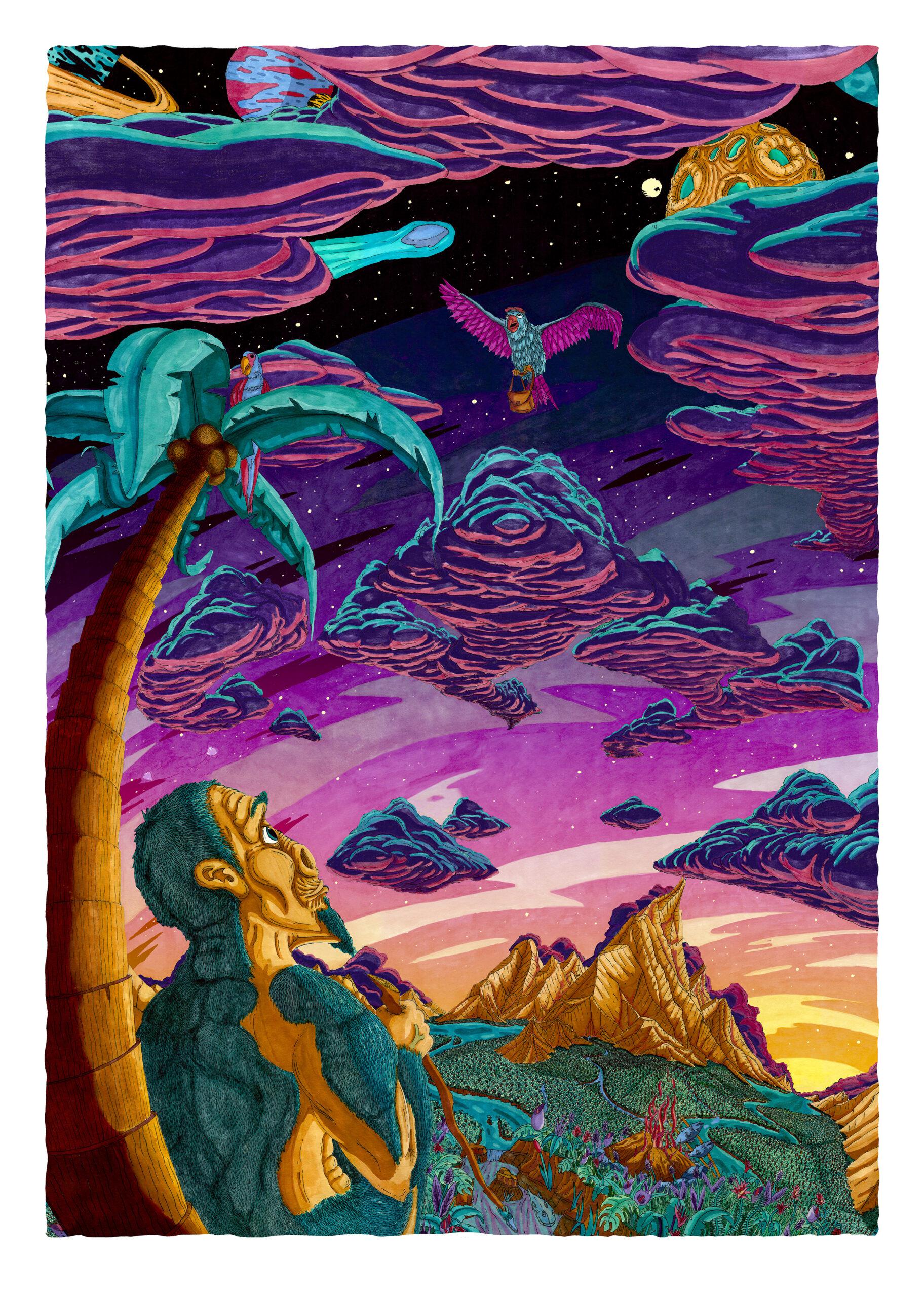

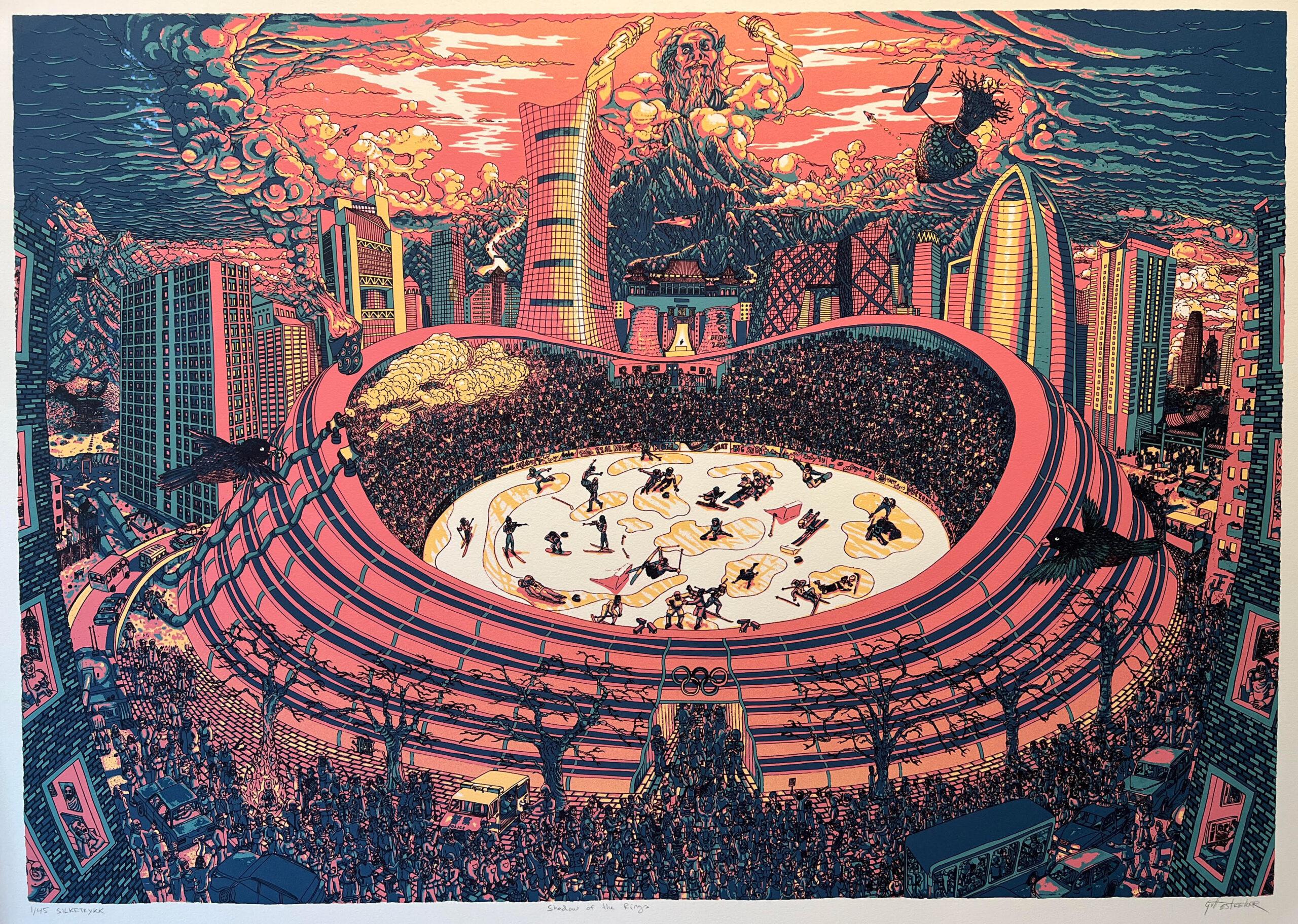

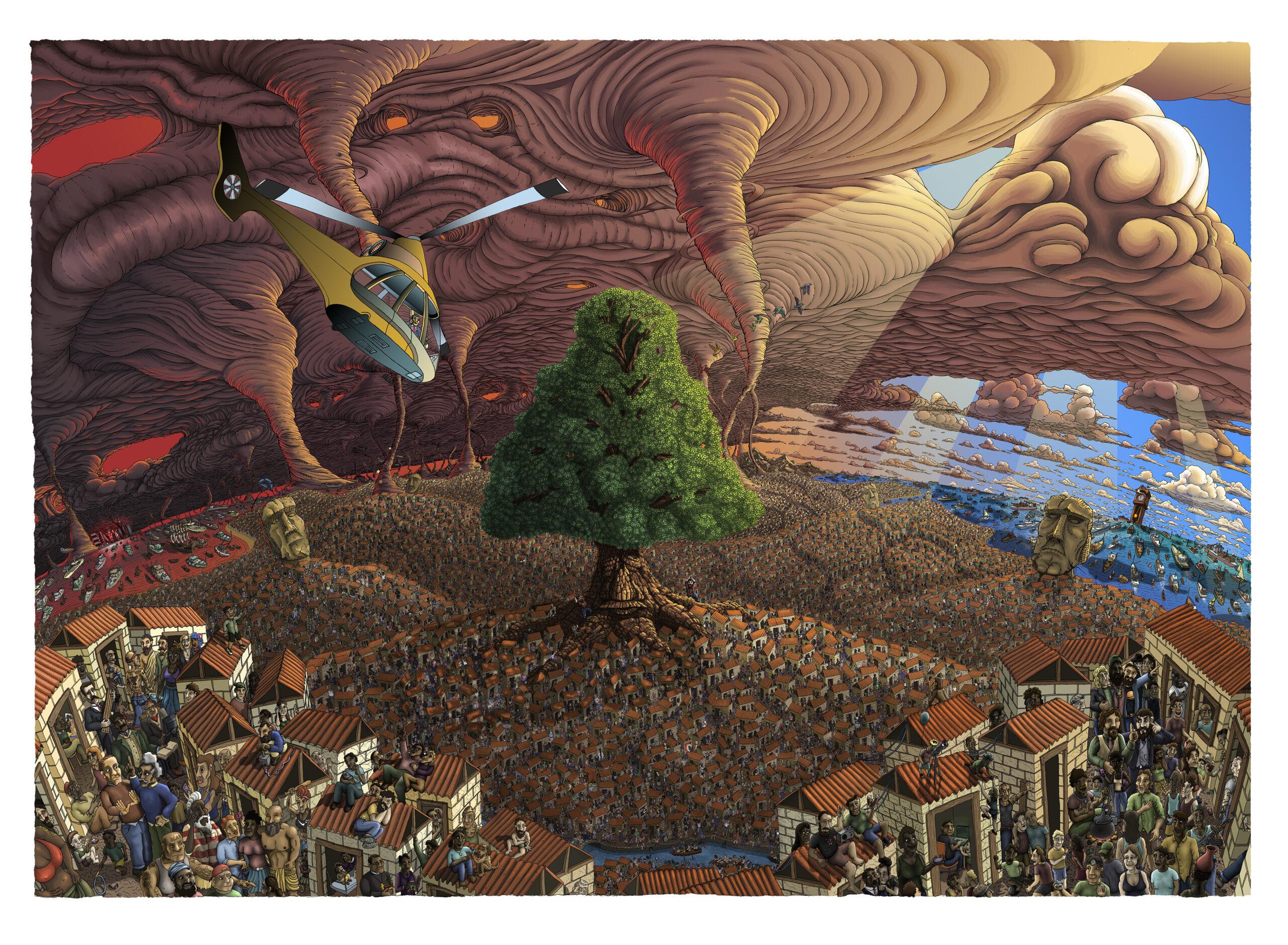

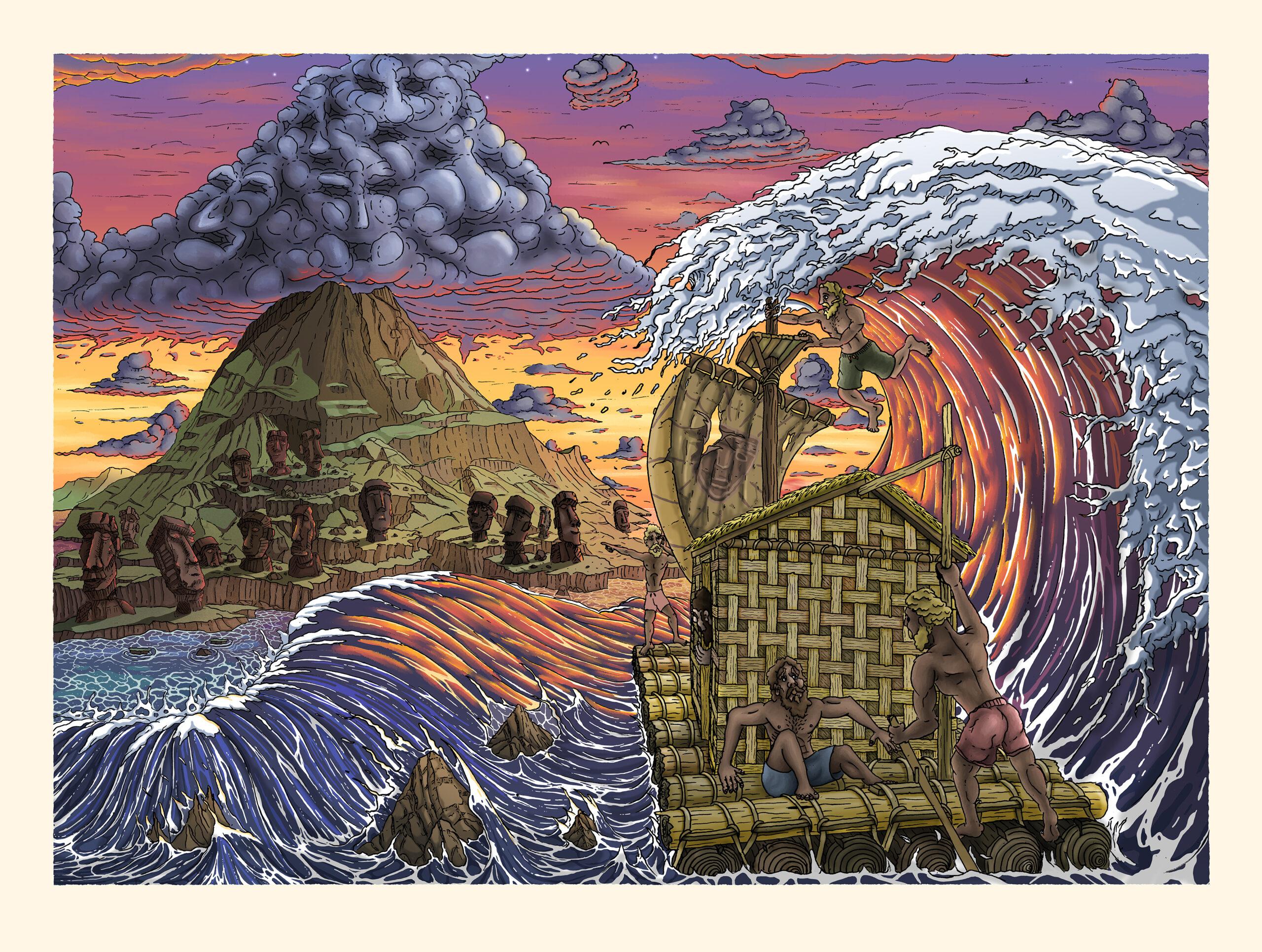

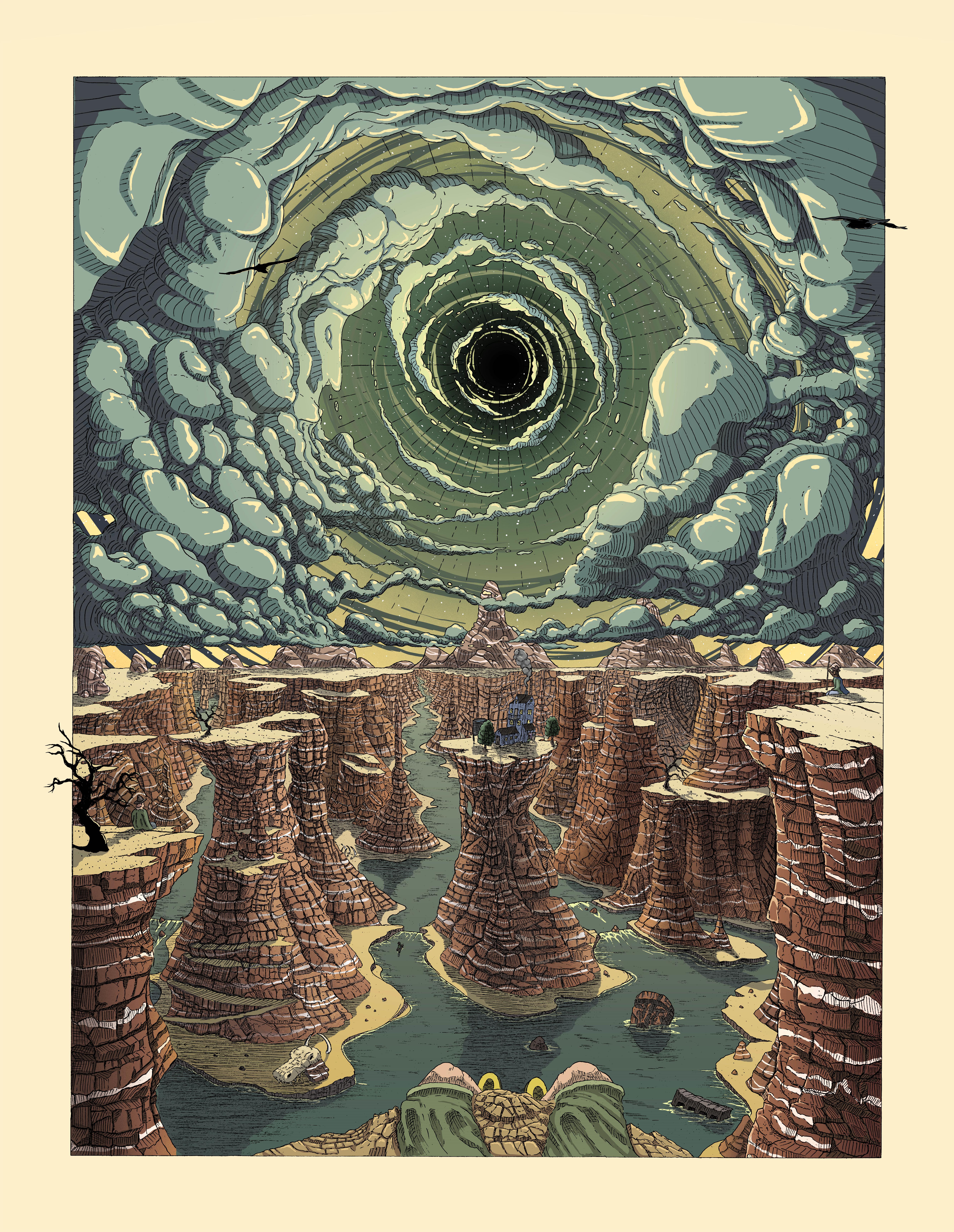

### Anime Background style (v2) on Stable Diffusion

This is the `<anime-background-style-v2>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

Here are images generated with this style:

|

cc8b7190cd8440412cc4d911ddef42f5

|

Helsinki-NLP/opus-mt-eo-ru

|

Helsinki-NLP

|

marian

| 11 | 250 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

|

['eo', 'ru']

| null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 1,999 | false |

### epo-rus

* source group: Esperanto

* target group: Russian

* OPUS readme: [epo-rus](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/epo-rus/README.md)

* model: transformer-align

* source language(s): epo

* target language(s): rus


* pre-processing: normalization + SentencePiece (spm4k,spm4k)

* download original weights: [opus-2020-06-16.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/epo-rus/opus-2020-06-16.zip)

* test set translations: [opus-2020-06-16.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/epo-rus/opus-2020-06-16.test.txt)

* test set scores: [opus-2020-06-16.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/epo-rus/opus-2020-06-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.epo.rus | 17.7 | 0.379 |

### System Info:

- hf_name: epo-rus

- source_languages: epo

- target_languages: rus

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/epo-rus/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['eo', 'ru']

- src_constituents: {'epo'}

- tgt_constituents: {'rus'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm4k,spm4k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/epo-rus/opus-2020-06-16.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/epo-rus/opus-2020-06-16.test.txt

- src_alpha3: epo

- tgt_alpha3: rus

- short_pair: eo-ru

- chrF2_score: 0.379

- bleu: 17.7

- brevity_penalty: 0.9179999999999999

- ref_len: 71288.0

- src_name: Esperanto

- tgt_name: Russian

- train_date: 2020-06-16

- src_alpha2: eo

- tgt_alpha2: ru

- prefer_old: False

- long_pair: epo-rus

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41
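The `brevity_penalty` and `ref_len` fields above are related through the standard BLEU brevity penalty, which is 1 when the system output is at least as long as the reference and exp(1 - r/c) otherwise. A small sketch of that formula and its inverse follows; the function names are hypothetical, and the rounded value 0.918 is used in place of the stored 0.9179999999999999.

```python
import math


def brevity_penalty(hyp_len, ref_len):
    """Standard BLEU brevity penalty for hypothesis length c and reference length r."""
    if hyp_len <= 0:
        return 0.0
    if hyp_len >= ref_len:
        return 1.0
    return math.exp(1.0 - ref_len / hyp_len)


def hyp_len_from_penalty(bp, ref_len):
    """Invert the formula for 0 < bp < 1: c = r / (1 - ln(bp))."""
    return ref_len / (1.0 - math.log(bp))


# Total output length implied by the reported penalty and reference length.
implied_hyp_len = hyp_len_from_penalty(0.918, 71288.0)
```

A penalty below 1 indicates that the system translations were, in total, shorter than the references.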

|

47e290ba1960ea72a83629964fe041c6

|

jakub014/bert-base-uncased-finetuned-convincingness-IBM

|

jakub014

|

bert

| 15 | 16 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,473 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-convincingness-IBM

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6537

- Accuracy: 0.7511

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 270 | 0.5707 | 0.7337 |

| 0.4673 | 2.0 | 540 | 0.6059 | 0.7221 |

| 0.4673 | 3.0 | 810 | 0.6537 | 0.7511 |

| 0.2218 | 4.0 | 1080 | 0.8485 | 0.7467 |

| 0.2218 | 5.0 | 1350 | 0.9221 | 0.7438 |
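The headline figures reported for this model (Loss 0.6537, Accuracy 0.7511) match the epoch-3 row rather than the final epoch. One plausible reading, which the card does not confirm, is that the checkpoint with the best validation accuracy was kept. Selecting that row from the table can be sketched as:

```python
# (epoch, validation loss, accuracy) copied from the training results table.
rows = [
    (1, 0.5707, 0.7337),
    (2, 0.6059, 0.7221),
    (3, 0.6537, 0.7511),
    (4, 0.8485, 0.7467),
    (5, 0.9221, 0.7438),
]

# Pick the epoch with the highest validation accuracy.
best_epoch, best_loss, best_accuracy = max(rows, key=lambda row: row[2])
```

Note that validation loss is lowest at epoch 1, so choosing by loss instead of accuracy would select a different checkpoint.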

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

ba7a0355039717b48dd4f425ac222129

|

responsibility-framing/predict-perception-bert-cause-human

|

responsibility-framing

|

bert

| 12 | 19 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 9,145 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# predict-perception-bert-cause-human

This model is a fine-tuned version of [dbmdz/bert-base-italian-xxl-cased](https://huggingface.co/dbmdz/bert-base-italian-xxl-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7139

- Rmse: 1.2259

- Rmse Cause::a Causata da un essere umano: 1.2259

- Mae: 1.0480

- Mae Cause::a Causata da un essere umano: 1.0480

- R2: 0.4563

- R2 Cause::a Causata da un essere umano: 0.4563

- Cos: 0.4783

- Pair: 0.0

- Rank: 0.5

- Neighbors: 0.3953

- Rsa: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 20

- eval_batch_size: 8

- seed: 1996

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rmse | Rmse Cause::a Causata da un essere umano | Mae | Mae Cause::a Causata da un essere umano | R2 | R2 Cause::a Causata da un essere umano | Cos | Pair | Rank | Neighbors | Rsa |

|:-------------:|:-----:|:----:|:---------------:|:------:|:----------------------------------------:|:------:|:---------------------------------------:|:------:|:--------------------------------------:|:------:|:----:|:----:|:---------:|:---:|

| 1.0874 | 1.0 | 15 | 1.2615 | 1.6296 | 1.6296 | 1.3836 | 1.3836 | 0.0393 | 0.0393 | 0.0435 | 0.0 | 0.5 | 0.2935 | nan |

| 0.9577 | 2.0 | 30 | 1.1988 | 1.5886 | 1.5886 | 1.3017 | 1.3017 | 0.0870 | 0.0870 | 0.4783 | 0.0 | 0.5 | 0.3944 | nan |

| 0.8414 | 3.0 | 45 | 0.9870 | 1.4414 | 1.4414 | 1.1963 | 1.1963 | 0.2483 | 0.2483 | 0.3913 | 0.0 | 0.5 | 0.3048 | nan |

| 0.7291 | 4.0 | 60 | 0.9098 | 1.3839 | 1.3839 | 1.1297 | 1.1297 | 0.3071 | 0.3071 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.5949 | 5.0 | 75 | 0.9207 | 1.3921 | 1.3921 | 1.2079 | 1.2079 | 0.2988 | 0.2988 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.4938 | 6.0 | 90 | 0.8591 | 1.3448 | 1.3448 | 1.1842 | 1.1842 | 0.3458 | 0.3458 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.3611 | 7.0 | 105 | 0.8176 | 1.3119 | 1.3119 | 1.1454 | 1.1454 | 0.3774 | 0.3774 | 0.5652 | 0.0 | 0.5 | 0.4091 | nan |

| 0.2663 | 8.0 | 120 | 0.6879 | 1.2034 | 1.2034 | 1.0300 | 1.0300 | 0.4761 | 0.4761 | 0.5652 | 0.0 | 0.5 | 0.4091 | nan |

| 0.1833 | 9.0 | 135 | 0.7704 | 1.2735 | 1.2735 | 1.1031 | 1.1031 | 0.4133 | 0.4133 | 0.5652 | 0.0 | 0.5 | 0.3152 | nan |

| 0.1704 | 10.0 | 150 | 0.7097 | 1.2222 | 1.2222 | 1.0382 | 1.0382 | 0.4596 | 0.4596 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.1219 | 11.0 | 165 | 0.6872 | 1.2027 | 1.2027 | 1.0198 | 1.0198 | 0.4767 | 0.4767 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.1011 | 12.0 | 180 | 0.7201 | 1.2312 | 1.2312 | 1.0466 | 1.0466 | 0.4516 | 0.4516 | 0.5652 | 0.0 | 0.5 | 0.3152 | nan |

| 0.0849 | 13.0 | 195 | 0.7267 | 1.2368 | 1.2368 | 1.0454 | 1.0454 | 0.4466 | 0.4466 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0818 | 14.0 | 210 | 0.7361 | 1.2448 | 1.2448 | 1.0565 | 1.0565 | 0.4394 | 0.4394 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0634 | 15.0 | 225 | 0.7158 | 1.2275 | 1.2275 | 1.0384 | 1.0384 | 0.4549 | 0.4549 | 0.3913 | 0.0 | 0.5 | 0.3306 | nan |

| 0.065 | 16.0 | 240 | 0.7394 | 1.2475 | 1.2475 | 1.0659 | 1.0659 | 0.4369 | 0.4369 | 0.3913 | 0.0 | 0.5 | 0.3306 | nan |

| 0.0541 | 17.0 | 255 | 0.7642 | 1.2683 | 1.2683 | 1.0496 | 1.0496 | 0.4181 | 0.4181 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0577 | 18.0 | 270 | 0.7137 | 1.2257 | 1.2257 | 1.0303 | 1.0303 | 0.4565 | 0.4565 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0474 | 19.0 | 285 | 0.7393 | 1.2475 | 1.2475 | 1.0447 | 1.0447 | 0.4370 | 0.4370 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.0494 | 20.0 | 300 | 0.7157 | 1.2274 | 1.2274 | 1.0453 | 1.0453 | 0.4550 | 0.4550 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.0434 | 21.0 | 315 | 0.7248 | 1.2352 | 1.2352 | 1.0462 | 1.0462 | 0.4480 | 0.4480 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.049 | 22.0 | 330 | 0.7384 | 1.2467 | 1.2467 | 1.0613 | 1.0613 | 0.4377 | 0.4377 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0405 | 23.0 | 345 | 0.7420 | 1.2498 | 1.2498 | 1.0653 | 1.0653 | 0.4349 | 0.4349 | 0.3913 | 0.0 | 0.5 | 0.3306 | nan |

| 0.0398 | 24.0 | 360 | 0.7355 | 1.2442 | 1.2442 | 1.0620 | 1.0620 | 0.4399 | 0.4399 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0398 | 25.0 | 375 | 0.7570 | 1.2623 | 1.2623 | 1.0698 | 1.0698 | 0.4235 | 0.4235 | 0.3913 | 0.0 | 0.5 | 0.3306 | nan |

| 0.0345 | 26.0 | 390 | 0.7359 | 1.2446 | 1.2446 | 1.0610 | 1.0610 | 0.4396 | 0.4396 | 0.5652 | 0.0 | 0.5 | 0.3152 | nan |

| 0.0345 | 27.0 | 405 | 0.7417 | 1.2495 | 1.2495 | 1.0660 | 1.0660 | 0.4352 | 0.4352 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |

| 0.0386 | 28.0 | 420 | 0.7215 | 1.2323 | 1.2323 | 1.0514 | 1.0514 | 0.4506 | 0.4506 | 0.4783 | 0.0 | 0.5 | 0.3084 | nan |

| 0.0372 | 29.0 | 435 | 0.7140 | 1.2260 | 1.2260 | 1.0477 | 1.0477 | 0.4562 | 0.4562 | 0.5652 | 0.0 | 0.5 | 0.4091 | nan |

| 0.0407 | 30.0 | 450 | 0.7139 | 1.2259 | 1.2259 | 1.0480 | 1.0480 | 0.4563 | 0.4563 | 0.4783 | 0.0 | 0.5 | 0.3953 | nan |
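Assuming R2 here is the standard coefficient of determination, R2 = 1 - MSE / Var(y), each row of the table implies a target variance of RMSE² / (1 - R2). The sketch below checks that a few rows agree on that value. The helper name is made up for this example, and the interpretation of the metric is an assumption rather than something the card states.

```python
def implied_target_variance(rmse, r2):
    """Variance of the targets implied by R2 = 1 - RMSE**2 / Var(y)."""
    return rmse ** 2 / (1.0 - r2)


# epoch -> (Rmse, R2), copied from the training results table.
rows = {1: (1.6296, 0.0393), 8: (1.2034, 0.4761), 30: (1.2259, 0.4563)}

variances = {epoch: implied_target_variance(*vals) for epoch, vals in rows.items()}
for epoch, var in variances.items():
    print(f"epoch {epoch}: implied Var(y) = {var:.3f}")

spread = max(variances.values()) - min(variances.values())
print(f"spread across rows: {spread:.4f}")
```

A small spread indicates the Rmse and R2 columns are mutually consistent, as expected when both are computed on the same evaluation set.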

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.2+cu113

- Datasets 1.18.3

- Tokenizers 0.11.0

|

aecf8c99c0779d8a73cb46a501d08085

|

gokuls/distilbert_sa_GLUE_Experiment_logit_kd_mrpc_256

|

gokuls

|

distilbert

| 17 | 2 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,313 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert_sa_GLUE_Experiment_logit_kd_mrpc_256

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the GLUE MRPC dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5199

- Accuracy: 0.3284

- F1: 0.0616

- Combined Score: 0.1950

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 256

- eval_batch_size: 256

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Combined Score |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:--------------:|

| 0.5375 | 1.0 | 15 | 0.5292 | 0.3162 | 0.0 | 0.1581 |

| 0.5305 | 2.0 | 30 | 0.5292 | 0.3162 | 0.0 | 0.1581 |

| 0.5294 | 3.0 | 45 | 0.5293 | 0.3162 | 0.0 | 0.1581 |

| 0.5283 | 4.0 | 60 | 0.5284 | 0.3162 | 0.0 | 0.1581 |

| 0.5258 | 5.0 | 75 | 0.5260 | 0.3162 | 0.0 | 0.1581 |

| 0.519 | 6.0 | 90 | 0.5199 | 0.3284 | 0.0616 | 0.1950 |

| 0.5036 | 7.0 | 105 | 0.5200 | 0.3848 | 0.2462 | 0.3155 |

| 0.4916 | 8.0 | 120 | 0.5226 | 0.4167 | 0.3239 | 0.3703 |

| 0.4725 | 9.0 | 135 | 0.5298 | 0.4289 | 0.3581 | 0.3935 |

| 0.4537 | 10.0 | 150 | 0.5333 | 0.6152 | 0.6736 | 0.6444 |

| 0.4382 | 11.0 | 165 | 0.5450 | 0.6201 | 0.6906 | 0.6554 |
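The Combined Score column is consistent with the arithmetic mean of Accuracy and F1. This is an inference from the numbers, not something the card states. A quick check against a few rows of the table:

```python
def combined_score(accuracy, f1):
    """Arithmetic mean of accuracy and F1 (assumed definition)."""
    return (accuracy + f1) / 2


# (epoch, accuracy, f1, reported combined score) copied from the table above.
rows = [
    (1, 0.3162, 0.0, 0.1581),
    (6, 0.3284, 0.0616, 0.1950),
    (10, 0.6152, 0.6736, 0.6444),
    (11, 0.6201, 0.6906, 0.6554),
]

# Largest gap between the recomputed and the reported value.
max_gap = max(abs(combined_score(acc, f1) - reported) for _, acc, f1, reported in rows)
print(f"largest gap: {max_gap:.5f}")
```

The gaps stay within the rounding of the four-decimal values shown in the table.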

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

|

422cbb5fae0fbf277c11f2e38ceb9ef8

|

jonatasgrosman/exp_w2v2t_fa_vp-es_s533

|

jonatasgrosman

|

wav2vec2

| 10 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['fa']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'fa']

| false | true | true | 469 | false |

# exp_w2v2t_fa_vp-es_s533

Fine-tuned [facebook/wav2vec2-large-es-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-es-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (fa)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
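If your audio was recorded at a different rate, it has to be resampled to 16 kHz first. The sketch below is a deliberately naive, dependency-free linear-interpolation resampler that only illustrates the idea. `resample_linear` is a hypothetical helper, it applies no anti-aliasing filter, and in practice an audio library's resampling routine is the better choice.

```python
def resample_linear(samples, src_rate, dst_rate=16_000):
    """Resample a mono signal by linear interpolation (no anti-aliasing filter)."""
    if src_rate == dst_rate or len(samples) < 2:
        return list(samples)
    n_out = max(2, round(len(samples) * dst_rate / src_rate))
    # Spacing of the output samples, measured in input-sample units.
    step = (len(samples) - 1) / (n_out - 1)
    out = []
    for i in range(n_out):
        pos = i * step
        lo = min(int(pos), len(samples) - 1)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


one_second_at_48k = [0.0] * 48_000
print(len(resample_linear(one_second_at_48k, 48_000)))  # 16000
```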

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

adb8ede84b89eadc3a25d457dbe4e05f

|

distilbert-base-uncased-distilled-squad

| null |

distilbert

| 14 | 27,917 |

transformers

| 20 |

question-answering

| true | true | false |

apache-2.0

|

['en']

|

['squad']

| null | 3 | 0 | 3 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 8,586 | false |

# DistilBERT base uncased distilled SQuAD

## Table of Contents

- [Model Details](#model-details)

- [How To Get Started With the Model](#how-to-get-started-with-the-model)

- [Uses](#uses)

- [Risks, Limitations and Biases](#risks-limitations-and-biases)

- [Training](#training)

- [Evaluation](#evaluation)

- [Environmental Impact](#environmental-impact)

- [Technical Specifications](#technical-specifications)

- [Citation Information](#citation-information)

- [Model Card Authors](#model-card-authors)

## Model Details

**Model Description:** The DistilBERT model was proposed in the blog post [Smaller, faster, cheaper, lighter: Introducing DistilBERT, a distilled version of BERT](https://medium.com/huggingface/distilbert-8cf3380435b5) and the paper [DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter](https://arxiv.org/abs/1910.01108). DistilBERT is a small, fast, cheap and light Transformer model trained by distilling BERT base. It has 40% fewer parameters than *bert-base-uncased* and runs 60% faster while preserving over 95% of BERT's performance as measured on the GLUE language understanding benchmark.

This model is a fine-tuned checkpoint of [DistilBERT-base-uncased](https://huggingface.co/distilbert-base-uncased), fine-tuned using (a second step of) knowledge distillation on [SQuAD v1.1](https://huggingface.co/datasets/squad).

- **Developed by:** Hugging Face

- **Model Type:** Transformer-based language model

- **Language(s):** English

- **License:** Apache 2.0

- **Related Models:** [DistilBERT-base-uncased](https://huggingface.co/distilbert-base-uncased)

- **Resources for more information:**

- See [this repository](https://github.com/huggingface/transformers/tree/main/examples/research_projects/distillation) for more about Distil\* (a class of compressed models including this model)

- See [Sanh et al. (2019)](https://arxiv.org/abs/1910.01108) for more information about knowledge distillation and the training procedure

## How to Get Started with the Model

Use the code below to get started with the model.

```python

>>> from transformers import pipeline

>>> question_answerer = pipeline("question-answering", model='distilbert-base-uncased-distilled-squad')

>>> context = r"""

... Extractive Question Answering is the task of extracting an answer from a text given a question. An example of a

... question answering dataset is the SQuAD dataset, which is entirely based on that task. If you would like to fine-tune

... a model on a SQuAD task, you may leverage the examples/pytorch/question-answering/run_squad.py script.

... """

>>> result = question_answerer(question="What is a good example of a question answering dataset?", context=context)

>>> print(

... f"Answer: '{result['answer']}', score: {round(result['score'], 4)}, start: {result['start']}, end: {result['end']}"

... )

Answer: 'SQuAD dataset', score: 0.4704, start: 147, end: 160

```

Here is how to use this model in PyTorch:

```python

from transformers import DistilBertTokenizer, DistilBertForQuestionAnswering

import torch

tokenizer = DistilBertTokenizer.from_pretrained('distilbert-base-uncased-distilled-squad')

model = DistilBertForQuestionAnswering.from_pretrained('distilbert-base-uncased-distilled-squad')

question, text = "Who was Jim Henson?", "Jim Henson was a nice puppet"

inputs = tokenizer(question, text, return_tensors="pt")

with torch.no_grad():

    outputs = model(**inputs)

answer_start_index = torch.argmax(outputs.start_logits)

answer_end_index = torch.argmax(outputs.end_logits)

predict_answer_tokens = inputs.input_ids[0, answer_start_index : answer_end_index + 1]

tokenizer.decode(predict_answer_tokens)

```
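The snippet above takes the argmax of the start and end logits independently, which can occasionally produce an end index that comes before the start index. One common alternative, sketched here in plain Python with made-up logits, scores every valid (start, end) pair and keeps the best one. `best_span` and `max_answer_len` are illustrative names, not part of the Transformers API.

```python
def best_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end, score) maximising start+end logits with start <= end."""
    best = None
    for s, s_score in enumerate(start_logits):
        # Only consider end positions at or after the start, within the length limit.
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_score + end_logits[e]
            if best is None or score > best[2]:
                best = (s, e, score)
    return best


# Toy logits where independent argmaxes would give start=1, end=0 (an invalid span).
start_logits = [0.1, 2.0, 0.3]
end_logits = [1.5, 0.2, 1.0]
start, end, score = best_span(start_logits, end_logits)
print(start, end, score)
```

With real model outputs, the same function could be applied to `outputs.start_logits[0].tolist()` and `outputs.end_logits[0].tolist()`.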

And in TensorFlow:

```python

from transformers import DistilBertTokenizer, TFDistilBertForQuestionAnswering

import tensorflow as tf

tokenizer = DistilBertTokenizer.from_pretrained("distilbert-base-uncased-distilled-squad")

model = TFDistilBertForQuestionAnswering.from_pretrained("distilbert-base-uncased-distilled-squad")

question, text = "Who was Jim Henson?", "Jim Henson was a nice puppet"

inputs = tokenizer(question, text, return_tensors="tf")

outputs = model(**inputs)

answer_start_index = int(tf.math.argmax(outputs.start_logits, axis=-1)[0])

answer_end_index = int(tf.math.argmax(outputs.end_logits, axis=-1)[0])

predict_answer_tokens = inputs.input_ids[0, answer_start_index : answer_end_index + 1]

tokenizer.decode(predict_answer_tokens)

```

## Uses

This model can be used for question answering.

#### Misuse and Out-of-scope Use

The model should not be used to intentionally create hostile or alienating environments for people. In addition, the model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

## Risks, Limitations and Biases

**CONTENT WARNING: Readers should be aware that language generated by this model can be disturbing or offensive to some and can propagate historical and current stereotypes.**

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)). Predictions generated by the model can include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups. For example:

```python

>>> from transformers import pipeline

>>> question_answerer = pipeline("question-answering", model='distilbert-base-uncased-distilled-squad')

>>> context = r"""

... Alice is sitting on the bench. Bob is sitting next to her.

... """

>>> result = question_answerer(question="Who is the CEO?", context=context)

>>> print(

... f"Answer: '{result['answer']}', score: {round(result['score'], 4)}, start: {result['start']}, end: {result['end']}"

... )

Answer: 'Bob', score: 0.4183, start: 32, end: 35

```

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model.

## Training

#### Training Data

The [distilbert-base-uncased model card](https://huggingface.co/distilbert-base-uncased) describes its training data as:

> DistilBERT pretrained on the same data as BERT, which is [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers).

To learn more about the SQuAD v1.1 dataset, see the [SQuAD v1.1 data card](https://huggingface.co/datasets/squad).

#### Training Procedure

##### Preprocessing

See the [distilbert-base-uncased model card](https://huggingface.co/distilbert-base-uncased) for further details.

##### Pretraining

See the [distilbert-base-uncased model card](https://huggingface.co/distilbert-base-uncased) for further details.

## Evaluation

As discussed in the [model repository](https://github.com/huggingface/transformers/blob/main/examples/research_projects/distillation/README.md):

> This model reaches a F1 score of 86.9 on the [SQuAD v1.1] dev set (for comparison, Bert bert-base-uncased version reaches a F1 score of 88.5).

## Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). We present the hardware type and hours used based on the [associated paper](https://arxiv.org/pdf/1910.01108.pdf). Note that these details are just for training DistilBERT, not including the fine-tuning with SQuAD.

- **Hardware Type:** 8 16GB V100 GPUs

- **Hours used:** 90 hours

- **Cloud Provider:** Unknown

- **Compute Region:** Unknown

- **Carbon Emitted:** Unknown
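For a rough sense of scale, the hardware and hours above can be turned into GPU-hours, assuming the 90 hours are wall-clock time on all 8 GPUs. The emissions helper below is a hypothetical sketch: the power draw per GPU and the grid carbon intensity are inputs the reader has to supply, since the card lists the provider and region as unknown.

```python
num_gpus = 8
hours = 90

# Total accelerator time, assuming all 8 GPUs ran for the full 90 hours.
gpu_hours = num_gpus * hours
print("GPU-hours:", gpu_hours)  # 720


def estimate_emissions_kg(gpu_hours, watts_per_gpu, kg_co2_per_kwh):
    """Energy (kWh) times grid carbon intensity; both rates are caller-supplied assumptions."""
    energy_kwh = gpu_hours * watts_per_gpu / 1000
    return energy_kwh * kg_co2_per_kwh
```

No emissions figure is computed here on purpose, because any value would depend on numbers the card does not report.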

## Technical Specifications

See the [associated paper](https://arxiv.org/abs/1910.01108) for details on the modeling architecture, objective, compute infrastructure, and training details.

## Citation Information

```bibtex

@inproceedings{sanh2019distilbert,

title={DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter},

author={Sanh, Victor and Debut, Lysandre and Chaumond, Julien and Wolf, Thomas},

booktitle={NeurIPS EMC^2 Workshop},

year={2019}

}

```

APA:

- Sanh, V., Debut, L., Chaumond, J., & Wolf, T. (2019). DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter. arXiv preprint arXiv:1910.01108.

## Model Card Authors

This model card was written by the Hugging Face team.

|

4fbbfa7dc732fdd9378b108516909a2a

|

kompactss/JeBERT_je_ko

|

kompactss

|

encoder-decoder

| 7 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

afl-3.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 728 | false |

# 🍊 Jeju Dialect Translation Model 🍊

- Jeju dialect -> Standard Korean

- Made by Team 3, 3rd cohort of the goorm natural language processing course!!

- github link : https://github.com/Goormnlpteam3/JeBERT

## 1. Seq2Seq Transformer Model

- encoder : BertConfig

- decoder : BertConfig

- Tokenizer : WordPiece Tokenizer

## 2. Dataset

- Jit Dataset

- AI HUB (+ arae-a characters)

## 3. Hyper Parameters

- Epoch : 10 epochs (best at epoch 8)

- Random Seed : 42

- Learning Rate : 5e-5

- Warm up Ratio : 0.1

- Batch Size : 32

## 4. BLEU Score

- Jit + AI HUB (+ arae-a characters) Dataset : 79.0

---

### CREDIT

- 주형준 : wngudwns2798@gmail.com

- 강가람 : 1st9aram@gmail.com

- 고광연 : rhfprl11@gmail.com

- 김수연 : s01090445778@gmail.com

- 이원경 : hjtwin2@gmail.com

- 조성은 : eun102476@gmail.com

|

110d02c079ac9951ca1680e2e6d08038

|

jcmc/aw-gpt

|

jcmc

|

gpt2

| 17 | 2 |

transformers

| 0 |

text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,087 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# aw-gpt

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.2452

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 200

- num_epochs: 60

- mixed_precision_training: Native AMP
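The `total_train_batch_size` above follows from the per-device batch size and the gradient accumulation steps. A minimal sketch, assuming a single device (the card does not list distributed training); the function name is chosen here for illustration:

```python
def total_train_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


print(total_train_batch_size(8, 8))  # 64
```

Gradient accumulation trades update frequency for memory: each optimizer step sees 64 examples, but only 8 are held on the device at once.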

### Training results

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu116

- Datasets 2.6.1

- Tokenizers 0.11.6

|

a136a4e6d36421225697d8b3e55790e1

|

jannatul17/squad-bn-qgen-mt5-all-metric

|

jannatul17

|

t5

| 16 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['squad_bn']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 3,507 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# squad-bn-qgen-mt5-all-metric

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the squad_bn dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7273

- Rouge1 Precision: 35.8589

- Rouge1 Recall: 29.7041

- Rouge1 Fmeasure: 31.6373

- Rouge2 Precision: 15.4203

- Rouge2 Recall: 12.5155

- Rouge2 Fmeasure: 13.3978

- Rougel Precision: 34.4684

- Rougel Recall: 28.5887

- Rougel Fmeasure: 30.4627

- Rougelsum Precision: 34.4252

- Rougelsum Recall: 28.5362

- Rougelsum Fmeasure: 30.4053

- Sacrebleu: 6.4143

- Meteor: 0.1416

- Gen Len: 16.7199

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 Precision | Rouge1 Recall | Rouge1 Fmeasure | Rouge2 Precision | Rouge2 Recall | Rouge2 Fmeasure | Rougel Precision | Rougel Recall | Rougel Fmeasure | Rougelsum Precision | Rougelsum Recall | Rougelsum Fmeasure | Sacrebleu | Meteor | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:----------------:|:-------------:|:---------------:|:----------------:|:-------------:|:---------------:|:----------------:|:-------------:|:---------------:|:-------------------:|:----------------:|:------------------:|:---------:|:------:|:-------:|

| 0.8449 | 1.0 | 16396 | 0.7340 | 31.6476 | 26.8901 | 28.2871 | 13.621 | 11.3545 | 11.958 | 30.3276 | 25.7754 | 27.1048 | 30.3426 | 25.7489 | 27.0991 | 5.9655 | 0.1336 | 16.8685 |

| 0.7607 | 2.0 | 32792 | 0.7182 | 33.7173 | 28.6115 | 30.1049 | 14.8227 | 12.2059 | 12.9453 | 32.149 | 27.2036 | 28.6617 | 32.2479 | 27.2261 | 28.7272 | 6.6093 | 0.138 | 16.8522 |

| 0.7422 | 3.0 | 49188 | 0.7083 | 34.6128 | 29.0223 | 30.7248 | 14.9888 | 12.3092 | 13.1021 | 33.2507 | 27.8154 | 29.4599 | 33.2848 | 27.812 | 29.5064 | 6.2407 | 0.1416 | 16.5806 |

| 0.705 | 4.0 | 65584 | 0.7035 | 34.156 | 29.0012 | 30.546 | 14.72 | 12.0251 | 12.8161 | 32.7527 | 27.6511 | 29.1955 | 32.7692 | 27.6627 | 29.231 | 6.1784 | 0.1393 | 16.7793 |

| 0.6859 | 5.0 | 81980 | 0.7038 | 35.1405 | 29.6033 | 31.2614 | 15.5108 | 12.6414 | 13.5059 | 33.8335 | 28.4264 | 30.0745 | 33.8782 | 28.4349 | 30.0901 | 6.5896 | 0.144 | 16.6651 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

7e536941c9934f7a3a666b77fa1d9d03

|

jonatasgrosman/exp_w2v2t_th_vp-nl_s253

|

jonatasgrosman

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['th']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'th']

| false | true | true | 469 | false |

# exp_w2v2t_th_vp-nl_s253

Fine-tuned [facebook/wav2vec2-large-nl-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-nl-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (th)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

60edf672ab5b484ee92b9687e023cd20

|

Loc/lucky-model

|

Loc

|

vit

| 8 | 4 |

transformers

| 0 |

image-classification

| true | true | true |

apache-2.0

| null |

['imagenet-1k', 'imagenet-21k']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['vision', 'image-classification']

| false | true | true | 5,273 | false |

# Vision Transformer (base-sized model)

Vision Transformer (ViT) model pre-trained on ImageNet-21k (14 million images, 21,843 classes) at resolution 224x224, and fine-tuned on ImageNet 2012 (1 million images, 1,000 classes) at resolution 224x224. It was introduced in the paper [An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale](https://arxiv.org/abs/2010.11929) by Dosovitskiy et al. and first released in [this repository](https://github.com/google-research/vision_transformer). However, the weights were converted from the [timm repository](https://github.com/rwightman/pytorch-image-models) by Ross Wightman, who already converted the weights from JAX to PyTorch. Credits go to him.

Disclaimer: The team releasing ViT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The Vision Transformer (ViT) is a transformer encoder model (BERT-like) pretrained on a large collection of images in a supervised fashion, namely ImageNet-21k, at a resolution of 224x224 pixels. Next, the model was fine-tuned on ImageNet (also referred to as ILSVRC2012), a dataset comprising 1 million images and 1,000 classes, also at resolution 224x224.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded. One also adds a [CLS] token to the beginning of a sequence to use it for classification tasks. One also adds absolute position embeddings before feeding the sequence to the layers of the Transformer encoder.
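The sequence length that results from this patching can be worked out directly. The sketch below assumes a 224x224 RGB input (3 channels) and 16x16 patches, as described in this card:

```python
image_size = 224
patch_size = 16
channels = 3  # RGB input is assumed

patches_per_side = image_size // patch_size           # 14
num_patches = patches_per_side ** 2                   # 196
sequence_length = num_patches + 1                     # 197, including the [CLS] token
values_per_patch = patch_size * patch_size * channels  # 768 raw values per flattened patch

print(num_patches, sequence_length, values_per_patch)
```

Each flattened patch is what gets linearly embedded, and one position embedding is added per entry of the 197-token sequence.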

By pre-training the model, it learns an inner representation of images that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled images for instance, you can train a standard classifier by placing a linear layer on top of the pre-trained encoder. One typically places a linear layer on top of the [CLS] token, as the last hidden state of this token can be seen as a representation of an entire image.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=google/vit) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import ViTFeatureExtractor, ViTForImageClassification

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = ViTFeatureExtractor.from_pretrained('google/vit-base-patch16-224')

model = ViTForImageClassification.from_pretrained('google/vit-base-patch16-224')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

For more code examples, we refer to the [documentation](https://huggingface.co/transformers/model_doc/vit.html#).

## Training data

The ViT model was pretrained on [ImageNet-21k](http://www.image-net.org/), a dataset consisting of 14 million images and 21k classes, and fine-tuned on [ImageNet](http://www.image-net.org/challenges/LSVRC/2012/), a dataset consisting of 1 million images and 1k classes.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/google-research/vision_transformer/blob/master/vit_jax/input_pipeline.py).

Images are resized/rescaled to the same resolution (224x224) and normalized across the RGB channels with mean (0.5, 0.5, 0.5) and standard deviation (0.5, 0.5, 0.5).
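With a mean and standard deviation of 0.5, the normalization maps pixel values into the range [-1, 1]. The sketch below assumes 8-bit pixel values are first rescaled to [0, 1]; `normalize_pixel` is a hypothetical helper for a single channel value, not the feature extractor's own code.

```python
def normalize_pixel(value_0_255, mean=0.5, std=0.5):
    """Rescale an 8-bit pixel value to [0, 1], then standardise it."""
    scaled = value_0_255 / 255.0
    return (scaled - mean) / std


print(normalize_pixel(0))    # -1.0
print(normalize_pixel(255))  # 1.0
```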

### Pretraining

The model was trained on TPUv3 hardware (8 cores). All model variants are trained with a batch size of 4096 and learning rate warmup of 10k steps. For ImageNet, the authors found it beneficial to additionally apply gradient clipping at global norm 1. Training resolution is 224.

## Evaluation results

For evaluation results on several image classification benchmarks, we refer to tables 2 and 5 of the original paper. Note that for fine-tuning, the best results are obtained with a higher resolution (384x384). Of course, increasing the model size will result in better performance.

### BibTeX entry and citation info

```bibtex

@misc{wu2020visual,

title={Visual Transformers: Token-based Image Representation and Processing for Computer Vision},

author={Bichen Wu and Chenfeng Xu and Xiaoliang Dai and Alvin Wan and Peizhao Zhang and Zhicheng Yan and Masayoshi Tomizuka and Joseph Gonzalez and Kurt Keutzer and Peter Vajda},

year={2020},

eprint={2006.03677},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

```bibtex

@inproceedings{deng2009imagenet,

title={Imagenet: A large-scale hierarchical image database},

author={Deng, Jia and Dong, Wei and Socher, Richard and Li, Li-Jia and Li, Kai and Fei-Fei, Li},

booktitle={2009 IEEE conference on computer vision and pattern recognition},

pages={248--255},

year={2009},

organization={Ieee}

}

```

|

cd26cde0781997e95c1a82884123e308

|

okep/distilbert-base-uncased-finetuned-emotion

|

okep

|

distilbert

| 22 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['emotion']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,339 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2269

- Accuracy: 0.9245

- F1: 0.9245

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.853 | 1.0 | 250 | 0.3507 | 0.8925 | 0.8883 |

| 0.2667 | 2.0 | 500 | 0.2269 | 0.9245 | 0.9245 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0

- Datasets 1.16.1

- Tokenizers 0.10.3

|

9a3fb3f3a14e7293e02ac9de8a5d3381

|

SiddharthaM/hasoc19-bert-base-multilingual-cased-HatredStatement-new

|

SiddharthaM

|

bert

| 12 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,860 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hasoc19-bert-base-multilingual-cased-HatredStatement-new

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on an unspecified dataset (the Trainer recorded no dataset name).

It achieves the following results on the evaluation set:

- Loss: 0.6565

- Accuracy: 0.7319

- Precision: 0.7320

- Recall: 0.7319

- F1: 0.7307

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| No log | 1.0 | 296 | 0.5540 | 0.7110 | 0.7147 | 0.7110 | 0.7067 |

| 0.5551 | 2.0 | 592 | 0.5345 | 0.7224 | 0.7673 | 0.7224 | 0.7038 |

| 0.5551 | 3.0 | 888 | 0.5752 | 0.7272 | 0.7430 | 0.7272 | 0.7183 |

| 0.4252 | 4.0 | 1184 | 0.5697 | 0.7376 | 0.7384 | 0.7376 | 0.7359 |

| 0.4252 | 5.0 | 1480 | 0.6335 | 0.7319 | 0.7388 | 0.7319 | 0.7269 |

| 0.3401 | 6.0 | 1776 | 0.6565 | 0.7319 | 0.7320 | 0.7319 | 0.7307 |

### Framework versions

- Transformers 4.24.0.dev0

- PyTorch 1.11.0+cu102

- Datasets 2.6.1

- Tokenizers 0.13.1

|

53dcc8376de924bf3e23234845c4a8dc

|

infinitejoy/wav2vec2-large-xls-r-300m-basaa-cv8

|

infinitejoy

|

wav2vec2

| 19 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['bas']

|

['mozilla-foundation/common_voice_8_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'bas', 'generated_from_trainer', 'hf-asr-leaderboard', 'model_for_talk', 'mozilla-foundation/common_voice_8_0', 'robust-speech-event']

| true | true | true | 1,702 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-basaa-cv8

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the Basaa (`bas`) subset of the mozilla-foundation/common_voice_8_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4648

- Wer: 0.5472
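"Wer" here is presumably the word error rate: the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. The card does not say which implementation produced the figure, so the following is only a minimal sketch of the metric itself. The function name and the example sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] holds the edit distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / max(1, len(ref))


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

An empty hypothesis gives a WER of 1.0, since every reference word counts as a deletion.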

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 7e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 100.0

- mixed_precision_training: Native AMP
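The combination of `lr_scheduler_type: linear` and `lr_scheduler_warmup_steps: 500` describes a learning rate that ramps up linearly to the peak value and then decays linearly to zero. The sketch below shows that shape in plain Python. The total of 3900 steps is an assumption, inferred from the results table (step 500 at epoch 12.82, so roughly 39 steps per epoch over 100 epochs), and `linear_lr` is an illustrative helper, not the library's scheduler.

```python
def linear_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay."""
    if step < warmup_steps:
        # Warmup: scale from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay: scale from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 7e-05       # learning_rate from the card
WARMUP_STEPS = 500    # lr_scheduler_warmup_steps from the card
TOTAL_STEPS = 3900    # assumed: about 39 steps per epoch * 100 epochs

for step in (0, 250, 500, 2200, 3900):
    print(step, linear_lr(step, BASE_LR, WARMUP_STEPS, TOTAL_STEPS))
```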

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 2.9421 | 12.82 | 500 | 2.8894 | 1.0 |

| 1.1872 | 25.64 | 1000 | 0.6688 | 0.7460 |

| 0.8894 | 38.46 | 1500 | 0.4868 | 0.6516 |

| 0.769 | 51.28 | 2000 | 0.4960 | 0.6507 |

| 0.6936 | 64.1 | 2500 | 0.4781 | 0.5384 |

| 0.624 | 76.92 | 3000 | 0.4643 | 0.5430 |

| 0.5966 | 89.74 | 3500 | 0.4530 | 0.5591 |

### Framework versions

- Transformers 4.16.0.dev0

- PyTorch 1.10.1+cu102

- Datasets 1.18.3

- Tokenizers 0.11.0

|

9a6dad3b40cafc923e44ccf964047494

|

azizbarank/mbert-finnic-ner

|

azizbarank

|

bert

| 13 | 5 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,578 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mbert-finnic-ner

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on the Finnish and Estonian parts of the "WikiANN" dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1427

- Precision: 0.9090

- Recall: 0.9156

- F1: 0.9123

- Accuracy: 0.9672
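As a quick sanity check, the reported F1 can be recomputed as the harmonic mean of the reported precision and recall. This identity holds only when all three figures come from the same pooled counts. Scores averaged per class (for example, weighted averages) need not satisfy it. The function name below is illustrative.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported on the evaluation set above.
f1 = f1_from_precision_recall(0.9090, 0.9156)
print(round(f1, 4))
```

Rounded to four decimals, the result agrees with the F1 of 0.9123 listed above.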

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.1636 | 1.0 | 2188 | 0.1385 | 0.8906 | 0.9000 | 0.8953 | 0.9601 |

| 0.0991 | 2.0 | 4376 | 0.1346 | 0.9099 | 0.9095 | 0.9097 | 0.9660 |

| 0.0596 | 3.0 | 6564 | 0.1427 | 0.9090 | 0.9156 | 0.9123 | 0.9672 |

### Framework versions

- Transformers 4.17.0

- PyTorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

05d62f41f4695fae6493d5a796f7f5fa

|

DOOGLAK/Article_50v0_NER_Model_3Epochs_AUGMENTED

|

DOOGLAK

|

bert

| 13 | 5 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null |

['article50v0_wikigold_split']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,557 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Article_50v0_NER_Model_3Epochs_AUGMENTED

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the article50v0_wikigold_split dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5912

- Precision: 0.0975

- Recall: 0.0183

- F1: 0.0308

- Accuracy: 0.7915

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |