repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Thanish/wav2vec2-large-xlsr-tamil

|

Thanish

|

wav2vec2

| 9 | 21 |

transformers

| 0 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['ta']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week']

| true | true | true | 4,316 | false |

# Wav2Vec2-Large-XLSR-53-Tamil

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Tamil using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

# "ta" is the language code listed for this model (Tamil).
test_dataset = load_dataset("common_voice", "ta", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("Thanish/wav2vec2-large-xlsr-tamil")
model = Wav2Vec2ForCTC.from_pretrained("Thanish/wav2vec2-large-xlsr-tamil")

# The resampler assumes 48 kHz source audio; the model expects 16 kHz input.
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays.
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)
inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the Tamil test data of Common Voice.

```python

import re

import torch
import torchaudio
from datasets import load_dataset, load_metric
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

# "ta" is the language code listed for this model (Tamil).
test_dataset = load_dataset("common_voice", "ta", split="test")
wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("Thanish/wav2vec2-large-xlsr-tamil")
model = Wav2Vec2ForCTC.from_pretrained("Thanish/wav2vec2-large-xlsr-tamil")
model.to("cuda")

# Adapt this list to include all special characters you removed from the data.
chars_to_ignore_regex = r'[\,\?\.\!\-\;\:\"\“]'
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays.
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference and decode the predicted token ids to text.
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 100.00 %
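
To make the reported figure easier to interpret, here is a minimal pure-Python sketch of word error rate. It assumes the metric is aggregated over the whole corpus (total word edits divided by total reference words); the function name `word_error_rate` is illustrative and is not part of `datasets` or `transformers`.

```python
def word_error_rate(references, predictions):
    """Corpus-level WER: total word edits divided by total reference words."""
    total_edits = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        ref_words, hyp_words = ref.split(), hyp.split()
        # Levenshtein distance over words, keeping one DP row at a time.
        prev = list(range(len(hyp_words) + 1))
        for i, ref_word in enumerate(ref_words, start=1):
            curr = [i]
            for j, hyp_word in enumerate(hyp_words, start=1):
                cost = 0 if ref_word == hyp_word else 1
                curr.append(min(prev[j] + 1,          # deletion
                                curr[j - 1] + 1,      # insertion
                                prev[j - 1] + cost))  # substitution or match
            prev = curr
        total_edits += prev[-1]
        total_words += len(ref_words)
    return total_edits / total_words
```

Under this definition, a score of 100 % means the number of word errors equals the number of words in the references, for example when every reference word is transcribed incorrectly.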

## Training

The Common Voice `train` and `validation` splits were used for training.

The script used for training can be found [here](https://colab.research.google.com/drive/1PC2SjxpcWMQ2qmRw21NbP38wtQQUa5os#scrollTo=YKBZdqqJG9Tv).

|

267eb34f08f2b12b97e08fb5a5948c2c

|

Lvxue/distilled-mt5-small-0.4-0.25

|

Lvxue

|

mt5

| 14 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

|

['en', 'ro']

|

['wmt16']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,039 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilled-mt5-small-0.4-0.25

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the wmt16 ro-en dataset.

It achieves the following results on the evaluation set:

- Loss: 3.8561

- Bleu: 3.2179

- Gen Len: 41.2356

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

c907fe4f17818296ae9edd92aee524f7

|

Mr-Wick/Roberta

|

Mr-Wick

|

roberta

| 9 | 6 |

transformers

| 0 |

question-answering

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,095 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Roberta

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 16476, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
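
The optimizer entry above describes a polynomial decay with `power` 1.0, which is a straight line from the initial learning rate down to the end value. The following is a minimal sketch of that formula in plain Python, using the values from the config as defaults; the function name `polynomial_decay` is illustrative, and the clamping of late steps is an assumption based on `cycle` being `False`.

```python
def polynomial_decay(step, initial_lr=2e-05, decay_steps=16476, end_lr=0.0, power=1.0):
    """Learning rate at a given optimizer step under polynomial decay."""
    # With cycle=False, steps beyond decay_steps are clamped to decay_steps.
    step = min(step, decay_steps)
    fraction = 1 - step / decay_steps
    return (initial_lr - end_lr) * fraction ** power + end_lr
```

With these defaults, the rate starts at 2e-05, is halved at step 8238, and reaches 0.0 at step 16476.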

### Training results

### Framework versions

- Transformers 4.17.0

- TensorFlow 2.8.0

- Datasets 2.0.0

- Tokenizers 0.11.6

|

20e7550dd11e6ed33687da5253a2eccd

|

anas-awadalla/roberta-base-few-shot-k-512-finetuned-squad-seed-10

|

anas-awadalla

|

roberta

| 17 | 6 |

transformers

| 0 |

question-answering

| true | false | false |

mit

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 984 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-few-shot-k-512-finetuned-squad-seed-10

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10.0
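
As a rough illustration of how `lr_scheduler_type: linear` combines with `lr_scheduler_warmup_ratio: 0.1`, the sketch below computes a learning rate that rises linearly during warmup and then decays linearly to zero. The total number of training steps is not reported in this card, so `total_steps` is a hypothetical parameter, and rounding the warmup length up is an assumption about the trainer's behaviour.

```python
import math


def linear_schedule_lr(step, total_steps, base_lr=3e-05, warmup_ratio=0.1):
    """Linear warmup followed by linear decay to zero."""
    # Assumption: the warmup length is the ratio of total steps, rounded up.
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)
```

For a hypothetical run of 1000 steps, the rate peaks at `base_lr` after 100 steps and falls back to zero at step 1000.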

### Training results

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.17.0

- Tokenizers 0.10.3

|

0ad431ac44b1c2daecf9ead45dbd7719

|

merve/tips5wx_sbh5-tip-regression

|

merve

| null | 4 | 0 |

sklearn

| 0 |

tabular-regression

| false | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['tabular-regression', 'baseline-trainer']

| false | true | true | 8,010 | false |

## Baseline Model trained on tips5wx_sbh5 to apply regression on tip

**Metrics of the best model:**

r2 0.389363

neg_mean_squared_error -1.092356

Name: Ridge(alpha=10), dtype: float64

**See model plot below:**

<style>#sk-container-id-1 {color: black;background-color: white;}#sk-container-id-1 pre{padding: 0;}#sk-container-id-1 div.sk-toggleable {background-color: white;}#sk-container-id-1 label.sk-toggleable__label {cursor: pointer;display: block;width: 100%;margin-bottom: 0;padding: 0.3em;box-sizing: border-box;text-align: center;}#sk-container-id-1 label.sk-toggleable__label-arrow:before {content: "▸";float: left;margin-right: 0.25em;color: #696969;}#sk-container-id-1 label.sk-toggleable__label-arrow:hover:before {color: black;}#sk-container-id-1 div.sk-estimator:hover label.sk-toggleable__label-arrow:before {color: black;}#sk-container-id-1 div.sk-toggleable__content {max-height: 0;max-width: 0;overflow: hidden;text-align: left;background-color: #f0f8ff;}#sk-container-id-1 div.sk-toggleable__content pre {margin: 0.2em;color: black;border-radius: 0.25em;background-color: #f0f8ff;}#sk-container-id-1 input.sk-toggleable__control:checked~div.sk-toggleable__content {max-height: 200px;max-width: 100%;overflow: auto;}#sk-container-id-1 input.sk-toggleable__control:checked~label.sk-toggleable__label-arrow:before {content: "▾";}#sk-container-id-1 div.sk-estimator input.sk-toggleable__control:checked~label.sk-toggleable__label {background-color: #d4ebff;}#sk-container-id-1 div.sk-label input.sk-toggleable__control:checked~label.sk-toggleable__label {background-color: #d4ebff;}#sk-container-id-1 input.sk-hidden--visually {border: 0;clip: rect(1px 1px 1px 1px);clip: rect(1px, 1px, 1px, 1px);height: 1px;margin: -1px;overflow: hidden;padding: 0;position: absolute;width: 1px;}#sk-container-id-1 div.sk-estimator {font-family: monospace;background-color: #f0f8ff;border: 1px dotted black;border-radius: 0.25em;box-sizing: border-box;margin-bottom: 0.5em;}#sk-container-id-1 div.sk-estimator:hover {background-color: #d4ebff;}#sk-container-id-1 div.sk-parallel-item::after {content: "";width: 100%;border-bottom: 1px solid gray;flex-grow: 1;}#sk-container-id-1 div.sk-label:hover 
label.sk-toggleable__label {background-color: #d4ebff;}#sk-container-id-1 div.sk-serial::before {content: "";position: absolute;border-left: 1px solid gray;box-sizing: border-box;top: 0;bottom: 0;left: 50%;z-index: 0;}#sk-container-id-1 div.sk-serial {display: flex;flex-direction: column;align-items: center;background-color: white;padding-right: 0.2em;padding-left: 0.2em;position: relative;}#sk-container-id-1 div.sk-item {position: relative;z-index: 1;}#sk-container-id-1 div.sk-parallel {display: flex;align-items: stretch;justify-content: center;background-color: white;position: relative;}#sk-container-id-1 div.sk-item::before, #sk-container-id-1 div.sk-parallel-item::before {content: "";position: absolute;border-left: 1px solid gray;box-sizing: border-box;top: 0;bottom: 0;left: 50%;z-index: -1;}#sk-container-id-1 div.sk-parallel-item {display: flex;flex-direction: column;z-index: 1;position: relative;background-color: white;}#sk-container-id-1 div.sk-parallel-item:first-child::after {align-self: flex-end;width: 50%;}#sk-container-id-1 div.sk-parallel-item:last-child::after {align-self: flex-start;width: 50%;}#sk-container-id-1 div.sk-parallel-item:only-child::after {width: 0;}#sk-container-id-1 div.sk-dashed-wrapped {border: 1px dashed gray;margin: 0 0.4em 0.5em 0.4em;box-sizing: border-box;padding-bottom: 0.4em;background-color: white;}#sk-container-id-1 div.sk-label label {font-family: monospace;font-weight: bold;display: inline-block;line-height: 1.2em;}#sk-container-id-1 div.sk-label-container {text-align: center;}#sk-container-id-1 div.sk-container {/* jupyter's `normalize.less` sets `[hidden] { display: none; }` but bootstrap.min.css set `[hidden] { display: none !important; }` so we also need the `!important` here to be able to override the default hidden behavior on the sphinx rendered scikit-learn.org. 
See: https://github.com/scikit-learn/scikit-learn/issues/21755 */display: inline-block !important;position: relative;}#sk-container-id-1 div.sk-text-repr-fallback {display: none;}</style><div id="sk-container-id-1" class="sk-top-container"><div class="sk-text-repr-fallback"><pre>Pipeline(steps=[('easypreprocessor',EasyPreprocessor(types= continuous dirty_float low_card_int ... date free_string useless

total_bill True False False ... False False False

sex False False False ... False False False

smoker False False False ... False False False

day False False False ... False False False

time False False False ... False False False

size False False False ... False False False[6 rows x 7 columns])),('ridge', Ridge(alpha=10))])</pre><b>In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. <br />On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.</b></div><div class="sk-container" hidden><div class="sk-item sk-dashed-wrapped"><div class="sk-label-container"><div class="sk-label sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-1" type="checkbox" ><label for="sk-estimator-id-1" class="sk-toggleable__label sk-toggleable__label-arrow">Pipeline</label><div class="sk-toggleable__content"><pre>Pipeline(steps=[('easypreprocessor',EasyPreprocessor(types= continuous dirty_float low_card_int ... date free_string useless

total_bill True False False ... False False False

sex False False False ... False False False

smoker False False False ... False False False

day False False False ... False False False

time False False False ... False False False

size False False False ... False False False[6 rows x 7 columns])),('ridge', Ridge(alpha=10))])</pre></div></div></div><div class="sk-serial"><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-2" type="checkbox" ><label for="sk-estimator-id-2" class="sk-toggleable__label sk-toggleable__label-arrow">EasyPreprocessor</label><div class="sk-toggleable__content"><pre>EasyPreprocessor(types= continuous dirty_float low_card_int ... date free_string useless

total_bill True False False ... False False False

sex False False False ... False False False

smoker False False False ... False False False

day False False False ... False False False

time False False False ... False False False

size False False False ... False False False[6 rows x 7 columns])</pre></div></div></div><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-3" type="checkbox" ><label for="sk-estimator-id-3" class="sk-toggleable__label sk-toggleable__label-arrow">Ridge</label><div class="sk-toggleable__content"><pre>Ridge(alpha=10)</pre></div></div></div></div></div></div></div>

**Disclaimer:** This model was trained with the dabl library as a baseline. For better results, use [AutoTrain](https://huggingface.co/autotrain).

**Logs of training** including the models tried in the process can be found in logs.txt

|

240c3cc59bd7837f3cf00ca5d6e03deb

|

anuragshas/en-hi-transliteration

|

anuragshas

| null | 7 | 0 | null | 0 | null | false | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,542 | false |

## Dataset

[NEWS2018 DATASET_04, Task ID: M-EnHi](http://workshop.colips.org/news2018/dataset.html)

## Notebooks

- `xmltodict.ipynb` contains the code to convert the `xml` files to `json` for training

- `training_script.ipynb` contains the code for training and inference. It is a modified version of https://github.com/AI4Bharat/IndianNLP-Transliteration/blob/master/NoteBooks/Xlit_TrainingSetup_condensed.ipynb

## Predictions

`pred_test.json` contains top-10 predictions on the validation set of the dataset

## Evaluation Scores on validation set

TOP 10 SCORES FOR 1000 SAMPLES

|Metrics | Score |

|-----------|-----------|

|ACC | 0.703000|

|Mean F-score| 0.949289|

|MRR | 0.486549|

|MAP_ref | 0.381000|

TOP 5 SCORES FOR 1000 SAMPLES:

|Metrics | Score |

|-----------|-----------|

|ACC |0.621000|

|Mean F-score |0.937985|

|MRR |0.475033|

|MAP_ref |0.381000|

TOP 3 SCORES FOR 1000 SAMPLES:

|Metrics | Score |

|-----------|-----------|

|ACC |0.560000|

|Mean F-score |0.927025|

|MRR |0.461333|

|MAP_ref |0.381000|

TOP 2 SCORES FOR 1000 SAMPLES:

|Metrics | Score |

|-----------|-----------|

|ACC | 0.502000|

|Mean F-score | 0.913697|

|MRR | 0.442000|

|MAP_ref | 0.381000|

TOP 1 SCORES FOR 1000 SAMPLES:

|Metrics | Score |

|-----------|-----------|

|ACC | 0.382000|

|Mean F-score | 0.881272|

|MRR | 0.382000|

|MAP_ref | 0.380500|
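
The tables above report scores at several cut-offs. As one plausible reading of the ACC and MRR columns, the sketch below computes top-k accuracy and mean reciprocal rank from ranked candidate lists. The function names and the toy inputs are hypothetical, and the official NEWS 2018 evaluation script may define these metrics differently (for example, when several references exist per source word).

```python
def top_k_accuracy(ranked_predictions, references, k):
    """Fraction of samples whose reference appears among the first k candidates."""
    hits = sum(1 for preds, ref in zip(ranked_predictions, references) if ref in preds[:k])
    return hits / len(references)


def mean_reciprocal_rank(ranked_predictions, references, k):
    """Mean of 1/rank of the reference within the first k candidates (0 if absent)."""
    total = 0.0
    for preds, ref in zip(ranked_predictions, references):
        for rank, candidate in enumerate(preds[:k], start=1):
            if candidate == ref:
                total += 1.0 / rank
                break
    return total / len(references)


# Hypothetical toy data: two samples with three ranked candidates each.
ranked = [["a", "b", "c"], ["x", "y", "z"]]
refs = ["b", "q"]
top2 = top_k_accuracy(ranked, refs, k=2)
mrr3 = mean_reciprocal_rank(ranked, refs, k=3)
```

Under these definitions the two metrics coincide at k = 1, which matches the top-1 table above, where ACC and MRR are both 0.382000.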

|

a533088e01e5b4bda5eec6acbb4264e8

|

jph00/fastdiffusion-models

|

jph00

| null | 9 | 0 | null | 0 | null | false | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,993 | false |

*all models trained with mnist sigma_data, instead of fashion-mnist.*

- base: default k-diffusion model

- no-t-emb: as base, but no t-embeddings in model

- mse-no-t-emb: as no-t-emb, but predicting unscaled noise

- mse: unscaled noise prediction with t-embeddings

## base metrics

step,fid,kid

5000,23.366962432861328,0.0060024261474609375

10000,21.407773971557617,0.004696846008300781

15000,19.820981979370117,0.003306865692138672

20000,20.4482421875,0.0037620067596435547

25000,19.459041595458984,0.0030574798583984375

30000,18.933385848999023,0.0031194686889648438

35000,18.223621368408203,0.002220630645751953

40000,18.64676284790039,0.0026960372924804688

45000,17.681808471679688,0.0016982555389404297

50000,17.32500457763672,0.001678466796875

55000,17.74714469909668,0.0016117095947265625

60000,18.276540756225586,0.002439737319946289

## mse-no-t-emb

step,fid,kid

5000,28.580364227294922,0.007686138153076172

10000,25.324932098388672,0.0061130523681640625

15000,23.68691635131836,0.005526542663574219

20000,24.05099105834961,0.005819082260131836

25000,22.60521125793457,0.004955768585205078

30000,22.16605567932129,0.0047609806060791016

35000,21.794536590576172,0.0039484500885009766

40000,22.96178436279297,0.005787849426269531

45000,22.641393661499023,0.004763364791870117

50000,20.735567092895508,0.0038640499114990234

55000,21.417423248291016,0.004515647888183594

60000,22.11293601989746,0.0054743289947509766

## no-t-emb

step,fid,kid

5000,53.25414276123047,0.02761554718017578

10000,47.687461853027344,0.023845195770263672

15000,46.045196533203125,0.02205944061279297

20000,44.64243698120117,0.020934104919433594

25000,43.55231857299805,0.020574331283569336

30000,43.493412017822266,0.020569324493408203

35000,42.51478958129883,0.01968073844909668

40000,42.213401794433594,0.01972222328186035

45000,40.9914665222168,0.018793582916259766

50000,42.946231842041016,0.019819974899291992

55000,40.699989318847656,0.018331050872802734

60000,41.737518310546875,0.019069194793701172

|

f68c987eb6b2be35e6b650acd69a1519

|

MisbaHF/distilbert-base-uncased-finetuned-cola

|

MisbaHF

|

distilbert

| 13 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['glue']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,572 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7134

- Matthews Correlation: 0.5411

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5294 | 1.0 | 535 | 0.5082 | 0.4183 |

| 0.3483 | 2.0 | 1070 | 0.4969 | 0.5259 |

| 0.2355 | 3.0 | 1605 | 0.6260 | 0.5065 |

| 0.1733 | 4.0 | 2140 | 0.7134 | 0.5411 |

| 0.1238 | 5.0 | 2675 | 0.8516 | 0.5291 |
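
For readers unfamiliar with the metric in the last column, here is a minimal sketch of the Matthews correlation coefficient for binary labels, computed from the confusion-matrix counts. It assumes labels are encoded as 0 and 1; the function name `matthews_correlation` is illustrative and this is not the implementation used to produce the table.

```python
import math


def matthews_correlation(y_true, y_pred):
    """Matthews correlation coefficient for binary labels encoded as 0/1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention the score is 0 when any marginal count is zero.
    if denominator == 0:
        return 0.0
    return (tp * tn - fp * fn) / denominator
```

The score ranges from -1 (total disagreement) through 0 (no better than chance) to 1 (perfect prediction).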

### Framework versions

- Transformers 4.12.3

- Pytorch 1.10.0+cu111

- Datasets 1.15.1

- Tokenizers 0.10.3

|

0d487bc311782289b93c56850c4ed1a2

|

skr3178/xlm-roberta-base-finetuned-panx-de-fr

|

skr3178

|

xlm-roberta

| 10 | 5 |

transformers

| 0 |

token-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,321 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1644

- F1: 0.8617

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2891 | 1.0 | 715 | 0.1780 | 0.8288 |

| 0.1471 | 2.0 | 1430 | 0.1627 | 0.8509 |

| 0.0947 | 3.0 | 2145 | 0.1644 | 0.8617 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

1d445ad9636da3cc84e677b725130469

|

stanfordnlp/stanza-sme

|

stanfordnlp

| null | 8 | 3 |

stanza

| 0 |

token-classification

| false | false | false |

apache-2.0

|

['sme']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['stanza', 'token-classification']

| false | true | true | 584 | false |

# Stanza model for North_Sami (sme)

Stanza is a collection of accurate and efficient tools for the linguistic analysis of many human languages. Starting from raw text to syntactic analysis and entity recognition, Stanza brings state-of-the-art NLP models to languages of your choosing.

Find more about it in [our website](https://stanfordnlp.github.io/stanza) and our [GitHub repository](https://github.com/stanfordnlp/stanza).

This card and repo were automatically prepared with `hugging_stanza.py` in the `stanfordnlp/huggingface-models` repo

Last updated 2022-09-25 02:02:22.878

|

c6a03ddbbb6c6a01b47868a7c7018755

|

research-backup/t5-base-subjqa-vanilla-grocery-qg

|

research-backup

|

t5

| 34 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

cc-by-4.0

|

['en']

|

['lmqg/qg_subjqa']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['question generation']

| true | true | true | 3,969 | false |

# Model Card of `research-backup/t5-base-subjqa-vanilla-grocery-qg`

This model is a fine-tuned version of [t5-base](https://huggingface.co/t5-base) for the question generation task on [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) (dataset_name: grocery) via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [t5-base](https://huggingface.co/t5-base)

- **Language:** en

- **Training data:** [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) (grocery)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="en", model="research-backup/t5-base-subjqa-vanilla-grocery-qg")

# model prediction

questions = model.generate_q(list_context="William Turner was an English painter who specialised in watercolour landscapes", list_answer="William Turner")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "research-backup/t5-base-subjqa-vanilla-grocery-qg")

output = pipe("generate question: <hl> Beyonce <hl> further expanded her acting career, starring as blues singer Etta James in the 2008 musical biopic, Cadillac Records.")

```

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/research-backup/t5-base-subjqa-vanilla-grocery-qg/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_subjqa.grocery.json)

| | Score | Type | Dataset |

|:-----------|--------:|:--------|:-----------------------------------------------------------------|

| BERTScore | 78.84 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| Bleu_1 | 3.05 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| Bleu_2 | 0.88 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| Bleu_3 | 0 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| Bleu_4 | 0 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| METEOR | 2.08 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| MoverScore | 51.78 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

| ROUGE_L | 1.33 | grocery | [lmqg/qg_subjqa](https://huggingface.co/datasets/lmqg/qg_subjqa) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_subjqa

- dataset_name: grocery

- input_types: ['paragraph_answer']

- output_types: ['question']

- prefix_types: ['qg']

- model: t5-base

- max_length: 512

- max_length_output: 32

- epoch: 3

- batch: 16

- lr: 1e-05

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 8

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/research-backup/t5-base-subjqa-vanilla-grocery-qg/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

860419fc8b6bd195748ccb3d1fa7e741

|

okite97/xlm-roberta-base-finetuned-panx-en

|

okite97

|

xlm-roberta

| 9 | 7 |

transformers

| 0 |

token-classification

| true | false | false |

mit

| null |

['xtreme']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,319 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-en

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3848

- F1: 0.6994

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 1.0435 | 1.0 | 74 | 0.5169 | 0.5532 |

| 0.4719 | 2.0 | 148 | 0.4224 | 0.6630 |

| 0.3424 | 3.0 | 222 | 0.3848 | 0.6994 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

d43b9cc6b5405c38e6d30a86e42ce475

|

speechbrain/REAL-M-sisnr-estimator

|

speechbrain

| null | 6 | 33 |

speechbrain

| 1 | null | true | false | false |

apache-2.0

|

['en']

|

['REAL-M', 'WHAMR!']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio-source-separation', 'Source Separation', 'Speech Separation', 'WHAM!', 'REAL-M', 'SepFormer', 'Transformer', 'pytorch', 'speechbrain']

| false | true | true | 4,588 | false |


# Neural SI-SNR Estimator

The Neural SI-SNR Estimator predicts the scale-invariant signal-to-noise ratio (SI-SNR) from the separated signals and the original mixture.

The performance estimation is blind (i.e., no target signals are needed). This allows performance to be estimated on real mixtures, where the targets are not available.
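For reference, the quantity being estimated can be computed directly when a clean target is available. The sketch below follows the standard SI-SNR definition in plain Python; the function name `si_snr`, the mean removal step, and the `eps` constant are illustrative choices and are not part of this repository's API.

```python
import math


def si_snr(estimate, target, eps=1e-8):
    """Scale-invariant SNR in dB between two equal-length 1-D signals."""
    n = len(target)
    mean_e = sum(estimate) / n
    mean_t = sum(target) / n
    # Remove the mean so a constant offset does not affect the score.
    est = [x - mean_e for x in estimate]
    tgt = [x - mean_t for x in target]
    # Project the estimate onto the target to get the scaled target component.
    scale = sum(e * t for e, t in zip(est, tgt)) / (sum(t * t for t in tgt) + eps)
    s_target = [scale * t for t in tgt]
    # Everything in the estimate that is not explained by the target is noise.
    e_noise = [e - s for e, s in zip(est, s_target)]
    num = sum(s * s for s in s_target)
    den = sum(x * x for x in e_noise)
    return 10.0 * math.log10((num + eps) / (den + eps))
```

Because the estimate is projected onto the target before the ratio is taken, rescaling the estimate leaves the score essentially unchanged, which is what "scale-invariant" refers to.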

This repository provides the SI-SNR estimator model introduced for the REAL-M dataset.

The REAL-M dataset can be downloaded from [this link](https://sourceseparationresearch.com/static/REAL-M-v0.1.0.tar.gz).

The paper for the REAL-M dataset can be found on [this arxiv link](https://arxiv.org/pdf/2110.10812.pdf).

| Release | Test-Set (WHAMR!) average l1 error |

|:---:|:---:|

| 18-10-21 | 1.7 dB |

## Install SpeechBrain

First, install SpeechBrain from source (currently required):

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

We encourage you to read our tutorials and learn more about [SpeechBrain](https://speechbrain.github.io).

### Minimal example for SI-SNR estimation

```python

from speechbrain.pretrained import SepformerSeparation as separator

from speechbrain.pretrained.interfaces import fetch

from speechbrain.pretrained.interfaces import SNREstimator as snrest

import torchaudio

# 1- Download a test mixture

fetch("test_mixture.wav", source="speechbrain/sepformer-wsj02mix", savedir=".", save_filename="test_mixture.wav")

# 2- Separate the mixture with a pretrained model (sepformer-whamr in this case)

model = separator.from_hparams(source="speechbrain/sepformer-whamr", savedir='pretrained_models/sepformer-whamr')

est_sources = model.separate_file(path='test_mixture.wav')

# 3- Estimate the performance

snr_est_model = snrest.from_hparams(source="speechbrain/REAL-M-sisnr-estimator",savedir='pretrained_models/REAL-M-sisnr-estimator')

mix, fs = torchaudio.load('test_mixture.wav')

snrhat = snr_est_model.estimate_batch(mix, est_sources)

print(snrhat) # Estimates are in dB / 10 (in the range 0-1, e.g., 0 --> 0dB, 1 --> 10dB)

```
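As the comment in the example notes, the estimates are expressed in dB / 10. A minimal sketch of the conversion back to dB, assuming the estimates have been flattened into a plain sequence of numbers; `to_db` is a hypothetical helper, not a SpeechBrain function.

```python
def to_db(normalized_estimates):
    """Map estimates given in dB / 10 back to dB (e.g., 0.5 -> 5 dB)."""
    return [10.0 * float(x) for x in normalized_estimates]


print(to_db([0.0, 0.5, 1.0]))  # [0.0, 5.0, 10.0]
```

For a tensor such as `snrhat`, multiplying by 10 directly should give the same result.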

### Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.

### Training

The model was trained with SpeechBrain (fc2eabb7).

To train it from scratch, follow these steps:

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

3. Run Training:

```

cd recipes/REAL-M/sisnr-estimation

python train.py hparams/pool_sisnrestimator.yaml --data_folder /yourLibri2Mixpath --base_folder_dm /yourLibriSpeechpath --rir_path /yourpathforwhamrRIRs --dynamic_mixing True --use_whamr_train True --whamr_data_folder /yourpath/whamr --base_folder_dm_whamr /yourpath/wsj0-processed/si_tr_s

```

You can find our training results (models, logs, etc) [here](https://drive.google.com/drive/folders/1NGncbjvLeGfbUqmVi6ej-NH9YQn5vBmI).

### Limitations

The SpeechBrain team does not provide any warranty on the performance achieved by this model when used on other datasets.

#### Referencing SpeechBrain

```bibtex

@misc{speechbrain,

title={{SpeechBrain}: A General-Purpose Speech Toolkit},

author={Mirco Ravanelli and Titouan Parcollet and Peter Plantinga and Aku Rouhe and Samuele Cornell and Loren Lugosch and Cem Subakan and Nauman Dawalatabad and Abdelwahab Heba and Jianyuan Zhong and Ju-Chieh Chou and Sung-Lin Yeh and Szu-Wei Fu and Chien-Feng Liao and Elena Rastorgueva and François Grondin and William Aris and Hwidong Na and Yan Gao and Renato De Mori and Yoshua Bengio},

year={2021},

eprint={2106.04624},

archivePrefix={arXiv},

primaryClass={eess.AS},

note={arXiv:2106.04624}

}

```

#### Referencing REAL-M

```bibtex

@misc{subakan2021realm,

title={REAL-M: Towards Speech Separation on Real Mixtures},

author={Cem Subakan and Mirco Ravanelli and Samuele Cornell and François Grondin},

year={2021},

eprint={2110.10812},

archivePrefix={arXiv},

primaryClass={eess.AS}

}

```


# **About SpeechBrain**

- Website: https://speechbrain.github.io/

- Code: https://github.com/speechbrain/speechbrain/

- HuggingFace: https://huggingface.co/speechbrain/

|

42e050d8bb906071d25ca8fa04715e05

|

ntsema/wav2vec2-xlsr-53-espeak-cv-ft-evn2-ntsema-colab

|

ntsema

|

wav2vec2

| 13 | 8 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['audiofolder']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,576 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-xlsr-53-espeak-cv-ft-evn2-ntsema-colab

This model is a fine-tuned version of [facebook/wav2vec2-xlsr-53-espeak-cv-ft](https://huggingface.co/facebook/wav2vec2-xlsr-53-espeak-cv-ft) on the audiofolder dataset.

It achieves the following results on the evaluation set:

- Loss: 2.0299

- Wer: 0.9867

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
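The `total_train_batch_size` above is consistent with the per-device batch size multiplied by the number of gradient accumulation steps. A small sketch of that arithmetic, assuming a single training device (the device count is not stated in the card, and the function name is illustrative):

```python
def total_train_batch_size(train_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    # num_devices=1 is an assumption; the card does not report it.
    return train_batch_size * gradient_accumulation_steps * num_devices


print(total_train_batch_size(4, 8))  # 32
```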

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 4.2753 | 6.15 | 400 | 1.6106 | 0.99 |

| 0.8472 | 12.3 | 800 | 1.6731 | 0.99 |

| 0.4462 | 18.46 | 1200 | 1.8516 | 0.99 |

| 0.2556 | 24.61 | 1600 | 2.0299 | 0.9867 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

c70d730fcd2df5cb7cc60d22463e455a

|

Sultannn/bert-base-ft-pos-xtreme

|

Sultannn

|

bert

| 8 | 10 |

transformers

| 0 |

token-classification

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,677 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Sultannn/bert-base-ft-pos-xtreme

This model is a fine-tuned version of [indobenchmark/indobert-base-p1](https://huggingface.co/indobenchmark/indobert-base-p1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1518

- Validation Loss: 0.2837

- Epoch: 3

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 3e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 3e-05, 'decay_steps': 1008, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 500, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.9615 | 0.3139 | 0 |

| 0.3181 | 0.2758 | 1 |

| 0.2173 | 0.2774 | 2 |

| 0.1518 | 0.2837 | 3 |

### Framework versions

- Transformers 4.18.0

- TensorFlow 2.8.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

fedd57fd4444b7f1e8821bdf1e766253

|

sd-concepts-library/maurice-quentin-de-la-tour-style

|

sd-concepts-library

| null | 9 | 0 | null | 1 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,124 | false |

### Maurice-Quentin-de-la-Tour-style on Stable Diffusion

This is the `<maurice>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

4de1b8be905948362ba344a97add8b40

|

DrishtiSharma/wav2vec2-large-xls-r-300m-maltese

|

DrishtiSharma

|

wav2vec2

| 11 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['mt']

|

['mozilla-foundation/common_voice_8_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'generated_from_trainer', 'hf-asr-leaderboard', 'model_for_talk', 'mozilla-foundation/common_voice_8_0', 'mt', 'robust-speech-event']

| false | true | true | 2,153 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-maltese

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the MOZILLA-FOUNDATION/COMMON_VOICE_8_0 - MT dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2994

- Wer: 0.2781

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 7e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1800

- num_epochs: 100.0

- mixed_precision_training: Native AMP
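To make the `linear` scheduler with warmup concrete, the sketch below computes the learning rate at a given step: a linear ramp from 0 to the peak value during warmup, followed by a linear decay to 0. The total of 11,100 steps is an assumption extrapolated from the results table (about 111 steps per epoch over 100 epochs) and is not reported in the card.

```python
def linear_schedule_lr(step, base_lr=7e-05, warmup_steps=1800, total_steps=11100):
    """Learning rate at `step` for linear warmup followed by linear decay."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 up to base_lr.
        return base_lr * step / warmup_steps
    # Decay: fall linearly from base_lr at the end of warmup to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 900, 1800, 6450, 11100):
    print(step, linear_schedule_lr(step))
```

With these settings the peak learning rate is reached at step 1800, roughly 16 epochs into training under the assumed steps per epoch.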

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.0174 | 9.01 | 1000 | 3.0552 | 1.0 |

| 1.0446 | 18.02 | 2000 | 0.6708 | 0.7577 |

| 0.7995 | 27.03 | 3000 | 0.4202 | 0.4770 |

| 0.6978 | 36.04 | 4000 | 0.3054 | 0.3494 |

| 0.6189 | 45.05 | 5000 | 0.2878 | 0.3154 |

| 0.5667 | 54.05 | 6000 | 0.3114 | 0.3286 |

| 0.5173 | 63.06 | 7000 | 0.3085 | 0.3021 |

| 0.4682 | 72.07 | 8000 | 0.3058 | 0.2969 |

| 0.451 | 81.08 | 9000 | 0.3146 | 0.2907 |

| 0.4213 | 90.09 | 10000 | 0.3030 | 0.2881 |

| 0.4005 | 99.1 | 11000 | 0.3001 | 0.2789 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

### Evaluation Script

!python eval.py \

--model_id DrishtiSharma/wav2vec2-large-xls-r-300m-maltese \

--dataset mozilla-foundation/common_voice_8_0 --config mt --split test --log_outputs

|

84bd0bd7545883fb9def73f4f255581b

|

juanarturovargas/mt5-small-finetuned-amazon-en-es

|

juanarturovargas

|

mt5

| 14 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,996 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-amazon-en-es

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.0283

- Rouge1: 17.6736

- Rouge2: 8.5399

- Rougel: 17.4107

- Rougelsum: 17.3637

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|

| 3.7032 | 1.0 | 1209 | 3.1958 | 16.1227 | 7.4852 | 15.2662 | 15.3552 |

| 3.6502 | 2.0 | 2418 | 3.1103 | 17.2284 | 8.1626 | 16.757 | 16.6583 |

| 3.4365 | 3.0 | 3627 | 3.0698 | 17.2326 | 8.7096 | 17.0961 | 16.9705 |

| 3.312 | 4.0 | 4836 | 3.0324 | 16.9472 | 8.1386 | 16.6025 | 16.6126 |

| 3.2343 | 5.0 | 6045 | 3.0385 | 17.8752 | 8.0578 | 17.4985 | 17.5298 |

| 3.1661 | 6.0 | 7254 | 3.0334 | 17.8822 | 8.5243 | 17.5825 | 17.5242 |

| 3.1305 | 7.0 | 8463 | 3.0289 | 17.8187 | 8.124 | 17.4815 | 17.4688 |

| 3.1039 | 8.0 | 9672 | 3.0283 | 17.6736 | 8.5399 | 17.4107 | 17.3637 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu102

- Datasets 2.5.2

- Tokenizers 0.12.1

|

a3091645540c1794b2f041772e01640b

|

S2312dal/M6_MLM_cross

|

S2312dal

|

bert

| 47 | 2 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,457 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# M6_MLM_cross

This model is a fine-tuned version of [S2312dal/M6_MLM](https://huggingface.co/S2312dal/M6_MLM) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0197

- Pearson: 0.9680

- Spearmanr: 0.9098

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 25

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 8.0

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson | Spearmanr |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:---------:|

| 0.0723 | 1.0 | 131 | 0.0646 | 0.8674 | 0.8449 |

| 0.0433 | 2.0 | 262 | 0.0322 | 0.9475 | 0.9020 |

| 0.0015 | 3.0 | 393 | 0.0197 | 0.9680 | 0.9098 |
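The two correlation metrics in the table measure different things: Pearson captures linear agreement between predictions and labels, while Spearman only compares their rank order. The following is a self-contained sketch of both, written in plain Python for illustration; it is not the evaluation code used for this model, and the function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

A model whose scores are monotonically related to the labels but not linearly so would get a Spearman value of 1 and a Pearson value below 1.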

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

567c227b49f0fb9d9fa4c9f3af963f8e

|

Apel/LoRa

|

Apel

| null | 26 | 0 | null | 7 | null | false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,070 | false |

# LoRAs

You will need to install this extension https://github.com/kohya-ss/sd-webui-additional-networks in order to use it in the Web UI. Follow the "How to use" section on that page.

# Social Media

[Twitter](https://twitter.com/kumisudang)

[Pixiv](https://www.pixiv.net/en/users/89129423)

## Characters

### Shinobu Kochou (Demon Slayer)

[Download .safetensors](https://huggingface.co/Apel/LoRa/tree/main/Characters/Demon%20Slayer%3A%20Kimetsu%20no%20Yaiba/Shinobu%20Kochou)

Relevant full-character prompt:

```

masterpiece, best quality, ultra-detailed, illustration, 1girl, solo, kochou shinobu, multicolored hair, no bangs, hair intakes, purple eyes, forehead, wisteria, black shirt, black pants, haori, butterfly, standing waist-deep in the crystal clear water of a tranquil pond, peaceful expression, surrounded by lush green foliage and wildflowers, falling petals, falling leaves, large breasts, cowboy shot, buttons, belt, light smile,

```

|

2dc6df5bdef5b29fd2925e550e16c8de

|

tomekkorbak/amazing_shannon

|

tomekkorbak

|

gpt2

| 23 | 2 |

transformers

| 0 | null | true | false | false |

mit

|

['en']

|

['tomekkorbak/detoxify-pile-chunk3-0-50000', 'tomekkorbak/detoxify-pile-chunk3-50000-100000', 'tomekkorbak/detoxify-pile-chunk3-100000-150000', 'tomekkorbak/detoxify-pile-chunk3-150000-200000', 'tomekkorbak/detoxify-pile-chunk3-200000-250000', 'tomekkorbak/detoxify-pile-chunk3-250000-300000', 'tomekkorbak/detoxify-pile-chunk3-300000-350000', 'tomekkorbak/detoxify-pile-chunk3-350000-400000', 'tomekkorbak/detoxify-pile-chunk3-400000-450000', 'tomekkorbak/detoxify-pile-chunk3-450000-500000', 'tomekkorbak/detoxify-pile-chunk3-500000-550000', 'tomekkorbak/detoxify-pile-chunk3-550000-600000', 'tomekkorbak/detoxify-pile-chunk3-600000-650000', 'tomekkorbak/detoxify-pile-chunk3-650000-700000', 'tomekkorbak/detoxify-pile-chunk3-700000-750000', 'tomekkorbak/detoxify-pile-chunk3-750000-800000', 'tomekkorbak/detoxify-pile-chunk3-800000-850000', 'tomekkorbak/detoxify-pile-chunk3-850000-900000', 'tomekkorbak/detoxify-pile-chunk3-900000-950000', 'tomekkorbak/detoxify-pile-chunk3-950000-1000000', 'tomekkorbak/detoxify-pile-chunk3-1000000-1050000', 'tomekkorbak/detoxify-pile-chunk3-1050000-1100000', 'tomekkorbak/detoxify-pile-chunk3-1100000-1150000', 'tomekkorbak/detoxify-pile-chunk3-1150000-1200000', 'tomekkorbak/detoxify-pile-chunk3-1200000-1250000', 'tomekkorbak/detoxify-pile-chunk3-1250000-1300000', 'tomekkorbak/detoxify-pile-chunk3-1300000-1350000', 'tomekkorbak/detoxify-pile-chunk3-1350000-1400000', 'tomekkorbak/detoxify-pile-chunk3-1400000-1450000', 'tomekkorbak/detoxify-pile-chunk3-1450000-1500000', 'tomekkorbak/detoxify-pile-chunk3-1500000-1550000', 'tomekkorbak/detoxify-pile-chunk3-1550000-1600000', 'tomekkorbak/detoxify-pile-chunk3-1600000-1650000', 'tomekkorbak/detoxify-pile-chunk3-1650000-1700000', 'tomekkorbak/detoxify-pile-chunk3-1700000-1750000', 'tomekkorbak/detoxify-pile-chunk3-1750000-1800000', 'tomekkorbak/detoxify-pile-chunk3-1800000-1850000', 'tomekkorbak/detoxify-pile-chunk3-1850000-1900000', 'tomekkorbak/detoxify-pile-chunk3-1900000-1950000']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 8,755 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# amazing_shannon

This model was trained from scratch on the tomekkorbak/detoxify-pile-chunk3-0-50000, the tomekkorbak/detoxify-pile-chunk3-50000-100000, the tomekkorbak/detoxify-pile-chunk3-100000-150000, the tomekkorbak/detoxify-pile-chunk3-150000-200000, the tomekkorbak/detoxify-pile-chunk3-200000-250000, the tomekkorbak/detoxify-pile-chunk3-250000-300000, the tomekkorbak/detoxify-pile-chunk3-300000-350000, the tomekkorbak/detoxify-pile-chunk3-350000-400000, the tomekkorbak/detoxify-pile-chunk3-400000-450000, the tomekkorbak/detoxify-pile-chunk3-450000-500000, the tomekkorbak/detoxify-pile-chunk3-500000-550000, the tomekkorbak/detoxify-pile-chunk3-550000-600000, the tomekkorbak/detoxify-pile-chunk3-600000-650000, the tomekkorbak/detoxify-pile-chunk3-650000-700000, the tomekkorbak/detoxify-pile-chunk3-700000-750000, the tomekkorbak/detoxify-pile-chunk3-750000-800000, the tomekkorbak/detoxify-pile-chunk3-800000-850000, the tomekkorbak/detoxify-pile-chunk3-850000-900000, the tomekkorbak/detoxify-pile-chunk3-900000-950000, the tomekkorbak/detoxify-pile-chunk3-950000-1000000, the tomekkorbak/detoxify-pile-chunk3-1000000-1050000, the tomekkorbak/detoxify-pile-chunk3-1050000-1100000, the tomekkorbak/detoxify-pile-chunk3-1100000-1150000, the tomekkorbak/detoxify-pile-chunk3-1150000-1200000, the tomekkorbak/detoxify-pile-chunk3-1200000-1250000, the tomekkorbak/detoxify-pile-chunk3-1250000-1300000, the tomekkorbak/detoxify-pile-chunk3-1300000-1350000, the tomekkorbak/detoxify-pile-chunk3-1350000-1400000, the tomekkorbak/detoxify-pile-chunk3-1400000-1450000, the tomekkorbak/detoxify-pile-chunk3-1450000-1500000, the tomekkorbak/detoxify-pile-chunk3-1500000-1550000, the tomekkorbak/detoxify-pile-chunk3-1550000-1600000, the tomekkorbak/detoxify-pile-chunk3-1600000-1650000, the tomekkorbak/detoxify-pile-chunk3-1650000-1700000, the tomekkorbak/detoxify-pile-chunk3-1700000-1750000, the tomekkorbak/detoxify-pile-chunk3-1750000-1800000, the tomekkorbak/detoxify-pile-chunk3-1800000-1850000, the 
tomekkorbak/detoxify-pile-chunk3-1850000-1900000 and the tomekkorbak/detoxify-pile-chunk3-1900000-1950000 datasets.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 50354

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'filter_threshold': 0.00078,

'is_split_by_sentences': True},

'generation': {'force_call_on': [25354],

'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'force_call_on': [25354],

'max_tokens': 64,

'num_samples': 4096},

'model': {'from_scratch': True,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'path_or_name': 'gpt2'},

'objective': {'name': 'MLE'},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'amazing_shannon',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
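The `num_tokens` budget in the config and the `training_steps` value in the hyperparameters can be related by simple arithmetic. The sketch below assumes a sequence length of 1024 tokens, which is not stated in the config; with that assumption the token budget reproduces the reported 50354 steps. The function name is illustrative.

```python
def steps_for_token_budget(num_tokens, effective_batch_size, sequence_length):
    """Whole optimizer steps that fit into a token budget."""
    tokens_per_step = effective_batch_size * sequence_length
    return num_tokens // tokens_per_step


# sequence_length=1024 is an assumption; it does not appear in the config.
print(steps_for_token_budget(3_300_000_000, 64, 1024))  # 50354
```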

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/3u44exkw

|

54e4f4ab8a695fa8e88117e7c905bbf4

|

Kushala/wav2vec2-large-xls-r-300m-kushala_wave2vec_trails

|

Kushala

|

wav2vec2

| 16 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,067 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-kushala_wave2vec_trails

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 100

### Training results

### Framework versions

- Transformers 4.17.0

- Pytorch 1.12.1+cpu

- Datasets 1.18.3

- Tokenizers 0.12.1

|

19d43b718f9186540c4f5b14d3c06d84

|

yip-i/wav2vec2-demo-M02-2

|

yip-i

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 3,203 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-demo-M02-2

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.2709

- Wer: 1.0860

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 23.4917 | 0.91 | 500 | 3.2945 | 1.0 |

| 3.4102 | 1.81 | 1000 | 3.1814 | 1.0 |

| 2.9438 | 2.72 | 1500 | 2.7858 | 1.0 |

| 2.6698 | 3.62 | 2000 | 2.4745 | 1.0035 |

| 1.9542 | 4.53 | 2500 | 1.8675 | 1.3745 |

| 1.2737 | 5.43 | 3000 | 1.6459 | 1.3703 |

| 0.9748 | 6.34 | 3500 | 1.8406 | 1.3037 |

| 0.7696 | 7.25 | 4000 | 1.5086 | 1.2476 |

| 0.6396 | 8.15 | 4500 | 1.8280 | 1.2476 |

| 0.558 | 9.06 | 5000 | 1.7680 | 1.2247 |

| 0.4865 | 9.96 | 5500 | 1.8210 | 1.2309 |

| 0.4244 | 10.87 | 6000 | 1.7910 | 1.1775 |

| 0.3898 | 11.78 | 6500 | 1.8021 | 1.1831 |

| 0.3456 | 12.68 | 7000 | 1.7746 | 1.1456 |

| 0.3349 | 13.59 | 7500 | 1.8969 | 1.1519 |

| 0.3233 | 14.49 | 8000 | 1.7402 | 1.1234 |

| 0.3046 | 15.4 | 8500 | 1.8585 | 1.1429 |

| 0.2622 | 16.3 | 9000 | 1.6687 | 1.0950 |

| 0.2593 | 17.21 | 9500 | 1.8192 | 1.1144 |

| 0.2541 | 18.12 | 10000 | 1.8665 | 1.1110 |

| 0.2098 | 19.02 | 10500 | 1.9996 | 1.1186 |

| 0.2192 | 19.93 | 11000 | 2.0346 | 1.1040 |

| 0.1934 | 20.83 | 11500 | 2.1924 | 1.1012 |

| 0.2034 | 21.74 | 12000 | 1.8060 | 1.0929 |

| 0.1857 | 22.64 | 12500 | 2.0334 | 1.0798 |

| 0.1819 | 23.55 | 13000 | 2.1223 | 1.1040 |

| 0.1621 | 24.46 | 13500 | 2.1795 | 1.0957 |

| 0.1548 | 25.36 | 14000 | 2.1545 | 1.1089 |

| 0.1512 | 26.27 | 14500 | 2.2707 | 1.1186 |

| 0.1472 | 27.17 | 15000 | 2.1698 | 1.0888 |

| 0.1296 | 28.08 | 15500 | 2.2496 | 1.0867 |

| 0.1312 | 28.99 | 16000 | 2.2969 | 1.0881 |

| 0.1331 | 29.89 | 16500 | 2.2709 | 1.0860 |
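Several WER values in the table exceed 1.0. This is possible because WER divides the number of word-level edits (substitutions, deletions, insertions) by the length of the reference, so a hypothesis with many inserted words can accumulate more errors than there are reference words. The sketch below is a plain-Python illustration of that definition, not the metric implementation used to produce the table; it assumes a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat", "the cat sat on mat"))  # 1.5
```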

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 1.18.3

- Tokenizers 0.13.2

|

6ddc06ce2390b90eff83c6118f7e85e4

|

ml6team/keyphrase-generation-keybart-inspec

|

ml6team

|

bart

| 10 | 281 |

transformers

| 1 |

text2text-generation

| true | false | false |

mit

|

['en']

|

['midas/inspec']

| null | 0 | 0 | 0 | 0 | 2 | 2 | 0 |

['keyphrase-generation']

| true | true | true | 8,935 | false |

# 🔑 Keyphrase Generation Model: KeyBART-inspec

Keyphrase extraction is a technique in text analysis where you extract the important keyphrases from a document. Thanks to these keyphrases humans can understand the content of a text very quickly and easily without reading it completely. Keyphrase extraction was first done primarily by human annotators, who read the text in detail and then wrote down the most important keyphrases. The disadvantage is that if you work with a lot of documents, this process can take a lot of time ⏳.

Here is where Artificial Intelligence 🤖 comes in. Currently, classical machine learning methods that use statistical and linguistic features are widely used for the extraction process. With deep learning, it is possible to capture the semantic meaning of a text even better than with these classical methods. Classical methods look at the frequency, occurrence, and order of words in the text, whereas neural approaches can capture long-term semantic dependencies and the context of words in a text.

## 📓 Model Description

This model uses [KeyBART](https://huggingface.co/bloomberg/KeyBART) as its base model and fine-tunes it on the [Inspec dataset](https://huggingface.co/datasets/midas/inspec). KeyBART focuses on learning a better representation of keyphrases in a generative setting. It produces the keyphrases associated with the input document from a corrupted input. The input is changed by token masking, keyphrase masking and keyphrase replacement. This model can already be used without any fine-tuning, but can be fine-tuned if needed.

You can find more information about the architecture in this [paper](https://arxiv.org/abs/2112.08547).

Kulkarni, Mayank, Debanjan Mahata, Ravneet Arora, and Rajarshi Bhowmik. "Learning Rich Representation of Keyphrases from Text." arXiv preprint arXiv:2112.08547 (2021).

## ✋ Intended Uses & Limitations

### 🛑 Limitations

* This keyphrase generation model is very domain-specific and will perform very well on abstracts of scientific papers. It's not recommended to use this model for other domains, but you are free to test it out.

* Only works for English documents.

### ❓ How To Use

```python
# Model parameters
from transformers import (
    Text2TextGenerationPipeline,
    AutoModelForSeq2SeqLM,
    AutoTokenizer,
)


class KeyphraseGenerationPipeline(Text2TextGenerationPipeline):
    def __init__(self, model, keyphrase_sep_token=";", *args, **kwargs):
        super().__init__(
            model=AutoModelForSeq2SeqLM.from_pretrained(model),
            tokenizer=AutoTokenizer.from_pretrained(model),
            *args,
            **kwargs
        )
        self.keyphrase_sep_token = keyphrase_sep_token

    def postprocess(self, model_outputs):
        results = super().postprocess(model_outputs=model_outputs)
        # Split each generated string on the separator and drop empty pieces
        return [
            [
                keyphrase.strip()
                for keyphrase in result.get("generated_text").split(self.keyphrase_sep_token)
                if keyphrase.strip() != ""
            ]
            for result in results
        ]
```

```python

# Load pipeline

model_name = "ml6team/keyphrase-generation-keybart-inspec"

generator = KeyphraseGenerationPipeline(model=model_name)

```

```python

# Inference

text = """

Keyphrase extraction is a technique in text analysis where you extract the

important keyphrases from a document. Thanks to these keyphrases humans can

understand the content of a text very quickly and easily without reading it

completely. Keyphrase extraction was first done primarily by human annotators,

who read the text in detail and then wrote down the most important keyphrases.

The disadvantage is that if you work with a lot of documents, this process

can take a lot of time.

Here is where Artificial Intelligence comes in. Currently, classical machine

learning methods, that use statistical and linguistic features, are widely used

for the extraction process. Now with deep learning, it is possible to capture

the semantic meaning of a text even better than these classical methods.

Classical methods look at the frequency, occurrence and order of words

in the text, whereas these neural approaches can capture long-term

semantic dependencies and context of words in a text.

""".replace("\n", " ")

keyphrases = generator(text)

print(keyphrases)

```

```

# Output

[['keyphrase extraction', 'text analysis', 'keyphrases', 'human annotators', 'artificial']]

```

## 📚 Training Dataset

[Inspec](https://huggingface.co/datasets/midas/inspec) is a keyphrase extraction/generation dataset consisting of 2000 English scientific papers from the scientific domains of Computers and Control and Information Technology, published between 1998 and 2002. The keyphrases are annotated by professional indexers or editors.

You can find more information in the [paper](https://dl.acm.org/doi/10.3115/1119355.1119383).

## 👷♂️ Training Procedure

### Training Parameters

| Parameter | Value |

| --------- | ------|

| Learning Rate | 5e-5 |

| Epochs | 15 |

| Early Stopping Patience | 1 |
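
To make the interaction between the epoch limit and the early stopping patience concrete, here is a minimal pure-Python sketch of patience-based stopping. It is an illustration only, not the training script used for this model: the function name `epochs_run`, the choice of validation loss as the monitored metric, and the loss values are all assumptions.

```python
def epochs_run(val_losses, max_epochs=15, patience=1):
    """Return how many epochs complete before training stops.

    Hypothetical helper: assumes the monitored metric is a validation
    loss (lower is better) measured once per epoch.
    """
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            # Improvement: remember it and reset the patience counter
            best = loss
            bad_epochs = 0
        else:
            # No improvement: stop once the patience budget is used up
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return min(len(val_losses), max_epochs)


# Made-up losses: the fourth epoch is the first one that fails to improve
print(epochs_run([0.90, 0.70, 0.65, 0.66, 0.60]))
```

With a patience of 1, a single epoch without improvement ends training, so the 15-epoch limit acts only as an upper bound.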

### Preprocessing

The documents in the dataset are already preprocessed into a list of words with the corresponding keyphrases. The only things left to do are tokenization and joining all keyphrases into one string with a separator of your choice (```;```).

```python
from datasets import load_dataset
from transformers import AutoTokenizer

# Tokenizer
tokenizer = AutoTokenizer.from_pretrained("bloomberg/KeyBART", add_prefix_space=True)

# Dataset parameters
dataset_full_name = "midas/inspec"
dataset_subset = "raw"
dataset_document_column = "document"

keyphrase_sep_token = ";"


def preprocess_keyphrases(text_ids, kp_list):
    kp_order_list = []
    kp_set = set(kp_list)
    text = tokenizer.decode(
        text_ids, skip_special_tokens=True, clean_up_tokenization_spaces=True
    )
    text = text.lower()
    for kp in kp_set:
        kp = kp.strip()
        kp_index = text.find(kp.lower())
        kp_order_list.append((kp_index, kp))

    # Sort by first occurrence in the text; keyphrases not found have index -1
    kp_order_list.sort()

    present_kp, absent_kp = [], []
    for kp_index, kp in kp_order_list:
        if kp_index < 0:
            absent_kp.append(kp)
        else:
            present_kp.append(kp)
    return present_kp, absent_kp


def preprocess_function(samples):
    processed_samples = {"input_ids": [], "attention_mask": [], "labels": []}
    for i, sample in enumerate(samples[dataset_document_column]):
        input_text = " ".join(sample)
        inputs = tokenizer(
            input_text,
            padding="max_length",
            truncation=True,
        )
        present_kp, absent_kp = preprocess_keyphrases(
            text_ids=inputs["input_ids"],
            kp_list=samples["extractive_keyphrases"][i]
            + samples["abstractive_keyphrases"][i],
        )
        # Present keyphrases come first, followed by the absent ones
        keyphrases = present_kp
        keyphrases += absent_kp

        target_text = f" {keyphrase_sep_token} ".join(keyphrases)

        with tokenizer.as_target_tokenizer():
            targets = tokenizer(
                target_text, max_length=40, padding="max_length", truncation=True
            )
        # Replace padding token ids with -100 so the loss ignores them
        targets["input_ids"] = [
            (t if t != tokenizer.pad_token_id else -100)
            for t in targets["input_ids"]
        ]
        for key in inputs.keys():
            processed_samples[key].append(inputs[key])
        processed_samples["labels"].append(targets["input_ids"])

    return processed_samples


# Load dataset
dataset = load_dataset(dataset_full_name, dataset_subset)

# Preprocess dataset
tokenized_dataset = dataset.map(preprocess_function, batched=True)
```

### Postprocessing

For the post-processing, you will need to split the string based on the keyphrase separator.

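```python
def extract_keyphrases(examples):
    # Split on the separator and trim the whitespace left around each keyphrase
    return [
        [kp.strip() for kp in example.split(keyphrase_sep_token) if kp.strip()]
        for example in examples
    ]
```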

## 📝 Evaluation results

Keyphrase models are traditionally evaluated with precision, recall and F1-score at k and at M (written P@k, R@k, F1@k and P@M, R@M, F1@M), where k restricts scoring to the first k predicted keyphrases and M stands for the average number of predicted keyphrases. Keyphrase generation is also evaluated with F1@O, where O stands for the number of ground-truth keyphrases.
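
As a rough illustration of these metrics, the sketch below scores a list of predicted keyphrases against the ground truth. It is not the evaluation script behind the tables in this section: the function name `precision_recall_f1_at_k`, exact matching after lowercasing, and dividing precision by the number of predictions actually kept (rather than by k) are all assumptions.

```python
def precision_recall_f1_at_k(predicted, gold, k=None):
    """Score the first k predicted keyphrases against the gold keyphrases.

    Hypothetical helper: k=None scores every prediction, and
    k=len(gold) gives the @O variant.
    """
    # Normalise and de-duplicate predictions while keeping their order
    seen, preds = set(), []
    for phrase in predicted:
        norm = phrase.strip().lower()
        if norm and norm not in seen:
            seen.add(norm)
            preds.append(norm)
    gold_set = {g.strip().lower() for g in gold if g.strip()}

    if k is not None:
        preds = preds[:k]

    correct = sum(1 for phrase in preds if phrase in gold_set)
    precision = correct / len(preds) if preds else 0.0
    recall = correct / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Made-up example data
predicted = ["keyphrase extraction", "text analysis", "deep learning", "human annotators"]
gold = ["keyphrase extraction", "human annotators", "machine learning"]

print(precision_recall_f1_at_k(predicted, gold, k=2))          # @2
print(precision_recall_f1_at_k(predicted, gold))               # all predictions
print(precision_recall_f1_at_k(predicted, gold, k=len(gold)))  # @O
```

Some evaluation scripts divide precision by k even when the model returns fewer than k keyphrases, which lowers P@k for short prediction lists. Check which convention applies before comparing numbers.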

The model achieves the following results on the Inspec test set:

### Extractive Keyphrases

| Dataset | P@5 | R@5 | F1@5 | P@10 | R@10 | F1@10 | P@M | R@M | F1@M | P@O | R@O | F1@O |

|:-----------------:|:----:|:----:|:----:|:----:|:----:|:-----:|:----:|:----:|:----:|:----:|:----:|:----:|

| Inspec Test Set | 0.40 | 0.37 | 0.35 | 0.20 | 0.37 | 0.24 | 0.42 | 0.37 | 0.36 | 0.33 | 0.33 | 0.33 |

### Abstractive Keyphrases

| Dataset | P@5 | R@5 | F1@5 | P@10 | R@10 | F1@10 | P@M | R@M | F1@M | P@O | R@O | F1@O |

|:-----------------:|:----:|:----:|:----:|:----:|:----:|:-----:|:----:|:----:|:----:|:----:|:----:|:----:|

| Inspec Test Set | 0.07 | 0.12 | 0.08 | 0.03 | 0.12 | 0.05 | 0.08 | 0.12 | 0.08 | 0.08 | 0.12 | 0.08 |
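
The two tables score extractive keyphrases (those that appear in the document) separately from abstractive ones (those that do not). A minimal sketch of such a split is shown here, assuming the same case-insensitive substring matching that `preprocess_keyphrases` uses in the Preprocessing section. The function name `split_present_absent` and the example data are hypothetical.

```python
def split_present_absent(text, keyphrases):
    """Split keyphrases into those found in the text and those that are not."""
    lowered = text.lower()
    present, absent = [], []
    for kp in keyphrases:
        kp = kp.strip()
        if not kp:
            continue
        # Case-insensitive substring match decides which group the keyphrase joins
        if kp.lower() in lowered:
            present.append(kp)
        else:
            absent.append(kp)
    return present, absent


text = "Keyphrase extraction is a technique in text analysis."
keyphrases = ["keyphrase extraction", "text analysis", "information retrieval"]
present, absent = split_present_absent(text, keyphrases)
print(present)
print(absent)
```

Each group can then be scored on its own against the matching ground-truth list.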

## 🚨 Issues

Please feel free to start discussions in the Community Tab.

|

22eff5e5720a8ce2cdfc4f4b44910d4d

|

alea31415/bocchi-the-rock-character

|

alea31415

| null | 27 | 0 | null | 42 | null | false | false | false |

creativeml-openrail-m

| null | null | null | 5 | 0 | 5 | 0 | 1 | 1 | 0 |

[]

| false | true | true | 5,767 | false |

---

license: creativeml-openrail-m

---

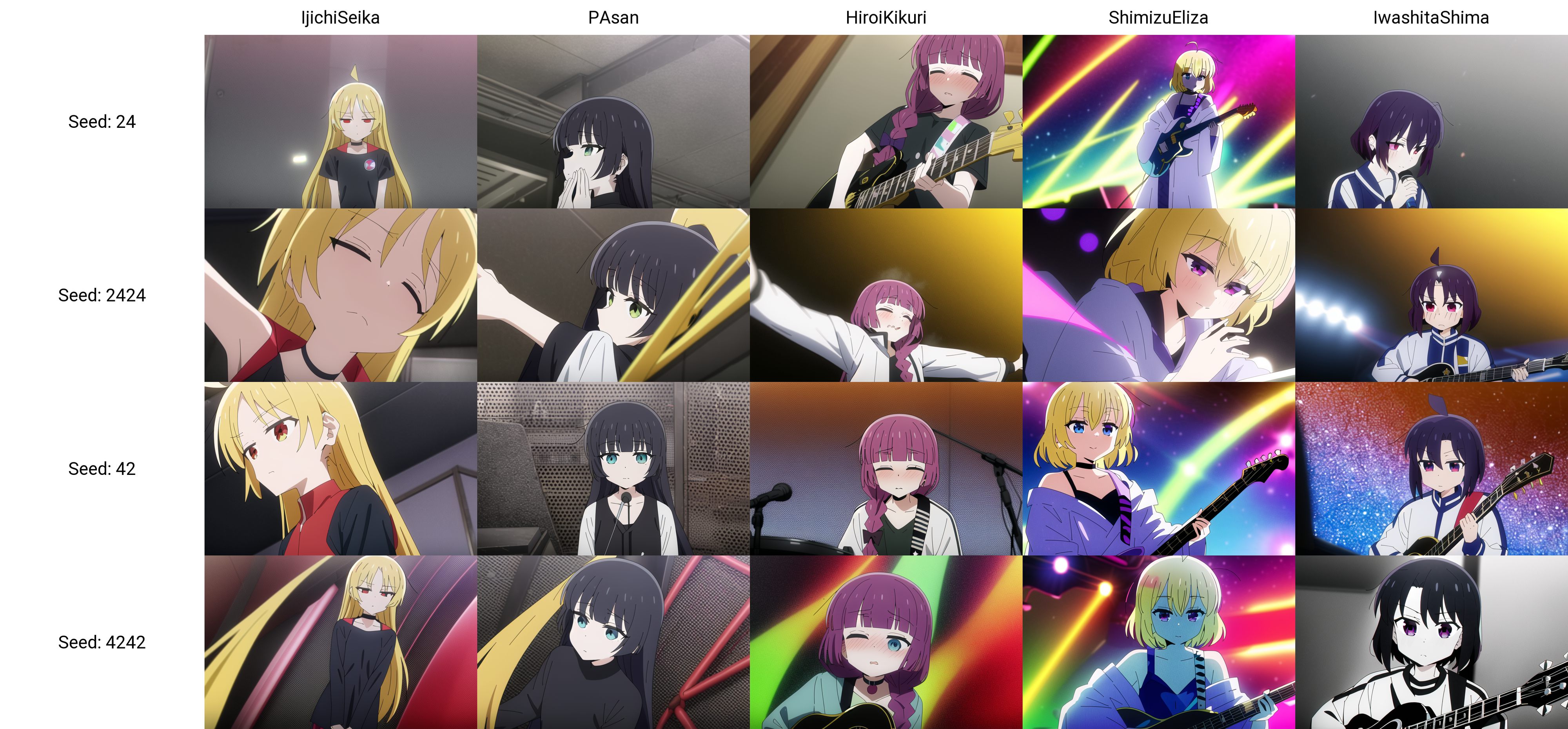

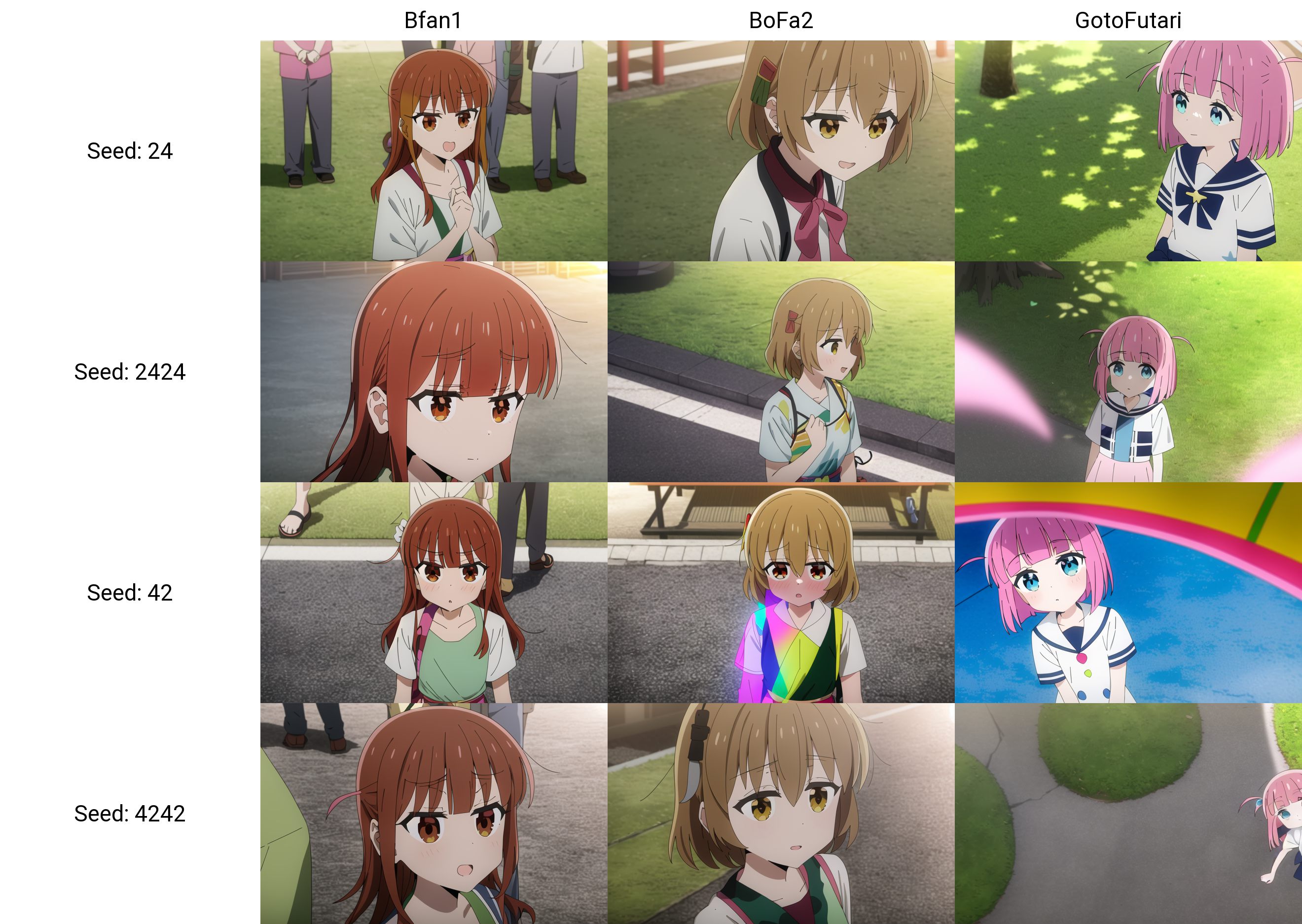

This is a low-quality bocchi-the-rock (ぼっち・ざ・ろっく!) character model.

Similar to my [yama-no-susume model](https://huggingface.co/alea31415/yama-no-susume), this model is capable of generating **multi-character scenes** beyond images of a single character.