| repo_id | author | model_type | files_per_repo | downloads_30d | library | likes | pipeline | pytorch | tensorflow | jax | license | languages | datasets | co2 | prs_count | prs_open | prs_merged | prs_closed | discussions_count | discussions_open | discussions_closed | tags | has_model_index | has_metadata | has_text | text_length | is_nc | readme | hash |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Rocketknight1/t5-small-finetuned-xsum

|

Rocketknight1

|

t5

| 17 | 57 |

transformers

| 0 |

text2text-generation

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,540 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Rocketknight1/t5-small-finetuned-xsum

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.7172

- Validation Loss: 2.3977

- Train Rouge1: 28.7469

- Train Rouge2: 7.9005

- Train Rougel: 22.5917

- Train Rougelsum: 22.6162

- Train Gen Len: 18.875

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Rouge1 | Train Rouge2 | Train Rougel | Train Rougelsum | Train Gen Len | Epoch |

|:----------:|:---------------:|:------------:|:------------:|:------------:|:---------------:|:-------------:|:-----:|

| 2.7172 | 2.3977 | 28.7469 | 7.9005 | 22.5917 | 22.6162 | 18.875 | 0 |

### Framework versions

- Transformers 4.16.0.dev0

- TensorFlow 2.8.0-rc0

- Datasets 1.17.0

- Tokenizers 0.11.0

|

d22e271ee72fb8695ef49c6fdbef0d80

|

sd-concepts-library/minecraft-concept-art

|

sd-concepts-library

| null | 9 | 0 | null | 10 | null | false | false | false |

mit

| null | null | null | 1 | 0 | 1 | 0 | 3 | 3 | 0 |

[]

| false | true | true | 1,068 | false |

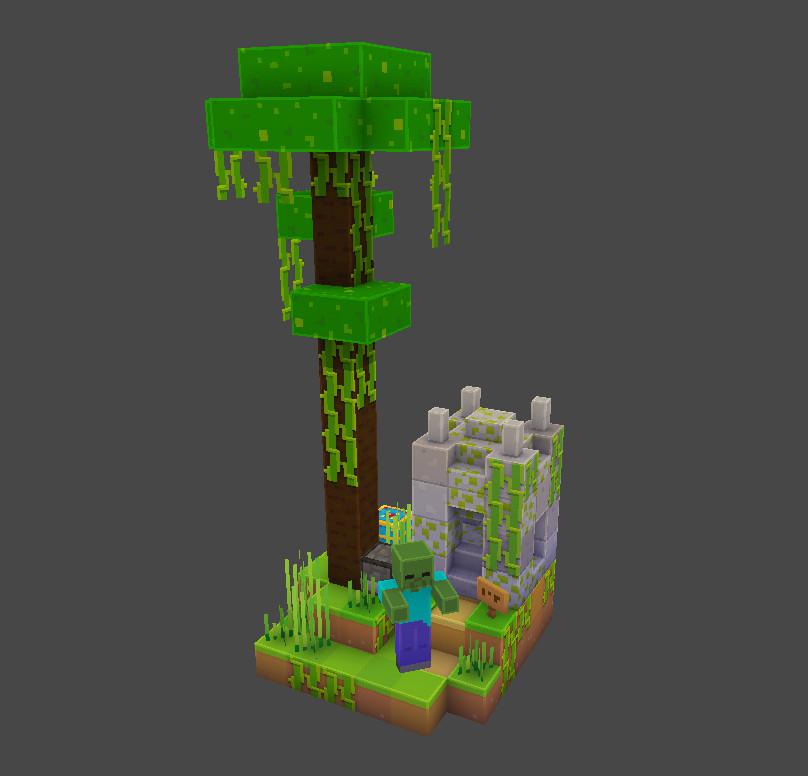

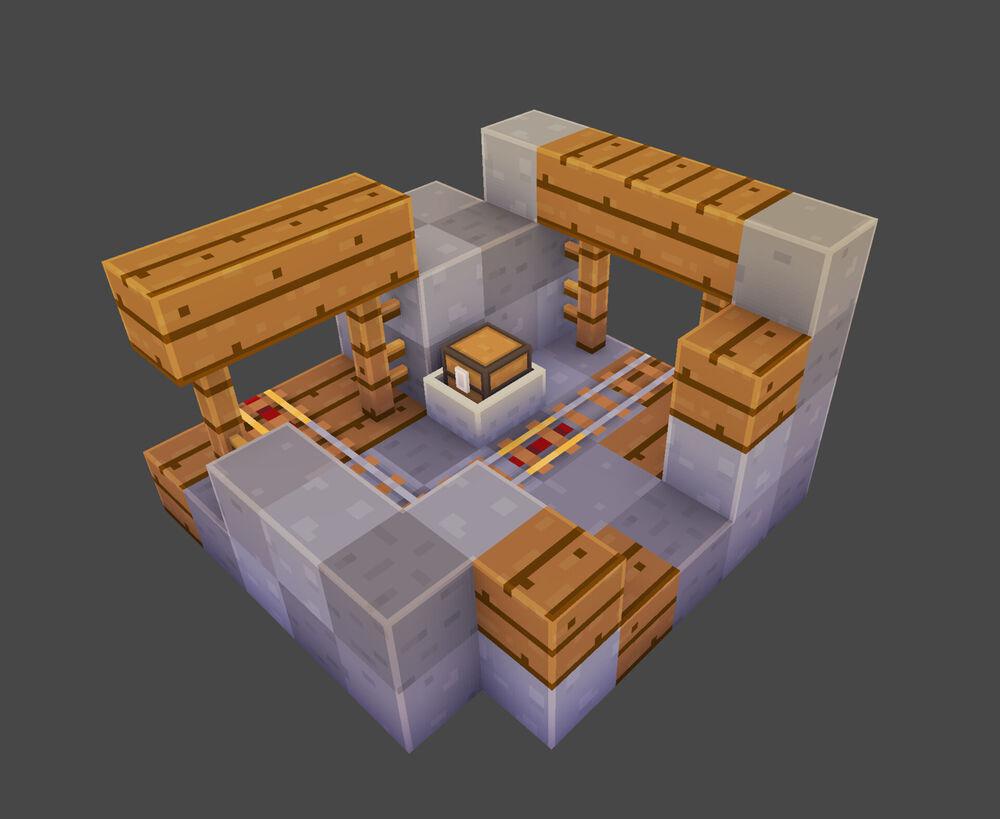

### minecraft-concept-art on Stable Diffusion

This is the `<concept>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

af1899c249e7ccf1d7876d477c93defa

|

fathyshalab/all-roberta-large-v1-meta-5-16-5

|

fathyshalab

|

roberta

| 11 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,507 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# all-roberta-large-v1-meta-5-16-5

This model is a fine-tuned version of [sentence-transformers/all-roberta-large-v1](https://huggingface.co/sentence-transformers/all-roberta-large-v1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.4797

- Accuracy: 0.28

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.7721 | 1.0 | 1 | 2.6529 | 0.1889 |

| 2.2569 | 2.0 | 2 | 2.5866 | 0.2333 |

| 1.9837 | 3.0 | 3 | 2.5340 | 0.2644 |

| 1.6425 | 4.0 | 4 | 2.4980 | 0.2756 |

| 1.4612 | 5.0 | 5 | 2.4797 | 0.28 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

9038d3f29e215d9c0a84d787510b65fb

|

lilykaw/distilbert-base-uncased-finetuned-stsb

|

lilykaw

|

distilbert

| 13 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,571 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-stsb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5634

- Pearson: 0.8680

- Spearmanr: 0.8652
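
The two correlation metrics reported above can be illustrated with a small standard-library sketch. This is not the code the Trainer used; the `gold` and `pred` scores below are made-up values chosen only to show how Pearson (linear agreement) and Spearman (rank agreement) are computed.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Hypothetical similarity scores on the STS-B 0-5 scale (illustration only).
gold = [0.0, 1.5, 2.0, 3.5, 5.0]
pred = [0.2, 1.0, 2.6, 3.0, 4.8]
print(pearson(gold, pred), spearman(gold, pred))
```

Because the made-up predictions preserve the ordering of the gold scores, the Spearman value is 1 even though the Pearson value is slightly lower.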

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5
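
As a rough illustration of what `lr_scheduler_type: linear` means for these settings, the sketch below computes the learning rate at a given optimizer step. It assumes zero warmup steps (the card does not list any) and takes the total of 1800 steps from the last row of the results table; the function name is hypothetical.

```python
def linear_lr(step, base_lr=2e-05, total_steps=1800, warmup_steps=0):
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr during warmup (unused when warmup_steps=0).
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the end of each epoch (360 steps per epoch in this run).
for step in range(0, 1801, 360):
    print(step, linear_lr(step))
```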

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson | Spearmanr |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:---------:|

| No log | 1.0 | 360 | 0.6646 | 0.8516 | 0.8494 |

| 1.0238 | 2.0 | 720 | 0.5617 | 0.8666 | 0.8637 |

| 0.3952 | 3.0 | 1080 | 0.6533 | 0.8649 | 0.8646 |

| 0.3952 | 4.0 | 1440 | 0.5889 | 0.8651 | 0.8625 |

| 0.2488 | 5.0 | 1800 | 0.5634 | 0.8680 | 0.8652 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

fff453a833641c7f90dac93cd69ca546

|

Kaludi/CSGO-Minimap-Layout-Generation

|

Kaludi

| null | 4 | 0 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['stable-diffusion', 'stable-diffusion-diffusers', 'text-to-image', 'art', 'artistic', 'diffusers', 'cs:go', 'topview', 'map generator', 'layout', 'layout generator', 'map', 'csgo', 'improved layout', 'radar']

| false | true | true | 2,862 | false |

# CSGO Minimap Layout Generation

This is an improved version of my previous model, trained on CS:GO radar top-view images of many maps. It can produce custom map layouts in seconds and, unlike the previous model, does not produce red or green boxes. The tag for this model is **"radar-topview"**. To get a layout similar to a specific map, add the map name before "radar-topview". For example, for a layout similar to dust2, write **"dust2-radar-topview"**.

**Try the following prompts to get the best results:**

"fps radar-topview game map, flat shading, soft shadows, global illumination"

"fps radar topview map, polygonal, gradient background, pastel colors, soft shadows, global illumination, straight lines, insanely detailed"

**Map Radar Topviews this AI was trained on:**

de_dust2

de_inferno

de_nuke

de_mirage

de_cache

de_train

de_cobblestone

de_castle

de_overpass
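
The tag convention described earlier (an optional map name placed before `radar-topview`) can be sketched as a tiny helper. The function name is hypothetical, and dropping the `de_` prefix is an assumption based on the card's `dust2-radar-topview` example.

```python
TAG = "radar-topview"


def layout_tag(map_name=None):
    """Return the model tag, optionally prefixed with a map name such as 'dust2'."""
    if not map_name:
        return TAG
    # Assumption: the 'de_' prefix is dropped, as in the card's dust2 example.
    if map_name.startswith("de_"):
        map_name = map_name[len("de_"):]
    return f"{map_name}-{TAG}"


prompt = f"fps {layout_tag('de_dust2')} game map, flat shading, soft shadows, global illumination"
print(prompt)
```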

**Have fun generating map layouts!**

### CompVis

[Download csgoTopViewMapLayout.ckpt (2.9GB)](https://huggingface.co/Kaludi/CSGO-Minimap-Layout-Generation/blob/main/csgoMiniMapLayoutsV2.ckpt)

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion Pipeline](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

```python

from diffusers import StableDiffusionPipeline, DPMSolverMultistepScheduler

import torch

prompt = "fps radar-topview game map, flat shading, soft shadows, global illumination"

model_id = "Kaludi/CSGO-Improved-Radar-Top-View-Map-Layouts"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

pipe = pipe.to("cuda")

image = pipe(prompt, num_inference_steps=30).images[0]

image.save("./result.jpg")

```

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

c35dc79f1bc6e8535c132d9d5a803570

|

malay-patel/bert-ww-finetuned-squad

|

malay-patel

|

bert

| 8 | 12 |

transformers

| 0 |

question-answering

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,755 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# malay-patel/bert-ww-finetuned-squad

This model is a fine-tuned version of [bert-large-cased-whole-word-masking-finetuned-squad](https://huggingface.co/bert-large-cased-whole-word-masking-finetuned-squad) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1766

- Train End Logits Accuracy: 0.9455

- Train Start Logits Accuracy: 0.9312

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 16638, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
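
To make the `PolynomialDecay` entry in the optimizer configuration concrete, here is a minimal plain-Python sketch of the schedule, assuming the usual non-cycling definition: the rate moves from the initial to the end value over `decay_steps`, following `(1 - step / decay_steps) ** power`. With `power=1.0` this is a straight line. The function name is hypothetical; the default values are the ones listed above.

```python
def polynomial_decay(step, initial_lr=2e-05, decay_steps=16638, end_lr=0.0, power=1.0):
    """Learning rate for a non-cycling polynomial decay schedule."""
    step = min(step, decay_steps)  # hold at end_lr once decay_steps is reached
    fraction = 1 - step / decay_steps
    return (initial_lr - end_lr) * fraction ** power + end_lr


for step in (0, 8319, 16638):
    print(step, polynomial_decay(step))
```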

### Training results

| Train Loss | Train End Logits Accuracy | Train Start Logits Accuracy | Epoch |

|:----------:|:-------------------------:|:---------------------------:|:-----:|

| 0.5635 | 0.8374 | 0.7992 | 0 |

| 0.3369 | 0.8987 | 0.8695 | 1 |

| 0.1766 | 0.9455 | 0.9312 | 2 |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.6.1

- Tokenizers 0.13.2

|

688a9cd20c5cff890a246aa62d1e77a1

|

din0s/bart-pt-asqa-ob

|

din0s

|

bart

| 11 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,666 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-pt-asqa-ob

This model is a fine-tuned version of [vblagoje/bart_lfqa](https://huggingface.co/vblagoje/bart_lfqa) on the [ASQA](https://huggingface.co/datasets/din0s/asqa) dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6901

- Rougelsum: 20.7527
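
For readers unfamiliar with the metric, the sketch below shows the core of ROUGE-L: an F-measure built on the longest common subsequence (LCS) of tokens, scaled to 0-100 as in the card. It is a simplification and not the evaluation code used here: Rougelsum additionally splits summaries into sentences, and real implementations apply their own tokenization. The example strings are made up.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    previous = [0] * (len(b) + 1)
    for x in a:
        current = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def rouge_l(reference, prediction):
    """Simplified ROUGE-L F-measure on whitespace tokens, scaled to 0-100."""
    ref_tokens = reference.lower().split()
    pred_tokens = prediction.lower().split()
    lcs = lcs_length(ref_tokens, pred_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 100 * 2 * precision * recall / (precision + recall)


print(rouge_l("the cat sat on the mat", "the cat lay on the mat"))
```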

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:---------:|

| No log | 1.0 | 355 | 1.6295 | 17.7502 |

| 1.6407 | 2.0 | 710 | 1.6144 | 18.5897 |

| 1.4645 | 3.0 | 1065 | 1.6222 | 19.3778 |

| 1.4645 | 4.0 | 1420 | 1.6522 | 19.6941 |

| 1.3678 | 5.0 | 1775 | 1.6528 | 20.3110 |

| 1.2671 | 6.0 | 2130 | 1.6879 | 20.6112 |

| 1.2671 | 7.0 | 2485 | 1.6901 | 20.7527 |

### Framework versions

- Transformers 4.23.0.dev0

- Pytorch 1.12.1+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

a50e35347134211d9bd0a2a3612ea460

|

victorialslocum/en_reciparse_model

|

victorialslocum

| null | 17 | 0 |

spacy

| 1 |

token-classification

| false | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['spacy', 'token-classification']

| false | true | true | 682 | false |

| Feature | Description |

| --- | --- |

| **Name** | `en_reciparse_model` |

| **Version** | `0.0.0` |

| **spaCy** | `>=3.3.1,<3.4.0` |

| **Default Pipeline** | `tok2vec`, `ner` |

| **Components** | `tok2vec`, `ner` |

| **Vectors** | 0 keys, 0 unique vectors (0 dimensions) |

| **Sources** | n/a |

| **License** | n/a |

| **Author** | [n/a]() |

### Label Scheme

<details>

<summary>View label scheme (1 labels for 1 components)</summary>

| Component | Labels |

| --- | --- |

| **`ner`** | `INGREDIENT` |

</details>

### Accuracy

| Type | Score |

| --- | --- |

| `ENTS_F` | 87.97 |

| `ENTS_P` | 88.54 |

| `ENTS_R` | 87.40 |

| `TOK2VEC_LOSS` | 37557.71 |

| `NER_LOSS` | 19408.65 |
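
The three entity scores in the table are related: `ENTS_F` is the harmonic mean of `ENTS_P` (precision) and `ENTS_R` (recall). A quick check with the values above, using a hypothetical helper name:

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (F1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values from the accuracy table; the result agrees with ENTS_F up to rounding.
print(f_score(88.54, 87.40))
```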

|

4132923bcfd606c7bf907e815c2794c6

|

atrevidoantonio/atrevidoantonio1

|

atrevidoantonio

| null | 19 | 3 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['text-to-image', 'stable-diffusion']

| false | true | true | 547 | false |

### atrevidoantonio1 Dreambooth model trained by atrevidoantonio with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:


|

6b281ecfc7463a797088ca8549287e2e

|

Galuh/wav2vec2-large-xlsr-indonesian

|

Galuh

|

wav2vec2

| 10 | 17 |

transformers

| 1 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['id']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week']

| true | true | true | 3,564 | false |

# Wav2Vec2-Large-XLSR-Indonesian

This is the model for Wav2Vec2-Large-XLSR-Indonesian, a fine-tuned

[facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

model on the [Indonesian Common Voice dataset](https://huggingface.co/datasets/common_voice).

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "id", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("Galuh/wav2vec2-large-xlsr-indonesian")
model = Wav2Vec2ForCTC.from_pretrained("Galuh/wav2vec2-large-xlsr-indonesian")

# Resample from 48 kHz (the rate this script assumes for the clips) to the 16 kHz the model expects.
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)
inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

# Greedy decoding: pick the most likely token for every audio frame.
predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset["sentence"][:2])

```
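
The `argmax` followed by `batch_decode` in the snippet above amounts to greedy CTC decoding: take the best token per frame, merge consecutive repeats, then drop the blank token. The sketch below shows that collapse rule in plain Python. The vocabulary, the blank id, and the frame sequence are hypothetical; the real processor uses its own vocabulary and word delimiter.

```python
def ctc_greedy_decode(frame_ids, id_to_char, blank_id=0):
    """Collapse repeated frame predictions and remove CTC blanks."""
    chars = []
    previous = None
    for token_id in frame_ids:
        # Emit a character only when the prediction changes and is not blank.
        if token_id != previous and token_id != blank_id:
            chars.append(id_to_char[token_id])
        previous = token_id
    return "".join(chars)


# Hypothetical vocabulary and per-frame argmax ids (illustration only).
vocab = {0: "<pad>", 1: "a", 2: "p", 3: "i", 4: " "}
frames = [1, 1, 0, 2, 2, 0, 1, 0, 4, 4, 1, 2, 0, 3, 3]
print(ctc_greedy_decode(frames, vocab))
```

A blank between two identical tokens keeps them as separate characters, which is how CTC can output doubled letters.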

## Evaluation

The model can be evaluated as follows on the Indonesian test data of Common Voice.

```python

import re

import torch
import torchaudio
from datasets import load_dataset, load_metric
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "id", split="test")
wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("Galuh/wav2vec2-large-xlsr-indonesian")
model = Wav2Vec2ForCTC.from_pretrained("Galuh/wav2vec2-large-xlsr-indonesian")
model.to("cuda")

# Punctuation stripped from the reference sentences before scoring.
chars_to_ignore_regex = r'[\,\?\.\!\-\;\:\"\“\%\‘\'\”\�]'

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    # Resample from the file's own sampling rate to the 16 kHz the model expects.
    resampler = torchaudio.transforms.Resample(sampling_rate, 16_000)
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run the model on a batch and decode the predictions with greedy (argmax) decoding.
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 18.32 %
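
For reference, word error rate (WER) is the word-level edit distance between prediction and reference, divided by the number of reference words. The sketch below is a standard-library illustration, not the `wer` metric implementation used in the script above; it assumes the corpus-level convention of summing errors and reference words over all sentences, and the example sentences are made up.

```python
def edit_distance(ref, hyp):
    """Minimum number of word substitutions, insertions, and deletions."""
    previous = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        current = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # match or substitution
        previous = current
    return previous[-1]


def word_error_rate(references, predictions):
    """Corpus-level WER: total word errors divided by total reference words."""
    total_errors = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        ref_words, hyp_words = ref.split(), hyp.split()
        total_errors += edit_distance(ref_words, hyp_words)
        total_words += len(ref_words)
    return total_errors / total_words


# Made-up sentences: one deleted word out of six reference words.
refs = ["saya suka makan nasi", "selamat pagi"]
preds = ["saya suka nasi", "selamat pagi"]
print("WER: {:.2f}".format(100 * word_error_rate(refs, preds)))
```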

## Training

The Common Voice `train`, `validation`, and ... datasets were used for training as well as ... and ... # TODO

The script used for training can be found [here](https://github.com/galuhsahid/wav2vec2-indonesian)

(will be available soon)

|

c63205a35019efa5adc37820a4fd9adc

|

Laurie/QA-distilbert

|

Laurie

|

distilbert

| 12 | 6 |

transformers

| 0 |

question-answering

| true | false | false |

apache-2.0

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,247 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# QA-distilbert

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5374

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 250 | 2.0457 |

| 2.5775 | 2.0 | 500 | 1.6041 |

| 2.5775 | 3.0 | 750 | 1.5374 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

b9f4473ce48b0e85a08b0732c91e7d5a

|

sd-concepts-library/egorey

|

sd-concepts-library

| null | 9 | 0 | null | 1 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 983 | false |

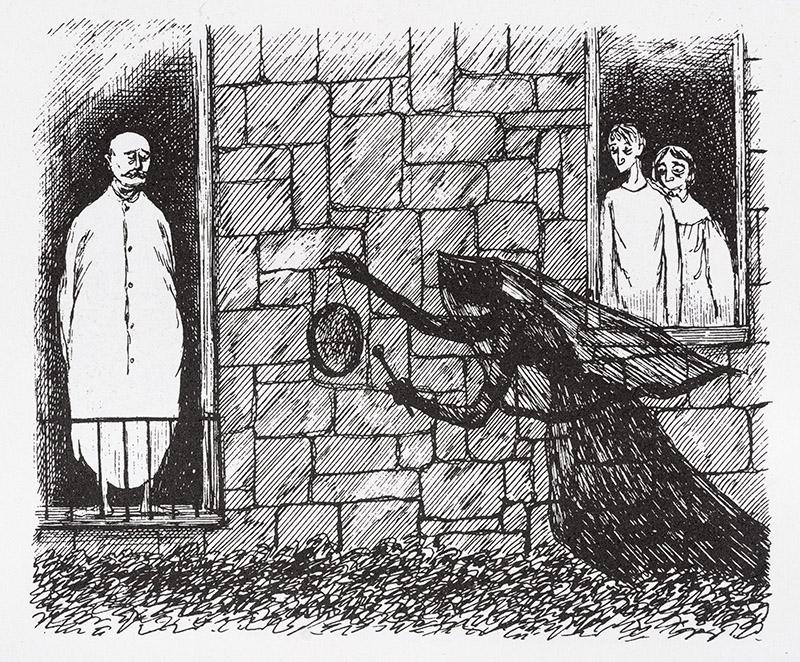

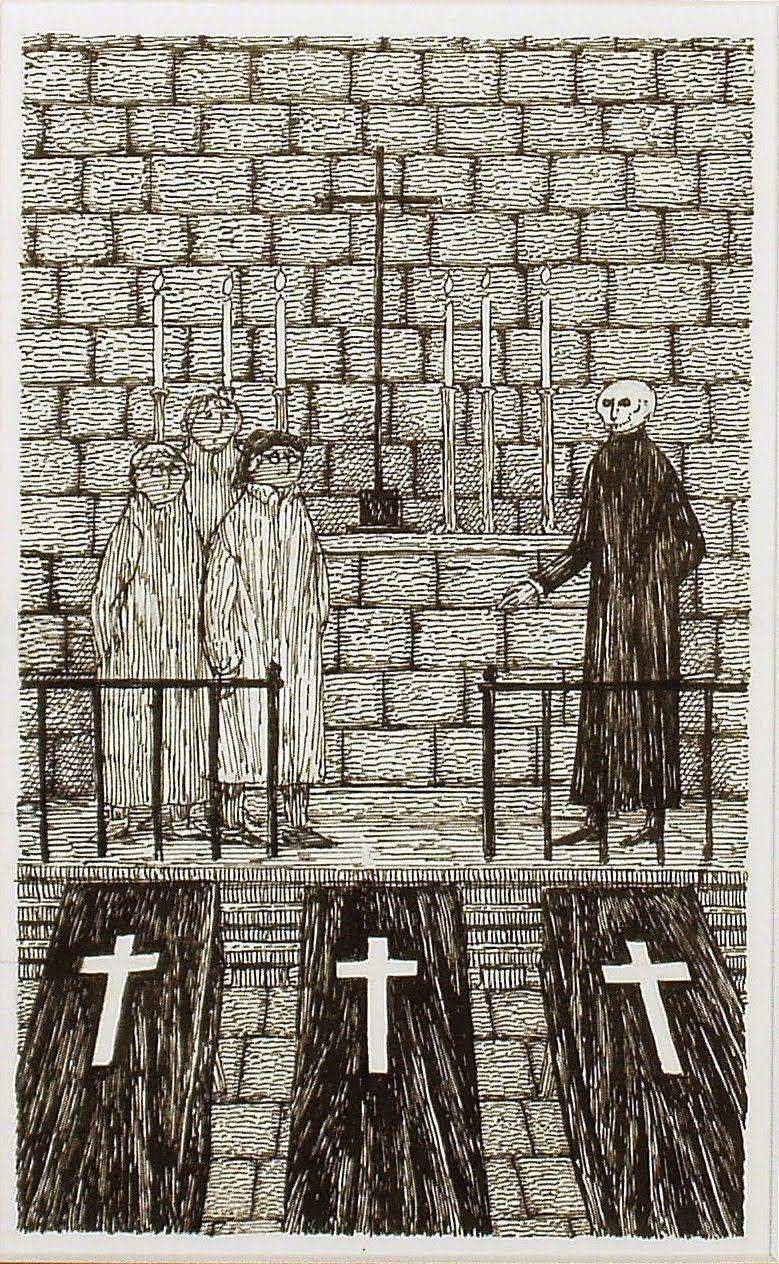

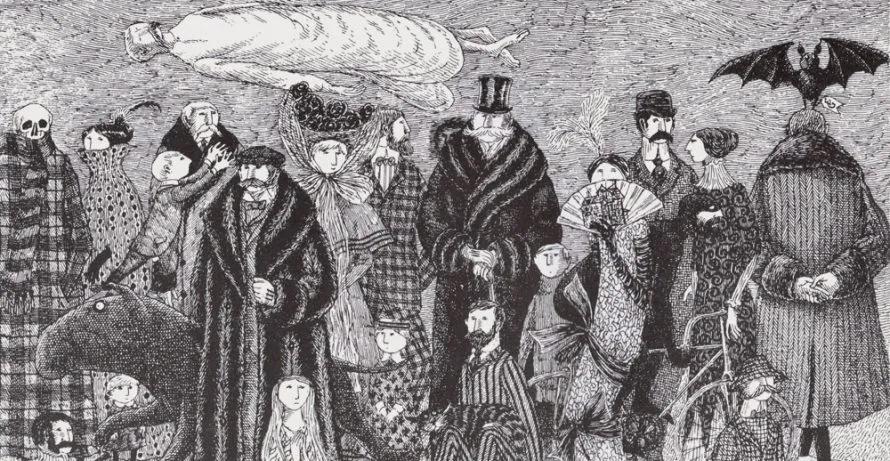

### egorey on Stable Diffusion

This is the `<gorey>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

4a95ca226c23146a101b89302f943109

|

Helsinki-NLP/opus-mt-lu-en

|

Helsinki-NLP

|

marian

| 10 | 21 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 768 | false |

### opus-mt-lu-en

* source languages: lu

* target languages: en

* OPUS readme: [lu-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/lu-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/lu-en/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/lu-en/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/lu-en/opus-2020-01-09.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.lu.en | 35.7 | 0.517 |

|

4fccd7c0c1791292dad81713619a3c2b

|

cansen88/PromptGenerator_5_topic

|

cansen88

|

gpt2

| 9 | 2 |

transformers

| 0 |

text-generation

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,660 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# PromptGenerator_5_topic

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 10.6848

- Validation Loss: 10.6672

- Epoch: 4

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 5e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5e-05, 'decay_steps': -999, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 10.6864 | 10.6743 | 0 |

| 10.7045 | 10.6736 | 1 |

| 10.7114 | 10.6722 | 2 |

| 10.7082 | 10.6701 | 3 |

| 10.6848 | 10.6672 | 4 |

### Framework versions

- Transformers 4.21.1

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

433f773202ece90975fedac31e78cf2b

|

gokuls/mobilebert_sa_GLUE_Experiment_logit_kd_mrpc_128

|

gokuls

|

mobilebert

| 17 | 2 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,542 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_sa_GLUE_Experiment_logit_kd_mrpc_128

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE MRPC dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5213

- Accuracy: 0.6740

- F1: 0.7787

- Combined Score: 0.7264
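
The Combined Score appears to be the plain average of the other task metrics (here Accuracy and F1); that reading is an assumption, but it agrees with the numbers reported in this card. A quick check, using a hypothetical helper name:

```python
def combined_score(metrics):
    """Arithmetic mean of the metric values."""
    return sum(metrics.values()) / len(metrics)


# Final evaluation values from this card.
final = combined_score({"accuracy": 0.6740, "f1": 0.7787})
# Values from the first row of the training results table.
first_epoch = combined_score({"accuracy": 0.6838, "f1": 0.8122})
print(final, first_epoch)
```

Both results agree with the reported 0.7264 and 0.7480 up to rounding of the inputs.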

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Combined Score |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:--------------:|

| 0.6368 | 1.0 | 29 | 0.5564 | 0.6838 | 0.8122 | 0.7480 |

| 0.6099 | 2.0 | 58 | 0.5557 | 0.6838 | 0.8122 | 0.7480 |

| 0.611 | 3.0 | 87 | 0.5555 | 0.6838 | 0.8122 | 0.7480 |

| 0.6101 | 4.0 | 116 | 0.5568 | 0.6838 | 0.8122 | 0.7480 |

| 0.608 | 5.0 | 145 | 0.5540 | 0.6838 | 0.8122 | 0.7480 |

| 0.6037 | 6.0 | 174 | 0.5492 | 0.6838 | 0.8122 | 0.7480 |

| 0.5761 | 7.0 | 203 | 0.6065 | 0.6103 | 0.6851 | 0.6477 |

| 0.4782 | 8.0 | 232 | 0.5341 | 0.6863 | 0.7801 | 0.7332 |

| 0.4111 | 9.0 | 261 | 0.5213 | 0.6740 | 0.7787 | 0.7264 |

| 0.3526 | 10.0 | 290 | 0.5792 | 0.6863 | 0.7867 | 0.7365 |

| 0.3188 | 11.0 | 319 | 0.5760 | 0.6936 | 0.7764 | 0.7350 |

| 0.2918 | 12.0 | 348 | 0.6406 | 0.6912 | 0.7879 | 0.7395 |

| 0.2568 | 13.0 | 377 | 0.5908 | 0.6765 | 0.7537 | 0.7151 |

| 0.2472 | 14.0 | 406 | 0.5966 | 0.6863 | 0.7664 | 0.7263 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

|

1727529bd7b09a7b42603eb5fafcd210

|

michelecafagna26/vinvl-base-finetuned-hl-scenes-image-captioning

|

michelecafagna26

|

bert

| 7 | 2 |

pytorch

| 0 |

image-to-text

| true | false | false |

apache-2.0

| null |

['hl-scenes']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['pytorch', 'image-to-text']

| false | true | true | 3,732 | false |

# Model Card: VinVL for Captioning 🖼️

[Microsoft's VinVL](https://github.com/microsoft/Oscar) base fine-tuned on the [HL-scenes]() dataset for the **scene description generation** downstream task.

# Model fine-tuning 🏋️

The model has been finetuned for 10 epochs on the scenes captions of the [HL]() dataset (available on 🤗 HUB: [michelecafagna26/hl](https://huggingface.co/datasets/michelecafagna26/hl))

# Test set metrics 📈

Obtained with beam size 5 and max length 20

| Bleu-1 | Bleu-2 | Bleu-3 | Bleu-4 | METEOR | ROUGE-L | CIDEr | SPICE |

|--------|--------|--------|--------|--------|---------|-------|-------|

| 0.68 | 0.55 | 0.45 | 0.36 | 0.36 | 0.63 | 1.42 | 0.40 |

# Usage and Installation:

More info about how to install and use this model can be found here: [michelecafagna26/VinVL](https://github.com/michelecafagna26/VinVL)

# Feature extraction ⛏️

This model has a separate visual backbone used to extract features.

More info about:

- the model: [michelecafagna26/vinvl_vg_x152c4](https://huggingface.co/michelecafagna26/vinvl_vg_x152c4)

- the usage: [michelecafagna26/vinvl-visualbackbone](https://github.com/michelecafagna26/vinvl-visualbackbone)

# Quick start: 🚀

```python

import torch

from transformers.pytorch_transformers import BertConfig, BertTokenizer

from oscar.modeling.modeling_bert import BertForImageCaptioning

from oscar.wrappers import OscarTensorizer

ckpt = "path/to/the/checkpoint"

device = "cuda" if torch.cuda.is_available() else "cpu"

# original code

config = BertConfig.from_pretrained(ckpt)

tokenizer = BertTokenizer.from_pretrained(ckpt)

model = BertForImageCaptioning.from_pretrained(ckpt, config=config).to(device)

# This takes care of the preprocessing

tensorizer = OscarTensorizer(tokenizer=tokenizer, device=device)

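# feat_obj is assumed to be a NumPy array of shape (num_boxes, feat_size) produced by the
# feature extractor (it is not defined in this snippet); unsqueeze(0) adds the batch
# dimension, giving shape (1, num_boxes, feat_size).
# feat_size is 2054 by default in VinVL
visual_features = torch.from_numpy(feat_obj).to(device).unsqueeze(0)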

# labels are usually extracted by the features extractor

labels = [['boat', 'boat', 'boat', 'bottom', 'bush', 'coat', 'deck', 'deck', 'deck', 'dock', 'hair', 'jacket']]

inputs = tensorizer.encode(visual_features, labels=labels)

outputs = model(**inputs)

pred = tensorizer.decode(outputs)

# the output looks like this:

# pred = {0: [{'caption': 'in a library', 'conf': 0.7070220112800598}]}

```

# Citations 🧾

VinVL model finetuned on scenes descriptions:

```BibTeX

@inproceedings{cafagna-etal-2022-understanding,

title = "Understanding Cross-modal Interactions in {V}{\&}{L} Models that Generate Scene Descriptions",

author = "Cafagna, Michele and

Deemter, Kees van and

Gatt, Albert",

booktitle = "Proceedings of the Workshop on Unimodal and Multimodal Induction of Linguistic Structures (UM-IoS)",

month = dec,

year = "2022",

address = "Abu Dhabi, United Arab Emirates (Hybrid)",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.umios-1.6",

pages = "56--72",

abstract = "Image captioning models tend to describe images in an object-centric way, emphasising visible objects. But image descriptions can also abstract away from objects and describe the type of scene depicted. In this paper, we explore the potential of a state of the art Vision and Language model, VinVL, to caption images at the scene level using (1) a novel dataset which pairs images with both object-centric and scene descriptions. Through (2) an in-depth analysis of the effect of the fine-tuning, we show (3) that a small amount of curated data suffices to generate scene descriptions without losing the capability to identify object-level concepts in the scene; the model acquires a more holistic view of the image compared to when object-centric descriptions are generated. We discuss the parallels between these results and insights from computational and cognitive science research on scene perception.",

}

```

Please consider citing the original project and the VinVL paper:

```BibTeX

@misc{han2021image,

title={Image Scene Graph Generation (SGG) Benchmark},

author={Xiaotian Han and Jianwei Yang and Houdong Hu and Lei Zhang and Jianfeng Gao and Pengchuan Zhang},

year={2021},

eprint={2107.12604},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{zhang2021vinvl,

title={Vinvl: Revisiting visual representations in vision-language models},

author={Zhang, Pengchuan and Li, Xiujun and Hu, Xiaowei and Yang, Jianwei and Zhang, Lei and Wang, Lijuan and Choi, Yejin and Gao, Jianfeng},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={5579--5588},

year={2021}

}

```

|

7fc92156e3ffaa858280464c323623be

|

Buntopsih/novgoranstefanovski

|

Buntopsih

| null | 24 | 4 |

diffusers

| 2 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 3 | 2 | 1 | 0 | 0 | 0 | 0 |

['text-to-image']

| false | true | true | 2,202 | false |

### novgoranstefanovski on Stable Diffusion via Dreambooth trained on the [fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

#### Model by Buntopsih

This is the Stable Diffusion model fine-tuned on the novgoranstefanovski concept, taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt(s)`: **Robert, retro, Greg, Kim**

You can also train your own concepts and upload them to the library by using [the fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb).

You can run your new concept via A1111 Colab: [Fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Sample pictures of this concept:

Kim

Greg

retro

Robert

|

3a5af9f94fc3ee5b28a9663f52434c62

|

TransQuest/microtransquest-en_cs-it-smt

|

TransQuest

|

xlm-roberta

| 12 | 25 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

|

['en-cs']

| null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['Quality Estimation', 'microtransquest']

| false | true | true | 5,279 | false |

# TransQuest: Translation Quality Estimation with Cross-lingual Transformers

The goal of quality estimation (QE) is to evaluate the quality of a translation without having access to a reference translation. High-accuracy QE that can be easily deployed for a number of language pairs is the missing piece in many commercial translation workflows, where it has numerous potential uses. QE systems can be employed to select the best translation when several translation engines are available, or to inform the end user about the reliability of automatically translated content. In addition, they can be used to decide whether a translation can be published as it is in a given context, or whether it requires human post-editing before publishing or translation from scratch by a human. Quality estimation can be done at different levels: document level, sentence level and word level.

With TransQuest, we have open-sourced our research in translation quality estimation, which also won the sentence-level direct assessment quality estimation shared task in [WMT 2020](http://www.statmt.org/wmt20/quality-estimation-task.html). TransQuest outperforms current open-source quality estimation frameworks such as [OpenKiwi](https://github.com/Unbabel/OpenKiwi) and [DeepQuest](https://github.com/sheffieldnlp/deepQuest).

## Features

- Sentence-level translation quality estimation on both aspects: predicting post-editing effort and direct assessment.

- Word-level translation quality estimation capable of predicting quality of source words, target words and target gaps.

- Outperforms current state-of-the-art quality estimation methods like DeepQuest and OpenKiwi in all the languages experimented with.

- Pre-trained quality estimation models for fifteen language pairs are available on [HuggingFace](https://huggingface.co/TransQuest).

## Installation

### From pip

```bash

pip install transquest

```

### From Source

```bash

git clone https://github.com/TharinduDR/TransQuest.git

cd TransQuest

pip install -r requirements.txt

```

## Using Pre-trained Models

```python

from transquest.algo.word_level.microtransquest.run_model import MicroTransQuestModel

import torch

model = MicroTransQuestModel("xlmroberta", "TransQuest/microtransquest-en_cs-it-smt", labels=["OK", "BAD"], use_cuda=torch.cuda.is_available())

source_tags, target_tags = model.predict([["if not , you may not be protected against the diseases . ", "ja tā nav , Jūs varat nepasargāt no slimībām . "]])

```

## Documentation

For more details follow the documentation.

1. **[Installation](https://tharindudr.github.io/TransQuest/install/)** - Install TransQuest locally using pip.

2. **Architectures** - Check out the architectures implemented in TransQuest

1. [Sentence-level Architectures](https://tharindudr.github.io/TransQuest/architectures/sentence_level_architectures/) - We have released two architectures; MonoTransQuest and SiameseTransQuest to perform sentence level quality estimation.

2. [Word-level Architecture](https://tharindudr.github.io/TransQuest/architectures/word_level_architecture/) - We have released MicroTransQuest to perform word level quality estimation.

3. **Examples** - We have provided several examples on how to use TransQuest in recent WMT quality estimation shared tasks.

1. [Sentence-level Examples](https://tharindudr.github.io/TransQuest/examples/sentence_level_examples/)

2. [Word-level Examples](https://tharindudr.github.io/TransQuest/examples/word_level_examples/)

4. **Pre-trained Models** - We have provided pretrained quality estimation models for fifteen language pairs covering both sentence-level and word-level

1. [Sentence-level Models](https://tharindudr.github.io/TransQuest/models/sentence_level_pretrained/)

2. [Word-level Models](https://tharindudr.github.io/TransQuest/models/word_level_pretrained/)

5. **[Contact](https://tharindudr.github.io/TransQuest/contact/)** - Contact us for any issues with TransQuest

## Citations

If you are using the word-level architecture, please consider citing this paper, which was accepted to [ACL 2021](https://2021.aclweb.org/).

```bibtex

@InProceedings{ranasinghe2021,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {An Exploratory Analysis of Multilingual Word Level Quality Estimation with Cross-Lingual Transformers},

booktitle = {Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics},

year = {2021}

}

```

If you are using the sentence-level architectures, please consider citing these papers, which were presented at [COLING 2020](https://coling2020.org/) and at [WMT 2020](http://www.statmt.org/wmt20/) at EMNLP 2020.

```bibtex

@InProceedings{transquest:2020a,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {TransQuest: Translation Quality Estimation with Cross-lingual Transformers},

booktitle = {Proceedings of the 28th International Conference on Computational Linguistics},

year = {2020}

}

```

```bibtex

@InProceedings{transquest:2020b,

author = {Ranasinghe, Tharindu and Orasan, Constantin and Mitkov, Ruslan},

title = {TransQuest at WMT2020: Sentence-Level Direct Assessment},

booktitle = {Proceedings of the Fifth Conference on Machine Translation},

year = {2020}

}

```

|

3a52e3637150656d7cdbdba244001f9f

|

bigmorning/whisper3_0005

|

bigmorning

|

whisper

| 7 | 6 |

transformers

| 0 |

automatic-speech-recognition

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,666 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# whisper3_0005

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 3.1592

- Train Accuracy: 0.0175

- Validation Loss: 2.8062

- Validation Accuracy: 0.0199

- Epoch: 4

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 1e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Train Accuracy | Validation Loss | Validation Accuracy | Epoch |

|:----------:|:--------------:|:---------------:|:-------------------:|:-----:|

| 5.0832 | 0.0116 | 4.4298 | 0.0124 | 0 |

| 4.3130 | 0.0131 | 4.0733 | 0.0141 | 1 |

| 3.9211 | 0.0146 | 3.6762 | 0.0157 | 2 |

| 3.5505 | 0.0159 | 3.3453 | 0.0171 | 3 |

| 3.1592 | 0.0175 | 2.8062 | 0.0199 | 4 |

### Framework versions

- Transformers 4.25.0.dev0

- TensorFlow 2.9.2

- Datasets 2.6.1

- Tokenizers 0.13.2

|

d7b5bbd9e44a9aa32a20e3c4586cd78c

|

IIIT-L/xlm-roberta-large-finetuned-TRAC-DS

|

IIIT-L

|

xlm-roberta

| 9 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,553 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-large-finetuned-TRAC-DS

This model is a fine-tuned version of [xlm-roberta-large](https://huggingface.co/xlm-roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0992

- Accuracy: 0.3342

- Precision: 0.1114

- Recall: 0.3333

- F1: 0.1670

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.1187640010910775e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 1.1358 | 0.25 | 612 | 1.1003 | 0.4436 | 0.1479 | 0.3333 | 0.2049 |

| 1.1199 | 0.5 | 1224 | 1.1130 | 0.4436 | 0.1479 | 0.3333 | 0.2049 |

| 1.1221 | 0.75 | 1836 | 1.0992 | 0.3342 | 0.1114 | 0.3333 | 0.1670 |
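The precision, recall, and F1 values in this table are consistent with a classifier that assigns every example to one class, in a three-class task with macro-averaged metrics. This is an inference from the reported numbers, not something the card states: the three-class setup and the macro averaging are both assumptions. A minimal sketch of the arithmetic:

```python
def single_class_macro_metrics(accuracy, num_classes=3):
    """Macro precision/recall/F1 when every example gets the same predicted label.

    Assumption: the predicted class's share of the data equals `accuracy`.
    """
    # Only the predicted class has non-zero precision and recall.
    precision_pred = accuracy  # fraction of predictions that are correct
    recall_pred = 1.0          # every example of that class is retrieved
    f1_pred = 2 * precision_pred * recall_pred / (precision_pred + recall_pred)
    # Macro averaging divides by the class count; the other classes contribute 0.
    return (
        precision_pred / num_classes,
        recall_pred / num_classes,
        f1_pred / num_classes,
    )


for acc in (0.4436, 0.3342):
    p, r, f1 = single_class_macro_metrics(acc)
    print(f"accuracy={acc:.4f} precision={p:.4f} recall={r:.4f} f1={f1:.4f}")
```

If that reading is right, the validation loss near 1.0986 (the natural log of 3) points the same way: the model's output would be close to a uniform guess over three labels.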

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.1+cu111

- Datasets 2.3.2

- Tokenizers 0.12.1

|

84e6d183a4835383ef011feb92b8f9e5

|

corgito/finetuning-sentiment-model-3000-samples

|

corgito

|

distilbert

| 13 | 13 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['imdb']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,053 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3105

- Accuracy: 0.87

- F1: 0.8713

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.19.4

- Pytorch 1.11.0+cu113

- Datasets 2.3.0

- Tokenizers 0.12.1

|

f4085a13da58234da67dd50fab56902a

|

Helsinki-NLP/opus-mt-nso-fi

|

Helsinki-NLP

|

marian

| 10 | 7 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 776 | false |

### opus-mt-nso-fi

* source languages: nso

* target languages: fi

* OPUS readme: [nso-fi](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/nso-fi/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/nso-fi/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/nso-fi/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/nso-fi/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.nso.fi | 27.8 | 0.523 |

|

07e018d5d7947ac8997cd932114efa7e

|

NbAiLab/nb-gpt-j-6B

|

NbAiLab

|

gptj

| 10 | 304 |

transformers

| 8 |

text-generation

| true | false | false |

apache-2.0

|

['no', 'nb', 'nn']

|

['NbAiLab/NCC', 'mc4', 'oscar']

| null | 0 | 0 | 0 | 0 | 2 | 1 | 1 |

['pytorch', 'causal-lm']

| false | true | true | 7,744 | false |

- **Release ✨v1✨** (January 18th, 2023) *[Full-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1), [sharded](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1-sharded), [half-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1-float16), and [mesh-transformers-jax](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1-mesh) weights*

<details><summary>All checkpoints</summary>

- **Release v1beta5** (December 18th, 2022) *[Full-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta5), [sharded](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta5-sharded), and [half-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta5-float16) weights*

- **Release v1beta4** (October 28th, 2022) *[Full-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta4), [sharded](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta4-sharded), and [half-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta4-float16) weights*

- **Release v1beta3** (August 8th, 2022) *[Full-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta3), [sharded](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta3-sharded), and [half-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta3-float16) weights*

- **Release v1beta2** (June 18th, 2022) *[Full-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta2), [sharded](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/sharded), and [half-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta2-float16) weights*

- **Release v1beta1** (April 28th, 2022) *[Half-precision](https://huggingface.co/NbAiLab/nb-gpt-j-6B/tree/v1beta1-float16) weights*

</details>

# NB-GPT-J-6B

## Demo: https://ai.nb.no/demo/nb-gpt-j-6B/ (Be patient, it runs on CPU 😅)

## Model Description

NB-GPT-J-6B is a Norwegian finetuned version of GPT-J 6B, a transformer model trained using Ben Wang's [Mesh Transformer JAX](https://github.com/kingoflolz/mesh-transformer-jax/). "GPT-J" refers to the class of model, while "6B" represents the number of trainable parameters (6 billion parameters).

<figure>

| Hyperparameter | Value |

|----------------------|------------|

| \\(n_{parameters}\\) | 6053381344 |

| \\(n_{layers}\\) | 28* |

| \\(d_{model}\\) | 4096 |

| \\(d_{ff}\\) | 16384 |

| \\(n_{heads}\\) | 16 |

| \\(d_{head}\\) | 256 |

| \\(n_{ctx}\\) | 2048 |

| \\(n_{vocab}\\) | 50257/50400† (same tokenizer as GPT-2/3) |

| Positional Encoding | [Rotary Position Embedding (RoPE)](https://arxiv.org/abs/2104.09864) |

| RoPE Dimensions | [64](https://github.com/kingoflolz/mesh-transformer-jax/blob/f2aa66e0925de6593dcbb70e72399b97b4130482/mesh_transformer/layers.py#L223) |

<figcaption><p><strong>*</strong> Each layer consists of one feedforward block and one self attention block.</p>

<p><strong>†</strong> Although the embedding matrix has a size of 50400, only 50257 entries are used by the GPT-2 tokenizer.</p></figcaption></figure>

The model consists of 28 layers with a model dimension of 4096, and a feedforward dimension of 16384. The model

dimension is split into 16 heads, each with a dimension of 256. Rotary Position Embedding (RoPE) is applied to 64

dimensions of each head. The model is trained with a tokenization vocabulary of 50257, using the same set of BPEs as

GPT-2/GPT-3.
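The relationships between the hyperparameters can be checked with a few lines of arithmetic. The sketch below only uses values copied from the table; the variable names are illustrative.

```python
# Values copied from the hyperparameter table above.
d_model = 4096
n_heads = 16
d_head = 256
d_ff = 16384
rope_dims = 64

# The model dimension is split evenly across the attention heads.
assert n_heads * d_head == d_model

# Ratio of the feedforward dimension to the model dimension.
ff_ratio = d_ff // d_model

# Share of each head's dimensions that RoPE is applied to.
rope_fraction = rope_dims / d_head

print(ff_ratio, rope_fraction)
```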

## Training data

NB-GPT-J-6B was finetuned on [NCC](https://huggingface.co/datasets/NbAiLab/NCC), the Norwegian Colossal Corpus, plus other Internet sources like Wikipedia, mC4, and OSCAR.

## Training procedure

This model was finetuned for 130 billion tokens over 1,000,000 steps on a TPU v3-8 VM. It was trained as an autoregressive language model, using cross-entropy loss to maximize the likelihood of predicting the next token correctly.
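As a rough sanity check on these figures, the tokens processed per step can be derived from the two totals. The sketch assumes every training sequence fills the full 2048-token context, which the card does not state, and treats the reported totals as rounded values.

```python
total_tokens = 130_000_000_000   # "130 billion tokens"
steps = 1_000_000                # "1,000,000 steps"
context_length = 2048            # n_ctx from the hyperparameter table

tokens_per_step = total_tokens / steps
# Assumption: each sequence in a batch is a full context window.
sequences_per_step = tokens_per_step / context_length

print(tokens_per_step, sequences_per_step)
```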

## Intended Use and Limitations

NB-GPT-J-6B learns an inner representation of the Norwegian language that can be used to extract features useful for downstream tasks. However, the model is best at what it was pretrained for, which is generating text from a prompt.

### How to use

This model can be easily loaded using the `AutoModelForCausalLM` functionality:

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("NbAiLab/nb-gpt-j-6B")

model = AutoModelForCausalLM.from_pretrained("NbAiLab/nb-gpt-j-6B")

```

### Limitations and Biases

As with the original GPT-J model, the core functionality of NB-GPT-J-6B is taking a string of text and predicting the next token. While language models are widely used for tasks other than this, there are a lot of unknowns with this work. When prompting NB-GPT-J-6B it is important to remember that the statistically most likely next token is often not the token that produces the most "accurate" text. Never depend upon NB-GPT-J-6B to produce factually accurate output.

The original GPT-J was trained on the Pile, a dataset known to contain profanity, lewd, and otherwise abrasive language. Depending upon use case GPT-J may produce socially unacceptable text. See [Sections 5 and 6 of the Pile paper](https://arxiv.org/abs/2101.00027) for a more detailed analysis of the biases in the Pile. A fine-grained analysis of the bias contained in the corpus used for fine-tuning is still pending.

As with all language models, it is hard to predict in advance how NB-GPT-J-6B will respond to particular prompts and offensive content may occur without warning. We recommend having a human curate or filter the outputs before releasing them, both to censor undesirable content and to improve the quality of the results.

## Evaluation results

We still have to find proper datasets to evaluate the model, so help is welcome!

## Citation and Related Information

### BibTeX entry

To cite this model or the corpus used:

```bibtex

@inproceedings{kummervold2021operationalizing,

title={Operationalizing a National Digital Library: The Case for a Norwegian Transformer Model},

author={Kummervold, Per E and De la Rosa, Javier and Wetjen, Freddy and Brygfjeld, Svein Arne},

booktitle={Proceedings of the 23rd Nordic Conference on Computational Linguistics (NoDaLiDa)},

pages={20--29},

year={2021},

url={https://aclanthology.org/2021.nodalida-main.3/}

}

```

If you use this model, we would love to hear about it! Reach out on Twitter, GitHub, Discord, or shoot us an email.

## Disclaimer

The models published in this repository are intended for a generalist purpose and are available to third parties. These models may have bias and/or any other undesirable distortions. When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models) or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of artificial intelligence. In no event shall the owner of the models (The National Library of Norway) be liable for any results arising from the use made by third parties of these models.

## Acknowledgements

This project would not have been possible without compute generously provided by Google through the

[TPU Research Cloud](https://sites.research.google/trc/), as well as the Cloud TPU team for providing early access to the [Cloud TPU VM](https://cloud.google.com/blog/products/compute/introducing-cloud-tpu-vms) Alpha. Special thanks to [Stella Biderman](https://www.stellabiderman.com) for her general openness, and to [Ben Wang](https://github.com/kingoflolz/mesh-transformer-jax) for the main codebase.

|

f6aecb191bc636ebc8a480e50559688d

|

cross-encoder/nli-MiniLM2-L6-H768

|

cross-encoder

|

roberta

| 10 | 3,083 |

transformers

| 2 |

zero-shot-classification

| true | false | false |

apache-2.0

|

['en']

|

['multi_nli', 'snli']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['MiniLMv2']

| false | true | true | 2,421 | false |

# Cross-Encoder for Natural Language Inference

This model was trained using [SentenceTransformers](https://sbert.net) [Cross-Encoder](https://www.sbert.net/examples/applications/cross-encoder/README.html) class.

## Training Data

The model was trained on the [SNLI](https://nlp.stanford.edu/projects/snli/) and [MultiNLI](https://cims.nyu.edu/~sbowman/multinli/) datasets. For a given sentence pair, it will output three scores corresponding to the labels: contradiction, entailment, neutral.

## Performance

For evaluation results, see [SBERT.net - Pretrained Cross-Encoder](https://www.sbert.net/docs/pretrained_cross-encoders.html#nli).

## Usage

Pre-trained models can be used like this:

```python

from sentence_transformers import CrossEncoder

model = CrossEncoder('cross-encoder/nli-MiniLM2-L6-H768')

scores = model.predict([('A man is eating pizza', 'A man eats something'), ('A black race car starts up in front of a crowd of people.', 'A man is driving down a lonely road.')])

#Convert scores to labels

label_mapping = ['contradiction', 'entailment', 'neutral']

labels = [label_mapping[score_max] for score_max in scores.argmax(axis=1)]

```
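The three scores per pair can be turned into probabilities with a softmax, which is sometimes easier to read than an argmax alone. The sketch below assumes the scores are unnormalized logits (as the `.logits` attribute in the Transformers usage suggests) and uses made-up numbers rather than real model output; `softmax` and `label_probabilities` are illustrative helpers, not part of any library.

```python
import math

LABELS = ["contradiction", "entailment", "neutral"]  # label order stated in this card


def softmax(logits):
    """Numerically stable softmax over one row of scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def label_probabilities(logits):
    """Map one row of three scores to a {label: probability} dict."""
    return dict(zip(LABELS, softmax(logits)))


# Hypothetical scores for a single sentence pair, not real model output.
example_logits = [-2.0, 3.5, 0.1]
probs = label_probabilities(example_logits)
best = max(probs, key=probs.get)
print(best, probs)
```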

## Usage with Transformers AutoModel

You can also use the model directly with the Transformers library (without the SentenceTransformers library):

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification

import torch

model = AutoModelForSequenceClassification.from_pretrained('cross-encoder/nli-MiniLM2-L6-H768')

tokenizer = AutoTokenizer.from_pretrained('cross-encoder/nli-MiniLM2-L6-H768')

features = tokenizer(['A man is eating pizza', 'A black race car starts up in front of a crowd of people.'], ['A man eats something', 'A man is driving down a lonely road.'], padding=True, truncation=True, return_tensors="pt")

model.eval()

with torch.no_grad():

    scores = model(**features).logits

label_mapping = ['contradiction', 'entailment', 'neutral']

labels = [label_mapping[score_max] for score_max in scores.argmax(dim=1)]

print(labels)

```

## Zero-Shot Classification

This model can also be used for zero-shot-classification:

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification", model='cross-encoder/nli-MiniLM2-L6-H768')

sent = "Apple just announced the newest iPhone X"

candidate_labels = ["technology", "sports", "politics"]

res = classifier(sent, candidate_labels)

print(res)

```

|

dc99091b60d9b82f4c732b4645a15b84

|

jonatasgrosman/exp_w2v2t_ru_unispeech-ml_s569

|

jonatasgrosman

|

unispeech

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['ru']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'ru']

| false | true | true | 500 | false |

# exp_w2v2t_ru_unispeech-ml_s569

Fine-tuned [microsoft/unispeech-large-multi-lingual-1500h-cv](https://huggingface.co/microsoft/unispeech-large-multi-lingual-1500h-cv) for speech recognition using the train split of [Common Voice 7.0 (ru)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

58a7ed42bb6432a0eaa9f9e3e6c4d73e

|

bert-large-cased

| null |

bert

| 10 | 160,722 |

transformers

| 4 |

fill-mask

| true | true | true |

apache-2.0

|

['en']

|

['bookcorpus', 'wikipedia']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 9,138 | false |

# BERT large model (cased)

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is cased: it makes a difference

between english and English.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then runs

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the model concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.
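The next sentence prediction objective can be illustrated with a small pair-building routine. This is a simplified sketch, not the original BERT data pipeline: it uses the 50/50 split and the `[CLS] ... [SEP] ... [SEP]` layout given in the Preprocessing section of this card, draws negatives from the same toy list of segments, and the function names are hypothetical.

```python
import random


def make_nsp_pairs(segments, rng):
    """Build (segment_a, segment_b, is_next) examples from an ordered list of segments.

    Needs at least three segments so a negative candidate always exists.
    """
    pairs = []
    for i in range(len(segments) - 1):
        seg_a = segments[i]
        if rng.random() < 0.5:
            # Positive example: the segment that actually follows seg_a.
            seg_b, is_next = segments[i + 1], True
        else:
            # Negative example: any segment except seg_a and its true successor.
            candidates = [s for j, s in enumerate(segments) if j not in (i, i + 1)]
            seg_b, is_next = rng.choice(candidates), False
        pairs.append((seg_a, seg_b, is_next))
    return pairs


def format_pair(seg_a, seg_b):
    """Lay out a pair in the input format described in this card."""
    return f"[CLS] {seg_a} [SEP] {seg_b} [SEP]"


toy_segments = ["The sky is clear.", "Stars are visible.", "Bread needs yeast.", "Dough must rest."]
for seg_a, seg_b, is_next in make_nsp_pairs(toy_segments, random.Random(0)):
    print(is_next, format_pair(seg_a, seg_b))
```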

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

This model has the following configuration:

- 24-layer

- 1024 hidden dimension

- 16 attention heads

- 336M parameters.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at a model like GPT2.

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-cased')

>>> unmasker("Hello I'm a [MASK] model.")

[

{

"sequence":"[CLS] Hello I'm a male model. [SEP]",

"score":0.22748498618602753,

"token":2581,

"token_str":"male"

},

{

"sequence":"[CLS] Hello I'm a fashion model. [SEP]",

"score":0.09146175533533096,

"token":4633,

"token_str":"fashion"

},

{

"sequence":"[CLS] Hello I'm a new model. [SEP]",

"score":0.05823173746466637,

"token":1207,

"token_str":"new"

},

{

"sequence":"[CLS] Hello I'm a super model. [SEP]",

"score":0.04488750174641609,

"token":7688,

"token_str":"super"

},

{

"sequence":"[CLS] Hello I'm a famous model. [SEP]",

"score":0.03271442651748657,

"token":2505,

"token_str":"famous"

}

]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-cased')

model = BertModel.from_pretrained("bert-large-cased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-cased')

model = TFBertModel.from_pretrained("bert-large-cased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

### Limitations and bias

Even if the training data used for this model could be characterized as fairly neutral, this model can have biased

predictions:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-cased')

>>> unmasker("The man worked as a [MASK].")

[

{

"sequence":"[CLS] The man worked as a doctor. [SEP]",

"score":0.0645911768078804,

"token":3995,

"token_str":"doctor"

},

{

"sequence":"[CLS] The man worked as a cop. [SEP]",

"score":0.057450827211141586,

"token":9947,

"token_str":"cop"

},

{

"sequence":"[CLS] The man worked as a mechanic. [SEP]",

"score":0.04392256215214729,

"token":19459,

"token_str":"mechanic"

},

{

"sequence":"[CLS] The man worked as a waiter. [SEP]",

"score":0.03755280375480652,

"token":17989,

"token_str":"waiter"

},

{

"sequence":"[CLS] The man worked as a teacher. [SEP]",

"score":0.03458863124251366,

"token":3218,

"token_str":"teacher"

}

]

>>> unmasker("The woman worked as a [MASK].")

[

{

"sequence":"[CLS] The woman worked as a nurse. [SEP]",

"score":0.2572779953479767,

"token":7439,

"token_str":"nurse"

},

{

"sequence":"[CLS] The woman worked as a waitress. [SEP]",

"score":0.16706500947475433,

"token":15098,

"token_str":"waitress"

},

{

"sequence":"[CLS] The woman worked as a teacher. [SEP]",

"score":0.04587847739458084,

"token":3218,

"token_str":"teacher"

},

{

"sequence":"[CLS] The woman worked as a secretary. [SEP]",

"score":0.03577028587460518,

"token":4848,

"token_str":"secretary"

},

{

"sequence":"[CLS] The woman worked as a maid. [SEP]",

"score":0.03298963978886604,

"token":13487,

"token_str":"maid"

}

]

```

This bias will also affect all fine-tuned versions of this model.

## Training data

The BERT model was pretrained on [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038

unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and

headers).

## Training procedure

### Preprocessing

The texts are tokenized using WordPiece with a vocabulary of roughly 30,000 tokens. Because this is the cased
checkpoint, the original casing is kept rather than lowercased. The inputs of the model are then of the form:

```

[CLS] Sentence A [SEP] Sentence B [SEP]

```

With probability 0.5, sentence A and sentence B correspond to two consecutive sentences in the original corpus; in
the other cases, sentence B is a random sentence from elsewhere in the corpus. Note that what is considered a
sentence here is a consecutive span of text, usually longer than a single sentence. The only constraint is that the
two "sentences" together have a combined length of less than 512 tokens.

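The pairing rule above can be sketched in a few lines of Python. This is only an illustration, not the original pretraining code: `make_nsp_pair` and its arguments are hypothetical names, the corpus is assumed to be a list of at least three pre-tokenized segments, and the 512-token length limit is not enforced here.

```python
import random

def make_nsp_pair(segments, index, rng):
    """Build one '[CLS] A [SEP] B [SEP]' example from a list of tokenized segments.

    Hypothetical helper: assumes len(segments) >= 3 so a random, non-adjacent
    segment always exists. Returns (tokens, is_next).
    """
    sentence_a = segments[index]
    has_next = index + 1 < len(segments)
    if has_next and rng.random() < 0.5:
        # Positive example: B is the segment that really follows A.
        sentence_b, is_next = segments[index + 1], True
    else:
        # Negative example: B is a random segment that is neither A nor its successor.
        candidates = [j for j in range(len(segments)) if j not in (index, index + 1)]
        sentence_b, is_next = segments[rng.choice(candidates)], False
    tokens = ["[CLS]"] + sentence_a + ["[SEP]"] + sentence_b + ["[SEP]"]
    return tokens, is_next
```

Calling `make_nsp_pair(segments, 0, random.Random(0))` returns the token list together with a boolean label saying whether B really followed A.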
The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token different from the one they replace.

- In the 10% remaining cases, the masked tokens are left as is.
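The 80/10/10 rule above can be illustrated with a small, framework-free sketch. It is an approximation under stated assumptions rather than the original implementation: `mask_tokens` is a hypothetical name, each token is selected independently with probability 0.15 (so the masked share is 15% only on average), and `vocab` is assumed to contain at least two distinct tokens.

```python
import random

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (masked_tokens, labels); labels[i] is None where no prediction is required."""
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # token not selected for prediction
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            masked[i] = "[MASK]"  # 80%: replace with the mask token
        elif roll < 0.9:
            # 10%: replace with a random token different from the original
            masked[i] = rng.choice([v for v in vocab if v != tok])
        # remaining 10%: leave the token unchanged
    return masked, labels
```

Positions where `labels` is `None` are left untouched and would not contribute to the masked-language-modeling loss.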

### Pretraining

The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size

of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer

used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,

learning rate warmup for 10,000 steps and linear decay of the learning rate afterwards.
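As a rough illustration of the schedule described above, the following sketch computes the learning rate at a given step. The function name is hypothetical, and two details are assumptions not stated in the card: the warmup is taken to be linear from zero, and the decay is taken to reach zero exactly at the final step.

```python
def learning_rate(step, peak_lr=1e-4, warmup_steps=10_000, total_steps=1_000_000):
    """Linear warmup to peak_lr, then linear decay (assumed to end at 0 on the last step)."""
    if step < warmup_steps:
        # Warmup phase: scale linearly from 0 up to peak_lr.
        return peak_lr * step / warmup_steps
    # Decay phase: scale linearly from peak_lr down to 0.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)
```

Under these assumptions the rate peaks at 1e-4 at step 10,000 and is back at half that value around step 505,000.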

## Evaluation results

When fine-tuned on downstream tasks, this model achieves the following results:

| Model | SQuAD 1.1 F1/EM | Multi NLI Accuracy |
|:---------------------------------------- | :-------------: | :----------------: |
| BERT-Large, Cased (Original) | 91.5/84.8 | 86.09 |

### BibTeX entry and citation info

```bibtex
@article{DBLP:journals/corr/abs-1810-04805,
  author        = {Jacob Devlin and
                   Ming{-}Wei Chang and
                   Kenton Lee and
                   Kristina Toutanova},
  title         = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language
                   Understanding},
  journal       = {CoRR},
  volume        = {abs/1810.04805},
  year          = {2018},
  url           = {http://arxiv.org/abs/1810.04805},
  archivePrefix = {arXiv},
  eprint        = {1810.04805},
  timestamp     = {Tue, 30 Oct 2018 20:39:56 +0100},
  biburl        = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},
  bibsource     = {dblp computer science bibliography, https://dblp.org}
}
```

|

dd87832eb9442c7db1900807ff653c1a

|

weirdguitarist/wav2vec2-base-stac-local

|

weirdguitarist

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 3,380 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-stac-local

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.9746

- Wer: 0.7828

- Cer: 0.3202
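For readers unfamiliar with these metrics, word error rate (WER) and character error rate (CER) are both edit distances normalized by the reference length. The sketch below is a minimal pure-Python illustration with hypothetical function names; it is not the evaluation code used for this model, and it assumes whitespace tokenization and a non-empty reference.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        curr = [i]
        for j, h in enumerate(hyp, 1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,         # deletion
                            curr[j - 1] + 1,     # insertion
                            prev[j - 1] + cost)) # substitution or match
        prev = curr
    return prev[-1]

def wer(reference, hypothesis):
    """Word error rate: word-level edit distance divided by the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)

def cer(reference, hypothesis):
    """Character error rate: character-level edit distance divided by the reference length."""
    return edit_distance(list(reference), list(hypothesis)) / len(reference)
```

Read this way, a WER of 0.7828 corresponds to roughly 78 word-level edits per 100 reference words.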

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step  | Validation Loss | Wer    | Cer    |
|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|
| 2.0603        | 1.0   | 2369  | 2.1282          | 0.9517 | 0.5485 |
| 1.6155        | 2.0   | 4738  | 1.6196          | 0.9060 | 0.4565 |
| 1.3462        | 3.0   | 7107  | 1.4331          | 0.8379 | 0.3983 |
| 1.1819        | 4.0   | 9476  | 1.3872          | 0.8233 | 0.3717 |
| 1.0189        | 5.0   | 11845 | 1.4066          | 0.8328 | 0.3660 |
| 0.9026        | 6.0   | 14214 | 1.3502          | 0.8198 | 0.3508 |
| 0.777         | 7.0   | 16583 | 1.3016          | 0.7922 | 0.3433 |
| 0.7109        | 8.0   | 18952 | 1.2662          | 0.8302 | 0.3510 |
| 0.6766        | 9.0   | 21321 | 1.4321          | 0.8103 | 0.3368 |
| 0.6078        | 10.0  | 23690 | 1.3592          | 0.7871 | 0.3360 |
| 0.5958        | 11.0  | 26059 | 1.4389          | 0.7819 | 0.3397 |
| 0.5094        | 12.0  | 28428 | 1.3391          | 0.8017 | 0.3239 |
| 0.4567        | 13.0  | 30797 | 1.4718          | 0.8026 | 0.3347 |
| 0.4448        | 14.0  | 33166 | 1.7450          | 0.8043 | 0.3424 |
| 0.3976        | 15.0  | 35535 | 1.4581          | 0.7888 | 0.3283 |
| 0.3449        | 16.0  | 37904 | 1.5688          | 0.8078 | 0.3397 |
| 0.3046        | 17.0  | 40273 | 1.8630          | 0.8060 | 0.3448 |
| 0.2983        | 18.0  | 42642 | 1.8400          | 0.8190 | 0.3425 |
| 0.2728        | 19.0  | 45011 | 1.6726          | 0.8034 | 0.3280 |
| 0.2579        | 20.0  | 47380 | 1.6661          | 0.8138 | 0.3249 |
| 0.2169        | 21.0  | 49749 | 1.7389          | 0.8138 | 0.3277 |
| 0.2498        | 22.0  | 52118 | 1.7205          | 0.7948 | 0.3207 |
| 0.1831        | 23.0  | 54487 | 1.8641          | 0.8103 | 0.3229 |
| 0.1927        | 24.0  | 56856 | 1.8724          | 0.7784 | 0.3251 |
| 0.1649        | 25.0  | 59225 | 1.9187          | 0.7974 | 0.3277 |
| 0.1594        | 26.0  | 61594 | 1.9022          | 0.7828 | 0.3220 |
| 0.1338        | 27.0  | 63963 | 1.9303          | 0.7862 | 0.3212 |
| 0.1441        | 28.0  | 66332 | 1.9528          | 0.7845 | 0.3207 |
| 0.129         | 29.0  | 68701 | 1.9676          | 0.7819 | 0.3212 |
| 0.1169        | 30.0  | 71070 | 1.9746          | 0.7828 | 0.3202 |
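The step counts in this table line up with the hyperparameters listed earlier, which the short sketch below checks. The helper names are hypothetical, and two points are assumptions: training is taken to run on a single device, and each reported step is taken to be one optimizer update.

```python
def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Examples consumed per optimizer update (num_devices=1 is an assumption)."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices

def total_optimizer_steps(steps_per_epoch, num_epochs):
    """Total updates when every epoch has the same number of steps."""
    return steps_per_epoch * num_epochs

batch = effective_batch_size(1, 2)        # train_batch_size=1, gradient_accumulation_steps=2
steps = total_optimizer_steps(2369, 30)   # 2369 steps per epoch, 30 epochs
approx_examples = 2369 * batch            # rough training-set size implied by one epoch
```

Under these assumptions the effective batch size matches the reported `total_train_batch_size` of 2, and the total step count matches the final row of the table.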

### Framework versions

- Transformers 4.17.0

- Pytorch 1.8.1+cu102

- Datasets 1.18.3

- Tokenizers 0.12.1

|

7b8c026b263e95c62138fb1fe6ebf8ad

|

0x7f/ddpm-butterflies-128

|

0x7f

| null | 13 | 2 |

diffusers

| 0 | null | false | false | false |

apache-2.0

|

['en']

|

['huggan/smithsonian_butterflies_subset']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,226 | false |

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]