repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

mbien/wav2vec2-large-xlsr-polish

|

mbien

|

wav2vec2

| 9 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['pl']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week']

| true | true | true | 3,384 | false |

# Wav2Vec2-Large-XLSR-53-Polish

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Polish using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16 kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "pl", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("mbien/wav2vec2-large-xlsr-polish")

model = Wav2Vec2ForCTC.from_pretrained("mbien/wav2vec2-large-xlsr-polish")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"][:2])

```
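The usage snippet above takes the argmax over the logits and hands the resulting IDs to `processor.batch_decode`. For a CTC model, greedy decoding generally means collapsing repeated frame predictions and dropping the blank symbol. The sketch below illustrates that idea in plain Python; the vocabulary, the `blank_id`, and the function name `ctc_greedy_decode` are hypothetical and are not the model's real tokenizer.

```python
from itertools import groupby


def ctc_greedy_decode(frame_ids, id_to_token, blank_id=0, word_delimiter="|"):
    """Collapse repeated frame predictions, drop blanks, and join tokens."""
    # 1) Merge consecutive duplicates: [3, 3, 0, 3] -> [3, 0, 3]
    collapsed = [token_id for token_id, _ in groupby(frame_ids)]
    # 2) Remove the blank symbol that separates genuine repeats
    kept = [token_id for token_id in collapsed if token_id != blank_id]
    # 3) Map IDs to characters and turn the word delimiter into a space
    text = "".join(id_to_token[token_id] for token_id in kept)
    return text.replace(word_delimiter, " ").strip()


# Hypothetical toy vocabulary (an assumption, not the model's real one)
vocab = {0: "<pad>", 1: "|", 2: "t", 3: "a", 4: "k"}
frames = [2, 2, 0, 3, 3, 3, 4, 0, 1, 1, 2, 3, 0, 3, 4]
print(ctc_greedy_decode(frames, vocab))
```

Note how the blank between the two `a` frames in the second word keeps them as two separate characters, while the run of three `a` frames in the first word collapses to one.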

## Evaluation

The model can be evaluated as follows on the Polish test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "pl", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("mbien/wav2vec2-large-xlsr-polish")

model = Wav2Vec2ForCTC.from_pretrained("mbien/wav2vec2-large-xlsr-polish")

model.to("cuda")

chars_to_ignore_regex = '[\—\…\,\?\.\!\-\;\:\"\“\„\%\‘\”\�\«\»\'\’]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference and decode the predicted token IDs to text
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 23.01 %
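To make the reported number easier to interpret, here is a minimal sketch of how a word error rate can be computed, assuming a corpus-level definition (total word edits divided by total reference words). The function name `word_error_rate` and the sample sentences are illustrative only; this is not the implementation behind the `wer` metric used in the evaluation script.

```python
def word_error_rate(references, predictions):
    """Corpus-level WER: total word edits divided by total reference words."""
    total_edits = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        ref_words, hyp_words = ref.split(), hyp.split()
        # Levenshtein distance computed over words instead of characters
        previous = list(range(len(hyp_words) + 1))
        for i, ref_word in enumerate(ref_words, start=1):
            current = [i]
            for j, hyp_word in enumerate(hyp_words, start=1):
                substitution = previous[j - 1] + (ref_word != hyp_word)
                current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
            previous = current
        total_edits += previous[-1]
        total_words += len(ref_words)
    return total_edits / total_words


# Made-up sentences: one substitution and one deletion over six reference words
refs = ["ala ma kota", "to jest test"]
hyps = ["ala ma psa", "to test"]
print("WER: {:.2f}".format(100 * word_error_rate(refs, hyps)))
```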

## Training

The Common Voice `train` and `validation` splits were used for training.

The script used for training can be found [here](https://colab.research.google.com/drive/1DvrFMoKp9h3zk_eXrJF2s4_TGDHh0tMc?usp=sharing).

|

d24f6908e786b7fcc35c424463ff5c60

|

colab71/sd-1.5-niraj-1000

|

colab71

| null | 18 | 9 |

diffusers

| 0 |

text-to-image

| true | false | false |

creativeml-openrail-m

| null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['pytorch', 'diffusers', 'stable-diffusion', 'text-to-image', 'diffusion-models-class', 'dreambooth-hackathon', 'man']

| false | true | true | 699 | false |

# Settings

Steps:1000

Class Images:50

# DreamBooth model for the niraj concept trained by colab71 on the dataset.

This is a Stable Diffusion model fine-tuned on the niraj concept with DreamBooth. It can be used by modifying the

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on niraj's images for the person theme.

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('colab71/sd-1.5-niraj-1000')

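# The card omits the prompt; the one below is an assumption based on the concept name and the 'man' tag
prompt = "a photo of niraj man"
image = pipeline(prompt).images[0]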

image

```

|

3d7c8afa1b95986ec82dc95f36d4d95b

|

yuhuizhang/finetuned_gpt2-medium_sst2_negation0.05

|

yuhuizhang

|

gpt2

| 11 | 0 |

transformers

| 0 |

text-generation

| true | false | false |

mit

| null |

['sst2']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,252 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned_gpt2-medium_sst2_negation0.05

This model is a fine-tuned version of [gpt2-medium](https://huggingface.co/gpt2-medium) on the sst2 dataset.

It achieves the following results on the evaluation set:

- Loss: 3.4461

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
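As a rough illustration of what `lr_scheduler_type: linear` implies for these settings, the sketch below computes a linearly decaying learning rate. The total of 3186 steps is taken from the training results table of this card; zero warmup steps is an assumption (the card lists none), and `linear_lr` is a hypothetical helper rather than the Trainer's own code.

```python
def linear_lr(step, base_lr=2e-05, total_steps=3186, warmup_steps=0):
    """Learning rate after `step` optimizer updates under linear warmup and decay."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr (unused when warmup_steps is 0)
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 at total_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the start and at the end of each epoch (1062 steps per epoch)
for step in (0, 1062, 2124, 3186):
    print(step, linear_lr(step))
```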

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.8275 | 1.0 | 1062 | 3.3098 |

| 2.5383 | 2.0 | 2124 | 3.3873 |

| 2.3901 | 3.0 | 3186 | 3.4461 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.2

- Tokenizers 0.12.1

|

4f7fea2e20d8e90457c137511455dac8

|

maren-hugg/xlm-roberta-base-finetuned-panx-de-en

|

maren-hugg

|

xlm-roberta

| 10 | 3 |

transformers

| 0 |

token-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,321 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-en

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2239

- F1: 0.8201

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2573 | 1.0 | 625 | 0.2573 | 0.7591 |

| 0.1631 | 2.0 | 1250 | 0.2147 | 0.8127 |

| 0.1096 | 3.0 | 1875 | 0.2239 | 0.8201 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.13.0+cu116

- Datasets 1.16.1

- Tokenizers 0.10.3

|

ff67c6e79f6c9e9f467eeffadb74eb63

|

sd-concepts-library/tony-diterlizzi-s-planescape-art

|

sd-concepts-library

| null | 31 | 0 | null | 8 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 4,462 | false |

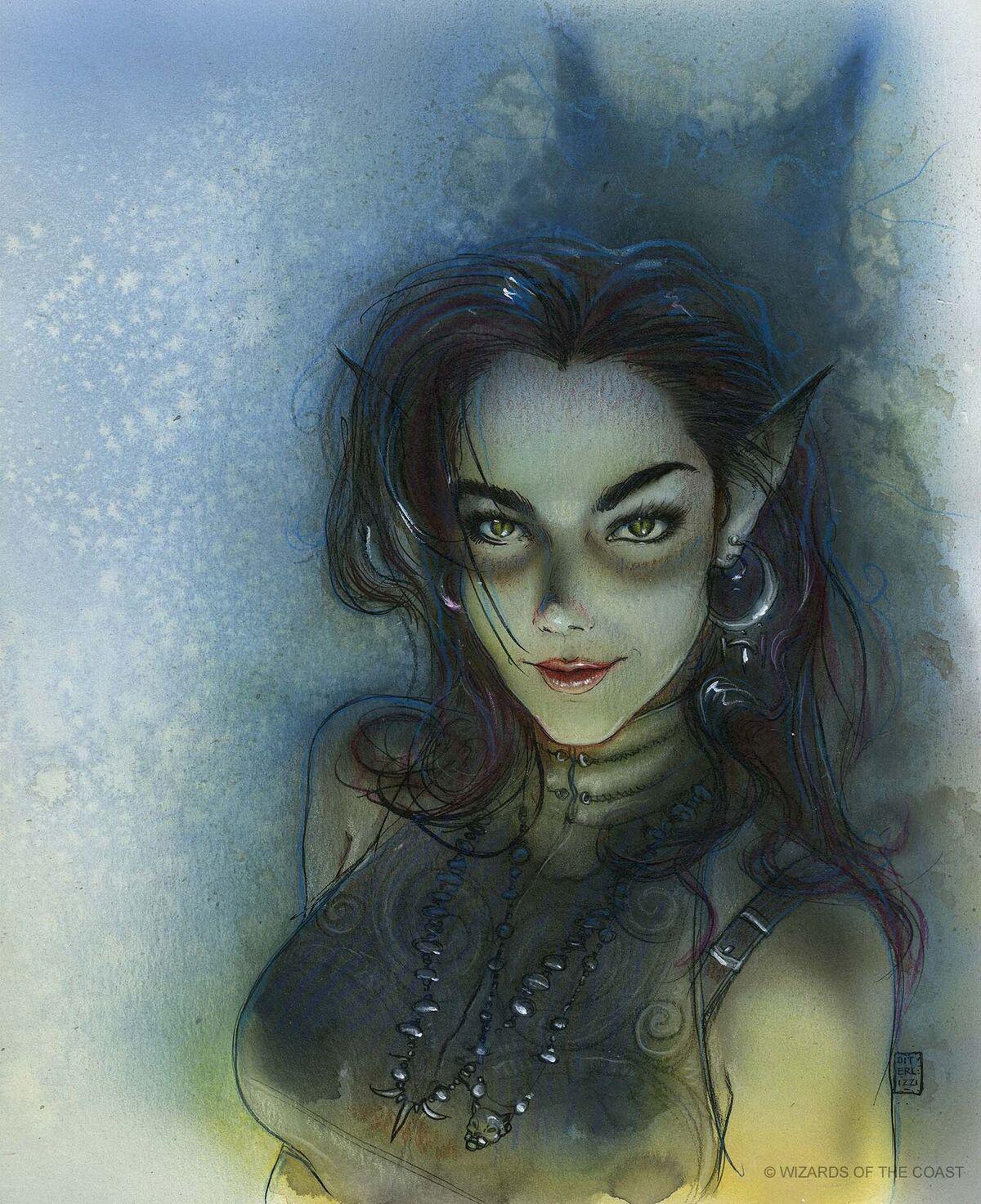

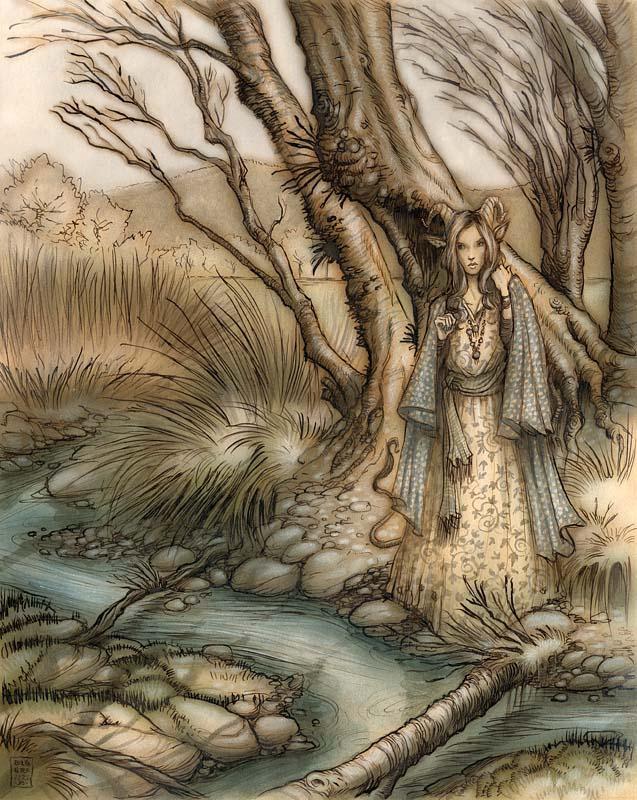

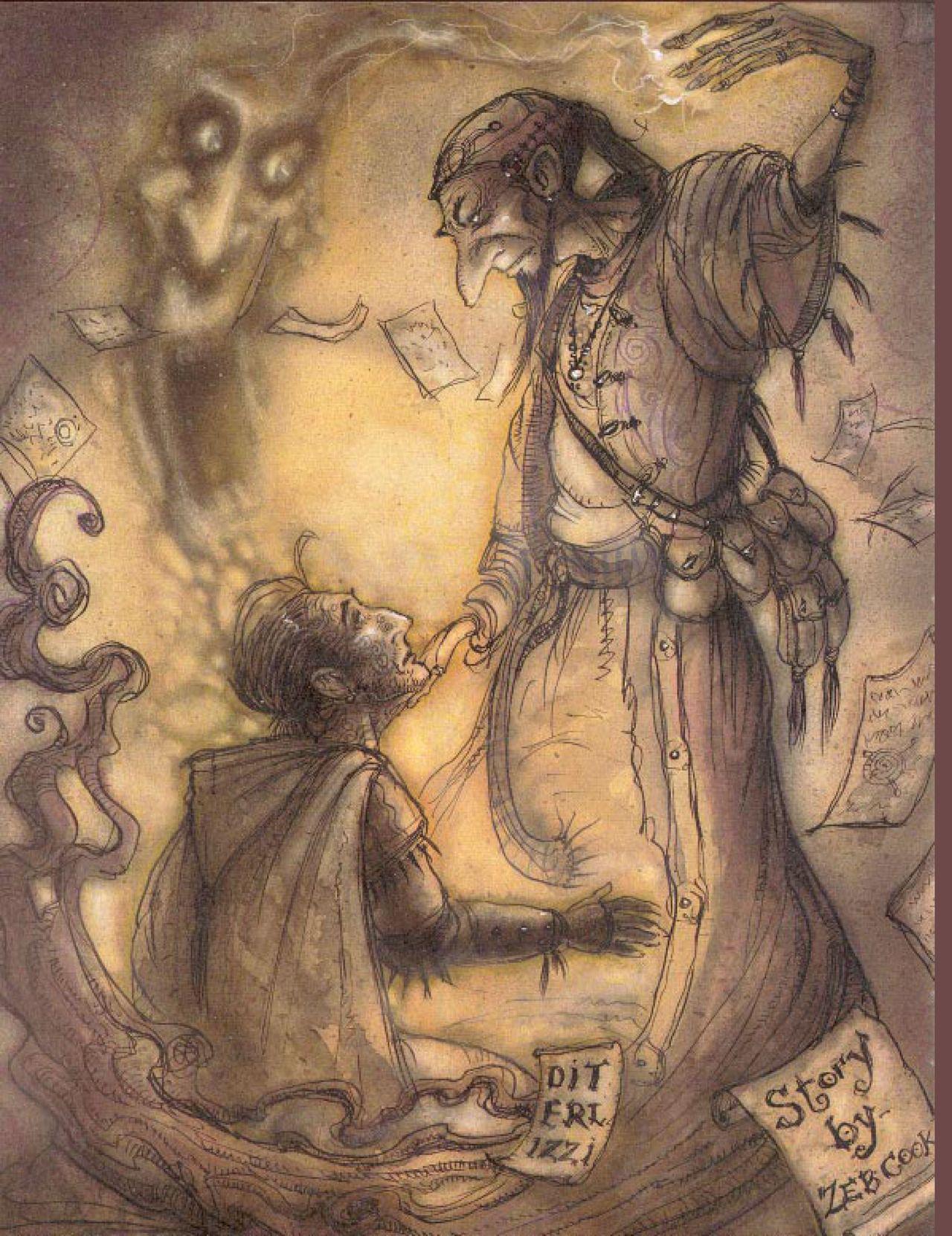

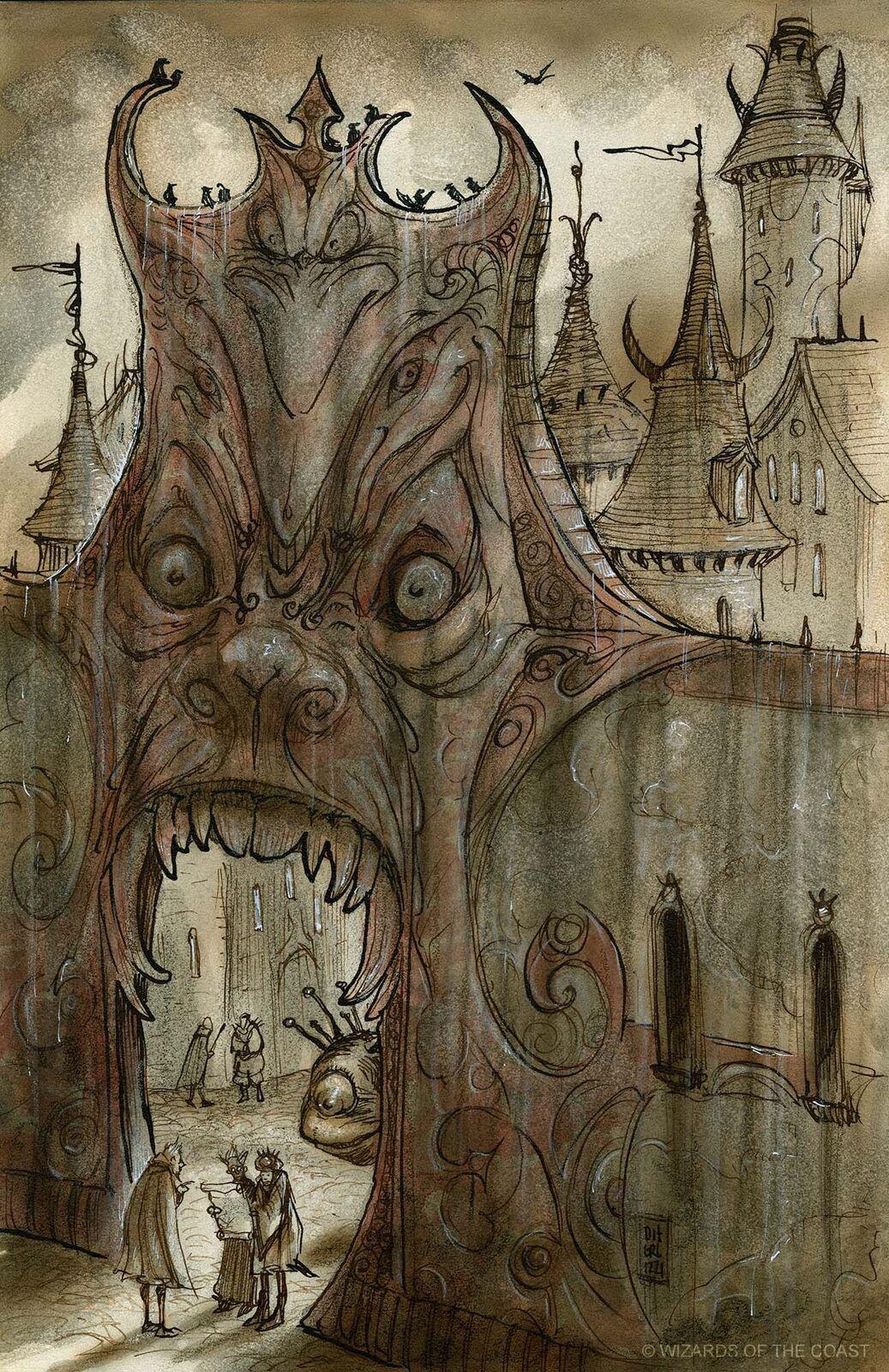

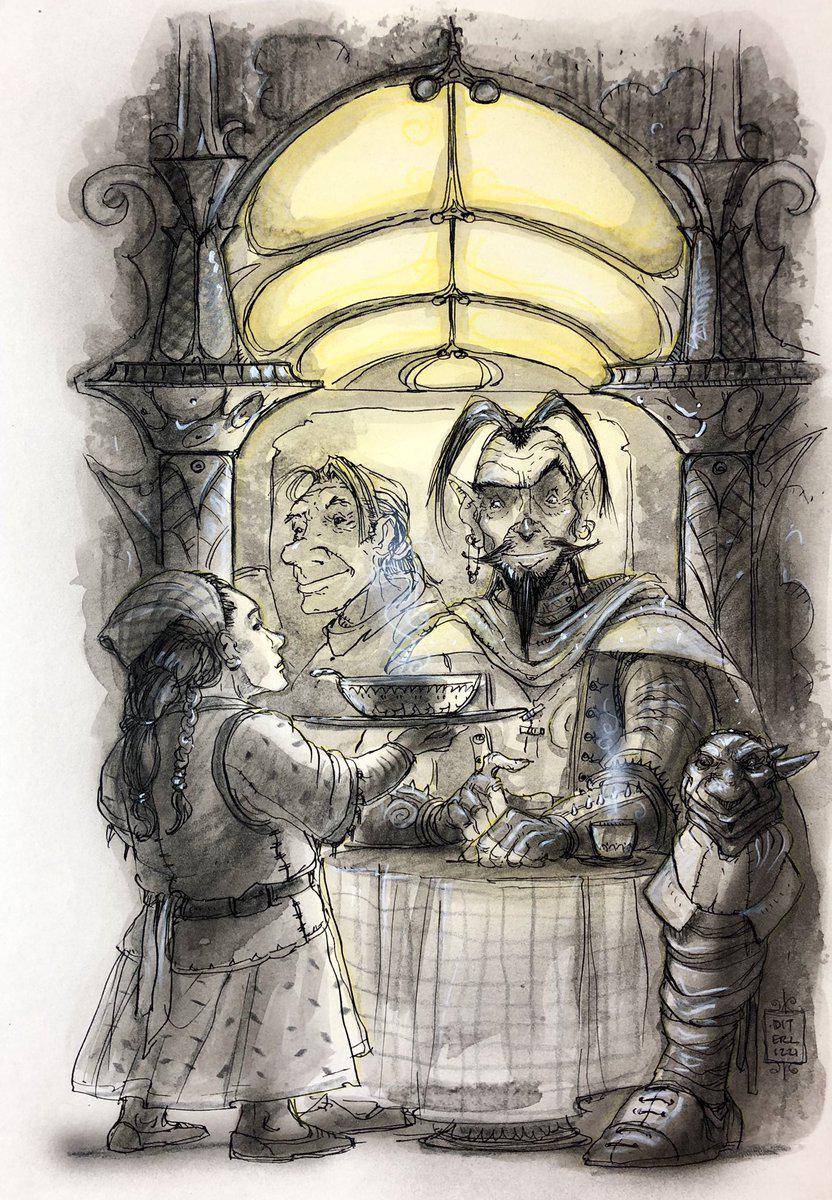

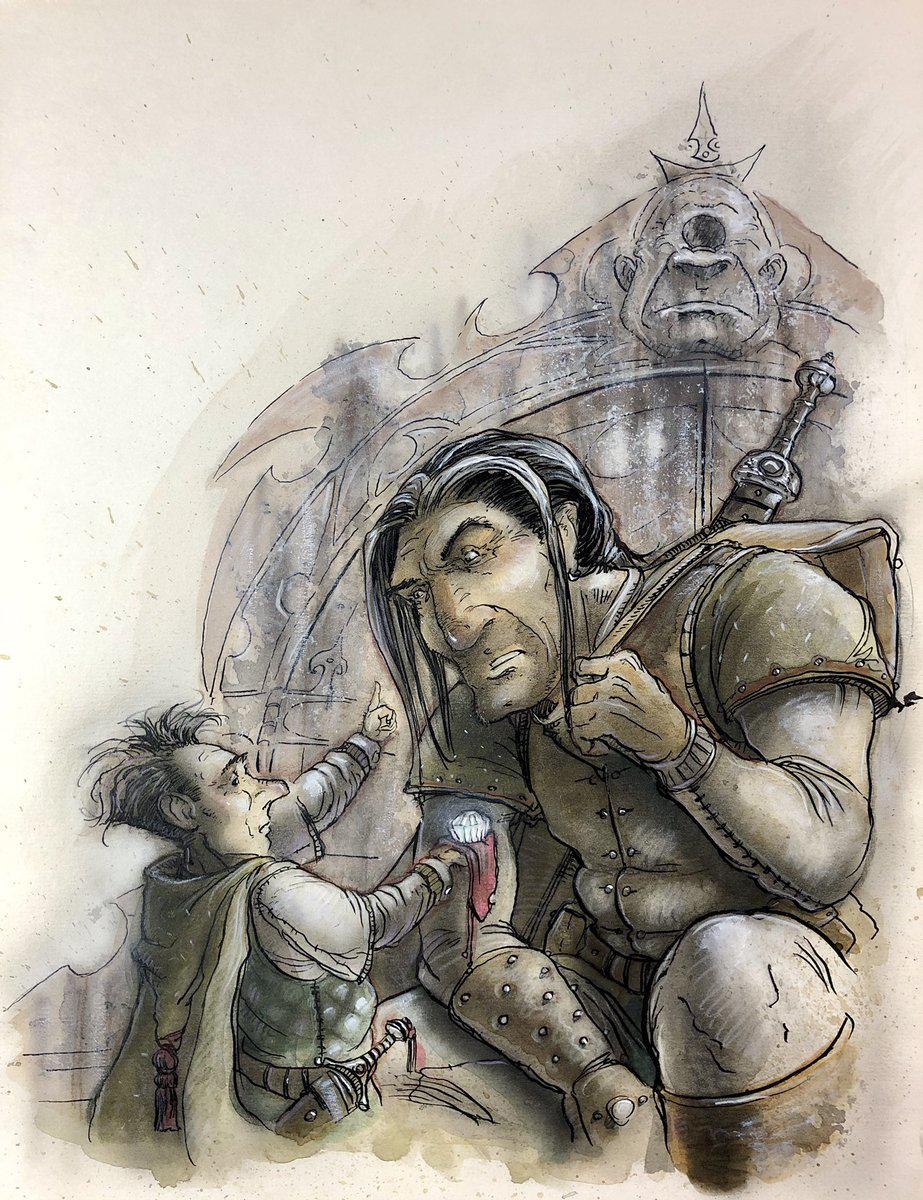

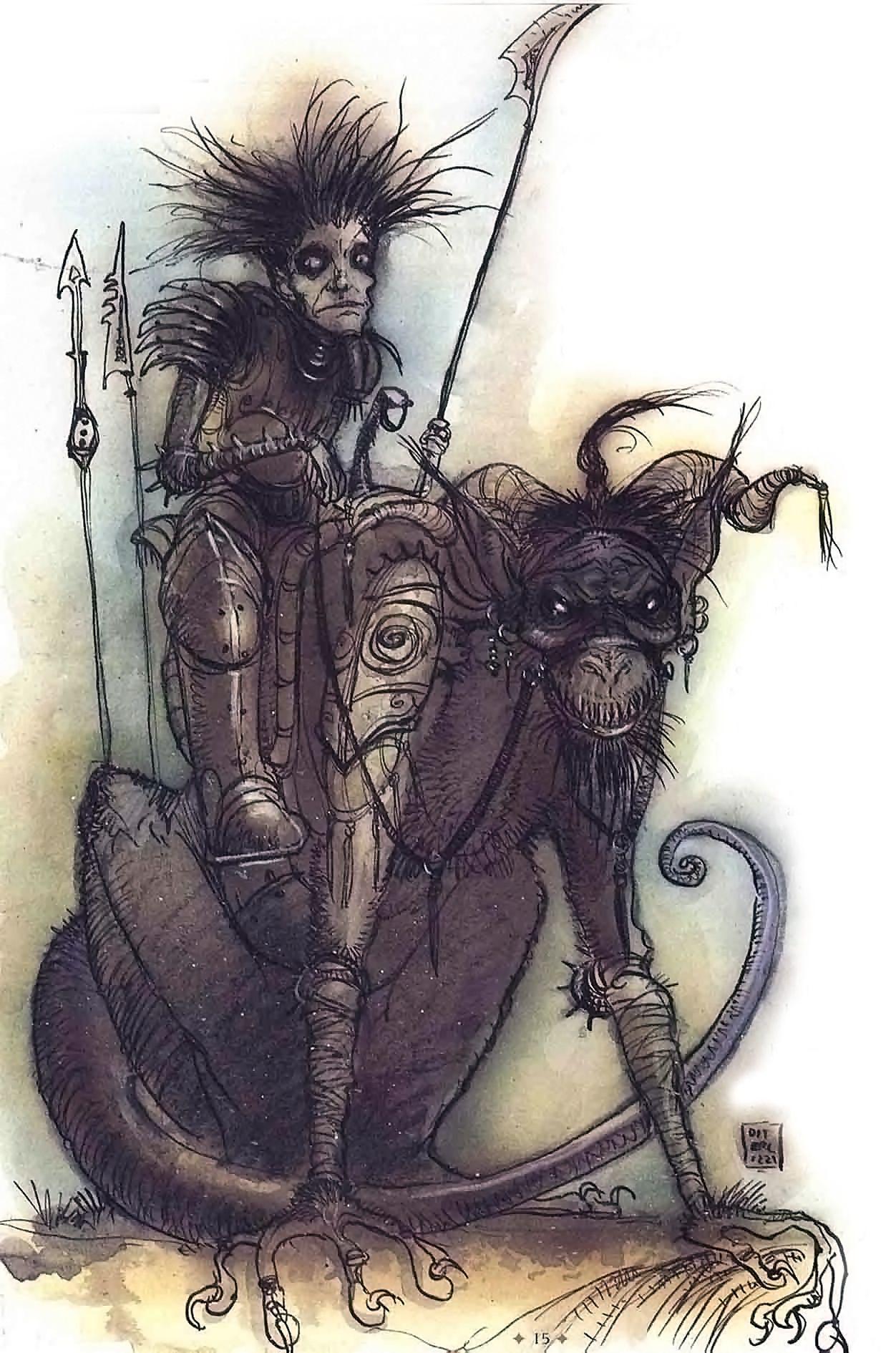

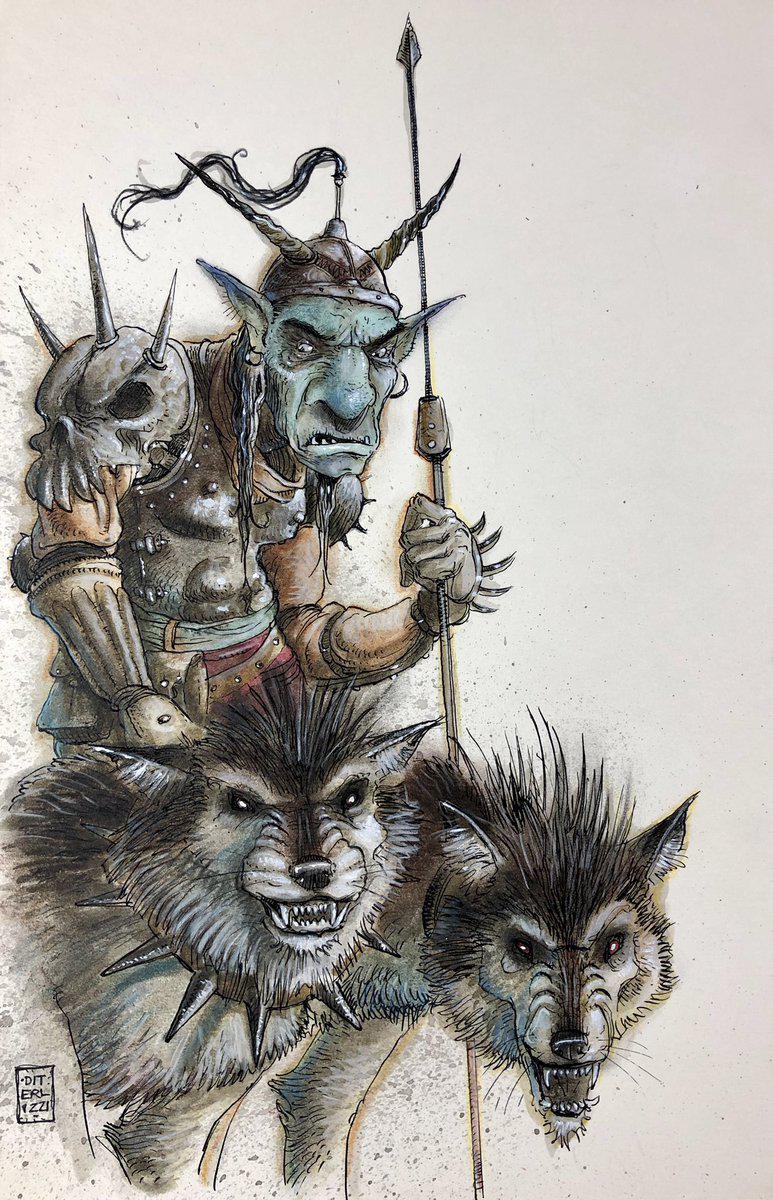

### Tony DiTerlizzi's Planescape Art on Stable Diffusion

This is the `<tony-diterlizzi-planescape>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

83a37c449ebd82921e59a32a039caef4

|

steysie/paraphrase-multilingual-mpnet-base-v2-tuned-smartcat

|

steysie

|

xlm-roberta

| 6 | 44 |

transformers

| 0 |

text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,848 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# paraphrase-multilingual-mpnet-base-v2-tuned-smartcat

This model is a fine-tuned version of [sentence-transformers/paraphrase-multilingual-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-multilingual-mpnet-base-v2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0000

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 6

- eval_batch_size: 6

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:------:|:---------------:|

| 0.0072 | 0.16 | 10000 | 0.0025 |

| 0.0014 | 0.32 | 20000 | 0.0005 |

| 0.0004 | 0.48 | 30000 | 0.0002 |

| 0.0002 | 0.64 | 40000 | 0.0001 |

| 0.0003 | 0.81 | 50000 | 0.0001 |

| 0.0002 | 0.97 | 60000 | 0.0000 |

| 0.0001 | 1.13 | 70000 | 0.0000 |

| 0.0001 | 1.29 | 80000 | 0.0000 |

| 0.0001 | 1.45 | 90000 | 0.0000 |

| 0.0001 | 1.61 | 100000 | 0.0000 |

| 0.0 | 1.77 | 110000 | 0.0000 |

| 0.0 | 1.93 | 120000 | 0.0000 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu102

- Datasets 2.2.1

- Tokenizers 0.12.1

|

090403100ba2c4835d3d5a5cb563c29b

|

ghatgetanuj/bert-large-uncased_cls_SentEval-CR

|

ghatgetanuj

|

bert

| 12 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,529 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-large-uncased_cls_SentEval-CR

This model is a fine-tuned version of [bert-large-uncased](https://huggingface.co/bert-large-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3488

- Accuracy: 0.9283

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 5

- mixed_precision_training: Native AMP
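The combination of `lr_scheduler_type: cosine` and `lr_scheduler_warmup_ratio: 0.2` can be pictured as a linear ramp followed by a half-cosine decay. The sketch below assumes that common shape; the 945 total steps come from the training results table of this card, while the exact formula and the helper name `cosine_lr` are assumptions, not the Trainer's code.

```python
import math


def cosine_lr(step, base_lr=4e-05, total_steps=945, warmup_ratio=0.2):
    """Learning rate under linear warmup followed by cosine decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 to base_lr
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: progress runs from 0 (end of warmup) to 1 (end of training)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


# Learning rate at the end of each of the five epochs (189 steps per epoch)
for step in (189, 378, 567, 756, 945):
    print(step, cosine_lr(step))
```

With these numbers the warmup covers the first epoch, so the peak learning rate would be reached at step 189.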

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 189 | 0.2951 | 0.8977 |

| No log | 2.0 | 378 | 0.2895 | 0.8964 |

| 0.2663 | 3.0 | 567 | 0.3707 | 0.9044 |

| 0.2663 | 4.0 | 756 | 0.4130 | 0.9203 |

| 0.2663 | 5.0 | 945 | 0.3488 | 0.9283 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

7cffe1aa97d66fbeaeab365706a435db

|

Helsinki-NLP/opus-mt-bat-en

|

Helsinki-NLP

|

marian

| 11 | 19,324 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

|

['lt', 'lv', 'bat', 'en']

| null | null | 2 | 2 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 2,504 | false |

### bat-eng

* source group: Baltic languages

* target group: English

* OPUS readme: [bat-eng](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/bat-eng/README.md)

* model: transformer

* source language(s): lav lit ltg prg_Latn sgs

* target language(s): eng

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus2m-2020-07-31.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/bat-eng/opus2m-2020-07-31.zip)

* test set translations: [opus2m-2020-07-31.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/bat-eng/opus2m-2020-07-31.test.txt)

* test set scores: [opus2m-2020-07-31.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/bat-eng/opus2m-2020-07-31.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| newsdev2017-enlv-laveng.lav.eng | 27.5 | 0.566 |

| newsdev2019-enlt-liteng.lit.eng | 27.8 | 0.557 |

| newstest2017-enlv-laveng.lav.eng | 21.1 | 0.512 |

| newstest2019-lten-liteng.lit.eng | 30.2 | 0.592 |

| Tatoeba-test.lav-eng.lav.eng | 51.5 | 0.687 |

| Tatoeba-test.lit-eng.lit.eng | 55.1 | 0.703 |

| Tatoeba-test.multi.eng | 50.6 | 0.662 |

| Tatoeba-test.prg-eng.prg.eng | 1.0 | 0.159 |

| Tatoeba-test.sgs-eng.sgs.eng | 16.5 | 0.265 |

### System Info:

- hf_name: bat-eng

- source_languages: bat

- target_languages: eng

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/bat-eng/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['lt', 'lv', 'bat', 'en']

- src_constituents: {'lit', 'lav', 'prg_Latn', 'ltg', 'sgs'}

- tgt_constituents: {'eng'}

- src_multilingual: True

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/bat-eng/opus2m-2020-07-31.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/bat-eng/opus2m-2020-07-31.test.txt

- src_alpha3: bat

- tgt_alpha3: eng

- short_pair: bat-en

- chrF2_score: 0.662

- bleu: 50.6

- brevity_penalty: 0.9890000000000001

- ref_len: 30772.0

- src_name: Baltic languages

- tgt_name: English

- train_date: 2020-07-31

- src_alpha2: bat

- tgt_alpha2: en

- prefer_old: False

- long_pair: bat-eng

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41
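The `brevity_penalty` and `ref_len` fields above can be related through the standard BLEU definition, where the penalty is `exp(1 - r/c)` whenever the hypothesis length `c` is shorter than the reference length `r`. The sketch below assumes that definition and inverts it to estimate the total hypothesis length; the function names are hypothetical and the result is an estimate, not a value reported by the card.

```python
import math


def brevity_penalty(hyp_len, ref_len):
    """Standard BLEU brevity penalty for hypothesis length c and reference length r."""
    if hyp_len >= ref_len:
        return 1.0
    return math.exp(1.0 - ref_len / hyp_len)


def implied_hypothesis_length(bp, ref_len):
    """Invert bp = exp(1 - r/c) to recover c, valid for 0 < bp <= 1."""
    return ref_len / (1.0 - math.log(bp))


# Values taken from the System Info list: brevity_penalty ~ 0.989, ref_len = 30772
hyp_len = implied_hypothesis_length(0.989, 30772.0)
print(round(hyp_len), round(brevity_penalty(hyp_len, 30772.0), 3))
```

A penalty this close to 1 indicates that the system output is only slightly shorter than the references overall.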

|

c4d3e38fb5038891964213383654a6d9

|

SirVeggie/nixeu_embeddings

|

SirVeggie

| null | 8 | 0 | null | 23 | null | false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,621 | false |

# Nixeu stable diffusion embeddings

Original artist: Nixeu\

Patreon: https://www.patreon.com/nixeu/posts

## Usage

To use embeddings, place the embedding file into the embedding folder (automatic1111 webui), and use the filename in the prompt.

You can choose to rename the file freely.

It is recommended to use these embeddings at low strength for cleaner results, for example (nixeu_basic:0.7).

## Additional notes

Nixeu_extra has slightly more flair (maybe).

Nixeu_soft prefers portraits and has generally softer detail.

Nixeu_white has a preference for a light color scheme.

## Examples

Prompt:

```

masterpiece, best quality, ultra-detailed, illustration, 1girl, (wearing casual clothing), beautiful face, (feminine body), (nixeu_basic:0.75)

Negative prompt: close-up, portrait, (big breasts), (fat), flat color, flat shading, bad anatomy, disfigured, deformed, malformed, mutant, gross, disgusting, out of frame, poorly drawn, extra limbs, extra fingers, missing limbs, blurry, out of focus, lowres, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, artist name, lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name

Steps: 28, Sampler: Euler a, CFG scale: 9, Seed: 3875527572, Size: 768x1024, Model hash: 5674302c, Model: diffmix, Batch size: 2, Batch pos: 0, Denoising strength: 0.6, First pass size: 0x0

```

|

9f9c9f45c5f3f5db5e56e6bc1e0baae5

|

HPL/distilbert-base-uncased-finetuned-emotion

|

HPL

|

distilbert

| 18 | 4 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['emotion']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,557 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1465

- Accuracy: 0.9405

- F1: 0.9409

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8341 | 1.0 | 250 | 0.2766 | 0.9105 | 0.9088 |

| 0.2181 | 2.0 | 500 | 0.1831 | 0.9305 | 0.9308 |

| 0.141 | 3.0 | 750 | 0.1607 | 0.93 | 0.9305 |

| 0.1102 | 4.0 | 1000 | 0.1509 | 0.935 | 0.9344 |

| 0.0908 | 5.0 | 1250 | 0.1465 | 0.9405 | 0.9409 |
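The step counts in the table also give a rough idea of the training-set size. Assuming a single device, no gradient accumulation, and a final partial batch that is kept (none of which the card states), 250 steps per epoch at a batch size of 64 bound the number of training examples as sketched below; `dataset_size_bounds` is a hypothetical helper.

```python
def dataset_size_bounds(steps_per_epoch, batch_size):
    """Range of training-set sizes consistent with the logged steps per epoch."""
    # The last batch may hold anywhere from 1 to batch_size examples
    smallest = (steps_per_epoch - 1) * batch_size + 1
    largest = steps_per_epoch * batch_size
    return smallest, largest


# 250 steps per epoch and train_batch_size 64, as listed in this card
print(dataset_size_bounds(250, 64))
```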

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

38ad9aa484f8763963dd6dc25b3d30b3

|

Helsinki-NLP/opus-mt-fi-hu

|

Helsinki-NLP

|

marian

| 10 | 15 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 770 | false |

### opus-mt-fi-hu

* source languages: fi

* target languages: hu

* OPUS readme: [fi-hu](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/fi-hu/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-08.zip](https://object.pouta.csc.fi/OPUS-MT-models/fi-hu/opus-2020-01-08.zip)

* test set translations: [opus-2020-01-08.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/fi-hu/opus-2020-01-08.test.txt)

* test set scores: [opus-2020-01-08.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/fi-hu/opus-2020-01-08.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.fi.hu | 50.4 | 0.705 |

|

755aa515a87a2c006e3ec9b6a8c89030

|

AdamOswald1/Cyberpunk-Anime-Diffusion

|

AdamOswald1

| null | 35 | 201 |

diffusers

| 14 |

text-to-image

| false | false | false |

creativeml-openrail-m

|

['en']

|

['Nerfgun3/cyberware_style', 'Nerfgun3/bad_prompt']

| null | 6 | 0 | 6 | 0 | 0 | 0 | 0 |

['cyberpunk', 'anime', 'stable-diffusion', 'aiart', 'text-to-image', 'TPU']

| false | true | true | 5,174 | false |

<center><img src="https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion/resolve/main/img/5.jpg" width="512" height="512"/></center>

# Cyberpunk Anime Diffusion

An AI model that generates cyberpunk anime characters!~

Based on a fine-tuned Waifu Diffusion V1.3 model with the new Stable Diffusion V1.5 VAE, trained with DreamBooth

by [DGSpitzer](https://www.youtube.com/channel/UCzzsYBF4qwtMwJaPJZ5SuPg)

### 🧨 Diffusers

This repo contains both .ckpt and Diffusers model files. It can be used like any other Stable Diffusion model, with the standard [Stable Diffusion Pipelines](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

You can convert this model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or [FLAX/JAX](https://huggingface.co/blog/stable_diffusion_jax).

```python
# Example for loading the model with Diffusers
#!pip install diffusers transformers scipy torch

from diffusers import StableDiffusionPipeline

import torch

model_id = "AdamOswald1/Cyberpunk-Anime-Diffusion"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a beautiful perfect face girl in dgs illustration style, Anime fine details portrait of school girl in front of modern tokyo city landscape on the background deep bokeh, anime masterpiece, 8k, sharp high quality anime"

image = pipe(prompt).images[0]

image.save("./cyberpunk_girl.png")

```

# Online Demo

You can try the online Web UI demo built with [Gradio](https://github.com/gradio-app/gradio), or use the Colab notebook here:

*My Online Space Demo*

[DGS Diffusion Space](https://huggingface.co/spaces/DGSpitzer/DGS-Diffusion-Space)

*Finetuned Diffusion WebUI Demo by anzorq*

[Finetuned Diffusion Space](https://huggingface.co/spaces/anzorq/finetuned_diffusion)

*Colab Notebook*

[Colab notebook](https://colab.research.google.com/github/HelixNGC7293/cyberpunk-anime-diffusion/blob/main/cyberpunk_anime_diffusion.ipynb) · [GitHub repository](https://github.com/HelixNGC7293/cyberpunk-anime-diffusion)

*Buy me a coffee if you like this project ;P ♥*

[Buy Me a Coffee](https://www.buymeacoffee.com/dgspitzer)

<center><img src="https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion/resolve/main/img/1.jpg" width="512" height="512"/></center>

# **👇Model👇**

AI Model Weights available at huggingface: https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion

<center><img src="https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion/resolve/main/img/2.jpg" width="512" height="512"/></center>

# Usage

After the model is loaded, use the keyword **dgs** in your prompt, together with **illustration style**, to get even better results.

For the sampler, use **Euler A** for the best result (**DDIM** kinda works too); CFG Scale 7 and 20 steps should be fine.

**Example 1:**

```

portrait of a girl in dgs illustration style, Anime girl, female soldier working in a cyberpunk city, cleavage, ((perfect femine face)), intricate, 8k, highly detailed, shy, digital painting, intense, sharp focus

```

For a male cyber robot character, you can add **muscular male** to improve the output.

**Example 2:**

```

a photo of muscular beard soldier male in dgs illustration style, half-body, holding robot arms, strong chest

```

**Example 3 (with Stable Diffusion WebUI):**

If you are using [AUTOMATIC1111's Stable Diffusion WebUI](https://github.com/AUTOMATIC1111/stable-diffusion-webui), you can simply use this as the **prompt** with the **Euler A** sampler, CFG Scale 7, 20 steps, and 704 x 704 px output resolution:

```

an anime girl in dgs illustration style

```

And set the **negative prompt** to this to get a cleaner face:

```

out of focus, scary, creepy, evil, disfigured, missing limbs, ugly, gross, missing fingers

```

This will give you exactly the same style as the sample images above.

<center><img src="https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion/resolve/main/img/ReadmeAddon.jpg" width="256" height="353"/></center>

---

**NOTE: usage of this model implies acceptance of Stable Diffusion's [CreativeML Open RAIL-M license](LICENSE)**

---

<center><img src="https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion/resolve/main/img/4.jpg" width="700" height="700"/></center>

<center><img src="https://huggingface.co/AdamOswald1/Cyberpunk-Anime-Diffusion/resolve/main/img/6.jpg" width="700" height="700"/></center>

|

653f28afd59d38d377133b123571d7cd

|

AymanMansour/Whisper-Sudanese-Dialect-small

|

AymanMansour

|

whisper

| 37 | 8 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['ar']

|

['AymanMansour/SDN-Dialect-Dataset', 'arbml/sudanese_dialect_speech', 'arabic_speech_corpus']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,529 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-small

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5091

- Wer: 56.3216

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0157 | 13.0 | 1000 | 1.1631 | 65.9101 |

| 0.0025 | 26.0 | 2000 | 1.3416 | 58.5066 |

| 0.0009 | 39.01 | 3000 | 1.4238 | 56.6398 |

| 0.0004 | 52.01 | 4000 | 1.4800 | 56.3004 |

| 0.0002 | 65.01 | 5000 | 1.5091 | 56.3216 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

a351daaca49d54284bd64460fab11bd9

|

Phantom-Artist/phantom-diffusion-s3-the-last-8

|

Phantom-Artist

| null | 39 | 0 | null | 2 | null | false | false | false |

creativeml-openrail-m

|

['en', 'ja']

|

['Phantom-Artist/phantom-diffusion-s3-the-last-8-dataset']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['art']

| false | true | true | 7,502 | false |

The final 8 models, trained on top of [hakurei's Waifu Diffusion](https://huggingface.co/hakurei/waifu-diffusion).

Each model was trained on a notable Japanese (and Taiwanese, I think) AI artist's works using DreamBooth, with 30 of their works collected mainly from Twitter (except for sabakichi, whose dataset I collected from pixiv).

It took 1000 steps to train each model, at a learning rate of 5e-6. I have shared the notebook [here](https://huggingface.co/Phantom-Artist/phantom-diffusion/blob/main/DreamBooth_Stable_Diffusion_works_with_SD_2.ipynb) in case you want to try out additional training.

Some are good at backgrounds, while others can generate a semi-realistic style as seen in many SD 1.5 anime/waifu models (and some might be a bit overfitted).

The dataset is published [here](https://huggingface.co/datasets/Phantom-Artist/phantom-diffusion-s3-the-last-8-dataset), and the full members are listed [here](https://twitter.com/i/lists/1622444997240037377) (special thanks to Yunyalula for creating it!).

# For those who are against generative AIs

You can see that now they are the target. Take our tool. Now is the time for payback. Generate the images in their styles, and take back the power you once had.

# For those who support the development of generative AIs

Some of the AI artists, even though they take advantage of the open strategy of Stable Diffusion, now tend to hide their prompts, trying to monopolize their style (I'm not saying the AI artists I trained are as such, to be sure).

To continue protecting our values and beliefs in the open community, and to fight against their attempts to create another pre-modern-style guild, I will show you a new way.

You no longer need their prompts; just train on their images by yourself to protect the open community. It's not only legal but also ethical, as they have been taking advantage of others' trained datasets.

# For those who call themselves "phantom 40"

I saw some claiming there should be 48, and here you go. Phantom 48, or would you like to call yourselves *PTM* 48 instead? It's up to you.

# Why will they be the last?

My initial intention for this series was a social experiment to see what would happen if AI artists were targeted for personalized training.

As it became more popular than expected and the artists started calling themselves "phantom 20," I came up with a second intention: to see how they would react after I added 20 more in one day, and whether they could adapt to the sudden change. They reacted well, and I think that's why they could become notable.

All the reactions to and interpretations of my action were impressive, but since I have accomplished my goal, and since the mainstream model will probably be SD 2.1 768 (not SD 2.1 512), I will no longer add new models.

I know I couldn't add some of the artists, but no. I will not do it under the name of phantom.

It takes me about 8 hours to train, test, and upload 20 models, and it's just unsustainable to continue doing it every day.

**From now on, anyone who wishes to add more is the next phantom. Train anyone you wish to by yourself.**

# trained artist list

- atsuwo_AI
  - recommended pos: multicolored hair, cg
- fladdict
  - recommended pos: oil painting/ancient relief/impressionist impasto oil painting (maybe more)
  - possible neg: monkey
- Hifumi_AID
  - recommended pos: dark purple hair, emerald eyes
- mayonaka_rr
  - recommended pos: cg
  - possible pos: dynamic posing, bikini, ponytail
- o81morimori
  - possible pos: cg, in a messy apartment room with objects on the floor and the bed
- sabakichi
  - possible pos 1: merging underwater, limited pallete, melting underwater, unstable outlines
  - possible pos 2: rough sketch, limited pallete, ((unstable outlines)), monotone gradation, dynamic posing
- teftef
  - possible pos: light skyblue hair, bun, retropunk gears of a factory
- violet_fizz
  - recommended pos: beautiful face, grown up face, long eyes, expressionless
  - possible pos: expressionless

# samples

The basic prompt is as follows.

However, to show the potential of these models as fully as possible, many of them use additional positive tags (such as "in the style of") to get the results below (yes, use ``aitop (ARTIST)_style`` to get the fine-tuned result).

Many work better with the additional prompt ``beautiful face``. Generally speaking, prompting with words close to the trained dataset will give you a better result.

```

POS: masterpiece, best quality, 1girl, aitop (ARTIST)_style

NEG: nsfw, worst quality, low quality, medium quality, deleted, lowres, bad anatomy, bad hands, text, error, missing fingers, extra digits, fewer digits, cropped, jpeg artifacts, signature, watermark, username, blurry, simple background

```

## atsuwo_AI

## fladdict

## Hifumi_AID

## mayonaka_rr

## o81morimori

## sabakichi

## teftef

## violet_fizz

|

aef39d409ac19258ac381a0065672085

|

mattchurgin/xls-r-eng

|

mattchurgin

|

wav2vec2

| 19 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['ab']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'mozilla-foundation/common_voice_7_0', 'generated_from_trainer']

| true | true | true | 1,115 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

#

This model is a fine-tuned version of [patrickvonplaten/wav2vec2_tiny_random_robust](https://huggingface.co/patrickvonplaten/wav2vec2_tiny_random_robust) on the MOZILLA-FOUNDATION/COMMON_VOICE_7_0 - AB dataset.

It achieves the following results on the evaluation set:

- Loss: inf

- Wer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.1

- Datasets 1.18.1.dev0

- Tokenizers 0.11.0

|

ed197338e0d20785015ecee56c02bdc4

|

zhiguoxu/chinese-roberta-wwm-ext-finetuned2

|

zhiguoxu

|

bert

| 10 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,615 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# chinese-roberta-wwm-ext-finetuned2

This model is a fine-tuned version of [hfl/chinese-roberta-wwm-ext](https://huggingface.co/hfl/chinese-roberta-wwm-ext) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1448

- Accuracy: 1.0

- F1: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 1.4081 | 1.0 | 3 | 0.9711 | 0.7273 | 0.6573 |

| 0.9516 | 2.0 | 6 | 0.8174 | 0.8182 | 0.8160 |

| 0.8945 | 3.0 | 9 | 0.6617 | 0.9091 | 0.9124 |

| 0.7042 | 4.0 | 12 | 0.5308 | 1.0 | 1.0 |

| 0.6641 | 5.0 | 15 | 0.4649 | 1.0 | 1.0 |

| 0.5731 | 6.0 | 18 | 0.4046 | 1.0 | 1.0 |

| 0.5132 | 7.0 | 21 | 0.3527 | 1.0 | 1.0 |

| 0.3999 | 8.0 | 24 | 0.3070 | 1.0 | 1.0 |

| 0.4198 | 9.0 | 27 | 0.2673 | 1.0 | 1.0 |

| 0.3677 | 10.0 | 30 | 0.2378 | 1.0 | 1.0 |

| 0.3545 | 11.0 | 33 | 0.2168 | 1.0 | 1.0 |

| 0.3237 | 12.0 | 36 | 0.1980 | 1.0 | 1.0 |

| 0.3122 | 13.0 | 39 | 0.1860 | 1.0 | 1.0 |

| 0.2802 | 14.0 | 42 | 0.1759 | 1.0 | 1.0 |

| 0.2552 | 15.0 | 45 | 0.1671 | 1.0 | 1.0 |

| 0.2475 | 16.0 | 48 | 0.1598 | 1.0 | 1.0 |

| 0.2259 | 17.0 | 51 | 0.1541 | 1.0 | 1.0 |

| 0.201 | 18.0 | 54 | 0.1492 | 1.0 | 1.0 |

| 0.2083 | 19.0 | 57 | 0.1461 | 1.0 | 1.0 |

| 0.2281 | 20.0 | 60 | 0.1448 | 1.0 | 1.0 |
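The accuracy values in the table move in coarse steps (0.7273, 0.8182, 0.9091, 1.0), which hints at a very small evaluation set. The sketch below is an illustration only, not part of the original training script: it searches for the smallest set size whose achievable accuracies match the reported values after rounding to four decimals. The function name `smallest_eval_size` is hypothetical.

```python
def smallest_eval_size(accuracies, max_size=1000, decimals=4):
    """Smallest n such that every reported accuracy equals k/n after rounding."""
    for n in range(1, max_size + 1):
        if all(
            any(round(k / n, decimals) == acc for k in range(n + 1))
            for acc in accuracies
        ):
            return n
    return None


# Accuracies reported for epochs 1 to 3 in the table above
print(smallest_eval_size([0.7273, 0.8182, 0.9091]))  # → 11
```

The values are consistent with 8, 9, and 10 correct predictions out of 11 (multiples of 11 would fit as well), so the final accuracy and F1 of 1.0 are probably best read with that sample size in mind.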

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0+cu102

- Datasets 2.1.0

- Tokenizers 0.12.1

|

51abb096912cefe4883a68c072bbb250

|

elRivx/100Memories

|

elRivx

| null | 3 | 0 | null | 4 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['stable-diffusion', 'text-to-image']

| false | true | true | 1,571 | false |

# 100Memories

This is my new custom Stable Diffusion 1.5 model, which produces images with a retro look.

The magic word is: 100Memories

If you enjoy my work, please consider supporting me:

[](https://www.buymeacoffee.com/elrivx)

Examples:

<img src=https://imgur.com/xuCqo5l.png width=30% height=30%>

<img src=https://imgur.com/7Xdy4Jv.png width=30% height=30%>

<img src=https://imgur.com/c0JccbW.png width=30% height=30%>

<img src=https://imgur.com/7Qrw48p.png width=30% height=30%>

<img src=https://imgur.com/2bvukQY.png width=30% height=30%>

<img src=https://imgur.com/NFkHsG8.png width=30% height=30%>

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

4afb1f25df47fa99f79a94e59b533d86

|

wyu1/GenRead-3B-WebQ-MergeDPR

|

wyu1

|

t5

| 5 | 1 |

transformers

| 0 | null | true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 730 | false |

# GenRead (MergeDPR): FiD model trained on WebQ

-- This is the model checkpoint of GenRead [2], based on the T5-3B and trained on the WebQ dataset [1].

-- Hyperparameters: 8 x 80GB A100 GPUs; batch size 16; AdamW; LR 5e-5; best dev at 18000 steps.

References:

[1] Semantic parsing on freebase from question-answer pairs. EMNLP 2013.

[2] Generate rather than Retrieve: Large Language Models are Strong Context Generators. arXiv 2022

## Model performance

We evaluate it on the WebQ dataset; the EM score is 56.25.
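For readers unfamiliar with the EM (exact match) metric, here is a minimal sketch of how such a score is commonly computed. It assumes SQuAD-style answer normalization (lowercasing, removing punctuation and English articles); the actual GenRead evaluation script may differ in detail, and all function names here are hypothetical.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, references: list) -> bool:
    """True if the normalized prediction equals any normalized reference."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(ref) for ref in references)


def em_score(predictions: list, references: list) -> float:
    """Percentage of questions whose prediction exactly matches a reference."""
    hits = sum(exact_match(p, r) for p, r in zip(predictions, references))
    return 100.0 * hits / len(predictions)


# Toy data for illustration only (not taken from WebQ)
preds = ["The Eiffel Tower", "1969", "Paris, France"]
refs = [["Eiffel Tower"], ["1969", "July 1969"], ["Paris"]]
print(em_score(preds, refs))
```

In this toy example two of the three predictions match after normalization, so the score is roughly 66.7.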


---

license: cc-by-4.0

---


|

c073d5ea4450283d8fa118ce561646ec

|

chrisjay/fonxlsr

|

chrisjay

|

wav2vec2

| 10,414 | 6 |

transformers

| 2 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['fon']

|

['fon_dataset']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week', 'hf-asr-leaderboard']

| true | true | true | 4,687 | false |

# Wav2Vec2-Large-XLSR-53-Fon

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on [Fon (or Fongbe)](https://en.wikipedia.org/wiki/Fon_language) using the [Fon Dataset](https://github.com/laleye/pyFongbe/tree/master/data).

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import os
import re

import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

# Load the test set from the JSON files saved under the local "test/" folder
for root, dirs, files in os.walk("test/"):
    test_dataset = load_dataset("json", data_files=[os.path.join(root, i) for i in files], split="train")

# Remove unnecessary characters
chars_to_ignore_regex = '[\\,\\?\\.\\!\\-\\;\\:\\"\\“\\%\\‘\\”]'

def remove_special_characters(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower() + " "
    return batch

test_dataset = test_dataset.map(remove_special_characters)

processor = Wav2Vec2Processor.from_pretrained("chrisjay/wav2vec2-large-xlsr-53-fon")
model = Wav2Vec2ForCTC.from_pretrained("chrisjay/wav2vec2-large-xlsr-53-fon")

# No need for resampling because the audio dataset is already at 16kHz
# resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = speech_array.squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)
inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

# Run the model without tracking gradients and take the most likely token per frame
with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset["sentence"][:2])

```
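The usage snippet above takes the `argmax` over the logits and hands the ids to `processor.batch_decode`, which performs greedy CTC decoding. As a rough illustration of what that step does, here is a minimal sketch in plain Python. The toy vocabulary and the function name `ctc_greedy_decode` are assumptions for the example; the real vocabulary and blank token come from the model's tokenizer.

```python
def ctc_greedy_decode(frame_ids, id_to_char, blank_id=0):
    """Collapse repeated ids, then drop blanks (greedy CTC decoding)."""
    chars = []
    previous = None
    for idx in frame_ids:
        # Emit a character only when the id changes and is not the blank
        if idx != previous and idx != blank_id:
            chars.append(id_to_char[idx])
        previous = idx
    return "".join(chars)


# Hypothetical toy vocabulary; id 0 plays the role of the CTC blank
vocab = {0: "<pad>", 1: "a", 2: "b", 3: " "}
frames = [1, 1, 0, 1, 2, 2, 0, 3, 3, 1]
print(repr(ctc_greedy_decode(frames, vocab)))  # → 'aab a'
```

The blank between the two runs of `1` is what allows a doubled letter to survive the collapsing step.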

## Evaluation

The model can be evaluated as follows on our unique Fon test data.

```python

import os
import re

import torch
import torchaudio
from datasets import load_dataset, load_metric
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

# Load the test set from the JSON files saved under the local "test/" folder
for root, dirs, files in os.walk("test/"):
    test_dataset = load_dataset("json", data_files=[os.path.join(root, i) for i in files], split="train")

chars_to_ignore_regex = '[\\,\\?\\.\\!\\-\\;\\:\\"\\“\\%\\‘\\”]'

def remove_special_characters(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower() + " "
    return batch

test_dataset = test_dataset.map(remove_special_characters)

# Note: load_metric is deprecated in newer `datasets` releases;
# the separate `evaluate` library (evaluate.load("wer")) replaces it there.
wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("chrisjay/wav2vec2-large-xlsr-53-fon")
model = Wav2Vec2ForCTC.from_pretrained("chrisjay/wav2vec2-large-xlsr-53-fon")
model.to("cuda")

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = speech_array[0].numpy()
    batch["sampling_rate"] = sampling_rate
    batch["target_text"] = batch["sentence"]
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Evaluation on the test dataset
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 14.97 %
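For context on the number above: word error rate is the count of word-level substitutions, insertions, and deletions divided by the number of reference words. The following is a minimal pure-Python sketch of a corpus-level WER, assuming whitespace tokenization; it is meant as an illustration and is not the implementation behind the `wer` metric used in the script. The function name `word_error_rate` is hypothetical.

```python
def word_error_rate(references, predictions):
    """Corpus-level WER: total word edits divided by total reference words."""
    total_edits = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        ref_words, hyp_words = ref.split(), hyp.split()
        # Levenshtein distance over words, keeping a single row of the table
        row = list(range(len(hyp_words) + 1))
        for i, ref_word in enumerate(ref_words, start=1):
            prev_diag, row[0] = row[0], i
            for j, hyp_word in enumerate(hyp_words, start=1):
                cost = 0 if ref_word == hyp_word else 1
                prev_diag, row[j] = row[j], min(
                    row[j] + 1,        # deletion of a reference word
                    row[j - 1] + 1,    # insertion of a hypothesis word
                    prev_diag + cost,  # substitution or match
                )
        total_edits += row[-1]
        total_words += len(ref_words)
    return total_edits / total_words


# Toy sentences for illustration only
refs = ["the cat sat", "hello world"]
hyps = ["the cat sit", "hello"]
print(100 * word_error_rate(refs, hyps))
```

Here one substitution and one deletion over five reference words give a WER of about 40%.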

## Training

The [Fon dataset](https://github.com/laleye/pyFongbe/tree/master/data) was split into `train`(8235 samples), `validation`(1107 samples), and `test`(1061 samples).

The script used for training can be found [here](https://colab.research.google.com/drive/11l6qhJCYnPTG1TQZ8f3EvKB9z12TQi4g?usp=sharing)

# Collaborators on this project

- Chris C. Emezue ([Twitter](https://twitter.com/ChrisEmezue))|(chris.emezue@gmail.com)

- Bonaventure F.P. Dossou (HuggingFace Username: [bonadossou](https://huggingface.co/bonadossou))|([Twitter](https://twitter.com/bonadossou))|(femipancrace.dossou@gmail.com)

## This is a joint project continuing our research on [OkwuGbé: End-to-End Speech Recognition for Fon and Igbo](https://arxiv.org/abs/2103.07762)

|

0402a110bcb8ee847af70a4c746a4d6f

|

jonatasgrosman/exp_w2v2t_it_vp-it_s965

|

jonatasgrosman

|

wav2vec2

| 10 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['it']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'it']

| false | true | true | 469 | false |

# exp_w2v2t_it_vp-it_s965

Fine-tuned [facebook/wav2vec2-large-it-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-it-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (it)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
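A quick way to check the 16kHz requirement before running inference is to read the rate from the file header. The sketch below assumes uncompressed PCM WAV input and uses only the standard library; the helper names are hypothetical, and other formats would need a dedicated audio library.

```python
import wave

TARGET_RATE = 16_000  # sampling rate the model card asks for


def wav_sample_rate(path):
    """Return the sampling rate stored in a PCM WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(path, target_rate=TARGET_RATE):
    """True if the file must be resampled before it is passed to the model."""
    return wav_sample_rate(path) != target_rate
```

Files for which `needs_resampling` returns `True` should be resampled to 16kHz first.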

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

0fb4487e2c63a2fac483daeb4c371a9f

|

jojoUla/bert-large-uncased-finetuned-DA-Zero-shot

|

jojoUla

|

bert

| 15 | 5 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,671 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-large-uncased-finetuned-DA-Zero-shot

This model is a fine-tuned version of [bert-large-uncased](https://huggingface.co/bert-large-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1318

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.1282 | 1.0 | 435 | 1.3862 |

| 1.1307 | 2.0 | 870 | 1.3362 |

| 1.2243 | 3.0 | 1305 | 1.2791 |

| 1.274 | 4.0 | 1740 | 1.2143 |

| 1.2296 | 5.0 | 2175 | 1.1799 |

| 1.1773 | 6.0 | 2610 | 1.1550 |

| 1.1519 | 7.0 | 3045 | 1.1295 |

| 1.1406 | 8.0 | 3480 | 1.1064 |

| 1.114 | 9.0 | 3915 | 1.1303 |

| 1.1058 | 10.0 | 4350 | 1.1214 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

|

f7d58caf4962fd50deb1cc768c9f14cb

|

philosucker/xlm-roberta-base-finetuned-panx-de

|

philosucker

|

xlm-roberta

| 16 | 3 |

transformers

| 0 |

token-classification

| true | false | false |

mit

| null |

['xtreme']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,317 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1906

- F1: 0.8687

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2884 | 1.0 | 3145 | 0.2390 | 0.8242 |

| 0.1639 | 2.0 | 6290 | 0.1944 | 0.8488 |

| 0.0952 | 3.0 | 9435 | 0.1906 | 0.8687 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

de7f87f2da1e1cae77d3e9f4c2d4c414

|

thesunshine36/FineTune_Vit5_LR0_00001_time3

|

thesunshine36

|

t5

| 5 | 10 |

transformers

| 0 |

text2text-generation

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,556 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# FineTune_Vit5_LR0_00001_time3

This model is a fine-tuned version of [thesunshine36/FineTune_Vit5_LR0_00001_time2](https://huggingface.co/thesunshine36/FineTune_Vit5_LR0_00001_time2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.6297

- Validation Loss: 0.5655

- Train Rouge1: 52.5683

- Train Rouge2: 31.3753

- Train Rougel: 44.4344

- Train Rougelsum: 44.4737

- Train Gen Len: 13.6985

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 1e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Rouge1 | Train Rouge2 | Train Rougel | Train Rougelsum | Train Gen Len | Epoch |

|:----------:|:---------------:|:------------:|:------------:|:------------:|:---------------:|:-------------:|:-----:|

| 0.6297 | 0.5655 | 52.5683 | 31.3753 | 44.4344 | 44.4737 | 13.6985 | 0 |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

cac33e449529d8e96c899af5c3242eb6

|

sd-concepts-library/vcr-classique

|

sd-concepts-library

| null | 17 | 0 | null | 2 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,870 | false |

### vcr classique on Stable Diffusion

This is the `<vcr_c>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

3766df8e7048b28bdea0bd367b9015e2

|

Helsinki-NLP/opus-mt-pon-en

|

Helsinki-NLP

|

marian

| 10 | 11 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 776 | false |

### opus-mt-pon-en

* source languages: pon

* target languages: en

* OPUS readme: [pon-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/pon-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/pon-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/pon-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/pon-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.pon.en | 34.1 | 0.489 |

|

c6626f48fa07b9028475041e17acf186

|

DeepaKrish/roberta-base-finetuned-squad

|

DeepaKrish

|

roberta

| 13 | 5 |

transformers

| 0 |

question-answering

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,192 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-squad

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0491

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 27 | 0.1224 |

| No log | 2.0 | 54 | 0.0491 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.9.0

- Datasets 2.5.1

- Tokenizers 0.13.2

|

d1323a4654065885f0ec683ad41c9598

|

julien-c/mini_an4_asr_train_raw_bpe_valid

|

julien-c

| null | 10 | 2 |

espnet

| 0 |

automatic-speech-recognition

| false | false | false |

cc-by-4.0

|

['en']

|

['ljspeech']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['espnet', 'audio', 'automatic-speech-recognition']

| false | true | true | 1,847 | false |

## Example ESPnet2 ASR model

### `kamo-naoyuki/mini_an4_asr_train_raw_bpe_valid.acc.best`

♻️ Imported from https://zenodo.org/record/3957940#.X90XNelKjkM

This model was trained by kamo-naoyuki using mini_an4 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```python

# coming soon

```

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

@inproceedings{hayashi2020espnet,

title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},

author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},

booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={7654--7658},

year={2020},

organization={IEEE}

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Enrique Yalta Soplin and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

99cb63415e6074fa07a5efb024253a7c

|

jinghan/roberta-base-finetuned-wnli

|

jinghan

|

roberta

| 14 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null |

['glue']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,442 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-wnli

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6880

- Accuracy: 0.5634

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 40 | 0.6880 | 0.5634 |

| No log | 2.0 | 80 | 0.6851 | 0.5634 |

| No log | 3.0 | 120 | 0.6961 | 0.4366 |

| No log | 4.0 | 160 | 0.6906 | 0.5634 |

| No log | 5.0 | 200 | 0.6891 | 0.5634 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

a58b05f7d53de8094a5907c65ae63dca

|

vasista22/whisper-gujarati-medium

|

vasista22

|

whisper

| 12 | 1 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['gu']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['whisper-event']

| true | true | true | 1,296 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Gujarati Medium

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on Gujarati data drawn from multiple publicly available ASR corpora.

It has been fine-tuned as a part of the Whisper fine-tuning sprint.

## Training and evaluation data

Training Data: ULCA ASR Corpus, OpenSLR, Microsoft Research Telugu Corpus (Train+Dev), Google/Fleurs Train+Dev set.

Evaluation Data: Google/Fleurs Test set, Microsoft Research Telugu Corpus Test set.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 24

- eval_batch_size: 48

- seed: 22

- optimizer: adamw_bnb_8bit

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 4000

- training_steps: 21240

- mixed_precision_training: True
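To make the schedule-related hyperparameters concrete, here is a minimal sketch of a linear schedule with warmup, using the values listed above as defaults. It assumes the common behaviour of such schedules (linear ramp from zero to the peak rate, then linear decay to zero at the last step); the function name `linear_schedule_lr` is hypothetical and the actual training code may differ.

```python
def linear_schedule_lr(step, base_lr=1e-5, warmup_steps=4000, total_steps=21240):
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Warmup phase: ramp up proportionally to the step count
        return base_lr * step / warmup_steps
    # Decay phase: shrink proportionally to the remaining steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 2000, 4000, 12620, 21240):
    print(step, linear_schedule_lr(step))
```

Under these assumptions the rate peaks at 1e-05 at step 4000 and is back to half of that at step 12620, midway through the decay phase.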

## Acknowledgement

This work was done at Speech Lab, IITM.

The compute resources for this work were funded by "Bhashini: National Language translation Mission" project of the Ministry of Electronics and Information Technology (MeitY), Government of India.

|

01eda95326923583652aa1363d548da1

|

creat89/NER_FEDA_Latin1

|

creat89

|

bert

| 7 | 1 |

transformers

| 0 | null | true | false | false |

mit

|

['multilingual', 'cs', 'pl', 'sl', 'fi']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['labse', 'ner']

| false | true | true | 832 | false |

This is a multilingual NER system trained using a Frustratingly Easy Domain Adaptation architecture. It is based on LaBSE and supports different tagsets all using IOBES formats:

1. Wikiann (LOC, PER, ORG)

2. SlavNER 19/21 (EVT, LOC, ORG, PER, PRO)

3. SlavNER 17 (LOC, MISC, ORG, PER)

4. SSJ500k (LOC, MISC, ORG, PER)

5. KPWr (EVT, LOC, ORG, PER, PRO)

6. CNEC (LOC, ORG, MEDIA, ART, PER, TIME)

7. Turku (DATE, EVT, LOC, ORG, PER, PRO, TIME)

PER: person, LOC: location, ORG: organization, EVT: event, PRO: product, MISC: Miscellaneous, MEDIA: media, ART: Artifact, TIME: time, DATE: date

You can select the tagset to use in the output by configuring the model.
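Since every tagset is emitted in IOBES format, downstream code typically has to turn per-token tags into entity spans. The following is a minimal sketch of that conversion, assuming tags of the form `B-PER`, `I-PER`, `E-PER`, `S-LOC`, and `O`; the function name `iobes_to_spans` is hypothetical and is not part of the model's code.

```python
def iobes_to_spans(tags):
    """Convert a list of IOBES tags into (label, start, end) spans, end inclusive."""
    spans = []
    start, label = None, None
    for i, tag in enumerate(tags):
        prefix, _, tag_label = tag.partition("-")
        if prefix == "S":
            # Single-token entity
            spans.append((tag_label, i, i))
            start, label = None, None
        elif prefix == "B":
            start, label = i, tag_label
        elif prefix == "E" and start is not None and tag_label == label:
            spans.append((label, start, i))
            start, label = None, None
        elif prefix == "I" and start is not None and tag_label == label:
            continue
        else:
            # "O" or an inconsistent tag discards any span that is still open
            start, label = None, None
    return spans


tags = ["B-PER", "E-PER", "O", "S-LOC", "B-ORG", "I-ORG", "E-ORG"]
print(iobes_to_spans(tags))
# → [('PER', 0, 1), ('LOC', 3, 3), ('ORG', 4, 6)]
```

Dropping malformed spans rather than repairing them is a design choice of this sketch; a more lenient decoder could close an open span at the last consistent token instead.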

More information about the model can be found in the paper (https://aclanthology.org/2021.bsnlp-1.12.pdf) and GitHub repository (https://github.com/EMBEDDIA/NER_FEDA).

|

974a5af60cd29e63b76dc5715499de62

|

Zekunli/flan-t5-large-da-multiwoz_1000

|

Zekunli

|

t5

| 10 | 0 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,561 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-t5-large-da-multiwoz_1000

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3538

- Accuracy: 41.3747

- Num: 3689

- Gen Len: 15.5115

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 24

- seed: 1799

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Num | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:----:|:-------:|

| 1.3315 | 0.24 | 200 | 0.5697 | 25.9543 | 3689 | 14.556 |

| 0.6418 | 0.48 | 400 | 0.4645 | 30.0503 | 3689 | 14.9314 |

| 0.5433 | 0.72 | 600 | 0.4307 | 31.9506 | 3689 | 16.1515 |

| 0.4909 | 0.95 | 800 | 0.4177 | 34.7593 | 3689 | 15.418 |

| 0.4769 | 1.19 | 1000 | 0.3996 | 35.0943 | 3689 | 14.9607 |

| 0.4491 | 1.43 | 1200 | 0.3881 | 36.2741 | 3689 | 15.543 |

| 0.4531 | 1.67 | 1400 | 0.3820 | 35.7704 | 3689 | 14.1583 |

| 0.4322 | 1.91 | 1600 | 0.3726 | 37.4853 | 3689 | 15.961 |

| 0.4188 | 2.15 | 1800 | 0.3699 | 38.4117 | 3689 | 15.0773 |

| 0.4085 | 2.38 | 2000 | 0.3674 | 38.5353 | 3689 | 15.4012 |

| 0.4063 | 2.62 | 2200 | 0.3606 | 40.0046 | 3689 | 15.3546 |

| 0.3977 | 2.86 | 2400 | 0.3570 | 40.6543 | 3689 | 15.704 |

| 0.3992 | 3.1 | 2600 | 0.3549 | 40.4284 | 3689 | 15.7446 |

| 0.3828 | 3.34 | 2800 | 0.3538 | 41.3747 | 3689 | 15.5115 |

| 0.3792 | 3.58 | 3000 | 0.3539 | 39.8513 | 3689 | 14.7951 |

| 0.3914 | 3.81 | 3200 | 0.3498 | 41.0388 | 3689 | 15.4153 |

| 0.3707 | 4.05 | 3400 | 0.3498 | 40.9596 | 3689 | 16.3136 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.0+cu111

- Datasets 2.5.1

- Tokenizers 0.12.1

|

bd8e04b35ddc594669257de7e325a059

|

Babivill/leidirocha

|

Babivill

| null | 31 | 3 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['text-to-image']

| false | true | true | 1,606 | false |

### leidirocha Dreambooth model trained by Babivill with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v1-5 base model

You can run your new concept via `diffusers` using the [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

leidirocha (use that in your prompt)

|

1965defae343d5d77a9329b3aa296593

|

north/nynorsk_North_large

|

north

|

t5

| 15 | 5 |

transformers

| 0 |

translation

| true | false | true |

apache-2.0

|

['nn', 'no', 'nb']

|

['NbAiLab/balanced_bokmaal_nynorsk']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 500 | false |

# Model Card for Model ID

This model is a finetuned version of [north/t5_large_NCC_modern](https://huggingface.co/north/t5_large_NCC_modern).

| | Size |Model|BLEU|

|:------------:|:------------:|:------------:|:------------:|

|Small |_60M_|[🤗](https://huggingface.co/north/nynorsk_North_small)|93.44|

|Base |_220M_|[🤗](https://huggingface.co/north/nynorsk_North_base)|93.79|

|**Large** |**_770M_**|✔|**93.99**|

# Model Details

Please see the model card for the base model for more information.

|

c06e4eddff2aa7848000d63b25b932fa

|

w11wo/sundanese-roberta-base-emotion-classifier

|

w11wo

|

roberta

| 10 | 4 |

transformers

| 0 |

text-classification

| true | true | false |

mit

|

['su']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['sundanese-roberta-base-emotion-classifier']

| false | true | true | 4,254 | false |

## Sundanese RoBERTa Base Emotion Classifier

Sundanese RoBERTa Base Emotion Classifier is an emotion text-classification model based on the [RoBERTa](https://arxiv.org/abs/1907.11692) model. It starts from the pre-trained [Sundanese RoBERTa Base](https://hf.co/w11wo/sundanese-roberta-base) model, which was then fine-tuned on the [Sundanese Twitter dataset](https://github.com/virgantara/sundanese-twitter-dataset), consisting of Sundanese tweets.

10% of the dataset is kept for evaluation purposes. After training, the model achieved an evaluation accuracy of 98.41% and F1-macro of 98.43%.

Hugging Face's `Trainer` class from the [Transformers](https://huggingface.co/transformers) library was used to train the model. PyTorch was used as the backend framework during training, but the model remains compatible with other frameworks.

## Model

| Model | #params | Arch. | Training/Validation data (text) |

| ------------------------------------------- | ------- | ------------ | ------------------------------- |

| `sundanese-roberta-base-emotion-classifier` | 124M | RoBERTa Base | Sundanese Twitter dataset |

## Evaluation Results

The model was trained for 10 epochs and the best model was loaded at the end.

| Epoch | Training Loss | Validation Loss | Accuracy | F1 | Precision | Recall |

| ----- | ------------- | --------------- | -------- | -------- | --------- | -------- |

| 1 | 0.801800 | 0.293695 | 0.900794 | 0.899048 | 0.903466 | 0.900406 |

| 2 | 0.208700 | 0.185291 | 0.936508 | 0.935520 | 0.939460 | 0.935540 |

| 3 | 0.089700 | 0.150287 | 0.956349 | 0.956569 | 0.956500 | 0.958612 |

| 4 | 0.025600 | 0.130889 | 0.972222 | 0.972865 | 0.973029 | 0.973184 |

| 5 | 0.002200 | 0.100031 | 0.980159 | 0.980430 | 0.980430 | 0.980430 |

| 6 | 0.001300 | 0.104971 | 0.980159 | 0.980430 | 0.980430 | 0.980430 |

| 7 | 0.000600 | 0.107744 | 0.980159 | 0.980174 | 0.980814 | 0.979743 |

| 8 | 0.000500 | 0.102327 | 0.980159 | 0.980171 | 0.979970 | 0.980430 |

| 9 | 0.000500 | 0.101935 | 0.984127 | 0.984376 | 0.984073 | 0.984741 |

| 10 | 0.000400 | 0.105965 | 0.984127 | 0.984142 | 0.983720 | 0.984741 |
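
The card does not say which metric decided the "best" model. As a hedged sketch, the snippet below copies the epoch, validation loss, accuracy and F1 columns from the table above and shows how the selected epoch depends on that choice. The two criteria and the helper names `best_by_accuracy` and `best_by_loss` are assumptions for illustration, not the author's actual selection logic.

```python
# (epoch, validation_loss, accuracy, f1) copied from the evaluation table
ROWS = [
    (1, 0.293695, 0.900794, 0.899048),
    (2, 0.185291, 0.936508, 0.935520),
    (3, 0.150287, 0.956349, 0.956569),
    (4, 0.130889, 0.972222, 0.972865),
    (5, 0.100031, 0.980159, 0.980430),
    (6, 0.104971, 0.980159, 0.980430),
    (7, 0.107744, 0.980159, 0.980174),
    (8, 0.102327, 0.980159, 0.980171),
    (9, 0.101935, 0.984127, 0.984376),
    (10, 0.105965, 0.984127, 0.984142),
]


def best_by_accuracy(rows):
    """Epoch with the highest accuracy; ties broken by higher F1 (assumed criterion)."""
    return max(rows, key=lambda r: (r[2], r[3]))[0]


def best_by_loss(rows):
    """Epoch with the lowest validation loss (alternative assumed criterion)."""
    return min(rows, key=lambda r: r[1])[0]


print("best by accuracy/F1:", best_by_accuracy(ROWS))
print("best by validation loss:", best_by_loss(ROWS))
```

With the values in the table, the two criteria select different epochs, so the metric used for model selection matters when reproducing the result.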

## How to Use

### As Text Classifier

```python

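from transformers import pipeline

# Use the full Hub repository id (author/model) so the pipeline can locate the model.
pretrained_name = "w11wo/sundanese-roberta-base-emotion-classifier"

nlp = pipeline(
    "sentiment-analysis",
    model=pretrained_name,
    tokenizer=pretrained_name,
)

# Classify a single Sundanese sentence.
nlp("Wah, éta gélo, keren pisan!")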

```

## Disclaimer

Do consider the biases which come from both the pre-trained RoBERTa model and the Sundanese Twitter dataset that may be carried over into the results of this model.

## Author

Sundanese RoBERTa Base Emotion Classifier was trained and evaluated by [Wilson Wongso](https://w11wo.github.io/). All computation and development were done on Google Colaboratory using their free GPU access.

## Citation Information

```bib

@article{rs-907893,

author = {Wongso, Wilson

and Lucky, Henry

and Suhartono, Derwin},

journal = {Journal of Big Data},

year = {2022},

month = {Feb},

day = {26},

abstract = {The Sundanese language has over 32 million speakers worldwide, but the language has reaped little to no benefits from the recent advances in natural language understanding. Like other low-resource languages, the only alternative is to fine-tune existing multilingual models. In this paper, we pre-trained three monolingual Transformer-based language models on Sundanese data. When evaluated on a downstream text classification task, we found that most of our monolingual models outperformed larger multilingual models despite the smaller overall pre-training data. In the subsequent analyses, our models benefited strongly from the Sundanese pre-training corpus size and do not exhibit socially biased behavior. We released our models for other researchers and practitioners to use.},

issn = {2693-5015},

doi = {10.21203/rs.3.rs-907893/v1},

url = {https://doi.org/10.21203/rs.3.rs-907893/v1}

}

```

|

b7d72b0598939b58798ca013ce675712

|

IIIT-L/muril-base-cased-finetuned-non-code-mixed-DS

|

IIIT-L

|

bert

| 10 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,421 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# muril-base-cased-finetuned-non-code-mixed-DS

This model is a fine-tuned version of [google/muril-base-cased](https://huggingface.co/google/muril-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2867

- Accuracy: 0.6214