repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

mediabiasgroup/DA-RoBERTa-BABE

|

mediabiasgroup

|

roberta

| 9 | 11 |

transformers

| 0 |

text-classification

| true | false | false |

afl-3.0

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 692 | false |

# Please cite as

```

@InProceedings{Spinde2021f,

title = "Neural Media Bias Detection Using Distant Supervision With {BABE} - Bias Annotations By Experts",

author = "Spinde, Timo and

Plank, Manuel and

Krieger, Jan-David and

Ruas, Terry and

Gipp, Bela and

Aizawa, Akiko",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2021",

month = nov,

year = "2021",

address = "Punta Cana, Dominican Republic",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.findings-emnlp.101",

doi = "10.18653/v1/2021.findings-emnlp.101",

pages = "1166--1177",

}

```

|

83f5ab1ffb9b32b1c6d8fda3609d0fd7

|

kornwtp/ConGen-BERT-Small

|

kornwtp

|

bert

| 8 | 2 |

sentence-transformers

| 0 |

sentence-similarity

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['sentence-transformers', 'feature-extraction', 'sentence-similarity', 'transformers']

| false | true | true | 1,432 | false |

# kornwtp/ConGen-BERT-Small

This is a [ConGen](https://github.com/KornWtp/ConGen) model: it maps sentences to a 512-dimensional dense vector space and can be used for tasks like semantic search.

## Usage

Using this model becomes easy when you have [ConGen](https://github.com/KornWtp/ConGen) installed:

```

pip install -U git+https://github.com/KornWtp/ConGen.git

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('kornwtp/ConGen-BERT-Small')

embeddings = model.encode(sentences)

print(embeddings)

```
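For semantic search, the vectors returned by `model.encode` are typically compared with cosine similarity. The following is a minimal sketch of that ranking step, not part of ConGen itself: the helper names `cosine_similarity` and `rank_by_similarity` are hypothetical, and the toy 3-dimensional vectors stand in for real 512-dimensional embeddings so the example runs without downloading the model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, corpus_vecs):
    """Return corpus indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, v) for v in corpus_vecs]
    return sorted(range(len(corpus_vecs)), key=lambda i: scores[i], reverse=True)


# Toy vectors standing in for sentence embeddings (illustrative values only).
query = [1.0, 0.0, 0.0]
corpus = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [-1.0, 0.0, 0.0]]
ranking = rank_by_similarity(query, corpus)
print(ranking)
```

With real embeddings, `query` would be one row of `model.encode([...])` and `corpus` the rows for the candidate sentences.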

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [Semantic Textual Similarity](https://github.com/KornWtp/ConGen#main-results---sts)

## Citing & Authors

```bibtex

@inproceedings{limkonchotiwat-etal-2022-congen,

title = "{ConGen}: Unsupervised Control and Generalization Distillation For Sentence Representation",

author = "Limkonchotiwat, Peerat and

Ponwitayarat, Wuttikorn and

Lowphansirikul, Lalita and

Udomcharoenchaikit, Can and

Chuangsuwanich, Ekapol and

Nutanong, Sarana",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2022",

year = "2022",

publisher = "Association for Computational Linguistics",

}

```

|

123dcc54441f40da08e09e7484b4df1f

|

FritzOS/TEdetection_distiBERT_mLM_V2_shuffleplus3

|

FritzOS

|

distilbert

| 4 | 2 |

transformers

| 0 |

fill-mask

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,366 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# TEdetection_distiBERT_mLM_V2_shuffleplus3

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 5e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5e-05, 'decay_steps': 208018, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
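The optimizer entry above nests a `PolynomialDecay` schedule inside a `WarmUp` wrapper. As a rough sketch of what that configuration implies, the learning rate at a given step can be reproduced in plain Python. This assumes the usual semantics (warmup scales the initial rate by `(step / warmup_steps) ** power`, after which the decay schedule is evaluated at `step - warmup_steps`); the function names are hypothetical and the constants are copied from the configuration.

```python
# Values copied from the serialized optimizer configuration above.
INITIAL_LR = 5e-05
END_LR = 0.0
WARMUP_STEPS = 1000
DECAY_STEPS = 208018
POWER = 1.0


def polynomial_decay(step):
    """Polynomial decay from INITIAL_LR to END_LR over DECAY_STEPS (no cycling)."""
    step = min(step, DECAY_STEPS)
    return (INITIAL_LR - END_LR) * (1 - step / DECAY_STEPS) ** POWER + END_LR


def learning_rate(step):
    """Warmup for WARMUP_STEPS, then polynomial decay (assumed semantics)."""
    if step < WARMUP_STEPS:
        return INITIAL_LR * (step / WARMUP_STEPS) ** POWER
    return polynomial_decay(step - WARMUP_STEPS)


for s in (0, 500, 1000, 105009, 209018):
    print(s, learning_rate(s))
```

With `power` set to 1.0 in both places, the schedule is a linear ramp to 5e-05 followed by a linear decay to zero.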

### Training results

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.8.2

- Datasets 2.2.2

- Tokenizers 0.12.1

|

bdf5e35c403c7efb50da8153691d4a01

|

coreml/coreml-Analog-Diffusion

|

coreml

| null | 6 | 0 | null | 3 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 1 | 0 | 1 | 0 | 1 | 1 | 0 |

['coreml', 'stable-diffusion', 'text-to-image']

| false | true | true | 1,865 | false |

# Core ML Converted Model

This model was converted to Core ML for use on Apple Silicon devices by following Apple's instructions [here](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml).<br>

Provide the model to an app such as [Mochi Diffusion](https://github.com/godly-devotion/MochiDiffusion) to generate images.<br>

The `split_einsum` version is compatible with all compute unit options, including the Neural Engine.<br>

The `original` version is compatible only with the CPU & GPU option.

**Analog Diffusion**

[*CKPT DOWNLOAD LINK*](https://huggingface.co/wavymulder/Analog-Diffusion/resolve/main/analog-diffusion-1.0.ckpt) - This is a dreambooth model trained on a diverse set of analog photographs.

In your prompt, use the activation token: `analog style`

You may need to use the words `blur` `haze` `naked` in your negative prompts. My dataset did not include any NSFW material but the model seems to be pretty horny. Note that using `blur` and `haze` in your negative prompt can give a sharper image but also a less pronounced analog film effect.

Trained from 1.5 with VAE.

Please see [this document where I share the parameters (prompt, sampler, seed, etc.) used for all example images.](https://huggingface.co/wavymulder/Analog-Diffusion/resolve/main/parameters_used_examples.txt)

## Gradio

We support a [Gradio](https://github.com/gradio-app/gradio) Web UI to run Analog-Diffusion:

[Open in Spaces](https://huggingface.co/spaces/akhaliq/Analog-Diffusion)

Here's a [link to non-cherrypicked batches.](https://imgur.com/a/7iOgTFv)

|

33390b8f0a80a7d072a48c74e21d3fff

|

OAOA/DifFace

|

OAOA

| null | 35 | 82 |

diffusers

| 0 | null | true | false | false |

other

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['pytorch', 'diffusers', 'face image enhancement']

| false | true | true | 3,282 | false |

# DifFace: Blind Face Restoration with Diffused Error Contraction

**Paper**: [DifFace: Blind Face Restoration with Diffused Error Contraction](https://arxiv.org/abs/2212.06512)

**Authors**: Zongsheng Yue, Chen Change Loy

**Abstract**:

*While deep learning-based methods for blind face restoration have achieved unprecedented success, they still suffer from two major limitations. First, most of them deteriorate when facing complex degradations out of their training data. Second, these methods require multiple constraints, e.g., fidelity, perceptual, and adversarial losses, which require laborious hyper-parameter tuning to stabilize and balance their influences. In this work, we propose a novel method named DifFace that is capable of coping with unseen and complex degradations more gracefully without complicated loss designs. The key of our method is to establish a posterior distribution from the observed low-quality (LQ) image to its high-quality (HQ) counterpart. In particular, we design a transition distribution from the LQ image to the intermediate state of a pre-trained diffusion model and then gradually transmit from this intermediate state to the HQ target by recursively applying a pre-trained diffusion model. The transition distribution only relies on a restoration backbone that is trained with L2 loss on some synthetic data, which favorably avoids the cumbersome training process in existing methods. Moreover, the transition distribution can contract the error of the restoration backbone and thus makes our method more robust to unknown degradations. Comprehensive experiments show that DifFace is superior to current state-of-the-art methods, especially in cases with severe degradations.*

## Inference

```python

# !pip install diffusers opencv-python
import cv2
from diffusers import DifFacePipeline

model_id = "OAOA/DifFace"

# load model and scheduler
pipe = DifFacePipeline.from_pretrained(model_id)
pipe = pipe.to("cuda")

im_path = "path/to/low_quality_face.png"  # placeholder: point this at your input image
im_lr = cv2.imread(im_path)  # read the low-quality face image
im_sr = pipe(im_lr, num_inference_steps=250, started_steps=100, aligned=True)['images'][0]
im_sr.save("restorated_difface.png")  # save the result (assumes the pipeline returns PIL images)

```

<!--For more in-detail information, please have a look at the [official inference example](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/diffusers_intro.ipynb)-->

## Training

If you want to train your own model, please have a look at the [official training example](https://github.com/zsyOAOA/DifFace).

## Samples

[<img src="assets/Solvay_conference.png" width="805px"/>](https://imgsli.com/MTM5NTgw)

[<img src="assets/Hepburn.png" height="555px" width="400px"/>](https://imgsli.com/MTM5NTc5) [<img src="assets/oldimg_05.png" height="555px" width="400px"/>](https://imgsli.com/MTM5NTgy)

<img src="cropped_faces/0368.png" height="200px" width="200px"/><img src="assets/0368.png" height="200px" width="200px"/> <img src="cropped_faces/0885.png" height="200px" width="200px"/><img src="assets/0885.png" height="200px" width="200px"/>

<img src="cropped_faces/0729.png" height="200px" width="200px"/><img src="assets/0729.png" height="200px" width="200px"/> <img src="cropped_faces/0934.png" height="200px" width="200px"/><img src="assets/0934.png" height="200px" width="200px"/>

|

cd6cae50085efaf60bb501b97ab5c210

|

SEUNGWON1/distilroberta-base-finetuned-wikitext2

|

SEUNGWON1

|

roberta

| 9 | 4 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,267 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilroberta-base-finetuned-wikitext2

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on an unspecified dataset (the auto-generated card records the dataset as `None`).

It achieves the following results on the evaluation set:

- Loss: 1.8340
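For a fill-mask model, an evaluation loss is often easier to read as a perplexity. A minimal sketch, assuming the reported loss is the mean cross-entropy in nats over the masked tokens (the card does not state this explicitly):

```python
import math

eval_loss = 1.8340  # evaluation loss reported above

# Perplexity is the exponential of the mean cross-entropy (assumed to be in nats).
perplexity = math.exp(eval_loss)
print(f"perplexity = {perplexity:.2f}")
```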

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0
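The `linear` scheduler type decays the learning rate linearly to zero over the run. The sketch below illustrates this under two assumptions that the card does not state: there is no warmup phase, and the total number of optimizer steps is 7218, the final step in the training results table. The function name `linear_lr` is hypothetical.

```python
BASE_LR = 2e-05      # learning_rate from the hyperparameters above
TOTAL_STEPS = 7218   # final step in the training results table
WARMUP_STEPS = 0     # assumption: the card lists no warmup


def linear_lr(step):
    """Linear warmup (if any), then linear decay to zero at TOTAL_STEPS."""
    if step < WARMUP_STEPS:
        return BASE_LR * step / max(1, WARMUP_STEPS)
    remaining = max(0, TOTAL_STEPS - step)
    return BASE_LR * remaining / max(1, TOTAL_STEPS - WARMUP_STEPS)


for s in (0, 2406, 4812, 7218):
    print(s, linear_lr(s))
```

Under these assumptions the rate at the end of each epoch is two thirds, one third, and zero times the base rate.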

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.0843 | 1.0 | 2406 | 1.9226 |

| 1.9913 | 2.0 | 4812 | 1.8820 |

| 1.9597 | 3.0 | 7218 | 1.8214 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

204d417a3d37fc79af071a9f54f15d96

|

irfan-noordin/segformer-b0-finetuned-segments-sidewalk-oct-22

|

irfan-noordin

|

segformer

| 9 | 9 |

transformers

| 0 |

image-segmentation

| true | false | false |

other

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['vision', 'image-segmentation', 'generated_from_trainer']

| true | true | true | 78,218 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# segformer-b0-finetuned-segments-sidewalk-oct-22

This model is a fine-tuned version of [nvidia/mit-b0](https://huggingface.co/nvidia/mit-b0) on the segments/sidewalk-semantic dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9249

- Mean Iou: 0.1675

- Mean Accuracy: 0.2109

- Overall Accuracy: 0.7776

- Accuracy Unlabeled: nan

- Accuracy Flat-road: 0.8631

- Accuracy Flat-sidewalk: 0.9423

- Accuracy Flat-crosswalk: 0.0

- Accuracy Flat-cyclinglane: 0.4704

- Accuracy Flat-parkingdriveway: 0.1421

- Accuracy Flat-railtrack: 0.0

- Accuracy Flat-curb: 0.0061

- Accuracy Human-person: 0.0

- Accuracy Human-rider: 0.0

- Accuracy Vehicle-car: 0.8937

- Accuracy Vehicle-truck: 0.0

- Accuracy Vehicle-bus: 0.0

- Accuracy Vehicle-tramtrain: 0.0

- Accuracy Vehicle-motorcycle: 0.0

- Accuracy Vehicle-bicycle: 0.0

- Accuracy Vehicle-caravan: 0.0

- Accuracy Vehicle-cartrailer: 0.0

- Accuracy Construction-building: 0.9143

- Accuracy Construction-door: 0.0

- Accuracy Construction-wall: 0.0055

- Accuracy Construction-fenceguardrail: 0.0

- Accuracy Construction-bridge: 0.0

- Accuracy Construction-tunnel: nan

- Accuracy Construction-stairs: 0.0

- Accuracy Object-pole: 0.0

- Accuracy Object-trafficsign: 0.0

- Accuracy Object-trafficlight: 0.0

- Accuracy Nature-vegetation: 0.9291

- Accuracy Nature-terrain: 0.8710

- Accuracy Sky: 0.9207

- Accuracy Void-ground: 0.0

- Accuracy Void-dynamic: 0.0

- Accuracy Void-static: 0.0

- Accuracy Void-unclear: 0.0

- Iou Unlabeled: nan

- Iou Flat-road: 0.6127

- Iou Flat-sidewalk: 0.8192

- Iou Flat-crosswalk: 0.0

- Iou Flat-cyclinglane: 0.4256

- Iou Flat-parkingdriveway: 0.1262

- Iou Flat-railtrack: 0.0

- Iou Flat-curb: 0.0061

- Iou Human-person: 0.0

- Iou Human-rider: 0.0

- Iou Vehicle-car: 0.6655

- Iou Vehicle-truck: 0.0

- Iou Vehicle-bus: 0.0

- Iou Vehicle-tramtrain: 0.0

- Iou Vehicle-motorcycle: 0.0

- Iou Vehicle-bicycle: 0.0

- Iou Vehicle-caravan: 0.0

- Iou Vehicle-cartrailer: 0.0

- Iou Construction-building: 0.5666

- Iou Construction-door: 0.0

- Iou Construction-wall: 0.0054

- Iou Construction-fenceguardrail: 0.0

- Iou Construction-bridge: 0.0

- Iou Construction-tunnel: nan

- Iou Construction-stairs: 0.0

- Iou Object-pole: 0.0

- Iou Object-trafficsign: 0.0

- Iou Object-trafficlight: 0.0

- Iou Nature-vegetation: 0.7875

- Iou Nature-terrain: 0.6912

- Iou Sky: 0.8218

- Iou Void-ground: 0.0

- Iou Void-dynamic: 0.0

- Iou Void-static: 0.0

- Iou Void-unclear: 0.0
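The aggregate scores can be read as averages of the per-class values that skip `nan` entries (classes with no evaluated pixels). The sketch below recomputes both means from the numbers listed above. That the evaluator uses a nan-aware mean is an assumption here, although the recomputed values agree with the reported ones; `nan_mean` is a hypothetical helper.

```python
import math


def nan_mean(values):
    """Mean over the entries that are not nan."""
    valid = [v for v in values if not math.isnan(v)]
    return sum(valid) / len(valid)


# Non-zero per-class values from the list above, in order: road, sidewalk,
# cyclinglane, parkingdriveway, curb, car, building, wall, vegetation, terrain, sky.
nonzero_iou = [0.6127, 0.8192, 0.4256, 0.1262, 0.0061, 0.6655,
               0.5666, 0.0054, 0.7875, 0.6912, 0.8218]
nonzero_acc = [0.8631, 0.9423, 0.4704, 0.1421, 0.0061, 0.8937,
               0.9143, 0.0055, 0.9291, 0.8710, 0.9207]

# The other 22 classes score 0.0; Unlabeled and Construction-tunnel are nan.
per_class_iou = nonzero_iou + [0.0] * 22 + [math.nan] * 2
per_class_acc = nonzero_acc + [0.0] * 22 + [math.nan] * 2

mean_iou = nan_mean(per_class_iou)
mean_acc = nan_mean(per_class_acc)
print(round(mean_iou, 4), round(mean_acc, 4))
```

Because 22 of the 33 scored classes have an IoU of zero, the mean IoU stays low even though overall pixel accuracy is 0.7776.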

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mean Iou | Mean Accuracy | Overall Accuracy | Accuracy Unlabeled | Accuracy Flat-road | Accuracy Flat-sidewalk | Accuracy Flat-crosswalk | Accuracy Flat-cyclinglane | Accuracy Flat-parkingdriveway | Accuracy Flat-railtrack | Accuracy Flat-curb | Accuracy Human-person | Accuracy Human-rider | Accuracy Vehicle-car | Accuracy Vehicle-truck | Accuracy Vehicle-bus | Accuracy Vehicle-tramtrain | Accuracy Vehicle-motorcycle | Accuracy Vehicle-bicycle | Accuracy Vehicle-caravan | Accuracy Vehicle-cartrailer | Accuracy Construction-building | Accuracy Construction-door | Accuracy Construction-wall | Accuracy Construction-fenceguardrail | Accuracy Construction-bridge | Accuracy Construction-tunnel | Accuracy Construction-stairs | Accuracy Object-pole | Accuracy Object-trafficsign | Accuracy Object-trafficlight | Accuracy Nature-vegetation | Accuracy Nature-terrain | Accuracy Sky | Accuracy Void-ground | Accuracy Void-dynamic | Accuracy Void-static | Accuracy Void-unclear | Iou Unlabeled | Iou Flat-road | Iou Flat-sidewalk | Iou Flat-crosswalk | Iou Flat-cyclinglane | Iou Flat-parkingdriveway | Iou Flat-railtrack | Iou Flat-curb | Iou Human-person | Iou Human-rider | Iou Vehicle-car | Iou Vehicle-truck | Iou Vehicle-bus | Iou Vehicle-tramtrain | Iou Vehicle-motorcycle | Iou Vehicle-bicycle | Iou Vehicle-caravan | Iou Vehicle-cartrailer | Iou Construction-building | Iou Construction-door | Iou Construction-wall | Iou Construction-fenceguardrail | Iou Construction-bridge | Iou Construction-tunnel | Iou Construction-stairs | Iou Object-pole | Iou Object-trafficsign | Iou Object-trafficlight | Iou Nature-vegetation | Iou Nature-terrain | Iou Sky | Iou Void-ground | Iou Void-dynamic | Iou Void-static | Iou Void-unclear |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:-------------:|:----------------:|:------------------:|:------------------:|:----------------------:|:-----------------------:|:-------------------------:|:-----------------------------:|:-----------------------:|:------------------:|:---------------------:|:--------------------:|:--------------------:|:----------------------:|:--------------------:|:--------------------------:|:---------------------------:|:------------------------:|:------------------------:|:---------------------------:|:------------------------------:|:--------------------------:|:--------------------------:|:------------------------------------:|:----------------------------:|:----------------------------:|:----------------------------:|:--------------------:|:---------------------------:|:----------------------------:|:--------------------------:|:-----------------------:|:------------:|:--------------------:|:---------------------:|:--------------------:|:---------------------:|:-------------:|:-------------:|:-----------------:|:------------------:|:--------------------:|:------------------------:|:------------------:|:-------------:|:----------------:|:---------------:|:---------------:|:-----------------:|:---------------:|:---------------------:|:----------------------:|:-------------------:|:-------------------:|:----------------------:|:-------------------------:|:---------------------:|:---------------------:|:-------------------------------:|:-----------------------:|:-----------------------:|:-----------------------:|:---------------:|:----------------------:|:-----------------------:|:---------------------:|:------------------:|:-------:|:---------------:|:----------------:|:---------------:|:----------------:|

| 2.832 | 0.05 | 20 | 3.1768 | 0.0700 | 0.1095 | 0.5718 | nan | 0.1365 | 0.9472 | 0.0019 | 0.0006 | 0.0004 | 0.0 | 0.0205 | 0.0 | 0.0 | 0.2074 | 0.0 | 0.0 | 0.0 | 0.0017 | 0.0001 | 0.0 | 0.0 | 0.7360 | 0.0 | 0.0235 | 0.0050 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9559 | 0.0429 | 0.5329 | 0.0010 | 0.0 | 0.0 | 0.0 | 0.0 | 0.1260 | 0.5906 | 0.0016 | 0.0006 | 0.0004 | 0.0 | 0.0175 | 0.0 | 0.0 | 0.2006 | 0.0 | 0.0 | 0.0 | 0.0003 | 0.0001 | 0.0 | 0.0 | 0.3729 | 0.0 | 0.0209 | 0.0044 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5778 | 0.0408 | 0.4932 | 0.0009 | 0.0 | 0.0 | 0.0 |

| 2.3224 | 0.1 | 40 | 2.4686 | 0.0885 | 0.1321 | 0.6347 | nan | 0.5225 | 0.9260 | 0.0005 | 0.0001 | 0.0006 | 0.0 | 0.0113 | 0.0 | 0.0 | 0.3738 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8191 | 0.0 | 0.0263 | 0.0012 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9649 | 0.0701 | 0.6434 | 0.0002 | 0.0 | 0.0 | 0.0 | 0.0 | 0.4240 | 0.6602 | 0.0005 | 0.0001 | 0.0006 | 0.0 | 0.0109 | 0.0 | 0.0 | 0.3292 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.3962 | 0.0 | 0.0260 | 0.0011 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6019 | 0.0617 | 0.5862 | 0.0001 | 0.0 | 0.0 | 0.0 |

| 2.1961 | 0.15 | 60 | 1.9886 | 0.0988 | 0.1431 | 0.6500 | nan | 0.5168 | 0.9319 | 0.0 | 0.0001 | 0.0000 | 0.0 | 0.0032 | 0.0 | 0.0 | 0.5761 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8325 | 0.0 | 0.0132 | 0.0003 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9612 | 0.1260 | 0.7625 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.3929 | 0.6721 | 0.0 | 0.0001 | 0.0000 | 0.0 | 0.0032 | 0.0 | 0.0 | 0.4609 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.4375 | 0.0 | 0.0131 | 0.0002 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6342 | 0.1108 | 0.6353 | 0.0 | 0.0 | 0.0 | 0.0 |

| 2.2964 | 0.2 | 80 | 2.0597 | 0.1066 | 0.1503 | 0.6682 | nan | 0.6577 | 0.9207 | 0.0 | 0.0000 | 0.0002 | 0.0 | 0.0044 | 0.0 | 0.0 | 0.5257 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8466 | 0.0 | 0.0094 | 0.0001 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9526 | 0.2022 | 0.8392 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.4276 | 0.7093 | 0.0 | 0.0000 | 0.0002 | 0.0 | 0.0044 | 0.0 | 0.0 | 0.4438 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.4488 | 0.0 | 0.0093 | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6560 | 0.1833 | 0.7408 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.9751 | 0.25 | 100 | 1.7493 | 0.1186 | 0.1645 | 0.6944 | nan | 0.7604 | 0.9146 | 0.0 | 0.0004 | 0.0012 | 0.0 | 0.0016 | 0.0 | 0.0 | 0.7381 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8273 | 0.0 | 0.0026 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9636 | 0.3289 | 0.8909 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.4904 | 0.7490 | 0.0 | 0.0004 | 0.0012 | 0.0 | 0.0016 | 0.0 | 0.0 | 0.5465 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.4913 | 0.0 | 0.0026 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.6542 | 0.2761 | 0.7004 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.7626 | 0.3 | 120 | 1.5608 | 0.1295 | 0.1752 | 0.7118 | nan | 0.8168 | 0.9102 | 0.0 | 0.0002 | 0.0025 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.8094 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8362 | 0.0 | 0.0030 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9492 | 0.5677 | 0.8861 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.4958 | 0.7592 | 0.0 | 0.0002 | 0.0025 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.5680 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5095 | 0.0 | 0.0030 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7082 | 0.4878 | 0.7392 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.32 | 0.35 | 140 | 1.5048 | 0.1323 | 0.1797 | 0.7181 | nan | 0.7883 | 0.9260 | 0.0 | 0.0000 | 0.0037 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.8711 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8590 | 0.0 | 0.0022 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9128 | 0.7088 | 0.8576 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5141 | 0.7598 | 0.0 | 0.0000 | 0.0037 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.5287 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5016 | 0.0 | 0.0022 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7458 | 0.5602 | 0.7499 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.6464 | 0.4 | 160 | 1.3886 | 0.1342 | 0.1783 | 0.7217 | nan | 0.7859 | 0.9390 | 0.0 | 0.0 | 0.0059 | 0.0 | 0.0 | 0.0 | 0.0 | 0.7401 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8508 | 0.0 | 0.0010 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9368 | 0.7223 | 0.9025 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5173 | 0.7561 | 0.0 | 0.0 | 0.0058 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5846 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5059 | 0.0 | 0.0010 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7366 | 0.5802 | 0.7401 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.4757 | 0.45 | 180 | 1.3649 | 0.1367 | 0.1840 | 0.7255 | nan | 0.8587 | 0.9185 | 0.0 | 0.0001 | 0.0039 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8588 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8337 | 0.0 | 0.0014 | 0.0001 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9036 | 0.7809 | 0.9138 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5077 | 0.7693 | 0.0 | 0.0001 | 0.0039 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5980 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5264 | 0.0 | 0.0014 | 0.0001 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7521 | 0.6078 | 0.7438 | 0.0 | 0.0 | 0.0 | 0.0 |

| 2.0018 | 0.5 | 200 | 1.3118 | 0.1353 | 0.1839 | 0.7242 | nan | 0.7797 | 0.9457 | 0.0 | 0.0029 | 0.0057 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8345 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8509 | 0.0 | 0.0018 | 0.0001 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.8704 | 0.8688 | 0.9069 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5321 | 0.7602 | 0.0 | 0.0029 | 0.0057 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6060 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5276 | 0.0 | 0.0018 | 0.0001 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7133 | 0.5551 | 0.7593 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.4636 | 0.55 | 220 | 1.2729 | 0.1330 | 0.1797 | 0.7249 | nan | 0.8619 | 0.9203 | 0.0 | 0.0015 | 0.0067 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8903 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8514 | 0.0 | 0.0031 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9447 | 0.5448 | 0.9040 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5249 | 0.7844 | 0.0 | 0.0015 | 0.0066 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5735 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5336 | 0.0 | 0.0031 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7136 | 0.4869 | 0.7613 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.1856 | 0.6 | 240 | 1.2551 | 0.1382 | 0.1828 | 0.7274 | nan | 0.7497 | 0.9518 | 0.0 | 0.0005 | 0.0048 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8893 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8153 | 0.0 | 0.0048 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9475 | 0.7597 | 0.9107 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5097 | 0.7477 | 0.0 | 0.0005 | 0.0047 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6172 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5527 | 0.0 | 0.0048 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7293 | 0.6250 | 0.7703 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.4577 | 0.65 | 260 | 1.1862 | 0.1387 | 0.1848 | 0.7304 | nan | 0.8842 | 0.9065 | 0.0 | 0.0001 | 0.0024 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8566 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8632 | 0.0 | 0.0006 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9442 | 0.7313 | 0.9080 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5121 | 0.7833 | 0.0 | 0.0001 | 0.0024 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6297 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5381 | 0.0 | 0.0006 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7437 | 0.6199 | 0.7486 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.0748 | 0.7 | 280 | 1.2000 | 0.1391 | 0.1846 | 0.7301 | nan | 0.7249 | 0.9690 | 0.0 | 0.0005 | 0.0064 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8909 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8656 | 0.0 | 0.0014 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.8917 | 0.8362 | 0.9065 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5306 | 0.7403 | 0.0 | 0.0005 | 0.0063 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6223 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5491 | 0.0 | 0.0014 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7566 | 0.6061 | 0.7761 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.642 | 0.75 | 300 | 1.1452 | 0.1432 | 0.1880 | 0.7409 | nan | 0.8682 | 0.9389 | 0.0 | 0.0030 | 0.0062 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8605 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8759 | 0.0 | 0.0020 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9092 | 0.8515 | 0.8892 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5333 | 0.7905 | 0.0 | 0.0030 | 0.0062 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6393 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5418 | 0.0 | 0.0020 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7655 | 0.6551 | 0.7893 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.2166 | 0.8 | 320 | 1.1450 | 0.1388 | 0.1849 | 0.7391 | nan | 0.8516 | 0.9460 | 0.0 | 0.0043 | 0.0060 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.8944 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8803 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9283 | 0.6849 | 0.9071 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5584 | 0.7932 | 0.0 | 0.0043 | 0.0060 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.5844 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5259 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7548 | 0.5985 | 0.7549 | 0.0 | 0.0 | 0.0 | 0.0 |

| 2.1346 | 0.85 | 340 | 1.1215 | 0.1428 | 0.1887 | 0.7411 | nan | 0.7956 | 0.9551 | 0.0 | 0.0145 | 0.0098 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.8646 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8884 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9131 | 0.8828 | 0.9024 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5611 | 0.7721 | 0.0 | 0.0145 | 0.0097 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.6313 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5405 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7563 | 0.6337 | 0.7917 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.8351 | 0.9 | 360 | 1.1012 | 0.1433 | 0.1896 | 0.7449 | nan | 0.8723 | 0.9432 | 0.0 | 0.0025 | 0.0114 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8822 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8662 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9213 | 0.8361 | 0.9201 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5472 | 0.7989 | 0.0 | 0.0025 | 0.0113 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6277 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5416 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7666 | 0.6674 | 0.7664 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.152 | 0.95 | 380 | 1.1045 | 0.1452 | 0.1891 | 0.7453 | nan | 0.8827 | 0.9332 | 0.0 | 0.0457 | 0.0124 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8396 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8848 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9399 | 0.7910 | 0.9107 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5462 | 0.7966 | 0.0 | 0.0457 | 0.0123 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6494 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5395 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7636 | 0.6627 | 0.7763 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.2062 | 1.0 | 400 | 1.0607 | 0.1469 | 0.1897 | 0.7482 | nan | 0.8192 | 0.9644 | 0.0 | 0.0944 | 0.0198 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8406 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8821 | 0.0 | 0.0006 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9193 | 0.8054 | 0.9137 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5772 | 0.7742 | 0.0 | 0.0941 | 0.0195 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6414 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5360 | 0.0 | 0.0006 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7740 | 0.6591 | 0.7710 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.0116 | 1.05 | 420 | 1.0503 | 0.1493 | 0.1950 | 0.7554 | nan | 0.8686 | 0.9478 | 0.0 | 0.2033 | 0.0295 | 0.0 | 0.0 | 0.0 | 0.0 | 0.9166 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8409 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9414 | 0.7667 | 0.9196 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5809 | 0.8022 | 0.0 | 0.1995 | 0.0287 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5916 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5517 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7628 | 0.6441 | 0.7652 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.009 | 1.1 | 440 | 1.0723 | 0.1529 | 0.1958 | 0.7553 | nan | 0.7797 | 0.9670 | 0.0 | 0.2214 | 0.0547 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.8978 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8927 | 0.0 | 0.0000 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9274 | 0.8016 | 0.9176 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5898 | 0.7717 | 0.0 | 0.2157 | 0.0526 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.6389 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5499 | 0.0 | 0.0000 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7760 | 0.6697 | 0.7818 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.1496 | 1.15 | 460 | 1.0417 | 0.1571 | 0.2017 | 0.7607 | nan | 0.7736 | 0.9645 | 0.0 | 0.3606 | 0.0669 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.8775 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8801 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9098 | 0.8906 | 0.9326 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6102 | 0.7737 | 0.0 | 0.3374 | 0.0634 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.6549 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5538 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7682 | 0.6437 | 0.7772 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.4669 | 1.2 | 480 | 1.0161 | 0.1566 | 0.2024 | 0.7637 | nan | 0.8236 | 0.9531 | 0.0 | 0.3507 | 0.0584 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.9165 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8675 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9263 | 0.8597 | 0.9222 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6005 | 0.7983 | 0.0 | 0.3296 | 0.0556 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.6153 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5498 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7752 | 0.6654 | 0.7770 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.075 | 1.25 | 500 | 1.0124 | 0.1556 | 0.2000 | 0.7634 | nan | 0.8521 | 0.9499 | 0.0 | 0.3154 | 0.0410 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.8944 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8618 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9442 | 0.8133 | 0.9290 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5910 | 0.8068 | 0.0 | 0.2992 | 0.0394 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.6338 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5507 | 0.0 | 0.0001 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7689 | 0.6697 | 0.7737 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.888 | 1.3 | 520 | 0.9797 | 0.1597 | 0.2028 | 0.7677 | nan | 0.8590 | 0.9472 | 0.0 | 0.3534 | 0.0469 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.8900 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8807 | 0.0 | 0.0005 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9379 | 0.8578 | 0.9187 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5908 | 0.8056 | 0.0 | 0.3311 | 0.0448 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.6598 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5676 | 0.0 | 0.0005 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7712 | 0.6912 | 0.8088 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.8099 | 1.35 | 540 | 0.9760 | 0.1589 | 0.2026 | 0.7678 | nan | 0.8526 | 0.9534 | 0.0 | 0.3370 | 0.0313 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.9235 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8862 | 0.0 | 0.0005 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9252 | 0.8551 | 0.9206 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5954 | 0.8014 | 0.0 | 0.3188 | 0.0303 | 0.0 | 0.0000 | 0.0 | 0.0 | 0.6382 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5706 | 0.0 | 0.0005 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7830 | 0.6934 | 0.8122 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.1998 | 1.4 | 560 | 0.9815 | 0.1578 | 0.2030 | 0.7631 | nan | 0.8956 | 0.9250 | 0.0 | 0.3267 | 0.0461 | 0.0 | 0.0004 | 0.0 | 0.0 | 0.8929 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8956 | 0.0 | 0.0002 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9206 | 0.8669 | 0.9275 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5656 | 0.8136 | 0.0 | 0.3102 | 0.0440 | 0.0 | 0.0004 | 0.0 | 0.0 | 0.6574 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5524 | 0.0 | 0.0002 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7894 | 0.6940 | 0.7818 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.5591 | 1.45 | 580 | 0.9654 | 0.1618 | 0.2043 | 0.7698 | nan | 0.8198 | 0.9655 | 0.0 | 0.3715 | 0.0848 | 0.0 | 0.0003 | 0.0 | 0.0 | 0.8935 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8965 | 0.0 | 0.0013 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9146 | 0.8730 | 0.9198 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6182 | 0.7898 | 0.0 | 0.3467 | 0.0792 | 0.0 | 0.0003 | 0.0 | 0.0 | 0.6590 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5647 | 0.0 | 0.0013 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7871 | 0.6835 | 0.8101 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.861 | 1.5 | 600 | 0.9622 | 0.1607 | 0.2045 | 0.7689 | nan | 0.8163 | 0.9648 | 0.0 | 0.3780 | 0.0907 | 0.0 | 0.0002 | 0.0 | 0.0 | 0.9187 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8714 | 0.0 | 0.0006 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9229 | 0.8485 | 0.9361 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6180 | 0.7903 | 0.0 | 0.3541 | 0.0844 | 0.0 | 0.0002 | 0.0 | 0.0 | 0.6307 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5609 | 0.0 | 0.0006 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7854 | 0.6904 | 0.7884 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.8335 | 1.55 | 620 | 0.9569 | 0.1598 | 0.2050 | 0.7686 | nan | 0.8421 | 0.9561 | 0.0 | 0.3493 | 0.0928 | 0.0 | 0.0012 | 0.0 | 0.0 | 0.9261 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8753 | 0.0 | 0.0013 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9172 | 0.8688 | 0.9335 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6069 | 0.8031 | 0.0 | 0.3306 | 0.0860 | 0.0 | 0.0012 | 0.0 | 0.0 | 0.6123 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5618 | 0.0 | 0.0013 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7851 | 0.6911 | 0.7950 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.9988 | 1.6 | 640 | 0.9337 | 0.1611 | 0.2050 | 0.7711 | nan | 0.8595 | 0.9538 | 0.0 | 0.3512 | 0.0928 | 0.0 | 0.0006 | 0.0 | 0.0 | 0.8962 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8854 | 0.0 | 0.0004 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9281 | 0.8594 | 0.9367 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6062 | 0.8105 | 0.0 | 0.3310 | 0.0868 | 0.0 | 0.0006 | 0.0 | 0.0 | 0.6565 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5596 | 0.0 | 0.0004 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7819 | 0.6958 | 0.7880 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.966 | 1.65 | 660 | 0.9322 | 0.1612 | 0.2051 | 0.7707 | nan | 0.8706 | 0.9494 | 0.0 | 0.3470 | 0.0997 | 0.0 | 0.0005 | 0.0 | 0.0 | 0.8905 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8722 | 0.0 | 0.0016 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9347 | 0.8652 | 0.9364 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.5953 | 0.8136 | 0.0 | 0.3281 | 0.0922 | 0.0 | 0.0005 | 0.0 | 0.0 | 0.6654 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5696 | 0.0 | 0.0016 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7756 | 0.6890 | 0.7885 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.2154 | 1.7 | 680 | 0.9373 | 0.1611 | 0.2048 | 0.7710 | nan | 0.8448 | 0.9577 | 0.0 | 0.3717 | 0.1010 | 0.0 | 0.0007 | 0.0 | 0.0 | 0.9173 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8613 | 0.0 | 0.0026 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9411 | 0.8371 | 0.9246 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6096 | 0.8056 | 0.0 | 0.3487 | 0.0930 | 0.0 | 0.0007 | 0.0 | 0.0 | 0.6272 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5696 | 0.0 | 0.0026 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7762 | 0.6911 | 0.7931 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.7979 | 1.75 | 700 | 0.9429 | 0.1622 | 0.2067 | 0.7717 | nan | 0.8496 | 0.9548 | 0.0 | 0.3821 | 0.1182 | 0.0 | 0.0013 | 0.0 | 0.0 | 0.9071 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8803 | 0.0 | 0.0043 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9202 | 0.8812 | 0.9204 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6104 | 0.8088 | 0.0 | 0.3583 | 0.1074 | 0.0 | 0.0013 | 0.0 | 0.0 | 0.6410 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5675 | 0.0 | 0.0043 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7784 | 0.6767 | 0.7994 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.8366 | 1.8 | 720 | 0.9379 | 0.1645 | 0.2075 | 0.7745 | nan | 0.8359 | 0.9580 | 0.0 | 0.4130 | 0.1275 | 0.0 | 0.0021 | 0.0 | 0.0 | 0.8998 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8704 | 0.0 | 0.0088 | 0.0 | 0.0 | nan | 0.0 | 0.0000 | 0.0 | 0.0 | 0.9450 | 0.8617 | 0.9251 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6227 | 0.8035 | 0.0 | 0.3850 | 0.1147 | 0.0 | 0.0021 | 0.0 | 0.0 | 0.6544 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5777 | 0.0 | 0.0088 | 0.0 | 0.0 | nan | 0.0 | 0.0000 | 0.0 | 0.0 | 0.7682 | 0.6867 | 0.8055 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.0448 | 1.85 | 740 | 0.9419 | 0.1659 | 0.2087 | 0.7769 | nan | 0.8483 | 0.9532 | 0.0 | 0.4442 | 0.1387 | 0.0 | 0.0028 | 0.0 | 0.0 | 0.8986 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8865 | 0.0 | 0.0042 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9458 | 0.8442 | 0.9215 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6240 | 0.8122 | 0.0 | 0.4077 | 0.1237 | 0.0 | 0.0028 | 0.0 | 0.0 | 0.6529 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5700 | 0.0 | 0.0041 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7767 | 0.6938 | 0.8070 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.9737 | 1.9 | 760 | 0.9193 | 0.1664 | 0.2082 | 0.7772 | nan | 0.8420 | 0.9586 | 0.0 | 0.4353 | 0.1193 | 0.0 | 0.0010 | 0.0 | 0.0 | 0.9082 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8955 | 0.0 | 0.0079 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9385 | 0.8464 | 0.9190 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6232 | 0.8053 | 0.0 | 0.4022 | 0.1088 | 0.0 | 0.0010 | 0.0 | 0.0 | 0.6549 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5766 | 0.0 | 0.0079 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7843 | 0.7077 | 0.8180 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.0716 | 1.95 | 780 | 0.9170 | 0.1672 | 0.2098 | 0.7785 | nan | 0.8434 | 0.9539 | 0.0 | 0.4671 | 0.1283 | 0.0 | 0.0037 | 0.0 | 0.0 | 0.9012 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.8984 | 0.0 | 0.0058 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9398 | 0.8661 | 0.9157 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6242 | 0.8106 | 0.0 | 0.4232 | 0.1156 | 0.0 | 0.0037 | 0.0 | 0.0 | 0.6631 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5777 | 0.0 | 0.0057 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7811 | 0.6920 | 0.8223 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.4144 | 2.0 | 800 | 0.9249 | 0.1675 | 0.2109 | 0.7776 | nan | 0.8631 | 0.9423 | 0.0 | 0.4704 | 0.1421 | 0.0 | 0.0061 | 0.0 | 0.0 | 0.8937 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.9143 | 0.0 | 0.0055 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.9291 | 0.8710 | 0.9207 | 0.0 | 0.0 | 0.0 | 0.0 | nan | 0.6127 | 0.8192 | 0.0 | 0.4256 | 0.1262 | 0.0 | 0.0061 | 0.0 | 0.0 | 0.6655 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5666 | 0.0 | 0.0054 | 0.0 | 0.0 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 0.7875 | 0.6912 | 0.8218 | 0.0 | 0.0 | 0.0 | 0.0 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.6.1

- Tokenizers 0.12.1

|

a5c06b28f2ae5e684024c5083e3c215f

|

aajrami/bert-mlm-small

|

aajrami

|

roberta

| 9 | 6 |

transformers

| 0 |

feature-extraction

| true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['bert']

| false | true | true | 809 | false |

## bert-mlm-small

A small-size BERT Language Model with an **MLM** pre-training objective. For more details about the pre-training objective and the pre-training hyperparameters, please refer to [How does the pre-training objective affect what large language models learn about linguistic properties?](https://aclanthology.org/2022.acl-short.16/)

## License

CC BY 4.0

## Citation

If you use this model, please cite the following paper:

```

@inproceedings{alajrami2022does,

title={How does the pre-training objective affect what large language models learn about linguistic properties?},

author={Alajrami, Ahmed and Aletras, Nikolaos},

booktitle={Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers)},

pages={131--147},

year={2022}

}

```

|

a02af933518036cfa3cb079203dc6bfd

|

gokuls/mobilebert_sa_GLUE_Experiment_qnli

|

gokuls

|

mobilebert

| 23 | 6 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,680 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_sa_GLUE_Experiment_qnli

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE QNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6487

- Accuracy: 0.6094

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

- mixed_precision_training: Native AMP
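
The `linear` scheduler together with `learning_rate: 5e-05` and `num_epochs: 50` implies a learning rate that decays linearly toward zero over the planned run. A minimal sketch of that schedule, assuming no warmup (none is listed) and 819 optimizer steps per epoch as reported in the training results table; the helper name `linear_lr` is hypothetical and not part of any library:

```python
def linear_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate under optional linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


steps_per_epoch = 819                    # step count at epoch 1.0 in the results table
total_steps = steps_per_epoch * 50       # num_epochs: 50
base_lr = 5e-5                           # learning_rate: 5e-05

for step in (0, steps_per_epoch * 7, total_steps // 2, total_steps):
    print(step, linear_lr(step, total_steps, base_lr))
```

Under these assumptions the learning rate has decayed only slightly by epoch 7, the last epoch that appears in the results table.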

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6754 | 1.0 | 819 | 0.6491 | 0.6178 |

| 0.6369 | 2.0 | 1638 | 0.6487 | 0.6094 |

| 0.6125 | 3.0 | 2457 | 0.6555 | 0.6088 |

| 0.5942 | 4.0 | 3276 | 0.6647 | 0.6028 |

| 0.5805 | 5.0 | 4095 | 0.6735 | 0.5934 |

| 0.5689 | 6.0 | 4914 | 0.6893 | 0.5978 |

| 0.5587 | 7.0 | 5733 | 0.7055 | 0.5896 |
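
The table stops at epoch 7 although `num_epochs` is 50, and validation loss rises after epoch 2 while training loss keeps falling. The headline numbers (loss 0.6487, accuracy 0.6094) match the epoch with the lowest validation loss. The card does not state how that checkpoint was chosen, so the following is only a sketch, assuming selection by minimum validation loss:

```python
# (epoch, validation_loss, accuracy) rows copied from the table above
rows = [
    (1, 0.6491, 0.6178),
    (2, 0.6487, 0.6094),
    (3, 0.6555, 0.6088),
    (4, 0.6647, 0.6028),
    (5, 0.6735, 0.5934),
    (6, 0.6893, 0.5978),
    (7, 0.7055, 0.5896),
]

# Pick the row whose validation loss is smallest.
best_epoch, best_loss, best_acc = min(rows, key=lambda row: row[1])
print(best_epoch, best_loss, best_acc)
```

Note that the highest accuracy in the table is at epoch 1, so selecting by accuracy instead would pick a different checkpoint.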

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.8.0

- Tokenizers 0.13.2

|

3abb13f57a674d451274f36da239fa58

|

alirezafarashah/wav2vec2-base-ks-2sec

|

alirezafarashah

|

wav2vec2

| 10 | 3 |

transformers

| 0 |

audio-classification

| true | false | false |

apache-2.0

| null |

['superb']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,555 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-ks-2sec

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the superb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0880

- Accuracy: 0.9822

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5
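
Two of the values above are derived from the others: `total_train_batch_size` is the per-step batch size times the accumulation steps, and the warmup length follows from `lr_scheduler_warmup_ratio` and the total number of optimizer steps. A small worked sketch, assuming a single device and that the warmup step count is rounded up (both are assumptions, not stated in the card):

```python
import math

train_batch_size = 32
gradient_accumulation_steps = 4

# Samples contributing to each optimizer update.
effective_batch_size = train_batch_size * gradient_accumulation_steps

total_steps = 1995        # final step in the training results table
warmup_ratio = 0.1        # lr_scheduler_warmup_ratio

# Number of steps during which the learning rate ramps up linearly.
warmup_steps = math.ceil(total_steps * warmup_ratio)

print(effective_batch_size, warmup_steps)
```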

### Training results

| Training Loss | Epoch | Step | Accuracy | Validation Loss |

|:-------------:|:-----:|:----:|:--------:|:---------------:|

| 0.5003 | 1.0 | 399 | 0.9643 | 0.4284 |

| 0.1868 | 2.0 | 798 | 0.9748 | 0.1628 |

| 0.1413 | 3.0 | 1197 | 0.9796 | 0.1128 |

| 0.1021 | 4.0 | 1596 | 0.9813 | 0.0940 |

| 0.1089 | 5.0 | 1995 | 0.9822 | 0.0880 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.14.0

- Tokenizers 0.10.3

|

7f8f3ac3c4ef317d84436282eb816e0e

|

kuttersn/gpt2-finetuned-redditComments

|

kuttersn

|

gpt2

| 9 | 4 |

transformers

| 0 |

text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,252 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-finetuned-redditComments

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 3.8418

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 3.9535 | 1.0 | 4320 | 3.8888 |

| 3.8832 | 2.0 | 8640 | 3.8523 |

| 3.8708 | 3.0 | 12960 | 3.8418 |
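
For a language model, validation loss is often easier to interpret as perplexity. A minimal sketch, assuming the reported loss is the mean per-token cross-entropy in nats (the usual convention for causal language modeling, but not stated in this card):

```python
import math


def perplexity(cross_entropy_loss: float) -> float:
    """Convert a mean per-token cross-entropy (in nats) into perplexity."""
    return math.exp(cross_entropy_loss)


# Validation losses per epoch, copied from the table above.
val_losses = {1: 3.8888, 2: 3.8523, 3: 3.8418}

for epoch, loss in val_losses.items():
    print(f"epoch {epoch}: perplexity {perplexity(loss):.1f}")
```

Under that assumption, the final loss of 3.8418 corresponds to a perplexity between 46 and 47.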

### Framework versions

- Transformers 4.21.0.dev0

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

f1beaeec6b92a0a529b4faae79a82e56

|

PiyarSquare/stable_diffusion_silz

|

PiyarSquare

| null | 8 | 0 | null | 17 | null | false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,250 | false |

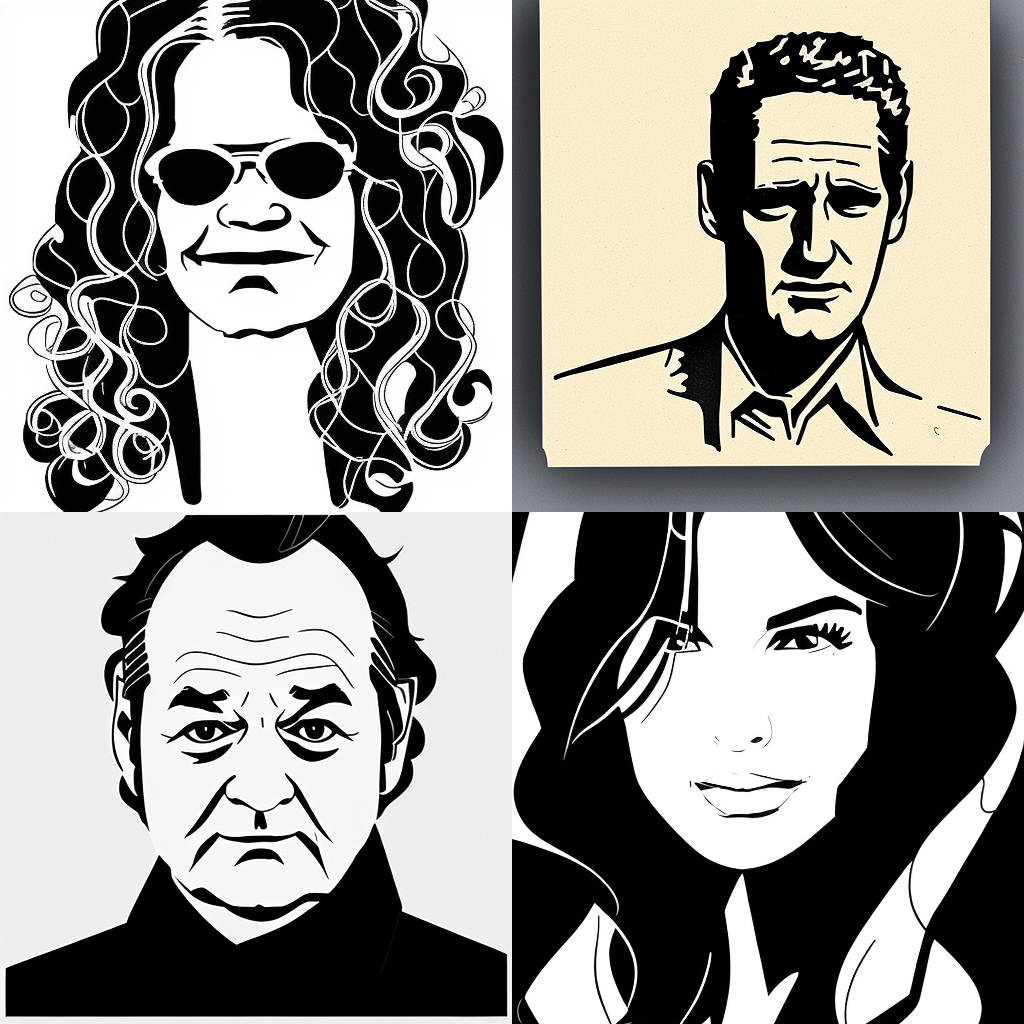

# 📜🗡️ Silhouette/Cricut style

This is a fine-tuned Stable Diffusion model designed for cutting machines.

Use **silz style** in your prompts.

### Sample images:

Based on the Stable Diffusion 1.5 model.

### Training

Made with [automatic1111 webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui) + [d8ahazard dreambooth extension](https://github.com/d8ahazard/sd_dreambooth_extension) + [nitrosocke guide](https://github.com/nitrosocke/dreambooth-training-guide).

82 training images at 1e-6 learning rate for 8200 steps.

Without prior preservation.

Inspired by [Fictiverse's PaperCut model](https://huggingface.co/Fictiverse/Stable_Diffusion_PaperCut_Model) and [txt2vector script](https://github.com/GeorgLegato/Txt2Vectorgraphics).

|

9a0e51931ab33e6af275a3274cf9ef6a

|

KoichiYasuoka/roberta-large-english-upos

|

KoichiYasuoka

|

roberta

| 10 | 1,915 |

transformers

| 1 |

token-classification

| true | false | false |

cc-by-sa-4.0

|

['en']

|

['universal_dependencies']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['english', 'token-classification', 'pos', 'dependency-parsing']

| false | true | true | 865 | false |

# roberta-large-english-upos

## Model Description

This is a RoBERTa model pre-trained with [UD_English](https://universaldependencies.org/en/) for POS-tagging and dependency-parsing, derived from [roberta-large](https://huggingface.co/roberta-large). Every word is tagged by [UPOS](https://universaldependencies.org/u/pos/) (Universal Part-Of-Speech).

## How to Use

```py

from transformers import AutoTokenizer,AutoModelForTokenClassification

tokenizer=AutoTokenizer.from_pretrained("KoichiYasuoka/roberta-large-english-upos")

model=AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/roberta-large-english-upos")

```

or

```py

import esupar

nlp=esupar.load("KoichiYasuoka/roberta-large-english-upos")

```

## See Also

[esupar](https://github.com/KoichiYasuoka/esupar): Tokenizer POS-tagger and Dependency-parser with BERT/RoBERTa/DeBERTa models

|

74a7b4eb153a7e3825cd71b91d60dddd

|

emfa/danish-bert-botxo-danish-finetuned-hatespeech

|

emfa

|

bert

| 17 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,562 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# danish-bert-botxo-danish-finetuned-hatespeech

This model is for a university project and is uploaded for sharing between students. It was trained on a Danish training set labeled for hate speech. Feel free to use it, but as of now, we don't promise any good results ;-)

This model is a fine-tuned version of [Maltehb/danish-bert-botxo](https://huggingface.co/Maltehb/danish-bert-botxo) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3584

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 315 | 0.3285 |

| 0.2879 | 2.0 | 630 | 0.3288 |

| 0.2879 | 3.0 | 945 | 0.3178 |

| 0.1371 | 4.0 | 1260 | 0.3584 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

02ba40c2482b516cd100785d164ef345

|

ytsai25/bert-finetuned-ner

|

ytsai25

|

bert

| 8 | 6 |

transformers

| 0 |

token-classification

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,423 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# ytsai25/bert-finetuned-ner

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0240

- Validation Loss: 0.0613

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 1017, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
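
The optimizer configuration above uses a `PolynomialDecay` schedule with `power: 1.0`, which reduces to a straight line from `initial_learning_rate` to `end_learning_rate` over `decay_steps`. A minimal pure-Python sketch of that formula with the values from the config; the function name `polynomial_decay` is hypothetical and this is not the Keras implementation itself:

```python
def polynomial_decay(step: int,
                     initial_lr: float = 2e-05,
                     decay_steps: int = 1017,
                     end_lr: float = 0.0,
                     power: float = 1.0) -> float:
    """Polynomial decay without cycling: stays at end_lr once decay_steps is reached."""
    step = min(step, decay_steps)            # 'cycle': False
    fraction = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction ** power + end_lr


for step in (0, 339, 678, 1017):
    print(step, polynomial_decay(step))
```

With `power` set to 1.0 the learning rate at the midpoint is half the initial value, and it reaches 0.0 exactly at step 1017.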

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.1218 | 0.0592 | 0 |

| 0.0398 | 0.0602 | 1 |

| 0.0240 | 0.0613 | 2 |

### Framework versions

- Transformers 4.18.0

- TensorFlow 2.8.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

7c42eac51670c00284bc94a39c06f3df

|

azizbarank/distilbert-base-turkish-cased-sentiment

|

azizbarank

|

distilbert

| 13 | 40 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null |

['sepidmnorozy/Turkish_sentiment']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,163 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-turkish-cased-sentiment

This model is a fine-tuned version of [dbmdz/distilbert-base-turkish-cased](https://huggingface.co/dbmdz/distilbert-base-turkish-cased) on the [sepidmnorozy/Turkish_sentiment](https://huggingface.co/datasets/sepidmnorozy/Turkish_sentiment) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4141

- Accuracy: 0.855

- F1: 0.8797

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.22.2

- Pytorch 1.9.1

- Datasets 2.5.1

- Tokenizers 0.12.1

|

8274b9782c49955efaea4329ff7e3986

|

joaoalvarenga/model-sid-voxforge-cv-cetuc-0

|

joaoalvarenga

|

wav2vec2

| 10 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['pt']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'speech', 'wav2vec2', 'pt', 'apache-2.0', 'portuguese-speech-corpus', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week', 'PyTorch']

| true | true | true | 3,410 | false |

# Wav2Vec2-Large-XLSR-53-Portuguese

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Portuguese using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "pt", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("joorock12/wav2vec2-large-xlsr-portuguese-a")

model = Wav2Vec2ForCTC.from_pretrained("joorock12/wav2vec2-large-xlsr-portuguese-a")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the Portuguese test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "pt", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("joorock12/wav2vec2-large-xlsr-portuguese-a")

model = Wav2Vec2ForCTC.from_pretrained("joorock12/wav2vec2-large-xlsr-portuguese-a")

model.to("cuda")

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\“\'\�]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference and decode the predicted token IDs into strings

def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result (wer)**: 15.037146%
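
For readers unfamiliar with the metric, word error rate is the number of word-level substitutions, insertions, and deletions needed to turn the prediction into the reference, divided by the number of reference words. A minimal pure-Python sketch of a corpus-level WER; this is an illustration, not the implementation behind `load_metric("wer")`, and the function name `word_error_rate` and the example sentences are hypothetical:

```python
def word_error_rate(references, predictions):
    """Corpus-level WER: total word edits divided by total reference words.

    Assumes at least one non-empty reference.
    """
    total_edits = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        ref_words, hyp_words = ref.split(), hyp.split()
        # Levenshtein distance over words, keeping only the previous row.
        prev = list(range(len(hyp_words) + 1))
        for i, ref_word in enumerate(ref_words, start=1):
            curr = [i]
            for j, hyp_word in enumerate(hyp_words, start=1):
                cost = 0 if ref_word == hyp_word else 1
                curr.append(min(prev[j] + 1,          # deletion
                                curr[j - 1] + 1,      # insertion
                                prev[j - 1] + cost))  # substitution or match
            prev = curr
        total_edits += prev[-1]
        total_words += len(ref_words)
    return total_edits / total_words


print(100 * word_error_rate(["o gato preto"], ["o rato preto"]))
```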

## Training

The Common Voice `train` and `validation` splits were used for training.

The script used for training can be found at: https://github.com/joaoalvarenga/wav2vec2-large-xlsr-53-portuguese/blob/main/fine-tuning.py

|

af40d28e8224ff34004643c2ee3980f1

|

Raccourci/xlm-sustainability-sentiment

|

Raccourci

|

xlm-roberta

| 19 | 12 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,949 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-sustainability-sentiment

This model is a fine-tuned version of [Raccourci/fairguest-bert](https://huggingface.co/Raccourci/fairguest-bert) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2997

- F1: 0.9335

- Roc Auc: 0.9335

- Accuracy: 0.9335

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Roc Auc | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:------:|:-------:|:--------:|

| No log | 0.98 | 15 | 0.5221 | 0.7173 | 0.7173 | 0.7173 |

| No log | 1.98 | 30 | 0.3833 | 0.7365 | 0.7308 | 0.7089 |

| No log | 2.98 | 45 | 0.3204 | 0.9030 | 0.9012 | 0.8836 |

| No log | 3.98 | 60 | 0.2861 | 0.8960 | 0.8960 | 0.8960 |

| No log | 4.98 | 75 | 0.2223 | 0.9125 | 0.9127 | 0.9106 |

| No log | 5.98 | 90 | 0.2499 | 0.9210 | 0.9210 | 0.9210 |

| No log | 6.98 | 105 | 0.2168 | 0.9293 | 0.9293 | 0.9293 |

| No log | 7.98 | 120 | 0.2122 | 0.9376 | 0.9376 | 0.9376 |

| No log | 8.98 | 135 | 0.2303 | 0.9335 | 0.9335 | 0.9335 |

| No log | 9.98 | 150 | 0.2455 | 0.9314 | 0.9314 | 0.9314 |

| No log | 10.98 | 165 | 0.2278 | 0.9335 | 0.9335 | 0.9335 |

| No log | 11.98 | 180 | 0.2593 | 0.9304 | 0.9304 | 0.9293 |

| No log | 12.98 | 195 | 0.2494 | 0.9397 | 0.9397 | 0.9397 |

| No log | 13.98 | 210 | 0.2579 | 0.9314 | 0.9314 | 0.9314 |

| No log | 14.98 | 225 | 0.2633 | 0.9356 | 0.9356 | 0.9356 |

| No log | 15.98 | 240 | 0.2918 | 0.9283 | 0.9283 | 0.9272 |

| No log | 16.98 | 255 | 0.2714 | 0.9356 | 0.9356 | 0.9356 |

| No log | 17.98 | 270 | 0.3034 | 0.9356 | 0.9356 | 0.9356 |

| No log | 18.98 | 285 | 0.3050 | 0.9325 | 0.9324 | 0.9314 |

| No log | 19.98 | 300 | 0.2997 | 0.9335 | 0.9335 | 0.9335 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

1ddfb22cf5559f38cf30bc23e80523b1

|

izumi-lab/electra-small-japanese-fin-discriminator

|

izumi-lab

|

electra

| 7 | 989 |

transformers

| 0 | null | true | false | false |

cc-by-sa-4.0

|

['ja']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['finance']

| false | true | true | 2,193 | false |

# ELECTRA small Japanese finance discriminator

This is an [ELECTRA](https://github.com/google-research/electra) model pretrained on texts in the Japanese language.

The code for pretraining is available at [retarfi/language-pretraining](https://github.com/retarfi/language-pretraining/tree/v1.0).

## Model architecture

The model architecture is the same as ELECTRA small in the [original ELECTRA implementation](https://github.com/google-research/electra); 12 layers, 256 dimensions of hidden states, and 4 attention heads.

## Training Data

The models are trained on the Japanese version of Wikipedia and on a financial corpus.

The training corpus is generated from the Japanese version of Wikipedia, using Wikipedia dump file as of June 1, 2021.

The Wikipedia corpus file is 2.9GB, consisting of approximately 20M sentences.

The financial corpus consists of 2 corpora:

- Summaries of financial results from October 9, 2012, to December 31, 2020

- Securities reports from February 8, 2018, to December 31, 2020

The financial corpus file is 5.2GB, consisting of approximately 27M sentences.

## Tokenization

The texts are first tokenized by MeCab with IPA dictionary and then split into subwords by the WordPiece algorithm.

The vocabulary size is 32768.
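
The second stage, WordPiece, splits each word produced by the morphological analyzer into the longest subwords found in the vocabulary, marking non-initial pieces with a `##` prefix. A minimal sketch of that greedy longest-match-first procedure, assuming the MeCab segmentation has already been applied; the toy vocabulary and the function name `wordpiece_tokenize` are hypothetical and unrelated to the model's real 32768-entry vocabulary:

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]", prefix="##"):
    """Greedy longest-match-first subword split of a single word."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink from the right.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate   # continuation pieces carry the prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]                   # no split exists for this word
        tokens.append(piece)
        start = end
    return tokens


toy_vocab = {"金融", "##庁", "決算", "##短", "##信"}   # hypothetical vocabulary
print(wordpiece_tokenize("金融庁", toy_vocab))
print(wordpiece_tokenize("決算短信", toy_vocab))
```

A word with no valid split under the vocabulary maps to the unknown token as a whole rather than being partially tokenized.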

## Training

The models are trained with the same configuration as ELECTRA small in the [original ELECTRA paper](https://arxiv.org/abs/2003.10555) except size; 128 tokens per instance, 128 instances per batch, and 1M training steps.

The size of the generator is the same as that of the discriminator.

## Citation

```

@article{Suzuki-etal-2023-ipm,

title = {Constructing and analyzing domain-specific language model for financial text mining},

author = {Masahiro Suzuki and Hiroki Sakaji and Masanori Hirano and Kiyoshi Izumi},

journal = {Information Processing & Management},

volume = {60},

number = {2},

pages = {103194},

year = {2023},

doi = {10.1016/j.ipm.2022.103194}

}

```

## Licenses

The pretrained models are distributed under the terms of the [Creative Commons Attribution-ShareAlike 4.0](https://creativecommons.org/licenses/by-sa/4.0/).

## Acknowledgments

This work was supported by JSPS KAKENHI Grant Number JP21K12010.

|

f49e68e43be4fa5672c9f4984a909f63

|

Davincilee/closure_system_door_inne-bert-base-uncased

|

Davincilee

|

bert

| 16 | 4 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,135 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# closure_system_door_inne-bert-base-uncased

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7907
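For a fill-mask model, the evaluation loss is often easier to read as a perplexity. The sketch below assumes the reported loss is the mean cross-entropy in nats over masked tokens, which the card does not state explicitly.

```python
import math

eval_loss = 1.7907  # value reported in this card

# Perplexity is the exponential of the mean cross-entropy (assumed to be in nats).
perplexity = math.exp(eval_loss)
print(round(perplexity, 2))
```

Under that assumption the perplexity comes out at roughly 6.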

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 7

- eval_batch_size: 7

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20
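The `linear` scheduler type can be sketched as below. This is a minimal illustration that assumes no warmup (none is listed) and a decay to zero over 40 optimizer steps, the final step count reported in this card's training results; the function name and signature are hypothetical.

```python
def linear_lr(step, total_steps, base_lr=2e-05, warmup_steps=0):
    """Learning rate at `step` for a linear warmup/decay schedule."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr (unused when warmup_steps is 0).
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    # Linear decay from base_lr down to 0 at total_steps.
    return base_lr * remaining / max(1, total_steps - warmup_steps)


total_steps = 40  # assumed: 20 epochs with 2 optimizer steps each
schedule = [linear_lr(step, total_steps) for step in range(total_steps + 1)]
print(schedule[0], schedule[20], schedule[40])
```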

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 2.7321 | 1.0 | 2 | 2.5801 |
| 2.6039 | 2.0 | 4 | 2.0081 |
| 2.4556 | 3.0 | 6 | 2.3329 |
| 2.3587 | 4.0 | 8 | 2.4156 |
| 2.2565 | 5.0 | 10 | 2.0009 |
| 2.3489 | 6.0 | 12 | 1.7774 |
| 2.2622 | 7.0 | 14 | 2.2064 |
| 2.415 | 8.0 | 16 | 1.9671 |
| 2.1873 | 9.0 | 18 | 2.0729 |
| 2.2377 | 10.0 | 20 | 2.0052 |
| 2.352 | 11.0 | 22 | 1.9614 |
| 2.2347 | 12.0 | 24 | 2.2437 |
| 2.1113 | 13.0 | 26 | 1.7145 |
| 2.1939 | 14.0 | 28 | 1.5418 |
| 2.0645 | 15.0 | 30 | 2.1882 |
| 2.1499 | 16.0 | 32 | 2.0266 |
| 2.1432 | 17.0 | 34 | 2.3583 |
| 2.0656 | 18.0 | 36 | 2.3147 |
| 2.0348 | 19.0 | 38 | 2.2807 |
| 2.0502 | 20.0 | 40 | 1.7122 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

65323f1e82ea6fe32ccf168d0cb0be37

|

Shobhank-iiitdwd/Distiled-roberta-squad2-QA

|

Shobhank-iiitdwd

|

roberta

| 10 | 5 |

transformers

| 0 |

question-answering

| true | false | false |

cc-by-4.0

|

['en']

|

['squad_v2']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| true | true | true | 2,491 | false |

# Distiled-roberta-squad2

This is the *distilled* version of the [roberta-base-squad2-QA](https://huggingface.co/Shobhank-iiitdwd/Distiled-roberta-squad2-QA) model. It has comparable prediction quality and runs at twice the speed of the base model.

## Overview

**Language model:** Distiled-roberta-squad2-QA

**Language:** English

**Downstream-task:** Extractive QA

**Training data:** SQuAD 2.0

**Eval data:** SQuAD 2.0

## Hyperparameters

```

batch_size = 96

n_epochs = 4

base_LM_model = "Shobhank-iiitdwd/Distiled-roberta-squad2-QA"

max_seq_len = 384

learning_rate = 3e-5

lr_schedule = LinearWarmup

warmup_proportion = 0.2

doc_stride = 128

max_query_length = 64

distillation_loss_weight = 0.75

temperature = 1.5

teacher = "Shobhank-iiitdwd/Distiled-roberta-squad2-QA"

```

## Distillation

This model was distilled using the TinyBERT approach. First, we performed intermediate layer distillation with roberta-base as the teacher, which resulted in Distiles-roberta.

Second, we performed task-specific distillation with [roberta-base-squad2](https://huggingface.co/Shobhank-iiitdwd/roberta-squad2-QA) as the teacher for further intermediate layer distillation on an augmented version of SQuADv2, and then with [roberta-large-squad2](https://huggingface.co/Shobhank-iiitdwd/Distiled-roberta-squad2-QA) as the teacher for prediction layer distillation.
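The card does not give the loss formula, so the following is only a sketch of one common way a prediction-layer distillation loss combines the `distillation_loss_weight` (0.75) and `temperature` (1.5) hyperparameters: a temperature-softened KL term against the teacher plus a hard-label cross-entropy term. The function names and the exact weighting are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, gold_index,
                      weight=0.75, temperature=1.5):
    # Hard-label term: cross-entropy of the student against the gold position.
    hard = -math.log(softmax(student_logits)[gold_index])
    # Soft-label term: KL(teacher || student) at the given temperature,
    # scaled by T^2 so its gradient magnitude stays comparable.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(t * math.log(t / s) for t, s in zip(p_teacher, p_student) if t > 0)
    soft *= temperature ** 2
    # Assumed combination: weight on the soft term, remainder on the hard term.
    return weight * soft + (1 - weight) * hard


student = [2.0, 0.5, -1.0]
teacher = [3.0, 0.0, -2.0]
print(distillation_loss(student, teacher, gold_index=0))
```

When the student reproduces the teacher's logits exactly, the soft term vanishes and only the down-weighted hard-label term remains.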

## Usage

### In Transformers

```python

from transformers import AutoModelForQuestionAnswering, AutoTokenizer, pipeline

model_name = "Shobhank-iiitdwd/Distiled-roberta-squad2-QA"

# a) Get predictions
nlp = pipeline('question-answering', model=model_name, tokenizer=model_name)
QA_input = {
    'question': 'Why is model conversion important?',
    'context': 'The option to convert models between FARM and transformers gives freedom to the user and let people easily switch between frameworks.'
}
res = nlp(QA_input)

# b) Load model & tokenizer
model = AutoModelForQuestionAnswering.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)

```

## Performance

Evaluated on the SQuAD 2.0 dev set with the [official eval script](https://worksheets.codalab.org/rest/bundles/0x6b567e1cf2e041ec80d7098f031c5c9e/contents/blob/).

```

"exact": 78.69114798281817,

"f1": 81.9198998536977,

"total": 11873,

"HasAns_exact": 76.19770580296895,

"HasAns_f1": 82.66446878592329,

"HasAns_total": 5928,

"NoAns_exact": 81.17746005046257,

"NoAns_f1": 81.17746005046257,

"NoAns_total": 5945

```
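The overall scores above are the example-weighted averages of the HasAns and NoAns subsets. The short check below reproduces them from the reported numbers; the dictionary layout and function name are illustrative only.

```python
# Subset scores and sizes copied from the evaluation output above.
has_ans = {"exact": 76.19770580296895, "f1": 82.66446878592329, "total": 5928}
no_ans = {"exact": 81.17746005046257, "f1": 81.17746005046257, "total": 5945}


def combine(metric):
    """Example-weighted average of a metric over both subsets."""
    total = has_ans["total"] + no_ans["total"]
    weighted = has_ans[metric] * has_ans["total"] + no_ans[metric] * no_ans["total"]
    return weighted / total


overall_exact = combine("exact")
overall_f1 = combine("f1")
print(round(overall_exact, 2), round(overall_f1, 2))
```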

|

dea682c9d8496c8654344ceeffaff397

|

dranzerstar/SD-textual-inversion-embeddings-repo

|

dranzerstar

| null | 303 | 0 |

diffusers

| 47 | null | false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 1 | 1 | 0 |

['LoRa', 'embeddings']

| false | true | true | 1,974 | false |

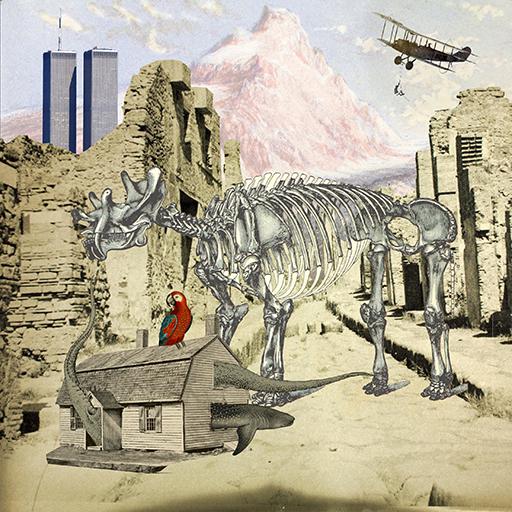

### SD-textual-inversion-embeddings/Lora repo

### Lora Networks

Still exploring this training process.

prompt: masterpiece, best_quality, clear details,1girl, cowboy_shot, simple_background

with respective LoRa net

### Lora characters and outfits

Using char-* and outfit-* together:

masterpiece, best_quality, clear details,1girl, reverse_outfit (pasties) (maebari) high_heels \<lora:outfit-reverseoutfit:1\>, (fullbody), looking_at_viewer, floor , \<lora:char-seia:0.9\>,
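The `<lora:name:weight>` tags in the prompt pair a LoRA network name with a strength. As a sketch of how such tags could be pulled out of a prompt string, the regular expression below is an assumption about the tag format and is not taken from any particular web UI; it also tolerates the markdown-escaped `\>` form shown above.

```python
import re

# Assumed tag shape: <lora:NAME:WEIGHT>, optionally with a backslash before ">".
LORA_TAG = re.compile(r"<lora:([^:>]+):([0-9]*\.?[0-9]+)\\?>")


def extract_loras(prompt):
    """Return (name, weight) pairs for every LoRA tag found in the prompt."""
    return [(name, float(weight)) for name, weight in LORA_TAG.findall(prompt)]


prompt = "1girl, <lora:outfit-reverseoutfit:1>, floor, <lora:char-seia:0.9>"
loras = extract_loras(prompt)
print(loras)
```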

----

### Textual inversion embeddings

### Stable Diffusion embeddings of characters and outfits

Check each image's PNG info in the preview folder for the exact generation parameters.

# Sample of shinymas/character embeddings

Generated with the same prompt, swapping only the character phrase (char-X).

prompt: masterpiece, best_quality, clear details, char-kogane ,shirt,1girl,upper body

Negative prompt: fake_animal_ears, bad_prompt:0.8, (Cropped head), (Extra hands), (extra legs), (cropped), (missing legs), (duplicate), (morbid), cropped, (error), (bad anatomy), text, jpeg artifacts, (ugly), (morbid), (blurry), (low quality), (long leg), (poorly drawn), (bad proportions),

# Sample of char-toru wearing various outfit embeddings

Generated with the same prompt, swapping only the outfit phrase (outfit-X).

prompt: masterpiece, best_quality, clear details, illustration of char-toru standing wearing outfit-null:1.1, ((full body)) , (smile),(solo), boots, floor,

Negative prompt: fake_animal_ears, bad_prompt:0.8, (Cropped head), (Extra hands), (extra legs), (cropped), (missing legs), (duplicate), (morbid), cropped, (error), (bad anatomy), text, jpeg artifacts, (ugly), (morbid), (blurry), (low quality), (long leg), (poorly drawn), (bad proportions),

---

license: osl-3.0

---

|

1688bfd73b0a5f777c4e455de0bdb39a

|

jonatasgrosman/exp_w2v2t_et_unispeech-sat_s108

|

jonatasgrosman

|

unispeech-sat

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['et']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'et']

| false | true | true | 463 | false |

# exp_w2v2t_et_unispeech-sat_s108

Fine-tuned [microsoft/unispeech-sat-large](https://huggingface.co/microsoft/unispeech-sat-large) for speech recognition using the train split of [Common Voice 7.0 (et)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
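Before running inference it can help to verify the sampling rate of an input file. The sketch below uses only Python's standard `wave` module and therefore only handles WAV files; the helper names are hypothetical, and resampling itself is left to an audio library of your choice.

```python
import io
import wave

TARGET_SAMPLE_RATE = 16_000  # the rate this model expects


def is_16khz(wav_file) -> bool:
    """Return True if the WAV (path string or binary file object) is 16 kHz."""
    with wave.open(wav_file, "rb") as wav:
        return wav.getframerate() == TARGET_SAMPLE_RATE


def make_silent_wav(sample_rate, num_frames=160):
    """Build a short mono 16-bit silent WAV in memory, for demonstration."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        wav.writeframes(b"\x00\x00" * num_frames)
    buffer.seek(0)
    return buffer


print(is_16khz(make_silent_wav(16_000)))
print(is_16khz(make_silent_wav(44_100)))
```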

|

4cd8c12037349cbb916ee69838e71d1a

|

teddy322/wav2vec2-large-xls-r-300m-kor-11385-3

|

teddy322

|

wav2vec2

| 15 | 0 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['zeroth_korean_asr']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,332 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-kor-11385-3

This model is a fine-tuned version of [teddy322/wav2vec2-large-xls-r-300m-kor-11385-2](https://huggingface.co/teddy322/wav2vec2-large-xls-r-300m-kor-11385-2) on the zeroth_korean_asr dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.2425

- eval_wer: 0.1495