Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

3,401 | 95,229 |

ONNX Exporter for circular padding mode in convolution ops

|

module: onnx, triaged

|

### 🚀 The feature, motivation and pitch

Circular/wrap-around padding has recently been added to the Pad operator in ONNX opset 19:

https://github.com/onnx/onnx/blob/main/docs/Operators.md#Pad

When exporting convolution operators with `padding_mode` set to `circular`, one can directly make use of the new Pad operator instead of having to do concatenation.

### Alternatives

_No response_

### Additional context

_No response_

| 0 |

3,402 | 95,225 |

Remove conda virtualenv from the docker image

|

oncall: binaries, triaged, module: docker

|

### 🚀 The feature, motivation and pitch

Current docker image contains a conda virtualenv (`base`), in which all the libs are installed.

Since a docker image is already a kind a separated environement, I think a conda virtualenv is superfluous.

Conda could be removed, and pytorch could be installed in the native python installl with `pip`.

This could simplify the docker image, and possibly lighten it.

Unless there is another reason to use conda which I'm not aware of ?

cc @ezyang @seemethere @malfet

| 0 |

3,403 | 95,210 |

Add parallel attention layers and Multi-Query Attention (MQA) from PaLM to the fast path for transformers

|

oncall: transformer/mha

|

### 🚀 The feature, motivation and pitch

Parallel attention layers and MQA are introduced in PaLM [1]. The “standard” encoder layer, as currently implemented in PyTorch, follows the following structure:

Serial attention (with default `norm_first=False`):

`z = LN(x + Attention(x)); y = LN(z + MLP(z))`

A parallel attention layer from PaLM, on the other hand, is implemented as follows:

Parallel attention (with default `norm_first=False`):

`y = LN(x + Attention(x) + MLP(x))`

Parallel attention (with `norm_first=True`):

`y = x + Attention(LN(x)) + MLP(LN(x))`

As for MQA, the description from [1] is pretty concise and explains the advantage as well: “The standard Transformer formulation uses k attention heads, where the input vector for each timestep is linearly projected into “query”, “key”, and “value” tensors of shape [k, h], where h is the attention head size. Here, the key/value projections are shared for each head, i.e. “key” and “value” are projected to [1, h], but “query” is still projected to shape [k, h]. We have found that this has a neutral effect on model quality and training speed (Shazeer, 2019), but results in a significant cost savings at autoregressive decoding time. This is because standard multi-headed attention has low efficiency on accelerator hardware during auto-regressive decoding, because the key/value tensors are not shared between examples, and only a single token is decoded at a time.”

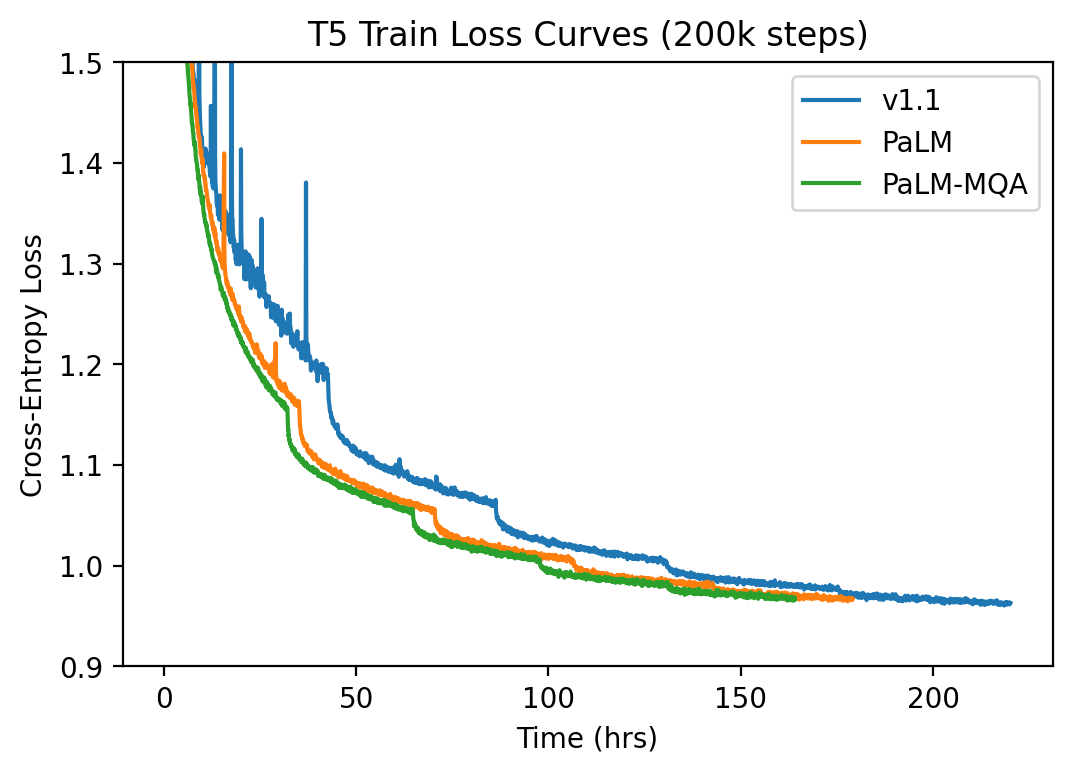

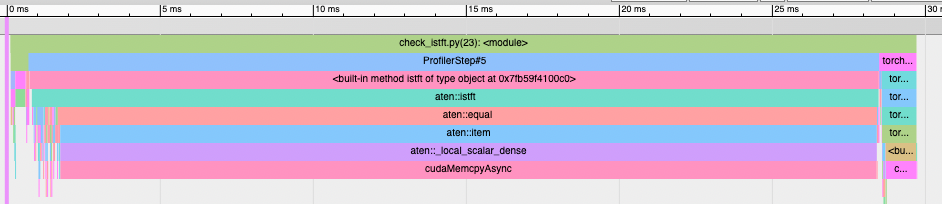

In our own experiments, we have seen a 23% speedup in training for T5-large when using parallel attention, with the same convergence, due to the ability to fuse the attention and feed-forward projection layers into a single matmul operation. Furthermore, adding MQA on top shaves off an extra 10% training time. See the image below for our training loss over time.

Of course, this is not free, and we have found that for larger models (3B and 11B parameters), memory can peak high enough that it can OOM an A100 GPU, even when running with FSDP.

As we build larger models and optimize them for inference, we would like to take advantage of the fast path for Transformers already available for the serial implementation, given the already observed advantage in training and the claimed speed-up on inference from using MQA, as well as explore ways to make this approach more memory efficient in PyTorch.

We envision two ways in which this can happen:

1. Through new parameters in both nn.TransformerEncoderLayer and nn.TransformerDecoderLayer (e.g. booleans `use_parallel_attention` and `use_mqa`), that would route the code through the parallel path and/or MQA.

1. New layers nn.TransformerParallelEncoderLayer and nn.TransformerParallelDecoderLayer

Both paths would eventually call the native `transformer_encoder_layer_forward` (https://github.com/pytorch/pytorch/blob/0dceaf07cd1236859953b6f85a61dc4411d10f87/aten/src/ATen/native/transformers/transformer.cpp#L65) function (or a new parallel version of it that ensures kernel fusing) if all conditions to hit the fast path are met.

cc: @daviswer @supriyogit @raghukiran1224 @mudhakar @mayank31398 @cpuhrsch @HamidShojanazeri

References:

[1] [PaLM: Scaling Language Models with Pathways](https://arxiv.org/pdf/2204.02311.pdf)

### Alternatives

_No response_

### Additional context

_No response_

cc @jbschlosser @bhosmer @cpuhrsch @erichan1

| 15 |

3,404 | 95,207 |

new backend privateuseone with "to" op

|

triaged, module: backend

|

### 🐛 Describe the bug

when I use a new backend with the key of privateuseone, and we have implement for the op of "to" with backend like this. I think it is an error with the dispatchkey of "AutogradPrivateUse1", So I do some tests for the bakcend.

### test_code

I add to_dtype func based on test cpp_extensions/open_registration_extension.cpp, link

https://github.com/heidongxianhua/pytorch/commit/fdb57dac418ec849dfd7900b1b69e815840b06b5

And when run the test with python3 test_cpp_extensions_open_device_registration.py, It dose not work and got error message is here. I check the to_dtype func have been registered for PrivateUse1 backend

```

Fail to import hypothesis in common_utils, tests are not derandomized

Using /root/.cache/torch_extensions/py38_cpu as PyTorch extensions root...

Creating extension directory /root/.cache/torch_extensions/py38_cpu/custom_device_extension...

Emitting ninja build file /root/.cache/torch_extensions/py38_cpu/custom_device_extension/build.ninja...

Building extension module custom_device_extension...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

[1/2] c++ -MMD -MF open_registration_extension.o.d -DTORCH_EXTENSION_NAME=custom_device_extension -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -I/home/shibo/device_type/pytorch_shibo/test/cpp_extensions -isystem /root/anaconda3/envs/shibo2/lib/python3.8/site-packages/torch/include -isystem /root/anaconda3/envs/shibo2/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -isystem /root/anaconda3/envs/shibo2/lib/python3.8/site-packages/torch/include/TH -isystem /root/anaconda3/envs/shibo2/lib/python3.8/site-packages/torch/include/THC -isystem /root/anaconda3/envs/shibo2/include/python3.8 -D_GLIBCXX_USE_CXX11_ABI=1 -fPIC -std=c++17 -g -c /home/shibo/device_type/pytorch_shibo/test/cpp_extensions/open_registration_extension.cpp -o open_registration_extension.o

[2/2] c++ open_registration_extension.o -shared -L/root/anaconda3/envs/shibo2/lib/python3.8/site-packages/torch/lib -lc10 -ltorch_cpu -ltorch -ltorch_python -o custom_device_extension.so

Loading extension module custom_device_extension...

E

======================================================================

ERROR: test_open_device_registration (__main__.TestCppExtensionOpenRgistration)

----------------------------------------------------------------------

Traceback (most recent call last):

File "test_cpp_extensions_open_device_registration.py", line 78, in test_open_device_registration

y_int32 = y.to(torch.int32)

NotImplementedError: Could not run 'aten::to.dtype' with arguments from the 'AutogradPrivateUse1' backend. This could be because the operator doesn't exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit https://fburl.com/ptmfixes for possible resolutions. 'aten::to.dtype' is only available for these backends: [CPU, CUDA, HIP, XLA, MPS, IPU, XPU, HPU, VE, Lazy, Meta, MTIA, PrivateUse1, PrivateUse2, PrivateUse3, FPGA, ORT, Vulkan, Metal, QuantizedCPU, QuantizedCUDA, QuantizedHIP, QuantizedXLA, QuantizedMPS, QuantizedIPU, QuantizedXPU, QuantizedHPU, QuantizedVE, QuantizedLazy, QuantizedMeta, QuantizedMTIA, QuantizedPrivateUse1, QuantizedPrivateUse2, QuantizedPrivateUse3, CustomRNGKeyId, MkldnnCPU, SparseCPU, SparseCUDA, SparseHIP, SparseXLA, SparseMPS, SparseIPU, SparseXPU, SparseHPU, SparseVE, SparseLazy, SparseMeta, SparseMTIA, SparsePrivateUse1, SparsePrivateUse2, SparsePrivateUse3, SparseCsrCPU, SparseCsrCUDA, NestedTensorCPU, NestedTensorCUDA, NestedTensorHIP, NestedTensorXLA, NestedTensorMPS, NestedTensorIPU, NestedTensorXPU, NestedTensorHPU, NestedTensorVE, NestedTensorLazy, NestedTensorMeta, NestedTensorMTIA, NestedTensorPrivateUse1, NestedTensorPrivateUse2, NestedTensorPrivateUse3, BackendSelect, Python, FuncTorchDynamicLayerBackMode, Functionalize, Named, Conjugate, Negative, ZeroTensor, ADInplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradHIP, AutogradXLA, AutogradMPS, AutogradIPU, AutogradXPU, AutogradHPU, AutogradVE, AutogradLazy, AutogradMeta, AutogradMTIA, AutogradPrivateUse2, AutogradPrivateUse3, AutogradNestedTensor, Tracer, AutocastCPU, AutocastCUDA, FuncTorchBatched, FuncTorchVmapMode, Batched, VmapMode, FuncTorchGradWrapper, PythonTLSSnapshot, FuncTorchDynamicLayerFrontMode, PythonDispatcher]

Undefined: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

CPU: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

CUDA: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

HIP: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

XLA: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

MPS: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

IPU: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

XPU: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

HPU: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

VE: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

Lazy: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

Meta: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

MTIA: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

PrivateUse1: registered at /home/shibo/device_type/pytorch_shibo/test/cpp_extensions/open_registration_extension.cpp:90 [kernel]

PrivateUse2: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

PrivateUse3: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

FPGA: registered at /home/shibo/device_type/pytorch_test/build/aten/src/ATen/RegisterCompositeImplicitAutograd.cpp:7140 [math kernel]

............

```

### Versions

```

Collecting environment information...

PyTorch version: 2.0.0a0+git900db22

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.4 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 8.0.1 (based on LLVM 8.0.1)

CMake version: version 3.24.1

Libc version: glibc-2.27

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-4.15.0-76-generic-x86_64-with-glibc2.17

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: False

Versions of relevant libraries:

[pip3] numpy==1.24.2

[pip3] torch==2.0.0a0+git2f0b0c5

[pip3] torch-npu==2.0.0

[conda] numpy 1.24.2 pypi_0 pypi

[conda] torch 2.0.0a0+git900db22 pypi_0 pypi

[conda] torch-npu 2.0.0 pypi_0 pypi

```

| 6 |

3,405 | 95,197 |

Pytorch 2.0 [compile] scatter_add bf16 Compiled Fx GraphModule failed

|

triaged, module: bfloat16, oncall: pt2, module: cpu inductor

|

### 🐛 Describe the bug

Please find minifier code below to reproduce the issue:

```

import os

from math import inf

import torch

from torch import tensor, device

import torch.fx as fx

import functools

import torch._dynamo

from torch._dynamo.debug_utils import run_fwd_maybe_bwd

from torch._dynamo.backends.registry import lookup_backend

from torch._dynamo.testing import rand_strided

import torch._dynamo.config

import torch._inductor.config

import torch._functorch.config

torch._dynamo.config.load_config(b'\x80\x02}q\x00(X\x0b\x00\x00\x00output_codeq\x01\x89X\r\x00\x00\x00log_file_nameq\x02NX\x07\x00\x00\x00verboseq\x03\x89X\x11\x00\x00\x00output_graph_codeq\x04\x89X\x12\x00\x00\x00verify_correctnessq\x05\x89X\x12\x00\x00\x00minimum_call_countq\x06K\x01X\x15\x00\x00\x00dead_code_eliminationq\x07\x88X\x10\x00\x00\x00cache_size_limitq\x08K@X\x14\x00\x00\x00specialize_int_floatq\t\x88X\x0e\x00\x00\x00dynamic_shapesq\n\x89X\x10\x00\x00\x00guard_nn_modulesq\x0b\x89X\x1b\x00\x00\x00traceable_tensor_subclassesq\x0cc__builtin__\nset\nq\r]q\x0e\x85q\x0fRq\x10X\x0f\x00\x00\x00suppress_errorsq\x11\x89X\x15\x00\x00\x00replay_record_enabledq\x12\x89X \x00\x00\x00rewrite_assert_with_torch_assertq\x13\x88X\x12\x00\x00\x00print_graph_breaksq\x14\x89X\x07\x00\x00\x00disableq\x15\x89X*\x00\x00\x00allowed_functions_module_string_ignorelistq\x16h\r]q\x17(X\x0b\x00\x00\x00torch._refsq\x18X\x0c\x00\x00\x00torch._primsq\x19X\r\x00\x00\x00torch.testingq\x1aX\x13\x00\x00\x00torch.distributionsq\x1bX\r\x00\x00\x00torch._decompq\x1ce\x85q\x1dRq\x1eX\x12\x00\x00\x00repro_forward_onlyq\x1f\x89X\x0f\x00\x00\x00repro_toleranceq G?PbM\xd2\xf1\xa9\xfcX\x16\x00\x00\x00capture_scalar_outputsq!\x89X\x19\x00\x00\x00enforce_cond_guards_matchq"\x88X\x0c\x00\x00\x00optimize_ddpq#\x88X\x1a\x00\x00\x00raise_on_ctx_manager_usageq$\x88X\x1c\x00\x00\x00raise_on_unsafe_aot_autogradq%\x89X\x18\x00\x00\x00error_on_nested_fx_traceq&\x88X\t\x00\x00\x00allow_rnnq\'\x89X\x08\x00\x00\x00base_dirq(X1\x00\x00\x00/home/jthakur/.pt_2_0/lib/python3.8/site-packagesq)X\x0e\x00\x00\x00debug_dir_rootq*Xm\x00\x00\x00/home/jthakur/qnpu/1.9.0-413/src/pytorch-training-tests/tests/torch_feature_val/single_op/torch_compile_debugq+X)\x00\x00\x00DO_NOT_USE_legacy_non_fake_example_inputsq,\x89X\x13\x00\x00\x00_save_config_ignoreq-h\r]q.(X\x12\x00\x00\x00constant_functionsq/X\x0b\x00\x00\x00repro_afterq0X!\x00\x00\x00skipfiles_inline_module_allowlistq1X\x0b\x00\x00\x00repro_levelq2e\x85q3Rq4u.')

torch._inductor.config.load_config(b'\x80\x02}q\x00(X\x05\x00\x00\x00debugq\x01\x89X\x10\x00\x00\x00disable_progressq\x02\x88X\x10\x00\x00\x00verbose_progressq\x03\x89X\x0b\x00\x00\x00cpp_wrapperq\x04\x89X\x03\x00\x00\x00dceq\x05\x89X\x14\x00\x00\x00static_weight_shapesq\x06\x88X\x0c\x00\x00\x00size_assertsq\x07\x88X\x10\x00\x00\x00pick_loop_ordersq\x08\x88X\x0f\x00\x00\x00inplace_buffersq\t\x88X\x11\x00\x00\x00benchmark_harnessq\n\x88X\x0f\x00\x00\x00epilogue_fusionq\x0b\x89X\x15\x00\x00\x00epilogue_fusion_firstq\x0c\x89X\x0f\x00\x00\x00pattern_matcherq\r\x88X\n\x00\x00\x00reorderingq\x0e\x89X\x0c\x00\x00\x00max_autotuneq\x0f\x89X\x17\x00\x00\x00realize_reads_thresholdq\x10K\x04X\x17\x00\x00\x00realize_bytes_thresholdq\x11M\xd0\x07X\x1b\x00\x00\x00realize_acc_reads_thresholdq\x12K\x08X\x0f\x00\x00\x00fallback_randomq\x13\x89X\x12\x00\x00\x00implicit_fallbacksq\x14\x88X\r\x00\x00\x00prefuse_nodesq\x15\x88X\x0b\x00\x00\x00tune_layoutq\x16\x89X\x11\x00\x00\x00aggressive_fusionq\x17\x89X\x0f\x00\x00\x00max_fusion_sizeq\x18K@X\x1b\x00\x00\x00unroll_reductions_thresholdq\x19K\x08X\x0e\x00\x00\x00comment_originq\x1a\x89X\x0f\x00\x00\x00compile_threadsq\x1bK\x0cX\x13\x00\x00\x00kernel_name_max_opsq\x1cK\nX\r\x00\x00\x00shape_paddingq\x1d\x89X\x0e\x00\x00\x00permute_fusionq\x1e\x89X\x1a\x00\x00\x00profiler_mark_wrapper_callq\x1f\x89X\x0b\x00\x00\x00cpp.threadsq J\xff\xff\xff\xffX\x13\x00\x00\x00cpp.dynamic_threadsq!\x89X\x0b\x00\x00\x00cpp.simdlenq"NX\x12\x00\x00\x00cpp.min_chunk_sizeq#M\x00\x10X\x07\x00\x00\x00cpp.cxxq$NX\x03\x00\x00\x00g++q%\x86q&X\x19\x00\x00\x00cpp.enable_kernel_profileq\'\x89X\x12\x00\x00\x00cpp.weight_prepackq(\x88X\x11\x00\x00\x00triton.cudagraphsq)\x89X\x17\x00\x00\x00triton.debug_sync_graphq*\x89X\x18\x00\x00\x00triton.debug_sync_kernelq+\x89X\x12\x00\x00\x00triton.convolutionq,X\x04\x00\x00\x00atenq-X\x15\x00\x00\x00triton.dense_indexingq.\x89X\x10\x00\x00\x00triton.max_tilesq/K\x02X\x19\x00\x00\x00triton.autotune_pointwiseq0\x88X\'\x00\x00\x00triton.tiling_prevents_pointwise_fusionq1\x88X\'\x00\x00\x00triton.tiling_prevents_reduction_fusionq2\x88X\x1b\x00\x00\x00triton.ordered_kernel_namesq3\x89X\x1f\x00\x00\x00triton.descriptive_kernel_namesq4\x89X\r\x00\x00\x00trace.enabledq5\x89X\x0f\x00\x00\x00trace.debug_logq6\x88X\x0e\x00\x00\x00trace.info_logq7\x89X\x0e\x00\x00\x00trace.fx_graphq8\x88X\x1a\x00\x00\x00trace.fx_graph_transformedq9\x88X\x13\x00\x00\x00trace.ir_pre_fusionq:\x88X\x14\x00\x00\x00trace.ir_post_fusionq;\x88X\x11\x00\x00\x00trace.output_codeq<\x88X\x13\x00\x00\x00trace.graph_diagramq=\x89X\x15\x00\x00\x00trace.compile_profileq>\x89X\x10\x00\x00\x00trace.upload_tarq?Nu.')

torch._functorch.config.load_config(b'\x80\x02}q\x00(X\x11\x00\x00\x00use_functionalizeq\x01\x88X\x0f\x00\x00\x00use_fake_tensorq\x02\x88X\x16\x00\x00\x00fake_tensor_allow_metaq\x03\x88X\x0c\x00\x00\x00debug_assertq\x04\x88X\x14\x00\x00\x00debug_fake_cross_refq\x05\x89X\x11\x00\x00\x00debug_partitionerq\x06\x89X\x0c\x00\x00\x00debug_graphsq\x07\x89X\x0b\x00\x00\x00debug_jointq\x08\x89X\x12\x00\x00\x00use_dynamic_shapesq\t\x89X\x14\x00\x00\x00static_weight_shapesq\n\x88X\x03\x00\x00\x00cseq\x0b\x88X\x10\x00\x00\x00max_dist_from_bwq\x0cK\x03X\t\x00\x00\x00log_levelq\rK\x14u.')

# REPLACEABLE COMMENT FOR TESTING PURPOSES

args = [((129, 129), (129, 1), torch.int64, 'cpu', False), ((129, 129), (129, 1), torch.bfloat16, 'cpu', True), ((129, 129), (129, 1), torch.bfloat16, 'cpu', True)]

args = [rand_strided(sh, st, dt, dev).requires_grad_(rg) for (sh, st, dt, dev, rg) in args]

from torch.nn import *

class Repro(torch.nn.Module):

def __init__(self):

super().__init__()

def forward(self, op_inputs_dict_index_ : torch.Tensor, op_inputs_dict_src_ : torch.Tensor, op_inputs_dict_input_ : torch.Tensor):

scatter_add = torch.scatter_add(index = op_inputs_dict_index_, dim = -2, src = op_inputs_dict_src_, input = op_inputs_dict_input_); op_inputs_dict_index_ = op_inputs_dict_src_ = op_inputs_dict_input_ = None

return (scatter_add,)

mod = Repro()

# Setup debug minifier compiler

torch._dynamo.debug_utils.MINIFIER_SPAWNED = True

compiler_fn = lookup_backend("dynamo_minifier_backend")

dynamo_minifier_backend = functools.partial(

compiler_fn,

compiler_name="inductor",

)

opt_mod = torch._dynamo.optimize(dynamo_minifier_backend)(mod)

with torch.cuda.amp.autocast(enabled=False):

opt_mod(*args)

```

Error:

```

File "/usr/lib/python3.8/concurrent/futures/thread.py", line 57, in run

result = self.fn(*self.args, **self.kwargs)

File "/home/jthakur/.pt_2_0/lib/python3.8/site-packages/torch/_inductor/codecache.py", line 691, in task

return CppCodeCache.load(source_code).kernel

File "/home/jthakur/.pt_2_0/lib/python3.8/site-packages/torch/_inductor/codecache.py", line 506, in load

raise exc.CppCompileError(cmd, e.output) from e

torch._inductor.exc.CppCompileError: C++ compile error

```

Same graph works fine in eager mode execution

### Versions

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==2.0.0.dev20230209+cpu

[pip3] torchaudio==2.0.0.dev20230209+cpu

[pip3] torchvision==0.15.0.dev20230209+cpu

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 1 |

3,406 | 95,194 |

High Cuda Memory Consumption for Simple ResNet50 Inference

|

oncall: jit

|

### 🐛 Describe the bug

I run a simple inference of a jit::traced Resnet50 model with the C++ API and record a peak GPU memory consumption of 1.2gb. The inference script is taken from the docs https://pytorch.org/tutorials/advanced/cpp_export.html and looks as follows:

```

#include <torch/script.h> // One-stop header.

#include <iostream>

#include <memory>

#include <vector>

int main(int argc, const char* argv[]) {

if (argc != 2) {

std::cerr << "usage: example-app <path-to-exported-script-module>\n";

return -1;

}

{

c10::InferenceMode guard;

torch::jit::script::Module module;

try {

// Deserialize the ScriptModule from a file using

// torch::jit::load().

module = torch::jit::load(argv[1]);

module.to(at::kCUDA);

} catch (const c10::Error& e) {

std::cerr << "error loading the model\n";

return -1;

}

std::vector<torch::jit::IValue> inputs;

at::Tensor rand = torch::rand({1, 3, 224, 224});

at::Tensor rand2 = at::_cast_Half(rand);

inputs.push_back(rand2.to(at::kCUDA));

at::Tensor output = module.forward(inputs).toTensor();

std::cout << output.slice(/*dim=*/1, /*start=*/0, /*end=*/5) << '\n';

}

std::cout << "ok\n";

}

```

When running the script with a traced model input (converted to half), the memory consumption goes to 1.2gb. As a comparison:

- A back-of-the-envelope calculation: The model has about 40m parameters and the input is of size 3x224x224 , so I think a very, very memory-efficient inference should be at ~100-150 mb.

- TensorRT uses ~260mb for the inference.

We would like to use the libtorch for inference in production and I would be great if there was a way to reduce the memory consumption to ~500mb. Is there a way to do that?

### Versions

build-version in the libtorch folder shows:

```

1.13.1+cu116

```

Should I collect other relevant info?

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 0 |

3,407 | 95,189 |

Pytorch 2.0 [compile] index_add bf16 compilation error

|

triaged, module: bfloat16, oncall: pt2, module: cpu inductor

|

### 🐛 Describe the bug

Please use below minifier code to reproduce the issue

```

import os

from math import inf

import torch

from torch import tensor, device

import torch.fx as fx

import functools

import torch._dynamo

from torch._dynamo.debug_utils import run_fwd_maybe_bwd

from torch._dynamo.backends.registry import lookup_backend

from torch._dynamo.testing import rand_strided

import torch._dynamo.config

import torch._inductor.config

import torch._functorch.config

torch._dynamo.config.load_config(b'\x80\x02}q\x00(X\x0b\x00\x00\x00output_codeq\x01\x89X\r\x00\x00\x00log_file_nameq\x02NX\x07\x00\x00\x00verboseq\x03\x89X\x11\x00\x00\x00output_graph_codeq\x04\x89X\x12\x00\x00\x00verify_correctnessq\x05\x89X\x12\x00\x00\x00minimum_call_countq\x06K\x01X\x15\x00\x00\x00dead_code_eliminationq\x07\x88X\x10\x00\x00\x00cache_size_limitq\x08M\xb8\x0bX\x14\x00\x00\x00specialize_int_floatq\t\x88X\x0e\x00\x00\x00dynamic_shapesq\n\x89X\x10\x00\x00\x00guard_nn_modulesq\x0b\x89X\x1b\x00\x00\x00traceable_tensor_subclassesq\x0cc__builtin__\nset\nq\r]q\x0e\x85q\x0fRq\x10X\x0f\x00\x00\x00suppress_errorsq\x11\x89X\x15\x00\x00\x00replay_record_enabledq\x12\x89X \x00\x00\x00rewrite_assert_with_torch_assertq\x13\x88X\x12\x00\x00\x00print_graph_breaksq\x14\x89X\x07\x00\x00\x00disableq\x15\x89X*\x00\x00\x00allowed_functions_module_string_ignorelistq\x16h\r]q\x17(X\x0c\x00\x00\x00torch._primsq\x18X\x0b\x00\x00\x00torch._refsq\x19X\r\x00\x00\x00torch.testingq\x1aX\x13\x00\x00\x00torch.distributionsq\x1bX\r\x00\x00\x00torch._decompq\x1ce\x85q\x1dRq\x1eX\x12\x00\x00\x00repro_forward_onlyq\x1f\x89X\x0f\x00\x00\x00repro_toleranceq G?PbM\xd2\xf1\xa9\xfcX\x16\x00\x00\x00capture_scalar_outputsq!\x89X\x19\x00\x00\x00enforce_cond_guards_matchq"\x88X\x0c\x00\x00\x00optimize_ddpq#\x88X\x1a\x00\x00\x00raise_on_ctx_manager_usageq$\x88X\x1c\x00\x00\x00raise_on_unsafe_aot_autogradq%\x89X\x18\x00\x00\x00error_on_nested_fx_traceq&\x88X\t\x00\x00\x00allow_rnnq\'\x89X\x08\x00\x00\x00base_dirq(X1\x00\x00\x00/home/jthakur/.pt_2_0/lib/python3.8/site-packagesq)X\x0e\x00\x00\x00debug_dir_rootq*Xm\x00\x00\x00/home/jthakur/qnpu/1.9.0-413/src/pytorch-training-tests/tests/torch_feature_val/single_op/torch_compile_debugq+X)\x00\x00\x00DO_NOT_USE_legacy_non_fake_example_inputsq,\x89X\x13\x00\x00\x00_save_config_ignoreq-h\r]q.(X\x12\x00\x00\x00constant_functionsq/X\x0b\x00\x00\x00repro_afterq0X!\x00\x00\x00skipfiles_inline_module_allowlistq1X\x0b\x00\x00\x00repro_levelq2e\x85q3Rq4u.')

torch._inductor.config.load_config(b'\x80\x02}q\x00(X\x05\x00\x00\x00debugq\x01\x89X\x10\x00\x00\x00disable_progressq\x02\x88X\x10\x00\x00\x00verbose_progressq\x03\x89X\x0b\x00\x00\x00cpp_wrapperq\x04\x89X\x03\x00\x00\x00dceq\x05\x89X\x14\x00\x00\x00static_weight_shapesq\x06\x88X\x0c\x00\x00\x00size_assertsq\x07\x88X\x10\x00\x00\x00pick_loop_ordersq\x08\x88X\x0f\x00\x00\x00inplace_buffersq\t\x88X\x11\x00\x00\x00benchmark_harnessq\n\x88X\x0f\x00\x00\x00epilogue_fusionq\x0b\x89X\x15\x00\x00\x00epilogue_fusion_firstq\x0c\x89X\x0f\x00\x00\x00pattern_matcherq\r\x88X\n\x00\x00\x00reorderingq\x0e\x89X\x0c\x00\x00\x00max_autotuneq\x0f\x89X\x17\x00\x00\x00realize_reads_thresholdq\x10K\x04X\x17\x00\x00\x00realize_bytes_thresholdq\x11M\xd0\x07X\x1b\x00\x00\x00realize_acc_reads_thresholdq\x12K\x08X\x0f\x00\x00\x00fallback_randomq\x13\x89X\x12\x00\x00\x00implicit_fallbacksq\x14\x88X\r\x00\x00\x00prefuse_nodesq\x15\x88X\x0b\x00\x00\x00tune_layoutq\x16\x89X\x11\x00\x00\x00aggressive_fusionq\x17\x89X\x0f\x00\x00\x00max_fusion_sizeq\x18K@X\x1b\x00\x00\x00unroll_reductions_thresholdq\x19K\x08X\x0e\x00\x00\x00comment_originq\x1a\x89X\x0f\x00\x00\x00compile_threadsq\x1bK\x0cX\x13\x00\x00\x00kernel_name_max_opsq\x1cK\nX\r\x00\x00\x00shape_paddingq\x1d\x89X\x0e\x00\x00\x00permute_fusionq\x1e\x89X\x1a\x00\x00\x00profiler_mark_wrapper_callq\x1f\x89X\x0b\x00\x00\x00cpp.threadsq J\xff\xff\xff\xffX\x13\x00\x00\x00cpp.dynamic_threadsq!\x89X\x0b\x00\x00\x00cpp.simdlenq"NX\x12\x00\x00\x00cpp.min_chunk_sizeq#M\x00\x10X\x07\x00\x00\x00cpp.cxxq$NX\x03\x00\x00\x00g++q%\x86q&X\x19\x00\x00\x00cpp.enable_kernel_profileq\'\x89X\x12\x00\x00\x00cpp.weight_prepackq(\x88X\x11\x00\x00\x00triton.cudagraphsq)\x89X\x17\x00\x00\x00triton.debug_sync_graphq*\x89X\x18\x00\x00\x00triton.debug_sync_kernelq+\x89X\x12\x00\x00\x00triton.convolutionq,X\x04\x00\x00\x00atenq-X\x15\x00\x00\x00triton.dense_indexingq.\x89X\x10\x00\x00\x00triton.max_tilesq/K\x02X\x19\x00\x00\x00triton.autotune_pointwiseq0\x88X\'\x00\x00\x00triton.tiling_prevents_pointwise_fusionq1\x88X\'\x00\x00\x00triton.tiling_prevents_reduction_fusionq2\x88X\x1b\x00\x00\x00triton.ordered_kernel_namesq3\x89X\x1f\x00\x00\x00triton.descriptive_kernel_namesq4\x89X\r\x00\x00\x00trace.enabledq5\x89X\x0f\x00\x00\x00trace.debug_logq6\x88X\x0e\x00\x00\x00trace.info_logq7\x89X\x0e\x00\x00\x00trace.fx_graphq8\x88X\x1a\x00\x00\x00trace.fx_graph_transformedq9\x88X\x13\x00\x00\x00trace.ir_pre_fusionq:\x88X\x14\x00\x00\x00trace.ir_post_fusionq;\x88X\x11\x00\x00\x00trace.output_codeq<\x88X\x13\x00\x00\x00trace.graph_diagramq=\x89X\x15\x00\x00\x00trace.compile_profileq>\x89X\x10\x00\x00\x00trace.upload_tarq?Nu.')

torch._functorch.config.load_config(b'\x80\x02}q\x00(X\x11\x00\x00\x00use_functionalizeq\x01\x88X\x0f\x00\x00\x00use_fake_tensorq\x02\x88X\x16\x00\x00\x00fake_tensor_allow_metaq\x03\x88X\x0c\x00\x00\x00debug_assertq\x04\x88X\x14\x00\x00\x00debug_fake_cross_refq\x05\x89X\x11\x00\x00\x00debug_partitionerq\x06\x89X\x0c\x00\x00\x00debug_graphsq\x07\x89X\x0b\x00\x00\x00debug_jointq\x08\x89X\x12\x00\x00\x00use_dynamic_shapesq\t\x89X\x14\x00\x00\x00static_weight_shapesq\n\x88X\x03\x00\x00\x00cseq\x0b\x88X\x10\x00\x00\x00max_dist_from_bwq\x0cK\x03X\t\x00\x00\x00log_levelq\rK\x14u.')

# REPLACEABLE COMMENT FOR TESTING PURPOSES

args = [((3,), (1,), torch.int64, 'cpu', False), ((4, 3, 32, 8), (768, 256, 8, 1), torch.bfloat16, 'cpu', True), ((4, 16, 32, 8), (4096, 256, 8, 1), torch.bfloat16, 'cpu', True)]

args = [rand_strided(sh, st, dt, dev).requires_grad_(rg) for (sh, st, dt, dev, rg) in args]

from torch.nn import *

class Repro(torch.nn.Module):

def __init__(self):

super().__init__()

def forward(self, op_inputs_dict_index_ : torch.Tensor, op_inputs_dict_source_ : torch.Tensor, op_inputs_dict_input_ : torch.Tensor):

index_add = torch.index_add(dim = 1, index = op_inputs_dict_index_, source = op_inputs_dict_source_, alpha = 2, input = op_inputs_dict_input_); op_inputs_dict_index_ = op_inputs_dict_source_ = op_inputs_dict_input_ = None

return (index_add,)

mod = Repro()

# Setup debug minifier compiler

torch._dynamo.debug_utils.MINIFIER_SPAWNED = True

compiler_fn = lookup_backend("dynamo_minifier_backend")

dynamo_minifier_backend = functools.partial(

compiler_fn,

compiler_name="inductor",

)

opt_mod = torch._dynamo.optimize(dynamo_minifier_backend)(mod)

with torch.cuda.amp.autocast(enabled=False):

opt_mod(*args)

```

Error:

```

return CppCodeCache.load(source_code).kernel

File "/home/jthakur/.pt_2_0/lib/python3.8/site-packages/torch/_inductor/codecache.py", line 506, in load

raise exc.CppCompileError(cmd, e.output) from e

torch._inductor.exc.CppCompileError: C++ compile error

```

### Versions

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==2.0.0.dev20230209+cpu

[pip3] torchaudio==2.0.0.dev20230209+cpu

[pip3] torchvision==0.15.0.dev20230209+cpu

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 6 |

3,408 | 95,186 |

Pytorch 2.0 [compile] as_strided inplace causes out of bounds for storage

|

triaged, oncall: pt2, module: dynamo

|

### 🐛 Describe the bug

Please use below code to reproduce error:

```

import os

from math import inf

import torch

from torch import tensor, device

import torch.fx as fx

import functools

import torch._dynamo

from torch._dynamo.debug_utils import run_fwd_maybe_bwd

from torch._dynamo.backends.registry import lookup_backend

from torch._dynamo.testing import rand_strided

import torch._dynamo.config

import torch._inductor.config

import torch._functorch.config

torch._dynamo.config.load_config(b'\x80\x02}q\x00(X\x0b\x00\x00\x00output_codeq\x01\x89X\r\x00\x00\x00log_file_nameq\x02NX\x07\x00\x00\x00verboseq\x03\x89X\x11\x00\x00\x00output_graph_codeq\x04\x89X\x12\x00\x00\x00verify_correctnessq\x05\x89X\x12\x00\x00\x00minimum_call_countq\x06K\x01X\x15\x00\x00\x00dead_code_eliminationq\x07\x88X\x10\x00\x00\x00cache_size_limitq\x08M\xb8\x0bX\x14\x00\x00\x00specialize_int_floatq\t\x88X\x0e\x00\x00\x00dynamic_shapesq\n\x89X\x10\x00\x00\x00guard_nn_modulesq\x0b\x89X\x1b\x00\x00\x00traceable_tensor_subclassesq\x0cc__builtin__\nset\nq\r]q\x0e\x85q\x0fRq\x10X\x0f\x00\x00\x00suppress_errorsq\x11\x89X\x15\x00\x00\x00replay_record_enabledq\x12\x89X \x00\x00\x00rewrite_assert_with_torch_assertq\x13\x88X\x12\x00\x00\x00print_graph_breaksq\x14\x89X\x07\x00\x00\x00disableq\x15\x89X*\x00\x00\x00allowed_functions_module_string_ignorelistq\x16h\r]q\x17(X\x0b\x00\x00\x00torch._refsq\x18X\r\x00\x00\x00torch._decompq\x19X\x13\x00\x00\x00torch.distributionsq\x1aX\x0c\x00\x00\x00torch._primsq\x1bX\r\x00\x00\x00torch.testingq\x1ce\x85q\x1dRq\x1eX\x12\x00\x00\x00repro_forward_onlyq\x1f\x89X\x0f\x00\x00\x00repro_toleranceq G?PbM\xd2\xf1\xa9\xfcX\x16\x00\x00\x00capture_scalar_outputsq!\x89X\x19\x00\x00\x00enforce_cond_guards_matchq"\x88X\x0c\x00\x00\x00optimize_ddpq#\x88X\x1a\x00\x00\x00raise_on_ctx_manager_usageq$\x88X\x1c\x00\x00\x00raise_on_unsafe_aot_autogradq%\x89X\x18\x00\x00\x00error_on_nested_fx_traceq&\x88X\t\x00\x00\x00allow_rnnq\'\x89X\x08\x00\x00\x00base_dirq(X1\x00\x00\x00/home/jthakur/.pt_2_0/lib/python3.8/site-packagesq)X\x0e\x00\x00\x00debug_dir_rootq*Xm\x00\x00\x00/home/jthakur/qnpu/1.9.0-413/src/pytorch-training-tests/tests/torch_feature_val/single_op/torch_compile_debugq+X)\x00\x00\x00DO_NOT_USE_legacy_non_fake_example_inputsq,\x89X\x13\x00\x00\x00_save_config_ignoreq-h\r]q.(X!\x00\x00\x00skipfiles_inline_module_allowlistq/X\x0b\x00\x00\x00repro_afterq0X\x0b\x00\x00\x00repro_levelq1X\x12\x00\x00\x00constant_functionsq2e\x85q3Rq4u.')

torch._inductor.config.load_config(b'\x80\x02}q\x00(X\x05\x00\x00\x00debugq\x01\x89X\x10\x00\x00\x00disable_progressq\x02\x88X\x10\x00\x00\x00verbose_progressq\x03\x89X\x0b\x00\x00\x00cpp_wrapperq\x04\x89X\x03\x00\x00\x00dceq\x05\x89X\x14\x00\x00\x00static_weight_shapesq\x06\x88X\x0c\x00\x00\x00size_assertsq\x07\x88X\x10\x00\x00\x00pick_loop_ordersq\x08\x88X\x0f\x00\x00\x00inplace_buffersq\t\x88X\x11\x00\x00\x00benchmark_harnessq\n\x88X\x0f\x00\x00\x00epilogue_fusionq\x0b\x89X\x15\x00\x00\x00epilogue_fusion_firstq\x0c\x89X\x0f\x00\x00\x00pattern_matcherq\r\x88X\n\x00\x00\x00reorderingq\x0e\x89X\x0c\x00\x00\x00max_autotuneq\x0f\x89X\x17\x00\x00\x00realize_reads_thresholdq\x10K\x04X\x17\x00\x00\x00realize_bytes_thresholdq\x11M\xd0\x07X\x1b\x00\x00\x00realize_acc_reads_thresholdq\x12K\x08X\x0f\x00\x00\x00fallback_randomq\x13\x89X\x12\x00\x00\x00implicit_fallbacksq\x14\x88X\r\x00\x00\x00prefuse_nodesq\x15\x88X\x0b\x00\x00\x00tune_layoutq\x16\x89X\x11\x00\x00\x00aggressive_fusionq\x17\x89X\x0f\x00\x00\x00max_fusion_sizeq\x18K@X\x1b\x00\x00\x00unroll_reductions_thresholdq\x19K\x08X\x0e\x00\x00\x00comment_originq\x1a\x89X\x0f\x00\x00\x00compile_threadsq\x1bK\x0cX\x13\x00\x00\x00kernel_name_max_opsq\x1cK\nX\r\x00\x00\x00shape_paddingq\x1d\x89X\x0e\x00\x00\x00permute_fusionq\x1e\x89X\x1a\x00\x00\x00profiler_mark_wrapper_callq\x1f\x89X\x0b\x00\x00\x00cpp.threadsq J\xff\xff\xff\xffX\x13\x00\x00\x00cpp.dynamic_threadsq!\x89X\x0b\x00\x00\x00cpp.simdlenq"NX\x12\x00\x00\x00cpp.min_chunk_sizeq#M\x00\x10X\x07\x00\x00\x00cpp.cxxq$NX\x03\x00\x00\x00g++q%\x86q&X\x19\x00\x00\x00cpp.enable_kernel_profileq\'\x89X\x12\x00\x00\x00cpp.weight_prepackq(\x88X\x11\x00\x00\x00triton.cudagraphsq)\x89X\x17\x00\x00\x00triton.debug_sync_graphq*\x89X\x18\x00\x00\x00triton.debug_sync_kernelq+\x89X\x12\x00\x00\x00triton.convolutionq,X\x04\x00\x00\x00atenq-X\x15\x00\x00\x00triton.dense_indexingq.\x89X\x10\x00\x00\x00triton.max_tilesq/K\x02X\x19\x00\x00\x00triton.autotune_pointwiseq0\x88X\'\x00\x00\x00triton.tiling_prevents_pointwise_fusionq1\x88X\'\x00\x00\x00triton.tiling_prevents_reduction_fusionq2\x88X\x1b\x00\x00\x00triton.ordered_kernel_namesq3\x89X\x1f\x00\x00\x00triton.descriptive_kernel_namesq4\x89X\r\x00\x00\x00trace.enabledq5\x89X\x0f\x00\x00\x00trace.debug_logq6\x88X\x0e\x00\x00\x00trace.info_logq7\x89X\x0e\x00\x00\x00trace.fx_graphq8\x88X\x1a\x00\x00\x00trace.fx_graph_transformedq9\x88X\x13\x00\x00\x00trace.ir_pre_fusionq:\x88X\x14\x00\x00\x00trace.ir_post_fusionq;\x88X\x11\x00\x00\x00trace.output_codeq<\x88X\x13\x00\x00\x00trace.graph_diagramq=\x89X\x15\x00\x00\x00trace.compile_profileq>\x89X\x10\x00\x00\x00trace.upload_tarq?Nu.')

torch._functorch.config.load_config(b'\x80\x02}q\x00(X\x11\x00\x00\x00use_functionalizeq\x01\x88X\x0f\x00\x00\x00use_fake_tensorq\x02\x88X\x16\x00\x00\x00fake_tensor_allow_metaq\x03\x88X\x0c\x00\x00\x00debug_assertq\x04\x88X\x14\x00\x00\x00debug_fake_cross_refq\x05\x89X\x11\x00\x00\x00debug_partitionerq\x06\x89X\x0c\x00\x00\x00debug_graphsq\x07\x89X\x0b\x00\x00\x00debug_jointq\x08\x89X\x12\x00\x00\x00use_dynamic_shapesq\t\x89X\x14\x00\x00\x00static_weight_shapesq\n\x88X\x03\x00\x00\x00cseq\x0b\x88X\x10\x00\x00\x00max_dist_from_bwq\x0cK\x03X\t\x00\x00\x00log_levelq\rK\x14u.')

# REPLACEABLE COMMENT FOR TESTING PURPOSES

args = [((2, 2), (2, 2), torch.float32, 'cpu', False)]

args = [rand_strided(sh, st, dt, dev).requires_grad_(rg) for (sh, st, dt, dev, rg) in args]

from torch.nn import *

class Repro(torch.nn.Module):

def __init__(self):

super().__init__()

def forward(self, in_out_tensor : torch.Tensor):

as_strided_ = in_out_tensor.as_strided_(size = [2, 2], stride = [2, 2], storage_offset = 7); in_out_tensor = None

return (as_strided_,)

mod = Repro()

# Setup debug minifier compiler

torch._dynamo.debug_utils.MINIFIER_SPAWNED = True

compiler_fn = lookup_backend("dynamo_minifier_backend")

dynamo_minifier_backend = functools.partial(

compiler_fn,

compiler_name="inductor",

)

opt_mod = torch._dynamo.optimize(dynamo_minifier_backend)(mod)

with torch.cuda.amp.autocast(enabled=False):

opt_mod(*args)

```

The same code works fine in eager mode. This issue only seen in compile mode.

Error:

```

File "/home/jthakur/.pt_2_0/lib/python3.8/site-packages/torch/_ops.py", line 284, in __call__

return self._op(*args, **kwargs or {})

RuntimeError: setStorage: sizes [2, 2], strides [2, 2], storage offset 7, and itemsize 4 requiring a storage size of 48 are out of bounds for storage of size 20

The above exception was the direct cause of the following exception:

```

### Versions

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==2.0.0.dev20230209+cpu

[pip3] torchaudio==2.0.0.dev20230209+cpu

[pip3] torchvision==0.15.0.dev20230209+cpu

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 1 |

3,409 | 95,172 |

DISABLED test_memory_format_nn_ConvTranspose2d_cuda_complex32 (__main__.TestModuleCUDA)

|

module: nn, triaged, module: flaky-tests, skipped

|

Platforms: rocm

This test was disabled because it is failing in CI. See [recent examples](https://hud.pytorch.org/failure/test_memory_format_nn_ConvTranspose2d_cuda_complex32) and the most recent trunk [workflow logs](https://github.com/pytorch/pytorch/runs/11473508755).

Over the past 72 hours, it has flakily failed in 2 workflow(s).

**Debugging instructions (after clicking on the recent samples link):**

To find relevant log snippets:

1. Click on the workflow logs linked above

2. Grep for `test_memory_format_nn_ConvTranspose2d_cuda_complex32`

Test file path: `test_modules.py`

cc @albanD @mruberry @jbschlosser @walterddr @saketh-are

| 5 |

3,410 | 95,169 |

COO @ COO tries to allocate way too much memory on CUDA

|

module: sparse, module: cuda, triaged, matrix multiplication

|

### 🐛 Describe the bug

```python

In [1]: import torch

In [2]: x = torch.rand(1000, 1000, device='cuda')

In [3]: %timeit x @ x

19.7 µs ± 10.9 µs per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [4]: %timeit x @ x.to_sparse()

21 ms ± 598 µs per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [5]: %timeit x.to_sparse() @ x

30.9 ms ± 72.5 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

In [6]: %timeit x.to_sparse() @ x.to_sparse()

---------------------------------------------------------------------------

OutOfMemoryError Traceback (most recent call last)

Input In [6], in <cell line: 1>()

----> 1 get_ipython().run_line_magic('timeit', 'x.to_sparse() @ x.to_sparse()')

File ~/.conda/envs/pytorch-cuda-dev-nik/lib/python3.10/site-packages/IPython/core/interactiveshell.py:2305, in InteractiveShell.run_line_magic(self, magic_name, line, _stack_depth)

2303 kwargs['local_ns'] = self.get_local_scope(stack_depth)

2304 with self.builtin_trap:

-> 2305 result = fn(*args, **kwargs)

2306 return result

File ~/.conda/envs/pytorch-cuda-dev-nik/lib/python3.10/site-packages/IPython/core/magics/execution.py:1162, in ExecutionMagics.timeit(self, line, cell, local_ns)

1160 for index in range(0, 10):

1161 number = 10 ** index

-> 1162 time_number = timer.timeit(number)

1163 if time_number >= 0.2:

1164 break

File ~/.conda/envs/pytorch-cuda-dev-nik/lib/python3.10/site-packages/IPython/core/magics/execution.py:156, in Timer.timeit(self, number)

154 gc.disable()

155 try:

--> 156 timing = self.inner(it, self.timer)

157 finally:

158 if gcold:

File <magic-timeit>:1, in inner(_it, _timer)

OutOfMemoryError: CUDA out of memory. Tried to allocate 22.37 GiB (GPU 0; 5.78 GiB total capacity; 71.10 MiB already allocated; 4.99 GiB free; 86.00 MiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

```

### Versions

Current master.

cc @alexsamardzic @pearu @cpuhrsch @amjames @bhosmer @ngimel

| 0 |

3,411 | 95,161 |

AOTAutograd based torch.compile doesn't capture manual seed setting in the graph

|

triaged, oncall: pt2, module: dynamo

|

### 🐛 Describe the bug

The following code breaks with AOTautograd based 2.0 torch.compile -

```

from typing import List

import torch

import torch._dynamo as dynamo

from torch._functorch.aot_autograd import aot_module_simplified

dynamo.reset()

def my_non_aot_compiler(gm: torch.fx.GraphModule, example_inputs: List[torch.Tensor]):

print(gm.code)

return gm.forward

def my_aot_compiler(gm: torch.fx.GraphModule, example_inputs: List[torch.Tensor]):

def my_compiler(gm: torch.fx.GraphModule, example_inputs: List[torch.Tensor]):

print(gm.code)

return gm.forward

# Invoke AOTAutograd

return aot_module_simplified(

gm,

example_inputs,

fw_compiler=my_compiler

)

def my_example():

torch.manual_seed(0)

d_float32 = torch.rand((8, 8), device="cpu")

return d_float32 + d_float32

compiled_fn = torch.compile(backend=my_aot_compiler)(my_example)

#compiled_fn = torch.compile(backend=my_non_aot_compiler)(my_example)

r1 = compiled_fn()

r2 = compiled_fn()

print("Results match? ", torch.allclose(r1, r2, atol = 0.001, rtol = 0.001))

```

When ```my_aot_compiler``` is used, the result is wrong as the graph doesn't capture torch.manual_seed -

```

def forward(self):

rand = torch.ops.aten.rand.default([8, 8], device = device(type='cpu'), pin_memory = False)

add = torch.ops.aten.add.Tensor(rand, rand); rand = None

return (add,)

Results match? False

/usr/local/lib/python3.8/dist-packages/torch/_functorch/aot_autograd.py:1251: UserWarning: Your compiler for AOTAutograd is returning a a function that doesn't take boxed arguments. Please wrap it with functorch.compile.make_boxed_func or handle the boxed arguments yourself. See https://github.com/pytorch/pytorch/pull/83137#issuecomment-1211320670 for rationale.

warnings.warn(

```

However, when a non-AOT autograd based compiler ```my_non_aot_compiler``` is used, torch.manual_seed is captured in the graph and the result is correct -

```

def forward(self):

manual_seed = torch.random.manual_seed(0)

rand = torch.rand((8, 8), device = 'cpu')

add = rand + rand; rand = None

return (add,)

Results match? True

```

### Versions

PyTorch version: 2.0.0.dev20230220+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: 10.0.0-4ubuntu1

CMake version: version 3.25.2

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.10.147+-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: 11.6.124

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 510.47.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 46 bits physical, 48 bits virtual

CPU(s): 2

On-line CPU(s) list: 0,1

Thread(s) per core: 2

Core(s) per socket: 1

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) CPU @ 2.00GHz

Stepping: 3

CPU MHz: 2000.140

BogoMIPS: 4000.28

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32 KiB

L1i cache: 32 KiB

L2 cache: 1 MiB

L3 cache: 38.5 MiB

NUMA node0 CPU(s): 0,1

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Mitigation; PTE Inversion

Vulnerability Mds: Vulnerable; SMT Host state unknown

Vulnerability Meltdown: Vulnerable

Vulnerability Mmio stale data: Vulnerable

Vulnerability Retbleed: Vulnerable

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Vulnerable: __user pointer sanitization and usercopy barriers only; no swapgs barriers

Vulnerability Spectre v2: Vulnerable, IBPB: disabled, STIBP: disabled, PBRSB-eIBRS: Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Vulnerable

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves arat md_clear arch_capabilities

Versions of relevant libraries:

[pip3] numpy==1.24.2

[pip3] pytorch-triton==2.0.0+c8bfe3f548

[pip3] torch==2.0.0.dev20230220+cu117

[pip3] torchaudio==0.13.1+cu116

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.14.1

[pip3] torchvision==0.14.1+cu116

[conda] Could not collect

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 2 |

3,412 | 95,160 |

Reversing along a dimension, similarly to numpy

|

feature, triaged, module: numpy, module: advanced indexing

|

### 🚀 The feature, motivation and pitch

In `numpy` you can reverse an array as `arr[::-1]` along any dimension. The same in `torch` raises an error: `ValueError: step must be greater than zero`. It would be useful if both operated the same way.

### Alternatives

_No response_

### Additional context

_No response_

cc @mruberry @rgommers

| 0 |

3,413 | 95,146 |

Whether to consider native support for intel gpu?

|

triaged, module: intel

|

### 🚀 The feature, motivation and pitch

I hope that the support for intel gpu can be implemented like cuda, rather than in the form of plug-ins。I wonder if you have such plans in the future

### Alternatives

_No response_

### Additional context

_No response_

cc @frank-wei @jgong5 @mingfeima @XiaobingSuper @sanchitintel @ashokei @jingxu10

| 2 |

3,414 | 95,135 |

Add local version identifier to wheel file names

|

module: build, triaged

|

### 🚀 The feature, motivation and pitch

Hello 👋 Thank you for maintaining pytorch 🙇 It's a joy to use and I'm very excited about the 2.0 release!

I've found a tiny quality of life improvement surrounding the precompiled wheel available at https://download.pytorch.org/whl/ .

Currently users are unable to tell `pip` that a specific compiled version of torch should be re-installed from a different whl.

For example;

```

# Install torch

$ pip install 'torch>=1.13.0+cpu' --find-links https://download.pytorch.org/whl/cpu/torch_stable.html

Looking in indexes: https://alexander.vaneck:****@artifactory.paigeai.net/artifactory/api/pypi/pypi/simple

Looking in links: https://download.pytorch.org/whl/cpu/torch_stable.html

Collecting torch>=1.13.0+cpu

Using cached https://artifactory.paigeai.net/artifactory/api/pypi/pypi/packages/packages/82/d8/0547f8a22a0c8aeb7e7e5e321892f1dcf93ea021829a99f1a25f1f535871/torch-1.13.1-cp310-none-macosx_10_9_x86_64.whl (135.3 MB)

...

Installing collected packages: typing-extensions, torch

Successfully installed torch-1.13.1 typing-extensions-4.5.0

# Then later install torch for cu116

$ pip install 'torch>=1.13.0+cu116' --find-links https://download.pytorch.org/whl/cu116/torch_stable.html

Looking in indexes: https://alexander.vaneck:****@artifactory.paigeai.net/artifactory/api/pypi/pypi/simple

Looking in links: https://download.pytorch.org/whl/cu116/torch_stable.html

Requirement already satisfied: torch>=1.13.0+cu116 in ./.virtualenvs/torch-links/lib/python3.10/site-packages (1.13.1)

Requirement already satisfied: typing-extensions in ./.virtualenvs/torch-links/lib/python3.10/site-packages (from torch>=1.13.0+cu116) (4.5.0)

# We now hold CPU torch for CUDA 11.6 enabled environment.

```

It would be mighty handy if the wheels provided would include the [local version identifiers](https://peps.python.org/pep-0440/#local-version-identifiers) for the environment they are built in, f.e. `torch-1.13.1+cu116-cp39-cp39-manylinux2014_aarch64.whl`. And as local version identifiers are optional it would have no impact on the current dependency resolution.

### Alternatives

_No response_

### Additional context

_No response_

cc @malfet @seemethere

| 2 |

3,415 | 95,132 |

Differentiate with regard a subset of the input

|

feature, module: autograd, triaged

|

### 🚀 The feature, motivation and pitch

Hi,

When I want to differentiate with regard to the first element in a batch, I get an error, as shown in the following example:

```python

n, d = 10, 3

lin1 = nn.Linear(d, d)

# case 1

x = torch.randn(d).requires_grad_()

y = lin1(x)

vec = torch.ones(d)

gr = torch.autograd.grad(y, x, vec, retain_graph=True)[0]

print(gr.shape) # returns: torch.Size([3])

# case 2

x = torch.randn(n, d).requires_grad_()

y = lin1(x)

vec = torch.ones(d)

gr = torch.autograd.grad(y[0], x[0], vec, retain_graph=True)[0]

# Raises Error:

# RuntimeError: One of the differentiated Tensors appears to not have been used in the graph. Set allow_unused=True if this is the desired behavior.

```

so taking x[i] removes it from the computation graph, I tried to clone it or to use .narrow but in vain!

y[0] only depends on x[0], so I don’t want to compute the gradient with regard to the full input!

Is there a way to do this efficiently?

### Alternatives

_No response_

### Additional context

_No response_

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 4 |

3,416 | 95,129 |

Default value of `validate_args` is set to `True` when passed as `None` in `Multinomial`

|

module: distributions, triaged

|

Running

https://github.com/pytorch/pytorch/blob/17d0b7f532c4b3cd4af22ee0cb25ff12dada85cb/torch/distributions/multinomial.py#L40-L41

produces

```

ValueError: Expected value argument (Tensor of shape (4,)) to be within the support (Multinomial()) of the distribution Multinomial(), but found invalid values:

tensor([2, 5, 2, 1])

```

I believe it is because the default value of `validate_args` is set to `True`.

cc @fritzo @neerajprad @alicanb @nikitaved

| 2 |

3,417 | 95,124 |

`INTERNAL ASSERT FAILED` -When using the PyTorch docker environment released by pytorch, a Vulcan support issue occurs

|

module: build, triaged, module: docker

|

### 🐛 Describe the bug

When I run `torch.nn.Linear` or `torch.nn.ConvTranspose2d` with `optimize_for_mobile` in the packaged docker environment [released by pytorch](https://hub.docker.com/u/pytorch), a Vulcan support issue occurs, and then crashes.

It is similar to [#86791](https://github.com/pytorch/pytorch/issues/86791).

It seems that the currently released docker environments may have some flags not enabled during building, resulting in some functions not working properly (regardless of `runtime` environment or `devel` environment).

I wonder if this is expected behavior and if I always need to manually build pytorch from the source to enable some special support.

### To Reproduce

```python

from torch import nn

import torch

from torch.utils.mobile_optimizer import optimize_for_mobile

with torch.no_grad():

x = torch.ones(1, 3, 32, 32)

def test():

tmp_result= torch.nn.ConvTranspose2d(3, 3, kernel_size=1)

# tmp_result= torch.nn.Linear(32, 32)

return tmp_result

model = test()

optimized_traced = optimize_for_mobile(torch.jit.trace(model, x), backend='vulkan')

```

The error result is

```

Traceback (most recent call last):

File "test.py", line 15, in <module>

optimized_traced = optimize_for_mobile(torch.jit.trace(model, x), backend='vulkan')

File "/opt/conda/lib/python3.7/site-packages/torch/utils/mobile_optimizer.py", line 67, in optimize_for_mobile

optimized_cpp_module = torch._C._jit_pass_vulkan_optimize_for_mobile(script_module._c, preserved_methods_str)

RuntimeError: 0 INTERNAL ASSERT FAILED at "/opt/conda/conda-bld/pytorch_1656352464346/work/torch/csrc/jit/ir/alias_analysis.cpp":608, please report a bug to PyTorch. We don't have an op for vulkan_prepack::linear_prepack but it isn't a special case. Argument types: Tensor, Tensor,

```

### Expected behavior

It is hoped that the docker environments released by pytorch can be built with these flags to enable corresponding support and ensure that all torch functions are implemented as expected.

### Versions

docker pull command:

```

docker pull pytorch/pytorch:1.10.0-cuda11.3-cudnn8-devel

docker pull pytorch/pytorch:1.12.0-cuda11.3-cudnn8-runtime

docker pull pytorch/pytorch:1.13.0-cuda11.6-cudnn8-runtime

```

<details>

<summary>pytorch 1.10.0</summary>

<pre><code>

[pip3] numpy==1.21.2

[pip3] torch==1.10.0

[pip3] torch-tb-profiler==0.4.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.11.0

[pip3] torchvision==0.11.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.3.0 h06a4308_520

[conda] mkl-service 2.4.0 py37h7f8727e_0

[conda] mkl_fft 1.3.1 py37hd3c417c_0

[conda] mkl_random 1.2.2 py37h51133e4_0

[conda] numpy 1.21.2 py37h20f2e39_0

[conda] numpy-base 1.21.2 py37h79a1101_0

[conda] pytorch 1.10.0 py3.7_cuda11.3_cudnn8.2.0_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch-tb-profiler 0.4.0 pypi_0 pypi

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.11.0 py37 pytorch

[conda] torchvision 0.11.0 py37_cu113 pytorch</code></pre>

</details>

<details>

<summary>pytorch 1.12.0</summary>

<pre><code>

[pip3] numpy==1.21.5

[pip3] torch==1.12.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.13.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py37h7f8727e_0

[conda] mkl_fft 1.3.1 py37hd3c417c_0

[conda] mkl_random 1.2.2 py37h51133e4_0

[conda] numpy 1.21.5 py37he7a7128_2

[conda] numpy-base 1.21.5 py37hf524024_2

[conda] pytorch 1.12.0 py3.7_cuda11.3_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.13.0 py37 pytorch

[conda] torchvision 0.13.0 py37_cu113 pytorch</code></pre>

</details>

<details>

<summary>pytorch 1.13.0</summary>

<pre><code>

[pip3] numpy==1.22.3

[pip3] torch==1.13.0

[pip3] torchtext==0.14.0

[pip3] torchvision==0.14.0

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.22.3 py39he7a7128_0

[conda] numpy-base 1.22.3 py39hf524024_0

[conda] pytorch 1.13.0 py3.9_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-cuda 11.6 h867d48c_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchtext 0.14.0 py39 pytorch

[conda] torchvision 0.14.0 py39_cu116 pytorch</code></pre>

</details>

cc @malfet @seemethere

| 0 |

3,418 | 95,122 |

CosineAnnealingWarmRestarts but restarts are becoming more frequent

|

triaged, module: LrScheduler

|

I'm training a model on a large dataset (1 epoch is more than 3000 iterations). An ideal learning rate scheduler is CosineAnnealingWarmRestarts in my case but it would be better if it would make restarts more frequently as training goes further. I tried to pass 0.5 for `T_mult` but it didn't work. I got this error `ValueError: Expected integer T_mult >= 1, but got 0.5`. I think it can be implemented safely with integer division `T_0 // T_mult`. Is there any special reason not to implement this functionality?

| 0 |

3,419 | 95,121 |

cuda 12 support request.

|

module: cuda, triaged

|

### 🚀 The feature, motivation and pitch

graphical card is 4070ti (which is released 2023.1.4)

cuda version 12.

can't find correct version on https://pytorch.org/get-started/locally/

### Alternatives

_No response_

### Additional context

_No response_

cc @ngimel

| 4 |

3,420 | 95,116 |

When using `ceil_mode=True`, `torch.nn.AvgPool1d` could get negative shape.

|

module: bc-breaking, triaged, module: shape checking, topic: bc breaking

|

### 🐛 Describe the bug

It is similar to [#88464](https://github.com/pytorch/pytorch/issues/88464), `torch.nn.AvgPool1d` can also get negative shape with specific input shape when `ceil_mode=True` in Pytorch 1.9.0\1.10.0\1.11.0\1.12.0\1.13.0.

The reason for this behavior seems to be that the calculation with `ceil_mode` parameter by both `torch.nn.AvgPool` and `torch.nn.MaxPool` are similar.

### To Reproduce

```python

import torch

def test():

tmp_result= torch.nn.AvgPool1d(kernel_size=4, stride=1, padding=0, ceil_mode=True)

return tmp_result

m = test()

input = torch.randn(20, 16, 1)

output = m(input)

```

The error result is

```

Traceback (most recent call last): File "vanilla.py", line 9, in <module>

output = m(input)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/pooling.py", line 544, in forward

self.count_include_pad)

RuntimeError: Given input size: (16x1x1). Calculated output size: (16x1x-2). Output size is too small

```

### Expected behavior

It is hoped that after solving the problem of `MaxPool1d`, this similar bug of `AvgPool1d` can be fixed together.

### Versions

<details>

<summary>pytorch 1.9.0</summary>

<pre><code>

[pip3] numpy==1.20.2

[pip3] torch==1.9.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.10.0

[pip3] torchvision==0.10.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.1.74 h6bb024c_0 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.2.0 h06a4308_296

[conda] mkl-service 2.3.0 py37h27cfd23_1

[conda] mkl_fft 1.3.0 py37h42c9631_2

[conda] mkl_random 1.2.1 py37ha9443f7_2

[conda] numpy 1.20.2 py37h2d18471_0

[conda] numpy-base 1.20.2 py37hfae3a4d_0

[conda] pytorch 1.9.0 py3.7_cuda11.1_cudnn8.0.5_0 pytorch

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.10.0 py37 pytorch

[conda] torchvision 0.10.0 py37_cu111 pytorch</code></pre>

</details>

<details>

<summary>pytorch 1.10.0</summary>

<pre><code>

[pip3] numpy==1.21.2

[pip3] torch==1.10.0

[pip3] torch-tb-profiler==0.4.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.11.0

[pip3] torchvision==0.11.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.3.0 h06a4308_520

[conda] mkl-service 2.4.0 py37h7f8727e_0

[conda] mkl_fft 1.3.1 py37hd3c417c_0

[conda] mkl_random 1.2.2 py37h51133e4_0

[conda] numpy 1.21.2 py37h20f2e39_0

[conda] numpy-base 1.21.2 py37h79a1101_0

[conda] pytorch 1.10.0 py3.7_cuda11.3_cudnn8.2.0_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch-tb-profiler 0.4.0 pypi_0 pypi

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.11.0 py37 pytorch

[conda] torchvision 0.11.0 py37_cu113 pytorch</code></pre>

</details>

<details>

<summary>pytorch 1.11.0</summary>

<pre><code>

[pip3] numpy==1.21.6

[pip3] pytorch-lightning==1.6.3

[pip3] torch==1.11.0

[pip3] torch-tb-profiler==0.4.0

[pip3] torchaudio==0.11.0

[pip3] torchmetrics==0.9.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 h2bc3f7f_2

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.19.5 pypi_0 pypi

[conda] numpy-base 1.21.5 py38hf524024_1

[conda] pytorch 1.11.0 py3.8_cuda11.3_cudnn8.2.0_0 pytorch

[conda] pytorch-lightning 1.6.3 pypi_0 pypi

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch 1.10.0 pypi_0 pypi

[conda] torch-tb-profiler 0.4.0 pypi_0 pypi

[conda] torchaudio 0.11.0 py38_cu113 pytorch

[conda] torchmetrics 0.9.0 pypi_0 pypi

[conda] torchvision 0.12.0 py38_cu113 pytorch</code></pre>

</details>

<details>

<summary>pytorch 1.12.0</summary>

<pre><code>

[pip3] numpy==1.21.5

[pip3] torch==1.12.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.13.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py37h7f8727e_0

[conda] mkl_fft 1.3.1 py37hd3c417c_0

[conda] mkl_random 1.2.2 py37h51133e4_0

[conda] numpy 1.21.5 py37he7a7128_2

[conda] numpy-base 1.21.5 py37hf524024_2

[conda] pytorch 1.12.0 py3.7_cuda11.3_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.13.0 py37 pytorch

[conda] torchvision 0.13.0 py37_cu113 pytorch</code></pre>

</details>

<details>

<summary>pytorch 1.13.0</summary>

<pre><code>

[pip3] numpy==1.22.3

[pip3] torch==1.13.0

[pip3] torchtext==0.14.0

[pip3] torchvision==0.14.0

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.22.3 py39he7a7128_0

[conda] numpy-base 1.22.3 py39hf524024_0

[conda] pytorch 1.13.0 py3.9_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-cuda 11.6 h867d48c_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchtext 0.14.0 py39 pytorch

[conda] torchvision 0.14.0 py39_cu116 pytorch</code></pre>

</details>

cc @ezyang @gchanan @zou3519

| 0 |

3,421 | 95,112 |

Proposal: `@capture`: Unified API for capturing functions across `{fx, proxy_tensor, dynamo}`

|

module: onnx, feature, triaged, oncall: pt2, module: functorch, module: dynamo

|

> ```python

> # replaces `@fx.wrap`

> @fx.capture

> def my_traced_op(x):

> pass

>

> # new API, similar to `@fx.wrap`. `make_fx` will capture this function.

> @proxy_tensor.capture

> def my_traced_op(x):

> pass

>

> # replaces `dynamo.allow_in_graph`

> @dynamo.capture

> def my_traced_op(x):

> pass

> ```

>

> Can we break backwards compatibility on `wrap`? It is a terrible API due to its name.

> `capture` could be the preferred new API, and `wrap` will simply alias it, then we can deprecate `wrap` slowly.

_Originally posted by @jon-chuang in https://github.com/pytorch/pytorch/issues/94461#issuecomment-1435530121_

Examples of user demand for custom op tracing:

1. https://github.com/pytorch/pytorch/issues/95021

2. https://github.com/pytorch/pytorch/pull/94867

Question: is there overlap between `@dynamo.capture` and `@proxy_tensor.capture`? Whether the fx graph traced by dynamo is traced again by `make_fx` depends on the backend (e.g. `aot_eager`, `inductor`). So I suppose that to trace an op through `torch.compile` and into a backend like aot_eager which uses `make_fx`, one should wrap with both, correct? @jansel

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @zou3519 @Chillee @samdow @kshitij12345 @janeyx99 @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 7 |

3,422 | 95,108 |

`torch.nn.LazyLinear` crash when using torch.bfloat16 dtype in pytorch 1.12.0 and 1.13.0

|

module: nn, triaged, intel

|

### 🐛 Describe the bug

In Pytorch 1.12.0 and 1.13.0, `torch.nn.LazyLinear` will crash when using `torch.bfloat16` dtype, but the same codes run well with `torch.float32`.

I think this issue may have a similar root cause to [#88658](https://github.com/pytorch/pytorch/issues/88658).

In addition, both `torch.nn.Linear` and `torch.nn.LazyLinear` have other interesting errors in Pytorch 1.10.0 and 1.11.0.

When they are using `torch.bfloat16`, they will crash with `RuntimeError: could not create a primitive`, which is a frequent error in PyTorch issues.

This also seems to be another unexpected behavior.

### To Reproduce

```python

from torch import nn

import torch

def test():

tmp_result= torch.nn.LazyLinear(out_features=250880, bias=False, dtype=torch.bfloat16)

return tmp_result

lm_head = test()

input=torch.ones(size=(8,1024,1536), dtype=torch.bfloat16)

output=lm_head(input)

```

The result in 1.12.0 and 1.13.0 is

```

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/lazy.py:178: UserWarning: Lazy modules are a new feature under heavy development so changes to the API or functionality can happen at any moment.

warnings.warn('Lazy modules are a new feature under heavy development '

Traceback (most recent call last):

File "vanilla.py", line 9, in <module>

output=lm_head(input)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1148, in _call_impl

result = forward_call(*input, **kwargs)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/linear.py", line 114, in forward

return F.linear(input, self.weight, self.bias)

RuntimeError: [enforce fail at alloc_cpu.cpp:66] . DefaultCPUAllocator: can't allocate memory: you tried to allocate 263066747008 bytes. Error code 12 (Cannot allocate memory)

```

The result in 1.10.0 and 1.11.0 is

```

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/lazy.py:178: UserWarning: Lazy modules are a new feature under heavy development so changes to the API or functionality can happen at any moment.

warnings.warn('Lazy modules are a new feature under heavy development '

Traceback (most recent call last):

File "vanilla.py", line 9, in <module>

output=lm_head(input)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1120, in _call_impl

result = forward_call(*input, **kwargs)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/linear.py", line 103, in forward

return F.linear(input, self.weight, self.bias)

File "/opt/conda/lib/python3.7/site-packages/torch/nn/functional.py", line 1848, in linear

return torch._C._nn.linear(input, weight, bias)

RuntimeError: could not create a primitive

```

### Expected behavior

Maybe it should have a similar performance with the codes with `float32` dtype, as shown in follows (It only raises a warning for Lazy module):

```

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/lazy.py:178: UserWarning: Lazy modules are a new feature under heavy development so changes to the API or functionality can happen at any moment.

warnings.warn('Lazy modules are a new feature under heavy development '

```

### Versions

I use docker to get Pytorch environments.

`docker pull pytorch/pytorch:1.10.0-cuda11.3-cudnn8-devel;

docker pull pytorch/pytorch:1.12.0-cuda11.3-cudnn8-runtime;

docker pull pytorch/pytorch:1.13.0-cuda11.6-cudnn8-runtime`

GPU models and configuration: RTX 3090

pytorch 1.10.0

```

[pip3] numpy==1.21.2

[pip3] torch==1.10.0

[pip3] torch-tb-profiler==0.4.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.11.0

[pip3] torchvision==0.11.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.3.0 h06a4308_520

[conda] mkl-service 2.4.0 py37h7f8727e_0

[conda] mkl_fft 1.3.1 py37hd3c417c_0

[conda] mkl_random 1.2.2 py37h51133e4_0

[conda] numpy 1.21.2 py37h20f2e39_0

[conda] numpy-base 1.21.2 py37h79a1101_0

[conda] pytorch 1.10.0 py3.7_cuda11.3_cudnn8.2.0_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch-tb-profiler 0.4.0 pypi_0 pypi

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.11.0 py37 pytorch

[conda] torchvision 0.11.0 py37_cu113 pytorch

```

pytorch 1.12.0

```

[pip3] numpy==1.21.5

[pip3] torch==1.12.0

[pip3] torchelastic==0.2.0

[pip3] torchtext==0.13.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py37h7f8727e_0

[conda] mkl_fft 1.3.1 py37hd3c417c_0

[conda] mkl_random 1.2.2 py37h51133e4_0

[conda] numpy 1.21.5 py37he7a7128_2

[conda] numpy-base 1.21.5 py37hf524024_2

[conda] pytorch 1.12.0 py3.7_cuda11.3_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchelastic 0.2.0 pypi_0 pypi

[conda] torchtext 0.13.0 py37 pytorch

[conda] torchvision 0.13.0 py37_cu113 pytorch

```

pytorch 1.13.0

```

[pip3] numpy==1.22.3

[pip3] torch==1.13.0

[pip3] torchtext==0.14.0

[pip3] torchvision==0.14.0

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.22.3 py39he7a7128_0

[conda] numpy-base 1.22.3 py39hf524024_0

[conda] pytorch 1.13.0 py3.9_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-cuda 11.6 h867d48c_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchtext 0.14.0 py39 pytorch

[conda] torchvision 0.14.0 py39_cu116 pytorch

```

cc @albanD @mruberry @jbschlosser @walterddr @saketh-are @jgong5 @mingfeima @XiaobingSuper @sanchitintel @ashokei @jingxu10

| 2 |

3,423 | 95,103 |

AOTAutograd can add extra as_strided() calls when graph outputs alias inputs

|

module: autograd, triaged, module: functionalization, oncall: pt2

|

A simple example:

```

import torch

@torch.compile(backend="aot_eager")

def f(x):

return x.unsqueeze(-1)

a = torch.ones(4)

b = a.view(2, 2)

out = f(b)

```

If you put a breakpoint at [this line](https://github.com/pytorch/pytorch/blob/c16b2916f15d7160c0254580f18007eb0c373abc/torch/_functorch/aot_autograd.py#L498) of aot_autograd.py, you'll see it getting hit.

Context: When AOTAutograd sees a graph with an output that aliases an input, it tries to "replay" the view on the input in eager mode, outside of the compiled `autograd.Function` object. It makes a best effort to replay the exact same set of views as the original code, but it isn't always able to.

The reason it can't in this case is because the input to the graph, `b`, is itself a view of another tensor, `a`. Morally, all we want to do is replay the call to `b.unsqueeze()`. Autograd has some view replay logic, but it requires us to replay *all* of the views from `out`'s base, including the views from the base (a) to the graph input (b). Since that view was created in eager mode, the view that autograd records is an as_strided, so we're forced to run an as_strided() call as part of view replay.

as_strided has a slower backward formula compared to most other view ops. If we want to optimize this, there are a few things that we can do:

(1) Wait for `view_strided()`: @dagitses is working on a compositional view_strided() op that we should be able to replace this as_strided() call with, that should have a more efficient backward formula

(2) make autograd's view replay API smarter (`_view_func`). What we could do is allow for you to do "partial view-replay". E.g. in the above example, if I ran `out._view_func(b)`, it would be nice if it just performed a single call to `.unsqueeze()` instead of needing to go through the entire view chain.

(3) Manually keep track of view chains in AOTAutograd. This would take more work, but in theory we could track all the metadata we need on exactly what the chain of view ops from an input to an output is during tracing, and manually replay it at runtime, instead of relying on autograd's view-replay.

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @soumith @msaroufim @wconstab @ngimel @davidberard98

| 3 |

3,424 | 95,100 |

RuntimeError: view_as_complex is only supported for half, float and double tensors, but got a tensor of scalar type: BFloat16

|

triaged, module: complex, module: bfloat16

|

### 🐛 Describe the bug

BFloat16 should support view_as_complex

```

In [4]: torch.view_as_complex(torch.rand(32,2))

Out[4]:

tensor([0.5686+0.0719j, 0.4356+0.3737j, 0.5070+0.0710j, 0.3190+0.9922j,

0.0286+0.7838j, 0.3424+0.2291j, 0.6538+0.0426j, 0.7645+0.3523j,

0.7656+0.2561j, 0.3005+0.6489j, 0.1265+0.3116j, 0.6779+0.5047j,

0.7420+0.0151j, 0.5485+0.3000j, 0.5363+0.5574j, 0.8676+0.4026j,

0.9972+0.7556j, 0.7337+0.4260j, 0.1703+0.0922j, 0.9353+0.2052j,

0.1261+0.3311j, 0.1574+0.9259j, 0.9021+0.9478j, 0.4329+0.4403j,

0.7340+0.8674j, 0.9771+0.0980j, 0.0575+0.2011j, 0.8210+0.5589j,

0.3849+0.4482j, 0.2834+0.1872j, 0.7534+0.9229j, 0.4024+0.0708j])

In [5]: torch.view_as_complex(torch.rand(32,2).bfloat16())

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Cell In[5], line 1