| Serial Number (int64) | Issue Number (int64) | Title (string) | Labels (string) | Body (string) | Comments (int64) |
|---|---|---|---|---|---|

3,501 | 94,541 |

DISABLED test_pickle_nn_RNN_eval_mode_cuda_float64 (__main__.TestModuleCUDA)

|

module: rnn, triaged

|

Platforms: linux

This test was disabled because it is failing on master ([recent examples](http://torch-ci.com/failure/test_pickle_nn_RNN_eval_mode_cuda_float64%2CTestModuleCUDA)).

cc @zou3519

| 1 |

3,502 | 94,511 |

Performance does not meet expectations when training OPT-30 with FSDP, there may be problems with cpu offloading

|

oncall: distributed, module: fsdp

|

### 🐛 Describe the bug

### Code

```python
import os
import argparse
import functools
import torch
from itertools import chain
import torch.nn as nn
import torch.optim as optim
from transformers import (
    OPTForCausalLM,
    AutoTokenizer,
    default_data_collator,
)
from transformers.models.opt.modeling_opt import OPTDecoderLayer, OPTAttention
from datasets import load_dataset
from torch.utils.data import DataLoader
from torch.optim.lr_scheduler import StepLR
import torch.distributed as dist
import torch.multiprocessing as mp
from torch.distributed.fsdp import (
    MixedPrecision,
    FullyShardedDataParallel as FSDP
)
from torch.distributed.fsdp.fully_sharded_data_parallel import (
    CPUOffload,
)
from torch.distributed.fsdp.wrap import (
    size_based_auto_wrap_policy,
    transformer_auto_wrap_policy,
)
from torch.distributed.algorithms._checkpoint.checkpoint_wrapper import (
    checkpoint_wrapper,
)


def getDataset():
    raw_datasets = load_dataset("wikitext", "wikitext-2-v1")
    tokenizer = AutoTokenizer.from_pretrained("facebook/opt-30b")
    column_names = raw_datasets["train"].column_names
    text_column_name = "text" if "text" in column_names else column_names[0]

    def tokenize_function(examples):
        return tokenizer(examples[text_column_name])

    tokenized_datasets = raw_datasets.map(
        tokenize_function,
        batched=True,
        num_proc=1,
        remove_columns=column_names,
        load_from_cache_file=False,
        desc="Running tokenizer on dataset",
    )

    def group_texts(examples):
        # Concatenate all texts.
        concatenated_examples = {
            k: list(chain(*examples[k])) for k in examples.keys()}
        total_length = len(concatenated_examples[list(examples.keys())[0]])
        # We drop the small remainder, we could add padding if the model supported it instead of this drop, you can
        # customize this part to your needs.
        if total_length >= 1024:
            total_length = (total_length // 1024) * 1024
        # Split by chunks of max_len.
        result = {
            k: [t[i: i + 1024]
                for i in range(0, total_length, 1024)]
            for k, t in concatenated_examples.items()
        }
        result["labels"] = result["input_ids"].copy()
        return result

    lm_datasets = tokenized_datasets.map(
        group_texts,
        batched=True,
        num_proc=1,
        load_from_cache_file=False,
        desc=f"Grouping texts in chunks of {1024}",
    )
    return lm_datasets["train"]


def setup(rank, world_size):
    os.environ['MASTER_ADDR'] = 'localhost'
    os.environ['MASTER_PORT'] = '12355'
    # initialize the process group
    dist.init_process_group("nccl", rank=rank, world_size=world_size)


def cleanup():
    dist.destroy_process_group()


def train(args, model, rank, world_size, train_loader, optimizer, epoch):
    model.train()
    # ddp_loss[0] accumulates the loss, ddp_loss[1] the number of samples.
    ddp_loss = torch.zeros(2).to(rank)
    for batch_idx, batch in enumerate(train_loader):
        input_ids = batch["input_ids"].to(rank)
        attention_mask = batch["attention_mask"].to(rank)
        labels = batch["labels"].to(rank)
        outputs = model(input_ids=input_ids,
                        attention_mask=attention_mask, labels=labels)
        optimizer.zero_grad()
        loss = outputs.loss
        loss.backward()
        optimizer.step()
        ddp_loss[0] += loss.item()
        ddp_loss[1] += len(input_ids)
        if rank == 0:
            print(batch_idx, " *"*10)
    dist.all_reduce(ddp_loss, op=dist.ReduceOp.SUM)
    if rank == 0:
        print('Train Epoch: {} \tLoss: {:.6f}'.format(
            epoch, ddp_loss[0] / ddp_loss[1]))


def fsdp_main(rank, world_size, args):
    setup(rank, world_size)
    train_dataset = getDataset()
    train_loader = DataLoader(
        train_dataset, collate_fn=default_data_collator,
        batch_size=1, num_workers=1
    )
    my_auto_wrap_policy = functools.partial(
        size_based_auto_wrap_policy, min_num_params=100000
    )
    # my_auto_wrap_policy = functools.partial(
    #     transformer_auto_wrap_policy, transformer_layer_cls={
    #         OPTDecoderLayer, OPTAttention, nn.LayerNorm, nn.Linear}
    # )
    torch.cuda.set_device(rank)
    init_start_event = torch.cuda.Event(enable_timing=True)
    init_end_event = torch.cuda.Event(enable_timing=True)
    if rank == 0:
        print("*"*10+"loading to cpu"+"*"*10)
    model = OPTForCausalLM.from_pretrained("facebook/opt-30b")
    model = checkpoint_wrapper(model, offload_to_cpu=True)
    model = FSDP(model,
                 # The original passed CPUOffload(CPUOffload(offload_params=True));
                 # the nested call is redundant, so it is flattened here.
                 cpu_offload=CPUOffload(offload_params=True),
                 auto_wrap_policy=my_auto_wrap_policy,
                 mixed_precision=MixedPrecision(param_dtype=torch.float16,
                                                reduce_dtype=torch.float16,
                                                buffer_dtype=torch.float16,
                                                keep_low_precision_grads=True)
                 )
    if rank == 0:
        print("*"*10+"print the fsdp model"+"*"*10)
        print(model)
        # Also dump the wrapped model structure to a file.
        with open("./model", 'w') as print_file:
            print(model, file=print_file)
        print()
    optimizer = optim.Adam(model.parameters(), lr=args.lr)
    # optimizer = optim.SGD(model.parameters(), lr=args.lr)
    scheduler = StepLR(optimizer, step_size=1, gamma=args.gamma)
    init_start_event.record()
    for epoch in range(1, args.epochs + 1):
        train(args, model, rank, world_size, train_loader,
              optimizer, epoch)
        scheduler.step()
    init_end_event.record()
    if rank == 0:
        print(
            f"CUDA event elapsed time: {init_start_event.elapsed_time(init_end_event) / 1000}sec")
        print(f"{model}")
    cleanup()


if __name__ == '__main__':
    # Training settings
    parser = argparse.ArgumentParser(description='PyTorch OPT Example')
    parser.add_argument('--batch-size', type=int, default=1, metavar='N',
                        help='input batch size for training (default: 1)')
    parser.add_argument('--epochs', type=int, default=1, metavar='N',
                        help='number of epochs to train (default: 1)')
    parser.add_argument('--lr', type=float, default=1.0, metavar='LR',
                        help='learning rate (default: 1.0)')
    parser.add_argument('--gamma', type=float, default=0.7, metavar='M',
                        help='Learning rate step gamma (default: 0.7)')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA training')
    parser.add_argument('--seed', type=int, default=1, metavar='S',
                        help='random seed (default: 1)')
    args = parser.parse_args()
    torch.manual_seed(args.seed)
    WORLD_SIZE = torch.cuda.device_count()
    mp.spawn(fsdp_main,
             args=(WORLD_SIZE, args),
             nprocs=WORLD_SIZE,
             join=True)
```

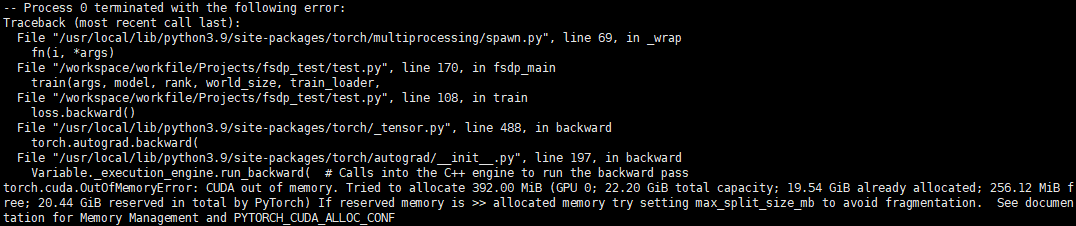

### Bug

GPU memory usage is normal during the forward pass, but the GPU runs out of memory during the backward pass. Given how FSDP shards parameters and, in this setup, offloads them to the CPU, GPU memory should not overflow at this point.
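As a rough sanity check on that expectation, the parameter footprint can be estimated with back-of-the-envelope arithmetic. The sketch below assumes about 30 billion parameters for OPT-30B, 2 bytes per fp16 value, and even sharding over the 4 GPUs listed under Versions. It ignores activations, gradients, temporarily unsharded FSDP units, and allocator overhead, so it is a lower bound and not a prediction of peak usage.

```python
# Back-of-the-envelope estimate of the sharded fp16 parameter footprint.
# All inputs are assumptions for illustration, not measured values.
NUM_PARAMS = 30e9        # approximate parameter count of OPT-30B (assumption)
BYTES_PER_PARAM = 2      # fp16, matching param_dtype=torch.float16 in the script
WORLD_SIZE = 4           # number of GPUs listed in the report
GIB = 2 ** 30

total_gib = NUM_PARAMS * BYTES_PER_PARAM / GIB
per_rank_gib = total_gib / WORLD_SIZE

print(f"fp16 parameters, whole model: {total_gib:.1f} GiB")
print(f"fp16 parameters, per rank:    {per_rank_gib:.1f} GiB")
```

Under these assumptions each rank would hold about 14 GiB of sharded parameters, which is below the 24 GB of an A10 even before CPU offloading. Any overflow would therefore have to come from the terms this estimate leaves out.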

### Versions

Host with 4 A10 GPUs, 236 CPU cores, and 974 GB of memory

torch==1.13.1+cu116

transformers==4.26.0

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu

| 6 |

3,503 | 94,504 |

[mypy] skipping mypy for a few torch/fx and torch/_subclass files

|

module: lint, triaged

|

### 🐛 Describe the bug

In PR https://github.com/pytorch/pytorch/pull/94173, the files below were failing mypy. The PR doesn't change these files, but some import probably causes mypy to run on them, and they fail.

Since this is not a regression, the PR now excludes those files from mypy checks. This issue tracks that exclusion.

Files:

```

torch/fx/proxy.py

torch/fx/passes/shape_prop.py

torch/fx/node.py

torch/fx/experimental/symbolic_shapes.py

torch/fx/experimental/proxy_tensor.py

torch/_subclasses/fake_utils.py

torch/_subclasses/fake_tensor.py

```

### Versions

https://github.com/pytorch/pytorch/pull/94173

| 0 |

3,504 | 94,496 |

Dynamo captures only CUDA streams in FX graph

|

triaged, module: dynamo

|

### 🐛 Describe the bug

Dynamo currently captures `torch.cuda.stream` (via https://github.com/pytorch/pytorch/pull/93808). However, streams from other backends are not captured. There should be a mechanism for Dynamo to recognize streams from other backends.

### Versions

PyTorch version: 2.0.0.dev20230208+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: 10.0.0-4ubuntu1

CMake version: version 3.25.2

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.10.147+-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: 11.2.152

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 510.47.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.1.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.1.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.1.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.1.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.1.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.1.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.1.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 46 bits physical, 48 bits virtual

CPU(s): 2

On-line CPU(s) list: 0,1

Thread(s) per core: 2

Core(s) per socket: 1

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) CPU @ 2.00GHz

Stepping: 3

CPU MHz: 2000.168

BogoMIPS: 4000.33

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32 KiB

L1i cache: 32 KiB

L2 cache: 1 MiB

L3 cache: 38.5 MiB

NUMA node0 CPU(s): 0,1

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Mitigation; PTE Inversion

Vulnerability Mds: Vulnerable; SMT Host state unknown

Vulnerability Meltdown: Vulnerable

Vulnerability Mmio stale data: Vulnerable

Vulnerability Retbleed: Vulnerable

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Vulnerable: __user pointer sanitization and usercopy barriers only; no swapgs barriers

Vulnerability Spectre v2: Vulnerable, IBPB: disabled, STIBP: disabled, PBRSB-eIBRS: Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Vulnerable

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves arat md_clear arch_capabilities

Versions of relevant libraries:

[pip3] numpy==1.24.2

[pip3] pytorch-triton==2.0.0+0d7e753227

[pip3] torch==2.0.0.dev20230208+cu117

[pip3] torchaudio==0.13.1+cu116

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.14.1

[pip3] torchvision==0.14.1+cu116

[conda] Could not collect

cc @mlazos @soumith @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 3 |

3,505 | 94,474 |

pybind11 SymNode binding is a footgun py::cast

|

triaged, module: pybind

|

### 🐛 Describe the bug

Say you have a SymNode and you want to convert it into a PyObject. You might try to `py::cast` it. But that will give you a `_C.SymNode`; if it was a Python SymNode, you wanted it to unwrap directly. Big footgun.

### Versions

master

| 0 |

3,506 | 94,471 |

[Functionalization] `index_reduce_` op tests with functionalization enabled

|

triaged, module: meta tensors, module: functionalization

|

### 🐛 Describe the bug

With functionalization, the existing `index_reduce_` python op test (https://github.com/pytorch/pytorch/blob/master/test/test_torch.py#L3035) fails.

To reproduce (this is one of the sample inputs to the `index_reduce_` test linked above):

```
import torch
import functorch

def test():
    dest = torch.tensor([[[ 0.0322,  1.2734, -3.4688,  8.1875, -4.2500],
                          [-5.6250,  1.3828,  7.7188,  0.3887,  5.5312],
                          [ 3.2344,  5.0312, -7.4062,  1.2422, -0.1719],
                          [-6.0312,  6.2188, -1.1641, -0.3203,  0.2637]],
                         [[-6.9688, -3.5938,  2.6406,  4.3125,  0.1348],
                          [ 4.5000, -0.5938, -5.5312, -1.8281,  1.1562],
                          [ 1.5781, -1.7891,  3.8906,  1.2969,  1.9688],
                          [-6.5000,  2.4375, -4.8125,  3.0312,  1.9453]],
                         [[ 0.2002,  7.7188,  1.5547, -7.6875, -2.5781],
                          [-4.1562,  1.8125,  6.5625,  8.2500,  5.4062],
                          [ 4.2812,  6.5625, -3.3906,  1.7266,  8.8750],
                          [-6.9375,  7.0625,  3.4844, -7.9375,  8.5625]]], dtype=torch.bfloat16)
    idx = torch.tensor([], dtype=torch.int64)
    src = torch.empty((3, 4, 0), dtype=torch.bfloat16)
    dest.index_reduce_(2, idx, src, 'mean', include_self=False)

# Assumed invocation (not shown in the original report); inferred from the
# traceback below, which goes through functorch's eager_transforms wrapper.
functorch.functionalize(test)()
```

Output:

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/opt/conda/lib/python3.8/site-packages/torch/_functorch/vmap.py", line 39, in fn

return f(*args, **kwargs)

File "/opt/conda/lib/python3.8/site-packages/torch/_functorch/eager_transforms.py", line 1582, in wrapped

func_outputs = func(*func_args, **func_kwargs)

File "<stdin>", line 18, in test

File "/opt/conda/lib/python3.8/site-packages/torch/_decomp/decompositions.py", line 3314, in inplace_op

out = outplace_op(*args, **kwargs)

File "/opt/conda/lib/python3.8/site-packages/torch/_ops.py", line 499, in __call__

return self._op(*args, **kwargs or {})

IndexError: select(): index 0 out of range for tensor of size [3, 4, 0] at dimension 2

```

Full dispatch trace logs: https://gist.github.com/wonjoolee95/d6c2c31df8a3342ddbf56523c0eeab66

Full dispatch trace logs without functionalization: https://gist.github.com/wonjoolee95/8a9c2543b0b017f9df049da57fc84dce

The error itself seems clear: it is an index-out-of-bounds error, because the code tries to access index 0 at dimension 2 of a tensor of shape [3, 4, 0], as the error log says. However, this only happens when functionalization is enabled. The last few entries of the dispatch trace seem suspicious. Without functionalization, the dispatch looks like:

```

[call] op=[aten::index_reduce_], key=[AutogradCPU]

[redispatch] op=[aten::index_reduce_], key=[ADInplaceOrView]

[redispatch] op=[aten::index_reduce_], key=[CPU]

[call] op=[aten::to.dtype], key=[CPU]

[call] op=[aten::index_fill_.int_Scalar], key=[CPU]

[call] op=[aten::as_strided], key=[CPU]

[call] op=[aten::as_strided], key=[CPU]

```

However, with functionalization, it looks like:

```

[call] op=[aten::index_reduce_], key=[FuncTorchDynamicLayerFrontMode]

[callBoxed] op=[aten::index_reduce_], key=[Functionalize]

[call] op=[aten::index_reduce_], key=[Meta]

[callBoxed] op=[aten::index_reduce], key=[Meta]

[call] op=[aten::select.int], key=[Meta]

[call] op=[aten::as_strided], key=[Meta]

[call] op=[aten::select.int], key=[Meta]

```

Looking at it at a high level, it seems that functionalization now decomposes the op into `select.int`, which might handle indices differently from the previous ops?
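To make the expected behavior concrete, here is a minimal pure-Python reference for a 1-D `index_reduce` with the `'mean'` reduction. It is an illustrative sketch of the semantics only, not PyTorch's implementation, and the function name `index_reduce_mean_1d` is hypothetical. With an empty index, as in the repro, nothing in `src` is ever read, so no out-of-range access should occur and the destination comes back unchanged.

```python
def index_reduce_mean_1d(dest, index, src, include_self=True):
    """Reference semantics for a 1-D index_reduce with reduce='mean'.

    dest, src: lists of floats; index: list of positions into dest.
    Hypothetical helper for illustration only.
    """
    sums = {}
    counts = {}
    for pos, value in zip(index, src):
        if pos not in sums:
            # Optionally seed the running mean with the existing dest value.
            sums[pos] = dest[pos] if include_self else 0.0
            counts[pos] = 1 if include_self else 0
        sums[pos] += value
        counts[pos] += 1

    out = list(dest)
    for pos in sums:
        out[pos] = sums[pos] / counts[pos]
    return out


# Empty index: the loop body never runs and dest is returned unchanged.
print(index_reduce_mean_1d([1.0, 2.0, 3.0], [], [], include_self=False))
# → [1.0, 2.0, 3.0]

# Two source values reduced into position 0, ignoring the original 1.0.
print(index_reduce_mean_1d([1.0, 2.0, 3.0], [0, 0], [3.0, 5.0], include_self=False))
# → [4.0, 2.0, 3.0]
```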

Please let me know if you need any more information.

cc @ezyang @eellison @bdhirsh @soumith @alanwaketan

### Versions

Nightly

| 10 |

3,507 | 94,457 |

LSTM on CPU is significantly slower on PyTorch compared to other frameworks

|

module: performance, module: cpu, triaged

|

### 🐛 Describe the bug

Hello everybody.

I’ve been experimenting with different models and different frameworks, and I’ve noticed that, when using the CPU, training an LSTM model on the IMDB dataset is 3x to 5x slower on PyTorch (around 739 seconds) compared to the Keras and TensorFlow implementations (around 201 seconds and around 135 seconds, respectively). I’ve also noticed that the first epoch takes significantly more time than the rest of the epochs:

```

-PyTorch: Epoch 1 done in 235.0469572544098s

-PyTorch: Epoch 2 done in 125.87335634231567s

-PyTorch: Epoch 3 done in 125.26632475852966s

-PyTorch: Epoch 4 done in 126.59195327758789s

-PyTorch: Epoch 5 done in 126.00697541236877s

```

This doesn’t occur with the other frameworks:

Keras:

```

Epoch 1/5

98/98 [==============================] - 41s 408ms/step - loss: 0.5280 - accuracy: 0.7300

Epoch 2/5

98/98 [==============================] - 40s 404ms/step - loss: 0.3441 - accuracy: 0.8566

Epoch 3/5

98/98 [==============================] - 40s 406ms/step - loss: 0.2384 - accuracy: 0.9080

Epoch 4/5

98/98 [==============================] - 40s 406ms/step - loss: 0.1625 - accuracy: 0.9386

Epoch 5/5

98/98 [==============================] - 40s 406ms/step - loss: 0.1176 - accuracy: 0.9580

```

TensorFlow:

```

-TensorFlow: Epoch 1 done in 37.287458419799805s

-TensorFlow: Epoch 2 done in 36.93708920478821s

-TensorFlow: Epoch 3 done in 36.85307550430298s

-TensorFlow: Epoch 4 done in 37.23605704307556s

-TensorFlow: Epoch 5 done in 37.04216718673706s

```
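The CPU logs above can be summarized with a few lines of arithmetic. The sketch below copies the per-epoch times from those logs, sums them, and compares the first epoch against the median of the remaining ones. The helper name `summarize` is hypothetical, and the Keras values are the whole-second figures from the progress bar.

```python
from statistics import median

# Per-epoch CPU times in seconds, copied from the logs above.
pytorch_epochs = [235.0469572544098, 125.87335634231567, 125.26632475852966,
                  126.59195327758789, 126.00697541236877]
tensorflow_epochs = [37.287458419799805, 36.93708920478821, 36.85307550430298,
                     37.23605704307556, 37.04216718673706]
keras_epochs = [41, 40, 40, 40, 40]  # whole seconds from the progress bar


def summarize(name, epochs):
    """Return (total time, first epoch / median of the later epochs)."""
    total = sum(epochs)
    first_vs_rest = epochs[0] / median(epochs[1:])
    print(f"{name}: total {total:.1f}s, "
          f"first epoch is {first_vs_rest:.2f}x the later ones")
    return total, first_vs_rest


pt_total, pt_ratio = summarize("PyTorch", pytorch_epochs)
tf_total, tf_ratio = summarize("TensorFlow", tensorflow_epochs)
keras_total, keras_ratio = summarize("Keras", keras_epochs)
```

On these logs the first PyTorch epoch takes roughly 1.9x as long as the later ones, while the first Keras and TensorFlow epochs are within a few percent of the rest. The TensorFlow log shown here sums to about 185 s, so the totals quoted in the text are best read as approximate.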

On GPU, the problem seems to disappear.

PyTorch:

```

-PyTorch: Epoch 1 done in 2.6681089401245117s

-PyTorch: Epoch 2 done in 2.623263120651245s

-PyTorch: Epoch 3 done in 2.6285109519958496s

-PyTorch: Epoch 4 done in 2.6813976764678955s

-PyTorch: Epoch 5 done in 2.6470844745635986s

```

Keras:

```

Epoch 1/5

98/98 [==============================] - 6s 44ms/step - loss: 0.5434 - accuracy: 0.7220

Epoch 2/5

98/98 [==============================] - 4s 44ms/step - loss: 0.4673 - accuracy: 0.7822

Epoch 3/5

98/98 [==============================] - 4s 45ms/step - loss: 0.2500 - accuracy: 0.8998

Epoch 4/5

98/98 [==============================] - 4s 46ms/step - loss: 0.1581 - accuracy: 0.9434

Epoch 5/5

98/98 [==============================] - 4s 46ms/step - loss: 0.0985 - accuracy: 0.9660

```

TensorFlow:

```

-TensorFlow: Epoch 1 done in 4.04967999458313s

-TensorFlow: Epoch 2 done in 2.443302869796753s

-TensorFlow: Epoch 3 done in 2.450983762741089s

-TensorFlow: Epoch 4 done in 2.4626052379608154s

-TensorFlow: Epoch 5 done in 2.4663102626800537s

```

Here’s the information on my PyTorch build:

```

PyTorch built with:

- GCC 7.3

- C++ Version: 201402

- Intel(R) Math Kernel Library Version 2020.0.0 Product Build 20191122 for Intel(R) 64 architecture applications

- Intel(R) MKL-DNN v2.2.3 (Git Hash 7336ca9f055cf1bfa13efb658fe15dc9b41f0740)

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- LAPACK is enabled (usually provided by MKL)

- NNPACK is enabled

- CPU capability usage: AVX2

- CUDA Runtime 10.2

- NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_70,code=sm_70

- CuDNN 7.6.5

- Magma 2.5.2

- Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=10.2, CUDNN_VERSION=7.6.5, CXX_COMPILER=/opt/rh/devtoolset-7/root/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-variable -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.10.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=ON, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON

```

Here’s the model’s code:

```
import time

import torch


class PyTorchLSTMMod(torch.nn.Module):
    """This class implements the LSTM model using PyTorch.

    Arguments
    ---------
    initializer: function
        The weight initialization function from the torch.nn.init module that is used to initialize
        the initial weights of the models.
    vocabulary_size: int
        The number of words that are to be considered among the most frequently used words.
    embedding_size: int
        The number of dimensions to which the words will be mapped.
    hidden_size: int
        The number of features of the hidden state.
    dropout: float
        The dropout rate that will be considered during training.
    """

    def __init__(self, initializer, vocabulary_size, embedding_size, hidden_size, dropout):
        super().__init__()
        self.embed = torch.nn.Embedding(num_embeddings=vocabulary_size, embedding_dim=embedding_size)
        self.dropout1 = torch.nn.Dropout(dropout)
        self.lstm = torch.nn.LSTM(input_size=embedding_size, hidden_size=hidden_size, batch_first=True)
        initializer(self.lstm.weight_ih_l0)
        torch.nn.init.orthogonal_(self.lstm.weight_hh_l0)
        self.dropout2 = torch.nn.Dropout(dropout)
        self.fc = torch.nn.Linear(in_features=hidden_size, out_features=1)

    def forward(self, inputs, is_training=False):
        """This function implements the forward pass of the model.

        Arguments
        ---------
        inputs: Tensor
            The set of samples the model is to infer.
        is_training: boolean
            This indicates whether the forward pass is occurring during training
            (i.e., if we should consider dropout).
        """
        x = inputs
        x = self.embed(x)
        if is_training:
            x = self.dropout1(x)
        o, (h, c) = self.lstm(x)
        out = h[-1]
        if is_training:
            out = self.dropout2(out)
        f = self.fc(out)
        return f.flatten()#torch.sigmoid(f).flatten()

    def train_pytorch(self, optimizer, epoch, train_loader, device, data_type, log_interval):
        """This function implements a single epoch of the training process of the PyTorch model.

        Arguments
        ---------
        self: PyTorchLSTMMod
            The model that is to be trained.
        optimizer: torch.optim.Optimizer
            The optimizer to be used during the training process.
        epoch: int
            The epoch associated with the training process.
        train_loader: DataLoader
            The DataLoader that is used to load the training data during the training process.
            Note that the DataLoader loads the data according to the batch size
            defined when it was initialized.
        device: string
            The string that indicates which device is to be used at runtime (i.e., GPU or CPU).
        data_type: string
            This string indicates whether mixed precision is to be used or not.
        log_interval: int
            The interval at which the model logs the progress of the training process
            in terms of number of batches passed through the model.
        """
        self.train()
        epoch_start = time.time()
        loss_fn = torch.nn.BCEWithLogitsLoss()
        if data_type == 'mixed':
            scaler = torch.cuda.amp.GradScaler()
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()
            if data_type == 'mixed':
                with torch.cuda.amp.autocast():
                    output = self(data, is_training=True)
                    loss = loss_fn(output, target)
                scaler.scale(loss).backward()
                scaler.step(optimizer)
                scaler.update()
            else:
                output = self(data, is_training=True)
                loss = loss_fn(output, target)
                loss.backward()
                optimizer.step()
            if log_interval == -1:
                continue
            if batch_idx % log_interval == 0:
                print('Train set, Epoch {}\tLoss: {:.6f}'.format(
                    epoch, loss.item()))
        print("-PyTorch: Epoch {} done in {}s\n".format(epoch, time.time() - epoch_start))

    def test_pytorch(self, test_loader, device, data_type):
        """This function implements the testing process of the PyTorch model and returns the accuracy
        obtained on the testing dataset.

        Arguments
        ---------
        self: PyTorchLSTMMod
            The model that is to be tested.
        test_loader: DataLoader
            The DataLoader that is used to load the testing data during the testing process.
            Note that the DataLoader loads the data according to the batch size
            defined when it was initialized.
        device: string
            The string that indicates which device is to be used at runtime (i.e., GPU or CPU).
        data_type: string
            This string indicates whether mixed precision is to be used or not.
        """
        self.eval()
        with torch.no_grad():
            #Loss and correct prediction accumulators
            test_loss = 0
            correct = 0
            total = 0
            loss_fn = torch.nn.BCEWithLogitsLoss()
            for data, target in test_loader:
                data, target = data.to(device), target.to(device)
                if data_type == 'mixed':
                    with torch.cuda.amp.autocast():
                        outputs = self(data).detach()
                        test_loss += loss_fn(outputs, target).detach()
                    # Note: outputs are raw logits (forward applies no sigmoid),
                    # so this 0.5 threshold is in logit space.
                    preds = (outputs >= 0.5).float() == target
                    correct += preds.sum().item()
                    total += preds.size(0)
                else:
                    outputs = self(data).detach()
                    test_loss += loss_fn(outputs, target).detach()
                    preds = (outputs >= 0.5).float() == target
                    correct += preds.sum().item()
                    total += preds.size(0)
        #Print log
        test_loss /= len(test_loader.dataset)
        print('\nTest set, Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * (correct / total)))
        return 100. * (correct / total)
```

I'm on an Ubuntu 18.04.4 system equipped with an NVIDIA Quadro RTX 4000 GPU with 8GB of VRAM and an Intel(R) Core(TM) i9-9900K CPU running at 3.60GHz. I've already tried running this code on other machines, but the behavior seems to occur only on the system described above. I've also tried changing the number of threads, to no avail.

I have also created a repo for the sake of reproducibility: https://github.com/jd2151171/pytorch_question

Any ideas what could be the cause of this?

Thanks!

### Versions

The versions of the relevant libraries are:

numpy==1.19.5

torch==1.10.0

torchaudio==0.10.0

torchvision==0.11.1

mkl==2022.2.1

mkl-fft==1.3.0

mkl-random==1.2.1

mkl-service==2.4.0

cc @ngimel @jgong5 @mingfeima @XiaobingSuper @sanchitintel @ashokei @jingxu10

| 4 |

3,508 | 94,454 |

Document and promise reproducibility torch.randn / torch.rand / torch.randint family behavior on CPU devices

|

feature, triaged, module: random

|

### 🚀 The feature, motivation and pitch

In PyTorch's documentation (https://pytorch.org/docs/stable/notes/randomness.html#reproducibility), the reproducibility of RNG is not guaranteed across different releases, commits, or platforms. While it is difficult to guarantee reproducibility on exotic hardware or GPUs, it would be beneficial on CPU, where the implementation has in practice remained unchanged on the PyTorch side.

This pitch suggests we revisit some of our CPU implementations and go further by promising stability / reproducibility for CPU-based RNG. In the context I am coming from (generative AI), what has come to be known as "the seed" helps many creators verify images generated by other creators and build new work on top of them. The noise tensor initialized from that seed is often small (4x64x64), so performance is not a concern.

#### Counterargument

This pitch would force us to commit to the MT19937 family of RNGs as the starting point. While it is robust, there may be better, faster RNGs in the future that suit our needs better.

The community has already moved on to GPU-based RNG, which makes this pitch moot. There is no stability / reproducibility whatsoever with GPU RNGs, which would make this suggestion pointless.

#### Questions

On further investigation of the current PyTorch implementation, there are some questions about whether the current CPU implementation is optimal. For example, when the number of elements is smaller than 16, we currently sample in double precision and then cast back to float when filling `torch.randn([15], dtype=torch.float)`: https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/cpu/DistributionTemplates.h#L192 When there are 16 or more elements, we use float throughout.

### Alternatives

There are a few alternatives:

#### Declare a particular RNG mode that is guaranteed to be reproducible across releases / commits / platforms.

This lets us continue iterating on the main RNG implementation while letting whoever wants stability opt in. It does incur the cost of maintaining another implementation eventually.
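To illustrate what an opt-in reproducible mode could look like, here is a minimal sketch of a generator whose algorithm is written out in full, so its output depends only on the seed and not on any library release. It uses SplitMix64 purely for brevity. This is a swapped-in example, not PyTorch's MT19937-based CPU generator, and the names `FrozenRNG` and `noise` are hypothetical.

```python
MASK64 = (1 << 64) - 1


class FrozenRNG:
    """Hypothetical fixed-algorithm generator (SplitMix64), for illustration."""

    def __init__(self, seed):
        self.state = seed & MASK64

    def next_u64(self):
        # One SplitMix64 step; all arithmetic is modulo 2**64.
        self.state = (self.state + 0x9E3779B97F4A7C15) & MASK64
        z = self.state
        z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
        z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
        return z ^ (z >> 31)

    def uniform(self):
        # Keep the top 53 bits so the result is an exact double in [0, 1).
        return (self.next_u64() >> 11) / float(1 << 53)


def noise(seed, count):
    """Return `count` uniform samples that depend only on `seed`."""
    rng = FrozenRNG(seed)
    return [rng.uniform() for _ in range(count)]


a = noise(1234, 8)
b = noise(1234, 8)
c = noise(1235, 8)
print(a == b, a == c)
```

Because every step is plain integer arithmetic, the same seed yields the same sequence on any platform that runs the code, which is the property the opt-in mode would need to promise.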

#### Don't guarantee any stability / reproducibility.

Continuing as we are will not break anything, and stability / reproducibility is practically guaranteed today because the CPU RNG implementation changes very little. The risk is that if we do break RNG reproducibility in the future (because we can), there will be incompatibility concerns when upgrading.

I won't discuss the situation where we guarantee stability / reproducibility across hardware, as that may not be practical at all.

### Additional context

_No response_

cc @pbelevich

| 0 |

3,509 | 94,451 |

`jacrev` raise "Cannot access storage of TensorWrapper" error when computing the grad of `storage`

|

module: autograd, triaged, actionable, module: functorch

|

### 🐛 Describe the bug

`jacrev` raises a "Cannot access storage of TensorWrapper" error when computing the gradient of a function that calls `storage`. By contrast, `torch.autograd.functional.jacobian` returns the gradient without any error.

```py
import torch
from torch.autograd.functional import jacobian
from torch.func import jacrev, jacfwd

torch.manual_seed(420)

a = torch.zeros((3, 3)).bfloat16()

def func(a):
    def TEMP_FUNC(a):
        """[WIP] BFloat16 support on CPU
        """
        b = a * 2
        b.storage()
        return b
    return TEMP_FUNC(a)

test_inputs = [a]

print(func(a))
# tensor([[0., 0., 0.],
#         [0., 0., 0.],
#         [0., 0., 0.]], dtype=torch.bfloat16)

print(jacobian(func, a, vectorize=True, strategy="reverse-mode"))
# succeed

print(jacrev(func)(a))
# NotImplementedError: Cannot access storage of TensorWrapper
```

### Versions

```

PyTorch version: 2.0.0.dev20230105

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: Could not collect

CMake version: version 3.22.1

Libc version: glibc-2.35

Python version: 3.9.15 (main, Nov 24 2022, 14:31:59) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.15.0-56-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

GPU 2: NVIDIA GeForce RTX 3090

Nvidia driver version: 515.86.01

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==2.0.0.dev20230105

[pip3] torchaudio==2.0.0.dev20230105

[pip3] torchvision==0.15.0.dev20230105

[conda] blas 1.0 mkl

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.23.5 py39h14f4228_0

[conda] numpy-base 1.23.5 py39h31eccc5_0

[conda] pytorch 2.0.0.dev20230105 py3.9_cuda11.7_cudnn8.5.0_0 pytorch-nightly

[conda] pytorch-cuda 11.7 h67b0de4_2 pytorch-nightly

[conda] pytorch-mutex 1.0 cuda pytorch-nightly

[conda] torchaudio 2.0.0.dev20230105 py39_cu117 pytorch-nightly

[conda] torchtriton 2.0.0+0d7e753227 py39 pytorch-nightly

[conda] torchvision 0.15.0.dev20230105 py39_cu117 pytorch-nightly

```

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @Chillee @samdow @soumith @kshitij12345 @janeyx99

| 1 |

3,510 | 94,450 |

Pickling OneCycleLR.state_dict() with an unpickleable optimizer will result in an error.

|

module: optimizer, module: pickle, triaged, needs research

|

### 🐛 Describe the bug

The dict returned by `OneCycleLR.state_dict()` contains a bound method of the `OneCycleLR` instance. Pickling the `state_dict()` therefore also pickles the optimizer object attached to that instance. This can cause a pickling failure if the attached optimizer itself isn't pickleable.

gist can be found here: https://gist.github.com/MikhailKardash/69c8e98c0e23dc01c99627a43a84981d
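The mechanism can be illustrated without PyTorch. The sketch below uses hypothetical stand-in classes (`FakeOptimizer` and `FakeScheduler`, neither of which is a PyTorch class) to show that pickling a bound method also pickles the instance it is bound to, and with it everything that instance references:

```python
import pickle
import threading


class FakeOptimizer:
    """Hypothetical stand-in for an optimizer that cannot be pickled."""

    def __init__(self):
        # Lock objects are not pickleable, so neither is this instance.
        self.lock = threading.Lock()


class FakeScheduler:
    """Hypothetical stand-in for a scheduler that stores a bound method."""

    def __init__(self, optimizer):
        self.optimizer = optimizer
        self.total_steps = 10
        # A bound method keeps a reference to `self`.
        self.anneal_func = self._annealing_linear

    def _annealing_linear(self, start, end, pct):
        return (end - start) * pct + start

    def state_dict(self):
        # Drop the optimizer itself, but keep everything else,
        # including the bound method.
        return {k: v for k, v in self.__dict__.items() if k != "optimizer"}


def try_pickle(obj):
    """Return None on success, or the exception raised by pickle.dumps."""
    try:
        pickle.dumps(obj)
        return None
    except Exception as exc:
        return exc


scheduler = FakeScheduler(FakeOptimizer())
state = scheduler.state_dict()

# The optimizer is not a key of the state dict ...
print("optimizer" in state)
# ... but pickling still fails, because the bound method drags in `self`.
print(repr(try_pickle(state)))

# Keeping only plain data makes the dict pickleable again.
safe_state = {k: v for k, v in state.items() if not callable(v)}
print(try_pickle(safe_state))
```

Filtering out callables is only one possible workaround for this toy example; whether it is appropriate for the real scheduler is not established here.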

### Versions

PyTorch version: 1.9.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.35

Python version: 3.8.15 (default, Nov 24 2022, 15:19:38) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.15.79.1-microsoft-standard-WSL2-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to:

GPU models and configuration: GPU 0: NVIDIA T1200 Laptop GPU

Nvidia driver version: 517.13

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 39 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 16

On-line CPU(s) list: 0-15

Vendor ID: GenuineIntel

Model name: 11th Gen Intel(R) Core(TM) i7-11850H @ 2.50GHz

CPU family: 6

Model: 141

Thread(s) per core: 2

Core(s) per socket: 8

Socket(s): 1

Stepping: 1

BogoMIPS: 4992.00

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid pni pclmulqdq ssse3 fma cx16 pdcm pcid sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp ibrs_enhanced fsgsbase bmi1 avx2 smep bmi2 erms invpcid avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves avx512vbmi umip avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg avx512_vpopcntdq rdpid fsrm avx512_vp2intersect flush_l1d arch_capabilities

Hypervisor vendor: Microsoft

Virtualization type: full

L1d cache: 384 KiB (8 instances)

L1i cache: 256 KiB (8 instances)

L2 cache: 10 MiB (8 instances)

L3 cache: 24 MiB (1 instance)

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Mitigation; Enhanced IBRS

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Enhanced IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] mypy==0.910

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.23.5

[pip3] pytorch-lightning==1.5.9

[pip3] torch==1.9.0

[pip3] torchaudio==0.13.1

[pip3] torchmetrics==0.11.0

[pip3] torchvision==0.10.0

[conda] _tflow_select 2.3.0 mkl

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.23.5 py38h14f4228_0

[conda] numpy-base 1.23.5 py38h31eccc5_0

[conda] pytorch-lightning 1.5.9 pypi_0 pypi

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torch 1.9.0 pypi_0 pypi

[conda] torchaudio 0.13.1 py38_cpu pytorch

[conda] torchmetrics 0.11.0 pypi_0 pypi

[conda] torchvision 0.10.0 pypi_0 pypi

cc @vincentqb @jbschlosser @albanD @janeyx99

| 1 |

3,511 | 94,443 |

A better error msg for `cuda.jiterator` when input is on `cpu`

|

triaged, module: jiterator

|

### 🐛 Describe the bug

`cuda.jiterator` needs a clearer error message when an input is on the CPU. Currently it raises an INTERNAL ASSERT FAILED, for example:

```py
import torch

torch.manual_seed(420)

x = torch.rand(3)
y = torch.rand(3)

def func(x, y):
    fn = torch.cuda.jiterator._create_multi_output_jit_fn(
        """
        template <typename T>
        T binary_2outputs(T i0, T i1, T& out0, T& out1) {
            out0 = i0 + i1;
            out1 = i0 - i1;
        }
        """,
        num_outputs=2)
    out0, out1 = fn(x, y)
    return out0, out1

func(x, y)
# RuntimeError: t == DeviceType::CUDA INTERNAL ASSERT FAILED at
# "/opt/conda/conda-bld/pytorch_1672906354936/work/c10/cuda/impl/CUDAGuardImpl.h":25,
# please report a bug to PyTorch.
```

### Versions

```

PyTorch version: 2.0.0.dev20230105

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: Could not collect

CMake version: version 3.22.1

Libc version: glibc-2.35

Python version: 3.9.15 (main, Nov 24 2022, 14:31:59) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.15.0-56-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

GPU 2: NVIDIA GeForce RTX 3090

Nvidia driver version: 515.86.01

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==2.0.0.dev20230105

[pip3] torchaudio==2.0.0.dev20230105

[pip3] torchvision==0.15.0.dev20230105

[conda] blas 1.0 mkl

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.23.5 py39h14f4228_0

[conda] numpy-base 1.23.5 py39h31eccc5_0

[conda] pytorch 2.0.0.dev20230105 py3.9_cuda11.7_cudnn8.5.0_0 pytorch-nightly

[conda] pytorch-cuda 11.7 h67b0de4_2 pytorch-nightly

[conda] pytorch-mutex 1.0 cuda pytorch-nightly

[conda] torchaudio 2.0.0.dev20230105 py39_cu117 pytorch-nightly

[conda] torchtriton 2.0.0+0d7e753227 py39 pytorch-nightly

[conda] torchvision 0.15.0.dev20230105 py39_cu117 pytorch-nightly

```

cc @mruberry @ngimel

| 1 |

3,512 | 94,441 |

`get_debug_state` a script function causes INTERNAL ASSERT FAILED

|

oncall: jit, triaged

|

### 🐛 Describe the bug

Calling `get_debug_state` on a script function causes INTERNAL ASSERT FAILED:

```py
import torch

input = torch.randn(1, 2, 3)

def func(input):
    trace = torch.jit.trace(lambda x: x * x, [input])
    script_fn = torch.jit.script(trace)
    script_fn.get_debug_state()

func(input)
# RuntimeError: optimized_plan_ INTERNAL ASSERT FAILED
# at "/opt/conda/conda-bld/pytorch_1672906354936/work/torch/csrc/jit/runtime/profiling_graph_executor_impl.cpp":697,
# please report a bug to PyTorch.
```

### Versions

```

PyTorch version: 2.0.0.dev20230105

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: Could not collect

CMake version: version 3.22.1

Libc version: glibc-2.35

Python version: 3.9.15 (main, Nov 24 2022, 14:31:59) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.15.0-56-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

GPU 2: NVIDIA GeForce RTX 3090

Nvidia driver version: 515.86.01

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==2.0.0.dev20230105

[pip3] torchaudio==2.0.0.dev20230105

[pip3] torchvision==0.15.0.dev20230105

[conda] blas 1.0 mkl

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.23.5 py39h14f4228_0

[conda] numpy-base 1.23.5 py39h31eccc5_0

[conda] pytorch 2.0.0.dev20230105 py3.9_cuda11.7_cudnn8.5.0_0 pytorch-nightly

[conda] pytorch-cuda 11.7 h67b0de4_2 pytorch-nightly

[conda] pytorch-mutex 1.0 cuda pytorch-nightly

[conda] torchaudio 2.0.0.dev20230105 py39_cu117 pytorch-nightly

[conda] torchtriton 2.0.0+0d7e753227 py39 pytorch-nightly

[conda] torchvision 0.15.0.dev20230105 py39_cu117 pytorch-nightly

```

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 1 |

3,513 | 94,434 |

Exporting the operator 'aten::_transformer_encoder_layer_fwd' to ONNX opset version 13 is not supported

|

module: onnx, low priority, triaged, onnx-needs-info

|

### 🐛 Describe the bug

I tried to export `torch.nn.TransformerEncoder` to ONNX and got the following error:

raise errors.UnsupportedOperatorError(

torch.onnx.errors.UnsupportedOperatorError: Exporting the operator 'aten::_transformer_encoder_layer_fwd' to ONNX opset version 13 is not supported.)

### Versions

numpy==1.24.1

pytorch-lightning==1.9.0

torch==1.13.1

torchaudio==0.13.1

torchdata==0.5.1

torchmetrics==0.11.1

torchvision==0.14.1

| 8 |

3,514 | 94,429 |

[RFC]FSDP API should make limit_all_gathers and forward_prefetch both default to be True

|

triaged, module: fsdp

|

### 🚀 The feature, motivation and pitch

`limit_all_gathers=True` avoids over-prefetching when the CPU thread is fast.

`forward_prefetch=True` enables more prefetching when the CPU thread is slow.

So we can always prefetch explicitly, but with a rate limiter.

We probably need to make the number of all-gathers the same for the `forward_prefetch` and `limit_all_gathers` code paths.

Defaulting both to `True` should work well whether the CPU thread is fast or slow, so users do not have to tune these flags themselves. We can run some experiments to confirm this.
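Until the defaults change, a user who wants this behaviour has to opt in explicitly. A minimal sketch, assuming the `FullyShardedDataParallel` constructor accepts both keyword arguments under the names used in this RFC (the `fsdp_kwargs` name and the commented-out wrapping call are illustrative only):

```python
# Proposed defaults from this RFC, spelled out as explicit keyword arguments.
fsdp_kwargs = {
    # Rate-limit all-gathers so a fast CPU thread cannot over-prefetch.
    "limit_all_gathers": True,
    # Issue the next all-gather early so a slow CPU thread does not stall.
    "forward_prefetch": True,
}

# Inside an initialized process group one would then write (not executed here):
# from torch.distributed.fsdp import FullyShardedDataParallel as FSDP
# model = FSDP(module, **fsdp_kwargs)
print(fsdp_kwargs)
```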

### Alternatives

_No response_

### Additional context

_No response_

cc @mrshenli @rohan-varma @awgu

| 1 |

3,515 | 94,428 |

nn.TransformerEncoderLayer fastpath (BetterTransformer) is much slower with src_key_padding_mask

|

oncall: transformer/mha

|

### 🐛 Describe the bug

TransformerEncoder runs much slower with `src_key_padding_mask` than without any padding. On a V100, it takes ~8.8 ms for bert-base (batch size 1, sequence length 128) with the mask set, but only ~4.5 ms without the mask.

```
import torch
import timeit

def test_transformerencoder_fastpath():
    """
    Test TransformerEncoder fastpath output matches slowpath output
    """
    torch.manual_seed(1234)
    nhead = 12
    d_model = 768
    dim_feedforward = 4 * d_model
    batch_first = True
    device = "cuda"

    model = torch.nn.TransformerEncoder(
        torch.nn.TransformerEncoderLayer(
            d_model=d_model,
            nhead=nhead,
            dim_feedforward=dim_feedforward,
            batch_first=batch_first),
        num_layers=12,
    ).to(device).half().eval()

    # each input is (input, mask)
    input_value = torch.rand(8, 128, d_model)
    mask_value = [[0] * 128] + [[0] * 64 + [1] * 64] * 7
    input = torch.tensor(input_value, device=device, dtype=torch.get_default_dtype()).half()  # half input
    src_key_padding_mask = torch.tensor(mask_value, device=device, dtype=torch.bool)  # bool mask

    with torch.no_grad():
        print(f'''With mask: {timeit.timeit("model(input, src_key_padding_mask=src_key_padding_mask)", globals=locals(), number=1000)}''')
        print(f'''Without mask: {timeit.timeit("model(input)", globals=locals(), number=1000)}''')

test_transformerencoder_fastpath()
```

### Versions

Collecting environment information...

PyTorch version: 1.13.1+cu116

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.25.0

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.15.0-1031-azure-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: 11.6.124

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: Tesla V100-PCIE-16GB

Nvidia driver version: 510.108.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 46 bits physical, 48 bits virtual

CPU(s): 6

On-line CPU(s) list: 0-5

Thread(s) per core: 1

Core(s) per socket: 6

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

Stepping: 1

CPU MHz: 2593.993

BogoMIPS: 5187.98

Hypervisor vendor: Microsoft

Virtualization type: full

L1d cache: 192 KiB

L1i cache: 192 KiB

L2 cache: 1.5 MiB

L3 cache: 35 MiB

NUMA node0 CPU(s): 0-5

Vulnerability Itlb multihit: KVM: Mitigation: VMX unsupported

Vulnerability L1tf: Mitigation; PTE Inversion

Vulnerability Mds: Mitigation; Clear CPU buffers; SMT Host state unknown

Vulnerability Meltdown: Mitigation; PTI

Vulnerability Mmio stale data: Vulnerable: Clear CPU buffers attempted, no microcode; SMT Host state unknown

Vulnerability Retbleed: Not affected

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Retpolines, STIBP disabled, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Mitigation; Clear CPU buffers; SMT Host state unknown

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology cpuid pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single pti fsgsbase bmi1 hle avx2 smep bmi2 erms invpcid rtm rdseed adx smap xsaveopt md_clear

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==1.13.1+cu116

[conda] Could not collect

cc @jbschlosser @bhosmer @cpuhrsch @erichan1

| 2 |

3,516 | 94,414 |

[fake_tensor] torch._subclasses.fake_tensor.DynamicOutputShapeException when calling torch.nonzero using aot_function

|

triaged, oncall: pt2, module: dynamic shapes, module: graph breaks

|

### 🐛 Describe the bug

This issue appears related to https://github.com/pytorch/torchdynamo/issues/1886 but for torch.nonzero instead of torch.repeat_interleave (there are probably others as well).

For some reason using torch._dynamo.optimize works but using aot_function does not. I think this has something to do with needing a graph break but I'm not sure.

It's possible my minimal use case here is too simple; my real use case involves a bunch of computation and then using torch.nonzero to precompute tensor indices that are used later. I'm using aot_function to try and automatically generate code for the forward and backward passes. As a workaround I'm able to split my input into two functions, one that contains everything before the torch.nonzero call and another that takes the resulting indices from the torch.nonzero call as a parameter.

### Error logs

Failed to collect metadata on function, produced code may be suboptimal. Known situations this can occur are inference mode only compilation involving resize_ or prims (!schema.hasAnyAliasInfo() INTERNAL ASSERT FAILED); if your situation looks different please file a bug to PyTorch.

Traceback (most recent call last):

File "/torch/_functorch/aot_autograd.py", line 1381, in aot_wrapper_dedupe

fw_metadata, _out = run_functionalized_fw_and_collect_metadata(flat_fn)(

File "/torch/_functorch/aot_autograd.py", line 578, in inner

flat_f_outs = f(*flat_f_args)

File "/torch/_functorch/aot_autograd.py", line 2314, in flat_fn

tree_out = fn(*args, **kwargs)

File "<stdin>", line 2, in f

File "/torch/utils/_stats.py", line 15, in wrapper

return fn(*args, **kwargs)

File "/torch/_subclasses/fake_tensor.py", line 928, in __torch_dispatch__

op_impl_out = op_impl(self, func, *args, **kwargs)

File "/torch/_subclasses/fake_tensor.py", line 379, in dyn_shape

raise DynamicOutputShapeException(func)

torch._subclasses.fake_tensor.DynamicOutputShapeException: aten.nonzero.default

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/torch/_functorch/aot_autograd.py", line 2334, in returned_function

compiled_fn = create_aot_dispatcher_function(

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/_dynamo/utils.py", line 163, in time_wrapper

r = func(*args, **kwargs)

File "/torch/_functorch/aot_autograd.py", line 2184, in create_aot_dispatcher_function

compiled_fn = compiler_fn(flat_fn, fake_flat_args, aot_config)

File "/torch/_functorch/aot_autograd.py", line 1504, in aot_wrapper_dedupe

compiled_fn = compiler_fn(wrapped_flat_fn, deduped_flat_args, aot_config)

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/_functorch/aot_autograd.py", line 1056, in aot_dispatch_base

fw_module = make_fx(flat_fn, aot_config.decompositions)(*tmp_flat_args)

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/fx/experimental/proxy_tensor.py", line 716, in wrapped

t = dispatch_trace(wrap_key(func, args, fx_tracer), tracer=fx_tracer, concrete_args=tuple(phs))

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/_dynamo/eval_frame.py", line 209, in _fn

return fn(*args, **kwargs)

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/fx/experimental/proxy_tensor.py", line 450, in dispatch_trace

graph = tracer.trace(root, concrete_args)

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/_dynamo/eval_frame.py", line 209, in _fn

return fn(*args, **kwargs)

File "/torch/fx/_symbolic_trace.py", line 778, in trace

(self.create_arg(fn(*args)),),

File "/torch/fx/experimental/proxy_tensor.py", line 466, in wrapped

out = f(*tensors)

File "<string>", line 1, in <lambda>

File "/torch/_functorch/aot_autograd.py", line 1502, in wrapped_flat_fn

return flat_fn(*add_dupe_args(args))

File "/torch/_functorch/aot_autograd.py", line 2314, in flat_fn

tree_out = fn(*args, **kwargs)

File "<stdin>", line 2, in f

File "/torch/utils/_stats.py", line 15, in wrapper

return fn(*args, **kwargs)

File "/torch/fx/experimental/proxy_tensor.py", line 494, in __torch_dispatch__

return self.inner_torch_dispatch(func, types, args, kwargs)

File "/torch/2.0.0-03c7/lib/python3.10/site-packages/torch/fx/experimental/proxy_tensor.py", line 519, in inner_torch_dispatch

out = proxy_call(self, func, args, kwargs)

File "/torch/fx/experimental/proxy_tensor.py", line 352, in proxy_call

out = func(*args, **kwargs)

File "/torch/_ops.py", line 284, in __call__

return self._op(*args, **kwargs or {})

File "/torch/utils/_stats.py", line 15, in wrapper

return fn(*args, **kwargs)

File "/torch/_subclasses/fake_tensor.py", line 928, in __torch_dispatch__

op_impl_out = op_impl(self, func, *args, **kwargs)

File "/torch/_subclasses/fake_tensor.py", line 379, in dyn_shape

raise DynamicOutputShapeException(func)

torch._subclasses.fake_tensor.DynamicOutputShapeException: aten.nonzero.default

### Minified repro

import torch

import functorch

from typing import List

import torch._dynamo

from functorch.compile import aot_function, aot_module

def f(x):

    return torch.nonzero(x > 0)

x = torch.ones([4,4])

x[0][3] = 0

x[1][2] = 0

opt_fn = torch._dynamo.optimize("eager")(f)

y = opt_fn(x) #Works

def my_compiler(gm: torch.fx.GraphModule, example_inputs: List[torch.Tensor]):

    print(gm.code)

    return gm.forward

aot_fun = aot_function(f,fw_compiler=my_compiler,bw_compiler=my_compiler)

y1 = aot_fun(x) #Error

### Versions

>>> print(torch.__version__)

2.0.0.dev20230207+cu117

>>> torch.version.git_version

'1530b798ceeff749a7cc5833d9d9627778bd998a'

cc @ezyang @msaroufim @wconstab @bdhirsh @anijain2305 @soumith @ngimel

| 10 |

3,517 | 94,397 |

jacfwd and jacrev are fundamentally broken for complex inputs

|

module: autograd, triaged, module: complex, complex_autograd, module: functorch

|

### 🐛 Describe the bug

Follow up of https://github.com/pytorch/pytorch/issues/90499

Consider a map `f : C -> C`. `f` is called holomorphic if it is complex differentiable. Using the Cauchy-Riemann equations, one shows that this is equivalent to `f` being real differentiable as a function `f : R^2 -> R^2` with a `2 x 2` Jacobian that can be represented by a single complex number. Now, there are functions that are not holomorphic, the simplest of them being `x.conj()`. These are functions whose Jacobian *cannot* be represented using complex numbers. For example, the Jacobian of `x.conj()` is given by the matrix `[[1, 0], [0, -1]]`. The Jacobian-vector product at a vector `v \in C` is given by `v.conj()`. There is no complex number `z` such that `z*v = v.conj()` for every `v`.

All this says that `jacfwd` and `jacrev` should *always* return a real Jacobian, as there is no easy test for when a function is holomorphic. More on possible APIs moving forward at the end.
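The argument above can be checked numerically without PyTorch. The following is a rough finite-difference sketch; the helper names `real_jacobian` and `satisfies_cauchy_riemann` are made up for this illustration and are not part of any library:

```python
def real_jacobian(f, z, h=1e-6):
    """Finite-difference 2x2 real Jacobian of f: C -> C at z.

    Rows are (Re f, Im f); columns are derivatives w.r.t. (Re z, Im z).
    """
    d_dx = (f(z + h) - f(z - h)) / (2 * h)            # step along the real axis
    d_dy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)  # step along the imaginary axis
    return [[d_dx.real, d_dy.real],
            [d_dx.imag, d_dy.imag]]


def satisfies_cauchy_riemann(jac, tol=1e-4):
    """[[a, b], [c, d]] acts like one complex number iff a == d and b == -c."""
    (a, b), (c, d) = jac
    return abs(a - d) < tol and abs(b + c) < tol


z = 0.5 + 1.7j

jac_conj = real_jacobian(lambda w: w.conjugate(), z)  # not holomorphic
jac_square = real_jacobian(lambda w: w * w, z)        # holomorphic
jac_abs = real_jacobian(abs, z)                       # real-valued output

print("conj   satisfies Cauchy-Riemann:", satisfies_cauchy_riemann(jac_conj))
print("square satisfies Cauchy-Riemann:", satisfies_cauchy_riemann(jac_square))
print("first row of the abs Jacobian:", [round(v, 4) for v in jac_abs[0]])
```

For `abs`, only the first row carries information because the output is real, which corresponds to the `1 x 2` real Jacobian mentioned for `torch.abs`.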

A few examples that are currently broken:

```python

>>> x = torch.tensor(0.5+1.7j, dtype=torch.complex64)

>>> jacfwd(torch.conj)(x) # should return tensor([[ 1, 0], [ 0, -1]])

tensor(1.-0.j)

>>> jacrev(torch.conj)(x) # should return tensor([[ 1, 0], [ 0, -1]])

tensor(1.-0.j)

>>> jacfwd(torch.abs)(x) # should return tensor([[0.2822, 0.9594]])

tensor(0.2822)

>>> jacrev(torch.abs)(x) # almost! Should return tensor([[0.2822], [0.9594]])

tensor(0.2822+0.9594j)

>>> jacfwd(torch.Tensor.cfloat)(torch.ones(())) # should return tensor([[1, 0]])

tensor(1.+0.j)

>>> jacrev(torch.Tensor.cfloat)(torch.ones(())) # should return tensor([[1], [0]])

tensor(1.)

```

Note that there is no non-constant holomorphic function from `R -> C` or `C -> R` so none of the functions above are holomorphic and PyTorch should never return complex "Jacobians".

There is no easy way to test whether a function is holomorphic, but we do know that a composition of holomorphic functions is holomorphic. As such, a possible API for this would be to have a kwarg `holomorphic: bool = False` that, if set to `True`, tries to return a complex Jacobian if possible. This would be implemented by having a list of functions which are holomorphic, and making sure these are the only ones called in the computation of `jacfwd` / `jacrev`.
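A rough sketch of the allowlist idea follows. Everything in it is hypothetical: the op names, the `HOLOMORPHIC_OPS` set, and the `can_return_complex_jacobian` helper are assumptions of this illustration and do not correspond to an existing PyTorch API:

```python
# Hypothetical allowlist of ops known to be holomorphic.
# Which ops belong here is an assumption of this sketch.
HOLOMORPHIC_OPS = {"add", "mul", "exp", "sin", "cos"}


def can_return_complex_jacobian(called_ops, holomorphic=False):
    """Decide whether a complex Jacobian may be returned.

    `called_ops` is the sequence of op names recorded while computing the
    Jacobian. A complex Jacobian is only allowed if the user opted in and
    every recorded op is on the allowlist, since a composition of
    holomorphic functions is holomorphic.
    """
    if not holomorphic:
        return False
    return all(op in HOLOMORPHIC_OPS for op in called_ops)


print(can_return_complex_jacobian(["mul", "exp"], holomorphic=True))
print(can_return_complex_jacobian(["mul", "conj"], holomorphic=True))
print(can_return_complex_jacobian(["mul", "exp"]))
```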

### Versions

master

cc @ezyang @gchanan @zou3519 @albanD @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @anjali411 @dylanbespalko @mruberry @Chillee @samdow @soumith @kshitij12345 @janeyx99

| 30 |

3,518 | 94,395 |

`func.jacrev()` should be implemented as `func.jacfwd().mT.contiguous()`

|

triaged, module: complex, module: functorch

|

### 🐛 Describe the bug

Forward AD is (should be?) faster to execute theoretically than backward AD, because it does not need to create a graph, save intermediate tensors, etc. Furthermore its formulas are simpler than those for the backward, so they should be faster for that reason as well.

We would also not have issues like https://github.com/pytorch/pytorch/issues/90499.
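To illustrate why forward mode needs neither a graph nor saved intermediates, here is a toy dual-number sketch in plain Python. It is not how `jacfwd` is implemented; `Dual` and `jacfwd_sketch` are names invented for this example, and only `+` and `*` are supported:

```python
class Dual:
    """A value together with its tangent (directional derivative)."""

    def __init__(self, value, tangent=0.0):
        self.value = value
        self.tangent = tangent

    @staticmethod
    def _lift(other):
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = self._lift(other)
        return Dual(self.value + other.value, self.tangent + other.tangent)

    __radd__ = __add__

    def __mul__(self, other):
        other = self._lift(other)
        # Product rule, applied immediately; nothing is stored for later.
        return Dual(self.value * other.value,
                    self.tangent * other.value + self.value * other.tangent)

    __rmul__ = __mul__


def jacfwd_sketch(f, point):
    """Build the Jacobian one column at a time by pushing basis tangents."""
    columns = []
    for i in range(len(point)):
        inputs = [Dual(x, 1.0 if j == i else 0.0) for j, x in enumerate(point)]
        outputs = f(*inputs)
        columns.append([out.tangent for out in outputs])
    # Transpose the list of columns into a list of rows.
    return [list(row) for row in zip(*columns)]


def f(x, y):
    return (x * y, x + y)


jac = jacfwd_sketch(f, [2.0, 3.0])
print(jac)
```

Each forward pass yields one column of the Jacobian, so the cost scales with the number of inputs.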

### Versions

master

cc @ezyang @anjali411 @dylanbespalko @mruberry @Lezcano @nikitaved @zou3519 @Chillee @samdow @soumith @kshitij12345 @janeyx99

| 7 |

3,519 | 94,392 |

[pt20][eager] Lamb optimizer cannot be used in the compiled function

|

triaged, oncall: pt2, module: dynamo

|

### 🐛 Describe the bug

When I use `torch.compile` to compile a method that includes the `step` call of the `Lamb` optimizer, it fails at the second iteration.

### Error logs

```python

[2023-02-08 19:17:53,174] torch._dynamo.variables.torch: [WARNING] Profiler will be ignored

Traceback (most recent call last):

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/convert_frame.py", line 324, in _compile

out_code = transform_code_object(code, transform)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/bytecode_transformation.py", line 361, in transform_code_object

transformations(instructions, code_options)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/convert_frame.py", line 311, in transform

tracer.run()

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/symbolic_convert.py", line 1683, in run

super().run()

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/symbolic_convert.py", line 569, in run

and self.step()

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/symbolic_convert.py", line 532, in step

getattr(self, inst.opname)(inst)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/symbolic_convert.py", line 338, in wrapper

return inner_fn(self, inst)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/symbolic_convert.py", line 144, in impl

self.push(fn_var.call_function(self, self.popn(nargs), {}))

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/variables/builtin.py", line 495, in call_function

result = handler(tx, *args, **kwargs)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/variables/builtin.py", line 703, in call_getitem

return args[0].call_method(tx, "__getitem__", args[1:], kwargs)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/variables/dicts.py", line 68, in call_method

return self.getitem_const(args[0])

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/variables/dicts.py", line 53, in getitem_const

return self.items[ConstDictVariable.get_key(arg)].add_options(self, arg)

KeyError: exp_avg_sq

from user code:

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/timm/optim/lamb.py", line 161, in step

exp_avg, exp_avg_sq = state['exp_avg'], state['exp_avg_sq']

Set torch._dynamo.config.verbose=True for more information

You can suppress this exception and fall back to eager by setting:

torch._dynamo.config.suppress_errors = True

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/nvme/mazerun/repro.py", line 31, in <module>

opt(data, optimizer)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/eval_frame.py", line 209, in _fn

return fn(*args, **kwargs)

File "/nvme/mazerun/repro.py", line 20, in train_step

loss.backward()

File "/nvme/mazerun/repro.py", line 21, in <graph break in train_step>

optimizer.step()

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/optim/optimizer.py", line 265, in wrapper

out = func(*args, **kwargs)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/utils/_contextlib.py", line 115, in decorate_context

return func(*args, **kwargs)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/eval_frame.py", line 330, in catch_errors

return callback(frame, cache_size, hooks)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/convert_frame.py", line 404, in _convert_frame

result = inner_convert(frame, cache_size, hooks)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/convert_frame.py", line 104, in _fn

return fn(*args, **kwargs)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/convert_frame.py", line 262, in _convert_frame_assert

return _compile(

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/utils.py", line 163, in time_wrapper

r = func(*args, **kwargs)

File "/nvme/mazerun/.conda/envs/torch2.0/lib/python3.10/site-packages/torch/_dynamo/convert_frame.py", line 394, in _compile

raise InternalTorchDynamoError() from e

torch._dynamo.exc.InternalTorchDynamoError

```

### Minified repro

```python
from timm.optim import Lamb
import torch
import torch.nn as nn


class Repro(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear1 = nn.Linear(4, 4)
        self.linear2 = nn.Linear(4, 4)

    def forward(self, x):
        x = self.linear1(x)
        x = self.linear2(x)
        return x

    def train_step(self, x, optimizer):
        loss = self(x).mean()
        loss.backward()
        optimizer.step()


if __name__ == "__main__":
    model = Repro().cuda()
    optimizer = Lamb(model.parameters())
    opt = torch.compile(model.train_step, backend='eager')
    data = torch.rand(2, 4).cuda()
    for i in range(2):
        opt(data, optimizer)
```

### Versions

```

timm 0.6.12

torch 2.0.0.dev20230207+cu117

torchvision 0.15.0.dev20230207+cu117

numpy 1.24.2

```

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 1 |

3,520 | 94,388 |

Inconsistent results when using torch.Tensor.bernoulli with float instead of Tensor probabilities

|

module: distributions, triaged, module: random, module: determinism

|

### 🐛 Describe the bug

When using `torch.Tensor.bernoulli` with a float value for `p`, the results do not match those obtained by sampling manually (see case B) or by using a Tensor that stores the probabilities.

I created 4 tests:

- B: manual bernoulli

- C: the test that fails, using `p` as float

- D: using a Tensor for probabilities

- E: same, with inplace bernoulli

```python

import torch

A = torch.zeros(15)

p = 0.75

torch.manual_seed(314159)

R = torch.rand_like(A)

B = (R < p).to(torch.float)

print('B:', B)

torch.manual_seed(314159)

C = A.bernoulli(p)

print('C:', C)

torch.manual_seed(314159)

p_ = torch.ones_like(A) * p

D = p_.bernoulli()

print('D:', D)

torch.manual_seed(314159)

E = A.detach().clone().bernoulli_(p_)

print('E:', E)

print()

print('Summary')

print('B == C', (B == C).all())

print('B == D', (B == D).all())

print('B == E', (B == E).all())

```

Output:

```

B: tensor([1., 0., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1.])

C: tensor([1., 0., 0., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1.])

D: tensor([1., 0., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1.])

E: tensor([1., 0., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1.])

Summary

B == C tensor(False)

B == D tensor(True)

B == E tensor(True)

```

As you can see, the 3rd value in case C differs from the other cases. Increasing the size of `A` gives the same kind of mismatch. If you look at `R`, the 3rd value is not even close to 0.75, so it cannot be a numerical problem.
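One possible explanation, which is an assumption and not something this report confirms, is that the float-`p` path turns the random stream into samples with a different algorithm than `rand_like(A) < p`. The plain-Python sketch below (function names are made up for illustration) shows that two valid Bernoulli samplers can disagree element-wise under the same seed while still producing the same distribution:

```python
import random


def bernoulli_less_than(p, n, seed):
    """Sample 1 when u < p, mirroring the manual case B."""
    rng = random.Random(seed)
    return [1.0 if rng.random() < p else 0.0 for _ in range(n)]


def bernoulli_complement(p, n, seed):
    """Sample 1 when u >= 1 - p; also has P(1) = p, but maps u differently."""
    rng = random.Random(seed)
    return [1.0 if rng.random() >= 1.0 - p else 0.0 for _ in range(n)]


p, n, seed = 0.75, 10000, 314159
a = bernoulli_less_than(p, n, seed)
b = bernoulli_complement(p, n, seed)

print("identical sequences:", a == b)
print("mean of a:", sum(a) / n)
print("mean of b:", sum(b) / n)
```

If something similar happens inside PyTorch, the mismatch would be a reproducibility inconsistency between code paths and not a statistical error.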

### Versions

Collecting environment information...

PyTorch version: 1.13.1+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (GCC) 10.3.0

Clang version: Could not collect

CMake version: version 3.24.2

Libc version: glibc-2.17

Python version: 3.7.12 (default, Feb 6 2022, 20:29:18) [GCC 10.2.1 20210130 (Red Hat 10.2.1-11)] (64-bit runtime)

Python platform: Linux-3.10.0-1160.76.1.el7.x86_64-x86_64-with-centos-7.9.2009-Core

Is CUDA available: False

CUDA runtime version: 11.4.120

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] pytorch-lightning==1.6.4

[pip3] torch==1.13.1

[pip3] torchmetrics==0.9.1

[pip3] torchvision==0.14.1

[conda] Could not collect

cc @fritzo @neerajprad @alicanb @nikitaved @pbelevich @mruberry @kurtamohler

| 1 |

3,521 | 94,378 |

[dynamo] equivalent conditions get different optimized code

|

triaged, oncall: pt2, module: dynamo

|

### 🐛 Describe the bug

Running the example below produces three FX graphs: one computes `ta.sum() < 0`, and the other two compute `3 * ta + tb` and `ta + 3 * tb` respectively. When I change the condition to `0 > ta.sum()`, Dynamo does not capture any FX graph and keeps the original bytecode.

```

import torch

import torch._dynamo as torchdynamo

import logging

torchdynamo.config.log_level = logging.INFO

torchdynamo.config.output_code = True

@torchdynamo.optimize("eager")

def toy_example(ta, tb):

    if ta.sum() < 0:

        return ta + 3 * tb

    else:

        return 3 * ta + tb

x = torch.randn(4, 4)

y = torch.randn(4, 4)

toy_example(x, y)

```

The output bytecode when the condition is `ta.sum() < 0`.

```

9 0 LOAD_GLOBAL 1 (__compiled_fn_0)

2 LOAD_FAST 0 (ta)

4 CALL_FUNCTION 1

6 UNPACK_SEQUENCE 1

8 POP_JUMP_IF_FALSE 20

10 LOAD_GLOBAL 2 (__resume_at_12_1)

12 LOAD_FAST 0 (ta)

14 LOAD_FAST 1 (tb)

16 CALL_FUNCTION 2

18 RETURN_VALUE

>> 20 LOAD_GLOBAL 3 (__resume_at_24_2)

22 LOAD_FAST 0 (ta)

24 LOAD_FAST 1 (tb)

26 CALL_FUNCTION 2

28 RETURN_VALUE

```

The output bytecode when the condition is `0 > ta.sum()`.

```

11 0 LOAD_CONST 1 (0)

2 LOAD_FAST 0 (ta)

4 LOAD_ATTR 0 (sum)

6 CALL_FUNCTION 0

8 COMPARE_OP 4 (>)

10 POP_JUMP_IF_FALSE 24

12 12 LOAD_FAST 0 (ta)

14 LOAD_CONST 2 (3)

16 LOAD_FAST 1 (tb)

18 BINARY_MULTIPLY

20 BINARY_ADD

22 RETURN_VALUE

14 >> 24 LOAD_CONST 2 (3)

26 LOAD_FAST 0 (ta)

28 BINARY_MULTIPLY

30 LOAD_FAST 1 (tb)

32 BINARY_ADD

34 RETURN_VALUE

```

I wonder whether this is a bug or a feature: the behavior changes even though the two conditions are equivalent.
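Although the two conditions are logically equivalent, CPython does not compile them to the same bytecode, so Dynamo sees different instruction sequences. The sketch below uses only the standard `dis` module to show this. The function names `tensor_first` and `constant_first` and the helper `compare_ops` are hypothetical, and the functions are never called, so no tensor is needed. It does not show what Dynamo does with each sequence. That the constant-on-the-left form takes a different path inside Dynamo is an assumption drawn from the output above.

```python
import dis


def tensor_first(ta):
    # Same shape as the condition that Dynamo captures into a graph.
    return ta.sum() < 0


def constant_first(ta):
    # Logically equivalent condition with the operands swapped.
    return 0 > ta.sum()


def compare_ops(fn):
    """Return the textual argument of every COMPARE_OP instruction in fn."""
    return [
        ins.argrepr
        for ins in dis.get_instructions(fn)
        if ins.opname == "COMPARE_OP"
    ]


print("tensor_first:  ", compare_ops(tensor_first))
print("constant_first:", compare_ops(constant_first))
```

Running `dis.dis` on each function additionally shows that the operand load order differs, which matches the `LOAD_CONST` / `LOAD_FAST` order in the second listing above.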

### Versions

Collecting environment information...

PyTorch version: 2.0.0.dev20230207+cu118

Is debug build: False

CUDA used to build PyTorch: 11.8

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.25.0

Libc version: glibc-2.31

Python version: 3.9.16 (main, Jan 11 2023, 16:05:54) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.15.0-58-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.8.89

GPU models and configuration:

GPU 0: NVIDIA A100 80GB PCIe

GPU 1: NVIDIA A100 80GB PCIe

GPU 2: NVIDIA A100 80GB PCIe

GPU 3: NVIDIA A100 80GB PCIe

GPU 4: NVIDIA A100 80GB PCIe

GPU 5: NVIDIA A100 80GB PCIe

GPU 6: NVIDIA A100 80GB PCIe

GPU 7: NVIDIA A100 80GB PCIe

Nvidia driver version: 520.61.05

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] pytorch-triton==2.0.0+0d7e753227

[pip3] torch==2.0.0.dev20230207+cu118

[pip3] torchaudio==2.0.0.dev20230205+cu118

[pip3] torchdynamo==1.14.0.dev0

[pip3] torchtriton==2.0.0+f16138d447

[pip3] torchvision==0.15.0.dev20230205+cu118

[conda] numpy 1.24.1 pypi_0 pypi

[conda] pytorch-triton 2.0.0+0d7e753227 pypi_0 pypi

[conda] torch 2.0.0.dev20230207+cu118 pypi_0 pypi

[conda] torchaudio 2.0.0.dev20230205+cu118 pypi_0 pypi

[conda] torchdynamo 1.14.0.dev0 pypi_0 pypi

[conda] torchtriton 2.0.0+f16138d447 pypi_0 pypi

[conda] torchvision 0.15.0.dev20230205+cu118 pypi_0 pypi

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 1 |

3,522 | 94,374 |

[fx] const_fold.split_const_subgraphs leads to UserWarning

|

triaged, module: fx

|

### 🐛 Describe the bug

```python

import functorch

import torch

from torch.fx.experimental import const_fold

from functools import partial

torch.manual_seed(0)

def fn(x, y):

    z = x + torch.ones_like(x)

    z1 = z.sin().cos().exp().log()

    z2 = z1 * y

    z3 = x + 2 * y

    return z2, z3

x = torch.randn(3, 1)

y = torch.randn(3, 1)

par_fn = partial(fn, x)

graph = functorch.make_fx(par_fn)(y)

mod_folded: const_fold.FoldedGraphModule = const_fold.split_const_subgraphs(graph)

```

Output

```

torch/fx/experimental/const_fold.py:250: UserWarning: Attempted to insert a get_attr Node with no underlying reference in the owning GraphModule! Call GraphModule.add_submodule to add the necessary submodule, GraphModule.add_parameter to add the necessary Parameter, or nn.Module.register_buffer to add the necessary buffer

new_node = root_const_gm.graph.get_attr(in_node.target)

```

### Versions

master

cc @ezyang @SherlockNoMad @soumith @EikanWang @jgong5 @wenzhe-nrv

| 1 |

3,523 | 94,371 |

QAT + torch.autocast does not work with default settings, missing fused fake_quant support for half

|

oncall: quantization, low priority, triaged

|

### 🐛 Describe the bug

QAT + torch.autocast should be composable. It currently doesn't work with default settings of QAT:

```

import torch

import torch.nn as nn

from torch.ao.quantization.quantize_fx import prepare_fx

from torch.ao.quantization import get_default_qat_qconfig_mapping

m = nn.Sequential(nn.Linear(1, 1)).cuda()

data = torch.randn(1, 1).cuda()

# note: setting version to 0, which disables fused fake_quant, works without issues

qconfig_mapping = get_default_qat_qconfig_mapping('fbgemm', version=1)

mp = prepare_fx(m, qconfig_mapping, (data,))

with torch.autocast('cuda'):

    res = mp(data)

res.sum().backward()

```

The script above fails with this:

```

Traceback (most recent call last):

File "/data/users/vasiliy/pytorch/../tmp/test.py", line 23, in <module>

res = mp(data)

File "/data/users/vasiliy/pytorch/torch/fx/graph_module.py", line 660, in call_wrapped

return self._wrapped_call(self, *args, **kwargs)

File "/data/users/vasiliy/pytorch/torch/fx/graph_module.py", line 279, in __call__

raise e

File "/data/users/vasiliy/pytorch/torch/fx/graph_module.py", line 269, in __call__