Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

3,701 | 92,375 |

operations failed in TorchScript interpreter

|

oncall: jit

|

### 🐛 Describe the bug

when I use torch.jit._get_trace_graph to trace my model on GPU,it happens that

The PyTorch internal failed reason is:

The following operation failed in the TorchScript interpreter.

Traceback of TorchScript (most recent call last):

File "/xxxx/python37/lib/python3.7/site-packages/torchvision/models/detection/roi_heads.py", line 466, in _onnx_paste_masks_in_image_loop

res_append = torch.zeros(0, im_h, im_w)

for i in range(masks.size(0)):

mask_res = _onnx_paste_mask_in_image(masks[i][0], boxes[i], im_h, im_w)

~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

mask_res = mask_res.unsqueeze(0)

res_append = torch.cat((res_append, mask_res))

File "/xxx/site-packages/torchvision/models/detection/roi_heads.py", line 430, in _onnx_paste_mask_in_image

zero = torch.zeros(1, dtype=torch.int64)

w = box[2] - box[0] + one

~~~~~~~~~~~~~~~~~~~~~ <--- HERE

h = box[3] - box[1] + one

w = torch.max(torch.cat((w, one)))

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!

### Versions

pytorch 1.12

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 0 |

3,702 | 92,350 |

TypeError: no implementation found for 'torch._ops.aten.max.default' on types that implement __torch_dispatch__: [<class 'torch.masked.maskedtensor.core.MaskedTensor'>]

|

triaged, module: masked operators

|

### 🐛 Describe the bug

My problem with MaskedTensor is that the stacktrace is extremely incorrect and vague, I am unable to debug the source of any error. It claims here that the "max" is not supported but there is no max in the code, and it doesn't even reference a relevant line, there is no way the for loop line is erroring as there is no pytorch code there.

```

/usr/local/lib/python3.8/dist-packages/torch/masked/maskedtensor/core.py:299: UserWarning: max is not implemented in __torch_dispatch__ for MaskedTensor.

If you would like this operator to be supported, please file an issue for a feature request at https://github.com/pytorch/maskedtensor/issues with a minimal reproducible code snippet.

In the case that the semantics for the operator are not trivial, it would be appreciated to also include a proposal for the semantics.

warnings.warn(msg)

Traceback (most recent call last):

File "adam_aug_lagrangian.py", line 90, in <module>

for outermost_training_iter in range(num_training_iters):

File "/usr/local/lib/python3.8/dist-packages/torch/masked/maskedtensor/core.py", line 274, in __torch_function__

ret = func(*args, **kwargs)

TypeError: no implementation found for 'torch._ops.aten.max.default' on types that implement __torch_dispatch__: [<class 'torch.masked.maskedtensor.core.MaskedTensor'>]

```

### Versions

```

Collecting environment information...

PyTorch version: 1.13.1+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-132-generic-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA RTX A5000

Nvidia driver version: 465.19.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.1

[pip3] pytorch-minimize==0.0.2

[pip3] torch==1.13.1

[conda] Could not collect

```

| 0 |

3,703 | 93,511 |

support setattr of arbitrary user provided types in tracing

|

triaged, bug, oncall: pt2

|

### 🐛 Describe the bug

Dynamo already support patching nn.Module attribute outside of forward call (e.g. during model initialization): https://github.com/pytorch/pytorch/pull/91018 . But some use cases (e.g. detectrons's RCNN model) need patch nn.Module attribute in forward method ( fb internal link: https://fburl.com/code/vvekrxl6 ). Dynamo does not support this right now.

### Error logs

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/shunting/cpython/build/install/lib/python3.9/importlib/__init__.py", line 169, in reload

_bootstrap._exec(spec, module)

File "<frozen importlib._bootstrap>", line 613, in _exec

File "<frozen importlib._bootstrap_external>", line 790, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "/home/shunting/learn/misc.py", line 20, in <module>

gm, guards = dynamo.export(MyModule(), *inputs, aten_graph=True, tracing_mode="symbolic")

File "/home/shunting/pytorch/torch/_dynamo/eval_frame.py", line 616, in export

result_traced = opt_f(*args, **kwargs)

File "/home/shunting/pytorch/torch/nn/modules/module.py", line 1482, in _call_impl

return forward_call(*args, **kwargs)

File "/home/shunting/pytorch/torch/_dynamo/eval_frame.py", line 82, in forward

return self.dynamo_ctx(self._orig_mod.forward)(*args, **kwargs)

File "/home/shunting/pytorch/torch/_dynamo/eval_frame.py", line 211, in _fn

return fn(*args, **kwargs)

File "/home/shunting/pytorch/torch/_dynamo/eval_frame.py", line 332, in catch_errors

return callback(frame, cache_size, hooks)

File "/home/shunting/pytorch/torch/_dynamo/convert_frame.py", line 103, in _fn

return fn(*args, **kwargs)

File "/home/shunting/pytorch/torch/_dynamo/utils.py", line 90, in time_wrapper

r = func(*args, **kwargs)

File "/home/shunting/pytorch/torch/_dynamo/convert_frame.py", line 339, in _convert_frame_assert

return _compile(

File "/home/shunting/pytorch/torch/_dynamo/convert_frame.py", line 398, in _compile

out_code = transform_code_object(code, transform)

File "/home/shunting/pytorch/torch/_dynamo/bytecode_transformation.py", line 341, in transform_code_object

transformations(instructions, code_options)

File "/home/shunting/pytorch/torch/_dynamo/convert_frame.py", line 385, in transform

tracer.run()

File "/home/shunting/pytorch/torch/_dynamo/symbolic_convert.py", line 1686, in run

super().run()

File "/home/shunting/pytorch/torch/_dynamo/symbolic_convert.py", line 537, in run

and self.step()

File "/home/shunting/pytorch/torch/_dynamo/symbolic_convert.py", line 500, in step

getattr(self, inst.opname)(inst)

File "/home/shunting/pytorch/torch/_dynamo/symbolic_convert.py", line 1048, in STORE_ATTR

BuiltinVariable(setattr)

File "/home/shunting/pytorch/torch/_dynamo/variables/builtin.py", line 375, in call_function

return super().call_function(tx, args, kwargs)

File "/home/shunting/pytorch/torch/_dynamo/variables/base.py", line 230, in call_function

unimplemented(f"call_function {self} {args} {kwargs}")

File "/home/shunting/pytorch/torch/_dynamo/exc.py", line 67, in unimplemented

raise Unsupported(msg)

torch._dynamo.exc.Unsupported: call_function BuiltinVariable(setattr) [UserDefinedClassVariable(), ConstantVariable(str), GetAttrVariable(UserDefinedClassVariable(), run_cos)] {}

from user code:

File "/home/shunting/learn/misc.py", line 10, in forward

MyModule.run = MyModule.run_cos

Set torch._dynamo.config.verbose=True for more information

You can suppress this exception and fall back to eager by setting:

torch._dynamo.config.suppress_errors = True

### Minified repro

```

import torch

from torch import nn

import torch._dynamo as dynamo

class MyModule(nn.Module):

def __init__(self):

super().__init__()

def forward(self, x):

MyModule.run = MyModule.run_cos

return self.run(x)

def run(self, x):

return torch.sin(x)

def run_cos(self, x):

return torch.cos(x)

inputs = [torch.rand(5)]

gm, guards = dynamo.export(MyModule(), *inputs, aten_graph=True, tracing_mode="symbolic")

print(f"Graph is {gm.graph}")

```

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 4 |

3,704 | 92,339 |

fft.fftshift, fft.ifftshift, roll not implemented

|

triaged, module: fft, module: mps

|

### 🚀 The feature, motivation and pitch

I am working on Bragg CDI reconstruction and the algorithm uses fftshift and ifftshift. I would like to be able to run the code on MPS device.

### Alternatives

I can implement the functions with roll but it's also not supported for the MPS device.

### Additional context

_No response_

cc @mruberry @peterbell10 @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 7 |

3,705 | 92,331 |

backward(inputs= does not need to execute grad_fn of the inputs

|

module: bc-breaking, module: autograd, triaged, actionable, topic: bc breaking

|

Per title, we currently execute grad_fn of the inputs when running `.backward()` with the `inputs=` argument. We should avoid unnecessarily doing this extra compute since it is not actually necessary for the computation of the gradients wrt the inputs.

This is bc-breaking. This is mostly a bug-fix but a case where users may depend on this behavior is if they depend on hooks registered to Node to execute for the backward(inputs= case. Fixing this will require some changes to how we compute `needed` in the engine.

cc @ezyang @gchanan @albanD @zou3519 @gqchen @pearu @nikitaved @Lezcano @Varal7

| 1 |

3,706 | 92,330 |

Simplify module backward hooks to use multi-grad hooks instead

|

module: bc-breaking, module: autograd, module: nn, triaged, needs research, topic: bc breaking

|

Since modules can take in multiple inputs and return multiple outputs, currently we use a dummy custom autograd Function to ensure that there is a single grad_fn Node that we can attach our hook to. A multi-grad hook can be used to solve this issue in a less hacky way.

Note that this would be a bc-breaking change, as the hooks would be register to tensor instead of grad_fn, and the recorded backward graph would be different.

cc @ezyang @gchanan @albanD @zou3519 @gqchen @pearu @nikitaved @Lezcano @Varal7 @mruberry @jbschlosser @walterddr @mikaylagawarecki @saketh-are

| 2 |

3,707 | 92,310 |

[Releng] Windows AMI needs to be pinned for release

|

high priority, oncall: releng, triaged

|

### 🐛 Describe the bug

Windows AMI needs to be pinned for release.

### Versions

nightly, test

cc @ezyang @gchanan @zou3519

| 0 |

3,708 | 92,302 |

Cost & performance estimation for Windows Arm64 compilation

|

module: windows, triaged

|

This issue is about cost & performance estimation of CI/CD pipeline for building Windows Arm64 Pytorch.

Scope: In case current analyses is not sufficient, we want more detail analyses, but not with maximum precision.

cc @peterjc123 @mszhanyi @skyline75489 @nbcsm

| 0 |

3,709 | 92,294 |

jit.fork stalls multiprocessing dataloader

|

oncall: jit, module: dataloader, module: data

|

### 🐛 Describe the bug

Using `torch.jit.fork` with multiprocessing dataloaders stalls the dataloader.

I would expect one of the following:

1. The dataloader would simply work.

2. An error would result from trying to run `jit.fork` outside of the main process.

3. The documentation would warn of incompatability between `jit.fork` and parallel dataloaders.

Option #2 and/or #3 would probably be most realistic, due to the nature of the `multiprocessing` module.

``` python

import torch as th

def foo(x):

return x+1

@th.jit.script

def jit_fork_foo(x):

return th.jit.wait(th.jit.fork(foo, x))

class Dataset(th.utils.data.IterableDataset):

def __iter__(self):

while True:

yield jit_fork_foo(th.tensor([1]))

ds = Dataset()

dl_nomp = th.utils.data.DataLoader(ds, batch_size=2, num_workers=0)

dl_mp = th.utils.data.DataLoader(ds, batch_size=2, num_workers=2)

print("No multiprocessing ", next(iter(dl_nomp))) #returns as expected

print("multiprocessing ", next(iter(dl_mp))) #stalls

```

### Versions

```PyTorch version: 1.14.0a0+410ce96

Is debug build: False

CUDA used to build PyTorch: 11.8

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.24.1

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-125-generic-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: 11.8.89

CUDA_MODULE_LOADING set to: LAZY

Nvidia driver version: 470.141.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.7.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] pytorch-quantization==2.1.2

[pip3] torch==1.14.0a0+410ce96

[pip3] torch-tensorrt==1.3.0a0

[pip3] torchtext==0.13.0a0+fae8e8c

[pip3] torchvision==0.15.0a0

```

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel @SsnL @VitalyFedyunin @ejguan @NivekT

| 1 |

3,710 | 93,510 |

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation

|

triaged, bug, oncall: pt2

|

### 🐛 Describe the bug

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

### Error logs

[2023-01-17 16:14:06,580] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,584] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,587] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,617] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,638] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,638] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,671] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,679] torch._inductor.ir: [WARNING] DeviceCopy

[2023-01-17 16:14:06,726] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,736] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,743] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,759] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,778] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,787] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,820] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

[2023-01-17 16:14:06,831] torch._inductor.compile_fx: [WARNING] skipping cudagraphs due to multiple devices

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

/home/tiger/.local/lib/python3.7/site-packages/torch/cuda/graphs.py:82: UserWarning: The CUDA Graph is empty. This ususally means that the graph was attempted to be captured on wrong device or stream. (Triggered internally at ../aten/src/ATen/cuda/CUDAGraph.cpp:191.)

super(CUDAGraph, self).capture_end()

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

main(args)

File "fsdp_pretrain.py", line 318, in main

main(args)

File "fsdp_pretrain.py", line 318, in main

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputsscaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

main(args)

File "fsdp_pretrain.py", line 318, in main

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)main(args)

RuntimeError File "fsdp_pretrain.py", line 318, in main

: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

main(args)

File "fsdp_pretrain.py", line 318, in main

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

main(args)

File "fsdp_pretrain.py", line 318, in main

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

text: Unable to write to output stream.

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

Traceback (most recent call last):

File "fsdp_pretrain.py", line 423, in <module>

text: Unable to write to output stream.

text: Unable to write to output stream.

main(args)

File "fsdp_pretrain.py", line 318, in main

main(args)

File "fsdp_pretrain.py", line 318, in main

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

scaler.scale(loss).backward()

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_tensor.py", line 489, in backward

self, gradient, retain_graph, create_graph, inputs=inputs

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/__init__.py", line 199, in backward

allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass

File "/home/tiger/.local/lib/python3.7/site-packages/torch/autograd/function.py", line 276, in apply

return user_fn(self, *args)

File "/home/tiger/.local/lib/python3.7/site-packages/torch/_functorch/aot_autograd.py", line 1848, in backward

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

list(ctx.symints) + list(ctx.saved_tensors) + list(contiguous_args)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.HalfTensor [1024]] is at version 24; expected version 23 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 4 |

3,711 | 92,292 |

"Get Started" tells us to use the anaconda installer for PyTorch 3.x - but this should be python 3.x

|

oncall: binaries

|

### 📚 The doc issue

[Anaconda](https://pytorch.org/get-started/locally/#anaconda)

To install Anaconda, you will use the [64-bit graphical installer](https://www.anaconda.com/download/#windows) for PyTorch 3.x.

### Suggest a potential alternative/fix

[Anaconda](https://pytorch.org/get-started/locally/#anaconda)

To install Anaconda, you will use the [64-bit graphical installer](https://www.anaconda.com/download/#windows) for Python 3.x.

Tiny issue (o: hope the feedback helps

cc @ezyang @seemethere @malfet

| 1 |

3,712 | 92,285 |

InstanceNorm operator support for Vulkan devices

|

triaged, module: vulkan

|

### 🚀 The feature, motivation and pitch

At present, only BatchNorm op is supported for Vulkan drivers. The model file throws an error when using InstanceNorm on a Vulkan device ( Adreno )

### Alternatives

I have replaced InstanceNorm with BatchNorm for the time being

### Additional context

_No response_

| 1 |

3,713 | 92,273 |

Always install cpu version automatically

|

oncall: binaries

|

### 🐛 Describe the bug

Hi!

I use following cmd to install pytorch

```

conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=10.2 -c pytorch

```

however I got the result:

```

## Package Plan ##

environment location: D:\anaconda3

added / updated specs:

- cudatoolkit=10.2

- pytorch==1.12.1

- torchaudio==0.12.1

- torchvision==0.13.1

The following packages will be downloaded:

package | build

---------------------------|-----------------

conda-22.9.0 | py38haa95532_0 888 KB defaults

cudatoolkit-10.2.89 | h74a9793_1 317.2 MB defaults

libuv-1.40.0 | he774522_0 255 KB defaults

pytorch-1.12.1 | py3.8_cpu_0 133.8 MB pytorch

pytorch-mutex-1.0 | cpu 3 KB pytorch

torchaudio-0.12.1 | py38_cpu 3.5 MB pytorch

torchvision-0.13.1 | py38_cpu 6.2 MB pytorch

------------------------------------------------------------

Total: 461.9 MB

The following NEW packages will be INSTALLED:

cudatoolkit anaconda/pkgs/main/win-64::cudatoolkit-10.2.89-h74a9793_1

libuv anaconda/pkgs/main/win-64::libuv-1.40.0-he774522_0

pytorch pytorch/win-64::pytorch-1.12.1-py3.8_cpu_0

pytorch-mutex pytorch/noarch::pytorch-mutex-1.0-cpu

torchaudio pytorch/win-64::torchaudio-0.12.1-py38_cpu

torchvision pytorch/win-64::torchvision-0.13.1-py38_cpu

The following packages will be UPDATED:

conda pkgs/main::conda-4.9.2-py38haa95532_0 --> anaconda/pkgs/main::conda-22.9.0-py38haa95532_0

Proceed ([y]/n)?

```

I wonder why conda install cpu version automatically? How to install gpu version?

This is my hardware information:

```

Tue Jan 17 10:04:01 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 516.94 Driver Version: 516.94 CUDA Version: 11.7 |

|-------------------------------+----------------------+----------------------+

| GPU Name TCC/WDDM | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... WDDM | 00000000:65:00.0 On | N/A |

| 0% 43C P8 20W / 370W | 885MiB / 24576MiB | 6% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

```

Could you please give me some advice? Thanks in advance.

### Versions

pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=10.2

cc @ezyang @seemethere @malfet

| 3 |

3,714 | 92,260 |

distributions.Beta returning incorrect results at 0 and 1

|

module: distributions, triaged

|

### 🐛 Describe the bug

The beta distribution has support either on [0, 1] or, for some implementations, on (0, 1). However, the PyTorch version appears to have support on [0, 1] but it returns `-inf` or `nan` on those values.

```

In [31]: beta = torch.distributions.Beta(torch.tensor([1.0]), torch.tensor([1.0]), validate_args=True)

In [32]: beta.support

Out[32]: Interval(lower_bound=0.0, upper_bound=1.0)

In [33]: beta.log_prob(torch.tensor([1.0]))

Out[33]: tensor([nan])

In [34]: beta.log_prob(torch.tensor([0.0]))

Out[34]: tensor([nan])

In [35]: beta = torch.distributions.Beta(torch.tensor([2.0]), torch.tensor([2.0]), validate_args=True)

In [36]: beta.log_prob(torch.tensor([0.0]))

Out[36]: tensor([-inf])

In [37]: beta.log_prob(torch.tensor([1.0]))

Out[37]: tensor([-inf])

```

Note that we did not get a `ValueError` when we passed 0 and 1 even though `validate_args=True` was set, but we did get `-inf` and/or `nan` even though that's not the correct answer. Also, the `support` seems to indicate that 0 and 1 are legal (though I couldn't find documentation of the `Interval` class so I'm not sure if that's supposed to be inclusive or exclusive).

### Versions

PyTorch version: 1.13.0+cu116

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: Could not collect

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.10.6 (main, Oct 11 2022, 01:13:46) [GCC 10.2.1 20210110] (64-bit runtime)

Python platform: Linux-5.10.0-20-cloud-amd64-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.8.89

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA A100-SXM4-40GB

Nvidia driver version: 520.61.05

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==1.13.0+cu116

[pip3] torchvision==0.14.0+cu116

[conda] Could not collect

cc @fritzo @neerajprad @alicanb @nikitaved

| 2 |

3,715 | 92,259 |

[discussion] Fused MLPs

|

feature, triaged, oncall: pt2, module: inductor

|

### 🚀 The feature, motivation and pitch

I just stumbled on https://twitter.com/DrJimFan/status/1615018393601716224, there is https://github.com/NVlabs/tiny-cuda-nn which fuses small MLPs for fast training and inference. Can Inductor / Triton generate similar PTX / memory placement?

It seems at least as a good comparison target for benchmark

### Alternatives

_No response_

### Additional context

_No response_

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @mlazos @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @chunyuan-w @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @peterbell10 @desertfire

| 1 |

3,716 | 92,252 |

`model.to("cuda:0")` does not release all CPU memory

|

module: memory usage, triaged

|

### 🐛 Describe the bug

As per title, placing a model on GPU does not seem to release all allocated CPU memory:

```python

import os, psutil

import torch

import gc

from transformers import AutoModelForSpeechSeq2Seq

import torch

process = psutil.Process(os.getpid())

print(f"Before loading model on CPU: {process.memory_info().rss / 1e6:.2f} MB")

model = AutoModelForSpeechSeq2Seq.from_pretrained("openai/whisper-medium")

print(f"After loading model on CPU: {process.memory_info().rss / 1e6:.2f} MB")

model = model.to("cuda:0")

gc.collect()

print(f"After to('cuda:0'): {process.memory_info().rss / 1e6:.2f} MB")

for name, param in model.named_parameters():

assert param.device == torch.device("cuda:0")

```

prints:

```

Before loading model on CPU: 424.26 MB

After loading model on CPU: 3500.97 MB

After to('cuda:0'): 1472.24 MB

```

Is this expected? The model is about 3 GB.

The same can be checked with `free -h`.

### Versions

PyTorch version: 1.13.1+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: 14.0.0-1ubuntu1

CMake version: version 3.25.0

Libc version: glibc-2.35

Python version: 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.15.0-57-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3060 Laptop GPU

Nvidia driver version: 515.86.01

cuDNN version: Probably one of the following:

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.7.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.22.4

[pip3] torch==1.13.1

[pip3] torch-model-archiver==0.6.1

[pip3] torch-tb-profiler==0.4.0

[pip3] torch-workflow-archiver==0.2.5

[pip3] torchaudio==0.13.0

[pip3] torchinfo==1.7.0

[pip3] torchserve==0.6.1

[pip3] torchtriton==2.0.0+0d7e753227

[pip3] torchvision==0.14.0

[conda] cudatoolkit 11.3.1 h2bc3f7f_2 anaconda

[conda] numpy 1.22.4 pypi_0 pypi

[conda] torch 1.13.1 pypi_0 pypi

[conda] torch-model-archiver 0.6.1 pypi_0 pypi

[conda] torch-tb-profiler 0.4.0 dev_0 <develop>

[conda] torch-workflow-archiver 0.2.5 pypi_0 pypi

[conda] torchaudio 0.13.0 pypi_0 pypi

[conda] torchinfo 1.7.0 pypi_0 pypi

[conda] torchserve 0.6.1 pypi_0 pypi

[conda] torchtriton 2.0.0+0d7e753227 pypi_0 pypi

[conda] torchvision 0.14.0 pypi_0 pypi

| 0 |

3,717 | 92,251 |

`torch.load(..., map_location="cuda:0")` allocates memory on both CPU and GPU

|

module: serialization, triaged, module: python frontend

|

### 🐛 Describe the bug

As per title, using `torch.load(..., map_location="cuda:0")` allocates memory on CPU while I think it should not.

Reproduce:

```

wget https://huggingface.co/openai/whisper-medium/resolve/main/pytorch_model.bin

```

Then:

```python

import os, psutil

import gc

import torch

process = psutil.Process(os.getpid())

print(f"Before torch.load: {process.memory_info().rss / 1e6:.2f} MB")

res = torch.load("/path/to/pytorch_model.bin", map_location=torch.device("cuda:0"))

gc.collect()

print(f"After torch.load: {process.memory_info().rss / 1e6:.2f} MB")

```

prints:

```

Before torch.load: 290.95 MB

After torch.load: 3355.66 MB

```

and you can check with `nvidia-smi` that memory is reserved as well on GPU.

### Versions

PyTorch version: 1.13.1+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: 14.0.0-1ubuntu1

CMake version: version 3.25.0

Libc version: glibc-2.35

Python version: 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.15.0-57-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3060 Laptop GPU

Nvidia driver version: 515.86.01

cuDNN version: Probably one of the following:

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.7.0

/usr/local/cuda-11.7/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.7.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.22.4

[pip3] torch==1.13.1

[pip3] torch-model-archiver==0.6.1

[pip3] torch-tb-profiler==0.4.0

[pip3] torch-workflow-archiver==0.2.5

[pip3] torchaudio==0.13.0

[pip3] torchinfo==1.7.0

[pip3] torchserve==0.6.1

[pip3] torchtriton==2.0.0+0d7e753227

[pip3] torchvision==0.14.0

[conda] cudatoolkit 11.3.1 h2bc3f7f_2 anaconda

[conda] numpy 1.22.4 pypi_0 pypi

[conda] torch 1.13.1 pypi_0 pypi

[conda] torch-model-archiver 0.6.1 pypi_0 pypi

[conda] torch-tb-profiler 0.4.0 dev_0 <develop>

[conda] torch-workflow-archiver 0.2.5 pypi_0 pypi

[conda] torchaudio 0.13.0 pypi_0 pypi

[conda] torchinfo 1.7.0 pypi_0 pypi

[conda] torchserve 0.6.1 pypi_0 pypi

[conda] torchtriton 2.0.0+0d7e753227 pypi_0 pypi

[conda] torchvision 0.14.0 pypi_0 pypi

cc @mruberry @albanD

| 3 |

3,718 | 92,250 |

torch.cuda.is_available() returns True even if the CUDA hardware can't run pytorch

|

module: cuda, triaged

|

### 🐛 Describe the bug

My desktop has an ancient GPU that cannot be used with PyTorch. Nevertheless `torch.cuda.is_available()` returns True; I get an error later on when I try to run any computation.

I would have hoped to receive False from `torch.cuda.is_available()`.

I'm sorry that this example is rather long; it arose when I tried running the Quickstart tutorial and I don't yet know enough about PyTorch to strip this down to a minimal example.

```python

torch.cuda.is_available()

```

```

True

```

```python

device="cuda"

# Define model

class NeuralNetwork(nn.Module):

def __init__(self):

super().__init__()

self.flatten = nn.Flatten()

self.linear_relu_stack = nn.Sequential(

nn.Linear(28*28, 512),

nn.ReLU(),

nn.Linear(512, 512),

nn.ReLU(),

nn.Linear(512, 10)

)

def forward(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logits

model = NeuralNetwork().to(device)

```

```

/home/peridot/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/cuda/__init__.py:132: UserWarning:

Found GPU0 Quadro K600 which is of cuda capability 3.0.

PyTorch no longer supports this GPU because it is too old.

The minimum cuda capability supported by this library is 3.7.

warnings.warn(old_gpu_warn % (d, name, major, minor, min_arch // 10, min_arch % 10))

```

If I then go on to do an actual calculation I get a RuntimeError:

```python

loss_fn = nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

model.train()

for batch, (X, y) in enumerate(dataloader):

X, y = X.to(device), y.to(device)

# Compute prediction error

pred = model(X)

loss = loss_fn(pred, y)

# Backpropagation

optimizer.zero_grad()

loss.backward()

optimizer.step()

if batch % 100 == 0:

loss, current = loss.item(), batch * len(X)

print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

# Download training data from open datasets.

training_data = datasets.FashionMNIST(

root="data",

train=True,

download=True,

transform=ToTensor(),

)

train_dataloader = DataLoader(training_data, shuffle=True)

train(train_dataloader, model, loss_fn, optimizer)

```

```

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Cell In[3], line 54

45 training_data = datasets.FashionMNIST(

46 root="data",

47 train=True,

48 download=True,

49 transform=ToTensor(),

50 )

52 train_dataloader = DataLoader(training_data, shuffle=True)

---> 54 train(train_dataloader, model, loss_fn, optimizer)

Cell In[3], line 32, in train(dataloader, model, loss_fn, optimizer)

29 X, y = X.to(device), y.to(device)

31 # Compute prediction error

---> 32 pred = model(X)

33 loss = loss_fn(pred, y)

35 # Backpropagation

File ~/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)

1190 # If we don't have any hooks, we want to skip the rest of the logic in

1191 # this function, and just call forward.

1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1193 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1194 return forward_call(*input, **kwargs)

1195 # Do not call functions when jit is used

1196 full_backward_hooks, non_full_backward_hooks = [], []

Cell In[3], line 17, in NeuralNetwork.forward(self, x)

15 def forward(self, x):

16 x = self.flatten(x)

---> 17 logits = self.linear_relu_stack(x)

18 return logits

File ~/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)

1190 # If we don't have any hooks, we want to skip the rest of the logic in

1191 # this function, and just call forward.

1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1193 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1194 return forward_call(*input, **kwargs)

1195 # Do not call functions when jit is used

1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/nn/modules/container.py:204, in Sequential.forward(self, input)

202 def forward(self, input):

203 for module in self:

--> 204 input = module(input)

205 return input

File ~/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)

1190 # If we don't have any hooks, we want to skip the rest of the logic in

1191 # this function, and just call forward.

1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1193 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1194 return forward_call(*input, **kwargs)

1195 # Do not call functions when jit is used

1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/nn/modules/linear.py:114, in Linear.forward(self, input)

113 def forward(self, input: Tensor) -> Tensor:

--> 114 return F.linear(input, self.weight, self.bias)

RuntimeError: CUDA error: CUBLAS_STATUS_ALLOC_FAILED when calling `cublasCreate(handle)`

```

### Versions

Collecting environment information...

/home/peridot/software/miniconda3/envs/ml-torch/lib/python3.10/site-packages/torch/cuda/__init__.py:132: UserWarning:

Found GPU0 Quadro K600 which is of cuda capability 3.0.

PyTorch no longer supports this GPU because it is too old.

The minimum cuda capability supported by this library is 3.7.

warnings.warn(old_gpu_warn % (d, name, major, minor, min_arch // 10, min_arch % 10))

PyTorch version: 1.13.1

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.10 (x86_64)

GCC version: (Ubuntu 12.2.0-3ubuntu1) 12.2.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.36

Python version: 3.10.8 (main, Nov 24 2022, 14:13:03) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.19.0-28-generic-x86_64-with-glibc2.36

Is CUDA available: True

CUDA runtime version: 11.6.124

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: Quadro K600

Nvidia driver version: 470.161.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.991

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.24.1

[pip3] torch==1.13.1

[pip3] torchaudio==0.13.1

[pip3] torchvision==0.14.1

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py310h7f8727e_0

[conda] mkl_fft 1.3.1 py310hd6ae3a3_0

[conda] mkl_random 1.2.2 py310h00e6091_0

[conda] numpy 1.23.5 py310hd5efca6_0

[conda] numpy-base 1.23.5 py310h8e6c178_0

[conda] pytorch 1.13.1 py3.10_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-cuda 11.6 h867d48c_1 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.13.1 py310_cu116 pytorch

[conda] torchvision 0.14.1 py310_cu116 pytorch

cc @ngimel

| 3 |

3,719 | 92,246 |

test_qnnpack_add fails

|

oncall: mobile, module: xnnpack

|

### 🐛 Describe the bug

The test fails with this:

```

test_qnnpack_add (quantization.core.test_quantized_op.TestQNNPackOps) ... Falsifying example: test_qnnpack_add(

A=(array([1.], dtype=float32), (1.0, 0, torch.qint8)),

zero_point=127,

scale_A=0.057,

scale_B=0.008,

scale_C=0.003,

self=<quantization.core.test_quantized_op.TestQNNPackOps testMethod=test_qnnpack_add>,

)

FAIL

```

The test_qnnpack_add_broadcast fails due to similar reasons.

With a bit of code reading I found this:

- `qA.dequantize()` and `qB.dequantize()` are zero due to the scaling and a zero_point of 127

- Hence the [groundtruth C](https://github.com/pytorch/pytorch/blob/76c88364ed6ffcef41743c8370d6ff1306e0100d/test/quantization/core/test_quantized_op.py#L5914) is also zero which is passed to `_quantize` yielding `qC = [0]`

- But the `qC_qnnp.int_repr = [127]`

More intermediates:

```

qA = tensor([0.], size=(1,), dtype=torch.qint8,

quantization_scheme=torch.per_tensor_affine, scale=0.057, zero_point=127)

qB = tensor([0.], size=(1,), dtype=torch.qint8,

quantization_scheme=torch.per_tensor_affine, scale=0.008, zero_point=127)

qA/qB.dequantize() = tensor([0.])

qC_qnnp = tensor([0.3810], size=(1,), dtype=torch.qint8,

quantization_scheme=torch.per_tensor_affine, scale=0.003, zero_point=0)

qC_qnnp.int_repr() = tensor([127], dtype=torch.int8)

qC_qnnp.dequantize() = tensor([0.3810])

```

### Versions

PyTorch 1.12.1

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo @jgong5 @Xia-Weiwen @leslie-fang-intel

| 3 |

3,720 | 92,245 |

CapabilityBasedPartitioner incorrectly sorts the graph, causing optimizer return/output node to be first

|

triaged, module: fx, oncall: pt2

|

### 🐛 Describe the bug

Hello. I prepared code to test custom backends for torch.compile functionality. One of the things my backend is doing is using fx.Interpreter to go through all operations (via fake tensors) and propagate device info. Then I use this information to cluster some of the operations via CapabilityBasedPartitioner. For purpose of this bug I have simplified the code, it is available here:

https://gist.github.com/kbadz-pl/9bff0459fa37f4231125c0163aec4b89

Issue is that when I try to run partitioning and merging over optimizer code, it seems to wrongly treat return/output node because it has no arguments/predecessors. It ends up being first and effectively causing optimizer to not run at all (checked in the run traces).

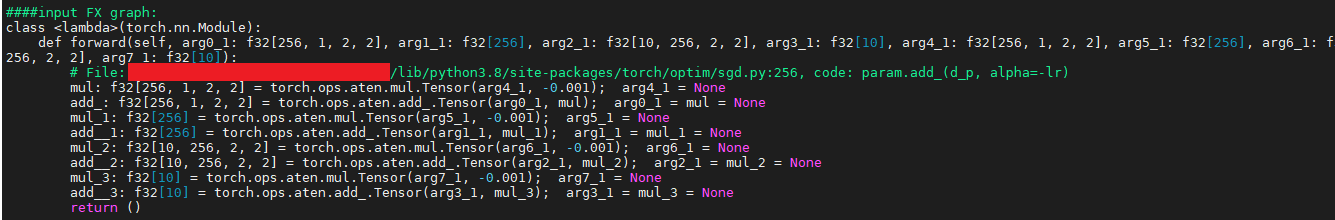

SGD graph before partitioning:

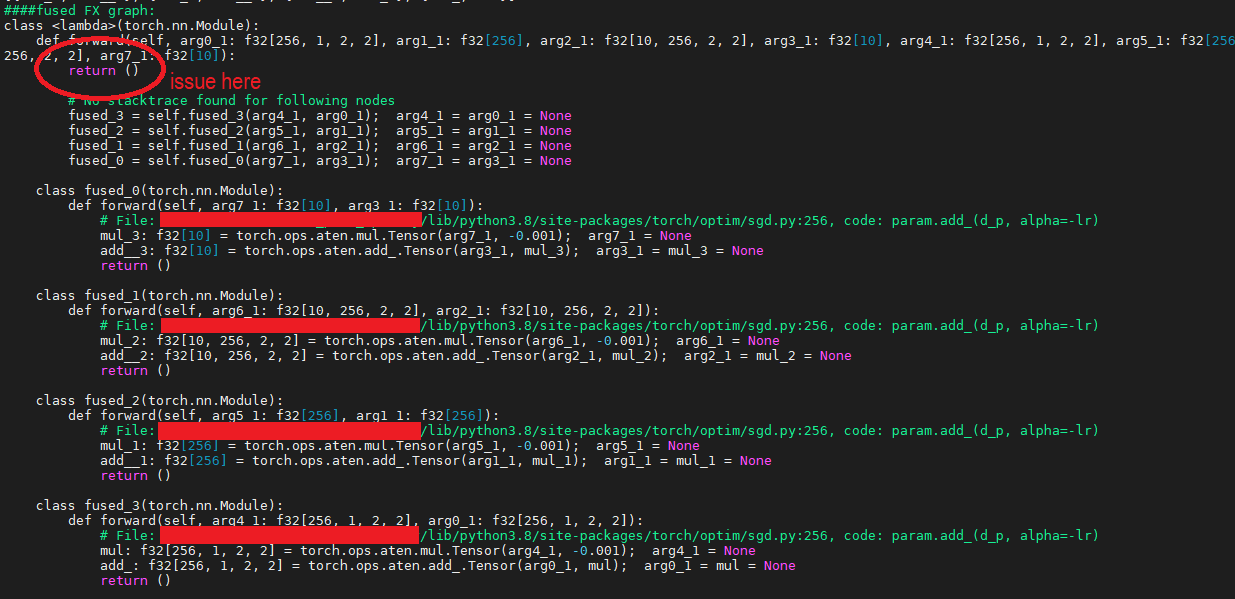

SGD graph after partitioning and merging:

### Versions

PyTorch version: 2.0.0.dev20230116+cpu

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.3 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-126-generic-x86_64-with-glibc2.29

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==2.0.0.dev20230116+cpu

[pip3] torchaudio==2.0.0.dev20230115+cpu

[pip3] torchvision==0.15.0.dev20230115+cpu

[conda] Could not collect

cc @ezyang @SherlockNoMad @soumith @EikanWang @jgong5 @wenzhe-nrv @msaroufim @wconstab @ngimel @bdhirsh

| 6 |

3,721 | 92,244 |

Infinite recursion when tracing through lift_fresh_copy OP in Adam optimizer

|

module: optimizer, triaged, oncall: pt2, module: fakeTensor

|

### 🐛 Describe the bug

Hello. I prepared code to test custom backends for torch.compile functionality. One of the things my backend is doing is using fx.Interpreter to go through all operations (via fake tensors) and propagate device info. Then I use this information to cluster some of the operations via CapabilityBasedPartitioner. For purpose of this bug I have simplified the code, it is available here: https://gist.github.com/kbadz-pl/53d6cf36ac467039a0fa3170e7d5157b

Issue is that when fx.Interpreter tries to run_node over **lift_fresh_copy** operation, it goes into infinite recursion and crashes.

I did not see such issue with other OPs. Issue does not occur for example for SGD (it does not contain such OP) or by disabling fake tensors. You can test it by changing to SGD in line 106 or disabling fake tensors in line 15 of my file.

### Versions

PyTorch version: 2.0.0.dev20230116+cpu

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.3 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-126-generic-x86_64-with-glibc2.29

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] torch==2.0.0.dev20230116+cpu

[pip3] torchaudio==2.0.0.dev20230115+cpu

[pip3] torchvision==0.15.0.dev20230115+cpu

[conda] Could not collect

cc @vincentqb @jbschlosser @albanD @janeyx99 @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 6 |

3,722 | 92,230 |

Add torch::jit::ScriptModule to the C++ API

|

oncall: jit

|

### 🚀 The feature, motivation and pitch

I'd like to implement a model in C++ with some submodules defined in C++ being subclasses of `torch::nn::Module` and a submodule being a `torch::jit::Module`. This is a common use case when your design your own model based around some well known backbone for which a torchscripted version is available.

I'm not sure about the best way to do this, but I believe that I must build my own wrapper subclassing `torch::nn::Module` around the JIT module. Such wrapper would register the parameters of the JIT module, and build recursively wrappers around its submodules. The wrapper would also need to overide `train()`, `eval()`, `to()` ... and delegate them to the JIT module. A bit ugly but it should work.

The thing is, I'm not really developing in C++, but in Java (or whatever language using a binding to the C++ API). In that case, writing a C++ subclass of `torch::nn::Module` that wraps the `torch::jit::Module` is not an available option.

So is it possible, either to make `torch::jit::Module` inherit from `torch:nn::Module` (I guess not) or provide a ready to be used C++ `torch::jit::ScriptModule` wrapper class, just like the Python counterpart ?

### Alternatives

_No response_

### Additional context

_No response_

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 0 |

3,723 | 92,226 |

Hijacked package names from nightly repository

|

oncall: binaries, security

|

### 🐛 Describe the bug

In response to this

https://pytorch.org/blog/compromised-nightly-dependency/

I checked if there are other package names in the pytorch nightly package index. I found two package names that I could register on pypi (torchaudio-nightly, pytorch-csprng).

I reported this to facebook's bugbounty program (as your security policy says), however it seems facebook no longer is responsible here (see also #91570). Facebook's security team came to the conclusion that the issue is not severe.

Nevertheless I now have registered these package names on pypi and I am wondering what to do with them. I would prefer to transmit ownership of the account to the pytorch team so you can decide what to do with them and if you want to keep them registered to block the names.

### Versions

irrelevant/nightly

cc @ezyang @seemethere @malfet

| 3 |

3,724 | 92,223 |

Improve make_fx tracing speed

|

module: performance, triaged, module: ProxyTensor

|

### 🚀 The feature, motivation and pitch

We observed that tracing a model using make_fx is sometimes much slower than actually running a model, and the observation has been consistent across different model sizes. For our big model sometimes we see a 30s+ tracing time.

While we understand tracing is usually only done once throughout the training process, the slow tracing speed has a direct impact on startup time, which leads to a very unpleasant debug-test experience.

```

import torch

from functorch import make_fx

import time

def main():

model = ...

input = ...

start = time.perf_counter()

model(input)

end = time.perf_counter()

print(f"time to execute {end - start}")

start = time.perf_counter()

traced = make_fx(model)(input)

end = time.perf_counter()

print(f"time to trace {end - start}")

```

```

time to execute 0.04433171300000005

time to trace 0.47819954299999967

```

### Alternatives

_No response_

### Additional context

_No response_

cc @ngimel @ezyang @SherlockNoMad @soumith @EikanWang @jgong5 @wenzhe-nrv

| 0 |

3,725 | 92,217 |

false INTERNAL ASSERT FAILED at "../c10/cuda/CUDAGraphsC10Utils.h":73, please report a bug to PyTorch. Unknown CUDA graph CaptureStatus32680

|

triaged, module: cuda graphs

|

### 🐛 Describe the bug

hello i used this code in colab:

seed = 72

torch.manual_seed(seed) # Insert any integer

torch.cuda.manual_seed(seed) # Insert any integer

i got a Error says:

RuntimeError: false INTERNAL ASSERT FAILED at "../c10/cuda/CUDAGraphsC10Utils.h":73, please report a bug to PyTorch. Unknown CUDA graph CaptureStatus32680

### Versions

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

cc @mcarilli @ezyang

| 0 |

3,726 | 92,206 |

RuntimeError: derivative for aten::mps_linear_backward is not implemented

|

module: autograd, triaged, actionable, module: mps

|

### 🐛 Describe the bug

when I calculate the second derivative of the model, using my MacBook Air with M1, the bug is shown as below

<img width="1017" alt="image" src="https://user-images.githubusercontent.com/87766834/212471926-f4df0537-ac7e-42dc-b80e-a9d063314a14.png">

Another question: what amazes me most is that when I using the other computer with Windows PyTorch on cuda, the codes can run successfully...

I just want to know how to solve the problem on my MacBook shown in the picture above.

### Versions

15 u_pred = model(f_tmp)

16 du_df=torch.autograd.grad(outputs =u_pred, inputs = f_tmp,

17 grad_outputs = torch.ones_like(u_pred).to(device),

18 create_graph = True,

19 allow_unused=True

20 )[0]

---> 21 ddu_ddf=torch.autograd.grad(outputs =du_df, inputs = f_tmp,

22 grad_outputs = torch.ones_like(du_df).to(device),

23 create_graph = True,

24 allow_unused=True

25 )[0]

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @kulinseth @malfet @DenisVieriu97 @razarmehr @abhudev

| 9 |

3,727 | 93,509 |

Triton Autotuning Cache-Clearing Adds 256MB Memory Overhead

|

triaged, bug, oncall: pt2

|

### 🐛 Describe the bug

In inductor autotuning, we invoke triton's do_bench which internally allocates a [256mb tensor](https://github.com/openai/triton/blob/259f4c5f7d4ecb8c64c519fe669318a15d6f75f2/python/triton/testing.py#L162) that is used to clear the cache.

From a practical standpoint, 256 MB is insignificant on a 40GB machine, however for a 12GB RTX 3080 this is a 2% overhead. Additionally, it's a non-linear factor, which throws off memory usage stats for small batch sizes. It has a big effect on the torchbench performance dashboard because low batch sizes are being used. E.g. `resnet18` is at .69 compression with autotuning, and ~1 without.

We could pass a handle to other allocated memory that is not being used in the autotuned kernel to avoid the additional allocation.

### Error logs

_No response_

### Minified repro

_No response_

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 0 |

3,728 | 92,175 |

test_fx_passes generate bad test names

|

module: tests, oncall: fx

|

I am not sure how they get generated but some tests are named things like `test_partitioner_fn_<function TestPartitionFunctions_forward13 at 0x7f35079131a0>_expected_partition_[['add_2', 'add_1', 'add']]_bookend_non_compute_pass_False`.

I am amazed that this doesn't break anything very loudly. But this is definitely not going to work well with all the infra around detecting flaky tests and disabling broken things (the memory address there...).

These tests should be updated to have sane names!

cc @mruberry @ezyang @SherlockNoMad @EikanWang @jgong5 @wenzhe-nrv

| 0 |

3,729 | 92,173 |

"multi device" tests get skipped in standard CI

|

module: ci, module: tests, triaged

|

## Concern

The general concern is that people think they're adding tests to CI but these tests are getting skipped, which is bamboozling. Specifically, I wonder how many tests there are across our codebase that require multiple GPUs but are NOT on the multigpu shard, meaning they are always skipped (our runners only have 1 GPU).

This stemmed from my noticing the `@deviceCountAtLeast(2)` decorator while reviewing a PR, and then a brief search revealed that there are 18 instances of this decorator throughout our codebase. There may other ways people guard/skip tests based on # devices (such as with if statements/with TEST_MULTIGPU).

To add another layer to this onion, even if people knew about the multigpu shard and how to add a test to it, the multigpu config runs on a periodic cadence, so people may not realize that these tests they added never even ran on the CI of their PR.

## Potential Next Steps

1. Document in deviceCountAtLeast that these do NOT run in most CI, and that tests with this decorator should be added to https://github.com/pytorch/pytorch/blob/master/.jenkins/pytorch/multigpu-test.sh

2. Run existing unrun tests like `test_clip_grad_norm_multi_device` on the multigpu config

3. I understand that the multigpu shard was historically hardware-constrained. @pytorch/pytorch-dev-infra Is there a near future where multigpu can run on trunk instead of periodic?

cc @seemethere @malfet @pytorch/pytorch-dev-infra @mruberry

| 12 |

3,730 | 92,171 |

PyTorch 1.13.1 hangs with `torch.distributed.init_process_group`

|

oncall: distributed

|

### 🐛 Describe the bug

Hi there,

We're upgrading from `PyTorch` `1.12.1` to `1.13.1`, and find that it hangs with `torch.distributed.init_process_group` with `1.13.1` while not `1.12.1`, with the following source code when I turn on `CUDA_LAUNCH_BLOCKING=1`:

```

def ddp_init(self, core):

import torch.distributed as dist

# ...

init_method = f"tcp://{ip}:{main_port}"

dist.init_process_group(

self.cfg.ddp_dist_backend,

rank=core.rank(),

world_size=core.size(),

init_method=init_method,

)

dist.barrier()

```

where `self.cfg.ddp_dist_backend = nccl`.

- We look at https://discuss.pytorch.org/t/torch-distributed-init-process-group-hangs-with-4-gpus-with-backend-nccl-but-not-gloo/149061/1, and `IOMMU` should be `disabled` as:

```

ubuntu@compute-dy-worker-80:~$ sudo lspci -vvv | grep ACSCtl

ubuntu@compute-dy-worker-80:~$

```

### Versions

```

root@compute-dy-worker-80:/# python collect_env.py

Collecting environment information...

PyTorch version: 1.13.1+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.24.3

Libc version: glibc-2.31

Python version: 3.9.13 | packaged by conda-forge | (main, May 27 2022, 16:58:50) [GCC 10.3.0] (64-bit runtime)

Python platform: Linux-5.4.0-1080-aws-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA A100-SXM4-40GB

GPU 1: NVIDIA A100-SXM4-40GB

GPU 2: NVIDIA A100-SXM4-40GB

GPU 3: NVIDIA A100-SXM4-40GB

GPU 4: NVIDIA A100-SXM4-40GB

GPU 5: NVIDIA A100-SXM4-40GB

GPU 6: NVIDIA A100-SXM4-40GB

GPU 7: NVIDIA A100-SXM4-40GB

Nvidia driver version: 510.47.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.5.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] sagemaker-pytorch-training==2.7.0

[pip3] torch==1.13.1+cu117

[pip3] torchaudio==0.13.1+cu117

[pip3] torchdata==0.5.1

[pip3] torchnet==0.0.4

[pip3] torchvision==0.14.1+cu117

[conda] Could not collect

root@compute-dy-worker-80:/#

```

```

root@compute-dy-worker-80:/# pip show conda

Name: conda

Version: 22.11.1

Summary: OS-agnostic, system-level binary package manager.

Home-page: https://github.com/conda/conda

Author: Anaconda, Inc.

Author-email: conda@continuum.io

License: BSD-3-Clause

Location: /opt/conda/lib/python3.9/site-packages

Requires: pluggy, pycosat, requests, ruamel.yaml, tqdm

Required-by: mamba

root@compute-dy-worker-80:/#

```

Thank you.

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu

| 1 |

3,731 | 92,151 |

Exception in distributed context doesn't propagate to child processes launched with multiprocessing

|

oncall: distributed

|

### 🐛 Describe the bug

I'm using `torch.distributed` to launch multi GPU trainings and `torch.multiprocessing` in data preparation but I have an issue if an exception is raised in a child process.

Here is an example:

- I use 2 devices (launching my script using `torchrun --nproc_per_node=2 myscript.py`)

- on each device, the main process (called main:0 and main:1) starts a child process (called child:0 and child:1)

- if child raises an exception, I catch this exception to raise it in main aswell

- then, main:0 is killed thanks to SIGTERM

- but, child:0 isn't killed and thus it becomes a zombie process that keeps using resources and blocks the end of the whole process

Here a snippet that you can run using `torchrun --nproc_per_node=2 tmp.py` on a machine with at least 2 GPUs to reproduce this issue:

```python

# tmp.py

import time

import torch

from torch import multiprocessing

def main():

torch.distributed.init_process_group("nccl")

device = torch.device(torch.distributed.get_rank())

torch.cuda.set_device(device)

ctx = multiprocessing.get_context("spawn")

exc_queue = ctx.Queue(1)

torch.Tensor([1]).to(device) # useful to display active processes in nvidia-smi

process = ctx.Process(target=subprocess, args=(device, exc_queue), daemon=True)

process.start()

print(f"main:{device.index} started")

process.join()

print(f"child:{device.index} has joined")

if not exc_queue.empty():

exc = exc_queue.get()

raise RuntimeError("subprocess failed") from exc

print(f"main:{device.index} finished")

def subprocess(device: torch.device, exc_queue: multiprocessing.Queue):

try:

torch.Tensor([1]).to(device) # useful to display active processes in nvidia-smi

print(f"child:{device.index} started")

if device.index:

# emulating an exception that occurs only on a single device

time.sleep(3)

raise RuntimeError()

while True:

# emulating the "normal" behavior of the subprocess such as reading data

print(f"child:{device.index} in loop")

time.sleep(4)

except Exception as exc:

exc_queue.put(exc)

raise exc

if __name__ == "__main__":

main()

```

Here is the traceback I got:

```pyhon

$ torchrun --nproc_per_node=2 tmp.py

WARNING:torch.distributed.run:

*****************************************

Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

*****************************************

main:0 started

main:1 started

child:0 started

child:0 in loop

child:1 started

Process SpawnProcess-1:

Traceback (most recent call last):

File "/opt/rh/rh-python38/root/usr/lib64/python3.8/multiprocessing/process.py", line 315, in _bootstrap

self.run()

File "/opt/rh/rh-python38/root/usr/lib64/python3.8/multiprocessing/process.py", line 108, in run

self._target(*self._args, **self._kwargs)

File "./tmp.py", line 40, in subprocess

raise exc

File "./tmp.py", line 33, in subprocess

raise RuntimeError()

RuntimeError

child:1 has joined

RuntimeError

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "tmp.py", line 44, in <module>

main()

File "tmp.py", line 22, in main

raise RuntimeError("subprocess failed") from exc

RuntimeError: subprocess failed

child:0 in loop

WARNING:torch.distributed.elastic.multiprocessing.api:Sending process 178624 closing signal SIGTERM

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 1 (pid: 178625) of binary: ./venv/bin/python

Traceback (most recent call last):

File "./venv/bin/torchrun", line 8, in <module>

sys.exit(main())

File "./venv/lib64/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 345, in wrapper

return f(*args, **kwargs)

File "./venv/lib64/python3.8/site-packages/torch/distributed/run.py", line 761, in main

run(args)

File "./venv/lib64/python3.8/site-packages/torch/distributed/run.py", line 752, in run

elastic_launch(

File "./venv/lib64/python3.8/site-packages/torch/distributed/launcher/api.py", line 131, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "./venv/lib64/python3.8/site-packages/torch/distributed/launcher/api.py", line 245, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

tmp.py FAILED

------------------------------------------------------------

Failures:

<NO_OTHER_FAILURES>

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2023-01-13_07:23:42

host : bv4sxk2.pnp.melodis.com

rank : 1 (local_rank: 1)

exitcode : 1 (pid: 178625)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

============================================================

$ child:0 in loop

child:0 in loop

child:0 in loop

... # the loop keeps running endlessly

```

When looking at nvidia-smi after all processes have been killed except child:0 I see child:0 process still occupying memory.

Is there a way to make sure all children processes are killed when one of the main processes is killed in a distributed context ?

Thanks

### Versions

```

Collecting environment information...

PyTorch version: 1.12.1+cu116

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (GCC) 9.3.1 20200408 (Red Hat 9.3.1-2)

Clang version: 14.0.6 ( 14.0.6-4sh.el7)

CMake version: version 3.24.3

Libc version: glibc-2.17

Python version: 3.8.13 (default, Aug 16 2022, 12:16:29) [GCC 9.3.1 20200408 (Red Hat 9.3.1-2)] (64-bit runtime)

Python platform: Linux-3.10.0-693.2.2.el7.x86_64-x86_64-with-glibc2.2.5

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to:

GPU models and configuration:

GPU 0: NVIDIA Tesla P100-PCIE-16GB

GPU 1: NVIDIA Tesla P100-PCIE-16GB

Nvidia driver version: 465.19.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A