modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-05 12:28:30

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 539

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-05 12:28:13

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Prisma-Multimodal/sparse-autoencoder-clip-b-32-sae-vanilla-x64-layer-8-hook_resid_post-l1-0.0001

|

Prisma-Multimodal

| 2024-11-01T16:23:15Z | 22 | 0 |

torch

|

[

"torch",

"clip",

"vision",

"transformers",

"interpretability",

"sparse autoencoder",

"sae",

"mechanistic interpretability",

"feature-extraction",

"en",

"license:apache-2.0",

"region:us"

] |

feature-extraction

| 2024-11-01T16:23:04Z |

---

language: en

tags:

- clip

- vision

- transformers

- interpretability

- sparse autoencoder

- sae

- mechanistic interpretability

license: apache-2.0

library_name: torch

pipeline_tag: feature-extraction

metrics:

- type: explained_variance

value: 77.9

pretty_name: Explained Variance %

range:

min: 0

max: 100

- type: l0

value: 156.154

pretty_name: L0

---

# CLIP-B-32 Sparse Autoencoder x64 vanilla - L1:0.0001

### Training Details

- Base Model: CLIP-ViT-B-32 (LAION DataComp.XL-s13B-b90K)

- Layer: 8

- Component: hook_resid_post

### Model Architecture

- Input Dimension: 768

- SAE Dimension: 49,152

- Expansion Factor: x64 (vanilla architecture)

- Activation Function: ReLU

- Initialization: encoder_transpose_decoder

- Context Size: 50 tokens

### Performance Metrics

- L1 Coefficient: 0.0001

- L0 Sparsity: 156.1541

- Explained Variance: 0.7787 (77.87%)

### Training Configuration

- Learning Rate: 0.0004

- LR Scheduler: Cosine Annealing with Warmup (200 steps)

- Epochs: 10

- Gradient Clipping: 1.0

- Device: NVIDIA Quadro RTX 8000

**Experiment Tracking:**

- Weights & Biases Run ID: aoa9e6a9

- Full experiment details: https://wandb.ai/perceptual-alignment/clip/runs/aoa9e6a9/overview

- Git Commit: e22dd02726b74a054a779a4805b96059d83244aa

## Citation

```bibtex

@misc{2024josephsparseautoencoders,

title={Sparse Autoencoders for CLIP-ViT-B-32},

author={Joseph, Sonia},

year={2024},

publisher={Prisma-Multimodal},

url={https://huggingface.co/Prisma-Multimodal},

note={Layer 8, hook_resid_post, Run ID: aoa9e6a9}

}

|

sulaimank/wav2vec-xlsr-cv-grain-lg_grn_only_v2

|

sulaimank

| 2024-11-01T16:20:25Z | 17 | 0 |

transformers

|

[

"transformers",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"base_model:facebook/wav2vec2-xls-r-300m",

"base_model:finetune:facebook/wav2vec2-xls-r-300m",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2024-11-01T04:58:01Z |

---

library_name: transformers

license: apache-2.0

base_model: facebook/wav2vec2-xls-r-300m

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: wav2vec-xlsr-cv-grain-lg_grn_only_v2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec-xlsr-cv-grain-lg_grn_only_v2

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0604

- Wer: 0.0276

- Cer: 0.0085

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 24

- eval_batch_size: 12

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 48

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 100

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer |

|:-------------:|:-------:|:-----:|:---------------:|:------:|:------:|

| 6.8998 | 0.9984 | 321 | 2.7793 | 1.0 | 0.8727 |

| 3.2905 | 2.0 | 643 | 0.8365 | 0.9015 | 0.2478 |

| 1.26 | 2.9984 | 964 | 0.3066 | 0.4268 | 0.0856 |

| 0.6344 | 4.0 | 1286 | 0.1856 | 0.2137 | 0.0451 |

| 0.4164 | 4.9984 | 1607 | 0.1513 | 0.1649 | 0.0364 |

| 0.3006 | 6.0 | 1929 | 0.1271 | 0.1274 | 0.0285 |

| 0.2414 | 6.9984 | 2250 | 0.1111 | 0.1083 | 0.0251 |

| 0.2035 | 8.0 | 2572 | 0.1076 | 0.0992 | 0.0228 |

| 0.169 | 8.9984 | 2893 | 0.1076 | 0.0931 | 0.0213 |

| 0.1501 | 10.0 | 3215 | 0.1007 | 0.0920 | 0.0213 |

| 0.1291 | 10.9984 | 3536 | 0.0892 | 0.0772 | 0.0185 |

| 0.1122 | 12.0 | 3858 | 0.0917 | 0.0746 | 0.0180 |

| 0.1053 | 12.9984 | 4179 | 0.0903 | 0.0707 | 0.0173 |

| 0.0972 | 14.0 | 4501 | 0.0863 | 0.0673 | 0.0164 |

| 0.0847 | 14.9984 | 4822 | 0.0849 | 0.0616 | 0.0157 |

| 0.0754 | 16.0 | 5144 | 0.0870 | 0.0657 | 0.0158 |

| 0.0751 | 16.9984 | 5465 | 0.0830 | 0.0610 | 0.0154 |

| 0.0722 | 18.0 | 5787 | 0.0922 | 0.0621 | 0.0159 |

| 0.0665 | 18.9984 | 6108 | 0.0784 | 0.0601 | 0.0153 |

| 0.0634 | 20.0 | 6430 | 0.0856 | 0.0545 | 0.0146 |

| 0.0601 | 20.9984 | 6751 | 0.0881 | 0.0584 | 0.0151 |

| 0.0545 | 22.0 | 7073 | 0.0876 | 0.0558 | 0.0144 |

| 0.0503 | 22.9984 | 7394 | 0.0815 | 0.0523 | 0.0137 |

| 0.0511 | 24.0 | 7716 | 0.0842 | 0.0521 | 0.0140 |

| 0.0477 | 24.9984 | 8037 | 0.0808 | 0.0532 | 0.0151 |

| 0.0433 | 26.0 | 8359 | 0.0770 | 0.0482 | 0.0125 |

| 0.0441 | 26.9984 | 8680 | 0.0803 | 0.0510 | 0.0137 |

| 0.0424 | 28.0 | 9002 | 0.0771 | 0.0460 | 0.0123 |

| 0.0373 | 28.9984 | 9323 | 0.0727 | 0.0462 | 0.0122 |

| 0.0376 | 30.0 | 9645 | 0.0768 | 0.0525 | 0.0134 |

| 0.0325 | 30.9984 | 9966 | 0.0801 | 0.0508 | 0.0134 |

| 0.0371 | 32.0 | 10288 | 0.0714 | 0.0445 | 0.0118 |

| 0.0339 | 32.9984 | 10609 | 0.0738 | 0.0458 | 0.0122 |

| 0.0329 | 34.0 | 10931 | 0.0672 | 0.0388 | 0.0104 |

| 0.0294 | 34.9984 | 11252 | 0.0750 | 0.0408 | 0.0113 |

| 0.0322 | 36.0 | 11574 | 0.0768 | 0.0423 | 0.0117 |

| 0.028 | 36.9984 | 11895 | 0.0735 | 0.0386 | 0.0117 |

| 0.0279 | 38.0 | 12217 | 0.0756 | 0.0414 | 0.0122 |

| 0.0259 | 38.9984 | 12538 | 0.0842 | 0.0495 | 0.0135 |

| 0.0273 | 40.0 | 12860 | 0.0775 | 0.0456 | 0.0131 |

| 0.026 | 40.9984 | 13181 | 0.0729 | 0.0427 | 0.0119 |

| 0.0247 | 42.0 | 13503 | 0.0728 | 0.0410 | 0.0115 |

| 0.0247 | 42.9984 | 13824 | 0.0709 | 0.0430 | 0.0118 |

| 0.023 | 44.0 | 14146 | 0.0632 | 0.0362 | 0.0101 |

| 0.0206 | 44.9984 | 14467 | 0.0675 | 0.0347 | 0.0106 |

| 0.0203 | 46.0 | 14789 | 0.0750 | 0.0419 | 0.0125 |

| 0.0215 | 46.9984 | 15110 | 0.0644 | 0.0358 | 0.0104 |

| 0.0172 | 48.0 | 15432 | 0.0693 | 0.0332 | 0.0098 |

| 0.0191 | 48.9984 | 15753 | 0.0694 | 0.0341 | 0.0102 |

| 0.0175 | 50.0 | 16075 | 0.0716 | 0.0369 | 0.0108 |

| 0.018 | 50.9984 | 16396 | 0.0635 | 0.0351 | 0.0101 |

| 0.0162 | 52.0 | 16718 | 0.0711 | 0.0382 | 0.0106 |

| 0.0167 | 52.9984 | 17039 | 0.0605 | 0.0343 | 0.0097 |

| 0.0173 | 54.0 | 17361 | 0.0699 | 0.0321 | 0.0097 |

| 0.0157 | 54.9984 | 17682 | 0.0726 | 0.0330 | 0.0100 |

| 0.0128 | 56.0 | 18004 | 0.0693 | 0.0323 | 0.0096 |

| 0.0169 | 56.9984 | 18325 | 0.0602 | 0.0306 | 0.0092 |

| 0.014 | 58.0 | 18647 | 0.0638 | 0.0332 | 0.0097 |

| 0.0133 | 58.9984 | 18968 | 0.0630 | 0.0325 | 0.0097 |

| 0.0151 | 60.0 | 19290 | 0.0645 | 0.0328 | 0.0098 |

| 0.0137 | 60.9984 | 19611 | 0.0642 | 0.0351 | 0.0098 |

| 0.0135 | 62.0 | 19933 | 0.0569 | 0.0284 | 0.0084 |

| 0.0119 | 62.9984 | 20254 | 0.0595 | 0.0308 | 0.0088 |

| 0.011 | 64.0 | 20576 | 0.0601 | 0.0263 | 0.0086 |

| 0.0113 | 64.9984 | 20897 | 0.0639 | 0.0282 | 0.0090 |

| 0.0125 | 66.0 | 21219 | 0.0588 | 0.0291 | 0.0090 |

| 0.0103 | 66.9984 | 21540 | 0.0632 | 0.0289 | 0.0090 |

| 0.0094 | 68.0 | 21862 | 0.0600 | 0.0282 | 0.0087 |

| 0.0098 | 68.9984 | 22183 | 0.0615 | 0.0278 | 0.0085 |

| 0.0089 | 70.0 | 22505 | 0.0598 | 0.0278 | 0.0084 |

| 0.0105 | 70.9984 | 22826 | 0.0611 | 0.0291 | 0.0081 |

| 0.0083 | 72.0 | 23148 | 0.0623 | 0.0293 | 0.0084 |

| 0.0092 | 72.9984 | 23469 | 0.0590 | 0.0302 | 0.0090 |

| 0.0068 | 74.0 | 23791 | 0.0604 | 0.0276 | 0.0085 |

### Framework versions

- Transformers 4.46.1

- Pytorch 2.1.0+cu118

- Datasets 3.1.0

- Tokenizers 0.20.1

|

LocalDoc/LaBSE-small-AZ

|

LocalDoc

| 2024-11-01T16:14:17Z | 22 | 0 | null |

[

"safetensors",

"bert",

"sentence-similarity",

"en",

"az",

"base_model:sentence-transformers/LaBSE",

"base_model:finetune:sentence-transformers/LaBSE",

"doi:10.57967/hf/3417",

"license:apache-2.0",

"region:us"

] |

sentence-similarity

| 2024-11-01T15:41:06Z |

---

license: apache-2.0

language:

- en

- az

base_model:

- sentence-transformers/LaBSE

pipeline_tag: sentence-similarity

---

# Small LaBSE for English-Azerbaijani

This is an optimized version of [LaBSE](https://huggingface.co/sentence-transformers/LaBSE)

# Benchmark

| STSBenchmark | biosses-sts | sickr-sts | sts12-sts | sts13-sts | sts15-sts | sts16-sts | Average Pearson | Model |

|--------------|-------------|-----------|-----------|-----------|-----------|-----------|-----------------|--------------------------------------|

| 0.7363 | 0.8148 | 0.7067 | 0.7050 | 0.6535 | 0.7514 | 0.7070 | 0.7250 | sentence-transformers/LaBSE |

| 0.7400 | 0.8216 | 0.6946 | 0.7098 | 0.6781 | 0.7637 | 0.7222 | 0.7329 | LocalDoc/LaBSE-small-AZ |

| 0.5830 | 0.2486 | 0.5921 | 0.5593 | 0.5559 | 0.5404 | 0.5289 | 0.5155 | antoinelouis/colbert-xm |

| 0.7572 | 0.8139 | 0.7328 | 0.7646 | 0.6318 | 0.7542 | 0.7092 | 0.7377 | intfloat/multilingual-e5-large-instruct |

| 0.7485 | 0.7714 | 0.7271 | 0.7170 | 0.6496 | 0.7570 | 0.7255 | 0.7280 | intfloat/multilingual-e5-large |

| 0.6960 | 0.8185 | 0.6950 | 0.6752 | 0.5899 | 0.7186 | 0.6790 | 0.6960 | intfloat/multilingual-e5-base |

| 0.7376 | 0.7917 | 0.7190 | 0.7441 | 0.6286 | 0.7461 | 0.7026 | 0.7242 | intfloat/multilingual-e5-small |

| 0.7927 | 0.6672 | 0.7758 | 0.8122 | 0.7312 | 0.7831 | 0.7416 | 0.7577 | BAAI/bge-m3 |

[STS-Benchmark](https://github.com/LocalDoc-Azerbaijan/STS-Benchmark)

## How to Use

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Load model and tokenizer

tokenizer = AutoTokenizer.from_pretrained("LocalDoc/LaBSE-small-AZ")

model = AutoModel.from_pretrained("LocalDoc/LaBSE-small-AZ")

# Prepare texts

texts = [

"Hello world",

"Salam dünya"

]

# Tokenize and generate embeddings

encoded = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")

with torch.no_grad():

embeddings = model(**encoded).pooler_output

# Compute similarity

similarity = torch.nn.functional.cosine_similarity(embeddings[0], embeddings[1], dim=0)

```

|

RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf

|

RichardErkhov

| 2024-11-01T16:11:57Z | 20 | 0 | null |

[

"gguf",

"arxiv:2101.00027",

"arxiv:2201.07311",

"endpoints_compatible",

"region:us"

] | null | 2024-11-01T15:31:15Z |

Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

pythia-2.8b-v0 - GGUF

- Model creator: https://huggingface.co/EleutherAI/

- Original model: https://huggingface.co/EleutherAI/pythia-2.8b-v0/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [pythia-2.8b-v0.Q2_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q2_K.gguf) | Q2_K | 1.01GB |

| [pythia-2.8b-v0.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q3_K_S.gguf) | Q3_K_S | 1.16GB |

| [pythia-2.8b-v0.Q3_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q3_K.gguf) | Q3_K | 1.38GB |

| [pythia-2.8b-v0.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q3_K_M.gguf) | Q3_K_M | 1.38GB |

| [pythia-2.8b-v0.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q3_K_L.gguf) | Q3_K_L | 1.49GB |

| [pythia-2.8b-v0.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.IQ4_XS.gguf) | IQ4_XS | 1.43GB |

| [pythia-2.8b-v0.Q4_0.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q4_0.gguf) | Q4_0 | 1.49GB |

| [pythia-2.8b-v0.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.IQ4_NL.gguf) | IQ4_NL | 1.5GB |

| [pythia-2.8b-v0.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q4_K_S.gguf) | Q4_K_S | 1.5GB |

| [pythia-2.8b-v0.Q4_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q4_K.gguf) | Q4_K | 1.66GB |

| [pythia-2.8b-v0.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q4_K_M.gguf) | Q4_K_M | 1.66GB |

| [pythia-2.8b-v0.Q4_1.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q4_1.gguf) | Q4_1 | 1.64GB |

| [pythia-2.8b-v0.Q5_0.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q5_0.gguf) | Q5_0 | 1.8GB |

| [pythia-2.8b-v0.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q5_K_S.gguf) | Q5_K_S | 1.8GB |

| [pythia-2.8b-v0.Q5_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q5_K.gguf) | Q5_K | 1.93GB |

| [pythia-2.8b-v0.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q5_K_M.gguf) | Q5_K_M | 1.93GB |

| [pythia-2.8b-v0.Q5_1.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q5_1.gguf) | Q5_1 | 1.95GB |

| [pythia-2.8b-v0.Q6_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q6_K.gguf) | Q6_K | 2.13GB |

| [pythia-2.8b-v0.Q8_0.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-v0-gguf/blob/main/pythia-2.8b-v0.Q8_0.gguf) | Q8_0 | 2.75GB |

Original model description:

---

language:

- en

tags:

- pytorch

- causal-lm

- pythia

- pythia_v0

license: apache-2.0

datasets:

- the_pile

---

The *Pythia Scaling Suite* is a collection of models developed to facilitate

interpretability research. It contains two sets of eight models of sizes

70M, 160M, 410M, 1B, 1.4B, 2.8B, 6.9B, and 12B. For each size, there are two

models: one trained on the Pile, and one trained on the Pile after the dataset

has been globally deduplicated. All 8 model sizes are trained on the exact

same data, in the exact same order. All Pythia models are available

[on Hugging Face](https://huggingface.co/models?other=pythia).

The Pythia model suite was deliberately designed to promote scientific

research on large language models, especially interpretability research.

Despite not centering downstream performance as a design goal, we find the

models <a href="#evaluations">match or exceed</a> the performance of

similar and same-sized models, such as those in the OPT and GPT-Neo suites.

Please note that all models in the *Pythia* suite were renamed in January

2023. For clarity, a <a href="#naming-convention-and-parameter-count">table

comparing the old and new names</a> is provided in this model card, together

with exact parameter counts.

## Pythia-2.8B

### Model Details

- Developed by: [EleutherAI](http://eleuther.ai)

- Model type: Transformer-based Language Model

- Language: English

- Learn more: [Pythia's GitHub repository](https://github.com/EleutherAI/pythia)

for training procedure, config files, and details on how to use.

- Library: [GPT-NeoX](https://github.com/EleutherAI/gpt-neox)

- License: Apache 2.0

- Contact: to ask questions about this model, join the [EleutherAI

Discord](https://discord.gg/zBGx3azzUn), and post them in `#release-discussion`.

Please read the existing *Pythia* documentation before asking about it in the

EleutherAI Discord. For general correspondence: [contact@eleuther.

ai](mailto:contact@eleuther.ai).

<figure>

| Pythia model | Non-Embedding Params | Layers | Model Dim | Heads | Batch Size | Learning Rate | Equivalent Models |

| -----------: | -------------------: | :----: | :-------: | :---: | :--------: | :-------------------: | :--------------------: |

| 70M | 18,915,328 | 6 | 512 | 8 | 2M | 1.0 x 10<sup>-3</sup> | — |

| 160M | 85,056,000 | 12 | 768 | 12 | 4M | 6.0 x 10<sup>-4</sup> | GPT-Neo 125M, OPT-125M |

| 410M | 302,311,424 | 24 | 1024 | 16 | 4M | 3.0 x 10<sup>-4</sup> | OPT-350M |

| 1.0B | 805,736,448 | 16 | 2048 | 8 | 2M | 3.0 x 10<sup>-4</sup> | — |

| 1.4B | 1,208,602,624 | 24 | 2048 | 16 | 4M | 2.0 x 10<sup>-4</sup> | GPT-Neo 1.3B, OPT-1.3B |

| 2.8B | 2,517,652,480 | 32 | 2560 | 32 | 2M | 1.6 x 10<sup>-4</sup> | GPT-Neo 2.7B, OPT-2.7B |

| 6.9B | 6,444,163,072 | 32 | 4096 | 32 | 2M | 1.2 x 10<sup>-4</sup> | OPT-6.7B |

| 12B | 11,327,027,200 | 36 | 5120 | 40 | 2M | 1.2 x 10<sup>-4</sup> | — |

<figcaption>Engineering details for the <i>Pythia Suite</i>. Deduped and

non-deduped models of a given size have the same hyperparameters. “Equivalent”

models have <b>exactly</b> the same architecture, and the same number of

non-embedding parameters.</figcaption>

</figure>

### Uses and Limitations

#### Intended Use

The primary intended use of Pythia is research on the behavior, functionality,

and limitations of large language models. This suite is intended to provide

a controlled setting for performing scientific experiments. To enable the

study of how language models change over the course of training, we provide

143 evenly spaced intermediate checkpoints per model. These checkpoints are

hosted on Hugging Face as branches. Note that branch `143000` corresponds

exactly to the model checkpoint on the `main` branch of each model.

You may also further fine-tune and adapt Pythia-2.8B for deployment,

as long as your use is in accordance with the Apache 2.0 license. Pythia

models work with the Hugging Face [Transformers

Library](https://huggingface.co/docs/transformers/index). If you decide to use

pre-trained Pythia-2.8B as a basis for your fine-tuned model, please

conduct your own risk and bias assessment.

#### Out-of-scope use

The Pythia Suite is **not** intended for deployment. It is not a in itself

a product and cannot be used for human-facing interactions.

Pythia models are English-language only, and are not suitable for translation

or generating text in other languages.

Pythia-2.8B has not been fine-tuned for downstream contexts in which

language models are commonly deployed, such as writing genre prose,

or commercial chatbots. This means Pythia-2.8B will **not**

respond to a given prompt the way a product like ChatGPT does. This is because,

unlike this model, ChatGPT was fine-tuned using methods such as Reinforcement

Learning from Human Feedback (RLHF) to better “understand” human instructions.

#### Limitations and biases

The core functionality of a large language model is to take a string of text

and predict the next token. The token deemed statistically most likely by the

model need not produce the most “accurate” text. Never rely on

Pythia-2.8B to produce factually accurate output.

This model was trained on [the Pile](https://pile.eleuther.ai/), a dataset

known to contain profanity and texts that are lewd or otherwise offensive.

See [Section 6 of the Pile paper](https://arxiv.org/abs/2101.00027) for a

discussion of documented biases with regards to gender, religion, and race.

Pythia-2.8B may produce socially unacceptable or undesirable text, *even if*

the prompt itself does not include anything explicitly offensive.

If you plan on using text generated through, for example, the Hosted Inference

API, we recommend having a human curate the outputs of this language model

before presenting it to other people. Please inform your audience that the

text was generated by Pythia-2.8B.

### Quickstart

Pythia models can be loaded and used via the following code, demonstrated here

for the third `pythia-70m-deduped` checkpoint:

```python

from transformers import GPTNeoXForCausalLM, AutoTokenizer

model = GPTNeoXForCausalLM.from_pretrained(

"EleutherAI/pythia-70m-deduped",

revision="step3000",

cache_dir="./pythia-70m-deduped/step3000",

)

tokenizer = AutoTokenizer.from_pretrained(

"EleutherAI/pythia-70m-deduped",

revision="step3000",

cache_dir="./pythia-70m-deduped/step3000",

)

inputs = tokenizer("Hello, I am", return_tensors="pt")

tokens = model.generate(**inputs)

tokenizer.decode(tokens[0])

```

Revision/branch `step143000` corresponds exactly to the model checkpoint on

the `main` branch of each model.<br>

For more information on how to use all Pythia models, see [documentation on

GitHub](https://github.com/EleutherAI/pythia).

### Training

#### Training data

[The Pile](https://pile.eleuther.ai/) is a 825GiB general-purpose dataset in

English. It was created by EleutherAI specifically for training large language

models. It contains texts from 22 diverse sources, roughly broken down into

five categories: academic writing (e.g. arXiv), internet (e.g. CommonCrawl),

prose (e.g. Project Gutenberg), dialogue (e.g. YouTube subtitles), and

miscellaneous (e.g. GitHub, Enron Emails). See [the Pile

paper](https://arxiv.org/abs/2101.00027) for a breakdown of all data sources,

methodology, and a discussion of ethical implications. Consult [the

datasheet](https://arxiv.org/abs/2201.07311) for more detailed documentation

about the Pile and its component datasets. The Pile can be downloaded from

the [official website](https://pile.eleuther.ai/), or from a [community

mirror](https://the-eye.eu/public/AI/pile/).<br>

The Pile was **not** deduplicated before being used to train Pythia-2.8B.

#### Training procedure

All models were trained on the exact same data, in the exact same order. Each

model saw 299,892,736,000 tokens during training, and 143 checkpoints for each

model are saved every 2,097,152,000 tokens, spaced evenly throughout training.

This corresponds to training for just under 1 epoch on the Pile for

non-deduplicated models, and about 1.5 epochs on the deduplicated Pile.

All *Pythia* models trained for the equivalent of 143000 steps at a batch size

of 2,097,152 tokens. Two batch sizes were used: 2M and 4M. Models with a batch

size of 4M tokens listed were originally trained for 71500 steps instead, with

checkpoints every 500 steps. The checkpoints on Hugging Face are renamed for

consistency with all 2M batch models, so `step1000` is the first checkpoint

for `pythia-1.4b` that was saved (corresponding to step 500 in training), and

`step1000` is likewise the first `pythia-6.9b` checkpoint that was saved

(corresponding to 1000 “actual” steps).<br>

See [GitHub](https://github.com/EleutherAI/pythia) for more details on training

procedure, including [how to reproduce

it](https://github.com/EleutherAI/pythia/blob/main/README.md#reproducing-training).<br>

Pythia uses the same tokenizer as [GPT-NeoX-

20B](https://huggingface.co/EleutherAI/gpt-neox-20b).

### Evaluations

All 16 *Pythia* models were evaluated using the [LM Evaluation

Harness](https://github.com/EleutherAI/lm-evaluation-harness). You can access

the results by model and step at `results/json/*` in the [GitHub

repository](https://github.com/EleutherAI/pythia/tree/main/results/json).<br>

Expand the sections below to see plots of evaluation results for all

Pythia and Pythia-deduped models compared with OPT and BLOOM.

<details>

<summary>LAMBADA – OpenAI</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/lambada_openai.png" style="width:auto"/>

</details>

<details>

<summary>Physical Interaction: Question Answering (PIQA)</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/piqa.png" style="width:auto"/>

</details>

<details>

<summary>WinoGrande</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/winogrande.png" style="width:auto"/>

</details>

<details>

<summary>AI2 Reasoning Challenge—Challenge Set</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/arc_challenge.png" style="width:auto"/>

</details>

<details>

<summary>SciQ</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/sciq.png" style="width:auto"/>

</details>

### Naming convention and parameter count

*Pythia* models were renamed in January 2023. It is possible that the old

naming convention still persists in some documentation by accident. The

current naming convention (70M, 160M, etc.) is based on total parameter count.

<figure style="width:32em">

| current Pythia suffix | old suffix | total params | non-embedding params |

| --------------------: | ---------: | -------------: | -------------------: |

| 70M | 19M | 70,426,624 | 18,915,328 |

| 160M | 125M | 162,322,944 | 85,056,000 |

| 410M | 350M | 405,334,016 | 302,311,424 |

| 1B | 800M | 1,011,781,632 | 805,736,448 |

| 1.4B | 1.3B | 1,414,647,808 | 1,208,602,624 |

| 2.8B | 2.7B | 2,775,208,960 | 2,517,652,480 |

| 6.9B | 6.7B | 6,857,302,016 | 6,444,163,072 |

| 12B | 13B | 11,846,072,320 | 11,327,027,200 |

</figure>

|

Haesteining/Phi3smallv6

|

Haesteining

| 2024-11-01T16:10:37Z | 39 | 0 |

transformers

|

[

"transformers",

"safetensors",

"phi3",

"text-generation",

"custom_code",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-11-01T16:05:14Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Daniyal100/adefkfe

|

Daniyal100

| 2024-11-01T16:10:36Z | 7 | 1 |

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2024-11-01T15:26:37Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: MARIAPIC

---

# Adefkfe

<Gallery />

Trained on Replicate using:

https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `MARIAPIC` to trigger the image generation.

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('Daniyal100/adefkfe', weight_name='lora.safetensors')

image = pipeline('your prompt').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

|

bb1070/barcelona_wf

|

bb1070

| 2024-11-01T16:08:40Z | 5 | 1 |

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2024-11-01T16:08:37Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: TOK

---

# Barcelona_Wf

<Gallery />

Trained on Replicate using:

https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `TOK` to trigger the image generation.

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('bb1070/barcelona_wf', weight_name='lora.safetensors')

image = pipeline('your prompt').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

|

PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-GGUF

|

PJMixers-Dev

| 2024-11-01T16:07:02Z | 15 | 0 | null |

[

"gguf",

"en",

"dataset:PJMixers-Dev/HailMary-v0.1-KTO",

"base_model:PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-3B",

"base_model:quantized:PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-3B",

"license:llama3.2",

"model-index",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-11-01T00:47:18Z |

---

license: llama3.2

language:

- en

datasets:

- PJMixers-Dev/HailMary-v0.1-KTO

base_model:

- PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-3B

model-index:

- name: PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: IFEval (0-Shot)

type: HuggingFaceH4/ifeval

args:

num_few_shot: 0

metrics:

- type: inst_level_strict_acc and prompt_level_strict_acc

value: 65.04

name: strict accuracy

source:

url: https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-details

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: BBH (3-Shot)

type: BBH

args:

num_few_shot: 3

metrics:

- type: acc_norm

value: 22.29

name: normalized accuracy

source:

url: https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-details

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MATH Lvl 5 (4-Shot)

type: hendrycks/competition_math

args:

num_few_shot: 4

metrics:

- type: exact_match

value: 11.78

name: exact match

source:

url: https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-details

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GPQA (0-shot)

type: Idavidrein/gpqa

args:

num_few_shot: 0

metrics:

- type: acc_norm

value: 2.91

name: acc_norm

source:

url: https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-details

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MuSR (0-shot)

type: TAUR-Lab/MuSR

args:

num_few_shot: 0

metrics:

- type: acc_norm

value: 4.69

name: acc_norm

source:

url: https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-details

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU-PRO (5-shot)

type: TIGER-Lab/MMLU-Pro

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 23.42

name: accuracy

source:

url: https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-HailMary-v0.1-KTO-3B-details

name: Open LLM Leaderboard

---

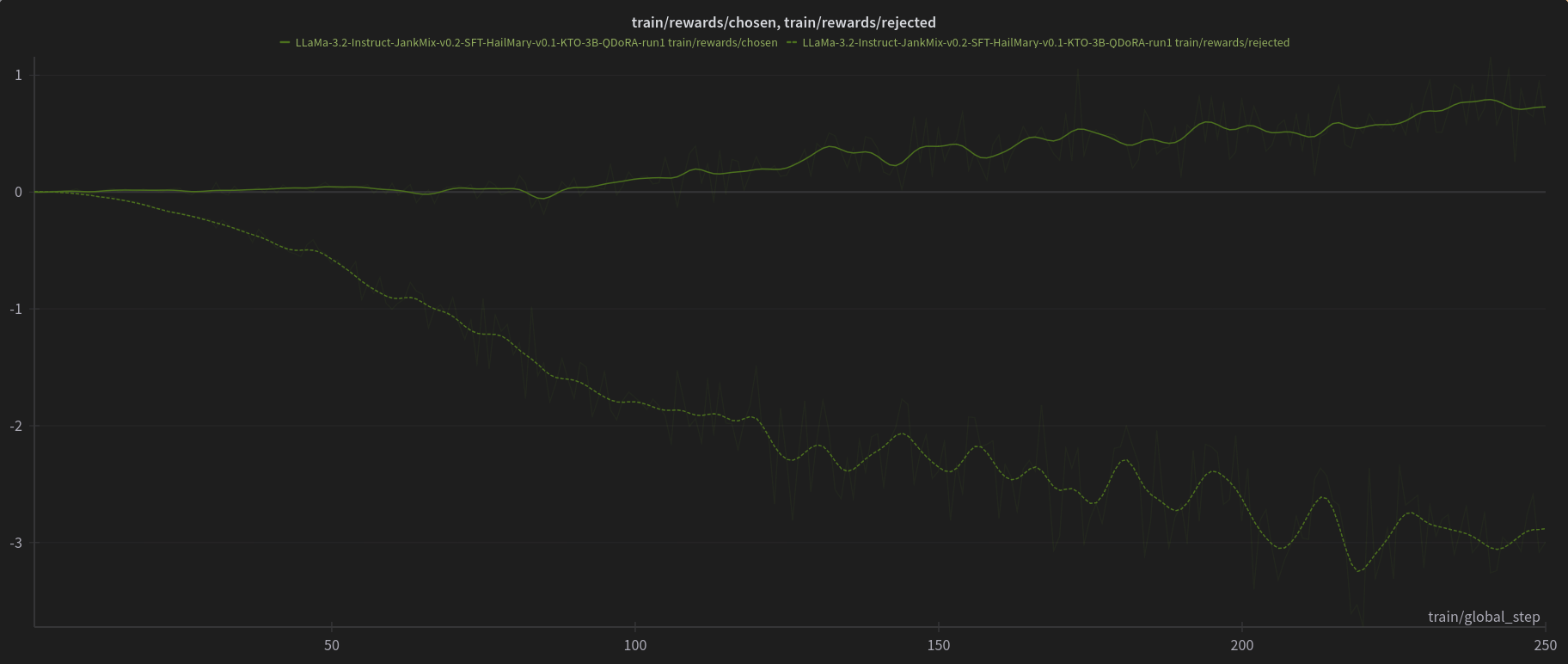

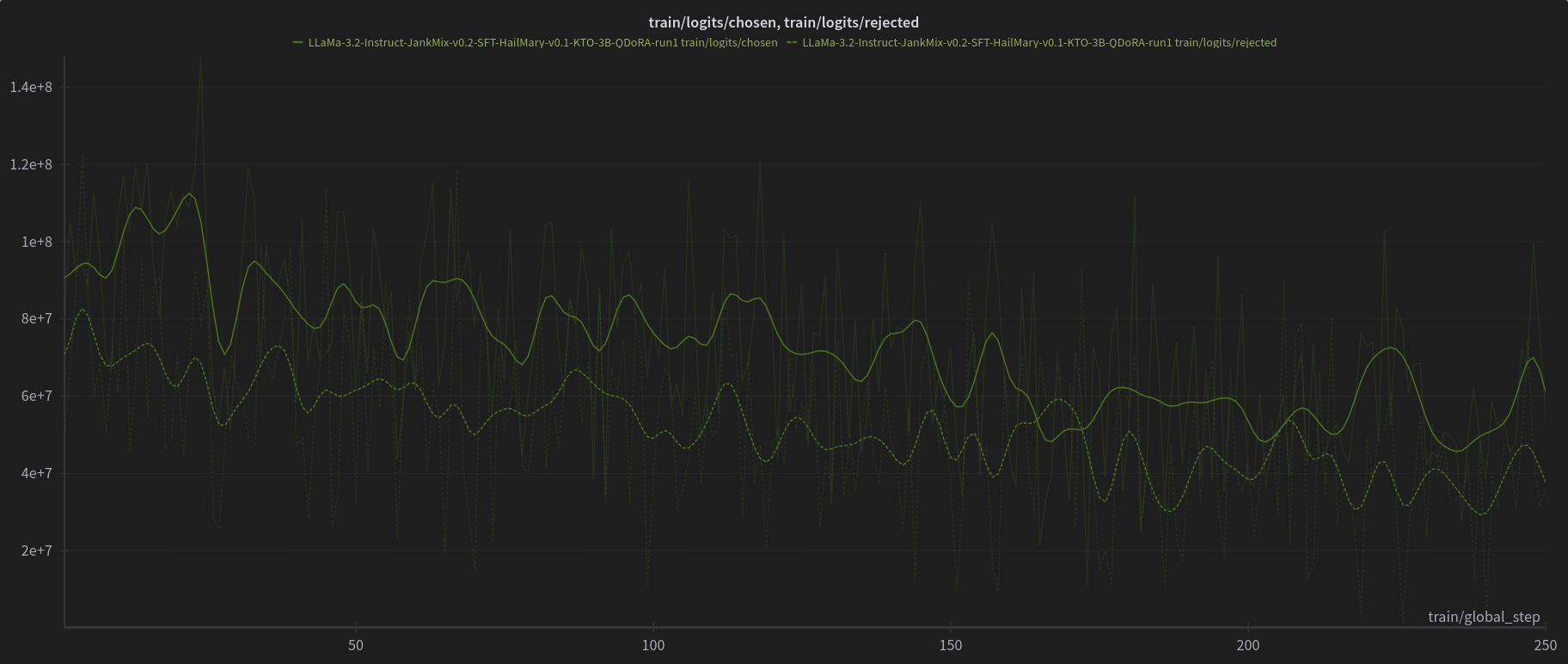

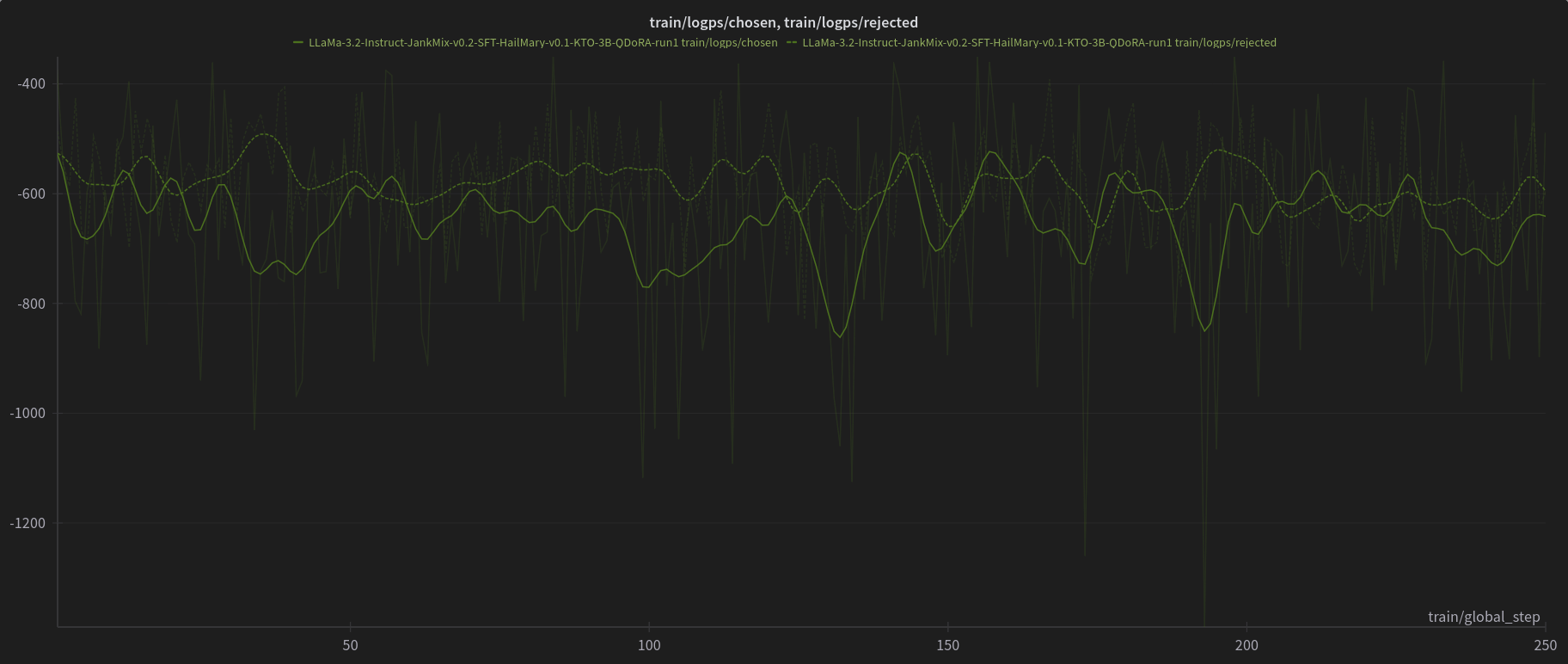

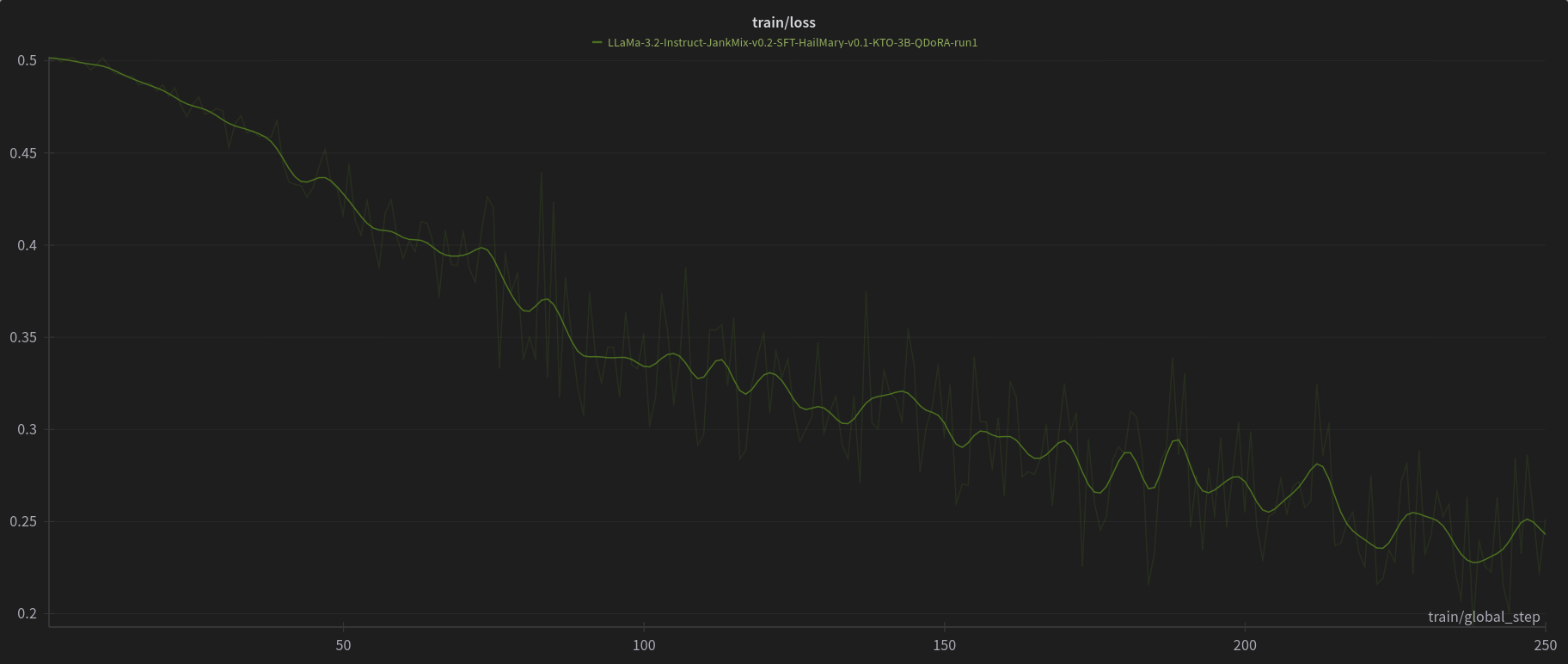

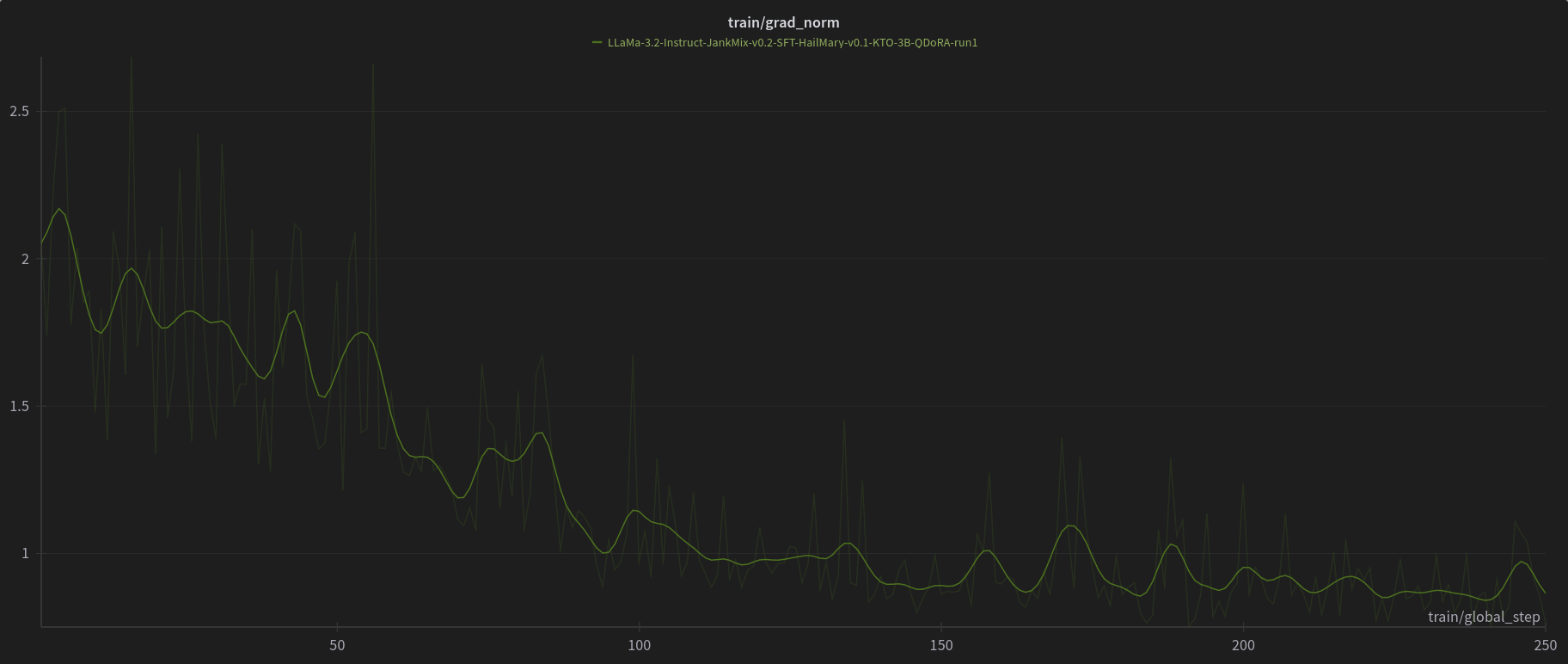

[PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-3B](https://huggingface.co/PJMixers-Dev/LLaMa-3.2-Instruct-JankMix-v0.2-SFT-3B) was further trained using KTO (with `apo_zero_unpaired` loss type) using a mix of instruct, RP, and storygen datasets. I created rejected samples by using the SFT with bad settings (including logit bias) for every model turn.

The model was only trained at `max_length=6144`, and is nowhere near a full epoch as it eventually crashed. So think of this like a test of a test.

# W&B Training Logs

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/PJMixers-Dev__LLaMa-3.2-Instruct-JankMix-v0.2-SFT-3B-details)

| Metric |Value|

|-------------------|----:|

|Avg. |21.69|

|IFEval (0-Shot) |65.04|

|BBH (3-Shot) |22.29|

|MATH Lvl 5 (4-Shot)|11.78|

|GPQA (0-shot) | 2.91|

|MuSR (0-shot) | 4.69|

|MMLU-PRO (5-shot) |23.42|

|

RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf

|

RichardErkhov

| 2024-11-01T16:04:43Z | 20 | 0 | null |

[

"gguf",

"arxiv:2304.01373",

"arxiv:2101.00027",

"arxiv:2201.07311",

"endpoints_compatible",

"region:us"

] | null | 2024-11-01T15:21:26Z |

Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

pythia-2.8b-deduped - GGUF

- Model creator: https://huggingface.co/EleutherAI/

- Original model: https://huggingface.co/EleutherAI/pythia-2.8b-deduped/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [pythia-2.8b-deduped.Q2_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q2_K.gguf) | Q2_K | 1.01GB |

| [pythia-2.8b-deduped.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q3_K_S.gguf) | Q3_K_S | 1.16GB |

| [pythia-2.8b-deduped.Q3_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q3_K.gguf) | Q3_K | 1.38GB |

| [pythia-2.8b-deduped.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q3_K_M.gguf) | Q3_K_M | 1.38GB |

| [pythia-2.8b-deduped.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q3_K_L.gguf) | Q3_K_L | 1.49GB |

| [pythia-2.8b-deduped.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.IQ4_XS.gguf) | IQ4_XS | 1.43GB |

| [pythia-2.8b-deduped.Q4_0.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q4_0.gguf) | Q4_0 | 1.49GB |

| [pythia-2.8b-deduped.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.IQ4_NL.gguf) | IQ4_NL | 1.5GB |

| [pythia-2.8b-deduped.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q4_K_S.gguf) | Q4_K_S | 1.5GB |

| [pythia-2.8b-deduped.Q4_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q4_K.gguf) | Q4_K | 1.66GB |

| [pythia-2.8b-deduped.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q4_K_M.gguf) | Q4_K_M | 1.66GB |

| [pythia-2.8b-deduped.Q4_1.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q4_1.gguf) | Q4_1 | 1.64GB |

| [pythia-2.8b-deduped.Q5_0.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q5_0.gguf) | Q5_0 | 1.8GB |

| [pythia-2.8b-deduped.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q5_K_S.gguf) | Q5_K_S | 1.8GB |

| [pythia-2.8b-deduped.Q5_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q5_K.gguf) | Q5_K | 1.93GB |

| [pythia-2.8b-deduped.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q5_K_M.gguf) | Q5_K_M | 1.93GB |

| [pythia-2.8b-deduped.Q5_1.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q5_1.gguf) | Q5_1 | 1.95GB |

| [pythia-2.8b-deduped.Q6_K.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q6_K.gguf) | Q6_K | 2.13GB |

| [pythia-2.8b-deduped.Q8_0.gguf](https://huggingface.co/RichardErkhov/EleutherAI_-_pythia-2.8b-deduped-gguf/blob/main/pythia-2.8b-deduped.Q8_0.gguf) | Q8_0 | 2.75GB |

Original model description:

---

language:

- en

tags:

- pytorch

- causal-lm

- pythia

license: apache-2.0

datasets:

- EleutherAI/the_pile_deduplicated

---

The *Pythia Scaling Suite* is a collection of models developed to facilitate

interpretability research [(see paper)](https://arxiv.org/pdf/2304.01373.pdf).

It contains two sets of eight models of sizes

70M, 160M, 410M, 1B, 1.4B, 2.8B, 6.9B, and 12B. For each size, there are two

models: one trained on the Pile, and one trained on the Pile after the dataset

has been globally deduplicated. All 8 model sizes are trained on the exact

same data, in the exact same order. We also provide 154 intermediate

checkpoints per model, hosted on Hugging Face as branches.

The Pythia model suite was designed to promote scientific

research on large language models, especially interpretability research.

Despite not centering downstream performance as a design goal, we find the

models <a href="#evaluations">match or exceed</a> the performance of

similar and same-sized models, such as those in the OPT and GPT-Neo suites.

<details>

<summary style="font-weight:600">Details on previous early release and naming convention.</summary>

Previously, we released an early version of the Pythia suite to the public.

However, we decided to retrain the model suite to address a few hyperparameter

discrepancies. This model card <a href="#changelog">lists the changes</a>;

see appendix B in the Pythia paper for further discussion. We found no

difference in benchmark performance between the two Pythia versions.

The old models are

[still available](https://huggingface.co/models?other=pythia_v0), but we

suggest the retrained suite if you are just starting to use Pythia.<br>

**This is the current release.**

Please note that all models in the *Pythia* suite were renamed in January

2023. For clarity, a <a href="#naming-convention-and-parameter-count">table

comparing the old and new names</a> is provided in this model card, together

with exact parameter counts.

</details>

<br>

# Pythia-2.8B-deduped

## Model Details

- Developed by: [EleutherAI](http://eleuther.ai)

- Model type: Transformer-based Language Model

- Language: English

- Learn more: [Pythia's GitHub repository](https://github.com/EleutherAI/pythia)

for training procedure, config files, and details on how to use.

[See paper](https://arxiv.org/pdf/2304.01373.pdf) for more evals and implementation

details.

- Library: [GPT-NeoX](https://github.com/EleutherAI/gpt-neox)

- License: Apache 2.0

- Contact: to ask questions about this model, join the [EleutherAI

Discord](https://discord.gg/zBGx3azzUn), and post them in `#release-discussion`.

Please read the existing *Pythia* documentation before asking about it in the

EleutherAI Discord. For general correspondence: [contact@eleuther.

ai](mailto:contact@eleuther.ai).

<figure>

| Pythia model | Non-Embedding Params | Layers | Model Dim | Heads | Batch Size | Learning Rate | Equivalent Models |

| -----------: | -------------------: | :----: | :-------: | :---: | :--------: | :-------------------: | :--------------------: |

| 70M | 18,915,328 | 6 | 512 | 8 | 2M | 1.0 x 10<sup>-3</sup> | — |

| 160M | 85,056,000 | 12 | 768 | 12 | 2M | 6.0 x 10<sup>-4</sup> | GPT-Neo 125M, OPT-125M |

| 410M | 302,311,424 | 24 | 1024 | 16 | 2M | 3.0 x 10<sup>-4</sup> | OPT-350M |

| 1.0B | 805,736,448 | 16 | 2048 | 8 | 2M | 3.0 x 10<sup>-4</sup> | — |

| 1.4B | 1,208,602,624 | 24 | 2048 | 16 | 2M | 2.0 x 10<sup>-4</sup> | GPT-Neo 1.3B, OPT-1.3B |

| 2.8B | 2,517,652,480 | 32 | 2560 | 32 | 2M | 1.6 x 10<sup>-4</sup> | GPT-Neo 2.7B, OPT-2.7B |

| 6.9B | 6,444,163,072 | 32 | 4096 | 32 | 2M | 1.2 x 10<sup>-4</sup> | OPT-6.7B |

| 12B | 11,327,027,200 | 36 | 5120 | 40 | 2M | 1.2 x 10<sup>-4</sup> | — |

<figcaption>Engineering details for the <i>Pythia Suite</i>. Deduped and

non-deduped models of a given size have the same hyperparameters. “Equivalent”

models have <b>exactly</b> the same architecture, and the same number of

non-embedding parameters.</figcaption>

</figure>

## Uses and Limitations

### Intended Use

The primary intended use of Pythia is research on the behavior, functionality,

and limitations of large language models. This suite is intended to provide

a controlled setting for performing scientific experiments. We also provide

154 checkpoints per model: initial `step0`, 10 log-spaced checkpoints

`step{1,2,4...512}`, and 143 evenly-spaced checkpoints from `step1000` to

`step143000`. These checkpoints are hosted on Hugging Face as branches. Note

that branch `143000` corresponds exactly to the model checkpoint on the `main`

branch of each model.

You may also further fine-tune and adapt Pythia-2.8B-deduped for deployment,

as long as your use is in accordance with the Apache 2.0 license. Pythia

models work with the Hugging Face [Transformers

Library](https://huggingface.co/docs/transformers/index). If you decide to use

pre-trained Pythia-2.8B-deduped as a basis for your fine-tuned model, please

conduct your own risk and bias assessment.

### Out-of-scope use

The Pythia Suite is **not** intended for deployment. It is not a in itself

a product and cannot be used for human-facing interactions. For example,

the model may generate harmful or offensive text. Please evaluate the risks

associated with your particular use case.

Pythia models are English-language only, and are not suitable for translation

or generating text in other languages.

Pythia-2.8B-deduped has not been fine-tuned for downstream contexts in which

language models are commonly deployed, such as writing genre prose,

or commercial chatbots. This means Pythia-2.8B-deduped will **not**

respond to a given prompt the way a product like ChatGPT does. This is because,

unlike this model, ChatGPT was fine-tuned using methods such as Reinforcement

Learning from Human Feedback (RLHF) to better “follow” human instructions.

### Limitations and biases

The core functionality of a large language model is to take a string of text

and predict the next token. The token used by the model need not produce the

most “accurate” text. Never rely on Pythia-2.8B-deduped to produce factually accurate

output.

This model was trained on [the Pile](https://pile.eleuther.ai/), a dataset

known to contain profanity and texts that are lewd or otherwise offensive.

See [Section 6 of the Pile paper](https://arxiv.org/abs/2101.00027) for a

discussion of documented biases with regards to gender, religion, and race.

Pythia-2.8B-deduped may produce socially unacceptable or undesirable text, *even if*

the prompt itself does not include anything explicitly offensive.

If you plan on using text generated through, for example, the Hosted Inference

API, we recommend having a human curate the outputs of this language model

before presenting it to other people. Please inform your audience that the

text was generated by Pythia-2.8B-deduped.

### Quickstart

Pythia models can be loaded and used via the following code, demonstrated here

for the third `pythia-70m-deduped` checkpoint:

```python

from transformers import GPTNeoXForCausalLM, AutoTokenizer

model = GPTNeoXForCausalLM.from_pretrained(

"EleutherAI/pythia-70m-deduped",

revision="step3000",

cache_dir="./pythia-70m-deduped/step3000",

)

tokenizer = AutoTokenizer.from_pretrained(

"EleutherAI/pythia-70m-deduped",

revision="step3000",

cache_dir="./pythia-70m-deduped/step3000",

)

inputs = tokenizer("Hello, I am", return_tensors="pt")

tokens = model.generate(**inputs)

tokenizer.decode(tokens[0])

```

Revision/branch `step143000` corresponds exactly to the model checkpoint on

the `main` branch of each model.<br>

For more information on how to use all Pythia models, see [documentation on

GitHub](https://github.com/EleutherAI/pythia).

## Training

### Training data

Pythia-2.8B-deduped was trained on the Pile **after the dataset has been globally

deduplicated**.<br>

[The Pile](https://pile.eleuther.ai/) is a 825GiB general-purpose dataset in

English. It was created by EleutherAI specifically for training large language

models. It contains texts from 22 diverse sources, roughly broken down into

five categories: academic writing (e.g. arXiv), internet (e.g. CommonCrawl),

prose (e.g. Project Gutenberg), dialogue (e.g. YouTube subtitles), and

miscellaneous (e.g. GitHub, Enron Emails). See [the Pile

paper](https://arxiv.org/abs/2101.00027) for a breakdown of all data sources,

methodology, and a discussion of ethical implications. Consult [the

datasheet](https://arxiv.org/abs/2201.07311) for more detailed documentation

about the Pile and its component datasets. The Pile can be downloaded from

the [official website](https://pile.eleuther.ai/), or from a [community

mirror](https://the-eye.eu/public/AI/pile/).

### Training procedure

All models were trained on the exact same data, in the exact same order. Each

model saw 299,892,736,000 tokens during training, and 143 checkpoints for each

model are saved every 2,097,152,000 tokens, spaced evenly throughout training,

from `step1000` to `step143000` (which is the same as `main`). In addition, we

also provide frequent early checkpoints: `step0` and `step{1,2,4...512}`.

This corresponds to training for just under 1 epoch on the Pile for

non-deduplicated models, and about 1.5 epochs on the deduplicated Pile.

All *Pythia* models trained for 143000 steps at a batch size

of 2M (2,097,152 tokens).<br>

See [GitHub](https://github.com/EleutherAI/pythia) for more details on training

procedure, including [how to reproduce

it](https://github.com/EleutherAI/pythia/blob/main/README.md#reproducing-training).<br>

Pythia uses the same tokenizer as [GPT-NeoX-

20B](https://huggingface.co/EleutherAI/gpt-neox-20b).

## Evaluations

All 16 *Pythia* models were evaluated using the [LM Evaluation

Harness](https://github.com/EleutherAI/lm-evaluation-harness). You can access

the results by model and step at `results/json/*` in the [GitHub

repository](https://github.com/EleutherAI/pythia/tree/main/results/json/).<br>

Expand the sections below to see plots of evaluation results for all

Pythia and Pythia-deduped models compared with OPT and BLOOM.

<details>

<summary>LAMBADA – OpenAI</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/lambada_openai_v1.png" style="width:auto"/>

</details>

<details>

<summary>Physical Interaction: Question Answering (PIQA)</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/piqa_v1.png" style="width:auto"/>

</details>

<details>

<summary>WinoGrande</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/winogrande_v1.png" style="width:auto"/>

</details>

<details>

<summary>AI2 Reasoning Challenge—Easy Set</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/arc_easy_v1.png" style="width:auto"/>

</details>

<details>

<summary>SciQ</summary>

<img src="/EleutherAI/pythia-12b/resolve/main/eval_plots/sciq_v1.png" style="width:auto"/>

</details>

## Changelog

This section compares differences between previously released

[Pythia v0](https://huggingface.co/models?other=pythia_v0) and the current

models. See Appendix B of the Pythia paper for further discussion of these

changes and the motivation behind them. We found that retraining Pythia had no

impact on benchmark performance.

- All model sizes are now trained with uniform batch size of 2M tokens.

Previously, the models of size 160M, 410M, and 1.4B parameters were trained

with batch sizes of 4M tokens.

- We added checkpoints at initialization (step 0) and steps {1,2,4,8,16,32,64,

128,256,512} in addition to every 1000 training steps.

- Flash Attention was used in the new retrained suite.

- We remedied a minor inconsistency that existed in the original suite: all

models of size 2.8B parameters or smaller had a learning rate (LR) schedule

which decayed to a minimum LR of 10% the starting LR rate, but the 6.9B and

12B models all used an LR schedule which decayed to a minimum LR of 0. In

the redone training runs, we rectified this inconsistency: all models now were

trained with LR decaying to a minimum of 0.1× their maximum LR.

### Naming convention and parameter count

*Pythia* models were renamed in January 2023. It is possible that the old

naming convention still persists in some documentation by accident. The

current naming convention (70M, 160M, etc.) is based on total parameter count.

<figure style="width:32em">

| current Pythia suffix | old suffix | total params | non-embedding params |

| --------------------: | ---------: | -------------: | -------------------: |

| 70M | 19M | 70,426,624 | 18,915,328 |

| 160M | 125M | 162,322,944 | 85,056,000 |

| 410M | 350M | 405,334,016 | 302,311,424 |

| 1B | 800M | 1,011,781,632 | 805,736,448 |

| 1.4B | 1.3B | 1,414,647,808 | 1,208,602,624 |

| 2.8B | 2.7B | 2,775,208,960 | 2,517,652,480 |

| 6.9B | 6.7B | 6,857,302,016 | 6,444,163,072 |

| 12B | 13B | 11,846,072,320 | 11,327,027,200 |

</figure>

|

RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf

|

RichardErkhov

| 2024-11-01T15:56:13Z | 450 | 0 | null |

[

"gguf",

"arxiv:2410.17215",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-11-01T15:37:27Z |

Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Pretrain-Qwen-1.2B - GGUF

- Model creator: https://huggingface.co/MiniLLM/

- Original model: https://huggingface.co/MiniLLM/Pretrain-Qwen-1.2B/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [Pretrain-Qwen-1.2B.Q2_K.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q2_K.gguf) | Q2_K | 0.51GB |

| [Pretrain-Qwen-1.2B.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q3_K_S.gguf) | Q3_K_S | 0.57GB |

| [Pretrain-Qwen-1.2B.Q3_K.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q3_K.gguf) | Q3_K | 0.61GB |

| [Pretrain-Qwen-1.2B.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q3_K_M.gguf) | Q3_K_M | 0.61GB |

| [Pretrain-Qwen-1.2B.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q3_K_L.gguf) | Q3_K_L | 0.63GB |

| [Pretrain-Qwen-1.2B.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.IQ4_XS.gguf) | IQ4_XS | 0.65GB |

| [Pretrain-Qwen-1.2B.Q4_0.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q4_0.gguf) | Q4_0 | 0.67GB |

| [Pretrain-Qwen-1.2B.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.IQ4_NL.gguf) | IQ4_NL | 0.67GB |

| [Pretrain-Qwen-1.2B.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q4_K_S.gguf) | Q4_K_S | 0.69GB |

| [Pretrain-Qwen-1.2B.Q4_K.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q4_K.gguf) | Q4_K | 0.72GB |

| [Pretrain-Qwen-1.2B.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q4_K_M.gguf) | Q4_K_M | 0.72GB |

| [Pretrain-Qwen-1.2B.Q4_1.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q4_1.gguf) | Q4_1 | 0.72GB |

| [Pretrain-Qwen-1.2B.Q5_0.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q5_0.gguf) | Q5_0 | 0.78GB |

| [Pretrain-Qwen-1.2B.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q5_K_S.gguf) | Q5_K_S | 0.79GB |

| [Pretrain-Qwen-1.2B.Q5_K.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q5_K.gguf) | Q5_K | 0.81GB |

| [Pretrain-Qwen-1.2B.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q5_K_M.gguf) | Q5_K_M | 0.81GB |

| [Pretrain-Qwen-1.2B.Q5_1.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q5_1.gguf) | Q5_1 | 0.83GB |

| [Pretrain-Qwen-1.2B.Q6_K.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q6_K.gguf) | Q6_K | 0.93GB |

| [Pretrain-Qwen-1.2B.Q8_0.gguf](https://huggingface.co/RichardErkhov/MiniLLM_-_Pretrain-Qwen-1.2B-gguf/blob/main/Pretrain-Qwen-1.2B.Q8_0.gguf) | Q8_0 | 1.15GB |

Original model description:

---

library_name: transformers

license: apache-2.0

datasets:

- monology/pile-uncopyrighted

- MiniLLM/pile-tokenized

language:

- en

metrics:

- accuracy

pipeline_tag: text-generation

---

# Pretrain-Qwen-1.2B

[paper](https://arxiv.org/abs/2410.17215) | [code](https://github.com/thu-coai/MiniPLM)

**Pretrain-Qwen-1.2B** is a 1.2B model with Qwen achitecture conventionally pre-trained from scratch on [the Pile](https://huggingface.co/datasets/monology/pile-uncopyrighted) for 50B tokens.

We also open-source the tokenized [pre-training corpus](https://huggingface.co/datasets/MiniLLM/pile-tokenized) for reproducibility.

**It is used as the baseline for [MiniLLM-Qwen-1.2B](https://huggingface.co/MiniLLM/MiniPLM-Qwen-1.2B)**

## Evaluation

MiniPLM models achieves better performance given the same computation and scales well across model sizes:

<p align='left'>

<img src="https://cdn-uploads.huggingface.co/production/uploads/624ac662102fcdff87be51b9/EOYzajQcwQFT5PobqL3j0.png" width="1000">

</p>

## Other Baselines

+ [VanillaKD](https://huggingface.co/MiniLLM/VanillaKD-Pretrain-Qwen-1.2B)

## Citation

```bibtext

@article{miniplm,

title={MiniPLM: Knowledge Distillation for Pre-Training Language Models},

author={Yuxian Gu and Hao Zhou and Fandong Meng and Jie Zhou and Minlie Huang},

journal={arXiv preprint arXiv:2410.17215},

year={2024}

}

```

|

mlfoundations-dev/OH_DCFT_V3_wo_platypus

|

mlfoundations-dev

| 2024-11-01T15:54:44Z | 6 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"llama-factory",

"full",

"generated_from_trainer",

"conversational",

"base_model:meta-llama/Llama-3.1-8B",

"base_model:finetune:meta-llama/Llama-3.1-8B",

"license:llama3.1",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-10-30T21:32:27Z |

---

library_name: transformers

license: llama3.1

base_model: meta-llama/Llama-3.1-8B

tags:

- llama-factory

- full

- generated_from_trainer

model-index:

- name: OH_DCFT_V3_wo_platypus

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# OH_DCFT_V3_wo_platypus

This model is a fine-tuned version of [meta-llama/Llama-3.1-8B](https://huggingface.co/meta-llama/Llama-3.1-8B) on the mlfoundations-dev/OH_DCFT_V3_wo_platypus dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6428

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 16

- gradient_accumulation_steps: 4

- total_train_batch_size: 512

- total_eval_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- lr_scheduler_warmup_ratio: 0.1

- lr_scheduler_warmup_steps: 1738

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 0.6557 | 0.9988 | 410 | 0.6519 |

| 0.6082 | 2.0 | 821 | 0.6420 |

| 0.5706 | 2.9963 | 1230 | 0.6428 |

### Framework versions

- Transformers 4.45.2

- Pytorch 2.3.0

- Datasets 2.21.0

- Tokenizers 0.20.1

|

RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf

|

RichardErkhov

| 2024-11-01T15:47:02Z | 9 | 0 | null |

[

"gguf",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-11-01T15:06:22Z |

Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

vinallama-2.7b-chat-orpo-v2 - GGUF

- Model creator: https://huggingface.co/d-llm/

- Original model: https://huggingface.co/d-llm/vinallama-2.7b-chat-orpo-v2/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [vinallama-2.7b-chat-orpo-v2.Q2_K.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q2_K.gguf) | Q2_K | 1.0GB |

| [vinallama-2.7b-chat-orpo-v2.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q3_K_S.gguf) | Q3_K_S | 1.16GB |

| [vinallama-2.7b-chat-orpo-v2.Q3_K.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q3_K.gguf) | Q3_K | 1.28GB |

| [vinallama-2.7b-chat-orpo-v2.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q3_K_M.gguf) | Q3_K_M | 1.28GB |

| [vinallama-2.7b-chat-orpo-v2.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q3_K_L.gguf) | Q3_K_L | 1.39GB |

| [vinallama-2.7b-chat-orpo-v2.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.IQ4_XS.gguf) | IQ4_XS | 1.42GB |

| [vinallama-2.7b-chat-orpo-v2.Q4_0.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q4_0.gguf) | Q4_0 | 1.48GB |

| [vinallama-2.7b-chat-orpo-v2.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.IQ4_NL.gguf) | IQ4_NL | 1.49GB |

| [vinallama-2.7b-chat-orpo-v2.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q4_K_S.gguf) | Q4_K_S | 1.49GB |

| [vinallama-2.7b-chat-orpo-v2.Q4_K.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q4_K.gguf) | Q4_K | 1.58GB |

| [vinallama-2.7b-chat-orpo-v2.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q4_K_M.gguf) | Q4_K_M | 1.58GB |

| [vinallama-2.7b-chat-orpo-v2.Q4_1.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q4_1.gguf) | Q4_1 | 1.64GB |

| [vinallama-2.7b-chat-orpo-v2.Q5_0.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q5_0.gguf) | Q5_0 | 1.79GB |

| [vinallama-2.7b-chat-orpo-v2.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q5_K_S.gguf) | Q5_K_S | 1.79GB |

| [vinallama-2.7b-chat-orpo-v2.Q5_K.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q5_K.gguf) | Q5_K | 1.84GB |

| [vinallama-2.7b-chat-orpo-v2.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q5_K_M.gguf) | Q5_K_M | 1.84GB |

| [vinallama-2.7b-chat-orpo-v2.Q5_1.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q5_1.gguf) | Q5_1 | 1.95GB |

| [vinallama-2.7b-chat-orpo-v2.Q6_K.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q6_K.gguf) | Q6_K | 2.12GB |

| [vinallama-2.7b-chat-orpo-v2.Q8_0.gguf](https://huggingface.co/RichardErkhov/d-llm_-_vinallama-2.7b-chat-orpo-v2-gguf/blob/main/vinallama-2.7b-chat-orpo-v2.Q8_0.gguf) | Q8_0 | 2.75GB |

Original model description:

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]