modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-03 06:27:42

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 535

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-03 06:27:02

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

FlyingFishzzz/model_out_mesh

|

FlyingFishzzz

| 2023-11-22T08:29:03Z | 1 | 0 |

diffusers

|

[

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"controlnet",

"base_model:stabilityai/stable-diffusion-2-1-base",

"base_model:adapter:stabilityai/stable-diffusion-2-1-base",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-11-20T18:45:07Z |

---

license: creativeml-openrail-m

base_model: stabilityai/stable-diffusion-2-1-base

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- controlnet

inference: true

---

# controlnet-FlyingFishzzz/model_out_mesh

These are controlnet weights trained on stabilityai/stable-diffusion-2-1-base with new type of conditioning.

You can find some example images below.

prompt: High-quality close-up dslr photo of man wearing a hat with trees in the background

prompt: Girl smiling, professional dslr photograph, dark background, studio lights, high quality

prompt: Portrait of a clown face, oil on canvas, bittersweet expression

prompt: an old white European woman with a necklace in the snow

|

LarryAIDraw/illu-origin

|

LarryAIDraw

| 2023-11-22T08:12:25Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-11-22T08:05:49Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/206660/illustrious-azur-lane-origin-skinand

|

LarryAIDraw/chartreux

|

LarryAIDraw

| 2023-11-22T08:12:01Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-11-22T08:05:00Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/202917/chartreux-westia-boarding-school-juliet

|

asas-ai/noon_7B_4bit_qlora_xlsum

|

asas-ai

| 2023-11-22T08:11:24Z | 0 | 0 | null |

[

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:asas-ai/noon-7B_8bit",

"base_model:finetune:asas-ai/noon-7B_8bit",

"region:us"

] | null | 2023-11-22T08:10:36Z |

---

base_model: asas-ai/noon-7B_8bit

tags:

- generated_from_trainer

model-index:

- name: noon_7B_4bit_qlora_xlsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# noon_7B_4bit_qlora_xlsum

This model is a fine-tuned version of [asas-ai/noon-7B_8bit](https://huggingface.co/asas-ai/noon-7B_8bit) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- lr_scheduler_warmup_ratio: 0.03

- training_steps: 1950

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu121

- Datasets 2.15.0

- Tokenizers 0.15.0

|

LarryAIDraw/furina-focalors-v2e1x

|

LarryAIDraw

| 2023-11-22T08:11:04Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-11-22T08:03:11Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/197220/genshin-impact-furina-and-focalors-or-and

|

LarryAIDraw/yui_yuigahama_v2

|

LarryAIDraw

| 2023-11-22T08:10:47Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-11-22T08:02:48Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/126473/yui-yuigahama-or-my-teen-romantic-comedy-is-wrong-as-i-expected-oregairu

|

LoneStriker/Tess-M-v1.1-6.0bpw-h6-exl2

|

LoneStriker

| 2023-11-22T08:10:47Z | 6 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T07:52:59Z |

---

license: other

license_name: yi-34b

license_link: https://huggingface.co/01-ai/Yi-34B/blob/main/LICENSE

---

# Tess

Tess, short for Tessoro/Tessoso, is a general purpose Large Language Model series. Tess-M-v1.1 was trained on the Yi-34B-200K base.

# Prompt Format:

```

SYSTEM: <ANY SYSTEM CONTEXT>

USER:

ASSISTANT:

```

|

LarryAIDraw/RuanMei-08

|

LarryAIDraw

| 2023-11-22T08:10:20Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-11-22T08:01:46Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/207086/ruan-mei-honkai-star-rail-lora

|

vdo/stable-video-diffusion-img2vid-xt

|

vdo

| 2023-11-22T08:09:26Z | 0 | 3 | null |

[

"region:us"

] | null | 2023-11-22T07:54:24Z |

---

# For reference on model card metadata, see the spec: https://github.com/huggingface/hub-docs/blob/main/modelcard.md?plain=1

# Doc / guide: https://huggingface.co/docs/hub/model-cards

{}

---

# Stable Video Diffusion Image-to-Video Model Card

<!-- Provide a quick summary of what the model is/does. -->

Stable Video Diffusion (SVD) Image-to-Video is a diffusion model that takes in a still image as a conditioning frame, and generates a video from it.

## Model Details

### Model Description

(SVD) Image-to-Video is a latent diffusion model trained to generate short video clips from an image conditioning.

This model was trained to generate 25 frames at resolution 576x1024 given a context frame of the same size, finetuned from [SVD Image-to-Video [14 frames]](https://huggingface.co/stabilityai/stable-video-diffusion-img2vid).

We also finetune the widely used [f8-decoder](https://huggingface.co/docs/diffusers/api/models/autoencoderkl#loading-from-the-original-format) for temporal consistency.

For convenience, we additionally provide the model with the

standard frame-wise decoder [here](https://huggingface.co/stabilityai/stable-video-diffusion-img2vid-xt/blob/main/svd_xt_image_decoder.safetensors).

- **Developed by:** Stability AI

- **Funded by:** Stability AI

- **Model type:** Generative image-to-video model

- **Finetuned from model:** SVD Image-to-Video [14 frames]

### Model Sources

For research purposes, we recommend our `generative-models` Github repository (https://github.com/Stability-AI/generative-models),

which implements the most popular diffusion frameworks (both training and inference).

- **Repository:** https://github.com/Stability-AI/generative-models

- **Paper:** https://stability.ai/research/stable-video-diffusion-scaling-latent-video-diffusion-models-to-large-datasets

## Evaluation

The chart above evaluates user preference for SVD-Image-to-Video over [GEN-2](https://research.runwayml.com/gen2) and [PikaLabs](https://www.pika.art/).

SVD-Image-to-Video is preferred by human voters in terms of video quality. For details on the user study, we refer to the [research paper](https://stability.ai/research/stable-video-diffusion-scaling-latent-video-diffusion-models-to-large-datasets)

## Uses

### Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Research on generative models.

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

Excluded uses are described below.

### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events,

and therefore using the model to generate such content is out-of-scope for the abilities of this model.

The model should not be used in any way that violates Stability AI's [Acceptable Use Policy](https://stability.ai/use-policy).

## Limitations and Bias

### Limitations

- The generated videos are rather short (<= 4sec), and the model does not achieve perfect photorealism.

- The model may generate videos without motion, or very slow camera pans.

- The model cannot be controlled through text.

- The model cannot render legible text.

- Faces and people in general may not be generated properly.

- The autoencoding part of the model is lossy.

### Recommendations

The model is intended for research purposes only.

## How to Get Started with the Model

Check out https://github.com/Stability-AI/generative-models

|

phuong-tk-nguyen/vit-base-patch16-224-finetuned-cifar10

|

phuong-tk-nguyen

| 2023-11-22T07:58:16Z | 7 | 0 |

transformers

|

[

"transformers",

"safetensors",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:google/vit-base-patch16-224",

"base_model:finetune:google/vit-base-patch16-224",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-11-22T06:46:30Z |

---

license: apache-2.0

base_model: google/vit-base-patch16-224

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: vit-base-patch16-224-finetuned-cifar10

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9844

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-patch16-224-finetuned-cifar10

This model is a fine-tuned version of [google/vit-base-patch16-224](https://huggingface.co/google/vit-base-patch16-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0564

- Accuracy: 0.9844

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.4597 | 0.03 | 10 | 2.2902 | 0.1662 |

| 2.1429 | 0.06 | 20 | 1.7855 | 0.5086 |

| 1.6466 | 0.09 | 30 | 1.0829 | 0.8484 |

| 0.9962 | 0.11 | 40 | 0.4978 | 0.9288 |

| 0.6127 | 0.14 | 50 | 0.2717 | 0.9508 |

| 0.4544 | 0.17 | 60 | 0.1942 | 0.9588 |

| 0.4352 | 0.2 | 70 | 0.1504 | 0.9672 |

| 0.374 | 0.23 | 80 | 0.1221 | 0.9718 |

| 0.3261 | 0.26 | 90 | 0.1057 | 0.9772 |

| 0.34 | 0.28 | 100 | 0.0943 | 0.979 |

| 0.284 | 0.31 | 110 | 0.0958 | 0.9754 |

| 0.3151 | 0.34 | 120 | 0.0866 | 0.9776 |

| 0.3004 | 0.37 | 130 | 0.0838 | 0.9788 |

| 0.3334 | 0.4 | 140 | 0.0798 | 0.9806 |

| 0.3018 | 0.43 | 150 | 0.0800 | 0.9778 |

| 0.2957 | 0.45 | 160 | 0.0749 | 0.9808 |

| 0.2952 | 0.48 | 170 | 0.0704 | 0.9814 |

| 0.3084 | 0.51 | 180 | 0.0720 | 0.9812 |

| 0.3015 | 0.54 | 190 | 0.0708 | 0.983 |

| 0.2763 | 0.57 | 200 | 0.0672 | 0.9832 |

| 0.3376 | 0.6 | 210 | 0.0700 | 0.982 |

| 0.285 | 0.63 | 220 | 0.0657 | 0.9828 |

| 0.2857 | 0.65 | 230 | 0.0629 | 0.9836 |

| 0.2644 | 0.68 | 240 | 0.0612 | 0.9842 |

| 0.2461 | 0.71 | 250 | 0.0601 | 0.9836 |

| 0.2802 | 0.74 | 260 | 0.0589 | 0.9842 |

| 0.2481 | 0.77 | 270 | 0.0604 | 0.9838 |

| 0.2641 | 0.8 | 280 | 0.0591 | 0.9846 |

| 0.2737 | 0.82 | 290 | 0.0581 | 0.9842 |

| 0.2391 | 0.85 | 300 | 0.0565 | 0.9852 |

| 0.2283 | 0.88 | 310 | 0.0558 | 0.986 |

| 0.2626 | 0.91 | 320 | 0.0559 | 0.9852 |

| 0.2325 | 0.94 | 330 | 0.0563 | 0.9846 |

| 0.2459 | 0.97 | 340 | 0.0565 | 0.9846 |

| 0.2474 | 1.0 | 350 | 0.0564 | 0.9844 |

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.1

- Datasets 2.14.6

- Tokenizers 0.14.1

|

User1115/whisper-large-v2-test-singleWord-small-50steps

|

User1115

| 2023-11-22T07:58:10Z | 2 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:openai/whisper-large-v2",

"base_model:adapter:openai/whisper-large-v2",

"region:us"

] | null | 2023-11-22T07:51:59Z |

---

library_name: peft

base_model: openai/whisper-large-v2

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.3.dev0

|

LoneStriker/Tess-M-v1.1-3.0bpw-h6-exl2

|

LoneStriker

| 2023-11-22T07:57:20Z | 6 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T07:47:13Z |

---

license: other

license_name: yi-34b

license_link: https://huggingface.co/01-ai/Yi-34B/blob/main/LICENSE

---

# Tess

Tess, short for Tessoro/Tessoso, is a general purpose Large Language Model series. Tess-M-v1.1 was trained on the Yi-34B-200K base.

# Prompt Format:

```

SYSTEM: <ANY SYSTEM CONTEXT>

USER:

ASSISTANT:

```

|

CyberPeace-Institute/SecureBERT-NER

|

CyberPeace-Institute

| 2023-11-22T07:53:38Z | 316 | 14 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"roberta",

"token-classification",

"en",

"arxiv:2204.02685",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-06-23T11:12:52Z |

---

language:

- en

library_name: transformers

pipeline_tag: token-classification

widget:

- text: >-

Microsoft Threat Intelligence analysts assess with high confidence that the

malware, which we call KingsPawn, is developed by DEV-0196 and therefore

strongly linked to QuaDream. We assess with medium confidence that the

mobile malware we associate with DEV-0196 is part of the system publicly

discussed as REIGN.

example_title: example

license: mit

---

# Named Entity Recognition for Cybersecurity

This model has been finetuned with SecureBERT (https://arxiv.org/abs/2204.02685)

on the APTNER dataset (https://ieeexplore.ieee.org/document/9776031)

## NER Classes

|

joshhu1123/DPO-mistral-no1

|

joshhu1123

| 2023-11-22T07:35:49Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:mistralai/Mistral-7B-v0.1",

"base_model:adapter:mistralai/Mistral-7B-v0.1",

"region:us"

] | null | 2023-11-22T07:35:46Z |

---

library_name: peft

base_model: mistralai/Mistral-7B-v0.1

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.3.dev0

|

uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b-dare-0.85

|

uukuguy

| 2023-11-22T07:30:53Z | 1,425 | 1 |

transformers

|

[

"transformers",

"pytorch",

"mistral",

"text-generation",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T07:25:54Z |

---

license: llama2

---

Experiment for DARE(Drop and REscale), most of the delta parameters can be directly set to zeros without affecting the capabilities of SFT LMs and larger models can tolerate a higher proportion of discarded parameters.

weight_mask_rate: 0.85 / use_weight_rescale: True / mask_stratery: random / scaling_coefficient: 1.0

| Model | Average | ARC | HellaSwag | MMLU | TruthfulQA | Winogrande | GSM8K | DROP |

| ------ | ------ | ------ | ------ | ------ | ------ | ------ | ------ | ------ |

| Intel/neural-chat-7b-v3-1 | 59.06 | 66.21 | 83.64 | 62.37 | 59.65 | 78.14 | 19.56 | 43.84 |

| migtissera/SynthIA-7B-v1.3 | 57.11 | 62.12 | 83.45 | 62.65 | 51.37 | 78.85 | 17.59 | 43.76 |

| bhenrym14/mistral-7b-platypus-fp16 | 56.89 | 63.05 | 84.15 | 64.11 | 45.07 | 78.53 | 17.36 | 45.92 |

| jondurbin/airoboros-m-7b-3.1.2 | 56.24 | 61.86 | 83.51 | 61.91 | 53.75 | 77.58 | 13.87 | 41.2 |

| uukuguy/speechless-code-mistral-orca-7b-v1.0 | 55.33 | 59.64 | 82.25 | 61.33 | 48.45 | 77.51 | 8.26 | 49.89 |

| teknium/CollectiveCognition-v1.1-Mistral-7B | 53.87 | 62.12 | 84.17 | 62.35 | 57.62 | 75.37 | 15.62 | 19.85 |

| Open-Orca/Mistral-7B-SlimOrca | 53.34 | 62.54 | 83.86 | 62.77 | 54.23 | 77.43 | 21.38 | 11.2 |

| uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b | 53.34 | 64.33 | 84.4 | 63.72 | 52.52 | 78.37 | 21.38 | 8.66 |

| ehartford/dolphin-2.2.1-mistral-7b | 53.06 | 63.48 | 83.86 | 63.28 | 53.17 | 78.37 | 21.08 | 8.19 |

| teknium/CollectiveCognition-v1-Mistral-7B | 52.55 | 62.37 | 85.5 | 62.76 | 54.48 | 77.58 | 17.89 | 7.22 |

| HuggingFaceH4/zephyr-7b-alpha | 52.4 | 61.01 | 84.04 | 61.39 | 57.9 | 78.61 | 14.03 | 9.82 |

| ehartford/samantha-1.2-mistral-7b | 52.16 | 64.08 | 85.08 | 63.91 | 50.4 | 78.53 | 16.98 | 6.13 |

|

Emmanuelalo52/xlm-roberta-base-finetuned-panx-de

|

Emmanuelalo52

| 2023-11-22T07:23:01Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-11-14T11:58:54Z |

---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

config: PAN-X.de

split: validation

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.8630705394190871

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1345

- F1: 0.8631

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 30

- eval_batch_size: 30

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2658 | 1.0 | 420 | 0.1534 | 0.8227 |

| 0.1271 | 2.0 | 840 | 0.1410 | 0.8483 |

| 0.0836 | 3.0 | 1260 | 0.1345 | 0.8631 |

### Framework versions

- Transformers 4.34.1

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

sabasazad/sft_zephyr

|

sabasazad

| 2023-11-22T07:18:46Z | 0 | 0 | null |

[

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:HuggingFaceH4/zephyr-7b-alpha",

"base_model:finetune:HuggingFaceH4/zephyr-7b-alpha",

"license:mit",

"region:us"

] | null | 2023-11-22T07:14:02Z |

---

license: mit

base_model: HuggingFaceH4/zephyr-7b-alpha

tags:

- generated_from_trainer

model-index:

- name: sft_zephyr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sft_zephyr

This model is a fine-tuned version of [HuggingFaceH4/zephyr-7b-alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

goofyai/disney_style_xl

|

goofyai

| 2023-11-22T06:44:53Z | 758 | 15 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail",

"region:us"

] |

text-to-image

| 2023-11-22T06:40:59Z |

---

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

widget:

- text: disney style,animal focus, animal, cat

parameters:

negative_prompt: bad quality, deformed, artifacts, digital noise

output:

url: images/c9ad912d-e9b1-4807-950d-ab2d07eaed6e.png

- text: >-

disney style,one girl wearing round glasses in school dress, short skirt and

socks. white shirt with black necktie

parameters:

negative_prompt: bad quality, deformed, artifacts, digital noise

output:

url: images/a2ed97c6-1ab5-431c-a4ae-73cedfb494e4.png

- text: >-

disney style, brown eyes, white shirt, round eyewear, shirt, earrings,

closed mouth, brown hair, jewelry, glasses, looking at viewer, dark skin,

1girl, solo, dark-skinned female, very dark skin, curly hair, lips,

portrait, black hair, print shirt, short hair, blurry background, outdoors,

yellow-framed eyewear, blurry

parameters:

negative_prompt: bad quality, deformed, artifacts, digital noise

output:

url: images/d7c67c24-9116-40da-a75f-bf42a211a6c0.png

- text: >-

disney style, uniform, rabbit, shirt, vest, day, upper body, hands on hips,

rabbit girl, animal nose, smile, furry, police, 1girl, solo, animal ears,

rabbit ears, policewoman, grey fur, furry female, long sleeves, purple eyes,

blurry background, police uniform, outdoors, blurry, blue shirt

parameters:

negative_prompt: bad quality, deformed, artifacts, digital noise

output:

url: images/1d0aac43-aa2a-495c-84fd-ca2c9eb22a0d.jpg

- text: >-

disney style, rain, furry, bear, 1boy, solo, blue headwear, water drop,

baseball cap, outdoors, blurry, shirt, male focus, furry male, hat, blue

shirt

parameters:

negative_prompt: bad quality, deformed, artifacts, digital noise

output:

url: images/5cd36626-22da-46d2-aa79-2ca31c80fd59.png

- text: >-

disney style, looking at viewer, long hair, dress, lipstick, braid, hair

over shoulder, blonde hair, 1girl, solo, purple dress, makeup, stairs, blue

eyes, single braid

parameters:

negative_prompt: bad quality, deformed, artifacts, digital noise

output:

url: images/4af61860-6dca-4694-9f31-ceaf08071e6d.png

- text: >-

disney style, lipstick, dress, smile, braid, tiara, blonde hair, 1girl,

solo, upper body, gloves, makeup, crown, blue eyes, cape

output:

url: images/882eb6c8-5c6c-4694-b3f1-f79f8df8ce8a.jpg

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: disney style

license: openrail

---

# Disney style xl

<Gallery />

## Trigger words

You should use `disney style` to trigger the image generation.

## Download model

Weights for this model are available in Safetensors format.

[Download](/goofyai/disney_style_xl/tree/main) them in the Files & versions tab.

|

andakm/swin-tiny-patch4-window7-224

|

andakm

| 2023-11-22T06:38:46Z | 7 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:microsoft/swin-tiny-patch4-window7-224",

"base_model:finetune:microsoft/swin-tiny-patch4-window7-224",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-11-22T06:30:43Z |

---

license: apache-2.0

base_model: microsoft/swin-tiny-patch4-window7-224

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-tiny-patch4-window7-224

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.5294117647058824

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3635

- Accuracy: 0.5294

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 0.8 | 3 | 1.7560 | 0.3137 |

| No log | 1.87 | 7 | 1.6225 | 0.3725 |

| 1.7919 | 2.93 | 11 | 1.5661 | 0.4510 |

| 1.7919 | 4.0 | 15 | 1.5332 | 0.4510 |

| 1.7919 | 4.8 | 18 | 1.4522 | 0.5294 |

| 1.5187 | 5.87 | 22 | 1.3873 | 0.4902 |

| 1.5187 | 6.93 | 26 | 1.3741 | 0.4902 |

| 1.2773 | 8.0 | 30 | 1.3635 | 0.5294 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

LoneStriker/Yarn-Llama-2-70b-32k-2.55bpw-h6-exl2

|

LoneStriker

| 2023-11-22T06:24:59Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"custom_code",

"en",

"dataset:emozilla/yarn-train-tokenized-8k-llama",

"arxiv:2309.00071",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T06:11:36Z |

---

metrics:

- perplexity

library_name: transformers

license: apache-2.0

language:

- en

datasets:

- emozilla/yarn-train-tokenized-8k-llama

---

# Model Card: Yarn-Llama-2-70b-32k

[Preprint (arXiv)](https://arxiv.org/abs/2309.00071)

[GitHub](https://github.com/jquesnelle/yarn)

The authors would like to thank [LAION AI](https://laion.ai/) for their support of compute for this model.

It was trained on the [JUWELS](https://www.fz-juelich.de/en/ias/jsc/systems/supercomputers/juwels) supercomputer.

## Model Description

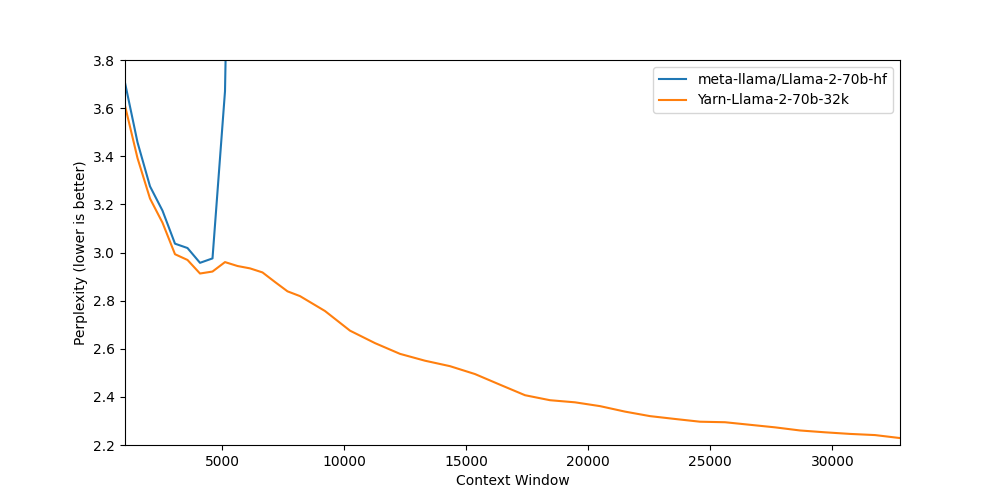

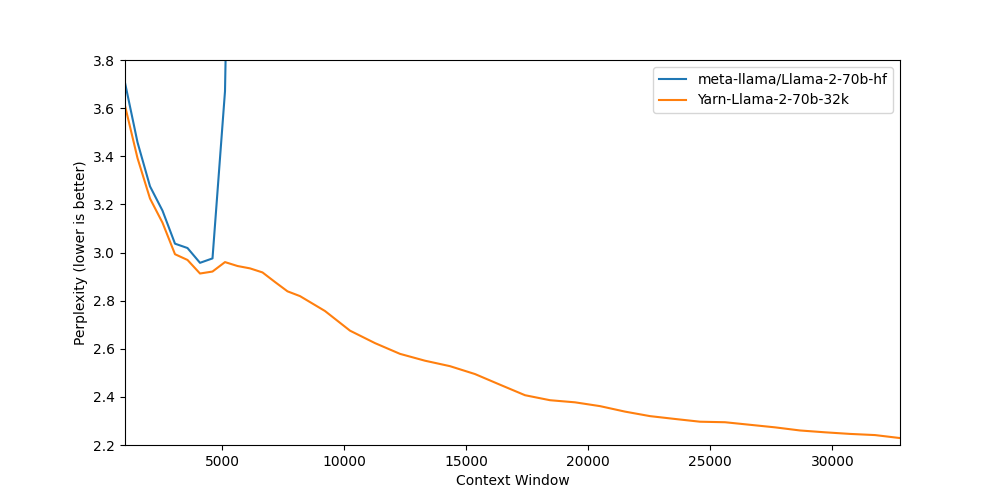

Nous-Yarn-Llama-2-70b-32k is a state-of-the-art language model for long context, further pretrained on long context data for 400 steps using the YaRN extension method.

It is an extension of [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) and supports a 32k token context window.

To use, pass `trust_remote_code=True` when loading the model, for example

```python

model = AutoModelForCausalLM.from_pretrained("NousResearch/Yarn-Llama-2-70b-32k",

use_flash_attention_2=True,

torch_dtype=torch.bfloat16,

device_map="auto",

trust_remote_code=True)

```

In addition you will need to use the latest version of `transformers` (until 4.35 comes out)

```sh

pip install git+https://github.com/huggingface/transformers

```

## Benchmarks

Long context benchmarks:

| Model | Context Window | 1k PPL | 2k PPL | 4k PPL | 8k PPL | 16k PPL | 32k PPL |

|-------|---------------:|-------:|--------:|------:|-------:|--------:|--------:|

| [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) | 4k | 3.71 | 3.27 | 2.96 | - | - | - |

| [Yarn-Llama-2-70b-32k](https://huggingface.co/NousResearch/Yarn-Llama-2-70b-32k) | 32k | 3.61 | 3.22 | 2.91 | 2.82 | 2.45 | 2.23 |

Short context benchmarks showing that quality degradation is minimal:

| Model | Context Window | ARC-c | MMLU | Truthful QA |

|-------|---------------:|------:|-----:|------------:|

| [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) | 4k | 67.32 | 69.83 | 44.92 |

| [Yarn-Llama-2-70b-32k](https://huggingface.co/NousResearch/Yarn-Llama-2-70b-32k) | 32k | 67.41 | 68.84 | 46.14 |

## Collaborators

- [bloc97](https://github.com/bloc97): Methods, paper and evals

- [@theemozilla](https://twitter.com/theemozilla): Methods, paper, model training, and evals

- [@EnricoShippole](https://twitter.com/EnricoShippole): Model training

- [honglu2875](https://github.com/honglu2875): Paper and evals

|

PK-B/roof_classifier

|

PK-B

| 2023-11-22T06:20:44Z | 5 | 0 |

transformers

|

[

"transformers",

"tf",

"vit",

"image-classification",

"generated_from_keras_callback",

"base_model:google/vit-base-patch16-224-in21k",

"base_model:finetune:google/vit-base-patch16-224-in21k",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-11-22T06:16:11Z |

---

license: apache-2.0

base_model: google/vit-base-patch16-224-in21k

tags:

- generated_from_keras_callback

model-index:

- name: PK-B/roof_classifier

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# PK-B/roof_classifier

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 1.6844

- Validation Loss: 2.3315

- Train Accuracy: 0.425

- Epoch: 14

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'module': 'keras.optimizers.schedules', 'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 3e-05, 'decay_steps': 1770, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, 'registered_name': None}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Accuracy | Epoch |

|:----------:|:---------------:|:--------------:|:-----:|

| 2.9736 | 2.9756 | 0.05 | 0 |

| 2.9016 | 2.9430 | 0.1 | 1 |

| 2.8192 | 2.9084 | 0.1 | 2 |

| 2.7004 | 2.8564 | 0.175 | 3 |

| 2.6005 | 2.8109 | 0.175 | 4 |

| 2.4981 | 2.7452 | 0.225 | 5 |

| 2.3819 | 2.6988 | 0.2125 | 6 |

| 2.2867 | 2.6998 | 0.25 | 7 |

| 2.1804 | 2.6510 | 0.275 | 8 |

| 2.1115 | 2.5307 | 0.3375 | 9 |

| 2.0161 | 2.5523 | 0.3 | 10 |

| 1.9189 | 2.5310 | 0.2875 | 11 |

| 1.8863 | 2.4733 | 0.3375 | 12 |

| 1.7518 | 2.4233 | 0.3625 | 13 |

| 1.6844 | 2.3315 | 0.425 | 14 |

### Framework versions

- Transformers 4.35.2

- TensorFlow 2.14.0

- Datasets 2.15.0

- Tokenizers 0.15.0

|

HeavenlyJoe/flan-t5-large-eng-tgl-translation

|

HeavenlyJoe

| 2023-11-22T06:12:34Z | 6 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:google/flan-t5-large",

"base_model:finetune:google/flan-t5-large",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-11-22T00:30:49Z |

---

license: apache-2.0

base_model: google/flan-t5-large

tags:

- generated_from_trainer

metrics:

- bleu

model-index:

- name: t5-flan-t5-xl-fine-tuning-for-translation

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# HeavenlyJoe/flan-t5-large-eng-tgl-translation

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3378

- Bleu: 0.4953

- Gen Len: 19.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:-------:|

| 1.9527 | 0.44 | 25 | 1.5761 | 0.2146 | 19.0 |

| 1.8866 | 0.88 | 50 | 1.5303 | 0.293 | 19.0 |

| 1.8045 | 1.32 | 75 | 1.5092 | 0.2499 | 19.0 |

| 1.7596 | 1.75 | 100 | 1.4840 | 0.3498 | 19.0 |

| 1.7354 | 2.19 | 125 | 1.4628 | 0.3282 | 19.0 |

| 1.6866 | 2.63 | 150 | 1.4437 | 0.3205 | 19.0 |

| 1.6605 | 3.07 | 175 | 1.4275 | 0.3781 | 19.0 |

| 1.6157 | 3.51 | 200 | 1.4177 | 0.3805 | 19.0 |

| 1.6237 | 3.95 | 225 | 1.4007 | 0.398 | 19.0 |

| 1.5948 | 4.39 | 250 | 1.3954 | 0.4022 | 19.0 |

| 1.5555 | 4.82 | 275 | 1.3866 | 0.3854 | 19.0 |

| 1.5388 | 5.26 | 300 | 1.3761 | 0.4105 | 19.0 |

| 1.5448 | 5.7 | 325 | 1.3712 | 0.4339 | 19.0 |

| 1.5149 | 6.14 | 350 | 1.3635 | 0.4342 | 19.0 |

| 1.5104 | 6.58 | 375 | 1.3566 | 0.459 | 19.0 |

| 1.4955 | 7.02 | 400 | 1.3525 | 0.4888 | 19.0 |

| 1.467 | 7.46 | 425 | 1.3491 | 0.4723 | 19.0 |

| 1.4872 | 7.89 | 450 | 1.3440 | 0.491 | 19.0 |

| 1.4766 | 8.33 | 475 | 1.3423 | 0.5183 | 19.0 |

| 1.4553 | 8.77 | 500 | 1.3404 | 0.5026 | 19.0 |

| 1.464 | 9.21 | 525 | 1.3384 | 0.4979 | 19.0 |

| 1.454 | 9.65 | 550 | 1.3378 | 0.4953 | 19.0 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

Charlie911/vicuna-7b-v1.5-lora-drop

|

Charlie911

| 2023-11-22T06:09:40Z | 2 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:lmsys/vicuna-7b-v1.5",

"base_model:adapter:lmsys/vicuna-7b-v1.5",

"license:llama2",

"region:us"

] | null | 2023-11-21T16:50:16Z |

---

library_name: peft

base_model: lmsys/vicuna-7b-v1.5

license: llama2

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.2

|

biggiesmallslives/siamese_signature_dlt

|

biggiesmallslives

| 2023-11-22T05:58:34Z | 1 | 0 |

transformers

|

[

"transformers",

"siamese-network",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2023-11-21T10:29:58Z |

---

# For reference on model card metadata, see the spec: https://github.com/huggingface/hub-docs/blob/main/modelcard.md?plain=1

# Doc / guide: https://huggingface.co/docs/hub/model-cards

{}

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

rozek/StableLM-3B-4E1T_GGUF

|

rozek

| 2023-11-22T05:57:03Z | 7 | 3 | null |

[

"gguf",

"license:cc-by-sa-4.0",

"endpoints_compatible",

"region:us"

] | null | 2023-11-17T16:00:42Z |

---

license: cc-by-sa-4.0

---

# StableLM-3B-4E1T #

* Model Creator: [Stability AI](https://huggingface.co/stabilityai)

* original Model: [StableLM-3B-4E1T](https://huggingface.co/stabilityai/stablelm-3b-4e1t)

## Description ##

This repository contains the most relevant quantizations of Stability AI's

[StableLM-3B-4E1T](https://huggingface.co/stabilityai/stablelm-3b-4e1t) model

in GGUF format - ready to be used with

[llama.cpp](https://github.com/ggerganov/llama.cpp) and similar applications.

## About StableLM-3B-4E1T ##

Stability AI claims: "_StableLM-3B-4E1T achieves

state-of-the-art performance (September 2023) at the 3B parameter scale

for open-source models and is competitive with many of the popular

contemporary 7B models, even outperforming our most recent 7B

StableLM-Base-Alpha-v2._"

According to them "_The model is intended to be used as a foundational base

model for application-specific fine-tuning. Developers must evaluate and

fine-tune the model for safe performance in downstream applications._"

## Files ##

Right now, the following quantizations are available:

* [stablelm-3b-4e1t-Q3_K_M](https://huggingface.co/rozek/StableLM-3B-4E1T_GGUF/blob/main/stablelm-3b-4e1t-Q3_K_M.bin)

* [stablelm-3b-4e1t-Q4_K_M](https://huggingface.co/rozek/StableLM-3B-4E1T_GGUF/blob/main/stablelm-3b-4e1t-Q4_K_M.bin)

* [stablelm-3b-4e1t-Q5_K_M](https://huggingface.co/rozek/StableLM-3B-4E1T_GGUF/blob/main/stablelm-3b-4e1t-Q5_K_M.bin)

* [stablelm-3b-4e1t-Q6_K](https://huggingface.co/rozek/StableLM-3B-4E1T_GGUF/blob/main/stablelm-3b-4e1t-Q6_K.bin)

* [stablelm-3b-4e1t-Q8_K](https://huggingface.co/rozek/StableLM-3B-4E1T_GGUF/blob/main/stablelm-3b-4e1t-Q8_K.bin)

(tell me if you need more)

These files are presented here with the written permission of Stability AI (although

access to the model itself is still "gated").

## Usage Details ##

Any technical details can be found on the

[original model card](https://huggingface.co/stabilityai/stablelm-3b-4e1t) and in

a paper on [StableLM-3B-4E1T](https://stability.wandb.io/stability-llm/stable-lm/reports/StableLM-3B-4E1T--VmlldzoyMjU4?accessToken=u3zujipenkx5g7rtcj9qojjgxpconyjktjkli2po09nffrffdhhchq045vp0wyfo).

The most important ones for using this model are

* context length is 4096

* there does not seem to be a specific prompt structure - just provide the text

you want to be completed

### Text Completion with LLaMA.cpp ###

For simple inferencing, use a command similar to

```

./main -m stablelm-3b-4e1t-Q8_0.bin --temp 0 --top-k 4 --prompt "who was Joseph Weizenbaum?"

```

### Text Tokenization with LLaMA.cpp ###

To get a list of tokens, use a command similar to

```

./tokenization -m stablelm-3b-4e1t-Q8_0.bin --prompt "who was Joseph Weizenbaum?"

```

### Embeddings Calculation with LLaMA.cpp ###

Text embeddings are calculated with a command similar to

```

./embedding -m stablelm-3b-4e1t-Q8_0.bin --prompt "who was Joseph Weizenbaum?"

```

## Conversion Details ##

Conversion was done using a Docker container based on

`python:3.10.13-slim-bookworm`

After downloading the original model files into a separate directory, the

container was started with

```

docker run --interactive \

--mount type=bind,src=<local-folder>,dst=/llm \

python:3.10.13-slim-bookworm

```

where `<local-folder>` was the path to the folder containing the downloaded

model.

Within the container's terminal, the following commands were issued:

```

apt-get update

apt-get install build-essential git -y

git clone https://github.com/ggerganov/llama.cpp

cd llama.cpp

## Important: uncomment the make command that fits to your host computer!

## on Apple Silicon machines: (see https://github.com/ggerganov/llama.cpp/issues/1655)

# UNAME_M=arm64 UNAME_p=arm LLAMA_NO_METAL=1 make

## otherwise

# make

python3 -m pip install -r requirements.txt

pip install torch transformers

# see https://github.com/ggerganov/llama.cpp/issues/3344

python3 convert-hf-to-gguf.py /llm

mv /llm/ggml-model-f16.gguf /llm/stablelm-3b-4e1t.gguf

# the following command is just an example, modify it as needed

./quantize /llm/stablelm-3b-4e1t.gguf /llm/stablelm-3b-4e1t_Q3_K_M.gguf q3_k_m

```

After conversion, the mounted folder (the one that originally contained the

model only) now also contains all conversions.

The container itself may now be safely deleted - the conversions will remain on

disk.

## License ##

The original "_Model checkpoints are licensed under the Creative Commons license

([CC BY-SA-4.0](https://creativecommons.org/licenses/by-sa/4.0/)). Under this

license, you must give [credit](https://creativecommons.org/licenses/by/4.0/#)

to Stability AI, provide a link to the license, and

[indicate if changes were made](https://creativecommons.org/licenses/by/4.0/#).

You may do so in any reasonable manner, but not in any way that suggests the Stability AI endorses you or your use._"

So, in order to be fair and give credits to whom they belong:

* the original model was created and published by [Stability AI](https://huggingface.co/stabilityai)

* besides quantization, no changes were applied to the model itself

|

BlitherBoom/q-FrozenLake-v1-4x4-noSlippery

|

BlitherBoom

| 2023-11-22T05:56:07Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-11-22T05:56:02Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="BlitherBoom/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

LoneStriker/Yarn-Llama-2-70b-32k-4.65bpw-h6-exl2

|

LoneStriker

| 2023-11-22T05:49:43Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"custom_code",

"en",

"dataset:emozilla/yarn-train-tokenized-8k-llama",

"arxiv:2309.00071",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T05:25:44Z |

---

metrics:

- perplexity

library_name: transformers

license: apache-2.0

language:

- en

datasets:

- emozilla/yarn-train-tokenized-8k-llama

---

# Model Card: Yarn-Llama-2-70b-32k

[Preprint (arXiv)](https://arxiv.org/abs/2309.00071)

[GitHub](https://github.com/jquesnelle/yarn)

The authors would like to thank [LAION AI](https://laion.ai/) for their support of compute for this model.

It was trained on the [JUWELS](https://www.fz-juelich.de/en/ias/jsc/systems/supercomputers/juwels) supercomputer.

## Model Description

Nous-Yarn-Llama-2-70b-32k is a state-of-the-art language model for long context, further pretrained on long context data for 400 steps using the YaRN extension method.

It is an extension of [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) and supports a 32k token context window.

To use, pass `trust_remote_code=True` when loading the model, for example

```python

model = AutoModelForCausalLM.from_pretrained("NousResearch/Yarn-Llama-2-70b-32k",

use_flash_attention_2=True,

torch_dtype=torch.bfloat16,

device_map="auto",

trust_remote_code=True)

```

In addition you will need to use the latest version of `transformers` (until 4.35 comes out)

```sh

pip install git+https://github.com/huggingface/transformers

```

## Benchmarks

Long context benchmarks:

| Model | Context Window | 1k PPL | 2k PPL | 4k PPL | 8k PPL | 16k PPL | 32k PPL |

|-------|---------------:|-------:|--------:|------:|-------:|--------:|--------:|

| [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) | 4k | 3.71 | 3.27 | 2.96 | - | - | - |

| [Yarn-Llama-2-70b-32k](https://huggingface.co/NousResearch/Yarn-Llama-2-70b-32k) | 32k | 3.61 | 3.22 | 2.91 | 2.82 | 2.45 | 2.23 |

Short context benchmarks showing that quality degradation is minimal:

| Model | Context Window | ARC-c | MMLU | Truthful QA |

|-------|---------------:|------:|-----:|------------:|

| [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) | 4k | 67.32 | 69.83 | 44.92 |

| [Yarn-Llama-2-70b-32k](https://huggingface.co/NousResearch/Yarn-Llama-2-70b-32k) | 32k | 67.41 | 68.84 | 46.14 |

## Collaborators

- [bloc97](https://github.com/bloc97): Methods, paper and evals

- [@theemozilla](https://twitter.com/theemozilla): Methods, paper, model training, and evals

- [@EnricoShippole](https://twitter.com/EnricoShippole): Model training

- [honglu2875](https://github.com/honglu2875): Paper and evals

|

Jinhwan99/polyglot-ko-12.8b-qlora-512steps

|

Jinhwan99

| 2023-11-22T05:49:35Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:EleutherAI/polyglot-ko-12.8b",

"base_model:adapter:EleutherAI/polyglot-ko-12.8b",

"region:us"

] | null | 2023-11-22T05:49:26Z |

---

library_name: peft

base_model: EleutherAI/polyglot-ko-12.8b

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]