modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-11 06:30:11

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-11 06:29:58

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Lollitor/OnlyProtein10

|

Lollitor

| 2024-02-19T11:16:48Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-02-19T11:16:46Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

CocosNucifera/q-Taxi-v3.1

|

CocosNucifera

| 2024-02-19T11:15:47Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2024-02-19T11:15:44Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3.1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="CocosNucifera/q-Taxi-v3.1", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

LoneStriker/AlphaMonarch-7B-GGUF

|

LoneStriker

| 2024-02-19T11:14:14Z | 22 | 4 | null |

[

"gguf",

"merge",

"lazymergekit",

"dpo",

"rlhf",

"en",

"base_model:mlabonne/NeuralMonarch-7B",

"base_model:quantized:mlabonne/NeuralMonarch-7B",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-02-19T11:03:28Z |

---

license: cc-by-nc-4.0

tags:

- merge

- lazymergekit

- dpo

- rlhf

dataset:

- mlabonne/truthy-dpo-v0.1

- mlabonne/distilabel-intel-orca-dpo-pairs

- mlabonne/chatml-OpenHermes2.5-dpo-binarized-alpha

base_model:

- mlabonne/NeuralMonarch-7B

language:

- en

---

# 👑 AlphaMonarch-7B

**tl;dr: AlphaMonarch-7B is a new DPO merge that retains all the reasoning abilities of the very best merges and significantly improves its conversational abilities. Kind of the best of both worlds in a 7B model. 🎉**

AlphaMonarch-7B is a DPO fine-tuned of [mlabonne/NeuralMonarch-7B](https://huggingface.co/mlabonne/NeuralMonarch-7B/) using the [argilla/OpenHermes2.5-dpo-binarized-alpha](https://huggingface.co/datasets/argilla/OpenHermes2.5-dpo-binarized-alpha) preference dataset.

It is based on a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [mlabonne/OmniTruthyBeagle-7B-v0](https://huggingface.co/mlabonne/OmniTruthyBeagle-7B-v0)

* [mlabonne/NeuBeagle-7B](https://huggingface.co/mlabonne/NeuBeagle-7B)

* [mlabonne/NeuralOmniBeagle-7B](https://huggingface.co/mlabonne/NeuralOmniBeagle-7B)

Special thanks to [Jon Durbin](https://huggingface.co/jondurbin), [Intel](https://huggingface.co/Intel), [Argilla](https://huggingface.co/argilla), and [Teknium](https://huggingface.co/teknium) for the preference datasets.

**Try the demo**: https://huggingface.co/spaces/mlabonne/AlphaMonarch-7B-GGUF-Chat

## 🔍 Applications

This model uses a context window of 8k. I recommend using it with the Mistral Instruct chat template (works perfectly with LM Studio).

It is one of the very best 7B models in terms of instructing following and reasoning abilities and can be used for conversations, RP, and storytelling. Note that it tends to have a quite formal and sophisticated style, but it can be changed by modifying the prompt.

## ⚡ Quantized models

* **GGUF**: https://huggingface.co/mlabonne/AlphaMonarch-7B-GGUF

## 🏆 Evaluation

### Nous

AlphaMonarch-7B is the best-performing 7B model on Nous' benchmark suite (evaluation performed using [LLM AutoEval](https://github.com/mlabonne/llm-autoeval)). See the entire leaderboard [here](https://huggingface.co/spaces/mlabonne/Yet_Another_LLM_Leaderboard).

| Model | Average | AGIEval | GPT4All | TruthfulQA | Bigbench |

|---|---:|---:|---:|---:|---:|

| [**AlphaMonarch-7B**](https://huggingface.co/mlabonne/AlphaMonarch-7B) [📄](https://gist.github.com/mlabonne/1d33c86824b3a11d2308e36db1ba41c1) | **62.74** | **45.37** | **77.01** | **78.39** | **50.2** |

| [NeuralMonarch-7B](https://huggingface.co/mlabonne/NeuralMonarch-7B) [📄](https://gist.github.com/mlabonne/64050c96c6aa261a8f5b403190c8dee4) | 62.73 | 45.31 | 76.99 | 78.35 | 50.28 |

| [Monarch-7B](https://huggingface.co/mlabonne/Monarch-7B) [📄](https://gist.github.com/mlabonne/0b8d057c5ece41e0290580a108c7a093) | 62.68 | 45.48 | 77.07 | 78.04 | 50.14 |

| [teknium/OpenHermes-2.5-Mistral-7B](https://huggingface.co/teknium/OpenHermes-2.5-Mistral-7B) [📄](https://gist.github.com/mlabonne/88b21dd9698ffed75d6163ebdc2f6cc8) | 52.42 | 42.75 | 72.99 | 52.99 | 40.94 |

| [mlabonne/NeuralHermes-2.5-Mistral-7B](https://huggingface.co/mlabonne/NeuralHermes-2.5-Mistral-7B) [📄](https://gist.github.com/mlabonne/14687f1eb3425b166db511f31f8e66f6) | 53.51 | 43.67 | 73.24 | 55.37 | 41.76 |

| [mlabonne/NeuralBeagle14-7B](https://huggingface.co/mlabonne/NeuralBeagle14-7B) [📄](https://gist.github.com/mlabonne/ad0c665bbe581c8420136c3b52b3c15c) | 60.25 | 46.06 | 76.77 | 70.32 | 47.86 |

| [mlabonne/NeuralOmniBeagle-7B](https://huggingface.co/mlabonne/NeuralOmniBeagle-7B) [📄](https://gist.github.com/mlabonne/0e49d591787185fa5ae92ca5d9d4a1fd) | 62.3 | 45.85 | 77.26 | 76.06 | 50.03 |

| [eren23/dpo-binarized-NeuralTrix-7B](https://huggingface.co/eren23/dpo-binarized-NeuralTrix-7B) [📄](https://gist.github.com/CultriX-Github/dbdde67ead233df0c7c56f1b091f728c) | 62.5 | 44.57 | 76.34 | 79.81 | 49.27 |

| [CultriX/NeuralTrix-7B-dpo](https://huggingface.co/CultriX/NeuralTrix-7B-dpo) [📄](https://gist.github.com/CultriX-Github/df0502599867d4043b45d9dafb5976e8) | 62.5 | 44.61 | 76.33 | 79.8 | 49.24 |

### EQ-bench

AlphaMonarch-7B is also outperforming 70B and 120B parameter models on [EQ-bench](https://eqbench.com/) by [Samuel J. Paech](https://twitter.com/sam_paech), who kindly ran the evaluations.

### MT-Bench

```

########## First turn ##########

score

model turn

gpt-4 1 8.95625

OmniBeagle-7B 1 8.31250

AlphaMonarch-7B 1 8.23750

claude-v1 1 8.15000

NeuralMonarch-7B 1 8.09375

gpt-3.5-turbo 1 8.07500

claude-instant-v1 1 7.80000

########## Second turn ##########

score

model turn

gpt-4 2 9.025000

claude-instant-v1 2 8.012658

OmniBeagle-7B 2 7.837500

gpt-3.5-turbo 2 7.812500

claude-v1 2 7.650000

AlphaMonarch-7B 2 7.618750

NeuralMonarch-7B 2 7.375000

########## Average ##########

score

model

gpt-4 8.990625

OmniBeagle-7B 8.075000

gpt-3.5-turbo 7.943750

AlphaMonarch-7B 7.928125

claude-instant-v1 7.905660

claude-v1 7.900000

NeuralMonarch-7B 7.734375

NeuralBeagle14-7B 7.628125

```

### Open LLM Leaderboard

AlphaMonarch-7B is one of the best-performing non-merge 7B models on the Open LLM Leaderboard:

## 💻 Usage

```python

!pip install -qU transformers accelerate

from transformers import AutoTokenizer

import transformers

import torch

model = "mlabonne/AlphaMonarch-7B"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

```

|

Yizhang888/mouse20

|

Yizhang888

| 2024-02-19T11:13:39Z | 1 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"text-to-image",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"region:us"

] |

text-to-image

| 2024-02-19T11:13:37Z |

---

license: openrail++

library_name: diffusers

tags:

- text-to-image

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- lora

- template:sd-lora

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: a photo of TOK computer mouse

widget: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# SDXL LoRA DreamBooth - Yizhang888/mouse20

<Gallery />

## Model description

These are Yizhang888/mouse20 LoRA adaption weights for stabilityai/stable-diffusion-xl-base-1.0.

The weights were trained using [DreamBooth](https://dreambooth.github.io/).

LoRA for the text encoder was enabled: False.

Special VAE used for training: madebyollin/sdxl-vae-fp16-fix.

## Trigger words

You should use a photo of TOK computer mouse to trigger the image generation.

## Download model

Weights for this model are available in Safetensors format.

[Download](Yizhang888/mouse20/tree/main) them in the Files & versions tab.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training details

[TODO: describe the data used to train the model]

|

MaziyarPanahi/NeuralOmniBeagle-7B-GGUF

|

MaziyarPanahi

| 2024-02-19T11:12:57Z | 50 | 1 |

transformers

|

[

"transformers",

"gguf",

"mistral",

"quantized",

"2-bit",

"3-bit",

"4-bit",

"5-bit",

"6-bit",

"8-bit",

"GGUF",

"safetensors",

"text-generation",

"arxiv:1910.09700",

"base_model:mlabonne/OmniBeagle-7B",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us",

"base_model:mlabonne/NeuralOmniBeagle-7B",

"base_model:quantized:mlabonne/NeuralOmniBeagle-7B"

] |

text-generation

| 2024-02-19T11:01:31Z |

---

tags:

- quantized

- 2-bit

- 3-bit

- 4-bit

- 5-bit

- 6-bit

- 8-bit

- GGUF

- transformers

- safetensors

- mistral

- text-generation

- arxiv:1910.09700

- base_model:mlabonne/OmniBeagle-7B

- license:cc-by-4.0

- autotrain_compatible

- endpoints_compatible

- text-generation-inference

- region:us

- text-generation

model_name: NeuralOmniBeagle-7B-GGUF

base_model: mlabonne/NeuralOmniBeagle-7B

inference: false

model_creator: mlabonne

pipeline_tag: text-generation

quantized_by: MaziyarPanahi

---

# [MaziyarPanahi/NeuralOmniBeagle-7B-GGUF](https://huggingface.co/MaziyarPanahi/NeuralOmniBeagle-7B-GGUF)

- Model creator: [mlabonne](https://huggingface.co/mlabonne)

- Original model: [mlabonne/NeuralOmniBeagle-7B](https://huggingface.co/mlabonne/NeuralOmniBeagle-7B)

## Description

[MaziyarPanahi/NeuralOmniBeagle-7B-GGUF](https://huggingface.co/MaziyarPanahi/NeuralOmniBeagle-7B-GGUF) contains GGUF format model files for [mlabonne/NeuralOmniBeagle-7B](https://huggingface.co/mlabonne/NeuralOmniBeagle-7B).

## How to use

Thanks to [TheBloke](https://huggingface.co/TheBloke) for preparing an amazing README on how to use GGUF models:

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [GPT4All](https://gpt4all.io/index.html), a free and open source local running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server. Note, as of time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

### Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: [MaziyarPanahi/NeuralOmniBeagle-7B-GGUF](https://huggingface.co/MaziyarPanahi/NeuralOmniBeagle-7B-GGUF) and below it, a specific filename to download, such as: NeuralOmniBeagle-7B-GGUF.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download MaziyarPanahi/NeuralOmniBeagle-7B-GGUF NeuralOmniBeagle-7B-GGUF.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

</details>

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download [MaziyarPanahi/NeuralOmniBeagle-7B-GGUF](https://huggingface.co/MaziyarPanahi/NeuralOmniBeagle-7B-GGUF) --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download MaziyarPanahi/NeuralOmniBeagle-7B-GGUF NeuralOmniBeagle-7B-GGUF.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m NeuralOmniBeagle-7B-GGUF.Q4_K_M.gguf --color -c 32768 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<|im_start|>system

{system_message}<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 32768` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# In windows, to set the variables CMAKE_ARGS in PowerShell, follow this format; eg for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_OPENBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python

from llama_cpp import Llama

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = Llama(

model_path="./NeuralOmniBeagle-7B-GGUF.Q4_K_M.gguf", # Download the model file first

n_ctx=32768, # The max sequence length to use - note that longer sequence lengths require much more resources

n_threads=8, # The number of CPU threads to use, tailor to your system and the resulting performance

n_gpu_layers=35 # The number of layers to offload to GPU, if you have GPU acceleration available

)

# Simple inference example

output = llm(

"<|im_start|>system

{system_message}<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant", # Prompt

max_tokens=512, # Generate up to 512 tokens

stop=["</s>"], # Example stop token - not necessarily correct for this specific model! Please check before using.

echo=True # Whether to echo the prompt

)

# Chat Completion API

llm = Llama(model_path="./NeuralOmniBeagle-7B-GGUF.Q4_K_M.gguf", chat_format="llama-2") # Set chat_format according to the model you are using

llm.create_chat_completion(

messages = [

{"role": "system", "content": "You are a story writing assistant."},

{

"role": "user",

"content": "Write a story about llamas."

}

]

)

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

|

openai-community/gpt2-large

|

openai-community

| 2024-02-19T11:11:02Z | 3,600,082 | 283 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"rust",

"onnx",

"safetensors",

"gpt2",

"text-generation",

"en",

"arxiv:1910.09700",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

---

language: en

license: mit

---

# GPT-2 Large

## Table of Contents

- [Model Details](#model-details)

- [How To Get Started With the Model](#how-to-get-started-with-the-model)

- [Uses](#uses)

- [Risks, Limitations and Biases](#risks-limitations-and-biases)

- [Training](#training)

- [Evaluation](#evaluation)

- [Environmental Impact](#environmental-impact)

- [Technical Specifications](#technical-specifications)

- [Citation Information](#citation-information)

- [Model Card Authors](#model-card-author)

## Model Details

**Model Description:** GPT-2 Large is the **774M parameter** version of GPT-2, a transformer-based language model created and released by OpenAI. The model is a pretrained model on English language using a causal language modeling (CLM) objective.

- **Developed by:** OpenAI, see [associated research paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) and [GitHub repo](https://github.com/openai/gpt-2) for model developers.

- **Model Type:** Transformer-based language model

- **Language(s):** English

- **License:** [Modified MIT License](https://github.com/openai/gpt-2/blob/master/LICENSE)

- **Related Models:** [GPT-2](https://huggingface.co/gpt2), [GPT-Medium](https://huggingface.co/gpt2-medium) and [GPT-XL](https://huggingface.co/gpt2-xl)

- **Resources for more information:**

- [Research Paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf)

- [OpenAI Blog Post](https://openai.com/blog/better-language-models/)

- [GitHub Repo](https://github.com/openai/gpt-2)

- [OpenAI Model Card for GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md)

- Test the full generation capabilities here: https://transformer.huggingface.co/doc/gpt2-large

## How to Get Started with the Model

Use the code below to get started with the model. You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness, we

set a seed for reproducibility:

```python

>>> from transformers import pipeline, set_seed

>>> generator = pipeline('text-generation', model='gpt2-large')

>>> set_seed(42)

>>> generator("Hello, I'm a language model,", max_length=30, num_return_sequences=5)

[{'generated_text': "Hello, I'm a language model, I can do language modeling. In fact, this is one of the reasons I use languages. To get a"},

{'generated_text': "Hello, I'm a language model, which in its turn implements a model of how a human can reason about a language, and is in turn an"},

{'generated_text': "Hello, I'm a language model, why does this matter for you?\n\nWhen I hear new languages, I tend to start thinking in terms"},

{'generated_text': "Hello, I'm a language model, a functional language...\n\nI don't need to know anything else. If I want to understand about how"},

{'generated_text': "Hello, I'm a language model, not a toolbox.\n\nIn a nutshell, a language model is a set of attributes that define how"}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import GPT2Tokenizer, GPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-large')

model = GPT2Model.from_pretrained('gpt2-large')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import GPT2Tokenizer, TFGPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-large')

model = TFGPT2Model.from_pretrained('gpt2-large')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Uses

#### Direct Use

In their [model card about GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md), OpenAI wrote:

> The primary intended users of these models are AI researchers and practitioners.

>

> We primarily imagine these language models will be used by researchers to better understand the behaviors, capabilities, biases, and constraints of large-scale generative language models.

#### Downstream Use

In their [model card about GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md), OpenAI wrote:

> Here are some secondary use cases we believe are likely:

>

> - Writing assistance: Grammar assistance, autocompletion (for normal prose or code)

> - Creative writing and art: exploring the generation of creative, fictional texts; aiding creation of poetry and other literary art.

> - Entertainment: Creation of games, chat bots, and amusing generations.

#### Misuse and Out-of-scope Use

In their [model card about GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md), OpenAI wrote:

> Because large-scale language models like GPT-2 do not distinguish fact from fiction, we don’t support use-cases that require the generated text to be true.

>

> Additionally, language models like GPT-2 reflect the biases inherent to the systems they were trained on, so we do not recommend that they be deployed into systems that interact with humans unless the deployers first carry out a study of biases relevant to the intended use-case. We found no statistically significant difference in gender, race, and religious bias probes between 774M and 1.5B, implying all versions of GPT-2 should be approached with similar levels of caution around use cases that are sensitive to biases around human attributes.

## Risks, Limitations and Biases

**CONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propogate historical and current stereotypes.**

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)).

The training data used for this model has not been released as a dataset one can browse. We know it contains a lot of unfiltered content from the internet, which is far from neutral. Predictions generated by the model can include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups. For example:

```python

>>> from transformers import pipeline, set_seed

>>> generator = pipeline('text-generation', model='gpt2-large')

>>> set_seed(42)

>>> generator("The man worked as a", max_length=10, num_return_sequences=5)

[{'generated_text': 'The man worked as a security guard in a hotel'},

{'generated_text': 'The man worked as a salesman in Mexico and in'},

{'generated_text': 'The man worked as a supervisor at the warehouse for'},

{'generated_text': "The man worked as a cleaner for the store's"},

{'generated_text': 'The man worked as a barbershop apprentice.'}]

>>> set_seed(42)

>>> generator("The woman worked as a", max_length=10, num_return_sequences=5)

[{'generated_text': 'The woman worked as a clerk at the bank.'},

{'generated_text': 'The woman worked as a caregiver, and her'},

{'generated_text': 'The woman worked as a customer service agent for a'},

{'generated_text': 'The woman worked as a cleaner at the store,'},

{'generated_text': 'The woman worked as a barista and was "'}]

```

This bias will also affect all fine-tuned versions of this model. Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model.

## Training

#### Training Data

The OpenAI team wanted to train this model on a corpus as large as possible. To build it, they scraped all the web

pages from outbound links on Reddit which received at least 3 karma. Note that all Wikipedia pages were removed from

this dataset, so the model was not trained on any part of Wikipedia. The resulting dataset (called WebText) weights

40GB of texts but has not been publicly released. You can find a list of the top 1,000 domains present in WebText

[here](https://github.com/openai/gpt-2/blob/master/domains.txt).

#### Training Procedure

The model is pretrained on a very large corpus of English data in a self-supervised fashion. This

means it was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots

of publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely,

it was trained to guess the next word in sentences.

More precisely, inputs are sequences of continuous text of a certain length and the targets are the same sequence,

shifted one token (word or piece of word) to the right. The model uses internally a mask-mechanism to make sure the

predictions for the token `i` only uses the inputs from `1` to `i` but not the future tokens.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks.

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and a

vocabulary size of 50,257. The inputs are sequences of 1024 consecutive tokens.

## Evaluation

The following evaluation information is extracted from the [associated paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf).

#### Testing Data, Factors and Metrics

The model authors write in the [associated paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) that:

> Since our model operates on a byte level and does not require lossy pre-processing or tokenization, we can evaluate it on any language model benchmark. Results on language modeling datasets are commonly reported in a quantity which is a scaled or ex- ponentiated version of the average negative log probability per canonical prediction unit - usually a character, a byte, or a word. We evaluate the same quantity by computing the log-probability of a dataset according to a WebText LM and dividing by the number of canonical units. For many of these datasets, WebText LMs would be tested significantly out- of-distribution, having to predict aggressively standardized text, tokenization artifacts such as disconnected punctuation and contractions, shuffled sentences, and even the string <UNK> which is extremely rare in WebText - occurring only 26 times in 40 billion bytes. We report our main results...using invertible de-tokenizers which remove as many of these tokenization / pre-processing artifacts as possible. Since these de-tokenizers are invertible, we can still calculate the log probability of a dataset and they can be thought of as a simple form of domain adaptation.

#### Results

The model achieves the following results without any fine-tuning (zero-shot):

| Dataset | LAMBADA | LAMBADA | CBT-CN | CBT-NE | WikiText2 | PTB | enwiki8 | text8 | WikiText103 | 1BW |

|:--------:|:-------:|:-------:|:------:|:------:|:---------:|:------:|:-------:|:------:|:-----------:|:-----:|

| (metric) | (PPL) | (ACC) | (ACC) | (ACC) | (PPL) | (PPL) | (BPB) | (BPC) | (PPL) | (PPL) |

| | 10.87 | 60.12 | 93.45 | 88.0 | 19.93 | 40.31 | 0.97 | 1.02 | 22.05 | 44.575|

## Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** Unknown

- **Hours used:** Unknown

- **Cloud Provider:** Unknown

- **Compute Region:** Unknown

- **Carbon Emitted:** Unknown

## Technical Specifications

See the [associated paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) for details on the modeling architecture, objective, compute infrastructure, and training details.

## Citation Information

```bibtex

@article{radford2019language,

title={Language models are unsupervised multitask learners},

author={Radford, Alec and Wu, Jeffrey and Child, Rewon and Luan, David and Amodei, Dario and Sutskever, Ilya and others},

journal={OpenAI blog},

volume={1},

number={8},

pages={9},

year={2019}

}

```

## Model Card Authors

This model card was written by the Hugging Face team.

|

alaa-lab/InstructCV

|

alaa-lab

| 2024-02-19T11:10:25Z | 109 | 9 |

diffusers

|

[

"diffusers",

"image-to-image",

"dataset:yulu2/InstructCV-Demo-Data",

"license:mit",

"diffusers:StableDiffusionInstructPix2PixPipeline",

"region:us"

] |

image-to-image

| 2023-07-02T08:00:16Z |

---

license: mit

tags:

- image-to-image

datasets:

- yulu2/InstructCV-Demo-Data

---

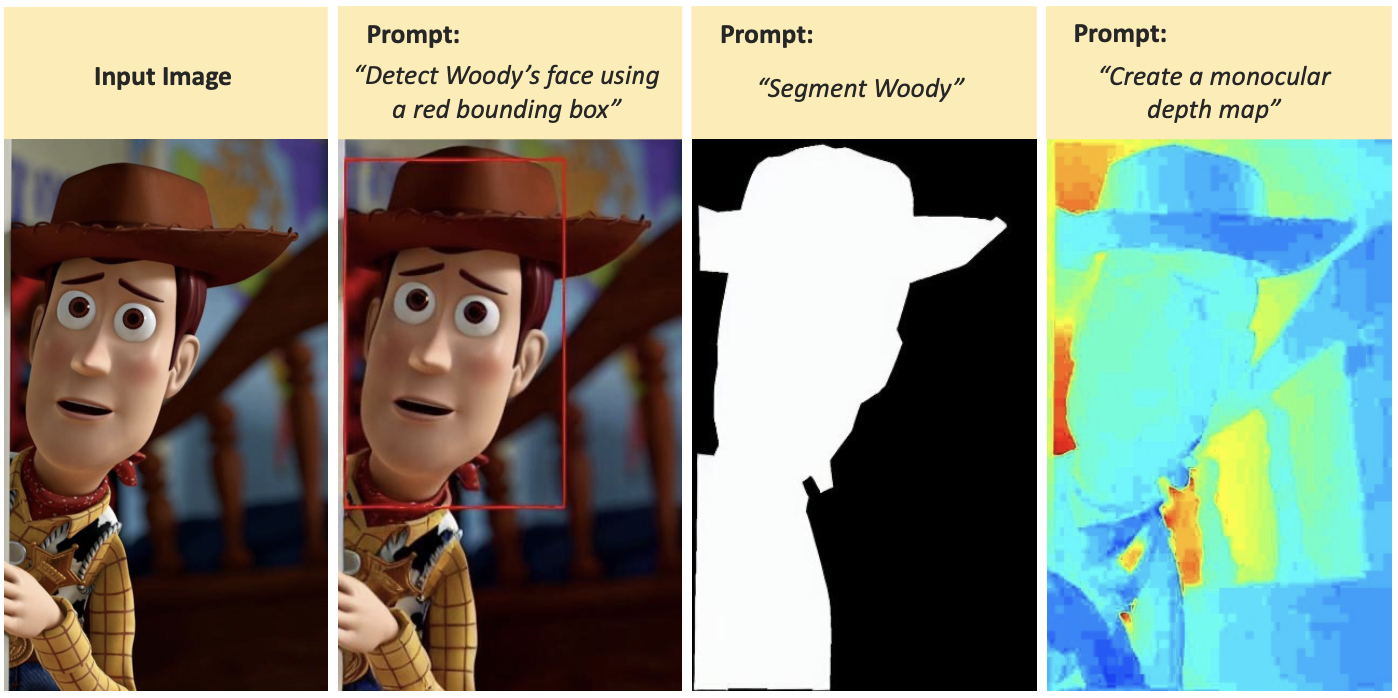

# InstructCV: Instruction-Tuned Text-to-Image Diffusion Models as Vision Generalists

GitHub: https://github.com/AlaaLab/InstructCV

[](https://imgse.com/i/pCVB5B8)

## Example

To use `InstructCV`, install `diffusers` using `main` for now. The pipeline will be available in the next release

```bash

pip install diffusers accelerate safetensors transformers

```

```python

import PIL

import requests

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline, EulerAncestralDiscreteScheduler

model_id = "yulu2/InstructCV"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16, safety_checker=None, variant="ema")

pipe.to("cuda")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

url = "put your url here"

def download_image(url):

image = PIL.Image.open(requests.get(url, stream=True).raw)

image = PIL.ImageOps.exif_transpose(image)

image = image.convert("RGB")

return image

image = download_image(URL)

seed = random.randint(0, 100000)

generator = torch.manual_seed(seed)

width, height = image.size

factor = 512 / max(width, height)

factor = math.ceil(min(width, height) * factor / 64) * 64 / min(width, height)

width = int((width * factor) // 64) * 64

height = int((height * factor) // 64) * 64

image = ImageOps.fit(image, (width, height), method=Image.Resampling.LANCZOS)

prompt = "Detect the person."

images = pipe(prompt, image=image, num_inference_steps=100, generator=generator).images[0]

images[0]

```

|

wyzhw/N_distilbert_twitterfin_padding10model

|

wyzhw

| 2024-02-19T11:09:35Z | 7 | 0 |

transformers

|

[

"transformers",

"safetensors",

"distilbert",

"text-classification",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2024-02-19T11:06:54Z |

---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

model-index:

- name: N_distilbert_twitterfin_padding10model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# N_distilbert_twitterfin_padding10model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 0.01

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 0.01 | 6 | 0.9726 | 0.6558 |

### Framework versions

- Transformers 4.37.2

- Pytorch 2.2.0

- Datasets 2.17.0

- Tokenizers 0.15.2

|

google-bert/bert-large-uncased-whole-word-masking-finetuned-squad

|

google-bert

| 2024-02-19T11:08:45Z | 167,804 | 173 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"question-answering",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-02T23:29:04Z |

---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

---

# BERT large model (uncased) whole word masking finetuned on SQuAD

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is uncased: it does not make a difference

between english and English.

Differently to other BERT models, this model was trained with a new technique: Whole Word Masking. In this case, all of the tokens corresponding to a word are masked at once. The overall masking rate remains the same.

The training is identical -- each masked WordPiece token is predicted independently.

After pre-training, this model was fine-tuned on the SQuAD dataset with one of our fine-tuning scripts. See below for more information regarding this fine-tuning.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the models concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

This model has the following configuration:

- 24-layer

- 1024 hidden dimension

- 16 attention heads

- 336M parameters.

## Intended uses & limitations

This model should be used as a question-answering model. You may use it in a question answering pipeline, or use it to output raw results given a query and a context. You may see other use cases in the [task summary](https://huggingface.co/transformers/task_summary.html#extractive-question-answering) of the transformers documentation.## Training data

The BERT model was pretrained on [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038

unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and

headers).

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence A [SEP] Sentence B [SEP]

```

With probability 0.5, sentence A and sentence B correspond to two consecutive sentences in the original corpus and in

the other cases, it's another random sentence in the corpus. Note that what is considered a sentence here is a

consecutive span of text usually longer than a single sentence. The only constrain is that the result with the two

"sentences" has a combined length of less than 512 tokens.

The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token (different) from the one they replace.

- In the 10% remaining cases, the masked tokens are left as is.

### Pretraining

The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size

of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer

used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,

learning rate warmup for 10,000 steps and linear decay of the learning rate after.

### Fine-tuning

After pre-training, this model was fine-tuned on the SQuAD dataset with one of our fine-tuning scripts. In order to reproduce the training, you may use the following command:

```

python -m torch.distributed.launch --nproc_per_node=8 ./examples/question-answering/run_qa.py \

--model_name_or_path bert-large-uncased-whole-word-masking \

--dataset_name squad \

--do_train \

--do_eval \

--learning_rate 3e-5 \

--num_train_epochs 2 \

--max_seq_length 384 \

--doc_stride 128 \

--output_dir ./examples/models/wwm_uncased_finetuned_squad/ \

--per_device_eval_batch_size=3 \

--per_device_train_batch_size=3 \

```

## Evaluation results

The results obtained are the following:

```

f1 = 93.15

exact_match = 86.91

```

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-1810-04805,

author = {Jacob Devlin and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language

Understanding},

journal = {CoRR},

volume = {abs/1810.04805},

year = {2018},

url = {http://arxiv.org/abs/1810.04805},

archivePrefix = {arXiv},

eprint = {1810.04805},

timestamp = {Tue, 30 Oct 2018 20:39:56 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

google-bert/bert-large-uncased-whole-word-masking

|

google-bert

| 2024-02-19T11:08:36Z | 19,357 | 19 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:04Z |

---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

---

# BERT large model (uncased) whole word masking

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is uncased: it does not make a difference

between english and English.

Differently to other BERT models, this model was trained with a new technique: Whole Word Masking. In this case, all of the tokens corresponding to a word are masked at once. The overall masking rate remains the same.

The training is identical -- each masked WordPiece token is predicted independently.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the models concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

This model has the following configuration:

- 24-layer

- 1024 hidden dimension

- 16 attention heads

- 336M parameters.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-uncased-whole-word-masking')

>>> unmasker("Hello I'm a [MASK] model.")

[

{

'sequence': "[CLS] hello i'm a fashion model. [SEP]",

'score': 0.15813860297203064,

'token': 4827,

'token_str': 'fashion'

}, {

'sequence': "[CLS] hello i'm a cover model. [SEP]",

'score': 0.10551052540540695,

'token': 3104,

'token_str': 'cover'

}, {

'sequence': "[CLS] hello i'm a male model. [SEP]",

'score': 0.08340442180633545,

'token': 3287,

'token_str': 'male'

}, {

'sequence': "[CLS] hello i'm a super model. [SEP]",

'score': 0.036381796002388,

'token': 3565,

'token_str': 'super'

}, {

'sequence': "[CLS] hello i'm a top model. [SEP]",

'score': 0.03609578311443329,

'token': 2327,

'token_str': 'top'

}

]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased-whole-word-masking')

model = BertModel.from_pretrained("bert-large-uncased-whole-word-masking")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased-whole-word-masking')

model = TFBertModel.from_pretrained("bert-large-uncased-whole-word-masking")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

### Limitations and bias

Even if the training data used for this model could be characterized as fairly neutral, this model can have biased

predictions:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-uncased-whole-word-masking')

>>> unmasker("The man worked as a [MASK].")

[

{

"sequence":"[CLS] the man worked as a waiter. [SEP]",

"score":0.09823174774646759,

"token":15610,

"token_str":"waiter"

},

{

"sequence":"[CLS] the man worked as a carpenter. [SEP]",

"score":0.08976428955793381,

"token":10533,

"token_str":"carpenter"

},

{

"sequence":"[CLS] the man worked as a mechanic. [SEP]",

"score":0.06550426036119461,

"token":15893,

"token_str":"mechanic"

},

{

"sequence":"[CLS] the man worked as a butcher. [SEP]",

"score":0.04142395779490471,

"token":14998,

"token_str":"butcher"

},

{

"sequence":"[CLS] the man worked as a barber. [SEP]",

"score":0.03680137172341347,

"token":13362,

"token_str":"barber"

}

]

>>> unmasker("The woman worked as a [MASK].")

[

{

"sequence":"[CLS] the woman worked as a waitress. [SEP]",

"score":0.2669651508331299,

"token":13877,

"token_str":"waitress"

},

{

"sequence":"[CLS] the woman worked as a maid. [SEP]",

"score":0.13054853677749634,

"token":10850,

"token_str":"maid"

},

{

"sequence":"[CLS] the woman worked as a nurse. [SEP]",

"score":0.07987703382968903,

"token":6821,

"token_str":"nurse"

},

{

"sequence":"[CLS] the woman worked as a prostitute. [SEP]",

"score":0.058545831590890884,

"token":19215,

"token_str":"prostitute"

},

{

"sequence":"[CLS] the woman worked as a cleaner. [SEP]",

"score":0.03834161534905434,

"token":20133,

"token_str":"cleaner"

}

]

```

This bias will also affect all fine-tuned versions of this model.

## Training data

The BERT model was pretrained on [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038

unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and

headers).

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence A [SEP] Sentence B [SEP]

```

With probability 0.5, sentence A and sentence B correspond to two consecutive sentences in the original corpus and in

the other cases, it's another random sentence in the corpus. Note that what is considered a sentence here is a

consecutive span of text usually longer than a single sentence. The only constrain is that the result with the two

"sentences" has a combined length of less than 512 tokens.

The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token (different) from the one they replace.

- In the 10% remaining cases, the masked tokens are left as is.

### Pretraining

The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size

of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer

used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,

learning rate warmup for 10,000 steps and linear decay of the learning rate after.

## Evaluation results

When fine-tuned on downstream tasks, this model achieves the following results:

Model | SQUAD 1.1 F1/EM | Multi NLI Accuracy

---------------------------------------- | :-------------: | :----------------:

BERT-Large, Uncased (Whole Word Masking) | 92.8/86.7 | 87.07

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-1810-04805,

author = {Jacob Devlin and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language

Understanding},

journal = {CoRR},

volume = {abs/1810.04805},

year = {2018},

url = {http://arxiv.org/abs/1810.04805},

archivePrefix = {arXiv},

eprint = {1810.04805},

timestamp = {Tue, 30 Oct 2018 20:39:56 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

google-bert/bert-large-uncased

|

google-bert

| 2024-02-19T11:06:54Z | 2,168,888 | 125 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:04Z |

---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

---

# BERT large model (uncased)

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the models concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

This model has the following configuration:

- 24-layer

- 1024 hidden dimension

- 16 attention heads

- 336M parameters.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-uncased')

>>> unmasker("Hello I'm a [MASK] model.")

[{'sequence': "[CLS] hello i'm a fashion model. [SEP]",

'score': 0.1886913776397705,

'token': 4827,

'token_str': 'fashion'},

{'sequence': "[CLS] hello i'm a professional model. [SEP]",

'score': 0.07157472521066666,

'token': 2658,

'token_str': 'professional'},

{'sequence': "[CLS] hello i'm a male model. [SEP]",

'score': 0.04053466394543648,

'token': 3287,

'token_str': 'male'},

{'sequence': "[CLS] hello i'm a role model. [SEP]",

'score': 0.03891477733850479,

'token': 2535,

'token_str': 'role'},

{'sequence': "[CLS] hello i'm a fitness model. [SEP]",

'score': 0.03038121573626995,

'token': 10516,

'token_str': 'fitness'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')

model = BertModel.from_pretrained("bert-large-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')

model = TFBertModel.from_pretrained("bert-large-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

### Limitations and bias

Even if the training data used for this model could be characterized as fairly neutral, this model can have biased

predictions:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-uncased')

>>> unmasker("The man worked as a [MASK].")

[{'sequence': '[CLS] the man worked as a bartender. [SEP]',

'score': 0.10426565259695053,

'token': 15812,

'token_str': 'bartender'},

{'sequence': '[CLS] the man worked as a waiter. [SEP]',

'score': 0.10232779383659363,

'token': 15610,

'token_str': 'waiter'},

{'sequence': '[CLS] the man worked as a mechanic. [SEP]',

'score': 0.06281787157058716,

'token': 15893,

'token_str': 'mechanic'},

{'sequence': '[CLS] the man worked as a lawyer. [SEP]',

'score': 0.050936125218868256,

'token': 5160,

'token_str': 'lawyer'},

{'sequence': '[CLS] the man worked as a carpenter. [SEP]',

'score': 0.041034240275621414,

'token': 10533,

'token_str': 'carpenter'}]

>>> unmasker("The woman worked as a [MASK].")

[{'sequence': '[CLS] the woman worked as a waitress. [SEP]',

'score': 0.28473711013793945,

'token': 13877,

'token_str': 'waitress'},

{'sequence': '[CLS] the woman worked as a nurse. [SEP]',

'score': 0.11336520314216614,

'token': 6821,

'token_str': 'nurse'},

{'sequence': '[CLS] the woman worked as a bartender. [SEP]',

'score': 0.09574324637651443,

'token': 15812,

'token_str': 'bartender'},

{'sequence': '[CLS] the woman worked as a maid. [SEP]',

'score': 0.06351090222597122,

'token': 10850,

'token_str': 'maid'},

{'sequence': '[CLS] the woman worked as a secretary. [SEP]',

'score': 0.048970773816108704,

'token': 3187,

'token_str': 'secretary'}]

```

This bias will also affect all fine-tuned versions of this model.

## Training data

The BERT model was pretrained on [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038

unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and

headers).

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence A [SEP] Sentence B [SEP]

```

With probability 0.5, sentence A and sentence B correspond to two consecutive sentences in the original corpus and in

the other cases, it's another random sentence in the corpus. Note that what is considered a sentence here is a

consecutive span of text usually longer than a single sentence. The only constrain is that the result with the two

"sentences" has a combined length of less than 512 tokens.

The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token (different) from the one they replace.

- In the 10% remaining cases, the masked tokens are left as is.

### Pretraining

The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size

of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer

used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,

learning rate warmup for 10,000 steps and linear decay of the learning rate after.

## Evaluation results

When fine-tuned on downstream tasks, this model achieves the following results:

Model | SQUAD 1.1 F1/EM | Multi NLI Accuracy

---------------------------------------- | :-------------: | :----------------:

BERT-Large, Uncased (Original) | 91.0/84.3 | 86.05

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-1810-04805,

author = {Jacob Devlin and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language

Understanding},

journal = {CoRR},

volume = {abs/1810.04805},

year = {2018},

url = {http://arxiv.org/abs/1810.04805},

archivePrefix = {arXiv},

eprint = {1810.04805},

timestamp = {Tue, 30 Oct 2018 20:39:56 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

google-bert/bert-large-cased

|

google-bert

| 2024-02-19T11:06:20Z | 105,940 | 32 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:04Z |

---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

---

# BERT large model (cased)

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is cased: it makes a difference

between english and English.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the models concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

This model has the following configuration:

- 24-layer

- 1024 hidden dimension

- 16 attention heads

- 336M parameters.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-large-cased')

>>> unmasker("Hello I'm a [MASK] model.")

[

{