modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-02 06:30:45

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 533

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-02 06:30:39

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Dnyaneshwar/hing-mbert-finetuned-code-mixed-DS

|

Dnyaneshwar

| 2022-09-13T14:19:07Z | 102 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-13T13:46:40Z |

---

license: cc-by-4.0

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: hing-mbert-finetuned-code-mixed-DS

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hing-mbert-finetuned-code-mixed-DS

This model is a fine-tuned version of [l3cube-pune/hing-mbert](https://huggingface.co/l3cube-pune/hing-mbert) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0518

- Accuracy: 0.7545

- Precision: 0.7041

- Recall: 0.7076

- F1: 0.7053

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2.7277800745684633e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.8338 | 1.0 | 497 | 0.6922 | 0.7163 | 0.6697 | 0.6930 | 0.6686 |

| 0.5744 | 2.0 | 994 | 0.7872 | 0.7324 | 0.6786 | 0.6967 | 0.6845 |

| 0.36 | 3.0 | 1491 | 1.0518 | 0.7545 | 0.7041 | 0.7076 | 0.7053 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

SiddharthaM/bert-engonly-sentiment-test

|

SiddharthaM

| 2022-09-13T14:16:50Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-13T13:54:20Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

model-index:

- name: bert-engonly-sentiment-test

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: train

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.8966666666666666

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-engonly-sentiment-test

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4479

- Accuracy: 0.8967

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

vamsibanda/sbert-all-MiniLM-L12-with-pooler

|

vamsibanda

| 2022-09-13T14:02:58Z | 4 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"onnx",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"en",

"license:apache-2.0",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-07-23T04:04:24Z |

---

pipeline_tag: sentence-similarity

language: en

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- onnx

---

# ONNX convert all-MiniLM-L12-v2

## Conversion of [sentence-transformers/all-MiniLM-L12-v2](https://huggingface.co/sentence-transformers/all-MiniLM-L12-v2)

This is a [sentence-transformers](https://www.SBERT.net) ONNX model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search. This custom model takes `last_hidden_state` and `pooler_output` whereas the sentence-transformers exported with default ONNX config only contains `last_hidden_state` as output.

## Usage (HuggingFace Optimum)

Using this model becomes easy when you have [optimum](https://github.com/huggingface/optimum) installed:

```

python -m pip install optimum

```

Then you can use the model like this:

```python

from optimum.onnxruntime.modeling_ort import ORTModelForCustomTasks

model = ORTModelForCustomTasks.from_pretrained("vamsibanda/sbert-all-MiniLM-L12-with-pooler")

tokenizer = AutoTokenizer.from_pretrained("vamsibanda/sbert-all-MiniLM-L12-with-pooler")

inputs = tokenizer("I love burritos!", return_tensors="pt")

pred = model(**inputs)

embedding = pred['pooler_output']

```

|

vamsibanda/sbert-all-roberta-large-v1-with-pooler

|

vamsibanda

| 2022-09-13T14:00:40Z | 3 | 1 |

sentence-transformers

|

[

"sentence-transformers",

"onnx",

"roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-07-19T00:43:14Z |

---

pipeline_tag: sentence-similarity

language: en

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- onnx

---

# ONNX convert all-roberta-large-v1

## Conversion of [sentence-transformers/all-roberta-large-v1](https://huggingface.co/sentence-transformers/all-roberta-large-v1)

## Usage (HuggingFace Optimum)

Using this model becomes easy when you have [optimum](https://github.com/huggingface/optimum) installed:

```

python -m pip install optimum

```

Then you can use the model like this:

```python

from optimum.onnxruntime.modeling_ort import ORTModelForCustomTasks

model = ORTModelForCustomTasks.from_pretrained("vamsibanda/sbert-all-roberta-large-v1-with-pooler")

tokenizer = AutoTokenizer.from_pretrained("vamsibanda/sbert-all-roberta-large-v1-with-pooler")

inputs = tokenizer("I love burritos!", return_tensors="pt")

pred = model(**inputs)

embedding = pred['pooler_output']

```

|

sd-concepts-library/a-tale-of-two-empires

|

sd-concepts-library

| 2022-09-13T13:35:14Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T13:19:38Z |

---

license: mit

---

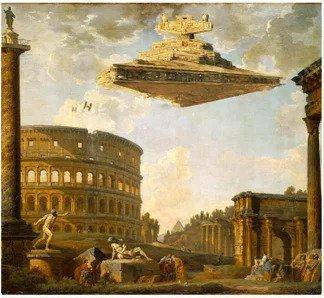

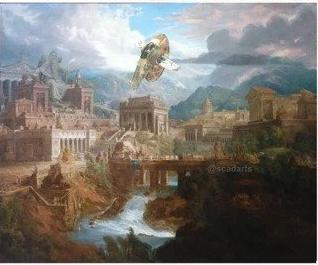

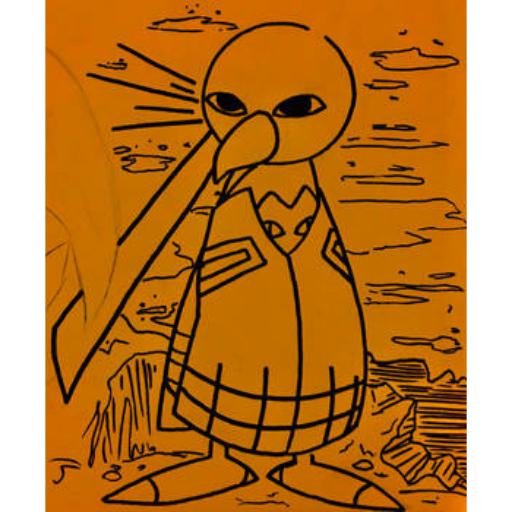

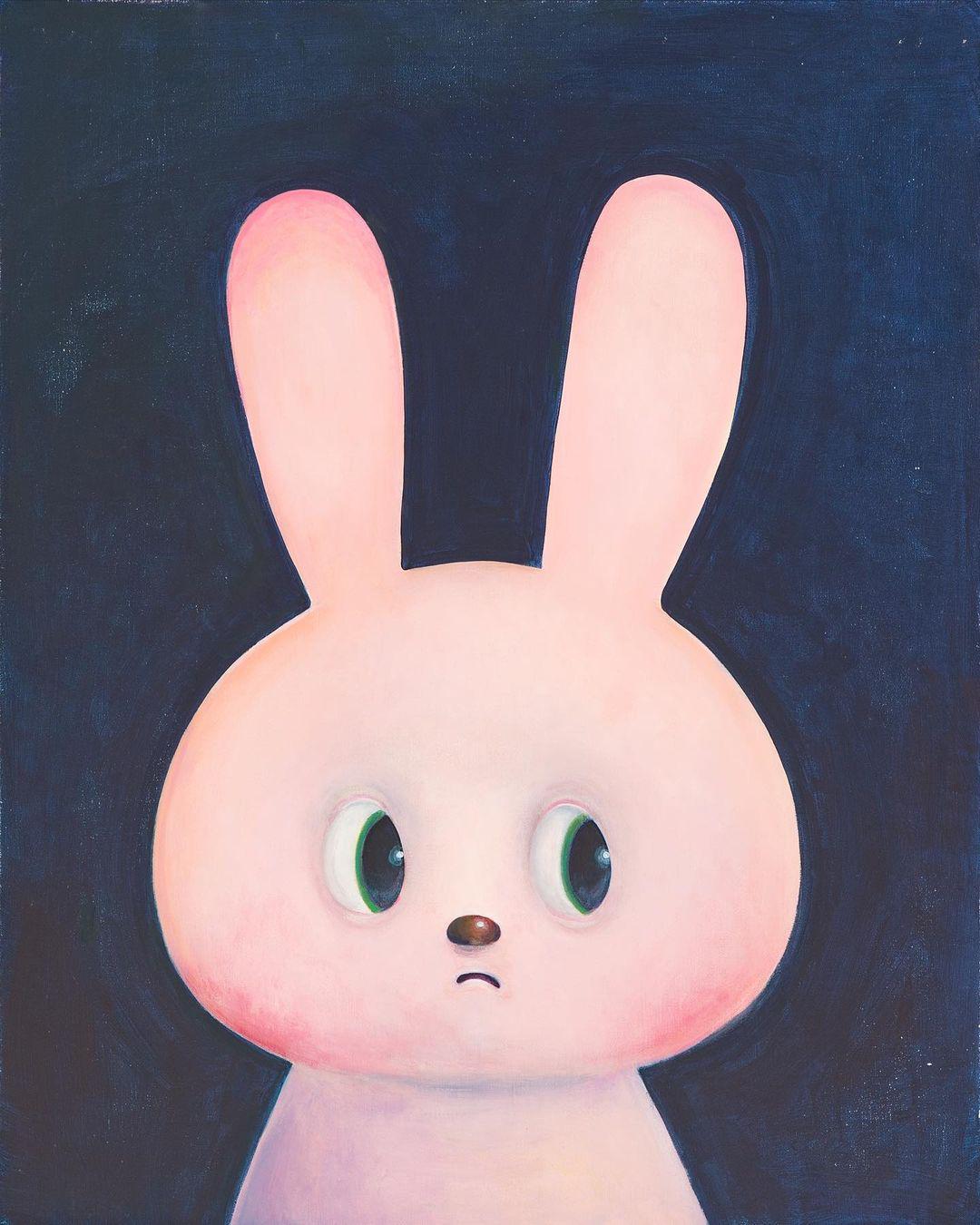

### A Tale of Two Empires on Stable Diffusion

This is the `<two-empires>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

Source: Reddit [u/mandal0re](https://www.reddit.com/r/StarWars/comments/kg6ovv/i_like_to_photoshop_old_paintings_heres_my_a_tale/)

|

DelinteNicolas/SDG_classifier_v0.0.4

|

DelinteNicolas

| 2022-09-13T13:13:45Z | 165 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"license:gpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-12T14:34:34Z |

---

license: gpl-3.0

---

Fined-tuned BERT trained on 6500+ labeled data, including control sentences from SuperGLUE.

|

MJ199999/gpt3_model

|

MJ199999

| 2022-09-13T12:42:18Z | 9 | 1 |

transformers

|

[

"transformers",

"tf",

"gpt2",

"text-generation",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-09T05:19:15Z |

---

tags:

- generated_from_keras_callback

model-index:

- name: gpt3_model

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# gpt3_model

This model is a fine-tuned version of [MJ199999/gpt3_model](https://huggingface.co/MJ199999/gpt3_model) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.4905

- Train Lr: 0.0009999999

- Epoch: 199

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adagrad', 'learning_rate': 0.0009999999, 'decay': 0.0, 'initial_accumulator_value': 0.1, 'epsilon': 1e-07}

- training_precision: float32

### Training results

| Train Loss | Train Lr | Epoch |

|:----------:|:------------:|:-----:|

| 5.1583 | 0.01 | 0 |

| 3.9477 | 0.01 | 1 |

| 2.9332 | 0.01 | 2 |

| 2.1581 | 0.01 | 3 |

| 1.6918 | 0.01 | 4 |

| 1.3929 | 0.01 | 5 |

| 1.2062 | 0.01 | 6 |

| 1.0955 | 0.01 | 7 |

| 1.0068 | 0.01 | 8 |

| 0.9528 | 0.01 | 9 |

| 0.9051 | 0.01 | 10 |

| 0.8710 | 0.01 | 11 |

| 0.8564 | 0.01 | 12 |

| 0.8094 | 0.01 | 13 |

| 0.8143 | 0.01 | 14 |

| 0.7853 | 0.01 | 15 |

| 0.7625 | 0.01 | 16 |

| 0.7508 | 0.01 | 17 |

| 0.7449 | 0.01 | 18 |

| 0.7319 | 0.01 | 19 |

| 0.7144 | 0.01 | 20 |

| 0.7045 | 0.01 | 21 |

| 0.7029 | 0.01 | 22 |

| 0.6937 | 0.01 | 23 |

| 0.6898 | 0.01 | 24 |

| 0.6745 | 0.01 | 25 |

| 0.6767 | 0.01 | 26 |

| 0.6692 | 0.01 | 27 |

| 0.6604 | 0.01 | 28 |

| 0.6573 | 0.01 | 29 |

| 0.6524 | 0.01 | 30 |

| 0.6508 | 0.01 | 31 |

| 0.6443 | 0.01 | 32 |

| 0.6452 | 0.01 | 33 |

| 0.6371 | 0.01 | 34 |

| 0.6362 | 0.01 | 35 |

| 0.6304 | 0.01 | 36 |

| 0.6317 | 0.01 | 37 |

| 0.6270 | 0.01 | 38 |

| 0.6257 | 0.01 | 39 |

| 0.6208 | 0.01 | 40 |

| 0.6227 | 0.01 | 41 |

| 0.6154 | 0.01 | 42 |

| 0.6126 | 0.01 | 43 |

| 0.6149 | 0.01 | 44 |

| 0.6075 | 0.01 | 45 |

| 0.6084 | 0.01 | 46 |

| 0.6078 | 0.01 | 47 |

| 0.6057 | 0.01 | 48 |

| 0.6033 | 0.01 | 49 |

| 0.6040 | 0.01 | 50 |

| 0.5989 | 0.01 | 51 |

| 0.5967 | 0.01 | 52 |

| 0.5952 | 0.01 | 53 |

| 0.5911 | 0.01 | 54 |

| 0.5904 | 0.01 | 55 |

| 0.5888 | 0.01 | 56 |

| 0.5886 | 0.01 | 57 |

| 0.5883 | 0.01 | 58 |

| 0.5838 | 0.01 | 59 |

| 0.5856 | 0.01 | 60 |

| 0.5850 | 0.01 | 61 |

| 0.5801 | 0.01 | 62 |

| 0.5821 | 0.01 | 63 |

| 0.5781 | 0.01 | 64 |

| 0.5786 | 0.01 | 65 |

| 0.5835 | 0.01 | 66 |

| 0.5808 | 0.01 | 67 |

| 0.5754 | 0.01 | 68 |

| 0.5742 | 0.01 | 69 |

| 0.5733 | 0.01 | 70 |

| 0.5700 | 0.01 | 71 |

| 0.5738 | 0.01 | 72 |

| 0.5678 | 0.01 | 73 |

| 0.5695 | 0.01 | 74 |

| 0.5684 | 0.01 | 75 |

| 0.5696 | 0.01 | 76 |

| 0.5688 | 0.01 | 77 |

| 0.5648 | 0.01 | 78 |

| 0.5592 | 0.01 | 79 |

| 0.5622 | 0.01 | 80 |

| 0.5660 | 0.01 | 81 |

| 0.5636 | 0.01 | 82 |

| 0.5602 | 0.01 | 83 |

| 0.5613 | 0.01 | 84 |

| 0.5608 | 0.01 | 85 |

| 0.5589 | 0.01 | 86 |

| 0.5580 | 0.01 | 87 |

| 0.5566 | 0.01 | 88 |

| 0.5531 | 0.01 | 89 |

| 0.5571 | 0.01 | 90 |

| 0.5541 | 0.01 | 91 |

| 0.5576 | 0.01 | 92 |

| 0.5560 | 0.01 | 93 |

| 0.5517 | 0.01 | 94 |

| 0.5508 | 0.01 | 95 |

| 0.5554 | 0.01 | 96 |

| 0.5539 | 0.01 | 97 |

| 0.5493 | 0.01 | 98 |

| 0.5499 | 0.01 | 99 |

| 0.4999 | 0.0009999999 | 100 |

| 0.4981 | 0.0009999999 | 101 |

| 0.4983 | 0.0009999999 | 102 |

| 0.4984 | 0.0009999999 | 103 |

| 0.4974 | 0.0009999999 | 104 |

| 0.4957 | 0.0009999999 | 105 |

| 0.4966 | 0.0009999999 | 106 |

| 0.4975 | 0.0009999999 | 107 |

| 0.4962 | 0.0009999999 | 108 |

| 0.4932 | 0.0009999999 | 109 |

| 0.4983 | 0.0009999999 | 110 |

| 0.4937 | 0.0009999999 | 111 |

| 0.4926 | 0.0009999999 | 112 |

| 0.4944 | 0.0009999999 | 113 |

| 0.4947 | 0.0009999999 | 114 |

| 0.4953 | 0.0009999999 | 115 |

| 0.4934 | 0.0009999999 | 116 |

| 0.4929 | 0.0009999999 | 117 |

| 0.4925 | 0.0009999999 | 118 |

| 0.4948 | 0.0009999999 | 119 |

| 0.4947 | 0.0009999999 | 120 |

| 0.4936 | 0.0009999999 | 121 |

| 0.4909 | 0.0009999999 | 122 |

| 0.4960 | 0.0009999999 | 123 |

| 0.4952 | 0.0009999999 | 124 |

| 0.4923 | 0.0009999999 | 125 |

| 0.4930 | 0.0009999999 | 126 |

| 0.4942 | 0.0009999999 | 127 |

| 0.4927 | 0.0009999999 | 128 |

| 0.4917 | 0.0009999999 | 129 |

| 0.4926 | 0.0009999999 | 130 |

| 0.4927 | 0.0009999999 | 131 |

| 0.4932 | 0.0009999999 | 132 |

| 0.4925 | 0.0009999999 | 133 |

| 0.4928 | 0.0009999999 | 134 |

| 0.4936 | 0.0009999999 | 135 |

| 0.4908 | 0.0009999999 | 136 |

| 0.4936 | 0.0009999999 | 137 |

| 0.4916 | 0.0009999999 | 138 |

| 0.4906 | 0.0009999999 | 139 |

| 0.4904 | 0.0009999999 | 140 |

| 0.4920 | 0.0009999999 | 141 |

| 0.4924 | 0.0009999999 | 142 |

| 0.4902 | 0.0009999999 | 143 |

| 0.4903 | 0.0009999999 | 144 |

| 0.4903 | 0.0009999999 | 145 |

| 0.4924 | 0.0009999999 | 146 |

| 0.4889 | 0.0009999999 | 147 |

| 0.4896 | 0.0009999999 | 148 |

| 0.4919 | 0.0009999999 | 149 |

| 0.4896 | 0.0009999999 | 150 |

| 0.4906 | 0.0009999999 | 151 |

| 0.4923 | 0.0009999999 | 152 |

| 0.4899 | 0.0009999999 | 153 |

| 0.4925 | 0.0009999999 | 154 |

| 0.4901 | 0.0009999999 | 155 |

| 0.4910 | 0.0009999999 | 156 |

| 0.4904 | 0.0009999999 | 157 |

| 0.4912 | 0.0009999999 | 158 |

| 0.4937 | 0.0009999999 | 159 |

| 0.4894 | 0.0009999999 | 160 |

| 0.4913 | 0.0009999999 | 161 |

| 0.4899 | 0.0009999999 | 162 |

| 0.4894 | 0.0009999999 | 163 |

| 0.4904 | 0.0009999999 | 164 |

| 0.4900 | 0.0009999999 | 165 |

| 0.4890 | 0.0009999999 | 166 |

| 0.4919 | 0.0009999999 | 167 |

| 0.4909 | 0.0009999999 | 168 |

| 0.4891 | 0.0009999999 | 169 |

| 0.4900 | 0.0009999999 | 170 |

| 0.4910 | 0.0009999999 | 171 |

| 0.4901 | 0.0009999999 | 172 |

| 0.4914 | 0.0009999999 | 173 |

| 0.4913 | 0.0009999999 | 174 |

| 0.4897 | 0.0009999999 | 175 |

| 0.4892 | 0.0009999999 | 176 |

| 0.4929 | 0.0009999999 | 177 |

| 0.4881 | 0.0009999999 | 178 |

| 0.4920 | 0.0009999999 | 179 |

| 0.4888 | 0.0009999999 | 180 |

| 0.4901 | 0.0009999999 | 181 |

| 0.4875 | 0.0009999999 | 182 |

| 0.4930 | 0.0009999999 | 183 |

| 0.4867 | 0.0009999999 | 184 |

| 0.4890 | 0.0009999999 | 185 |

| 0.4898 | 0.0009999999 | 186 |

| 0.4880 | 0.0009999999 | 187 |

| 0.4899 | 0.0009999999 | 188 |

| 0.4881 | 0.0009999999 | 189 |

| 0.4897 | 0.0009999999 | 190 |

| 0.4876 | 0.0009999999 | 191 |

| 0.4873 | 0.0009999999 | 192 |

| 0.4901 | 0.0009999999 | 193 |

| 0.4898 | 0.0009999999 | 194 |

| 0.4898 | 0.0009999999 | 195 |

| 0.4861 | 0.0009999999 | 196 |

| 0.4878 | 0.0009999999 | 197 |

| 0.4880 | 0.0009999999 | 198 |

| 0.4905 | 0.0009999999 | 199 |

### Framework versions

- Transformers 4.21.3

- TensorFlow 2.8.2

- Tokenizers 0.12.1

|

Padomin/t5-base-TEDxJP-0front-1body-7rear

|

Padomin

| 2022-09-13T12:15:31Z | 12 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-13T02:28:03Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-0front-1body-7rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-0front-1body-7rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4666

- Wer: 0.1780

- Mer: 0.1718

- Wil: 0.2607

- Wip: 0.7393

- Hits: 55410

- Substitutions: 6566

- Deletions: 2611

- Insertions: 2321

- Cer: 0.1388

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.6424 | 1.0 | 1457 | 0.4944 | 0.1980 | 0.1893 | 0.2798 | 0.7202 | 54775 | 6748 | 3064 | 2975 | 0.1603 |

| 0.5444 | 2.0 | 2914 | 0.4496 | 0.1799 | 0.1740 | 0.2619 | 0.7381 | 55175 | 6480 | 2932 | 2207 | 0.1400 |

| 0.4975 | 3.0 | 4371 | 0.4451 | 0.1773 | 0.1713 | 0.2586 | 0.7414 | 55399 | 6429 | 2759 | 2266 | 0.1397 |

| 0.4312 | 4.0 | 5828 | 0.4417 | 0.1758 | 0.1701 | 0.2572 | 0.7428 | 55408 | 6407 | 2772 | 2178 | 0.1378 |

| 0.3846 | 5.0 | 7285 | 0.4445 | 0.1753 | 0.1696 | 0.2573 | 0.7427 | 55409 | 6453 | 2725 | 2142 | 0.1367 |

| 0.3501 | 6.0 | 8742 | 0.4482 | 0.1792 | 0.1727 | 0.2609 | 0.7391 | 55453 | 6522 | 2612 | 2439 | 0.1401 |

| 0.381 | 7.0 | 10199 | 0.4531 | 0.1770 | 0.1711 | 0.2592 | 0.7408 | 55380 | 6498 | 2709 | 2223 | 0.1378 |

| 0.313 | 8.0 | 11656 | 0.4585 | 0.1775 | 0.1716 | 0.2599 | 0.7401 | 55371 | 6516 | 2700 | 2250 | 0.1383 |

| 0.2976 | 9.0 | 13113 | 0.4646 | 0.1778 | 0.1717 | 0.2603 | 0.7397 | 55387 | 6537 | 2663 | 2284 | 0.1402 |

| 0.3152 | 10.0 | 14570 | 0.4666 | 0.1780 | 0.1718 | 0.2607 | 0.7393 | 55410 | 6566 | 2611 | 2321 | 0.1388 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Padomin/t5-base-TEDxJP-0front-1body-6rear

|

Padomin

| 2022-09-13T11:59:57Z | 30 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-13T02:30:38Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-0front-1body-6rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-0front-1body-6rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4688

- Wer: 0.1755

- Mer: 0.1695

- Wil: 0.2577

- Wip: 0.7423

- Hits: 55504

- Substitutions: 6505

- Deletions: 2578

- Insertions: 2249

- Cer: 0.1373

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.6426 | 1.0 | 1457 | 0.4936 | 0.2128 | 0.2007 | 0.2903 | 0.7097 | 54742 | 6734 | 3111 | 3899 | 0.1791 |

| 0.5519 | 2.0 | 2914 | 0.4535 | 0.1970 | 0.1876 | 0.2747 | 0.7253 | 55096 | 6467 | 3024 | 3233 | 0.1567 |

| 0.5007 | 3.0 | 4371 | 0.4465 | 0.1819 | 0.1751 | 0.2628 | 0.7372 | 55359 | 6481 | 2747 | 2522 | 0.1435 |

| 0.4374 | 4.0 | 5828 | 0.4417 | 0.1761 | 0.1703 | 0.2582 | 0.7418 | 55399 | 6471 | 2717 | 2184 | 0.1373 |

| 0.3831 | 5.0 | 7285 | 0.4459 | 0.1755 | 0.1697 | 0.2570 | 0.7430 | 55465 | 6429 | 2693 | 2214 | 0.1383 |

| 0.352 | 6.0 | 8742 | 0.4496 | 0.1755 | 0.1697 | 0.2573 | 0.7427 | 55452 | 6450 | 2685 | 2202 | 0.1374 |

| 0.3955 | 7.0 | 10199 | 0.4527 | 0.1766 | 0.1707 | 0.2580 | 0.7420 | 55429 | 6429 | 2729 | 2251 | 0.1392 |

| 0.3132 | 8.0 | 11656 | 0.4629 | 0.1764 | 0.1703 | 0.2580 | 0.7420 | 55522 | 6472 | 2593 | 2329 | 0.1380 |

| 0.3116 | 9.0 | 13113 | 0.4652 | 0.1755 | 0.1695 | 0.2577 | 0.7423 | 55517 | 6505 | 2565 | 2264 | 0.1371 |

| 0.313 | 10.0 | 14570 | 0.4688 | 0.1755 | 0.1695 | 0.2577 | 0.7423 | 55504 | 6505 | 2578 | 2249 | 0.1373 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

IIIT-L/hing-mbert-finetuned-TRAC-DS

|

IIIT-L

| 2022-09-13T11:50:24Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-13T11:15:49Z |

---

license: cc-by-4.0

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: hing-mbert-finetuned-TRAC-DS

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hing-mbert-finetuned-TRAC-DS

This model is a fine-tuned version of [l3cube-pune/hing-mbert](https://huggingface.co/l3cube-pune/hing-mbert) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3580

- Accuracy: 0.7018

- Precision: 0.6759

- Recall: 0.6722

- F1: 0.6737

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2.824279936868144e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.7111 | 2.0 | 1224 | 0.7772 | 0.6683 | 0.6695 | 0.6793 | 0.6558 |

| 0.3026 | 3.99 | 2448 | 1.3580 | 0.7018 | 0.6759 | 0.6722 | 0.6737 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.1+cu111

- Datasets 2.3.2

- Tokenizers 0.12.1

|

sd-concepts-library/zaney

|

sd-concepts-library

| 2022-09-13T10:39:57Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T10:39:54Z |

---

license: mit

---

### zaney on Stable Diffusion

This is the `<zaney>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

SetFit/MiniLM_L3_clinc_oos_plus_distilled

|

SetFit

| 2022-09-13T10:39:03Z | 5 | 5 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-09-13T10:38:58Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# SetFit/MiniLM_L3_clinc_oos_plus_distilled

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('SetFit/MiniLM_L3_clinc_oos_plus_distilled')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('SetFit/MiniLM_L3_clinc_oos_plus_distilled')

model = AutoModel.from_pretrained('SetFit/MiniLM_L3_clinc_oos_plus_distilled')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=SetFit/MiniLM_L3_clinc_oos_plus_distilled)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 190625 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 3,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

sd-concepts-library/bada-club

|

sd-concepts-library

| 2022-09-13T09:35:45Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T09:35:32Z |

---

license: mit

---

### bada club on Stable Diffusion

This is the `<bada-club>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/dullboy-caricature

|

sd-concepts-library

| 2022-09-13T08:14:36Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T08:14:29Z |

---

license: mit

---

### Dullboy Caricature on Stable Diffusion

This is the `<dullboy-cari>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Sebabrata/lmv2-g-passport-197-doc-09-13

|

Sebabrata

| 2022-09-13T04:54:38Z | 90 | 3 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"layoutlmv2",

"token-classification",

"generated_from_trainer",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-13T04:10:33Z |

---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

model-index:

- name: lmv2-g-passport-197-doc-09-13

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# lmv2-g-passport-197-doc-09-13

This model is a fine-tuned version of [microsoft/layoutlmv2-base-uncased](https://huggingface.co/microsoft/layoutlmv2-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0438

- Country Code Precision: 0.9412

- Country Code Recall: 0.9697

- Country Code F1: 0.9552

- Country Code Number: 33

- Date Of Birth Precision: 0.9714

- Date Of Birth Recall: 1.0

- Date Of Birth F1: 0.9855

- Date Of Birth Number: 34

- Date Of Expiry Precision: 1.0

- Date Of Expiry Recall: 1.0

- Date Of Expiry F1: 1.0

- Date Of Expiry Number: 36

- Date Of Issue Precision: 1.0

- Date Of Issue Recall: 1.0

- Date Of Issue F1: 1.0

- Date Of Issue Number: 36

- Given Name Precision: 0.9444

- Given Name Recall: 1.0

- Given Name F1: 0.9714

- Given Name Number: 34

- Nationality Precision: 0.9714

- Nationality Recall: 1.0

- Nationality F1: 0.9855

- Nationality Number: 34

- Passport No Precision: 0.9118

- Passport No Recall: 0.9688

- Passport No F1: 0.9394

- Passport No Number: 32

- Place Of Birth Precision: 1.0

- Place Of Birth Recall: 0.9730

- Place Of Birth F1: 0.9863

- Place Of Birth Number: 37

- Place Of Issue Precision: 1.0

- Place Of Issue Recall: 0.9722

- Place Of Issue F1: 0.9859

- Place Of Issue Number: 36

- Sex Precision: 0.9655

- Sex Recall: 0.9333

- Sex F1: 0.9492

- Sex Number: 30

- Surname Precision: 0.9259

- Surname Recall: 1.0

- Surname F1: 0.9615

- Surname Number: 25

- Type Precision: 1.0

- Type Recall: 1.0

- Type F1: 1.0

- Type Number: 27

- Overall Precision: 0.97

- Overall Recall: 0.9848

- Overall F1: 0.9773

- Overall Accuracy: 0.9941

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step | Validation Loss | Country Code Precision | Country Code Recall | Country Code F1 | Country Code Number | Date Of Birth Precision | Date Of Birth Recall | Date Of Birth F1 | Date Of Birth Number | Date Of Expiry Precision | Date Of Expiry Recall | Date Of Expiry F1 | Date Of Expiry Number | Date Of Issue Precision | Date Of Issue Recall | Date Of Issue F1 | Date Of Issue Number | Given Name Precision | Given Name Recall | Given Name F1 | Given Name Number | Nationality Precision | Nationality Recall | Nationality F1 | Nationality Number | Passport No Precision | Passport No Recall | Passport No F1 | Passport No Number | Place Of Birth Precision | Place Of Birth Recall | Place Of Birth F1 | Place Of Birth Number | Place Of Issue Precision | Place Of Issue Recall | Place Of Issue F1 | Place Of Issue Number | Sex Precision | Sex Recall | Sex F1 | Sex Number | Surname Precision | Surname Recall | Surname F1 | Surname Number | Type Precision | Type Recall | Type F1 | Type Number | Overall Precision | Overall Recall | Overall F1 | Overall Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:----------------------:|:-------------------:|:---------------:|:-------------------:|:-----------------------:|:--------------------:|:----------------:|:--------------------:|:------------------------:|:---------------------:|:-----------------:|:---------------------:|:-----------------------:|:--------------------:|:----------------:|:--------------------:|:--------------------:|:-----------------:|:-------------:|:-----------------:|:---------------------:|:------------------:|:--------------:|:------------------:|:---------------------:|:------------------:|:--------------:|:------------------:|:------------------------:|:---------------------:|:-----------------:|:---------------------:|:------------------------:|:---------------------:|:-----------------:|:---------------------:|:-------------:|:----------:|:------:|:----------:|:-----------------:|:--------------:|:----------:|:--------------:|:--------------:|:-----------:|:-------:|:-----------:|:-----------------:|:--------------:|:----------:|:----------------:|

| 1.6757 | 1.0 | 157 | 1.2569 | 0.0 | 0.0 | 0.0 | 33 | 0.0 | 0.0 | 0.0 | 34 | 0.2466 | 1.0 | 0.3956 | 36 | 0.0 | 0.0 | 0.0 | 36 | 0.0 | 0.0 | 0.0 | 34 | 0.0 | 0.0 | 0.0 | 34 | 0.0 | 0.0 | 0.0 | 32 | 0.0 | 0.0 | 0.0 | 37 | 0.0 | 0.0 | 0.0 | 36 | 0.0 | 0.0 | 0.0 | 30 | 0.0 | 0.0 | 0.0 | 25 | 0.0 | 0.0 | 0.0 | 27 | 0.2466 | 0.0914 | 0.1333 | 0.8446 |

| 0.9214 | 2.0 | 314 | 0.5683 | 0.9394 | 0.9394 | 0.9394 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.5625 | 0.5294 | 0.5455 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.6098 | 0.7812 | 0.6849 | 32 | 0.9394 | 0.8378 | 0.8857 | 37 | 0.8293 | 0.9444 | 0.8831 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.6129 | 0.76 | 0.6786 | 25 | 1.0 | 0.8889 | 0.9412 | 27 | 0.8642 | 0.8883 | 0.8761 | 0.9777 |

| 0.4452 | 3.0 | 471 | 0.3266 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.5556 | 0.4412 | 0.4918 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.625 | 0.7812 | 0.6944 | 32 | 1.0 | 0.8108 | 0.8955 | 37 | 0.7556 | 0.9444 | 0.8395 | 36 | 0.9655 | 0.9333 | 0.9492 | 30 | 0.5556 | 0.8 | 0.6557 | 25 | 1.0 | 0.7037 | 0.8261 | 27 | 0.8532 | 0.8706 | 0.8618 | 0.9784 |

| 0.2823 | 4.0 | 628 | 0.2215 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.75 | 0.8824 | 0.8108 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.8378 | 0.9118 | 37 | 0.9459 | 0.9722 | 0.9589 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.75 | 0.96 | 0.8421 | 25 | 1.0 | 0.9630 | 0.9811 | 27 | 0.9286 | 0.9569 | 0.9425 | 0.9885 |

| 0.2092 | 5.0 | 785 | 0.1633 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.8889 | 0.9412 | 0.9143 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.8857 | 0.9688 | 0.9254 | 32 | 1.0 | 0.8649 | 0.9275 | 37 | 0.8974 | 0.9722 | 0.9333 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.8889 | 0.96 | 0.9231 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9525 | 0.9670 | 0.9597 | 0.9918 |

| 0.1593 | 6.0 | 942 | 0.1331 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 0.9730 | 1.0 | 0.9863 | 36 | 0.8857 | 0.9118 | 0.8986 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9722 | 0.9459 | 0.9589 | 37 | 0.9722 | 0.9722 | 0.9722 | 36 | 1.0 | 0.9 | 0.9474 | 30 | 0.8571 | 0.96 | 0.9057 | 25 | 1.0 | 0.9630 | 0.9811 | 27 | 0.9549 | 0.9670 | 0.9609 | 0.9908 |

| 0.1288 | 7.0 | 1099 | 0.1064 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9444 | 1.0 | 0.9714 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.92 | 0.92 | 0.92 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9723 | 0.9797 | 0.9760 | 0.9941 |

| 0.1035 | 8.0 | 1256 | 0.1043 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9706 | 0.9706 | 0.9706 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9231 | 0.9730 | 0.9474 | 37 | 0.75 | 1.0 | 0.8571 | 36 | 0.9032 | 0.9333 | 0.9180 | 30 | 0.6486 | 0.96 | 0.7742 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9085 | 0.9822 | 0.9439 | 0.9856 |

| 0.0843 | 9.0 | 1413 | 0.0823 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9143 | 0.9412 | 0.9275 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9394 | 0.9688 | 0.9538 | 32 | 0.9032 | 0.7568 | 0.8235 | 37 | 0.9211 | 0.9722 | 0.9459 | 36 | 0.9655 | 0.9333 | 0.9492 | 30 | 0.7059 | 0.96 | 0.8136 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9355 | 0.9569 | 0.9460 | 0.9905 |

| 0.0733 | 10.0 | 1570 | 0.0738 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9459 | 0.9459 | 0.9459 | 37 | 1.0 | 0.9444 | 0.9714 | 36 | 0.8485 | 0.9333 | 0.8889 | 30 | 0.8333 | 1.0 | 0.9091 | 25 | 0.9643 | 1.0 | 0.9818 | 27 | 0.9484 | 0.9797 | 0.9638 | 0.9911 |

| 0.0614 | 11.0 | 1727 | 0.0661 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9459 | 0.9459 | 0.9459 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9655 | 0.9333 | 0.9492 | 30 | 0.9231 | 0.96 | 0.9412 | 25 | 1.0 | 0.9630 | 0.9811 | 27 | 0.9673 | 0.9772 | 0.9722 | 0.9934 |

| 0.0548 | 12.0 | 1884 | 0.0637 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 0.9730 | 1.0 | 0.9863 | 36 | 0.9167 | 0.9706 | 0.9429 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9459 | 0.9459 | 0.9459 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.875 | 0.9333 | 0.9032 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 0.9643 | 1.0 | 0.9818 | 27 | 0.9507 | 0.9797 | 0.965 | 0.9921 |

| 0.0515 | 13.0 | 2041 | 0.0562 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9730 | 0.9730 | 0.9730 | 37 | 1.0 | 1.0 | 1.0 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.8621 | 1.0 | 0.9259 | 25 | 0.9643 | 1.0 | 0.9818 | 27 | 0.9605 | 0.9873 | 0.9737 | 0.9931 |

| 0.0431 | 14.0 | 2198 | 0.0513 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9444 | 1.0 | 0.9714 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.9231 | 0.96 | 0.9412 | 25 | 1.0 | 0.9630 | 0.9811 | 27 | 0.9724 | 0.9822 | 0.9773 | 0.9944 |

| 0.0413 | 15.0 | 2355 | 0.0582 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9706 | 0.9706 | 0.9706 | 34 | 0.9730 | 1.0 | 0.9863 | 36 | 0.9730 | 1.0 | 0.9863 | 36 | 0.9429 | 0.9706 | 0.9565 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 1.0 | 1.0 | 36 | 0.9655 | 0.9333 | 0.9492 | 30 | 0.8929 | 1.0 | 0.9434 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9627 | 0.9822 | 0.9724 | 0.9934 |

| 0.035 | 16.0 | 2512 | 0.0556 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 0.9722 | 0.9859 | 36 | 0.8857 | 0.9118 | 0.8986 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9730 | 0.9730 | 0.9730 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.8621 | 1.0 | 0.9259 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9552 | 0.9746 | 0.9648 | 0.9915 |

| 0.0316 | 17.0 | 2669 | 0.0517 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9167 | 0.9706 | 0.9429 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.875 | 0.9333 | 0.9032 | 30 | 0.8929 | 1.0 | 0.9434 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9579 | 0.9822 | 0.9699 | 0.9928 |

| 0.027 | 18.0 | 2826 | 0.0502 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9730 | 1.0 | 0.9863 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9444 | 1.0 | 0.9714 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9032 | 0.9333 | 0.9180 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9628 | 0.9848 | 0.9737 | 0.9931 |

| 0.026 | 19.0 | 2983 | 0.0481 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9189 | 1.0 | 0.9577 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 1.0 | 1.0 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.8333 | 1.0 | 0.9091 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9581 | 0.9873 | 0.9725 | 0.9928 |

| 0.026 | 20.0 | 3140 | 0.0652 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9730 | 1.0 | 0.9863 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.8611 | 0.9688 | 0.9118 | 32 | 0.9730 | 0.9730 | 0.9730 | 37 | 0.9730 | 1.0 | 0.9863 | 36 | 0.8235 | 0.9333 | 0.8750 | 30 | 0.8333 | 1.0 | 0.9091 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9419 | 0.9873 | 0.9641 | 0.9882 |

| 0.0311 | 21.0 | 3297 | 0.0438 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9444 | 1.0 | 0.9714 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9655 | 0.9333 | 0.9492 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.97 | 0.9848 | 0.9773 | 0.9941 |

| 0.0216 | 22.0 | 3454 | 0.0454 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9706 | 0.9706 | 0.9706 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9699 | 0.9822 | 0.9760 | 0.9941 |

| 0.0196 | 23.0 | 3611 | 0.0510 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.8718 | 0.9189 | 0.8947 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9655 | 0.9333 | 0.9492 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9602 | 0.9797 | 0.9698 | 0.9934 |

| 0.0176 | 24.0 | 3768 | 0.0457 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9706 | 0.9706 | 0.9706 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 1.0 | 1.0 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.8929 | 1.0 | 0.9434 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9676 | 0.9848 | 0.9761 | 0.9938 |

| 0.0141 | 25.0 | 3925 | 0.0516 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9722 | 0.9459 | 0.9589 | 37 | 0.9730 | 1.0 | 0.9863 | 36 | 0.875 | 0.9333 | 0.9032 | 30 | 0.9231 | 0.96 | 0.9412 | 25 | 0.9643 | 1.0 | 0.9818 | 27 | 0.9579 | 0.9822 | 0.9699 | 0.9928 |

| 0.0129 | 26.0 | 4082 | 0.0508 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9730 | 1.0 | 0.9863 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 1.0 | 1.0 | 36 | 0.875 | 0.9333 | 0.9032 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9629 | 0.9873 | 0.9749 | 0.9934 |

| 0.0125 | 27.0 | 4239 | 0.0455 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.9259 | 1.0 | 0.9615 | 25 | 0.8710 | 1.0 | 0.9310 | 27 | 0.9652 | 0.9848 | 0.9749 | 0.9934 |

| 0.0131 | 28.0 | 4396 | 0.0452 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 0.9722 | 0.9859 | 36 | 0.9429 | 0.9706 | 0.9565 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 1.0 | 0.9730 | 0.9863 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.9231 | 0.96 | 0.9412 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9722 | 0.9772 | 0.9747 | 0.9941 |

| 0.0112 | 29.0 | 4553 | 0.0465 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9459 | 0.9459 | 0.9459 | 37 | 0.9722 | 0.9722 | 0.9722 | 36 | 0.9333 | 0.9333 | 0.9333 | 30 | 0.9583 | 0.92 | 0.9388 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9649 | 0.9772 | 0.9710 | 0.9931 |

| 0.0152 | 30.0 | 4710 | 0.0510 | 0.9412 | 0.9697 | 0.9552 | 33 | 0.9714 | 1.0 | 0.9855 | 34 | 1.0 | 1.0 | 1.0 | 36 | 1.0 | 1.0 | 1.0 | 36 | 0.8857 | 0.9118 | 0.8986 | 34 | 0.9714 | 1.0 | 0.9855 | 34 | 0.9118 | 0.9688 | 0.9394 | 32 | 0.9730 | 0.9730 | 0.9730 | 37 | 1.0 | 0.9722 | 0.9859 | 36 | 1.0 | 0.9333 | 0.9655 | 30 | 0.9231 | 0.96 | 0.9412 | 25 | 1.0 | 1.0 | 1.0 | 27 | 0.9648 | 0.9746 | 0.9697 | 0.9931 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

Padomin/t5-base-TEDxJP-0front-1body-8rear

|

Padomin

| 2022-09-13T04:49:39Z | 24 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-12T18:14:02Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-0front-1body-8rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-0front-1body-8rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4672

- Wer: 0.1759

- Mer: 0.1698

- Wil: 0.2574

- Wip: 0.7426

- Hits: 55537

- Substitutions: 6457

- Deletions: 2593

- Insertions: 2312

- Cer: 0.1383

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.6417 | 1.0 | 1457 | 0.4928 | 0.2086 | 0.1973 | 0.2873 | 0.7127 | 54805 | 6751 | 3031 | 3693 | 0.1746 |

| 0.5435 | 2.0 | 2914 | 0.4511 | 0.1814 | 0.1751 | 0.2634 | 0.7366 | 55192 | 6518 | 2877 | 2322 | 0.1452 |

| 0.4914 | 3.0 | 4371 | 0.4424 | 0.1762 | 0.1704 | 0.2572 | 0.7428 | 55389 | 6383 | 2815 | 2180 | 0.1390 |

| 0.427 | 4.0 | 5828 | 0.4388 | 0.1751 | 0.1695 | 0.2569 | 0.7431 | 55408 | 6431 | 2748 | 2129 | 0.1366 |

| 0.3762 | 5.0 | 7285 | 0.4465 | 0.1747 | 0.1689 | 0.2561 | 0.7439 | 55533 | 6424 | 2630 | 2230 | 0.1361 |

| 0.3562 | 6.0 | 8742 | 0.4505 | 0.1761 | 0.1700 | 0.2581 | 0.7419 | 55558 | 6507 | 2522 | 2348 | 0.1402 |

| 0.3884 | 7.0 | 10199 | 0.4550 | 0.1750 | 0.1691 | 0.2564 | 0.7436 | 55548 | 6439 | 2600 | 2264 | 0.1364 |

| 0.3144 | 8.0 | 11656 | 0.4616 | 0.1760 | 0.1698 | 0.2572 | 0.7428 | 55571 | 6447 | 2569 | 2352 | 0.1373 |

| 0.3075 | 9.0 | 13113 | 0.4660 | 0.1761 | 0.1700 | 0.2572 | 0.7428 | 55547 | 6431 | 2609 | 2336 | 0.1400 |

| 0.3152 | 10.0 | 14570 | 0.4672 | 0.1759 | 0.1698 | 0.2574 | 0.7426 | 55537 | 6457 | 2593 | 2312 | 0.1383 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Padomin/t5-base-TEDxJP-0front-1body-9rear

|

Padomin

| 2022-09-13T04:02:42Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-12T16:56:47Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-0front-1body-9rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-0front-1body-9rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4673

- Wer: 0.1766

- Mer: 0.1707

- Wil: 0.2594

- Wip: 0.7406

- Hits: 55410

- Substitutions: 6552

- Deletions: 2625

- Insertions: 2229

- Cer: 0.1386

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.641 | 1.0 | 1457 | 0.4913 | 0.2084 | 0.1972 | 0.2875 | 0.7125 | 54788 | 6785 | 3014 | 3658 | 0.1743 |

| 0.5415 | 2.0 | 2914 | 0.4483 | 0.1818 | 0.1759 | 0.2643 | 0.7357 | 55033 | 6514 | 3040 | 2190 | 0.1447 |

| 0.4835 | 3.0 | 4371 | 0.4427 | 0.1785 | 0.1722 | 0.2595 | 0.7405 | 55442 | 6443 | 2702 | 2386 | 0.1402 |

| 0.4267 | 4.0 | 5828 | 0.4376 | 0.1769 | 0.1711 | 0.2587 | 0.7413 | 55339 | 6446 | 2802 | 2177 | 0.1399 |

| 0.3752 | 5.0 | 7285 | 0.4414 | 0.1756 | 0.1698 | 0.2571 | 0.7429 | 55467 | 6432 | 2688 | 2223 | 0.1374 |

| 0.3471 | 6.0 | 8742 | 0.4497 | 0.1761 | 0.1704 | 0.2585 | 0.7415 | 55379 | 6494 | 2714 | 2166 | 0.1380 |

| 0.3841 | 7.0 | 10199 | 0.4535 | 0.1769 | 0.1710 | 0.2589 | 0.7411 | 55383 | 6482 | 2722 | 2220 | 0.1394 |

| 0.3139 | 8.0 | 11656 | 0.4604 | 0.1753 | 0.1696 | 0.2577 | 0.7423 | 55462 | 6502 | 2623 | 2199 | 0.1367 |

| 0.3012 | 9.0 | 13113 | 0.4628 | 0.1766 | 0.1708 | 0.2597 | 0.7403 | 55391 | 6571 | 2625 | 2210 | 0.1388 |

| 0.3087 | 10.0 | 14570 | 0.4673 | 0.1766 | 0.1707 | 0.2594 | 0.7406 | 55410 | 6552 | 2625 | 2229 | 0.1386 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/tubby

|

sd-concepts-library

| 2022-09-13T03:01:07Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T03:01:00Z |

---

license: mit

---

### tubby on Stable Diffusion

This is the `<tubby>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/irasutoya

|

sd-concepts-library

| 2022-09-13T02:20:17Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T02:20:14Z |

---

license: mit

---

### irasutoya on Stable Diffusion

This is the `<irasutoya>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/bad_Hub_Hugh

|

sd-concepts-library

| 2022-09-13T02:13:39Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T02:13:33Z |

---

license: mit

---

### Hub Hugh on Stable Diffusion

This is the `<HubHugh>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/zoroark

|

sd-concepts-library

| 2022-09-13T01:42:13Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T01:42:00Z |

---

license: mit

---

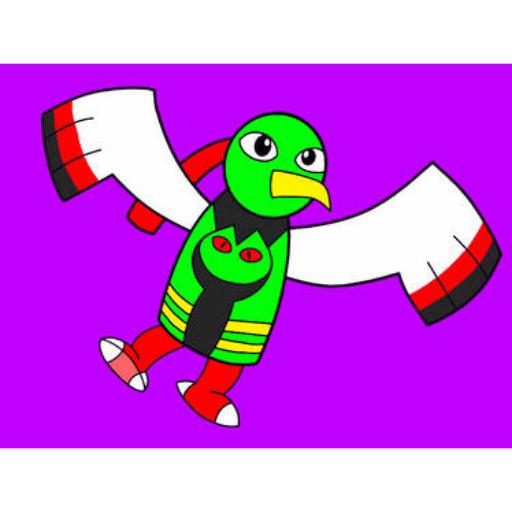

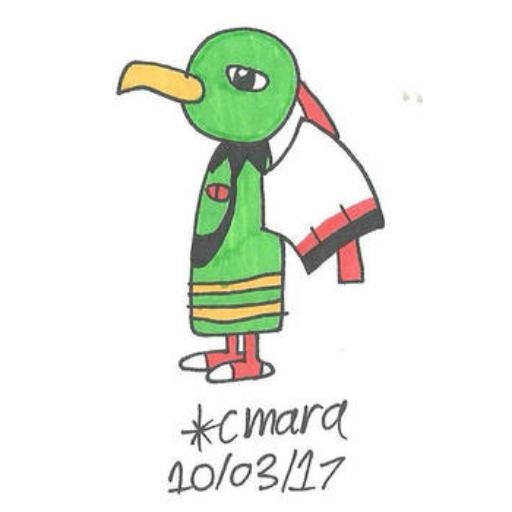

### zoroark on Stable Diffusion

This is the `<zoroark>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/centaur

|

sd-concepts-library

| 2022-09-13T01:41:40Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T01:41:35Z |

---

license: mit

---

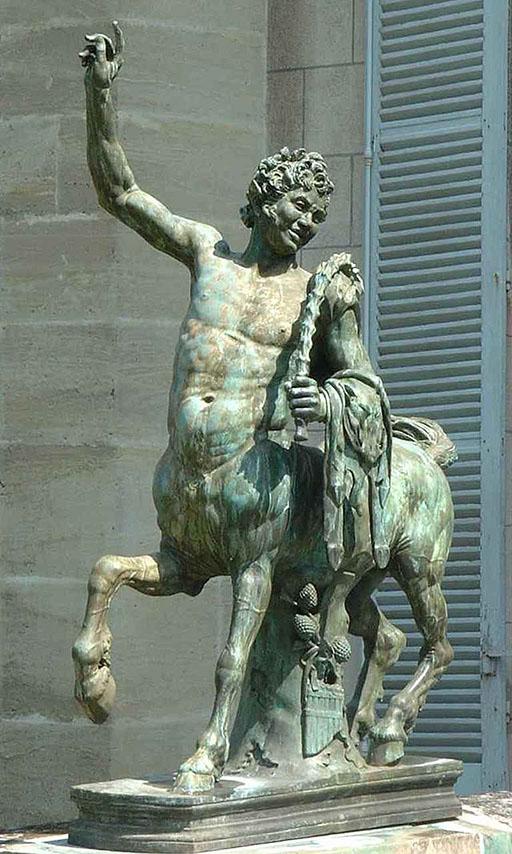

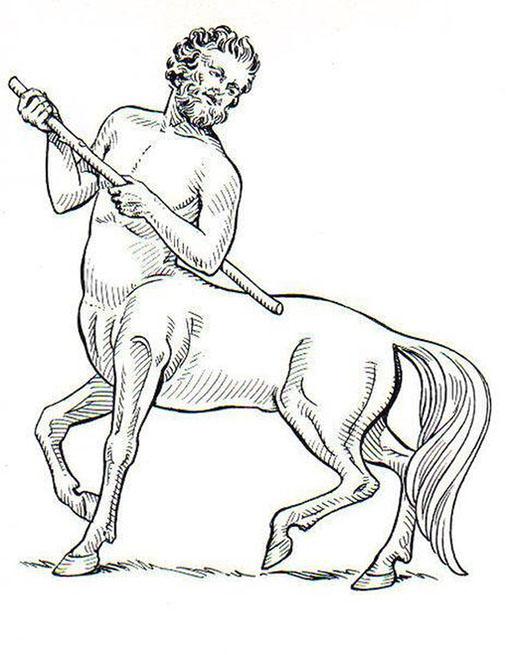

### Centaur on Stable Diffusion

This is the `<centaur>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/illustration-style

|

sd-concepts-library

| 2022-09-13T01:38:47Z | 0 | 25 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T01:38:43Z |

---

license: mit

---

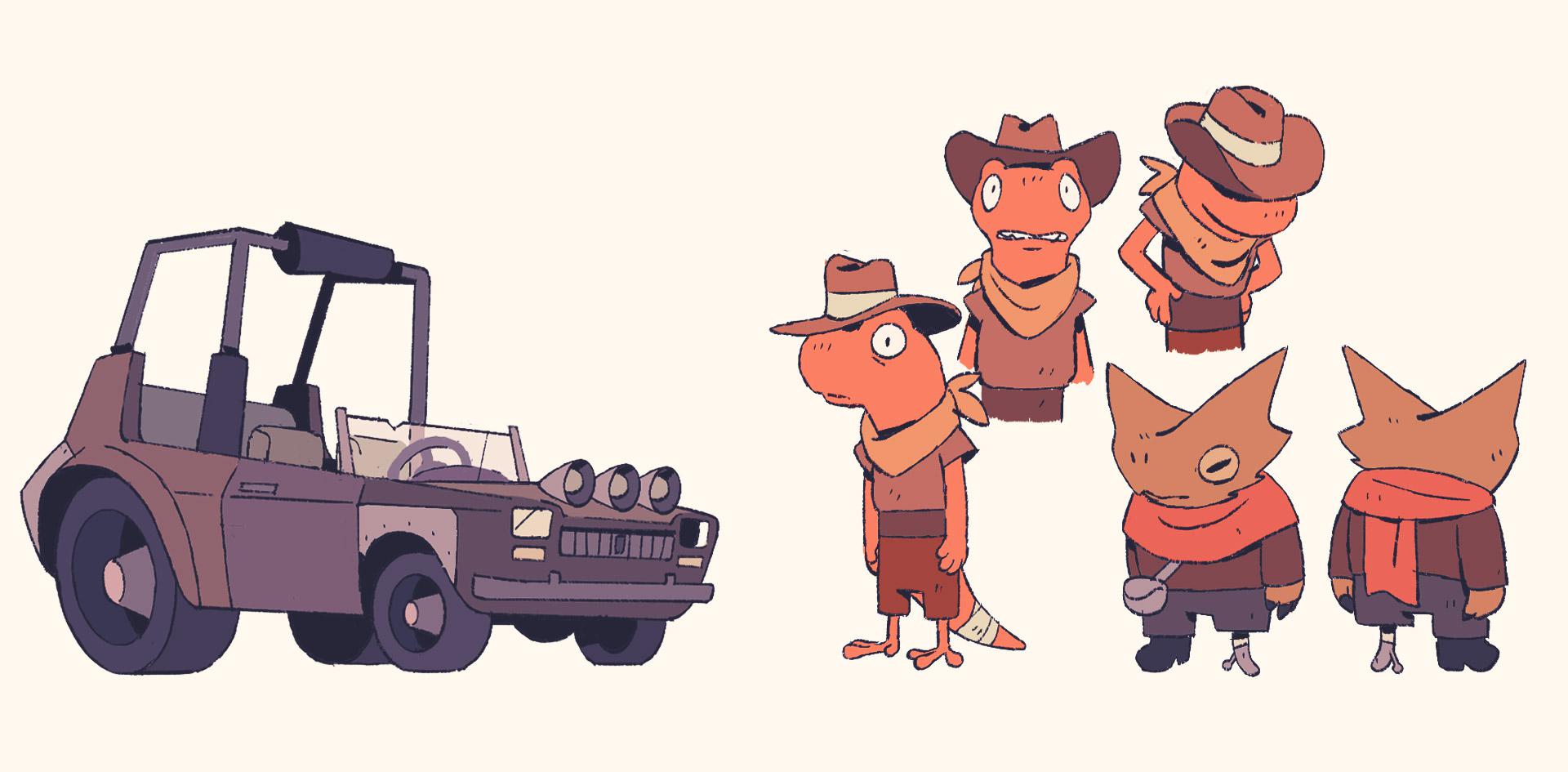

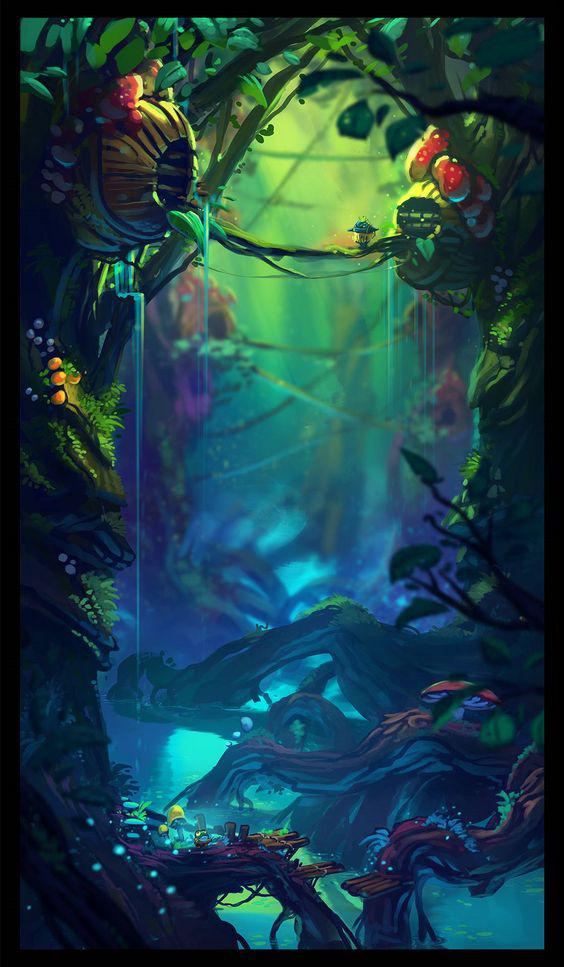

### Illustration style on Stable Diffusion

This is the `<illustration-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

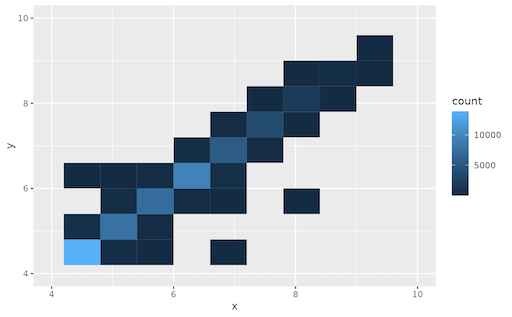

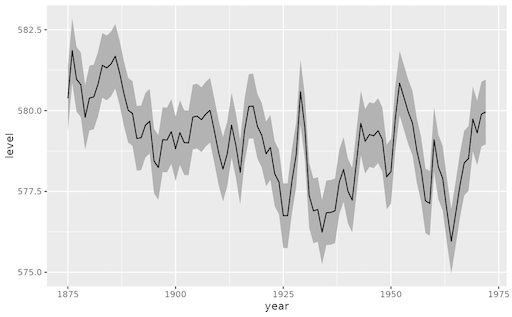

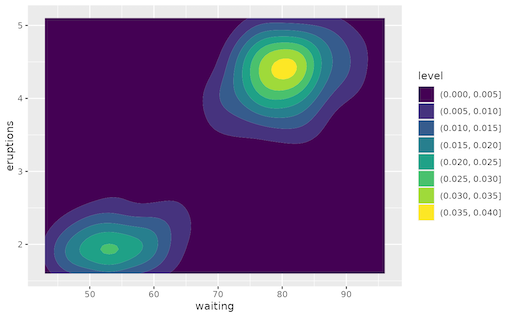

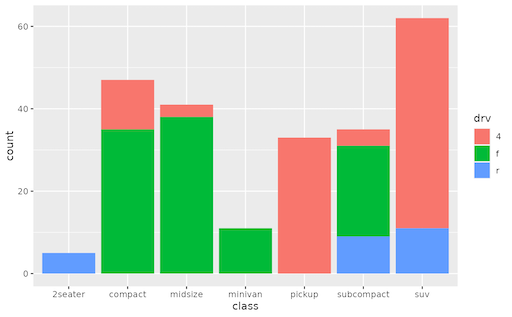

sd-concepts-library/ggplot2

|

sd-concepts-library

| 2022-09-13T00:00:14Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T00:00:10Z |

---

license: mit

---

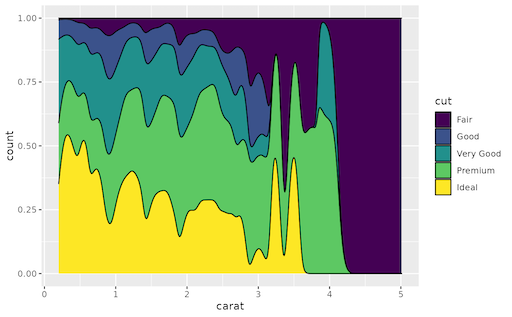

### ggplot2 on Stable Diffusion

This is the `<ggplot2>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/metagabe

|

sd-concepts-library

| 2022-09-12T23:56:54Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-12T23:56:50Z |

---

license: mit

---

### metagabe on Stable Diffusion

This is the `<metagabe>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

YilinWang42/autotrain-trial-run-1444253725

|

YilinWang42

| 2022-09-12T23:54:52Z | 100 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"token-classification",

"unk",

"dataset:YilinWang42/autotrain-data-trial-run",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-12T23:53:31Z |

---

tags:

- autotrain

- token-classification

language:

- unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- YilinWang42/autotrain-data-trial-run

co2_eq_emissions:

emissions: 0.00977392698077684

---

# Model Trained Using AutoTrain

- Problem type: Entity Extraction

- Model ID: 1444253725

- CO2 Emissions (in grams): 0.0098

## Validation Metrics

- Loss: 0.082

- Accuracy: 0.980

- Precision: 0.743

- Recall: 0.778

- F1: 0.760

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/YilinWang42/autotrain-trial-run-1444253725

```

Or Python API:

```

from transformers import AutoModelForTokenClassification, AutoTokenizer

model = AutoModelForTokenClassification.from_pretrained("YilinWang42/autotrain-trial-run-1444253725", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("YilinWang42/autotrain-trial-run-1444253725", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

Padomin/t5-base-TEDxJP-9front-1body-0rear

|

Padomin

| 2022-09-12T21:46:07Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-12T10:24:05Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-9front-1body-0rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-9front-1body-0rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4576

- Wer: 0.1728

- Mer: 0.1669

- Wil: 0.2543

- Wip: 0.7457

- Hits: 55705

- Substitutions: 6444

- Deletions: 2438

- Insertions: 2281

- Cer: 0.1351

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.649 | 1.0 | 1457 | 0.4844 | 0.2290 | 0.2126 | 0.3015 | 0.6985 | 54758 | 6748 | 3081 | 4959 | 0.2080 |

| 0.5319 | 2.0 | 2914 | 0.4385 | 0.1804 | 0.1741 | 0.2614 | 0.7386 | 55298 | 6437 | 2852 | 2364 | 0.1465 |

| 0.4819 | 3.0 | 4371 | 0.4338 | 0.1760 | 0.1698 | 0.2569 | 0.7431 | 55558 | 6419 | 2610 | 2336 | 0.1389 |

| 0.4307 | 4.0 | 5828 | 0.4328 | 0.1759 | 0.1696 | 0.2569 | 0.7431 | 55649 | 6454 | 2484 | 2424 | 0.1390 |

| 0.3735 | 5.0 | 7285 | 0.4331 | 0.1740 | 0.1680 | 0.2549 | 0.7451 | 55652 | 6398 | 2537 | 2306 | 0.1367 |

| 0.3495 | 6.0 | 8742 | 0.4380 | 0.1740 | 0.1681 | 0.2552 | 0.7448 | 55619 | 6420 | 2548 | 2267 | 0.1356 |

| 0.3679 | 7.0 | 10199 | 0.4437 | 0.1741 | 0.1682 | 0.2556 | 0.7444 | 55621 | 6441 | 2525 | 2281 | 0.1354 |

| 0.3035 | 8.0 | 11656 | 0.4494 | 0.1727 | 0.1669 | 0.2542 | 0.7458 | 55672 | 6433 | 2482 | 2237 | 0.1350 |

| 0.3041 | 9.0 | 13113 | 0.4541 | 0.1736 | 0.1677 | 0.2550 | 0.7450 | 55674 | 6441 | 2472 | 2302 | 0.1383 |

| 0.2948 | 10.0 | 14570 | 0.4576 | 0.1728 | 0.1669 | 0.2543 | 0.7457 | 55705 | 6444 | 2438 | 2281 | 0.1351 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/type

|

sd-concepts-library

| 2022-09-12T21:18:54Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-12T21:18:51Z |

---

license: mit

---

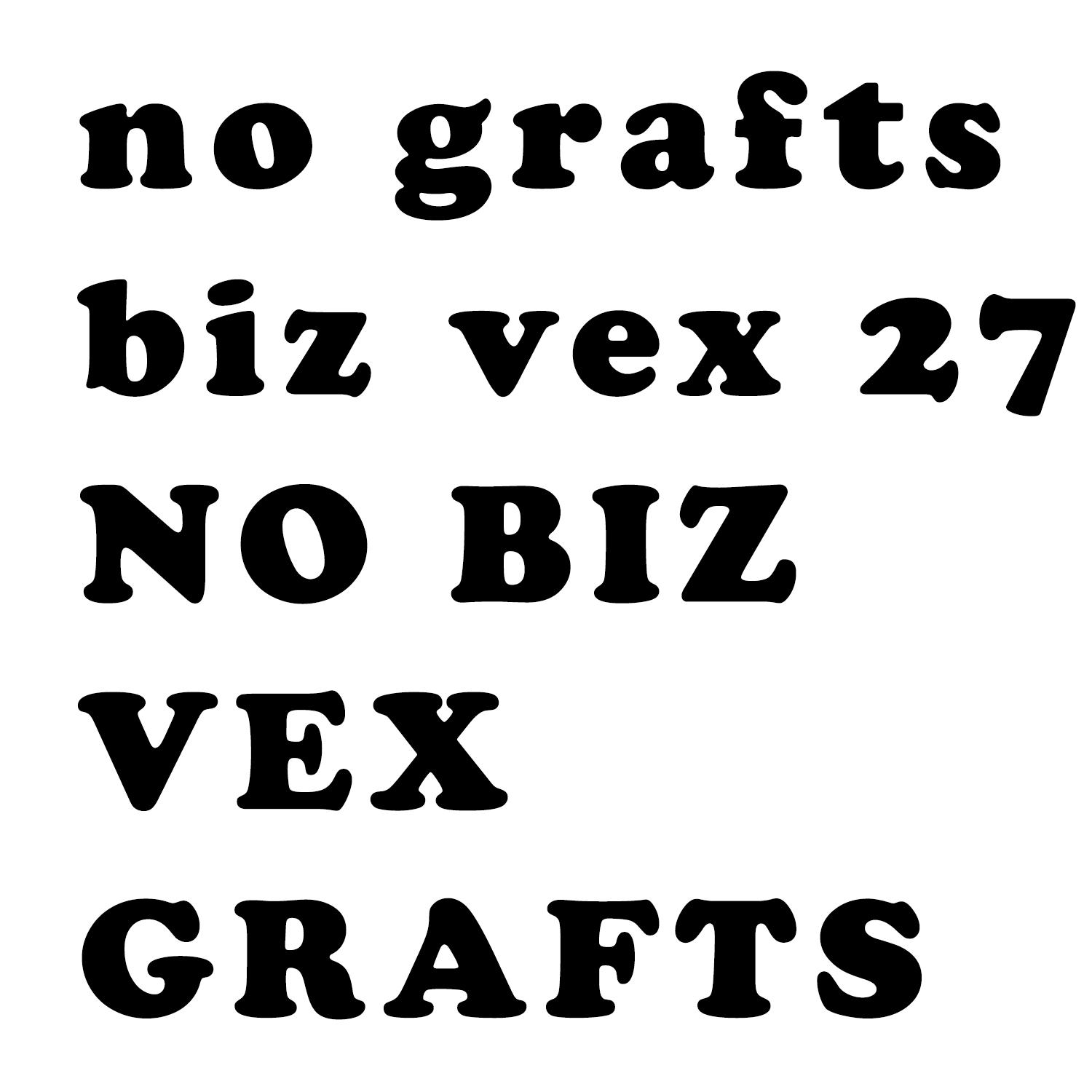

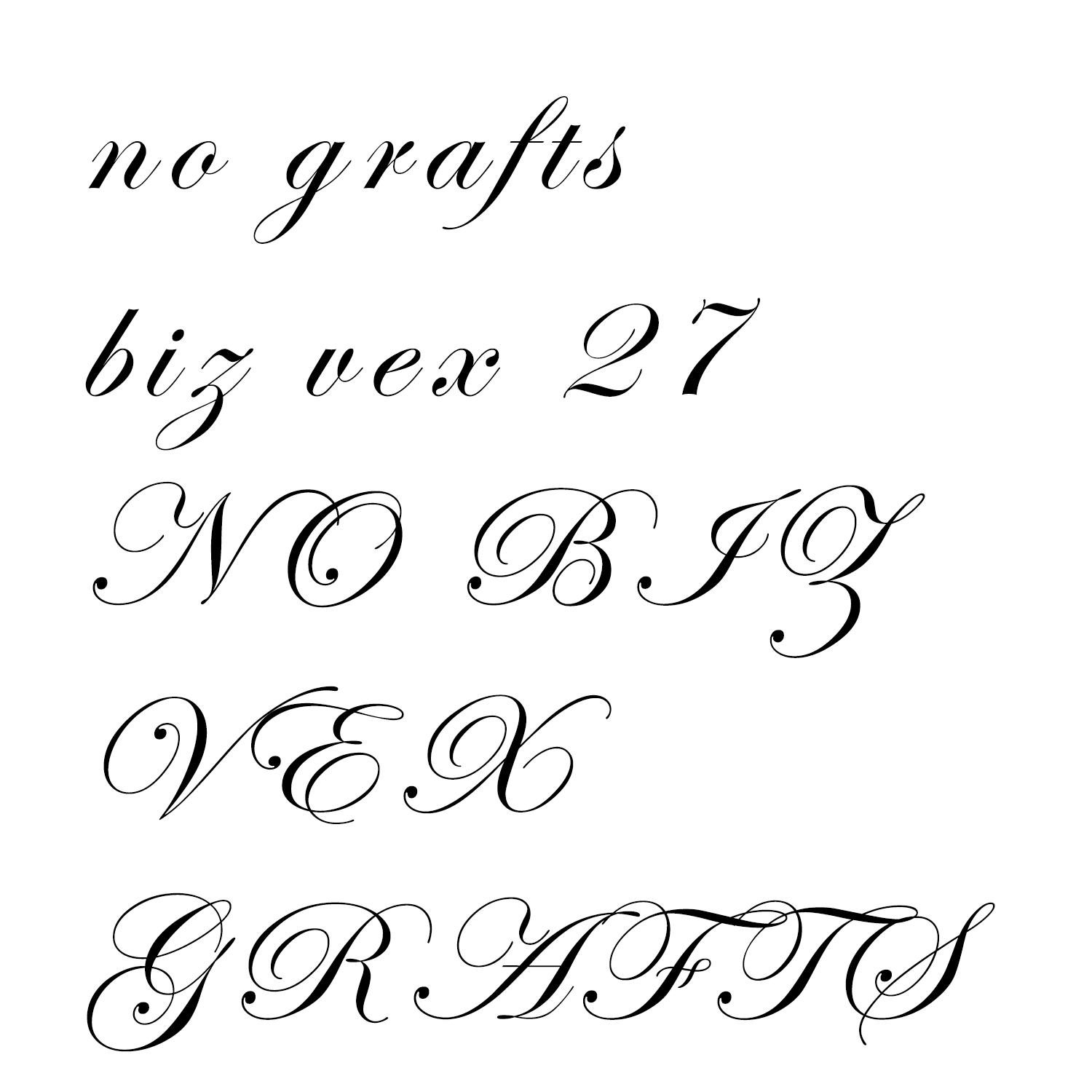

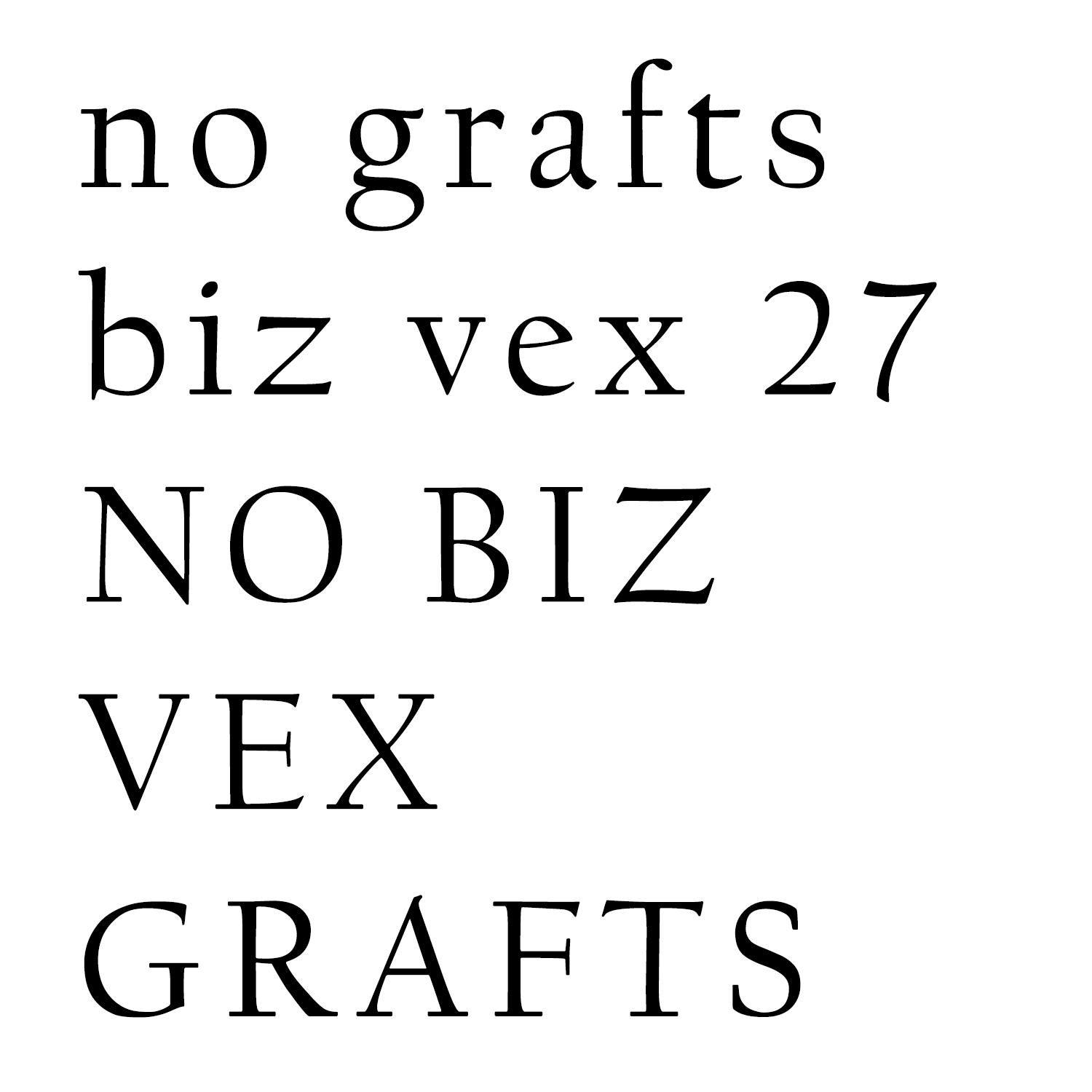

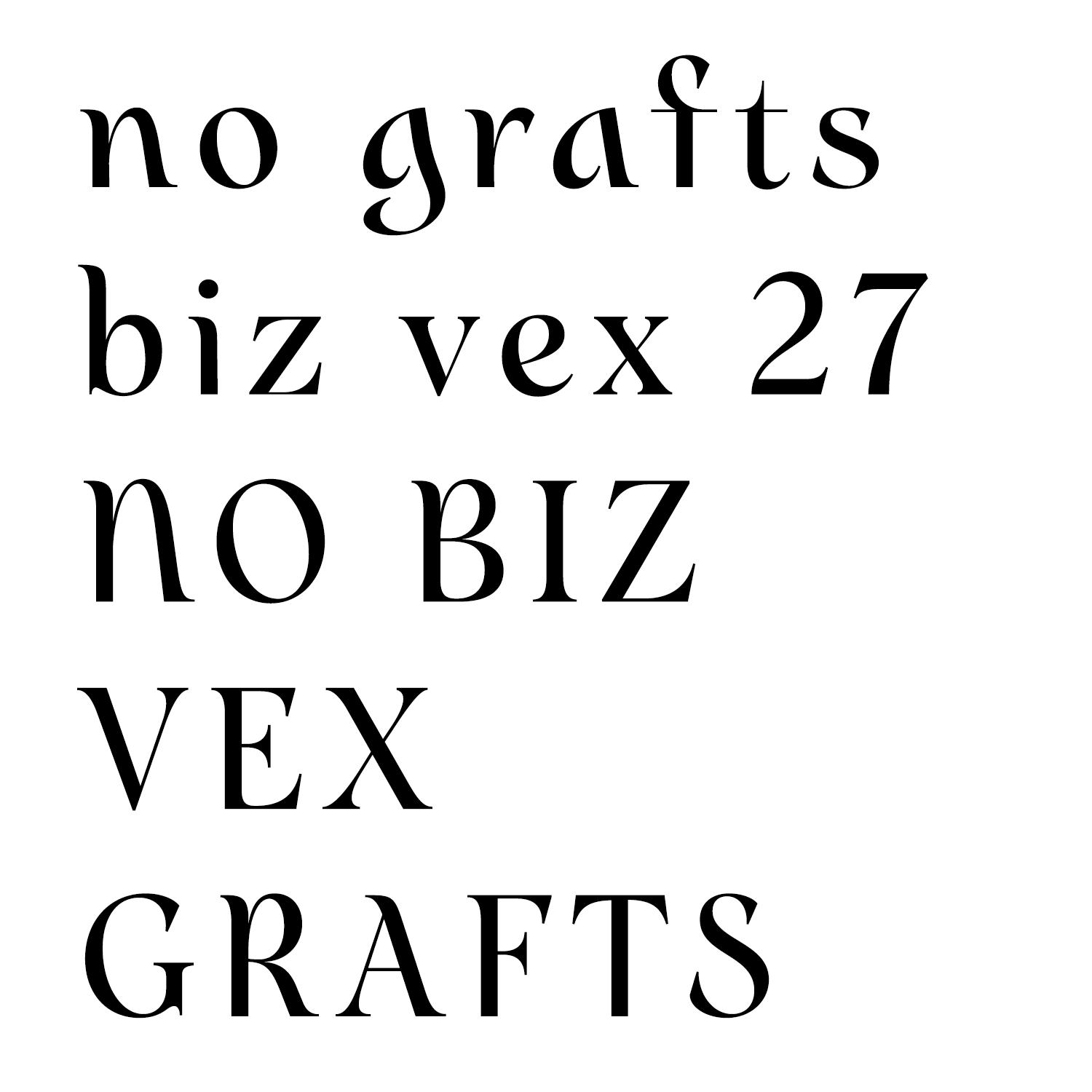

### type on Stable Diffusion

This is the `<typeface>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/doge-pound

|

sd-concepts-library

| 2022-09-12T21:08:14Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-12T21:08:03Z |

---

license: mit

---

### Doge Pound on Stable Diffusion

This is the `<doge-pound>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/alien-avatar

|

sd-concepts-library

| 2022-09-12T20:47:15Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-12T20:47:10Z |

---

license: mit

---

### alien avatar on Stable Diffusion

This is the `<alien-avatar>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/dragonborn

|

sd-concepts-library

| 2022-09-12T20:22:04Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-12T20:21:58Z |

---

license: mit

---

### Dragonborn on Stable Diffusion

This is the `<dragonborn>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

waltwang441/ddpm-butterflies-128

|

waltwang441

| 2022-09-12T20:10:52Z | 3 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-12T19:03:43Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/waltwang441/ddpm-butterflies-128/tensorboard?#scalars)

|

sd-concepts-library/xatu2

|

sd-concepts-library

| 2022-09-12T19:11:15Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-12T19:11:08Z |

---

license: mit

---

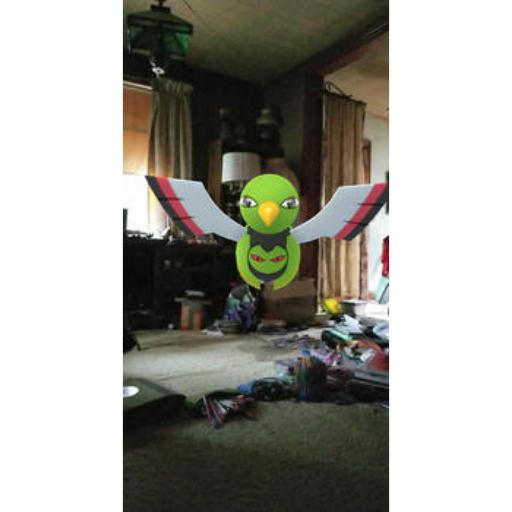

### xatu2 on Stable Diffusion

This is the `<xatu-test>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

iqbalc/stt_de_conformer_transducer_large

|

iqbalc