| modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-03 00:36:49) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 535 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-03 00:36:49) | card (string, length 11 to 1.01M) |
|---|---|---|---|---|---|---|---|---|---|

dbwlsgh000/klue-mrc_koelectra_qa_model

|

dbwlsgh000

| 2025-08-07T06:14:39Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:05:39Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 4.5647

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 10
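As a rough illustration of what `lr_scheduler_type: linear` means for these settings, the sketch below computes the learning rate at a given optimizer step. It assumes no warmup (the card lists none) and 500 total steps (10 epochs of 50 steps, as in this card's training results table); the function name `linear_lr` is hypothetical and not part of any library.

```python
def linear_lr(step: int, base_lr: float = 2e-5, total_steps: int = 500,
              warmup_steps: int = 0) -> float:
    """Learning rate after `step` updates: linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Assumed values: base_lr from the card, total_steps inferred from the results table.
for step in (0, 250, 500):
    print(step, linear_lr(step))
```

Under these assumptions the rate starts at 2e-05, is halved by the middle of training, and reaches zero at the final step.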

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 5.8469 | 1.0 | 50 | 5.7571 |

| 5.612 | 2.0 | 100 | 5.4824 |

| 5.3119 | 3.0 | 150 | 5.1945 |

| 5.0362 | 4.0 | 200 | 4.9599 |

| 4.7995 | 5.0 | 250 | 4.7934 |

| 4.6322 | 6.0 | 300 | 4.6802 |

| 4.5037 | 7.0 | 350 | 4.6203 |

| 4.4093 | 8.0 | 400 | 4.5894 |

| 4.357 | 9.0 | 450 | 4.5701 |

| 4.3299 | 10.0 | 500 | 4.5647 |
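The table shows 50 optimizer steps per epoch at a train batch size of 16. A minimal sketch of what that implies for the training set size, assuming a single device, no gradient accumulation, and a final partial batch that is kept (none of which the card states); `train_size_bounds` is a hypothetical helper.

```python
import math


def train_size_bounds(steps_per_epoch: int, batch_size: int) -> tuple[int, int]:
    """Smallest and largest dataset sizes that give `steps_per_epoch` batches."""
    low = (steps_per_epoch - 1) * batch_size + 1
    high = steps_per_epoch * batch_size
    return low, high


low, high = train_size_bounds(steps_per_epoch=50, batch_size=16)
print(low, high)  # 785 800

# Sanity check: every size in the range yields exactly 50 batches of up to 16 examples.
assert all(math.ceil(n / 16) == 50 for n in range(low, high + 1))
```

So, under those assumptions, the model saw roughly 800 training examples per epoch.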

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

taengk/klue-mrc_koelectra_qa_model

|

taengk

| 2025-08-07T06:12:18Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:12:05Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.5657

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.7692 |

| No log | 2.0 | 100 | 5.6233 |

| No log | 3.0 | 150 | 5.5657 |
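The `No log` entries most likely mean that no training loss had been logged by the time each evaluation ran. A minimal sketch of why, assuming the `Trainer` default of step-based logging every 500 steps was left unchanged (the card does not say); `logging_steps_hit` is a hypothetical helper, not a library function.

```python
def logging_steps_hit(total_steps: int, logging_steps: int = 500) -> list[int]:
    """Steps at which a training loss would be logged under step-based logging."""
    return [s for s in range(1, total_steps + 1) if s % logging_steps == 0]


print(logging_steps_hit(150))                    # []
print(logging_steps_hit(150, logging_steps=50))  # [50, 100, 150]
```

With only 150 total steps, a 500-step logging interval is never reached, so the Training Loss column stays empty.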

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

wls04/reward_1b_1

|

wls04

| 2025-08-07T06:10:40Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"generated_from_trainer",

"trl",

"reward-trainer",

"base_model:meta-llama/Llama-3.2-1B-Instruct",

"base_model:finetune:meta-llama/Llama-3.2-1B-Instruct",

"endpoints_compatible",

"region:us"

] | null | 2025-08-07T02:44:10Z |

---

base_model: meta-llama/Llama-3.2-1B-Instruct

library_name: transformers

model_name: llama1b-reward-seed123

tags:

- generated_from_trainer

- trl

- reward-trainer

licence: license

---

# Model Card for llama1b-reward-seed123

This model is a fine-tuned version of [meta-llama/Llama-3.2-1B-Instruct](https://huggingface.co/meta-llama/Llama-3.2-1B-Instruct).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="wls04/reward_1b_1", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/robusteval/huggingface/runs/gdmysf14)

This model was trained as a reward model with TRL's `RewardTrainer`.
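The card gives no detail on the objective. As a sketch only, reward models trained on preference pairs commonly minimize a pairwise (Bradley-Terry style) loss, `-log(sigmoid(r_chosen - r_rejected))`. The function below illustrates that formula on plain floats; it is an assumption about the general technique, not a statement of the exact loss or options used for this checkpoint.

```python
import math


def pairwise_reward_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed stably as softplus(-margin)."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite log(1 + exp(-m)) as -m + log(1 + exp(m)).
    return -margin + math.log1p(math.exp(margin))


# Hypothetical scores: the loss shrinks as the chosen response outscores the rejected one.
print(pairwise_reward_loss(0.0, 0.0))
print(pairwise_reward_loss(2.0, 0.0))
print(pairwise_reward_loss(0.0, 2.0))
```

When both responses receive the same score, the loss equals log 2, which is a useful reference point when reading reward-model training curves.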

### Framework versions

- TRL: 0.21.0.dev0

- Transformers: 4.54.1

- Pytorch: 2.2.2+cu118

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

wwwvwww/klue-mrc_koelectra_qa_model

|

wwwvwww

| 2025-08-07T06:09:46Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:09:41Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.5881

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.7663 |

| No log | 2.0 | 100 | 5.6380 |

| No log | 3.0 | 150 | 5.5881 |

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

louisglobal/gemma-interLeaved5e-6

|

louisglobal

| 2025-08-07T06:05:44Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"generated_from_trainer",

"sft",

"trl",

"base_model:google/gemma-3-4b-it",

"base_model:finetune:google/gemma-3-4b-it",

"endpoints_compatible",

"region:us"

] | null | 2025-08-07T00:57:34Z |

---

base_model: google/gemma-3-4b-it

library_name: transformers

model_name: gemma-interLeaved5e-6

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for gemma-interLeaved5e-6

This model is a fine-tuned version of [google/gemma-3-4b-it](https://huggingface.co/google/gemma-3-4b-it).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="louisglobal/gemma-interLeaved5e-6", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/alternis-universit-de-gen-ve/gemma-datamix/runs/8ocj50ik)

This model was trained with SFT.

### Framework versions

- TRL: 0.19.1

- Transformers: 4.54.1

- Pytorch: 2.7.1

- Datasets: 4.0.0

- Tokenizers: 0.21.2

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

eastman94/klue-mrc_koelectra_qa_model

|

eastman94

| 2025-08-07T06:05:22Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:05:15Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.3981

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.7869 |

| No log | 2.0 | 100 | 5.5458 |

| No log | 3.0 | 150 | 5.3981 |

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

hyojin98/klue-mrc_koelectra_qa_model

|

hyojin98

| 2025-08-07T06:04:32Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:04:24Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.6698

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.8281 |

| No log | 2.0 | 100 | 5.7156 |

| No log | 3.0 | 150 | 5.6698 |

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

m0vie/klue-mrc_koelectra_qa_model

|

m0vie

| 2025-08-07T06:04:26Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:04:13Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.5565

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.7540 |

| No log | 2.0 | 100 | 5.6129 |

| No log | 3.0 | 150 | 5.5565 |

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

rkdsan1013/klue-mrc_koelectra_qa_model

|

rkdsan1013

| 2025-08-07T06:04:16Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:04:10Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.6045

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.7855 |

| No log | 2.0 | 100 | 5.6560 |

| No log | 3.0 | 150 | 5.6045 |

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

Rachmaninofffff/klue-mrc_koelectra_qa_model

|

Rachmaninofffff

| 2025-08-07T06:03:53Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"electra",

"question-answering",

"generated_from_trainer",

"base_model:monologg/koelectra-small-discriminator",

"base_model:finetune:monologg/koelectra-small-discriminator",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2025-08-07T06:03:46Z |

---

library_name: transformers

base_model: monologg/koelectra-small-discriminator

tags:

- generated_from_trainer

model-index:

- name: klue-mrc_koelectra_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# klue-mrc_koelectra_qa_model

This model is a fine-tuned version of [monologg/koelectra-small-discriminator](https://huggingface.co/monologg/koelectra-small-discriminator) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.1061

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 50 | 5.3516 |

| No log | 2.0 | 100 | 5.1622 |

| No log | 3.0 | 150 | 5.1061 |

### Framework versions

- Transformers 4.54.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

Conexis/Qwen3-Coder-30B-A3B-Instruct-Channel-INT8

|

Conexis

| 2025-08-07T06:00:37Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3_moe",

"text-generation",

"conversational",

"arxiv:2505.09388",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"8-bit",

"region:us"

] |

text-generation

| 2025-08-04T01:35:23Z |

---

library_name: transformers

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen3-Coder-30B-A3B-Instruct/blob/main/LICENSE

pipeline_tag: text-generation

---

# Qwen3-Coder-30B-A3B-Instruct

<a href="https://chat.qwen.ai/" target="_blank" style="margin: 2px;">

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

</a>

## Highlights

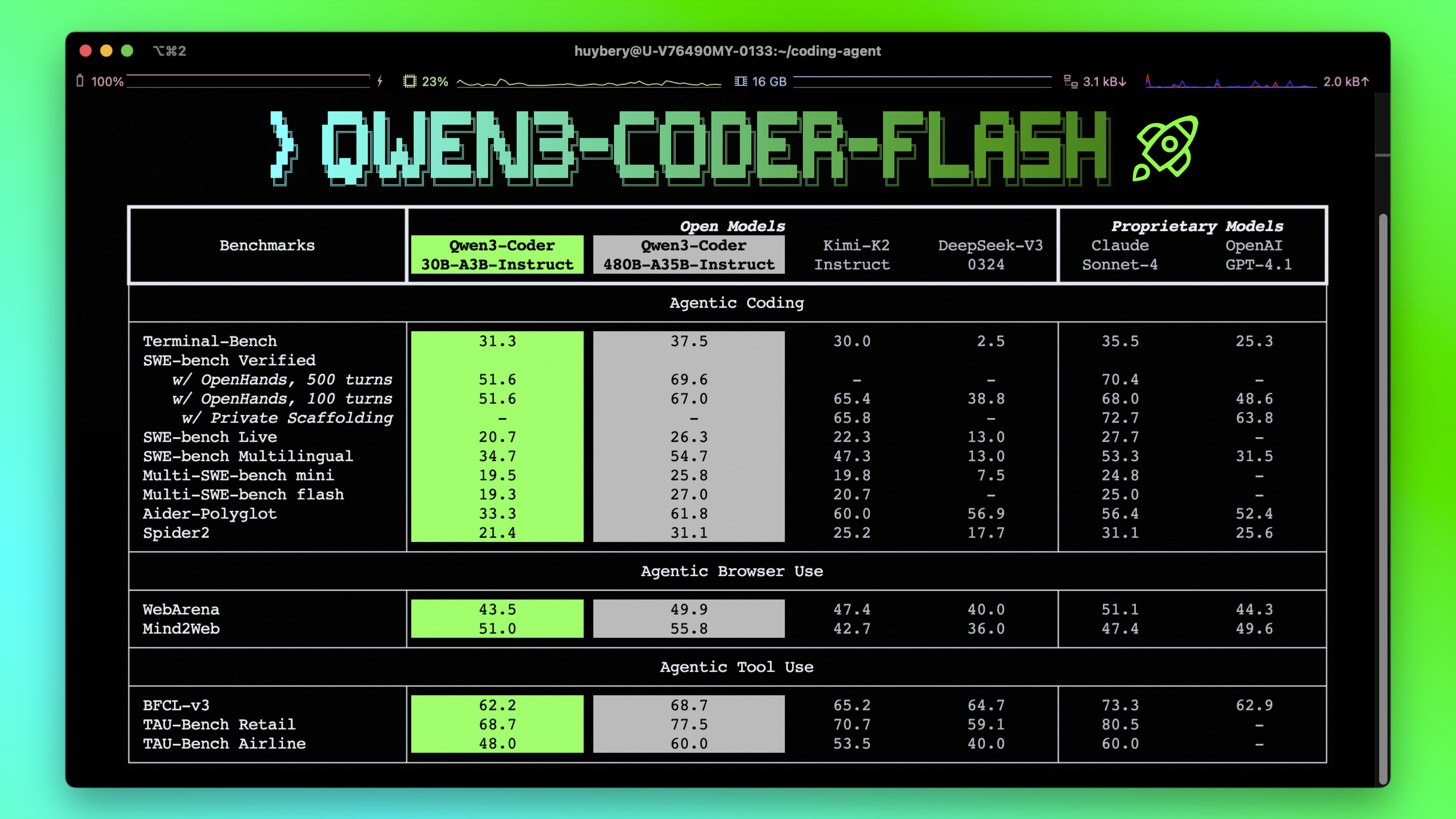

**Qwen3-Coder** is available in multiple sizes. Today, we're excited to introduce **Qwen3-Coder-30B-A3B-Instruct**. This streamlined model maintains impressive performance and efficiency, featuring the following key enhancements:

- **Significant Performance** among open models on **Agentic Coding**, **Agentic Browser-Use**, and other foundational coding tasks.

- **Long-context Capabilities** with native support for **256K** tokens, extendable up to **1M** tokens using YaRN, optimized for repository-scale understanding.

- **Agentic Coding** support for most platforms, such as **Qwen Code** and **CLINE**, featuring a specially designed function call format.

## Model Overview

**Qwen3-Coder-30B-A3B-Instruct** has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining & Post-training

- Number of Parameters: 30.5B in total and 3.3B activated

- Number of Layers: 48

- Number of Attention Heads (GQA): 32 for Q and 4 for KV

- Number of Experts: 128

- Number of Activated Experts: 8

- Context Length: **262,144 natively**.
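To make the sparsity figures above concrete, the short sketch below derives a few ratios from the listed numbers. It only does arithmetic on values stated in this overview; the variable names are illustrative.

```python
# Values copied from the model overview above.
total_params_b = 30.5    # total parameters, in billions
active_params_b = 3.3    # parameters activated per token, in billions
num_experts = 128
active_experts = 8
q_heads = 32
kv_heads = 4

active_param_fraction = active_params_b / total_params_b  # share of weights used per token
expert_fraction = active_experts / num_experts            # share of experts routed per token
queries_per_kv_head = q_heads // kv_heads                  # GQA group size

print(f"active parameters per token: {active_param_fraction:.1%}")
print(f"experts used per token: {expert_fraction:.2%}")
print(f"query heads sharing each KV head: {queries_per_kv_head}")
```

Roughly a tenth of the weights take part in each forward pass, and each key/value head is shared by eight query heads.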

**NOTE: This model supports only non-thinking mode and does not generate ``<think></think>`` blocks in its output. Meanwhile, specifying `enable_thinking=False` is no longer required.**

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to our [blog](https://qwenlm.github.io/blog/qwen3-coder/), [GitHub](https://github.com/QwenLM/Qwen3-Coder), and [Documentation](https://qwen.readthedocs.io/en/latest/).

## Quickstart

We advise you to use the latest version of `transformers`.

With `transformers<4.51.0`, you will encounter the following error:

```

KeyError: 'qwen3_moe'

```

The following code snippet illustrates how to use the model to generate content based on given inputs.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Coder-30B-A3B-Instruct"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# prepare the model input
prompt = "Write a quick sort algorithm."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=65536
)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

content = tokenizer.decode(output_ids, skip_special_tokens=True)

print("content:", content)

```

**Note: If you encounter out-of-memory (OOM) issues, consider reducing the context length to a shorter value, such as `32,768`.**

For local use, applications such as Ollama, LMStudio, MLX-LM, llama.cpp, and KTransformers also support Qwen3.

## Agentic Coding

Qwen3-Coder excels at tool calling.

You can define or use any tools, as in the following example.

```python

# Your tool implementation
def square_the_number(input_num: float) -> float:
    return input_num ** 2

# Define tools
tools = [
    {
        "type": "function",
        "function": {
            "name": "square_the_number",
            "description": "Output the square of the number.",
            "parameters": {
                "type": "object",
                "required": ["input_num"],
                "properties": {
                    "input_num": {
                        "type": "number",
                        "description": "input_num is a number that will be squared",
                    }
                },
            },
        },
    }
]

from openai import OpenAI

# Define LLM
client = OpenAI(
    # Use a custom endpoint compatible with the OpenAI API
    base_url="http://localhost:8000/v1",  # api_base
    api_key="EMPTY",
)

messages = [{"role": "user", "content": "square the number 1024"}]
completion = client.chat.completions.create(
    messages=messages,
    model="Qwen3-Coder-30B-A3B-Instruct",
    max_tokens=65536,
    tools=tools,
)
print(completion.choices[0])

```
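The example above stops at printing the response. A minimal sketch of the next step, executing a returned tool call, might look like the following. It assumes the OpenAI-style response shape in which each tool call carries a function name and a JSON string of arguments (for example `completion.choices[0].message.tool_calls[0].function`); `TOOL_REGISTRY` and `run_tool_call` are hypothetical names, and the call below is hard-coded rather than taken from a live server.

```python
import json


def square_the_number(input_num: float) -> float:
    return input_num ** 2


# Hypothetical registry mapping tool names from the schema to local callables.
TOOL_REGISTRY = {"square_the_number": square_the_number}


def run_tool_call(name: str, arguments_json: str):
    """Look up a tool by name and call it with JSON-decoded keyword arguments."""
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    kwargs = json.loads(arguments_json)
    return TOOL_REGISTRY[name](**kwargs)


# Stand-in for the name/arguments pair a model might return for the prompt above.
result = run_tool_call("square_the_number", '{"input_num": 1024}')
print(result)  # 1048576
```

In a full loop, the result would be appended to `messages` as a `tool` role message and sent back to the model for a final answer.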

## Best Practices

To achieve optimal performance, we recommend the following settings:

1. **Sampling Parameters**:

- We suggest using `temperature=0.7`, `top_p=0.8`, `top_k=20`, `repetition_penalty=1.05`.

2. **Adequate Output Length**: We recommend using an output length of 65,536 tokens for most queries, which is adequate for instruct models.
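As a sketch, the recommended settings can be collected into one dictionary of generation arguments. The keys are standard `transformers` generation parameters; `do_sample=True` is an addition here, on the assumption that sampling is not already enabled by the model's generation config.

```python
# Recommended sampling settings from the list above, as keyword arguments for generation.
sampling_kwargs = {
    "do_sample": True,           # assumption: enable sampling so the settings below take effect
    "temperature": 0.7,
    "top_p": 0.8,
    "top_k": 20,
    "repetition_penalty": 1.05,
    "max_new_tokens": 65536,     # recommended output length
}

for name, value in sampling_kwargs.items():
    print(f"{name} = {value}")
```

With the Quickstart objects, this would be passed as `model.generate(**model_inputs, **sampling_kwargs)`.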

### Citation

If you find our work helpful, feel free to cite us.

```

@misc{qwen3technicalreport,

title={Qwen3 Technical Report},

author={Qwen Team},

year={2025},

eprint={2505.09388},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2505.09388},

}

```

|

rbelanec/train_svamp_1754507512

|

rbelanec

| 2025-08-07T05:58:40Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"llama-factory",

"lora",

"generated_from_trainer",

"base_model:meta-llama/Meta-Llama-3-8B-Instruct",

"base_model:adapter:meta-llama/Meta-Llama-3-8B-Instruct",

"license:llama3",

"region:us"

] | null | 2025-08-07T05:52:18Z |

---

library_name: peft

license: llama3

base_model: meta-llama/Meta-Llama-3-8B-Instruct

tags:

- llama-factory

- lora

- generated_from_trainer

model-index:

- name: train_svamp_1754507512

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# train_svamp_1754507512

This model is a fine-tuned version of [meta-llama/Meta-Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) on the svamp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0719

- Num Input Tokens Seen: 705184

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 123

- optimizer: AdamW (`adamw_torch`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10.0
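To illustrate how a cosine schedule with a 0.1 warmup ratio behaves for these settings, the sketch below computes the learning rate per step. It assumes 1580 total steps (the last step in this card's results table), hence 158 warmup steps, and a single half-cosine decay to zero; `cosine_lr` is a hypothetical helper, not the library's scheduler.

```python
import math


def cosine_lr(step: int, base_lr: float = 5e-5, total_steps: int = 1580,
              warmup_steps: int = 158) -> float:
    """Linear warmup to base_lr, then half-cosine decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# Start, end of warmup, midpoint of the decay, and final step.
for step in (0, 158, 869, 1580):
    print(step, cosine_lr(step))
```

Under these assumptions the rate peaks at 5e-05 after the first epoch and has fallen to half that value by step 869.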

### Training results

| Training Loss | Epoch | Step | Validation Loss | Input Tokens Seen |

|:-------------:|:-----:|:----:|:---------------:|:-----------------:|

| 0.2129 | 0.5 | 79 | 0.1318 | 35776 |

| 0.0783 | 1.0 | 158 | 0.0855 | 70672 |

| 0.021 | 1.5 | 237 | 0.0906 | 105904 |

| 0.067 | 2.0 | 316 | 0.0719 | 141328 |

| 0.0552 | 2.5 | 395 | 0.0803 | 176752 |

| 0.0169 | 3.0 | 474 | 0.0922 | 211808 |

| 0.0035 | 3.5 | 553 | 0.0882 | 247104 |

| 0.0329 | 4.0 | 632 | 0.0805 | 282048 |

| 0.0009 | 4.5 | 711 | 0.1044 | 317248 |

| 0.0186 | 5.0 | 790 | 0.0958 | 352592 |

| 0.0012 | 5.5 | 869 | 0.1174 | 388176 |

| 0.0132 | 6.0 | 948 | 0.1097 | 423184 |

| 0.0001 | 6.5 | 1027 | 0.1172 | 458640 |

| 0.0 | 7.0 | 1106 | 0.1209 | 493440 |

| 0.0019 | 7.5 | 1185 | 0.1226 | 528768 |

| 0.0001 | 8.0 | 1264 | 0.1217 | 563872 |

| 0.0 | 8.5 | 1343 | 0.1231 | 599232 |

| 0.0003 | 9.0 | 1422 | 0.1228 | 634544 |

| 0.0005 | 9.5 | 1501 | 0.1250 | 670064 |

| 0.0 | 10.0 | 1580 | 0.1213 | 705184 |
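The validation loss in this table is lowest early in training and rises afterwards while the training loss approaches zero. A short sketch that picks the best evaluation point from the rows above (values copied from the table):

```python
# (epoch, step, validation_loss) rows copied from the training results table.
eval_history = [
    (0.5, 79, 0.1318), (1.0, 158, 0.0855), (1.5, 237, 0.0906), (2.0, 316, 0.0719),
    (2.5, 395, 0.0803), (3.0, 474, 0.0922), (3.5, 553, 0.0882), (4.0, 632, 0.0805),
    (4.5, 711, 0.1044), (5.0, 790, 0.0958), (5.5, 869, 0.1174), (6.0, 948, 0.1097),
    (6.5, 1027, 0.1172), (7.0, 1106, 0.1209), (7.5, 1185, 0.1226), (8.0, 1264, 0.1217),
    (8.5, 1343, 0.1231), (9.0, 1422, 0.1228), (9.5, 1501, 0.1250), (10.0, 1580, 0.1213),
]

best = min(eval_history, key=lambda row: row[2])
final = eval_history[-1]

print("best:", best)    # best: (2.0, 316, 0.0719)
print("final:", final)  # final: (10.0, 1580, 0.1213)
```

The minimum, 0.0719 at step 316, matches the evaluation loss reported at the top of the card, which suggests the reported figure comes from the best checkpoint rather than the final one; the card does not state this explicitly.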

### Framework versions

- PEFT 0.15.2

- Transformers 4.51.3

- Pytorch 2.8.0+cu128

- Datasets 3.6.0

- Tokenizers 0.21.1

|

sahil239/distilgpt2-lora-chatbot

|

sahil239

| 2025-08-07T05:54:10Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"base_model:adapter:distilgpt2",

"lora",

"transformers",

"text-generation",

"arxiv:1910.09700",

"base_model:distilbert/distilgpt2",

"base_model:adapter:distilbert/distilgpt2",

"region:us"

] |

text-generation

| 2025-08-07T04:29:38Z |

---

base_model: distilgpt2

library_name: peft

pipeline_tag: text-generation

tags:

- base_model:adapter:distilgpt2

- lora

- transformers

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.16.0

|

Fugaki/RecurrentGemma_IndonesiaSummarizerNews

|

Fugaki

| 2025-08-07T05:52:27Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-07T05:33:18Z |

---

license: apache-2.0

---

|

Unkuk/gpt-oss-20b-bnb-4bit-bnb-8bit

|

Unkuk

| 2025-08-07T05:50:19Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gpt_oss",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] |

text-generation

| 2025-08-07T05:23:17Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

yobellee/a2c-PandaReachDense-v3-Video_Bug_Fixed

|

yobellee

| 2025-08-07T05:45:54Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"PandaReachDense-v3",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2025-08-07T05:17:45Z |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v3

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v3

type: PandaReachDense-v3

metrics:

- type: mean_reward

value: -0.20 +/- 0.12

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v3**

This is a trained model of an **A2C** agent playing **PandaReachDense-v3**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

rbelanec/train_svamp_1754507510

|

rbelanec

| 2025-08-07T05:45:37Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"llama-factory",

"prompt-tuning",

"generated_from_trainer",

"base_model:meta-llama/Meta-Llama-3-8B-Instruct",

"base_model:adapter:meta-llama/Meta-Llama-3-8B-Instruct",

"license:llama3",

"region:us"

] | null | 2025-08-07T05:39:31Z |

---

library_name: peft

license: llama3

base_model: meta-llama/Meta-Llama-3-8B-Instruct

tags:

- llama-factory

- prompt-tuning

- generated_from_trainer

model-index:

- name: train_svamp_1754507510

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# train_svamp_1754507510

This model is a fine-tuned version of [meta-llama/Meta-Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) on the svamp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0973

- Num Input Tokens Seen: 705184

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 123

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10.0
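As a rough illustration of how `lr_scheduler_type: cosine` and `lr_scheduler_warmup_ratio: 0.1` shape the learning rate, the sketch below assumes a linear warmup followed by a single half-cosine decay to zero. The function name and the total of 1580 optimizer steps (taken from the last row of the training results table) are assumptions for illustration; the schedule actually applied by the Trainer may differ in rounding details.

```python
import math


def cosine_lr_with_warmup(step, total_steps, base_lr=5e-5, warmup_ratio=0.1):
    """Learning rate at `step`: linear warmup, then cosine decay to zero."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase that has elapsed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


TOTAL_STEPS = 1580  # assumption: final step listed in the training results table

for step in (0, 79, 158, 869, 1580):
    print(step, f"{cosine_lr_with_warmup(step, TOTAL_STEPS):.2e}")
```

Under these assumptions the rate peaks at 5e-05 once warmup ends (step 158) and returns to zero at the final step.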

### Training results

| Training Loss | Epoch | Step | Validation Loss | Input Tokens Seen |

|:-------------:|:-----:|:----:|:---------------:|:-----------------:|

| 1.8026 | 0.5 | 79 | 1.4394 | 35776 |

| 0.117 | 1.0 | 158 | 0.1366 | 70672 |

| 0.0931 | 1.5 | 237 | 0.1189 | 105904 |

| 0.1259 | 2.0 | 316 | 0.1163 | 141328 |

| 0.0836 | 2.5 | 395 | 0.1113 | 176752 |

| 0.0589 | 3.0 | 474 | 0.1143 | 211808 |

| 0.0598 | 3.5 | 553 | 0.1081 | 247104 |

| 0.1036 | 4.0 | 632 | 0.1026 | 282048 |

| 0.0738 | 4.5 | 711 | 0.0975 | 317248 |

| 0.1063 | 5.0 | 790 | 0.0975 | 352592 |

| 0.0617 | 5.5 | 869 | 0.0973 | 388176 |

| 0.0871 | 6.0 | 948 | 0.0979 | 423184 |

| 0.0905 | 6.5 | 1027 | 0.1042 | 458640 |

| 0.0633 | 7.0 | 1106 | 0.0979 | 493440 |

| 0.1537 | 7.5 | 1185 | 0.0979 | 528768 |

| 0.047 | 8.0 | 1264 | 0.0990 | 563872 |

| 0.0195 | 8.5 | 1343 | 0.0974 | 599232 |

| 0.1123 | 9.0 | 1422 | 0.0987 | 634544 |

| 0.0886 | 9.5 | 1501 | 0.0992 | 670064 |

| 0.0054 | 10.0 | 1580 | 0.0986 | 705184 |
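The reported evaluation loss of 0.0973 can be located in the table programmatically. The sketch below copies the step, epoch, and validation-loss columns from the table and picks the row with the lowest validation loss; the variable names are illustrative.

```python
# (step, epoch, validation_loss) copied from the training results table
rows = [
    (79, 0.5, 1.4394), (158, 1.0, 0.1366), (237, 1.5, 0.1189),
    (316, 2.0, 0.1163), (395, 2.5, 0.1113), (474, 3.0, 0.1143),
    (553, 3.5, 0.1081), (632, 4.0, 0.1026), (711, 4.5, 0.0975),
    (790, 5.0, 0.0975), (869, 5.5, 0.0973), (948, 6.0, 0.0979),
    (1027, 6.5, 0.1042), (1106, 7.0, 0.0979), (1185, 7.5, 0.0979),
    (1264, 8.0, 0.0990), (1343, 8.5, 0.0974), (1422, 9.0, 0.0987),
    (1501, 9.5, 0.0992), (1580, 10.0, 0.0986),
]

# Row with the lowest validation loss, and the last evaluated row.
best_step, best_epoch, best_loss = min(rows, key=lambda r: r[2])
final_step, final_epoch, final_loss = rows[-1]

print(f"best:  step {best_step} (epoch {best_epoch}), loss {best_loss}")
print(f"final: step {final_step} (epoch {final_epoch}), loss {final_loss}")
```

The minimum falls at step 869 (epoch 5.5), which suggests the reported loss corresponds to the best checkpoint rather than the final one (0.0986 at step 1580).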

### Framework versions

- PEFT 0.15.2

- Transformers 4.51.3

- Pytorch 2.8.0+cu128

- Datasets 3.6.0

- Tokenizers 0.21.1

|

sunxysun/a2c-PandaReachDense-v3

|

sunxysun

| 2025-08-07T05:45:06Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"PandaReachDense-v3",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2025-08-07T05:42:49Z |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v3

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v3

type: PandaReachDense-v3

metrics:

- type: mean_reward

value: -0.25 +/- 0.12

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v3**

This is a trained model of an **A2C** agent playing **PandaReachDense-v3**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

yyyyyxie/textflux-beta

|

yyyyyxie

| 2025-08-07T05:44:51Z | 0 | 1 |

diffusers

|

[

"diffusers",

"safetensors",

"scene-text-synthesis",

"multilingual",

"diffusion",

"dit",

"ocr-free",

"textflux",

"flux",

"text-to-image",

"arxiv:2505.17778",

"base_model:black-forest-labs/FLUX.1-Fill-dev",

"base_model:finetune:black-forest-labs/FLUX.1-Fill-dev",

"license:cc-by-nc-2.0",

"region:us"

] |

text-to-image

| 2025-07-30T03:45:44Z |

---

license: cc-by-nc-2.0

tags:

- scene-text-synthesis

- multilingual

- diffusion

- dit

- ocr-free

- textflux

- flux

# - text-to-image

# - generated_image_text

library_name: diffusers

pipeline_tag: text-to-image

base_model:

- black-forest-labs/FLUX.1-Fill-dev

---

# TextFlux: An OCR-Free DiT Model for High-Fidelity Multilingual Scene Text Synthesis

<div style="display: flex; justify-content: center; align-items: center;">

<a href="https://arxiv.org/abs/2505.17778">

<img src='https://img.shields.io/badge/arXiv-2505.17778-red?style=flat&logo=arXiv&logoColor=red' alt='arxiv'>

</a>

<a href='https://huggingface.co/yyyyyxie/textflux'>

<img src='https://img.shields.io/badge/Hugging Face-ckpts-orange?style=flat&logo=HuggingFace&logoColor=orange' alt='huggingface'>

</a>

<a href="https://github.com/yyyyyxie/textflux">

<img src='https://img.shields.io/badge/GitHub-Repo-blue?style=flat&logo=GitHub' alt='GitHub'>

</a>

<a href="https://huggingface.co/yyyyyxie/textflux" style="margin: 0 2px;">

<img src='https://img.shields.io/badge/Demo-Gradio-gold?style=flat&logo=Gradio&logoColor=red' alt='Demo'>

</a>

<a href='https://yyyyyxie.github.io/textflux-site/'>

<img src='https://img.shields.io/badge/Webpage-Project-silver?style=flat&logo=&logoColor=orange' alt='webpage'>

</a>

<a href="https://modelscope.cn/models/xieyu20001003/textflux">

<img src="https://img.shields.io/badge/🤖_ModelScope-ckpts-ffbd45.svg" alt="ModelScope">

</a>

</div>

<p align="left">

<strong>English</strong> | <a href="./README_CN.md"><strong>中文简体</strong></a>

</p>

**TextFlux** is an **OCR-free framework** using a Diffusion Transformer (DiT, based on [FLUX.1-Fill-dev](https://github.com/black-forest-labs/flux)) for high-fidelity multilingual scene text synthesis. It simplifies the learning task by providing direct visual glyph guidance through spatial concatenation of rendered glyphs with the scene image, enabling the model to focus on contextual reasoning and visual fusion.

## Key Features

* **OCR-Free:** Simplified architecture without OCR encoders.

* **High-Fidelity & Contextual Styles:** Precise rendering, stylistically consistent with scenes.

* **Multilingual & Low-Resource:** Strong performance across languages, adapts to new languages with minimal data (e.g., <1,000 samples).

* **Zero-Shot Generalization:** Renders characters unseen during training.

* **Controllable Multi-Line Text:** Flexible multi-line synthesis with line-level control.

* **Data Efficient:** Uses a fraction of data (e.g., ~1%) compared to other methods.

<div align="center">

<img src="https://image-transfer-season.oss-cn-qingdao.aliyuncs.com/pictures/abstract_fig.png" width="100%" height="100%"/>

</div>

## Updates

- **`2025/08/02`**: Our full-parameter [**TextFlux-beta**](https://huggingface.co/yyyyyxie/textflux-beta) weights and [**TextFlux-LoRA-beta**](https://huggingface.co/yyyyyxie/textflux-lora-beta) weights are now available! Single-line text generation accuracy improves by **10.9** and **11.2** percentage points respectively 👋!

- **`2025/08/02`**: Our [**Training Datasets**](https://huggingface.co/datasets/yyyyyxie/textflux-anyword) and [**Testing Datasets**](https://huggingface.co/datasets/yyyyyxie/textflux-test-datasets) are now available 👋!

- **`2025/08/01`**: Our [**Eval Scripts**](https://huggingface.co/yyyyyxie/textflux) are now available 👋!

- **`2025/05/27`**: Our [**Full-Param Weights**](https://huggingface.co/yyyyyxie/textflux) and [**LoRA Weights**](https://huggingface.co/yyyyyxie/textflux-lora) are now available 👋!

- **`2025/05/25`**: Our [**Paper on ArXiv**](https://arxiv.org/abs/2505.17778) is available 👋!

## TextFlux-beta

We are excited to release [**TextFlux-beta**](https://huggingface.co/yyyyyxie/textflux-beta) and [**TextFlux-LoRA-beta**](https://huggingface.co/yyyyyxie/textflux-lora-beta), new versions of our model specifically optimized for single-line text editing.

### Key Advantages

- **Significantly improves the quality** of single-line text rendering.

- **Increases inference speed** for single-line text by approximately **1.4x**.

- **Dramatically enhances the accuracy** of small text synthesis.

### How It Works

Considering that single-line editing is a primary use case for many users and generally yields more stable, high-quality results, we have released new weights optimized for this scenario.

Unlike the original model which renders glyphs onto a full-size mask, the beta version utilizes a **single-line image strip** for the glyph condition. This approach not only reduces unnecessary computational overhead but also provides a more stable and high-quality supervisory signal. This leads directly to the significant improvements in both single-line and small text rendering (see example [here](https://github.com/yyyyyxie/textflux/blob/main/resource/demo_singleline.png)).

To use these new models, please refer to the updated files: demo.py, run_inference.py, and run_inference_lora.py. While the beta models retain the ability to generate multi-line text, we **highly recommend** using them for single-line tasks to achieve the best performance and stability.

### Performance

This table shows that the TextFlux-beta model achieves a significant performance improvement of approximately **11 points** in single-line text editing, while also boosting inference speed by **1.4 times** compared to previous versions! The [**AMO Sampler**](https://github.com/hxixixh/amo-release) contributed approximately 3 points to this increase. The test dataset is [**ReCTS editing**](https://huggingface.co/datasets/yyyyyxie/textflux-test-datasets).

| Method | SeqAcc-Editing (%)↑ | NED (%)↑ | FID ↓ | LPIPS ↓ | Inference Speed (s/img)↓ |

| ------------------ | :-----------------: | :------: | :------: | :-------: | :----------------------: |

| TextFlux-LoRA | 37.2 | 58.2 | 4.93 | 0.063 | 16.8 |

| TextFlux | 40.6 | 60.7 | 4.84 | 0.062 | 15.6 |

| TextFlux-LoRA-beta | 48.4 | 70.5 | 4.69 | 0.062 | 12.0 |

| TextFlux-beta | **51.5** | **72.9** | **4.59** | **0.061** | **10.9** |
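The headline numbers can be re-derived from the table. The following sketch hard-codes the SeqAcc-Editing and inference-speed columns and computes the gain of each beta model over its predecessor; the dictionary layout and function name are illustrative only.

```python
# Values copied from the performance table (ReCTS editing test set)
results = {
    "TextFlux-LoRA":      {"seq_acc": 37.2, "sec_per_img": 16.8},
    "TextFlux":           {"seq_acc": 40.6, "sec_per_img": 15.6},
    "TextFlux-LoRA-beta": {"seq_acc": 48.4, "sec_per_img": 12.0},
    "TextFlux-beta":      {"seq_acc": 51.5, "sec_per_img": 10.9},
}


def compare(base, beta):
    """Return (SeqAcc gain in percentage points, inference speedup factor)."""
    acc_gain = results[beta]["seq_acc"] - results[base]["seq_acc"]
    speedup = results[base]["sec_per_img"] / results[beta]["sec_per_img"]
    return round(acc_gain, 1), round(speedup, 2)


for base, beta in [("TextFlux", "TextFlux-beta"),
                   ("TextFlux-LoRA", "TextFlux-LoRA-beta")]:
    gain, speedup = compare(base, beta)
    print(f"{beta}: +{gain} points SeqAcc, {speedup}x faster than {base}")
```

Both pairs land at roughly 11 points of SeqAcc gain and a speedup of about 1.4x, consistent with the summary above.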

## Setup

1. **Clone/Download:** Get the necessary code and model weights.

2. **Dependencies:**

```bash

git clone https://github.com/yyyyyxie/textflux.git

cd textflux

conda create -n textflux python==3.11.4 -y

conda activate textflux

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

cd diffusers

pip install -e .

# Ensure gradio == 3.50.1

```

## Gradio Demo

The demo provides a "Custom Mode" (upload a scene image, draw masks, and input text for automatic template generation) and a "Normal Mode" (for pre-combined inputs).

```bash

# Ensure gradio == 3.50.1

python demo.py

```

## Training

This guide provides instructions for training and fine-tuning the **TextFlux** models.

-----

### Multi-line Training (Reproducing Paper Results)

Follow these steps to reproduce the multi-line text generation results from the original paper.

1. **Prepare the Dataset**

Download the [**Multi-line**](https://huggingface.co/datasets/yyyyyxie/textflux-multi-line) dataset and organize it using the following directory structure:

```

|- ./datasets
   |- multi-lingual
   |    |- processed_mlt2017
   |    |- processed_ReCTS_train_images
   |    |- processed_totaltext
   |    ....

```

2. **Run the Training Script**

Execute the appropriate training script. The `train.sh` script is for standard training, while `train_lora.sh` is for training with LoRA.

```bash

# For standard training

bash scripts/train.sh

```

or

```bash

# For LoRA training

bash scripts/train_lora.sh

```

*Note: Ensure you are using the commands and configurations within the script designated for **multi-line** training.*
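Before launching either script, it can help to confirm that the dataset layout from step 1 is in place. The sketch below is a hypothetical helper, not part of the repository: it only checks for the three subdirectories named in the tree above and assumes the `./datasets/multi-lingual` root shown there.

```python
from pathlib import Path

# Subdirectories explicitly named in the directory tree above; the tree is
# elided ("....") in the README, so further folders may also be required.
EXPECTED_SUBDIRS = [
    "processed_mlt2017",
    "processed_ReCTS_train_images",
    "processed_totaltext",
]


def missing_dataset_dirs(root="./datasets", group="multi-lingual",
                         expected=EXPECTED_SUBDIRS):
    """Return the expected subdirectory names that do not exist under root/group."""
    base = Path(root) / group
    return [name for name in expected if not (base / name).is_dir()]


missing = missing_dataset_dirs()
if missing:
    print("Missing dataset folders:", ", ".join(missing))
else:
    print("Dataset layout looks complete.")
```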

-----

### Single-line Training

To create our TextFlux-beta weights optimized for the single-line task, we fine-tuned our pre-trained multi-line models. Specifically, we loaded the weights from the [**TextFlux**](https://huggingface.co/yyyyyxie/textflux) and [**TextFlux-LoRA**](https://huggingface.co/yyyyyxie/textflux-lora) models and continued training for an additional 10,000 steps on a single-line dataset.

If you wish to replicate this process, you can follow these steps:

1. **Prepare the Dataset**

First, download the [**Single-line**](https://huggingface.co/datasets/yyyyyxie/textflux-anyword) dataset and arrange it as follows:

```

|- ./datasets
   |- anyword
   |    |- ReCTS
   |    |- TotalText
   |    |- ArT
   |    ...
   ....

```

2. **Run the Fine-tuning Script**

Ensure your script is configured to load the weights from a pre-trained multi-line model, and then execute the fine-tuning command.

```bash

# For standard fine-tuning

bash scripts/train.sh

```

or

```bash

# For LoRA fine-tuning

bash scripts/train_lora.sh

```

## Evaluation

First, use the `scripts/batch_eval.sh` script to perform batch inference on the images in the test set.

```bash

bash scripts/batch_eval.sh

```

Once inference is complete, use `eval/eval_ocr.sh` to evaluate the OCR accuracy and `eval/eval_fid_lpips.sh` to evaluate FID and LPIPS scores.

```bash

bash eval/eval_ocr.sh

```

```bash

bash eval/eval_fid_lpips.sh

```
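For readers unfamiliar with the OCR metrics reported in the performance table, here is a minimal sketch of sequence accuracy and normalized edit distance (NED). It assumes NED is computed as one minus the Levenshtein distance divided by the longer string length; the scripts in `eval/` may use a different normalization, so treat this as an illustration rather than the project's implementation. All function names are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between strings a and b (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def ned(pred, target):
    """Assumed definition: 1 - distance / max length, so 1.0 means identical."""
    longest = max(len(pred), len(target))
    if longest == 0:
        return 1.0
    return 1.0 - edit_distance(pred, target) / longest


def evaluate(preds, targets):
    """Return (sequence accuracy, mean NED) over paired predictions and targets."""
    n = len(targets)
    seq_acc = sum(p == t for p, t in zip(preds, targets)) / n
    mean_ned = sum(ned(p, t) for p, t in zip(preds, targets)) / n
    return seq_acc, mean_ned


seq_acc, mean_ned = evaluate(["HELLO", "W0RLD"], ["HELLO", "WORLD"])
print(f"SeqAcc: {seq_acc:.1%}, NED: {mean_ned:.1%}")
```

Sequence accuracy only rewards exact matches, while NED gives partial credit for near misses, which is why the NED column in the table is consistently higher than SeqAcc.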

## TODO

- [x] Release the training datasets and testing datasets

- [x] Release the training scripts

- [x] Release the eval scripts

- [ ] Support ComfyUI

## Acknowledgement

Our code is adapted from [Diffusers](https://github.com/huggingface/diffusers). We adopt [FLUX.1-Fill-dev](https://huggingface.co/black-forest-labs/FLUX.1-Fill-dev) as the base model. Thanks to all the contributors for the helpful discussions! We also sincerely thank the contributors of the following code repositories: [AnyText](https://github.com/tyxsspa/AnyText), [AMO](https://github.com/hxixixh/amo-release).

## Citation

```bibtex

@misc{xie2025textfluxocrfreeditmodel,

title={TextFlux: An OCR-Free DiT Model for High-Fidelity Multilingual Scene Text Synthesis},

author={Yu Xie and Jielei Zhang and Pengyu Chen and Ziyue Wang and Weihang Wang and Longwen Gao and Peiyi Li and Huyang Sun and Qiang Zhang and Qian Qiao and Jiaqing Fan and Zhouhui Lian},

year={2025},

eprint={2505.17778},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2505.17778},

}

```

|

vocotnhan/blockassist-bc-stinging_aquatic_beaver_1754542379

|

vocotnhan

| 2025-08-07T05:44:28Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"stinging aquatic beaver",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-07T05:44:12Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- stinging aquatic beaver

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

alex223311/soul-chat-model

|

alex223311

| 2025-08-07T05:41:50Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"generated_from_trainer",

"unsloth",

"trl",

"sft",

"endpoints_compatible",

"region:us"

] | null | 2025-05-14T08:56:39Z |

---

base_model: unsloth/qwen2.5-7b-unsloth-bnb-4bit

library_name: transformers

model_name: soul-chat-model

tags:

- generated_from_trainer

- unsloth

- trl

- sft

licence: license

---

# Model Card for soul-chat-model

This model is a fine-tuned version of [unsloth/qwen2.5-7b-unsloth-bnb-4bit](https://huggingface.co/unsloth/qwen2.5-7b-unsloth-bnb-4bit).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="alex223311/soul-chat-model", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.15.2

- Transformers: 4.55.0

- Pytorch: 2.6.0

- Datasets: 3.6.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

tyanfarm/gemma-3n-hotel-faq-conversations-adapters-01

|

tyanfarm

| 2025-08-07T05:40:56Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"unsloth",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-08-07T05:39:56Z |

---

library_name: transformers

tags:

- unsloth

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

ACECA/lowMvM_221

|

ACECA

| 2025-08-07T05:38:22Z | 0 | 0 | null |

[

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

] |

any-to-any

| 2025-08-07T05:02:30Z |

---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

ACECA/lowMvM_220

|

ACECA

| 2025-08-07T05:37:35Z | 0 | 0 | null |

[

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

] |

any-to-any

| 2025-07-30T15:11:02Z |

---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

lightx2v/Wan2.1-T2V-14B-StepDistill-CfgDistill-Lightx2v

|

lightx2v

| 2025-08-07T05:27:51Z | 0 | 34 |

diffusers

|

[

"diffusers",

"safetensors",

"t2v",

"video generation",

"image-to-video",

"en",

"zh",

"base_model:Wan-AI/Wan2.1-T2V-14B",

"base_model:finetune:Wan-AI/Wan2.1-T2V-14B",

"license:apache-2.0",

"region:us"

] |

image-to-video

| 2025-07-15T13:58:37Z |

---

license: apache-2.0

language:

- en

- zh

pipeline_tag: image-to-video

tags:

- video generation

library_name: diffusers

inference:

parameters:

num_inference_steps: 4

base_model:

- Wan-AI/Wan2.1-T2V-14B

---

# Wan2.1-T2V-14B-StepDistill-CfgDistill-Lightx2v

<p align="center">

<img src="assets/img_lightx2v.png" width=75%/>

</p>

## Overview

Wan2.1-T2V-14B-StepDistill-CfgDistill-Lightx2v is a text-to-video generation model built on Wan2.1-T2V-14B. Step distillation and CFG distillation allow the model to generate videos in only 4 inference steps and without classifier-free guidance (CFG), substantially reducing generation time while maintaining high-quality output.

In this version, we added the following features:

1. Trained with higher quality datasets for extended iterations.

2. New fp8 and int8 quantized distillation models have been added, which enable fast inference using lightx2v on RTX 4060.

## Training

Our training code is adapted from the [Self-Forcing](https://github.com/guandeh17/Self-Forcing) repository. We extended it to support the Wan2.1-T2V-14B model and performed a 4-step bidirectional distillation process. The modified code is available at [Self-Forcing-Plus](https://github.com/GoatWu/Self-Forcing-Plus).

## Inference

Inference uses [lightx2v](https://github.com/ModelTC/lightx2v), an efficient inference engine that supports multiple models and accelerates video generation while maintaining output quality.

```bash

bash scripts/wan/run_wan_t2v_distill_4step_cfg.sh

```

Or, to use the LoRA version:

```bash

bash scripts/wan/run_wan_t2v_distill_4step_cfg_lora.sh

```

We recommend using the **LCM scheduler** with the following settings:

- `shift=5.0`

- `guidance_scale=1.0` (i.e., without CFG)
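As a rough illustration of what these two settings mean, the sketch below uses the timestep-shift formula common to flow-matching schedulers and the standard classifier-free guidance combination. Whether lightx2v's LCM scheduler applies the shift in exactly this form is an assumption, and the function names `shifted_sigmas` and `apply_cfg` are hypothetical, not part of lightx2v.

```python
def shifted_sigmas(num_steps, shift):
    """Return a linearly spaced noise schedule warped by `shift`.

    Assumption: uses the common flow-matching shift formula
    sigma' = shift * sigma / (1 + (shift - 1) * sigma).
    """
    sigmas = [1.0 - i / num_steps for i in range(num_steps)]
    return [shift * s / (1.0 + (shift - 1.0) * s) for s in sigmas]


def apply_cfg(cond, uncond, guidance_scale):
    """Standard classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]


# With shift=5.0 the 4 steps are pushed toward the high-noise end.
print(shifted_sigmas(num_steps=4, shift=5.0))

# With guidance_scale=1.0 the result equals the conditional prediction,
# so the unconditional forward pass is not needed.
print(apply_cfg(cond=[0.5, -1.0], uncond=[0.25, 2.0], guidance_scale=1.0))
```

Because `guidance_scale=1.0` reduces the guided prediction to the conditional one, each step needs only one model evaluation instead of two.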

## License Agreement

The models in this repository are licensed under the Apache 2.0 License. We claim no rights over the content you generate, granting you the freedom to use it while ensuring that your usage complies with the provisions of this license. You are fully accountable for your use of the models, which must not involve sharing any content that violates applicable laws, causes harm to individuals or groups, disseminates personal information intended for harm, spreads misinformation, or targets vulnerable populations. For a complete list of restrictions and details regarding your rights, please refer to the full text of the [license](LICENSE.txt).

## Acknowledgements

We would like to thank the contributors to the [Wan2.1](https://huggingface.co/Wan-AI/Wan2.1-T2V-14B) and [Self-Forcing](https://huggingface.co/gdhe17/Self-Forcing/tree/main) repositories for their open research.

|

Rcgtt/RC-CHPA

|

Rcgtt

| 2025-08-07T05:27:28Z | 0 | 0 |

adapter-transformers

|

[

"adapter-transformers",

"en",

"dataset:NousResearch/Hermes-3-Dataset",

"base_model:moonshotai/Kimi-K2-Instruct",

"base_model:adapter:moonshotai/Kimi-K2-Instruct",

"license:mit",

"region:us"

] | null | 2025-01-16T07:29:50Z |

---

license: mit

datasets:

- NousResearch/Hermes-3-Dataset

language:

- en

metrics:

- accuracy

base_model:

- moonshotai/Kimi-K2-Instruct

new_version: moonshotai/Kimi-K2-Instruct

library_name: adapter-transformers

---

|

qingy2024/HRM-Text1-41M

|

qingy2024

| 2025-08-07T05:26:39Z | 0 | 1 |

pytorch

|

[

"pytorch",

"text-generation",

"hrm",

"tinystories",

"experimental",

"causal-lm",

"en",

"dataset:roneneldan/TinyStories",

"arxiv:2506.21734",

"license:mit",

"region:us"

] |

text-generation

| 2025-08-03T06:17:04Z |

---

language: en

license: mit

library_name: pytorch

datasets:

- roneneldan/TinyStories

tags:

- text-generation

- hrm

- tinystories

- experimental

- causal-lm

pipeline_tag: text-generation

---

<div class="container">

# HRM-Text1-41M

**HRM-Text1** is an experimental text generation model based on the **Hierarchical Reasoning Model (HRM)** architecture. I added positional embeddings to the model for each token and tweaked the training code a bit from their implementation so that text generation would work well. It was trained from scratch on the `roneneldan/TinyStories` dataset, and it can kind of produce... let's say semi-coherent sentences ;)

*Note: This repo corresponds to the 41M parameter model, which is the first iteration. Also note that although it has 'reasoning' in the name, this model does not do chain-of-thought reasoning. The 'reasoning' just helps the model on a per-token basis.*

The model utilizes the HRM structure, consisting of a "Specialist" module for low-level processing and a "Manager" module for high-level abstraction and planning. This architecture aims to handle long-range dependencies more effectively by summarizing information at different temporal scales.
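To make the two-timescale idea concrete, here is a toy sketch in plain Python. It is not the HRM-Text1 implementation: the scalar states, the update coefficients, and the name `hierarchical_step` are all assumptions chosen for illustration. It only shows a fast "Specialist" state that updates on every input and a slow "Manager" state that updates once every `period` inputs.

```python
def hierarchical_step(inputs, period=4):
    """Toy two-timescale recurrence over a list of float inputs.

    Hypothetical illustration only; the real model uses learned
    neural modules rather than these fixed scalar updates.
    """
    low, high = 0.0, 0.0
    trace = []
    for t, x in enumerate(inputs, start=1):
        # "Specialist": fast state, updated on every input and
        # conditioned on the current slow state.
        low = 0.5 * low + 0.5 * x + 0.1 * high
        # "Manager": slow state, updated only every `period` inputs,
        # summarizing what the fast state has accumulated.
        if t % period == 0:
            high = 0.5 * high + 0.5 * low
        trace.append((low, high))
    return trace


for low, high in hierarchical_step([1.0] * 8, period=4):
    print(f"low={low:.4f} high={high:.4f}")
```

In the printed trace the `high` value stays constant between updates while `low` changes at every step, which is the sense in which the two states operate at different temporal scales.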