modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-04 06:26:56

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 538

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-04 06:26:41

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Intel/albert-base-v2-sst2-int8-dynamic-inc

|

Intel

| 2023-06-27T08:45:08Z | 5 | 0 |

transformers

|

[

"transformers",

"onnx",

"albert",

"text-classification",

"text-classfication",

"int8",

"Intel® Neural Compressor",

"PostTrainingDynamic",

"en",

"dataset:glue",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-12-28T09:10:38Z |

---

language: en

license: apache-2.0

tags:

- text-classfication

- int8

- Intel® Neural Compressor

- PostTrainingDynamic

- onnx

datasets:

- glue

metrics:

- f1

---

# INT8 albert-base-v2-sst2

## Post-training dynamic quantization

### ONNX

This is an INT8 ONNX model quantized with [Intel® Neural Compressor](https://github.com/intel/neural-compressor).

The original fp32 model comes from the fine-tuned model [Alireza1044/albert-base-v2-sst2](https://huggingface.co/Alireza1044/albert-base-v2-sst2).

#### Test result

| |INT8|FP32|

|---|:---:|:---:|

| **Accuracy (eval-accuracy)** |0.9186|0.9232|

| **Model size (MB)** |59|45|

#### Load ONNX model:

```python

from optimum.onnxruntime import ORTModelForSequenceClassification

model = ORTModelForSequenceClassification.from_pretrained('Intel/albert-base-v2-sst2-int8-dynamic')

```

|

yeounyi/PPO-LunarLander-v2

|

yeounyi

| 2023-06-27T08:39:37Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T07:41:53Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 293.31 +/- 19.97

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

silpakanneganti/flan-cpt-medical-ner

|

silpakanneganti

| 2023-06-27T08:35:10Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-06-27T07:23:35Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: flan-cpt-medical-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-cpt-medical-ner

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 8.4240

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 37 | 18.8471 |

| No log | 2.0 | 74 | 8.4240 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu117

- Datasets 2.12.0

- Tokenizers 0.13.3

|

loghai/ppo-LunarLander-v2

|

loghai

| 2023-06-27T08:33:45Z | 2 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T08:33:25Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 255.64 +/- 41.91

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

mukeiZ/osusume

|

mukeiZ

| 2023-06-27T08:22:59Z | 0 | 1 | null |

[

"license:other",

"region:us"

] | null | 2023-05-05T08:35:55Z |

---

license: other

---

★Lora-pri_ver1

トリガーワードはprishe。無くても出るが再現性が上がる?

crownの有無で帽子の着脱可。

生成モデルにもよるが、衣装再現しないならepochは25くらいからprisheぽくなる。

epochは数字が増えていくにつれて再現度は高くなるが汎用性はなくなっていくかも。数字無しが最終。

プロンプトの強調やLoraの強度を変えれば衣装やポーズも応用が利く。LoRA Block Weightの利用も有効。

----------------------------------------

★Lora-PandU

2キャラ同時学習。prisheとulmiaで描き分け。

キャラが混じらないようにタグをそれぞれで分けてみたが成功したかどうか不明。

余計な要素が混じる場合はネガプロを利用するのも手。

epochは衣装再現性は低いが顔をみると35あたりがオススメかも。数字なしは層別適用を利用したほうがいい。

●タグ説明

・prishe

頭装備は crown

胸のリボンと石は red ribbon

耳は pointy ears 外すとヒュム耳になるかも

衣装は costume 外しても脱ぐわけではない

脚装備は short pants

足装備は brown footwear

サンプルタグ

prishe, costume, crown, white background, open mouth, red ribbon, pointy ears, brown footwear, hand on own hip, short pants

・ulmia

ulmia, uniform, solo, standing, full body, brown footwear, medium hair, brown eyes, ear piercing, circlet, orange hair, holding harp, hair ornament, jewelry

頭装備は circlet,hair ornament

耳は elf

衣装は uniform

脚装備は black leggings, gaiters

足装備は brown footwear

楽器は harp

サンプルタグ

ulmia, uniform, brown footwear, elf, white background, harp, hair ornament, black leggings, gaiters, circlet

|

MQ-playground/ppo-Huggy

|

MQ-playground

| 2023-06-27T08:08:48Z | 0 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] |

reinforcement-learning

| 2023-06-27T08:08:37Z |

---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: MQ-playground/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

andrewshi/bert-finetuned-squad

|

andrewshi

| 2023-06-27T07:55:41Z | 122 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"safetensors",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-06-27T00:53:20Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the squad dataset.

## Model description

The BERT fine-tuned SQuAD model is a version of the BERT (Bidirectional Encoder Representations from Transformers) model that has been fine-tuned on the Stanford Question Answering Dataset (SQuAD). It is designed to answer questions based on the context given. The SQuAD dataset is a collection of 100k+ questions and answers based on Wikipedia articles. Fine-tuning the model on this dataset allows it to provide precise answers to a wide array of questions based on a given context.

## Intended uses & limitations

This model is intended to be used for question-answering tasks. Given a question and a context (a piece of text containing the information to answer the question), the model will return the text span in the context that most likely contains the answer. This model is not intended to generate creative content, conduct sentiment analysis, or predict future events.

It's important to note that the model's accuracy is heavily dependent on the relevance and quality of the context it is provided. If the context does not contain the answer to the question, the model will still return a text span, which may not make sense. Additionally, the model may struggle with nuanced or ambiguous questions as it may not fully understand the subtleties of human language.

## Training and evaluation data

The model was trained on the SQuAD dataset, encompassing over 87,599 questions generated by crowd workers from various Wikipedia articles. The answers are text segments from the relevant reading passage. For evaluation, a distinct subset of the SQuAD, containing 10,570 instances, unseen by the model during training, was employed.

## Training procedure

The model was initially pretrained on a large corpus of text in an unsupervised manner, learning to predict masked tokens in a sentence. The pretraining was done on the bert-base-cased model, which was trained on English text in a case-sensitive manner. After this, the model was fine-tuned on the SQuAD dataset. During fine-tuning, the model was trained to predict the start and end positions of the answer in the context text given a question.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

- exact_match: 81.0406811731315

- f1: 88.65884513439593

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

nomad-ai/a2c-AntBulletEnv-v0

|

nomad-ai

| 2023-06-27T07:50:43Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T05:57:08Z |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

metrics:

- type: mean_reward

value: 2380.90 +/- 42.56

name: mean_reward

verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of a **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

raafat3-16/text_summary

|

raafat3-16

| 2023-06-27T07:27:02Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-06-27T07:27:02Z |

---

license: creativeml-openrail-m

---

|

olianate/dqn-SpaceInvadersNoFrameskip-v4

|

olianate

| 2023-06-27T07:27:02Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T07:10:54Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 673.50 +/- 135.15

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga olianate -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga olianate -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga olianate

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 3000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

# Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

nojiyoon/nallm-polyglot-ko-1.3b

|

nojiyoon

| 2023-06-27T07:20:53Z | 4 | 1 |

peft

|

[

"peft",

"region:us"

] | null | 2023-06-20T08:43:19Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: bfloat16

### Framework versions

- PEFT 0.4.0.dev0

|

neukg/TechGPT-7B

|

neukg

| 2023-06-27T07:08:59Z | 0 | 17 | null |

[

"pytorch",

"text2text-generation",

"zh",

"en",

"arxiv:2304.07854",

"license:gpl-3.0",

"region:us"

] |

text2text-generation

| 2023-06-23T10:10:11Z |

---

license: gpl-3.0

tags:

- text2text-generation

pipeline_tag: text2text-generation

language:

- zh

- en

---

# TechGPT: Technology-Oriented Generative Pretrained Transformer

Demo: [TechGPT-neukg](http://techgpt.neukg.com) <br>

Github: [neukg/TechGPT](https://github.com/neukg/TechGPT)

## 简介 Introduction

TechGPT是[“东北大学知识图谱研究组”](http://faculty.neu.edu.cn/renfeiliang)发布的垂直领域大语言模型。目前已开源全量微调的7B版本。<br>

TechGPT主要强化了如下三类任务:

- 以“知识图谱构建”为核心的关系三元组抽取等各类信息抽取任务

- 以“阅读理解”为核心的各类智能问答任务。

- 以“文本理解”为核心的关键词生成等各类序列生成任务。

在这三大自然语言处理核心能力之内,TechGPT还具备了对计算机科学、材料、机械、冶金、金融和航空航天等十余种垂直专业领域自然语言文本的处理能力。

目前,TechGPT通过提示和指令输入方式的不同,支持单轮对话和多轮对话,涵盖了领域术语抽取、命名实体识别、关系三元组抽取、文本关键词生成、标题生成摘要、摘要生成标题、文本领域识别、机器阅读理解、基础常识问答、基于上下文的知识问答、建议咨询类问答、文案生成、中英互译和简单代码生成等多项自然语言理解和生成能力。

---

TechGPT mainly strengthens the following three types of tasks:

- Various information extraction tasks such as relation triplet extraction with "knowledge graph construction" as the core

- Various intelligent question-and-answer tasks centered on "reading comprehension".

- Various sequence generation tasks such as keyword generation with "text understanding" as the core.

Within these three core natural language processing capabilities, TechGPT also has the ability to process natural language texts in more than ten vertical professional fields such as computer science, materials, machinery, metallurgy, finance, and aerospace.

TechGPT supports single-round dialogue and multi-round dialogue through different input methods of prompts and instructions, covering domain term extraction, named entity recognition, relation triplet extraction, text keyword generation, title generation abstract, abstract generation title, text field Recognition, machine reading comprehension, basic common sense question and answer, context-based knowledge question and answer, suggestion consultation question and answer, copy generation, CM-EN translation and simple code generation and many other natural language understanding and generation capabilities.

## 模型功能 Model Capabilities

模型在保留基本的对话和翻译能力之外,重点强化了基于**TechKG**的数据内容,因此,**TechGPT**更擅长在计算机科学等专业领域,完成实体及实体类型抽取、关系三元组抽取、标题扩写、摘要缩写、关键词生成和机器阅读理解等任务。

下面展示了TechGPT的几个核心能力。

1. **命名实体识别**

<table> <colgroup> <col style="width:60%"> <col style="width:40%"> </colgroup> <tr> <td>问题</td> <td>回复</td> </tr>

<tr> <td>抽取出下面文本的实体和实体类型:<br/>《女人树》,国产电视剧,由导演田迪执导,根据作家子页的原著改编,故事从1947年开始,跨越了解放战争和建国初期两大历史时期,展现了战斗在隐形战线上的人民英雄是如何不惧怕任何危险,不计较个人牺牲,甚至不顾人民内部的误解和生死裁决,都不暴露个人真实身份,至死不渝,与敌人周旋到底的英雄故事。</td> <td>实体名: 女人树, 实体类型:影视作品<br/>实体名: 田迪, 实体类型:人物<br/>实体名: 子页, 实体类型:人物<br/>实体名: 1947年, 实体类型:Date</td> </tr>

</table>

2. **关系三元组抽取**

<table> <colgroup> <col style="width:60%"> <col style="width:40%"> </colgroup> <tr> <td>问题</td> <td>回复</td> </tr>

<tr> <td>

请抽取出下面的关系三元组:<br>陈奕迅(Eason Chan),1974年7月27日出生于香港,中国香港流行乐男歌手、演员,毕业于英国金斯顿大学。 1995年因获得第14届新秀歌唱大赛冠军而正式出道。2005年发行的歌曲《浮夸》成为其歌唱生涯的代表作品之一。</td> <td><陈奕迅, 出生日期, 1974年><br><陈奕迅, 出生地, 香港><br>

<陈奕迅, 毕业院校, 英国金斯顿大学><br>

<陈奕迅, 国籍, 中国></td> </tr>

</table>

3. **标题扩写**

<table> <colgroup> <col style="width:60%"> <col style="width:40%"> </colgroup> <tr> <td>问题</td> <td>回复</td> </tr>

<tr> <td>请把下列标题扩写成摘要, 不少于100字: 基于视觉语言多模态的实体关系联合抽取的研究。</td> <td>本文介绍了一种基于视觉语言的多模态实体关系联合抽取出方法。该方法利用了图像和文本之间的语义联系,通过将图像中的物体与相应的文本描述进行匹配来识别实体之间的关系。同时,本文还提出了一种新的模型结构——深度双向编码器-解码器网络(BiDAN),用于实现这种联合提取任务。实验结果表明,所提出的方法在多个数据集上取得了较好的性能表现,证明了其有效性和实用性。</td> </tr>

</table>

4. **摘要缩写**

<table> <colgroup> <col style="width:60%"> <col style="width:40%"> </colgroup> <tr> <td>问题</td> <td>回复</td> </tr>

<tr> <td>请把下列摘要缩写成标题:本文介绍了一种基于视觉语言的多模态实体关系联合抽取出方法。该方法利用了图像和文本之间的语义联系,通过将图像中的物体与相应的文本描述进行匹配来识别实体之间的关系。同时,本文还提出了一种新的模型结构——深度双向编码器-解码器网络(BiDAF),用于实现这种联合提取任务。实验结果表明,所提出的方法在多个数据集上取得了较好的性能表现,证明了其有效性和实用性。</td> <td>基于视觉语言的多模态实体关系联合抽取研究。</td> </tr>

</table>

5. **关键词生成**

<table> <colgroup> <col style="width:60%"> <col style="width:40%"> </colgroup> <tr> <td>问题</td> <td>回复</td> </tr>

<tr> <td>请提取下面文本中的关键词。<br>本体是一种重要的知识库,其包含的丰富的语义信息可以为问答系统、信息检索、语义Web、信息抽取等领域的研究及相关应用提供重要的支持.因而,如何快速有效地构建本体具有非常重要的研究价值.研究者们分别从不同角度提出了大量有效地进行本体构建的方法.一般来讲,这些本体构建方法可以分为手工构建的方法和采用自动、半自动技术构建的方法.手工本体的方法往往需要本体专家参与到构建的整个过程,存在着构建成本高、效率低下、主观性强、移植不便等缺点,因而,此类方法正逐步被大量基于自动、半自动技术的本体构建方法所代替.自动、半自动构建的方法不需要(或仅需少量)人工参与,可以很方便地使用其它研究领域(如机器学习、自然语言处理等)的最新研究成果,也可以方便地使用不同数据源进行本体构建.</td> <td>本体,知识库,问答系统,信息检索,语义 Web,信息抽取,统计主导,语言分析主导,术语抽取,概念抽取,关系抽取,本体形成,评价方法,本体构建系统</td> </tr>

</table>

6. **机器阅读理解**

<table> <colgroup> <col style="width:60%"> <col style="width:40%"> </colgroup> <tr> <td>问题</td> <td>回复</td> </tr>

<tr> <td>请问这起交通事故是谁的责任居多?<br>小车和摩托车发生事故,在无红绿灯的十字路口,小停车看看左右,在觉得安全的情况下刹车慢慢以时速10公里左右的速度靠右行驶过路口,好没有出到十字路口正中时,被左边突然快速行驶过来的摩托车撞在车头前, 摩托车主摔到膝盖和檫伤脸部,请问这起交通事故是谁的责任居多。</td> <td>根据提供的信息,我们可以得出以下结论:<br>

1. 小车司机应该先观察交通信号灯是否亮起,如果没有信号灯,则应减速慢行通过交叉路口;<br>2. 在没有信号灯的交叉口中,小车的驾驶员应该在确保安全的前提下选择右侧车道行驶,以避免与来自左侧的车辆相撞;

3. 由于事故发生地点是十字路口,因此可以确定该事故属于道路交通事故范畴。<br>根据以上分析,我认为这起交通事故的主要责任在于摩托车驾驶人的行为。因为摩托车驾驶员在没有信号灯的情况下从左侧冲过来,导致与小车在十字路口相遇,造成了伤害。

然而,我们也需要考虑其他因素的影响,例如小车的速度、路面状况等。如果小车的车速过快或者路面湿滑,也可能对事故的发生产生影响。但是,由于提供的信息中没有提到这些细节,所以我们只能基于上述基本判断来回答这个问题。</td>

</tr>

</table>

## 准备工作 Preparation

由于LLaMA开源协议的限制,本模型仅限于研究和学习用途使用。请严格遵守LLaMA的使用要求和规范。为了确保这一点,我们需要确认您具有LLaMA的原始权重,并来自完全合法渠道。

---

According to the limitations of the LLaMA open source agreement, this model is limited to research and learning purposes. Please strictly abide by the usage requirements and specifications of LLaMA. To ensure this, we need to confirm that you have LLaMA's original weight and come from a completely legitimate source.

1. 你需要先下载模型到本地,并校验它们的检查和:

```

md5sum ./*

6b2b545ff7bacaeec6297198b4b745dd ./config.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

4ba9cc7f11df0422798971bc962fe076 ./generation_config.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

560b35ffd8a7a1f5b2d34a94a523659a ./pytorch_model.bin.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

85ae4132b11747b1609b8953c7086367 ./special_tokens_map.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

953dceae026a7aa88e062787c61ed9b0 ./tokenizer_config.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

e765a7740a908b5e166e95b6ee09b94b ./tokenizer.model.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

```

2. 根据这里→的[指定脚本](https://github.com/neukg/TechGPT/blob/main/utils/decrypt.py)解码模型权重:

```shell

for file in $(ls /path/encrypt_weight); do

python decrypt.py --type decrypt \

--input_file /path/encrypt_weight/"$file" \

--output_dir /path/to_finetuned_model \

--key_file /path/to_original_llama_7B/consolidated.00.pth

done

```

请将 `/path/encrypt_weight`替换为你下载的加密文件目录,把`/path/to_original_llama_7B`替换为你已有的合法LLaMA-7B权重目录,里面应该有原LLaMA权重文件`consolidated.00.pth`,将 `/path/to_finetuned_model` 替换为你要存放解码后文件的目录。

在解码完成后,应该可以得到以下文件:

```shell

./config.json

./generation_config.json

./pytorch_model.bin

./special_tokens_map.json

./tokenizer_config.json

./tokenizer.model

```

3. 请检查所有文件的检查和是否和下面给出的相同, 以保证解码出正确的文件:

```

md5sum ./*

6d5f0d60a6e36ebc1518624a46f5a717 ./config.json

2917a1cafb895cf57e746cfd7696bfe5 ./generation_config.json

0d322cb6bde34f7086791ce12fbf2bdc ./pytorch_model.bin

15f7a943faa91a794f38dd81a212cb01 ./special_tokens_map.json

08f6f621dba90b2a23c6f9f7af974621 ./tokenizer_config.json

6ffe559392973a92ea28032add2a8494 ./tokenizer.model

```

---

1. Git clone this model first.

```

md5sum ./*

6b2b545ff7bacaeec6297198b4b745dd ./config.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

4ba9cc7f11df0422798971bc962fe076 ./generation_config.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

560b35ffd8a7a1f5b2d34a94a523659a ./pytorch_model.bin.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

85ae4132b11747b1609b8953c7086367 ./special_tokens_map.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

953dceae026a7aa88e062787c61ed9b0 ./tokenizer_config.json.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

e765a7740a908b5e166e95b6ee09b94b ./tokenizer.model.e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855.enc

```

2. Decrypt the files using the scripts in https://github.com/neukg/TechGPT/blob/main/utils/decrypt.py

You can use the following command in Bash.

Please replace `/path/to_encrypted` with the path where you stored your encrypted file,

replace `/path/to_original_llama_7B` with the path where you stored your original LLaMA-7B file `consolidated.00.pth`,

and replace `/path/to_finetuned_model` with the path where you want to save your final trained model.

```bash

for file in $(ls /path/encrypt_weight); do

python decrypt.py --type decrypt \

--input_file /path/encrypt_weight/"$file" \

--output_dir /path/to_finetuned_model \

--key_file /path/to_original_llama_7B/consolidated.00.pth

done

```

After executing the aforementioned command, you will obtain the following files.

```

./config.json

./generation_config.json

./pytorch_model.bin

./special_tokens_map.json

./tokenizer_config.json

./tokenizer.model

```

3. Check md5sum

You can verify the integrity of these files by performing an MD5 checksum to ensure their complete recovery.

Here are the MD5 checksums for the relevant files:

```

md5sum ./*

6d5f0d60a6e36ebc1518624a46f5a717 ./config.json

2917a1cafb895cf57e746cfd7696bfe5 ./generation_config.json

0d322cb6bde34f7086791ce12fbf2bdc ./pytorch_model.bin

15f7a943faa91a794f38dd81a212cb01 ./special_tokens_map.json

08f6f621dba90b2a23c6f9f7af974621 ./tokenizer_config.json

6ffe559392973a92ea28032add2a8494 ./tokenizer.model

```

## 使用方法 Model Usage

请注意在**训练**和**推理**阶段, 模型接收的输入格式是一致的:

Please note that the input should be formatted as follows in both **training** and **inference**.

``` python

Human: {input} \n\nAssistant:

```

请在使用TechGPT之前保证你已经安装好`transfomrers`和`torch`:

```shell

pip install transformers

pip install torch

```

- 注意,必须保证安装的 `transformers` 的版本中已经有 `LlamaForCausalLM` 。<br>

- Note that you must ensure that the installed version of `transformers` already has `LlamaForCausalLM`.

[Example:](https://github.com/neukg/TechGPT/blob/main/inference.py)

``` python

from transformers import LlamaTokenizer, AutoModelForCausalLM, AutoConfig, GenerationConfig

import torch

ckpt_path = '/workspace/BELLE-train/Version_raw/'

load_type = torch.float16

device = torch.device(0)

tokenizer = LlamaTokenizer.from_pretrained(ckpt_path)

tokenizer.pad_token_id = 0

tokenizer.bos_token_id = 1

tokenizer.eos_token_id = 2

tokenizer.padding_side = "left"

model_config = AutoConfig.from_pretrained(ckpt_path)

model = AutoModelForCausalLM.from_pretrained(ckpt_path, torch_dtype=load_type, config=model_config)

model.to(device)

model.eval()

prompt = "Human: 请把下列标题扩写成摘要, 不少于100字: 基于视觉语言多模态的实体关系联合抽取的研究 \n\nAssistant: "

inputs = tokenizer(prompt, return_tensors="pt")

input_ids = inputs["input_ids"].to(device)

generation_config = GenerationConfig(

temperature=0.1,

top_p=0.75,

top_k=40,

num_beams=1,

bos_token_id=1,

eos_token_id=2,

pad_token_id=0,

max_new_tokens=128,

min_new_tokens=10,

do_sample=True,

)

with torch.no_grad():

generation_output = model.generate(

input_ids=input_ids,

generation_config=generation_config,

return_dict_in_generate=True,

output_scores=True,

repetition_penalty=1.2,

)

output = generation_output.sequences[0]

output = tokenizer.decode(output, skip_special_tokens=True)

print(output)

```

输出:

```

Human: 请把下列标题扩写成摘要, 不少于100字: 基于视觉语言多模态的实体关系联合抽取的研究

Assistant: 文本:基于视觉语言的多模态的实体关系联合抽取是自然语言处理领域中的一个重要问题。该文提出了一种新的方法,利用深度学习技术来提取图像中的语义信息,并使用这些信息来识别和抽取图像中的人、物、地点等实体之间的关系。实验结果表明,该方法在多个基准数据集上取得了很好的性能表现,证明了其有效性和实用性。

```

## 免责声明 Disclaimers

该项目仅供学习交流使用,禁止用于商业用途。在使用过程中,使用者需认真阅读并遵守以下声明:

1. 本项目仅为大模型测试功能而生,使用者需自行承担风险和责任,如因使用不当而导致的任何损失或伤害,本项目概不负责。

2. 本项目中出现的第三方链接或库仅为提供便利而存在,其内容和观点与本项目无关。使用者在使用时需自行辨别,本项目不承担任何连带责任;

3. 使用者在测试和使用模型时,应遵守相关法律法规,如因使用不当而造成损失的,本项目不承担责任,使用者应自行承担;若项目出现任何错误,请向我方反馈,以助于我们及时修复;

4. 本模型中出现的任何违反法律法规或公序良俗的回答,均不代表本项目观点和立场,我们将不断完善模型回答以使其更符合社会伦理和道德规范。

使用本项目即表示您已经仔细阅读、理解并同意遵守以上免责声明。本项目保留在不预先通知任何人的情况下修改本声明的权利。

---

This project is for learning exchange only, commercial use is prohibited. During use, users should carefully read and abide by the following statements:

1. This project is only for the test function of the large model, and the user shall bear the risks and responsibilities. This project shall not be responsible for any loss or injury caused by improper use.

2. The third-party links or libraries appearing in this project exist only for convenience, and their content and opinions have nothing to do with this project. Users need to identify themselves when using it, and this project does not bear any joint and several liabilities;

3. Users should abide by the relevant laws and regulations when testing and using the model. If the loss is caused by improper use, the project will not bear the responsibility, and the user should bear it by themselves; if there is any error in the project, please feedback to us. to help us fix it in a timely manner;

4. Any answers in this model that violate laws and regulations or public order and good customs do not represent the views and positions of this project. We will continue to improve the model answers to make them more in line with social ethics and moral norms.

Using this project means that you have carefully read, understood and agreed to abide by the above disclaimer. The project reserves the right to modify this statement without prior notice to anyone.

## Citation

如果使用本项目的代码、数据或模型,请引用本项目。

Please cite our project when using our code, data or model.

```

@misc{TechGPT,

author = {Feiliang Ren, Ning An, Qi Ma, Hei Lei},

title = {TechGPT: Technology-Oriented Generative Pretrained Transformer},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/neukg/TechGPT}},

}

```

**我们对BELLE的工作表示衷心的感谢!**

**Our sincere thanks to BELLE for their work!**

```

@misc{ji2023better,

title={Towards Better Instruction Following Language Models for Chinese: Investigating the Impact of Training Data and Evaluation},

author={Yunjie Ji and Yan Gong and Yong Deng and Yiping Peng and Qiang Niu and Baochang Ma and Xiangang Li},

year={2023},

eprint={2304.07854},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{BELLE,

author = {Yunjie Ji, Yong Deng, Yan Gong, Yiping Peng, Qiang Niu, Baochang Ma, Xiangang Li},

title = {BELLE: Be Everyone's Large Language model Engine},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/LianjiaTech/BELLE}},

}

```

|

TurkuNLP/gpt3-finnish-xl

|

TurkuNLP

| 2023-06-27T06:51:26Z | 164 | 7 |

transformers

|

[

"transformers",

"pytorch",

"bloom",

"feature-extraction",

"text-generation",

"fi",

"arxiv:2203.02155",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-02-15T10:49:56Z |

---

language:

- fi

pipeline_tag: text-generation

license: apache-2.0

---

Generative Pretrained Transformer with 1.5B parameteres for Finnish.

TurkuNLP Finnish GPT-3-models are a model family of pretrained monolingual GPT-style language models that are based on BLOOM-architecture.

Note that the models are pure language models, meaning that they are not [instruction finetuned](https://arxiv.org/abs/2203.02155) for dialogue

or answering questions.

These models are intended to be used as foundational models that can be e.g. instruction finetuned to serve as modern chat-models.

All models are trained for 300B tokens.

**Parameters**

| Model | Layers | Dim | Heads | Params |

|--------|--------|------|-------|--------|

| Small | 12 | 768 | 12 | 186M |

| Medium | 24 | 1024 | 16 | 437M |

| Large | 24 | 1536 | 16 | 881M |

| XL | 24 | 2064 | 24 | 1.5B |

| ”3B” | 32 | 2560 | 32 | 2.8B |

| ”8B” | 32 | 4096 | 32 | 7.5B |

| "13B" | 40 | 5120 | 40 | 13.3B |

**Datasets**

We used a combination of multiple Finnish resources.

* Finnish Internet Parsebank https://turkunlp.org/finnish_nlp.html

mC4 multilingual colossal, cleaned Common Crawl https://huggingface.co/datasets/mc4

* Common Crawl Finnish https://TODO

* Finnish Wikipedia https://fi.wikipedia.org/wiki

* Lönnrot Projekti Lönnrot http://www.lonnrot.net/

* ePub National library ”epub” collection

* National library ”lehdet” collection

* Suomi24 The Suomi 24 Corpus 2001-2020 http://urn.fi/urn:nbn:fi:lb-2021101527

* Reddit r/Suomi submissions and comments https://www.reddit.com/r/Suomi

* STT Finnish News Agency Archive 1992-2018 http://urn.fi/urn:nbn:fi:lb-2019041501

* Yle Finnish News Archive 2011-2018 http://urn.fi/urn:nbn:fi:lb-2017070501

* Yle Finnish News Archive 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050401

* Yle News Archive Easy-to-read Finnish 2011-2018 http://urn.fi/urn:nbn:fi:lb-2019050901

* Yle News Archive Easy-to-read Finnish 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050701

* ROOTS TODO

**Sampling ratios**

|Dataset | Chars | Ratio | Weight | W.Ratio |

|----------|--------|---------|--------|---------|

|Parsebank | 35.0B | 16.9\% | 1.5 | 22.7\%|

|mC4-Fi | 46.3B | 22.4\% | 1.0 | 20.0\%|

|CC-Fi | 79.6B | 38.5\% | 1.0 | 34.4\%|

|Fiwiki | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Lönnrot | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Yle | 1.6B | 0.8\% | 2.0 | 1.4\%|

|STT | 2.2B | 1.1\% | 2.0 | 1.9\%|

|ePub | 13.5B | 6.5\% | 1.0 | 5.8\%|

|Lehdet | 5.8B | 2.8\% | 1.0 | 2.5\%|

|Suomi24 | 20.6B | 9.9\% | 1.0 | 8.9\%|

|Reddit-Fi | 0.7B | 0.4\% | 1.0 | 0.3\%|

|**TOTAL** | **207.0B** | **100.0\%** | **N/A** | **100.0\%** |

More documentation and a paper coming soon.

|

TurkuNLP/gpt3-finnish-3B

|

TurkuNLP

| 2023-06-27T06:48:57Z | 78 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bloom",

"feature-extraction",

"text-generation",

"fi",

"arxiv:2203.02155",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-02-15T11:40:09Z |

---

language:

- fi

pipeline_tag: text-generation

license: apache-2.0

---

Generative Pretrained Transformer with 3B parameteres for Finnish.

TurkuNLP Finnish GPT-3-models are a model family of pretrained monolingual GPT-style language models that are based on BLOOM-architecture.

Note that the models are pure language models, meaning that they are not [instruction finetuned](https://arxiv.org/abs/2203.02155) for dialogue

or answering questions.

These models are intended to be used as foundational models that can be e.g. instruction finetuned to serve as modern chat-models.

All models are trained for 300B tokens.

**Parameters**

| Model | Layers | Dim | Heads | Params |

|--------|--------|------|-------|--------|

| Small | 12 | 768 | 12 | 186M |

| Medium | 24 | 1024 | 16 | 437M |

| Large | 24 | 1536 | 16 | 881M |

| XL | 24 | 2064 | 24 | 1.5B |

| ”3B” | 32 | 2560 | 32 | 2.8B |

| ”8B” | 32 | 4096 | 32 | 7.5B |

| "13B" | 40 | 5120 | 40 | 13.3B |

**Datasets**

We used a combination of multiple Finnish resources.

* Finnish Internet Parsebank https://turkunlp.org/finnish_nlp.html

mC4 multilingual colossal, cleaned Common Crawl https://huggingface.co/datasets/mc4

* Common Crawl Finnish https://TODO

* Finnish Wikipedia https://fi.wikipedia.org/wiki

* Lönnrot Projekti Lönnrot http://www.lonnrot.net/

* ePub National library ”epub” collection

* National library ”lehdet” collection

* Suomi24 The Suomi 24 Corpus 2001-2020 http://urn.fi/urn:nbn:fi:lb-2021101527

* Reddit r/Suomi submissions and comments https://www.reddit.com/r/Suomi

* STT Finnish News Agency Archive 1992-2018 http://urn.fi/urn:nbn:fi:lb-2019041501

* Yle Finnish News Archive 2011-2018 http://urn.fi/urn:nbn:fi:lb-2017070501

* Yle Finnish News Archive 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050401

* Yle News Archive Easy-to-read Finnish 2011-2018 http://urn.fi/urn:nbn:fi:lb-2019050901

* Yle News Archive Easy-to-read Finnish 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050701

* ROOTS TODO

**Sampling ratios**

|Dataset | Chars | Ratio | Weight | W.Ratio |

|----------|--------|---------|--------|---------|

|Parsebank | 35.0B | 16.9\% | 1.5 | 22.7\%|

|mC4-Fi | 46.3B | 22.4\% | 1.0 | 20.0\%|

|CC-Fi | 79.6B | 38.5\% | 1.0 | 34.4\%|

|Fiwiki | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Lönnrot | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Yle | 1.6B | 0.8\% | 2.0 | 1.4\%|

|STT | 2.2B | 1.1\% | 2.0 | 1.9\%|

|ePub | 13.5B | 6.5\% | 1.0 | 5.8\%|

|Lehdet | 5.8B | 2.8\% | 1.0 | 2.5\%|

|Suomi24 | 20.6B | 9.9\% | 1.0 | 8.9\%|

|Reddit-Fi | 0.7B | 0.4\% | 1.0 | 0.3\%|

|**TOTAL** | **207.0B** | **100.0\%** | **N/A** | **100.0\%** |

More documentation and a paper coming soon.

|

TurkuNLP/gpt3-finnish-small

|

TurkuNLP

| 2023-06-27T06:48:35Z | 3,087 | 12 |

transformers

|

[

"transformers",

"pytorch",

"bloom",

"feature-extraction",

"text-generation",

"fi",

"arxiv:2203.02155",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-02-15T10:08:16Z |

---

language:

- fi

pipeline_tag: text-generation

license: apache-2.0

---

Generative Pretrained Transformer with 186M parameteres for Finnish.

TurkuNLP Finnish GPT-3-models are a model family of pretrained monolingual GPT-style language models that are based on BLOOM-architecture.

Note that the models are pure language models, meaning that they are not [instruction finetuned](https://arxiv.org/abs/2203.02155) for dialogue

or answering questions.

These models are intended to be used as foundational models that can be e.g. instruction finetuned to serve as modern chat-models.

All models are trained for 300B tokens.

**Parameters**

| Model | Layers | Dim | Heads | Params |

|--------|--------|------|-------|--------|

| Small | 12 | 768 | 12 | 186M |

| Medium | 24 | 1024 | 16 | 437M |

| Large | 24 | 1536 | 16 | 881M |

| XL | 24 | 2064 | 24 | 1.5B |

| ”3B” | 32 | 2560 | 32 | 2.8B |

| ”8B” | 32 | 4096 | 32 | 7.5B |

| "13B" | 40 | 5120 | 40 | 13.3B |

**Datasets**

We used a combination of multiple Finnish resources.

* Finnish Internet Parsebank https://turkunlp.org/finnish_nlp.html

mC4 multilingual colossal, cleaned Common Crawl https://huggingface.co/datasets/mc4

* Common Crawl Finnish https://TODO

* Finnish Wikipedia https://fi.wikipedia.org/wiki

* Lönnrot Projekti Lönnrot http://www.lonnrot.net/

* ePub National library ”epub” collection

* National library ”lehdet” collection

* Suomi24 The Suomi 24 Corpus 2001-2020 http://urn.fi/urn:nbn:fi:lb-2021101527

* Reddit r/Suomi submissions and comments https://www.reddit.com/r/Suomi

* STT Finnish News Agency Archive 1992-2018 http://urn.fi/urn:nbn:fi:lb-2019041501

* Yle Finnish News Archive 2011-2018 http://urn.fi/urn:nbn:fi:lb-2017070501

* Yle Finnish News Archive 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050401

* Yle News Archive Easy-to-read Finnish 2011-2018 http://urn.fi/urn:nbn:fi:lb-2019050901

* Yle News Archive Easy-to-read Finnish 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050701

* ROOTS TODO

**Sampling ratios**

|Dataset | Chars | Ratio | Weight | W.Ratio |

|----------|--------|---------|--------|---------|

|Parsebank | 35.0B | 16.9\% | 1.5 | 22.7\%|

|mC4-Fi | 46.3B | 22.4\% | 1.0 | 20.0\%|

|CC-Fi | 79.6B | 38.5\% | 1.0 | 34.4\%|

|Fiwiki | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Lönnrot | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Yle | 1.6B | 0.8\% | 2.0 | 1.4\%|

|STT | 2.2B | 1.1\% | 2.0 | 1.9\%|

|ePub | 13.5B | 6.5\% | 1.0 | 5.8\%|

|Lehdet | 5.8B | 2.8\% | 1.0 | 2.5\%|

|Suomi24 | 20.6B | 9.9\% | 1.0 | 8.9\%|

|Reddit-Fi | 0.7B | 0.4\% | 1.0 | 0.3\%|

|**TOTAL** | **207.0B** | **100.0\%** | **N/A** | **100.0\%** |

More documentation and a paper coming soon.

|

TurkuNLP/gpt3-finnish-large

|

TurkuNLP

| 2023-06-27T06:48:11Z | 10,788 | 7 |

transformers

|

[

"transformers",

"pytorch",

"bloom",

"feature-extraction",

"text-generation",

"fi",

"arxiv:2203.02155",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-02-15T10:26:29Z |

---

language:

- fi

pipeline_tag: text-generation

license: apache-2.0

---

Generative Pretrained Transformer with 881M parameteres for Finnish.

TurkuNLP Finnish GPT-3-models are a model family of pretrained monolingual GPT-style language models that are based on BLOOM-architecture.

Note that the models are pure language models, meaning that they are not [instruction finetuned](https://arxiv.org/abs/2203.02155) for dialogue

or answering questions.

These models are intended to be used as foundational models that can be e.g. instruction finetuned to serve as modern chat-models.

All models are trained for 300B tokens.

**Parameters**

| Model | Layers | Dim | Heads | Params |

|--------|--------|------|-------|--------|

| Small | 12 | 768 | 12 | 186M |

| Medium | 24 | 1024 | 16 | 437M |

| Large | 24 | 1536 | 16 | 881M |

| XL | 24 | 2064 | 24 | 1.5B |

| ”3B” | 32 | 2560 | 32 | 2.8B |

| ”8B” | 32 | 4096 | 32 | 7.5B |

| "13B" | 40 | 5120 | 40 | 13.3B |

**Datasets**

We used a combination of multiple Finnish resources.

* Finnish Internet Parsebank https://turkunlp.org/finnish_nlp.html

mC4 multilingual colossal, cleaned Common Crawl https://huggingface.co/datasets/mc4

* Common Crawl Finnish https://TODO

* Finnish Wikipedia https://fi.wikipedia.org/wiki

* Lönnrot Projekti Lönnrot http://www.lonnrot.net/

* ePub National library ”epub” collection

* National library ”lehdet” collection

* Suomi24 The Suomi 24 Corpus 2001-2020 http://urn.fi/urn:nbn:fi:lb-2021101527

* Reddit r/Suomi submissions and comments https://www.reddit.com/r/Suomi

* STT Finnish News Agency Archive 1992-2018 http://urn.fi/urn:nbn:fi:lb-2019041501

* Yle Finnish News Archive 2011-2018 http://urn.fi/urn:nbn:fi:lb-2017070501

* Yle Finnish News Archive 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050401

* Yle News Archive Easy-to-read Finnish 2011-2018 http://urn.fi/urn:nbn:fi:lb-2019050901

* Yle News Archive Easy-to-read Finnish 2019-2020 http://urn.fi/urn:nbn:fi:lb-2021050701

* ROOTS TODO

**Sampling ratios**

|Dataset | Chars | Ratio | Weight | W.Ratio |

|----------|--------|---------|--------|---------|

|Parsebank | 35.0B | 16.9\% | 1.5 | 22.7\%|

|mC4-Fi | 46.3B | 22.4\% | 1.0 | 20.0\%|

|CC-Fi | 79.6B | 38.5\% | 1.0 | 34.4\%|

|Fiwiki | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Lönnrot | 0.8B | 0.4\% | 3.0 | 1.0\%|

|Yle | 1.6B | 0.8\% | 2.0 | 1.4\%|

|STT | 2.2B | 1.1\% | 2.0 | 1.9\%|

|ePub | 13.5B | 6.5\% | 1.0 | 5.8\%|

|Lehdet | 5.8B | 2.8\% | 1.0 | 2.5\%|

|Suomi24 | 20.6B | 9.9\% | 1.0 | 8.9\%|

|Reddit-Fi | 0.7B | 0.4\% | 1.0 | 0.3\%|

|**TOTAL** | **207.0B** | **100.0\%** | **N/A** | **100.0\%** |

More documentation and a paper coming soon.

|

Paulsunny/whisper-small-hi

|

Paulsunny

| 2023-06-27T06:47:09Z | 77 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"hi",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2023-06-27T06:40:16Z |

---

language:

- hi

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Small Hi - Sanchit Gandhi

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Hi - Sanchit Gandhi

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Tokenizers 0.13.3

|

spitfire4794/Kandinsky_2.1

|

spitfire4794

| 2023-06-27T06:45:56Z | 0 | 0 |

pytorch

|

[

"pytorch",

"Kandinsky",

"text-image",

"text2image",

"diffusion",

"latent diffusion",

"mCLIP-XLMR",

"mT5",

"text-to-image",

"license:apache-2.0",

"region:us"

] |

text-to-image

| 2023-06-27T05:54:36Z |

---

license: apache-2.0

tags:

- Kandinsky

- text-image

- text2image

- diffusion

- latent diffusion

- mCLIP-XLMR

- mT5

pipeline_tag: text-to-image

library_name: pytorch

---

# Kandinsky 2.1

[Open In Colab](https://colab.research.google.com/drive/1xSbu-b-EwYd6GdaFPRVgvXBX_mciZ41e?usp=sharing)

[GitHub repository](https://github.com/ai-forever/Kandinsky-2)

[Habr post](https://habr.com/ru/company/sberbank/blog/725282/)

[Demo](https://rudalle.ru/)

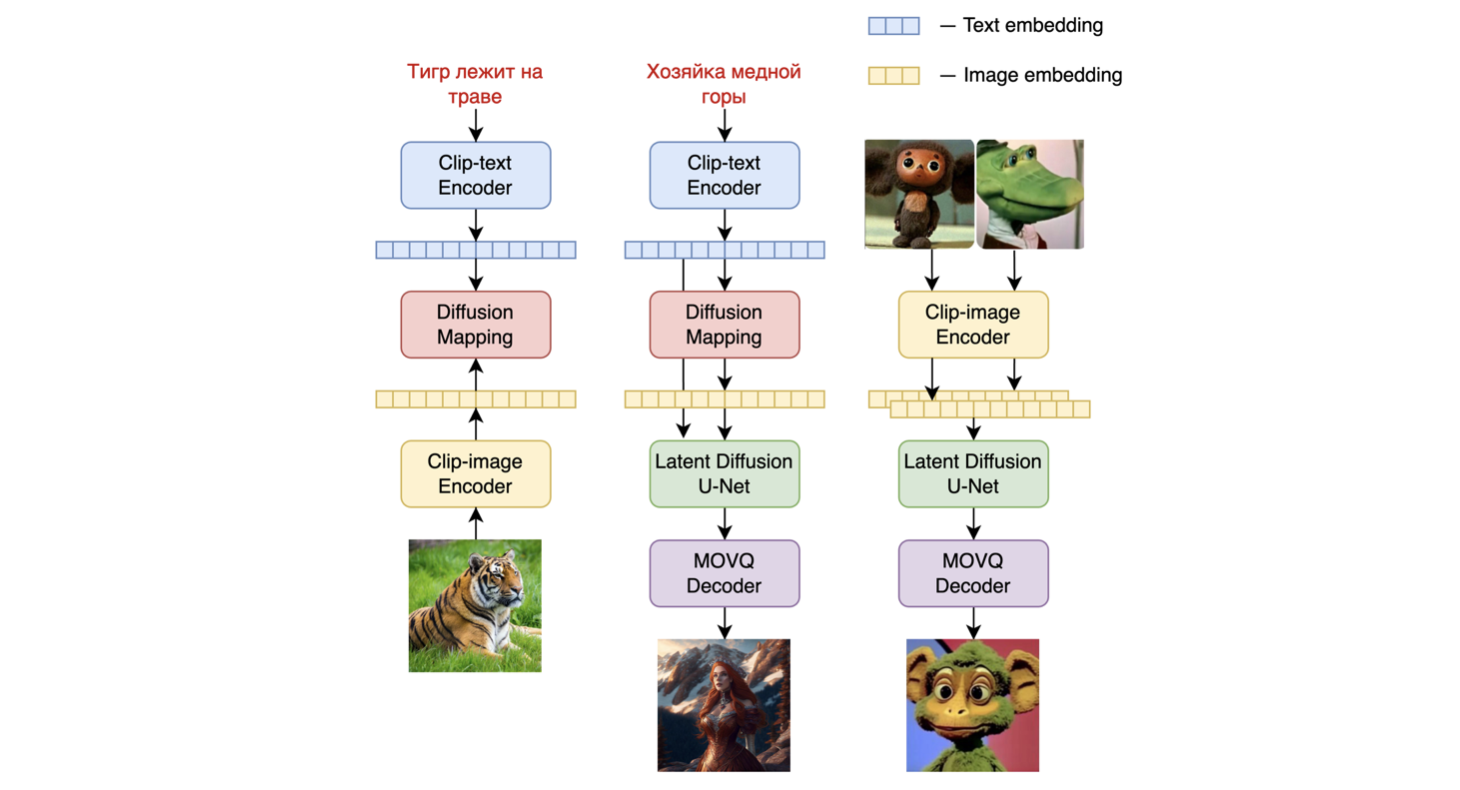

## Architecture

Kandinsky 2.1 inherits best practicies from Dall-E 2 and Latent diffusion, while introducing some new ideas.

As text and image encoder it uses CLIP model and diffusion image prior (mapping) between latent spaces of CLIP modalities. This approach increases the visual performance of the model and unveils new horizons in blending images and text-guided image manipulation.

For diffusion mapping of latent spaces we use transformer with num_layers=20, num_heads=32 and hidden_size=2048.

Other architecture parts:

+ Text encoder (XLM-Roberta-Large-Vit-L-14) - 560M

+ Diffusion Image Prior — 1B

+ CLIP image encoder (ViT-L/14) - 427M

+ Latent Diffusion U-Net - 1.22B

+ MoVQ encoder/decoder - 67M

# Authors

+ Arseniy Shakhmatov: [Github](https://github.com/cene555), [Blog](https://t.me/gradientdip)

+ Anton Razzhigaev: [Github](https://github.com/razzant), [Blog](https://t.me/abstractDL)

+ Aleksandr Nikolich: [Github](https://github.com/AlexWortega), [Blog](https://t.me/lovedeathtransformers)

+ Vladimir Arkhipkin: [Github](https://github.com/oriBetelgeuse)

+ Igor Pavlov: [Github](https://github.com/boomb0om)

+ Andrey Kuznetsov: [Github](https://github.com/kuznetsoffandrey)

+ Denis Dimitrov: [Github](https://github.com/denndimitrov)

|

joohwan/xlmr

|

joohwan

| 2023-06-27T06:30:36Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-06-27T06:08:00Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: xlmr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlmr

This model is a fine-tuned version of [xlm-roberta-large](https://huggingface.co/xlm-roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6138

- Accuracy: 0.9163

- F1: 0.9153

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.3962 | 1.0 | 450 | 0.3872 | 0.9011 | 0.9005 |

| 0.0584 | 2.0 | 900 | 0.4941 | 0.9180 | 0.9171 |

| 0.0284 | 3.0 | 1350 | 0.6192 | 0.9138 | 0.9127 |

| 0.0144 | 4.0 | 1800 | 0.5967 | 0.9224 | 0.9214 |

| 0.0103 | 5.0 | 2250 | 0.6138 | 0.9163 | 0.9153 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

kejolong/bayonetta

|

kejolong

| 2023-06-27T06:25:57Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-06-27T06:19:24Z |

---

license: creativeml-openrail-m

---

|

mandliya/default-taxi-v3

|

mandliya

| 2023-06-27T06:08:55Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T06:08:54Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: default-taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="mandliya/default-taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

Shridipta-06/rl_course_vizdoom_health_gathering_supreme

|

Shridipta-06

| 2023-06-27T06:01:26Z | 0 | 0 |

sample-factory

|

[

"sample-factory",

"tensorboard",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T02:20:28Z |

---

library_name: sample-factory

tags:

- deep-reinforcement-learning

- reinforcement-learning

- sample-factory

model-index:

- name: APPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: doom_health_gathering_supreme

type: doom_health_gathering_supreme

metrics:

- type: mean_reward

value: 10.81 +/- 3.54

name: mean_reward

verified: false

---

A(n) **APPO** model trained on the **doom_health_gathering_supreme** environment.

This model was trained using Sample-Factory 2.0: https://github.com/alex-petrenko/sample-factory.

Documentation for how to use Sample-Factory can be found at https://www.samplefactory.dev/

## Downloading the model

After installing Sample-Factory, download the model with:

```

python -m sample_factory.huggingface.load_from_hub -r Shridipta-06/rl_course_vizdoom_health_gathering_supreme

```

## Using the model

To run the model after download, use the `enjoy` script corresponding to this environment:

```

python -m .usr.local.lib.python3.10.dist-packages.ipykernel_launcher --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme

```

You can also upload models to the Hugging Face Hub using the same script with the `--push_to_hub` flag.

See https://www.samplefactory.dev/10-huggingface/huggingface/ for more details

## Training with this model

To continue training with this model, use the `train` script corresponding to this environment:

```

python -m .usr.local.lib.python3.10.dist-packages.ipykernel_launcher --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme --restart_behavior=resume --train_for_env_steps=10000000000

```

Note, you may have to adjust `--train_for_env_steps` to a suitably high number as the experiment will resume at the number of steps it concluded at.

|

97jmlr/lander2

|

97jmlr

| 2023-06-27T05:51:34Z | 0 | 0 | null |

[

"tensorboard",

"LunarLander-v2",

"ppo",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"deep-rl-course",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-26T23:33:06Z |

---

tags:

- LunarLander-v2

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-course

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -220.16 +/- 118.05

name: mean_reward

verified: false

---

# PPO Agent Playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2.

# Hyperparameters

```python

{'exp_name': 'ppo'

'seed': 1

'torch_deterministic': True

'cuda': True

'track': False

'wandb_project_name': 'cleanRL'

'wandb_entity': None

'capture_video': False

'env_id': 'LunarLander-v2'

'total_timesteps': 1000

'learning_rate': 0.00025

'num_envs': 4

'num_steps': 128

'anneal_lr': True

'gae': True

'gamma': 0.99

'gae_lambda': 0.95

'num_minibatches': 4

'update_epochs': 4

'norm_adv': True

'clip_coef': 0.2

'clip_vloss': True

'ent_coef': 0.01

'vf_coef': 0.5

'max_grad_norm': 0.5

'target_kl': None

'repo_id': '97jmlr/lander2'

'batch_size': 512

'minibatch_size': 128}

```

|

S3S3/Reinforce-CartPole-v1

|

S3S3

| 2023-06-27T05:34:52Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T05:34:43Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

yhna/dqn-SpaceInvadersNoFrameskip-v4

|

yhna

| 2023-06-27T05:29:43Z | 11 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-26T08:52:38Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 820.50 +/- 249.79

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga yhna -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga yhna -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga yhna

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

# Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

gabrielZang/alpaca7B-lora-fine-tuning-with-test-data-int4

|

gabrielZang

| 2023-06-27T05:23:07Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-06-27T05:23:00Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: bfloat16

### Framework versions

- PEFT 0.4.0.dev0

|

tlsalfm820/wav2vec2-base-librispeech

|

tlsalfm820

| 2023-06-27T05:03:39Z | 79 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2023-06-20T06:09:04Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: wav2vec2-base-librispeech

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-librispeech

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2162

- Wer: 0.1419

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.606 | 4.13 | 500 | 2.0411 | 0.7943 |

| 0.3862 | 8.26 | 1000 | 0.3058 | 0.2202 |

| 0.1253 | 12.4 | 1500 | 0.2450 | 0.1908 |

| 0.0794 | 16.53 | 2000 | 0.2152 | 0.1531 |

| 0.0566 | 20.66 | 2500 | 0.2012 | 0.1457 |

| 0.0446 | 24.79 | 3000 | 0.2061 | 0.1432 |

| 0.0363 | 28.93 | 3500 | 0.2162 | 0.1419 |

### Framework versions

- Transformers 4.29.2

- Pytorch 2.0.1+cu118

- Datasets 2.12.0

- Tokenizers 0.13.3

|

AlgorithmicResearchGroup/flan-t5-xxl-arxiv-cs-ml-closed-qa

|

AlgorithmicResearchGroup

| 2023-06-27T04:40:26Z | 10 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"arxiv",

"summarization",

"en",

"dataset:ArtifactAI/arxiv-cs-ml-instruct-tune-50k",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

summarization

| 2023-06-26T14:17:24Z |

---

license: apache-2.0

language:

- en

pipeline_tag: summarization

widget:

- text: What is an LSTM?

example_title: Question Answering

tags:

- arxiv

datasets:

- ArtifactAI/arxiv-cs-ml-instruct-tune-50k

---

# Table of Contents

0. [TL;DR](#TL;DR)

1. [Model Details](#model-details)

2. [Usage](#usage)

3. [Uses](#uses)

4. [Citation](#citation)

# TL;DR

This is a FLAN-T5-XXL model trained on [ArtifactAI/arxiv-cs-ml-instruct-50k](https://huggingface.co/datasets/ArtifactAI/arxiv-cs-ml-instruct-50k). This model is for research purposes only and ***should not be used in production settings***.

## Model Description

- **Model type:** Language model

- **Language(s) (NLP):** English

- **License:** Apache 2.0

- **Related Models:** [All FLAN-T5 Checkpoints](https://huggingface.co/models?search=flan-t5)

# Usage

Find below some example scripts on how to use the model in `transformers`:

## Using the Pytorch model

```python

import torch

from peft import PeftModel, PeftConfig

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

# Load peft config for pre-trained checkpoint etc.

peft_model_id = "ArtifactAI/flant5-xxl-math-full-training-run-one"

config = PeftConfig.from_pretrained(peft_model_id)

# load base LLM model and tokenizer

model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path, load_in_8bit=True, device_map={"":0})

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

# Load the Lora model

model = PeftModel.from_pretrained(model, peft_model_id, device_map={"":0})

model.eval()

input_ids = tokenizer("What is the peak phase of T-eV?", return_tensors="pt", truncation=True).input_ids.cuda()

# with torch.inference_mode():

outputs = model.generate(input_ids=input_ids, max_new_tokens=1000, do_sample=True, top_p=0.9)

print(f"summary: {tokenizer.batch_decode(outputs.detach().cpu().numpy(), skip_special_tokens=True)[0]}")

```

## Training Data

The model was trained on [ArtifactAI/arxiv-math-instruct-50k](https://huggingface.co/datasets/ArtifactAI/arxiv-cs-ml-instruct-50k), a dataset of question/answer pairs. Questions are generated using the t5-base model, while the answers are generated using the GPT-3.5-turbo model.

# Citation

```

@misc{flan-t5-xxl-arxiv-cs-ml-zeroshot-qa,

title={flan-t5-xxl-arxiv-cs-ml-zeroshot-qa},

author={Matthew Kenney},

year={2023}

}

```

|

AlgorithmicResearchGroup/flan-t5-xxl-arxiv-math-closed-qa

|

AlgorithmicResearchGroup

| 2023-06-27T04:39:54Z | 13 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"arxiv",

"summarization",

"en",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

summarization

| 2023-06-24T16:45:00Z |

---

license: apache-2.0

language:

- en

pipeline_tag: summarization

widget:

- text: What is the peak phase of T-eV?

example_title: Question Answering

tags:

- arxiv

---

# Table of Contents

0. [TL;DR](#TL;DR)

1. [Model Details](#model-details)

2. [Usage](#usage)

3. [Uses](#uses)

4. [Citation](#citation)

# TL;DR

This is a FLAN-T5-XXL model trained on [ArtifactAI/arxiv-math-instruct-50k](https://huggingface.co/datasets/ArtifactAI/arxiv-math-instruct-50k). This model is for research purposes only and ***should not be used in production settings***.

## Model Description

- **Model type:** Language model

- **Language(s) (NLP):** English

- **License:** Apache 2.0

- **Related Models:** [All FLAN-T5 Checkpoints](https://huggingface.co/models?search=flan-t5)

# Usage

Find below some example scripts on how to use the model in `transformers`:

## Using the Pytorch model

```python

import torch

from peft import PeftModel, PeftConfig

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

# Load peft config for pre-trained checkpoint etc.

peft_model_id = "ArtifactAI/flant5-xxl-math-full-training-run-one"

config = PeftConfig.from_pretrained(peft_model_id)

# load base LLM model and tokenizer

model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path, load_in_8bit=True, device_map={"":0})

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

# Load the Lora model

model = PeftModel.from_pretrained(model, peft_model_id, device_map={"":0})

model.eval()

input_ids = tokenizer("What is the peak phase of T-eV?", return_tensors="pt", truncation=True).input_ids.cuda()

# with torch.inference_mode():

outputs = model.generate(input_ids=input_ids, max_new_tokens=1000, do_sample=True, top_p=0.9)

print(f"summary: {tokenizer.batch_decode(outputs.detach().cpu().numpy(), skip_special_tokens=True)[0]}")

```

## Training Data

The model was trained on [ArtifactAI/arxiv-math-instruct-50k](https://huggingface.co/datasets/ArtifactAI/arxiv-math-instruct-50k), a dataset of question/answer pairs. Questions are generated using the t5-base model, while the answers are generated using the GPT-3.5-turbo model.

# Citation

```

@misc{flan-t5-xxl-arxiv-math-zeroshot-qa,

title={flan-t5-xxl-arxiv-math-zeroshot-qa},

author={Matthew Kenney},

year={2023}

}

```

|

arminmrm93/ppo-PyramidTraining

|

arminmrm93

| 2023-06-27T04:21:03Z | 0 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"Pyramids",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] |

reinforcement-learning

| 2023-06-27T04:21:00Z |

---

library_name: ml-agents

tags:

- Pyramids

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: arminmrm93/ppo-PyramidTraining

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

draziert/reinforce-PixelCopter-v1

|

draziert

| 2023-06-27T03:58:30Z | 0 | 0 | null |

[

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-26T15:51:51Z |

---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: reinforce-PixelCopter-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 45.40 +/- 37.27

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

Holmodi/q-FrozenLake-v1-4x4-noSlippery

|

Holmodi

| 2023-06-27T03:44:06Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-06-27T03:44:04Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index: