| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

ShannonAI/ChineseBERT-large

|

ShannonAI

| 2022-06-19T12:07:31Z | 23 | 5 |

transformers

|

[

"transformers",

"pytorch",

"arxiv:2106.16038",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

# ChineseBERT-large

This repository contains the code, model, and dataset for **ChineseBERT**, published at ACL 2021.

paper:

**[ChineseBERT: Chinese Pretraining Enhanced by Glyph and Pinyin Information](https://arxiv.org/abs/2106.16038)**

*Zijun Sun, Xiaoya Li, Xiaofei Sun, Yuxian Meng, Xiang Ao, Qing He, Fei Wu and Jiwei Li*

code:

[ChineseBERT github link](https://github.com/ShannonAI/ChineseBert)

## Model description

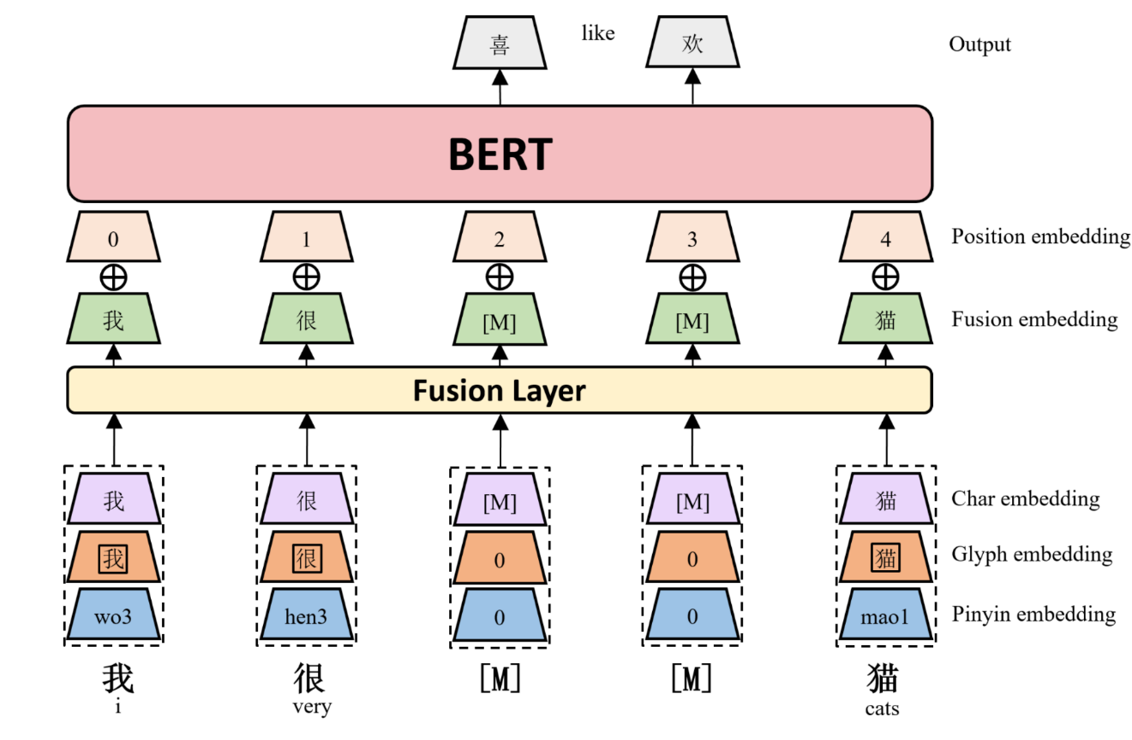

We propose ChineseBERT, which incorporates both the glyph and pinyin information of Chinese characters into language model pretraining.

First, for each Chinese character, we obtain three kinds of embeddings:

- **Char Embedding:** the same as the original BERT token embedding.

- **Glyph Embedding:** captures visual features based on different fonts of a Chinese character.

- **Pinyin Embedding:** captures phonetic features from the pinyin sequence of a Chinese character.

Then, the char, glyph, and pinyin embeddings are concatenated and mapped to a D-dimensional embedding through a fully connected layer to form the fusion embedding.

Finally, the fusion embedding is added to the position embedding, and the result is fed as input to the BERT model.

The image above gives an overview of the ChineseBERT architecture.

ChineseBERT leverages the glyph and pinyin information of Chinese characters to enhance the model's ability to capture context semantics from surface character forms and to disambiguate polyphonic characters in Chinese.
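The fusion step described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the ChineseBERT implementation: the dimensions are tiny, the weights are hand-picked rather than learned, the fully connected layer has no bias, and the helper names (`matvec`, `fusion_embedding`, `add_position`) are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weight: Matrix, x: Vector) -> Vector:
    """Multiply a (D x n) weight matrix by an n-dimensional vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def fusion_embedding(char: Vector, glyph: Vector, pinyin: Vector, weight: Matrix) -> Vector:
    """Concatenate the three embeddings (length 3d) and map them to D dimensions."""
    concatenated = char + glyph + pinyin
    return matvec(weight, concatenated)


def add_position(fusion: Vector, position: Vector) -> Vector:
    """Element-wise sum of the fusion embedding and the position embedding."""
    return [f + p for f, p in zip(fusion, position)]


# Toy values for a single character: d = 2 per embedding, D = 2 after fusion.
char_emb = [1.0, 0.0]
glyph_emb = [0.0, 2.0]
pinyin_emb = [3.0, 1.0]
W = [
    [1.0, 0.0, 1.0, 0.0, 1.0, 0.0],  # sums the first component of each embedding
    [0.0, 1.0, 0.0, 1.0, 0.0, 1.0],  # sums the second component of each embedding
]
pos_emb = [0.5, -0.5]

fused = fusion_embedding(char_emb, glyph_emb, pinyin_emb, W)
bert_input = add_position(fused, pos_emb)
print(fused, bert_input)
```

In the real model the fully connected layer is learned, so the fused vector mixes all three sources rather than simply summing matching components as this hand-picked `W` does.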

|

dibsondivya/distilbert-phmtweets-sutd

|

dibsondivya

| 2022-06-19T11:40:42Z | 11 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"health",

"tweet",

"dataset:custom-phm-tweets",

"arxiv:1802.09130",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-19T10:09:47Z |

---

tags:

- distilbert

- health

- tweet

datasets:

- custom-phm-tweets

metrics:

- accuracy

model-index:

- name: distilbert-phmtweets-sutd

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: custom-phm-tweets

type: labelled

metrics:

- name: Accuracy

type: accuracy

value: 0.877

---

# distilbert-phmtweets-sutd

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) for text classification, trained to identify public health events in tweets. The project was based on an [Emory University paper on detecting Personal Health Mentions in social media](https://arxiv.org/pdf/1802.09130v2.pdf), which worked with this [custom dataset](https://github.com/emory-irlab/PHM2017).

It achieves the following results on the evaluation set:

- Accuracy: 0.877

## Usage

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("dibsondivya/distilbert-phmtweets-sutd")

model = AutoModelForSequenceClassification.from_pretrained("dibsondivya/distilbert-phmtweets-sutd")

```
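The snippet above only loads the tokenizer and model. Turning the classifier's raw logits into class probabilities and a predicted class index is commonly done with a softmax followed by an argmax. A minimal plain-Python sketch, assuming hypothetical logits for one tweet; the card does not document the label names or the number of classes, so only indices are shown:

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: List[float]) -> Tuple[int, float]:
    """Return the index of the most likely class and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]


# Hypothetical logits; with the model loaded above they would come from
# model(**tokenizer(text, return_tensors="pt")).logits
example_logits = [0.2, 2.1, -1.0]
label_index, confidence = predict(example_logits)
print(label_index, round(confidence, 3))
```

Subtracting the maximum logit does not change the softmax result but avoids overflow in `math.exp` for large logits.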

### Model Evaluation Results

With Validation Set

- Accuracy: 0.8708661417322835

With Test Set

- Accuracy: 0.8772961058045555

## Reference for the distilbert-base-uncased Model

```bibtex

@article{Sanh2019DistilBERTAD,

title={DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter},

author={Victor Sanh and Lysandre Debut and Julien Chaumond and Thomas Wolf},

journal={ArXiv},

year={2019},

volume={abs/1910.01108}

}

```

|

dibsondivya/ernie-phmtweets-sutd

|

dibsondivya

| 2022-06-19T11:38:29Z | 14 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"ernie",

"health",

"tweet",

"dataset:custom-phm-tweets",

"arxiv:1802.09130",

"arxiv:1907.12412",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-19T11:20:14Z |

---

tags:

- ernie

- health

- tweet

datasets:

- custom-phm-tweets

metrics:

- accuracy

model-index:

- name: ernie-phmtweets-sutd

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: custom-phm-tweets

type: labelled

metrics:

- name: Accuracy

type: accuracy

value: 0.885

---

# ernie-phmtweets-sutd

This model is a fine-tuned version of [ernie-2.0-en](https://huggingface.co/nghuyong/ernie-2.0-en) for text classification, trained to identify public health events in tweets. The project was based on an [Emory University paper on detecting Personal Health Mentions in social media](https://arxiv.org/pdf/1802.09130v2.pdf), which worked with this [custom dataset](https://github.com/emory-irlab/PHM2017).

It achieves the following results on the evaluation set:

- Accuracy: 0.885

## Usage

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("dibsondivya/ernie-phmtweets-sutd")

model = AutoModelForSequenceClassification.from_pretrained("dibsondivya/ernie-phmtweets-sutd")

```

### Model Evaluation Results

With Validation Set

- Accuracy: 0.889763779527559

With Test Set

- Accuracy: 0.884643644379133

## References for ERNIE 2.0 Model

```bibtex

@article{sun2019ernie20,

title={ERNIE 2.0: A Continual Pre-training Framework for Language Understanding},

author={Sun, Yu and Wang, Shuohuan and Li, Yukun and Feng, Shikun and Tian, Hao and Wu, Hua and Wang, Haifeng},

journal={arXiv preprint arXiv:1907.12412},

year={2019}

}

```

|

huggingtweets/rsapublic

|

huggingtweets

| 2022-06-19T11:26:27Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-19T11:14:09Z |

---

language: en

thumbnail: http://www.huggingtweets.com/rsapublic/1655637814216/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1536637048391491584/zfHd6Mha_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">bopo mofo</div>

<div style="text-align: center; font-size: 14px;">@rsapublic</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

To understand the pipeline used to develop the model, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from bopo mofo.

| Data | bopo mofo |

| --- | --- |

| Tweets downloaded | 3212 |

| Retweets | 1562 |

| Short tweets | 303 |

| Tweets kept | 1347 |
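The counts in the table are consistent with a two-stage filter: 3212 downloaded - 1562 retweets - 303 short tweets = 1347 kept. A minimal sketch of such a filter; the `Tweet` type, its `is_retweet` flag, and the `MIN_WORDS` threshold are hypothetical, since the card does not say how retweets are detected or what counts as a "short" tweet:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tweet:
    text: str
    is_retweet: bool  # hypothetical flag; the real pipeline may detect retweets differently


# Hypothetical threshold: the card does not define a "short" tweet.
MIN_WORDS = 3


def keep_tweets(tweets: List[Tweet], min_words: int = MIN_WORDS) -> List[Tweet]:
    """Drop retweets first, then drop tweets with fewer than `min_words` words."""
    originals = [t for t in tweets if not t.is_retweet]
    return [t for t in originals if len(t.text.split()) >= min_words]


# Arithmetic check against the table above.
downloaded, retweets, short = 3212, 1562, 303
kept = downloaded - retweets - short
print(kept)

sample = [
    Tweet("RT @someone: hello there friend", True),
    Tweet("ok", False),
    Tweet("this one is long enough", False),
]
print([t.text for t in keep_tweets(sample)])
```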

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2qnsx0b8/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @rsapublic's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/368jvjwu) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/368jvjwu/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/rsapublic')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

bookpanda/wangchanberta-base-att-spm-uncased-masking

|

bookpanda

| 2022-06-19T11:05:59Z | 19 | 0 |

transformers

|

[

"transformers",

"pytorch",

"camembert",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-06-19T10:59:54Z |

---

tags:

- generated_from_trainer

model-index:

- name: wangchanberta-base-att-spm-uncased-masking

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wangchanberta-base-att-spm-uncased-masking

This model is a fine-tuned version of [airesearch/wangchanberta-base-att-spm-uncased](https://huggingface.co/airesearch/wangchanberta-base-att-spm-uncased) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.15.0

- Pytorch 1.11.0+cu113

- Datasets 1.17.0

- Tokenizers 0.10.3

|

zakria/NLP_Project

|

zakria

| 2022-06-19T09:55:56Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-19T07:49:04Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: NLP_Project

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# NLP_Project

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5308

- WER: 0.3428

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP
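With `lr_scheduler_type: linear` and 1000 warmup steps, the learning rate ramps up linearly from 0 to the peak value and then decays linearly to 0 by the final training step. A minimal plain-Python sketch of that schedule; it follows the shape of the Transformers linear-with-warmup schedule, but the function name is hypothetical and the total step count is an assumed value, since the card does not state it:

```python
def linear_schedule_with_warmup(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step`: linear ramp from 0 to base_lr over warmup_steps,
    then linear decay to 0 at total_steps (clamped at 0 afterwards)."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 1e-4   # learning_rate from the card
WARMUP = 1000    # lr_scheduler_warmup_steps from the card
TOTAL = 15000    # hypothetical: the card does not state the total number of steps

for s in (0, 500, 1000, 8000, 15000):
    print(s, linear_schedule_with_warmup(s, BASE_LR, WARMUP, TOTAL))
```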

### Training results

| Training Loss | Epoch | Step | Validation Loss | WER |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.5939 | 1.0 | 500 | 2.1356 | 1.0014 |

| 0.9126 | 2.01 | 1000 | 0.5469 | 0.5354 |

| 0.4491 | 3.01 | 1500 | 0.4636 | 0.4503 |

| 0.3008 | 4.02 | 2000 | 0.4269 | 0.4330 |

| 0.2229 | 5.02 | 2500 | 0.4164 | 0.4073 |

| 0.188 | 6.02 | 3000 | 0.4717 | 0.4107 |

| 0.1739 | 7.03 | 3500 | 0.4306 | 0.4031 |

| 0.159 | 8.03 | 4000 | 0.4394 | 0.3993 |

| 0.1342 | 9.04 | 4500 | 0.4462 | 0.3904 |

| 0.1093 | 10.04 | 5000 | 0.4387 | 0.3759 |

| 0.1005 | 11.04 | 5500 | 0.5033 | 0.3847 |

| 0.0857 | 12.05 | 6000 | 0.4805 | 0.3876 |

| 0.0779 | 13.05 | 6500 | 0.5269 | 0.3810 |

| 0.072 | 14.06 | 7000 | 0.5109 | 0.3710 |

| 0.0641 | 15.06 | 7500 | 0.4865 | 0.3638 |

| 0.0584 | 16.06 | 8000 | 0.5041 | 0.3646 |

| 0.0552 | 17.07 | 8500 | 0.4987 | 0.3537 |

| 0.0535 | 18.07 | 9000 | 0.4947 | 0.3586 |

| 0.0475 | 19.08 | 9500 | 0.5237 | 0.3647 |

| 0.042 | 20.08 | 10000 | 0.5338 | 0.3561 |

| 0.0416 | 21.08 | 10500 | 0.5068 | 0.3483 |

| 0.0358 | 22.09 | 11000 | 0.5126 | 0.3532 |

| 0.0334 | 23.09 | 11500 | 0.5213 | 0.3536 |

| 0.0331 | 24.1 | 12000 | 0.5378 | 0.3496 |

| 0.03 | 25.1 | 12500 | 0.5167 | 0.3470 |

| 0.0254 | 26.1 | 13000 | 0.5245 | 0.3418 |

| 0.0233 | 27.11 | 13500 | 0.5393 | 0.3456 |

| 0.0232 | 28.11 | 14000 | 0.5279 | 0.3425 |

| 0.022 | 29.12 | 14500 | 0.5308 | 0.3428 |
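WER (word error rate) counts the word-level substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. A minimal plain-Python sketch using a word-level Levenshtein distance; the example sentences are hypothetical, and the metric used during training may apply its own text normalization, so this is an illustration rather than a reproduction of the numbers above:

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.
    Assumes a non-empty reference."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    # prev[j] = edit distance between the reference words seen so far
    # and the first j hypothesis words (rolling one-row DP table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```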

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.12.1

|

devetle/dqn-SpaceInvadersNoFrameskip-v4

|

devetle

| 2022-06-19T09:29:00Z | 4 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-19T03:34:30Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- metrics:

- type: mean_reward

value: 622.00 +/- 131.55

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3) and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3 reinforcement learning agents, with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m utils.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga devetle -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m utils.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga devetle

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1800000.0),

('optimize_memory_usage', True),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
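The exploration hyperparameters above control an epsilon-greedy schedule. In Stable Baselines3's DQN, epsilon decays linearly from `exploration_initial_eps` to `exploration_final_eps` over the first `exploration_fraction` of training and then stays constant. A plain-Python sketch of that schedule, assuming the SB3 default `exploration_initial_eps=1.0` (it is not listed in the hyperparameters above); the function name is hypothetical:

```python
def exploration_rate(timestep: int, total_timesteps: int,
                     initial_eps: float = 1.0, final_eps: float = 0.01,
                     exploration_fraction: float = 0.1) -> float:
    """Epsilon decays linearly from initial_eps to final_eps over the first
    exploration_fraction of training, then stays at final_eps."""
    progress = timestep / total_timesteps  # fraction of training elapsed
    if progress > exploration_fraction:
        return final_eps
    return initial_eps + progress * (final_eps - initial_eps) / exploration_fraction


N_TIMESTEPS = 1_800_000  # n_timesteps from the hyperparameters above

for t in (0, 90_000, 180_000, 1_000_000):
    print(t, round(exploration_rate(t, N_TIMESTEPS), 4))
```

With these values, epsilon reaches 0.01 after 10% of the 1.8M timesteps (180,000 steps) and stays there for the rest of training.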

|

sun1638650145/q-Taxi-v3

|

sun1638650145

| 2022-06-19T09:00:38Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-19T09:00:26Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.54 +/- 2.73

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

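import gym

# load_from_hub and evaluate_agent are helper functions that this card does not define;
# they are assumed to come from the notebook used to train the agent.
model = load_from_hub(repo_id='sun1638650145/q-Taxi-v3', filename='q-learning.pkl')

# Don't forget to check whether you need to pass extra arguments (e.g. is_slippery=False)
env = gym.make(model['env_id'])

evaluate_agent(env, model['max_steps'], model['n_eval_episodes'], model['qtable'], model['eval_seed'])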

```
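The card does not describe how the Q-table was trained. Tabular Q-learning typically updates one table entry per step with Q(s, a) <- Q(s, a) + lr * (r + gamma * max_a' Q(s', a') - Q(s, a)) and picks actions epsilon-greedily. A minimal plain-Python sketch; the helper names, learning rate, discount factor, and the sample transition are hypothetical:

```python
import random
from typing import List

QTable = List[List[float]]


def q_update(qtable: QTable, state: int, action: int, reward: float,
             next_state: int, learning_rate: float, gamma: float) -> None:
    """Move Q(s, a) toward the TD target r + gamma * max_a' Q(s', a')."""
    td_target = reward + gamma * max(qtable[next_state])
    qtable[state][action] += learning_rate * (td_target - qtable[state][action])


def epsilon_greedy(qtable: QTable, state: int, epsilon: float, rng: random.Random) -> int:
    """Explore with probability epsilon, otherwise pick the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(qtable[state]))
    row = qtable[state]
    return max(range(len(row)), key=row.__getitem__)


# Taxi-v3 has 500 discrete states and 6 actions.
q = [[0.0] * 6 for _ in range(500)]
q_update(q, state=0, action=1, reward=-1.0, next_state=5, learning_rate=0.7, gamma=0.95)
print(q[0][1])
print(epsilon_greedy(q, state=0, epsilon=0.0, rng=random.Random(0)))
```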

|

janeel/tinyroberta-squad2-finetuned-squad

|

janeel

| 2022-06-19T08:51:09Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"dataset:squad_v2",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-16T12:51:25Z |

---

license: cc-by-4.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: tinyroberta-squad2-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tinyroberta-squad2-finetuned-squad

This model is a fine-tuned version of [deepset/tinyroberta-squad2](https://huggingface.co/deepset/tinyroberta-squad2) on the squad_v2 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1592

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.6185 | 1.0 | 8239 | 0.9460 |

| 0.4243 | 2.0 | 16478 | 1.1592 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

ShannonAI/ChineseBERT-base

|

ShannonAI

| 2022-06-19T08:14:46Z | 109 | 20 |

transformers

|

[

"transformers",

"pytorch",

"arxiv:2106.16038",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

# ChineseBERT-base

This repository contains the code, model, and dataset for **ChineseBERT**, published at ACL 2021.

paper:

**[ChineseBERT: Chinese Pretraining Enhanced by Glyph and Pinyin Information](https://arxiv.org/abs/2106.16038)**

*Zijun Sun, Xiaoya Li, Xiaofei Sun, Yuxian Meng, Xiang Ao, Qing He, Fei Wu and Jiwei Li*

code:

[ChineseBERT github link](https://github.com/ShannonAI/ChineseBert)

## Model description

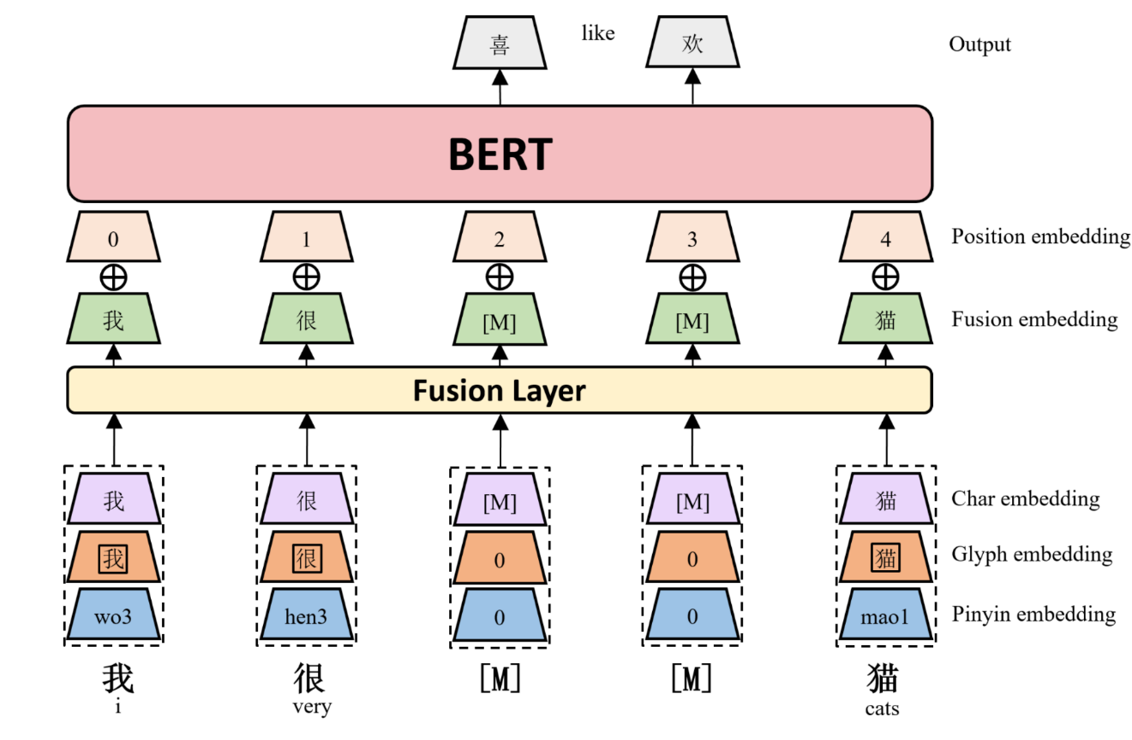

We propose ChineseBERT, which incorporates both the glyph and pinyin information of Chinese characters into language model pretraining.

First, for each Chinese character, we obtain three kinds of embeddings:

- **Char Embedding:** the same as the original BERT token embedding.

- **Glyph Embedding:** captures visual features based on different fonts of a Chinese character.

- **Pinyin Embedding:** captures phonetic features from the pinyin sequence of a Chinese character.

Then, the char, glyph, and pinyin embeddings are concatenated and mapped to a D-dimensional embedding through a fully connected layer to form the fusion embedding.

Finally, the fusion embedding is added to the position embedding, and the result is fed as input to the BERT model.

The image above gives an overview of the ChineseBERT architecture.

ChineseBERT leverages the glyph and pinyin information of Chinese characters to enhance the model's ability to capture context semantics from surface character forms and to disambiguate polyphonic characters in Chinese.

|

nestoralvaro/mt5-small-test-ged-mlsum_max_target_length_10

|

nestoralvaro

| 2022-06-19T06:39:24Z | 11 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"generated_from_trainer",

"dataset:mlsum",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-06-18T15:09:43Z |

---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

datasets:

- mlsum

metrics:

- rouge

model-index:

- name: mt5-small-test-ged-mlsum_max_target_length_10

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: mlsum

type: mlsum

args: es

metrics:

- name: Rouge1

type: rouge

value: 74.8229

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-test-ged-mlsum_max_target_length_10

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the mlsum dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3341

- Rouge1: 74.8229

- Rouge2: 68.1808

- Rougel: 74.8297

- Rougelsum: 74.8414

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:------:|:---------------:|:-------:|:-------:|:-------:|:---------:|

| 0.5565 | 1.0 | 33296 | 0.3827 | 69.9041 | 62.821 | 69.8709 | 69.8924 |

| 0.2636 | 2.0 | 66592 | 0.3552 | 72.0701 | 65.4937 | 72.0787 | 72.091 |

| 0.2309 | 3.0 | 99888 | 0.3525 | 72.5071 | 65.8026 | 72.5132 | 72.512 |

| 0.2109 | 4.0 | 133184 | 0.3346 | 74.0842 | 67.4776 | 74.0887 | 74.0968 |

| 0.1972 | 5.0 | 166480 | 0.3398 | 74.6051 | 68.6024 | 74.6177 | 74.6365 |

| 0.1867 | 6.0 | 199776 | 0.3283 | 74.9022 | 68.2146 | 74.9023 | 74.926 |

| 0.1785 | 7.0 | 233072 | 0.3325 | 74.8631 | 68.2468 | 74.8843 | 74.9026 |

| 0.1725 | 8.0 | 266368 | 0.3341 | 74.8229 | 68.1808 | 74.8297 | 74.8414 |
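The ROUGE scores above are reported on a 0 to 100 scale. ROUGE-1 measures unigram overlap between a generated summary and the reference; its F1 form combines overlap precision and recall. A minimal plain-Python sketch with hypothetical Spanish example strings; standard implementations such as the `rouge_score` package apply their own tokenization and optional stemming, so this sketch will not reproduce the table exactly:

```python
from collections import Counter


def rouge1_f1(reference: str, candidate: str) -> float:
    """Unigram-overlap F1 (ROUGE-1) between two lowercased, whitespace-tokenized strings."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    overlap = sum((ref_counts & cand_counts).values())  # clipped unigram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


score = rouge1_f1("el gobierno aprueba la ley", "gobierno aprueba nueva ley")
print(round(100 * score, 2))  # scaled to 0-100 like the table above
```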

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

botika/checkpoint-124500-finetuned-squad

|

botika

| 2022-06-19T05:53:11Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-17T07:41:58Z |

---

tags:

- generated_from_trainer

model-index:

- name: checkpoint-124500-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# checkpoint-124500-finetuned-squad

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 14.9594

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 100

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:------:|:---------------:|

| 3.9975 | 1.0 | 3289 | 3.8405 |

| 3.7311 | 2.0 | 6578 | 3.7114 |

| 3.5681 | 3.0 | 9867 | 3.6829 |

| 3.4101 | 4.0 | 13156 | 3.6368 |

| 3.2487 | 5.0 | 16445 | 3.6526 |

| 3.1143 | 6.0 | 19734 | 3.7567 |

| 2.9783 | 7.0 | 23023 | 3.8469 |

| 2.8295 | 8.0 | 26312 | 4.0040 |

| 2.6912 | 9.0 | 29601 | 4.1996 |

| 2.5424 | 10.0 | 32890 | 4.3387 |

| 2.4161 | 11.0 | 36179 | 4.4988 |

| 2.2713 | 12.0 | 39468 | 4.7861 |

| 2.1413 | 13.0 | 42757 | 4.9276 |

| 2.0125 | 14.0 | 46046 | 5.0598 |

| 1.8798 | 15.0 | 49335 | 5.3347 |

| 1.726 | 16.0 | 52624 | 5.5869 |

| 1.5994 | 17.0 | 55913 | 5.7161 |

| 1.4643 | 18.0 | 59202 | 6.0174 |

| 1.3237 | 19.0 | 62491 | 6.4926 |

| 1.2155 | 20.0 | 65780 | 6.4882 |

| 1.1029 | 21.0 | 69069 | 6.9922 |

| 0.9948 | 22.0 | 72358 | 7.1357 |

| 0.9038 | 23.0 | 75647 | 7.3676 |

| 0.8099 | 24.0 | 78936 | 7.4180 |

| 0.7254 | 25.0 | 82225 | 7.7753 |

| 0.6598 | 26.0 | 85514 | 7.8643 |

| 0.5723 | 27.0 | 88803 | 8.1798 |

| 0.5337 | 28.0 | 92092 | 8.3053 |

| 0.4643 | 29.0 | 95381 | 8.8597 |

| 0.4241 | 30.0 | 98670 | 8.9849 |

| 0.3763 | 31.0 | 101959 | 8.8406 |

| 0.3479 | 32.0 | 105248 | 9.1517 |

| 0.3271 | 33.0 | 108537 | 9.3659 |

| 0.2911 | 34.0 | 111826 | 9.4813 |

| 0.2836 | 35.0 | 115115 | 9.5746 |

| 0.2528 | 36.0 | 118404 | 9.7027 |

| 0.2345 | 37.0 | 121693 | 9.7515 |

| 0.2184 | 38.0 | 124982 | 9.9729 |

| 0.2067 | 39.0 | 128271 | 10.0828 |

| 0.2077 | 40.0 | 131560 | 10.0878 |

| 0.1876 | 41.0 | 134849 | 10.2974 |

| 0.1719 | 42.0 | 138138 | 10.2712 |

| 0.1637 | 43.0 | 141427 | 10.5788 |

| 0.1482 | 44.0 | 144716 | 10.7465 |

| 0.1509 | 45.0 | 148005 | 10.4603 |

| 0.1358 | 46.0 | 151294 | 10.7665 |

| 0.1316 | 47.0 | 154583 | 10.7724 |

| 0.1223 | 48.0 | 157872 | 11.1766 |

| 0.1205 | 49.0 | 161161 | 11.1870 |

| 0.1203 | 50.0 | 164450 | 11.1053 |

| 0.1081 | 51.0 | 167739 | 10.9696 |

| 0.103 | 52.0 | 171028 | 11.2010 |

| 0.0938 | 53.0 | 174317 | 11.6728 |

| 0.0924 | 54.0 | 177606 | 11.1423 |

| 0.0922 | 55.0 | 180895 | 11.7409 |

| 0.0827 | 56.0 | 184184 | 11.7850 |

| 0.0829 | 57.0 | 187473 | 11.8956 |

| 0.073 | 58.0 | 190762 | 11.8915 |

| 0.0788 | 59.0 | 194051 | 12.1617 |

| 0.0734 | 60.0 | 197340 | 12.2007 |

| 0.0729 | 61.0 | 200629 | 12.2388 |

| 0.0663 | 62.0 | 203918 | 12.2471 |

| 0.0662 | 63.0 | 207207 | 12.5830 |

| 0.064 | 64.0 | 210496 | 12.6105 |

| 0.0599 | 65.0 | 213785 | 12.3712 |

| 0.0604 | 66.0 | 217074 | 12.9249 |

| 0.0574 | 67.0 | 220363 | 12.7309 |

| 0.0538 | 68.0 | 223652 | 12.8068 |

| 0.0526 | 69.0 | 226941 | 13.4368 |

| 0.0471 | 70.0 | 230230 | 13.5148 |

| 0.0436 | 71.0 | 233519 | 13.3391 |

| 0.0448 | 72.0 | 236808 | 13.4100 |

| 0.0428 | 73.0 | 240097 | 13.5617 |

| 0.0401 | 74.0 | 243386 | 13.8674 |

| 0.035 | 75.0 | 246675 | 13.5746 |

| 0.0342 | 76.0 | 249964 | 13.5042 |

| 0.0344 | 77.0 | 253253 | 14.2085 |

| 0.0365 | 78.0 | 256542 | 13.6393 |

| 0.0306 | 79.0 | 259831 | 13.9807 |

| 0.0311 | 80.0 | 263120 | 13.9768 |

| 0.0353 | 81.0 | 266409 | 14.5245 |

| 0.0299 | 82.0 | 269698 | 13.9471 |

| 0.0263 | 83.0 | 272987 | 13.7899 |

| 0.0254 | 84.0 | 276276 | 14.3786 |

| 0.0267 | 85.0 | 279565 | 14.5611 |

| 0.022 | 86.0 | 282854 | 14.2658 |

| 0.0198 | 87.0 | 286143 | 14.9215 |

| 0.0193 | 88.0 | 289432 | 14.5650 |

| 0.0228 | 89.0 | 292721 | 14.7014 |

| 0.0184 | 90.0 | 296010 | 14.6946 |

| 0.0182 | 91.0 | 299299 | 14.6614 |

| 0.0188 | 92.0 | 302588 | 14.6915 |

| 0.0196 | 93.0 | 305877 | 14.7262 |

| 0.0138 | 94.0 | 309166 | 14.7625 |

| 0.0201 | 95.0 | 312455 | 15.0442 |

| 0.0189 | 96.0 | 315744 | 14.8832 |

| 0.0148 | 97.0 | 319033 | 14.8995 |

| 0.0129 | 98.0 | 322322 | 14.8974 |

| 0.0132 | 99.0 | 325611 | 14.9813 |

| 0.0139 | 100.0 | 328900 | 14.9594 |
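In this table the validation loss bottoms out early and then rises steadily while the training loss keeps falling, a typical sign of overfitting. A short sketch of picking the best epoch from the logged values; only a subset of rows is copied here, and in the full table epoch 4 remains the minimum:

```python
# (epoch, validation loss) pairs copied from the first rows and the last row
# of the training-results table above.
history = [
    (1, 3.8405), (2, 3.7114), (3, 3.6829), (4, 3.6368),
    (5, 3.6526), (6, 3.7567), (7, 3.8469), (8, 4.0040),
    (100, 14.9594),
]

best_epoch, best_loss = min(history, key=lambda pair: pair[1])
final_epoch, final_loss = history[-1]
print(f"lowest validation loss {best_loss} at epoch {best_epoch}; "
      f"final epoch {final_epoch}: {final_loss}")
```

If the model were retrained with the Hugging Face `Trainer`, setting `load_best_model_at_end=True` and `metric_for_best_model="eval_loss"` in `TrainingArguments` (with matching evaluation and save strategies) would keep the lowest-validation-loss checkpoint instead of the last one.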

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu102

- Datasets 2.2.2

- Tokenizers 0.12.1

|

Klinsc/q-Taxi-v3

|

Klinsc

| 2022-06-19T05:09:11Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-19T05:09:06Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.52 +/- 2.67

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

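import gym

# load_from_hub and evaluate_agent are helper functions that this card does not define;
# they are assumed to come from the notebook used to train the agent.
model = load_from_hub(repo_id="Klinsc/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check whether you need to pass extra arguments (e.g. is_slippery=False)
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])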

```

|

Klinsc/q-FrozenLake-v1-4x4-noSlippery

|

Klinsc

| 2022-06-19T04:46:57Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-19T04:43:46Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

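import gym

# load_from_hub and evaluate_agent are helper functions that this card does not define;
# they are assumed to come from the notebook used to train the agent.
model = load_from_hub(repo_id="Klinsc/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check whether you need to pass extra arguments (e.g. is_slippery=False)
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])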

```
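The `mean_reward` in the metadata is written as the mean +/- standard deviation of the episode returns collected during evaluation. In FrozenLake an episode returns 1 when the agent reaches the goal and 0 otherwise, so an agent that succeeds in every evaluation episode scores exactly 1.00 +/- 0.00. A small sketch of that summary, assuming a population standard deviation (the NumPy `np.std` default); the function name and reward lists are hypothetical:

```python
import statistics
from typing import List


def summarize_rewards(episode_rewards: List[float]) -> str:
    """Format evaluation returns as 'mean +/- std' using the population std."""
    mean = statistics.fmean(episode_rewards)
    std = statistics.pstdev(episode_rewards)
    return f"{mean:.2f} +/- {std:.2f}"


# Hypothetical evaluation: the agent reaches the goal in all 100 episodes.
print(summarize_rewards([1.0] * 100))
# Hypothetical evaluation: the agent reaches the goal in half of the episodes.
print(summarize_rewards([0.0, 1.0] * 50))
```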

|

janeel/roberta-base-finetuned-squad

|

janeel

| 2022-06-19T04:32:50Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"dataset:squad_v2",

"license:mit",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-18T14:02:52Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: roberta-base-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-squad

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the squad_v2 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8556

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:-----:|:---------------:|
| 0.8678 | 1.0 | 8239 | 0.8014 |
| 0.6423 | 2.0 | 16478 | 0.8556 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Tstarshak/q-FrozenLake-v1-4x4-noSlippery

|

Tstarshak

| 2022-06-19T04:15:20Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-19T04:15:13Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

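import gym

# load_from_hub() and evaluate_agent() are helper functions defined in the
# Hugging Face Deep RL Course Q-Learning notebook; they are not part of gym.
model = load_from_hub(repo_id="Tstarshak/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# This agent was trained on the non-slippery map, so pass is_slippery=False
env = gym.make(model["env_id"], is_slippery=False)

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])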

```

|

huggingtweets/vox_akuma

|

huggingtweets

| 2022-06-19T03:26:08Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-05-28T15:10:18Z |

---

language: en

thumbnail: http://www.huggingtweets.com/vox_akuma/1655609164156/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1509960920449093633/c0in4uvf_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Vox Akuma 👹🧧 NIJISANJI EN</div>

<div style="text-align: center; font-size: 14px;">@vox_akuma</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Vox Akuma 👹🧧 NIJISANJI EN.

| Data | Vox Akuma 👹🧧 NIJISANJI EN |
| --- | --- |
| Tweets downloaded | 3149 |
| Retweets | 948 |
| Short tweets | 465 |
| Tweets kept | 1736 |
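
The rows of this table are consistent with each other: the kept tweets are what remains after removing retweets and short tweets (3149 - 948 - 465 = 1736). A minimal sketch of such a filter follows. The retweet test (an `"RT @"` prefix) and the three-word cutoff for a "short" tweet are assumptions for illustration, not necessarily the rules huggingtweets applies.

```python
def filter_tweets(tweets: list[str], min_words: int = 3) -> dict:
    """Hypothetical filter: drop retweets, then drop tweets with fewer than min_words words."""
    retweets = [t for t in tweets if t.startswith("RT @")]  # assumed retweet marker
    originals = [t for t in tweets if not t.startswith("RT @")]
    short = [t for t in originals if len(t.split()) < min_words]  # assumed length cutoff
    kept = [t for t in originals if len(t.split()) >= min_words]
    return {
        "downloaded": len(tweets),
        "retweets": len(retweets),
        "short": len(short),
        "kept": kept,
    }
```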

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2g4om0wh/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @vox_akuma's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/qy49fjem) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/qy49fjem/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',
                     model='huggingtweets/vox_akuma')
generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

gary109/ai-light-dance_singing_ft_wav2vec2-large-xlsr-53-5gram-v1

|

gary109

| 2022-06-19T00:31:50Z | 4 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"gary109/AI_Light_Dance",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-18T03:12:49Z |

---

tags:

- automatic-speech-recognition

- gary109/AI_Light_Dance

- generated_from_trainer

model-index:

- name: ai-light-dance_singing_ft_wav2vec2-large-xlsr-53-5gram-v1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ai-light-dance_singing_ft_wav2vec2-large-xlsr-53-5gram-v1

This model is a fine-tuned version of [gary109/ai-light-dance_singing_ft_wav2vec2-large-xlsr-53-5gram](https://huggingface.co/gary109/ai-light-dance_singing_ft_wav2vec2-large-xlsr-53-5gram) on the GARY109/AI_LIGHT_DANCE - ONSET-SINGING dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4123

- Wer: 0.1668
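
WER (word error rate) is the word-level edit distance between the reference transcript and the model's output, divided by the number of reference words. The edit distance counts substitutions, deletions and insertions. Lower is better, and an empty output scores 1.0.

A minimal sketch of the definition follows; the function names are illustrative. Evaluation tools such as the Hugging Face `wer` metric typically pool errors and reference words over the whole evaluation set rather than averaging per-utterance rates. The `corpus_wer` helper imitates that pooling.

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Minimum substitutions + deletions + insertions turning reference into hypothesis."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev holds the previous DP row: distances between ref[:i-1] and each prefix hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)]


def corpus_wer(references: list[str], hypotheses: list[str]) -> float:
    # Pool errors and reference words over all utterances
    errors = sum(word_errors(r, h) for r, h in zip(references, hypotheses))
    words = sum(len(r.split()) for r in references)
    return errors / words
```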

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 500

- num_epochs: 10.0

- mixed_precision_training: Native AMP
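
Two entries in this list interact:

- **Effective batch size.** It is 2 × 16 = 32, the per-device batch times the gradient-accumulation steps on a single device.
- **Learning-rate schedule.** `cosine` with 500 warmup steps means the learning rate climbs linearly to 3e-05 and then follows half a cosine down to zero. The training-results table puts the whole run at 5520 optimizer steps.

The sketch below mirrors the shape of a cosine-with-warmup schedule such as the one in `transformers` (with its default of half a cosine cycle). `cosine_lr` is a hypothetical name.

```python
import math


def cosine_lr(step: int, base_lr: float, total_steps: int, warmup_steps: int) -> float:
    """Learning rate after `step` optimizer steps: linear warmup, then half a cosine to 0."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 up to base_lr
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase completed, from 0.0 to 1.0
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


total_steps = 10 * 552  # 10 epochs x 552 steps per epoch (from the results table)
```

Halfway through the post-warmup phase (step 3010) the rate is back to half its peak, 1.5e-05.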

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 0.2696 | 1.0 | 552 | 0.4421 | 0.2013 |
| 0.2498 | 2.0 | 1104 | 0.4389 | 0.1887 |
| 0.2387 | 3.0 | 1656 | 0.4154 | 0.1788 |
| 0.1902 | 4.0 | 2208 | 0.4143 | 0.1753 |
| 0.1896 | 5.0 | 2760 | 0.4123 | 0.1668 |
| 0.1658 | 6.0 | 3312 | 0.4366 | 0.1651 |
| 0.1312 | 7.0 | 3864 | 0.4309 | 0.1594 |
| 0.1186 | 8.0 | 4416 | 0.4432 | 0.1561 |
| 0.1476 | 9.0 | 4968 | 0.4400 | 0.1569 |
| 0.1027 | 10.0 | 5520 | 0.4389 | 0.1554 |

### Framework versions

- Transformers 4.21.0.dev0

- Pytorch 1.9.1+cu102

- Datasets 2.3.3.dev0

- Tokenizers 0.12.1

|

raedinkhaled/swin-tiny-patch4-window7-224-finetuned-mri

|

raedinkhaled

| 2022-06-19T00:13:22Z | 80 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-06-18T16:25:22Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-tiny-patch4-window7-224-finetuned-mri

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9806603773584905

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-finetuned-mri

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0608

- Accuracy: 0.9807

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

- mixed_precision_training: Native AMP
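
Two derived quantities in this list can be checked by hand:

- **`total_train_batch_size`** is the per-device batch times the gradient-accumulation steps: 32 × 4 = 128, which implies a single device.
- **`lr_scheduler_warmup_ratio: 0.1`** becomes a step count once the total number of optimizer steps is known. That total is 1341, from the training-results table.

The sketch assumes the warmup count is rounded up, which is, as far as we know, how `transformers` converts the ratio. The function names are hypothetical.

```python
import math


def effective_batch_size(per_device_batch: int, grad_accum_steps: int, n_devices: int = 1) -> int:
    # Number of examples that contribute to each optimizer step
    return per_device_batch * grad_accum_steps * n_devices


def warmup_steps_from_ratio(total_steps: int, warmup_ratio: float) -> int:
    # Round up so any non-zero ratio yields at least one warmup step
    return math.ceil(total_steps * warmup_ratio)


total_steps = 447 * 3  # 447 optimizer steps per epoch, 3 epochs (from the results table)
print(effective_batch_size(32, 4))                # 128
print(warmup_steps_from_ratio(total_steps, 0.1))  # 135
```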

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.0592 | 1.0 | 447 | 0.0823 | 0.9695 |
| 0.0196 | 2.0 | 894 | 0.0761 | 0.9739 |
| 0.0058 | 3.0 | 1341 | 0.0608 | 0.9807 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

kjunelee/pegasus-samsum

|

kjunelee

| 2022-06-18T22:35:27Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"dataset:samsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-18T08:01:44Z |

---

tags:

- generated_from_trainer

datasets:

- samsum

model-index:

- name: pegasus-samsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-samsum

This model is a fine-tuned version of [google/pegasus-cnn_dailymail](https://huggingface.co/google/pegasus-cnn_dailymail) on the samsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0

- Datasets 2.2.3.dev0

- Tokenizers 0.12.1

|

Hugo123/Ttgb

|

Hugo123

| 2022-06-18T22:13:53Z | 0 | 0 | null |

[

"license:bsd-3-clause-clear",

"region:us"

] | null | 2022-06-18T22:13:53Z |

---

license: bsd-3-clause-clear

---

|

MerlinTK/q-Taxi-v3

|

MerlinTK

| 2022-06-18T21:30:00Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-18T21:29:54Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

import gym

# load_from_hub() and evaluate_agent() are helper functions defined in the
# Hugging Face Deep RL Course Q-Learning notebook; they are not part of gym.
model = load_from_hub(repo_id="MerlinTK/q-Taxi-v3", filename="q-learning.pkl")

# Taxi-v3 needs no extra environment arguments
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

BeardedJohn/bert-finetuned-seq-classification-fake-news

|

BeardedJohn

| 2022-06-18T21:03:42Z | 4 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-17T16:58:33Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: BeardedJohn/bert-finetuned-seq-classification-fake-news

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# BeardedJohn/bert-finetuned-seq-classification-fake-news

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0719

- Validation Loss: 0.0214

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 332, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
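
The optimizer config uses Keras `tf.keras.optimizers.schedules.PolynomialDecay` with `power: 1.0` and `cycle: False`. That amounts to a linear decay from 2e-05 to 0.0 over 332 steps, after which the rate stays at the end value. Below is a plain-Python sketch of that formula, so no TensorFlow is needed. `polynomial_decay` is an illustrative name, not the Keras API; its defaults are the values from this card.

```python
def polynomial_decay(step: int, initial_lr: float = 2e-05, decay_steps: int = 332,
                     end_lr: float = 0.0, power: float = 1.0) -> float:
    # With cycle=False the step is clamped, so the rate holds at end_lr after decay_steps
    step = min(step, decay_steps)
    return (initial_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr
```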

### Training results

| Train Loss | Validation Loss | Epoch |
|:----------:|:---------------:|:-----:|
| 0.0719 | 0.0214 | 0 |

### Framework versions

- Transformers 4.20.0

- TensorFlow 2.8.2

- Datasets 2.3.2

- Tokenizers 0.12.1

|

zakria/Project_NLP

|

zakria

| 2022-06-18T20:44:06Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-18T18:43:39Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: Project_NLP

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Project_NLP

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5324

- Wer: 0.3355

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:-----:|:---------------:|:------:|
| 3.5697 | 1.0 | 500 | 2.1035 | 0.9979 |
| 0.8932 | 2.01 | 1000 | 0.5649 | 0.5621 |
| 0.4363 | 3.01 | 1500 | 0.4326 | 0.4612 |
| 0.3035 | 4.02 | 2000 | 0.4120 | 0.4191 |
| 0.2343 | 5.02 | 2500 | 0.4199 | 0.3985 |
| 0.1921 | 6.02 | 3000 | 0.4380 | 0.4043 |
| 0.1549 | 7.03 | 3500 | 0.4456 | 0.3925 |
| 0.1385 | 8.03 | 4000 | 0.4264 | 0.3871 |
| 0.1217 | 9.04 | 4500 | 0.4744 | 0.3774 |
| 0.1041 | 10.04 | 5000 | 0.4498 | 0.3745 |
| 0.0968 | 11.04 | 5500 | 0.4716 | 0.3628 |
| 0.0893 | 12.05 | 6000 | 0.4680 | 0.3764 |
| 0.078 | 13.05 | 6500 | 0.5100 | 0.3623 |
| 0.0704 | 14.06 | 7000 | 0.4893 | 0.3552 |
| 0.0659 | 15.06 | 7500 | 0.4956 | 0.3565 |
| 0.0578 | 16.06 | 8000 | 0.5450 | 0.3595 |
| 0.0563 | 17.07 | 8500 | 0.4891 | 0.3614 |
| 0.0557 | 18.07 | 9000 | 0.5307 | 0.3548 |
| 0.0447 | 19.08 | 9500 | 0.4923 | 0.3493 |
| 0.0456 | 20.08 | 10000 | 0.5156 | 0.3479 |
| 0.0407 | 21.08 | 10500 | 0.4979 | 0.3389 |
| 0.0354 | 22.09 | 11000 | 0.5549 | 0.3462 |
| 0.0322 | 23.09 | 11500 | 0.5601 | 0.3439 |
| 0.0342 | 24.1 | 12000 | 0.5131 | 0.3451 |
| 0.0276 | 25.1 | 12500 | 0.5206 | 0.3392 |
| 0.0245 | 26.1 | 13000 | 0.5337 | 0.3373 |
| 0.0226 | 27.11 | 13500 | 0.5311 | 0.3353 |
| 0.0229 | 28.11 | 14000 | 0.5375 | 0.3373 |
| 0.0225 | 29.12 | 14500 | 0.5324 | 0.3355 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.12.1

|

theojolliffe/bart-cnn-science-v3-e2-v4-e2-manual

|

theojolliffe

| 2022-06-18T18:01:15Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-18T17:39:12Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: bart-cnn-science-v3-e2-v4-e2-manual

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-cnn-science-v3-e2-v4-e2-manual

This model is a fine-tuned version of [theojolliffe/bart-cnn-science-v3-e2](https://huggingface.co/theojolliffe/bart-cnn-science-v3-e2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9189

- Rouge1: 55.982

- Rouge2: 36.9147

- Rougel: 39.1563

- Rougelsum: 53.5959

- Gen Len: 142.0
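
ROUGE-N measures n-gram overlap between a generated summary and the reference. The scores above appear to be F-measures scaled to 0 to 100. ROUGE-1 uses unigrams and ROUGE-2 uses bigrams; ROUGE-L instead uses the longest common subsequence and is not covered here.

Below is a simplified ROUGE-N F1 sketch. It lowercases and splits on whitespace, whereas the `rouge_score` package that Trainer metrics usually rely on applies its own tokenizer (and optionally stemming). Its numbers will therefore not match the card exactly.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    # Multiset of all contiguous n-token windows
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_f1(reference: str, candidate: str, n: int = 1) -> float:
    """Simplified ROUGE-N F1 with lowercase whitespace tokenization."""
    ref = ngrams(reference.lower().split(), n)
    cand = ngrams(candidate.lower().split(), n)
    overlap = sum((ref & cand).values())  # clipped n-gram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```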

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |
|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|
| No log | 1.0 | 42 | 0.9365 | 53.4332 | 34.0477 | 36.9735 | 51.1918 | 142.0 |
| No log | 2.0 | 84 | 0.9189 | 55.982 | 36.9147 | 39.1563 | 53.5959 | 142.0 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

vai6hav/wav2vec2-large-xls-r-300m-hindi-epochs15-colab

|

vai6hav

| 2022-06-18T17:42:12Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-18T16:56:04Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xls-r-300m-hindi-epochs15-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-hindi-epochs15-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 3.5705

- Wer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 15

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:---:|
| 20.2764 | 5.53 | 50 | 8.1197 | 1.0 |
| 5.2964 | 11.11 | 100 | 3.5705 | 1.0 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

theojolliffe/bart-cnn-science-v3-e1-v4-e4-manual

|

theojolliffe

| 2022-06-18T17:13:00Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-18T16:46:47Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: bart-cnn-science-v3-e1-v4-e4-manual

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-cnn-science-v3-e1-v4-e4-manual

This model is a fine-tuned version of [theojolliffe/bart-cnn-science-v3-e1](https://huggingface.co/theojolliffe/bart-cnn-science-v3-e1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2615

- Rouge1: 53.36

- Rouge2: 32.0237

- Rougel: 33.2835

- Rougelsum: 50.7455

- Gen Len: 142.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |
|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|
| No log | 1.0 | 42 | 1.0675 | 51.743 | 31.3774 | 34.1939 | 48.7234 | 142.0 |
| No log | 2.0 | 84 | 1.0669 | 49.4166 | 28.1438 | 30.188 | 46.0289 | 142.0 |
| No log | 3.0 | 126 | 1.1799 | 52.6909 | 31.0174 | 35.441 | 50.0351 | 142.0 |
| No log | 4.0 | 168 | 1.2615 | 53.36 | 32.0237 | 33.2835 | 50.7455 | 142.0 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

tclong/wav2vec2-base-vios-v4

|

tclong

| 2022-06-18T16:59:17Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:vivos_dataset",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-06T18:29:59Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- vivos_dataset

model-index:

- name: wav2vec2-base-vios-v4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-vios-v4

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the vivos_dataset dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3198

- Wer: 0.2169

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 15

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:-----:|:---------------:|:------:|
| 7.8138 | 0.69 | 500 | 3.5011 | 1.0 |
| 3.4372 | 1.37 | 1000 | 3.3447 | 1.0 |
| 1.9519 | 2.06 | 1500 | 0.8356 | 0.5944 |
| 0.8581 | 2.74 | 2000 | 0.5280 | 0.4038 |
| 0.6405 | 3.43 | 2500 | 0.4410 | 0.3410 |
| 0.5417 | 4.12 | 3000 | 0.3990 | 0.3140 |
| 0.4804 | 4.8 | 3500 | 0.3804 | 0.2973 |
| 0.4384 | 5.49 | 4000 | 0.3644 | 0.2808 |
| 0.4162 | 6.17 | 4500 | 0.3542 | 0.2648 |
| 0.3941 | 6.86 | 5000 | 0.3436 | 0.2529 |
| 0.3733 | 7.54 | 5500 | 0.3355 | 0.2520 |
| 0.3564 | 8.23 | 6000 | 0.3294 | 0.2415 |
| 0.3412 | 8.92 | 6500 | 0.3311 | 0.2332 |
| 0.3266 | 9.6 | 7000 | 0.3217 | 0.2325 |
| 0.3226 | 10.29 | 7500 | 0.3317 | 0.2303 |
| 0.3115 | 10.97 | 8000 | 0.3226 | 0.2279 |
| 0.3094 | 11.66 | 8500 | 0.3157 | 0.2236 |
| 0.2967 | 12.35 | 9000 | 0.3109 | 0.2202 |
| 0.2995 | 13.03 | 9500 | 0.3129 | 0.2156 |
| 0.2895 | 13.72 | 10000 | 0.3195 | 0.2146 |
| 0.3089 | 14.4 | 10500 | 0.3198 | 0.2169 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.12.1

|

Ambiwlans/PPO-1m-SpaceInvadersNoFrameskip-v4

|

Ambiwlans

| 2022-06-18T15:58:34Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-18T15:57:59Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 273.00 +/- 82.29

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

---

# **PPO** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **PPO** agent playing **SpaceInvadersNoFrameskip-v4** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3) and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3 reinforcement learning agents, with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m utils.load_from_hub --algo ppo --env SpaceInvadersNoFrameskip-v4 -orga Ambiwlans -f logs/

python enjoy.py --algo ppo --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo ppo --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m utils.push_to_hub --algo ppo --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga Ambiwlans

```

## Hyperparameters

```python

OrderedDict([('batch_size', 256),
             ('clip_range', 'lin_0.1'),
             ('ent_coef', 0.01),
             ('env_wrapper',
              ['stable_baselines3.common.atari_wrappers.AtariWrapper']),
             ('frame_stack', 4),
             ('learning_rate', 'lin_2.5e-4'),
             ('n_envs', 8),
             ('n_epochs', 4),
             ('n_steps', 128),
             ('n_timesteps', 1000000.0),
             ('policy', 'CnnPolicy'),
             ('vf_coef', 0.5),
             ('normalize', False)])

```
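
In the RL Zoo, a hyperparameter string with the `lin_` prefix (here `learning_rate` and `clip_range`) stands for a schedule that decays linearly from the given value to zero over training. Stable-Baselines3 evaluates schedules on `progress_remaining`, which goes from 1.0 at the start of training to 0.0 at the end. The sketch below mimics that convention; `parse_hyperparameter` is a hypothetical helper, not the Zoo's actual parsing code.

The sketch also works out the rollout arithmetic implied by the other values. Each PPO update collects `n_steps × n_envs` transitions and splits them into minibatches of `batch_size` for `n_epochs` passes. The update count assumes that collection continues until the timestep budget is reached.

```python
import math
from typing import Callable, Union


def linear_schedule(initial_value: float) -> Callable[[float], float]:
    # progress_remaining goes from 1.0 (start of training) to 0.0 (end)
    def schedule(progress_remaining: float) -> float:
        return progress_remaining * initial_value
    return schedule


def parse_hyperparameter(value: Union[str, float]) -> Union[float, Callable[[float], float]]:
    # Hypothetical helper: "lin_X" becomes a linear schedule starting at X
    if isinstance(value, str) and value.startswith("lin_"):
        return linear_schedule(float(value[len("lin_"):]))
    return value


n_steps, n_envs, batch_size, n_epochs, n_timesteps = 128, 8, 256, 4, 1_000_000
rollout_size = n_steps * n_envs                      # transitions collected per update
n_updates = math.ceil(n_timesteps / rollout_size)    # rollouts until the budget is reached
grad_steps_per_update = n_epochs * (rollout_size // batch_size)  # epochs x minibatches
```

With these values each update sees 1024 transitions in 4 minibatches of 256, giving 16 gradient steps per update and roughly 977 updates over the 1M-step budget.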

|

anibahug/mt5-small-finetuned-amazon-en-de

|

anibahug

| 2022-06-18T15:39:26Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-06-18T14:20:45Z |

---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mt5-small-finetuned-amazon-en-de

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-amazon-en-de

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the [Amazon reviews multi](https://huggingface.co/datasets/amazon_reviews_multi) dataset.

It achieves the following results on the evaluation set:

- Loss: 3.2896

- Rouge1: 14.7163

- Rouge2: 6.6341

- Rougel: 14.2052

- Rougelsum: 14.2318

## Model description

The model can summarize texts in English and German.

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

Training was done on Google Colab, using its free GPU.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |
|:-------------:|:-----:|:-----:|:---------------:|:-------:|:------:|:-------:|:---------:|
| 7.2925 | 1.0 | 1276 | 3.5751 | 13.6254 | 6.0527 | 13.109 | 13.1438 |
| 4.0677 | 2.0 | 2552 | 3.4031 | 13.5907 | 6.068 | 13.3526 | 13.2471 |
| 3.7458 | 3.0 | 3828 | 3.3434 | 14.7229 | 6.8482 | 14.1443 | 14.2218 |
| 3.5831 | 4.0 | 5104 | 3.3353 | 14.8696 | 6.6371 | 14.1342 | 14.2907 |
| 3.4841 | 5.0 | 6380 | 3.3037 | 14.233 | 6.2318 | 13.9218 | 13.9781 |
| 3.4142 | 6.0 | 7656 | 3.2914 | 13.7344 | 5.9446 | 13.5476 | 13.6362 |
| 3.3587 | 7.0 | 8932 | 3.2959 | 14.2007 | 6.1905 | 13.5255 | 13.5237 |
| 3.3448 | 8.0 | 10208 | 3.2896 | 14.7163 | 6.6341 | 14.2052 | 14.2318 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

dennis-fast/DialoGPT-ElonMusk

|

dennis-fast

| 2022-06-18T15:13:23Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-18T09:43:20Z |

---

tags:

- conversational

license: mit

---

# DialoGPT-ElonMusk: Chat with Elon Musk

This is a conversational language model of Elon Musk. The bot's conversation abilities come from Microsoft's [DialoGPT-small conversational model](https://huggingface.co/microsoft/DialoGPT-small), fine-tuned on transcripts of 22 interviews with Elon Musk from [elon-musk-interviews.com](https://elon-musk-interviews.com/category/english/).

|

pinot/wav2vec2-large-xls-r-300m-turkish-colab

|

pinot

| 2022-06-18T15:04:03Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-18T08:16:34Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xls-r-300m-turkish-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-turkish-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7642

- WER: 0.5894
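WER (word error rate) is the number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. A value of 0.5894 therefore means roughly 59 word errors per 100 reference words. Below is a minimal Python sketch using a word-level edit distance. It assumes plain whitespace tokenization with no text normalization. Evaluation scripts typically rely on a library such as `jiwer`, and their casing and punctuation handling can change the score.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Computed with a word-level Levenshtein (edit-distance) dynamic program.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev holds the DP row for the previous reference prefix:
    # prev[j] = edit distance between that prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from the hypothesis
                curr[j - 1] + 1,     # insertion: extra word in the hypothesis
                prev[j - 1] + cost,  # substitution, or a match when cost == 0
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("bugün hava çok güzel", "bugün hava güzel"))
```

Insertions are counted against the reference length, so WER can exceed 1.0 when the model outputs many extra words.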

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
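With `gradient_accumulation_steps: 4`, gradients from four micro-batches of 8 examples are accumulated before each optimizer step. This gives the listed `total_train_batch_size` of 32, assuming a single device (the card does not say). The toy Python sketch below uses made-up per-example gradients for a single scalar parameter. It shows why accumulation matches one 32-example batch when each micro-batch loss is a mean and is divided by the number of accumulation steps. For the real model, exact equivalence depends on how the CTC loss is reduced.

```python
import random

PER_DEVICE_BATCH = 8
ACCUM_STEPS = 4
NUM_DEVICES = 1  # assumption: the card does not state the device count

effective_batch = PER_DEVICE_BATCH * ACCUM_STEPS * NUM_DEVICES
print(f"effective batch size: {effective_batch}")


def mean(xs):
    return sum(xs) / len(xs)


# Hypothetical per-example gradients for one scalar parameter.
random.seed(42)
grads = [random.uniform(-1.0, 1.0) for _ in range(effective_batch)]

# Full batch: average the gradient over all 32 examples at once.
full_batch_grad = mean(grads)

# Accumulation: average each 8-example micro-batch, scale by 1/ACCUM_STEPS,
# and sum the contributions before taking a single optimizer step.
accumulated = 0.0
for k in range(ACCUM_STEPS):
    micro = grads[k * PER_DEVICE_BATCH:(k + 1) * PER_DEVICE_BATCH]
    accumulated += mean(micro) / ACCUM_STEPS

print(f"full batch: {full_batch_grad:.6f}  accumulated: {accumulated:.6f}")
```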

### Training results

| Training Loss | Epoch | Step | Validation Loss | WER |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 24.5372 | 9.76 | 400 | 5.2857 | 0.9738 |
| 4.3812 | 19.51 | 800 | 3.6782 | 0.7315 |
| 1.624 | 29.27 | 1200 | 2.7642 | 0.5894 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

zdreiosis/ff_analysis_5

|

zdreiosis

| 2022-06-18T14:54:43Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"gen_ffa",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-18T12:46:34Z |

---

license: apache-2.0

tags:

- gen_ffa

- generated_from_trainer

metrics:

- f1

- accuracy

model-index:

- name: ff_analysis_5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ff_analysis_5

This model is a fine-tuned version of [zdreiosis/ff_analysis_5](https://huggingface.co/zdreiosis/ff_analysis_5) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0824

- F1: 0.9306

- ROC AUC: 0.9483

- Accuracy: 0.8137
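The card does not say whether this is a multi-label task. Two clues point that way, though: the combination of F1, ROC AUC, and accuracy, and accuracy (0.8137) sitting well below F1 (0.9306). Both are consistent with sigmoid outputs scored by micro-averaged F1 and exact-match (subset) accuracy. The Python sketch below works under that assumption. It uses a 0.5 threshold, and the `multilabel_metrics` helper and toy data are hypothetical. ROC AUC is omitted because it is computed from the raw scores rather than thresholded predictions.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def multilabel_metrics(logits, labels, threshold=0.5):
    """Micro-averaged F1 and exact-match (subset) accuracy for multi-hot labels."""
    preds = [[1 if sigmoid(z) >= threshold else 0 for z in row] for row in logits]
    tp = fp = fn = 0
    exact = 0
    for p_row, y_row in zip(preds, labels):
        # Micro averaging pools true/false positives and false negatives over all labels.
        for p, y in zip(p_row, y_row):
            tp += int(p == 1 and y == 1)
            fp += int(p == 1 and y == 0)
            fn += int(p == 0 and y == 1)
        # Subset accuracy only counts an example if every label is predicted correctly.
        exact += int(p_row == y_row)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"f1": f1, "accuracy": exact / len(labels)}


logits = [[2.0, -1.0, 0.5], [-2.0, 3.0, -0.5], [1.0, 1.0, -3.0]]
labels = [[1, 0, 1], [0, 1, 1], [1, 0, 0]]
print(multilabel_metrics(logits, labels))
```

In this toy case, one wrong label in each of two examples leaves micro-F1 at 0.8 while exact-match accuracy drops to 1/3. That is the same kind of gap seen in the evaluation results above.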

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | ROC AUC | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:------:|:-------:|:--------:|
| No log | 0.27 | 50 | 0.0846 | 0.9305 | 0.9476 | 0.8075 |
| No log | 0.55 | 100 | 0.1000 | 0.9070 | 0.9320 | 0.7484 |
| No log | 0.82 | 150 | 0.0945 | 0.9126 | 0.9349 | 0.7640 |
| No log | 1.1 | 200 | 0.0973 | 0.9119 | 0.9353 | 0.7764 |
| No log | 1.37 | 250 | 0.0880 | 0.9336 | 0.9504 | 0.8261 |
| No log | 1.65 | 300 | 0.0857 | 0.9246 | 0.9434 | 0.8043 |
| No log | 1.92 | 350 | 0.0844 | 0.9324 | 0.9488 | 0.8199 |
| No log | 2.2 | 400 | 0.0881 | 0.9232 | 0.9450 | 0.7888 |