| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

edbeeching/decision-transformer-gym-halfcheetah-medium-replay

|

edbeeching

| 2022-06-29T19:21:08Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"decision_transformer",

"feature-extraction",

"deep-reinforcement-learning",

"reinforcement-learning",

"decision-transformer",

"gym-continous-control",

"arxiv:2106.01345",

"endpoints_compatible",

"region:us"

] |

reinforcement-learning

| 2022-03-16T08:20:08Z |

---

tags:

- deep-reinforcement-learning

- reinforcement-learning

- decision-transformer

- gym-continous-control

pipeline_tag: reinforcement-learning

---

# Decision Transformer model trained on medium-replay trajectories sampled from the Gym HalfCheetah environment

This is a [Decision Transformer](https://arxiv.org/abs/2106.01345) model trained on medium-replay trajectories sampled from the Gym HalfCheetah environment.

The following normalization coefficients are required to use this model:

mean = [-0.12880704, 0.37381196, -0.14995988, -0.23479079, -0.28412786, -0.13096535, -0.20157982, -0.06517727, 3.4768248, -0.02785066, -0.01503525, 0.07697279, 0.01266712, 0.0273253, 0.02316425, 0.01043872, -0.01583941]

std = [0.17019016, 1.2844249, 0.33442774, 0.36727592, 0.26092398, 0.4784107, 0.31814206, 0.33552638, 2.0931616, 0.80374336, 1.9044334, 6.57321, 7.5728636, 5.0697494, 9.105554, 6.0856543, 7.253004, 5]

See our [Blog Post](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing), [Colab notebook](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing) or [Example Script](https://github.com/huggingface/transformers/tree/main/examples/research_projects/decision_transformer) for usage.

|

edbeeching/decision-transformer-gym-hopper-medium-replay

|

edbeeching

| 2022-06-29T19:20:14Z | 9 | 0 |

transformers

|

[

"transformers",

"pytorch",

"decision_transformer",

"feature-extraction",

"deep-reinforcement-learning",

"reinforcement-learning",

"decision-transformer",

"gym-continous-control",

"arxiv:2106.01345",

"endpoints_compatible",

"region:us"

] |

reinforcement-learning

| 2022-03-16T08:20:43Z |

---

tags:

- deep-reinforcement-learning

- reinforcement-learning

- decision-transformer

- gym-continous-control

pipeline_tag: reinforcement-learning

---

# Decision Transformer model trained on medium-replay trajectories sampled from the Gym Hopper environment

This is a [Decision Transformer](https://arxiv.org/abs/2106.01345) model trained on medium-replay trajectories sampled from the Gym Hopper environment.

The following normalization coefficients are required to use this model:

mean = [ 1.2305138, -0.04371411, -0.44542956, -0.09370098, 0.09094488, 1.3694725, -0.19992675, -0.02286135, -0.5287045, -0.14465883, -0.19652697]

std = [0.17565121, 0.06369286, 0.34383234, 0.19566889, 0.5547985, 1.0510299, 1.1583077, 0.79631287, 1.4802359, 1.6540332, 5.108601]

See our [Blog Post](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing), [Colab notebook](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing) or [Example Script](https://github.com/huggingface/transformers/tree/main/examples/research_projects/decision_transformer) for usage.
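The coefficients above are meant to standardize each raw observation before it is passed to the model. A minimal sketch, assuming NumPy and the usual `(state - mean) / std` standardization; the helper name `normalize_state` is hypothetical and not part of any library:

```python
import numpy as np

# Coefficients copied from this model card (Hopper, medium-replay).
STATE_MEAN = np.array(
    [1.2305138, -0.04371411, -0.44542956, -0.09370098, 0.09094488, 1.3694725,
     -0.19992675, -0.02286135, -0.5287045, -0.14465883, -0.19652697],
    dtype=np.float32,
)
STATE_STD = np.array(
    [0.17565121, 0.06369286, 0.34383234, 0.19566889, 0.5547985, 1.0510299,
     1.1583077, 0.79631287, 1.4802359, 1.6540332, 5.108601],
    dtype=np.float32,
)


def normalize_state(state):
    """Standardize a raw Hopper observation (hypothetical helper)."""
    state = np.asarray(state, dtype=np.float32)
    # Guard against coefficient lists that do not match the observation size.
    if state.shape[-1] != STATE_MEAN.shape[0] or STATE_MEAN.shape != STATE_STD.shape:
        raise ValueError("observation, mean and std must have the same length")
    return (state - STATE_MEAN) / STATE_STD


# An observation equal to the mean maps to the zero vector.
normalized = normalize_state(STATE_MEAN)
```

The length check is there because a mean and std list of different sizes would otherwise broadcast incorrectly or fail with a less helpful error.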

|

edbeeching/decision-transformer-gym-hopper-medium

|

edbeeching

| 2022-06-29T19:15:16Z | 34,485 | 6 |

transformers

|

[

"transformers",

"pytorch",

"decision_transformer",

"feature-extraction",

"deep-reinforcement-learning",

"reinforcement-learning",

"decision-transformer",

"gym-continous-control",

"arxiv:2106.01345",

"endpoints_compatible",

"region:us"

] |

reinforcement-learning

| 2022-03-16T08:20:31Z |

---

tags:

- deep-reinforcement-learning

- reinforcement-learning

- decision-transformer

- gym-continous-control

pipeline_tag: reinforcement-learning

---

# Decision Transformer model trained on medium trajectories sampled from the Gym Hopper environment

This is a [Decision Transformer](https://arxiv.org/abs/2106.01345) model trained on medium trajectories sampled from the Gym Hopper environment.

The following normalization coefficients are required to use this model:

mean = [ 1.311279, -0.08469521, -0.5382719, -0.07201576, 0.04932366, 2.1066856, -0.15017354, 0.00878345, -0.2848186, -0.18540096, -0.28461286]

std = [0.17790751, 0.05444621, 0.21297139, 0.14530419, 0.6124444, 0.85174465, 1.4515252, 0.6751696, 1.536239, 1.6160746, 5.6072536 ]

See our [Blog Post](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing), [Colab notebook](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing) or [Example Script](https://github.com/huggingface/transformers/tree/main/examples/research_projects/decision_transformer) for usage.

|

edbeeching/decision-transformer-gym-hopper-expert

|

edbeeching

| 2022-06-29T19:12:17Z | 566 | 18 |

transformers

|

[

"transformers",

"pytorch",

"decision_transformer",

"feature-extraction",

"deep-reinforcement-learning",

"reinforcement-learning",

"decision-transformer",

"gym-continous-control",

"arxiv:2106.01345",

"endpoints_compatible",

"region:us"

] |

reinforcement-learning

| 2022-03-16T08:20:20Z |

---

tags:

- deep-reinforcement-learning

- reinforcement-learning

- decision-transformer

- gym-continous-control

pipeline_tag: reinforcement-learning

---

# Decision Transformer model trained on expert trajectories sampled from the Gym Hopper environment

This is a [Decision Transformer](https://arxiv.org/abs/2106.01345) model trained on expert trajectories sampled from the Gym Hopper environment.

The following normalization coefficients are required to use this model:

mean = [ 1.3490015, -0.11208222, -0.5506444, -0.13188992, -0.00378754, 2.6071432, 0.02322114, -0.01626922, -0.06840388, -0.05183131, 0.04272673]

std = [0.15980862, 0.0446214, 0.14307782, 0.17629202, 0.5912333, 0.5899924, 1.5405099, 0.8152689, 2.0173461, 2.4107876, 5.8440027 ]

See our [Blog Post](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing), [Colab notebook](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing) or [Example Script](https://github.com/huggingface/transformers/tree/main/examples/research_projects/decision_transformer) for usage.

|

zhav1k/q-Taxi-v3

|

zhav1k

| 2022-06-29T18:56:01Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-29T18:55:53Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.54 +/- 2.69

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

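```python
import gym

# `load_from_hub` and `evaluate_agent` are helper functions this card assumes
# are already defined; they are not imported from a library here.
model = load_from_hub(repo_id="zhav1k/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])
```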
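The `evaluate_agent` helper called above is not defined in this card. As a rough sketch of what such a helper typically does (an assumption, not the author's code), the example below runs a greedy policy from a Q-table and reports the mean and standard deviation of the episode rewards, which is the form of the `mean_reward` metric in the metadata. `ToyChainEnv` and `evaluate_greedy` are hypothetical names, and the toy environment stands in for Taxi-v3 so the example runs without Gym:

```python
import numpy as np


class ToyChainEnv:
    """Hypothetical 3-state chain: action 1 moves right; reaching state 2 ends the episode."""

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        if action == 1:
            self.state += 1
        done = self.state == 2
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def evaluate_greedy(env, qtable, max_steps, n_eval_episodes):
    """Run the greedy policy argmax_a Q[s, a] and summarize episode rewards."""
    episode_rewards = []
    for _ in range(n_eval_episodes):
        state = env.reset()
        total = 0.0
        for _ in range(max_steps):
            action = int(np.argmax(qtable[state]))  # greedy action
            state, reward, done = env.step(action)
            total += reward
            if done:
                break
        episode_rewards.append(total)
    return float(np.mean(episode_rewards)), float(np.std(episode_rewards))


# Q-table with one row per state and one column per action (hypothetical values).
qtable = np.array([[0.0, 1.0], [0.0, 1.0], [0.0, 0.0]])
mean_reward, std_reward = evaluate_greedy(ToyChainEnv(), qtable, max_steps=10, n_eval_episodes=5)
```

With a real Gym environment the `reset` and `step` return values depend on the Gym version, so the unpacking would need to be adjusted.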

|

nbroad/bigbird-base-health-fact

|

nbroad

| 2022-06-29T18:29:17Z | 17 | 1 |

transformers

|

[

"transformers",

"pytorch",

"big_bird",

"text-classification",

"generated_from_trainer",

"en",

"dataset:health_fact",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-04-26T17:55:02Z |

---

language:

- en

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- health_fact

model-index:

- name: bigbird-base-health-fact

results:

- task:

type: text-classification

name: Text Classification

dataset:

name: health_fact

type: health_fact

split: test

metrics:

- name: F1

type: f1

value: 0.6694031411935434

- name: Accuracy

type: accuracy

value: 0.7948094079480941

- name: False Accuracy

type: accuracy

value: 0.8092783505154639

- name: Mixture Accuracy

type: accuracy

value: 0.4975124378109453

- name: True Accuracy

type: accuracy

value: 0.9148580968280468

- name: Unproven Accuracy

type: accuracy

value: 0.4

---

# bigbird-base-health-fact

This model is a fine-tuned version of [google/bigbird-roberta-base](https://huggingface.co/google/bigbird-roberta-base) on the health_fact dataset.

It achieves the following results on the VALIDATION set:

- Overall Accuracy: 0.8228995057660626

- Macro F1: 0.6979224830442152

- False Accuracy: 0.8289473684210527

- Mixture Accuracy: 0.47560975609756095

- True Accuracy: 0.9332273449920508

- Unproven Accuracy: 0.4634146341463415

It achieves the following results on the TEST set:

- Overall Accuracy: 0.7948094079480941

- Macro F1: 0.6694031411935434

- Mixture Accuracy: 0.4975124378109453

- False Accuracy: 0.8092783505154639

- True Accuracy: 0.9148580968280468

- Unproven Accuracy: 0.4

## Model description

Here is how you can use the model:

```python

import torch

from transformers import pipeline

claim = "A mother revealed to her child in a letter after her death that she had just one eye because she had donated the other to him."

text = "In April 2005, we spotted a tearjerker on the Internet about a mother who gave up one of her eyes to a son who had lost one of his at an early age. By February 2007 the item was circulating in e-mail in the following shortened version: My mom only had one eye. I hated her… She was such an embarrassment. She cooked for students and teachers to support the family. There was this one day during elementary school where my mom came to say hello to me. I was so embarrassed. How could she do this to me? I ignored her, threw her a hateful look and ran out. The next day at school one of my classmates said, “EEEE, your mom only has one eye!” I wanted to bury myself. I also wanted my mom to just disappear. I confronted her that day and said, “If you’re only gonna make me a laughing stock, why don’t you just die?” My mom did not respond… I didn’t even stop to think for a second about what I had said, because I was full of anger. I was oblivious to her feelings. I wanted out of that house, and have nothing to do with her. So I studied real hard, got a chance to go abroad to study. Then, I got married. I bought a house of my own. I had kids of my own. I was happy with my life, my kids and the comforts. Then one day, my Mother came to visit me. She hadn’t seen me in years and she didn’t even meet her grandchildren. When she stood by the door, my children laughed at her, and I yelled at her for coming over uninvited. I screamed at her, “How dare you come to my house and scare my children! GET OUT OF HERE! NOW!! !” And to this, my mother quietly answered, “Oh, I’m so sorry. I may have gotten the wrong address,” and she disappeared out of sight. One day, a letter regarding a school reunion came to my house. So I lied to my wife that I was going on a business trip. After the reunion, I went to the old shack just out of curiosity. My neighbors said that she died. I did not shed a single tear. They handed me a letter that she had wanted me to have. 
My dearest son, I think of you all the time. I’m sorry that I came to your house and scared your children. I was so glad when I heard you were coming for the reunion. But I may not be able to even get out of bed to see you. I’m sorry that I was a constant embarrassment to you when you were growing up. You see……..when you were very little, you got into an accident, and lost your eye. As a mother, I couldn’t stand watching you having to grow up with one eye. So I gave you mine. I was so proud of my son who was seeing a whole new world for me, in my place, with that eye. With all my love to you, Your mother. In its earlier incarnation, the story identified by implication its location as Korea through statements made by both the mother and the son (the son’s “I left my mother and came to Seoul” and the mother’s “I won’t visit Seoul anymore”). It also supplied a reason for the son’s behavior when his mother arrived unexpectedly to visit him (“My little girl ran away, scared of my mom’s eye” and “I screamed at her, ‘How dare you come to my house and scare my daughter!'”). A further twist was provided in the original: rather than gaining the news of his mother’s death from neighbors (who hand him her letter), the son instead discovered the woman who bore him lying dead on the floor of what used to be his childhood home, her missive to him clutched in her lifeless hand: Give your parents roses while they are alive, not deadMY mom only had one eye. I hated her … she was such an embarrassment. My mom ran a small shop at a flea market. She collected little weeds and such to sell … anything for the money we needed she was such an embarrassment. There was this one day during elementary school … It was field day, and my mom came. I was so embarrassed. How could she do this to me? I threw her a hateful look and ran out. The next day at school … “your mom only has one eye?!? !” … And they taunted me. 
I wished that my mom would just disappear from this world so I said to my mom, “mom … Why don’t you have the other eye?! If you’re only going to make me a laughingstock, why don’t you just die?!! !” my mom did not respond … I guess I felt a little bad, but at the same time, it felt good to think that I had said what I’d wanted to say all this time… maybe it was because my mom hadn’t punished me, but I didn’t think that I had hurt her feelings very badly. That night… I woke up, and went to the kitchen to get a glass of water. My mom was crying there, so quietly, as if she was afraid that she might wake me. I took a look at her, and then turned away. Because of the thing I had said to her earlier, there was something pinching at me in the corner of my heart. Even so, I hated my mother who was crying out of her one eye. So I told myself that I would grow up and become successful. Because I hated my one-eyed mom and our desperate poverty… then I studied real hard. I left my mother and came to Seoul and studied, and got accepted in the Seoul University with all the confidence I had. Then, I got married. I bought a house of my own. Then I had kids, too… now I’m living happily as a successful man. I like it here because it’s a place that doesn’t remind me of my mom. This happiness was getting bigger and bigger, when… what?! Who’s this…it was my mother… still with her one eye. It felt as if the whole sky was falling apart on me. My little girl ran away, scared of my mom’s eye. And I asked her, “who are you? !” “I don’t know you!! !” as if trying to make that real. I screamed at her, “How dare you come to my house and scare my daughter!” “GET OUT OF HERE! NOW!! !” and to this, my mother quietly answered, “oh, I’m so sorry. I may have gotten the wrong address,” and she disappeared out of sight. Thank goodness… she doesn’t recognize me… I was quite relieved. I told myself that I wasn’t going to care, or think about this for the rest of my life. 
Then a wave of relief came upon me… One day, a letter regarding a school reunion came to my house. So, lying to my wife that I was going on a business trip, I went. After the reunion, I went down to the old shack, that I used to call a house… just out of curiosity there, I found my mother fallen on the cold ground. But I did not shed a single tear. She had a piece of paper in her hand…. it was a letter to me. My son… I think my life has been long enough now… And… I won’t visit Seoul anymore… but would it be too much to ask if I wanted you to come visit me once in a while? I miss you so much… and I was so glad when I heard you were coming for the reunion. But I decided not to go to the school. …for you… and I’m sorry that I only have one eye, and I was an embarrassment for you. You see, when you were very little, you got into an accident, and lost your eye. as a mom, I couldn’t stand watching you having to grow up with only one eye… so I gave you mine… I was so proud of my son that was seeing a whole new world for me, in my place, with that eye. I was never upset at you for anything you did… the couple times that you were angry with me, I thought to myself, ‘it’s because he loves me…’ my son. Oh, my son… I don’t want you to cry for me, because of my death. My son, I love you my son, I love you so much. With all modern medical technology, transplantation of the eyeball is still impossible. The optic nerve isn’t an ordinary nerve, but instead an inset running from the brain. Modern medicine isn’t able to “connect” an eyeball back to brain after an optic nerve has been severed, let alone transplant the eye from a different person. (The only exception is the cornea, the transparent part in front of the eye: corneas are transplanted to replace injured and opaque ones.) 
We won’t try to comment on whether any surgeon would accept an eye from a living donor for transplant into another — we’ll leave that to others who are far more knowledgeable about medical ethics and transplant procedures. But we will note that the plot device of a mother’s dramatic sacrifice for the sake of her child’s being revealed in a written communication delivered after her demise appears in another legend about maternal love: the 2008 tale about a woman who left a touching message on her cell phone even as life ebbed from her as she used her body to shield the tot during an earthquake. Giving up one’s own life for a loved one is central to a 2005 urban legend about a boy on a motorcycle who has his girlfriend hug him one last time and put on his helmet just before the crash that kills him and spares her. Returning to the “notes from the dead” theme is the 1995 story about a son who discovers only through a posthumous letter from his mother what their occasional dinner “dates” had meant to her. Another legend we’re familiar with features a meme used in the one-eyed mother story (the coming to light of the enduring love of the person who died for the completely unworthy person she’d lavished it on), but that one involves a terminally ill woman and her cheating husband. In it, an about-to-be-spurned wife begs the adulterous hoon she’d married to stick around for another 30 days and to carry her over the threshold of their home once every day of that month as her way of keeping him around long enough for her to kick the bucket and thus spare their son the knowledge that his parents were on the verge of divorce."

label = "false"

device = 0 if torch.cuda.is_available() else -1

pl = pipeline("text-classification", model="nbroad/bigbird-base-health-fact", device=device)

input_text = claim+pl.tokenizer.sep_token+text

print(len(pl.tokenizer(input_text).input_ids))

# 2303 (which is why bigbird is useful)

pl(input_text)

# [{'label': 'false', 'score': 0.3866822123527527}]

```

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 32

- seed: 18

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-06

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

- mixed_precision_training: Native AMP
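To make the `linear` scheduler with `lr_scheduler_warmup_ratio: 0.1` concrete, here is a small sketch of a linear warmup followed by linear decay. It assumes 3678 total optimizer steps (the last step listed in the training results for this card) and that warmup steps are rounded up; the function name is hypothetical and the exact rounding used during training is an assumption:

```python
import math


def linear_warmup_decay_lr(step, base_lr=1e-05, total_steps=3678, warmup_ratio=0.1):
    """Learning rate at a given step: linear ramp up, then linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Warmup phase: scale from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: scale from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the start, at the end of warmup, and at the final step.
schedule_samples = [linear_warmup_decay_lr(s) for s in (0, 368, 3678)]
```

Under these assumptions the peak learning rate is reached after roughly the first tenth of training and falls to zero by the last step.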

### Training results

| Training Loss | Epoch | Step | Validation Loss | Micro F1 | Macro F1 | False F1 | Mixture F1 | True F1 | Unproven F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:--------:|:--------:|:----------:|:-------:|:-----------:|

| 0.5563 | 1.0 | 1226 | 0.5020 | 0.7949 | 0.6062 | 0.7926 | 0.4591 | 0.8986 | 0.2745 |

| 0.5048 | 2.0 | 2452 | 0.4969 | 0.8180 | 0.6846 | 0.8202 | 0.4342 | 0.9126 | 0.5714 |

| 0.3454 | 3.0 | 3678 | 0.5864 | 0.8130 | 0.6874 | 0.8114 | 0.4557 | 0.9154 | 0.5672 |
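The Macro F1 column in the table above is the unweighted mean of the four per-class F1 scores. A short check using only the values from the table (the dictionary layout and function name are illustrative):

```python
# Per-class validation F1 scores copied from the table above, keyed by epoch.
per_class_f1 = {
    1: {"false": 0.7926, "mixture": 0.4591, "true": 0.8986, "unproven": 0.2745},
    2: {"false": 0.8202, "mixture": 0.4342, "true": 0.9126, "unproven": 0.5714},
    3: {"false": 0.8114, "mixture": 0.4557, "true": 0.9154, "unproven": 0.5672},
}


def macro_f1(scores):
    """Unweighted mean of per-class F1 scores."""
    return sum(scores.values()) / len(scores)


# Rounded to four decimals to match the precision of the table.
macro_by_epoch = {epoch: round(macro_f1(scores), 4) for epoch, scores in per_class_f1.items()}
```

Because every class counts equally, the weak `mixture` and `unproven` classes pull Macro F1 well below Micro F1.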

### Framework versions

- Transformers 4.19.0.dev0

- Pytorch 1.11.0a0+17540c5

- Datasets 2.1.1.dev0

- Tokenizers 0.12.1

|

JHart96/finetuning-sentiment-model-3000-samples

|

JHart96

| 2022-06-29T18:20:13Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-29T18:10:54Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.86

- name: F1

type: f1

value: 0.8627450980392156

---


# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3300

- Accuracy: 0.86

- F1: 0.8627
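Accuracy and F1 are both derived from the confusion counts of the binary classifier. The card reports neither those counts nor the size of the evaluation set, so the counts below are hypothetical, chosen only so that the standard formulas land on the reported figures:

```python
# Hypothetical confusion counts (NOT reported in the card), for illustration only.
tp, fp, fn, tn = 132, 22, 20, 126

total = tp + fp + fn + tn

# Accuracy: fraction of all predictions that are correct.
accuracy = (tp + tn) / total

# F1: harmonic mean of precision and recall, written directly in terms of counts.
f1 = 2 * tp / (2 * tp + fp + fn)
```

Only the sum `fp + fn` affects these two metrics, so the split between false positives and false negatives here is arbitrary.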

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

ashraq/movielens_user_model_cos_32

|

ashraq

| 2022-06-29T18:07:51Z | 0 | 0 |

keras

|

[

"keras",

"tf-keras",

"region:us"

] | null | 2022-06-24T19:16:33Z |

---

library_name: keras

---

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Model Plot

<details>

<summary>View Model Plot</summary>

</details>

|

kakashi210/autotrain-tweet-sentiment-classifier-1055036381

|

kakashi210

| 2022-06-29T17:54:00Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"autotrain",

"unk",

"dataset:kakashi210/autotrain-data-tweet-sentiment-classifier",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-29T17:45:44Z |

---

tags: autotrain

language: unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- kakashi210/autotrain-data-tweet-sentiment-classifier

co2_eq_emissions: 17.43982800509071

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1055036381

- CO2 Emissions (in grams): 17.43982800509071

## Validation Metrics

- Loss: 0.6177256107330322

- Accuracy: 0.7306006137658921

- Macro F1: 0.719534854339415

- Micro F1: 0.730600613765892

- Weighted F1: 0.7302204676842725

- Macro Precision: 0.714938066281146

- Micro Precision: 0.7306006137658921

- Weighted Precision: 0.7316651970219867

- Macro Recall: 0.7258484087500343

- Micro Recall: 0.7306006137658921

- Weighted Recall: 0.7306006137658921
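In single-label multi-class classification, micro-averaged precision, recall and F1 all equal accuracy, which is why those values coincide in the list above while the macro averages differ. A minimal sketch with a hypothetical 3-class confusion matrix (the numbers are made up for illustration):

```python
# Hypothetical confusion matrix: rows are true classes, columns are predicted classes.
confusion = [
    [50, 5, 5],
    [8, 30, 2],
    [4, 6, 40],
]
n = len(confusion)
total = sum(sum(row) for row in confusion)

tp = [confusion[i][i] for i in range(n)]
fp = [sum(confusion[r][i] for r in range(n)) - tp[i] for i in range(n)]
fn = [sum(confusion[i]) - tp[i] for i in range(n)]

accuracy = sum(tp) / total

# Micro averaging pools the counts of all classes before computing the ratio.
micro_precision = sum(tp) / (sum(tp) + sum(fp))
micro_recall = sum(tp) / (sum(tp) + sum(fn))
micro_f1 = 2 * micro_precision * micro_recall / (micro_precision + micro_recall)

# Macro averaging computes the ratio per class and then takes the plain mean.
macro_recall = sum(tp[i] / (tp[i] + fn[i]) for i in range(n)) / n
```

Every misclassified example is one false positive for the predicted class and one false negative for the true class, so the pooled counts satisfy `sum(fp) == sum(fn)`.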

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/kakashi210/autotrain-tweet-sentiment-classifier-1055036381

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("kakashi210/autotrain-tweet-sentiment-classifier-1055036381", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("kakashi210/autotrain-tweet-sentiment-classifier-1055036381", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```
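The snippet above stops at the raw model outputs. In Transformers, `outputs.logits` holds one unnormalized score per class and `model.config.id2label` maps class indices to label names. The sketch below shows the remaining step (softmax, then argmax) in plain Python; the logits and label names are hypothetical placeholders, not values from this model:

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (numerically stable form)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    norm = sum(exps)
    return [e / norm for e in exps]


# Hypothetical stand-ins for outputs.logits[0] and model.config.id2label.
logits = [-1.2, 0.3, 2.1]
id2label = {0: "negative", 1: "neutral", 2: "positive"}

probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)
prediction = {"label": id2label[best], "score": probs[best]}
```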

|

BeardedJohn/bert-finetuned-ner-ubb-endava-only-misc

|

BeardedJohn

| 2022-06-29T16:59:54Z | 4 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"token-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-29T11:44:27Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: BeardedJohn/bert-finetuned-ner-ubb-endava-only-misc

results: []

---


# BeardedJohn/bert-finetuned-ner-ubb-endava-only-misc

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0015

- Validation Loss: 0.0006

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 705, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
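The optimizer configuration above uses a `PolynomialDecay` learning-rate schedule with `power: 1.0`, which amounts to a straight line from the initial rate to the end rate over `decay_steps`. A minimal sketch of that formula with the values from the configuration (the function name is hypothetical, and `cycle: False` is assumed to mean the step is clipped at `decay_steps`):

```python
def polynomial_decay_lr(step, initial_lr=2e-05, decay_steps=705, end_lr=0.0, power=1.0):
    """Learning rate after `step` optimizer steps under polynomial decay."""
    step = min(step, decay_steps)  # no cycling: hold the end rate after decay_steps
    fraction = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction ** power + end_lr


# Learning rate at the first step, halfway through, and at the end of the decay.
lr_samples = [polynomial_decay_lr(s) for s in (0, 352, 705)]
```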

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.1740 | 0.0013 | 0 |

| 0.0024 | 0.0007 | 1 |

| 0.0015 | 0.0006 | 2 |

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.8.2

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Abonia/finetuning-sentiment-model-3000-samples

|

Abonia

| 2022-06-29T15:27:48Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-29T15:12:16Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.8766666666666667

- name: F1

type: f1

value: 0.877076411960133

---


# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2991

- Accuracy: 0.8767

- F1: 0.8771

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

ydshieh/clip-vit-base-patch32

|

ydshieh

| 2022-06-29T14:47:32Z | 15 | 1 |

transformers

|

[

"transformers",

"tf",

"clip",

"zero-shot-image-classification",

"summarization",

"en",

"dataset:scientific_papers",

"arxiv:2007.14062",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-03-02T23:29:05Z |

---

language: en

license: apache-2.0

datasets:

- scientific_papers

tags:

- summarization

model-index:

- name: google/bigbird-pegasus-large-pubmed

results:

- task:

type: summarization

name: Summarization

dataset:

name: scientific_papers

type: scientific_papers

config: pubmed

split: test

metrics:

- name: ROUGE-1

type: rouge

value: 40.8966

verified: true

- name: ROUGE-2

type: rouge

value: 18.1161

verified: true

- name: ROUGE-L

type: rouge

value: 26.1743

verified: true

- name: ROUGE-LSUM

type: rouge

value: 34.2773

verified: true

- name: loss

type: loss

value: 2.1707184314727783

verified: true

- name: meteor

type: meteor

value: 0.3513

verified: true

- name: gen_len

type: gen_len

value: 221.2531

verified: true

- task:

type: summarization

name: Summarization

dataset:

name: scientific_papers

type: scientific_papers

config: arxiv

split: test

metrics:

- name: ROUGE-1

type: rouge

value: 40.3815

verified: true

- name: ROUGE-2

type: rouge

value: 14.374

verified: true

- name: ROUGE-L

type: rouge

value: 23.4773

verified: true

- name: ROUGE-LSUM

type: rouge

value: 33.772

verified: true

- name: loss

type: loss

value: 3.235051393508911

verified: true

- name: gen_len

type: gen_len

value: 186.2003

verified: true

---

# BigBirdPegasus model (large)

BigBird is a sparse-attention-based transformer that extends Transformer-based models, such as BERT, to much longer sequences. Moreover, BigBird comes with a theoretical understanding of the capabilities of a complete transformer that the sparse model can handle.

BigBird was introduced in this [paper](https://arxiv.org/abs/2007.14062) and first released in this [repository](https://github.com/google-research/bigbird).

Disclaimer: The team releasing BigBird did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

BigBird relies on **block sparse attention** instead of normal attention (i.e. BERT's attention) and can handle sequences up to a length of 4096 at a much lower compute cost compared to BERT. It has achieved SOTA on various tasks involving very long sequences, such as long-document summarization and question answering with long contexts.
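To give a feel for why block sparse attention is cheaper, the sketch below builds a block-level attention mask from three ingredients: global blocks, a sliding window, and a few random blocks per row. It is a simplified illustration, not the library's implementation: the function name, the single global block, and the window size are assumptions, while `num_random_blocks=3` and a block size of 64 follow the defaults mentioned in this card.

```python
import numpy as np


def block_sparse_mask(num_blocks, num_random_blocks=3, num_global_blocks=1, window=1, seed=0):
    """Boolean mask where mask[i, j] is True if query block i may attend to key block j."""
    rng = np.random.default_rng(seed)
    mask = np.zeros((num_blocks, num_blocks), dtype=bool)

    # Global blocks attend everywhere and are attended to by every block.
    mask[:num_global_blocks, :] = True
    mask[:, :num_global_blocks] = True

    for i in range(num_global_blocks, num_blocks):
        # Sliding window: each block sees itself and its neighbours.
        lo, hi = max(0, i - window), min(num_blocks, i + window + 1)
        mask[i, lo:hi] = True

        # Random blocks: a few extra key blocks not already visible.
        candidates = np.flatnonzero(~mask[i])
        k = min(num_random_blocks, candidates.size)
        if k > 0:
            picked = rng.choice(candidates, size=k, replace=False)
            mask[i, picked] = True
    return mask


# 4096 tokens split into blocks of 64 tokens gives 64 blocks.
mask = block_sparse_mask(num_blocks=4096 // 64)
density = mask.mean()  # fraction of block pairs that are actually computed
```

Each non-global row touches only a handful of key blocks regardless of sequence length, so the cost grows roughly linearly with the number of blocks instead of quadratically.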

## How to use

Here is how to use this model to generate a summary of a given text in PyTorch:

```python

from transformers import BigBirdPegasusForConditionalGeneration, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("google/bigbird-pegasus-large-pubmed")

# by default encoder-attention is `block_sparse` with num_random_blocks=3, block_size=64

model = BigBirdPegasusForConditionalGeneration.from_pretrained("google/bigbird-pegasus-large-pubmed")

# decoder attention type can't be changed & will be "original_full"

# you can change `attention_type` (encoder only) to full attention like this:

model = BigBirdPegasusForConditionalGeneration.from_pretrained("google/bigbird-pegasus-large-pubmed", attention_type="original_full")

# you can change `block_size` & `num_random_blocks` like this:

model = BigBirdPegasusForConditionalGeneration.from_pretrained("google/bigbird-pegasus-large-pubmed", block_size=16, num_random_blocks=2)

text = "Replace me by any text you'd like."

inputs = tokenizer(text, return_tensors='pt')

prediction = model.generate(**inputs)

prediction = tokenizer.batch_decode(prediction)

```

## Training Procedure

This checkpoint was obtained by fine-tuning `BigBirdPegasusForConditionalGeneration` for **summarization** on the **pubmed** dataset from [scientific_papers](https://huggingface.co/datasets/scientific_papers).

## BibTeX entry and citation info

```tex

@misc{zaheer2021big,

title={Big Bird: Transformers for Longer Sequences},

author={Manzil Zaheer and Guru Guruganesh and Avinava Dubey and Joshua Ainslie and Chris Alberti and Santiago Ontanon and Philip Pham and Anirudh Ravula and Qifan Wang and Li Yang and Amr Ahmed},

year={2021},

eprint={2007.14062},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

FabianWillner/bert-base-uncased-finetuned-squad

|

FabianWillner

| 2022-06-29T14:46:28Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-29T09:16:46Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-squad

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0106

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
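
As an illustration of `lr_scheduler_type: linear`, the sketch below computes a learning rate that decays linearly from its initial value to zero. It assumes no warmup steps (none are listed above) and 11066 total optimizer steps, the final step count reported in this card's results table. The function name `linear_lr` is hypothetical and is not part of the Trainer API.

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 2e-5, warmup_steps: int = 0) -> float:
    """Learning rate at a given step for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Linear ramp from 0 to base_lr during warmup (unused when warmup_steps == 0).
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    for step in (0, 5533, 11066):
        print(step, linear_lr(step, total_steps=11066))
```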

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.0626 | 1.0 | 5533 | 1.0308 |

| 0.8157 | 2.0 | 11066 | 1.0106 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

freedomking/prompt-uie-base

|

freedomking

| 2022-06-29T14:46:15Z | 4 | 5 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"endpoints_compatible",

"region:us"

] | null | 2022-06-29T14:28:56Z |

## Introduction

Universal Information Extraction

More details:

https://github.com/PaddlePaddle/PaddleNLP/tree/develop/model_zoo/uie

|

igpaub/q-FrozenLake-v1-4x4

|

igpaub

| 2022-06-29T14:29:26Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-29T13:12:43Z |

---

tags:

- FrozenLake-v1-4x4

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4

results:

- metrics:

- type: mean_reward

value: 0.78 +/- 0.41

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4

type: FrozenLake-v1-4x4

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="igpaub/q-FrozenLake-v1-4x4", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
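
The snippet above relies on helper functions that are not defined in this card. As a minimal sketch of what an evaluation helper might do, the code below runs greedy rollouts from a Q-table and reports the mean and standard deviation of the episode reward. `ChainEnv` is a toy stand-in environment and `evaluate_greedy` is a hypothetical name; both are assumptions for illustration, and the simplified `reset`/`step` interface is not the exact Gym API.

```python
import statistics


class ChainEnv:
    """Toy deterministic environment (hypothetical): walk right from state 0 to the goal."""

    def __init__(self, n_states: int = 4):
        self.n_states = n_states
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int):
        # Action 1 moves right, any other action moves left (clamped at state 0).
        self.state = max(0, self.state + (1 if action == 1 else -1))
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def evaluate_greedy(env, max_steps, n_eval_episodes, qtable):
    """Run greedy rollouts and return (mean_reward, std_reward)."""
    episode_rewards = []
    for _ in range(n_eval_episodes):
        state = env.reset()
        total = 0.0
        for _ in range(max_steps):
            # Greedy policy: pick the action with the highest Q-value in this state.
            action = max(range(len(qtable[state])), key=lambda a: qtable[state][a])
            state, reward, done = env.step(action)
            total += reward
            if done:
                break
        episode_rewards.append(total)
    return statistics.mean(episode_rewards), statistics.pstdev(episode_rewards)


if __name__ == "__main__":
    qtable = [[0.0, 1.0] for _ in range(4)]  # always prefers "right"
    print(evaluate_greedy(ChainEnv(), max_steps=10, n_eval_episodes=5, qtable=qtable))
```

When every episode ends with a reward of either 0 or 1, a mean of 0.78 implies a standard deviation of sqrt(0.78 × 0.22) ≈ 0.41, which is consistent with the `mean_reward` reported in this card's metadata.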

|

Salvatore/bert-finetuned-mutation-recognition-1

|

Salvatore

| 2022-06-29T13:59:03Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-29T09:40:09Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-mutation-recognition-1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-mutation-recognition-1

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0380

- Proteinmutation F1: 0.8631

- Dnamutation F1: 0.7522

- Snp F1: 1.0

- Precision: 0.8061

- Recall: 0.8386

- F1: 0.8221

- Accuracy: 0.9942

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Proteinmutation F1 | Dnamutation F1 | Snp F1 | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:------------------:|:--------------:|:------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 259 | 0.0273 | 0.8072 | 0.5762 | 0.975 | 0.6685 | 0.7580 | 0.7104 | 0.9924 |

| 0.0597 | 2.0 | 518 | 0.0260 | 0.8148 | 0.6864 | 0.9873 | 0.7363 | 0.8004 | 0.7670 | 0.9936 |

| 0.0597 | 3.0 | 777 | 0.0338 | 0.8252 | 0.7221 | 1.0 | 0.7857 | 0.7941 | 0.7899 | 0.9935 |

| 0.0046 | 4.0 | 1036 | 0.0299 | 0.8707 | 0.7214 | 0.9873 | 0.7773 | 0.8450 | 0.8098 | 0.9941 |

| 0.0046 | 5.0 | 1295 | 0.0353 | 0.9035 | 0.7364 | 0.9873 | 0.8130 | 0.8493 | 0.8307 | 0.9941 |

| 0.0014 | 6.0 | 1554 | 0.0361 | 0.8941 | 0.7391 | 0.9873 | 0.8093 | 0.8471 | 0.8278 | 0.9941 |

| 0.0014 | 7.0 | 1813 | 0.0367 | 0.8957 | 0.7249 | 1.0 | 0.8090 | 0.8365 | 0.8225 | 0.9940 |

| 0.0004 | 8.0 | 2072 | 0.0381 | 0.8714 | 0.7578 | 1.0 | 0.8266 | 0.8301 | 0.8284 | 0.9940 |

| 0.0004 | 9.0 | 2331 | 0.0380 | 0.8732 | 0.7550 | 1.0 | 0.8148 | 0.8408 | 0.8276 | 0.9942 |

| 0.0002 | 10.0 | 2590 | 0.0380 | 0.8631 | 0.7522 | 1.0 | 0.8061 | 0.8386 | 0.8221 | 0.9942 |
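
The overall F1 column is the harmonic mean of the Precision and Recall columns. The small sketch below recomputes it for the final epoch; `f1_from_pr` is a hypothetical helper name, and the result matches the table only up to the rounding of the reported inputs.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Final-epoch precision and recall from the table above.
    print(round(f1_from_pr(0.8061, 0.8386), 3))
```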

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.2

- Datasets 2.0.0

- Tokenizers 0.12.1

|

robingeibel/bigbird-base-finetuned-big_patent

|

robingeibel

| 2022-06-29T12:35:25Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"big_bird",

"fill-mask",

"generated_from_trainer",

"dataset:big_patent",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-06-27T07:03:58Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- big_patent

model-index:

- name: bigbird-base-finetuned-big_patent

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bigbird-base-finetuned-big_patent

This model is a fine-tuned version of [robingeibel/bigbird-base-finetuned-big_patent](https://huggingface.co/robingeibel/bigbird-base-finetuned-big_patent) on the big_patent dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0686

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:------:|:---------------:|

| 1.1432 | 1.0 | 154482 | 1.0686 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

igpaub/q-FrozenLake-v1-4x4-noSlippery

|

igpaub

| 2022-06-29T12:17:50Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-29T12:17:41Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="igpaub/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
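
For readers unfamiliar with how such a Q-table is produced, the standard tabular Q-learning update is sketched below. The learning rate `alpha` and discount factor `gamma` defaults are illustrative assumptions; this card does not state the values used for training, and `q_learning_update` is a hypothetical name.

```python
def q_learning_update(qtable, state, action, reward, next_state, alpha=0.7, gamma=0.95):
    """Apply Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)) in place."""
    td_target = reward + gamma * max(qtable[next_state])
    qtable[state][action] += alpha * (td_target - qtable[state][action])
    return qtable[state][action]


if __name__ == "__main__":
    q = [[0.0, 0.0], [0.0, 0.0]]
    # One transition: state 0, action 1, reward 1.0, landing in state 1.
    print(q_learning_update(q, state=0, action=1, reward=1.0, next_state=1))
```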

|

gguichard/q-Taxi-v3

|

gguichard

| 2022-06-29T09:26:50Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-29T09:26:45Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.48 +/- 2.77

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="gguichard/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

squirro/distilroberta-base-squad_v2

|

squirro

| 2022-06-29T08:53:58Z | 15 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"onnx",

"roberta",

"question-answering",

"generated_from_trainer",

"en",

"dataset:squad_v2",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-07T10:00:04Z |

---

license: apache-2.0

language: en

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: distilroberta-base-squad_v2

results:

- task:

name: Question Answering

type: question-answering

dataset:

type: squad_v2 # Required. Example: common_voice. Use dataset id from https://hf.co/datasets

name: The Stanford Question Answering Dataset

args: en

metrics:

- type: eval_exact

value: 65.2405

- type: eval_f1

value: 68.6265

- type: eval_HasAns_exact

value: 67.5776

- type: eval_HasAns_f1

value: 74.3594

- type: eval_NoAns_exact

value: 62.91

- type: eval_NoAns_f1

value: 62.91

---

# distilroberta-base-squad_v2

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the squad_v2 dataset.

## Model description

This model is fine-tuned on the extractive question answering task -- The Stanford Question Answering Dataset -- [SQuAD2.0](https://rajpurkar.github.io/SQuAD-explorer/).

For convenience, this model is prepared for use with the frameworks `PyTorch`, `TensorFlow` and `ONNX`.

## Intended uses & limitations

This model can handle mismatched question-context pairs. Make sure to specify `handle_impossible_answer=True` when using `QuestionAnsweringPipeline`.

__Example usage:__

```python

>>> from transformers import AutoModelForQuestionAnswering, AutoTokenizer, QuestionAnsweringPipeline

>>> model = AutoModelForQuestionAnswering.from_pretrained("squirro/distilroberta-base-squad_v2")

>>> tokenizer = AutoTokenizer.from_pretrained("squirro/distilroberta-base-squad_v2")

>>> qa_model = QuestionAnsweringPipeline(model, tokenizer)

>>> qa_model(
...     question="What's your name?",
...     context="My name is Clara and I live in Berkeley.",
...     handle_impossible_answer=True  # important!
... )

{'score': 0.9498472809791565, 'start': 11, 'end': 16, 'answer': 'Clara'}

```

## Training and evaluation data

Training and evaluation were done on [SQuAD2.0](https://huggingface.co/datasets/squad_v2).

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- distributed_type: tpu

- num_devices: 8

- total_train_batch_size: 512

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0
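
The total batch sizes listed above follow from the per-device sizes and the number of devices. The sketch below reproduces that arithmetic and estimates the number of optimizer steps; it takes `train_samples = 131823` from this card's results table and assumes the last partial batch of each epoch is kept. The helper names are hypothetical.

```python
import math


def total_batch_size(per_device: int, num_devices: int, grad_accum: int = 1) -> int:
    """Effective batch size across devices and gradient-accumulation steps."""
    return per_device * num_devices * grad_accum


def optimizer_steps(num_samples: int, total_batch: int, num_epochs: int) -> int:
    """Optimizer steps over all epochs, assuming the last partial batch is not dropped."""
    return math.ceil(num_samples / total_batch) * num_epochs


if __name__ == "__main__":
    train_total = total_batch_size(per_device=64, num_devices=8)
    eval_total = total_batch_size(per_device=8, num_devices=8)
    print(train_total, eval_total, optimizer_steps(131823, train_total, 3))
```

The estimated step count agrees with the reported throughput: 0.567 steps per second over a 1365.28-second run is roughly 774 steps.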

### Training results

| Metric | Value |

|:-------------------------|-------------:|

| epoch | 3 |

| eval_HasAns_exact | 67.5776 |

| eval_HasAns_f1 | 74.3594 |

| eval_HasAns_total | 5928 |

| eval_NoAns_exact | 62.91 |

| eval_NoAns_f1 | 62.91 |

| eval_NoAns_total | 5945 |

| eval_best_exact | 65.2489 |

| eval_best_exact_thresh | 0 |

| eval_best_f1 | 68.6349 |

| eval_best_f1_thresh | 0 |

| eval_exact | 65.2405 |

| eval_f1 | 68.6265 |

| eval_samples | 12165 |

| eval_total | 11873 |

| train_loss | 1.40336 |

| train_runtime | 1365.28 |

| train_samples | 131823 |

| train_samples_per_second | 289.662 |

| train_steps_per_second | 0.567 |
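
The overall `eval_exact` and `eval_f1` values are averages of the HasAns and NoAns scores, weighted by the number of questions in each group. The sketch below checks this against the table; `combine_scores` is a hypothetical helper, and agreement holds up to the rounding of the reported values.

```python
def combine_scores(has_ans_score, has_ans_total, no_ans_score, no_ans_total):
    """Average two subgroup scores, weighted by subgroup size."""
    total = has_ans_total + no_ans_total
    return (has_ans_score * has_ans_total + no_ans_score * no_ans_total) / total


if __name__ == "__main__":
    exact = combine_scores(67.5776, 5928, 62.91, 5945)
    f1 = combine_scores(74.3594, 5928, 62.91, 5945)
    print(round(exact, 2), round(f1, 2))
```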

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.6

---

# About Us

<img src="https://squirro.com/wp-content/themes/squirro/img/squirro_logo.svg" alt="Squirro Logo" width="250"/>

Squirro marries data from any source with your intent, and your context to intelligently augment decision-making - right when you need it!

An Insight Engine at its core, Squirro works with global organizations, primarily in financial services, public sector, professional services, and manufacturing, among others. Customers include Bank of England, European Central Bank (ECB), Deutsche Bundesbank, Standard Chartered, Henkel, Armacell, Candriam, and many other world-leading firms.

Founded in 2012, Squirro is currently present in Zürich, London, New York, and Singapore. Further information about AI-driven business insights can be found at http://squirro.com.

|

RuiqianLi/wav2vec2-large-960h-lv60-self-4-gram_fine-tune_real_29_Jun

|

RuiqianLi

| 2022-06-29T08:44:53Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:uob_singlish",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-29T04:45:13Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- uob_singlish

model-index:

- name: wav2vec2-large-960h-lv60-self-4-gram_fine-tune_real_29_Jun

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-960h-lv60-self-4-gram_fine-tune_real_29_Jun

This model is a fine-tuned version of [facebook/wav2vec2-large-960h-lv60-self](https://huggingface.co/facebook/wav2vec2-large-960h-lv60-self) on the uob_singlish dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2895

- Wer: 0.4583

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 2.1283 | 1.82 | 20 | 1.5236 | 0.5764 |

| 1.3015 | 3.64 | 40 | 1.2956 | 0.4931 |

| 0.9918 | 5.45 | 60 | 1.3087 | 0.5347 |

| 0.849 | 7.27 | 80 | 1.2914 | 0.5139 |

| 0.6191 | 9.09 | 100 | 1.2895 | 0.4583 |
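
The Wer column reports word error rate: the word-level edit distance between the reference and the predicted transcript, divided by the number of reference words. The following is a minimal pure-Python sketch of that definition, not the evaluation code used for this model; `word_error_rate` is a hypothetical name and the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```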

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

cwkeam/m-ctc-t-large-lid

|

cwkeam

| 2022-06-29T08:11:14Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mctct",

"speech",

"en",

"dataset:librispeech_asr",

"dataset:common_voice",

"arxiv:2111.00161",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-06-29T08:08:36Z |

---

language: en

datasets:

- librispeech_asr

- common_voice

tags:

- speech

license: apache-2.0

---

# M-CTC-T

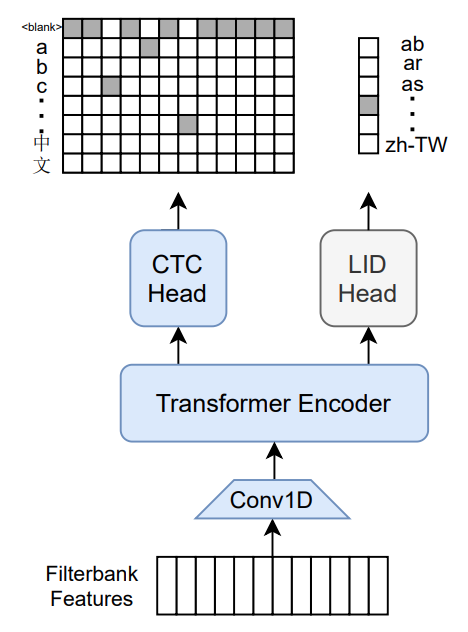

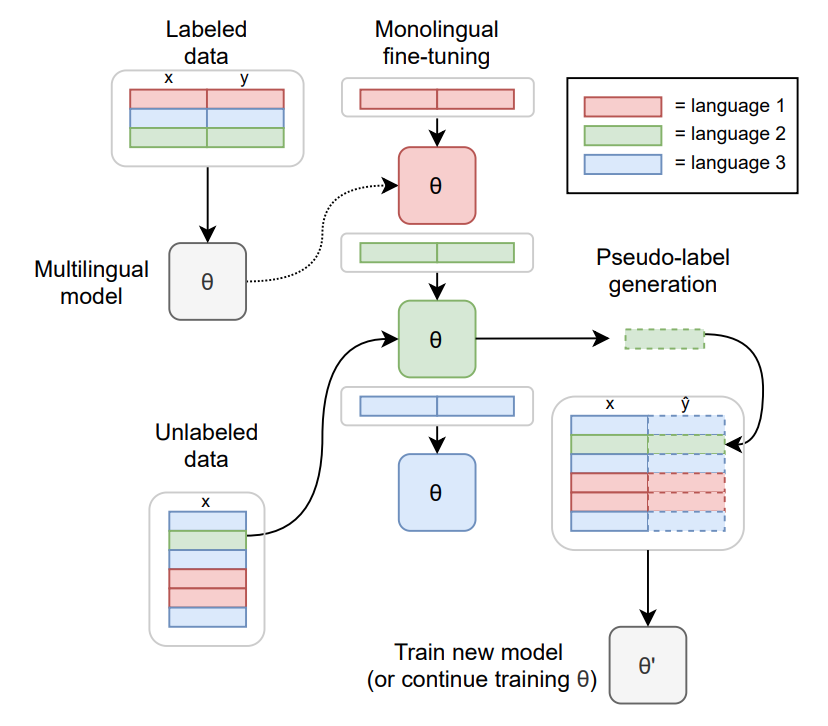

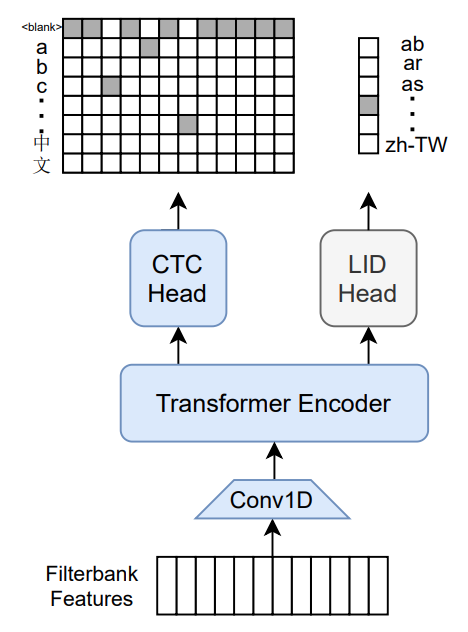

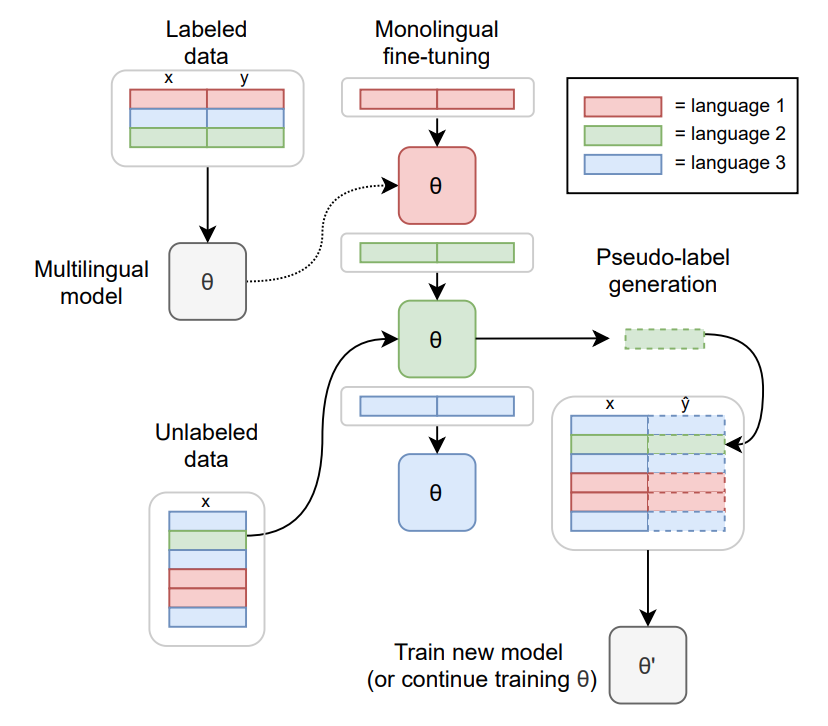

Massively multilingual speech recognizer from Meta AI. The model is a 1B-param transformer encoder, with a CTC head over 8065 character labels and a language identification head over 60 language ID labels. It is trained on Common Voice (version 6.1, December 2020 release) and VoxPopuli. After training on Common Voice and VoxPopuli, the model is trained on Common Voice only. The labels are unnormalized character-level transcripts (punctuation and capitalization are not removed). The model takes as input Mel filterbank features from a 16 kHz audio signal.

The original Flashlight code, model checkpoints, and Colab notebook can be found at https://github.com/flashlight/wav2letter/tree/main/recipes/mling_pl .

## Citation

[Paper](https://arxiv.org/abs/2111.00161)

Authors: Loren Lugosch, Tatiana Likhomanenko, Gabriel Synnaeve, Ronan Collobert

```

@article{lugosch2021pseudo,

title={Pseudo-Labeling for Massively Multilingual Speech Recognition},

author={Lugosch, Loren and Likhomanenko, Tatiana and Synnaeve, Gabriel and Collobert, Ronan},

journal={ICASSP},

year={2022}

}

```

Additional thanks to [Chan Woo Kim](https://huggingface.co/cwkeam) and [Patrick von Platen](https://huggingface.co/patrickvonplaten) for porting the model from Flashlight to PyTorch.

# Training method

For more information on how the model was trained, please take a look at the [official paper](https://arxiv.org/abs/2111.00161).

# Usage

To transcribe audio files the model can be used as a standalone acoustic model as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import MCTCTForCTC, MCTCTProcessor

model = MCTCTForCTC.from_pretrained("speechbrain/mctct-large")

processor = MCTCTProcessor.from_pretrained("speechbrain/mctct-large")

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# tokenize

input_features = processor(ds[0]["audio"]["array"], return_tensors="pt").input_features

# retrieve logits

logits = model(input_features).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

```
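
The argmax-and-decode step above is greedy CTC decoding. CTC decoders typically collapse consecutive repeated ids and then drop the blank symbol before mapping ids to characters. The sketch below shows only that collapsing rule on plain integer ids; `blank_id=0` is an illustrative assumption (the real blank id depends on the tokenizer), and `ctc_greedy_collapse` is a hypothetical name.

```python
def ctc_greedy_collapse(ids, blank_id=0):
    """Collapse consecutive repeats, then remove blanks, as in greedy CTC decoding."""
    out = []
    prev = None
    for i in ids:
        # Keep an id only when it starts a new run and is not the blank symbol.
        if i != prev and i != blank_id:
            out.append(i)
        prev = i
    return out


if __name__ == "__main__":
    # The blank between the two runs of 3 keeps them as separate output symbols.
    print(ctc_greedy_collapse([0, 3, 3, 0, 3, 5, 5, 0]))
```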

Results for Common Voice, averaged over all languages:

*Character error rate (CER)*:

| Valid | Test |

|-------|------|

| 21.4 | 23.3 |

|

prithivida/bert-for-patents-64d

|

prithivida

| 2022-06-29T07:47:23Z | 41 | 8 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"feature-extraction",

"masked-lm",

"en",

"license:apache-2.0",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-03-31T06:40:35Z |

---

language:

- en

tags:

- masked-lm

- pytorch

pipeline-tag: "fill-mask"

mask-token: "[MASK]"

widget:

- text: "The present [MASK] provides a torque sensor that is small and highly rigid and for which high production efficiency is possible."

- text: "The present invention relates to [MASK] accessories and pertains particularly to a brake light unit for bicycles."

- text: "The present invention discloses a space-bound-free [MASK] and its coordinate determining circuit for determining a coordinate of a stylus pen."

- text: "The illuminated [MASK] includes a substantially translucent canopy supported by a plurality of ribs pivotally swingable towards and away from a shaft."

license: apache-2.0

metrics:

- perplexity

---

# Motivation

This model is based on anferico/bert-for-patents, a BERT<sub>LARGE</sub> model (see the next section for details). By default, pre-trained models output embeddings of size 768 (base models) or 1024 (large models). However, when you store millions of embeddings, this can require quite a lot of memory/storage. So I have reduced the embedding dimension to 64, i.e. 1/16th of 1024, using Principal Component Analysis (PCA), and it still gives comparable performance. Yes! PCA gives better performance than NMF. Note: this process improves neither the runtime nor the memory requirement for running the model. It only reduces the space needed to store embeddings, for example for semantic search using vector databases.
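
To give a feel for the storage savings, the sketch below estimates the raw size of an embedding index before and after the reduction. It assumes embeddings are stored as 32-bit floats with no index overhead; the helper name and the figure of one million vectors are illustrative.

```python
def embedding_storage_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for dense embeddings (assumes float32 values, no index overhead)."""
    return num_vectors * dim * bytes_per_value


if __name__ == "__main__":
    n = 1_000_000
    full = embedding_storage_bytes(n, 1024)
    reduced = embedding_storage_bytes(n, 64)
    print(f"1024-d: {full / 1e9:.3f} GB, 64-d: {reduced / 1e9:.3f} GB, ratio: {full // reduced}x")
```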

# BERT for Patents

BERT for Patents is a model trained by Google on 100M+ patents (not just US patents).

If you want to learn more about the model, check out the [blog post](https://cloud.google.com/blog/products/ai-machine-learning/how-ai-improves-patent-analysis), [white paper](https://services.google.com/fh/files/blogs/bert_for_patents_white_paper.pdf) and [GitHub page](https://github.com/google/patents-public-data/blob/master/models/BERT%20for%20Patents.md) containing the original TensorFlow checkpoint.

---

### Projects using this model (or variants of it):

- [Patents4IPPC](https://github.com/ec-jrc/Patents4IPPC) (carried out by [Pi School](https://picampus-school.com/) and commissioned by the [Joint Research Centre (JRC)](https://ec.europa.eu/jrc/en) of the European Commission)

|

coolzhao/xlm-roberta-base-finetuned-panx-de

|

coolzhao

| 2022-06-29T07:14:20Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-29T07:01:12Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.8600306626540231

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1356

- F1: 0.8600

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2525 | 1.0 | 525 | 0.1673 | 0.8294 |

| 0.1298 | 2.0 | 1050 | 0.1381 | 0.8510 |

| 0.0839 | 3.0 | 1575 | 0.1356 | 0.8600 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0

- Datasets 1.16.1

- Tokenizers 0.10.3

|

hellennamulinda/agric-eng-lug

|

hellennamulinda

| 2022-06-29T06:40:17Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"marian",

"text2text-generation",

"autotrain",

"unk",

"dataset:hellennamulinda/autotrain-data-agric-eng-lug",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-23T13:50:37Z |

---

tags: autotrain

language: unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- hellennamulinda/autotrain-data-agric-eng-lug

co2_eq_emissions: 0.04087910671538076

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1026034854

- CO2 Emissions (in grams): 0.04087910671538076

## Validation Metrics

- Loss: 1.0871405601501465

- Rouge1: 55.8225

- Rouge2: 34.1547

- RougeL: 54.4274

- RougeLsum: 54.408

- Gen Len: 23.178

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/hellennamulinda/autotrain-agric-eng-lug-1026034854

```
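
A rough Python equivalent of the cURL call, using only the standard library, might look like the sketch below. It reuses the URL and payload shown above as-is; `build_request` is a hypothetical helper, and actually sending the request requires network access and a valid API key, so that part is left commented out.

```python
import json
import urllib.request

# URL copied from the cURL example in this card.
API_URL = "https://api-inference.huggingface.co/hellennamulinda/autotrain-agric-eng-lug-1026034854"


def build_request(api_key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) the POST request described by the cURL command."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("YOUR_HUGGINGFACE_API_KEY", "I love AutoTrain")
    # Sending the request needs network access and a valid key:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
    print(req.get_method(), req.full_url)
```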

|

iiShreya/q-FrozenLake-v1-4x4-noSlippery

|

iiShreya

| 2022-06-29T05:28:15Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-29T05:28:08Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

RodrigoGuerra/bert-base-spanish-wwm-uncased-finetuned-clinical

|

RodrigoGuerra

| 2022-06-29T05:26:54Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-29T04:04:21Z |

---

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: bert-base-spanish-wwm-uncased-finetuned-clinical

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-spanish-wwm-uncased-finetuned-clinical

This model is a fine-tuned version of [dccuchile/bert-base-spanish-wwm-uncased](https://huggingface.co/dccuchile/bert-base-spanish-wwm-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7962

- F1: 0.1081

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 80

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:------:|:---------------:|:------:|

| 1.1202 | 1.0 | 2007 | 1.0018 | 0.0062 |

| 1.0153 | 2.0 | 4014 | 0.9376 | 0.0166 |

| 0.9779 | 3.0 | 6021 | 0.9026 | 0.0342 |

| 0.9598 | 4.0 | 8028 | 0.8879 | 0.0337 |

| 0.9454 | 5.0 | 10035 | 0.8699 | 0.0598 |

| 0.9334 | 6.0 | 12042 | 0.8546 | 0.0682 |

| 0.9263 | 7.0 | 14049 | 0.8533 | 0.0551 |

| 0.9279 | 8.0 | 16056 | 0.8538 | 0.0715 |

| 0.9184 | 9.0 | 18063 | 0.8512 | 0.0652 |

| 0.9151 | 10.0 | 20070 | 0.8313 | 0.0789 |

| 0.9092 | 11.0 | 22077 | 0.8299 | 0.0838 |

| 0.9083 | 12.0 | 24084 | 0.8331 | 0.0718 |

| 0.9057 | 13.0 | 26091 | 0.8319 | 0.0719 |

| 0.9018 | 14.0 | 28098 | 0.8133 | 0.0969 |

| 0.9068 | 15.0 | 30105 | 0.8234 | 0.0816 |

| 0.9034 | 16.0 | 32112 | 0.8151 | 0.0899 |

| 0.9008 | 17.0 | 34119 | 0.8145 | 0.0967 |

| 0.8977 | 18.0 | 36126 | 0.8168 | 0.0891 |

| 0.898 | 19.0 | 38133 | 0.8167 | 0.0818 |

| 0.8956 | 20.0 | 40140 | 0.8076 | 0.1030 |

| 0.8983 | 21.0 | 42147 | 0.8129 | 0.0867 |

| 0.896 | 22.0 | 44154 | 0.8118 | 0.0892 |

| 0.8962 | 23.0 | 46161 | 0.8066 | 0.1017 |

| 0.8917 | 24.0 | 48168 | 0.8154 | 0.0908 |

| 0.8923 | 25.0 | 50175 | 0.8154 | 0.0897 |

| 0.8976 | 26.0 | 52182 | 0.8089 | 0.0910 |

| 0.8926 | 27.0 | 54189 | 0.8069 | 0.0947 |

| 0.8911 | 28.0 | 56196 | 0.8170 | 0.0882 |

| 0.8901 | 29.0 | 58203 | 0.7991 | 0.1112 |

| 0.8934 | 30.0 | 60210 | 0.7996 | 0.1112 |

| 0.8903 | 31.0 | 62217 | 0.8049 | 0.0950 |

| 0.8924 | 32.0 | 64224 | 0.8116 | 0.0951 |

| 0.8887 | 33.0 | 66231 | 0.7982 | 0.1075 |

| 0.8922 | 34.0 | 68238 | 0.8013 | 0.1025 |

| 0.8871 | 35.0 | 70245 | 0.8064 | 0.0979 |

| 0.8913 | 36.0 | 72252 | 0.8108 | 0.0909 |

| 0.8924 | 37.0 | 74259 | 0.8081 | 0.0889 |

| 0.8848 | 38.0 | 76266 | 0.7923 | 0.1228 |

| 0.8892 | 39.0 | 78273 | 0.8025 | 0.0959 |

| 0.8886 | 40.0 | 80280 | 0.7954 | 0.1148 |

| 0.8938 | 41.0 | 82287 | 0.8017 | 0.1058 |

| 0.8897 | 42.0 | 84294 | 0.7946 | 0.1146 |

| 0.8906 | 43.0 | 86301 | 0.7983 | 0.1102 |

| 0.889 | 44.0 | 88308 | 0.8068 | 0.0950 |

| 0.8872 | 45.0 | 90315 | 0.7999 | 0.1089 |

| 0.8902 | 46.0 | 92322 | 0.7992 | 0.0999 |

| 0.8912 | 47.0 | 94329 | 0.7981 | 0.1048 |

| 0.886 | 48.0 | 96336 | 0.8024 | 0.0991 |

| 0.8848 | 49.0 | 98343 | 0.8026 | 0.0984 |

| 0.8866 | 50.0 | 100350 | 0.7965 | 0.1135 |

| 0.8848 | 51.0 | 102357 | 0.8054 | 0.0926 |

| 0.8863 | 52.0 | 104364 | 0.8068 | 0.0917 |

| 0.8866 | 53.0 | 106371 | 0.7993 | 0.0964 |

| 0.8823 | 54.0 | 108378 | 0.7929 | 0.1126 |

| 0.8911 | 55.0 | 110385 | 0.7938 | 0.1132 |

| 0.8911 | 56.0 | 112392 | 0.7932 | 0.1144 |

| 0.8866 | 57.0 | 114399 | 0.8018 | 0.0957 |

| 0.8841 | 58.0 | 116406 | 0.7976 | 0.1015 |

| 0.8874 | 59.0 | 118413 | 0.8035 | 0.0966 |

| 0.887 | 60.0 | 120420 | 0.7954 | 0.1112 |

| 0.888 | 61.0 | 122427 | 0.7927 | 0.1164 |

| 0.8845 | 62.0 | 124434 | 0.7982 | 0.1012 |

| 0.8848 | 63.0 | 126441 | 0.7978 | 0.1034 |

| 0.8857 | 64.0 | 128448 | 0.8036 | 0.0969 |

| 0.8827 | 65.0 | 130455 | 0.7958 | 0.1036 |

| 0.8878 | 66.0 | 132462 | 0.7983 | 0.1030 |

| 0.885 | 67.0 | 134469 | 0.7956 | 0.1055 |

| 0.8859 | 68.0 | 136476 | 0.7964 | 0.1058 |

| 0.8872 | 69.0 | 138483 | 0.7989 | 0.1005 |

| 0.8841 | 70.0 | 140490 | 0.7949 | 0.1138 |

| 0.8846 | 71.0 | 142497 | 0.7960 | 0.1062 |

| 0.8867 | 72.0 | 144504 | 0.7965 | 0.1058 |

| 0.8856 | 73.0 | 146511 | 0.7980 | 0.1007 |

| 0.8852 | 74.0 | 148518 | 0.7971 | 0.1012 |

| 0.8841 | 75.0 | 150525 | 0.7975 | 0.1049 |

| 0.8865 | 76.0 | 152532 | 0.7981 | 0.1010 |

| 0.8887 | 77.0 | 154539 | 0.7945 | 0.1095 |

| 0.8853 | 78.0 | 156546 | 0.7965 | 0.1053 |

| 0.8843 | 79.0 | 158553 | 0.7966 | 0.1062 |

| 0.8858 | 80.0 | 160560 | 0.7962 | 0.1081 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.9.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

domenicrosati/deberta-mlm-test

|

domenicrosati

| 2022-06-29T05:17:09Z | 9 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"deberta-v2",

"fill-mask",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-06-28T23:53:45Z |

---
license: mit
tags:
- fill-mask
- generated_from_trainer
metrics:
- accuracy
model-index:
- name: deberta-mlm-test
  results: []
---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-mlm-test

This model is a fine-tuned version of [microsoft/deberta-v3-xsmall](https://huggingface.co/microsoft/deberta-v3-xsmall) on an unspecified dataset (the training run did not record a dataset name).

It achieves the following results on the evaluation set:

- Loss: 3.2792

- Accuracy: 0.4766

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP
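As a rough illustration of how the `linear` scheduler interacts with these values, the sketch below decays the learning rate from 5e-05 to zero over the 10,335 optimizer steps reported in the training results (5 epochs of 2,067 steps). It assumes zero warmup steps, which the card does not state, and `linear_lr` is a hypothetical helper written for this example, not a Transformers function.

```python
BASE_LR = 5e-05        # learning_rate from the hyperparameter list
STEPS_PER_EPOCH = 2067 # step count after epoch 1 in the training results
NUM_EPOCHS = 5         # num_epochs from the hyperparameter list
TOTAL_STEPS = STEPS_PER_EPOCH * NUM_EPOCHS  # 10335, the final reported step
WARMUP_STEPS = 0       # assumption: the card lists no warmup


def linear_lr(step: int) -> float:
    """Learning rate after `step` updates under linear warmup then linear decay."""
    if step < WARMUP_STEPS:
        return BASE_LR * step / max(1, WARMUP_STEPS)
    remaining = max(0, TOTAL_STEPS - step)
    return BASE_LR * remaining / max(1, TOTAL_STEPS - WARMUP_STEPS)


if __name__ == "__main__":
    # Print the learning rate at the start of training and at each epoch boundary.
    for epoch in range(NUM_EPOCHS + 1):
        step = epoch * STEPS_PER_EPOCH
        print(f"epoch {epoch}: step {step:>5}, lr = {linear_lr(step):.2e}")
```

Under these assumptions the rate drops by one fifth of its starting value per epoch and reaches zero at the last step.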

### Training results

| Training Loss | Epoch | Step  | Validation Loss | Accuracy |
|:-------------:|:-----:|:-----:|:---------------:|:--------:|
| 4.4466        | 1.0   | 2067  | 4.1217          | 0.3847   |
| 3.9191        | 2.0   | 4134  | 3.6562          | 0.4298   |
| 3.6397        | 3.0   | 6201  | 3.4417          | 0.4550   |
| 3.522         | 4.0   | 8268  | 3.3239          | 0.4692   |
| 3.4504        | 5.0   | 10335 | 3.2792          | 0.4766   |
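A language-modeling loss is often easier to interpret as a perplexity. The sketch below applies that conversion to the validation losses in the table. Treating the reported loss as a mean per-token cross-entropy in nats is an assumption, since the card does not say how the value was computed, and `perplexity` is a helper defined only for this example.

```python
import math

# (epoch, validation loss) pairs copied from the training results table
VALIDATION_LOSS = [
    (1, 4.1217),
    (2, 3.6562),
    (3, 3.4417),
    (4, 3.3239),
    (5, 3.2792),
]


def perplexity(loss: float) -> float:
    """Exponentiate a mean cross-entropy (assumed to be in nats)."""
    return math.exp(loss)


if __name__ == "__main__":
    for epoch, loss in VALIDATION_LOSS:
        print(f"epoch {epoch}: loss = {loss:.4f}, perplexity = {perplexity(loss):.2f}")
```

Because the exponential is monotonic, the perplexity falls every epoch, just as the validation loss does.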

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0a0+17540c5

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Abhinandan/Atari

|

Abhinandan

| 2022-06-29T04:59:38Z | 5 | 1 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-29T04:38:24Z |

---
library_name: stable-baselines3
tags:
- SpaceInvadersNoFrameskip-v4
- deep-reinforcement-learning
- reinforcement-learning
- stable-baselines3
model-index:
- name: DQN
  results:
  - metrics:
    - type: mean_reward
      value: 14.50 +/- 12.34
      name: mean_reward
    task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: SpaceInvadersNoFrameskip-v4
      type: SpaceInvadersNoFrameskip-v4
---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3) and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3 reinforcement learning agents, with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```
# Download model and save it into the logs/ folder
python -m utils.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga Abhinandan -f logs/
# Run the downloaded agent in the environment
python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/
```

## Training (with the RL Zoo)

```
# Train the agent and write the results into the logs/ folder
python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/
# Upload the model and generate video (when possible)
python -m utils.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga Abhinandan
```

## Hyperparameters

```python
OrderedDict([('batch_size', 32),
             ('buffer_size', 100000),
             ('env_wrapper',
              ['stable_baselines3.common.atari_wrappers.AtariWrapper']),
             ('exploration_final_eps', 0.01),
             ('exploration_fraction', 0.1),
             ('frame_stack', 4),
             ('gradient_steps', 1),
             ('learning_rate', 0.0001),
             ('learning_starts', 100000),
             ('n_timesteps', 100000.0),
             ('optimize_memory_usage', True),
             ('policy', 'CnnPolicy'),
             ('target_update_interval', 1000),
             ('train_freq', 4),
             ('normalize', False)])
```
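
To make the exploration settings concrete, here is a minimal sketch of a linear epsilon schedule built from the values listed above. It assumes exploration starts at an epsilon of 1.0 (the card lists no initial value) and that `exploration_fraction` is measured against `n_timesteps`. `epsilon_at` is a hypothetical helper written for this example, not a stable-baselines3 function.

```python
N_TIMESTEPS = 100_000            # n_timesteps from the hyperparameters
EXPLORATION_FRACTION = 0.1       # exploration_fraction from the hyperparameters
EXPLORATION_FINAL_EPS = 0.01     # exploration_final_eps from the hyperparameters
EXPLORATION_INITIAL_EPS = 1.0    # assumption: not listed in the card


def epsilon_at(step: int) -> float:
    """Probability of taking a random action at environment step `step`."""
    progress = min(max(step / N_TIMESTEPS, 0.0), 1.0)
    if progress >= EXPLORATION_FRACTION:
        # Decay is finished: stay at the final value for the rest of training.
        return EXPLORATION_FINAL_EPS
    slope = (EXPLORATION_FINAL_EPS - EXPLORATION_INITIAL_EPS) / EXPLORATION_FRACTION
    return EXPLORATION_INITIAL_EPS + progress * slope


if __name__ == "__main__":
    for step in (0, 2_500, 5_000, 7_500, 10_000, 50_000, 100_000):
        print(f"step {step:>7}: epsilon = {epsilon_at(step):.4f}")
```

Under these assumptions epsilon reaches its final value of 0.01 after the first 10,000 of the 100,000 timesteps and stays there.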

|

Shikenrua/distilbert-base-uncased-finetuned-emotion