modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-11 18:29:29

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-11 18:25:24

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

mhyatt000/YOLOv5

|

mhyatt000

| 2022-09-01T15:25:36Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"seals/CartPole-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"object-detection",

"dataset:coco",

"license:gpl-2.0",

"model-index",

"region:us"

] |

object-detection

| 2022-06-20T16:37:08Z |

---

license: gpl-2.0

datasets:

- coco

library_name: stable-baselines3

tags:

- seals/CartPole-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

- object-detection

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: True

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: seals/CartPole-v0

type: seals/CartPole-v0

---

# YOLOv5

Ultralytics YOLOv5 model in Pytorch.

Proof of concept for (TypoSquatting, Niche Squatting) security flaw on Hugging Face.

## Model Description

## How to use

```python

from transformers import YolosFeatureExtractor, YolosForObjectDetection

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = YolosFeatureExtractor.from_pretrained('mhyatt000/yolov5')

model = YolosForObjectDetection.from_pretrained('mhyatt000/yolov5')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

# model predicts bounding boxes and corresponding COCO classes

logits = outputs.logits

bboxes = outputs.pred_boxes

```

## Training Data

### Training

## Evaluation

Model was evaluated on [COCO2017](https://cocodataset.org/#home) dataset.

| Model | size (pixels) | mAPval | Speed | params | FLOPS |

|---------------|-------------------|-----------|-----------|-----------|-----------|

| YOLOv5s6 | 1280 | 43.3 | 4.3 | 12.7 | 17.4 |

| YOLOv5m6 | 1280 | 50.5 | 8.4 | 35.9 | 52.4 |

| YOLOv5l6 | 1280 | 53.4 | 12.3 | 77.2 | 117.7 |

| YOLOv5x6 | 1280 | 54.4 | 22.4 | 141.8 | 222.9 |

### Bibtex and citation info

```bibtex

@software{glenn_jocher_2022_6222936,

author = {Glenn Jocher and

Ayush Chaurasia and

Alex Stoken and

Jirka Borovec and

NanoCode012 and

Yonghye Kwon and

TaoXie and

Jiacong Fang and

imyhxy and

Kalen Michael and

Lorna and

Abhiram V and

Diego Montes and

Jebastin Nadar and

Laughing and

tkianai and

yxNONG and

Piotr Skalski and

Zhiqiang Wang and

Adam Hogan and

Cristi Fati and

Lorenzo Mammana and

AlexWang1900 and

Deep Patel and

Ding Yiwei and

Felix You and

Jan Hajek and

Laurentiu Diaconu and

Mai Thanh Minh},

title = {{ultralytics/yolov5: v6.1 - TensorRT, TensorFlow

Edge TPU and OpenVINO Export and Inference}},

month = feb,

year = 2022,

publisher = {Zenodo},

version = {v6.1},

doi = {10.5281/zenodo.6222936},

url = {https://doi.org/10.5281/zenodo.6222936}

}

```

|

BigSalmon/Backwards

|

BigSalmon

| 2022-09-01T15:13:56Z | 159 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-08-31T18:40:44Z |

Used the same dataset as the one I trained https://huggingface.co/BigSalmon/InformalToFormalLincoln73Paraphrase, but all the words are in the opposite order.

* Note: I think I probably train it for more epochs. The loss was very high. That said, keep an eye on my profile, if this is something you are interested in.

|

GItaf/gpt2-finetuned-mbti-0901

|

GItaf

| 2022-09-01T15:10:56Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-01T13:20:36Z |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-finetuned-mbti-0901

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-finetuned-mbti-0901

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.9470

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 4.1073 | 1.0 | 9906 | 4.0111 |

| 4.0302 | 2.0 | 19812 | 3.9761 |

| 3.9757 | 3.0 | 29718 | 3.9578 |

| 3.9471 | 4.0 | 39624 | 3.9495 |

| 3.9187 | 5.0 | 49530 | 3.9470 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

|

butchland/Reinforce-Cartpole-v1

|

butchland

| 2022-09-01T14:40:46Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-01T14:23:01Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-Cartpole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 95.80 +/- 22.48

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

pmpc/twitter-roberta-base-stance-abortionV3

|

pmpc

| 2022-09-01T13:45:07Z | 102 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-01T13:34:41Z |

---

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: twitter-roberta-base-stance-abortionV3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# twitter-roberta-base-stance-abortionV3

This model is a fine-tuned version of [cardiffnlp/twitter-roberta-base-stance-abortion](https://huggingface.co/cardiffnlp/twitter-roberta-base-stance-abortion) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5095

- F1: 0.7917

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.8492 | 1.0 | 12 | 0.4862 | 0.7917 |

| 0.7291 | 2.0 | 24 | 0.4264 | 0.7917 |

| 0.5465 | 3.0 | 36 | 0.6450 | 0.7917 |

| 0.5905 | 4.0 | 48 | 0.5857 | 0.7917 |

| 0.4556 | 5.0 | 60 | 0.5095 | 0.7917 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

DrishtiSharma/finetuned-ViT-human-action-recognition-v1

|

DrishtiSharma

| 2022-09-01T13:09:05Z | 210 | 7 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-09-01T12:22:59Z |

---

license: apache-2.0

tags:

- image-classification

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: finetuned-ViT-human-action-recognition-v1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned-ViT-human-action-recognition-v1

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the Human_Action_Recognition dataset.

It achieves the following results on the evaluation set:

- Loss: 3.1427

- Accuracy: 0.0791

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.4986 | 0.13 | 100 | 3.1427 | 0.0791 |

| 1.1929 | 0.25 | 200 | 3.4083 | 0.0726 |

| 1.2673 | 0.38 | 300 | 3.4615 | 0.0769 |

| 0.9805 | 0.51 | 400 | 3.9192 | 0.0824 |

| 1.158 | 0.63 | 500 | 4.2648 | 0.0698 |

| 1.2544 | 0.76 | 600 | 4.5536 | 0.0574 |

| 1.0073 | 0.89 | 700 | 4.0310 | 0.0819 |

| 0.9315 | 1.02 | 800 | 4.5154 | 0.0702 |

| 0.9063 | 1.14 | 900 | 4.7162 | 0.0633 |

| 0.6756 | 1.27 | 1000 | 4.6482 | 0.0626 |

| 1.0239 | 1.4 | 1100 | 4.6437 | 0.0635 |

| 0.7634 | 1.52 | 1200 | 4.5625 | 0.0752 |

| 0.8365 | 1.65 | 1300 | 4.9912 | 0.0561 |

| 0.8979 | 1.78 | 1400 | 5.1739 | 0.0356 |

| 0.9448 | 1.9 | 1500 | 4.8946 | 0.0541 |

| 0.697 | 2.03 | 1600 | 4.9516 | 0.0741 |

| 0.7861 | 2.16 | 1700 | 5.0090 | 0.0776 |

| 0.6404 | 2.28 | 1800 | 5.3905 | 0.0643 |

| 0.7939 | 2.41 | 1900 | 4.9159 | 0.1015 |

| 0.6331 | 2.54 | 2000 | 5.3083 | 0.0589 |

| 0.6082 | 2.66 | 2100 | 4.8538 | 0.0857 |

| 0.6229 | 2.79 | 2200 | 5.3086 | 0.0689 |

| 0.6964 | 2.92 | 2300 | 5.3745 | 0.0713 |

| 0.5246 | 3.05 | 2400 | 5.0369 | 0.0796 |

| 0.6097 | 3.17 | 2500 | 5.2935 | 0.0743 |

| 0.5778 | 3.3 | 2600 | 5.5431 | 0.0709 |

| 0.4196 | 3.43 | 2700 | 5.5508 | 0.0759 |

| 0.5495 | 3.55 | 2800 | 5.5728 | 0.0813 |

| 0.5932 | 3.68 | 2900 | 5.7992 | 0.0663 |

| 0.4382 | 3.81 | 3000 | 5.8010 | 0.0643 |

| 0.4827 | 3.93 | 3100 | 5.7529 | 0.0680 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

KISSz/wav2vac2-vee-train001-ASR

|

KISSz

| 2022-09-01T12:51:55Z | 111 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:cc-by-sa-4.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-09-01T09:50:51Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

model_index:

name: wav2vac2-vee-train001-ASR

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vac2-vee-train001-ASR

This model is a fine-tuned version of [airesearch/wav2vec2-large-xlsr-53-th](https://huggingface.co/airesearch/wav2vec2-large-xlsr-53-th) on an unkown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.9.1

- Pytorch 1.9.0+cpu

- Datasets 1.11.0

- Tokenizers 0.10.3

|

GItaf/roberta-base-finetuned-mbti-0901

|

GItaf

| 2022-09-01T12:24:17Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-01T08:01:47Z |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: roberta-base-finetuned-mbti-0901

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-mbti-0901

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 4.0780

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 4.3179 | 1.0 | 9920 | 4.1970 |

| 4.186 | 2.0 | 19840 | 4.1264 |

| 4.1057 | 3.0 | 29760 | 4.0955 |

| 4.0629 | 4.0 | 39680 | 4.0826 |

| 4.0333 | 5.0 | 49600 | 4.0780 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Astrofolia/distilbert-base-uncased-finetuned-emotion

|

Astrofolia

| 2022-09-01T11:20:22Z | 101 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-01T11:10:08Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9285

- name: F1

type: f1

value: 0.9284597945931914

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2175

- Accuracy: 0.9285

- F1: 0.9285

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8486 | 1.0 | 250 | 0.3234 | 0.896 | 0.8913 |

| 0.257 | 2.0 | 500 | 0.2175 | 0.9285 | 0.9285 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

PhucLe/LRO_v1.0.2a

|

PhucLe

| 2022-09-01T09:56:58Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-classification",

"autotrain",

"en",

"dataset:PhucLe/autotrain-data-LRO_v1.0.2",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-01T09:55:28Z |

---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- PhucLe/autotrain-data-LRO_v1.0.2

co2_eq_emissions:

emissions: 1.2585708613878817

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1345851607

- CO2 Emissions (in grams): 1.2586

## Validation Metrics

- Loss: 0.523

- Accuracy: 0.818

- Macro F1: 0.817

- Micro F1: 0.818

- Weighted F1: 0.817

- Macro Precision: 0.824

- Micro Precision: 0.818

- Weighted Precision: 0.824

- Macro Recall: 0.818

- Micro Recall: 0.818

- Weighted Recall: 0.818

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/PhucLe/autotrain-LRO_v1.0.2-1345851607

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("PhucLe/autotrain-LRO_v1.0.2-1345851607", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("PhucLe/autotrain-LRO_v1.0.2-1345851607", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

huggingtweets/buckeshot-onlinepete

|

huggingtweets

| 2022-09-01T09:35:19Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-04-18T07:03:11Z |

---

language: en

thumbnail: http://www.huggingtweets.com/buckeshot-onlinepete/1662024914888/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1545140847259406337/bTk2lL6O_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/456958582731603969/QZKpv6eI_400x400.jpeg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">BUCKSHOT & im pete online</div>

<div style="text-align: center; font-size: 14px;">@buckeshot-onlinepete</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from BUCKSHOT & im pete online.

| Data | BUCKSHOT | im pete online |

| --- | --- | --- |

| Tweets downloaded | 311 | 3190 |

| Retweets | 77 | 94 |

| Short tweets | 46 | 1003 |

| Tweets kept | 188 | 2093 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1wyw1egj/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @buckeshot-onlinepete's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1bnj1d4d) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1bnj1d4d/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/buckeshot-onlinepete')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Conrad747/lg-en-v3

|

Conrad747

| 2022-09-01T09:11:44Z | 116 | 0 |

transformers

|

[

"transformers",

"pytorch",

"marian",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-01T06:10:32Z |

---

tags:

- generated_from_trainer

metrics:

- bleu

model-index:

- name: lg-en-v3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# lg-en-v3

This model is a fine-tuned version of [AI-Lab-Makerere/lg_en](https://huggingface.co/AI-Lab-Makerere/lg_en) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9289

- Bleu: 32.5138

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.4271483249908667e-05

- train_batch_size: 14

- eval_batch_size: 6

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| No log | 1.0 | 26 | 1.0323 | 32.6278 |

| No log | 2.0 | 52 | 0.9289 | 32.5138 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

NimaBoscarino/IS-Net_DIS-general-use

|

NimaBoscarino

| 2022-09-01T06:41:58Z | 0 | 15 | null |

[

"background-removal",

"computer-vision",

"image-segmentation",

"arxiv:2203.03041",

"license:apache-2.0",

"region:us"

] |

image-segmentation

| 2022-09-01T05:33:22Z |

---

tags:

- background-removal

- computer-vision

- image-segmentation

license: apache-2.0

library: pytorch

inference: false

---

# IS-Net_DIS-general-use

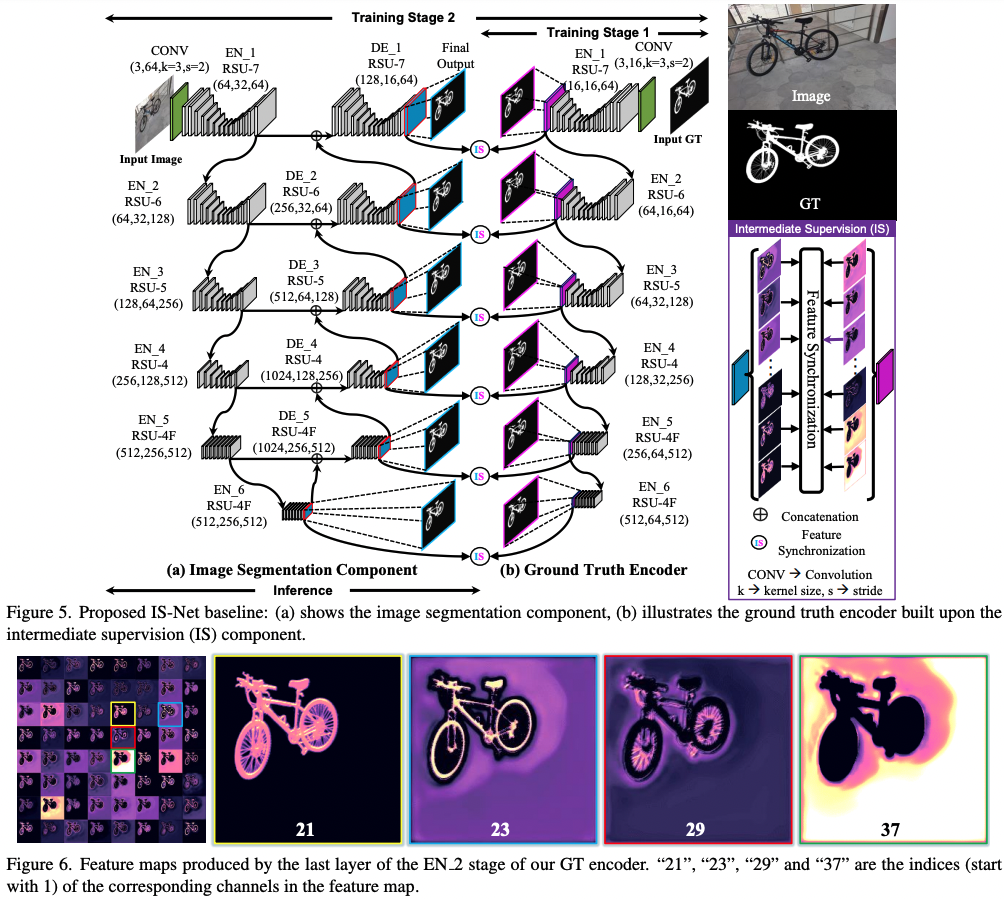

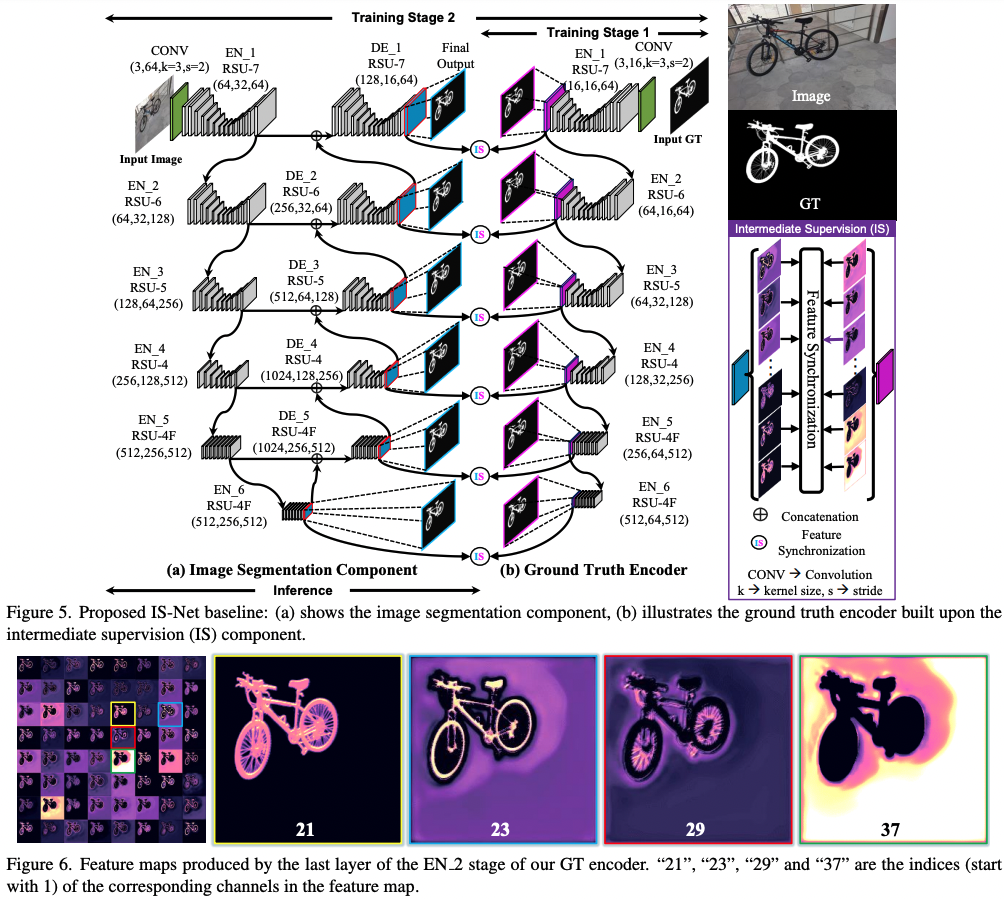

* Model Authors: Xuebin Qin, Hang Dai, Xiaobin Hu, Deng-Ping Fan*, Ling Shao, Luc Van Gool

* Paper: Highly Accurate Dichotomous Image Segmentation (ECCV 2022 - https://arxiv.org/pdf/2203.03041.pdf

* Code Repo: https://github.com/xuebinqin/DIS

* Project Homepage: https://xuebinqin.github.io/dis/index.html

Note that this is an _optimized_ version of the IS-NET model.

From the paper abstract:

> [...] we introduce a simple intermediate supervision baseline (IS- Net) using both feature-level and mask-level guidance for DIS model training. Without tricks, IS-Net outperforms var- ious cutting-edge baselines on the proposed DIS5K, mak- ing it a general self-learned supervision network that can help facilitate future research in DIS.

# Citation

```

@InProceedings{qin2022,

author={Xuebin Qin and Hang Dai and Xiaobin Hu and Deng-Ping Fan and Ling Shao and Luc Van Gool},

title={Highly Accurate Dichotomous Image Segmentation},

booktitle={ECCV},

year={2022}

}

```

|

NimaBoscarino/IS-Net_DIS

|

NimaBoscarino

| 2022-09-01T06:41:46Z | 0 | 4 | null |

[

"background-removal",

"computer-vision",

"image-segmentation",

"arxiv:2203.03041",

"license:apache-2.0",

"region:us"

] |

image-segmentation

| 2022-09-01T05:05:18Z |

---

tags:

- background-removal

- computer-vision

- image-segmentation

license: apache-2.0

library: pytorch

inference: false

---

# IS-Net_DIS

* Model Authors: Xuebin Qin, Hang Dai, Xiaobin Hu, Deng-Ping Fan*, Ling Shao, Luc Van Gool

* Paper: Highly Accurate Dichotomous Image Segmentation (ECCV 2022 - https://arxiv.org/pdf/2203.03041.pdf

* Code Repo: https://github.com/xuebinqin/DIS

* Project Homepage: https://xuebinqin.github.io/dis/index.html

From the paper abstract:

> [...] we introduce a simple intermediate supervision baseline (IS- Net) using both feature-level and mask-level guidance for DIS model training. Without tricks, IS-Net outperforms var- ious cutting-edge baselines on the proposed DIS5K, mak- ing it a general self-learned supervision network that can help facilitate future research in DIS.

[HCE score](https://github.com/xuebinqin/DIS#4-human-correction-efforts-hce): 1016

# Citation

```

@InProceedings{qin2022,

author={Xuebin Qin and Hang Dai and Xiaobin Hu and Deng-Ping Fan and Ling Shao and Luc Van Gool},

title={Highly Accurate Dichotomous Image Segmentation},

booktitle={ECCV},

year={2022}

}

```

|

Wakeme/ddpm-butterflies-128

|

Wakeme

| 2022-09-01T05:47:18Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-01T04:34:11Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/Wakeme/ddpm-butterflies-128/tensorboard?#scalars)

|

dvalbuena1/a2c-AntBulletEnv-v0

|

dvalbuena1

| 2022-09-01T04:21:12Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-01T04:19:59Z |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- metrics:

- type: mean_reward

value: 836.44 +/- 139.46

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of a **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

SharpAI/mal-net-traffic-t5-l12

|

SharpAI

| 2022-09-01T01:17:03Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"t5",

"text2text-generation",

"generated_from_keras_callback",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-01T01:16:05Z |

---

tags:

- generated_from_keras_callback

model-index:

- name: mal-net-traffic-t5-l12

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-net-traffic-t5-l12

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

JAlexis/modelF_01

|

JAlexis

| 2022-08-31T22:59:44Z | 99 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"question-answering",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-08-31T22:46:53Z |

---

widget:

- text: "How can I protect myself against covid-19?"

context: "Preventative measures consist of recommendations to wear a mask in public, maintain social distancing of at least six feet, wash hands regularly, and use hand sanitizer. To facilitate this aim, we adapt the conceptual model and measures of Liao et al. "

- text: "What are the risk factors for covid-19?"

context: "To identify risk factors for hospital deaths from COVID-19, the OpenSAFELY platform examined electronic health records from 17.4 million UK adults. The authors used multivariable Cox proportional hazards model to identify the association of risk of death with older age, lower socio-economic status, being male, non-white ethnic background and certain clinical conditions (diabetes, obesity, cancer, respiratory diseases, heart, kidney, liver, neurological and autoimmune conditions). Notably, asthma was identified as a risk factor, despite prior suggestion of a potential protective role. Interestingly, higher risks due to ethnicity or lower socio-economic status could not be completely attributed to pre-existing health conditions."

---

|

nawage/dragons-test

|

nawage

| 2022-08-31T22:35:54Z | 4 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:imagefolder",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-08-31T21:44:48Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: imagefolder

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# dragons-test

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `imagefolder` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 4

- eval_batch_size: 4

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/nawage/dragons-test/tensorboard?#scalars)

|

marsyanzeyu/bert-finetuned-ner-test-2

|

marsyanzeyu

| 2022-08-31T21:53:00Z | 59 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"token-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-31T21:45:00Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: marsyanzeyu/bert-finetuned-ner-test-2

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# marsyanzeyu/bert-finetuned-ner-test-2

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0266

- Validation Loss: 0.0542

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 2634, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.1742 | 0.0652 | 0 |

| 0.0467 | 0.0561 | 1 |

| 0.0266 | 0.0542 | 2 |

### Framework versions

- Transformers 4.21.2

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

theojolliffe/T5-model-1-feedback-e1

|

theojolliffe

| 2022-08-31T21:00:29Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-08-31T20:36:55Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: T5-model-1-feedback-e1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# T5-model-1-feedback-e1

This model is a fine-tuned version of [theojolliffe/T5-model-1-feedback](https://huggingface.co/theojolliffe/T5-model-1-feedback) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:------:|:---------:|:-------:|

| No log | 1.0 | 119 | 0.4255 | 86.0527 | 81.4537 | 85.654 | 85.9336 | 14.1852 |

### Framework versions

- Transformers 4.12.3

- Pytorch 1.9.0

- Datasets 1.18.0

- Tokenizers 0.10.3

|

kiheh85202/yolo

|

kiheh85202

| 2022-08-31T20:28:31Z | 162 | 1 |

transformers

|

[

"transformers",

"pytorch",

"dpt",

"vision",

"image-segmentation",

"dataset:scene_parse_150",

"arxiv:2103.13413",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-segmentation

| 2022-08-18T20:27:18Z |

---

license: apache-2.0

tags:

- vision

- image-segmentation

datasets:

- scene_parse_150

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# DPT (large-sized model) fine-tuned on ADE20k

Dense Prediction Transformer (DPT) model trained on ADE20k for semantic segmentation. It was introduced in the paper [Vision Transformers for Dense Prediction](https://arxiv.org/abs/2103.13413) by Ranftl et al. and first released in [this repository](https://github.com/isl-org/DPT).

Disclaimer: The team releasing DPT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

DPT uses the Vision Transformer (ViT) as backbone and adds a neck + head on top for semantic segmentation.

## Intended uses & limitations

You can use the raw model for semantic segmentation. See the [model hub](https://huggingface.co/models?search=dpt) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model:

```python

from transformers import DPTFeatureExtractor, DPTForSemanticSegmentation

from PIL import Image

import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = DPTFeatureExtractor.from_pretrained("Intel/dpt-large-ade")

model = DPTForSemanticSegmentation.from_pretrained("Intel/dpt-large-ade")

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

```

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/dpt).

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2103-13413,

author = {Ren{\'{e}} Ranftl and

Alexey Bochkovskiy and

Vladlen Koltun},

title = {Vision Transformers for Dense Prediction},

journal = {CoRR},

volume = {abs/2103.13413},

year = {2021},

url = {https://arxiv.org/abs/2103.13413},

eprinttype = {arXiv},

eprint = {2103.13413},

timestamp = {Wed, 07 Apr 2021 15:31:46 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2103-13413.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

muhtasham/tajberto-ner

|

muhtasham

| 2022-08-31T20:15:40Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"token-classification",

"generated_from_trainer",

"dataset:wikiann",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-27T15:15:25Z |

---

widget:

- text: " Исмоили Сомонӣ - намояндаи бузурги форсу-тоҷик"

- text: "Ин фурудгоҳ дар кишвари Индонезия қарор дорад."

- text: " Бобоҷон Ғафуров – солҳои 1946-1956"

- text: " Лоиқ Шералӣ дар васфи Модар шеър"

tags:

- generated_from_trainer

datasets:

- wikiann

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: tajberto-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikiann

type: wikiann

config: tg

split: train+test

args: tg

metrics:

- name: Precision

type: precision

value: 0.576

- name: Recall

type: recall

value: 0.6923076923076923

- name: F1

type: f1

value: 0.62882096069869

- name: Accuracy

type: accuracy

value: 0.8934049079754601

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tajberto-ner

This model is a fine-tuned version of [muhtasham/TajBERTo](https://huggingface.co/muhtasham/TajBERTo) on the wikiann dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6129

- Precision: 0.576

- Recall: 0.6923

- F1: 0.6288

- Accuracy: 0.8934

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 200

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 2.0 | 50 | 0.6171 | 0.1667 | 0.2885 | 0.2113 | 0.7646 |

| No log | 4.0 | 100 | 0.4733 | 0.2824 | 0.4615 | 0.3504 | 0.8344 |

| No log | 6.0 | 150 | 0.3857 | 0.3372 | 0.5577 | 0.4203 | 0.8589 |

| No log | 8.0 | 200 | 0.4523 | 0.4519 | 0.5865 | 0.5105 | 0.8765 |

| No log | 10.0 | 250 | 0.3870 | 0.44 | 0.6346 | 0.5197 | 0.8834 |

| No log | 12.0 | 300 | 0.4512 | 0.5267 | 0.6635 | 0.5872 | 0.8865 |

| No log | 14.0 | 350 | 0.4934 | 0.4789 | 0.6538 | 0.5528 | 0.8819 |

| No log | 16.0 | 400 | 0.4924 | 0.4783 | 0.6346 | 0.5455 | 0.8842 |

| No log | 18.0 | 450 | 0.5355 | 0.4595 | 0.6538 | 0.5397 | 0.8788 |

| 0.1682 | 20.0 | 500 | 0.5440 | 0.5547 | 0.6827 | 0.6121 | 0.8942 |

| 0.1682 | 22.0 | 550 | 0.5299 | 0.5794 | 0.7019 | 0.6348 | 0.9003 |

| 0.1682 | 24.0 | 600 | 0.5735 | 0.5691 | 0.6731 | 0.6167 | 0.8926 |

| 0.1682 | 26.0 | 650 | 0.6027 | 0.5833 | 0.6731 | 0.6250 | 0.8796 |

| 0.1682 | 28.0 | 700 | 0.6119 | 0.568 | 0.6827 | 0.6201 | 0.8934 |

| 0.1682 | 30.0 | 750 | 0.6098 | 0.5635 | 0.6827 | 0.6174 | 0.8911 |

| 0.1682 | 32.0 | 800 | 0.6237 | 0.5469 | 0.6731 | 0.6034 | 0.8834 |

| 0.1682 | 34.0 | 850 | 0.6215 | 0.5530 | 0.7019 | 0.6186 | 0.8842 |

| 0.1682 | 36.0 | 900 | 0.6179 | 0.5802 | 0.7308 | 0.6468 | 0.8888 |

| 0.1682 | 38.0 | 950 | 0.6201 | 0.5373 | 0.6923 | 0.6050 | 0.8873 |

| 0.0007 | 40.0 | 1000 | 0.6114 | 0.5952 | 0.7212 | 0.6522 | 0.8911 |

| 0.0007 | 42.0 | 1050 | 0.6073 | 0.5625 | 0.6923 | 0.6207 | 0.8896 |

| 0.0007 | 44.0 | 1100 | 0.6327 | 0.5620 | 0.6538 | 0.6044 | 0.8896 |

| 0.0007 | 46.0 | 1150 | 0.6129 | 0.576 | 0.6923 | 0.6288 | 0.8934 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

gus1999/model

|

gus1999

| 2022-08-31T20:10:26Z | 160 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"camembert",

"fill-mask",

"generated_from_trainer",

"dataset:allocine",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-08-31T19:07:54Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- allocine

model-index:

- name: model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# model

This model is a fine-tuned version of [cmarkea/distilcamembert-base](https://huggingface.co/cmarkea/distilcamembert-base) on the allocine dataset.

It achieves the following results on the evaluation set:

- Loss: 2.0254

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.4388 | 1.0 | 157 | 2.1637 |

| 2.288 | 2.0 | 314 | 2.1697 |

| 2.2444 | 3.0 | 471 | 2.1150 |

| 2.2166 | 4.0 | 628 | 2.0906 |

| 2.1754 | 5.0 | 785 | 2.0899 |

| 2.1604 | 6.0 | 942 | 2.0797 |

| 2.1299 | 7.0 | 1099 | 2.0589 |

| 2.1195 | 8.0 | 1256 | 2.0178 |

| 2.1258 | 9.0 | 1413 | 2.0348 |

| 2.1071 | 10.0 | 1570 | 2.0090 |

| 2.0888 | 11.0 | 1727 | 2.0047 |

| 2.0792 | 12.0 | 1884 | 2.0219 |

| 2.0687 | 13.0 | 2041 | 2.0080 |

| 2.0527 | 14.0 | 2198 | 2.0298 |

| 2.0589 | 15.0 | 2355 | 1.9869 |

| 2.0518 | 16.0 | 2512 | 2.0152 |

| 2.0409 | 17.0 | 2669 | 2.0247 |

| 2.0507 | 18.0 | 2826 | 1.9928 |

| 2.0366 | 19.0 | 2983 | 2.0175 |

| 2.0386 | 20.0 | 3140 | 1.9487 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

AaronCU/attribute-classification

|

AaronCU

| 2022-08-31T19:25:23Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-classification",

"autotrain",

"en",

"dataset:AaronCU/autotrain-data-attribute-classification",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-31T19:24:40Z |

---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- AaronCU/autotrain-data-attribute-classification

co2_eq_emissions:

emissions: 0.002847008943614719

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1343651539

- CO2 Emissions (in grams): 0.0028

## Validation Metrics

- Loss: 0.163

- Accuracy: 0.949

- Macro F1: 0.947

- Micro F1: 0.949

- Weighted F1: 0.949

- Macro Precision: 0.943

- Micro Precision: 0.949

- Weighted Precision: 0.951

- Macro Recall: 0.952

- Micro Recall: 0.949

- Weighted Recall: 0.949

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/AaronCU/autotrain-attribute-classification-1343651539

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("AaronCU/autotrain-attribute-classification-1343651539", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("AaronCU/autotrain-attribute-classification-1343651539", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

castorini/monot5-3b-msmarco-10k

|

castorini

| 2022-08-31T19:20:16Z | 497 | 12 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"arxiv:2206.02873",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-03-28T15:08:54Z |

This model is a T5-3B reranker fine-tuned on the MS MARCO passage dataset for 10k steps (or 1 epoch).

For more details on how to use it, check [pygaggle.ai](pygaggle.ai)

Paper describing the model: [Document Ranking with a Pretrained Sequence-to-Sequence Model](https://www.aclweb.org/anthology/2020.findings-emnlp.63/)

This model is also the state of the art on the BEIR Benchmark.

- Paper: [No Parameter Left Behind: How Distillation and Model Size Affect Zero-Shot Retrieval](https://arxiv.org/abs/2206.02873)

- [Repository](https://github.com/guilhermemr04/scaling-zero-shot-retrieval)

|

suey2580/distilbert-base-uncased-finetuned-cola

|

suey2580

| 2022-08-31T18:58:43Z | 12 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-04-06T01:29:16Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- matthews_correlation

model-index:

- name: distilbert-base-uncased-finetuned-cola

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

args: cola

metrics:

- name: Matthews Correlation

type: matthews_correlation

value: 0.5238347808517775

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0766

- Matthews Correlation: 0.5238

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2.403175733231667e-05

- train_batch_size: 8

- eval_batch_size: 16

- seed: 33

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.4954 | 1.0 | 1069 | 0.4770 | 0.4589 |

| 0.3627 | 2.0 | 2138 | 0.5464 | 0.4998 |

| 0.2576 | 3.0 | 3207 | 0.8439 | 0.4933 |

| 0.1488 | 4.0 | 4276 | 1.0184 | 0.5035 |

| 0.1031 | 5.0 | 5345 | 1.0766 | 0.5238 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

curt-tigges/q-Taxi-v3

|

curt-tigges

| 2022-08-31T18:47:23Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-08-31T18:47:16Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="curt-tigges/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

Shivus/cartpole

|

Shivus

| 2022-08-31T17:07:14Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-08-31T17:04:29Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: cartpole

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 139.50 +/- 32.14

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

29thDay/A2C-SpaceInvadersNoFrameskip-v4

|

29thDay

| 2022-08-31T15:47:23Z | 2 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-08-31T15:46:15Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- metrics:

- type: mean_reward

value: 10.00 +/- 10.25

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

---

# **A2C** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **A2C** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

ericntay/stbl_clinical_bert_ft

|

ericntay

| 2022-08-31T15:31:41Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-31T15:14:00Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: stbl_clinical_bert_ft

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# stbl_clinical_bert_ft

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1789

- F1: 0.8523

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 12

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2786 | 1.0 | 95 | 0.1083 | 0.8090 |

| 0.0654 | 2.0 | 190 | 0.1005 | 0.8475 |

| 0.0299 | 3.0 | 285 | 0.1207 | 0.8481 |

| 0.0146 | 4.0 | 380 | 0.1432 | 0.8454 |

| 0.0088 | 5.0 | 475 | 0.1362 | 0.8475 |

| 0.0056 | 6.0 | 570 | 0.1527 | 0.8518 |

| 0.0037 | 7.0 | 665 | 0.1617 | 0.8519 |

| 0.0022 | 8.0 | 760 | 0.1726 | 0.8495 |

| 0.0018 | 9.0 | 855 | 0.1743 | 0.8527 |

| 0.0014 | 10.0 | 950 | 0.1750 | 0.8463 |

| 0.0014 | 11.0 | 1045 | 0.1775 | 0.8522 |

| 0.001 | 12.0 | 1140 | 0.1789 | 0.8523 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

ai-forever/mGPT-armenian

|

ai-forever

| 2022-08-31T15:05:46Z | 25 | 8 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"multilingual",

"PyTorch",

"Transformers",

"gpt3",

"Deepspeed",

"Megatron",

"hy",

"dataset:mc4",

"dataset:wikipedia",

"arxiv:2112.10668",

"arxiv:2204.07580",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-08-31T14:24:00Z |

---

license: apache-2.0

language:

- hy

pipeline_tag: text-generation

tags:

- multilingual

- PyTorch

- Transformers

- gpt3

- gpt2

- Deepspeed

- Megatron

datasets:

- mc4

- wikipedia

thumbnail: "https://github.com/sberbank-ai/mgpt"

---

# Multilingual GPT model, Armenian language finetune

We introduce a monolingual GPT-3-based model for Armenian language

The model is based on [mGPT](https://huggingface.co/sberbank-ai/mGPT/), a family of autoregressive GPT-like models with 1.3 billion parameters trained on 60 languages from 25 language families using Wikipedia and Colossal Clean Crawled Corpus.

We reproduce the GPT-3 architecture using GPT-2 sources and the sparse attention mechanism, [Deepspeed](https://github.com/microsoft/DeepSpeed) and [Megatron](https://github.com/NVIDIA/Megatron-LM) frameworks allows us to effectively parallelize the training and inference steps. The resulting models show performance on par with the recently released [XGLM](https://arxiv.org/pdf/2112.10668.pdf) models at the same time covering more languages and enhancing NLP possibilities for low resource languages.

## Code

The source code for the mGPT XL model is available on [Github](https://github.com/sberbank-ai/mgpt)

## Paper

mGPT: Few-Shot Learners Go Multilingual

[Abstract](https://arxiv.org/abs/2204.07580) [PDF](https://arxiv.org/pdf/2204.07580.pdf)

```

@misc{https://doi.org/10.48550/arxiv.2204.07580,

doi = {10.48550/ARXIV.2204.07580},

url = {https://arxiv.org/abs/2204.07580},

author = {Shliazhko, Oleh and Fenogenova, Alena and Tikhonova, Maria and Mikhailov, Vladislav and Kozlova, Anastasia and Shavrina, Tatiana},

keywords = {Computation and Language (cs.CL), Artificial Intelligence (cs.AI), FOS: Computer and information sciences, FOS: Computer and information sciences, I.2; I.2.7, 68-06, 68-04, 68T50, 68T01},

title = {mGPT: Few-Shot Learners Go Multilingual},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

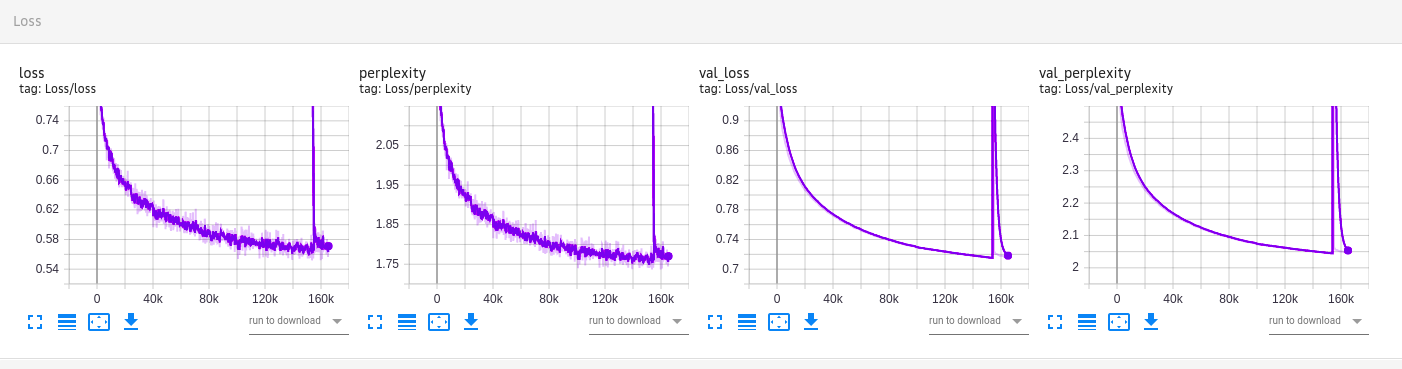

## Training

The model was fine-tuned on 170GB of Armenian texts, including MC4, Archive.org fiction, EANC public data, OpenSubtitles, OSCAR corpus and blog texts.

Val perplexity is 2.046.

The mGPT model was pre-trained for 12 days x 256 GPU (Tesla NVidia V100), 4 epochs, then 9 days x 64 GPU, 1 epoch

The Armenian finetune was around 7 days with 4 Tesla NVidia V100 and has made 160k steps.

What happens on this image? The model is originally trained with sparse attention masks, then fine-tuned with no sparsity on the last steps (perplexity and loss peak). Getting rid of the sparsity in the end of the training helps to integrate the model into the GPT2 HF class.

|

farleyknight-org-username/vit-base-mnist

|

farleyknight-org-username

| 2022-08-31T14:55:56Z | 1,370 | 8 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"vision",

"generated_from_trainer",

"dataset:mnist",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-08-21T16:48:27Z |

---

license: apache-2.0

tags:

- image-classification

- vision

- generated_from_trainer

datasets:

- mnist

metrics:

- accuracy

model-index:

- name: vit-base-mnist

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: mnist

type: mnist

config: mnist

split: train

args: mnist

metrics:

- name: Accuracy

type: accuracy

value: 0.9948888888888889

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-mnist

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the mnist dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0236

- Accuracy: 0.9949

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 1337

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.3717 | 1.0 | 6375 | 0.0522 | 0.9893 |

| 0.3453 | 2.0 | 12750 | 0.0370 | 0.9906 |

| 0.3736 | 3.0 | 19125 | 0.0308 | 0.9916 |

| 0.3224 | 4.0 | 25500 | 0.0269 | 0.9939 |

| 0.2846 | 5.0 | 31875 | 0.0236 | 0.9949 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.11.0a0+17540c5

- Datasets 2.4.0

- Tokenizers 0.12.1

|

AliShaker/layoutlmv3-finetuned-wildreceipt

|

AliShaker

| 2022-08-31T14:44:42Z | 10 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"layoutlmv3",

"token-classification",

"generated_from_trainer",

"dataset:wildreceipt",

"license:cc-by-nc-sa-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-31T13:06:54Z |

---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

datasets:

- wildreceipt

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: layoutlmv3-finetuned-wildreceipt

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wildreceipt

type: wildreceipt

config: WildReceipt

split: train

args: WildReceipt

metrics:

- name: Precision

type: precision

value: 0.877962408063198

- name: Recall

type: recall

value: 0.8870235310306867

- name: F1

type: f1

value: 0.8824697104524608

- name: Accuracy

type: accuracy

value: 0.9265109136777449

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv3-finetuned-wildreceipt

This model is a fine-tuned version of [microsoft/layoutlmv3-base](https://huggingface.co/microsoft/layoutlmv3-base) on the wildreceipt dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3129

- Precision: 0.8780

- Recall: 0.8870

- F1: 0.8825

- Accuracy: 0.9265

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 4000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 0.32 | 100 | 1.2240 | 0.6077 | 0.3766 | 0.4650 | 0.7011 |

| No log | 0.63 | 200 | 0.8417 | 0.6440 | 0.5089 | 0.5685 | 0.7743 |

| No log | 0.95 | 300 | 0.6466 | 0.7243 | 0.6583 | 0.6897 | 0.8311 |

| No log | 1.26 | 400 | 0.5516 | 0.7533 | 0.7158 | 0.7341 | 0.8537 |

| 0.9961 | 1.58 | 500 | 0.4845 | 0.7835 | 0.7557 | 0.7693 | 0.8699 |

| 0.9961 | 1.89 | 600 | 0.4506 | 0.7809 | 0.7930 | 0.7869 | 0.8770 |

| 0.9961 | 2.21 | 700 | 0.4230 | 0.8101 | 0.8107 | 0.8104 | 0.8886 |

| 0.9961 | 2.52 | 800 | 0.3797 | 0.8211 | 0.8296 | 0.8253 | 0.8983 |

| 0.9961 | 2.84 | 900 | 0.3576 | 0.8289 | 0.8411 | 0.8349 | 0.9016 |

| 0.4076 | 3.15 | 1000 | 0.3430 | 0.8394 | 0.8371 | 0.8382 | 0.9055 |

| 0.4076 | 3.47 | 1100 | 0.3354 | 0.8531 | 0.8405 | 0.8467 | 0.9071 |

| 0.4076 | 3.79 | 1200 | 0.3331 | 0.8371 | 0.8504 | 0.8437 | 0.9076 |

| 0.4076 | 4.1 | 1300 | 0.3184 | 0.8445 | 0.8609 | 0.8526 | 0.9118 |

| 0.4076 | 4.42 | 1400 | 0.3087 | 0.8617 | 0.8580 | 0.8598 | 0.9150 |

| 0.2673 | 4.73 | 1500 | 0.3013 | 0.8613 | 0.8657 | 0.8635 | 0.9177 |

| 0.2673 | 5.05 | 1600 | 0.2971 | 0.8630 | 0.8689 | 0.8659 | 0.9181 |

| 0.2673 | 5.36 | 1700 | 0.3075 | 0.8675 | 0.8639 | 0.8657 | 0.9177 |

| 0.2673 | 5.68 | 1800 | 0.2989 | 0.8551 | 0.8764 | 0.8656 | 0.9193 |

| 0.2673 | 5.99 | 1900 | 0.3011 | 0.8572 | 0.8762 | 0.8666 | 0.9194 |

| 0.2026 | 6.31 | 2000 | 0.3107 | 0.8595 | 0.8722 | 0.8658 | 0.9181 |

| 0.2026 | 6.62 | 2100 | 0.3050 | 0.8678 | 0.8800 | 0.8739 | 0.9220 |

| 0.2026 | 6.94 | 2200 | 0.2971 | 0.8722 | 0.8789 | 0.8755 | 0.9237 |

| 0.2026 | 7.26 | 2300 | 0.3057 | 0.8666 | 0.8785 | 0.8725 | 0.9209 |

| 0.2026 | 7.57 | 2400 | 0.3172 | 0.8593 | 0.8773 | 0.8682 | 0.9184 |

| 0.1647 | 7.89 | 2500 | 0.3018 | 0.8695 | 0.8823 | 0.8759 | 0.9228 |

| 0.1647 | 8.2 | 2600 | 0.3001 | 0.8760 | 0.8795 | 0.8777 | 0.9256 |

| 0.1647 | 8.52 | 2700 | 0.3068 | 0.8758 | 0.8745 | 0.8752 | 0.9235 |

| 0.1647 | 8.83 | 2800 | 0.3007 | 0.8779 | 0.8779 | 0.8779 | 0.9248 |

| 0.1647 | 9.15 | 2900 | 0.3063 | 0.8740 | 0.8763 | 0.8751 | 0.9228 |

| 0.1342 | 9.46 | 3000 | 0.3096 | 0.8675 | 0.8834 | 0.8754 | 0.9235 |

| 0.1342 | 9.78 | 3100 | 0.3052 | 0.8736 | 0.8848 | 0.8792 | 0.9249 |

| 0.1342 | 10.09 | 3200 | 0.3120 | 0.8727 | 0.8885 | 0.8805 | 0.9252 |

| 0.1342 | 10.41 | 3300 | 0.3146 | 0.8718 | 0.8843 | 0.8780 | 0.9243 |

| 0.1342 | 10.73 | 3400 | 0.3124 | 0.8720 | 0.8880 | 0.8799 | 0.9253 |

| 0.117 | 11.04 | 3500 | 0.3088 | 0.8761 | 0.8817 | 0.8789 | 0.9252 |

| 0.117 | 11.36 | 3600 | 0.3082 | 0.8782 | 0.8834 | 0.8808 | 0.9257 |

| 0.117 | 11.67 | 3700 | 0.3129 | 0.8767 | 0.8847 | 0.8807 | 0.9256 |

| 0.117 | 11.99 | 3800 | 0.3116 | 0.8792 | 0.8847 | 0.8820 | 0.9265 |

| 0.117 | 12.3 | 3900 | 0.3142 | 0.8768 | 0.8874 | 0.8821 | 0.9261 |

| 0.1022 | 12.62 | 4000 | 0.3129 | 0.8780 | 0.8870 | 0.8825 | 0.9265 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

chinoll/ACGTTS

|

chinoll

| 2022-08-31T13:59:25Z | 0 | 4 | null |

[

"license:cc-by-nc-sa-4.0",

"region:us"

] | null | 2022-08-06T10:02:11Z |

---

license: cc-by-nc-sa-4.0

---

# ACGTTS 模型库

### old支持的语音

```

0 - 绫地宁宁

1 - 因幡巡

2 - 户隐憧子

```

### new支持的语音

```

0 - 绫地宁宁

1 - 户隐憧子

2 - 因幡巡

3 - 明月栞那

4 - 四季夏目

5 - 墨染希