modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-13 06:30:42

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 556

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-13 06:27:56

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

hoshingakag/autotrain-emotion-detection-1587956110

|

hoshingakag

| 2022-09-28T15:53:01Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"text-classification",

"en",

"dataset:hoshingakag/autotrain-data-emotion-detection",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-28T15:51:45Z |

---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- hoshingakag/autotrain-data-emotion-detection

co2_eq_emissions:

emissions: 2.3491292126039087

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1587956110

- CO2 Emissions (in grams): 2.3491

## Validation Metrics

- Loss: 0.448

- Accuracy: 0.888

- Macro F1: 0.823

- Micro F1: 0.888

- Weighted F1: 0.884

- Macro Precision: 0.885

- Micro Precision: 0.888

- Weighted Precision: 0.890

- Macro Recall: 0.800

- Micro Recall: 0.888

- Weighted Recall: 0.888
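
The micro-averaged scores above all equal the accuracy (0.888), which is expected for single-label multi-class classification, while the macro average weights every class equally and so drops when rare classes are predicted poorly. The sketch below illustrates this on made-up labels; the label names and predictions are hypothetical and do not come from this model's validation set.

```python
from collections import Counter


def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro


# Toy, made-up data (hypothetical labels).
y_true = ["joy", "joy", "joy", "joy", "anger", "fear"]
y_pred = ["joy", "joy", "joy", "joy", "joy", "fear"]
micro, macro = f1_scores(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(micro, macro, accuracy)
```

In this toy case the missed `anger` example lowers the macro F1 well below the micro F1, the same pattern as the gap between Macro F1 (0.823) and Micro F1 (0.888) reported above.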

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/hoshingakag/autotrain-emotion-detection-1587956110

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("hoshingakag/autotrain-emotion-detection-1587956110", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("hoshingakag/autotrain-emotion-detection-1587956110", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```
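
The Python snippet above stops at the raw model outputs. A minimal sketch of the remaining step, turning logits into a label and a probability, is shown below in plain Python. The logits and the `example_id2label` mapping are hypothetical placeholders; with the real model you would presumably pass `outputs.logits[0].tolist()` and `model.config.id2label` instead.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_label(logits, id2label):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return id2label[best], probs[best]


# Hypothetical values for illustration only.
example_logits = [0.2, 2.5, -1.0]
example_id2label = {0: "anger", 1: "joy", 2: "sadness"}
label, prob = top_label(example_logits, example_id2label)
print(label, round(prob, 3))
```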

|

bggmyfuture-ai/autotrain-sphere-intent-classification-1584456046

|

bggmyfuture-ai

| 2022-09-28T15:35:06Z | 100 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"text-classification",

"unk",

"dataset:bggmyfuture-ai/autotrain-data-sphere-intent-classification",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-28T15:34:05Z |

---

tags:

- autotrain

- text-classification

language:

- unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- bggmyfuture-ai/autotrain-data-sphere-intent-classification

co2_eq_emissions:

emissions: 1.893124351907886

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1584456046

- CO2 Emissions (in grams): 1.8931

## Validation Metrics

- Loss: 0.690

- Accuracy: 0.744

- Macro F1: 0.678

- Micro F1: 0.744

- Weighted F1: 0.739

- Macro Precision: 0.697

- Micro Precision: 0.744

- Weighted Precision: 0.738

- Macro Recall: 0.669

- Micro Recall: 0.744

- Weighted Recall: 0.744

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/bggmyfuture-ai/autotrain-sphere-intent-classification-1584456046

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("bggmyfuture-ai/autotrain-sphere-intent-classification-1584456046", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("bggmyfuture-ai/autotrain-sphere-intent-classification-1584456046", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

Armandoliv/es_pipeline

|

Armandoliv

| 2022-09-28T14:44:01Z | 3 | 0 |

spacy

|

[

"spacy",

"token-classification",

"es",

"model-index",

"region:us"

] |

token-classification

| 2022-09-28T14:43:08Z |

---

tags:

- spacy

- token-classification

language:

- es

model-index:

- name: es_pipeline

results:

- task:

name: NER

type: token-classification

metrics:

- name: NER Precision

type: precision

value: 0.8450473416

- name: NER Recall

type: recall

value: 0.8476402688

- name: NER F Score

type: f_score

value: 0.8463418192

---

|

jonghyunlee/DrugLikeMoleculeBERT

|

jonghyunlee

| 2022-09-28T14:34:50Z | 102 | 1 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"feature-extraction",

"arxiv:1908.06760",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-09-28T14:03:53Z |

# Model description

This model uses a BERT-based architecture with 8 layers. The detailed config is summarized below. The drug-like molecule BERT is inspired by ["Self-Attention Based Molecule Representation for Predicting Drug-Target Interaction"](https://arxiv.org/abs/1908.06760). We modified several points of the training procedure.

```

config = BertConfig(

vocab_size=vocab_size,

hidden_size=128,

num_hidden_layers=8,

num_attention_heads=8,

intermediate_size=512,

hidden_act="gelu",

hidden_dropout_prob=0.1,

attention_probs_dropout_prob=0.1,

max_position_embeddings=max_seq_len + 2,

type_vocab_size=1,

pad_token_id=0,

position_embedding_type="absolute"

)

```

# Training and evaluation data

The model is trained on drug-like molecules from the PubChem database. PubChem contains more than 100 M molecules, so we selected drug-like molecules using the quantitative estimate of drug-likeness (QED) score. With the QED score threshold set to 0.7, 4.1 M molecules were selected.

# Tokenizer

We utilize a character-level tokenizer. The special tokens are "[SOS]", "[EOS]", "[PAD]", "[UNK]".
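
As a rough illustration of what a character-level tokenizer with these special tokens could look like, here is a minimal sketch. It is not the authors' implementation: the token order (with `[PAD]` first so that its id is 0, matching `pad_token_id=0` in the config), the helper names, and the example SMILES strings are all assumptions. The two extra positions in `max_seq_len + 2` are assumed to hold `[SOS]` and `[EOS]`.

```python
# Assumed order: [PAD] first so that its id is 0, as in the config above.
SPECIAL_TOKENS = ["[PAD]", "[SOS]", "[EOS]", "[UNK]"]


def build_vocab(smiles_list):
    """Map special tokens and every character seen in the corpus to an id."""
    chars = sorted({ch for s in smiles_list for ch in s})
    return {tok: i for i, tok in enumerate(SPECIAL_TOKENS + chars)}


def encode(smiles, vocab, max_seq_len):
    """Encode one string as [SOS] chars [EOS], padded to max_seq_len + 2."""
    ids = [vocab["[SOS]"]]
    ids += [vocab.get(ch, vocab["[UNK]"]) for ch in smiles[:max_seq_len]]
    ids.append(vocab["[EOS]"])
    ids += [vocab["[PAD]"]] * (max_seq_len + 2 - len(ids))
    return ids


# Hypothetical two-molecule corpus (ethanol and benzene as SMILES).
vocab = build_vocab(["CCO", "c1ccccc1"])
encoded = encode("CCN", vocab, max_seq_len=5)  # "N" is unseen, so it maps to [UNK]
print(encoded)
```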

# Training hyperparameters

The following hyperparameters were used during training:

- Adam optimizer, learning_rate: 5e-4, scheduler: cosine annealing

- Batch size: 2048

- Training steps: 24 K

- Training precision: FP16

- Loss function: cross-entropy loss

- Training masking rate: 30 %

- Testing masking rate: 15 % (the original molecule BERT used a 15 % masking rate)

- NSP task: None
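
The masking rates above can be pictured with a small masked-language-modeling sketch. This is a simplification under stated assumptions: every selected token is replaced by a mask id (no random or unchanged replacements), special tokens are never masked, and `mask_id=99` and the token ids are hypothetical. The label value `-100` follows the common convention for positions that the cross-entropy loss should ignore.

```python
import random


def mask_tokens(token_ids, mask_id, special_ids, rate, rng):
    """Return (masked_inputs, labels); labels are -100 where nothing is predicted."""
    inputs = list(token_ids)
    labels = [-100] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if tok in special_ids:
            continue  # never mask [SOS], [EOS], [PAD], ...
        if rng.random() < rate:
            labels[i] = tok      # the model must recover the original token
            inputs[i] = mask_id  # the input shows only the mask
    return inputs, labels


# Hypothetical ids: 1 = [SOS], 2 = [EOS], everything else is a character id.
ids = [1, 5, 6, 7, 5, 2]
train_inputs, train_labels = mask_tokens(ids, 99, {1, 2}, rate=0.30, rng=random.Random(0))
test_inputs, test_labels = mask_tokens(ids, 99, {1, 2}, rate=0.15, rng=random.Random(0))
print(train_inputs, train_labels)
```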

# Performance

- Accuracy: 94.02 %

|

alpineai/cosql

|

alpineai

| 2022-09-28T14:09:33Z | 18 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"text2sql",

"en",

"dataset:cosql",

"dataset:spider",

"arxiv:2109.05093",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-27T18:14:50Z |

---

language:

- en

thumbnail: "https://repository-images.githubusercontent.com/401779782/c2f46be5-b74b-4620-ad64-57487be3b1ab"

tags:

- text2sql

widget:

- "And the concert named Auditions? | concert_singer | stadium : stadium_id, location, name, capacity, highest, lowest, average | singer : singer_id, name, country, song_name, song_release_year, age, is_male | concert : concert_id, concert_name ( Super bootcamp, Auditions ), theme, stadium_id, year | singer_in_concert : concert_id, singer_id || Which year did the concert Super bootcamp happen in? | Find the name and location of the stadiums which some concerts happened in the years of both 2014 and 2015."

- "How many singers do we have? | concert_singer | stadium : stadium_id, location, name, capacity, highest, lowest, average | singer : singer_id, name, country, song_name, song_release_year, age, is_male | concert : concert_id, concert_name, theme, stadium_id, year | singer_in_concert : concert_id, singer_id"

license: "apache-2.0"

datasets:

- cosql

- spider

metrics:

- cosql

---

## tscholak/2e826ioa

Fine-tuned weights for [PICARD - Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models](https://arxiv.org/abs/2109.05093) based on [T5-3B](https://huggingface.co/t5-3b).

### Training Data

The model has been fine-tuned on the 2,164 training dialogues in the [CoSQL SQL-grounded dialogue state tracking dataset](https://yale-lily.github.io/cosql) and the 7,000 training examples in the [Spider text-to-SQL dataset](https://yale-lily.github.io/spider). The model solves both CoSQL's zero-shot text-to-SQL dialogue state tracking task and Spider's zero-shot text-to-SQL translation task. Zero-shot means that the model can generalize to unseen SQL databases.

### Training Objective

This model was initialized with [T5-3B](https://huggingface.co/t5-3b) and fine-tuned with the text-to-text generation objective.

A question is always grounded in both a database schema and the preceding questions in the dialogue. The model is trained to predict the SQL query that would be used to answer the user's current natural language question. The input to the model is composed of the user's current question, the database identifier, a list of tables and their columns, and a sequence of previous questions in reverse chronological order.

```

[current question] | [db_id] | [table] : [column] ( [content] , [content] ) , [column] ( ... ) , [...] | [table] : ... | ... || [previous question] | ... | [first question]

```

The sequence of previous questions is separated by `||` from the linearized schema. In the absence of previous questions (for example, for the first question in a dialogue or for Spider questions), this separator is omitted.

The model outputs the database identifier and the SQL query that will be executed on the database to answer the user's current question in the dialog.

```

[db_id] | [sql]

```
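
The input and output formats described above can be sketched as a pair of helper functions. The function names, the schema representation (a dict of table name to a list of `(column, values)` pairs), and the example questions are illustrative assumptions; the official PICARD repository has its own serialization code, which may differ in details such as spacing.

```python
def serialize_input(question, db_id, schema, history=()):
    """Build the model input string.

    schema: {table: [(column, [content values]), ...]}
    history: earlier questions in chronological order (oldest first).
    """
    tables = []
    for table, columns in schema.items():
        cols = []
        for name, values in columns:
            # Matching database content, if any, follows the column in parentheses.
            cols.append(f"{name} ( {', '.join(values)} )" if values else name)
        tables.append(f"{table} : {', '.join(cols)}")
    text = " | ".join([question, db_id, *tables])
    if history:
        # Previous questions go after "||", most recent first.
        text += " || " + " | ".join(reversed(history))
    return text


def parse_output(text):
    """Split '[db_id] | [sql]' into its two parts."""
    db_id, sql = text.split("|", 1)
    return db_id.strip(), sql.strip()


# Hypothetical, shortened schema and dialogue.
schema = {"concert": [("concert_id", []), ("concert_name", ["Super bootcamp", "Auditions"])]}
model_input = serialize_input(
    "And the concert named Auditions?",
    "concert_singer",
    schema,
    history=["Find stadiums", "Which year?"],
)
print(model_input)
print(parse_output("concert_singer | select count(*) from singer"))
```

Passing an empty `history` omits the `||` separator, matching the rule for first questions and Spider examples.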

### Performance

Out of the box, this model achieves 53.8 % question match accuracy and 21.8 % interaction match accuracy on the CoSQL development set. On the CoSQL test set, the model achieves 51.4 % question match accuracy and 21.7 % interaction match accuracy.

Using the PICARD constrained decoding method (see [the official PICARD implementation](https://github.com/ElementAI/picard)), the model's performance can be improved to **56.9 %** question match accuracy and **24.2 %** interaction match accuracy on the CoSQL development set. On the CoSQL test set and with PICARD, the model achieves **54.6 %** question match accuracy and **23.7 %** interaction match accuracy.

### Usage

Please see [the official repository](https://github.com/ElementAI/picard) for scripts and docker images that support evaluation and serving of this model.

### References

1. [PICARD - Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models](https://arxiv.org/abs/2109.05093)

2. [Official PICARD code](https://github.com/ElementAI/picard)

### Citation

```bibtex

@inproceedings{Scholak2021:PICARD,

author = {Torsten Scholak and Nathan Schucher and Dzmitry Bahdanau},

title = "{PICARD}: Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models",

booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",

month = nov,

year = "2021",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.emnlp-main.779",

pages = "9895--9901",

}

```

|

Conrad747/lg-en-v4

|

Conrad747

| 2022-09-28T13:36:04Z | 119 | 0 |

transformers

|

[

"transformers",

"pytorch",

"marian",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-27T11:31:47Z |

---

tags:

- generated_from_trainer

metrics:

- bleu

model-index:

- name: lg-en-v4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# lg-en-v4

This model is a fine-tuned version of [AI-Lab-Makerere/lg_en](https://huggingface.co/AI-Lab-Makerere/lg_en) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1615

- Bleu: 28.3855

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.4271483249908667e-05

- train_batch_size: 14

- eval_batch_size: 6

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP
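
To make `lr_scheduler_type: linear` concrete, the sketch below computes a linearly decaying learning rate. It assumes zero warmup steps (none are listed above) and takes the total of 52 optimizer steps from the training results table below; the function name and defaults are illustrative, not part of the Trainer API.

```python
def linear_lr(step, base_lr=4.4271483249908667e-05, total_steps=52, warmup_steps=0):
    """Linear warmup (if any) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
    return base_lr * remaining


for step in (0, 26, 52):
    print(step, linear_lr(step))
```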

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| No log | 1.0 | 26 | 1.2704 | 25.9847 |

| No log | 2.0 | 52 | 1.1615 | 28.3855 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

|

Linksonder/RoBERTje-finetuned

|

Linksonder

| 2022-09-28T12:13:46Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"roberta",

"fill-mask",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-28T09:32:01Z |

---

license: mit

tags:

- generated_from_keras_callback

model-index:

- name: Linksonder/RoBERTje-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Linksonder/RoBERTje-finetuned

This model is a fine-tuned version of [DTAI-KULeuven/robbertje-1-gb-shuffled](https://huggingface.co/DTAI-KULeuven/robbertje-1-gb-shuffled) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 16.5695

- Validation Loss: 17.2618

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -992, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
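
The negative `decay_steps` value in the optimizer config deserves a note. Assuming `decay_steps` was computed as total training steps minus `warmup_steps` (an assumption about how this optimizer was created, not something the card states), the run had only 8 optimizer steps and never left the warmup phase. The sketch below estimates the learning rate reached under that assumption, using a warmup of the form `initial_lr * (step / warmup_steps) ** power`.

```python
def warmup_lr(step, initial_lr=2e-05, warmup_steps=1000, power=1.0):
    """Learning rate during warmup, assuming a polynomial ramp from zero."""
    return initial_lr * (step / warmup_steps) ** power


# Assumption: decay_steps = total_steps - warmup_steps, so total = 1000 + (-992).
total_steps = 1000 + (-992)
lr_at_end = warmup_lr(total_steps)
print(total_steps, lr_at_end)
```

If the assumption holds, the learning rate stayed far below the nominal 2e-05, which may help explain the high losses reported above.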

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 16.5695 | 17.2618 | 0 |

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.5.0

- Datasets 2.4.0

- Tokenizers 0.12.1

|

TextCortex/codegen-350M-optimized

|

TextCortex

| 2022-09-28T10:04:35Z | 5 | 1 |

transformers

|

[

"transformers",

"onnx",

"text-generation",

"license:bsd-3-clause",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-26T17:00:02Z |

---

license: bsd-3-clause

---

# CodeGen (CodeGen-Mono 350M)

Clone of [Salesforce/codegen-350M-mono](https://huggingface.co/Salesforce/codegen-350M-mono) converted to ONNX and optimized.

## Usage

```python

from transformers import AutoTokenizer

from optimum.onnxruntime import ORTModelForCausalLM

model = ORTModelForCausalLM.from_pretrained("TextCortex/codegen-350M-optimized")

tokenizer = AutoTokenizer.from_pretrained("TextCortex/codegen-350M-optimized")

text = "def hello_world():"

input_ids = tokenizer(text, return_tensors="pt").input_ids

generated_ids = model.generate(

input_ids,

max_length=64,

temperature=0.1,

num_return_sequences=1,

early_stopping=True,

)

out = tokenizer.decode(generated_ids[0], skip_special_tokens=True)

print(out)

```

Refer to the original model for more details.

|

Linksonder/tutorial-finetuned-imdb

|

Linksonder

| 2022-09-28T08:54:35Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"fill-mask",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-27T14:50:55Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: Linksonder/tutorial-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Linksonder/tutorial-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 5.1648

- Validation Loss: 4.7466

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -998, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 5.1648 | 4.7466 | 0 |

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.5.0

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/kawaii-girl-plus-style-v1-1

|

sd-concepts-library

| 2022-09-28T08:34:03Z | 0 | 9 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-28T08:33:56Z |

---

license: mit

---

### kawaii_girl_plus_style_v1.1 on Stable Diffusion

This is the `<kawaii>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

dvilasuero/setfit-mini-imdb

|

dvilasuero

| 2022-09-28T07:58:37Z | 1 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-09-28T07:58:29Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# dvilasuero/setfit-mini-imdb

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('dvilasuero/setfit-mini-imdb')

embeddings = model.encode(sentences)

print(embeddings)

```
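
For tasks such as semantic search, embeddings are usually compared with cosine similarity. The following is a minimal plain-Python sketch; the two short vectors are made up for illustration, whereas real embeddings from this model would have 768 dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


# Hypothetical low-dimensional "embeddings".
query = [1.0, 2.0]
same_direction = [2.0, 4.0]
orthogonal = [-2.0, 1.0]
print(cosine_similarity(query, same_direction), cosine_similarity(query, orthogonal))
```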

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('dvilasuero/setfit-mini-imdb')
model = AutoModel.from_pretrained('dvilasuero/setfit-mini-imdb')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=dvilasuero/setfit-mini-imdb)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 40 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 40,

"warmup_steps": 4,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

jack-berry4/Chairman-Model-1

|

jack-berry4

| 2022-09-28T06:42:59Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2022-09-28T06:42:59Z |

---

license: creativeml-openrail-m

---

|

Zengwei/icefall-asr-librispeech-lstm-transducer-stateless3-2022-09-28

|

Zengwei

| 2022-09-28T06:10:47Z | 0 | 1 | null |

[

"tensorboard",

"region:us"

] | null | 2022-09-28T04:34:44Z |

See <https://github.com/k2-fsa/icefall/pull/564>

|

bongsoo/moco-sentencedistilbertV2.1

|

bongsoo

| 2022-09-28T05:09:33Z | 114 | 2 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"distilbert",

"feature-extraction",

"sentence-similarity",

"transformers",

"ko",

"en",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-09-23T05:42:57Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- ko

- en

widget:

source_sentence: "대한민국의 수도는?"

sentences:

- "서울특별시는 한국이 정치,경제,문화 중심 도시이다."

- "부산은 대한민국의 제2의 도시이자 최대의 해양 물류 도시이다."

- "제주도는 대한민국에서 유명한 관광지이다"

- "Seoul is the capital of Korea"

- "울산광역시는 대한민국 남동부 해안에 있는 광역시이다"

---

# moco-sentencedistilbertV2.1

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

- This model was built by converting the [bongsoo/mdistilbertV2.1](https://huggingface.co/bongsoo/mdistilbertV2.1) MLM model

<br>into a Sentence-BERT model and then further training it with STS teacher-student distillation.

- **vocab: 152,537 tokens** (32,989 new tokens added to the original 119,548)

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence_transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["서울은 한국이 수도이다", "The capital of Korea is Seoul"]

model = SentenceTransformer('bongsoo/moco-sentencedistilbertV2.1')

embeddings = model.encode(sentences)

print(embeddings)

# Compute cosine_scores with sklearn

# => the input embeddings must be 2D, e.g. shape (1, 768).

from sklearn.metrics.pairwise import paired_cosine_distances, paired_euclidean_distances, paired_manhattan_distances

cosine_scores = 1 - (paired_cosine_distances(embeddings[0].reshape(1,-1), embeddings[1].reshape(1,-1)))

print(f'*cosine_score:{cosine_scores[0]}')

```

#### Outputs

```

[[ 0.27124503 -0.5836643 0.00736023 ... -0.0038319 0.01802095 -0.09652182]

[ 0.2765149 -0.5754248 0.00788184 ... 0.07659392 -0.07825544 -0.06120609]]

*cosine_score:0.9513546228408813

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```

pip install transformers[torch]

```

- Mean pooling is used. (See also [CLS pooling](https://huggingface.co/sentence-transformers/bert-base-nli-cls-token) and [max pooling](https://huggingface.co/sentence-transformers/bert-base-nli-max-tokens).)

```python

from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ["서울은 한국이 수도이다", "The capital of Korea is Seoul"]

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('bongsoo/moco-sentencedistilbertV2.1')
model = AutoModel.from_pretrained('bongsoo/moco-sentencedistilbertV2.1')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

# Compute cosine_scores with sklearn
# => the input embeddings must be 2D, e.g. shape (1, 768).
from sklearn.metrics.pairwise import paired_cosine_distances, paired_euclidean_distances, paired_manhattan_distances

cosine_scores = 1 - (paired_cosine_distances(sentence_embeddings[0].reshape(1,-1), sentence_embeddings[1].reshape(1,-1)))
print(f'*cosine_score:{cosine_scores[0]}')

```

#### Outputs

```

Sentence embeddings:

tensor([[ 0.2712, -0.5837, 0.0074, ..., -0.0038, 0.0180, -0.0965],

[ 0.2765, -0.5754, 0.0079, ..., 0.0766, -0.0783, -0.0612]])

*cosine_score:0.9513546228408813

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

- Performance was measured on the following Korean (kor) and English (en) evaluation corpora:

<br> Korean: **korsts (1,379 sentence pairs)** and **klue-sts (519 sentence pairs)**

<br> English: [stsb_multi_mt](https://huggingface.co/datasets/stsb_multi_mt) (1,376 sentence pairs) and [glue:stsb](https://huggingface.co/datasets/glue/viewer/stsb/validation) (1,500 sentence pairs)

- The metric is **cosine Spearman / max** (the maximum over cosine, Euclidean, Manhattan, and dot-product scores)

- See [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sbert-test.ipynb) for the evaluation code.

|Model |korsts|klue-sts|glue(stsb)|stsb_multi_mt(en)|

|:--------|------:|--------:|--------------:|------------:|

|distiluse-base-multilingual-cased-v2 |0.7475/0.7556 |0.7855/0.7862 |0.8193 |0.8075/0.8168|

|paraphrase-multilingual-mpnet-base-v2 |0.8201 |0.7993 |**0.8907/0.8919**|**0.8682** |

|bongsoo/sentencedistilbertV1.2 |0.8198/0.8202 |0.8584/0.8608 |0.8739/0.8740 |0.8377/0.8388|

|bongsoo/moco-sentencedistilbertV2.0 |0.8124/0.8128 |0.8470/0.8515 |0.8773/0.8778 |0.8371/0.8388|

|bongsoo/moco-sentencebertV2.0 |0.8244/0.8277 |0.8411/0.8478 |0.8792/0.8796 |0.8436/0.8456|

|**bongsoo/moco-sentencedistilbertV2.1**|**0.8390/0.8398**|**0.8767/0.8808**|0.8805/0.8816 |0.8548 |
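
The scores in the table are Spearman rank correlations between predicted similarity scores and gold STS labels. As a reminder of what that metric computes, here is a minimal plain-Python sketch (Pearson correlation applied to average ranks, assuming neither input is constant). The example scores are made up and are not taken from any of the evaluation corpora.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical cosine scores and gold similarity labels.
predicted = [0.10, 0.50, 0.90]
gold = [1.0, 3.0, 5.0]
print(spearman(predicted, gold))
```

Because only the ranks matter, the metric rewards a model for ordering sentence pairs correctly even when its cosine scores are on a different scale from the gold labels.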

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=bongsoo/moco-sentencedistilbertV2.1)

## Training

The model was trained with the parameters:

**1. MLM training**

- Input model: distilbert-base-multilingual-cased

- Corpus: training: bongsoo/moco-corpus-kowiki2022 (7.6M); evaluation: bongsoo/bongevalsmall

- Hyperparameters: **learning rate: 5e-5, epochs: 8, batch size: 32, max_token_len: 128**

- Vocab: 152,537 tokens (32,989 new tokens added to the original 119,548)

- Output model: mdistilbertV2.1 (size: 643MB)

- Training time: 63h on 1 GPU (23.9GB of 24GB used)

- Evaluation: **training loss: 2.203400, evaluation loss: 2.972835, perplexity: 23.43** (bong_eval: 1,500)

- See [here](https://github.com/kobongsoo/BERT/blob/master/distilbert/distilbert-MLM-Trainer-V1.2.ipynb) for the training code.

**2. STS training**

<br>=> Converts the BERT model into a Sentence-BERT model.

- Input model: mdistilbertV2.1 (size: 643MB)

- Corpus: korsts (5,749) + kluestsV1.1 (11,668) + stsb_multi_mt (5,749) + mteb/sickr-sts (9,927) + glue stsb (5,749) (total: 38,842)

- Hyperparameters: **learning rate: 3e-5, epochs: 800, batch size: 128, max_token_len: 256**

- Output model: sbert-mdistilbertV2.1 (size: 640MB)

- Training time: 13h on 1 GPU (16.1GB of 24GB used)

- Evaluation (cosine Spearman): **0.790** (corpus: korsts (tune_test.tsv))

- See [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sentece-bert-sts.ipynb) for the training code.

**3. Distillation training**

- Student model: sbert-mdistilbertV2.1

- Teacher model: paraphrase-multilingual-mpnet-base-v2 (max_token_len: 128)

- Corpus: news_talk_en_ko_train.tsv (English-Korean dialogue and news parallel corpus: 1.38M)

- Hyperparameters: **learning rate: 5e-5, epochs: 40, batch size: 128, max_token_len: 128 (matched to the teacher model's 128)**

- Output model: sbert-mdistilbertV2.1-distil

- Training time: 17h on 1 GPU (9GB of 24GB used)

- See [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sbert-distillaton.ipynb) for the training code.
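
The card does not state which loss was used for the distillation step. A common choice for teacher-student distillation of sentence embeddings on a parallel corpus is a mean squared error between the teacher's embedding of a sentence and the student's embeddings of that sentence and its translation. The sketch below shows only that loss in plain Python, as an assumption rather than a description of the linked notebook; the vectors are made up.

```python
def mse_distillation_loss(student_embeddings, teacher_embeddings):
    """Mean squared error over all embedding dimensions in the batch."""
    total, count = 0.0, 0
    for s_vec, t_vec in zip(student_embeddings, teacher_embeddings):
        for s, t in zip(s_vec, t_vec):
            total += (s - t) ** 2
            count += 1
    return total / count


# Hypothetical 2-dimensional embeddings for one English-Korean sentence pair.
teacher_en = [[1.0, 2.0]]
student_en = [[1.0, 2.0]]  # already matches the teacher
student_ko = [[0.0, 0.0]]  # still far from the teacher target
loss = mse_distillation_loss(student_en, teacher_en) + mse_distillation_loss(student_ko, teacher_en)
print(loss)
```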

**4. STS training**

<br>=> Trains the Sentence-BERT model on STS.

- Input model: sbert-mdistilbertV2.1-distil

- Corpus: korsts (5,749) + kluestsV1.1 (11,668) + stsb_multi_mt (5,749) + mteb/sickr-sts (9,927) + glue stsb (5,749) (total: 38,842)

- Hyperparameters: **learning rate: 3e-5, epochs: 1200, batch size: 128, max_token_len: 256**

- Output model: moco-sentencedistilbertV2.1

- Training time: 12h on 1 GPU (16.1GB of 24GB used)

- Evaluation (cosine Spearman): **0.839** (corpus: korsts (tune_test.tsv))

- See [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sentece-bert-sts.ipynb) for the training code.

<br>For details on how the model was built, see [here](https://github.com/kobongsoo/BERT/tree/master).

**Config**:

```

{

"_name_or_path": "../../data11/model/sbert/sbert-mdistilbertV2.1-distil",

"activation": "gelu",

"architectures": [

"DistilBertModel"

],

"attention_dropout": 0.1,

"dim": 768,

"dropout": 0.1,

"hidden_dim": 3072,

"initializer_range": 0.02,

"max_position_embeddings": 512,

"model_type": "distilbert",

"n_heads": 12,

"n_layers": 6,

"output_past": true,

"pad_token_id": 0,

"qa_dropout": 0.1,

"seq_classif_dropout": 0.2,

"sinusoidal_pos_embds": false,

"tie_weights_": true,

"torch_dtype": "float32",

"transformers_version": "4.21.2",

"vocab_size": 152537

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## tokenizer_config

```

{

"cls_token": "[CLS]",

"do_basic_tokenize": true,

"do_lower_case": false,

"mask_token": "[MASK]",

"max_len": 128,

"name_or_path": "../../data11/model/sbert/sbert-mdistilbertV2.1-distil",

"never_split": null,

"pad_token": "[PAD]",

"sep_token": "[SEP]",

"special_tokens_map_file": "../../data11/model/distilbert/mdistilbertV2.1-4/special_tokens_map.json",

"strip_accents": false,

"tokenize_chinese_chars": true,

"tokenizer_class": "DistilBertTokenizer",

"unk_token": "[UNK]"

}

```

## sentence_bert_config

```

{

"max_seq_length": 256,

"do_lower_case": false

}

```

## config_sentence_transformers

```

{

"__version__": {

"sentence_transformers": "2.2.0",

"transformers": "4.21.2",

"pytorch": "1.10.1"

}

}

```

## Citing & Authors

<!--- Describe where people can find more information -->

bongsoo

|

bongsoo/moco-sentencebertV2.0

|

bongsoo

| 2022-09-28T05:09:20Z | 4 | 1 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"ko",

"en",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-09-19T04:15:36Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- ko

- en

widget:

source_sentence: "대한민국의 수도는?"

sentences:

- "서울특별시는 한국이 정치,경제,문화 중심 도시이다."

- "부산은 대한민국의 제2의 도시이자 최대의 해양 물류 도시이다."

- "제주도는 대한민국에서 유명한 관광지이다"

- "Seoul is the capital of Korea"

- "울산광역시는 대한민국 남동부 해안에 있는 광역시이다"

---

# moco-sentencebertV2.0

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

- This model was built by converting the [bongsoo/mbertV2.0](https://huggingface.co/bongsoo/mbertV2.0) MLM model

<br>into a sentence-BERT model and then additionally training it with STS teacher-student distillation.

- **vocab: 152,537 entries** (32,989 new vocab entries added to the original 119,548)

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence_transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('bongsoo/moco-sentencebertV2.0')

embeddings = model.encode(sentences)

print(embeddings)

# Compute the cosine score with sklearn

# => the input embeddings must be 2D, e.g. shape (1, 768).

from sklearn.metrics.pairwise import paired_cosine_distances, paired_euclidean_distances, paired_manhattan_distances

cosine_scores = 1 - (paired_cosine_distances(embeddings[0].reshape(1,-1), embeddings[1].reshape(1,-1)))

print(f'*cosine_score:{cosine_scores[0]}')

```

#### Outputs

```

[[ 0.16649279 -0.2933038 -0.00391259 ... 0.00720964 0.18175027 -0.21052675]

[ 0.10106096 -0.11454111 -0.00378215 ... -0.009032 -0.2111504 -0.15030429]]

*cosine_score:0.3352515697479248

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

- Mean pooling (mean_pooling) is used. (See also [CLS pooling](https://huggingface.co/sentence-transformers/bert-base-nli-cls-token) and [max pooling](https://huggingface.co/sentence-transformers/bert-base-nli-max-tokens).)

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

    token_embeddings = model_output[0] #First element of model_output contains all token embeddings

    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('bongsoo/moco-sentencebertV2.0')

model = AutoModel.from_pretrained('bongsoo/moco-sentencebertV2.0')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

# Compute the cosine score with sklearn

# => the input embeddings must be 2D, e.g. shape (1, 768).

from sklearn.metrics.pairwise import paired_cosine_distances, paired_euclidean_distances, paired_manhattan_distances

cosine_scores = 1 - (paired_cosine_distances(sentence_embeddings[0].reshape(1,-1), sentence_embeddings[1].reshape(1,-1)))

print(f'*cosine_score:{cosine_scores[0]}')

```

#### Outputs

```

Sentence embeddings:

tensor([[ 0.1665, -0.2933, -0.0039, ..., 0.0072, 0.1818, -0.2105],

[ 0.1011, -0.1145, -0.0038, ..., -0.0090, -0.2112, -0.1503]])

*cosine_score:0.3352515697479248

```
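To make the masking in `mean_pooling` concrete, here is a dependency-free sketch of the same computation on plain Python lists for a single sentence. The function name `masked_mean` and the toy values are illustrative assumptions, not part of the model's code.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0."""
    dim = len(token_embeddings[0])
    sums = [0.0] * dim
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            for i, value in enumerate(vec):
                sums[i] += value
    # Same guard as torch.clamp(..., min=1e-9): avoid dividing by zero.
    count = max(float(sum(attention_mask)), 1e-9)
    return [s / count for s in sums]


# Two real tokens followed by one padding token (mask 0).
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

The padding vector does not affect the result, which is why the attention mask has to be part of the average.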

## Evaluation Results

<!--- Describe how your model was evaluated -->

- Performance was measured on the following Korean (kor) and English (en) evaluation corpora:

<br> Korean: **korsts (1,379 sentence pairs)** and **klue-sts (519 sentence pairs)**

<br> English: [stsb_multi_mt](https://huggingface.co/datasets/stsb_multi_mt) (1,376 sentence pairs) and [glue:stsb](https://huggingface.co/datasets/glue/viewer/stsb/validation) (1,500 sentence pairs)

- Models are compared on the **cosin.spearman** metric (Spearman correlation of cosine similarities).

- See the evaluation code [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sbert-test.ipynb)

|Model |korsts|klue-sts|korsts+klue-sts|stsb_multi_mt|glue(stsb)|

|:--------|------:|--------:|--------------:|------------:|-----------:|

|distiluse-base-multilingual-cased-v2|0.747|0.785|0.577|0.807|0.819|

|paraphrase-multilingual-mpnet-base-v2|0.820|0.799|0.711|0.868|0.890|

|bongsoo/sentencedistilbertV1.2|0.819|0.858|0.630|0.837|0.873|

|bongsoo/moco-sentencedistilbertV2.0|0.812|0.847|0.627|0.837|0.877|

|bongsoo/moco-sentencebertV2.0|0.824|0.841|0.635|0.843|0.879|
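The scores in the table above are Spearman correlations between predicted cosine similarities and gold similarity labels. The following is a minimal pure-Python sketch of that metric, assuming average ranks for ties. The toy embeddings and gold scores are made up for illustration, and in practice a library routine such as `scipy.stats.spearmanr` would normally be used instead.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average
        i = j + 1
    return result


def spearman(x, y):
    """Pearson correlation of the two rank vectors."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Toy data: embedding pairs and hypothetical gold similarity labels.
pairs = [([1.0, 0.0], [1.0, 0.1]), ([1.0, 0.0], [0.5, 0.5]), ([1.0, 0.0], [0.0, 1.0])]
gold = [4.8, 2.5, 0.2]
predicted = [cosine(u, v) for u, v in pairs]
print(spearman(predicted, gold))  # close to 1.0: the two rankings agree
```

Because only ranks matter, the metric does not depend on the scale of the gold labels (0 to 5 for STS) or of the cosine scores.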

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**1. MLM training**

- Input model: bert-base-multilingual-cased

- Corpus: training: bongsoo/moco-corpus-kowiki2022 (7.6M), evaluation: bongsoo/bongevalsmall

- HyperParameter: LearningRate: 5e-5, epochs: 8, batchsize: 32, max_token_len: 128

- vocab: 152,537 entries (32,989 new vocab entries added to the original 119,548)

- Output model: mbertV2.0 (size: 813MB)

- Training time: 90h/1GPU (24GB/19.6GB use)

- Loss: training loss: 2.258400, evaluation loss: 3.102096, perplexity: 19.78158 (bong_eval: 1,500)

- See the training code [here](https://github.com/kobongsoo/BERT/blob/master/bert/bert-MLM-Trainer-V1.2.ipynb)

**2. STS training**

=> Converts BERT into a sentence-BERT model.

- Input model: mbertV2.0

- Corpus: korsts + kluestsV1.1 + stsb_multi_mt + mteb/sickr-sts (total: 33,093)

- HyperParameter: LearningRate: 3e-5, epochs: 200, batchsize: 32, max_token_len: 128

- Output model: sbert-mbertV2.0 (size: 813MB)

- Training time: 9h20m/1GPU (24GB/9.0GB use)

- Evaluation (cosin_spearman): 0.799 (corpus: korsts(tune_test.tsv))

- See the training code [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sentece-bert-sts.ipynb)

**3. Distillation training**

- Student model: sbert-mbertV2.0

- Teacher model: paraphrase-multilingual-mpnet-base-v2

- Corpus: en_ko_train.tsv (Korean-English social-science parallel corpus: 1.1M)

- HyperParameter: LearningRate: 5e-5, epochs: 40, batchsize: 128, max_token_len: 128

- Output model: sbert-mlbertV2.0-distil

- Training time: 17h/1GPU (24GB/18.6GB use)

- See the training code [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sbert-distillaton.ipynb)
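The card does not state which loss the distillation step uses. As a rough sketch, assuming the mean-squared-error objective commonly used for multilingual sentence-embedding distillation on a parallel corpus, the student is trained so that its embeddings of both the source sentence and its translation match the teacher's embedding of the source sentence. The function names and the 3-dimensional vectors below are hypothetical.

```python
def mse(u, v):
    """Mean squared error between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)


def distillation_loss(teacher_src, student_src, student_tgt):
    """Pull both student embeddings toward the teacher's source embedding."""
    return mse(student_src, teacher_src) + mse(student_tgt, teacher_src)


# Hypothetical embeddings for one English-Korean sentence pair.
teacher_en = [0.2, -0.1, 0.4]
student_en = [0.2, -0.1, 0.4]   # already matches the teacher
student_ko = [0.5, -0.1, 0.4]   # off by 0.3 in the first dimension
print(distillation_loss(teacher_en, student_en, student_ko))  # about 0.03
```

Under this assumption the loss reaches zero only when the student places a sentence and its translation at the same point as the teacher, which is what aligns the two languages in one vector space.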

**4. STS training**

=> The sentence-BERT model is trained on STS.

- Input model: sbert-mlbertV2.0-distil

- Corpus: korsts(5,749) + kluestsV1.1(11,668) + stsb_multi_mt(5,749) + mteb/sickr-sts(9,927) + glue stsb(5,749) (total: 38,842)

- HyperParameter: LearningRate: 3e-5, epochs: 800, batchsize: 64, max_token_len: 128

- Output model: moco-sentencebertV2.0

- Training time: 25h/1GPU (24GB/13GB use)

- See the training code [here](https://github.com/kobongsoo/BERT/blob/master/sbert/sentece-bert-sts.ipynb)

<br>For details on how the model was built, see [here](https://github.com/kobongsoo/BERT/tree/master).

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 1035 with parameters:

```

{'batch_size': 32, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```
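The DataLoader length of 1035 at batch size 32 appears to be consistent with the 33,093-pair corpus listed for the first STS step. That link is an inference from the numbers, not something the card states. A quick arithmetic check using the per-corpus sizes quoted in this card:

```python
import math

# Corpus sizes quoted in the training steps of this card.
sizes = {"korsts": 5749, "kluestsV1.1": 11668, "stsb_multi_mt": 5749, "mteb/sickr-sts": 9927}
total = sum(sizes.values())
batches = math.ceil(total / 32)  # the last, partial batch still counts
print(total, batches)  # 33093 1035
```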

**Config**:

```

{

"_name_or_path": "../../data11/model/sbert/sbert-mbertV2.0-distil",

"architectures": [

"BertModel"

],

"attention_probs_dropout_prob": 0.1,

"classifier_dropout": null,

"directionality": "bidi",

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"initializer_range": 0.02,

"intermediate_size": 3072,

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_hidden_layers": 12,

"pad_token_id": 0,

"pooler_fc_size": 768,

"pooler_num_attention_heads": 12,

"pooler_num_fc_layers": 3,

"pooler_size_per_head": 128,

"pooler_type": "first_token_transform",

"position_embedding_type": "absolute",

"torch_dtype": "float32",

"transformers_version": "4.21.2",

"type_vocab_size": 2,

"use_cache": true,

"vocab_size": 152537

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

bongsoo

|

bkim12/t5-small-finetuned-eli5

|

bkim12

| 2022-09-28T04:00:37Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:eli5",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-27T22:23:29Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- eli5

metrics:

- rouge

model-index:

- name: t5-small-finetuned-eli5

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: eli5

type: eli5

config: LFQA_reddit

split: train_eli5

args: LFQA_reddit

metrics:

- name: Rouge1

type: rouge

value: 13.0163

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-eli5

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the eli5 dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6782

- Rouge1: 13.0163

- Rouge2: 1.9263

- Rougel: 10.484

- Rougelsum: 11.8234

- Gen Len: 18.9951

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP
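For readers unfamiliar with `lr_scheduler_type: linear`, the sketch below shows the usual shape of such a schedule: optional linear warmup followed by linear decay to zero. It assumes no warmup steps, since the card lists none, and uses the 17040 training steps reported in the results table. The helper name `linear_lr` is illustrative and is not the Trainer's own implementation.

```python
def linear_lr(step, base_lr, total_steps, warmup_steps=0):
    """Linear warmup (optional) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Values from this card: learning_rate 2e-05 and 17040 steps in the single epoch.
for step in (0, 8520, 17040):
    print(step, linear_lr(step, 2e-05, 17040))
```

Under these assumptions the learning rate starts at 2e-05, is halved at the midpoint, and reaches zero at the final step.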

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:------:|:------:|:---------:|:-------:|

| 3.8841 | 1.0 | 17040 | 3.6782 | 13.0163 | 1.9263 | 10.484 | 11.8234 | 18.9951 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1

- Datasets 2.5.1

- Tokenizers 0.12.1

|

underoohcf/finetuning-sentiment-model-3000-samples

|

underoohcf

| 2022-09-28T02:54:08Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-28T02:41:59Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: train

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.87

- name: F1

type: f1

value: 0.8695652173913044

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2983

- Accuracy: 0.87

- F1: 0.8696

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

|

helloway/test_model

|

helloway

| 2022-09-28T02:13:43Z | 0 | 0 | null |

[

"image-classification",

"license:apache-2.0",

"region:us"

] |

image-classification

| 2022-09-28T02:03:58Z |

---

license: apache-2.0

tags:

- image-classification

---

|

sd-concepts-library/sanguo-guanyu

|

sd-concepts-library

| 2022-09-28T02:10:40Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-28T02:10:28Z |

---

license: mit

---

### sanguo-guanyu on Stable Diffusion

This is the `<sanguo-guanyu>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

erich-hf/ml-agents-pyramids

|

erich-hf

| 2022-09-28T02:07:28Z | 5 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] |

reinforcement-learning

| 2022-09-28T02:07:19Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: erich-hf/ml-agents-pyramids

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

akira0402/xlm-roberta-base-finetuned-panx-de

|

akira0402

| 2022-09-28T00:54:17Z | 117 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-27T07:20:10Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

config: PAN-X.de

split: train

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.8629724353509519

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1380

- F1: 0.8630

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2625 | 1.0 | 525 | 0.1667 | 0.8208 |

| 0.1281 | 2.0 | 1050 | 0.1361 | 0.8510 |

| 0.0809 | 3.0 | 1575 | 0.1380 | 0.8630 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

crumb/genshin-stable-inversion

|

crumb

| 2022-09-27T22:52:43Z | 0 | 2 | null |

[

"stable-diffusion",

"text-to-image",

"en",

"license:bigscience-bloom-rail-1.0",

"region:us"

] |

text-to-image

| 2022-09-27T02:21:25Z |

---

language:

- en

tags:

- stable-diffusion

- text-to-image

license: bigscience-bloom-rail-1.0

inference: false

---

project that probably won't lead to anything useful but is still interesting (Less VRAM requirement than finetuning Stable Diffusion, faster if you have all the images downloaded, less space taken up by the models since you only need CLIP)

a notebook for producing your own "stable inversions" is included in this repo but I wouldn't recommend doing so (they suck). It works on Colab free tier though.

[link to notebook for you to download](https://huggingface.co/crumb/genshin-stable-inversion/blob/main/stable_inversion%20(1).ipynb)

how you can load this into a diffusers-based notebook like [Doohickey](https://github.com/aicrumb/doohickey) might look something like this

```python

import torch

from huggingface_hub import hf_hub_download

stable_inversion = "user/my-stable-inversion" #@param {type:"string"}

inversion_path = hf_hub_download(repo_id=stable_inversion, filename="token_embeddings.pt")

# assumes `text_encoder` is the CLIP text encoder already loaded by the notebook

text_encoder.text_model.embeddings.token_embedding.weight = torch.load(inversion_path)

```

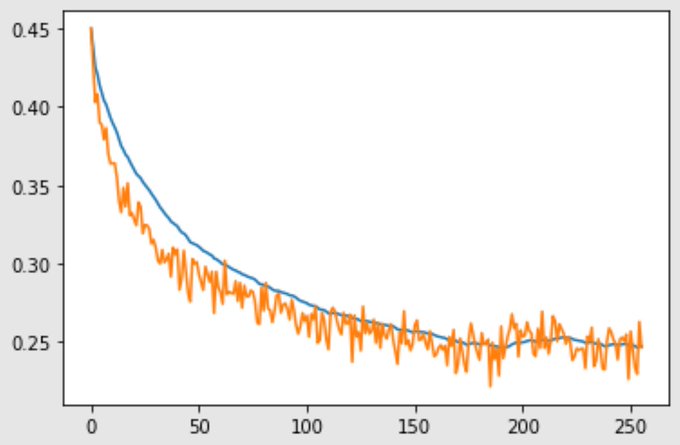

it was trained on 1024 images matching the 'genshin_impact' tag on safebooru, epochs 1 and 2 had the model being fed the full captions, epoch 3 had 50% of the tags in the caption, and epoch 4 had 25% of the tags in the caption. Learning rate was 1e-3 and the loss curve looked like this

Samples from this finetuned inversion for the prompt "beidou_(genshin_impact)" using just the 1-4 Stable Diffusion model

Sample for the same prompt BEFORE finetuning (matches seeds with first finetuned sample)
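the caption schedule described above (full captions in epochs 1 and 2, 50% of the tags in epoch 3, 25% in epoch 4) could be sketched roughly like this. the card doesn't say how the tags were picked, so this assumes comma-separated tags and uniform random sampling; `subsample_tags` and the example caption are made up for illustration

```python
import random


def subsample_tags(caption, keep_fraction, rng):
    """Keep a random subset of the comma-separated tags in a caption."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    keep = max(1, round(len(tags) * keep_fraction))
    kept = set(rng.sample(range(len(tags)), keep))
    # Preserve the original tag order.
    return ", ".join(t for i, t in enumerate(tags) if i in kept)


# Fraction of tags shown to the model in epochs 1-4.
schedule = {1: 1.0, 2: 1.0, 3: 0.5, 4: 0.25}
rng = random.Random(0)
caption = "1girl, beidou_(genshin_impact), eyepatch, long_hair"  # hypothetical caption
for epoch, fraction in schedule.items():
    print(epoch, subsample_tags(caption, fraction, rng))
```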

|

ShadowTwin41/distilbert-base-uncased-finetuned-squad-d5716d28

|

ShadowTwin41

| 2022-09-27T21:50:12Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"fill-mask",

"question-answering",

"en",

"dataset:squad",

"arxiv:1910.01108",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-09-27T21:46:09Z |

---

language:

- en

thumbnail: https://github.com/karanchahal/distiller/blob/master/distiller.jpg

tags:

- question-answering

license: apache-2.0

datasets:

- squad

metrics:

- squad

---

# DistilBERT with a second step of distillation

## Model description

This model replicates the "DistilBERT (D)" model from Table 2 of the [DistilBERT paper](https://arxiv.org/pdf/1910.01108.pdf). In this approach, a DistilBERT student is fine-tuned on SQuAD v1.1, but with a BERT model (also fine-tuned on SQuAD v1.1) acting as a teacher for a second step of task-specific distillation.

In this version, the following pre-trained models were used:

* Student: `distilbert-base-uncased`

* Teacher: `lewtun/bert-base-uncased-finetuned-squad-v1`

## Training data

This model was trained on the SQuAD v1.1 dataset which can be obtained from the `datasets` library as follows:

```python

from datasets import load_dataset

squad = load_dataset('squad')

```

## Training procedure

## Eval results

| | Exact Match | F1 |

|------------------|-------------|------|

| DistilBERT paper | 79.1 | 86.9 |

| Ours | 78.4 | 86.5 |

The scores were calculated using the `squad` metric from `datasets`.
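For reference, Exact Match and F1 for SQuAD-style answers are computed on normalized answer strings. The sketch below follows the usual normalization (lowercasing, removing punctuation and English articles, collapsing whitespace) for a single prediction and a single reference. It is an illustration rather than the `squad` metric itself, which also takes the maximum over several reference answers and reports averages scaled to 0-100.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, truth):
    return float(normalize(prediction) == normalize(truth))


def f1_score(prediction, truth):
    """Token-level F1 between the normalized prediction and reference."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower"))  # 1.0
print(f1_score("in Paris, France", "Paris"))            # about 0.5
```

F1 gives partial credit for overlapping tokens, which is why it is higher than Exact Match in the table above.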

### BibTeX entry and citation info

```bibtex

@misc{sanh2020distilbert,

title={DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter},

author={Victor Sanh and Lysandre Debut and Julien Chaumond and Thomas Wolf},

year={2020},

eprint={1910.01108},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

sd-concepts-library/blue-haired-boy

|

sd-concepts-library

| 2022-09-27T21:48:43Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-27T21:48:29Z |

---

license: mit

---

### Blue-Haired-Boy on Stable Diffusion

This is the `<Blue-Haired-Boy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

ask4rizwan/FirstModel

|

ask4rizwan

| 2022-09-27T21:14:54Z | 0 | 0 | null |

[

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2022-09-27T21:14:54Z |

---

license: bigscience-bloom-rail-1.0

---

|

DeepaKrish/distilbert-base-uncased-finetuned

|

DeepaKrish

| 2022-09-27T20:43:00Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-26T23:59:34Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1137

- Accuracy: 0.9733

- F1: 0.9743

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.0868 | 1.0 | 1370 | 0.1098 | 0.9729 | 0.9738 |

| 0.0598 | 2.0 | 2740 | 0.1137 | 0.9733 | 0.9743 |

| 0.0383 | 3.0 | 4110 | 0.1604 | 0.9721 | 0.9731 |

| 0.0257 | 4.0 | 5480 | 0.1671 | 0.9717 | 0.9729 |

| 0.016 | 5.0 | 6850 | 0.1904 | 0.9709 | 0.9720 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.0

- Datasets 2.5.1

- Tokenizers 0.10.3

|

Kevin123/t5-small-finetuned-xsum

|

Kevin123

| 2022-09-27T20:06:05Z | 112 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-27T20:02:54Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: t5-small-finetuned-xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-xsum

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the xsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.12.3

- Pytorch 1.8.1+cu102

- Datasets 1.18.3

- Tokenizers 0.10.3

|

marktrovinger/q-FrozenLake-v1-4x4-noSlippery

|

marktrovinger

| 2022-09-27T19:50:25Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-27T19:50:17Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

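import gym

# load_from_hub and evaluate_agent are helper functions that are not defined in this card

model = load_from_hub(repo_id="marktrovinger/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")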

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
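The card does not show what `evaluate_agent` does. A minimal sketch of one plausible version follows, assuming it runs the greedy policy read from the Q-table and averages the episode rewards. To stay self-contained it uses a hypothetical three-cell corridor in place of a gym environment; every name below is illustrative.

```python
def greedy_action(qtable, state):
    """Index of the highest-valued action for this state."""
    row = qtable[state]
    return max(range(len(row)), key=lambda a: row[a])


def evaluate_greedy(qtable, step_fn, start_state, max_steps, n_episodes):
    """Mean total reward when always taking the greedy action."""
    total = 0.0
    for _ in range(n_episodes):
        state = start_state
        for _ in range(max_steps):
            state, reward, done = step_fn(state, greedy_action(qtable, state))
            total += reward
            if done:
                break
    return total / n_episodes


# Hypothetical 3-cell corridor: action 1 moves right, action 0 stays put;
# reaching cell 2 ends the episode with reward 1.
def corridor_step(state, action):
    next_state = min(state + action, 2)
    done = next_state == 2
    return next_state, (1.0 if done else 0.0), done


toy_qtable = [[0.0, 0.9], [0.0, 1.0], [0.0, 0.0]]
print(evaluate_greedy(toy_qtable, corridor_step, 0, 10, 5))  # 1.0
```

In a deterministic environment such as the non-slippery FrozenLake, a greedy policy gives the same return every episode, which matches the reported mean reward of 1.00 +/- 0.00.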

|

IIIT-L/indic-bert-finetuned-TRAC-DS

|

IIIT-L

| 2022-09-27T19:02:06Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"albert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-27T17:06:18Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: indic-bert-finetuned-TRAC-DS

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# indic-bert-finetuned-TRAC-DS

This model is a fine-tuned version of [ai4bharat/indic-bert](https://huggingface.co/ai4bharat/indic-bert) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9922

- Accuracy: 0.5825

- Precision: 0.5493

- Recall: 0.5412

- F1: 0.5428

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 32

- eval_batch_size: 32

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 1.0755 | 1.99 | 612 | 1.0346 | 0.5057 | 0.4072 | 0.4554 | 0.3806 |

| 1.0175 | 3.99 | 1224 | 1.0096 | 0.5678 | 0.6135 | 0.5011 | 0.4422 |

| 0.9974 | 5.98 | 1836 | 1.0010 | 0.5776 | 0.5637 | 0.5140 | 0.4799 |

| 0.9812 | 7.97 | 2448 | 0.9960 | 0.5694 | 0.5426 | 0.5283 | 0.5298 |

| 0.9675 | 9.97 | 3060 | 0.9956 | 0.5776 | 0.5565 | 0.5422 | 0.5442 |

| 0.9542 | 11.96 | 3672 | 0.9925 | 0.5882 | 0.5601 | 0.5420 | 0.5419 |

| 0.944 | 13.95 | 4284 | 0.9907 | 0.5866 | 0.5525 | 0.5441 | 0.5454 |

| 0.9347 | 15.95 | 4896 | 0.9921 | 0.5858 | 0.5527 | 0.5441 | 0.5456 |

| 0.9271 | 17.94 | 5508 | 0.9906 | 0.5931 | 0.5596 | 0.5482 | 0.5490 |

| 0.9236 | 19.93 | 6120 | 0.9922 | 0.5825 | 0.5493 | 0.5412 | 0.5428 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.1+cu111

- Datasets 2.3.2

- Tokenizers 0.12.1

|

VietAI/vit5-base

|

VietAI

| 2022-09-27T18:09:26Z | 1,798 | 11 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"summarization",

"translation",

"question-answering",

"vi",

"dataset:cc100",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-14T16:36:06Z |

---

language: vi

datasets:

- cc100

tags:

- summarization

- translation

- question-answering

license: mit

---

# ViT5-base

State-of-the-art pretrained Transformer-based encoder-decoder model for Vietnamese.

## How to use

For more details, check out [our GitHub repo](https://github.com/vietai/ViT5).

[A fine-tuning example can be found here](https://github.com/vietai/ViT5/tree/main/finetunning_huggingface).

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("VietAI/vit5-base")

model = AutoModelForSeq2SeqLM.from_pretrained("VietAI/vit5-base")

model.cuda()

```

## Citation

```

@inproceedings{phan-etal-2022-vit5,

title = "{V}i{T}5: Pretrained Text-to-Text Transformer for {V}ietnamese Language Generation",

author = "Phan, Long and Tran, Hieu and Nguyen, Hieu and Trinh, Trieu H.",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop",

year = "2022",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-srw.18",

pages = "136--142",

}

```

|

sd-concepts-library/plen-ki-mun

|

sd-concepts-library

| 2022-09-27T17:47:15Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-27T17:47:01Z |

---

license: mit

---

### Plen-Ki-Mun on Stable Diffusion

This is the `<plen-ki-mun>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

anas-awadalla/t5-small-few-shot-k-1024-finetuned-squad-seed-4

|

anas-awadalla

| 2022-09-27T16:26:18Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-27T16:10:01Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: t5-small-few-shot-k-1024-finetuned-squad-seed-4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-few-shot-k-1024-finetuned-squad-seed-4

This model is a fine-tuned version of [google/t5-v1_1-small](https://huggingface.co/google/t5-v1_1-small) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 35.0
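As a rough sketch, the hyperparameters above could be written as keyword arguments for `transformers.TrainingArguments`. The mapping to argument names is an assumption based on how the Trainer usually reports these values on a single device; `output_dir` is a hypothetical placeholder. The dictionary is kept as plain Python so it can be inspected without loading the library.

```python
# Hyperparameters from the list above, keyed by the TrainingArguments
# parameter each one is assumed to correspond to (single-device training).
training_kwargs = {
    "output_dir": "t5-small-few-shot-k-1024-finetuned-squad-seed-4",  # hypothetical path
    "learning_rate": 2e-05,
    "per_device_train_batch_size": 4,
    "per_device_eval_batch_size": 8,
    "seed": 4,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-08,
    "lr_scheduler_type": "constant",
    "num_train_epochs": 35.0,
}


def describe(kwargs):
    """Return one 'name=value' line per hyperparameter, sorted by name."""
    return [f"{name}={value}" for name, value in sorted(kwargs.items())]


if __name__ == "__main__":
    print("\n".join(describe(training_kwargs)))
```

Passing the dictionary as `TrainingArguments(**training_kwargs)` should give a comparable configuration, provided the installed Transformers version accepts these argument names.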

### Training results

### Framework versions

- Transformers 4.20.0.dev0

- PyTorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.11.6

|

anas-awadalla/t5-small-few-shot-k-1024-finetuned-squad-seed-2

|

anas-awadalla

| 2022-09-27T16:08:21Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-27T15:51:40Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: t5-small-few-shot-k-1024-finetuned-squad-seed-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-few-shot-k-1024-finetuned-squad-seed-2

This model is a fine-tuned version of [google/t5-v1_1-small](https://huggingface.co/google/t5-v1_1-small) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 35.0
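For a sense of scale, the number of optimizer steps implied by these settings can be estimated. The sketch assumes that `k-1024` in the model name means 1,024 training examples, that training ran on a single device, and that no gradient accumulation was used; none of these is stated in the card. The helper name `total_optimizer_steps` is hypothetical.

```python
import math


def total_optimizer_steps(num_examples, batch_size, num_epochs):
    """Estimate optimizer steps, counting a final partial batch as one step."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * int(num_epochs)


# 1024 examples is an assumption read off the model name, not the card.
steps = total_optimizer_steps(num_examples=1024, batch_size=4, num_epochs=35.0)
print(steps)  # 256 steps per epoch * 35 epochs = 8960
```

With a constant learning-rate schedule, each of those steps would use the same learning rate of 2e-05.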

### Training results

### Framework versions

- Transformers 4.20.0.dev0

- PyTorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.11.6

|

anas-awadalla/t5-small-few-shot-k-1024-finetuned-squad-seed-0

|

anas-awadalla

| 2022-09-27T15:49:41Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation