modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-11 12:33:28

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-11 12:33:10

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

huggingtweets/sun_soony-unjaded_jade-veganhollyg

|

huggingtweets

| 2022-06-08T21:45:56Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-05-30T21:50:31Z |

---

language: en

thumbnail: http://www.huggingtweets.com/sun_soony-unjaded_jade-veganhollyg/1654724750416/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1105554414427885569/XkyfcoMJ_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1290809762637131776/uwGH2mYu_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/900359049061036032/LYf3Ouv__400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Jade Bowler & soony & Holly Gabrielle</div>

<div style="text-align: center; font-size: 14px;">@sun_soony-unjaded_jade-veganhollyg</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Jade Bowler & soony & Holly Gabrielle.

| Data | Jade Bowler | soony | Holly Gabrielle |

| --- | --- | --- | --- |

| Tweets downloaded | 3170 | 815 | 1802 |

| Retweets | 121 | 260 | 276 |

| Short tweets | 120 | 47 | 253 |

| Tweets kept | 2929 | 508 | 1273 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/afi2j4p2/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @sun_soony-unjaded_jade-veganhollyg's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/3uiqxuec) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/3uiqxuec/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/sun_soony-unjaded_jade-veganhollyg')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

huggingtweets/neiltyson

|

huggingtweets

| 2022-06-08T21:26:48Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: http://www.huggingtweets.com/neiltyson/1654723603504/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/74188698/NeilTysonOriginsA-Crop_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Neil deGrasse Tyson</div>

<div style="text-align: center; font-size: 14px;">@neiltyson</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Neil deGrasse Tyson.

| Data | Neil deGrasse Tyson |

| --- | --- |

| Tweets downloaded | 3234 |

| Retweets | 10 |

| Short tweets | 87 |

| Tweets kept | 3137 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1v949iob/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @neiltyson's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/kjzq9tjy) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/kjzq9tjy/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/neiltyson')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

huggingtweets/kentcdodds-richardbranson-sikiraamer

|

huggingtweets

| 2022-06-08T21:08:46Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-08T21:04:40Z |

---

language: en

thumbnail: http://www.huggingtweets.com/kentcdodds-richardbranson-sikiraamer/1654722520391/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1496777835062648833/3Ao6Xb2a_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1529905780542959616/Ibwrp7VJ_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1410740591483293697/tRbW1XoV_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Amer Sikira & Kent C. Dodds 💿 & Richard Branson</div>

<div style="text-align: center; font-size: 14px;">@kentcdodds-richardbranson-sikiraamer</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Amer Sikira & Kent C. Dodds 💿 & Richard Branson.

| Data | Amer Sikira | Kent C. Dodds 💿 | Richard Branson |

| --- | --- | --- | --- |

| Tweets downloaded | 3250 | 3249 | 3215 |

| Retweets | 94 | 578 | 234 |

| Short tweets | 214 | 507 | 96 |

| Tweets kept | 2942 | 2164 | 2885 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/jtwa65l2/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @kentcdodds-richardbranson-sikiraamer's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/3vt6qlgf) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/3vt6qlgf/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/kentcdodds-richardbranson-sikiraamer')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Sohaibsyed/wav2vec2-large-xls-r-300m-turkish-colab

|

Sohaibsyed

| 2022-06-08T20:48:31Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-08T16:53:05Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xls-r-300m-turkish-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-turkish-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3717

- Wer: 0.2972

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 4.0139 | 3.67 | 400 | 0.7020 | 0.7112 |

| 0.4129 | 7.34 | 800 | 0.4162 | 0.4503 |

| 0.1869 | 11.01 | 1200 | 0.4174 | 0.3959 |

| 0.1273 | 14.68 | 1600 | 0.4020 | 0.3695 |

| 0.0959 | 18.35 | 2000 | 0.4026 | 0.3545 |

| 0.0771 | 22.02 | 2400 | 0.3904 | 0.3361 |

| 0.0614 | 25.69 | 2800 | 0.3736 | 0.3127 |

| 0.0486 | 29.36 | 3200 | 0.3717 | 0.2972 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

valurank/distilroberta-propaganda-2class

|

valurank

| 2022-06-08T20:39:15Z | 11 | 3 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

license: other

tags:

- generated_from_trainer

model-index:

- name: distilroberta-propaganda-2class

results: []

---

# distilroberta-propaganda-2class

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the QCRI propaganda dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5087

- Acc: 0.7424

## Training and evaluation data

Training data is the 19-class QCRI propaganda data, with all propaganda classes collapsed to a single catch-all 'prop' class.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 12345

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 16

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Acc |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.5737 | 1.0 | 493 | 0.5998 | 0.6515 |

| 0.4954 | 2.0 | 986 | 0.5530 | 0.7080 |

| 0.4774 | 3.0 | 1479 | 0.5331 | 0.7258 |

| 0.4846 | 4.0 | 1972 | 0.5247 | 0.7339 |

| 0.4749 | 5.0 | 2465 | 0.5392 | 0.7199 |

| 0.502 | 6.0 | 2958 | 0.5124 | 0.7466 |

| 0.457 | 7.0 | 3451 | 0.5167 | 0.7432 |

| 0.4899 | 8.0 | 3944 | 0.5160 | 0.7428 |

| 0.4833 | 9.0 | 4437 | 0.5280 | 0.7339 |

| 0.5114 | 10.0 | 4930 | 0.5112 | 0.7436 |

| 0.4419 | 11.0 | 5423 | 0.5060 | 0.7525 |

| 0.4743 | 12.0 | 5916 | 0.5031 | 0.7547 |

| 0.4597 | 13.0 | 6409 | 0.5043 | 0.7517 |

| 0.4861 | 14.0 | 6902 | 0.5055 | 0.7487 |

| 0.499 | 15.0 | 7395 | 0.5091 | 0.7419 |

| 0.501 | 16.0 | 7888 | 0.5037 | 0.7521 |

| 0.4659 | 17.0 | 8381 | 0.5087 | 0.7424 |

### Framework versions

- Transformers 4.11.2

- Pytorch 1.7.1

- Datasets 1.11.0

- Tokenizers 0.10.3

|

valurank/distilroberta-proppy

|

valurank

| 2022-06-08T20:38:27Z | 9 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

license: other

tags:

- generated_from_trainer

model-index:

- name: distilroberta-proppy

results: []

---

# distilroberta-proppy

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the proppy corpus.

It achieves the following results on the evaluation set:

- Loss: 0.1838

- Acc: 0.9269

## Training and evaluation data

The training data is the [proppy corpus](https://zenodo.org/record/3271522). See [Proppy: Organizing the News

Based on Their Propagandistic Content](https://propaganda.qcri.org/papers/elsarticle-template.pdf) for details.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 12345

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 16

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Acc |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.3179 | 1.0 | 732 | 0.2032 | 0.9146 |

| 0.2933 | 2.0 | 1464 | 0.2026 | 0.9206 |

| 0.2938 | 3.0 | 2196 | 0.1849 | 0.9252 |

| 0.3429 | 4.0 | 2928 | 0.1983 | 0.9221 |

| 0.2608 | 5.0 | 3660 | 0.2310 | 0.9106 |

| 0.2562 | 6.0 | 4392 | 0.1826 | 0.9270 |

| 0.2785 | 7.0 | 5124 | 0.1954 | 0.9228 |

| 0.307 | 8.0 | 5856 | 0.2056 | 0.9200 |

| 0.28 | 9.0 | 6588 | 0.1843 | 0.9259 |

| 0.2794 | 10.0 | 7320 | 0.1782 | 0.9299 |

| 0.2868 | 11.0 | 8052 | 0.1907 | 0.9242 |

| 0.2789 | 12.0 | 8784 | 0.2031 | 0.9216 |

| 0.2827 | 13.0 | 9516 | 0.1976 | 0.9229 |

| 0.2795 | 14.0 | 10248 | 0.1866 | 0.9255 |

| 0.2895 | 15.0 | 10980 | 0.1838 | 0.9269 |

### Framework versions

- Transformers 4.11.2

- Pytorch 1.7.1

- Datasets 1.11.0

- Tokenizers 0.10.3

|

valurank/distilroberta-clickbait

|

valurank

| 2022-06-08T20:24:26Z | 257 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

license: other

tags:

- generated_from_trainer

model-index:

- name: distilroberta-clickbait

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilroberta-clickbait

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on a dataset of headlines.

It achieves the following results on the evaluation set:

- Loss: 0.0268

- Acc: 0.9963

## Training and evaluation data

The following data sources were used:

* 32k headlines classified as clickbait/not-clickbait from [kaggle](https://www.kaggle.com/amananandrai/clickbait-dataset)

* A dataset of headlines from https://github.com/MotiBaadror/Clickbait-Detection

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 12345

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 16

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Acc |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.0195 | 1.0 | 981 | 0.0192 | 0.9954 |

| 0.0026 | 2.0 | 1962 | 0.0172 | 0.9963 |

| 0.0031 | 3.0 | 2943 | 0.0275 | 0.9945 |

| 0.0003 | 4.0 | 3924 | 0.0268 | 0.9963 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.1

- Datasets 1.17.0

- Tokenizers 0.10.3

|

joniponi/TEST2ppo-LunarLander-v2

|

joniponi

| 2022-06-08T20:00:34Z | 3 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-08T20:00:02Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 253.16 +/- 21.62

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

cutten/wav2vec2-large-multilang-cv-ru-night

|

cutten

| 2022-06-08T19:58:05Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-08T14:24:42Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-multilang-cv-ru-night

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-multilang-cv-ru-night

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6617

- Wer: 0.5097

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 100

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 8.725 | 1.58 | 500 | 3.2788 | 1.0 |

| 3.1184 | 3.15 | 1000 | 2.4018 | 1.0015 |

| 1.2393 | 4.73 | 1500 | 0.6213 | 0.7655 |

| 0.6899 | 6.31 | 2000 | 0.5518 | 0.6811 |

| 0.5532 | 7.89 | 2500 | 0.5102 | 0.6467 |

| 0.4604 | 9.46 | 3000 | 0.4887 | 0.6213 |

| 0.4095 | 11.04 | 3500 | 0.4874 | 0.6042 |

| 0.3565 | 12.62 | 4000 | 0.4810 | 0.5893 |

| 0.3238 | 14.2 | 4500 | 0.5028 | 0.5890 |

| 0.3011 | 15.77 | 5000 | 0.5475 | 0.5808 |

| 0.2827 | 17.35 | 5500 | 0.5289 | 0.5720 |

| 0.2659 | 18.93 | 6000 | 0.5496 | 0.5733 |

| 0.2445 | 20.5 | 6500 | 0.5354 | 0.5737 |

| 0.2366 | 22.08 | 7000 | 0.5357 | 0.5686 |

| 0.2181 | 23.66 | 7500 | 0.5491 | 0.5611 |

| 0.2146 | 25.24 | 8000 | 0.5591 | 0.5597 |

| 0.2006 | 26.81 | 8500 | 0.5625 | 0.5631 |

| 0.1912 | 28.39 | 9000 | 0.5577 | 0.5647 |

| 0.1821 | 29.97 | 9500 | 0.5684 | 0.5519 |

| 0.1744 | 31.55 | 10000 | 0.5639 | 0.5551 |

| 0.1691 | 33.12 | 10500 | 0.5596 | 0.5425 |

| 0.1577 | 34.7 | 11000 | 0.5770 | 0.5551 |

| 0.1522 | 36.28 | 11500 | 0.5634 | 0.5560 |

| 0.1468 | 37.85 | 12000 | 0.5815 | 0.5453 |

| 0.1508 | 39.43 | 12500 | 0.6053 | 0.5490 |

| 0.1394 | 41.01 | 13000 | 0.6193 | 0.5504 |

| 0.1291 | 42.59 | 13500 | 0.5930 | 0.5424 |

| 0.1345 | 44.16 | 14000 | 0.6283 | 0.5442 |

| 0.1296 | 45.74 | 14500 | 0.6063 | 0.5560 |

| 0.1286 | 47.32 | 15000 | 0.6248 | 0.5378 |

| 0.1231 | 48.9 | 15500 | 0.6106 | 0.5405 |

| 0.1189 | 50.47 | 16000 | 0.6164 | 0.5342 |

| 0.1127 | 52.05 | 16500 | 0.6269 | 0.5359 |

| 0.112 | 53.63 | 17000 | 0.6170 | 0.5390 |

| 0.1113 | 55.21 | 17500 | 0.6489 | 0.5385 |

| 0.1023 | 56.78 | 18000 | 0.6826 | 0.5490 |

| 0.1069 | 58.36 | 18500 | 0.6147 | 0.5296 |

| 0.1008 | 59.94 | 19000 | 0.6414 | 0.5332 |

| 0.1018 | 61.51 | 19500 | 0.6454 | 0.5288 |

| 0.0989 | 63.09 | 20000 | 0.6603 | 0.5303 |

| 0.0944 | 64.67 | 20500 | 0.6350 | 0.5288 |

| 0.0905 | 66.25 | 21000 | 0.6386 | 0.5247 |

| 0.0837 | 67.82 | 21500 | 0.6563 | 0.5298 |

| 0.0868 | 69.4 | 22000 | 0.6375 | 0.5208 |

| 0.0827 | 70.98 | 22500 | 0.6401 | 0.5271 |

| 0.0797 | 72.56 | 23000 | 0.6723 | 0.5191 |

| 0.0847 | 74.13 | 23500 | 0.6610 | 0.5213 |

| 0.0818 | 75.71 | 24000 | 0.6774 | 0.5254 |

| 0.0793 | 77.29 | 24500 | 0.6543 | 0.5250 |

| 0.0758 | 78.86 | 25000 | 0.6607 | 0.5218 |

| 0.0755 | 80.44 | 25500 | 0.6599 | 0.5160 |

| 0.0722 | 82.02 | 26000 | 0.6683 | 0.5196 |

| 0.0714 | 83.6 | 26500 | 0.6941 | 0.5180 |

| 0.0684 | 85.17 | 27000 | 0.6581 | 0.5167 |

| 0.0686 | 86.75 | 27500 | 0.6651 | 0.5172 |

| 0.0712 | 88.33 | 28000 | 0.6547 | 0.5208 |

| 0.0697 | 89.91 | 28500 | 0.6555 | 0.5162 |

| 0.0696 | 91.48 | 29000 | 0.6678 | 0.5107 |

| 0.0686 | 93.06 | 29500 | 0.6630 | 0.5124 |

| 0.0671 | 94.64 | 30000 | 0.6675 | 0.5143 |

| 0.0668 | 96.21 | 30500 | 0.6602 | 0.5107 |

| 0.0666 | 97.79 | 31000 | 0.6611 | 0.5097 |

| 0.0664 | 99.37 | 31500 | 0.6617 | 0.5097 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0

- Datasets 2.2.2

- Tokenizers 0.12.1

|

renjithks/layoutlmv2-er-ner

|

renjithks

| 2022-06-08T19:37:51Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"layoutlmv2",

"token-classification",

"generated_from_trainer",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-05T15:40:30Z |

---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: layoutlmv2-er-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv2-er-ner

This model is a fine-tuned version of [renjithks/layoutlmv2-cord-ner](https://huggingface.co/renjithks/layoutlmv2-cord-ner) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1217

- Precision: 0.7810

- Recall: 0.8085

- F1: 0.7945

- Accuracy: 0.9747

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 41 | 0.5441 | 0.0 | 0.0 | 0.0 | 0.8851 |

| No log | 2.0 | 82 | 0.4660 | 0.1019 | 0.0732 | 0.0852 | 0.8690 |

| No log | 3.0 | 123 | 0.2506 | 0.4404 | 0.4828 | 0.4606 | 0.9240 |

| No log | 4.0 | 164 | 0.1725 | 0.6120 | 0.6076 | 0.6098 | 0.9529 |

| No log | 5.0 | 205 | 0.1387 | 0.7204 | 0.7245 | 0.7225 | 0.9671 |

| No log | 6.0 | 246 | 0.1237 | 0.7742 | 0.7747 | 0.7745 | 0.9722 |

| No log | 7.0 | 287 | 0.1231 | 0.7619 | 0.7554 | 0.7586 | 0.9697 |

| No log | 8.0 | 328 | 0.1199 | 0.7994 | 0.7719 | 0.7854 | 0.9738 |

| No log | 9.0 | 369 | 0.1197 | 0.7937 | 0.8113 | 0.8024 | 0.9741 |

| No log | 10.0 | 410 | 0.1284 | 0.7581 | 0.7597 | 0.7589 | 0.9690 |

| No log | 11.0 | 451 | 0.1172 | 0.7792 | 0.7848 | 0.7820 | 0.9738 |

| No log | 12.0 | 492 | 0.1192 | 0.7913 | 0.7970 | 0.7941 | 0.9743 |

| 0.1858 | 13.0 | 533 | 0.1175 | 0.7960 | 0.8006 | 0.7983 | 0.9753 |

| 0.1858 | 14.0 | 574 | 0.1184 | 0.7724 | 0.8034 | 0.7876 | 0.9740 |

| 0.1858 | 15.0 | 615 | 0.1171 | 0.7882 | 0.8142 | 0.8010 | 0.9756 |

| 0.1858 | 16.0 | 656 | 0.1195 | 0.7829 | 0.8070 | 0.7948 | 0.9745 |

| 0.1858 | 17.0 | 697 | 0.1209 | 0.7810 | 0.8006 | 0.7906 | 0.9743 |

| 0.1858 | 18.0 | 738 | 0.1241 | 0.7806 | 0.7963 | 0.7884 | 0.9740 |

| 0.1858 | 19.0 | 779 | 0.1222 | 0.7755 | 0.8027 | 0.7889 | 0.9742 |

| 0.1858 | 20.0 | 820 | 0.1217 | 0.7810 | 0.8085 | 0.7945 | 0.9747 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.9.0+cu111

- Datasets 1.18.4

- Tokenizers 0.11.6

|

kalmufti/q-Taxi-v3

|

kalmufti

| 2022-06-08T19:29:47Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-08T19:29:39Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.50 +/- 2.67

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="kalmufti/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

huggingtweets/makimasdoggy

|

huggingtweets

| 2022-06-08T19:17:06Z | 102 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-08T19:15:48Z |

---

language: en

thumbnail: http://www.huggingtweets.com/makimasdoggy/1654715821978/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1534537330014445569/ql3I-npY_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Vanser</div>

<div style="text-align: center; font-size: 14px;">@makimasdoggy</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Vanser.

| Data | Vanser |

| --- | --- |

| Tweets downloaded | 3249 |

| Retweets | 1548 |

| Short tweets | 346 |

| Tweets kept | 1355 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/66wk3fyw/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @makimasdoggy's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2di8hgps) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2di8hgps/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/makimasdoggy')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

renjithks/layoutlmv1-er-ner

|

renjithks

| 2022-06-08T18:53:25Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"layoutlm",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-08T17:45:15Z |

---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: layoutlmv1-er-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv1-er-ner

This model is a fine-tuned version of [renjithks/layoutlmv1-cord-ner](https://huggingface.co/renjithks/layoutlmv1-cord-ner) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2092

- Precision: 0.7202

- Recall: 0.7238

- F1: 0.7220

- Accuracy: 0.9639

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 41 | 0.2444 | 0.4045 | 0.3996 | 0.4020 | 0.9226 |

| No log | 2.0 | 82 | 0.1640 | 0.5319 | 0.6098 | 0.5682 | 0.9455 |

| No log | 3.0 | 123 | 0.1531 | 0.6324 | 0.6614 | 0.6466 | 0.9578 |

| No log | 4.0 | 164 | 0.1440 | 0.6927 | 0.6743 | 0.6834 | 0.9620 |

| No log | 5.0 | 205 | 0.1520 | 0.6750 | 0.6958 | 0.6853 | 0.9613 |

| No log | 6.0 | 246 | 0.1597 | 0.6840 | 0.6987 | 0.6913 | 0.9605 |

| No log | 7.0 | 287 | 0.1910 | 0.7002 | 0.6887 | 0.6944 | 0.9605 |

| No log | 8.0 | 328 | 0.1860 | 0.6834 | 0.6923 | 0.6878 | 0.9609 |

| No log | 9.0 | 369 | 0.1665 | 0.6785 | 0.7102 | 0.6940 | 0.9624 |

| No log | 10.0 | 410 | 0.1816 | 0.7016 | 0.7052 | 0.7034 | 0.9624 |

| No log | 11.0 | 451 | 0.1808 | 0.6913 | 0.7166 | 0.7038 | 0.9638 |

| No log | 12.0 | 492 | 0.2165 | 0.712 | 0.7023 | 0.7071 | 0.9628 |

| 0.1014 | 13.0 | 533 | 0.2135 | 0.6979 | 0.7109 | 0.7043 | 0.9613 |

| 0.1014 | 14.0 | 574 | 0.2154 | 0.6906 | 0.7109 | 0.7006 | 0.9612 |

| 0.1014 | 15.0 | 615 | 0.2118 | 0.6902 | 0.7016 | 0.6958 | 0.9615 |

| 0.1014 | 16.0 | 656 | 0.2091 | 0.6985 | 0.7080 | 0.7032 | 0.9623 |

| 0.1014 | 17.0 | 697 | 0.2104 | 0.7118 | 0.7123 | 0.7121 | 0.9630 |

| 0.1014 | 18.0 | 738 | 0.2081 | 0.7129 | 0.7231 | 0.7179 | 0.9638 |

| 0.1014 | 19.0 | 779 | 0.2093 | 0.7205 | 0.7231 | 0.7218 | 0.9638 |

| 0.1014 | 20.0 | 820 | 0.2092 | 0.7202 | 0.7238 | 0.7220 | 0.9639 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

skyfox/dqn-SpaceInvadersNoFrameskip-v4

|

skyfox

| 2022-06-08T18:47:18Z | 4 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-08T17:15:22Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- metrics:

- type: mean_reward

value: 767.00 +/- 378.16

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m utils.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga skyfox -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m utils.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga skyfox

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000),

('optimize_memory_usage', True),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

|

aspis/swin-base-finetuned-snacks

|

aspis

| 2022-06-08T18:43:00Z | 75 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:snacks",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-06-08T18:26:26Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- snacks

metrics:

- accuracy

model-index:

- name: swin-base-finetuned-snacks

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: snacks

type: snacks

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9455497382198953

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-base-finetuned-snacks

This model is a fine-tuned version of [microsoft/swin-base-patch4-window7-224](https://huggingface.co/microsoft/swin-base-patch4-window7-224) on the snacks dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2404

- Accuracy: 0.9455

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0044 | 1.0 | 38 | 0.2981 | 0.9309 |

| 0.0023 | 2.0 | 76 | 0.2287 | 0.9445 |

| 0.0012 | 3.0 | 114 | 0.2404 | 0.9455 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

vincentbonnet/q-Taxi-v3

|

vincentbonnet

| 2022-06-08T17:36:29Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-05-28T03:19:00Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: -99.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="vincentbonnet/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

nateraw/autoencoder-keras-rm-history-pr-review

|

nateraw

| 2022-06-08T16:58:21Z | 0 | 0 |

keras

|

[

"keras",

"tf-keras",

"region:us"

] | null | 2022-06-08T16:56:15Z |

---

library_name: keras

---

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 0.001, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

## Model Plot

<details>

<summary>View Model Plot</summary>

</details>

|

huggingtweets/ripvillage

|

huggingtweets

| 2022-06-08T16:38:52Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-08T16:35:39Z |

---

language: en

thumbnail: http://www.huggingtweets.com/ripvillage/1654706327179/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/378800000120011180/ffb093c084cfb4b60f70488a7e6355d0_400x400.jpeg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Mathurin Village</div>

<div style="text-align: center; font-size: 14px;">@ripvillage</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Mathurin Village.

| Data | Mathurin Village |

| --- | --- |

| Tweets downloaded | 3243 |

| Retweets | 118 |

| Short tweets | 335 |

| Tweets kept | 2790 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3e20ev2s/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @ripvillage's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/ecq32lhi) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/ecq32lhi/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/ripvillage')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

mmillet/distilrubert-tiny-cased-conversational-v1_finetuned_emotion_experiment_augmented_anger_fear

|

mmillet

| 2022-06-08T16:10:06Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-06-08T16:03:02Z |

---

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

model-index:

- name: distilrubert-tiny-cased-conversational-v1_finetuned_emotion_experiment_augmented_anger_fear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilrubert-tiny-cased-conversational-v1_finetuned_emotion_experiment_augmented_anger_fear

This model is a fine-tuned version of [DeepPavlov/distilrubert-tiny-cased-conversational-v1](https://huggingface.co/DeepPavlov/distilrubert-tiny-cased-conversational-v1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3760

- Accuracy: 0.8758

- F1: 0.8750

- Precision: 0.8753

- Recall: 0.8758

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=0.0001

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|

| 1.2636 | 1.0 | 69 | 1.0914 | 0.6013 | 0.5599 | 0.5780 | 0.6013 |

| 1.029 | 2.0 | 138 | 0.9180 | 0.6514 | 0.6344 | 0.6356 | 0.6514 |

| 0.904 | 3.0 | 207 | 0.8235 | 0.6827 | 0.6588 | 0.6904 | 0.6827 |

| 0.8084 | 4.0 | 276 | 0.7272 | 0.7537 | 0.7477 | 0.7564 | 0.7537 |

| 0.7242 | 5.0 | 345 | 0.6435 | 0.7860 | 0.7841 | 0.7861 | 0.7860 |

| 0.6305 | 6.0 | 414 | 0.5543 | 0.8173 | 0.8156 | 0.8200 | 0.8173 |

| 0.562 | 7.0 | 483 | 0.4860 | 0.8392 | 0.8383 | 0.8411 | 0.8392 |

| 0.5042 | 8.0 | 552 | 0.4474 | 0.8528 | 0.8514 | 0.8546 | 0.8528 |

| 0.4535 | 9.0 | 621 | 0.4213 | 0.8580 | 0.8579 | 0.8590 | 0.8580 |

| 0.4338 | 10.0 | 690 | 0.4106 | 0.8591 | 0.8578 | 0.8605 | 0.8591 |

| 0.4026 | 11.0 | 759 | 0.4064 | 0.8622 | 0.8615 | 0.8632 | 0.8622 |

| 0.3861 | 12.0 | 828 | 0.3874 | 0.8737 | 0.8728 | 0.8733 | 0.8737 |

| 0.3709 | 13.0 | 897 | 0.3841 | 0.8706 | 0.8696 | 0.8701 | 0.8706 |

| 0.3592 | 14.0 | 966 | 0.3841 | 0.8716 | 0.8709 | 0.8714 | 0.8716 |

| 0.3475 | 15.0 | 1035 | 0.3834 | 0.8737 | 0.8728 | 0.8732 | 0.8737 |

| 0.3537 | 16.0 | 1104 | 0.3805 | 0.8727 | 0.8717 | 0.8722 | 0.8727 |

| 0.3317 | 17.0 | 1173 | 0.3775 | 0.8747 | 0.8739 | 0.8741 | 0.8747 |

| 0.323 | 18.0 | 1242 | 0.3759 | 0.8727 | 0.8718 | 0.8721 | 0.8727 |

| 0.3327 | 19.0 | 1311 | 0.3776 | 0.8758 | 0.8750 | 0.8756 | 0.8758 |

| 0.3339 | 20.0 | 1380 | 0.3760 | 0.8758 | 0.8750 | 0.8753 | 0.8758 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

ahmeddbahaa/mT5_multilingual_XLSum-finetuned-fa

|

ahmeddbahaa

| 2022-06-08T15:51:15Z | 49 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"fa",

"Abstractive Summarization",

"generated_from_trainer",

"dataset:pn_summary",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-05-29T17:01:06Z |

---

tags:

- summarization

- fa

- mt5

- Abstractive Summarization

- generated_from_trainer

datasets:

- pn_summary

model-index:

- name: mT5_multilingual_XLSum-finetuned-fa

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mT5_multilingual_XLSum-finetuned-fa

This model is a fine-tuned version of [csebuetnlp/mT5_multilingual_XLSum](https://huggingface.co/csebuetnlp/mT5_multilingual_XLSum) on the pn_summary dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5703

- Rouge-1: 45.12

- Rouge-2: 26.25

- Rouge-l: 39.96

- Gen Len: 48.72

- Bertscore: 79.54

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 250

- num_epochs: 5

- label_smoothing_factor: 0.1

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

Cole/xlm-roberta-base-finetuned-panx-de

|

Cole

| 2022-06-08T15:27:30Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-06T20:49:59Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.8662369516855856

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1428

- F1: 0.8662

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 12

- eval_batch_size: 12

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2499 | 1.0 | 1049 | 0.1916 | 0.8157 |

| 0.1312 | 2.0 | 2098 | 0.1394 | 0.8479 |

| 0.0809 | 3.0 | 3147 | 0.1428 | 0.8662 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

nicofloresuribe/ndad

|

nicofloresuribe

| 2022-06-08T15:24:15Z | 0 | 0 | null |

[

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2022-06-08T15:24:15Z |

---

license: bigscience-bloom-rail-1.0

---

|

Vkt/model-facebookptbrlarge

|

Vkt

| 2022-06-08T15:05:20Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-07T17:48:51Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: model-facebookptbrlarge

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# model-facebookptbrlarge

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53-portuguese](https://huggingface.co/facebook/wav2vec2-large-xlsr-53-portuguese) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2206

- Wer: 0.1322

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 5.8975 | 0.29 | 400 | 0.4131 | 0.3336 |

| 0.5131 | 0.57 | 800 | 0.4103 | 0.3293 |

| 0.4846 | 0.86 | 1200 | 0.3493 | 0.3028 |

| 0.4174 | 1.14 | 1600 | 0.3055 | 0.2730 |

| 0.4105 | 1.43 | 2000 | 0.3283 | 0.3041 |

| 0.4028 | 1.72 | 2400 | 0.3539 | 0.3210 |

| 0.386 | 2.0 | 2800 | 0.2925 | 0.2690 |

| 0.3224 | 2.29 | 3200 | 0.2842 | 0.2665 |

| 0.3122 | 2.57 | 3600 | 0.2781 | 0.2472 |

| 0.3087 | 2.86 | 4000 | 0.2794 | 0.2692 |

| 0.2878 | 3.15 | 4400 | 0.2795 | 0.2537 |

| 0.2915 | 3.43 | 4800 | 0.2764 | 0.2478 |

| 0.2816 | 3.72 | 5200 | 0.2761 | 0.2366 |

| 0.283 | 4.0 | 5600 | 0.2641 | 0.2587 |

| 0.2448 | 4.29 | 6000 | 0.2489 | 0.2417 |

| 0.247 | 4.57 | 6400 | 0.2538 | 0.2422 |

| 0.25 | 4.86 | 6800 | 0.2660 | 0.2306 |

| 0.2256 | 5.15 | 7200 | 0.2477 | 0.2267 |

| 0.2225 | 5.43 | 7600 | 0.2364 | 0.2195 |

| 0.2217 | 5.72 | 8000 | 0.2319 | 0.2139 |

| 0.2272 | 6.0 | 8400 | 0.2489 | 0.2427 |

| 0.2016 | 6.29 | 8800 | 0.2404 | 0.2181 |

| 0.1973 | 6.58 | 9200 | 0.2532 | 0.2273 |

| 0.2101 | 6.86 | 9600 | 0.2590 | 0.2100 |

| 0.1946 | 7.15 | 10000 | 0.2414 | 0.2108 |

| 0.1845 | 7.43 | 10400 | 0.2485 | 0.2124 |

| 0.1861 | 7.72 | 10800 | 0.2405 | 0.2124 |

| 0.1851 | 8.01 | 11200 | 0.2449 | 0.2062 |

| 0.1587 | 8.29 | 11600 | 0.2510 | 0.2048 |

| 0.1694 | 8.58 | 12000 | 0.2290 | 0.2059 |

| 0.1637 | 8.86 | 12400 | 0.2376 | 0.2063 |

| 0.1594 | 9.15 | 12800 | 0.2307 | 0.1967 |

| 0.1537 | 9.44 | 13200 | 0.2274 | 0.2017 |

| 0.1498 | 9.72 | 13600 | 0.2322 | 0.2025 |

| 0.1516 | 10.01 | 14000 | 0.2323 | 0.1971 |

| 0.1336 | 10.29 | 14400 | 0.2249 | 0.1920 |

| 0.134 | 10.58 | 14800 | 0.2258 | 0.2055 |

| 0.138 | 10.86 | 15200 | 0.2250 | 0.1906 |

| 0.13 | 11.15 | 15600 | 0.2423 | 0.1920 |

| 0.1302 | 11.44 | 16000 | 0.2294 | 0.1849 |

| 0.1253 | 11.72 | 16400 | 0.2193 | 0.1889 |

| 0.1219 | 12.01 | 16800 | 0.2350 | 0.1869 |

| 0.1149 | 12.29 | 17200 | 0.2350 | 0.1903 |

| 0.1161 | 12.58 | 17600 | 0.2277 | 0.1899 |

| 0.1129 | 12.87 | 18000 | 0.2416 | 0.1855 |

| 0.1091 | 13.15 | 18400 | 0.2289 | 0.1815 |

| 0.1073 | 13.44 | 18800 | 0.2383 | 0.1799 |

| 0.1135 | 13.72 | 19200 | 0.2306 | 0.1819 |

| 0.1075 | 14.01 | 19600 | 0.2283 | 0.1742 |

| 0.0971 | 14.3 | 20000 | 0.2271 | 0.1851 |

| 0.0967 | 14.58 | 20400 | 0.2395 | 0.1809 |

| 0.1039 | 14.87 | 20800 | 0.2286 | 0.1808 |

| 0.0984 | 15.15 | 21200 | 0.2303 | 0.1821 |

| 0.0922 | 15.44 | 21600 | 0.2254 | 0.1745 |

| 0.0882 | 15.73 | 22000 | 0.2280 | 0.1836 |

| 0.0859 | 16.01 | 22400 | 0.2355 | 0.1779 |

| 0.0832 | 16.3 | 22800 | 0.2347 | 0.1740 |

| 0.0854 | 16.58 | 23200 | 0.2342 | 0.1739 |

| 0.0874 | 16.87 | 23600 | 0.2316 | 0.1719 |

| 0.0808 | 17.16 | 24000 | 0.2291 | 0.1730 |

| 0.0741 | 17.44 | 24400 | 0.2308 | 0.1674 |

| 0.0815 | 17.73 | 24800 | 0.2329 | 0.1655 |

| 0.0764 | 18.01 | 25200 | 0.2514 | 0.1711 |

| 0.0719 | 18.3 | 25600 | 0.2275 | 0.1578 |

| 0.0665 | 18.58 | 26000 | 0.2367 | 0.1614 |

| 0.0693 | 18.87 | 26400 | 0.2185 | 0.1593 |

| 0.0662 | 19.16 | 26800 | 0.2266 | 0.1678 |

| 0.0612 | 19.44 | 27200 | 0.2332 | 0.1602 |

| 0.0623 | 19.73 | 27600 | 0.2283 | 0.1670 |

| 0.0659 | 20.01 | 28000 | 0.2142 | 0.1626 |

| 0.0581 | 20.3 | 28400 | 0.2198 | 0.1646 |

| 0.063 | 20.59 | 28800 | 0.2251 | 0.1588 |

| 0.0618 | 20.87 | 29200 | 0.2186 | 0.1554 |

| 0.0549 | 21.16 | 29600 | 0.2251 | 0.1490 |

| 0.058 | 21.44 | 30000 | 0.2366 | 0.1559 |

| 0.0543 | 21.73 | 30400 | 0.2262 | 0.1535 |

| 0.0529 | 22.02 | 30800 | 0.2358 | 0.1519 |

| 0.053 | 22.3 | 31200 | 0.2198 | 0.1513 |

| 0.0552 | 22.59 | 31600 | 0.2234 | 0.1503 |

| 0.0492 | 22.87 | 32000 | 0.2191 | 0.1516 |

| 0.0488 | 23.16 | 32400 | 0.2321 | 0.1500 |

| 0.0479 | 23.45 | 32800 | 0.2152 | 0.1420 |

| 0.0453 | 23.73 | 33200 | 0.2202 | 0.1453 |

| 0.0485 | 24.02 | 33600 | 0.2235 | 0.1468 |

| 0.0451 | 24.3 | 34000 | 0.2192 | 0.1455 |

| 0.041 | 24.59 | 34400 | 0.2138 | 0.1438 |

| 0.0435 | 24.87 | 34800 | 0.2335 | 0.1423 |

| 0.0404 | 25.16 | 35200 | 0.2220 | 0.1409 |

| 0.0374 | 25.45 | 35600 | 0.2366 | 0.1437 |

| 0.0405 | 25.73 | 36000 | 0.2233 | 0.1428 |

| 0.0385 | 26.02 | 36400 | 0.2208 | 0.1414 |

| 0.0373 | 26.3 | 36800 | 0.2265 | 0.1420 |

| 0.0365 | 26.59 | 37200 | 0.2174 | 0.1402 |

| 0.037 | 26.88 | 37600 | 0.2249 | 0.1397 |

| 0.0379 | 27.16 | 38000 | 0.2173 | 0.1374 |

| 0.0354 | 27.45 | 38400 | 0.2212 | 0.1381 |

| 0.034 | 27.73 | 38800 | 0.2313 | 0.1364 |

| 0.0347 | 28.02 | 39200 | 0.2230 | 0.1356 |

| 0.0318 | 28.31 | 39600 | 0.2231 | 0.1357 |

| 0.0305 | 28.59 | 40000 | 0.2281 | 0.1366 |

| 0.0307 | 28.88 | 40400 | 0.2259 | 0.1342 |

| 0.0315 | 29.16 | 40800 | 0.2252 | 0.1332 |

| 0.0314 | 29.45 | 41200 | 0.2218 | 0.1328 |

| 0.0307 | 29.74 | 41600 | 0.2206 | 0.1322 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.8.1+cu111

- Datasets 2.2.1

- Tokenizers 0.12.1

|

fusing/ddim-lsun-bedroom

|

fusing

| 2022-06-08T13:10:21Z | 41 | 0 |

transformers

|

[

"transformers",

"ddim_diffusion",

"arxiv:2010.02502",

"endpoints_compatible",

"region:us"

] | null | 2022-06-08T12:42:50Z |

---

tags:

- ddim_diffusion

---

# Denoising Diffusion Implicit Models (DDIM)

**Paper**: [Denoising Diffusion Implicit Models](https://arxiv.org/abs/2010.02502)

**Abstract**:

*Denoising diffusion probabilistic models (DDPMs) have achieved high quality image generation without adversarial training, yet they require simulating a Markov chain for many steps to produce a sample. To accelerate sampling, we present denoising diffusion implicit models (DDIMs), a more efficient class of iterative implicit probabilistic models with the same training procedure as DDPMs. In DDPMs, the generative process is defined as the reverse of a Markovian diffusion process. We construct a class of non-Markovian diffusion processes that lead to the same training objective, but whose reverse process can be much faster to sample from. We empirically demonstrate that DDIMs can produce high quality samples 10× to 50× faster in terms of wall-clock time compared to DDPMs, allow us to trade off computation for sample quality, and can perform semantically meaningful image interpolation directly in the latent space.*

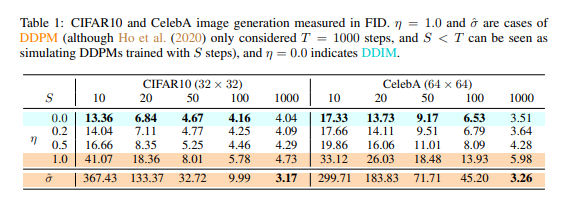

**Explanation on `eta` and `num_inference_steps`**

- `num_inference_steps` is called *S* in the following table

- `eta` is called *η* in the following table

## Usage

```python

# !pip install diffusers

from diffusers import DiffusionPipeline

import PIL.Image

import numpy as np

model_id = "fusing/ddim-lsun-bedroom"

# load model and scheduler

ddpm = DiffusionPipeline.from_pretrained(model_id)

# run pipeline in inference (sample random noise and denoise)

image = ddpm(eta=0.0, num_inference_steps=50)

# process image to PIL

image_processed = image.cpu().permute(0, 2, 3, 1)

image_processed = (image_processed + 1.0) * 127.5

image_processed = image_processed.numpy().astype(np.uint8)

image_pil = PIL.Image.fromarray(image_processed[0])

# save image

image_pil.save("test.png")

```

## Samples

1.

2.

3.

4.

|

jcmc/q-FrozenLake-v1-4x4-noSlippery

|

jcmc

| 2022-06-08T12:41:26Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-08T12:41:19Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="jcmc/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

naveenk903/TEST2ppo-LunarLander-v2

|

naveenk903

| 2022-06-08T12:37:14Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-08T12:10:46Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 237.66 +/- 43.74

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

FabianWillner/distilbert-base-uncased-finetuned-triviaqa

|

FabianWillner

| 2022-06-08T12:22:36Z | 43 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-05-10T12:20:49Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilbert-base-uncased-finetuned-triviaqa

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-triviaqa

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9949

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.0391 | 1.0 | 11195 | 1.0133 |

| 0.8425 | 2.0 | 22390 | 0.9949 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

huggingtweets/conspiracymill

|

huggingtweets

| 2022-06-08T10:46:08Z | 105 | 1 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-08T10:44:11Z |

---

language: en

thumbnail: http://www.huggingtweets.com/conspiracymill/1654685163989/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1447765226376638469/EuvZlKan_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Conspiracy Mill</div>

<div style="text-align: center; font-size: 14px;">@conspiracymill</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Conspiracy Mill.

| Data | Conspiracy Mill |

| --- | --- |

| Tweets downloaded | 3196 |

| Retweets | 626 |

| Short tweets | 869 |

| Tweets kept | 1701 |