modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-11 06:30:11

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-11 06:29:58

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

horychtom/czech_media_bias_classifier

|

horychtom

| 2022-04-28T13:51:18Z | 4 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"Czech",

"cs",

"autotrain_compatible",

"region:us"

] |

text-classification

| 2022-04-04T09:04:34Z |

---

inference: false

language: "cs"

tags:

- Czech

---

## Czech Media Bias Classifier

A FERNET-C5 model fine-tuned to perform binary classification task on czech media bias detection.

|

espnet/simpleoier_chime4_enh_asr_convtasnet_init_noenhloss_wavlm_transformer_init_raw_en_char

|

espnet

| 2022-04-28T12:40:15Z | 0 | 0 |

espnet

|

[

"espnet",

"audio",

"speech-enhancement-recognition",

"en",

"dataset:chime4",

"arxiv:1804.00015",

"license:cc-by-4.0",

"region:us"

] | null | 2022-04-28T12:38:58Z |

---

tags:

- espnet

- audio

- speech-enhancement-recognition

language: en

datasets:

- chime4

license: cc-by-4.0

---

## ESPnet2 EnhS2T model

### `espnet/simpleoier_chime4_enh_asr_convtasnet_init_noenhloss_wavlm_transformer_init_raw_en_char`

This model was trained by simpleoier using chime4 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```bash

cd espnet

git checkout 2b663318cd1773fb8685b1e03295b6bc6889c283

pip install -e .

cd egs2/chime4/enh_asr1

./run.sh --skip_data_prep false --skip_train true --download_model espnet/simpleoier_chime4_enh_asr_convtasnet_init_noenhloss_wavlm_transformer_init_raw_en_char

```

<!-- Generated by scripts/utils/show_asr_result.sh -->

# RESULTS

## Environments

- date: `Thu Apr 28 08:15:30 EDT 2022`

- python version: `3.7.11 (default, Jul 27 2021, 14:32:16) [GCC 7.5.0]`

- espnet version: `espnet 202204`

- pytorch version: `pytorch 1.8.1`

- Git hash: ``

- Commit date: ``

## enh_asr_train_enh_asr_convtasnet_init_noenhloss_wavlm_transformer_init_lr1e-4_accum1_adam_specaug_bypass0_raw_en_char

### WER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_real_beamformit_2mics|1640|27119|98.5|1.2|0.3|0.2|1.7|19.6|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_real_beamformit_5mics|1640|27119|98.6|1.1|0.3|0.2|1.5|18.7|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_real_isolated_1ch_track|1640|27119|98.3|1.3|0.4|0.2|1.9|21.8|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_simu_beamformit_2mics|1640|27120|97.9|1.5|0.5|0.2|2.3|25.2|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_simu_beamformit_5mics|1640|27120|98.4|1.2|0.4|0.1|1.7|19.9|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_simu_isolated_1ch_track|1640|27120|97.2|2.1|0.7|0.3|3.1|28.9|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_real_beamformit_2mics|1320|21409|97.4|2.0|0.6|0.3|2.9|27.3|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_real_beamformit_5mics|1320|21409|97.8|1.8|0.4|0.2|2.5|24.3|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_real_isolated_1ch_track|1320|21409|96.7|2.6|0.7|0.4|3.7|31.6|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_simu_beamformit_2mics|1320|21416|96.6|2.5|1.0|0.3|3.7|32.5|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_simu_beamformit_5mics|1320|21416|97.5|1.9|0.7|0.3|2.9|28.9|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_simu_isolated_1ch_track|1320|21416|94.6|3.7|1.6|0.5|5.9|37.3|

### CER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_real_beamformit_2mics|1640|160390|99.5|0.2|0.3|0.2|0.7|19.6|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_real_beamformit_5mics|1640|160390|99.6|0.1|0.3|0.2|0.6|18.7|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_real_isolated_1ch_track|1640|160390|99.4|0.2|0.4|0.2|0.8|21.8|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_simu_beamformit_2mics|1640|160400|99.2|0.3|0.5|0.2|1.1|25.2|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_simu_beamformit_5mics|1640|160400|99.5|0.2|0.3|0.1|0.7|19.9|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/dt05_simu_isolated_1ch_track|1640|160400|98.8|0.5|0.7|0.3|1.5|28.9|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_real_beamformit_2mics|1320|126796|98.9|0.4|0.7|0.3|1.4|27.3|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_real_beamformit_5mics|1320|126796|99.1|0.4|0.5|0.2|1.1|24.3|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_real_isolated_1ch_track|1320|126796|98.6|0.6|0.8|0.4|1.8|31.7|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_simu_beamformit_2mics|1320|126812|98.2|0.6|1.1|0.4|2.1|32.5|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_simu_beamformit_5mics|1320|126812|98.8|0.4|0.8|0.3|1.5|28.9|

|decode_asr_transformer_normalize_output_wavtrue_lm_lm_train_lm_transformer_en_char_valid.loss.ave_enh_asr_model_valid.acc.ave/et05_simu_isolated_1ch_track|1320|126812|97.0|1.2|1.9|0.6|3.7|37.3|

## EnhS2T config

<details><summary>expand</summary>

```

config: conf/tuning/train_enh_asr_convtasnet_init_noenhloss_wavlm_transformer_init_lr1e-4_accum1_adam_specaug_bypass0.yaml

print_config: false

log_level: INFO

dry_run: false

iterator_type: sequence

output_dir: exp/enh_asr_train_enh_asr_convtasnet_init_noenhloss_wavlm_transformer_init_lr1e-4_accum1_adam_specaug_bypass0_raw_en_char

ngpu: 1

seed: 0

num_workers: 1

num_att_plot: 3

dist_backend: nccl

dist_init_method: env://

dist_world_size: null

dist_rank: null

local_rank: 0

dist_master_addr: null

dist_master_port: null

dist_launcher: null

multiprocessing_distributed: false

unused_parameters: true

sharded_ddp: false

cudnn_enabled: true

cudnn_benchmark: false

cudnn_deterministic: true

collect_stats: false

write_collected_feats: false

max_epoch: 12

patience: 10

val_scheduler_criterion:

- valid

- loss

early_stopping_criterion:

- valid

- loss

- min

best_model_criterion:

- - valid

- acc

- max

- - train

- loss

- min

keep_nbest_models: 10

nbest_averaging_interval: 0

grad_clip: 5

grad_clip_type: 2.0

grad_noise: false

accum_grad: 2

no_forward_run: false

resume: true

train_dtype: float32

use_amp: false

log_interval: null

use_matplotlib: true

use_tensorboard: true

use_wandb: false

wandb_project: null

wandb_id: null

wandb_entity: null

wandb_name: null

wandb_model_log_interval: -1

detect_anomaly: false

pretrain_path: null

init_param:

- ../enh1/exp/enh_train_enh_convtasnet_small_raw/valid.loss.ave_1best.pth:encoder:enh_model.encoder

- ../enh1/exp/enh_train_enh_convtasnet_small_raw/valid.loss.ave_1best.pth:separator:enh_model.separator

- ../enh1/exp/enh_train_enh_convtasnet_small_raw/valid.loss.ave_1best.pth:decoder:enh_model.decoder

- ../asr1/exp/asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_char/valid.acc.ave.pth:frontend:s2t_model.frontend

- ../asr1/exp/asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_char/valid.acc.ave.pth:preencoder:s2t_model.preencoder

- ../asr1/exp/asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_char/valid.acc.ave.pth:encoder:s2t_model.encoder

- ../asr1/exp/asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_char/valid.acc.ave.pth:ctc:s2t_model.ctc

- ../asr1/exp/asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_char/valid.acc.ave.pth:decoder:s2t_model.decoder

ignore_init_mismatch: false

freeze_param:

- s2t_model.frontend.upstream

num_iters_per_epoch: null

batch_size: 12

valid_batch_size: null

batch_bins: 1000000

valid_batch_bins: null

train_shape_file:

- exp/enh_asr_stats_raw_en_char/train/speech_shape

- exp/enh_asr_stats_raw_en_char/train/speech_ref1_shape

- exp/enh_asr_stats_raw_en_char/train/text_shape.char

valid_shape_file:

- exp/enh_asr_stats_raw_en_char/valid/speech_shape

- exp/enh_asr_stats_raw_en_char/valid/speech_ref1_shape

- exp/enh_asr_stats_raw_en_char/valid/text_shape.char

batch_type: folded

valid_batch_type: null

fold_length:

- 80000

- 80000

- 150

sort_in_batch: descending

sort_batch: descending

multiple_iterator: false

chunk_length: 500

chunk_shift_ratio: 0.5

num_cache_chunks: 1024

train_data_path_and_name_and_type:

- - dump/raw/tr05_multi_noisy_si284/wav.scp

- speech

- sound

- - dump/raw/tr05_multi_noisy_si284/spk1.scp

- speech_ref1

- sound

- - dump/raw/tr05_multi_noisy_si284/text

- text

- text

valid_data_path_and_name_and_type:

- - dump/raw/dt05_multi_isolated_1ch_track/wav.scp

- speech

- sound

- - dump/raw/dt05_multi_isolated_1ch_track/spk1.scp

- speech_ref1

- sound

- - dump/raw/dt05_multi_isolated_1ch_track/text

- text

- text

allow_variable_data_keys: false

max_cache_size: 0.0

max_cache_fd: 32

valid_max_cache_size: null

optim: adam

optim_conf:

lr: 0.0001

scheduler: null

scheduler_conf: {}

token_list: data/en_token_list/char/tokens.txt

src_token_list: null

init: xavier_uniform

input_size: null

ctc_conf:

dropout_rate: 0.0

ctc_type: builtin

reduce: true

ignore_nan_grad: true

enh_criterions:

- name: si_snr

conf: {}

wrapper: fixed_order

wrapper_conf: {}

enh_model_conf:

stft_consistency: false

loss_type: mask_mse

mask_type: null

asr_model_conf:

ctc_weight: 0.3

lsm_weight: 0.1

length_normalized_loss: false

extract_feats_in_collect_stats: false

st_model_conf:

stft_consistency: false

loss_type: mask_mse

mask_type: null

subtask_series:

- enh

- asr

model_conf:

calc_enh_loss: false

bypass_enh_prob: 0.0

use_preprocessor: true

token_type: char

bpemodel: null

src_token_type: bpe

src_bpemodel: null

non_linguistic_symbols: data/nlsyms.txt

cleaner: null

g2p: null

enh_encoder: conv

enh_encoder_conf:

channel: 256

kernel_size: 40

stride: 20

enh_separator: tcn

enh_separator_conf:

num_spk: 1

layer: 4

stack: 2

bottleneck_dim: 256

hidden_dim: 512

kernel: 3

causal: false

norm_type: gLN

nonlinear: relu

enh_decoder: conv

enh_decoder_conf:

channel: 256

kernel_size: 40

stride: 20

frontend: s3prl

frontend_conf:

frontend_conf:

upstream: wavlm_large

download_dir: ./hub

multilayer_feature: true

fs: 16k

specaug: specaug

specaug_conf:

apply_time_warp: true

time_warp_window: 5

time_warp_mode: bicubic

apply_freq_mask: true

freq_mask_width_range:

- 0

- 100

num_freq_mask: 4

apply_time_mask: true

time_mask_width_range:

- 0

- 40

num_time_mask: 2

normalize: utterance_mvn

normalize_conf: {}

asr_preencoder: linear

asr_preencoder_conf:

input_size: 1024

output_size: 128

asr_encoder: transformer

asr_encoder_conf:

output_size: 256

attention_heads: 4

linear_units: 2048

num_blocks: 12

dropout_rate: 0.1

attention_dropout_rate: 0.0

input_layer: conv2d2

normalize_before: true

asr_postencoder: null

asr_postencoder_conf: {}

asr_decoder: transformer

asr_decoder_conf:

input_layer: embed

attention_heads: 4

linear_units: 2048

num_blocks: 6

dropout_rate: 0.1

positional_dropout_rate: 0.0

self_attention_dropout_rate: 0.0

src_attention_dropout_rate: 0.0

st_preencoder: null

st_preencoder_conf: {}

st_encoder: rnn

st_encoder_conf: {}

st_postencoder: null

st_postencoder_conf: {}

st_decoder: rnn

st_decoder_conf: {}

st_extra_asr_decoder: rnn

st_extra_asr_decoder_conf: {}

st_extra_mt_decoder: rnn

st_extra_mt_decoder_conf: {}

required:

- output_dir

- token_list

version: '202204'

distributed: false

```

</details>

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

YASH312312/distilroberta-base-finetuned-wikitext2

|

YASH312312

| 2022-04-28T10:03:53Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-04-27T15:07:49Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilroberta-base-finetuned-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilroberta-base-finetuned-wikitext2

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7515

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 3.1203 | 1.0 | 766 | 2.8510 |

| 2.9255 | 2.0 | 1532 | 2.8106 |

| 2.8669 | 3.0 | 2298 | 2.7515 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

bdickson/distilbert-base-uncased-finetuned-squad

|

bdickson

| 2022-04-28T09:59:39Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-04-27T19:56:30Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1617

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.2299 | 1.0 | 5533 | 1.1673 |

| 0.9564 | 2.0 | 11066 | 1.1223 |

| 0.7572 | 3.0 | 16599 | 1.1617 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

bdickson/albert-base-v2-finetuned-squad

|

bdickson

| 2022-04-28T07:31:59Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"albert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-04-28T01:10:47Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: albert-base-v2-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# albert-base-v2-finetuned-squad

This model is a fine-tuned version of [albert-base-v2](https://huggingface.co/albert-base-v2) on the squad dataset.

It achieves the following results on the evaluation set:

- eval_loss: 1.0191

- eval_runtime: 291.8551

- eval_samples_per_second: 37.032

- eval_steps_per_second: 2.316

- epoch: 3.0

- step: 16620

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

bdickson/bert-base-uncased-finetuned-squad

|

bdickson

| 2022-04-28T07:30:32Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-04-28T00:58:17Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-squad

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- eval_loss: 1.1240

- eval_runtime: 262.7193

- eval_samples_per_second: 41.048

- eval_steps_per_second: 2.565

- epoch: 3.0

- step: 16599

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

Lilya/distilbert-base-uncased-finetuned-ner-TRANS

|

Lilya

| 2022-04-28T07:00:58Z | 9 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"token-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-04-27T11:44:59Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-ner-TRANS

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner-TRANS

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1053

- Precision: 0.7911

- Recall: 0.8114

- F1: 0.8011

- Accuracy: 0.9815

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 12

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.077 | 1.0 | 3762 | 0.0724 | 0.7096 | 0.7472 | 0.7279 | 0.9741 |

| 0.0538 | 2.0 | 7524 | 0.0652 | 0.7308 | 0.7687 | 0.7493 | 0.9766 |

| 0.0412 | 3.0 | 11286 | 0.0643 | 0.7672 | 0.7875 | 0.7772 | 0.9788 |

| 0.0315 | 4.0 | 15048 | 0.0735 | 0.7646 | 0.7966 | 0.7803 | 0.9793 |

| 0.0249 | 5.0 | 18810 | 0.0772 | 0.7805 | 0.7981 | 0.7892 | 0.9801 |

| 0.0213 | 6.0 | 22572 | 0.0783 | 0.7829 | 0.8063 | 0.7944 | 0.9805 |

| 0.0187 | 7.0 | 26334 | 0.0858 | 0.7821 | 0.8010 | 0.7914 | 0.9809 |

| 0.0157 | 8.0 | 30096 | 0.0860 | 0.7837 | 0.8120 | 0.7976 | 0.9812 |

| 0.0122 | 9.0 | 33858 | 0.0963 | 0.7857 | 0.8129 | 0.7990 | 0.9813 |

| 0.0107 | 10.0 | 37620 | 0.0993 | 0.7934 | 0.8089 | 0.8010 | 0.9812 |

| 0.0091 | 11.0 | 41382 | 0.1031 | 0.7882 | 0.8123 | 0.8001 | 0.9814 |

| 0.0083 | 12.0 | 45144 | 0.1053 | 0.7911 | 0.8114 | 0.8011 | 0.9815 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.1

- Datasets 2.0.0

- Tokenizers 0.10.3

|

snunlp/KR-FinBert

|

snunlp

| 2022-04-28T05:06:40Z | 263 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"ko",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

language:

- ko

---

# KR-FinBert & KR-FinBert-SC

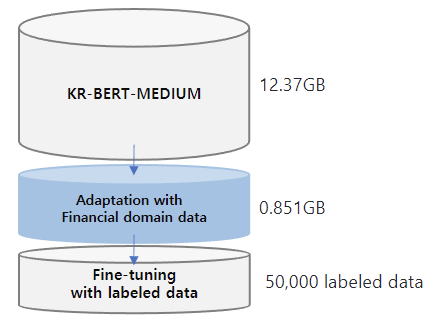

Much progress has been made in the NLP (Natural Language Processing) field, with numerous studies showing that domain adaptation using small-scale corpus and fine-tuning with labeled data is effective for overall performance improvement.

we proposed KR-FinBert for the financial domain by further pre-training it on a financial corpus and fine-tuning it for sentiment analysis. As many studies have shown, the performance improvement through adaptation and conducting the downstream task was also clear in this experiment.

## Data

The training data for this model is expanded from those of **[KR-BERT-MEDIUM](https://huggingface.co/snunlp/KR-Medium)**, texts from Korean Wikipedia, general news articles, legal texts crawled from the National Law Information Center and [Korean Comments dataset](https://www.kaggle.com/junbumlee/kcbert-pretraining-corpus-korean-news-comments). For the transfer learning, **corporate related economic news articles from 72 media sources** such as the Financial Times, The Korean Economy Daily, etc and **analyst reports from 16 securities companies** such as Kiwoom Securities, Samsung Securities, etc are added. Included in the dataset is 440,067 news titles with their content and 11,237 analyst reports. **The total data size is about 13.22GB.** For mlm training, we split the data line by line and **the total no. of lines is 6,379,315.**

KR-FinBert is trained for 5.5M steps with the maxlen of 512, training batch size of 32, and learning rate of 5e-5, taking 67.48 hours to train the model using NVIDIA TITAN XP.

## Citation

```

@misc{kr-FinBert,

author = {Kim, Eunhee and Hyopil Shin},

title = {KR-FinBert: KR-BERT-Medium Adapted With Financial Domain Data},

year = {2022},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://huggingface.co/snunlp/KR-FinBert}}

}

```

|

chv5/t5-small-shuffled_take1

|

chv5

| 2022-04-28T03:36:55Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-04-27T20:27:04Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- xsum

metrics:

- rouge

model-index:

- name: t5-small-shuffled_take1

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: xsum

type: xsum

args: default

metrics:

- name: Rouge1

type: rouge

value: 11.9641

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-shuffled_take1

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the xsum dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1788

- Rouge1: 11.9641

- Rouge2: 10.5245

- Rougel: 11.5825

- Rougelsum: 11.842

- Gen Len: 18.9838

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 6

- eval_batch_size: 6

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 0.2238 | 1.0 | 34008 | 0.1788 | 11.9641 | 10.5245 | 11.5825 | 11.842 | 18.9838 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

Ahmed9275/ALL-2

|

Ahmed9275

| 2022-04-28T02:07:25Z | 64 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-04-28T02:07:14Z |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: ALL-2

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.9855383038520813

---

# ALL-2

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

|

Elie/NLP_Challenge

|

Elie

| 2022-04-28T01:50:12Z | 0 | 0 | null |

[

"region:us"

] | null | 2022-04-27T20:36:46Z |

This my Fatima Fellowship notebokk

|

yihsuan/best_model_0426_base

|

yihsuan

| 2022-04-28T01:44:27Z | 15 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"mT5",

"zh",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-04-26T09:05:10Z |

---

tags:

- summarization

- mT5

language:

- zh

widget:

- text: "專家稱維康桑格研究所(Wellcome Sanger Institute)的上述研究發現「令人震驚」而且「發人深省」。基因變異指關於我們身體成長和管理的相關指令,也就是DNA當中發生的變化。長期以來,變異一直被當作癌症的根源,但是數十年來關於變異是否對衰老有重要影響一直存在爭論。桑格研究所的研究人員說他們得到了「第一個試驗性證據」,證明了兩者的關係。他們分析了預期壽命各異的物種基因變異的不同速度。研究人員分析了貓、黑白疣猴、狗、雪貂、長頸鹿、馬、人、獅子、裸鼴鼠、兔子、老鼠、環尾狐猴和老虎等十幾種動物的DNA。發表在《自然》雜誌上的研究顯示,老鼠在短暫的生命當中每年經歷了將近800次變異,老鼠的壽命一般不到4年。"

inference:

parameters:

max_length: 50

---

|

Ahmed9275/ALL

|

Ahmed9275

| 2022-04-28T01:01:23Z | 62 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-04-28T01:00:00Z |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: ALL

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.9262039065361023

---

# ALL

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

|

davidenam/distilbert-base-uncased-finetuned-emotion

|

davidenam

| 2022-04-27T21:59:00Z | 13 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-04-27T18:53:15Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9205

- name: F1

type: f1

value: 0.9203318889648883

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2230

- Accuracy: 0.9205

- F1: 0.9203

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 250 | 0.3224 | 0.9055 | 0.9034 |

| No log | 2.0 | 500 | 0.2230 | 0.9205 | 0.9203 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cpu

- Datasets 2.1.0

- Tokenizers 0.12.1

|

SerdarHelli/Brain-MRI-GAN

|

SerdarHelli

| 2022-04-27T20:32:07Z | 0 | 0 | null |

[

"brainMRI",

"GAN",

"medicalimaging",

"pytorch",

"region:us"

] | null | 2022-04-27T19:07:39Z |

---

tags:

- brainMRI

- GAN

- medicalimaging

- pytorch

metrics:

- fid50k

---

The model's kernels etc. source code ==> https://github.com/NVlabs/stylegan3

|

gagan3012/ArOCRv4

|

gagan3012

| 2022-04-27T20:23:52Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vision-encoder-decoder",

"image-text-to-text",

"generated_from_trainer",

"doi:10.57967/hf/0018",

"endpoints_compatible",

"region:us"

] |

image-text-to-text

| 2022-04-27T18:49:46Z |

---

tags:

- generated_from_trainer

model-index:

- name: ArOCRv4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ArOCRv4

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5811

- Cer: 0.1249

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Cer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 3.103 | 1.18 | 1000 | 8.0852 | 11.5974 |

| 1.2535 | 2.36 | 2000 | 2.0400 | 0.4904 |

| 0.5682 | 3.55 | 3000 | 1.9336 | 0.2145 |

| 0.3038 | 4.73 | 4000 | 1.5811 | 0.1249 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.9.1

- Datasets 2.1.0

- Tokenizers 0.11.6

|

iamholmes/english-phrases-bible

|

iamholmes

| 2022-04-27T19:48:58Z | 69 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"distilbert",

"feature-extraction",

"sentence-similarity",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-04-27T19:48:50Z |

---

pipeline_tag: sentence-similarity

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# sentence-transformers/msmarco-distilbert-base-tas-b

This is a port of the [DistilBert TAS-B Model](https://huggingface.co/sebastian-hofstaetter/distilbert-dot-tas_b-b256-msmarco) to [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and is optimized for the task of semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer, util

query = "How many people live in London?"

docs = ["Around 9 Million people live in London", "London is known for its financial district"]

#Load the model

model = SentenceTransformer('sentence-transformers/msmarco-distilbert-base-tas-b')

#Encode query and documents

query_emb = model.encode(query)

doc_emb = model.encode(docs)

#Compute dot score between query and all document embeddings

scores = util.dot_score(query_emb, doc_emb)[0].cpu().tolist()

#Combine docs & scores

doc_score_pairs = list(zip(docs, scores))

#Sort by decreasing score

doc_score_pairs = sorted(doc_score_pairs, key=lambda x: x[1], reverse=True)

#Output passages & scores

for doc, score in doc_score_pairs:

print(score, doc)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#CLS Pooling - Take output from first token

def cls_pooling(model_output):

return model_output.last_hidden_state[:,0]

#Encode text

def encode(texts):

# Tokenize sentences

encoded_input = tokenizer(texts, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input, return_dict=True)

# Perform pooling

embeddings = cls_pooling(model_output)

return embeddings

# Sentences we want sentence embeddings for

query = "How many people live in London?"

docs = ["Around 9 Million people live in London", "London is known for its financial district"]

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained("sentence-transformers/msmarco-distilbert-base-tas-b")

model = AutoModel.from_pretrained("sentence-transformers/msmarco-distilbert-base-tas-b")

#Encode query and docs

query_emb = encode(query)

doc_emb = encode(docs)

#Compute dot score between query and all document embeddings

scores = torch.mm(query_emb, doc_emb.transpose(0, 1))[0].cpu().tolist()

#Combine docs & scores

doc_score_pairs = list(zip(docs, scores))

#Sort by decreasing score

doc_score_pairs = sorted(doc_score_pairs, key=lambda x: x[1], reverse=True)

#Output passages & scores

for doc, score in doc_score_pairs:

print(score, doc)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/msmarco-distilbert-base-tas-b)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

Have a look at: [DistilBert TAS-B Model](https://huggingface.co/sebastian-hofstaetter/distilbert-dot-tas_b-b256-msmarco)

|

princeton-nlp/efficient_mlm_m0.15-801010

|

princeton-nlp

| 2022-04-27T18:54:45Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"arxiv:2202.08005",

"autotrain_compatible",

"region:us"

] |

fill-mask

| 2022-04-22T18:45:04Z |

---

inference: false

---

This is a model checkpoint for ["Should You Mask 15% in Masked Language Modeling"](https://arxiv.org/abs/2202.08005) [(code)](https://github.com/princeton-nlp/DinkyTrain.git). We use pre layer norm, which is not supported by HuggingFace. To use our model, go to our [github repo](https://github.com/princeton-nlp/DinkyTrain.git), download our code, and import the RoBERTa class from `huggingface/modeling_roberta_prelayernorm.py`. For example,

``` bash

from huggingface.modeling_roberta_prelayernorm import RobertaForMaskedLM, RobertaForSequenceClassification

```

|

princeton-nlp/efficient_mlm_m0.40

|

princeton-nlp

| 2022-04-27T18:54:13Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"arxiv:2202.08005",

"autotrain_compatible",

"region:us"

] |

fill-mask

| 2022-04-22T18:44:55Z |

---

inference: false

---

This is a model checkpoint for ["Should You Mask 15% in Masked Language Modeling"](https://arxiv.org/abs/2202.08005) [(code)](https://github.com/princeton-nlp/DinkyTrain.git). We use pre layer norm, which is not supported by HuggingFace. To use our model, go to our [github repo](https://github.com/princeton-nlp/DinkyTrain.git), download our code, and import the RoBERTa class from `huggingface/modeling_roberta_prelayernorm.py`. For example,

``` bash

from huggingface.modeling_roberta_prelayernorm import RobertaForMaskedLM, RobertaForSequenceClassification

```

|

obokkkk/wav2vec2-base-960h-finetuned_common_voice2

|

obokkkk

| 2022-04-27T18:42:54Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-04-27T15:50:53Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-base-960h-finetuned_common_voice2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-960h-finetuned_common_voice2

This model is a fine-tuned version of [facebook/wav2vec2-base-960h](https://huggingface.co/facebook/wav2vec2-base-960h) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 64

- total_train_batch_size: 1024

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

faisalahmad/autotrain-nsut-nlp-project-textsummarization-791824374

|

faisalahmad

| 2022-04-27T17:50:47Z | 11 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"autotrain",

"en",

"dataset:faisalahmad/autotrain-data-nsut-nlp-project-textsummarization",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-04-27T09:08:22Z |

---

tags: autotrain

language: en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- faisalahmad/autotrain-data-nsut-nlp-project-textsummarization

co2_eq_emissions: 1119.6398037843474

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 791824374

- CO2 Emissions (in grams): 1119.6398037843474

## Validation Metrics

- Loss: 1.6432833671569824

- Rouge1: 38.5315

- Rouge2: 18.0869

- RougeL: 32.3742

- RougeLsum: 32.3801

- Gen Len: 19.846

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/faisalahmad/autotrain-nsut-nlp-project-textsummarization-791824374

```

|

obokkkk/mbart-large-cc25-finetuned-en-to-ko2

|

obokkkk

| 2022-04-27T17:49:20Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mbart",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-04-27T15:00:41Z |

---

tags:

- generated_from_trainer

model-index:

- name: mbart-large-cc25-finetuned-en-to-ko2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mbart-large-cc25-finetuned-en-to-ko2

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 128

- total_train_batch_size: 2048

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

wypa93/keras-dummy-sequential-demo

|

wypa93

| 2022-04-27T16:46:55Z | 0 | 0 |

keras

|

[

"keras",

"tf-keras",

"region:us"

] | null | 2022-04-27T16:46:48Z |

---

library_name: keras

---

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 0.001, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

## Training Metrics

Model history needed

## Model Plot

<details>

<summary>View Model Plot</summary>

</details>

|

joniponi/multilabel_inpatient_comments_16labels

|

joniponi

| 2022-04-27T16:20:55Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-04-25T03:22:59Z |

# HCAHPS survey comments multilabel classification

This model is a fine-tuned version of [Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on a dataset of HCAHPS survey comments.

It achieves the following results on the evaluation set:

precision recall f1-score support

medical 0.87 0.81 0.84 83

environmental 0.77 0.91 0.84 93

administration 0.58 0.32 0.41 22

communication 0.85 0.82 0.84 50

condition 0.42 0.52 0.46 29

treatment 0.90 0.78 0.83 68

food 0.92 0.94 0.93 36

clean 0.65 0.83 0.73 18

bathroom 0.64 0.64 0.64 14

discharge 0.83 0.83 0.83 24

wait 0.96 1.00 0.98 24

financial 0.44 1.00 0.62 4

extra_nice 0.20 0.13 0.16 23

rude 1.00 0.64 0.78 11

nurse 0.92 0.98 0.95 110

doctor 0.96 0.84 0.90 57

micro avg 0.81 0.81 0.81 666

macro avg 0.75 0.75 0.73 666

weighted avg 0.82 0.81 0.81 666

samples avg 0.64 0.64 0.62 666

## Model description

The model classifies free-text comments into the following labels

* Medical

* Environmental

* Administration

* Communication

* Condition

* Treatment

* Food

* Clean

* Bathroom

* Discharge

* Wait

* Financial

* Extra_nice

* Rude

* Nurse

* Doctor

## How to use

You can now use the models directly through the transformers library. Check out the [model's page](https://huggingface.co/joniponi/multilabel_inpatient_comments_16labels) for instructions on how to use the models within the Transformers library.

Load the model via the transformers library:

```

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("joniponi/multilabel_inpatient_comments_16labels")

model = AutoModel.from_pretrained("joniponi/multilabel_inpatient_comments_16labels")

```

|

eliwill/gpt2-finetuned-krishna

|

eliwill

| 2022-04-27T16:14:21Z | 4 | 0 |

transformers

|

[

"transformers",

"tf",

"tensorboard",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-04-09T10:04:33Z |

---

model-index:

- name: eliwill/gpt2-finetuned-krishna

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# eliwill/gpt2-finetuned-krishna

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on a collection of books by Jiddu Krishnamurti.

It achieves the following results on the evaluation set:

- Train Loss: 3.4997

- Validation Loss: 3.6853

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 3.4997 | 3.6853 | 0 |

### Framework versions

- Transformers 4.18.0

- TensorFlow 2.8.0

- Datasets 2.0.0

- Tokenizers 0.11.6

|

Das282000Prit/bert-base-uncased-finetuned-wikitext2

|

Das282000Prit

| 2022-04-27T16:11:28Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-04-27T15:00:15Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bert-base-uncased-finetuned-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-wikitext2

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7295

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.9288 | 1.0 | 2319 | 1.7729 |

| 1.8208 | 2.0 | 4638 | 1.7398 |

| 1.7888 | 3.0 | 6957 | 1.7523 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

faisalahmad/summarizer1

|

faisalahmad

| 2022-04-27T15:53:08Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"autotrain",

"en",

"dataset:faisalahmad/autotrain-data-nsut-nlp-project-textsummarization",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-04-27T09:08:33Z |

---

tags: autotrain

language: en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- faisalahmad/autotrain-data-nsut-nlp-project-textsummarization

co2_eq_emissions: 736.9366247330848

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 791824379

- CO2 Emissions (in grams): 736.9366247330848

## Validation Metrics

- Loss: 1.7805895805358887

- Rouge1: 37.8222

- Rouge2: 16.7598

- RougeL: 31.2959

- RougeLsum: 31.3048

- Gen Len: 19.7213

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/faisalahmad/autotrain-nsut-nlp-project-textsummarization-791824379

```

|

stevems1/bert-base-uncased-French123

|

stevems1

| 2022-04-27T14:55:35Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-04-27T14:40:05Z |

---

tags:

- generated_from_trainer

model-index:

- name: bert-base-uncased-French123

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-French123

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

espnet/chai_librispeech_asr_train_rnnt_conformer_raw_en_bpe5000_sp

|

espnet

| 2022-04-27T14:51:25Z | 4 | 0 |

espnet

|

[

"espnet",

"audio",

"automatic-speech-recognition",

"en",

"arxiv:1804.00015",

"license:cc-by-4.0",

"region:us"

] |

automatic-speech-recognition

| 2022-03-24T21:32:22Z |

---

tags:

- espnet

- audio

- automatic-speech-recognition

language: en

datasets:

- librispeech_asr

- librispeech 960h

license: cc-by-4.0

---

## ESPnet2 model

This model was trained by Chaitanya Narisetty using recipe in [espnet](https://github.com/espnet/espnet/).

<!-- Generated by scripts/utils/show_asr_result.sh -->

# RESULTS

## Environments

- date: `Fri Mar 25 04:35:42 EDT 2022`

- python version: `3.9.5 (default, Jun 4 2021, 12:28:51) [GCC 7.5.0]`

- espnet version: `espnet 0.10.7a1`

- pytorch version: `pytorch 1.8.1+cu111`

- Git hash: `21d19be00089678ca27f7fce474ef8d787689512`

- Commit date: `Wed Mar 16 08:06:52 2022 -0400`

## asr_train_rnnt_conformer_ngpu4_raw_en_bpe5000_sp

### WER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_rnnt_conformer_asr_model_valid.loss.ave_10best/test_clean|2620|52576|97.2|2.5|0.3|0.3|3.1|35.2|

|decode_rnnt_conformer_asr_model_valid.loss.ave_10best/test_other|2939|52343|93.4|6.0|0.6|0.8|7.4|56.3|

|decode_rnnt_conformer_asr_model_valid.loss.ave_3best/test_clean|2620|52576|97.1|2.6|0.3|0.3|3.2|35.8|

|decode_rnnt_conformer_asr_model_valid.loss.ave_3best/test_other|2939|52343|93.1|6.1|0.7|0.8|7.7|57.0|

|decode_rnnt_conformer_asr_model_valid.loss.ave_5best/test_clean|2620|52576|97.2|2.5|0.3|0.3|3.1|35.8|

|decode_rnnt_conformer_asr_model_valid.loss.ave_5best/test_other|2939|52343|93.3|6.0|0.7|0.8|7.5|56.5|

|decode_rnnt_conformer_asr_model_valid.loss.best/test_clean|2620|52576|96.8|2.8|0.4|0.4|3.6|38.3|

|decode_rnnt_conformer_asr_model_valid.loss.best/test_other|2939|52343|92.2|6.9|0.9|0.9|8.7|61.7|

### CER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_rnnt_conformer_asr_model_valid.loss.ave_10best/test_clean|2620|281530|99.3|0.4|0.3|0.3|1.0|35.2|

|decode_rnnt_conformer_asr_model_valid.loss.ave_10best/test_other|2939|272758|97.7|1.4|1.0|0.9|3.2|56.3|

|decode_rnnt_conformer_asr_model_valid.loss.ave_3best/test_clean|2620|281530|99.2|0.4|0.4|0.3|1.1|35.8|

|decode_rnnt_conformer_asr_model_valid.loss.ave_3best/test_other|2939|272758|97.5|1.4|1.1|0.9|3.4|57.0|

|decode_rnnt_conformer_asr_model_valid.loss.ave_5best/test_clean|2620|281530|99.2|0.4|0.4|0.3|1.1|35.8|

|decode_rnnt_conformer_asr_model_valid.loss.ave_5best/test_other|2939|272758|97.6|1.4|1.0|0.9|3.2|56.5|

|decode_rnnt_conformer_asr_model_valid.loss.best/test_clean|2620|281530|99.1|0.5|0.4|0.3|1.2|38.3|

|decode_rnnt_conformer_asr_model_valid.loss.best/test_other|2939|272758|97.1|1.6|1.3|1.0|3.9|61.7|

### TER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_rnnt_conformer_asr_model_valid.loss.ave_10best/test_clean|2620|65818|96.6|2.4|1.0|0.5|3.9|35.2|

|decode_rnnt_conformer_asr_model_valid.loss.ave_10best/test_other|2939|65101|92.1|5.9|2.0|1.3|9.2|56.3|

|decode_rnnt_conformer_asr_model_valid.loss.ave_3best/test_clean|2620|65818|96.6|2.5|1.0|0.5|4.0|35.8|

|decode_rnnt_conformer_asr_model_valid.loss.ave_3best/test_other|2939|65101|91.8|6.1|2.1|1.3|9.6|57.0|

|decode_rnnt_conformer_asr_model_valid.loss.ave_5best/test_clean|2620|65818|96.6|2.5|1.0|0.5|3.9|35.8|

|decode_rnnt_conformer_asr_model_valid.loss.ave_5best/test_other|2939|65101|92.0|5.9|2.0|1.3|9.2|56.5|

|decode_rnnt_conformer_asr_model_valid.loss.best/test_clean|2620|65818|96.1|2.8|1.1|0.6|4.4|38.3|

|decode_rnnt_conformer_asr_model_valid.loss.best/test_other|2939|65101|90.7|6.8|2.5|1.5|10.8|61.7|

## ASR config

<details><summary>expand</summary>

```

config: conf/train_rnnt_conformer_ngpu4.yaml

print_config: false

log_level: INFO

dry_run: false

iterator_type: sequence

output_dir: exp/asr_train_rnnt_conformer_ngpu4_raw_en_bpe5000_sp

ngpu: 2

seed: 0

num_workers: 1

num_att_plot: 3

dist_backend: nccl

dist_init_method: env://

dist_world_size: null

dist_rank: null

local_rank: 0

dist_master_addr: null

dist_master_port: null

dist_launcher: null

multiprocessing_distributed: false

unused_parameters: false

sharded_ddp: false

cudnn_enabled: true

cudnn_benchmark: false

cudnn_deterministic: true

collect_stats: false

write_collected_feats: false

max_epoch: 18

patience: null

val_scheduler_criterion:

- valid

- loss

early_stopping_criterion:

- valid

- loss

- min

best_model_criterion:

- - valid

- loss

- min

keep_nbest_models: 10

nbest_averaging_interval: 0

grad_clip: 5.0

grad_clip_type: 2.0

grad_noise: false

accum_grad: 6

no_forward_run: false

resume: true

train_dtype: float32

use_amp: false

log_interval: null

use_matplotlib: true

use_tensorboard: true

use_wandb: false

wandb_project: null

wandb_id: null

wandb_entity: null

wandb_name: null

wandb_model_log_interval: -1

detect_anomaly: false

pretrain_path: null

init_param: []

ignore_init_mismatch: false

freeze_param: []

num_iters_per_epoch: null

batch_size: 20

valid_batch_size: null

batch_bins: 6000000

valid_batch_bins: null

train_shape_file:

- exp/asr_stats_raw_en_bpe5000_sp/train/speech_shape

- exp/asr_stats_raw_en_bpe5000_sp/train/text_shape.bpe

valid_shape_file:

- exp/asr_stats_raw_en_bpe5000_sp/valid/speech_shape

- exp/asr_stats_raw_en_bpe5000_sp/valid/text_shape.bpe

batch_type: numel

valid_batch_type: null

fold_length:

- 80000

- 150

sort_in_batch: descending

sort_batch: descending

multiple_iterator: false

chunk_length: 500

chunk_shift_ratio: 0.5

num_cache_chunks: 1024

train_data_path_and_name_and_type:

- - dump/raw/train_960_sp/wav.scp

- speech

- kaldi_ark

- - dump/raw/train_960_sp/text

- text

- text

valid_data_path_and_name_and_type:

- - dump/raw/dev/wav.scp

- speech

- kaldi_ark

- - dump/raw/dev/text

- text

- text

allow_variable_data_keys: false

max_cache_size: 0.0

max_cache_fd: 32

valid_max_cache_size: null

optim: adam

optim_conf:

lr: 0.0015

scheduler: warmuplr

scheduler_conf:

warmup_steps: 25000

token_list:

- <blank>

- <unk>

- ▁THE

- S

- ▁AND

- ▁OF

- ▁TO

- ▁A

- ▁IN

- ▁I

- ▁HE

- ▁THAT

- ▁WAS

- ED

- ▁IT

- ''''

- ▁HIS

- ING

- ▁YOU

- ▁WITH

- ▁FOR

- ▁HAD

- T

- ▁AS

- ▁HER

- ▁IS

- ▁BE

- ▁BUT

- ▁NOT

- ▁SHE

- D

- ▁AT

- ▁ON

- LY

- ▁HIM

- ▁THEY

- ▁ALL

- ▁HAVE

- ▁BY

- ▁SO

- ▁THIS

- ▁MY

- ▁WHICH

- ▁ME

- ▁SAID

- ▁FROM

- ▁ONE

- Y

- E

- ▁WERE

- ▁WE

- ▁NO

- N

- ▁THERE

- ▁OR

- ER

- ▁AN

- ▁WHEN

- ▁ARE

- ▁THEIR

- ▁WOULD

- ▁IF

- ▁WHAT

- ▁THEM

- ▁WHO

- ▁OUT

- M

- ▁DO

- ▁WILL

- ▁UP

- ▁BEEN

- P

- R

- ▁MAN

- ▁THEN

- ▁COULD

- ▁MORE

- C

- ▁INTO

- ▁NOW

- ▁VERY

- ▁YOUR

- ▁SOME

- ▁LITTLE

- ES

- ▁TIME

- RE

- ▁CAN

- ▁LIKE

- LL

- ▁ABOUT

- ▁HAS

- ▁THAN

- ▁DID

- ▁UPON

- ▁OVER

- IN

- ▁ANY

- ▁WELL

- ▁ONLY

- B

- ▁SEE

- ▁GOOD

- ▁OTHER

- ▁TWO

- L

- ▁KNOW

- ▁GO

- ▁DOWN

- ▁BEFORE

- A

- AL

- ▁OUR

- ▁OLD

- ▁SHOULD

- ▁MADE

- ▁AFTER

- ▁GREAT

- ▁DAY

- ▁MUST

- ▁COME

- ▁HOW

- ▁SUCH

- ▁CAME

- LE

- ▁WHERE

- ▁US

- ▁NEVER

- ▁THESE

- ▁MUCH

- ▁DE

- ▁MISTER

- ▁WAY

- G

- ▁S

- ▁MAY

- ATION

- ▁LONG

- OR

- ▁AM

- ▁FIRST

- ▁BACK

- ▁OWN

- ▁RE

- ▁AGAIN

- ▁SAY

- ▁MEN

- ▁WENT

- ▁HIMSELF

- ▁HERE

- NESS

- ▁THINK

- V

- IC

- ▁EVEN

- ▁THOUGHT

- ▁HAND

- ▁JUST

- ▁O

- ▁UN

- VE

- ION

- ▁ITS

- 'ON'

- ▁MAKE

- ▁MIGHT

- ▁TOO

- K

- ▁AWAY

- ▁LIFE

- TH

- ▁WITHOUT

- ST

- ▁THROUGH

- ▁MOST

- ▁TAKE

- ▁DON

- ▁EVERY

- F

- O

- ▁SHALL

- ▁THOSE

- ▁EYES

- AR

- ▁STILL

- ▁LAST

- ▁HOUSE

- ▁HEAD

- ABLE

- ▁NOTHING

- ▁NIGHT

- ITY

- ▁LET

- ▁MANY

- ▁OFF

- ▁BEING

- ▁FOUND

- ▁WHILE

- EN

- ▁SAW

- ▁GET

- ▁PEOPLE

- ▁FACE

- ▁YOUNG

- CH

- ▁UNDER

- ▁ONCE

- ▁TELL

- AN

- ▁THREE

- ▁PLACE

- ▁ROOM

- ▁YET

- ▁SAME

- IL

- US

- U

- ▁FATHER

- ▁RIGHT

- EL

- ▁THOUGH

- ▁ANOTHER

- LI

- RI

- ▁HEART

- IT

- ▁PUT

- ▁TOOK

- ▁GIVE

- ▁EVER

- ▁E

- ▁PART

- ▁WORK

- ERS

- ▁LOOK

- ▁NEW

- ▁KING

- ▁MISSUS

- ▁SIR

- ▁LOVE

- ▁MIND

- ▁LOOKED

- W

- RY

- ▁ASKED

- ▁LEFT

- ET

- ▁LIGHT

- CK

- ▁DOOR

- ▁MOMENT

- RO

- ▁WORLD

- ▁THINGS

- ▁HOME

- UL

- ▁THING

- LA

- ▁WHY

- ▁MOTHER

- ▁ALWAYS

- ▁FAR

- FUL

- ▁WATER

- CE

- IVE

- UR

- ▁HEARD

- ▁SOMETHING

- ▁SEEMED

- I

- LO

- ▁BECAUSE

- OL

- ▁END

- ▁TOLD

- ▁CON

- ▁YES

- ▁GOING

- ▁GOT

- RA

- IR

- ▁WOMAN

- ▁GOD

- EST

- TED

- ▁FIND

- ▁KNEW

- ▁SOON

- ▁EACH

- ▁SIDE

- H

- TON

- MENT

- ▁OH

- NE

- Z

- LING

- ▁AGAINST

- TER

- ▁NAME

- ▁MISS

- ▁QUITE

- ▁WANT

- ▁YEARS

- ▁FEW

- ▁BETTER

- ENT

- ▁HALF

- ▁DONE

- ▁ALSO

- ▁BEGAN

- ▁HAVING

- ▁ENOUGH

- IS

- ▁LADY

- ▁WHOLE

- LESS

- ▁BOTH

- ▁SEEN

- ▁SET

- ▁WHITE

- ▁COURSE

- IES

- ▁VOICE

- ▁CALLED

- ▁D

- ▁EX

- ATE

- ▁TURNED

- ▁GAVE

- ▁C

- ▁POOR

- MAN

- UT

- NA

- ▁DEAR

- ISH

- ▁GIRL

- ▁MORNING

- ▁BETWEEN

- LED

- ▁NOR

- IA

- ▁AMONG

- MA

- ▁

- ▁SMALL

- ▁REST

- ▁WHOM

- ▁FELT

- ▁HANDS

- ▁MYSELF

- ▁HIGH

- ▁M

- ▁HOWEVER

- ▁HERSELF

- ▁P

- CO

- ▁STOOD

- ID

- ▁KIND

- ▁HUNDRED

- AS

- ▁ROUND

- ▁ALMOST

- TY

- ▁SINCE

- ▁G

- AM

- ▁LA

- SE

- ▁BOY

- ▁MA

- ▁PERHAPS

- ▁WORDS

- ATED

- ▁HO

- X

- ▁MO

- ▁SAT

- ▁REPLIED

- ▁FOUR

- ▁ANYTHING

- ▁TILL

- ▁UNTIL

- ▁BLACK

- TION

- ▁CRIED

- RU

- TE

- ▁FACT

- ▁HELP

- ▁NEXT

- ▁LOOKING

- ▁DOES

- ▁FRIEND

- ▁LAY

- ANCE

- ▁POWER

- ▁BROUGHT

- VER

- ▁FIRE

- ▁KEEP

- PO

- FF

- ▁COUNTRY

- ▁SEA

- ▁WORD

- ▁CAR

- ▁DAYS

- ▁TOGETHER

- ▁IMP

- ▁REASON

- KE

- ▁INDEED

- TING

- ▁MATTER

- ▁FULL

- ▁TEN

- TIC

- ▁LAND

- ▁RATHER

- ▁AIR

- ▁HOPE

- ▁DA

- ▁OPEN

- ▁FEET

- ▁EN

- ▁FIVE

- ▁POINT

- ▁CO

- OM

- ▁LARGE

- ▁B

- ▁CL

- ME

- ▁GONE

- ▁CHILD

- INE

- GG

- ▁BEST

- ▁DIS

- UM

- ▁HARD

- ▁LORD

- OUS

- ▁WIFE

- ▁SURE

- ▁FORM

- DE

- ▁DEATH

- ANT

- ▁NATURE

- ▁BA

- ▁CARE

- ▁BELIEVE

- PP

- ▁NEAR

- ▁RO

- ▁RED

- ▁WAR

- IE

- ▁SPEAK

- ▁FEAR

- ▁CASE

- ▁TAKEN

- ▁ALONG

- ▁CANNOT

- ▁HEAR

- ▁THEMSELVES

- CI

- ▁PRESENT

- AD

- ▁MASTER

- ▁SON

- ▁THUS

- ▁LI

- ▁LESS

- ▁SUN

- ▁TRUE

- IM

- IOUS

- ▁THOUSAND

- ▁MONEY

- ▁W

- ▁BEHIND

- ▁CHILDREN

- ▁DOCTOR

- AC

- ▁TWENTY

- ▁WISH

- ▁SOUND

- ▁WHOSE

- ▁LEAVE

- ▁ANSWERED

- ▁THOU

- ▁DUR

- ▁HA

- ▁CERTAIN

- ▁PO

- ▁PASSED

- GE

- TO

- ▁ARM

- ▁LO

- ▁STATE

- ▁ALONE

- TA

- ▁SHOW

- ▁NEED

- ▁LIVE

- ND

- ▁DEAD

- ENCE

- ▁STRONG

- ▁PRE

- ▁TI

- ▁GROUND

- SH

- TI

- ▁SHORT

- IAN

- UN

- ▁PRO

- ▁HORSE

- MI

- ▁PRINCE

- ARD

- ▁FELL

- ▁ORDER

- ▁CALL

- AT

- ▁GIVEN

- ▁DARK

- ▁THEREFORE

- ▁CLOSE

- ▁BODY

- ▁OTHERS

- ▁SENT

- ▁SECOND

- ▁OFTEN

- ▁CA

- ▁MANNER

- MO

- NI

- ▁BRING

- ▁QUESTION

- ▁HOUR

- ▁BO

- AGE

- ▁ST

- ▁TURN

- ▁TABLE

- ▁GENERAL

- ▁EARTH

- ▁BED

- ▁REALLY

- ▁SIX

- 'NO'

- IST

- ▁BECOME

- ▁USE

- ▁READ

- ▁SE

- ▁VI

- ▁COMING

- ▁EVERYTHING

- ▁EM

- ▁ABOVE

- ▁EVENING

- ▁BEAUTIFUL

- ▁FEEL

- ▁RAN

- ▁LEAST

- ▁LAW

- ▁ALREADY

- ▁MEAN

- ▁ROSE

- WARD

- ▁ITSELF

- ▁SOUL

- ▁SUDDENLY

- ▁AROUND

- RED

- ▁ANSWER

- ICAL

- ▁RA

- ▁WIND

- ▁FINE

- ▁WON

- ▁WHETHER

- ▁KNOWN

- BER

- NG

- ▁TA

- ▁CAPTAIN

- ▁EYE

- ▁PERSON

- ▁WOMEN

- ▁SORT

- ▁ASK

- ▁BROTHER

- ▁USED

- ▁HELD

- ▁BIG