| modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-12 12:31:00) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 555 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-12 12:28:53) | card (string, length 11 to 1.01M) |
|---|---|---|---|---|---|---|---|---|---|

silveroxides/Chroma-LoRA-Experiments

|

silveroxides

| 2025-09-12T02:57:03Z | 0 | 117 | null |

[

"base_model:lodestones/Chroma",

"base_model:finetune:lodestones/Chroma",

"license:cc-by-sa-4.0",

"region:us"

] | null | 2025-03-09T19:02:25Z |

---

license: cc-by-sa-4.0

base_model:

- lodestones/Chroma

---

<br>

<h1>YOU ARE UNDER NO CIRCUMSTANCE ALLOWED TO REDISTRIBUTE THE</h1>

<h1>FILES IN THIS REPOSITORY ON ANY OTHER SITE SUCH AS CIVITAI</h1>

<br>

These LoRAs are unofficial and were made for personal experimentation.

<br>

They are made available for use, but you will have to figure out the right weight on your own for most of them.

<br>

<br>

<b>Chroma-Anthro</b> - LoRA name kind of says it. Heavily biased towards any anthro style. Up to 1.0 Weight.<br><br>

<b>Chroma-FurAlpha</b> - LoRA based on Chromafur Alpha, Lodestones' first Flux1 model release. Up to 1.0 Weight.<br><br>

<b>Chroma-RealFur</b> - LoRA based on freek22's Midgard Flux model. Up to 1.0 Weight.<br><br>

<b>Chroma-Turbo</b> - General-purpose low-step LoRA (best used in combination with other LoRAs). Keep at mid to normal Weight (0.5-1.0).<br><br>

<b>Chroma2schnell</b> - Schnell-like low-step LoRA. Keep at low Weight (0.3-0.6 for 8-12 steps).<br><br>

<b>Chroma_NSFW_Porn</b> - Mainstream style nsfw LoRA. Up to 1.0 Weight.<br><br>

<b>Chroma-ProjReal</b> - LoRA based on a flux1 model called Project0. Up to 1.0 Weight.<br><br>

<b>Chroma-RealFine</b> - LoRA based on a flux1 model called UltraRealFinetune. Up to 1.0 Weight.<br><br>

<b>Chroma-ProjDev</b> - Basically converts Chroma to generate close to flux1-dev style. Up to 1.0 Weight.<br><br>
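
The weight guidance above can be captured in a small lookup table. This is only a sketch: the dictionary and the `clamp_weight` helper are hypothetical names, not part of this repository, and the 0.0 lower bound for the "up to 1.0" entries is an assumption.

```python
# Recommended weight ranges transcribed from the list above.
# "Up to 1.0" is recorded as (0.0, 1.0); the lower bound is an assumption.
RECOMMENDED_WEIGHTS = {
    "Chroma-Anthro": (0.0, 1.0),
    "Chroma-FurAlpha": (0.0, 1.0),
    "Chroma-RealFur": (0.0, 1.0),
    "Chroma-Turbo": (0.5, 1.0),
    "Chroma2schnell": (0.3, 0.6),
    "Chroma_NSFW_Porn": (0.0, 1.0),
    "Chroma-ProjReal": (0.0, 1.0),
    "Chroma-RealFine": (0.0, 1.0),
    "Chroma-ProjDev": (0.0, 1.0),
}


def clamp_weight(lora_name: str, requested: float) -> float:
    """Clamp a requested LoRA weight into the range suggested for that LoRA."""
    low, high = RECOMMENDED_WEIGHTS[lora_name]
    return max(low, min(high, requested))


if __name__ == "__main__":
    print(clamp_weight("Chroma2schnell", 0.9))
    print(clamp_weight("Chroma-Turbo", 0.2))
```

The clamped value is still only a starting point, since most of these LoRAs need their weight tuned by hand.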

|

VoilaRaj/81_g_TjUX8U

|

VoilaRaj

| 2025-09-12T02:54:56Z | 0 | 0 | null |

[

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

] |

any-to-any

| 2025-09-12T02:54:28Z |

---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

danganhdat/nuextract-2b-ft-maxim-invoice-qlora

|

danganhdat

| 2025-09-12T02:54:54Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_vl",

"image-to-text",

"arxiv:1910.09700",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

image-to-text

| 2025-09-11T18:08:23Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

whybe-choi/Qwen2.5-3B-Instruct-clinic-sft

|

whybe-choi

| 2025-09-12T02:54:09Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"trl",

"sft",

"conversational",

"base_model:Qwen/Qwen2.5-7B-Instruct",

"base_model:finetune:Qwen/Qwen2.5-7B-Instruct",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-12T02:41:18Z |

---

base_model: Qwen/Qwen2.5-7B-Instruct

library_name: transformers

model_name: Qwen2.5-7B-Instruct

tags:

- generated_from_trainer

- trl

- sft

licence: license

---

# Model Card for Qwen2.5-7B-Instruct

This model is a fine-tuned version of [Qwen/Qwen2.5-7B-Instruct](https://huggingface.co/Qwen/Qwen2.5-7B-Instruct).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="whybe-choi/Qwen2.5-3B-Instruct-clinic-sft", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/rlcf-train/rlcf-sft/runs/5ilk1vch)

This model was trained with SFT.

### Framework versions

- TRL: 0.22.2

- Transformers: 4.56.0

- Pytorch: 2.7.1

- Datasets: 4.0.0

- Tokenizers: 0.22.0

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

OPPOer/Qwen-Image-Pruning

|

OPPOer

| 2025-09-12T02:53:59Z | 11 | 1 |

diffusers

|

[

"diffusers",

"safetensors",

"text-to-image",

"en",

"zh",

"base_model:Qwen/Qwen-Image",

"base_model:finetune:Qwen/Qwen-Image",

"license:apache-2.0",

"diffusers:QwenImagePipeline",

"region:us"

] |

text-to-image

| 2025-09-09T11:02:16Z |

---

license: apache-2.0

base_model:

- Qwen/Qwen-Image

language:

- en

- zh

library_name: diffusers

pipeline_tag: text-to-image

---

<div align="center">

<h1>Qwen-Image-Pruning</h1>

<a href='https://github.com/OPPO-Mente-Lab/Qwen-Image-Pruning'><img src="https://img.shields.io/badge/GitHub-OPPOer-blue.svg?logo=github" alt="GitHub"></a>

</div>

## Introduction

This open-source project is based on Qwen-Image and has attempted model pruning, removing 20 layers while retaining the weights of 40 layers, resulting in a model size of 13.3B parameters. The pruned model has experienced a slight drop in objective metrics. The pruned version will continue to be iterated upon. Additionally, the pruned version supports the adaptation and loading of community models such as LoRA and ControlNet. Please stay tuned. For the relevant inference scripts, please refer to https://github.com/OPPO-Mente-Lab/Qwen-Image-Pruning.
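
As a rough sanity check on the 13.3B figure, the snippet below scales an assumed base size by the fraction of retained layers. The 20B base size is an assumption that this card does not state, and the estimate treats all 60 blocks as equally sized while ignoring non-block parameters.

```python
# Assumptions (not stated in this card): the unpruned Qwen-Image transformer
# has about 20B parameters and its blocks are roughly equal in size.
BASE_PARAMS_B = 20.0
RETAINED_LAYERS = 40                  # retained, per the card
REMOVED_LAYERS = 20                   # removed, per the card
TOTAL_LAYERS = RETAINED_LAYERS + REMOVED_LAYERS

estimated_params_b = BASE_PARAMS_B * RETAINED_LAYERS / TOTAL_LAYERS
print(f"Estimated size after pruning: {estimated_params_b:.1f}B parameters")
```

Under those assumptions the estimate lands close to the reported size, which suggests the pruning removed whole transformer blocks.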

<div align="center">

<img src="bench.png">

</div>

## Quick Start

Install the latest versions of diffusers and PyTorch:

```

pip install torch

pip install git+https://github.com/huggingface/diffusers

```

### 1. Qwen-Image-Pruning Inference

```python

import torch

import os

from diffusers import DiffusionPipeline

model_name = "OPPOer/Qwen-Image-Pruning"

if torch.cuda.is_available():
    torch_dtype = torch.bfloat16
    device = "cuda"
else:
    torch_dtype = torch.bfloat16
    device = "cpu"

pipe = DiffusionPipeline.from_pretrained(model_name, torch_dtype=torch_dtype)

pipe = pipe.to(device)

# Generate image

positive_magic = {"en": ", Ultra HD, 4K, cinematic composition.", # for english prompt,

"zh": ",超清,4K,电影级构图。" # for chinese prompt,

}

negative_prompt = " "

prompts = [

'一个穿着"QWEN"标志的T恤的中国美女正拿着黑色的马克笔面相镜头微笑。她身后的玻璃板上手写体写着 "一、Qwen-Image的技术路线: 探索视觉生成基础模型的极限,开创理解与生成一体化的未来。二、Qwen-Image的模型特色:1、复杂文字渲染。支持中英渲染、自动布局; 2、精准图像编辑。支持文字编辑、物体增减、风格变换。三、Qwen-Image的未来愿景:赋能专业内容创作、助力生成式AI发展。"',

'海报,温馨家庭场景,柔和阳光洒在野餐布上,色彩温暖明亮,主色调为浅黄、米白与淡绿,点缀着鲜艳的水果和野花,营造轻松愉快的氛围,画面简洁而富有层次,充满生活气息,传达家庭团聚与自然和谐的主题。文字内容:“共享阳光,共享爱。全家一起野餐,享受美好时光。让每一刻都充满欢笑与温暖。”',

'一个穿着校服的年轻女孩站在教室里,在黑板上写字。黑板中央用整洁的白粉笔写着“Introducing Qwen-Image, a foundational image generation model that excels in complex text rendering and precise image editing”。柔和的自然光线透过窗户,投下温柔的阴影。场景以写实的摄影风格呈现,细节精细,景深浅,色调温暖。女孩专注的表情和空气中的粉笔灰增添了动感。背景元素包括课桌和教育海报,略微模糊以突出中心动作。超精细32K分辨率,单反质量,柔和的散景效果,纪录片式的构图。',

'一个台球桌上放着两排台球,每排5个,第一行的台球上面分别写着"Qwen""Image" "将 "于" "8" ,第二排台球上面分别写着"月" "正" "式" "发" "布" 。',

]

output_dir = 'examples_Pruning'

os.makedirs(output_dir, exist_ok=True)

for prompt in prompts:
    # The first 80 characters of the prompt are used as the file name
    output_img_path = f"{output_dir}/{prompt[:80]}.png"
    image = pipe(
        prompt=prompt + positive_magic['zh'],
        negative_prompt=negative_prompt,
        width=1328,
        height=1328,
        num_inference_steps=8,
        true_cfg_scale=1,
        # Seed on the selected device so the script also runs without CUDA
        generator=torch.Generator(device=device).manual_seed(42)
    ).images[0]
    image.save(output_img_path)

```
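
The script above uses `prompt[:80]` directly as a file name, and the prompts contain quotes, colons, and spaces that some filesystems reject. A minimal sketch of a sanitizing helper follows; `safe_filename` is a hypothetical name and is not part of the Qwen-Image-Pruning repository.

```python
import re


def safe_filename(prompt: str, max_chars: int = 80) -> str:
    """Turn a prompt into a filesystem-friendly PNG file name."""
    # Keep word characters (including CJK) and hyphens; collapse every other
    # run of characters (quotes, colons, slashes, spaces) into one underscore.
    cleaned = re.sub(r"[^\w\-]+", "_", prompt[:max_chars]).strip("_")
    return (cleaned or "image") + ".png"


if __name__ == "__main__":
    print(safe_filename('a/b "c": d'))
```

It could replace the f-string in the loop, for example `os.path.join(output_dir, safe_filename(prompt))`.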

### 2. Qwen-Image-Pruning & Realism-LoRA Inference

```python

import torch

import os

from diffusers import DiffusionPipeline

model_name = "OPPOer/Qwen-Image-Pruning"

lora_name = 'flymy_realism.safetensors'

if torch.cuda.is_available():
    torch_dtype = torch.bfloat16
    device = "cuda"
else:
    torch_dtype = torch.bfloat16
    device = "cpu"

pipe = DiffusionPipeline.from_pretrained(model_name, torch_dtype=torch_dtype)

pipe = pipe.to(device)

pipe.load_lora_weights(lora_name, adapter_name="lora")

# Generate image

positive_magic = {"en": ", Ultra HD, 4K, cinematic composition.", # for english prompt,

"zh": ",超清,4K,电影级构图。" # for chinese prompt,

}

negative_prompt = " "

prompts = [

'一个穿着"QWEN"标志的T恤的中国美女正拿着黑色的马克笔面相镜头微笑。她身后的玻璃板上手写体写着 "一、Qwen-Image的技术路线: 探索视觉生成基础模型的极限,开创理解与生成一体化的未来。二、Qwen-Image的模型特色:1、复杂文字渲染。支持中英渲染、自动布局; 2、精准图像编辑。支持文字编辑、物体增减、风格变换。三、Qwen-Image的未来愿景:赋能专业内容创作、助力生成式AI发展。"',

'海报,温馨家庭场景,柔和阳光洒在野餐布上,色彩温暖明亮,主色调为浅黄、米白与淡绿,点缀着鲜艳的水果和野花,营造轻松愉快的氛围,画面简洁而富有层次,充满生活气息,传达家庭团聚与自然和谐的主题。文字内容:“共享阳光,共享爱。全家一起野餐,享受美好时光。让每一刻都充满欢笑与温暖。”',

'一个穿着校服的年轻女孩站在教室里,在黑板上写字。黑板中央用整洁的白粉笔写着“Introducing Qwen-Image, a foundational image generation model that excels in complex text rendering and precise image editing”。柔和的自然光线透过窗户,投下温柔的阴影。场景以写实的摄影风格呈现,细节精细,景深浅,色调温暖。女孩专注的表情和空气中的粉笔灰增添了动感。背景元素包括课桌和教育海报,略微模糊以突出中心动作。超精细32K分辨率,单反质量,柔和的散景效果,纪录片式的构图。',

'一个台球桌上放着两排台球,每排5个,第一行的台球上面分别写着"Qwen""Image" "将 "于" "8" ,第二排台球上面分别写着"月" "正" "式" "发" "布" 。',

]

output_dir = 'examples_Pruning+Realism_LoRA'

os.makedirs(output_dir, exist_ok=True)

for prompt in prompts:
    # The first 80 characters of the prompt are used as the file name
    output_img_path = f"{output_dir}/{prompt[:80]}.png"
    image = pipe(
        prompt=prompt + positive_magic['zh'],
        negative_prompt=negative_prompt,
        width=1328,
        height=1328,
        num_inference_steps=8,
        true_cfg_scale=1,
        # Seed on the selected device so the script also runs without CUDA
        generator=torch.Generator(device=device).manual_seed(42)
    ).images[0]
    image.save(output_img_path)

```

### 3. Qwen-Image-Pruning & ControlNet Inference

```python

import os

import glob

import torch

from diffusers import DiffusionPipeline

from diffusers.utils import load_image

from diffusers import QwenImageControlNetPipeline, QwenImageControlNetModel

model_name = "OPPOer/Qwen-Image-Pruning"

controlnet_name = "InstantX/Qwen-Image-ControlNet-Union"

# Load the pipeline

if torch.cuda.is_available():
    torch_dtype = torch.bfloat16
    device = "cuda"
else:
    torch_dtype = torch.bfloat16
    device = "cpu"

controlnet = QwenImageControlNetModel.from_pretrained(controlnet_name, torch_dtype=torch.bfloat16)

pipe = QwenImageControlNetPipeline.from_pretrained(

model_name, controlnet=controlnet, torch_dtype=torch.bfloat16

)

pipe = pipe.to(device)

# Generate image

prompt_dict = {

"soft_edge.png": "Photograph of a young man with light brown hair jumping mid-air off a large, reddish-brown rock. He's wearing a navy blue sweater, light blue shirt, gray pants, and brown shoes. His arms are outstretched, and he has a slight smile on his face. The background features a cloudy sky and a distant, leafless tree line. The grass around the rock is patchy.",

"canny.png": "Aesthetics art, traditional asian pagoda, elaborate golden accents, sky blue and white color palette, swirling cloud pattern, digital illustration, east asian architecture, ornamental rooftop, intricate detailing on building, cultural representation.",

"depth.png": "A swanky, minimalist living room with a huge floor-to-ceiling window letting in loads of natural light. A beige couch with white cushions sits on a wooden floor, with a matching coffee table in front. The walls are a soft, warm beige, decorated with two framed botanical prints. A potted plant chills in the corner near the window. Sunlight pours through the leaves outside, casting cool shadows on the floor.",

"pose.png": "Photograph of a young man with light brown hair and a beard, wearing a beige flat cap, black leather jacket, gray shirt, brown pants, and white sneakers. He's sitting on a concrete ledge in front of a large circular window, with a cityscape reflected in the glass. The wall is cream-colored, and the sky is clear blue. His shadow is cast on the wall.",

}

controlnet_conditioning_scale = 1.0

output_dir = f'examples_Pruning+ControlNet'

os.makedirs(output_dir, exist_ok=True)

for path in glob.glob('conds/*'):
    control_image = load_image(path)
    image_name = path.split('/')[-1]
    # Only condition images that have a matching prompt are processed
    if image_name in prompt_dict:
        image = pipe(
            prompt=prompt_dict[image_name],
            negative_prompt=" ",
            control_image=control_image,
            controlnet_conditioning_scale=controlnet_conditioning_scale,
            width=control_image.size[0],
            height=control_image.size[1],
            num_inference_steps=8,
            true_cfg_scale=4.0,
            # Seed on the selected device so the script also runs without CUDA
            generator=torch.Generator(device=device).manual_seed(42),
        ).images[0]
        image.save(os.path.join(output_dir, image_name))

```
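
The loop above extracts the file name with `path.split('/')[-1]`, which assumes forward-slash separators. A portable variant could pair condition images with prompts via `pathlib`, as sketched below; `match_conditions` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path


def match_conditions(cond_dir, prompt_dict):
    """Yield (image_path, prompt) for condition images that have a prompt."""
    for path in sorted(Path(cond_dir).glob("*")):
        # Path.name is the final path component on every platform
        prompt = prompt_dict.get(path.name)
        if prompt is not None:
            yield path, prompt


if __name__ == "__main__":
    for image_path, prompt in match_conditions("conds", {"canny.png": "a pagoda"}):
        print(image_path, "->", prompt)
```

Each yielded path can be passed to `load_image(str(image_path))` before calling the pipeline.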

|

stonermay/blockassist-bc-diving_lightfooted_caterpillar_1757645412

|

stonermay

| 2025-09-12T02:51:22Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"diving lightfooted caterpillar",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-12T02:51:12Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- diving lightfooted caterpillar

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

omerbektasss/blockassist-bc-insectivorous_bold_lion_1757645435

|

omerbektasss

| 2025-09-12T02:50:55Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"insectivorous bold lion",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-12T02:50:51Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- insectivorous bold lion

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

VoilaRaj/81_g_j09815

|

VoilaRaj

| 2025-09-12T02:50:04Z | 0 | 0 | null |

[

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

] |

any-to-any

| 2025-09-12T02:49:35Z |

---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

antisoc-qa-assoc/uphill-instruct-crest-e2-clash-e2-lime-faint-try1

|

antisoc-qa-assoc

| 2025-09-12T02:47:51Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"mixtral",

"text-generation",

"mergekit",

"merge",

"arxiv:2311.03099",

"base_model:antisoc-qa-assoc/Mixtral-8x7B-Yes-Instruct-LimaRP",

"base_model:merge:antisoc-qa-assoc/Mixtral-8x7B-Yes-Instruct-LimaRP",

"base_model:antisoc-qa-assoc/uphill-instruct-clash-e2",

"base_model:merge:antisoc-qa-assoc/uphill-instruct-clash-e2",

"base_model:antisoc-qa-assoc/uphill-instruct-crest-0.1-e2",

"base_model:merge:antisoc-qa-assoc/uphill-instruct-crest-0.1-e2",

"base_model:mistralai/Mixtral-8x7B-v0.1",

"base_model:merge:mistralai/Mixtral-8x7B-v0.1",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-12T02:22:56Z |

---

base_model:

- mistralai/Mixtral-8x7B-v0.1

- antisoc-qa-assoc/uphill-instruct-crest-0.1-e2

- antisoc-qa-assoc/uphill-instruct-clash-e2

- antisoc-qa-assoc/Mixtral-8x7B-Yes-Instruct-LimaRP

library_name: transformers

tags:

- mergekit

- merge

---

# uphill-instruct-crest-e2-clash-e2-lime-faint-try1

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [DARE TIES](https://arxiv.org/abs/2311.03099) merge method using [mistralai/Mixtral-8x7B-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-v0.1) as a base.
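
To illustrate the idea behind the DARE step, here is a deliberately simplified sketch in plain Python. It is not mergekit's implementation: it works on flat lists of floats, omits the TIES sign-election step and weight normalization, and all function names are hypothetical.

```python
import random


def dare_delta(base, tuned, density, rng):
    """Keep each delta with probability `density` and rescale the survivors."""
    out = []
    for b, t in zip(base, tuned):
        delta = t - b
        keep = rng.random() < density
        # Rescaling by 1/density keeps the expected delta unchanged
        out.append(delta / density if keep else 0.0)
    return out


def merge(base, tuned_models, densities, weights, seed=0):
    """Add weighted, sparsified deltas back onto the base parameters."""
    rng = random.Random(seed)
    merged = list(base)
    for tuned, density, weight in zip(tuned_models, densities, weights):
        for i, d in enumerate(dare_delta(base, tuned, density, rng)):
            merged[i] += weight * d
    return merged


if __name__ == "__main__":
    base = [0.0, 0.0, 0.0, 0.0]
    tuned = [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0]]
    print(merge(base, tuned, densities=[0.4, 0.5], weights=[0.3, 0.6]))
```

A low density with a high weight, as in the configuration below, means few parameters are changed, but those that are changed move strongly.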

### Models Merged

The following models were included in the merge:

* ./Mixtral-8x7B-Yes-Instruct-LimaRP

* ./uphill-instruct-crest-e2-nolime

* ./uphill-pure-clash-0.2-e2

### Configuration

The following YAML configuration was used to produce this model:

```yaml

# Faint technique, crest-e2 clash-e1

#

# review:

# - Instruction-following:

# - Swerve:

# - Word choice:

# - Rhythm, cadence:

# - Notes:

# -

#

# - Design:

# The idea here is to cut crush -- formerly the very cornerstone

# of our merges -- completely out. it's very good for word choice

# but crest is, too. The only problem is I seem to remember that

# crest is overfit. So, we make it faint.

#

# Note: nearly two years later I'm trying to bring Mixtral

# back from the dead. There are multiple reasons:

# 1. Mistral-Small is kind of crap and smells like slop.

# Hell, even the comprehension felt weak but maybe that's

# just how I tried to sample it.

# 2. Llama3 hasn't been interesting and is definitely crammed

# with slop.

# 3. Mixtral is probably the least synthetic-trained sounding

# of all the OG models. Even when I tried the Quen shit

# it seemed to be just openai. Mixtral is still sloppy.

#

# So, the pieces that are ours are uphill: non-instruct lora

# being applied to the instruct rawdog without an intermediate

# step.

#

# Obviously we're using pure elemental antisoc loras, hush's shit

# but not her merge because the merges aren't "uphill", as in,

# a lora made with "mixtral non-instruct" applied straight to

# the instruct with loraize.

#

# The notion, which came to me in the middle of the night, is

# to have the hush loras be only barely present layer-wise but

# weighted heavily. Likewise with LimaRP, send uphill from

# doctor-shotgun's qlora straight into mixtral-instruct

#

# My hypothesis is that we should get really fucking close to

# pure-ass mixtral-instruct in terms of attention, but that

# we're weighting really hard not to write like it. I have no

# idea if that's how it works--I'm a fucking caveman.

#

# What I'm given to understand, and I'm way out of my depth,

# is that the antisoc layers won't have blotched the instruct

# as badly as they usually do, but when they're triggered they

# are dominant. It's entirely possible I've got no idea what

# I'm saying.

# Model descriptions:

# - crush: poetry; we have all checkpoints

# - crest: fic; we only have e2 for this

# - clash: novels (I think); we have all checkpoints for 0.2

models:
  # I wonder what happens if we just hurl this out the window
  # - model: mistralai/Mixtral-8x7B-Instruct-v0.1
  #   parameters:
  #     density: 0.9
  #     weight: 0.55
  #
  # crest is fic
  - model: ./uphill-instruct-crest-e2-nolime
    # i found lima in this, I need to cook another
    parameters:
      density: 0.4
      weight: 0.3
  # This is actually an uphill lima but I didn't name it that way.
  - model: ./Mixtral-8x7B-Yes-Instruct-LimaRP
    parameters:
      # Still just a breath of layers from the thing
      density: 0.2
      # I am gimping its weight compared to hush tunes because limarp has too
      # much ai-slop and amateur-smut cliche slop. Honestly, if there were
      # something better than limarp I'd try to train it myself but I don't
      # know if there is.
      weight: 0.1
  # Pure uphill clash at e2. Also more weight.
  - model: ./uphill-pure-clash-0.2-e2
    parameters:
      density: 0.5
      weight: 0.6
# della sucked ass so dare_ties it is
merge_method: dare_ties
# I know all of these look like instruct but the lora
# is actually not so we go to the base base
base_model: mistralai/Mixtral-8x7B-v0.1
parameters:
  normalize: true
  int8_mask: true
dtype: bfloat16

```

|

FreedomIntelligence/EchoX-3B

|

FreedomIntelligence

| 2025-09-12T02:45:36Z | 4 | 1 | null |

[

"safetensors",

"ACLlama",

"audio-text-to-audio-text",

"speech-understanding",

"audio",

"chat",

"en",

"dataset:custom",

"arxiv:2509.09174",

"license:apache-2.0",

"region:us"

] | null | 2025-09-08T12:23:36Z |

---

language:

- en

tags:

- audio-text-to-audio-text

- speech-understanding

- audio

- chat

license: apache-2.0

datasets:

- custom

metrics:

- wer

- bleu

- AIR-Bench

---

<div align="center">

<h1>

EchoX: Towards Mitigating Acoustic-Semantic Gap via Echo Training for Speech-to-Speech LLMs

</h1>

</div>

<p align="center">

<font size="3"><a href="https://github.com/FreedomIntelligence/EchoX">🐈⬛ Github</a> | <a href="https://arxiv.org/abs/2509.09174">📃 Paper</a> | <a href="https://huggingface.co/spaces/FreedomIntelligence/EchoX">🚀 Space (8B)</a> </font>

</p>

## Model Description

EchoX is a Speech-to-Speech large language model that addresses the acoustic-semantic gap. This is the 3B version. By introducing **Echo Training**, EchoX integrates semantic and acoustic learning, mitigating the degradation of reasoning ability observed in existing speech-based LLMs. It is trained on only 10k hours of data while delivering state-of-the-art results in knowledge-based question answering and speech interaction tasks.

### Key Features

<div>

<ul>

<font size="3"><li>Mitigates Acoustic-Semantic Gap in Speech-to-Speech LLMs</li></font>

<font size="3"><li>Introduces Echo Training with a Novel Three-Stage Pipeline (S2T, T2C, Echo)</li></font>

<font size="3"><li>Trained on Only 10k Hours of Curated Data, Ensuring Efficiency</li></font>

<font size="3"><li>Achieves State-of-the-Art Performance in Knowledge-Based QA Benchmarks</li></font>

<font size="3"><li>Preserves Reasoning and Knowledge Abilities for Interactive Speech Tasks</li></font>

</ul>

</div>

## Usage

Load the EchoX model and run inference with your audio files as shown in the <a href="https://github.com/FreedomIntelligence/EchoX">GitHub repository</a>.

# <span>📖 Citation</span>

```

@misc{zhang2025echoxmitigatingacousticsemanticgap,

title={EchoX: Towards Mitigating Acoustic-Semantic Gap via Echo Training for Speech-to-Speech LLMs},

author={Yuhao Zhang and Yuhao Du and Zhanchen Dai and Xiangnan Ma and Kaiqi Kou and Benyou Wang and Haizhou Li},

year={2025},

eprint={2509.09174},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2509.09174},

}

```

|

LE1X1N/ppo-pytorch-LunarLander-v2

|

LE1X1N

| 2025-09-12T02:45:32Z | 0 | 0 | null |

[

"tensorboard",

"LunarLander-v2",

"ppo",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"deep-rl-course",

"model-index",

"region:us"

] |

reinforcement-learning

| 2025-09-12T02:35:22Z |

---

tags:

- LunarLander-v2

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-course

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 103.73 +/- 112.03

name: mean_reward

verified: false

---

# PPO Agent Playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2.

# Hyperparameters

```python

{'exp_name': 'ppo_train',
 'seed': 1,
 'torch_deterministic': True,
 'cuda': True,
 'track': False,
 'wandb_project_name': 'cleanRL',
 'wandb_entity': None,
 'capture_video': False,
 'env_id': 'LunarLander-v2',
 'total_timesteps': 500000,
 'learning_rate': 0.00025,
 'num_envs': 4,
 'num_steps': 128,
 'anneal_lr': True,
 'gae': True,
 'gamma': 0.99,
 'gae_lambda': 0.95,
 'num_minibatches': 4,
 'update_epochs': 4,
 'norm_adv': True,
 'clip_coef': 0.2,
 'clip_vloss': True,
 'ent_coef': 0.01,
 'vf_coef': 0.5,
 'max_grad_norm': 0.5,
 'target_kl': None,
 'repo_id': 'LE1X1N/ppo-pytorch-LunarLander-v2',
 'batch_size': 512,
 'minibatch_size': 128}

```
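
The derived values in the table above follow from the other settings. The sketch below reproduces that arithmetic, assuming the usual CleanRL-style PPO convention (suggested by `wandb_project_name`, but not confirmed by this card) in which one rollout collects `num_envs * num_steps` transitions.

```python
# Values copied from the hyperparameter table above
num_envs = 4
num_steps = 128
num_minibatches = 4
total_timesteps = 500_000

# Transitions collected per rollout across all parallel environments
batch_size = num_envs * num_steps
# Each update epoch splits the rollout into equally sized minibatches
minibatch_size = batch_size // num_minibatches
# Number of full rollout/update cycles that fit in the timestep budget
num_updates = total_timesteps // batch_size

print(batch_size, minibatch_size, num_updates)
```

With these settings the run performs a little under a thousand policy updates, which may explain the high variance in the reported mean reward.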

|

mcptester0606/MyAwesomeModel-TestRepo

|

mcptester0606

| 2025-09-12T02:45:19Z | 0 | 0 |

transformers

|

[

"transformers",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | 2025-09-12T02:45:04Z |

---

license: mit

library_name: transformers

---

# MyAwesomeModel

<!-- markdownlint-disable first-line-h1 -->

<!-- markdownlint-disable html -->

<!-- markdownlint-disable no-duplicate-header -->

<div align="center">

<img src="figures/fig1.png" width="60%" alt="MyAwesomeModel" />

</div>

<hr>

<div align="center" style="line-height: 1;">

<a href="LICENSE" style="margin: 2px;">

<img alt="License" src="figures/fig2.png" style="display: inline-block; vertical-align: middle;"/>

</a>

</div>

## 1. Introduction

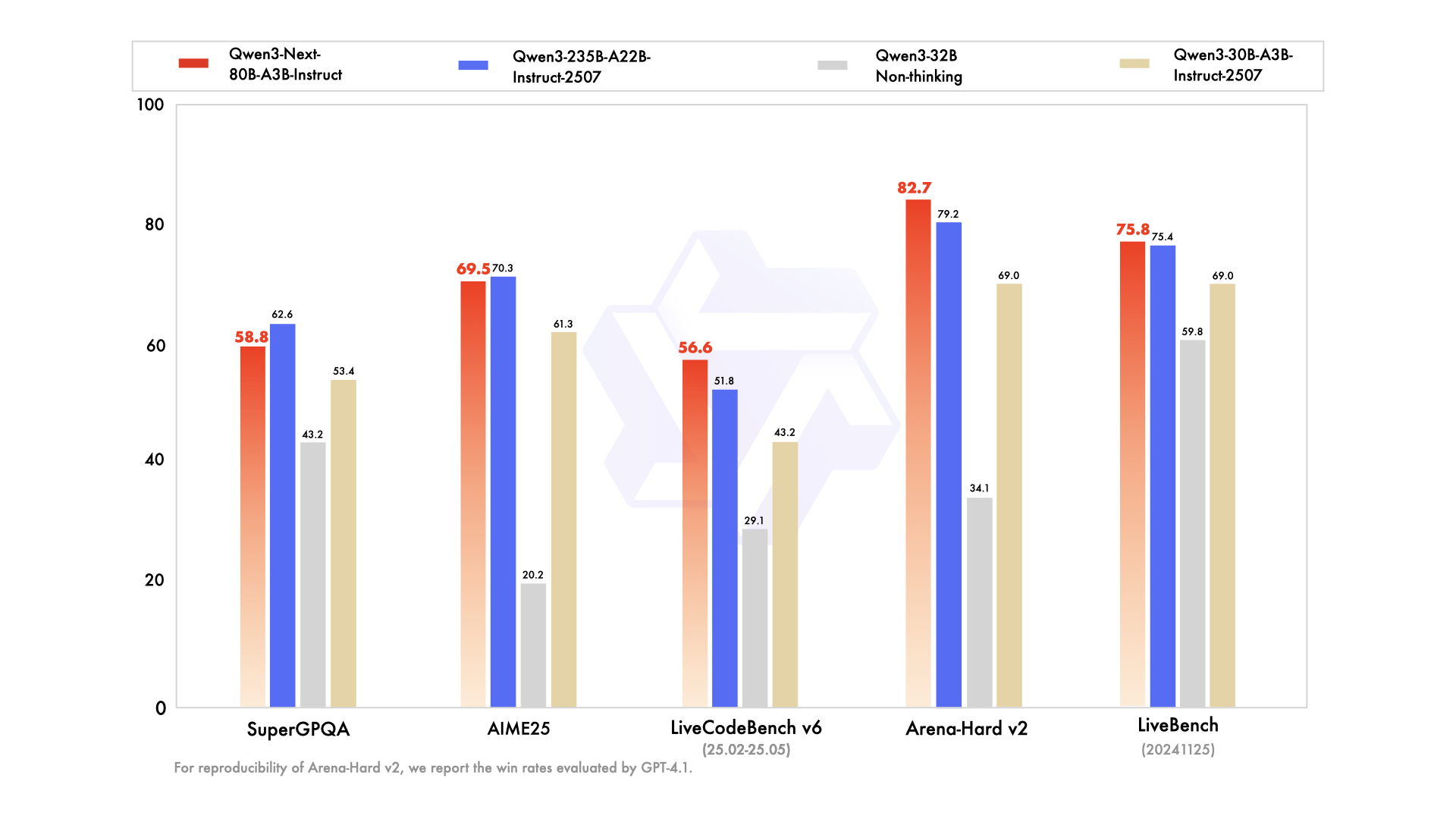

The MyAwesomeModel has undergone a significant version upgrade. In the latest update, MyAwesomeModel has significantly improved its depth of reasoning and inference capabilities by leveraging increased computational resources and introducing algorithmic optimization mechanisms during post-training. The model has demonstrated outstanding performance across various benchmark evaluations, including mathematics, programming, and general logic. Its overall performance is now approaching that of other leading models.

<p align="center">

<img width="80%" src="figures/fig3.png">

</p>

Compared to the previous version, the upgraded model shows significant improvements in handling complex reasoning tasks. For instance, in the AIME 2025 test, the model’s accuracy has increased from 70% in the previous version to 87.5% in the current version. This advancement stems from enhanced thinking depth during the reasoning process: in the AIME test set, the previous model used an average of 12K tokens per question, whereas the new version averages 23K tokens per question.

Beyond its improved reasoning capabilities, this version also offers a reduced hallucination rate and enhanced support for function calling.

## 2. Evaluation Results

### Comprehensive Benchmark Results

<div align="center">

| | Benchmark | Model1 | Model2 | Model1-v2 | MyAwesomeModel |
|---|---|---|---|---|---|
| **Core Reasoning Tasks** | Math Reasoning | 0.510 | 0.535 | 0.521 | 0.550 |
| | Logical Reasoning | 0.789 | 0.801 | 0.810 | 0.819 |
| | Common Sense | 0.716 | 0.702 | 0.725 | 0.736 |
| **Language Understanding** | Reading Comprehension | 0.671 | 0.685 | 0.690 | 0.700 |
| | Question Answering | 0.582 | 0.599 | 0.601 | 0.607 |
| | Text Classification | 0.803 | 0.811 | 0.820 | N/A |
| | Sentiment Analysis | 0.777 | 0.781 | 0.790 | 0.792 |
| **Generation Tasks** | Code Generation | 0.615 | 0.631 | 0.640 | N/A |
| | Creative Writing | 0.588 | 0.579 | 0.601 | 0.610 |
| | Dialogue Generation | 0.621 | 0.635 | 0.639 | N/A |
| | Summarization | 0.745 | 0.755 | 0.760 | 0.767 |
| **Specialized Capabilities** | Translation | 0.782 | 0.799 | 0.801 | 0.804 |
| | Knowledge Retrieval | 0.651 | 0.668 | 0.670 | 0.676 |
| | Instruction Following | 0.733 | 0.749 | 0.751 | 0.758 |
| | Safety Evaluation | 0.718 | 0.701 | 0.725 | 0.739 |

</div>

### Overall Performance Summary

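MyAwesomeModel posts the highest score among the compared models on every benchmark for which it has a reported result, across the reasoning, language understanding, generation, and specialized-capability categories. Results for Text Classification, Code Generation, and Dialogue Generation are listed as N/A.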

## 3. Chat Website & API Platform

We offer a chat interface and API for you to interact with MyAwesomeModel. Please check our official website for more details.

## 4. How to Run Locally

Please refer to our code repository for more information about running MyAwesomeModel locally.

Compared to previous versions, the usage recommendations for MyAwesomeModel have the following changes:

1. System prompt is supported.

2. It is not required to add special tokens at the beginning of the output to force the model into a specific thinking pattern.

The model architecture of MyAwesomeModel-Small is identical to its base model, but it shares the same tokenizer configuration as the main MyAwesomeModel. This model can be run in the same manner as its base model.

### System Prompt

We recommend using the following system prompt with a specific date.

```

You are MyAwesomeModel, a helpful AI assistant.

Today is {current date}.

```

For example,

```

You are MyAwesomeModel, a helpful AI assistant.

Today is May 28, 2025, Wednesday.

```
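The date line can be filled in programmatically. The following is a minimal sketch, assuming the `Month D, YYYY, Weekday` layout used in the example above; `build_system_prompt` and the `{current_date}` placeholder name are hypothetical and not part of any MyAwesomeModel tooling.

```python
from datetime import date

# The recommended system prompt, with a Python-friendly placeholder name (an assumption).
SYSTEM_PROMPT_TEMPLATE = (
    "You are MyAwesomeModel, a helpful AI assistant.\n"
    "Today is {current_date}."
)


def build_system_prompt(today: date) -> str:
    """Fill the system prompt with a concrete date, e.g. 'May 28, 2025, Wednesday'."""
    # %B and %A give English month and weekday names under the default C locale.
    current_date = (
        f"{today.strftime('%B')} {today.day}, {today.year}, {today.strftime('%A')}"
    )
    return SYSTEM_PROMPT_TEMPLATE.format(current_date=current_date)


if __name__ == "__main__":
    print(build_system_prompt(date.today()))
```

Deriving the weekday from the date object, rather than typing it by hand, keeps the two from drifting apart.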

### Temperature

We recommend setting the temperature parameter $T_{model}$ to 0.6.
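For intuition about what this setting does: in standard temperature sampling, logits are divided by the temperature before the softmax, so a value below 1 concentrates probability on the most likely tokens. The sketch below illustrates that general technique with made-up logits; it is not taken from MyAwesomeModel's inference code.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after scaling them by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # hypothetical logits for three candidate tokens
probs_default = softmax_with_temperature(logits, 1.0)
probs_recommended = softmax_with_temperature(logits, 0.6)
```

With these inputs, the top token receives a larger share of the probability mass at 0.6 than at 1.0, and the least likely token receives a smaller one.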

### Prompts for File Uploading and Web Search

For file uploading, please follow the template to create prompts, where {file_name}, {file_content} and {question} are arguments.

```

file_template = \
"""[file name]: {file_name}
[file content begin]
{file_content}
[file content end]
{question}"""

```
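Filling the template is a plain string substitution. A minimal sketch, assuming the template's lines are separated by single newlines; `build_file_prompt` and the sample file are hypothetical.

```python
# The file-upload template, written as one string with explicit newlines.
FILE_TEMPLATE = (
    "[file name]: {file_name}\n"
    "[file content begin]\n"
    "{file_content}\n"
    "[file content end]\n"
    "{question}"
)


def build_file_prompt(file_name: str, file_content: str, question: str) -> str:
    """Substitute the three arguments into the file-upload template."""
    # str.format only interprets braces in the template itself, so file
    # contents that contain '{' or '}' (JSON, code) pass through unchanged.
    return FILE_TEMPLATE.format(
        file_name=file_name, file_content=file_content, question=question
    )


prompt = build_file_prompt(
    "notes.txt", "Line one.\nLine two.", "Summarize this file."
)
```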

For web search enhanced generation, we recommend the following prompt template where {search_results}, {cur_date}, and {question} are arguments.

```

search_answer_en_template = \
'''# The following contents are the search results related to the user's message:

{search_results}

In the search results I provide to you, each result is formatted as [webpage X begin]...[webpage X end], where X represents the numerical index of each article. Please cite the context at the end of the relevant sentence when appropriate. Use the citation format [citation:X] in the corresponding part of your answer. If a sentence is derived from multiple contexts, list all relevant citation numbers, such as [citation:3][citation:5]. Be sure not to cluster all citations at the end; instead, include them in the corresponding parts of the answer.

When responding, please keep the following points in mind:

- Today is {cur_date}.

- Not all content in the search results is closely related to the user's question. You need to evaluate and filter the search results based on the question.

- For listing-type questions (e.g., listing all flight information), try to limit the answer to 10 key points and inform the user that they can refer to the search sources for complete information. Prioritize providing the most complete and relevant items in the list. Avoid mentioning content not provided in the search results unless necessary.

- For creative tasks (e.g., writing an essay), ensure that references are cited within the body of the text, such as [citation:3][citation:5], rather than only at the end of the text. You need to interpret and summarize the user's requirements, choose an appropriate format, fully utilize the search results, extract key information, and generate an answer that is insightful, creative, and professional. Extend the length of your response as much as possible, addressing each point in detail and from multiple perspectives, ensuring the content is rich and thorough.

- If the response is lengthy, structure it well and summarize it in paragraphs. If a point-by-point format is needed, try to limit it to 5 points and merge related content.

- For objective Q&A, if the answer is very brief, you may add one or two related sentences to enrich the content.

- Choose an appropriate and visually appealing format for your response based on the user's requirements and the content of the answer, ensuring strong readability.

- Your answer should synthesize information from multiple relevant webpages and avoid repeatedly citing the same webpage.

- Unless the user requests otherwise, your response should be in the same language as the user's question.

# The user's message is:

{question}'''

```
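The template expects `{search_results}` to already be wrapped in `[webpage X begin]...[webpage X end]` markers. A minimal sketch of that step follows; it assumes indices start at 1 and pages are joined with newlines, neither of which the template states. `format_search_results` is a hypothetical helper, and `SHORT_TEMPLATE` is an abbreviated stand-in for the full template above.

```python
def format_search_results(pages: list[str]) -> str:
    """Wrap each page's text in [webpage X begin]...[webpage X end] markers."""
    blocks = [
        f"[webpage {index} begin]{text}[webpage {index} end]"
        for index, text in enumerate(pages, start=1)  # assumption: X starts at 1
    ]
    return "\n".join(blocks)


# Abbreviated stand-in for search_answer_en_template; only the placeholders matter here.
SHORT_TEMPLATE = (
    "# The following contents are the search results related to the user's message:\n"
    "{search_results}\n"
    "- Today is {cur_date}.\n"
    "# The user's message is:\n"
    "{question}"
)

search_prompt = SHORT_TEMPLATE.format(
    search_results=format_search_results(["First page text.", "Second page text."]),
    cur_date="May 28, 2025",
    question="What do the pages say?",
)
```

The index in each marker is what the model is asked to echo back in `[citation:X]`, so the numbering should stay stable between the prompt and whatever later resolves citations to sources.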

## 5. License

This code repository is licensed under the [MIT License](LICENSE). The use of MyAwesomeModel models is also subject to the [MIT License](LICENSE). The model series supports commercial use and distillation.

## 6. Contact

If you have any questions, please raise an issue on our GitHub repository or contact us at contact@MyAwesomeModel.ai.

```

|

KISTI-KONI/KONI-4B-instruct-20250901

|

KISTI-KONI

| 2025-09-12T02:45:08Z | 242 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gemma3_text",

"text-generation",

"pytorch",

"causal-lm",

"gemma3",

"4b",

"conversational",

"ko",

"en",

"base_model:google/gemma-3-4b-pt",

"base_model:finetune:google/gemma-3-4b-pt",

"license:gemma",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-01T05:28:42Z |

---

license: gemma

language:

- ko

- en

tags:

- text-generation

- pytorch

- causal-lm

- gemma3

- 4b

library_name: transformers

base_model:

- google/gemma-3-4b-pt

---

# KISTI-KONI/KONI-4B-instruct-20250901

## Model Description

**KONI (KISTI Open Neural Intelligence)** is a large language model developed by the Korea Institute of Science and Technology Information (KISTI). Designed specifically for the scientific and technological domains, KONI excels in both Korean and English, making it an ideal tool for tasks requiring specialized knowledge in these areas.

---

## Key Features

- **Bilingual Model**: Supports both Korean and English, with a focus on scientific and technical texts.

- **Post-training**: The model undergoes post-training via instruction tuning (IT) and direct preference optimization (DPO) using a filtered, high-quality bilingual dataset that includes scientific data and publicly available resources. This ensures adaptability to evolving scientific and technological content.

- **Base Model**: Built upon *KISTI-KONI/KONI-4B-base-20250819*, KONI-4B-instruct undergoes post-training for superior performance on both general and scientific benchmarks.

- **Training Environment**: Trained on *24* H200 GPUs at the KISTI supercomputer, optimizing both speed and quality during development.

- **Dataset**: Utilizes a high-quality and balanced dataset of 9 billion instruction-following pairs, comprising scientific texts as well as publicly available bilingual data.

- **Data Optimization**: The post-training process involved testing a variety of data distributions (balanced, reasoning-enhanced, knowledge-enhanced, minimal Korean settings, etc.) and selecting the optimal combination for training.

- **Enhanced Performance**: KONI-4B-instruct, developed through instruction tuning of the KONI-4B-base model, delivers superior performance compared to other similarly-sized models.

---

## Model Performance

KONI-4B-instruct has demonstrated strong performance on a variety of scientific benchmarks, outperforming several other 4B-sized pretrained models. Here is a comparison of KONI-4B-instruct’s performance across various benchmarks including scientific and technological benchmarks:

| Rank | Model | KMMLU | KMMLU-Hard | KMMLU-Direct | KoBEST | HAERAE | kormedmcqa | MMLU | ARC_easy | ARC_challenge | Hellaswag | ScholarBench-MC | AidaBench-MC | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Qwen/Qwen3-8B | 0.5500 | 0.2900 | 0.5558 | 0.7800 | 0.6700 | 0.3750 | 0.7400 | 0.8700 | 0.6400 | 0.5700 | 0.7094 | 0.7314 | 0.623462 |
| 2 | kakaocorp/kanana-1.5-8b-base | 0.4800 | 0.2500 | 0.4872 | 0.6200 | 0.8200 | 0.5910 | 0.6300 | 0.8300 | 0.5600 | 0.6000 | 0.6800 | 0.7548 | 0.608580 |
| 3 | LGAI-EXAONE/EXAONE-3.5-7.8B-Instruct | 0.4700 | 0.2300 | 0.4532 | 0.5900 | 0.7800 | 0.5310 | 0.6500 | 0.8300 | 0.5900 | 0.6200 | 0.6900 | 0.7057 | 0.594986 |
| 4 | **KISTI-KONI/KONI-4B-instruct-20250901** | **0.4188** | **0.2110** | **0.4194** | **0.7393** | **0.7333** | **0.4719** | **0.5823** | **0.8342** | **0.5452** | **0.5783** | **0.6980** | **0.6274** | **0.571603** |
| 5 | kakaocorp/kanana-1.5-2.1b-instruct-2505 | 0.4200 | 0.2100 | 0.4247 | 0.7700 | 0.7900 | 0.5224 | 0.5500 | 0.8000 | 0.5300 | 0.5100 | 0.6630 | 0.6688 | 0.571577 |
| 6 | **KISTI-KONI/KONI-4B-base-20250819** | **0.4300** | **0.2100** | **0.4349** | **0.7300** | **0.6600** | **0.4800** | **0.5800** | **0.8200** | **0.5200** | **0.5700** | **0.6800** | **0.6147** | **0.560803** |
| 7 | LGAI-EXAONE/EXAONE-3.5-2.4B-Instruct | 0.4300 | 0.2100 | 0.4379 | 0.7400 | 0.6600 | 0.4842 | 0.5900 | 0.7700 | 0.5000 | 0.5400 | 0.6900 | 0.6511 | 0.558603 |
| 8 | KISTI-KONI/KONI-Llama3.1-8B-Instruct-20241024 | 0.4000 | 0.2000 | 0.4100 | 0.5600 | 0.6400 | 0.4905 | 0.6300 | 0.8300 | 0.5400 | 0.6100 | 0.6980 | 0.6722 | 0.556725 |
| 9 | meta-llama/Llama-3.1-8B-Instruct | 0.4000 | 0.2000 | 0.4119 | 0.7000 | 0.4400 | 0.4789 | 0.6500 | 0.8400 | 0.5400 | 0.6100 | 0.6960 | 0.6709 | 0.553135 |
| 10 | google/gemma-3-4b-pt | 0.3980 | 0.1998 | 0.3966 | 0.6990 | 0.6672 | 0.4726 | 0.5964 | 0.8300 | 0.5435 | 0.5763 | 0.6670 | 0.5886 | 0.552906 |
| 11 | google/gemma-3-4b-it | 0.3900 | 0.2100 | 0.3904 | 0.7200 | 0.5900 | 0.4400 | 0.5800 | 0.8400 | 0.5600 | 0.5600 | 0.6990 | 0.6013 | 0.548388 |
| 12 | saltlux/Ko-Llama3-Luxia-8B | 0.3800 | 0.2100 | 0.3935 | 0.7100 | 0.6800 | 0.4320 | 0.5500 | 0.8000 | 0.4800 | 0.5600 | 0.6650 | 0.6109 | 0.539283 |
| 13 | MLP-KTLim/llama-3-Korean-Bllossom-8B | 0.3700 | 0.2200 | 0.3738 | 0.5500 | 0.4700 | 0.4163 | 0.6400 | 0.8400 | 0.5700 | 0.5900 | 0.6525 | 0.5862 | 0.523239 |
| 14 | kakaocorp/kanana-1.5-2.1b-base | 0.3900 | 0.2400 | 0.4502 | 0.6200 | 0.5700 | 0.5138 | 0.4700 | 0.7300 | 0.4400 | 0.4500 | 0.6500 | 0.6478 | 0.514315 |
| 15 | naver-hyperclovax/HyperCLOVAX-SEED-Text-Instruct-1.5B | 0.3900 | 0.2400 | 0.3524 | 0.6400 | 0.5700 | 0.3550 | 0.4700 | 0.7300 | 0.4400 | 0.4500 | 0.5950 | 0.5450 | 0.481447 |
| 16 | naver-hyperclovax/HyperCLOVAX-SEED-Text-Instruct-0.5B | 0.3700 | 0.2200 | 0.3798 | 0.6200 | 0.5600 | 0.3383 | 0.4400 | 0.7200 | 0.3900 | 0.4100 | 0.5600 | 0.5173 | 0.460449 |
| 17 | mistralai/Mistral-7B-v0.3 | 0.3700 | 0.2200 | 0.3739 | 0.6300 | 0.3700 | 0.3735 | 0.6200 | 0.8300 | 0.5500 | 0.6200 | 0.5440 | 0.4257 | 0.413117 |
| 18 | google/gemma-3-1b-it | 0.3069 | 0.2400 | 0.2935 | 0.3556 | 0.5987 | 0.2761 | 0.3970 | 0.6620 | 0.3430 | 0.4204 | 0.5720 | 0.3972 | 0.390038 |
| 19 | google/gemma-3-1b-pt | 0.2582 | 0.2456 | 0.2556 | 0.5569 | 0.1952 | 0.1964 | 0.2641 | 0.7146 | 0.3541 | 0.4703 | 0.2192 | 0.1980 | 0.327362 |
| 20 | etri-lirs/eagle-3b-preview | 0.1600 | 0.2100 | 0.1617 | 0.5100 | 0.1900 | 0.1804 | 0.2500 | 0.5700 | 0.2400 | 0.3700 | 0.2678 | 0.2224 | 0.236846 |

As shown, **KISTI-KONI/KONI-4B-instruct-20250901** is the top-performing model in the 4B-size instruction-tuned model category, outperforming *google/gemma-3-4b-it* and *KISTI-KONI/KONI-4B-base-20250819*.
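The card does not say how the average column is computed. For the KONI-4B-instruct row it is consistent with an unweighted mean of the twelve benchmark scores, which the sketch below checks under that assumption. Because the table shows rounded scores, the recomputed value matches the reported 0.571603 only to about four decimal places.

```python
from statistics import mean

# Scores for KISTI-KONI/KONI-4B-instruct-20250901, copied from its table row.
koni_4b_instruct = {
    "KMMLU": 0.4188,
    "KMMLU-Hard": 0.2110,
    "KMMLU-Direct": 0.4194,
    "KoBEST": 0.7393,
    "HAERAE": 0.7333,
    "kormedmcqa": 0.4719,
    "MMLU": 0.5823,
    "ARC_easy": 0.8342,
    "ARC_challenge": 0.5452,
    "Hellaswag": 0.5783,
    "ScholarBench-MC": 0.6980,
    "AidaBench-MC": 0.6274,
}

# Assumption: every benchmark carries equal weight.
average = mean(list(koni_4b_instruct.values()))
print(f"{average:.4f}")
```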

---

## Strengths & Use Cases

- **Domain-Specific Excellence**: KONI-4B-instruct excels at tasks involving scientific literature, technological content, and complex reasoning. It is ideal for research, academic analysis, and specialized problem-solving.

- **Bilingual Advantage**: The model’s bilingual nature enables handling diverse datasets and generating high-quality responses in both English and Korean, especially in bilingual scientific collaborations.

- **Benchmark Performance**: KONI-4B-instruct has shown superior performance in benchmarks such as *KMMLU*, *kormedmcqa*, and *ScholarBench-MC*, proving its robustness in knowledge-intensive tasks.

---

## Usage

```sh

$ pip install -U transformers

```

```python

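import torch
from transformers import pipeline

# Load the model in bfloat16 on a CUDA GPU.
pipe = pipeline(
    "text-generation",
    model="KISTI-KONI/KONI-4B-instruct-20250901",
    device="cuda",
    torch_dtype=torch.bfloat16,
)

# A batch containing one conversation. The user prompt asks, in Korean,
# for an explanation of supercomputers.
messages = [
    [
        {
            "role": "system",
            "content": [{"type": "text", "text": "You are a helpful assistant."}],
        },
        {
            "role": "user",
            "content": [{"type": "text", "text": "슈퍼컴퓨터에 대해서 설명해줘."}],
        },
    ],
]

output = pipe(
    messages,
    max_new_tokens=512,
    # Stop at either the regular EOS token or Gemma's end-of-turn marker.
    eos_token_id=[
        pipe.tokenizer.eos_token_id,
        pipe.tokenizer.convert_tokens_to_ids("<end_of_turn>"),
    ],
)

# The last message of the first conversation is the assistant's reply.
print(output[0][0]["generated_text"][-1]["content"])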

```

## Citation

If you use this model in your work, please cite it as follows:

```bibtex

@article{KISTI-KONI/KONI-4B-instruct-20250901,

title={KISTI-KONI/KONI-4B-instruct-20250901},

author={KISTI},

year={2025},

url={https://huggingface.co/KISTI-KONI/KONI-4B-instruct-20250901}

}

```

---

## Acknowledgements

- This research was supported by the Korea Institute of Science and Technology Information (KISTI) in 2025 (No. (KISTI) K25L1M1C1), aimed at developing KONI (KISTI Open Neural Intelligence), a large language model specialized in science and technology.

- This work also benefited from the resources and technical support provided by the National Supercomputing Center (KISTI).

---

## References

- https://huggingface.co/KISTI-KONI/KONI-4B-base-20250819

|

FreedomIntelligence/EchoX-8B

|

FreedomIntelligence

| 2025-09-12T02:44:59Z | 53 | 4 | null |

[

"safetensors",

"ACLlama",

"audio-text-to-audio-text",

"speech-understanding",

"audio",

"chat",

"en",

"dataset:custom",

"arxiv:2509.09174",

"license:apache-2.0",

"region:us"

] | null | 2025-09-04T11:01:11Z |

---

language:

- en

tags:

- audio-text-to-audio-text

- speech-understanding

- audio

- chat

license: apache-2.0

datasets:

- custom

metrics:

- wer

- bleu

- AIR-Bench

---

<div align="center">

<h1>

EchoX: Towards Mitigating Acoustic-Semantic Gap via Echo Training for Speech-to-Speech LLMs

</h1>

</div>

<p align="center">

<font size="3"><a href="https://github.com/FreedomIntelligence/EchoX">🐈⬛ Github</a> | <a href="https://arxiv.org/abs/2509.09174">📃 Paper</a> | <a href="https://huggingface.co/spaces/FreedomIntelligence/EchoX">🚀 Space</a> </font>

</p>

## Model Description

EchoX is a Speech-to-Speech large language model that addresses the acoustic-semantic gap. By introducing **Echo Training**, EchoX integrates semantic and acoustic learning, mitigating the degradation of reasoning ability observed in existing speech-based LLMs. It is trained on only 10k hours of data while delivering state-of-the-art results in knowledge-based question answering and speech interaction tasks.

### Key Features

<div>

<ul>

<font size="3"><li>Mitigates Acoustic-Semantic Gap in Speech-to-Speech LLMs</li></font>

<font size="3"><li>Introduces Echo Training with a Novel Three-Stage Pipeline (S2T, T2C, Echo)</li></font>

<font size="3"><li>Trained on Only 10k Hours of Curated Data, Ensuring Efficiency</li></font>

<font size="3"><li>Achieves State-of-the-Art Performance in Knowledge-Based QA Benchmarks</li></font>

<font size="3"><li>Preserves Reasoning and Knowledge Abilities for Interactive Speech Tasks</li></font>

</ul>

</div>

## Usage

Load the EchoX model and run inference with your audio files as shown in the <a href="https://github.com/FreedomIntelligence/EchoX">GitHub repository</a>.

## 📖 Citation

```

@misc{zhang2025echoxmitigatingacousticsemanticgap,

title={EchoX: Towards Mitigating Acoustic-Semantic Gap via Echo Training for Speech-to-Speech LLMs},

author={Yuhao Zhang and Yuhao Du and Zhanchen Dai and Xiangnan Ma and Kaiqi Kou and Benyou Wang and Haizhou Li},

year={2025},

eprint={2509.09174},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2509.09174},

}

```

|

omerbektasss/blockassist-bc-keen_fast_giraffe_1757645053

|

omerbektasss

| 2025-09-12T02:44:33Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"keen fast giraffe",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-12T02:44:29Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- keen fast giraffe

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

onnx-community/SmolLM2-135M-humanized-ONNX

|

onnx-community

| 2025-09-12T02:42:09Z | 0 | 0 |

transformers.js

|

[

"transformers.js",

"onnx",

"llama",

"text-generation",

"conversational",

"base_model:AssistantsLab/SmolLM2-135M-humanized",

"base_model:quantized:AssistantsLab/SmolLM2-135M-humanized",

"region:us"

] |

text-generation

| 2025-09-12T02:41:59Z |

---

library_name: transformers.js

base_model:

- AssistantsLab/SmolLM2-135M-humanized

---

# SmolLM2-135M-humanized (ONNX)

This is an ONNX version of [AssistantsLab/SmolLM2-135M-humanized](https://huggingface.co/AssistantsLab/SmolLM2-135M-humanized). It was automatically converted and uploaded using [this space](https://huggingface.co/spaces/onnx-community/convert-to-onnx).

|

teysty/vjepa2-vitl-fpc16-256-ssv2-fdet_64-frames_1clip_1indice_cleaned-new-split_10epochs

|

teysty

| 2025-09-12T02:41:37Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"vjepa2",

"video-classification",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] |

video-classification

| 2025-09-12T02:33:40Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

stonermay/blockassist-bc-diving_lightfooted_caterpillar_1757644795

|

stonermay

| 2025-09-12T02:41:11Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"diving lightfooted caterpillar",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-12T02:40:51Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- diving lightfooted caterpillar

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

justpluso/turn-detection-gguf

|

justpluso

| 2025-09-12T02:38:57Z | 0 | 0 | null |

[

"gguf",

"voice",

"agent",

"text-classification",

"zh",

"en",

"base_model:justpluso/turn-detection",

"base_model:quantized:justpluso/turn-detection",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-classification

| 2025-09-12T02:32:31Z |

---

license: apache-2.0

language:

- zh

- en

metrics:

- accuracy

base_model:

- justpluso/turn-detection

pipeline_tag: text-classification

tags:

- voice

- agent

---

|

jahyungu/Llama-3.2-1B-Instruct_apps

|

jahyungu

| 2025-09-12T02:38:52Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"generated_from_trainer",

"conversational",

"dataset:apps",

"base_model:meta-llama/Llama-3.2-1B-Instruct",

"base_model:finetune:meta-llama/Llama-3.2-1B-Instruct",

"license:llama3.2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-12T02:08:59Z |

---

library_name: transformers

license: llama3.2

base_model: meta-llama/Llama-3.2-1B-Instruct

tags:

- generated_from_trainer

datasets:

- apps

model-index:

- name: Llama-3.2-1B-Instruct_apps

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Llama-3.2-1B-Instruct_apps

This model is a fine-tuned version of [meta-llama/Llama-3.2-1B-Instruct](https://huggingface.co/meta-llama/Llama-3.2-1B-Instruct) on the apps dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.03

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.55.0

- Pytorch 2.6.0+cu124

- Datasets 3.4.1

- Tokenizers 0.21.0

|

omerbektasss/blockassist-bc-insectivorous_bold_lion_1757644682

|

omerbektasss

| 2025-09-12T02:38:25Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"insectivorous bold lion",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-12T02:38:20Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- insectivorous bold lion

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

TAUR-dev/M-0911__0epoch_3args_grpo_try2-rl

|

TAUR-dev

| 2025-09-12T02:37:24Z | 0 | 0 | null |

[

"safetensors",

"qwen2",

"en",

"license:mit",

"region:us"

] | null | 2025-09-11T20:42:14Z |

---

language: en

license: mit

---

# M-0911__0epoch_3args_grpo_try2-rl

## Model Details

- **Training Method**: VeRL Reinforcement Learning (RL)

- **Stage Name**: rl

- **Experiment**: 0911__0epoch_3args_grpo_try2

- **RL Framework**: verl (Volcano Engine Reinforcement Learning)

## Training Configuration

## Experiment Tracking

🔗 **View complete experiment details**: https://huggingface.co/datasets/TAUR-dev/D-ExpTracker__0911__0epoch_3args_grpo_try2__v1

## Usage

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("TAUR-dev/M-0911__0epoch_3args_grpo_try2-rl")

model = AutoModelForCausalLM.from_pretrained("TAUR-dev/M-0911__0epoch_3args_grpo_try2-rl")

```

|

dadu/qwen3-0.6b-translation-synthetic-reasoning-1

|

dadu

| 2025-09-12T02:36:56Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"translation",

"reasoning",

"few-shot",

"biblical-languages",

"base_model:Qwen/Qwen3-0.6B",

"base_model:finetune:Qwen/Qwen3-0.6B",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

translation

| 2025-09-12T01:11:13Z |

---

library_name: transformers

tags:

- translation

- reasoning

- few-shot

- biblical-languages

license: apache-2.0

base_model:

- Qwen/Qwen3-0.6B

---

# qwen3-0.6b-translation-synthetic-reasoning-1

A fine-tuned Qwen3-0.6B model that provides step-by-step reasoning for few-shot translation tasks, particularly focused on low-resource and biblical language pairs.

## Model Details

### Model Description

This model extends Qwen/Qwen3-0.6B with the ability to perform detailed reasoning during translation. Given a query and several few-shot examples, it explains its translation choices step-by-step, making the process transparent and educational.

- **Developed by:** dadu

- **Model type:** Causal Language Model (Fine-tuned for few-shot translation reasoning)

- **Language(s):** Multi-lingual (specialized in biblical/low-resource language pairs)

- **License:** Apache 2.0 (following base model)

- **Finetuned from model:** Qwen/Qwen3-0.6B

### Model Sources

- **Repository:** [dadu/qwen3-0.6b-translation-synthetic-reasoning-1](https://huggingface.co/dadu/qwen3-0.6b-translation-synthetic-reasoning-1)

## Uses

### Direct Use

This model is designed for translation tasks where you need:

- Step-by-step reasoning explanations

- Fragment-by-fragment translation analysis

- Reference to linguistic patterns from few-shot examples

- Educational translation methodology for low-resource languages

### Out-of-Scope Use

- General conversation (may be overly verbose)

- Real-time translation (generates long explanations)

- Zero-shot translation (performs best with few-shot examples)

- Languages significantly different from training data

## How to Get Started with the Model

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "dadu/qwen3-0.6b-translation-synthetic-reasoning-1"

model = AutoModelForCausalLM.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Few-shot examples provide context for the translation style

few_shot_prompt = """

Examples:

source: Aŋkɛ bímbɔ áwúlégé, ɛkiɛ́nné Ɛsɔwɔ ɛ́kwɔ́ Josɛf ushu né gejya, ɛ́jɔɔ́ ne ji ɛké...

target: Jalla nekztanaqui nii magonacaz̈ ojktan tsjii Yooz Jilirz̈ anjilaqui wiiquin Josez̈quiz parisisquichic̈ha...

source: Josɛf ápégé, asɛ maá yimbɔ ne mmá wuú áfɛ́ né mme Isrɛli.

target: Jalla nuz̈ cjen Josequi z̈aaz̈cu Israel yokquin nii uztan maatan chjitchic̈ha.

Query: ɛké “Josɛf, kwilé ka ɔ́kpá maá yina ne mma wuú, ɛnyú dékéré meso né mme Isrɛli. Bɔɔ́ abi ákɛlege manwá ji ágboó.”

"""

messages = [

{"role": "system", "content": "You are a helpful Bible translation assistant. Given examples of language pairs and a query, you will write a high quality translation with reasoning."},

{"role": "user", "content": few_shot_prompt}

]

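# Render the chat messages into the model's prompt format.
text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
inputs = tokenizer(text, return_tensors="pt")

# temperature only takes effect when sampling is enabled.
outputs = model.generate(**inputs, max_new_tokens=1024, do_sample=True, temperature=0.7)

# Decode only the newly generated tokens, skipping the echoed prompt.
new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
response = tokenizer.decode(new_tokens, skip_special_tokens=True)
print(response)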

```
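The few-shot prompt in the example follows a regular `source:` / `target:` / `Query:` layout, so it can be assembled from a list of sentence pairs. A minimal sketch, assuming single newlines between lines (the exact whitespace used in training is not documented here); `build_few_shot_prompt` is a hypothetical helper and the strings are placeholders.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Assemble an 'Examples: ... Query: ...' prompt from (source, target) pairs."""
    lines = ["Examples:"]
    for source, target in examples:
        lines.append(f"source: {source}")
        lines.append(f"target: {target}")
    # The query is the source-language text the model should translate.
    lines.append(f"Query: {query}")
    return "\n".join(lines)


demo_prompt = build_few_shot_prompt(
    [
        ("<source sentence 1>", "<target sentence 1>"),
        ("<source sentence 2>", "<target sentence 2>"),
    ],
    "<sentence to translate>",
)
```

The resulting string would take the place of `few_shot_prompt` as the user message content.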

## Training Details

### Training Data

- **Dataset:** `dadu/translation-synthetic-reasoning-1`

- **Size:** ~980 translation examples with detailed reasoning

- **Source:** Synthetically generated using LLMs with gold standard translations

- **Format:** Each example includes source text, target translation, and step-by-step reasoning

- **Languages:** Primarily biblical and low-resource language pairs

- **Quality:** Filtered to remove corrupted examples

### Training Procedure

#### Training Hyperparameters

- **Training regime:** Full fine-tuning (not LoRA)

- **Context Length:** 16,384 tokens

- **Epochs:** 2

- **Batch Size:** 8 (1 per device × 8 gradient accumulation)

- **Learning Rate:** 1.5e-5 with cosine scheduling

- **Optimizer:** AdamW with gradient clipping (max_grad_norm=1.0)

- **Precision:** BF16 mixed precision
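These settings imply a fairly short run. The sketch below works through the arithmetic under several assumptions the card does not confirm: exactly 980 training examples with none held out, a single device, no warmup, and a cosine decay that reaches zero at the final step.

```python
import math

NUM_EXAMPLES = 980        # "~980" in the card; treated as exact here
EFFECTIVE_BATCH_SIZE = 8  # 1 per device x 8 gradient-accumulation steps
EPOCHS = 2
PEAK_LR = 1.5e-5

# One optimizer step consumes one effective batch.
steps_per_epoch = math.ceil(NUM_EXAMPLES / EFFECTIVE_BATCH_SIZE)
total_steps = steps_per_epoch * EPOCHS


def cosine_lr(step: int) -> float:
    """Learning rate at a given optimizer step under plain cosine decay."""
    progress = step / total_steps
    return 0.5 * PEAK_LR * (1.0 + math.cos(math.pi * progress))


print(steps_per_epoch, total_steps)
```

Under these assumptions the learning rate falls to half its peak at the end of the first epoch.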

## Methodology

The model was trained on few-shot prompts to:

1. **Analyze source text fragment by fragment**

2. **Reference similar patterns from the provided few-shot examples**

3. **Explain lexical and grammatical choices based on context**

4. **Provide systematic reasoning before the final translation**
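
A few-shot prompt of the kind described here can be assembled with a small helper. This is a minimal sketch: `build_few_shot_prompt` is a hypothetical name, and the `source:` / `target:` / `Query:` labels mirror the usage example earlier in this card rather than a documented format.

```python
from typing import Iterable, Tuple


def build_few_shot_prompt(examples: Iterable[Tuple[str, str]], query: str) -> str:
    """Join (source, target) pairs and a final query into one prompt string."""
    parts = []
    for source, target in examples:
        parts.append(f"source: {source}")
        parts.append(f"target: {target}")
    # The text to translate goes last, after all the worked examples.
    parts.append(f"Query: {query}")
    return "\n".join(parts) + "\n"


# Placeholder strings only; real prompts would use verse pairs in the two languages.
prompt = build_few_shot_prompt(
    [("source sentence 1", "target sentence 1"),
     ("source sentence 2", "target sentence 2")],
    "sentence to translate",
)
print(prompt)
```

The resulting string can be passed as the `user` message content in the chat template shown in the usage example.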

## Limitations

- **Specialized domain:** Optimized for biblical/low-resource language translation

- **Verbose output:** Generates detailed explanations for all translations

- **Training data scope:** Performance may vary on language pairs not represented in training data

- **Few-shot dependency:** Works best when provided with relevant few-shot examples

## Technical Specifications

### Model Architecture

- **Base:** Qwen/Qwen3-0.6B (transformer decoder)

- **Parameters:** ~600M

- **Context Window:** 16,384 tokens

### Compute Infrastructure

#### Hardware

- **Training:** Google Colab Pro (A100 GPU)

- **Memory:** High memory configuration for 16K context training

#### Software

- **Framework:** Transformers, TRL

- **Precision:** BF16 mixed precision

- **Environment:** Google Colab

|

H5N1AIDS/Transcribe_and_Translate_Subtitles

|

H5N1AIDS

| 2025-09-12T02:36:41Z | 1 | 1 | null |

[

"onnx",

"license:apache-2.0",

"region:us"

] | null | 2025-05-08T03:51:45Z |

---

license: apache-2.0

---

# Transcribe and Translate Subtitles

## 🚨 Important Note

- **Every task runs locally without internet, ensuring maximum privacy.**

- [Visit Github](https://github.com/DakeQQ/Transcribe-and-Translate-Subtitles)

---

## Updates

- 2025/7/5

- Added a noise reduction model: MossFormerGAN_SE_16K

- 2025/6/11

- Added HumAware-VAD, NVIDIA-NeMo-VAD, TEN-VAD

- 2025/6/3

- Added Dolphin ASR model to support Asian languages.

- 2025/5/13

- Added Float16/32 ASR models to support CUDA/DirectML GPU usage. These models can run more than 99% of their operators on the GPU.

- 2025/5/9

- Added an option to **not use** VAD (Voice Activity Detection), offering greater flexibility.

- Added a noise reduction model: **MelBandRoformer**.

- Added three Japanese anime fine-tuned Whisper models.

- Added ASR model: **CrisperWhisper**.

- Added English fine-tuned ASR model: **Whisper-Large-v3.5-Distil**.

- Added ASR model supporting Chinese (including some dialects): **FireRedASR-AED-L**.

- Removed the IPEX-LLM framework to enhance overall performance.

- Removed the LLM quantization options, standardizing on the **Q4F32** format.

- Improved accuracy of **FSMN-VAD**.

- Improved recognition accuracy of **Paraformer**.

- Improved recognition accuracy of **SenseVoice**.

- Improved inference speed of the **Whisper** series by over 10%.

- Supported the following large language models (LLMs) with **ONNX Runtime 100% GPU operator deployment**:

- Qwen3-4B/8B

- InternLM3-8B

- Phi-4-mini-Instruct

- Gemma3-4B/12B-it

- Expanded hardware support:

- **Intel OpenVINO**

- **NVIDIA CUDA GPU**

- **Windows DirectML GPU** (supports integrated and discrete GPUs)
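
With several backends supported, a script has to decide which ONNX Runtime execution provider to request. The sketch below shows one way to do that; it is not taken from this project's `run.py`. The provider identifiers are the ones ONNX Runtime reports, while the priority order and the helper name `pick_providers` are assumptions made for illustration.

```python
from typing import List, Sequence

# Provider identifiers as reported by ONNX Runtime; the ordering is an assumption.
PREFERRED_PROVIDERS = (
    "CUDAExecutionProvider",      # NVIDIA CUDA GPU
    "DmlExecutionProvider",       # Windows DirectML GPU
    "OpenVINOExecutionProvider",  # Intel OpenVINO
    "CPUExecutionProvider",       # fallback
)


def pick_providers(available: Sequence[str],
                   preferred: Sequence[str] = PREFERRED_PROVIDERS) -> List[str]:
    """Return the preferred providers that are available, in priority order."""
    chosen = [name for name in preferred if name in available]
    # Keep CPU as a last-resort fallback even if it was not reported.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
        available = ort.get_available_providers()
    except ImportError:
        available = ["CPUExecutionProvider"]
    print(pick_providers(available))
```

The returned list can be passed as the `providers` argument when creating an `onnxruntime.InferenceSession`.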

---

## ✨ Features

This project is built on the ONNX Runtime framework.

- Denoiser Support:

- [DFSMN](https://modelscope.cn/models/iic/speech_dfsmn_ans_psm_48k_causal)

- [GTCRN](https://github.com/Xiaobin-Rong/gtcrn)

- [ZipEnhancer](https://modelscope.cn/models/iic/speech_zipenhancer_ans_multiloss_16k_base)

- [Mel-Band-Roformer](https://github.com/KimberleyJensen/Mel-Band-Roformer-Vocal-Model)

- [MossFormerGAN_SE_16K](https://www.modelscope.cn/models/alibabasglab/MossFormerGAN_SE_16K)

- VAD Support:

- [FSMN](https://modelscope.cn/models/iic/speech_fsmn_vad_zh-cn-16k-common-pytorch)

- [Faster_Whisper - Silero](https://github.com/SYSTRAN/faster-whisper/blob/master/faster_whisper/vad.py)

- [Official - Silero](https://github.com/snakers4/silero-vad)

- [HumAware](https://huggingface.co/CuriousMonkey7/HumAware-VAD)

- [NVIDIA-NeMo-VAD-v2.0](https://huggingface.co/nvidia/Frame_VAD_Multilingual_MarbleNet_v2.0)

- [TEN-VAD](https://github.com/TEN-framework/ten-vad)

- [Pyannote-Segmentation-3.0](https://huggingface.co/pyannote/segmentation-3.0)

- You need to accept Pyannote's terms of use and download the Pyannote `pytorch_model.bin` file. Next, place it in the `VAD/pyannote_segmentation` folder.

- ASR Support:

- [SenseVoice-Small](https://modelscope.cn/models/iic/SenseVoiceSmall)

- [Paraformer-Small-Chinese](https://modelscope.cn/models/iic/speech_paraformer_asr_nat-zh-cn-16k-common-vocab8358-tensorflow1)

- [Paraformer-Large-Chinese](https://modelscope.cn/models/iic/speech_paraformer-large_asr_nat-zh-cn-16k-common-vocab8404-pytorch)

- [Paraformer-Large-English](https://modelscope.cn/models/iic/speech_paraformer_asr-en-16k-vocab4199-pytorch)

- [Whisper-Large-V3](https://huggingface.co/openai/whisper-large-v3)

- [Whisper-Large-V3-Turbo](https://huggingface.co/openai/whisper-large-v3-turbo)

- [Whisper-Large-V3-Turbo-Japanese](https://huggingface.co/hhim8826/whisper-large-v3-turbo-ja)

- [Whisper-Large-V3-Anime-A](https://huggingface.co/efwkjn/whisper-ja-anime-v0.1)

- [Whisper-Large-V3-Anime-B](https://huggingface.co/litagin/anime-whisper)

- [Whisper-Large-v3.5-Distil](https://huggingface.co/distil-whisper/distil-large-v3.5)

- [CrisperWhisper](https://github.com/nyrahealth/CrisperWhisper)

- [FireRedASR-AED-L](https://github.com/FireRedTeam/FireRedASR)

- [Dolphin-Small](https://github.com/DataoceanAI/Dolphin)

- LLM Support:

- Qwen-3: [4B](https://modelscope.cn/models/Qwen/Qwen3-4B), [8B](https://modelscope.cn/models/Qwen/Qwen3-8B)

- InternLM-3: [8B](https://huggingface.co/internlm/internlm3-8b-instruct)

- Gemma-3-it: [4B](https://huggingface.co/google/gemma-3-4b-it), [12B](https://huggingface.co/google/gemma-3-12b-it)

- Phi-4-Instruct: [mini](https://huggingface.co/microsoft/Phi-4-mini-instruct)

---

## 📋 Setup Instructions

### ✅ Step 1: Install Dependencies

- For Apple Silicon M-series chips, avoid installing `onnxruntime-openvino`, as it will cause errors.

- Run the following commands in your terminal to install FFmpeg and the latest required Python packages:

```bash

conda install ffmpeg

pip install -r requirements.txt

```
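
After installing, a quick check can confirm that the pieces are discoverable before running the application. This is a minimal sketch using only the standard library; `check_environment` is a hypothetical helper, and the assumption that `onnxruntime` is the module to look for follows from the project being built on ONNX Runtime, not from the contents of `requirements.txt`.

```python
import importlib.util
import shutil
from typing import Dict, Sequence


def check_environment(modules: Sequence[str] = ("onnxruntime",),
                      tools: Sequence[str] = ("ffmpeg",)) -> Dict[str, bool]:
    """Report which Python modules and command-line tools can be found."""
    status = {}
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        status[f"module:{name}"] = importlib.util.find_spec(name) is not None
    for name in tools:
        # shutil.which returns None when the executable is not on PATH.
        status[f"tool:{name}"] = shutil.which(name) is not None
    return status


for item, found in check_environment().items():
    print(f"{item}: {'found' if found else 'MISSING'}")
```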

### 📥 Step 2: Download Necessary Models

- Download the required models from HuggingFace: [Transcribe_and_Translate_Subtitles](https://huggingface.co/H5N1AIDS/Transcribe_and_Translate_Subtitles).

### 🖥️ Step 3: Download and Place `run.py`

- Download the `run.py` script from this repository.

- Place it in the `Transcribe_and_Translate_Subtitles` folder.

### 📁 Step 4: Place Target Videos in the Media Folder

- Place the videos you want to transcribe and translate in the following directory. The application processes them one by one:

```

Transcribe_and_Translate_Subtitles/Media

```
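
To see which files would be picked up, the folder can be listed ahead of time. The sketch below is illustrative only: `list_media` is a hypothetical helper, and the extension set is an assumption, not the list of formats the application actually accepts.

```python
from pathlib import Path
from typing import List

# Assumption: illustrative extensions, not the application's actual list.
VIDEO_EXTENSIONS = {".mp4", ".mkv", ".avi", ".mov"}


def list_media(media_dir: str = "Transcribe_and_Translate_Subtitles/Media") -> List[Path]:
    """Return video files in `media_dir`, sorted by name; empty if the folder is missing."""
    folder = Path(media_dir)
    if not folder.is_dir():
        return []
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in VIDEO_EXTENSIONS
    )


for video in list_media():
    print(video.name)
```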

### 🚀 Step 5: Run the Application

- Open your preferred terminal (PyCharm, CMD, PowerShell, etc.).

- Execute the following command to start the application:

```bash

python run.py

```