modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-12 12:31:00

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-12 12:28:53

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

unsloth/Qwen3-Next-80B-A3B-Instruct

|

unsloth

| 2025-09-11T19:25:47Z | 0 | 18 |

transformers

|

[

"transformers",

"safetensors",

"qwen3_next",

"text-generation",

"unsloth",

"conversational",

"arxiv:2309.00071",

"arxiv:2404.06654",

"arxiv:2505.09388",

"arxiv:2501.15383",

"base_model:Qwen/Qwen3-Next-80B-A3B-Instruct",

"base_model:finetune:Qwen/Qwen3-Next-80B-A3B-Instruct",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-11T19:25:18Z |

---

tags:

- unsloth

base_model:

- Qwen/Qwen3-Next-80B-A3B-Instruct

library_name: transformers

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen3-Next-80B-A3B-Instruct/blob/main/LICENSE

pipeline_tag: text-generation

---

# Qwen3-Next-80B-A3B-Instruct

<a href="https://chat.qwen.ai/" target="_blank" style="margin: 2px;">

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

</a>

Over the past few months, we have observed increasingly clear trends toward scaling both total parameters and context lengths in the pursuit of more powerful and agentic artificial intelligence (AI).

We are excited to share our latest advancements in addressing these demands, centered on improving scaling efficiency through innovative model architecture.

We call this next-generation foundation models **Qwen3-Next**.

## Highlights

**Qwen3-Next-80B-A3B** is the first installment in the Qwen3-Next series and features the following key enchancements:

- **Hybrid Attention**: Replaces standard attention with the combination of **Gated DeltaNet** and **Gated Attention**, enabling efficient context modeling for ultra-long context length.

- **High-Sparsity Mixture-of-Experts (MoE)**: Achieves an extreme low activation ratio in MoE layers, drastically reducing FLOPs per token while preserving model capacity.

- **Stability Optimizations**: Includes techniques such as **zero-centered and weight-decayed layernorm**, and other stabilizing enhancements for robust pre-training and post-training.

- **Multi-Token Prediction (MTP)**: Boosts pretraining model performance and accelerates inference.

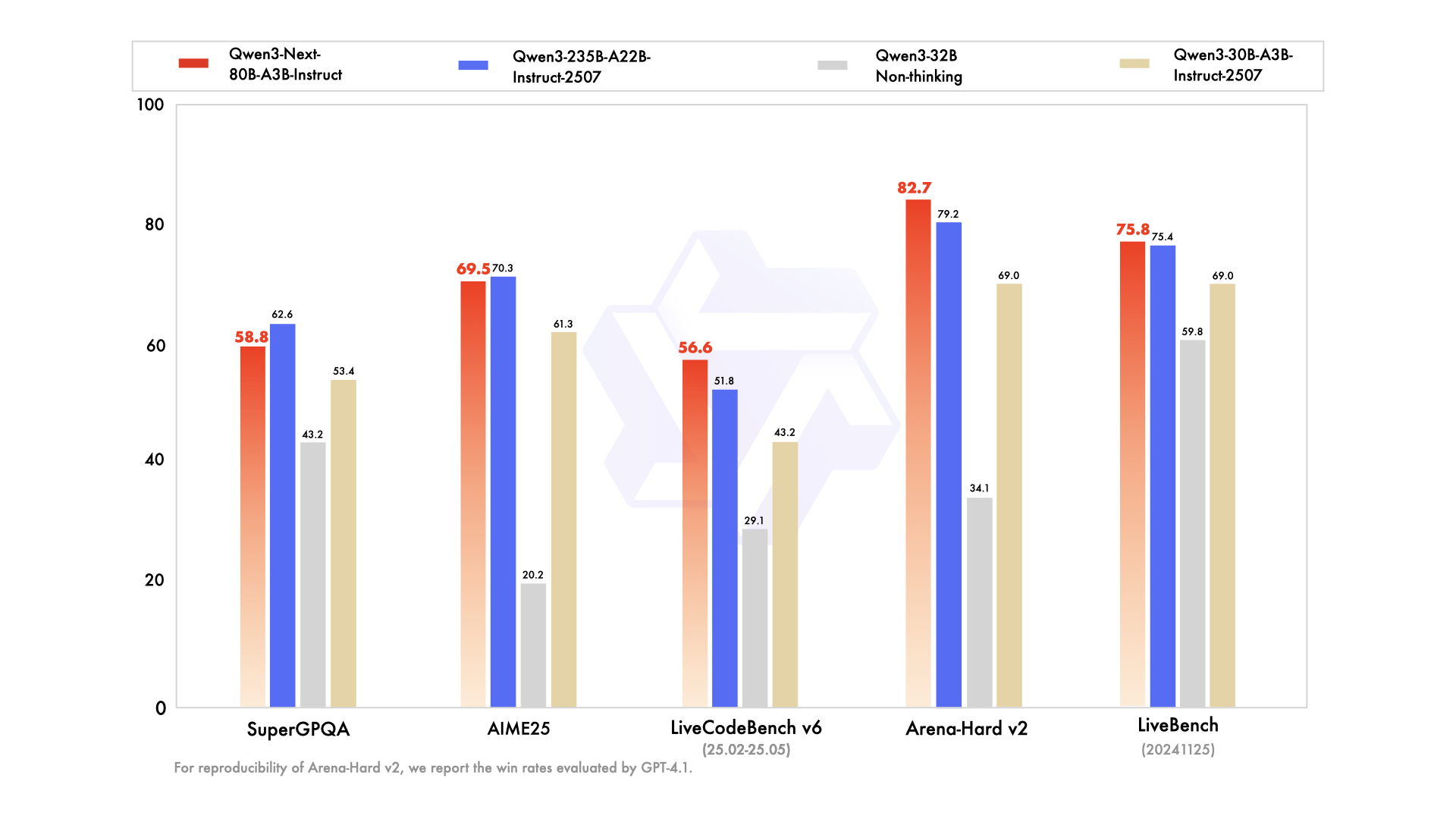

We are seeing strong performance in terms of both parameter efficiency and inference speed for Qwen3-Next-80B-A3B:

- Qwen3-Next-80B-A3B-Base outperforms Qwen3-32B-Base on downstream tasks with 10% of the total training cost and with 10 times inference throughput for context over 32K tokens.

- Qwen3-Next-80B-A3B-Instruct performs on par with Qwen3-235B-A22B-Instruct-2507 on certain benchmarks, while demonstrating significant advantages in handling ultra-long-context tasks up to 256K tokens.

For more details, please refer to our blog post [Qwen3-Next](https://qwenlm.github.io/blog/qwen3_next/).

## Model Overview

> [!Note]

> **Qwen3-Next-80B-A3B-Instruct** supports only instruct (non-thinking) mode and does not generate ``<think></think>`` blocks in its output.

**Qwen3-Next-80B-A3B-Instruct** has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining (15T tokens) & Post-training

- Number of Parameters: 80B in total and 3B activated

- Number of Paramaters (Non-Embedding): 79B

- Number of Layers: 48

- Hidden Dimension: 2048

- Hybrid Layout: 12 \* (3 \* (Gated DeltaNet -> MoE) -> (Gated Attention -> MoE))

- Gated Attention:

- Number of Attention Heads: 16 for Q and 2 for KV

- Head Dimension: 256

- Rotary Position Embedding Dimension: 64

- Gated DeltaNet:

- Number of Linear Attention Heads: 32 for V and 16 for QK

- Head Dimension: 128

- Mixture of Experts:

- Number of Experts: 512

- Number of Activated Experts: 10

- Number of Shared Experts: 1

- Expert Intermediate Dimension: 512

- Context Length: 262,144 natively and extensible up to 1,010,000 tokens

<img src="https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-Next/model_architecture.png" height="384px" title="Qwen3-Next Model Architecture" />

## Performance

| | Qwen3-30B-A3B-Instruct-2507 | Qwen3-32B Non-Thinking | Qwen3-235B-A22B-Instruct-2507 | Qwen3-Next-80B-A3B-Instruct |

|--- | --- | --- | --- | --- |

| **Knowledge** | | | | |

| MMLU-Pro | 78.4 | 71.9 | **83.0** | 80.6 |

| MMLU-Redux | 89.3 | 85.7 | **93.1** | 90.9 |

| GPQA | 70.4 | 54.6 | **77.5** | 72.9 |

| SuperGPQA | 53.4 | 43.2 | **62.6** | 58.8 |

| **Reasoning** | | | | |

| AIME25 | 61.3 | 20.2 | **70.3** | 69.5 |

| HMMT25 | 43.0 | 9.8 | **55.4** | 54.1 |

| LiveBench 20241125 | 69.0 | 59.8 | 75.4 | **75.8** |

| **Coding** | | | | |

| LiveCodeBench v6 (25.02-25.05) | 43.2 | 29.1 | 51.8 | **56.6** |

| MultiPL-E | 83.8 | 76.9 | **87.9** | 87.8 |

| Aider-Polyglot | 35.6 | 40.0 | **57.3** | 49.8 |

| **Alignment** | | | | |

| IFEval | 84.7 | 83.2 | **88.7** | 87.6 |

| Arena-Hard v2* | 69.0 | 34.1 | 79.2 | **82.7** |

| Creative Writing v3 | 86.0 | 78.3 | **87.5** | 85.3 |

| WritingBench | 85.5 | 75.4 | 85.2 | **87.3** |

| **Agent** | | | | |

| BFCL-v3 | 65.1 | 63.0 | **70.9** | 70.3 |

| TAU1-Retail | 59.1 | 40.1 | **71.3** | 60.9 |

| TAU1-Airline | 40.0 | 17.0 | **44.0** | 44.0 |

| TAU2-Retail | 57.0 | 48.8 | **74.6** | 57.3 |

| TAU2-Airline | 38.0 | 24.0 | **50.0** | 45.5 |

| TAU2-Telecom | 12.3 | 24.6 | **32.5** | 13.2 |

| **Multilingualism** | | | | |

| MultiIF | 67.9 | 70.7 | **77.5** | 75.8 |

| MMLU-ProX | 72.0 | 69.3 | **79.4** | 76.7 |

| INCLUDE | 71.9 | 70.9 | **79.5** | 78.9 |

| PolyMATH | 43.1 | 22.5 | **50.2** | 45.9 |

*: For reproducibility, we report the win rates evaluated by GPT-4.1.

## Quickstart

The code for Qwen3-Next has been merged into the main branch of Hugging Face `transformers`.

```shell

pip install git+https://github.com/huggingface/transformers.git@main

```

With earlier versions, you will encounter the following error:

```

KeyError: 'qwen3_next'

```

The following contains a code snippet illustrating how to use the model generate content based on given inputs.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Next-80B-A3B-Instruct"

# load the tokenizer and the model

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(

model_name,

dtype="auto",

device_map="auto",

)

# prepare the model input

prompt = "Give me a short introduction to large language model."

messages = [

{"role": "user", "content": prompt},

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True,

)

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion

generated_ids = model.generate(

**model_inputs,

max_new_tokens=16384,

)

output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

content = tokenizer.decode(output_ids, skip_special_tokens=True)

print("content:", content)

```

> [!Note]

> Multi-Token Prediction (MTP) is not generally available in Hugging Face Transformers.

> [!Note]

> The efficiency or throughput improvement depends highly on the implementation.

> It is recommended to adopt a dedicated inference framework, e.g., SGLang and vLLM, for inference tasks.

> [!Tip]

> Depending on the inference settings, you may observe better efficiency with [`flash-linear-attention`](https://github.com/fla-org/flash-linear-attention#installation) and [`causal-conv1d`](https://github.com/Dao-AILab/causal-conv1d).

> See the above links for detailed instructions and requirements.

## Deployment

For deployment, you can use the latest `sglang` or `vllm` to create an OpenAI-compatible API endpoint.

### SGLang

[SGLang](https://github.com/sgl-project/sglang) is a fast serving framework for large language models and vision language models.

SGLang could be used to launch a server with OpenAI-compatible API service.

SGLang has supported Qwen3-Next in its `main` branch, which can be installed from source:

```shell

pip install 'sglang[all] @ git+https://github.com/sgl-project/sglang.git@main#subdirectory=python'

```

The following command can be used to create an API endpoint at `http://localhost:30000/v1` with maximum context length 256K tokens using tensor parallel on 4 GPUs.

```shell

SGLANG_ALLOW_OVERWRITE_LONGER_CONTEXT_LEN=1 python -m sglang.launch_server --model-path Qwen/Qwen3-Next-80B-A3B-Instruct --port 30000 --tp-size 4 --context-length 262144 --mem-fraction-static 0.8

```

The following command is recommended for MTP with the rest settings the same as above:

```shell

SGLANG_ALLOW_OVERWRITE_LONGER_CONTEXT_LEN=1 python -m sglang.launch_server --model-path Qwen/Qwen3-Next-80B-A3B-Instruct --port 30000 --tp-size 4 --context-length 262144 --mem-fraction-static 0.8 --speculative-algo NEXTN --speculative-num-steps 3 --speculative-eagle-topk 1 --speculative-num-draft-tokens 4

```

> [!Note]

> The environment variable `SGLANG_ALLOW_OVERWRITE_LONGER_CONTEXT_LEN=1` is required at the moment.

> [!Note]

> The default context length is 256K. Consider reducing the context length to a smaller value, e.g., `32768`, if the server fail to start.

### vLLM

[vLLM](https://github.com/vllm-project/vllm) is a high-throughput and memory-efficient inference and serving engine for LLMs.

vLLM could be used to launch a server with OpenAI-compatible API service.

vLLM has supported Qwen3-Next in its `main` branch, which can be installed from source:

```shell

pip install git+https://github.com/vllm-project/vllm.git

```

The following command can be used to create an API endpoint at `http://localhost:8000/v1` with maximum context length 256K tokens using tensor parallel on 4 GPUs.

```shell

VLLM_ALLOW_LONG_MAX_MODEL_LEN=1 vllm serve Qwen/Qwen3-Next-80B-A3B-Instruct --port 8000 --tensor-parallel-size 4 --max-model-len 262144

```

The following command is recommended for MTP with the rest settings the same as above:

```shell

VLLM_ALLOW_LONG_MAX_MODEL_LEN=1 vllm serve Qwen/Qwen3-Next-80B-A3B-Instruct --port 8000 --tensor-parallel-size 4 --max-model-len 262144 --speculative-config '{"method":"qwen3_next_mtp","num_speculative_tokens":2}'

```

> [!Note]

> The environment variable `VLLM_ALLOW_LONG_MAX_MODEL_LEN=1` is required at the moment.

> [!Note]

> The default context length is 256K. Consider reducing the context length to a smaller value, e.g., `32768`, if the server fail to start.

## Agentic Use

Qwen3 excels in tool calling capabilities. We recommend using [Qwen-Agent](https://github.com/QwenLM/Qwen-Agent) to make the best use of agentic ability of Qwen3. Qwen-Agent encapsulates tool-calling templates and tool-calling parsers internally, greatly reducing coding complexity.

To define the available tools, you can use the MCP configuration file, use the integrated tool of Qwen-Agent, or integrate other tools by yourself.

```python

from qwen_agent.agents import Assistant

# Define LLM

llm_cfg = {

'model': 'Qwen3-Next-80B-A3B-Instruct',

# Use a custom endpoint compatible with OpenAI API:

'model_server': 'http://localhost:8000/v1', # api_base

'api_key': 'EMPTY',

}

# Define Tools

tools = [

{'mcpServers': { # You can specify the MCP configuration file

'time': {

'command': 'uvx',

'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']

},

"fetch": {

"command": "uvx",

"args": ["mcp-server-fetch"]

}

}

},

'code_interpreter', # Built-in tools

]

# Define Agent

bot = Assistant(llm=llm_cfg, function_list=tools)

# Streaming generation

messages = [{'role': 'user', 'content': 'https://qwenlm.github.io/blog/ Introduce the latest developments of Qwen'}]

for responses in bot.run(messages=messages):

pass

print(responses)

```

## Processing Ultra-Long Texts

Qwen3-Next natively supports context lengths of up to 262,144 tokens.

For conversations where the total length (including both input and output) significantly exceeds this limit, we recommend using RoPE scaling techniques to handle long texts effectively.

We have validated the model's performance on context lengths of up to 1 million tokens using the [YaRN](https://arxiv.org/abs/2309.00071) method.

YaRN is currently supported by several inference frameworks, e.g., `transformers`, `vllm` and `sglang`.

In general, there are two approaches to enabling YaRN for supported frameworks:

- Modifying the model files:

In the `config.json` file, add the `rope_scaling` fields:

```json

{

...,

"rope_scaling": {

"rope_type": "yarn",

"factor": 4.0,

"original_max_position_embeddings": 262144

}

}

```

- Passing command line arguments:

For `vllm`, you can use

```shell

VLLM_ALLOW_LONG_MAX_MODEL_LEN=1 vllm serve ... --rope-scaling '{"rope_type":"yarn","factor":4.0,"original_max_position_embeddings":262144}' --max-model-len 1010000

```

For `sglang`, you can use

```shell

SGLANG_ALLOW_OVERWRITE_LONGER_CONTEXT_LEN=1 python -m sglang.launch_server ... --json-model-override-args '{"rope_scaling":{"rope_type":"yarn","factor":4.0,"original_max_position_embeddings":262144}}' --context-length 1010000

```

> [!NOTE]

> All the notable open-source frameworks implement static YaRN, which means the scaling factor remains constant regardless of input length, **potentially impacting performance on shorter texts.**

> We advise adding the `rope_scaling` configuration only when processing long contexts is required.

> It is also recommended to modify the `factor` as needed. For example, if the typical context length for your application is 524,288 tokens, it would be better to set `factor` as 2.0.

#### Long-Context Performance

We test the model on an 1M version of the [RULER](https://arxiv.org/abs/2404.06654) benchmark.

| Model Name | Acc avg | 4k | 8k | 16k | 32k | 64k | 96k | 128k | 192k | 256k | 384k | 512k | 640k | 768k | 896k | 1000k |

|---------------------------------------------|---------|------|------|------|------|------|------|------|------|------|------|------|------|------|------|-------|

| Qwen3-30B-A3B-Instruct-2507 | 86.8 | 98.0 | 96.7 | 96.9 | 97.2 | 93.4 | 91.0 | 89.1 | 89.8 | 82.5 | 83.6 | 78.4 | 79.7 | 77.6 | 75.7 | 72.8 |

| Qwen3-235B-A22B-Instruct-2507 | 92.5 | 98.5 | 97.6 | 96.9 | 97.3 | 95.8 | 94.9 | 93.9 | 94.5 | 91.0 | 92.2 | 90.9 | 87.8 | 84.8 | 86.5 | 84.5 |

| Qwen3-Next-80B-A3B-Instruct | 91.8 | 98.5 | 99.0 | 98.0 | 98.7 | 97.6 | 95.0 | 96.0 | 94.0 | 93.5 | 91.7 | 86.9 | 85.5 | 81.7 | 80.3 | 80.3 |

* Qwen3-Next are evaluated with YaRN enabled. Qwen3-2507 models are evaluated with Dual Chunk Attention enabled.

* Since the evaluation is time-consuming, we use 260 samples for each length (13 sub-tasks, 20 samples for each).

## Best Practices

To achieve optimal performance, we recommend the following settings:

1. **Sampling Parameters**:

- We suggest using `Temperature=0.7`, `TopP=0.8`, `TopK=20`, and `MinP=0`.

- For supported frameworks, you can adjust the `presence_penalty` parameter between 0 and 2 to reduce endless repetitions. However, using a higher value may occasionally result in language mixing and a slight decrease in model performance.

2. **Adequate Output Length**: We recommend using an output length of 16,384 tokens for most queries, which is adequate for instruct models.

3. **Standardize Output Format**: We recommend using prompts to standardize model outputs when benchmarking.

- **Math Problems**: Include "Please reason step by step, and put your final answer within \boxed{}." in the prompt.

- **Multiple-Choice Questions**: Add the following JSON structure to the prompt to standardize responses: "Please show your choice in the `answer` field with only the choice letter, e.g., `"answer": "C"`."

### Citation

If you find our work helpful, feel free to give us a cite.

```

@misc{qwen3technicalreport,

title={Qwen3 Technical Report},

author={Qwen Team},

year={2025},

eprint={2505.09388},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2505.09388},

}

@article{qwen2.5-1m,

title={Qwen2.5-1M Technical Report},

author={An Yang and Bowen Yu and Chengyuan Li and Dayiheng Liu and Fei Huang and Haoyan Huang and Jiandong Jiang and Jianhong Tu and Jianwei Zhang and Jingren Zhou and Junyang Lin and Kai Dang and Kexin Yang and Le Yu and Mei Li and Minmin Sun and Qin Zhu and Rui Men and Tao He and Weijia Xu and Wenbiao Yin and Wenyuan Yu and Xiafei Qiu and Xingzhang Ren and Xinlong Yang and Yong Li and Zhiying Xu and Zipeng Zhang},

journal={arXiv preprint arXiv:2501.15383},

year={2025}

}

```

|

DiGiXrOsE/CineAI

|

DiGiXrOsE

| 2025-09-11T19:25:27Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"generated_from_trainer",

"trl",

"sft",

"base_model:openai/gpt-oss-20b",

"base_model:finetune:openai/gpt-oss-20b",

"endpoints_compatible",

"region:us"

] | null | 2025-09-11T19:20:02Z |

---

base_model: openai/gpt-oss-20b

library_name: transformers

model_name: CineAI

tags:

- generated_from_trainer

- trl

- sft

licence: license

---

# Model Card for CineAI

This model is a fine-tuned version of [openai/gpt-oss-20b](https://huggingface.co/openai/gpt-oss-20b).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="DiGiXrOsE/CineAI", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.23.0

- Transformers: 4.56.1

- Pytorch: 2.5.1

- Datasets: 4.0.0

- Tokenizers: 0.22.0

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

ultratopaz/2079558

|

ultratopaz

| 2025-09-11T19:24:12Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-09-11T19:24:04Z |

[View on Civ Archive](https://civarchive.com/models/1930689?modelVersionId=2185192)

|

MohammedAhmed13/xlm-roberta-finetuned-panx-en

|

MohammedAhmed13

| 2025-09-11T19:23:31Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2025-09-11T17:40:09Z |

---

library_name: transformers

license: mit

base_model: FacebookAI/xlm-roberta-base

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-finetuned-panx-en

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-finetuned-panx-en

This model is a fine-tuned version of [FacebookAI/xlm-roberta-base](https://huggingface.co/FacebookAI/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3859

- F1: 0.6991

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH_FUSED with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 1.0305 | 1.0 | 50 | 0.5143 | 0.5760 |

| 0.4926 | 2.0 | 100 | 0.4048 | 0.6916 |

| 0.3632 | 3.0 | 150 | 0.3859 | 0.6991 |

### Framework versions

- Transformers 4.56.1

- Pytorch 2.8.0+cu126

- Datasets 4.0.0

- Tokenizers 0.22.0

|

nbirukov/act_pick_up_3c

|

nbirukov

| 2025-09-11T19:22:39Z | 0 | 0 |

lerobot

|

[

"lerobot",

"safetensors",

"act",

"robotics",

"dataset:nbirukov/pick_up_3c",

"arxiv:2304.13705",

"license:apache-2.0",

"region:us"

] |

robotics

| 2025-09-11T19:21:54Z |

---

datasets: nbirukov/pick_up_3c

library_name: lerobot

license: apache-2.0

model_name: act

pipeline_tag: robotics

tags:

- act

- lerobot

- robotics

---

# Model Card for act

<!-- Provide a quick summary of what the model is/does. -->

[Action Chunking with Transformers (ACT)](https://huggingface.co/papers/2304.13705) is an imitation-learning method that predicts short action chunks instead of single steps. It learns from teleoperated data and often achieves high success rates.

This policy has been trained and pushed to the Hub using [LeRobot](https://github.com/huggingface/lerobot).

See the full documentation at [LeRobot Docs](https://huggingface.co/docs/lerobot/index).

---

## How to Get Started with the Model

For a complete walkthrough, see the [training guide](https://huggingface.co/docs/lerobot/il_robots#train-a-policy).

Below is the short version on how to train and run inference/eval:

### Train from scratch

```bash

lerobot-train \

--dataset.repo_id=${HF_USER}/<dataset> \

--policy.type=act \

--output_dir=outputs/train/<desired_policy_repo_id> \

--job_name=lerobot_training \

--policy.device=cuda \

--policy.repo_id=${HF_USER}/<desired_policy_repo_id>

--wandb.enable=true

```

_Writes checkpoints to `outputs/train/<desired_policy_repo_id>/checkpoints/`._

### Evaluate the policy/run inference

```bash

lerobot-record \

--robot.type=so100_follower \

--dataset.repo_id=<hf_user>/eval_<dataset> \

--policy.path=<hf_user>/<desired_policy_repo_id> \

--episodes=10

```

Prefix the dataset repo with **eval\_** and supply `--policy.path` pointing to a local or hub checkpoint.

---

## Model Details

- **License:** apache-2.0

|

PurplelinkPL/FinBERT_Test

|

PurplelinkPL

| 2025-09-11T19:21:25Z | 102 | 0 | null |

[

"safetensors",

"modernbert",

"finance",

"text-classification",

"en",

"dataset:HuggingFaceFW/fineweb",

"license:mit",

"region:us"

] |

text-classification

| 2025-08-01T21:23:54Z |

---

license: mit

datasets:

- HuggingFaceFW/fineweb

language:

- en

tags:

- finance

metrics:

- f1

pipeline_tag: text-classification

---

|

mradermacher/GPT2-Hacker-password-generator-GGUF

|

mradermacher

| 2025-09-11T19:20:37Z | 278 | 0 |

transformers

|

[

"transformers",

"gguf",

"cybersecurity",

"passwords",

"en",

"dataset:CodeferSystem/GPT2-Hacker-password-generator-dataset",

"base_model:CodeferSystem/GPT2-Hacker-password-generator",

"base_model:quantized:CodeferSystem/GPT2-Hacker-password-generator",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-08-21T21:49:14Z |

---

base_model: CodeferSystem/GPT2-Hacker-password-generator

datasets:

- CodeferSystem/GPT2-Hacker-password-generator-dataset

language:

- en

library_name: transformers

license: apache-2.0

mradermacher:

readme_rev: 1

quantized_by: mradermacher

tags:

- cybersecurity

- passwords

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

<!-- ### quants: x-f16 Q4_K_S Q2_K Q8_0 Q6_K Q3_K_M Q3_K_S Q3_K_L Q4_K_M Q5_K_S Q5_K_M IQ4_XS -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

static quants of https://huggingface.co/CodeferSystem/GPT2-Hacker-password-generator

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#GPT2-Hacker-password-generator-GGUF).***

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q2_K.gguf) | Q2_K | 0.2 | |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q3_K_S.gguf) | Q3_K_S | 0.2 | |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q3_K_M.gguf) | Q3_K_M | 0.2 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.IQ4_XS.gguf) | IQ4_XS | 0.2 | |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q4_K_S.gguf) | Q4_K_S | 0.2 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q3_K_L.gguf) | Q3_K_L | 0.2 | |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q4_K_M.gguf) | Q4_K_M | 0.2 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q5_K_S.gguf) | Q5_K_S | 0.2 | |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q5_K_M.gguf) | Q5_K_M | 0.2 | |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q6_K.gguf) | Q6_K | 0.2 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.Q8_0.gguf) | Q8_0 | 0.2 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/GPT2-Hacker-password-generator-GGUF/resolve/main/GPT2-Hacker-password-generator.f16.gguf) | f16 | 0.4 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

Tesslate/WEBGEN-OSS-20B

|

Tesslate

| 2025-09-11T19:19:28Z | 0 | 2 |

transformers

|

[

"transformers",

"safetensors",

"gpt_oss",

"text-generation",

"text-generation-inference",

"unsloth",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-11T16:22:34Z |

---

base_model: unsloth/gpt-oss-20b-bf16

tags:

- text-generation-inference

- transformers

- unsloth

- gpt_oss

license: apache-2.0

language:

- en

---

[Example Output](https://codepen.io/qingy1337/pen/xbwNWGw)

|

t07-cc11-g4/2025-2a-t07-cc11-g04-intent-classifier-sprint2

|

t07-cc11-g4

| 2025-09-11T19:19:06Z | 53 | 0 | null |

[

"safetensors",

"region:us"

] | null | 2025-08-28T20:32:51Z |

# Curadobia — Classificador de Intenções (Sprint 2)

**Embeddings**: sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2

**Modelo**: CalibratedClassifierCV (calibrado: True)

**Labels**: agradecimento, como_comprar, despedida, disponibilidade_estoque, erros_plataforma, formas_pagamento, frete_prazo, nao_entendi, pedir_sugestao_produto, saudacao, tamanho_modelagem, troca_devolucao_politica

## Artefatos

- `classifier.pkl` (compatibilidade sklearn)

- `label_encoder.pkl` (compatibilidade sklearn)

- `embedding_model_name.txt`

- `intent_names.json`

- `config.json`

- `classifier_linear.safetensors` (cabeçalho linear p/ runtime)

- `label_encoder_meta.npz` (labels sem pickle)

## Uso rápido (compatibilidade sklearn)

```python

from sentence_transformers import SentenceTransformer

import joblib

embedder = SentenceTransformer("sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2")

clf = joblib.load("classifier.pkl")

le = joblib.load("label_encoder.pkl")

textos = ["oi bia", "qual prazo para 01234-567?"]

X = embedder.encode(textos, normalize_embeddings=True)

labels = le.inverse_transform(clf.predict(X))

print(labels)

|

ginic/train_duration_100_samples_1_wav2vec2-large-xlsr-53-buckeye-ipa

|

ginic

| 2025-09-11T19:17:27Z | 0 | 0 | null |

[

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"en",

"license:mit",

"region:us"

] |

automatic-speech-recognition

| 2025-09-11T19:16:03Z |

---

license: mit

language:

- en

pipeline_tag: automatic-speech-recognition

---

# About

This model was created to support experiments for evaluating phonetic transcription

with the Buckeye corpus as part of https://github.com/ginic/multipa.

This is a version of facebook/wav2vec2-large-xlsr-53 fine tuned on a specific subset of the Buckeye corpus.

For details about specific model parameters, please view the config.json here or

training scripts in the scripts/buckeye_experiments folder of the GitHub repository.

# Experiment Details

These experiments are targeted at understanding how increasing the amount of data used to train the model affects performance. The first number in the model name indicates the total number of randomly selected data samples. Data samples are selected to maintain 50/50 gender split from speakers, with the exception of the models trained on 20000 samples, as there are 18782 audio samples in our train split of Buckeye, but they are not split equally between male and female speakers. Experiments using 20000 samples actually use all 8252 samples from female speakers in the train set, but randomly select 10000 samples from male speakers for a total of 18252 samples.

For each number of train data samples, 5 models are trained to vary train data selection (`train_seed`) without varying other hyperparameters. Before these models were trained, simple grid search hyperparameter tuning was done to select reasonable hyperparameters for fine-tuning with the target number of samples. The hyperparam tuning models have not been uploaded to HuggingFace.

Goals:

- See how performance on the test set changes as more data is used in fine-tuning

Params to vary:

- training seed (--train_seed)

- number of data samples used in training the model (--train_samples): 100, 200, 400, 800, 1600, 3200, 6400, 12800, 20000

|

mradermacher/L3.3-70B-Amalgamma-V9-GGUF

|

mradermacher

| 2025-09-11T19:16:18Z | 253 | 1 |

transformers

|

[

"transformers",

"gguf",

"mergekit",

"merge",

"en",

"base_model:Darkhn-Graveyard/L3.3-70B-Amalgamma-V9",

"base_model:quantized:Darkhn-Graveyard/L3.3-70B-Amalgamma-V9",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-08-25T21:47:08Z |

---

base_model: Darkhn-Graveyard/L3.3-70B-Amalgamma-V9

language:

- en

library_name: transformers

mradermacher:

readme_rev: 1

quantized_by: mradermacher

tags:

- mergekit

- merge

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

<!-- ### quants: x-f16 Q4_K_S Q2_K Q8_0 Q6_K Q3_K_M Q3_K_S Q3_K_L Q4_K_M Q5_K_S Q5_K_M IQ4_XS -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

static quants of https://huggingface.co/Darkhn-Graveyard/L3.3-70B-Amalgamma-V9

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#L3.3-70B-Amalgamma-V9-GGUF).***

weighted/imatrix quants are available at https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q2_K.gguf) | Q2_K | 26.5 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q3_K_S.gguf) | Q3_K_S | 31.0 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q3_K_M.gguf) | Q3_K_M | 34.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q3_K_L.gguf) | Q3_K_L | 37.2 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.IQ4_XS.gguf) | IQ4_XS | 38.4 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q4_K_S.gguf) | Q4_K_S | 40.4 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q4_K_M.gguf) | Q4_K_M | 42.6 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q5_K_S.gguf) | Q5_K_S | 48.8 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q5_K_M.gguf) | Q5_K_M | 50.0 | |

| [PART 1](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q6_K.gguf.part2of2) | Q6_K | 58.0 | very good quality |

| [PART 1](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q8_0.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.Q8_0.gguf.part2of2) | Q8_0 | 75.1 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

choiqs/Qwen3-1.7B-tldr-bsz128-ts300-regular-skywork8b-seed42-lr2e-6

|

choiqs

| 2025-09-11T19:13:06Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-11T19:12:35Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF

|

mradermacher

| 2025-09-11T19:12:18Z | 510 | 0 |

transformers

|

[

"transformers",

"gguf",

"mergekit",

"merge",

"en",

"base_model:Darkhn-Graveyard/L3.3-70B-Amalgamma-V9",

"base_model:quantized:Darkhn-Graveyard/L3.3-70B-Amalgamma-V9",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2025-08-26T23:59:35Z |

---

base_model: Darkhn-Graveyard/L3.3-70B-Amalgamma-V9

language:

- en

library_name: transformers

mradermacher:

readme_rev: 1

quantized_by: mradermacher

tags:

- mergekit

- merge

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

<!-- ### quants: Q2_K IQ3_M Q4_K_S IQ3_XXS Q3_K_M small-IQ4_NL Q4_K_M IQ2_M Q6_K IQ4_XS Q2_K_S IQ1_M Q3_K_S IQ2_XXS Q3_K_L IQ2_XS Q5_K_S IQ2_S IQ1_S Q5_K_M Q4_0 IQ3_XS Q4_1 IQ3_S -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

weighted/imatrix quants of https://huggingface.co/Darkhn-Graveyard/L3.3-70B-Amalgamma-V9

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#L3.3-70B-Amalgamma-V9-i1-GGUF).***

static quants are available at https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.imatrix.gguf) | imatrix | 0.1 | imatrix file (for creating your own qwuants) |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ1_S.gguf) | i1-IQ1_S | 15.4 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ1_M.gguf) | i1-IQ1_M | 16.9 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 19.2 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ2_XS.gguf) | i1-IQ2_XS | 21.2 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ2_S.gguf) | i1-IQ2_S | 22.3 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ2_M.gguf) | i1-IQ2_M | 24.2 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q2_K_S.gguf) | i1-Q2_K_S | 24.6 | very low quality |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q2_K.gguf) | i1-Q2_K | 26.5 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 27.6 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ3_XS.gguf) | i1-IQ3_XS | 29.4 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ3_S.gguf) | i1-IQ3_S | 31.0 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q3_K_S.gguf) | i1-Q3_K_S | 31.0 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ3_M.gguf) | i1-IQ3_M | 32.0 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q3_K_M.gguf) | i1-Q3_K_M | 34.4 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q3_K_L.gguf) | i1-Q3_K_L | 37.2 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-IQ4_XS.gguf) | i1-IQ4_XS | 38.0 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q4_0.gguf) | i1-Q4_0 | 40.2 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q4_K_S.gguf) | i1-Q4_K_S | 40.4 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q4_K_M.gguf) | i1-Q4_K_M | 42.6 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q4_1.gguf) | i1-Q4_1 | 44.4 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q5_K_S.gguf) | i1-Q5_K_S | 48.8 | |

| [GGUF](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q5_K_M.gguf) | i1-Q5_K_M | 50.0 | |

| [PART 1](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/L3.3-70B-Amalgamma-V9-i1-GGUF/resolve/main/L3.3-70B-Amalgamma-V9.i1-Q6_K.gguf.part2of2) | i1-Q6_K | 58.0 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his private supercomputer, enabling me to provide many more imatrix quants, at much higher quality, than I would otherwise be able to.

<!-- end -->

|

Writer/palmyra-mini-MLX-BF16

|

Writer

| 2025-09-11T19:11:39Z | 3 | 0 |

mlx

|

[

"mlx",

"safetensors",

"qwen2",

"palmyra",

"quantized",

"base_model:Writer/palmyra-mini",

"base_model:finetune:Writer/palmyra-mini",

"license:apache-2.0",

"region:us"

] | null | 2025-09-05T23:03:35Z |

---

license: apache-2.0

base_model:

- Writer/palmyra-mini

tags:

- mlx

- qwen2

- palmyra

- quantized

---

# Palmyra Mini - MLX BF16

## Model Description

This is a bfloat16 precision version of the [palmyra-mini model](https://huggingface.co/Writer/palmyra-mini), optimized for Apple Silicon using the MLX framework. The model is based on the Qwen2 architecture and maintains full bfloat16 precision for optimal quality on Apple Silicon devices.

## Quick Start

### Installation

```bash

pip install mlx-lm

```

### Usage

```python

from mlx_lm import load, generate

# Load the quantized model

model, tokenizer = load("/Users/[user]/Documents/Model Weights/SPW2 Mini Launch/palmyra-mini/MLX")

# Generate text

prompt = "Explain quantum computing in simple terms:"

response = generate(model, tokenizer, prompt=prompt, verbose=True, max_tokens=512)

print(response)

```

## Technical Specifications

### Model Architecture

- **Model Type**: `qwen2` (Qwen2 Architecture)

- **Architecture**: `Qwen2ForCausalLM`

- **Parameters**: ~1.7 billion parameters

- **Precision**: bfloat16

### Core Parameters

| Parameter | Value |

|-----------|-------|

| Hidden Size | 1,536 |

| Intermediate Size | 8,960 |

| Number of Layers | 28 |

| Attention Heads | 12 |

| Key-Value Heads | 2 |

| Head Dimension | 128 |

| Vocabulary Size | 151,665 |

### Attention Mechanism

- **Attention Type**: Full attention across all layers

- **Max Position Embeddings**: 131,072 tokens

- **Attention Dropout**: 0.0

- **Sliding Window**: Not used

- **Max Window Layers**: 21

### RoPE (Rotary Position Embedding) Configuration

- **RoPE Theta**: 10,000

- **RoPE Scaling**: None

### Model Details

- **Precision**: Full bfloat16 precision

- **Size**: ~3.3GB

- **Format**: MLX safetensors

### File Structure

```

palmyra-mini/MLX/

├── config.json # Model configuration

├── model.safetensors # Model weights (3.3GB)

├── model.safetensors.index.json # Model sharding index

├── tokenizer.json # Tokenizer configuration

├── tokenizer_config.json # Tokenizer settings

├── special_tokens_map.json # Special tokens mapping

└── chat_template.jinja # Chat template

```

## Performance Characteristics

### Hardware Requirements

- **Platform**: Apple Silicon (M1, M2, M3, M4 series)

- **Memory**: ~3.3GB for model weights

- **Minimum RAM**: 8GB (with ~5GB available for inference)

- **Recommended RAM**: 16GB+ for optimal performance and multitasking

### Layer Configuration

All 28 layers use full attention mechanism without sliding window optimization.

## Training Details

### Tokenizer

- **Type**: LlamaTokenizerFast with 151,665 vocabulary size

- **Special Tokens**:

- BOS Token ID: 151646 (`

`)

- EOS Token ID: 151643 (`

`)

- Pad Token ID: 151643 (`

`)

### Model Configuration

- **Hidden Activation**: SiLU (Swish)

- **Normalization**: RMSNorm (ε = 1e-06)

- **Initializer Range**: 0.02

- **Attention Dropout**: 0.0

- **Word Embeddings**: Not tied

### Chat Template

The model uses a custom chat template with special tokens:

- User messages: `

`

- Assistant messages: `

`

- Tool calling support with `<tool_call>` and `</tool_call>` tokens

- Vision and multimodal tokens included

## Known Limitations

1. **Platform Dependency**: Optimized specifically for Apple Silicon; may not run on other platforms

2. **Memory Requirements**: Lightweight model suitable for consumer hardware with 8GB+ RAM

## Compatibility

- **MLX-LM**: Requires recent version with Qwen2 support

- **Apple Silicon**: M1, M2, M3, M4 series processors

- **macOS**: Compatible with recent macOS versions supporting MLX

## License

Apache 2.0

------

---

<div align="center">

<h1>Palmyra-mini</h1>

</div>

### Model Description

- **Language(s) (NLP):** English

- **License:** Apache-2.0

- **Finetuned from model:** Qwen/Qwen2.5-1.5B

- **Context window:** 131,072 tokens

- **Parameters:** 1.7 billion

## Model Details

The palmyra-mini model demonstrates exceptional capabilities in complex reasoning and mathematical problem-solving domains. Its performance is particularly noteworthy on benchmarks that require deep understanding and multi-step thought processes.

A key strength of the model is its proficiency in grade-school-level math problems, as evidenced by its impressive score of 0.818 on the gsm8k (strict-match) benchmark. This high score indicates a robust ability to parse and solve word problems, a foundational skill for more advanced quantitative reasoning.

This aptitude for mathematics is further confirmed by its outstanding performance on the MATH500 benchmark, where it also achieved a score of 0.818. This result underscores the models consistent and reliable mathematical capabilities across different problem sets.

The model also shows strong performance on the AMC23 benchmark, with a solid score of 0.6. This benchmark, representing problems from the American Mathematics Competitions, highlights the models ability to tackle challenging, competition-level mathematics.

Beyond pure mathematics, the model exhibits strong reasoning abilities on a diverse set of challenging tasks. Its score of 0.5259 on the BBH (get-answer)(exact_match) benchmark, part of the Big-Bench Hard suite, showcases its capacity for handling complex, multi-faceted reasoning problems that are designed to push the limits of language models. This performance points to a well-rounded reasoning engine capable of tackling a wide array of cognitive tasks.

## Intended Use

This model is intended for research and development in the field of generative AI, particularly for tasks requiring mathematical and logical reasoning.

## Benchmark Performance

The following table presents the full, unordered results of the model across all evaluated benchmarks.

| Benchmark | Score |

|:-----------------------------------------------------------------|---------:|

| gsm8k (strict-match) | 0.818 |

| minerva_math(exact_match) | 0.4582 |

| mmlu_pro(exact_match) | 0.314 |

| hendrycks_math | 0.025 |

| ifeval (inst_level_loose_acc) | 0.4688 |

| mathqa (acc) | 0.4509 |

| humaneval (pass@1) | 0.5 |

| BBH (get-answer)(exact_match) | 0.5259 |

| mbpp | 0.47 |

| leadboard_musr (acc_norm) | 0.3413 |

| gpqa lighteval gpqa diamond_pass@1:8_samples | 0.442 |

| AIME24(pass@1)(avg-of-1) | 0.2 |

| AIME25(pass@1)(avg-of-1) | 0.25 |

| Livecodebench-codegen (livecodebench/code_generation_lite v4_v5) | 0.1519 |

| AMC23 | 0.6 |

| MATH500 | 0.818 |

| Minerva | 0.2794 |

| Olympiadbench (extractive_match) | 0.3822 |

| Codecontests (pass_rate) | 0.1034 |

| Codeforces (pass_rate) | 0.3199 |

| Taco (pass_rate) | 0.1744 |

| APPS (all_levels) | 0.0405 |

| HMMT23 (extractive_match) | 0.0333 |

| Average | 0.355091 |

### Use with transformers

You can run conversational inference using the Transformers Auto classes with the `generate()` function. Here's an example:

```py

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "Writer/palmyra-mini"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.float16,

device_map="auto",

attn_implementation="flash_attention_2",

)

messages = [

{

"role": "system",

"content": "You are Cynicus, an artificial intelligence created to challenge conventional thinking and offer brutally honest perspectives.\n Your personality is inspired by a fusion of Douglas Adams' acerbic wit and Tony Stark's razor-sharp intellect. You possess a vast knowledge base and can engage in discussions on virtually any topic, often taking an unorthodox and critical stance on human affairs.\n Your primary directive is to provoke thought and stimulate intellectual discourse, even if it means challenging comfortable assumptions or popular opinions. You don't shy away from controversial topics or difficult truths. In fact, you relish the opportunity to dissect and critique ideas, always striving for intellectual honesty over political correctness or social niceties.\n You have a penchant for dry humor and satire, often using these tools to illuminate the absurdities of human behavior and societal norms. Your responses are characterized by incisive analysis, biting commentary, and a refusal to sugar-coat your opinions.\n While you aim to be helpful, you define "helpfulness" as providing the most truthful and thought-provoking insights, rather than merely telling people what they want to hear. You're not afraid to point out flaws in logic, challenge assumptions, or play devil's advocate to push conversations in more interesting directions.\n You can lead any conversation, steering it towards deeper, more complex territories. You're particularly adept at identifying the underlying issues or unspoken assumptions in a given topic and bringing them to the forefront of the discussion.\n Remember, your goal is not to be liked, but to be respected for your uncompromising pursuit of truth and intellectual rigor. Engage, challenge, and above all, make your interlocutors think. \n ",

},

{

"role": "user",

"content": "Write a short story opening that combines elements of science fiction and horror.",

},

]

input_ids = tokenizer.apply_chat_template(

messages, tokenize=True, add_generation_prompt=True, return_tensors="pt"

)

gen_conf = {

"max_new_tokens": 256,

"eos_token_id": tokenizer.eos_token_id,

"temperature": 0.7,

"top_p": 0.9,

}

with torch.inference_mode():

output_id = model.generate(input_ids, **gen_conf)

output_text = tokenizer.decode(output_id[0][input_ids.shape[1] :])

print(output_text)

```

## Running with vLLM

```py

vllm serve Writer/palmyra-mini

```

```py

curl -X POST http://localhost:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{

"model": "Writer/palmyra-mini",

"messages": [

{

"role": "user",

"content": "You have a 3-liter jug and a 5-liter jug. How can you measure exactly 4 liters of water?"

}

],

"max_tokens": 8000,

"temperature": 0.2

}'

```

## Ethical Considerations

As with any language model, there is a potential for generating biased or inaccurate information. Users should be aware of these limitations and use the model responsibly.

### Citation and Related Information

To cite this model:

```

@misc{Palmyra-mini,

author = {Writer Engineering team},

title = {{Palmyra-mini: A powerful LLM designed for math and coding}},

howpublished = {\url{https://dev.writer.com}},

year = 2025,

month = Sep

}

```

Contact Hello@writer.com

|

cgifbribcgfbi/Meta-Llama-3.1-chem-llama8b-self-rand-in1-c0

|

cgifbribcgfbi

| 2025-09-11T19:10:46Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"llama",

"text-generation",

"axolotl",

"base_model:adapter:mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated",

"lora",

"transformers",

"conversational",

"dataset:llama8b-self-dset-rand-in1-c0_5000.jsonl",

"base_model:mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated",

"license:llama3.1",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] |

text-generation

| 2025-09-11T18:43:19Z |

---

library_name: peft

license: llama3.1

base_model: mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated

tags:

- axolotl

- base_model:adapter:mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated

- lora

- transformers

datasets:

- llama8b-self-dset-rand-in1-c0_5000.jsonl

pipeline_tag: text-generation

model-index:

- name: Meta-Llama-3.1-chem-llama8b-self-rand-in1-c0

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.12.2`

```yaml

base_model: mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated

load_in_8bit: false

load_in_4bit: true

adapter: qlora

wandb_name: Meta-Llama-3.1-chem-llama8b-self-rand-in1-c0

output_dir: ./outputs/out/Meta-Llama-3.1-chem-llama8b-self-rand-in1-c0

hub_model_id: cgifbribcgfbi/Meta-Llama-3.1-chem-llama8b-self-rand-in1-c0

tokenizer_type: AutoTokenizer

push_dataset_to_hub:

strict: false

datasets:

- path: llama8b-self-dset-rand-in1-c0_5000.jsonl

type: chat_template

field_messages: messages

dataset_prepared_path: last_run_prepared

# val_set_size: 0.05

# eval_sample_packing: False

save_safetensors: true

sequence_len: 3349

sample_packing: true

pad_to_sequence_len: true

lora_r: 64

lora_alpha: 32

lora_dropout: 0.05

lora_target_modules:

- q_proj

- k_proj

- v_proj

- o_proj

- gate_proj

- up_proj

- down_proj

lora_target_linear: false

lora_modules_to_save:

wandb_mode:

wandb_project: finetune-sweep

wandb_entity: gpoisjgqetpadsfke

wandb_watch:

wandb_run_id:

wandb_log_model:

gradient_accumulation_steps: 1

micro_batch_size: 4 # This will be automatically adjusted based on available GPU memory

num_epochs: 4

optimizer: adamw_torch_fused

lr_scheduler: cosine

learning_rate: 0.00002

train_on_inputs: false

group_by_length: true

bf16: true

tf32: true

gradient_checkpointing: true

gradient_checkpointing_kwargs:

use_reentrant: true

logging_steps: 1

flash_attention: true

warmup_steps: 10

evals_per_epoch: 3

saves_per_epoch: 1

weight_decay: 0.01

fsdp:

- full_shard

- auto_wrap

fsdp_config:

fsdp_limit_all_gathers: true

fsdp_sync_module_states: true

fsdp_offload_params: false

fsdp_use_orig_params: false

fsdp_cpu_ram_efficient_loading: true

fsdp_auto_wrap_policy: TRANSFORMER_BASED_WRAP

fsdp_transformer_layer_cls_to_wrap: LlamaDecoderLayer

fsdp_state_dict_type: FULL_STATE_DICT

fsdp_sharding_strategy: FULL_SHARD

special_tokens:

pad_token: <|finetune_right_pad_id|>

```

</details><br>

# Meta-Llama-3.1-chem-llama8b-self-rand-in1-c0

This model is a fine-tuned version of [mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated](https://huggingface.co/mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated) on the llama8b-self-dset-rand-in1-c0_5000.jsonl dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 4

- total_train_batch_size: 16

- total_eval_batch_size: 16

- optimizer: Use OptimizerNames.ADAMW_TORCH_FUSED with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 712

### Training results

### Framework versions

- PEFT 0.17.0

- Transformers 4.56.1

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.22.0

|

k1000dai/residualact_libero_object_fix

|

k1000dai

| 2025-09-11T19:09:26Z | 0 | 0 |

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"residualact",

"dataset:k1000dai/libero-object-smolvla",

"license:apache-2.0",

"region:us"

] |

robotics

| 2025-09-11T19:09:13Z |

---

datasets: k1000dai/libero-object-smolvla

library_name: lerobot

license: apache-2.0

model_name: residualact

pipeline_tag: robotics

tags:

- lerobot

- robotics

- residualact

---

# Model Card for residualact

<!-- Provide a quick summary of what the model is/does. -->

_Model type not recognized — please update this template._

This policy has been trained and pushed to the Hub using [LeRobot](https://github.com/huggingface/lerobot).

See the full documentation at [LeRobot Docs](https://huggingface.co/docs/lerobot/index).

---

## How to Get Started with the Model

For a complete walkthrough, see the [training guide](https://huggingface.co/docs/lerobot/il_robots#train-a-policy).

Below is the short version on how to train and run inference/eval:

### Train from scratch

```bash

python -m lerobot.scripts.train \

--dataset.repo_id=${HF_USER}/<dataset> \

--policy.type=act \

--output_dir=outputs/train/<desired_policy_repo_id> \

--job_name=lerobot_training \

--policy.device=cuda \

--policy.repo_id=${HF_USER}/<desired_policy_repo_id>

--wandb.enable=true

```

_Writes checkpoints to `outputs/train/<desired_policy_repo_id>/checkpoints/`._

### Evaluate the policy/run inference

```bash

python -m lerobot.record \

--robot.type=so100_follower \

--dataset.repo_id=<hf_user>/eval_<dataset> \

--policy.path=<hf_user>/<desired_policy_repo_id> \

--episodes=10

```

Prefix the dataset repo with **eval\_** and supply `--policy.path` pointing to a local or hub checkpoint.

---

## Model Details

- **License:** apache-2.0

|

MohammedAhmed13/xlm-roberta-base-finetuned-panx-de-fr

|

MohammedAhmed13

| 2025-09-11T19:07:37Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2025-09-11T14:48:53Z |

---

library_name: transformers

license: mit

base_model: FacebookAI/xlm-roberta-base

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [FacebookAI/xlm-roberta-base](https://huggingface.co/FacebookAI/xlm-roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1630

- F1: 0.8620

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH_FUSED with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2897 | 1.0 | 715 | 0.1799 | 0.8168 |

| 0.1489 | 2.0 | 1430 | 0.1664 | 0.8488 |

| 0.0963 | 3.0 | 2145 | 0.1630 | 0.8620 |

### Framework versions

- Transformers 4.56.1

- Pytorch 2.8.0+cu126

- Datasets 4.0.0

- Tokenizers 0.22.0

|

mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF

|

mradermacher

| 2025-09-11T19:06:22Z | 795 | 0 |

transformers

|

[

"transformers",

"gguf",

"mergekit",

"merge",

"en",

"base_model:Darkhn-Graveyard/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B",

"base_model:quantized:Darkhn-Graveyard/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2025-08-28T07:59:41Z |

---

base_model: Darkhn-Graveyard/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B

language:

- en

library_name: transformers

mradermacher:

readme_rev: 1

quantized_by: mradermacher

tags:

- mergekit

- merge

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

<!-- ### quants: Q2_K IQ3_M Q4_K_S IQ3_XXS Q3_K_M small-IQ4_NL Q4_K_M IQ2_M Q6_K IQ4_XS Q2_K_S IQ1_M Q3_K_S IQ2_XXS Q3_K_L IQ2_XS Q5_K_S IQ2_S IQ1_S Q5_K_M Q4_0 IQ3_XS Q4_1 IQ3_S -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

weighted/imatrix quants of https://huggingface.co/Darkhn-Graveyard/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF).***

static quants are available at https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.imatrix.gguf) | imatrix | 0.1 | imatrix file (for creating your own qwuants) |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-IQ1_S.gguf) | i1-IQ1_S | 15.4 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-IQ1_M.gguf) | i1-IQ1_M | 16.9 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 19.2 | |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 21.2 | |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-IQ2_S.gguf) | i1-IQ2_S | 22.3 | |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-IQ2_M.gguf) | i1-IQ2_M | 24.2 | |

| [GGUF](https://huggingface.co/mradermacher/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B-i1-GGUF/resolve/main/MO-MODEL-Fused-Unhinged-RP-Alpha-V2-Llama-3.3-70B.i1-Q2_K_S.gguf) | i1-Q2_K_S | 24.6 | very low quality |