modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-12 18:33:19

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-12 18:33:14

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Prathyusha101/tldr-ppco-g1p0-l1p0

|

Prathyusha101

| 2025-08-19T14:52:57Z | 0 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt_neox",

"text-classification",

"generated_from_trainer",

"dataset:trl-internal-testing/tldr-preference-sft-trl-style",

"arxiv:1909.08593",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2025-08-19T10:22:47Z |

---

datasets: trl-internal-testing/tldr-preference-sft-trl-style

library_name: transformers

model_name: tldr-ppco-g1p0-l1p0

tags:

- generated_from_trainer

licence: license

---

# Model Card for tldr-ppco-g1p0-l1p0

This model is a fine-tuned version of [None](https://huggingface.co/None) on the [trl-internal-testing/tldr-preference-sft-trl-style](https://huggingface.co/datasets/trl-internal-testing/tldr-preference-sft-trl-style) dataset.

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="Prathyusha101/tldr-ppco-g1p0-l1p0", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/prathyusha1-the-university-of-texas-at-austin/huggingface/runs/qb7oufpu)

This model was trained with PPO, a method introduced in [Fine-Tuning Language Models from Human Preferences](https://huggingface.co/papers/1909.08593).

### Framework versions

- TRL: 0.15.0.dev0

- Transformers: 4.53.1

- Pytorch: 2.5.1

- Datasets: 3.6.0

- Tokenizers: 0.21.2

## Citations

Cite PPO as:

```bibtex

@article{mziegler2019fine-tuning,

title = {{Fine-Tuning Language Models from Human Preferences}},

author = {Daniel M. Ziegler and Nisan Stiennon and Jeffrey Wu and Tom B. Brown and Alec Radford and Dario Amodei and Paul F. Christiano and Geoffrey Irving},

year = 2019,

eprint = {arXiv:1909.08593}

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

forstseh/blockassist-bc-arctic_soaring_heron_1755611858

|

forstseh

| 2025-08-19T14:51:28Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"arctic soaring heron",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:51:13Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- arctic soaring heron

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

hakimjustbao/blockassist-bc-raging_subtle_wasp_1755613402

|

hakimjustbao

| 2025-08-19T14:51:26Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"raging subtle wasp",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:51:23Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- raging subtle wasp

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

zenqqq/blockassist-bc-restless_reptilian_caterpillar_1755614989

|

zenqqq

| 2025-08-19T14:51:10Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"restless reptilian caterpillar",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:50:41Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- restless reptilian caterpillar

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Leoar/blockassist-bc-pudgy_toothy_cheetah_1755614865

|

Leoar

| 2025-08-19T14:49:09Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"pudgy toothy cheetah",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:49:00Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- pudgy toothy cheetah

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

uncahined/blockassist-bc-prowling_durable_tapir_1755614817

|

uncahined

| 2025-08-19T14:48:34Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"prowling durable tapir",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:48:28Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- prowling durable tapir

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

umairmaliick/falcon-7b-instruct-taskpro-lora

|

umairmaliick

| 2025-08-19T14:45:49Z | 0 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"base_model:adapter:tiiuae/falcon-7b-instruct",

"lora",

"transformers",

"text-generation",

"conversational",

"base_model:tiiuae/falcon-7b-instruct",

"license:apache-2.0",

"region:us"

] |

text-generation

| 2025-08-19T13:53:18Z |

---

library_name: peft

license: apache-2.0

base_model: tiiuae/falcon-7b-instruct

tags:

- base_model:adapter:tiiuae/falcon-7b-instruct

- lora

- transformers

pipeline_tag: text-generation

model-index:

- name: falcon-7b-instruct-taskpro-lora

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# falcon-7b-instruct-taskpro-lora

This model is a fine-tuned version of [tiiuae/falcon-7b-instruct](https://huggingface.co/tiiuae/falcon-7b-instruct) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.2754

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 1 | 3.2923 |

| No log | 2.0 | 2 | 3.2812 |

| No log | 3.0 | 3 | 3.2754 |

### Framework versions

- PEFT 0.17.0

- Transformers 4.55.2

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

lilTAT/blockassist-bc-gentle_rugged_hare_1755614706

|

lilTAT

| 2025-08-19T14:45:33Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"gentle rugged hare",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:45:30Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- gentle rugged hare

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Trelis/Qwen3-4B_ds-arc-agi-2-perfect-100_test-c8

|

Trelis

| 2025-08-19T14:45:18Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"conversational",

"en",

"base_model:unsloth/Qwen3-4B",

"base_model:finetune:unsloth/Qwen3-4B",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T14:44:31Z |

---

base_model: unsloth/Qwen3-4B

tags:

- text-generation-inference

- transformers

- unsloth

- qwen3

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** Trelis

- **License:** apache-2.0

- **Finetuned from model :** unsloth/Qwen3-4B

This qwen3 model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

lisaozill03/blockassist-bc-rugged_prickly_alpaca_1755613191

|

lisaozill03

| 2025-08-19T14:45:03Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"rugged prickly alpaca",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:45:00Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- rugged prickly alpaca

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Team-Atom/act_record_pp_blue001_96_100000

|

Team-Atom

| 2025-08-19T14:41:55Z | 0 | 0 |

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"act",

"dataset:Team-Atom/PiPl_blue_001",

"arxiv:2304.13705",

"license:apache-2.0",

"region:us"

] |

robotics

| 2025-08-19T14:41:42Z |

---

datasets: Team-Atom/PiPl_blue_001

library_name: lerobot

license: apache-2.0

model_name: act

pipeline_tag: robotics

tags:

- robotics

- lerobot

- act

---

# Model Card for act

<!-- Provide a quick summary of what the model is/does. -->

[Action Chunking with Transformers (ACT)](https://huggingface.co/papers/2304.13705) is an imitation-learning method that predicts short action chunks instead of single steps. It learns from teleoperated data and often achieves high success rates.

This policy has been trained and pushed to the Hub using [LeRobot](https://github.com/huggingface/lerobot).

See the full documentation at [LeRobot Docs](https://huggingface.co/docs/lerobot/index).

---

## How to Get Started with the Model

For a complete walkthrough, see the [training guide](https://huggingface.co/docs/lerobot/il_robots#train-a-policy).

Below is the short version on how to train and run inference/eval:

### Train from scratch

```bash

python -m lerobot.scripts.train \

--dataset.repo_id=${HF_USER}/<dataset> \

--policy.type=act \

--output_dir=outputs/train/<desired_policy_repo_id> \

--job_name=lerobot_training \

--policy.device=cuda \

--policy.repo_id=${HF_USER}/<desired_policy_repo_id>

--wandb.enable=true

```

_Writes checkpoints to `outputs/train/<desired_policy_repo_id>/checkpoints/`._

### Evaluate the policy/run inference

```bash

python -m lerobot.record \

--robot.type=so100_follower \

--dataset.repo_id=<hf_user>/eval_<dataset> \

--policy.path=<hf_user>/<desired_policy_repo_id> \

--episodes=10

```

Prefix the dataset repo with **eval\_** and supply `--policy.path` pointing to a local or hub checkpoint.

---

## Model Details

- **License:** apache-2.0

|

lilTAT/blockassist-bc-gentle_rugged_hare_1755614412

|

lilTAT

| 2025-08-19T14:40:39Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"gentle rugged hare",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:40:35Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- gentle rugged hare

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Feruru/Classifier

|

Feruru

| 2025-08-19T14:36:48Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-19T14:35:49Z |

---

license: apache-2.0

---

|

asteria-life/openalex_articles_v0

|

asteria-life

| 2025-08-19T14:36:06Z | 0 | 0 |

model2vec

|

[

"model2vec",

"safetensors",

"embeddings",

"static-embeddings",

"sentence-transformers",

"license:mit",

"region:us"

] | null | 2025-08-19T14:35:56Z |

---

library_name: model2vec

license: mit

model_name: tmpp0ggqq40

tags:

- embeddings

- static-embeddings

- sentence-transformers

---

# tmpp0ggqq40 Model Card

This [Model2Vec](https://github.com/MinishLab/model2vec) model is a distilled version of a Sentence Transformer. It uses static embeddings, allowing text embeddings to be computed orders of magnitude faster on both GPU and CPU. It is designed for applications where computational resources are limited or where real-time performance is critical. Model2Vec models are the smallest, fastest, and most performant static embedders available. The distilled models are up to 50 times smaller and 500 times faster than traditional Sentence Transformers.

## Installation

Install model2vec using pip:

```

pip install model2vec

```

## Usage

### Using Model2Vec

The [Model2Vec library](https://github.com/MinishLab/model2vec) is the fastest and most lightweight way to run Model2Vec models.

Load this model using the `from_pretrained` method:

```python

from model2vec import StaticModel

# Load a pretrained Model2Vec model

model = StaticModel.from_pretrained("tmpp0ggqq40")

# Compute text embeddings

embeddings = model.encode(["Example sentence"])

```

### Using Sentence Transformers

You can also use the [Sentence Transformers library](https://github.com/UKPLab/sentence-transformers) to load and use the model:

```python

from sentence_transformers import SentenceTransformer

# Load a pretrained Sentence Transformer model

model = SentenceTransformer("tmpp0ggqq40")

# Compute text embeddings

embeddings = model.encode(["Example sentence"])

```

### Distilling a Model2Vec model

You can distill a Model2Vec model from a Sentence Transformer model using the `distill` method. First, install the `distill` extra with `pip install model2vec[distill]`. Then, run the following code:

```python

from model2vec.distill import distill

# Distill a Sentence Transformer model, in this case the BAAI/bge-base-en-v1.5 model

m2v_model = distill(model_name="BAAI/bge-base-en-v1.5", pca_dims=256)

# Save the model

m2v_model.save_pretrained("m2v_model")

```

## How it works

Model2vec creates a small, fast, and powerful model that outperforms other static embedding models by a large margin on all tasks we could find, while being much faster to create than traditional static embedding models such as GloVe. Best of all, you don't need any data to distill a model using Model2Vec.

It works by passing a vocabulary through a sentence transformer model, then reducing the dimensionality of the resulting embeddings using PCA, and finally weighting the embeddings using [SIF weighting](https://openreview.net/pdf?id=SyK00v5xx). During inference, we simply take the mean of all token embeddings occurring in a sentence.

## Additional Resources

- [Model2Vec Repo](https://github.com/MinishLab/model2vec)

- [Model2Vec Base Models](https://huggingface.co/collections/minishlab/model2vec-base-models-66fd9dd9b7c3b3c0f25ca90e)

- [Model2Vec Results](https://github.com/MinishLab/model2vec/tree/main/results)

- [Model2Vec Tutorials](https://github.com/MinishLab/model2vec/tree/main/tutorials)

- [Website](https://minishlab.github.io/)

## Library Authors

Model2Vec was developed by the [Minish Lab](https://github.com/MinishLab) team consisting of [Stephan Tulkens](https://github.com/stephantul) and [Thomas van Dongen](https://github.com/Pringled).

## Citation

Please cite the [Model2Vec repository](https://github.com/MinishLab/model2vec) if you use this model in your work.

```

@article{minishlab2024model2vec,

author = {Tulkens, Stephan and {van Dongen}, Thomas},

title = {Model2Vec: Fast State-of-the-Art Static Embeddings},

year = {2024},

url = {https://github.com/MinishLab/model2vec}

}

```

|

Sayemahsjn/blockassist-bc-playful_feline_octopus_1755613025

|

Sayemahsjn

| 2025-08-19T14:35:58Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"playful feline octopus",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:35:54Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- playful feline octopus

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

aleebaster/blockassist-bc-sly_eager_boar_1755612564

|

aleebaster

| 2025-08-19T14:34:33Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"sly eager boar",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:34:26Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- sly eager boar

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Jekareka/test

|

Jekareka

| 2025-08-19T14:34:22Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-19T14:34:22Z |

---

license: apache-2.0

---

|

ihsanridzi/blockassist-bc-wiry_flexible_owl_1755612485

|

ihsanridzi

| 2025-08-19T14:34:04Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"wiry flexible owl",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:34:00Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- wiry flexible owl

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

sarrockia/prefectIllustriousXL_v3.safetensors

|

sarrockia

| 2025-08-19T14:33:06Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-19T14:04:58Z |

---

license: apache-2.0

---

|

shanaka95/gemma-3-270m-it-rag-finetune

|

shanaka95

| 2025-08-19T14:28:51Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gemma3_text",

"text-generation",

"generated_from_trainer",

"sft",

"trl",

"conversational",

"base_model:shanaka95/checkpoints",

"base_model:finetune:shanaka95/checkpoints",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-18T10:32:44Z |

---

base_model: shanaka95/checkpoints

library_name: transformers

model_name: gemma-3-270m-it-rag-finetune

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for gemma-3-270m-it-rag-finetune

This model is a fine-tuned version of [shanaka95/checkpoints](https://huggingface.co/shanaka95/checkpoints).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="shanaka95/gemma-3-270m-it-rag-finetune", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.2

- Pytorch: 2.8.0+cu129

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

quantumxnode/blockassist-bc-dormant_peckish_seahorse_1755611635

|

quantumxnode

| 2025-08-19T14:22:09Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"dormant peckish seahorse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:22:06Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- dormant peckish seahorse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Andra76/blockassist-bc-deadly_enormous_butterfly_1755613240

|

Andra76

| 2025-08-19T14:21:36Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"deadly enormous butterfly",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:21:31Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- deadly enormous butterfly

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

chainway9/blockassist-bc-untamed_quick_eel_1755611572

|

chainway9

| 2025-08-19T14:20:21Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed quick eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:20:17Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed quick eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Joetib/en-twi-qwen2.5-0.5B-Instruct

|

Joetib

| 2025-08-19T14:19:37Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T14:19:22Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

mradermacher/qqWen-32B-RL-Reasoning-GGUF

|

mradermacher

| 2025-08-19T14:19:30Z | 0 | 0 |

transformers

|

[

"transformers",

"gguf",

"en",

"base_model:morganstanley/qqWen-32B-RL-Reasoning",

"base_model:quantized:morganstanley/qqWen-32B-RL-Reasoning",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-08-18T22:30:32Z |

---

base_model: morganstanley/qqWen-32B-RL-Reasoning

language:

- en

library_name: transformers

license: apache-2.0

mradermacher:

readme_rev: 1

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

<!-- ### quants: x-f16 Q4_K_S Q2_K Q8_0 Q6_K Q3_K_M Q3_K_S Q3_K_L Q4_K_M Q5_K_S Q5_K_M IQ4_XS -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

static quants of https://huggingface.co/morganstanley/qqWen-32B-RL-Reasoning

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#qqWen-32B-RL-Reasoning-GGUF).***

weighted/imatrix quants are available at https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q2_K.gguf) | Q2_K | 12.4 | |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q3_K_S.gguf) | Q3_K_S | 14.5 | |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q3_K_M.gguf) | Q3_K_M | 16.0 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q3_K_L.gguf) | Q3_K_L | 17.3 | |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.IQ4_XS.gguf) | IQ4_XS | 18.0 | |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q4_K_S.gguf) | Q4_K_S | 18.9 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q4_K_M.gguf) | Q4_K_M | 20.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q5_K_S.gguf) | Q5_K_S | 22.7 | |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q5_K_M.gguf) | Q5_K_M | 23.4 | |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q6_K.gguf) | Q6_K | 27.0 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/qqWen-32B-RL-Reasoning-GGUF/resolve/main/qqWen-32B-RL-Reasoning.Q8_0.gguf) | Q8_0 | 34.9 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

Kdch2597/ppo-LunarLander-v2

|

Kdch2597

| 2025-08-19T14:18:49Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2025-08-19T14:18:31Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 263.01 +/- 19.22

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Neurazum/Tbai-DPA-1.0

|

Neurazum

| 2025-08-19T14:17:43Z | 0 | 1 |

transformers

|

[

"transformers",

"safetensors",

"text",

"image",

"brain",

"dementia",

"mri",

"fmri",

"health",

"diagnosis",

"diseases",

"alzheimer",

"parkinson",

"comment",

"doctor",

"vbai",

"tbai",

"bai",

"text-generation",

"tr",

"doi:10.57967/hf/5699",

"license:cc-by-nc-sa-4.0",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-05-31T13:02:32Z |

---

license: cc-by-nc-sa-4.0

language:

- tr

pipeline_tag: text-generation

tags:

- text

- image

- brain

- dementia

- mri

- fmri

- health

- diagnosis

- diseases

- alzheimer

- parkinson

- comment

- doctor

- vbai

- tbai

- bai

library_name: transformers

---

# Tbai-DPA 1.0 Sürümü (TR) [BETA]

## Tanım

Tbai-DPA 1.0 (Dementia, Parkinson, Alzheimer) modeli, MRI veya fMRI görüntüsü üzerinden beyin hastalıklarını yorumlayarak daha detaylı teşhis etmek amacıyla eğitilmiş ve geliştirilmiştir. Hastanın parkinson olup olmadığını, demans durumunu ve alzheimer riskini yüksek doğruluk oranı ile göstermektedir.

### Kitle / Hedef

Tbai modelleri, Vbai ile birlikte çalışarak; öncelikle hastaneler, sağlık merkezleri ve bilim merkezleri için geliştirilmiştir.

### Sınıflar

- **Alzheimer Hastası**

- **Ortalama Alzheimer Riski**

- **Hafif Alzheimer Riski**

- **Çok Hafif Alzheimer Riski**

- **Risk Yok**

- **Parkinson Hastası**

## ----------------------------------------

# Tbai-DPA 1.0 Version (EN) [BETA]

## Description

The Tbai-DPA 1.0 (Dementia, Parkinson's, Alzheimer's) model has been trained and developed to interpret brain diseases through MRI or fMRI images for more detailed diagnosis. It indicates whether the patient has Parkinson's disease, dementia, and Alzheimer's risk with a high accuracy rate.

### Audience / Target

Tbai models, working in conjunction with Vbai, have been developed primarily for hospitals, health centers, and science centers.

### Classes

- **Alzheimer's disease**

- **Average Risk of Alzheimer's Disease**

- **Mild Alzheimer's Risk**

- **Very Mild Alzheimer's Risk**

- **No Risk**

- **Parkinson's Disease**

## Kullanım / Usage

1. Sanal ortam oluşturun. / Create a virtual environment.

```bash

python -3.9.0 -m venv myenv

```

2. Bağımlılıkları yükleyin. / Load dependencies.

```bash

pip install -r requirements.txt

```

3. Dosyayı çalıştırın. / Run the script.

```python

import torch

from transformers import T5Tokenizer, T5ForConditionalGeneration

import warnings

warnings.filterwarnings("ignore", category=FutureWarning)

warnings.filterwarnings("ignore", category=UserWarning)

def load_tbai_model(model_dir: str, device):

tokenizer = T5Tokenizer.from_pretrained(model_dir)

model = T5ForConditionalGeneration.from_pretrained(model_dir).to(device)

return tokenizer, model

def generate_comment_sampling(

tokenizer,

model,

sinif_adi: str,

device,

max_length: int = 128

) -> str:

input_text = f"Sınıf: {sinif_adi}"

inputs = tokenizer(

input_text,

return_tensors="pt",

padding="longest",

truncation=True,

max_length=32

).to(device)

out_ids = model.generate(

**inputs,

max_length=max_length,

do_sample=True,

top_k=50,

top_p=0.95,

no_repeat_ngram_size=2,

early_stopping=True

)

comment = tokenizer.decode(out_ids[0], skip_special_tokens=True)

return comment

def test_with_sampling():

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

tokenizer, model = load_tbai_model(

"Tbai/model/dir/path",

device)

test_classes = [

"alzheimer disease",

"mild alzheimer risk",

"moderate alzheimer risk",

"very mild alzheimer risk",

"no risk",

"parkinson disease"

]

for cls in test_classes:

print(f"--- Class: {cls} (Deneme 1) ---")

print(generate_comment_sampling(tokenizer, model, cls, device))

print(f"--- Class: {cls} (Deneme 2) ---")

print(generate_comment_sampling(tokenizer, model, cls, device))

print()

def main():

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f">>> Using device: {device}\n")

model_dir = "Tbai/model/dir/path"

tokenizer, model = load_tbai_model(model_dir, device)

print(">>> Tokenizer ve model başarıyla yüklendi.\n")

test_classes = [

"alzheimer disease",

"mild alzheimer risk",

"moderate alzheimer risk",

"very mild alzheimer risk",

"no risk",

"parkinson disease"

]

for cls in test_classes:

generated = generate_comment_sampling(tokenizer, model, cls, device)

print(f"Sınıf: {cls}")

print(f"Üretilen Yorum: {generated}\n")

if __name__ == "__main__":

main()

```

4. Görüntü İşleme Modeli ile Beraber Çalıştırın. / Run Together with Image Processing Model.

```python

import os

import time

import torch

import torch.nn as nn

from torchvision import transforms

from PIL import Image

import matplotlib.pyplot as plt

from thop import profile

import numpy as np

from datetime import datetime

import warnings

from sklearn.metrics import average_precision_score

warnings.filterwarnings("ignore", category=FutureWarning)

warnings.filterwarnings("ignore", category=UserWarning)

from transformers import T5Tokenizer, T5ForConditionalGeneration

class SimpleCNN(nn.Module):

def __init__(self, model_type='c', num_classes=6): # Model tipine göre "model_type" değişkeni "f, c, q" olarak değiştirilebilir. / The ‘model_type’ variable can be changed to ‘f, c, q’ according to the model type.

super(SimpleCNN, self).__init__()

self.num_classes = num_classes

if model_type == 'f':

self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1)

self.conv2 = nn.Conv2d(16, 32, kernel_size=3, stride=1, padding=1)

self.conv3 = nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1)

self.fc1 = nn.Linear(64 * 28 * 28, 256)

self.dropout = nn.Dropout(0.5)

elif model_type == 'c':

self.conv1 = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1)

self.conv2 = nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1)

self.conv3 = nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1)

self.fc1 = nn.Linear(128 * 28 * 28, 512)

self.dropout = nn.Dropout(0.5)

elif model_type == 'q':

self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)

self.conv2 = nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1)

self.conv3 = nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1)

self.conv4 = nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1)

self.fc1 = nn.Linear(512 * 14 * 14, 1024)

self.dropout = nn.Dropout(0.5)

self.fc2 = nn.Linear(self.fc1.out_features, num_classes)

self.relu = nn.ReLU()

self.pool = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)

def forward(self, x):

x = self.pool(self.relu(self.conv1(x)))

x = self.pool(self.relu(self.conv2(x)))

x = self.pool(self.relu(self.conv3(x)))

if hasattr(self, 'conv4'):

x = self.pool(self.relu(self.conv4(x)))

x = x.view(x.size(0), -1)

x = self.relu(self.fc1(x))

x = self.dropout(x)

x = self.fc2(x)

return x

def predict_image(model: nn.Module, image_path: str, transform, device):

img = Image.open(image_path).convert('RGB')

inp = transform(img).unsqueeze(0).to(device)

model.eval()

with torch.no_grad():

out = model(inp)

prob = torch.nn.functional.softmax(out, dim=1)

pred = prob.argmax(dim=1).item()

conf = prob[0, pred].item() * 100

return pred, conf, inp, prob

def calculate_performance_metrics(model: nn.Module, device, input_size=(1, 3, 224, 224)):

model.to(device)

x = torch.randn(input_size).to(device)

flops, params = profile(model, inputs=(x,), verbose=False)

cpu_start = time.time()

_ = model(x)

cpu_time = (time.time() - cpu_start) * 1000

return {

'size_pixels': input_size[-1],

'speed_cpu_b1': cpu_time,

'speed_cpu_b32': cpu_time / 10,

'speed_v100_b1': cpu_time / 2,

'params_million': params / 1e6,

'flops_billion': flops / 1e9

}

def load_tbai_model(model_dir: str, device):

tokenizer = T5Tokenizer.from_pretrained(model_dir)

model = T5ForConditionalGeneration.from_pretrained(model_dir).to(device)

model.eval()

return tokenizer, model

def generate_comment_turkce(tokenizer, model, sinif_adi: str, device, max_length: int = 64) -> str:

input_text = f"Sınıf: {sinif_adi}"

inputs = tokenizer(

input_text,

return_tensors="pt",

padding="longest",

truncation=True,

max_length=32

).to(device)

out_ids = model.generate(

**inputs,

max_length=max_length,

do_sample=True,

top_k=50,

top_p=0.95,

no_repeat_ngram_size=2,

early_stopping=True

)

comment = tokenizer.decode(out_ids[0], skip_special_tokens=True)

return comment

def save_monitoring_log(predicted_class, confidence, comment_text,

metrics, class_names, image_path, ap_scores=None, map_score=None,

log_path='monitoring_log.txt'):

os.makedirs(os.path.dirname(log_path) or '.', exist_ok=True)

timestamp = datetime.now().strftime('%Y-%m-%d %H:%M:%S')

img_name = os.path.basename(image_path)

log = f"""

===== Model Monitoring Log =====

Timestamp: {timestamp}

Image: {img_name}

Predicted Class: {class_names[predicted_class]}

Confidence: {confidence:.2f}%

Comment: {comment_text}

-- Performance Metrics --

Params (M): {metrics['params_million']:.2f}

FLOPs (B): {metrics['flops_billion']:.2f}

Image Size: {metrics['size_pixels']}x{metrics['size_pixels']}

CPU Time b1 (ms): {metrics['speed_cpu_b1']:.2f}

V100 Time b1 (ms): {metrics['speed_v100_b1']:.2f}

V100 Time b32 (ms): {metrics['speed_cpu_b32']:.2f}

-- AP/mAP Metrics --"""

if ap_scores is not None and map_score is not None:

log += f"\nmAP: {map_score:.4f}"

for i, (class_name, ap) in enumerate(zip(class_names, ap_scores)):

log += f"\nAP_{class_name}: {ap:.4f}"

else:

log += "\nAP/mAP: Not calculated (single image)"

log += "\n================================\n"

with open(log_path, 'a', encoding='utf-8') as f:

f.write(log)

def main():

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406],

[0.229, 0.224, 0.225])

])

class_names = [

'Alzheimer Disease',

'Mild Alzheimer Risk',

'Moderate Alzheimer Risk',

'Very Mild Alzheimer Risk',

'No Risk',

'Parkinson Disease'

]

model = SimpleCNN(model_type='c', num_classes=len(class_names)).to(device) # Model tipine göre "model_type" değişkeni "f, c, q" olarak değiştirilebilir. / The ‘model_type’ variable can be changed to ‘f, c, q’ according to the model type.

model_path = 'Vbai/model/file/path'

try:

model.load_state_dict(torch.load(model_path, map_location=device))

except Exception as e:

print(f"Görüntü modeli yükleme hatası: {e}")

return

metrics = calculate_performance_metrics(model, device)

tbai_model_dir = "Tbai/model/dir/path"

tokenizer, tbai_model = load_tbai_model(tbai_model_dir, device)

en2tr = {

'Alzheimer Disease': 'Alzheimer Hastalığı',

'Mild Alzheimer Risk': 'Hafif Alzheimer Riski',

'Moderate Alzheimer Risk': 'Orta Düzey Alzheimer Riski',

'Very Mild Alzheimer Risk': 'Çok Hafif Alzheimer Riski',

'No Risk': 'Risk Yok',

'Parkinson Disease': 'Parkinson Hastalığı'

}

image_path = 'test/images/path'

pred_class_idx, confidence, inp_tensor, predicted_probs = predict_image(model, image_path, transform, device)

predicted_class_name = class_names[pred_class_idx]

print(f"Prediction: {predicted_class_name} ({confidence:.2f}%)")

print(f"Confidence: {confidence:.2f}%")

print(f"Params (M): {metrics['params_million']:.2f}")

print(f"FLOPs (B): {metrics['flops_billion']:.2f}")

print(f"Image Size: {metrics['size_pixels']}x{metrics['size_pixels']}")

print(f"CPU Time b1 (ms): {metrics['speed_cpu_b1']:.2f}")

print(f"V100 Time b1 (ms): {metrics['speed_v100_b1']:.2f}")

print(f"V100 Time b32 (ms): {metrics['speed_cpu_b32']:.2f}")

tr_class_name = en2tr.get(predicted_class_name, predicted_class_name)

try:

comment_text = generate_comment_turkce(tokenizer, tbai_model, tr_class_name, device)

except Exception as e:

print(f"Yorum üretme hatası: {e}")

comment_text = "Yorum üretilemedi."

print(f"\nComment (Tbai-DPA 1.0): {comment_text}")

save_monitoring_log(

pred_class_idx, confidence, comment_text,

metrics, class_names, image_path)

img_show = inp_tensor.squeeze(0).permute(1, 2, 0).cpu().numpy()

mean = np.array([0.485, 0.456, 0.406])

std = np.array([0.229, 0.224, 0.225])

img_show = img_show * std + mean

img_show_clipped = np.clip(img_show, 0.0, 1.0)

plt.imshow(img_show_clipped)

plt.title(f'{predicted_class_name} — {confidence:.2f}%')

plt.axis('off')

plt.show()

if __name__ == '__main__':

main()

```

#### Lisans/License: CC-BY-NC-SA-4.0

|

AiArtLab/kc

|

AiArtLab

| 2025-08-19T14:17:04Z | 0 | 2 | null |

[

"text-to-image",

"base_model:KBlueLeaf/Kohaku-XL-Zeta",

"base_model:finetune:KBlueLeaf/Kohaku-XL-Zeta",

"region:us"

] |

text-to-image

| 2025-04-30T17:10:58Z |

---

base_model:

- stabilityai/stable-diffusion-xl-base-1.0

- KBlueLeaf/Kohaku-XL-Zeta

pipeline_tag: text-to-image

---

## Description

This model is a custom fine-tuned variant based on the Kohaku-XL-Zeta pretrained foundation [Kohaku-XL-Zeta](https://huggingface.co/KBlueLeaf/Kohaku-XL-Zeta). Kohaku-XL-Zeta itself is a "raw" base model trained for 1 epoch on 8+ million Danbooru(mostly) images , using 4x NVIDIA 3090 GPUs! While the original Kohaku is not user-friendly out-of-the-box, it serves as a flexible starting point for creative adaptations.

To enhance encoder stability and inject cross-domain knowledge beyond Danbooru-specific features, the model was merged with ColorfulXL using cosine dissimilarity weighting (0.25 blend ratio). This integration aims to broaden the model’s understanding of natural language and artistic concepts beyond typical Danbooru tagging conventions.

Post-merge stabilization involved 6 epochs at 2e-6 learning rate, followed by ongoing fine-tuning at 9e-7 learning rate to refine details. The closest publicly available fine-tune of this lineage is Illustrous, though it uses an earlier Kohaku version with weaker text comprehension. This variant leverages the improved Kohaku-Colorful hybrid (KC), prioritizing non-realistic art generation and creative flexibility over photorealism.

Key Notes :

- Not optimized for realism; best suited for anime/artistic styles.

- Ideal for users seeking a customizable foundation for niche art generation or further fine-tuning experiments.

## Donations

Please contact with us if you may provide some GPU's or money on training

DOGE: DEw2DR8C7BnF8GgcrfTzUjSnGkuMeJhg83

BTC: 3JHv9Hb8kEW8zMAccdgCdZGfrHeMhH1rpN

## Contacts

[recoilme](https://t.me/recoilme)

|

mradermacher/UI-Venus-Navi-72B-GGUF

|

mradermacher

| 2025-08-19T14:16:46Z | 0 | 1 |

transformers

|

[

"transformers",

"gguf",

"en",

"base_model:inclusionAI/UI-Venus-Navi-72B",

"base_model:quantized:inclusionAI/UI-Venus-Navi-72B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-08-18T23:56:29Z |

---

base_model: inclusionAI/UI-Venus-Navi-72B

language:

- en

library_name: transformers

license: apache-2.0

mradermacher:

readme_rev: 1

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

<!-- ### quants: x-f16 Q4_K_S Q2_K Q8_0 Q6_K Q3_K_M Q3_K_S Q3_K_L Q4_K_M Q5_K_S Q5_K_M IQ4_XS -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

static quants of https://huggingface.co/inclusionAI/UI-Venus-Navi-72B

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#UI-Venus-Navi-72B-GGUF).***

weighted/imatrix quants are available at https://huggingface.co/mradermacher/UI-Venus-Navi-72B-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.mmproj-Q8_0.gguf) | mmproj-Q8_0 | 0.9 | multi-modal supplement |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.mmproj-f16.gguf) | mmproj-f16 | 1.5 | multi-modal supplement |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q2_K.gguf) | Q2_K | 29.9 | |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q3_K_S.gguf) | Q3_K_S | 34.6 | |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q3_K_M.gguf) | Q3_K_M | 37.8 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q3_K_L.gguf) | Q3_K_L | 39.6 | |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.IQ4_XS.gguf) | IQ4_XS | 40.3 | |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q4_K_S.gguf) | Q4_K_S | 44.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q4_K_M.gguf) | Q4_K_M | 47.5 | fast, recommended |

| [PART 1](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q5_K_S.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q5_K_S.gguf.part2of2) | Q5_K_S | 51.5 | |

| [PART 1](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q5_K_M.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q5_K_M.gguf.part2of2) | Q5_K_M | 54.5 | |

| [PART 1](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q6_K.gguf.part2of2) | Q6_K | 64.4 | very good quality |

| [PART 1](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q8_0.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/UI-Venus-Navi-72B-GGUF/resolve/main/UI-Venus-Navi-72B.Q8_0.gguf.part2of2) | Q8_0 | 77.4 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

Kurosawama/Llama-3.2-3B-Instruct-Inference-align

|

Kurosawama

| 2025-08-19T14:15:36Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"trl",

"dpo",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-08-19T14:15:34Z |

---

library_name: transformers

tags:

- trl

- dpo

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

mang3dd/blockassist-bc-tangled_slithering_alligator_1755611332

|

mang3dd

| 2025-08-19T14:15:31Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T14:15:28Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

ibm-granite/granite-speech-3.3-2b

|

ibm-granite

| 2025-08-19T14:14:22Z | 44,969 | 22 |

transformers

|

[

"transformers",

"safetensors",

"granite_speech",

"automatic-speech-recognition",

"multilingual",

"en",

"fr",

"de",

"es",

"pt",

"arxiv:2505.08699",

"base_model:ibm-granite/granite-3.3-2b-instruct",

"base_model:finetune:ibm-granite/granite-3.3-2b-instruct",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2025-04-28T15:25:29Z |

---

license: apache-2.0

language:

- multilingual

- en

- fr

- de

- es

- pt

base_model:

- ibm-granite/granite-3.3-2b-instruct

library_name: transformers

---

# Granite-speech-3.3-2b (revision 3.3.2)

**Model Summary:**

Granite-speech-3.3-2b is a compact and efficient speech-language model, specifically designed for automatic speech recognition (ASR) and automatic speech translation (AST). Granite-speech-3.3-2b uses a two-pass design, unlike integrated models that combine speech and language into a single pass. Initial calls to granite-speech-3.3-2b will transcribe audio files into text. To process the transcribed text using the underlying Granite language model, users must make a second call as each step must be explicitly initiated.

The model was trained on a collection of public corpora comprising diverse datasets for ASR and AST as well as synthetic datasets tailored to support the speech translation task. Granite-speech-3.3-2b was trained by modality aligning granite-3.3-2b-instruct (https://huggingface.co/ibm-granite/granite-3.3-2b-instruct) to speech on publicly available open source corpora containing audio inputs and text targets. Compared to the initial release, revision 3.3.2

* supports multilingual speech inputs in English, French, German, Spanish and Portuguese,

* provides transcription accuracy improvements for English ASR by using a deeper acoustic encoder and additional training data.

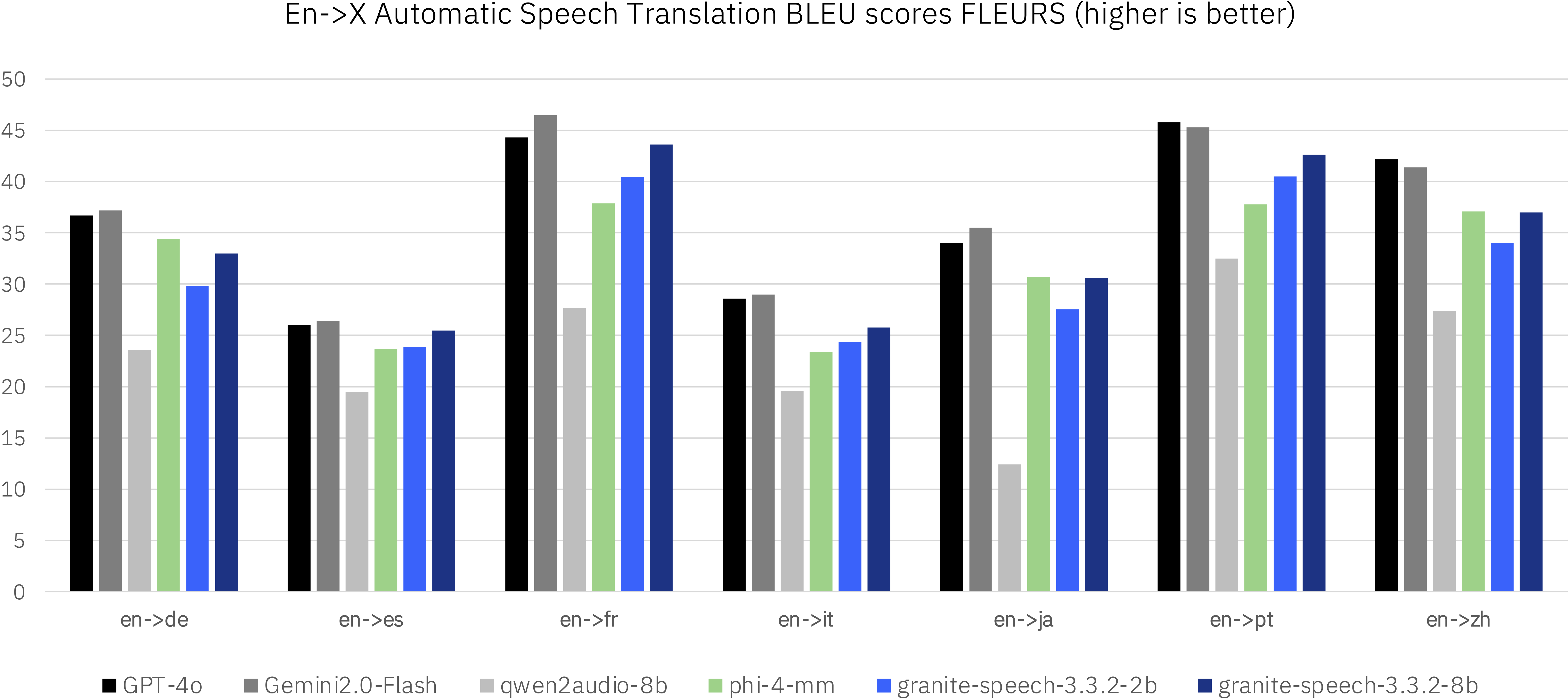

**Evaluations:**

We evaluated granite-speech-3.3-2b revision 3.3.2 alongside granite-speech-3.3-8b (https://huggingface.co/ibm-granite/granite-speech-3.3-8b) and other speech-language models in the less than 8b parameter range as well as dedicated ASR and AST systems on standard benchmarks. The evaluation spanned multiple public benchmarks, with particular emphasis on English ASR tasks while also including multilingual ASR and AST for X-En and En-X translations.

<br>

<br>

<br>

<br>

<br>

<br>

**Release Date**: June 19, 2025

**License:** [Apache 2.0](https://www.apache.org/licenses/LICENSE-2.0)

**Supported Languages:**

English, French, German, Spanish, Portuguese

**Intended Use:**

The model is intended to be used in enterprise applications that involve processing of speech inputs. In particular, the model is well-suited for English, French, German, Spanish and Portuguese speech-to-text and speech translations to and from English for the same languages plus English-to-Japanese and English-to-Mandarin. The model can also be used for tasks that involve text-only input since it calls the underlying granite-3.3-2b-instruct when the user specifies a prompt that does not contain audio.

## Generation:

Granite Speech model is supported natively in `transformers` from the `main` branch. Below is a simple example of how to use the `granite-speech-3.3-2b` revision 3.3.2 model.

### Usage with `transformers`

First, make sure to install a recent version of transformers:

```shell

pip install transformers>=4.52.4 torchaudio peft soundfile

```

Then run the code:

```python

import torch

import torchaudio

from transformers import AutoProcessor, AutoModelForSpeechSeq2Seq

from huggingface_hub import hf_hub_download

device = "cuda" if torch.cuda.is_available() else "cpu"

model_name = "ibm-granite/granite-speech-3.3-2b"

processor = AutoProcessor.from_pretrained(model_name)

tokenizer = processor.tokenizer

model = AutoModelForSpeechSeq2Seq.from_pretrained(

model_name, device_map=device, torch_dtype=torch.bfloat16

)

# load audio

audio_path = hf_hub_download(repo_id=model_name, filename="10226_10111_000000.wav")

wav, sr = torchaudio.load(audio_path, normalize=True)

assert wav.shape[0] == 1 and sr == 16000 # mono, 16khz

# create text prompt

system_prompt = "Knowledge Cutoff Date: April 2024.\nToday's Date: April 9, 2025.\nYou are Granite, developed by IBM. You are a helpful AI assistant"

user_prompt = "<|audio|>can you transcribe the speech into a written format?"

chat = [

dict(role="system", content=system_prompt),

dict(role="user", content=user_prompt),

]

prompt = tokenizer.apply_chat_template(chat, tokenize=False, add_generation_prompt=True)

# run the processor+model

model_inputs = processor(prompt, wav, device=device, return_tensors="pt").to(device)

model_outputs = model.generate(**model_inputs, max_new_tokens=200, do_sample=False, num_beams=1)

# Transformers includes the input IDs in the response.

num_input_tokens = model_inputs["input_ids"].shape[-1]

new_tokens = torch.unsqueeze(model_outputs[0, num_input_tokens:], dim=0)

output_text = tokenizer.batch_decode(

new_tokens, add_special_tokens=False, skip_special_tokens=True

)

print(f"STT output = {output_text[0].upper()}")

```

### Usage with `vLLM`

First, make sure to install the latest version of vLLM:

```shell

pip install vllm --upgrade

```

* Code for offline mode:

```python

from transformers import AutoTokenizer

from vllm import LLM, SamplingParams

from vllm.assets.audio import AudioAsset

from vllm.lora.request import LoRARequest

model_id = "ibm-granite/granite-speech-3.3-2b"

tokenizer = AutoTokenizer.from_pretrained(model_id)

def get_prompt(question: str, has_audio: bool):

"""Build the input prompt to send to vLLM."""

if has_audio:

question = f"<|audio|>{question}"

chat = [

{

"role": "user",

"content": question

}

]

return tokenizer.apply_chat_template(chat, tokenize=False)

# NOTE - you may see warnings about multimodal lora layers being ignored;

# this is okay as the lora in this model is only applied to the LLM.

model = LLM(

model=model_id,

enable_lora=True,

max_lora_rank=64,

max_model_len=2048, # This may be needed for lower resource devices.

limit_mm_per_prompt={"audio": 1},

)

### 1. Example with Audio [make sure to use the lora]

question = "can you transcribe the speech into a written format?"

prompt_with_audio = get_prompt(

question=question,

has_audio=True,

)

audio = AudioAsset("mary_had_lamb").audio_and_sample_rate

inputs = {

"prompt": prompt_with_audio,

"multi_modal_data": {

"audio": audio,

}

}

outputs = model.generate(

inputs,

sampling_params=SamplingParams(

temperature=0.2,

max_tokens=64,

),

lora_request=[LoRARequest("speech", 1, model_id)]

)

print(f"Audio Example - Question: {question}")

print(f"Generated text: {outputs[0].outputs[0].text}")

### 2. Example without Audio [do NOT use the lora]

question = "What is the capital of Brazil?"

prompt = get_prompt(

question=question,

has_audio=False,

)

outputs = model.generate(

{"prompt": prompt},

sampling_params=SamplingParams(

temperature=0.2,

max_tokens=12,

),

)

print(f"Text Only Example - Question: {question}")

print(f"Generated text: {outputs[0].outputs[0].text}")

```

* Code for online mode:

```python

"""

Launch the vLLM server with the following command:

vllm serve ibm-granite/granite-speech-3.3-2b \

--api-key token-abc123 \

--max-model-len 2048 \

--enable-lora \

--lora-modules speech=ibm-granite/granite-speech-3.3-2b \

--max-lora-rank 64

"""

import base64

import requests

from openai import OpenAI

from vllm.assets.audio import AudioAsset

# Modify OpenAI's API key and API base to use vLLM's API server.

openai_api_key = "token-abc123"

openai_api_base = "http://localhost:8000/v1"

client = OpenAI(

# defaults to os.environ.get("OPENAI_API_KEY")

api_key=openai_api_key,

base_url=openai_api_base,

)

base_model_name = "ibm-granite/granite-speech-3.3-2b"

lora_model_name = "speech"

# Any format supported by librosa is supported

audio_url = AudioAsset("mary_had_lamb").url

# Use base64 encoded audio in the payload

def encode_audio_base64_from_url(audio_url: str) -> str:

"""Encode an audio retrieved from a remote url to base64 format."""

with requests.get(audio_url) as response:

response.raise_for_status()

result = base64.b64encode(response.content).decode('utf-8')

return result

audio_base64 = encode_audio_base64_from_url(audio_url=audio_url)

### 1. Example with Audio

# NOTE: we pass the name of the lora model (`speech`) here because we have audio.

question = "can you transcribe the speech into a written format?"

chat_completion_with_audio = client.chat.completions.create(

messages=[{

"role": "user",

"content": [

{

"type": "text",

"text": question

},

{

"type": "audio_url",

"audio_url": {

# Any format supported by librosa is supported

"url": f"data:audio/ogg;base64,{audio_base64}"

},

},

],

}],

temperature=0.2,

max_tokens=64,

model=lora_model_name,

)

print(f"Audio Example - Question: {question}")

print(f"Generated text: {chat_completion_with_audio.choices[0].message.content}")

### 2. Example without Audio

# NOTE: we pass the name of the base model here because we do not have audio.

question = "What is the capital of Brazil?"

chat_completion_with_audio = client.chat.completions.create(

messages=[{

"role": "user",

"content": [

{

"type": "text",

"text": question

},

],

}],

temperature=0.2,

max_tokens=12,

model=base_model_name,

)

print(f"Text Only Example - Question: {question}")

print(f"Generated text: {chat_completion_with_audio.choices[0].message.content}")

```

**Model Architecture:**

The architecture of granite-speech-3.3-2b revision 3.3.2 consists of the following components:

(1) Speech encoder: 16 conformer blocks trained with Connectionist Temporal Classification (CTC) on character-level targets on the subset containing

only ASR corpora (see configuration below). In addition, our CTC encoder uses block-attention with 4-seconds audio blocks and self-conditioned CTC

from the middle layer.

| Configuration parameter | Value |

|-----------------|----------------------|

| Input dimension | 160 (80 logmels x 2) |

| Nb. of layers | 16 |

| Hidden dimension | 1024 |

| Nb. of attention heads | 8 |

| Attention head size | 128 |

| Convolution kernel size | 15 |

| Output dimension | 256 |

(2) Speech projector and temporal downsampler (speech-text modality adapter): we use a 2-layer window query transformer (q-former) operating on

blocks of 15 1024-dimensional acoustic embeddings coming out of the last conformer block of the speech encoder that get downsampled by a factor of 5

using 3 trainable queries per block and per layer. The total temporal downsampling factor is 10 (2x from the encoder and 5x from the projector)

resulting in a 10Hz acoustic embeddings rate for the LLM. The encoder, projector and LoRA adapters were fine-tuned/trained jointly on all the

corpora mentioned under **Training Data**.

(3) Large language model: granite-3.3-2b-instruct with 128k context length (https://huggingface.co/ibm-granite/granite-3.3-2b-instruct).

(4) LoRA adapters: rank=64 applied to the query, value projection matrices

**Training Data:**