code

stringlengths 2.5k

6.36M

| kind

stringclasses 2

values | parsed_code

stringlengths 0

404k

| quality_prob

float64 0

0.98

| learning_prob

float64 0.03

1

|

|---|---|---|---|---|

# Hands-on LH2: the multijunction launcher

A tokamak a intrinsequally a pulsed machine. In order to perform long plasma discharges, it is necessary to drive a part of the plasma current, in order to limit (or ideally cancel) the magnetic flux consumption. Additional current drive systems are used for these. Among the current drive systems, _Lower Hybrid Current Drive_ (LHCD) systems have demonstrated the larger current drive efficiency.

A Lower Hybrid launcher is generally made of numerous waveguides, stacked next to each other by their large sides. Since there is a phase shift between each waveguide in the toroidal direction, these launchers constitute a _phased array_. A phased array is an array of radiating elements in which the relative phases of the respective signals feeding these elements are varied in such a way that the effective radiation pattern of the array is reinforced in a desired direction and suppressed in undesired directions. A phased array is an example of N-slit diffraction. It may also be viewed as the coherent addition of N sources. In the case of LHCD launchers, the RF power is transmitted into a specific direction in the plasma through plasma waves. These waves will ultimately drive some additional plasma current in the tokamak.

The total number of waveguides in a LHCD launcher increased with each launcher generation. From simple structures, made of two or four waveguides, today's LHCD launchers such as the Tore Supra ones have hundred of waveguides. With such a number of waveguides, it is not possible anymore to excite each waveguides separately. _Multijunctions_ have been designed to solve this challenge. They act as a power splitter while phasing the adjacent waveguides.

The aim of this hands-on is to measure and characterize the RF performances of a multijunction structure, on a real Tore Supra C3 mock-up. From these relative measurements, you will have to analyse the performances of the multijunction and to deduce if the manufacturing tolerances affect them.

Before or in parallel to making measurements, you will have to calculate what would be the ideal spectral power density (or _spectrum_) launched by such a multijunction. This will serve them for making comparison theory vs experimental and thus discuss about the performances one could expect.

## 1. LHCD launcher spectrum

The objective of this section is to get you to understand the multiple requirements of a LHCD launcher.

## 2. RF Measurements

Because of the non-standard dimensions of the waveguides, it is not possible to use commercial calibration toolkit, and thus to make an

precise and absolute calibration of the RF measurements. However, relative comparisons are still relevant.

-----

# Solution

This notebook illustrates a method to compute the power density spectrum of a phased array.

```

%pylab

%matplotlib inline

from scipy.constants import c

```

## Phased array geometry

A linear phased array with equal spaced elements is easiest to analyze. It is illustrated in the following figure, in which radiating elements are located at a $\Delta z$ distance between each other. These elements radiates with a phase shift $\Delta \Phi$.

Let's a rectangular waveguide phased array facing the plasma. The waveguides periodicity is $\Delta z=b+e$ where $b$ is the width of a waveguide and $e$ the thickness of the septum between waveguides and the phase shift between waveguides is $\Delta \Phi$. We will suppose here that the amplitude and the phase of the waves in a waveguide do not depend of the spatial direction $z$. Thus we have $\Delta\Phi=$. The geometry is illustrated in the figure below.

<img src="./LH2_Multijunction_data/phased_array_grill.png">

## Ideal grill

Let's make the hypothesis that the electric field at the interface antenna-plasma is not perturbed from the electric field in the waveguide. At the antenna-plasma interface, the total electric field is thus:

$$ E(z) = \sum_{n=1}^N E_n \Pi_n(z) = \sum_{n=1}^N A_n e^{j\Phi_n} \Pi_n(z) $$

where $\Pi_n(z)$ is a Heavisyde step function, equals to unity for $z$ corresponding to a waveguide $n$, and zero elsewhere. The power density spectrum is homogeneous to the square of the Fourier transform of the electric field, that is, to :

$$ \tilde{E}(k_z) = \int E(z) e^{j k_z z} \, dz $$

Where $k_z=n_z k_0$. If one calculates the modulus square of the previous expression, this should give:

$$ dP(n_z) \propto sinc^2 \left( k_0 n_z \frac{b}{2} \right) \left( \frac{\sin(N \Phi/2)}{\sin\Phi/2} \right)^2 $$

With $\Phi=k_0 n_z \Delta z + \Delta\Phi$. The previous expression is maximized for $\Phi=2 p \pi, p\in \mathbb{N}$. Assuming $p=0$, this leads to the condition :

$$ n_{z0} = - \frac{\Delta\Phi}{k_0 \Delta z} $$

Let's define the following function:

```

def ideal_spectrum(b,e,phi,N=6,f=3.7e9):

nz = np.arange(-10,10,0.1)

k0 = (2*pi*f)/c

PHI = k0*nz*(b+e) + phi

dP = sinc(k0*nz*b/2)*(sin(N*PHI/2)/sin(PHI/2))**2

return(nz,dP)

```

And trace the spectrum of an ideal launcher:

```

nz, dP_ideal = ideal_spectrum(b=8e-3, e=2e-3, phi=pi/2, N=6)

plot(nz, abs(dP_ideal), lw=2)

xlabel('$n_z=k_z/k_0$');

ylabel('Power density [a.u.]');

title('Power density spectrum of an ideal LH grill launcher')

grid('on')

plt.savefig('multijunction_ideal_spectrum.png', dpi=150)

```

## Realistic grill

Let's illustrate this with a more realistic case. Below we define a function that generate the electric field along z for N waveguides, d-spaced and with a constant phase shift. The spacial precision can be set optionnaly.

```

def generate_Efield(b,e,phi,N=6,dz=1e-4,A=1):

# generate the z-axis, between [z_min, z_max[ by dz steps

z_min = 0 - 0.01

z_max = N*(b+e) + 0.01

z = arange(z_min, z_max, dz)

# construct the Efield (complex valued)

E = zeros_like(z,dtype=complex)

for idx in arange(N):

E[ (z>=idx*(b+e)) & (z < idx*(b+e)+b) ] = A * exp(1j*idx*phi)

return(z,E)

```

Then we use this function to generate the electric field at the mouth of a 6 waveguide launcher, of waveguide width b=8mm, septum thickness e=2mm with a phase shift between waveguides of 90° ($\pi/2$) :

```

z,E = generate_Efield(b=8e-3, e=2e-3,phi=pi/2)

fig, (ax1, ax2) = plt.subplots(2,1,sharex=True)

ax1.plot(z,abs(E), lw=2)

ax1.set_ylabel('Amplitude [a.u.]')

ax1.grid(True)

ax1.set_ylim((-0.1,1.1))

ax2.plot(z,angle(E)*180/pi,'g', lw=2)

ax2.set_xlabel('z [m]')

ax2.set_ylabel('Phase [deg]')

ax2.grid(True)

ax2.set_ylim((-200, 200))

fig.savefig('multijunction_ideal_excitation.png', dpi=150)

```

Now, let's take the fourier transform of such a field (the source frequency is here f=3.7 GHz, the frequency of the Tore Supra LH system).

```

def calculate_spectrum(z,E,f=3.7e9):

k0 = 2*pi*f/c

lambda0 = c/f

# fourier domain points

B = 2**18

Efft = np.fft.fftshift(np.fft.fft(E,B))

# fourier domain bins

dz = z[1] - z[0] # assumes spatial period is constant

df = 1/(B*dz)

K = arange(-B/2,+B/2)

# spatial frequency bins

Fz= K*df

# parallel index is kz/k0

nz= (2*pi/k0)*Fz

# ~ power density spectrum

p = (dz)**2/lambda0 * (1/2*Efft*conj(Efft));

return(nz,p)

nz,p = calculate_spectrum(z,E)

plot(nz,real(p),lw=2)

xlim((-10,10))

xlabel('$n_z=k_z/k_0$')

ylabel('Power density [a.u.]')

title('Spectral power density of an ideal LH launcher')

grid('on')

plt.savefig('multijunction_ideal_spectrum.png', dpi=150)

```

The main component of the spectrum seems located near $n_z$=2. Looking at the previous analytical formula, which expresses the extremum of the power density spectrum:

```

f = 3.7e9 # frequency [Hz]

b = 8e-3 # waveguide width [m]

e = 2e-3 # septum width [m]

k0 = (2*pi*f) / c # wavenumber in vacuum

delta_phi = pi/2 # phase shift between waveguides

```

$$n_{z0} = \frac{k_{z0}}{k_0} = \frac{\Delta \Phi}{k_0 \Delta z }$$

```

nz0 = pi/2 / ((b+e) * k0) # main component of the spectrum

nz0

```

Which is what we expected from the previous Figure.

## Current Drive Direction

Let's assume that the plasma current is clockwise as seen from the top of a tokamak. Let's also define the positive direction of the toroidal magnetic field being in the same direction than the plasma current, i.e. :

$$

\mathbf{B}_0 = B_0 \mathbf{e}_\parallel

$$

and

$$

\mathbf{I}_p = I_p \mathbf{e}_\parallel

$$

Let be $\mathbf{J}_{LH}$ the current density created by the Lower Hybrid system. The current density is expressed as

$$

\mathbf{J}_{LH} = - n e v_\parallel \mathbf{e}_\parallel

$$

where $v_\parallel=c/n_\parallel$. As we want the current driven to be in the same direction than the plasma current, i.e.:

$$

\mathbf{J}_{LH}\cdot\mathbf{I}_p > 0

$$

one must have :

$$

n_\parallel < 0

$$

## RF Measurements of the multijunction

```

import numpy as np

calibration = np.loadtxt('LH2_Multijunction_data/calibration.s2p', skiprows=5)

fwd1 = np.loadtxt('LH2_Multijunction_data/fwd1.s2p', skiprows=5)

fwd2 = np.loadtxt('LH2_Multijunction_data/fwd2.s2p', skiprows=5)

fwd3 = np.loadtxt('LH2_Multijunction_data/fwd3.s2p', skiprows=5)

fwd4 = np.loadtxt('LH2_Multijunction_data/fwd4.s2p', skiprows=5)

fwd5 = np.loadtxt('LH2_Multijunction_data/fwd5.s2p', skiprows=5)

fwd6 = np.loadtxt('LH2_Multijunction_data/fwd6.s2p', skiprows=5)

f = calibration[:,0]

S21_cal = calibration[:,3]

fig, ax = plt.subplots()

ax.plot(f/1e9, fwd1[:,3]-S21_cal)

ax.plot(f/1e9, fwd2[:,3]-S21_cal)

ax.plot(f/1e9, fwd3[:,3]-S21_cal)

ax.plot(f/1e9, fwd4[:,3]-S21_cal)

ax.plot(f/1e9, fwd5[:,3]-S21_cal)

#ax.plot(f/1e9, fwd6[:,3]-S21_cal)

ax.legend(('Forward wg#1', 'Forward wg#2','Forward wg#3','Forward wg#4','Forward wg#5'))

ax.grid(True)

ax.axvline(3.7, color='r', ls='--')

ax.axhline(10*np.log10(1/6), color='gray', ls='--')

ax.set_ylabel('S21 [dB]')

ax.set_xlabel('f [GHz]')

fwds = [fwd1, fwd2, fwd3, fwd4, fwd5, fwd6]

s21 = []

for fwd in fwds:

s21.append(10**((fwd[:,3]-S21_cal)/20) * np.exp(1j*fwd[:,4]*np.pi/180))

s21 = np.asarray(s21)

# find the 3.7 GHz point

idx = np.argwhere(f == 3.7e9)

s21_3dot7 = s21[:,idx].squeeze()

fig, ax = plt.subplots(nrows=2)

ax[0].bar(np.arange(1,7), np.abs(s21_3dot7))

ax[1].bar(np.arange(1,7), 180/np.pi*np.unwrap(np.angle(s21_3dot7)))

```

Clearly, the last waveguide measurements are strange...

## Checking the power conservation

```

fig, ax = plt.subplots()

plot(f/1e9, abs(s21**2).sum(axis=0))

ax.grid(True)

ax.axvline(3.7, color='r', ls='--')

ax.set_ylabel('$\sum |S_{21}|^2$ ')

ax.set_xlabel('f [GHz]')

```

Clearly, the power conservation is far from being verified, because of the large incertitude of the measurement here.

### CSS Styling

```

from IPython.core.display import HTML

def css_styling():

styles = open("../../styles/custom.css", "r").read()

return HTML(styles)

css_styling()

```

|

github_jupyter

|

%pylab

%matplotlib inline

from scipy.constants import c

def ideal_spectrum(b,e,phi,N=6,f=3.7e9):

nz = np.arange(-10,10,0.1)

k0 = (2*pi*f)/c

PHI = k0*nz*(b+e) + phi

dP = sinc(k0*nz*b/2)*(sin(N*PHI/2)/sin(PHI/2))**2

return(nz,dP)

nz, dP_ideal = ideal_spectrum(b=8e-3, e=2e-3, phi=pi/2, N=6)

plot(nz, abs(dP_ideal), lw=2)

xlabel('$n_z=k_z/k_0$');

ylabel('Power density [a.u.]');

title('Power density spectrum of an ideal LH grill launcher')

grid('on')

plt.savefig('multijunction_ideal_spectrum.png', dpi=150)

def generate_Efield(b,e,phi,N=6,dz=1e-4,A=1):

# generate the z-axis, between [z_min, z_max[ by dz steps

z_min = 0 - 0.01

z_max = N*(b+e) + 0.01

z = arange(z_min, z_max, dz)

# construct the Efield (complex valued)

E = zeros_like(z,dtype=complex)

for idx in arange(N):

E[ (z>=idx*(b+e)) & (z < idx*(b+e)+b) ] = A * exp(1j*idx*phi)

return(z,E)

z,E = generate_Efield(b=8e-3, e=2e-3,phi=pi/2)

fig, (ax1, ax2) = plt.subplots(2,1,sharex=True)

ax1.plot(z,abs(E), lw=2)

ax1.set_ylabel('Amplitude [a.u.]')

ax1.grid(True)

ax1.set_ylim((-0.1,1.1))

ax2.plot(z,angle(E)*180/pi,'g', lw=2)

ax2.set_xlabel('z [m]')

ax2.set_ylabel('Phase [deg]')

ax2.grid(True)

ax2.set_ylim((-200, 200))

fig.savefig('multijunction_ideal_excitation.png', dpi=150)

def calculate_spectrum(z,E,f=3.7e9):

k0 = 2*pi*f/c

lambda0 = c/f

# fourier domain points

B = 2**18

Efft = np.fft.fftshift(np.fft.fft(E,B))

# fourier domain bins

dz = z[1] - z[0] # assumes spatial period is constant

df = 1/(B*dz)

K = arange(-B/2,+B/2)

# spatial frequency bins

Fz= K*df

# parallel index is kz/k0

nz= (2*pi/k0)*Fz

# ~ power density spectrum

p = (dz)**2/lambda0 * (1/2*Efft*conj(Efft));

return(nz,p)

nz,p = calculate_spectrum(z,E)

plot(nz,real(p),lw=2)

xlim((-10,10))

xlabel('$n_z=k_z/k_0$')

ylabel('Power density [a.u.]')

title('Spectral power density of an ideal LH launcher')

grid('on')

plt.savefig('multijunction_ideal_spectrum.png', dpi=150)

f = 3.7e9 # frequency [Hz]

b = 8e-3 # waveguide width [m]

e = 2e-3 # septum width [m]

k0 = (2*pi*f) / c # wavenumber in vacuum

delta_phi = pi/2 # phase shift between waveguides

nz0 = pi/2 / ((b+e) * k0) # main component of the spectrum

nz0

import numpy as np

calibration = np.loadtxt('LH2_Multijunction_data/calibration.s2p', skiprows=5)

fwd1 = np.loadtxt('LH2_Multijunction_data/fwd1.s2p', skiprows=5)

fwd2 = np.loadtxt('LH2_Multijunction_data/fwd2.s2p', skiprows=5)

fwd3 = np.loadtxt('LH2_Multijunction_data/fwd3.s2p', skiprows=5)

fwd4 = np.loadtxt('LH2_Multijunction_data/fwd4.s2p', skiprows=5)

fwd5 = np.loadtxt('LH2_Multijunction_data/fwd5.s2p', skiprows=5)

fwd6 = np.loadtxt('LH2_Multijunction_data/fwd6.s2p', skiprows=5)

f = calibration[:,0]

S21_cal = calibration[:,3]

fig, ax = plt.subplots()

ax.plot(f/1e9, fwd1[:,3]-S21_cal)

ax.plot(f/1e9, fwd2[:,3]-S21_cal)

ax.plot(f/1e9, fwd3[:,3]-S21_cal)

ax.plot(f/1e9, fwd4[:,3]-S21_cal)

ax.plot(f/1e9, fwd5[:,3]-S21_cal)

#ax.plot(f/1e9, fwd6[:,3]-S21_cal)

ax.legend(('Forward wg#1', 'Forward wg#2','Forward wg#3','Forward wg#4','Forward wg#5'))

ax.grid(True)

ax.axvline(3.7, color='r', ls='--')

ax.axhline(10*np.log10(1/6), color='gray', ls='--')

ax.set_ylabel('S21 [dB]')

ax.set_xlabel('f [GHz]')

fwds = [fwd1, fwd2, fwd3, fwd4, fwd5, fwd6]

s21 = []

for fwd in fwds:

s21.append(10**((fwd[:,3]-S21_cal)/20) * np.exp(1j*fwd[:,4]*np.pi/180))

s21 = np.asarray(s21)

# find the 3.7 GHz point

idx = np.argwhere(f == 3.7e9)

s21_3dot7 = s21[:,idx].squeeze()

fig, ax = plt.subplots(nrows=2)

ax[0].bar(np.arange(1,7), np.abs(s21_3dot7))

ax[1].bar(np.arange(1,7), 180/np.pi*np.unwrap(np.angle(s21_3dot7)))

fig, ax = plt.subplots()

plot(f/1e9, abs(s21**2).sum(axis=0))

ax.grid(True)

ax.axvline(3.7, color='r', ls='--')

ax.set_ylabel('$\sum |S_{21}|^2$ ')

ax.set_xlabel('f [GHz]')

from IPython.core.display import HTML

def css_styling():

styles = open("../../styles/custom.css", "r").read()

return HTML(styles)

css_styling()

| 0.523908 | 0.993183 |

# Tutorial Python

## Print

```

print("Halo, nama saya Adam, biasa dipanggil Arthur")

print('Halo, nama saya Adam, biasa dipanggil Arthur')

print("""Halo, nama saya Adam, biasa dipanggil Arthur""")

print('''Halo, nama saya Adam, biasa dipanggil Arthur''')

```

## Variable

```

a = 2

a

a, b = 3, 4

a

b

a, b, c = 6, 8, 10

a

b

c

a, b, c

```

## List

```

a = [1, 2, 3, 'empat', 'lima', True]

a[0]

a[2]

a[-2]

a[-1]

a[-6]

a.append(7)

a

len(a)

```

## Slicing

`inclusive` : `exclusive` : `step`

```

a = [0, 1, 2, 3, 4, 5, 6, 7, 8]

a[0:2]

a[0:5]

a[0:-3]

a[:]

a[:5]

a[::]

a[1:8:2]

a[::2]

a[::-2]

```

## Join & Split

```

a = ['cat', 'dog', 'fish']

" ".join(a)

", ".join(a)

a = "Halo nama saya Adam biasa dipanggil Arthur"

a.split()

a = "Halo, nama saya Adam, biasa dipanggil Arthur"

a.split(", ")

```

## Dictionary

```

a = {

'cat': 'kucing',

'dog': 'anjing',

'fish': 'ikan',

}

a

a['cat']

a['bird'] = 'burung'

a['elephant'] = 'gajah'

a

a.keys()

a.values()

nilai = {'naruto': 40, 'kaneki': 80, 'izuku': 85, 'ayanokouji': 100}

nilai

nilai['ayanokouji'] = 90

nilai

```

## Function

```

def jumlah(a, b):

return a + b

jumlah(5,6)

def kali(a, b):

return a * b

kali(5,6)

def pangkat(a, b):

return a ** b

pangkat(5, 6)

```

## Conditionals

```

score = 50

def konversi_indeks(indeks):

if 0 <= indeks < 60:

return "D"

elif 60 <= indeks < 70:

return "C"

elif 70 <= indeks < 80:

return "B"

elif 80 <= indeks < 90:

return "A"

elif 90 <= indeks <= 100:

return "S"

else:

return "Nilai nya ngaco"

konversi_indeks(60)

konversi_indeks(100)

konversi_indeks(1234)

```

## Iterasi

```

numbers = [1, 2, 3, 4, 5, 6, 7]

for n in numbers:

print(n)

for score in [50, 60, 70, 80, 90, 100, 110]:

print("Indeks: ", konversi_indeks(score))

animals = ['Cat', 'Fish', 'Dog', 'Elephant', 'Bird']

for animal in animals:

print(animal)

for animal in animals:

print(animal.upper())

for animal in animals:

print(animal.lower())

for angka in range(2, 10, 2):

print(angka)

animals ={

'Cat': 'Kucing',

'Fish': 'Ikan',

'Elephant': 'Gajah',

'Dog': 'Anjing',

'Bird': 'Burung'

}

for animal in animals.keys():

print(animal)

for animal in animals.values():

print(animal)

for animal in animals.keys():

print(f"Bahasa Indonesia dari {animal} adalah {animals[animal]}")

a = []

for angka in range(8):

a.append(angka ** 2)

a

```

## Python Comprehension

```

b = [angka ** 2 for angka in range(10)]

b

b = {angka: angka ** 2 for angka in range(10)}

b

{1, 1, 2, 2, 3, 3, 4, 4, 5, 5}

```

|

github_jupyter

|

print("Halo, nama saya Adam, biasa dipanggil Arthur")

print('Halo, nama saya Adam, biasa dipanggil Arthur')

print("""Halo, nama saya Adam, biasa dipanggil Arthur""")

print('''Halo, nama saya Adam, biasa dipanggil Arthur''')

a = 2

a

a, b = 3, 4

a

b

a, b, c = 6, 8, 10

a

b

c

a, b, c

a = [1, 2, 3, 'empat', 'lima', True]

a[0]

a[2]

a[-2]

a[-1]

a[-6]

a.append(7)

a

len(a)

a = [0, 1, 2, 3, 4, 5, 6, 7, 8]

a[0:2]

a[0:5]

a[0:-3]

a[:]

a[:5]

a[::]

a[1:8:2]

a[::2]

a[::-2]

a = ['cat', 'dog', 'fish']

" ".join(a)

", ".join(a)

a = "Halo nama saya Adam biasa dipanggil Arthur"

a.split()

a = "Halo, nama saya Adam, biasa dipanggil Arthur"

a.split(", ")

a = {

'cat': 'kucing',

'dog': 'anjing',

'fish': 'ikan',

}

a

a['cat']

a['bird'] = 'burung'

a['elephant'] = 'gajah'

a

a.keys()

a.values()

nilai = {'naruto': 40, 'kaneki': 80, 'izuku': 85, 'ayanokouji': 100}

nilai

nilai['ayanokouji'] = 90

nilai

def jumlah(a, b):

return a + b

jumlah(5,6)

def kali(a, b):

return a * b

kali(5,6)

def pangkat(a, b):

return a ** b

pangkat(5, 6)

score = 50

def konversi_indeks(indeks):

if 0 <= indeks < 60:

return "D"

elif 60 <= indeks < 70:

return "C"

elif 70 <= indeks < 80:

return "B"

elif 80 <= indeks < 90:

return "A"

elif 90 <= indeks <= 100:

return "S"

else:

return "Nilai nya ngaco"

konversi_indeks(60)

konversi_indeks(100)

konversi_indeks(1234)

numbers = [1, 2, 3, 4, 5, 6, 7]

for n in numbers:

print(n)

for score in [50, 60, 70, 80, 90, 100, 110]:

print("Indeks: ", konversi_indeks(score))

animals = ['Cat', 'Fish', 'Dog', 'Elephant', 'Bird']

for animal in animals:

print(animal)

for animal in animals:

print(animal.upper())

for animal in animals:

print(animal.lower())

for angka in range(2, 10, 2):

print(angka)

animals ={

'Cat': 'Kucing',

'Fish': 'Ikan',

'Elephant': 'Gajah',

'Dog': 'Anjing',

'Bird': 'Burung'

}

for animal in animals.keys():

print(animal)

for animal in animals.values():

print(animal)

for animal in animals.keys():

print(f"Bahasa Indonesia dari {animal} adalah {animals[animal]}")

a = []

for angka in range(8):

a.append(angka ** 2)

a

b = [angka ** 2 for angka in range(10)]

b

b = {angka: angka ** 2 for angka in range(10)}

b

{1, 1, 2, 2, 3, 3, 4, 4, 5, 5}

| 0.299617 | 0.930962 |

```

import Brunel

import datetime

import random

import pandas as pd

nodes = pd.read_csv("input/nodes.csv")

edges = pd.read_csv("input/edges.csv")

nodes

edges

```

Adding some random dates so that I can test the temporal controls

```

days = datetime.timedelta(days=1)

weeks = datetime.timedelta(weeks=1)

months = 30*days

years = datetime.timedelta(days=365)

def random_date(start=datetime.date(year=1800, month=1, day=1), end=datetime.date(year=1890, month=12, day=31),

within=None):

if within:

start = within[0]

end = within[1]

start = datetime.datetime.combine(start, datetime.time()).timestamp()

end = datetime.datetime.combine(end, datetime.time()).timestamp()

result = start + random.random() * (end - start)

return datetime.datetime.fromtimestamp(result).date()

def random_duration(start=datetime.date(year=1800, month=1, day=1),

end=datetime.date(year=1890, month=12, day=31),

within=None,

minimum=20*years, maximum=80*years,

breach_maximum=False):

if within:

start = within[0]

end = within[1]

mindur = minimum.total_seconds()

maxdur = maximum.total_seconds()

start = random_date(start=start, end=end)

if not breach_maximum:

lifedur = (end - start).total_seconds()

if maxdur > lifedur:

maxdur = lifedur

if mindur > maxdur:

mindur = 0.5*maxdur

dur = mindur + random.random() * (maxdur-mindur)

return (start, start + datetime.timedelta(seconds=dur))

def random_lifetime(start=datetime.date(year=1800, month=1, day=1),

end=datetime.date(year=1890, month=12, day=31),

maximum_age=80*years, all_adults=True):

if all_adults:

minimum = 18*years

else:

minimum = 1*day

return random_duration(start=start, end=end, minimum=minimum, maximum=maximum_age, breach_maximum=True)

def adult(lifetime):

start = lifetime[0]

end = lifetime[1]

start = start + 18*years

if start > end:

raise ValueError("Not an adult %s => %s" % (start.isoformat(), end.isoformat()))

return (start, end)

lifetime = random_lifetime()

print(lifetime)

print(adult(lifetime))

print(random_duration(within=adult(lifetime), minimum=6*months, maximum=5*years))

print(f"Lived {(lifetime[1]-lifetime[0]).total_seconds() / (3600*24*365)} years")

def get_earliest(start, end, ids):

try:

start = ids[start][0]

except KeyError:

try:

return ids[end][0]

except KeyError:

return None

try:

end = ids[end][0]

except KeyError:

return start

if start < end:

return end

else:

return start

def get_latest(start, end, ids):

try:

start = ids[start][1]

except KeyError:

try:

return ids[end][1]

except KeyError:

return None

try:

end = ids[end][1]

except KeyError:

return start

if start < end:

return start

else:

return end

Brunel.DateRange(start=lifetime[0], end=lifetime[1])

lifetimes = {}

def add_random_dates_to_node(node):

lifetime = random_lifetime()

duration = lifetime

if "alive" in node.state:

node.state["alive"] = Brunel.DateRange(start=lifetime[0], end=lifetime[1])

lifetimes[node.getID()] = lifetime

duration = adult(lifetime)

if "positions" in node.state:

pos = node.state["positions"]

for key in pos.keys():

member = random_duration(within=duration, minimum=6*months, maximum=20*years)

pos[key] = Brunel.DateRange(start=member[0], end=member[1])

node.state["positions"] = pos

if "affiliations" in node.state:

aff = node.state["affiliations"]

for key in aff.keys():

member = random_duration(within=duration, minimum=5*years, maximum=20*years)

aff[key] = Brunel.DateRange(start=member[0], end=member[1])

node.state["affiliations"] = aff

return node

def add_random_dates_to_message(message):

start = get_earliest(message.getSender(), message.getReceiver(), lifetimes)

end = get_latest(message.getSender(), message.getReceiver(), lifetimes)

if not start:

start = datetime.date(year=1850, month=1, day=1)

end = datetime.date(year=1870, month=12, day=31)

if not end:

end = start + 12*months

sent = random_date(start=start, end=end)

message.state["sent"] = Brunel.DateRange(start=sent, end=sent)

return message

social = Brunel.Social.load_from_csv("input/nodes.csv", "input/edges.csv",

modifiers={"person": add_random_dates_to_node,

"business": add_random_dates_to_node,

"message": add_random_dates_to_message})

with open("data.json", "w") as FILE:

FILE.write(Brunel.stringify(social))

```

|

github_jupyter

|

import Brunel

import datetime

import random

import pandas as pd

nodes = pd.read_csv("input/nodes.csv")

edges = pd.read_csv("input/edges.csv")

nodes

edges

days = datetime.timedelta(days=1)

weeks = datetime.timedelta(weeks=1)

months = 30*days

years = datetime.timedelta(days=365)

def random_date(start=datetime.date(year=1800, month=1, day=1), end=datetime.date(year=1890, month=12, day=31),

within=None):

if within:

start = within[0]

end = within[1]

start = datetime.datetime.combine(start, datetime.time()).timestamp()

end = datetime.datetime.combine(end, datetime.time()).timestamp()

result = start + random.random() * (end - start)

return datetime.datetime.fromtimestamp(result).date()

def random_duration(start=datetime.date(year=1800, month=1, day=1),

end=datetime.date(year=1890, month=12, day=31),

within=None,

minimum=20*years, maximum=80*years,

breach_maximum=False):

if within:

start = within[0]

end = within[1]

mindur = minimum.total_seconds()

maxdur = maximum.total_seconds()

start = random_date(start=start, end=end)

if not breach_maximum:

lifedur = (end - start).total_seconds()

if maxdur > lifedur:

maxdur = lifedur

if mindur > maxdur:

mindur = 0.5*maxdur

dur = mindur + random.random() * (maxdur-mindur)

return (start, start + datetime.timedelta(seconds=dur))

def random_lifetime(start=datetime.date(year=1800, month=1, day=1),

end=datetime.date(year=1890, month=12, day=31),

maximum_age=80*years, all_adults=True):

if all_adults:

minimum = 18*years

else:

minimum = 1*day

return random_duration(start=start, end=end, minimum=minimum, maximum=maximum_age, breach_maximum=True)

def adult(lifetime):

start = lifetime[0]

end = lifetime[1]

start = start + 18*years

if start > end:

raise ValueError("Not an adult %s => %s" % (start.isoformat(), end.isoformat()))

return (start, end)

lifetime = random_lifetime()

print(lifetime)

print(adult(lifetime))

print(random_duration(within=adult(lifetime), minimum=6*months, maximum=5*years))

print(f"Lived {(lifetime[1]-lifetime[0]).total_seconds() / (3600*24*365)} years")

def get_earliest(start, end, ids):

try:

start = ids[start][0]

except KeyError:

try:

return ids[end][0]

except KeyError:

return None

try:

end = ids[end][0]

except KeyError:

return start

if start < end:

return end

else:

return start

def get_latest(start, end, ids):

try:

start = ids[start][1]

except KeyError:

try:

return ids[end][1]

except KeyError:

return None

try:

end = ids[end][1]

except KeyError:

return start

if start < end:

return start

else:

return end

Brunel.DateRange(start=lifetime[0], end=lifetime[1])

lifetimes = {}

def add_random_dates_to_node(node):

lifetime = random_lifetime()

duration = lifetime

if "alive" in node.state:

node.state["alive"] = Brunel.DateRange(start=lifetime[0], end=lifetime[1])

lifetimes[node.getID()] = lifetime

duration = adult(lifetime)

if "positions" in node.state:

pos = node.state["positions"]

for key in pos.keys():

member = random_duration(within=duration, minimum=6*months, maximum=20*years)

pos[key] = Brunel.DateRange(start=member[0], end=member[1])

node.state["positions"] = pos

if "affiliations" in node.state:

aff = node.state["affiliations"]

for key in aff.keys():

member = random_duration(within=duration, minimum=5*years, maximum=20*years)

aff[key] = Brunel.DateRange(start=member[0], end=member[1])

node.state["affiliations"] = aff

return node

def add_random_dates_to_message(message):

start = get_earliest(message.getSender(), message.getReceiver(), lifetimes)

end = get_latest(message.getSender(), message.getReceiver(), lifetimes)

if not start:

start = datetime.date(year=1850, month=1, day=1)

end = datetime.date(year=1870, month=12, day=31)

if not end:

end = start + 12*months

sent = random_date(start=start, end=end)

message.state["sent"] = Brunel.DateRange(start=sent, end=sent)

return message

social = Brunel.Social.load_from_csv("input/nodes.csv", "input/edges.csv",

modifiers={"person": add_random_dates_to_node,

"business": add_random_dates_to_node,

"message": add_random_dates_to_message})

with open("data.json", "w") as FILE:

FILE.write(Brunel.stringify(social))

| 0.382487 | 0.42471 |

# Probability concepts using Python

### Dr. Tirthajyoti Sarkar, Fremont, CA 94536

---

This notebook illustrates the concept of probability (frequntist definition) using simple scripts and functions.

## Set theory basics

Set theory is a branch of mathematical logic that studies sets, which informally are collections of objects. Although any type of object can be collected into a set, set theory is applied most often to objects that are relevant to mathematics. The language of set theory can be used in the definitions of nearly all mathematical objects.

**Set theory is commonly employed as a foundational system for modern mathematics**.

Python offers a **native data structure called set**, which can be used as a proxy for a mathematical set for almost all purposes.

```

# Directly with curly braces

Set1 = {1,2}

print (Set1)

print(type(Set1))

my_list=[1,2,3,4]

my_set_from_list = set(my_list)

print(my_set_from_list)

```

### Membership testing with `in` and `not in`

```

my_set = set([1,3,5])

print("Here is my set:",my_set)

print("1 is in the set:",1 in my_set)

print("2 is in the set:",2 in my_set)

print("4 is NOT in the set:",4 not in my_set)

```

### Set relations

* **Subset**

* **Superset**

* **Disjoint**

* **Universal set**

* **Null set**

```

Univ = set([x for x in range(11)])

Super = set([x for x in range(11) if x%2==0])

disj = set([x for x in range(11) if x%2==1])

Sub = set([4,6])

Null = set([x for x in range(11) if x>10])

print("Universal set (all the positive integers up to 10):",Univ)

print("All the even positive integers up to 10:",Super)

print("All the odd positive integers up to 10:",disj)

print("Set of 2 elements, 4 and 6:",Sub)

print("A null set:", Null)

print('Is "Super" a superset of "Sub"?',Super.issuperset(Sub))

print('Is "Super" a subset of "Univ"?',Super.issubset(Univ))

print('Is "Sub" a superset of "Super"?',Sub.issuperset(Super))

print('Is "Super" disjoint with "disj"?',Sub.isdisjoint(disj))

```

### Set algebra/Operations

* **Equality**

* **Intersection**

* **Union**

* **Complement**

* **Difference**

* **Cartesian product**

```

S1 = {1,2}

S2 = {2,2,1,1,2}

print ("S1 and S2 are equal because order or repetition of elements do not matter for sets\nS1==S2:", S1==S2)

S1 = {1,2,3,4,5,6}

S2 = {1,2,3,4,0,6}

print ("S1 and S2 are NOT equal because at least one element is different\nS1==S2:", S1==S2)

```

In mathematics, the intersection A ∩ B of two sets A and B is the set that contains all elements of A that also belong to B (or equivalently, all elements of B that also belong to A), but no other elements. Formally,

$$ {\displaystyle A\cap B=\{x:x\in A{\text{ and }}x\in B\}.} $$

```

# Define a set using list comprehension

S1 = set([x for x in range(1,11) if x%3==0])

print("S1:", S1)

S2 = set([x for x in range(1,7)])

print("S2:", S2)

# Both intersection method or & can be used

S_intersection = S1.intersection(S2)

print("Intersection of S1 and S2:", S_intersection)

S_intersection = S1 & S2

print("Intersection of S1 and S2:", S_intersection)

S3 = set([x for x in range(6,10)])

print("S3:", S3)

S1_S2_S3 = S1.intersection(S2).intersection(S3)

print("Intersection of S1, S2, and S3:", S1_S2_S3)

```

In set theory, the union (denoted by ∪) of a collection of sets is the set of all elements in the collection. It is one of the fundamental operations through which sets can be combined and related to each other. Formally,

$$ {A\cup B=\{x:x\in A{\text{ or }}x\in B\}} $$

```

# Both union method or | can be used

S1 = set([x for x in range(1,11) if x%3==0])

print("S1:", S1)

S2 = set([x for x in range(1,5)])

print("S2:", S2)

S_union = S1.union(S2)

print("Union of S1 and S2:", S_union)

S_union = S1 | S2

print("Union of S1 and S2:", S_union)

```

### Set algebra laws

**Commutative law:**

$$ {\displaystyle A\cap B=B\cap A} $$

$$ {\displaystyle A\cup (B\cup C)=(A\cup B)\cup C} $$

**Associative law:**

$$ {\displaystyle (A\cap B)\cap C=A\cap (B\cap C)} $$

$$ {\displaystyle A\cap (B\cup C)=(A\cap B)\cup (A\cap C)} $$

**Distributive law:**

$$ {\displaystyle A\cap (B\cup C)=(A\cap B)\cup (A\cap C)} $$

$$ {\displaystyle A\cup (B\cap C)=(A\cup B)\cap (A\cup C)} $$

### Complement

If A is a set, then the absolute complement of A (or simply the complement of A) is the set of elements not in A. In other words, if U is the universe that contains all the elements under study, and there is no need to mention it because it is obvious and unique, then the absolute complement of A is the relative complement of A in U. Formally,

$$ {\displaystyle A^{\complement }=\{x\in U\mid x\notin A\}.} $$

You can take the union of two sets and if that is equal to the universal set (in the context of your problem), then you have found the right complement

```

S=set([x for x in range (21) if x%2==0])

print ("S is the set of even numbers between 0 and 20:", S)

S_complement = set([x for x in range (21) if x%2!=0])

print ("S_complement is the set of odd numbers between 0 and 20:", S_complement)

print ("Is the union of S and S_complement equal to all numbers between 0 and 20?",

S.union(S_complement)==set([x for x in range (21)]))

```

**De Morgan's laws**

$$ {\displaystyle \left(A\cup B\right)^{\complement }=A^{\complement }\cap B^{\complement }.} $$

$$ {\displaystyle \left(A\cap B\right)^{\complement }=A^{\complement }\cup B^{\complement }.} $$

**Complement laws**

$$ {\displaystyle A\cup A^{\complement }=U.} $$

$$ {\displaystyle A\cap A^{\complement }=\varnothing .} $$

$$ {\displaystyle \varnothing ^{\complement }=U.} $$

$$ {\displaystyle U^{\complement }=\varnothing .} $$

$$ {\displaystyle {\text{If }}A\subset B{\text{, then }}B^{\complement }\subset A^{\complement }.} $$

### Difference between sets

If A and B are sets, then the relative complement of A in B, also termed the set-theoretic difference of B and A, is the **set of elements in B but not in A**.

$$ {\displaystyle B\setminus A=\{x\in B\mid x\notin A\}.} $$

```

S1 = set([x for x in range(31) if x%3==0])

print ("Set S1:", S1)

S2 = set([x for x in range(31) if x%5==0])

print ("Set S2:", S2)

S_difference = S2-S1

print("Difference of S1 and S2 i.e. S2\S1:", S_difference)

S_difference = S1.difference(S2)

print("Difference of S2 and S1 i.e. S1\S2:", S_difference)

```

**Following identities can be obtained with algebraic manipulation: **

$$ {\displaystyle C\setminus (A\cap B)=(C\setminus A)\cup (C\setminus B)} $$

$$ {\displaystyle C\setminus (A\cup B)=(C\setminus A)\cap (C\setminus B)} $$

$$ {\displaystyle C\setminus (B\setminus A)=(C\cap A)\cup (C\setminus B)} $$

$$ {\displaystyle C\setminus (C\setminus A)=(C\cap A)} $$

$$ {\displaystyle (B\setminus A)\cap C=(B\cap C)\setminus A=B\cap (C\setminus A)} $$

$$ {\displaystyle (B\setminus A)\cup C=(B\cup C)\setminus (A\setminus C)} $$

$$ {\displaystyle A\setminus A=\emptyset} $$

$$ {\displaystyle \emptyset \setminus A=\emptyset } $$

$$ {\displaystyle A\setminus \emptyset =A} $$

$$ {\displaystyle A\setminus U=\emptyset } $$

### Symmetric difference

In set theory, the ***symmetric difference***, also known as the ***disjunctive union***, of two sets is the set of elements which are in either of the sets and not in their intersection.

$$ {\displaystyle A\,\triangle \,B=\{x:(x\in A)\oplus (x\in B)\}}$$

$$ {\displaystyle A\,\triangle \,B=(A\smallsetminus B)\cup (B\smallsetminus A)} $$

$${\displaystyle A\,\triangle \,B=(A\cup B)\smallsetminus (A\cap B)} $$

**Some properties,**

$$ {\displaystyle A\,\triangle \,B=B\,\triangle \,A,} $$

$$ {\displaystyle (A\,\triangle \,B)\,\triangle \,C=A\,\triangle \,(B\,\triangle \,C).} $$

**The empty set is neutral, and every set is its own inverse:**

$$ {\displaystyle A\,\triangle \,\varnothing =A,} $$

$$ {\displaystyle A\,\triangle \,A=\varnothing .} $$

```

print("S1",S1)

print("S2",S2)

print("Symmetric difference", S1^S2)

print("Symmetric difference", S2.symmetric_difference(S1))

```

### Cartesian product

In set theory (and, usually, in other parts of mathematics), a Cartesian product is a mathematical operation that returns a set (or product set or simply product) from multiple sets. That is, for sets A and B, the Cartesian product A × B is the set of all ordered pairs (a, b) where a ∈ A and b ∈ B.

$$ {\displaystyle A\times B=\{\,(a,b)\mid a\in A\ {\mbox{ and }}\ b\in B\,\}.} $$

More generally, a Cartesian product of n sets, also known as an n-fold Cartesian product, can be represented by an array of n dimensions, where each element is an *n-tuple*. An ordered pair is a *2-tuple* or couple. The Cartesian product is named after [René Descartes](https://en.wikipedia.org/wiki/Ren%C3%A9_Descartes) whose formulation of analytic geometry gave rise to the concept.

```

A = set(['a','b','c'])

S = {1,2,3}

def cartesian_product(S1,S2):

result = set()

for i in S1:

for j in S2:

result.add(tuple([i,j]))

return (result)

C = cartesian_product(A,S)

print("Cartesian product of A and S\n{} X {}:{}".format(A,S,C))

print("Length of the Cartesian product set:",len(C))

```

Note that because these are ordered pairs, **same element can be repeated inside the pair** i.e. even if two sets contain some identical elements, they can be paired up in the Cartesian product.

Instead of writing functions ourselves, we could use the **`itertools`** library of Python. Remember to **turn the resulting product object** into a list for viewing and subsequent processing.

```

from itertools import product as prod

A = set([x for x in range(1,7)])

B = set([x for x in range(1,7)])

p=list(prod(A,B))

print("A is set of all possible throws of a dice:",A)

print("B is set of all possible throws of a dice:",B)

print ("\nProduct of A and B is the all possible combinations of A and B thrown together:\n",p)

```

### Cartesian Power

The Cartesian square (or binary Cartesian product) of a set X is the Cartesian product $X^2 = X × X$. An example is the 2-dimensional plane $R^2 = R × R$ where _R_ is the set of real numbers: $R^2$ is the set of all points (_x_,_y_) where _x_ and _y_ are real numbers (see the [Cartesian coordinate system](https://en.wikipedia.org/wiki/Cartesian_coordinate_system)).

The cartesian power of a set X can be defined as:

${\displaystyle X^{n}=\underbrace {X\times X\times \cdots \times X} _{n}=\{(x_{1},\ldots ,x_{n})\ |\ x_{i}\in X{\text{ for all }}i=1,\ldots ,n\}.} $

The [cardinality of a set](https://en.wikipedia.org/wiki/Cardinality) is the number of elements of the set. Cardinality of a Cartesian power set is $|S|^{n}$ where |S| is the cardinality of the set _S_ and _n_ is the power.

__We can easily use itertools again for calculating Cartesian power__. The `repeat` parameter is used as power.

```

A = {'Head','Tail'} # 2 element set

p2=list(prod(A,repeat=2)) # Power set of power 2

print("Cartesian power 2 with length {}: {}".format(len(p2),p2))

print()

p3=list(prod(A,repeat=3)) # Power set of power 3

print("Cartesian power 3 with length {}: {}".format(len(p3),p3))

```

---

## Permutations

In mathematics, the notion of permutation relates to the **act of arranging all the members of a set into some sequence or order**, or if the set is already ordered, rearranging (reordering) its elements, a process called __permuting__. The study of permutations of finite sets is a topic in the field of [combinatorics](https://en.wikipedia.org/wiki/Combinatorics).

We find the number of $k$-permutations of $A$, first by determining the set of permutations and then by calculating $\frac{|A|!}{(|A|-k)!}$. We first consider the special case of $k=|A|$, which is equivalent to finding the number of ways of ordering the elements of $A$.

```

import itertools

A = {'Red','Green','Blue'}

# Find all permutations of A

permute_all = set(itertools.permutations(A))

print("Permutations of {}".format(A))

print("-"*50)

for i in permute_all:

print(i)

print("-"*50)

print;print ("Number of permutations: ", len(permute_all))

import matplotlib.patches as mpatches

import matplotlib.pyplot as plt

from math import factorial

print("Factorial of 3:", factorial(3))

```

### Selecting _k_ items out of a set containing _n_ items and permuting

```

A = {'Red','Green','Blue','Violet'}

k=2

n = len(A)

permute_k = list(itertools.permutations(A, k))

print("{}-permutations of {}: ".format(k,A))

print("-"*50)

for i in permute_k:

print(i)

print("-"*50)

print ("Size of the permutation set = {}!/({}-{})! = {}".format(n,n,k, len(permute_k)))

factorial(4)/(factorial(4-2))

```

## Combinations

Combinatorics is an area of mathematics primarily concerned with counting, both as a means and an end in obtaining results, and certain properties of finite structures. It is closely related to many other areas of mathematics and has many applications ranging from logic to statistical physics, from evolutionary biology to computer science, etc.

Combinatorics is well known for the breadth of the problems it tackles. Combinatorial problems arise in many areas of pure mathematics, notably in algebra, [probability theory](https://en.wikipedia.org/wiki/Probability_theory), [topology](https://en.wikipedia.org/wiki/Topology), and geometry, as well as in its many application areas. Many combinatorial questions have historically been considered in isolation, giving an _ad hoc_ solution to a problem arising in some mathematical context. In the later twentieth century, however, powerful and general theoretical methods were developed, making combinatorics into an independent branch of mathematics in its own right. One of the oldest and most accessible parts of combinatorics is [graph theory](https://en.wikipedia.org/wiki/Graph_theory), which by itself has numerous natural connections to other areas. Combinatorics is used frequently in computer science to obtain formulas and estimates in the [analysis of algorithms](https://en.wikipedia.org/wiki/Analysis_of_algorithms).

We find the number of $k$-combinations of $A$, first by determining the set of combinations and then by simply calculating:

$$\frac{|A|!}{k!\times(|A|-k)!}$$

**In combination, order matters, unlike permutations.**

```

# Print all the k-combinations of A

choose_k = list(itertools.combinations(A,k))

print("%i-combinations of %s: " %(k,A))

for i in choose_k:

print(i)

print;print("Number of combinations = %i!/(%i!(%i-%i)!) = %i" %(n,k,n,k,len(choose_k) ))

```

## Putting it all together - some probability calculation examples

### Problem 1: Two dice

Two dice are rolled together. What is the probability of getting a total which is a multiple of 3?

```

n_dice = 2

dice_faces = {1,2,3,4,5,6}

# Construct the event space i.e. set of ALL POSSIBLE events

event_space = set(prod(dice_faces,repeat=n_dice))

for outcome in event_space:

print(outcome,end=', ')

# What is the set we are interested in?

favorable_outcome = []

for outcome in event_space:

x,y = outcome

if (x+y)%3==0:

favorable_outcome.append(outcome)

favorable_outcome = set(favorable_outcome)

for f_outcome in favorable_outcome:

print(f_outcome,end=', ')

prob = len(favorable_outcome)/len(event_space)

print("The probability of getting a sum which is a multiple of 3 is: ", prob)

```

### Problem 2: Five dice!

Five dice are rolled together. What is the probability of getting a total which is a multiple of 5 but not a multiple of 3?

```

n_dice = 5

dice_faces = {1,2,3,4,5,6}

# Construct the event space i.e. set of ALL POSSIBLE events

event_space = set(prod(dice_faces,repeat=n_dice))

6**5

# What is the set we are interested in?

favorable_outcome = []

for outcome in event_space:

d1,d2,d3,d4,d5 = outcome

if (d1+d2+d3+d4+d5)%5==0 and (d1+d2+d3+d4+d5)%3!=0 :

favorable_outcome.append(outcome)

favorable_outcome = set(favorable_outcome)

prob = len(favorable_outcome)/len(event_space)

print("The probability of getting a sum, which is a multiple of 5 but not a multiple of 3, is: ", prob)

```

### Problem 2 solved using set difference

```

multiple_of_5 = []

multiple_of_3 = []

for outcome in event_space:

d1,d2,d3,d4,d5 = outcome

if (d1+d2+d3+d4+d5)%5==0:

multiple_of_5.append(outcome)

if (d1+d2+d3+d4+d5)%3==0:

multiple_of_3.append(outcome)

favorable_outcome = set(multiple_of_5).difference(set(multiple_of_3))

for i in list(favorable_outcome)[:5]:

a1,a2,a3,a4,a5=i

print("{}, SUM: {}".format(i,a1+a2+a3+a4+a5))

prob = len(favorable_outcome)/len(event_space)

print("The probability of getting a sum, which is a multiple of 5 but not a multiple of 3, is: ", prob)

```

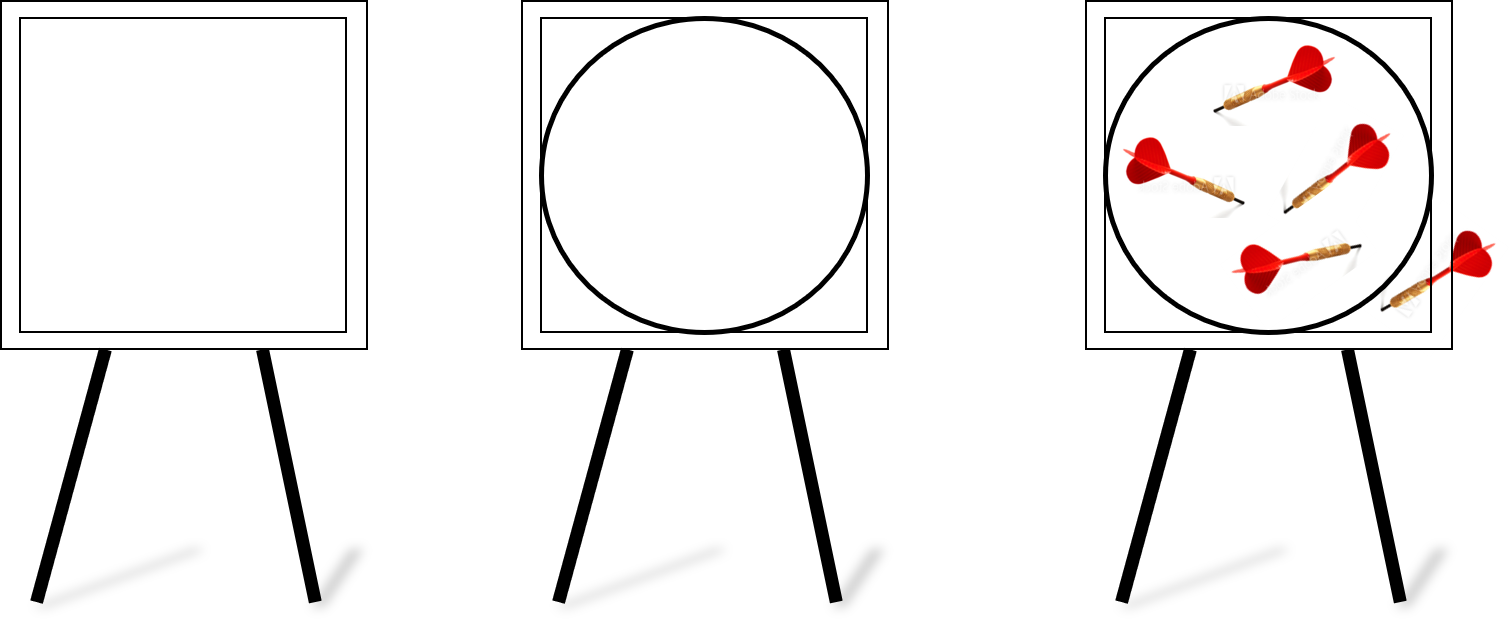

## Computing _pi_ ($\pi$) with a random dart throwing game and using probability concept

The number $\pi$ is a mathematical constant. Originally defined as the ratio of a circle's circumference to its diameter, it now has various equivalent definitions and appears in many formulas in all areas of mathematics and physics. It is approximately equal to 3.14159. It has been represented by the Greek letter __"$\pi$"__ since the mid-18th century, though it is also sometimes spelled out as "pi". It is also called Archimedes' constant.

Being an irrational number, $\pi$ cannot be expressed as a common fraction (equivalently, its decimal representation never ends and never settles into a permanently repeating pattern).

### What is the logic behind computing $\pi$ by throwing dart randomly?

Imagine a square dartboard.

Then, the dartboard with a circle drawn inside it touching all its sides.

And then, you throw darts at it. Randomly. That means some fall inside the circle, some outside. But assume that no dart falls outside the board.

At the end of your dart throwing session,

- You count the fraction of darts that fell inside the circle of the total number of darts thrown.

- Multiply that number by 4.

- The resulting number should be pi. Or, a close approximation if you had thrown a lot of darts.

The idea is extremely simple. If you throw a large number of darts, then the **probability of a dart falling inside the circle is just the ratio of the area of the circle to that of the area of the square board**. With the help of basic mathematics, you can show that this ratio turns out to be $\frac{\pi}{4}$. So, to get $\pi$, you just multiply that number by 4.

The key here is to simulate the throwing of a lot of darts so as to make the fraction equal to the probability, an assertion valid only in the limit of a large number of trials of this random event. This comes from the [law of large number](https://en.wikipedia.org/wiki/Law_of_large_numbers) or the [frequentist definition of probability](https://en.wikipedia.org/wiki/Frequentist_probability).

See also the concept of [Buffon's Needle](https://en.wikipedia.org/wiki/Buffon%27s_needle_problem)

```

from math import pi,sqrt

import random

import matplotlib.pyplot as plt

import numpy as np

```

### Center point and the side of the square

```

# Center point

x,y = 0,0

# Side of the square

a = 2

```

### Function to simulate a random throw of a dart aiming at the square

```

def throw_dart():

"""

Simulates the randon throw of a dirt. It can land anywhere in the square (uniformly randomly)

"""

# Random final landing position of the dirt between -a/2 and +a/2 around the center point

position_x = x+a/2*(-1+2*random.random())

position_y = y+a/2*(-1+2*random.random())

return (position_x,position_y)

throw_dart()

```

### Function to determine if the dart landed inside the circle

```

def is_within_circle(x,y):

"""

Given the landing coordinate of a dirt, determines if it fell inside the circle

"""

# Side of the square

a = 2

distance_from_center = sqrt(x**2+y**2)

if distance_from_center < a/2:

return True

else:

return False

is_within_circle(1.9,1.9)

is_within_circle(0.2,-0.6)

```

### Now, throw a few darts

```

n_throws = 10

count_inside_circle=0

for i in range(n_throws):

r1,r2=throw_dart()

if is_within_circle(r1,r2):

count_inside_circle+=1

```

### Compute the ratio of `count_inside_circle` and `n_throws`

```

ratio = count_inside_circle/n_throws

```

### Is it approximately equal to $\frac{\pi}{4}$?

```

print(4*ratio)

```

### Not exactly. Let's try with a lot more darts!

```

n_throws = 10000

count_inside_circle=0

for i in range(n_throws):

r1,r2=throw_dart()

if is_within_circle(r1,r2):

count_inside_circle+=1

ratio = count_inside_circle/n_throws

print(4*ratio)

```

### Let's functionalize this process and run a number of times

```

def compute_pi_throwing_dart(n_throws):

"""

Computes pi by throwing a bunch of darts at the square

"""

n_throws = n_throws

count_inside_circle=0

for i in range(n_throws):

r1,r2=throw_dart()

if is_within_circle(r1,r2):

count_inside_circle+=1

result = 4*(count_inside_circle/n_throws)

return result

```

### Now let us run this experiment a few times and see what happens.

```

n_exp=[]

pi_exp=[]

n = [int(10**(0.5*i)) for i in range(1,15)]

for i in n:

p = compute_pi_throwing_dart(i)

pi_exp.append(p)

n_exp.append(i)

print("Computed value of pi by throwing {} darts is: {}".format(i,p))

plt.figure(figsize=(8,5))

plt.title("Computing pi with \nincreasing number of random throws",fontsize=20)

plt.semilogx(n_exp, pi_exp,c='k',marker='o',lw=3)

plt.xticks(fontsize=14)

plt.yticks(fontsize=14)

plt.xlabel("Number of random throws",fontsize=15)

plt.ylabel("Computed value of pi",fontsize=15)

plt.hlines(y=3.14159,xmin=1,xmax=1e7,linestyle='--')

plt.text(x=10,y=3.05,s="Value of pi",fontsize=17)

plt.grid(True)

plt.show()

```

|

github_jupyter

|

# Directly with curly braces

Set1 = {1,2}

print (Set1)

print(type(Set1))

my_list=[1,2,3,4]

my_set_from_list = set(my_list)

print(my_set_from_list)

my_set = set([1,3,5])

print("Here is my set:",my_set)

print("1 is in the set:",1 in my_set)

print("2 is in the set:",2 in my_set)

print("4 is NOT in the set:",4 not in my_set)

Univ = set([x for x in range(11)])

Super = set([x for x in range(11) if x%2==0])

disj = set([x for x in range(11) if x%2==1])

Sub = set([4,6])

Null = set([x for x in range(11) if x>10])

print("Universal set (all the positive integers up to 10):",Univ)

print("All the even positive integers up to 10:",Super)

print("All the odd positive integers up to 10:",disj)

print("Set of 2 elements, 4 and 6:",Sub)

print("A null set:", Null)

print('Is "Super" a superset of "Sub"?',Super.issuperset(Sub))

print('Is "Super" a subset of "Univ"?',Super.issubset(Univ))

print('Is "Sub" a superset of "Super"?',Sub.issuperset(Super))

print('Is "Super" disjoint with "disj"?',Sub.isdisjoint(disj))

S1 = {1,2}

S2 = {2,2,1,1,2}

print ("S1 and S2 are equal because order or repetition of elements do not matter for sets\nS1==S2:", S1==S2)

S1 = {1,2,3,4,5,6}

S2 = {1,2,3,4,0,6}

print ("S1 and S2 are NOT equal because at least one element is different\nS1==S2:", S1==S2)

# Define a set using list comprehension

S1 = set([x for x in range(1,11) if x%3==0])

print("S1:", S1)

S2 = set([x for x in range(1,7)])

print("S2:", S2)

# Both intersection method or & can be used

S_intersection = S1.intersection(S2)

print("Intersection of S1 and S2:", S_intersection)

S_intersection = S1 & S2

print("Intersection of S1 and S2:", S_intersection)

S3 = set([x for x in range(6,10)])

print("S3:", S3)

S1_S2_S3 = S1.intersection(S2).intersection(S3)

print("Intersection of S1, S2, and S3:", S1_S2_S3)

# Both union method or | can be used

S1 = set([x for x in range(1,11) if x%3==0])

print("S1:", S1)

S2 = set([x for x in range(1,5)])

print("S2:", S2)

S_union = S1.union(S2)

print("Union of S1 and S2:", S_union)

S_union = S1 | S2

print("Union of S1 and S2:", S_union)

S=set([x for x in range (21) if x%2==0])

print ("S is the set of even numbers between 0 and 20:", S)

S_complement = set([x for x in range (21) if x%2!=0])

print ("S_complement is the set of odd numbers between 0 and 20:", S_complement)

print ("Is the union of S and S_complement equal to all numbers between 0 and 20?",

S.union(S_complement)==set([x for x in range (21)]))

S1 = set([x for x in range(31) if x%3==0])

print ("Set S1:", S1)

S2 = set([x for x in range(31) if x%5==0])

print ("Set S2:", S2)

S_difference = S2-S1

print("Difference of S1 and S2 i.e. S2\S1:", S_difference)

S_difference = S1.difference(S2)

print("Difference of S2 and S1 i.e. S1\S2:", S_difference)

print("S1",S1)

print("S2",S2)

print("Symmetric difference", S1^S2)

print("Symmetric difference", S2.symmetric_difference(S1))

A = set(['a','b','c'])

S = {1,2,3}

def cartesian_product(S1,S2):

result = set()

for i in S1:

for j in S2:

result.add(tuple([i,j]))

return (result)

C = cartesian_product(A,S)

print("Cartesian product of A and S\n{} X {}:{}".format(A,S,C))

print("Length of the Cartesian product set:",len(C))

from itertools import product as prod

A = set([x for x in range(1,7)])

B = set([x for x in range(1,7)])

p=list(prod(A,B))

print("A is set of all possible throws of a dice:",A)

print("B is set of all possible throws of a dice:",B)

print ("\nProduct of A and B is the all possible combinations of A and B thrown together:\n",p)

A = {'Head','Tail'} # 2 element set

p2=list(prod(A,repeat=2)) # Power set of power 2

print("Cartesian power 2 with length {}: {}".format(len(p2),p2))

print()

p3=list(prod(A,repeat=3)) # Power set of power 3

print("Cartesian power 3 with length {}: {}".format(len(p3),p3))

import itertools

A = {'Red','Green','Blue'}

# Find all permutations of A

permute_all = set(itertools.permutations(A))

print("Permutations of {}".format(A))

print("-"*50)

for i in permute_all:

print(i)

print("-"*50)

print;print ("Number of permutations: ", len(permute_all))

import matplotlib.patches as mpatches

import matplotlib.pyplot as plt

from math import factorial

print("Factorial of 3:", factorial(3))

A = {'Red','Green','Blue','Violet'}

k=2

n = len(A)

permute_k = list(itertools.permutations(A, k))

print("{}-permutations of {}: ".format(k,A))

print("-"*50)

for i in permute_k:

print(i)

print("-"*50)

print ("Size of the permutation set = {}!/({}-{})! = {}".format(n,n,k, len(permute_k)))

factorial(4)/(factorial(4-2))

# Print all the k-combinations of A

choose_k = list(itertools.combinations(A,k))

print("%i-combinations of %s: " %(k,A))

for i in choose_k:

print(i)

print;print("Number of combinations = %i!/(%i!(%i-%i)!) = %i" %(n,k,n,k,len(choose_k) ))

n_dice = 2

dice_faces = {1,2,3,4,5,6}

# Construct the event space i.e. set of ALL POSSIBLE events

event_space = set(prod(dice_faces,repeat=n_dice))

for outcome in event_space:

print(outcome,end=', ')

# What is the set we are interested in?

favorable_outcome = []

for outcome in event_space:

x,y = outcome

if (x+y)%3==0:

favorable_outcome.append(outcome)

favorable_outcome = set(favorable_outcome)

for f_outcome in favorable_outcome:

print(f_outcome,end=', ')

prob = len(favorable_outcome)/len(event_space)

print("The probability of getting a sum which is a multiple of 3 is: ", prob)

n_dice = 5

dice_faces = {1,2,3,4,5,6}

# Construct the event space i.e. set of ALL POSSIBLE events

event_space = set(prod(dice_faces,repeat=n_dice))

6**5

# What is the set we are interested in?

favorable_outcome = []

for outcome in event_space:

d1,d2,d3,d4,d5 = outcome

if (d1+d2+d3+d4+d5)%5==0 and (d1+d2+d3+d4+d5)%3!=0 :

favorable_outcome.append(outcome)

favorable_outcome = set(favorable_outcome)

prob = len(favorable_outcome)/len(event_space)

print("The probability of getting a sum, which is a multiple of 5 but not a multiple of 3, is: ", prob)

multiple_of_5 = []

multiple_of_3 = []

for outcome in event_space:

d1,d2,d3,d4,d5 = outcome

if (d1+d2+d3+d4+d5)%5==0:

multiple_of_5.append(outcome)

if (d1+d2+d3+d4+d5)%3==0:

multiple_of_3.append(outcome)

favorable_outcome = set(multiple_of_5).difference(set(multiple_of_3))

for i in list(favorable_outcome)[:5]:

a1,a2,a3,a4,a5=i

print("{}, SUM: {}".format(i,a1+a2+a3+a4+a5))

prob = len(favorable_outcome)/len(event_space)

print("The probability of getting a sum, which is a multiple of 5 but not a multiple of 3, is: ", prob)

from math import pi,sqrt

import random

import matplotlib.pyplot as plt

import numpy as np

# Center point

x,y = 0,0

# Side of the square

a = 2

def throw_dart():

"""

Simulates the randon throw of a dirt. It can land anywhere in the square (uniformly randomly)

"""

# Random final landing position of the dirt between -a/2 and +a/2 around the center point

position_x = x+a/2*(-1+2*random.random())

position_y = y+a/2*(-1+2*random.random())

return (position_x,position_y)

throw_dart()

def is_within_circle(x,y):

"""

Given the landing coordinate of a dirt, determines if it fell inside the circle

"""

# Side of the square

a = 2

distance_from_center = sqrt(x**2+y**2)

if distance_from_center < a/2:

return True

else:

return False

is_within_circle(1.9,1.9)

is_within_circle(0.2,-0.6)

n_throws = 10

count_inside_circle=0

for i in range(n_throws):

r1,r2=throw_dart()

if is_within_circle(r1,r2):

count_inside_circle+=1

ratio = count_inside_circle/n_throws

print(4*ratio)

n_throws = 10000

count_inside_circle=0

for i in range(n_throws):

r1,r2=throw_dart()

if is_within_circle(r1,r2):

count_inside_circle+=1

ratio = count_inside_circle/n_throws

print(4*ratio)

def compute_pi_throwing_dart(n_throws):

"""

Computes pi by throwing a bunch of darts at the square

"""

n_throws = n_throws

count_inside_circle=0

for i in range(n_throws):

r1,r2=throw_dart()

if is_within_circle(r1,r2):

count_inside_circle+=1

result = 4*(count_inside_circle/n_throws)

return result

n_exp=[]

pi_exp=[]

n = [int(10**(0.5*i)) for i in range(1,15)]

for i in n:

p = compute_pi_throwing_dart(i)

pi_exp.append(p)

n_exp.append(i)

print("Computed value of pi by throwing {} darts is: {}".format(i,p))

plt.figure(figsize=(8,5))

plt.title("Computing pi with \nincreasing number of random throws",fontsize=20)

plt.semilogx(n_exp, pi_exp,c='k',marker='o',lw=3)

plt.xticks(fontsize=14)

plt.yticks(fontsize=14)

plt.xlabel("Number of random throws",fontsize=15)

plt.ylabel("Computed value of pi",fontsize=15)

plt.hlines(y=3.14159,xmin=1,xmax=1e7,linestyle='--')

plt.text(x=10,y=3.05,s="Value of pi",fontsize=17)

plt.grid(True)

plt.show()

| 0.298798 | 0.960621 |

# Amazon SageMaker Object Detection using the augmented manifest file format

1. [Introduction](#Introduction)

2. [Setup](#Setup)

3. [Specifying input Dataset](#Specifying-input-Dataset)

4. [Training](#Training)

## Introduction

Object detection is the process of identifying and localizing objects in an image. A typical object detection solution takes in an image as input and provides a bounding box on the image where an object of interest is, along with identifying what object the box encapsulates. But before we have this solution, we need to process a training dataset, create and setup a training job for the algorithm so that the aglorithm can learn about the dataset and then host the algorithm as an endpoint, to which we can supply the query image.

This notebook focuses on using the built-in SageMaker Single Shot multibox Detector ([SSD](https://arxiv.org/abs/1512.02325)) object detection algorithm to train model on your custom dataset. For dataset prepration or using the model for inference, please see other scripts in [this folder](./)

## Setup

To train the Object Detection algorithm on Amazon SageMaker, we need to setup and authenticate the use of AWS services. To begin with we need an AWS account role with SageMaker access. This role is used to give SageMaker access to your data in S3. In this example, we will use the same role that was used to start this SageMaker notebook.

```

%%time

import sagemaker

import boto3

from sagemaker import get_execution_role

role = get_execution_role()

print(role)

```

We also need the S3 bucket that has the training manifests and will be used to store the tranied model artifacts.

```

bucket = '<please replace with your s3 bucket name>'

prefix = 'demo'

```

## Specifying input Dataset

This notebook assumes you already have prepared two [Augmented Manifest Files](https://docs.aws.amazon.com/sagemaker/latest/dg/augmented-manifest.html) as training and validation input data for the object detection model.

There are many advantages to using **augmented manifest files** for your training input

* No format conversion is required if you are using SageMaker Ground Truth to generate the data labels

* Unlike the traditional approach of providing paths to the input images separately from its labels, augmented manifest file already combines both into one entry for each input image, reducing complexity in algorithm code for matching each image with labels. (Read this [blog post](https://aws.amazon.com/blogs/machine-learning/easily-train-models-using-datasets-labeled-by-amazon-sagemaker-ground-truth/) for more explanation.)

* When splitting your dataset for train/validation/test, you don't need to rearrange and re-upload image files to different s3 prefixes for train vs validation. Once you upload your image files to S3, you never need to move it again. You can just place pointers to these images in your augmented manifest file for training and validation. More on the train/validation data split in this post later.

* When using augmented manifest file, the training input images is loaded on to the training instance in *Pipe mode,* which means the input data is streamed directly to the training algorithm while it is running (vs. File mode, where all input files need to be downloaded to disk before the training starts). This results in faster training performance and less disk resource utilization. Read more in this [blog post](https://aws.amazon.com/blogs/machine-learning/accelerate-model-training-using-faster-pipe-mode-on-amazon-sagemaker/) on the benefits of pipe mode.

```

train_data_prefix = "demo"

# below uses the training data after augmentation

s3_train_data= "s3://{}/{}/all_augmented.json".format(bucket, train_data_prefix)

# uncomment below to use the non-augmented input

# s3_train_data= "s3://{}/training-manifest/{}/train.manifest".format(bucket, train_data_prefix)

s3_validation_data = "s3://{}/training-manifest/{}/validation.manifest".format(bucket, train_data_prefix)

print("Train data: {}".format(s3_train_data) )

print("Validation data: {}".format(s3_validation_data) )

train_input = {

"ChannelName": "train",

"DataSource": {

"S3DataSource": {

"S3DataType": "AugmentedManifestFile",

"S3Uri": s3_train_data,

"S3DataDistributionType": "FullyReplicated",

# This must correspond to the JSON field names in your augmented manifest.

"AttributeNames": ['source-ref', 'bb']

}

},

"ContentType": "application/x-recordio",

"RecordWrapperType": "RecordIO",

"CompressionType": "None"

}

validation_input = {

"ChannelName": "validation",

"DataSource": {

"S3DataSource": {

"S3DataType": "AugmentedManifestFile",

"S3Uri": s3_validation_data,

"S3DataDistributionType": "FullyReplicated",

# This must correspond to the JSON field names in your augmented manifest.

"AttributeNames": ['source-ref', 'bb']

}

},

"ContentType": "application/x-recordio",

"RecordWrapperType": "RecordIO",

"CompressionType": "None"

}

```

Below code computes the number of training samples, required in the training job request.

```

import json

import os

def read_manifest_file(file_path):

with open(file_path, 'r') as f:

output = [json.loads(line.strip()) for line in f.readlines()]

return output

!aws s3 cp $s3_train_data .

train_data = read_manifest_file(os.path.split(s3_train_data)[1])

num_training_samples = len(train_data)

num_training_samples

s3_output_path = 's3://{}/{}/output'.format(bucket, prefix)

s3_output_path

```

## Training

Now that we are done with all the setup that is needed, we are ready to train our object detector.

```

from sagemaker.amazon.amazon_estimator import get_image_uri

# This retrieves a docker container with the built in object detection SSD model.

training_image = sagemaker.amazon.amazon_estimator.get_image_uri(boto3.Session().region_name, 'object-detection', repo_version='latest')

print (training_image)

```

Create a unique job name

```

import time

job_name_prefix = 'od-demo'

timestamp = time.strftime('-%Y-%m-%d-%H-%M-%S', time.gmtime())

model_job_name = job_name_prefix + timestamp

model_job_name

```

The object detection algorithm at its core is the [Single-Shot Multi-Box detection algorithm (SSD)](https://arxiv.org/abs/1512.02325). This algorithm uses a `base_network`, which is typically a [VGG](https://arxiv.org/abs/1409.1556) or a [ResNet](https://arxiv.org/abs/1512.03385). (resnet is typically faster so for edge inferences, I'd recommend using this base network). The Amazon SageMaker object detection algorithm supports VGG-16 and ResNet-50 now. It also has a lot of options for hyperparameters that help configure the training job. The next step in our training, is to setup these hyperparameters and data channels for training the model. See the SageMaker Object Detection [documentation](https://docs.aws.amazon.com/sagemaker/latest/dg/object-detection.html) for more details on the hyperparameters.

To figure out which works best for your data, run a hyperparameter tuning job. There's some example notebooks at [https://github.com/awslabs/amazon-sagemaker-examples](https://github.com/awslabs/amazon-sagemaker-examples) that you can use for reference.

```

# This is where transfer learning happens. We use the pre-trained model and nuke the output layer by specifying

# the num_classes value. You can also run a hyperparameter tuning job to figure out which values work the best.

hyperparams = {

"base_network": 'resnet-50',

"use_pretrained_model": "1",

"num_classes": "2",

"mini_batch_size": "30",

"epochs": "30",

"learning_rate": "0.001",

"lr_scheduler_step": "10,20",

"lr_scheduler_factor": "0.25",

"optimizer": "sgd",

"momentum": "0.9",

"weight_decay": "0.0005",

"overlap_threshold": "0.5",

"nms_threshold": "0.45",

"image_shape": "512",

"label_width": "150",

"num_training_samples": str(num_training_samples)

}

```

Now that the hyperparameters are set up, we configure the rest of the training job parameters

```

training_params = \

{

"AlgorithmSpecification": {

"TrainingImage": training_image,

"TrainingInputMode": "Pipe"

},

"RoleArn": role,

"OutputDataConfig": {

"S3OutputPath": s3_output_path

},

"ResourceConfig": {

"InstanceCount": 1,

"InstanceType": "ml.p3.8xlarge",

"VolumeSizeInGB": 200

},

"TrainingJobName": model_job_name,

"HyperParameters": hyperparams,

"StoppingCondition": {

"MaxRuntimeInSeconds": 86400

},

"InputDataConfig": [

train_input,

validation_input

]

}

```

Now we create the SageMaker training job.

```

client = boto3.client(service_name='sagemaker')

client.create_training_job(**training_params)

# Confirm that the training job has started

status = client.describe_training_job(TrainingJobName=model_job_name)['TrainingJobStatus']

print('Training job current status: {}'.format(status))

```

To check the progess of the training job, you can repeatedly evaluate the following cell. When the training job status reads 'Completed', move on to the next part of the tutorial.

```

client = boto3.client(service_name='sagemaker')

print("Training job status: ", client.describe_training_job(TrainingJobName=model_job_name)['TrainingJobStatus'])

print("Secondary status: ", client.describe_training_job(TrainingJobName=model_job_name)['SecondaryStatus'])

```

# Next step

Once the training job completes, move on to the [next notebook](./03_local_inference_post_training.ipynb) to convert the trained model to a deployable format and run local inference

|

github_jupyter

|

%%time

import sagemaker

import boto3

from sagemaker import get_execution_role

role = get_execution_role()

print(role)

bucket = '<please replace with your s3 bucket name>'

prefix = 'demo'

train_data_prefix = "demo"

# below uses the training data after augmentation

s3_train_data= "s3://{}/{}/all_augmented.json".format(bucket, train_data_prefix)

# uncomment below to use the non-augmented input

# s3_train_data= "s3://{}/training-manifest/{}/train.manifest".format(bucket, train_data_prefix)

s3_validation_data = "s3://{}/training-manifest/{}/validation.manifest".format(bucket, train_data_prefix)

print("Train data: {}".format(s3_train_data) )

print("Validation data: {}".format(s3_validation_data) )

train_input = {

"ChannelName": "train",

"DataSource": {

"S3DataSource": {

"S3DataType": "AugmentedManifestFile",

"S3Uri": s3_train_data,

"S3DataDistributionType": "FullyReplicated",

# This must correspond to the JSON field names in your augmented manifest.

"AttributeNames": ['source-ref', 'bb']

}

},

"ContentType": "application/x-recordio",

"RecordWrapperType": "RecordIO",

"CompressionType": "None"

}

validation_input = {

"ChannelName": "validation",

"DataSource": {

"S3DataSource": {

"S3DataType": "AugmentedManifestFile",

"S3Uri": s3_validation_data,

"S3DataDistributionType": "FullyReplicated",

# This must correspond to the JSON field names in your augmented manifest.

"AttributeNames": ['source-ref', 'bb']

}

},

"ContentType": "application/x-recordio",

"RecordWrapperType": "RecordIO",

"CompressionType": "None"

}

import json

import os

def read_manifest_file(file_path):

with open(file_path, 'r') as f:

output = [json.loads(line.strip()) for line in f.readlines()]

return output

!aws s3 cp $s3_train_data .

train_data = read_manifest_file(os.path.split(s3_train_data)[1])

num_training_samples = len(train_data)

num_training_samples

s3_output_path = 's3://{}/{}/output'.format(bucket, prefix)

s3_output_path

from sagemaker.amazon.amazon_estimator import get_image_uri

# This retrieves a docker container with the built in object detection SSD model.

training_image = sagemaker.amazon.amazon_estimator.get_image_uri(boto3.Session().region_name, 'object-detection', repo_version='latest')

print (training_image)