
```

%load_ext autoreload

%reload_ext autoreload

%autoreload 2

%matplotlib inline

import os

import warnings

import numpy as np

from astropy.table import Table

import matplotlib.pyplot as plt

from matplotlib import rcParams

warnings.filterwarnings("ignore")

plt.rc('text', usetex=True)

rcParams.update({'axes.linewidth': 1.5})

rcParams.update({'xtick.direction': 'in'})

rcParams.update({'ytick.direction': 'in'})

rcParams.update({'xtick.minor.visible': 'True'})

rcParams.update({'ytick.minor.visible': 'True'})

rcParams.update({'xtick.major.pad': '7.0'})

rcParams.update({'xtick.major.size': '8.0'})

rcParams.update({'xtick.major.width': '1.5'})

rcParams.update({'xtick.minor.pad': '7.0'})

rcParams.update({'xtick.minor.size': '4.0'})

rcParams.update({'xtick.minor.width': '1.5'})

rcParams.update({'ytick.major.pad': '7.0'})

rcParams.update({'ytick.major.size': '8.0'})

rcParams.update({'ytick.major.width': '1.5'})

rcParams.update({'ytick.minor.pad': '7.0'})

rcParams.update({'ytick.minor.size': '4.0'})

rcParams.update({'ytick.minor.width': '1.5'})

rcParams.update({'axes.titlepad': '10.0'})

rcParams.update({'font.size': 25})

```

### Using the summary file for a galaxy's stellar mass, age, and metallicity maps

* For a given Illustris or TNG galaxy, we have measured basic properties of its stellar mass, age, and metallicity distributions.

* Each galaxy has data for the 3 primary projections: `xy`, `xz`, `yz`.

- We treat them as independent measurements. The result for each projection is saved in its own file.

* Each galaxy has three "components":

- `ins`: in-situ stellar component, meaning the stars formed in the halo of the main progenitor.

- `exs`: ex-situ stellar component, meaning the stars accreted from other halos.

- `gal`: the whole galaxy, a combination of `ins` and `exs`.

```

data_dir = '/Users/song/data/massive/simulation/riker/tng/sum'

```

* File naming convention, for example: `tng100_z0.4_hres_158_181900_xy_sum.npy`

- `tng100`: the simulation used. `ori` for the original Illustris; `tng` for Illustris TNG. The number indicates the volume of the simulation, e.g. `tng100`, `tng300`.

- `z0.4`: the snapshot is at z=0.4.

- `hres`: high-resolution map, which has a 1 kpc pixel; there is also `lres` for low-resolution, which has a 5.33 kpc pixel.

- `158_181900` in the format of `INDEX_CATSHID`: `INDEX` is just the index in the dataset; `CATSHID` is the subhalo ID from the simulation, which is a unique number that identifies the halo or galaxy.

- `xy`: projection used in this data.

* Let's read in the summary files in all 3 projections for one galaxy:

```

xy_sum = np.load(os.path.join(data_dir, 'tng100_z0.4_hres_15_31188_xy_sum.npy'))

xz_sum = np.load(os.path.join(data_dir, 'tng100_z0.4_hres_15_31188_xz_sum.npy'))

yz_sum = np.load(os.path.join(data_dir, 'tng100_z0.4_hres_15_31188_yz_sum.npy'))

```
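The naming convention described above can be unpacked programmatically. The sketch below is only an illustration: `parse_sum_name` and its regular expression are hypothetical helpers, not part of `riker`, and they assume every file name follows the `SIM_zREDSHIFT_RES_INDEX_CATSHID_PROJ_sum.npy` pattern exactly.

```python
import re

# Hypothetical helper, not part of riker: split a summary file name into its fields.
_SUM_NAME = re.compile(
    r"^(?P<sim>[a-z]+\d+)_z(?P<redshift>\d+(?:\.\d+)?)_(?P<res>hres|lres)"
    r"_(?P<index>\d+)_(?P<catsh_id>\d+)_(?P<proj>xy|xz|yz)_sum\.npy$"
)


def parse_sum_name(filename):
    """Return the fields encoded in a summary file name, or None if it does not match."""
    match = _SUM_NAME.match(filename)
    if match is None:
        return None
    fields = match.groupdict()
    # Convert the numeric fields so they can be compared or matched to catalogs
    fields["redshift"] = float(fields["redshift"])
    fields["index"] = int(fields["index"])
    fields["catsh_id"] = int(fields["catsh_id"])
    return fields


info = parse_sum_name("tng100_z0.4_hres_15_31188_xy_sum.npy")
print(info)
```

Returning `None` for a non-matching name keeps the helper easy to use when looping over a directory that may contain other files.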

* The structure of the summary file is just a dictionary that contains the results from different steps of analysis, each of which is also a dictionary of data.

* For each projection, there are 4 components:

- **info**: basic info of the galaxy from simulation, including subhalo ID, stellar mass, halo mass (`M200c`), and average age and metallicity values. This can be used to identify and match galaxies. Also includes information about the size and pixel scale of the map.

- **geom**: basic geometry information of the galaxy on the stellar mass map. `x`, `y` for centroid; `ba` for axis ratio, `pa` for position angle in degrees (`theta` is the same, but in radians).

- **aper**: stellar mass, age, and metallicity profiles for each component measured in a series of apertures. Explained in more detail below.

- **prof**: 1-D profile information from the `Ellipse` profile fitting process. Explained in detail below.

```

# Take a look at the basic information

xy_sum['info']

# Basic geometric information of the galaxy

xy_sum['geom']

```

### Aperture measurements

* It is easier to see the content of the summary using an astropy table:

* Key information:

- `rad_inn`, `rad_out`, `rad_mid`: inner & outer edges, and the middle point of the radial bins.

- `maper_[gal/ins/exs]`: aperture masses enclosed within different radii (using elliptical apertures).

- `mprof_[gal/ins/exs]`: stellar mass in each radial bin.

- `age_[gal/ins/exs]_w`: mass-weighted stellar age.

- `met_[gal/ins/exs]_w`: mass-weighted stellar metallicity.

- There are also flags to indicate if the age and metallicity values can be trusted. Any value >0 means there is an issue.

* Notice that the `age` and `met` values can be `NaN` in the outskirts.

* It is also possible that `mprof` has 0.0 value in an outer bin for some compact galaxies.

```

Table(xy_sum['aper'])

# Check the differences of the three projections in terms of mass profiles

# They are very similar, but not the same

plt.plot(np.log10(xy_sum['aper']['rad_mid']), np.log10(xy_sum['aper']['mprof_gal']), label='xy')

plt.plot(np.log10(xz_sum['aper']['rad_mid']), np.log10(xz_sum['aper']['mprof_gal']), label='xz')

plt.plot(np.log10(yz_sum['aper']['rad_mid']), np.log10(yz_sum['aper']['mprof_gal']), label='yz')

plt.legend(loc='best')

_ = plt.xlabel(r'$\log\ [\rm R/kpc]$')

_ = plt.ylabel(r'$\log_{10}\ [M_{\star}/M_{\odot}]$')

```
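Because `mprof` can be 0.0 in an outer bin and some values can be `NaN`, taking `log10` directly yields `-inf` or `NaN` points. A minimal sketch of one way to filter such bins before plotting is shown below; `log_profile` is a hypothetical helper and the numbers are toy values standing in for `rad_mid` and `mprof_gal`.

```python
import math


def log_profile(radius, mass):
    """Return (log10 radius, log10 mass) keeping only finite, strictly positive bins."""
    pairs = [
        (math.log10(r), math.log10(m))
        for r, m in zip(radius, mass)
        # NaN fails every comparison, so `m > 0` also drops NaN entries
        if r > 0 and m > 0 and math.isfinite(r) and math.isfinite(m)
    ]
    log_r = [pair[0] for pair in pairs]
    log_m = [pair[1] for pair in pairs]
    return log_r, log_m


# Toy values, not taken from a real summary file
rad_mid = [1.0, 10.0, 100.0, 200.0]
mprof = [1.0e10, 1.0e9, 0.0, float("nan")]

log_r, log_m = log_profile(rad_mid, mprof)
print(log_r, log_m)
```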

### Summary of `Ellipse` results.

* We use the `Ellipse` code to extract the shape and mass density profiles for each component. So, if everything goes well, the `prof` summary dictionary should contain 6 profiles.

- `[gal/ins/exs]_shape`: We first run `Ellipse` allowing the shape and position angle to change with radius. From this step, we can estimate the profiles of ellipticity and position angle.

- `[gal/ins/exs]_mprof`: Then we fix the isophotal shape at the flux-weighted value and run `Ellipse` to extract 1-D mass density profiles along the major axis.

- Each profile itself is a small table, which is easier to inspect using `astropy.table`.

- For the `ins` and `exs` components, their mass density profiles are extracted using the average shape of the **whole galaxy**, which can be different from the average shape of these two components.

* In each profile, the useful information is:

- `r_pix` and `r_kpc`: radius in pixels and in kpc.

- `ell` and `ell_err`: ellipticity and its error.

- `pa` and `pa_err`: position angle (in degrees) and its error. **Notice**: it sometimes needs to be normalized.

- `pa_norm`: position angle profile after normalization.

- `growth_ori`: total stellar mass enclosed in each isophote. This comes from integrating the profile using the average value at each radius, so it can differ slightly from the values from "aperture photometry".

- `intens` and `int_err`: average stellar mass per pixel at each radius. Dividing by the pixel area in units of kpc^2 gives the surface mass density profile and the associated error.

- The amplitudes of the first 4 Fourier deviations are available too: `a1/a2/a3/a4` & `b1/b2/b3/b4`. These values should be interpreted as relative deviations. Their errors are available too. **Please be cautious when using these values.**

* In some rare cases, `Ellipse` can fail to produce a useful profile. This is usually caused by ongoing mergers and mostly happens for the `shape` profile.

- The corresponding field will be `None` when `Ellipse` failed. Please be careful!

- It is safe to assume these failed cases are "weird" and exclude them from most of the analysis.

```

# Check out the 1-D mass profile (fixed shape) for the whole galaxy

Table(xy_sum['prof']['gal_mprof'])

# Check the ellipticity profiles of the three projections

plt.plot(np.log10(xy_sum['prof']['gal_shape']['r_kpc']), xy_sum['prof']['gal_shape']['ell'], label='xy')

plt.plot(np.log10(xz_sum['prof']['gal_shape']['r_kpc']), xz_sum['prof']['gal_shape']['ell'], label='xz')

plt.plot(np.log10(yz_sum['prof']['gal_shape']['r_kpc']), yz_sum['prof']['gal_shape']['ell'], label='yz')

_ = plt.xlabel(r'$\log\ [\rm R/kpc]$')

_ = plt.ylabel(r'$e$')

# And the mass profiles derived using varied and fixed shape can be different

plt.plot(np.log10(xy_sum['prof']['gal_shape']['r_kpc']), np.log10(xy_sum['prof']['gal_shape']['intens']),

label='Varied Shape')

plt.plot(np.log10(xy_sum['prof']['gal_mprof']['r_kpc']), np.log10(xy_sum['prof']['gal_mprof']['intens']),

label='Fixed Shape')

_ = plt.xlabel(r'$\log\ [\rm R/kpc]$')

_ = plt.ylabel(r'$\log\ \mu_{\star}$')

```
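Since a profile can be `None` when `Ellipse` fails, it helps to guard every access before converting `intens` into a surface mass density. The sketch below assumes the layout described above (`info['pix']` as the pixel scale in kpc and `prof` as a plain dictionary); `surface_density` is a hypothetical helper and the toy numbers are made up.

```python
def surface_density(summary, name):
    """Return (r_kpc, mu) for one Ellipse profile, or None if Ellipse failed."""
    profile = summary["prof"].get(name)
    if profile is None:
        return None
    pix_area = summary["info"]["pix"] ** 2.0  # kpc^2 per pixel
    mu = [value / pix_area for value in profile["intens"]]
    return list(profile["r_kpc"]), mu


# Toy summary with made-up numbers; a real one comes from the .npy file
toy_sum = {
    "info": {"pix": 5.33},
    "prof": {
        "gal_mprof": {"r_kpc": [1.0, 2.0], "intens": [2840.89, 284.089]},
        "gal_shape": None,  # mimics a failed Ellipse run
    },
}

fixed = surface_density(toy_sum, "gal_mprof")
failed = surface_density(toy_sum, "gal_shape")
print(fixed, failed)
```

Callers can then skip galaxies for which the helper returns `None`, in line with the advice to exclude failed cases from most analyses.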

### Organize the data better

* For a specific project, you can organize information from the summary file into a structure that is easier to use.

* Using the 3-D galaxy shape project as an example:

```

xy_sum_for3d = {

# Subhalo ID

'catsh_id': xy_sum['info']['catsh_id'],

# Stellar mass of the galaxy

'logms': xy_sum['info']['logms'],

# Basic geometry of the galaxy

'aper_x0': xy_sum['geom']['x'],

'aper_y0': xy_sum['geom']['y'],

'aper_ba': xy_sum['geom']['ba'],

'aper_pa': xy_sum['geom']['pa'],

# Aperture mass profile

'aper_rkpc': xy_sum['aper']['rad_mid'],

# Total mass enclosed in the aperture

'aper_maper': xy_sum['aper']['maper_gal'],

# Stellar mass in the bin

'aper_mbins': xy_sum['aper']['mprof_gal'],

# 1-D profile with varied shape

'rkpc_shape': xy_sum['prof']['gal_shape']['r_kpc'],

# This is the surface mass density profile and its error

'mu_shape': xy_sum['prof']['gal_shape']['intens'] / (xy_sum['info']['pix'] ** 2.0),

'mu_err_shape': xy_sum['prof']['gal_shape']['int_err'] / (xy_sum['info']['pix'] ** 2.0),

# This is the ellipticity profiles

'e_shape': xy_sum['prof']['gal_shape']['ell'],

'e_err_shape': xy_sum['prof']['gal_shape']['ell_err'],

# This is the normalized position angle profile

'pa_shape': xy_sum['prof']['gal_shape']['pa_norm'],

'pa_err_shape': xy_sum['prof']['gal_shape']['pa_err'],

# Total mass enclosed by apertures

'maper_shape': xy_sum['prof']['gal_shape']['growth_ori'],

# 1-D profile using fixed shape

'rkpc_prof': xy_sum['prof']['gal_mprof']['r_kpc'],

# This is the surface mass density profile and its error

'mu_mprof': xy_sum['prof']['gal_mprof']['intens'] / (xy_sum['info']['pix'] ** 2.0),

'mu_err_mprof': xy_sum['prof']['gal_mprof']['int_err'] / (xy_sum['info']['pix'] ** 2.0),

# Total mass enclosed by apertures

'maper_mprof': xy_sum['prof']['gal_mprof']['growth_ori'],

# Fourier deviations

'a1_mprof': xy_sum['prof']['gal_mprof']['a1'],

'a1_err_mprof': xy_sum['prof']['gal_mprof']['a1_err'],

'a2_mprof': xy_sum['prof']['gal_mprof']['a2'],

'a2_err_mprof': xy_sum['prof']['gal_mprof']['a2_err'],

'a3_mprof': xy_sum['prof']['gal_mprof']['a3'],

'a3_err_mprof': xy_sum['prof']['gal_mprof']['a3_err'],

'a4_mprof': xy_sum['prof']['gal_mprof']['a4'],

'a4_err_mprof': xy_sum['prof']['gal_mprof']['a4_err'],

'b1_mprof': xy_sum['prof']['gal_mprof']['b1'],

'b1_err_mprof': xy_sum['prof']['gal_mprof']['b1_err'],

'b2_mprof': xy_sum['prof']['gal_mprof']['b2'],

'b2_err_mprof': xy_sum['prof']['gal_mprof']['b2_err'],

'b3_mprof': xy_sum['prof']['gal_mprof']['b3'],

'b3_err_mprof': xy_sum['prof']['gal_mprof']['b3_err'],

'b4_mprof': xy_sum['prof']['gal_mprof']['b4'],

'b4_err_mprof': xy_sum['prof']['gal_mprof']['b4_err']

}

```
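The repeated Fourier entries in the dictionary above follow a regular pattern, so they could also be generated in a loop. This is only a sketch of that idea: `fourier_entries` is a hypothetical helper, and the toy profile below just mimics the `a1`..`b4` and `*_err` keys used above.

```python
def fourier_entries(profile, suffix="mprof"):
    """Collect a1..a4, b1..b4 and their errors under '<name>_<suffix>' keys."""
    entries = {}
    for letter in ("a", "b"):
        for order in (1, 2, 3, 4):
            name = f"{letter}{order}"
            entries[f"{name}_{suffix}"] = profile[name]
            entries[f"{name}_err_{suffix}"] = profile[f"{name}_err"]
    return entries


# Toy profile with placeholder values, standing in for xy_sum['prof']['gal_mprof']
toy_profile = {
    f"{letter}{order}{tail}": 0.0
    for letter in "ab"
    for order in range(1, 5)
    for tail in ("", "_err")
}
toy_profile["a4"] = 0.02

entries = fourier_entries(toy_profile)
print(sorted(entries))
```

The result can be merged into a larger dictionary with `dict.update`, which keeps the key names identical to the hand-written version.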

### Visualizations

* `riker` provides a few routines to help you visualize the `aper` and `prof` results.

* You can `git clone git@github.com:dr-guangtou/riker.git` and then run `python3 setup.py develop` to install it.

* Unfortunately, it currently depends on some of my personal code that is still under development. But it is fine for making a few plots.

```

from riker import visual

# Summary plot of the aperture measurements

_ = visual.show_aper(xy_sum['info'], xy_sum['aper'])

# Fake some empty stellar mass maps structure

maps_fake = {'mass_gal': None, 'mass_ins': None, 'mass_exs': None}

# Organize the data

ell_plot = visual.prepare_show_ellipse(xy_sum['info'], maps_fake, xy_sum['prof'])

# Show a summary plot for the 1-D profiles

_ = visual.plot_ell_prof(ell_plot)

# You can also visualize the 1-D profiles of Fourier deviations for a certain profile

_ = visual.plot_ell_fourier(xy_sum['prof']['ins_mprof'], show_both=True)

```


```

from timeit import default_timer as timer

from functools import partial

import yaml

import sys

import os

from estimagic.optimization.optimize import maximize

from scipy.optimize import root_scalar

from scipy.stats import chi2

import numdifftools as nd

import pandas as pd

import respy as rp

import numpy as np

sys.path.insert(0, "python")

from auxiliary import plot_bootstrap_distribution # noqa: E402

from auxiliary import plot_computational_budget # noqa: E402

from auxiliary import plot_smoothing_parameter # noqa: E402

from auxiliary import plot_score_distribution # noqa: E402

from auxiliary import plot_score_function # noqa: E402

from auxiliary import plot_likelihood # noqa: E402

```

# Maximum likelihood estimation

## Introduction

EKW models are calibrated to data on observed individual decisions and experiences under the hypothesis that the individual's behavior is generated from the solution to the model. The goal is to back out information on reward functions, preference parameters, and transition probabilities. This requires the full parameterization $\theta$ of the model.

Economists have access to information for $i = 1, ..., N$ individuals in each time period $t$. For every observation $(i, t)$ in the data, we observe action $a_{it}$, reward $r_{it}$, and a subset $x_{it}$ of the state $s_{it}$. Therefore, from an economist's point of view, we need to distinguish between two types of state variables $s_{it} = (x_{it}, \epsilon_{it})$. At time $t$, the economist and individual both observe $x_{it}$ while $\epsilon_{it}$ is only observed by the individual. In summary, the data $\mathcal{D}$ has the following structure:

\begin{align*}

\mathcal{D} = \{a_{it}, x_{it}, r_{it}: i = 1, ..., N; t = 1, ..., T_i\},

\end{align*}

where $T_i$ is the number of observations for which we observe individual $i$.

Likelihood-based calibration seeks to find the parameterization $\hat{\theta}$ that maximizes the likelihood function $\mathcal{L}(\theta\mid\mathcal{D})$, i.e. the probability of observing the given data as a function of $\theta$. As we only observe a subset $x_{it}$ of the state, we can determine the probability $p_{it}(a_{it}, r_{it} \mid x_{it}, \theta)$ of individual $i$ at time $t$ in $x_{it}$ choosing $a_{it}$ and receiving $r_{it}$ given parametric assumptions about the distribution of $\epsilon_{it}$. The objective function takes the following form:

\begin{align*}

\hat{\theta} \equiv \underset{\theta \in \Theta}{\text{argmax}} \underbrace{\prod^N_{i= 1} \prod^{T_i}_{t= 1}\, p_{it}(a_{it}, r_{it} \mid x_{it}, \theta)}_{\mathcal{L}(\theta\mid\mathcal{D})}.

\end{align*}

We will explore the following issues:

* likelihood function

* score function and statistic

    * asymptotic distribution

    * linearity

* confidence intervals

    * Wald

    * likelihood-based

    * Bootstrap

* numerical approximations

    * smoothing of choice probabilities

    * grid search

Most of the material is from the following two references:

* Pawitan, Y. (2001). [In all likelihood: Statistical modelling and inference using likelihood](https://www.amazon.de/dp/0199671222/ref=sr_1_1?keywords=in+all+likelihood&qid=1573806115&sr=8-1). Clarendon Press, Oxford.

* Casella, G., & Berger, R. L. (2002). [Statistical inference](https://www.amazon.de/dp/0534243126/ref=sr_1_1?keywords=casella+berger&qid=1573806129&sr=8-1). Duxbury, Belmont, CA.

Let's get started!

```

options_base = yaml.safe_load(open(os.environ["ROBINSON_SPEC"] + "/robinson.yaml", "r"))

params_base = pd.read_csv(open(os.environ["ROBINSON_SPEC"] + "/robinson.csv", "r"))

params_base.set_index(["category", "name"], inplace=True)

simulate = rp.get_simulate_func(params_base, options_base)

df = simulate(params_base)

```

Let us briefly inspect the parameterization.

```

params_base

```

Several options need to be specified as well.

```

options_base

```

We can now look at the simulated dataset.

```

df.head()

```

## Likelihood function

We can now start exploring the likelihood function that provides an order of preference on $\theta$. The likelihood function is a measure of information about the potentially unknown parameters of the model. The information will usually be incomplete and the likelihood function also expresses the degree of incompleteness.

We will usually work with the sum of the individual log-likelihoods throughout as the likelihood itself cannot be represented without raising problems of numerical underflow. Note that the criterion function of the ``respy`` package returns the average log-likelihood across the sample. Thus, we need to be careful with scaling it up when computing some of the test statistics later in the notebook.
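To illustrate why the sum of log-likelihoods is preferred, here is a small sketch with toy probabilities that are not taken from the model. It shows the raw product collapsing to zero in double precision while the log-likelihood stays well-defined, and how an average log-likelihood is scaled back up by the number of observations.

```python
import math

# Toy per-observation probabilities, not taken from the model
probs = [0.01] * 500

likelihood = math.prod(probs)                # product of 500 small numbers
log_like = sum(math.log(p) for p in probs)   # sum of logs stays representable

avg_log_like = log_like / len(probs)         # what an "average" criterion returns
total_from_avg = avg_log_like * len(probs)   # scale back up to the sum

print(likelihood, log_like, total_from_avg)
```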

We will first trace out the likelihood over reasonable parameter values.

```

params_base["lower"] = [0.948, 0.0695, -0.11, 1.04, 0.0030, 0.005, -0.10]

params_base["upper"] = [0.952, 0.0705, -0.09, 1.05, 0.1000, 0.015, +0.10]

```

We plot the normalized likelihood, i.e. set the maximum of the likelihood function to one by dividing it by its maximum.

```

crit_func = rp.get_log_like_func(params_base, options_base, df)

rslts = dict()

for index in params_base.index:
    upper, lower = params_base.loc[index][["upper", "lower"]]
    grid = np.linspace(lower, upper, 20)

    # Evaluate the scaled log-likelihood along the grid for this parameter
    fvals = list()
    for value in grid:
        params = params_base.copy()
        params.loc[index, "value"] = value
        fval = options_base["simulation_agents"] * crit_func(params)
        fvals.append(fval)

    rslts[index] = fvals

```

Let's visualize the results.

```

plot_likelihood(rslts, params_base)

```

### Maximum likelihood estimate

So far, we looked at the likelihood function in its entirety. Going forward, we will take a narrower view and just focus on the maximum likelihood estimate. We restrict our attention to the discount factor $\delta$ and treat it as the only unknown parameter. We will use [estimagic](https://estimagic.readthedocs.io/) for all our estimations.

```

crit_func = rp.get_log_like_func(params_base, options_base, df)

```

However, we will make our life even easier and fix all parameters but the discount factor $\delta$.

```

constr_base = [

{"loc": "shocks_sdcorr", "type": "fixed"},

{"loc": "wage_fishing", "type": "fixed"},

{"loc": "nonpec_fishing", "type": "fixed"},

{"loc": "nonpec_hammock", "type": "fixed"},

]

```

We will start the estimation with a perturbation of the true value.

```

params_start = params_base.copy()

params_start.loc[("delta", "delta"), "value"] = 0.91

```

Now we are ready to deal with the selection and specification of the optimization algorithm.

```

algo_options = {"maxeval": 100}

algo_name = "nlopt_bobyqa"

results, params_rslt = maximize(

crit_func,

params_base,

algo_name,

algo_options=algo_options,

constraints=constr_base,

)

```

Let's look at the results.

```

params_rslt

fval = results["fitness"] * options_base["simulation_agents"]

print(f"criterion function at optimum {fval:5.3f}")

```

We need to set up a proper interface to use some other Python functionality going forward.

```

def wrapper_crit_func(crit_func, options_base, params_base, value):
    # Evaluate the scaled log-likelihood as a function of delta only
    params = params_base.copy()
    params.loc["delta", "value"] = value
    return options_base["simulation_agents"] * crit_func(params)


p_wrapper_crit_func = partial(wrapper_crit_func, crit_func, options_base, params_base)

```

We need to use the MLE repeatedly going forward.

```

delta_hat = params_rslt.loc[("delta", "delta"), "value"]

```

At the maximum, the second derivative of the log-likelihood is negative and we define the observed Fisher information as follows:

\begin{align*}

I(\hat{\theta}) \equiv -\frac{\partial^2 \log L(\hat{\theta})}{\partial \theta^2}

\end{align*}

A larger curvature is associated with a strong peak, thus indicating less uncertainty about $\theta$.

```

delta_fisher = -nd.Derivative(p_wrapper_crit_func, n=2)([delta_hat])

delta_fisher

```
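As a sanity check of the idea, the observed Fisher information can be computed by hand for a model with a known answer. The sketch below uses a toy Bernoulli log-likelihood (30 successes in 100 trials, an assumption for illustration only) and a plain central finite difference instead of `numdifftools`; the analytical value is $n / (\hat{p}(1 - \hat{p}))$.

```python
import math


def log_like(p, successes=30, trials=100):
    """Bernoulli log-likelihood of a toy sample."""
    return successes * math.log(p) + (trials - successes) * math.log(1.0 - p)


def observed_information(func, x, step=1e-4):
    """Negative second derivative of func at x via a central finite difference."""
    second = (func(x + step) - 2.0 * func(x) + func(x - step)) / step ** 2
    return -second


p_hat = 30 / 100                                # maximum likelihood estimate
info_numeric = observed_information(log_like, p_hat)
info_exact = 100 / (p_hat * (1.0 - p_hat))      # closed form for this toy model

print(info_numeric, info_exact)
```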

### Score statistic and Score function

The Score function is the first derivative of the log-likelihood.

\begin{align*}

S(\theta) \equiv \frac{\partial \log L(\theta)}{\partial \theta}

\end{align*}

#### Distribution

The asymptotic normality of the score statistic is of key importance in deriving the asymptotic normality of the maximum likelihood estimator. Here we simulate $1,000$ samples of $10,000$ individuals and compute the score function at the true values. I had to increase the number of simulated individuals as convergence to the asymptotic distribution just took way too long.

```

plot_score_distribution()

```

#### Linearity

We seek linearity of the score function around the true value so that the log-likelihood is reasonably well approximated by a second order Taylor-polynomial.

\begin{align*}

\log L(\theta) \approx \log L(\hat{\theta}) + S(\hat{\theta})(\theta - \hat{\theta}) - \tfrac{1}{2} I(\hat{\theta})(\theta - \hat{\theta})^2

\end{align*}

Since $S(\hat{\theta}) = 0$, we get:

\begin{align*}

\log\left(\frac{L(\theta)}{L(\hat{\theta})}\right) \approx - \tfrac{1}{2} I(\hat{\theta})(\theta - \hat{\theta})^2

\end{align*}

Taking the derivative to work with the score function, the following relationship is approximately true if the usual regularity conditions hold:

\begin{align*}

- I^{-1/2}(\hat{\theta}) S(\theta) \approx I^{1/2}(\hat{\theta}) (\theta - \hat{\theta})

\end{align*}

```

num_points, index = 10, ("delta", "delta")

upper, lower = params_base.loc[index, ["upper", "lower"]]

grid = np.linspace(lower, upper, num_points)

fds = np.tile(np.nan, num_points)

for i, point in enumerate(grid):
    # Numerical first derivative of the log-likelihood, i.e. the score
    fds[i] = nd.Derivative(p_wrapper_crit_func, n=1)([point])

norm_fds = fds * -(1 / np.sqrt(delta_fisher))

norm_grid = (grid - delta_hat) * (np.sqrt(delta_fisher))

```

In the best case we see a standard normal distribution of $I^{1/2} (\hat{\theta}) (\theta - \hat{\theta})$, so it is common practice to evaluate the linearity between $-2$ and $2$.

```

plot_score_function(norm_grid, norm_fds)

```
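The same linearity check can be reproduced on a toy model where everything is available in closed form. The sketch below uses a Bernoulli likelihood (an assumption for illustration, unrelated to the Robinson model) and measures how far the normalized score $-I^{-1/2}(\hat{\theta}) S(\theta)$ is from the 45-degree line on $[-2, 2]$; the gap shrinks as the sample grows.

```python
import math


def score(p, successes, trials):
    """First derivative of the Bernoulli log-likelihood with respect to p."""
    return successes / p - (trials - successes) / (1.0 - p)


def max_deviation(successes, trials, num_points=9):
    """Largest gap between the normalized score and the 45-degree line on [-2, 2]."""
    p_hat = successes / trials
    fisher = trials / (p_hat * (1.0 - p_hat))  # observed information at p_hat
    worst = 0.0
    for i in range(num_points):
        z = -2.0 + 4.0 * i / (num_points - 1)  # normalized grid point
        p = p_hat + z / math.sqrt(fisher)
        norm_score = -score(p, successes, trials) / math.sqrt(fisher)
        worst = max(worst, abs(norm_score - z))
    return worst


dev_small = max_deviation(30, 100)       # small sample: visibly non-linear
dev_large = max_deviation(3000, 10000)   # large sample: close to linear

print(dev_small, dev_large)
```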

Alternative shapes are possible.

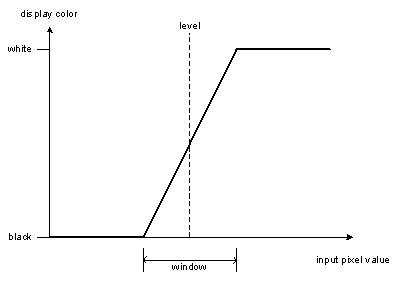

<img src="material/fig-quadratic-approximation.png" width="700" >

### Confidence intervals

How do we communicate the statistical evidence using the likelihood? Several notions exist that place different demands on the score function. While the Wald intervals rely on asymptotic normality and linearity, likelihood-based intervals only require asymptotic normality. In well-behaved problems, both measures of uncertainty agree.

#### Wald intervals

```

rslt = list()

rslt.append(delta_hat - 1.96 * 1 / np.sqrt(delta_fisher))

rslt.append(delta_hat + 1.96 * 1 / np.sqrt(delta_fisher))

"{:5.3f} / {:5.3f}".format(*rslt)

```

#### Likelihood-based intervals

```

def root_wrapper(delta, options_base, alpha, index):
    # Critical value of the log-likelihood ratio for a (1 - alpha) interval
    crit_val = -0.5 * chi2.ppf(1 - alpha, 1)

    params_eval = params_base.copy()
    params_eval.loc[("delta", "delta"), "value"] = delta

    likl_ratio = options_base["simulation_agents"] * (
        crit_func(params_eval) - crit_func(params_base)
    )

    return likl_ratio - crit_val


brackets = [[0.75, 0.95], [0.95, 1.10]]

rslt = list()
for bracket in brackets:
    root = root_scalar(
        root_wrapper,
        method="bisect",
        bracket=bracket,
        args=(options_base, 0.05, index),
    ).root
    rslt.append(root)

print("{:5.3f} / {:5.3f}".format(*rslt))

```
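To see both interval types side by side without the full model, the sketch below repeats the construction on a toy Bernoulli likelihood (30 successes in 100 trials, chosen only for illustration). It uses a hand-written bisection in place of `root_scalar` and hard-codes the 95% quantile of the $\chi^2_1$ distribution, so none of the names below come from `respy` or `estimagic`.

```python
import math

CHI2_95_DF1 = 3.841458820694124  # 95% quantile of a chi-squared(1), i.e. 1.959964 ** 2


def log_like(p, successes=30, trials=100):
    """Bernoulli log-likelihood of a toy sample."""
    return successes * math.log(p) + (trials - successes) * math.log(1.0 - p)


def bisect(func, low, high, tol=1e-10):
    """Plain bisection; assumes func(low) and func(high) have opposite signs."""
    f_low = func(low)
    while high - low > tol:
        mid = 0.5 * (low + high)
        f_mid = func(mid)
        if (f_mid > 0) == (f_low > 0):
            low, f_low = mid, f_mid
        else:
            high = mid
    return 0.5 * (low + high)


p_hat = 0.3
fisher = 100 / (p_hat * (1.0 - p_hat))  # observed information at p_hat


def gap(p):
    # Log-likelihood ratio minus the critical value; zero at the interval bounds
    return (log_like(p) - log_like(p_hat)) + 0.5 * CHI2_95_DF1


wald = (p_hat - 1.96 / math.sqrt(fisher), p_hat + 1.96 / math.sqrt(fisher))
likl = (bisect(gap, 0.01, p_hat), bisect(gap, p_hat, 0.99))

print("Wald:       {:5.3f} / {:5.3f}".format(*wald))
print("Likelihood: {:5.3f} / {:5.3f}".format(*likl))
```

The Wald interval is symmetric around the estimate by construction, while the likelihood-based interval follows the actual shape of the log-likelihood.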

## Bootstrap

We can now run a simple bootstrap to see how the asymptotic standard errors line up.

Here are some useful resources on the topic:

* Davison, A., & Hinkley, D. (1997). [Bootstrap methods and their application](https://www.amazon.de/dp/B00D2WQ02U/ref=sr_1_1?keywords=bootstrap+methods+and+their+application&qid=1574070350&s=digital-text&sr=1-1). Cambridge University Press, Cambridge.

* Hesterberg, T. C. (2015). [What teachers should know about the bootstrap: Resampling in the undergraduate statistics curriculum](https://amstat.tandfonline.com/doi/full/10.1080/00031305.2015.1089789#.XdZhBldKjIV), *The American Statistician, 69*(4), 371-386.

* Horowitz, J. L. (2001). [Chapter 52. The bootstrap](https://www.scholars.northwestern.edu/en/publications/chapter-52-the-bootstrap). In Heckman, J.J., & Leamer, E.E., editors, *Handbook of Econometrics, 5*, 3159-3228. Elsevier Science B.V.

```

plot_bootstrap_distribution()

```

We can now construct the bootstrap confidence interval.

```

fname = "material/bootstrap.delta_perturb_true.pkl"

boot_params = pd.read_pickle(fname)

rslt = list()

for quantile in [0.025, 0.975]:
    rslt.append(boot_params.loc[("delta", "delta"), :].quantile(quantile))

print("{:5.3f} / {:5.3f}".format(*rslt))

```
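The percentile construction used above can be sketched end to end on toy data. In the example below the "estimator" is simply a sample mean of simulated draws, which is an assumption made to keep the example self-contained; in the notebook each bootstrap replication would instead re-estimate $\delta$.

```python
import random
import statistics

random.seed(0)

# Toy sample standing in for the data; values are simulated, not from the model
sample = [random.gauss(0.95, 0.02) for _ in range(200)]


def percentile(sorted_values, q):
    """Linear-interpolation quantile of an already sorted list."""
    position = q * (len(sorted_values) - 1)
    lower = int(position)
    upper = min(lower + 1, len(sorted_values) - 1)
    weight = position - lower
    return (1.0 - weight) * sorted_values[lower] + weight * sorted_values[upper]


# Resample with replacement and recompute the estimate each time
estimates = []
for _ in range(1000):
    resample = random.choices(sample, k=len(sample))
    estimates.append(statistics.fmean(resample))
estimates.sort()

interval = (percentile(estimates, 0.025), percentile(estimates, 0.975))
print("{:5.3f} / {:5.3f}".format(*interval))
```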

### Numerical aspects

The shape and properties of the likelihood function are determined by different numerical tuning parameters such as the quality of numerical integration and the smoothing of choice probabilities. We would like to simply choose all components to be the "best", but that comes at the cost of increasing the time to solution.

```

grid = np.linspace(100, 1000, 100, dtype=int)

rslts = list()

for num_draws in grid:
    options = options_base.copy()
    options["estimation_draws"] = num_draws
    options["solution_draws"] = num_draws

    # Time the call for this number of Monte Carlo draws
    start = timer()
    rp.get_solve_func(params_base, options)
    finish = timer()

    rslts.append(finish - start)

```

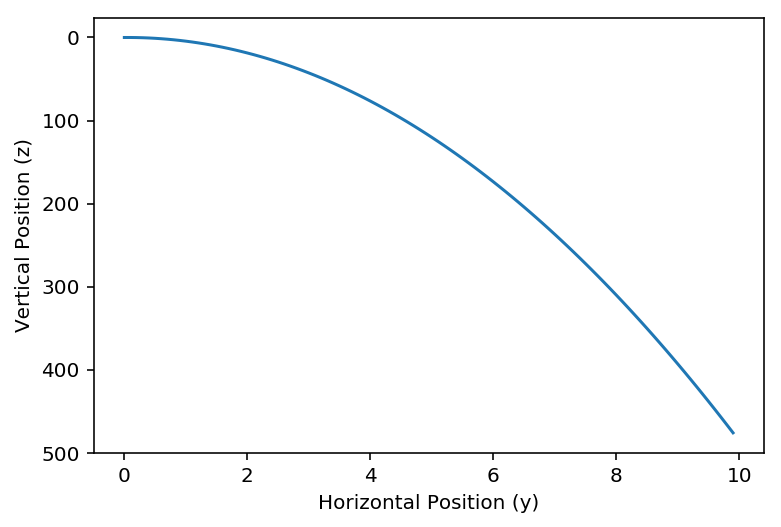

We are ready to see how time to solution increases as we improve the quality of the numerical integration by increasing the number of Monte Carlo draws.

```

plot_computational_budget(grid, rslts)

```

We need to learn where to invest a limited computational budget. We focus on the following going forward:

* smoothing parameter for logit accept-reject simulator

* grid search across core parameters

#### Smoothing parameter

We now show the shape of the likelihood function for alternative choices of the smoothing parameter $\tau$. There exists no closed-form solution for the choice probabilities, so these are simulated. Applying a basic accept-reject (AR) simulator poses two challenges. First, low-probability events can end up with a simulated probability of zero, which causes problems when evaluating the log-likelihood. Second, the simulated choice probabilities are not smooth in the parameters but are a step function instead. This is why McFadden (1989) introduces a class of smoothed AR simulators. The logit-smoothed AR simulator is the most popular one and is also implemented in `respy`. The implementation requires us to specify the smoothing parameter $\tau$. As $\tau \rightarrow 0$, the logit smoother approaches the original indicator function.

* McFadden, D. (1989). [A method of simulated moments for estimation of discrete response models without numerical integration](https://www.jstor.org/stable/1913621?seq=1#metadata_info_tab_contents). *Econometrica, 57*(5), 995-1026.

* Train, K. (2009). [Discrete choice methods with simulation](https://eml.berkeley.edu/books/train1201.pdf). Cambridge University Press, Cambridge.
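The difference between the crude and the logit-smoothed AR simulator can be sketched on a toy problem with three alternatives. This is not the `respy` implementation; the function `simulate_choice_prob`, the utilities `v`, and the shock distribution are assumptions made for illustration, following the textbook description in Train (2009).

```python
import numpy as np


def simulate_choice_prob(v, draws, alt, tau=None):
    """Simulated probability that alternative `alt` is chosen.

    v:     systematic utilities, shape (num_alternatives,)
    draws: simulated shocks, shape (num_draws, num_alternatives)
    tau:   smoothing parameter; None selects the crude AR simulator
    """
    utilities = v + draws
    if tau is None:
        # Crude AR: share of draws in which `alt` has the highest utility.
        return np.mean(utilities.argmax(axis=1) == alt)
    # Logit smoother: replace the indicator with a softmax over utilities / tau.
    scaled = (utilities - utilities.max(axis=1, keepdims=True)) / tau
    weights = np.exp(scaled)
    probs = weights / weights.sum(axis=1, keepdims=True)
    return probs[:, alt].mean()


rng = np.random.default_rng(0)
draws = rng.standard_normal((50, 3))
v = np.array([0.0, 0.5, -4.0])  # the third alternative is rarely chosen

crude = simulate_choice_prob(v, draws, alt=2)
smooth = simulate_choice_prob(v, draws, alt=2, tau=0.5)
print(crude, smooth)
```

The smoothed probability is strictly positive by construction, so its logarithm is always defined, and it varies continuously with `v`. For very small `tau` it collapses back to the crude frequency.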

```

rslts = dict()

for tau in [0.01, 0.001, 0.0001]:
    index = ("delta", "delta")

    options = options_base.copy()
    options["estimation_tau"] = tau

    crit_func = rp.get_log_like_func(params_base, options, df)
    grid = np.linspace(0.948, 0.952, 20)

    fvals = list()
    for value in grid:
        params = params_base.copy()
        params.loc[index, "value"] = value
        fvals.append(crit_func(params))

    rslts[tau] = fvals - np.max(fvals)

```

Now we are ready to inspect the shape of the likelihood function.

```

plot_smoothing_parameter(rslts, params_base, grid)

```

#### Grid search

We can look at the interplay of several major numerical tuning parameters. We combine choices for `simulation_agents`, `solution_draws`, `estimation_draws`, and `tau` to see how the maximum of the likelihood function changes.

```

df = pd.read_pickle("material/tuning.delta.pkl")

df.loc[((10000), slice(None)), :]

```

|

github_jupyter

|

from timeit import default_timer as timer

from functools import partial

import yaml

import sys

import os

from estimagic.optimization.optimize import maximize

from scipy.optimize import root_scalar

from scipy.stats import chi2

import numdifftools as nd

import pandas as pd

import respy as rp

import numpy as np

sys.path.insert(0, "python")

from auxiliary import plot_bootstrap_distribution # noqa: E402

from auxiliary import plot_computational_budget # noqa: E402

from auxiliary import plot_smoothing_parameter # noqa: E402

from auxiliary import plot_score_distribution # noqa: E402

from auxiliary import plot_score_function # noqa: E402

from auxiliary import plot_likelihood # noqa: E402

options_base = yaml.safe_load(open(os.environ["ROBINSON_SPEC"] + "/robinson.yaml", "r"))

params_base = pd.read_csv(open(os.environ["ROBINSON_SPEC"] + "/robinson.csv", "r"))

params_base.set_index(["category", "name"], inplace=True)

simulate = rp.get_simulate_func(params_base, options_base)

df = simulate(params_base)

params_base

options_base

df.head()

params_base["lower"] = [0.948, 0.0695, -0.11, 1.04, 0.0030, 0.005, -0.10]

params_base["upper"] = [0.952, 0.0705, -0.09, 1.05, 0.1000, 0.015, +0.10]

crit_func = rp.get_log_like_func(params_base, options_base, df)

rslts = dict()

for index in params_base.index:

upper, lower = params_base.loc[index][["upper", "lower"]]

grid = np.linspace(lower, upper, 20)

fvals = list()

for value in grid:

params = params_base.copy()

params.loc[index, "value"] = value

fval = options_base["simulation_agents"] * crit_func(params)

fvals.append(fval)

rslts[index] = fvals

plot_likelihood(rslts, params_base)

crit_func = rp.get_log_like_func(params_base, options_base, df)

constr_base = [

{"loc": "shocks_sdcorr", "type": "fixed"},

{"loc": "wage_fishing", "type": "fixed"},

{"loc": "nonpec_fishing", "type": "fixed"},

{"loc": "nonpec_hammock", "type": "fixed"},

]

params_start = params_base.copy()

params_start.loc[("delta", "delta"), "value"] = 0.91

algo_options = {"maxeval": 100}

algo_name = "nlopt_bobyqa"

results, params_rslt = maximize(

crit_func,

params_base,

algo_name,

algo_options=algo_options,

constraints=constr_base,

)

params_rslt

fval = results["fitness"] * options_base["simulation_agents"]

print(f"criterion function at optimum {fval:5.3f}")

def wrapper_crit_func(crit_func, options_base, params_base, value):

params = params_base.copy()

params.loc["delta", "value"] = value

return options_base["simulation_agents"] * crit_func(params)

p_wrapper_crit_func = partial(wrapper_crit_func, crit_func, options_base, params_base)

delta_hat = params_rslt.loc[("delta", "delta"), "value"]

delta_fisher = -nd.Derivative(p_wrapper_crit_func, n=2)([delta_hat])

delta_fisher

plot_score_distribution()

num_points, index = 10, ("delta", "delta")

upper, lower = params_base.loc[index, ["upper", "lower"]]

grid = np.linspace(lower, upper, num_points)

fds = np.tile(np.nan, num_points)

for i, point in enumerate(grid):

fds[i] = nd.Derivative(p_wrapper_crit_func, n=1)([point])

norm_fds = fds * -(1 / np.sqrt(delta_fisher))

norm_grid = (grid - delta_hat) * (np.sqrt(delta_fisher))

plot_score_function(norm_grid, norm_fds)

rslt = list()

rslt.append(delta_hat - 1.96 * 1 / np.sqrt(delta_fisher))

rslt.append(delta_hat + 1.96 * 1 / np.sqrt(delta_fisher))

"{:5.3f} / {:5.3f}".format(*rslt)

def root_wrapper(delta, options_base, alpha, index):

crit_val = -0.5 * chi2.ppf(1 - alpha, 1)

params_eval = params_base.copy()

params_eval.loc[("delta", "delta"), "value"] = delta

likl_ratio = options_base["simulation_agents"] * (

crit_func(params_eval) - crit_func(params_base)

)

return likl_ratio - crit_val

brackets = [[0.75, 0.95], [0.95, 1.10]]

rslt = list()

for bracket in brackets:

root = root_scalar(

root_wrapper,

method="bisect",

bracket=bracket,

args=(options_base, 0.05, index),

).root

rslt.append(root)

print("{:5.3f} / {:5.3f}".format(*rslt))

plot_bootstrap_distribution()

fname = "material/bootstrap.delta_perturb_true.pkl"

boot_params = pd.read_pickle(fname)

rslt = list()

for quantile in [0.025, 0.975]:

rslt.append(boot_params.loc[("delta", "delta"), :].quantile(quantile))

print("{:5.3f} / {:5.3f}".format(*rslt))

grid = np.linspace(100, 1000, 100, dtype=int)

rslts = list()

for num_draws in grid:

options = options_base.copy()

options["estimation_draws"] = num_draws

options["solution_draws"] = num_draws

start = timer()

rp.get_solve_func(params_base, options)

finish = timer()

rslts.append(finish - start)

plot_computational_budget(grid, rslts)

rslts = dict()

for tau in [0.01, 0.001, 0.0001]:

index = ("delta", "delta")

options = options_base.copy()

options["estimation_tau"] = tau

crit_func = rp.get_log_like_func(params_base, options, df)

grid = np.linspace(0.948, 0.952, 20)

fvals = list()

for value in grid:

params = params_base.copy()

params.loc[index, "value"] = value

fvals.append(crit_func(params))

rslts[tau] = fvals - np.max(fvals)

plot_smoothing_parameter(rslts, params_base, grid)

df = pd.read_pickle("material/tuning.delta.pkl")

df.loc[((10000), slice(None)), :]

| 0.484624 | 0.968649 |

# N-grams and Markov chains

By [Allison Parrish](http://www.decontextualize.com/)

Markov chain text generation is [one of the oldest](https://elmcip.net/creative-work/travesty) strategies for predictive text generation. This notebook takes you through the basics of implementing a simple and concise Markov chain text generation procedure in Python.

If all you want is to generate text with a Markov chain and you don't care about how the functions are implemented (or if you already went through this notebook and want to use the functions without copy-and-pasting them), you can [download a Python file with all of the functions here](https://gist.github.com/aparrish/14cb94ce539a868e6b8714dd84003f06). Just download the file, put it in the same directory as your code, type `from shmarkov import *` at the top, and you're good to go.

## Tuples: a quick introduction

Before we get to all that, I need to review a helpful Python data structure: the tuple.

Tuples (rhymes with "supple") are data structures very similar to lists. You can create a tuple using parentheses (instead of square brackets, as you would with a list):

```

t = ("alpha", "beta", "gamma", "delta")

t

```

You can access the values in a tuple in the same way as you access the values in a list: using square bracket indexing syntax. Tuples support slice syntax and negative indexes, just like lists:

```

t[-2]

t[1:3]

```

The difference between a list and a tuple is that the values in a tuple can't be changed after the tuple is created. This means, for example, that attempting to `.append()` a value to a tuple, or to assign a new value to one of its indexes, will fail:

```

t.append("epsilon")

t[2] = "bravo"

```

"So," you think to yourself. "Tuples are just like... broken lists. That's strange and a little unreasonable. Why even have them in your programming language?" That's a fair question, and answering it requires a bit of knowledge of how Python works with these two kinds of values (lists and tuples) behind the scenes.

Essentially, tuples are faster and smaller than lists. Because lists can be modified, potentially becoming larger after they're initialized, Python has to allocate more memory than is strictly necessary whenever you create a list value. If your list grows beyond what Python has already allocated, Python has to allocate more memory. Allocating memory, copying values into memory, and then freeing memory when it's no longer needed are all (perhaps surprisingly) slow processes; slower, at least, than using data already loaded into memory when your program begins.

Because a tuple can't grow or shrink after it's created, Python knows exactly how much memory to allocate when you create a tuple in your program. That means less wasted memory, and less wasted time allocating and deallocating memory. The cost of this decreased resource footprint is less versatility.
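You can get a rough feel for the size difference with `sys.getsizeof()`. This is a sketch that assumes the standard CPython interpreter; the exact numbers vary between Python versions, and `getsizeof()` only measures the container itself, not the strings stored in it.

```python
import sys

as_list = ["alpha", "beta", "gamma", "delta"]
as_tuple = tuple(as_list)

# Size in bytes of each container (not counting the strings inside).
print(sys.getsizeof(as_list), sys.getsizeof(as_tuple))
```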

Tuples are often called an immutable data type. "Immutable" in this context simply means that it can't be changed after it's created.

For our purposes, the most important aspect of tuples is that–unlike lists–they can be *keys in dictionaries*. The utility of this will become apparent later in this tutorial, but to illustrate, let's start with an empty dictionary:

```

my_stuff = {}

```

I can use a string as a key, of course, no problem:

```

my_stuff["cheese"] = 1

```

I can also use an integer:

```

my_stuff[17] = "hello"

my_stuff

```

But I can't use a *list* as a key:

```

my_stuff[ ["a", "b"] ] = "asdf"

```

That's because a list, as a mutable data type, is "unhashable": since its contents can change, it's impossible to come up with a single value to represent it, as is required of dictionary keys. However, because tuples are *immutable*, you can use them as dictionary keys:

```

my_stuff[ ("a", "b") ] = "asdf"

my_stuff

```

This behavior is helpful when you want to make a data structure that maps *sequences* as keys to corresponding values. As we'll see below!

It's easy to make a list that is a copy of a tuple, and a tuple that is a copy of a list, using the `list()` and `tuple()` functions, respectively:

```

t = (1, 2, 3)

list(t)

things = [4, 5, 6]

tuple(things)

```

## N-grams

The first kind of text analysis that we'll look at today is an n-gram model. An n-gram is simply a sequence of units drawn from a longer sequence; in the case of text, the unit in question is usually a character or a word. For convenience, we'll call the unit of the n-gram its *level* and the length of the n-gram its *order*. For example, the following is a list of all unique character-level order-2 n-grams in the word `condescendences`:

co

on

nd

de

es

sc

ce

en

nc

And the following is an excerpt from the list of all unique word-level order-5 n-grams in *The Road Not Taken*:

Two roads diverged in a

roads diverged in a yellow

diverged in a yellow wood,

in a yellow wood, And

a yellow wood, And sorry

yellow wood, And sorry I

N-grams are used frequently in natural language processing and are a basic tool of text analysis. Their applications range from programs that correct spelling to creative visualizations to compression algorithms to stylometrics to generative text. They can be used as the basis of a Markov chain algorithm, and in fact that's one of the applications we'll be using them for later in this lesson.

### Finding and counting word pairs

So how would we go about writing Python code to find n-grams? We'll start with a simple task: finding *word pairs* in a text. A word pair is essentially a word-level order-2 n-gram; once we have code to find word pairs, we’ll generalize it to handle n-grams of any order.

To find word pairs, we'll first need some words!

```

text = open("genesis.txt").read()

words = text.split()

```

The data structure we want to end up with is a *list* of *tuples*, where the tuples have two elements, i.e., each successive pair of words from the text. There are a number of clever ways to go about creating this list. Here's one: imagine our starting list of strings, with their corresponding indices:

['a', 'b', 'c', 'd', 'e']

0 1 2 3 4

The first item of the list of pairs should consist of the elements at index 0 and index 1 from this list; the second item should consist of the elements at index 1 and index 2; and so forth. We can accomplish this using a list comprehension over the range of numbers from zero up until the end of the list minus one:

```

pairs = [(words[i], words[i+1]) for i in range(len(words)-1)]

```

(Why `len(words) - 1`? Because the final element of the list can only be the *second* element of a pair. Otherwise we'd be trying to access an element beyond the end of the list.)

The corresponding way to write this with a `for` loop:

```

pairs = []

for i in range(len(words)-1):
    this_pair = (words[i], words[i+1])
    pairs.append(this_pair)

```

In either case, the list of n-grams ends up looking like this. (I'm only showing the first 25 for the sake of brevity; remove `[:25]` to see the whole list.)

```

pairs[:25]

```
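As an aside, a third way to build the same list is to `zip()` the sequence with a copy of itself shifted by one position. The sketch below uses a short stand-in list called `letters` (not the words from `genesis.txt`) so that it runs on its own:

```python
letters = ["a", "b", "c", "d", "e"]

# Index-based version, as in the cells above.
pairs_by_index = [(letters[i], letters[i+1]) for i in range(len(letters)-1)]

# zip() stops at the shorter argument, so no explicit "minus one" is needed.
pairs_by_zip = list(zip(letters, letters[1:]))

print(pairs_by_zip)
```

Both versions produce the same four pairs; which one you use is mostly a matter of taste.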

Now that we have a list of word pairs, we can count them using a `Counter` object.

```

from collections import Counter

pair_counts = Counter(pairs)

```

The `.most_common()` method of the `Counter` shows us the items in our list that occur most frequently:

```

pair_counts.most_common(10)

```

So the phrase "And God" occurs 21 times, by far the most common word pair in the text. In fact, "And God" comprises about 3% of all word pairs found in the text:

```

pair_counts[("And", "God")] / sum(pair_counts.values())

```

You can do the same calculation with character-level pairs with pretty much exactly the same code, owing to the fact that strings and lists can be indexed using the same syntax:

```

char_pairs = [(text[i], text[i+1]) for i in range(len(text)-1)]

```

The variable `char_pairs` now has a list of all pairs of *characters* in the text. Using `Counter` again, we can find the most common pairs of characters:

```

char_pair_counts = Counter(char_pairs)

char_pair_counts.most_common(10)

```

> What are the practical applications of this kind of analysis? For one, you can use n-gram counts to judge *similarity* between two texts. If two texts have the same n-grams in similar proportions, then those texts probably have similar compositions, meanings, or authorship. N-grams can also be a basis for fast text searching; [Google Books Ngram Viewer](https://books.google.com/ngrams) works along these lines.
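Here's a small sketch of the similarity idea. It counts character-level bigrams in three short strings and compares the counts with cosine similarity. Both helper functions and the example strings are made up for illustration, and cosine similarity is just one of several reasonable ways to compare n-gram counts.

```python
from collections import Counter
from math import sqrt


def char_ngram_counts(text, n=2):
    """Count the character-level n-grams in a string."""
    return Counter(text[i:i+n] for i in range(len(text) - n + 1))


def cosine_similarity(a, b):
    """Cosine similarity between two Counters, treated as sparse vectors."""
    dot = sum(a[key] * b[key] for key in a if key in b)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


first = char_ngram_counts("condescendences")
second = char_ngram_counts("condescension")
third = char_ngram_counts("zebra quilt")

print(cosine_similarity(first, second))  # many shared bigrams
print(cosine_similarity(first, third))   # no shared bigrams
```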

### N-grams of arbitrary lengths

The step from pairs to n-grams of arbitrary lengths is only a matter of using slice indexes to get a slice of length `n`, where `n` is the length of the desired n-gram. For example, to get all of the word-level order-7 n-grams from the list of words in `genesis.txt`:

```

seven_grams = [tuple(words[i:i+7]) for i in range(len(words)-6)]

seven_grams[:20]

```

Two tricky things in this expression: in `tuple(words[i:i+7])`, I call `tuple()` to convert the list slice (`words[i:i+7]`) into a tuple. In `range(len(words)-6)`, the `6` is there because it's one fewer than the length of the n-gram. Just as with the pairs above, we need to stop early enough that every slice we grab still has the desired length.

For the sake of convenience, here's a function that will return n-grams of a desired length from any sequence, whether list or string:

```

def ngrams_for_sequence(n, seq):
    return [tuple(seq[i:i+n]) for i in range(len(seq)-n+1)]

```

Using this function, here are random character-level n-grams of order 9 from `genesis.txt`:

```

import random

genesis_9grams = ngrams_for_sequence(9, open("genesis.txt").read())

random.sample(genesis_9grams, 10)

```

Or all the word-level 5-grams from `frost.txt`:

```

frost_word_5grams = ngrams_for_sequence(5, open("frost.txt").read().split())

frost_word_5grams

```

All of the bigrams (ngrams of order 2) from the string `condescendences`:

```

ngrams_for_sequence(2, "condescendences")

```

This function works with non-string sequences as well:

```

ngrams_for_sequence(4, [5, 10, 15, 20, 25, 30])

```

And of course we can use it in conjunction with a `Counter` to find the most common n-grams in a text:

```

Counter(ngrams_for_sequence(3, open("genesis.txt").read())).most_common(20)

```

## Markov models: what comes next?

Now that we have the ability to find and record the n-grams in a text, it’s time to take our analysis one step further. The next question we’re going to try to answer is this: Given a particular n-gram in a text, what is most likely to come next?

We can imagine the kind of algorithm we’ll need to extract this information from the text. It will look very similar to the code to find n-grams above, but it will need to keep track not just of the n-grams but also a list of all units (word, character, whatever) that *follow* those n-grams.

Let’s do a quick example by hand. This is the same character-level order-2 n-gram analysis of the (very brief) text “condescendences” as above, but this time keeping track of all characters that follow each n-gram:

| n-grams | next? |

| ------- | ----- |

|co| n|

|on| d|

|nd| e, e|

|de| s, n|

|es| c, (end of text)|

|sc| e|

|ce| n, s|

|en| d, c|

|nc| e|

From this table, we can determine that while the n-gram `co` is followed by `n` 100% of the time, and while the n-gram `on` is followed by `d` 100% of the time, the n-gram `de` is followed by `s` 50% of the time, and `n` the rest of the time. Likewise, the n-gram `es` is followed by `c` 50% of the time, and followed by the end of the text the other 50% of the time.

The easiest way to represent this model is with a dictionary whose keys are the n-grams and whose values are all of the possible "nexts." Here's what the Python code looks like to construct this model from a string. We'll use the special token `$` to represent the notion of the "end of text" in the table above.

```

src = "condescendences"

src += "$" # to indicate the end of the string

model = {}

for i in range(len(src)-2):
    ngram = tuple(src[i:i+2]) # get a slice of length 2 from current position
    next_item = src[i+2] # next item is current index plus two (i.e., right after the slice)
    if ngram not in model: # check if we've already seen this ngram; if not...
        model[ngram] = [] # value for this key is an empty list
    model[ngram].append(next_item) # append this next item to the list for this ngram

model

```

The functions in the cell below generalize this to n-grams of arbitrary length (and use the special Python value `None` to indicate the end of a sequence). The `markov_model()` function creates an empty dictionary and takes an n-gram length and a sequence (which can be a string or a list) and calls the `add_to_model()` function on that sequence. The `add_to_model()` function does the same thing as the code above: iterates over every index of the sequence and grabs an n-gram of the desired length, adding keys and values to the dictionary as necessary.

```

def add_to_model(model, n, seq):
    # make a copy of seq and append None to the end
    seq = list(seq[:]) + [None]
    for i in range(len(seq)-n):
        # tuple because we're using it as a dict key!
        gram = tuple(seq[i:i+n])
        next_item = seq[i+n]
        if gram not in model:
            model[gram] = []
        model[gram].append(next_item)

def markov_model(n, seq):
    model = {}
    add_to_model(model, n, seq)
    return model

```

So, e.g., an order-2 character-level Markov model of `condescendences`:

```

markov_model(2, "condescendences")

```

Or an order 3 word-level Markov model of `genesis.txt`:

```

genesis_markov_model = markov_model(3, open("genesis.txt").read().split())

genesis_markov_model

```

We can now use the Markov model to make *predictions*. Given the information in the Markov model of `genesis.txt`, what words are likely to follow the sequence of words `and over the`? We can find out simply by getting the value for the key for that sequence:

```

genesis_markov_model[('and', 'over', 'the')]

```

This tells us that the sequence `and over the` is followed by `fowl` 50% of the time, `night,` 25% of the time and `cattle,` 25% of the time.
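If you'd rather have Python work out those percentages, you can count the items in the list of "nexts" and divide by the total. The helper `next_probabilities()` and the tiny `toy_model` below are made up for illustration; the toy model holds two entries from the `condescendences` table above.

```python
from collections import Counter


def next_probabilities(model, gram):
    """Turn the list of observed next items for `gram` into probabilities."""
    counts = Counter(model[gram])
    total = sum(counts.values())
    return {item: count / total for item, count in counts.items()}


# Two entries from the hand-built table for "condescendences".
toy_model = {("d", "e"): ["s", "n"], ("n", "d"): ["e", "e"]}

print(next_probabilities(toy_model, ("d", "e")))  # {'s': 0.5, 'n': 0.5}
print(next_probabilities(toy_model, ("n", "d")))  # {'e': 1.0}
```

The same function works on any model returned by `markov_model()`, since those models have the same shape: tuples as keys, lists of next items as values.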

### Markov chains: Generating text from a Markov model

The Markov models we created above don't just give us interesting statistical probabilities. They also allow us to generate a *new* text with those probabilities by *chaining together predictions*. Here's how we'll do it, starting with the order-2 character-level Markov model of `condescendences`: (1) start with the initial n-gram (`co`); those are the first two characters of our output. (2) Now, look at the last *n* characters of output, where *n* is the order of the n-grams in our table, and find those characters in the "n-grams" column. (3) Choose randomly among the possibilities in the corresponding "next" column, and append that letter to the output. (Sometimes, as with `co`, there's only one possibility.) (4) If you chose "end of text," then the algorithm is over. Otherwise, repeat the process starting with (2). Here's a record of the algorithm in action:

co

con

cond

conde

conden

condend

condende

condendes

condendesc

condendesce

condendesces

As you can see, we’ve come up with a word that looks like the original word, and could even be passed off as a genuine English word (if you squint at it). From a statistical standpoint, the output of our algorithm is nearly indistinguishable from the input. This kind of algorithm—moving from one state to the next, according to a list of probabilities—is known as a Markov chain.

Implementing this procedure in code is a little bit tricky, but it looks something like this. First, we'll make a Markov model of `condescendences`:

```

cmodel = markov_model(2, "condescendences")

cmodel

```

We're going to generate output as we go. We'll initialize the output to the characters we want to start with, i.e., `co`:

```

output = "co"

```

Now what we have to do is get the last two characters of the output, look them up in the model, and select randomly among the characters in the value for that key (which should be a list). Finally, we'll append that randomly-selected value to the end of the string:

```

ngram = tuple(output[-2:])

next_item = random.choice(cmodel[ngram])

output += next_item

print(output)

```

Try running the cell above multiple times: the `output` variable will get longer and longer—until you get an error. You can also put it into a `for` loop:

```

output = "co"

for i in range(100):
    ngram = tuple(output[-2:])
    next_item = random.choice(cmodel[ngram])
    output += next_item
    print(output)

```

The `TypeError` you see above is what happens when we stumble upon the "end of text" condition, which we'd chosen above to represent with the special Python value `None`. When this value comes up, it means that statistically speaking, we've reached the end of the text, and so can stop generating. We'll obey this directive by skipping out of the loop early with the `break` keyword:

```

output = "co"

for i in range(100):
    ngram = tuple(output[-2:])
    next_item = random.choice(cmodel[ngram])
    if next_item is None:
        break # "break" tells Python to immediately exit the loop, skipping any remaining values
    else:
        output += next_item

print(output)

```

Why `range(100)`? No reason, really. I just picked 100 as a reasonable number for the maximum number of times the Markov chain should attempt to append to the output. Because there's a loop in this particular model (`nd` -> `e`, `de` -> `n`, `en` -> `d`), any time you generate text from this Markov chain, it could potentially go on infinitely. Limiting the number to `100` makes sure that it doesn't ever actually do that. You should adjust the number based on what you need the Markov chain to do.

### A function to generate from a Markov model

The `gen_from_model()` function below is a more general version of the code that we just wrote that works with lists and strings and n-grams of any length:

```

import random

def gen_from_model(n, model, start=None, max_gen=100):
    if start is None:
        start = random.choice(list(model.keys()))
    output = list(start)
    for i in range(max_gen):
        start = tuple(output[-n:])
        next_item = random.choice(model[start])
        if next_item is None:
            break
        else:
            output.append(next_item)
    return output

```

The `gen_from_model()` function's first parameter is the length of n-gram; the second parameter is a Markov model, as returned from `markov_model()` defined above, and the third parameter is the "seed" n-gram to start the generation from. The `gen_from_model()` function always returns a list:

```

gen_from_model(2, cmodel, ('c', 'o'))

```

So if you're working with a character-level Markov chain, you'll want to glue the list back together into a string:

```

''.join(gen_from_model(2, cmodel, ('c', 'o')))

```

If you leave out the "seed," this function will just pick a random n-gram to start with:

```

sea_model = markov_model(3, "she sells seashells by the seashore")

for i in range(12):
    print(''.join(gen_from_model(3, sea_model)))

```

### Advanced Markov style: Generating lines

You can use the `gen_from_model()` function to generate word-level Markov chains as well:

```

genesis_word_model = markov_model(2, open("genesis.txt").read().split())

generated_words = gen_from_model(2, genesis_word_model, ('In', 'the'))

print(' '.join(generated_words))

```

This looks good! But there's a problem: the generation of the text just sorta... keeps going. Actually it keeps going until it has appended 100 words to the seed, which is the maximum number of iterations specified in the function. We can make it go even longer by supplying a fourth parameter to the function:

```

generated_words = gen_from_model(2, genesis_word_model, ('In', 'the'), 500)

print(' '.join(generated_words))

```

The reason for this is that unless the Markov chain generator reaches the "end of text" token, it'll just keep going on forever. And the longer the text, the less likely it is that the "end of text" token will be reached.
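As a rough proxy for how rare the "end of text" token gets, you can compute what share of all the recorded "next" items it makes up. The helper `end_token_share()` below is made up for illustration; it mirrors the way `add_to_model()` appends a single `None` to the sequence.

```python
def end_token_share(seq, n):
    """Share of recorded next items that are the end-of-text token (None)."""
    padded = list(seq) + [None]
    nexts = [padded[i + n] for i in range(len(padded) - n)]
    return nexts.count(None) / len(nexts)


print(end_token_share("condescendences", 2))
print(end_token_share("she sells seashells by the seashore", 2))
```

A single sequence contributes exactly one `None` no matter how long it is, so the share shrinks as the source text grows.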

Maybe this is okay, but the underlying text actually has some structure in it: each line of the file is actually a verse. If you want to generate individual *verses*, you need to treat each line separately, producing an end-of-text token for each line. The following function does just this by creating a model, adding each item from a list to the model as a separate item, and returning the combined model:

```

def markov_model_from_sequences(n, sequences):
    model = {}
    for item in sequences:
        add_to_model(model, n, item)
    return model

```

This function expects to receive a list of sequences (the sequences can be either lists or strings, depending on if you want a word-level model or a character-level model). So, for example:

```

genesis_lines = open("genesis.txt").readlines() # all of the lines from the file

# genesis_lines_words will be a list of lists of words in each line

genesis_lines_words = [line.strip().split() for line in genesis_lines] # strip whitespace and split into words

genesis_lines_model = markov_model_from_sequences(2, genesis_lines_words)

```

The `genesis_lines_model` variable now contains a Markov model with end-of-text tokens where they should be, at the end of each line. Generating from this model, we get:

```

for i in range(10):
    print("verse", i, "-", ' '.join(gen_from_model(2, genesis_lines_model)))

```

Better—the verses are ending at appropriate places—but still not quite right, since we're generating from random keys in the Markov model! To make this absolutely correct, we'd want to *start* each line with an n-gram that also occurred at the start of each line in the original text file. To do this, we'll work in two passes. First, get the list of lists of words:

```

genesis_lines = open("genesis.txt").readlines() # all of the lines from the file

# genesis_lines_words will be a list of lists of words in each line

genesis_lines_words = [line.strip().split() for line in genesis_lines] # strip whitespace and split into words

```

Now, get the n-grams at the start of each line:

```

genesis_starts = [item[:2] for item in genesis_lines_words if len(item) >= 2]

```

Now create the Markov model:

```

genesis_lines_model = markov_model_from_sequences(2, genesis_lines_words)

```

And generate from it, picking a random "start" for each line:

```

for i in range(10):
    start = random.choice(genesis_starts)
    generated = gen_from_model(2, genesis_lines_model, start)
    print("verse", i, "-", ' '.join(generated))

```

### Putting it together

The `markov_generate_from_sequences()` function below wraps up everything above into one function that takes an n-gram length, a list of sequences (e.g., a list of lists of words for a word-level Markov model, or a list of strings for a character-level Markov model), and a number of lines to generate, and returns that many generated lines, starting the generation only with n-grams that begin lines in the source file:

```

def markov_generate_from_sequences(n, sequences, count, max_gen=100):
    starts = [item[:n] for item in sequences if len(item) >= n]
    model = markov_model_from_sequences(n, sequences)
    return [gen_from_model(n, model, random.choice(starts), max_gen)
            for i in range(count)]

```

Here's how to use this function to generate from a character-level Markov model of `frost.txt`:

```

frost_lines = [line.strip() for line in open("frost.txt").readlines()]

for item in markov_generate_from_sequences(5, frost_lines, 20):
    print(''.join(item))

```

And from a word-level Markov model of Shakespeare's sonnets:

```

sonnets_words = [line.strip().split() for line in open("sonnets.txt").readlines()]

for item in markov_generate_from_sequences(2, sonnets_words, 14):
    print(' '.join(item))

```

A fun thing to do is combine *two* source texts and make a Markov model from the combination. So for example, read in the lines of both *The Road Not Taken* and `genesis.txt` and put them into the same list:

```

frost_lines = [line.strip() for line in open("frost.txt").readlines()]

genesis_lines = [line.strip() for line in open("genesis.txt").readlines()]

both_lines = frost_lines + genesis_lines

for item in markov_generate_from_sequences(5, both_lines, 14, max_gen=150):
    print(''.join(item))

```

The resulting text has properties of both of the underlying source texts! Whoa.

### Putting it all *even more together*

If you're really super lazy, the `markov_generate_from_lines_in_file()` function below does allll the work for you. It takes an n-gram length, an open filehandle to read from, the number of lines to generate, and the string `char` for a character-level Markov model and `word` for a word-level model. It returns the requested number of lines generated from a Markov model of the desired order and level.

```

def markov_generate_from_lines_in_file(n, filehandle, count, level='char', max_gen=100):
    if level == 'char':
        glue = ''
        sequences = [item.strip() for item in filehandle.readlines()]
    elif level == 'word':
        glue = ' '
        sequences = [item.strip().split() for item in filehandle.readlines()]
    else:
        # any other value would otherwise leave glue and sequences undefined
        raise ValueError("level must be 'char' or 'word'")
    generated = markov_generate_from_sequences(n, sequences, count, max_gen)
    return [glue.join(item) for item in generated]

```

So, for example, to generate twenty lines from an order-3 character-level model of H.D.'s *Sea Rose*:

```

for item in markov_generate_from_lines_in_file(3, open("sea_rose.txt"), 20, 'char'):
    print(item)

```

Or an order-3 word-level model of `genesis.txt`:

```

for item in markov_generate_from_lines_in_file(3, open("genesis.txt"), 5, 'word'):
    print(item)
    print("")

```
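
The "filehandle" doesn't have to come from `open()`: anything with a `.readlines()` method should work. Here is a small sketch of the reading step on its own, using `io.StringIO` from the standard library with a made-up two-line text (the text is an assumption for illustration). It also shows one thing to watch out for: a filehandle can only be read through once, so a second `.readlines()` call on the same handle comes back empty.

```python
import io

# Hypothetical two-line text standing in for a file on disk
text = "one fish two fish\nred fish blue fish\n"

# What the 'char' branch builds: one string per line
char_sequences = [item.strip() for item in io.StringIO(text).readlines()]
print(char_sequences)

# What the 'word' branch builds: one list of words per line
word_sequences = [item.strip().split() for item in io.StringIO(text).readlines()]
print(word_sequences)

# Reading the same handle twice: the second read returns no lines
handle = io.StringIO(text)
first = handle.readlines()
second = handle.readlines()
print(len(first), len(second))  # 2 0
```

So if you want to generate from the same file more than once, open it again each time (as the examples above do) rather than reusing the handle.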

|

github_jupyter

|

t = ("alpha", "beta", "gamma", "delta")

t

t[-2]

t[1:3]

t.append("epsilon")

t[2] = "bravo"

my_stuff = {}

my_stuff["cheese"] = 1

my_stuff[17] = "hello"

my_stuff

my_stuff[ ["a", "b"] ] = "asdf"

my_stuff[ ("a", "b") ] = "asdf"

my_stuff

t = (1, 2, 3)

list(t)

things = [4, 5, 6]

tuple(things)

text = open("genesis.txt").read()

words = text.split()

pairs = [(words[i], words[i+1]) for i in range(len(words)-1)]

pairs = []

for i in range(len(words)-1):
    this_pair = (words[i], words[i+1])
    pairs.append(this_pair)

pairs[:25]

from collections import Counter

pair_counts = Counter(pairs)

pair_counts.most_common(10)

pair_counts[("And", "God")] / sum(pair_counts.values())

char_pairs = [(text[i], text[i+1]) for i in range(len(text)-1)]

char_pair_counts = Counter(char_pairs)

char_pair_counts.most_common(10)

seven_grams = [tuple(words[i:i+7]) for i in range(len(words)-6)]

seven_grams[:20]

def ngrams_for_sequence(n, seq):
    return [tuple(seq[i:i+n]) for i in range(len(seq)-n+1)]

import random

genesis_9grams = ngrams_for_sequence(9, open("genesis.txt").read())

random.sample(genesis_9grams, 10)

frost_word_5grams = ngrams_for_sequence(5, open("frost.txt").read().split())

frost_word_5grams

ngrams_for_sequence(2, "condescendences")

ngrams_for_sequence(4, [5, 10, 15, 20, 25, 30])

Counter(ngrams_for_sequence(3, open("genesis.txt").read())).most_common(20)

src = "condescendences"

src += "$" # to indicate the end of the string

model = {}

for i in range(len(src)-2):
    ngram = tuple(src[i:i+2]) # get a slice of length 2 from current position
    next_item = src[i+2] # next item is current index plus two (i.e., right after the slice)
    if ngram not in model: # check if we've already seen this ngram; if not...
        model[ngram] = [] # value for this key is an empty list
    model[ngram].append(next_item) # append this next item to the list for this ngram

model

def add_to_model(model, n, seq):
    # make a copy of seq and append None to the end
    seq = list(seq[:]) + [None]
    for i in range(len(seq)-n):
        # tuple because we're using it as a dict key!
        gram = tuple(seq[i:i+n])
        next_item = seq[i+n]
        if gram not in model:
            model[gram] = []
        model[gram].append(next_item)

def markov_model(n, seq):
    model = {}
    add_to_model(model, n, seq)
    return model

markov_model(2, "condescendences")

genesis_markov_model = markov_model(3, open("genesis.txt").read().split())

genesis_markov_model

genesis_markov_model[('and', 'over', 'the')]

cmodel = markov_model(2, "condescendences")

cmodel

output = "co"

ngram = tuple(output[-2:])

next_item = random.choice(cmodel[ngram])

output += next_item

print(output)

output = "co"

for i in range(100):
    ngram = tuple(output[-2:])
    next_item = random.choice(cmodel[ngram])
    output += next_item

print(output)

output = "co"

for i in range(100):
    ngram = tuple(output[-2:])
    next_item = random.choice(cmodel[ngram])
    if next_item is None:
        break # "break" tells Python to immediately exit the loop, skipping any remaining values
    else:
        output += next_item

print(output)

import random

def gen_from_model(n, model, start=None, max_gen=100):
    if start is None:
        start = random.choice(list(model.keys()))
    output = list(start)
    for i in range(max_gen):
        start = tuple(output[-n:])
        next_item = random.choice(model[start])
        if next_item is None:
            break
        else:
            output.append(next_item)
    return output

gen_from_model(2, cmodel, ('c', 'o'))

''.join(gen_from_model(2, cmodel, ('c', 'o')))

sea_model = markov_model(3, "she sells seashells by the seashore")

for i in range(12):
    print(''.join(gen_from_model(3, sea_model)))

genesis_word_model = markov_model(2, open("genesis.txt").read().split())

generated_words = gen_from_model(2, genesis_word_model, ('In', 'the'))

print(' '.join(generated_words))

generated_words = gen_from_model(2, genesis_word_model, ('In', 'the'), 500)

print(' '.join(generated_words))

def markov_model_from_sequences(n, sequences):
    model = {}
    for item in sequences:
        add_to_model(model, n, item)
    return model

genesis_lines = open("genesis.txt").readlines() # all of the lines from the file

# genesis_lines_words will be a list of lists of words in each line

genesis_lines_words = [line.strip().split() for line in genesis_lines] # strip whitespace and split into words

genesis_lines_model = markov_model_from_sequences(2, genesis_lines_words)

for i in range(10):
    print("verse", i, "-", ' '.join(gen_from_model(2, genesis_lines_model)))

genesis_lines = open("genesis.txt").readlines() # all of the lines from the file

# genesis_lines_words will be a list of lists of words in each line

genesis_lines_words = [line.strip().split() for line in genesis_lines] # strip whitespace and split into words

genesis_starts = [item[:2] for item in genesis_lines_words if len(item) >= 2]

genesis_lines_model = markov_model_from_sequences(2, genesis_lines_words)

for i in range(10):
    start = random.choice(genesis_starts)
    generated = gen_from_model(2, genesis_lines_model, start)
    print("verse", i, "-", ' '.join(generated))

def markov_generate_from_sequences(n, sequences, count, max_gen=100):
    starts = [item[:n] for item in sequences if len(item) >= n]
    model = markov_model_from_sequences(n, sequences)
    return [gen_from_model(n, model, random.choice(starts), max_gen)
            for i in range(count)]

frost_lines = [line.strip() for line in open("frost.txt").readlines()]

for item in markov_generate_from_sequences(5, frost_lines, 20):
    print(''.join(item))

sonnets_words = [line.strip().split() for line in open("sonnets.txt").readlines()]

for item in markov_generate_from_sequences(2, sonnets_words, 14):
    print(' '.join(item))

frost_lines = [line.strip() for line in open("frost.txt").readlines()]

genesis_lines = [line.strip() for line in open("genesis.txt").readlines()]

both_lines = frost_lines + genesis_lines

for item in markov_generate_from_sequences(5, both_lines, 14, max_gen=150):
    print(''.join(item))

def markov_generate_from_lines_in_file(n, filehandle, count, level='char', max_gen=100):
    if level == 'char':
        glue = ''
        sequences = [item.strip() for item in filehandle.readlines()]
    elif level == 'word':
        glue = ' '
        sequences = [item.strip().split() for item in filehandle.readlines()]
    else:
        # any other value would otherwise leave glue and sequences undefined
        raise ValueError("level must be 'char' or 'word'")
    generated = markov_generate_from_sequences(n, sequences, count, max_gen)
    return [glue.join(item) for item in generated]

for item in markov_generate_from_lines_in_file(3, open("sea_rose.txt"), 20, 'char'):
    print(item)

for item in markov_generate_from_lines_in_file(3, open("genesis.txt"), 5, 'word'):
    print(item)
    print("")

| 0.291283 | 0.987092 |

# Introduction to NumPy

The topic is very broad: datasets can come from a wide range of sources and in a wide range of formats, including collections of documents, collections of images, collections of sound clips, collections of numerical measurements, or nearly anything else.

Despite this apparent heterogeneity, it will help us to think of all data fundamentally as arrays of numbers.

For this reason, efficient storage and manipulation of numerical arrays is absolutely fundamental to the process of doing data science.

NumPy (short for *Numerical Python*) provides an efficient interface to store and operate on dense data buffers.

In some ways, NumPy arrays are like Python's built-in ``list`` type, but NumPy arrays provide much more efficient storage and data operations as the arrays grow larger in size.

NumPy arrays form the core of nearly the entire ecosystem of data science tools in Python, so time spent learning to use NumPy effectively will be valuable no matter what aspect of data science interests you.

```

import numpy

numpy.__version__

```

By convention, you'll find that most people in the SciPy/PyData world will import NumPy using ``np`` as an alias:

```

import numpy as np

```
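
As a rough illustration of the earlier comparison between NumPy arrays and Python's built-in ``list`` type, the sketch below doubles a thousand integers both ways. The variable names and the choice of a 64-bit integer dtype are assumptions made for this example only:

```python
import numpy as np

values = list(range(1000))               # plain Python list of ints
arr = np.arange(1000, dtype=np.int64)    # NumPy array with a fixed element type

# A list needs an explicit loop (here, a comprehension) for element-wise math
doubled_list = [v * 2 for v in values]

# An array applies the operation to every element at once
doubled_arr = arr * 2

print(doubled_arr[:5])   # [0 2 4 6 8]
print(arr.nbytes)        # 8000: 1000 elements at 8 bytes each
```

Every element of the array shares one type and sits in a single contiguous buffer, which is what makes the storage compact and the element-wise operation fast as arrays grow.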