modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-02 18:52:31

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 533

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-02 18:52:05

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

lixiangchun/imagenet-swav-resnet50w2

|

lixiangchun

| 2022-10-28T04:13:37Z | 0 | 0 |

tf-keras

|

[

"tf-keras",

"onnx",

"region:us"

] | null | 2022-10-20T04:06:01Z |

```python

import trace_layer2 as models

import torch

x=torch.randn(1, 3, 224, 224)

state_dict = torch.load('swav_imagenet_layer2.pt', map_location='cpu')

model = models.resnet50w2()

model.load_state_dict(state_dict)

model.eval()

feature = model(x)

traced_model = torch.jit.load('traced_swav_imagenet_layer2.pt', map_location='cpu')

traced_model.eval()

feature = traced_model(x)

```

|

agungbesti/house

|

agungbesti

| 2022-10-28T02:59:23Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2022-10-28T02:53:02Z |

---

title: Protas

emoji: 🏃

colorFrom: yellow

colorTo: pink

sdk: gradio

app_file: app.py

pinned: false

license: apache-2.0

---

# Configuration

`title`: _string_

Display title for the Space

`emoji`: _string_

Space emoji (emoji-only character allowed)

`colorFrom`: _string_

Color for Thumbnail gradient (red, yellow, green, blue, indigo, purple, pink, gray)

`colorTo`: _string_

Color for Thumbnail gradient (red, yellow, green, blue, indigo, purple, pink, gray)

`sdk`: _string_

Can be either `gradio` or `streamlit`

`sdk_version` : _string_

Only applicable for `streamlit` SDK.

See [doc](https://hf.co/docs/hub/spaces) for more info on supported versions.

`app_file`: _string_

Path to your main application file (which contains either `gradio` or `streamlit` Python code).

Path is relative to the root of the repository.

`pinned`: _boolean_

Whether the Space stays on top of your list.

|

helloway/simple

|

helloway

| 2022-10-28T02:00:19Z | 0 | 0 | null |

[

"audio-classification",

"license:apache-2.0",

"region:us"

] |

audio-classification

| 2022-10-28T01:51:37Z |

---

license: apache-2.0

tags:

- audio-classification

---

|

Kolgrima/Luna

|

Kolgrima

| 2022-10-28T01:39:20Z | 0 | 0 | null |

[

"license:openrail",

"region:us"

] | null | 2022-10-27T23:48:49Z |

---

license: openrail

---

## Model of Evanna Lynch as Luna Lovegood

If you've ever tried to create an image of Luna Lovegood from the movies, you'll have noticed Stable Diffusion is not good at this! That's where this model comes in.

This has been trained on 38 images of Evanna Lynch as Luna Lovegood.

## Usage

Simply use the keyword "**Luna**" anywhere in your prompt.

### Output Examples

Each image has embedded data that can be read from the PNG info tab in Stable diffusion Web UI.

|

skang/distilbert-base-uncased-finetuned-imdb

|

skang

| 2022-10-28T01:38:56Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"fill-mask",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-28T01:30:51Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: distilbert-base-uncased-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6627

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.76 | 1.0 | 157 | 0.6640 |

| 0.688 | 2.0 | 314 | 0.6581 |

| 0.6768 | 3.0 | 471 | 0.6604 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

ByungjunKim/distilbert-base-uncased-finetuned-imdb

|

ByungjunKim

| 2022-10-28T01:36:12Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"fill-mask",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-28T01:27:52Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: distilbert-base-uncased-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6627

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.76 | 1.0 | 157 | 0.6640 |

| 0.688 | 2.0 | 314 | 0.6581 |

| 0.6768 | 3.0 | 471 | 0.6604 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

huggingtweets/revmaxxing

|

huggingtweets

| 2022-10-28T01:23:51Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-10-27T23:49:45Z |

---

language: en

thumbnail: https://github.com/borisdayma/huggingtweets/blob/master/img/logo.png?raw=true

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1578729528695963649/mmiLKGp1_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Rev 🇷🇺 🌾 🛞</div>

<div style="text-align: center; font-size: 14px;">@revmaxxing</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Rev 🇷🇺 🌾 🛞.

| Data | Rev 🇷🇺 🌾 🛞 |

| --- | --- |

| Tweets downloaded | 3097 |

| Retweets | 241 |

| Short tweets | 416 |

| Tweets kept | 2440 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1nfmh3no/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @revmaxxing's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/zust2rmi) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/zust2rmi/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/revmaxxing')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Rogerooo/bordaloii

|

Rogerooo

| 2022-10-28T00:57:28Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2022-10-28T00:49:17Z |

---

license: creativeml-openrail-m

---

|

OpenMatch/cocodr-large-msmarco-idro-only

|

OpenMatch

| 2022-10-28T00:45:35Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-28T00:42:33Z |

---

license: mit

---

This model has been pretrained on MS MARCO corpus and then finetuned on MS MARCO training data with implicit distributionally robust optimization (iDRO), following the approach described in the paper **COCO-DR: Combating Distribution Shifts in Zero-Shot Dense Retrieval with Contrastive and Distributionally Robust Learning**. The associated GitHub repository is available here https://github.com/OpenMatch/COCO-DR.

This model is trained with BERT-large as the backbone with 335M hyperparameters.

|

caffsean/bert-base-cased-deep-ritmo

|

caffsean

| 2022-10-28T00:17:00Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-27T03:19:50Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bert-base-cased-deep-ritmo

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-deep-ritmo

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5837

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 4.0463 | 1.0 | 1875 | 3.7428 |

| 3.3393 | 2.0 | 3750 | 3.0259 |

| 2.7435 | 3.0 | 5625 | 2.5837 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

allenai/scirepeval_adapters_rgn

|

allenai

| 2022-10-28T00:05:08Z | 6 | 0 |

adapter-transformers

|

[

"adapter-transformers",

"adapterhub:scirepeval/regression",

"bert",

"dataset:allenai/scirepeval",

"region:us"

] | null | 2022-10-28T00:04:59Z |

---

tags:

- adapterhub:scirepeval/regression

- adapter-transformers

- bert

datasets:

- allenai/scirepeval

---

# Adapter `allenai/scirepeval_adapters_rgn` for malteos/scincl

An [adapter](https://adapterhub.ml) for the `malteos/scincl` model that was trained on the [scirepeval/regression](https://adapterhub.ml/explore/scirepeval/regression/) dataset.

This adapter was created for usage with the **[adapter-transformers](https://github.com/Adapter-Hub/adapter-transformers)** library.

## Usage

First, install `adapter-transformers`:

```

pip install -U adapter-transformers

```

_Note: adapter-transformers is a fork of transformers that acts as a drop-in replacement with adapter support. [More](https://docs.adapterhub.ml/installation.html)_

Now, the adapter can be loaded and activated like this:

```python

from transformers import AutoAdapterModel

model = AutoAdapterModel.from_pretrained("malteos/scincl")

adapter_name = model.load_adapter("allenai/scirepeval_adapters_rgn", source="hf", set_active=True)

```

## Architecture & Training

<!-- Add some description here -->

## Evaluation results

<!-- Add some description here -->

## Citation

<!-- Add some description here -->

|

OpenMatch/condenser-large

|

OpenMatch

| 2022-10-28T00:04:23Z | 25 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-27T23:44:05Z |

---

license: mit

---

This model has been pretrained on BookCorpus and English Wikipedia following the approach described in the paper **Condenser: a Pre-training Architecture for Dense Retrieval**. The model can be used to reproduce the experimental results within the GitHub repository https://github.com/OpenMatch/COCO-DR.

This model is trained with BERT-large as the backbone with 335M hyperparameters.

|

OpenMatch/co-condenser-large

|

OpenMatch

| 2022-10-28T00:03:42Z | 33 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-27T23:56:37Z |

---

license: mit

---

This model has been pretrained on MS MARCO following the approach described in the paper **Unsupervised Corpus Aware Language Model Pre-training for Dense Passage Retrieval**. The model can be used to reproduce the experimental results within the GitHub repository https://github.com/OpenMatch/COCO-DR.

This model is trained with BERT-large as the backbone with 335M hyperparameters.

|

allenai/scirepeval_adapters_clf

|

allenai

| 2022-10-28T00:03:35Z | 14 | 0 |

adapter-transformers

|

[

"adapter-transformers",

"adapterhub:scirepeval/classification",

"bert",

"dataset:allenai/scirepeval",

"region:us"

] | null | 2022-10-28T00:03:26Z |

---

tags:

- adapterhub:scirepeval/classification

- adapter-transformers

- bert

datasets:

- allenai/scirepeval

---

# Adapter `allenai/scirepeval_adapters_clf` for malteos/scincl

An [adapter](https://adapterhub.ml) for the `malteos/scincl` model that was trained on the [scirepeval/classification](https://adapterhub.ml/explore/scirepeval/classification/) dataset.

This adapter was created for usage with the **[adapter-transformers](https://github.com/Adapter-Hub/adapter-transformers)** library.

## Usage

First, install `adapter-transformers`:

```

pip install -U adapter-transformers

```

_Note: adapter-transformers is a fork of transformers that acts as a drop-in replacement with adapter support. [More](https://docs.adapterhub.ml/installation.html)_

Now, the adapter can be loaded and activated like this:

```python

from transformers import AutoAdapterModel

model = AutoAdapterModel.from_pretrained("malteos/scincl")

adapter_name = model.load_adapter("allenai/scirepeval_adapters_clf", source="hf", set_active=True)

```

## Architecture & Training

<!-- Add some description here -->

## Evaluation results

<!-- Add some description here -->

## Citation

<!-- Add some description here -->

|

rajistics/setfit-model

|

rajistics

| 2022-10-27T23:47:04Z | 2 | 1 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-10-27T23:46:48Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 40 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 40,

"warmup_steps": 4,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

ViktorDo/SciBERT-POWO_Climber_Finetuned

|

ViktorDo

| 2022-10-27T22:39:38Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-10-27T21:19:57Z |

---

tags:

- generated_from_trainer

model-index:

- name: SciBERT-POWO_Climber_Finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SciBERT-POWO_Climber_Finetuned

This model is a fine-tuned version of [allenai/scibert_scivocab_uncased](https://huggingface.co/allenai/scibert_scivocab_uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1086

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.1033 | 1.0 | 2133 | 0.1151 |

| 0.0853 | 2.0 | 4266 | 0.1058 |

| 0.0792 | 3.0 | 6399 | 0.1086 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

JamesH/Translation_en_to_fr_project

|

JamesH

| 2022-10-27T21:52:09Z | 4 | 1 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"translation",

"en",

"fr",

"dataset:JamesH/autotrain-data-second-project-en2fr",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

translation

| 2022-10-27T19:57:24Z |

---

tags:

- autotrain

- translation

language:

- en

- fr

datasets:

- JamesH/autotrain-data-second-project-en2fr

co2_eq_emissions:

emissions: 0.6863820434350988

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1907464829

- CO2 Emissions (in grams): 0.6864

## Validation Metrics

- Loss: 1.117

- SacreBLEU: 16.546

- Gen len: 14.511

|

wavymulder/zelda-diffusion-HN

|

wavymulder

| 2022-10-27T21:32:27Z | 0 | 18 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2022-10-25T01:06:42Z |

---

license: creativeml-openrail-m

---

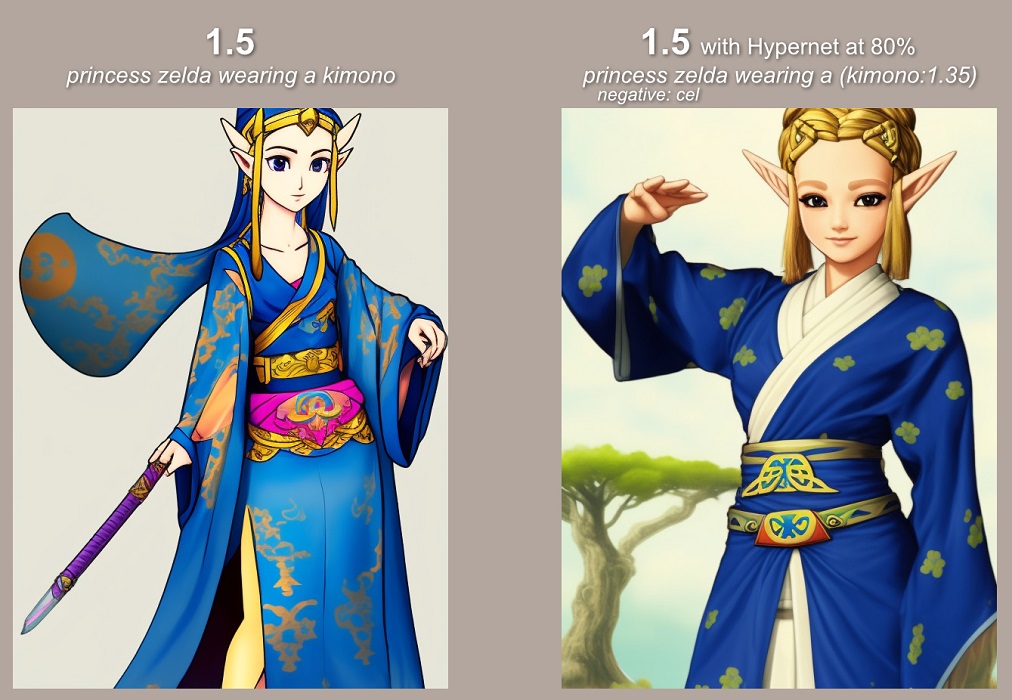

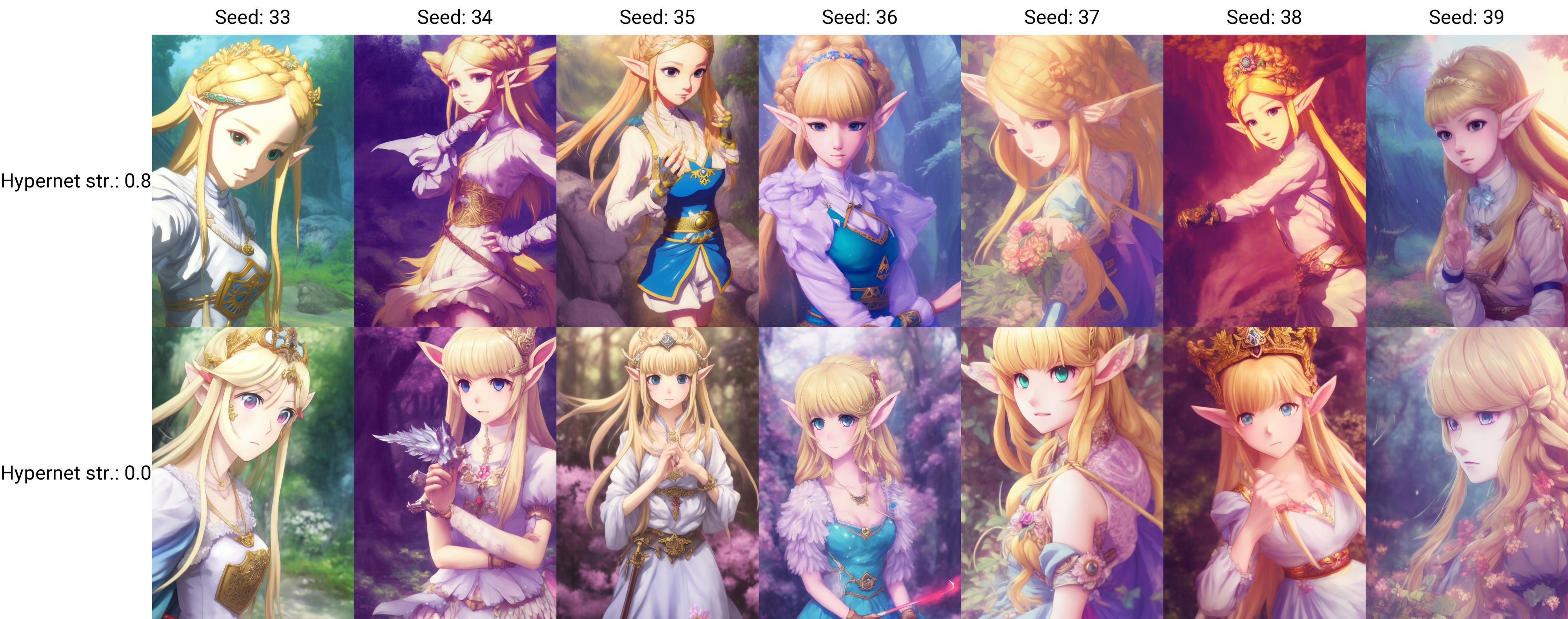

**Zelda Diffusion - Hypernet**

[*DOWNLOAD LINK*](https://huggingface.co/wavymulder/zelda-diffusion-HN/resolve/main/zeldaBOTW.pt) - This is a hypernet trained on screenshots of Princess Zelda from BOTW

Here's a random batch of 9 images to show the hypernet uncherrypicked. The prompt is "anime princess zelda volumetric lighting" and the negative prompt is "cel render 3d animation"

and [a link to more](https://i.imgur.com/NixQGid.jpg)

---

Tips:

You'll want to adjust the hypernetwork strength depending on what style you're trying to put Zelda into. I usually keep it at 80% strength and go from there.

This hypernetwork helps make Zelda look more like the BOTW Zelda. You still have to prompt for what you want. Extra weight might sometimes need to be applied to get her to wear costumes. You may also have luck putting her name closer to the end of the prompt than you normally would.

Since the hypernetwork is trained on screenshots from the videogame, it imparts a heavy Cel Shading effect [(Example here)](https://huggingface.co/wavymulder/zelda-diffusion-HN/resolve/main/00108-920950.png). You can minimize this by negative prompting "cel". I believe every example posted here uses this.

The hypernet can be used either with very simple prompting, as shown above, or a prompt of your favourite artists.

You can put this hypernet on top of different models to create some really cool Zeldas, such as this one made with [Nitrosocke](https://huggingface.co/nitrosocke)'s [Modern Disney Model](https://huggingface.co/nitrosocke/modern-disney-diffusion).

|

RUCAIBox/elmer

|

RUCAIBox

| 2022-10-27T21:30:13Z | 4 | 4 |

transformers

|

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"text-generation",

"non-autoregressive-generation",

"early-exit",

"en",

"arxiv:2210.13304",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-10-27T21:14:19Z |

---

license: apache-2.0

language:

- en

tags:

- text-generation

- non-autoregressive-generation

- early-exit

---

# ELMER

The ELMER model was proposed in [**ELMER: A Non-Autoregressive Pre-trained Language Model for Efficient and Effective Text Generation**](https://arxiv.org/abs/2210.13304) by Junyi Li, Tianyi Tang, Wayne Xin Zhao, Jian-Yun Nie and Ji-Rong Wen.

The detailed information and instructions can be found [https://github.com/RUCAIBox/ELMER](https://github.com/RUCAIBox/ELMER).

## Model Description

ELMER is an efficient and effective PLM for NAR text generation, which generates tokens at different layers by leveraging the early exit technique.

The architecture of ELMER is a variant of the standard Transformer encoder-decoder and poses three technical contributions:

1. For decoder, we replace the original masked multi-head attention with bi-directional multi-head attention akin to the encoder. Therefore, ELMER dynamically adjusts the output length by emitting an end token "[EOS]" at any position.

2. Leveraging early exit, ELMER injects "off-ramps" at each decoder layer, which make predictions with intermediate hidden states. If ELMER exits at the $l$-th layer, we copy the $l$-th hidden states to the subsequent layers.

3. ELMER utilizes a novel pre-training objective, layer permutation language modeling (LPLM), to pre-train on the large-scale corpus. LPLM permutes the exit layer for each token from 1 to the maximum layer $L$.

## Examples

To fine-tune ELMER on non-autoregressive text generation:

```python

>>> from transformers import BartTokenizer as ElmerTokenizer

>>> from transformers import BartForConditionalGeneration as ElmerForConditionalGeneration

>>> tokenizer = ElmerTokenizer.from_pretrained("RUCAIBox/elmer")

>>> model = ElmerForConditionalGeneration.from_pretrained("RUCAIBox/elmer")

```

## Citation

```bibtex

@article{lijunyi2022elmer,

title={ELMER: A Non-Autoregressive Pre-trained Language Model for Efficient and Effective Text Generation},

author={Li, Junyi and Tang, Tianyi and Zhao, Wayne Xin and Nie, Jian-Yun and Wen, Ji-Rong},

booktitle={EMNLP 2022},

year={2022}

}

```

|

OpenMatch/cocodr-base-msmarco-idro-only

|

OpenMatch

| 2022-10-27T21:26:19Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"feature-extraction",

"license:mit",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-10-27T21:21:56Z |

---

license: mit

---

This model has been pretrained on MS MARCO corpus and then finetuned on MS MARCO training data with implicit distributionally robust optimization (iDRO), following the approach described in the paper **COCO-DR: Combating Distribution Shifts in Zero-Shot Dense Retrieval with Contrastive and Distributionally Robust Learning**. The associated GitHub repository is available here https://github.com/OpenMatch/COCO-DR.

This model is trained with BERT-base as the backbone with 110M hyperparameters.

|

Phantasion/phaninc

|

Phantasion

| 2022-10-27T21:03:33Z | 0 | 1 | null |

[

"region:us"

] | null | 2022-10-27T20:18:49Z |

Phaninc is a model based on my cyberpunk tumblr blog phantasyinc. One thing that has frustrated me with AI art is the generic quality of prompting for cyberpunk imagery, so I went through my blog and curated a dataset for 25 new keywords to get the results I desire. I have been heavily inspired by the work of nousr on robodiffusion whose model gave me a lot of results I love.

I have utilised the new FAST dreambooth method, and run it at 20000 steps on 684 images (around 800 steps per concept). At the time of writing the model is still training but I thought I would use my training time to summarise my intent with each keyword. I expect there to be problems and some of my experiments to not pan out so well, but I thought I would share.

*Post training update: the entire model is contaminated, most prompts are gonna churn out cyberpunk work, but the keywords are still good against one another and work as desired, and the base model has had some interesting lessons taught to it.*

**phanborg**

This set was the first to be tested, it is a combination of portraits of cyborgs much like phancyborg and phandroid. The difference between the three is that phanborg uses a combination of images with the face covered and uncovered by machinery, while phancyborg uses only uncovered cyborgs and phandroid only covered cyborgs. The images used in all three are entirely different so that I can play with a diversity of trained features with my keywords.

**phanbrutal**

Images I consider a combination of cyberpunk and brutalism.

**phanbw**

This one is one of my more experimental keywords, utilising monochrome cyberpunk images I find quite striking in black and white. However apart from sticking to a cyberpunk theme, there is no consistent subject matter and may just end up being a generic monochrome keyword.

**phancircle**

another experimental keyword, this keyword utilises a selection of architectural, textural and 3d design images with circles and spheres as a recurring motif. My hope is this keyword will help provide a cyberpunk texture to other prompts with a circular motif.

**phancity**

Bleak futuristic cityscapes, but like phanbw this experiment may fail due to being too varied subject matter.

**phanconcrete**

concrete, images of architecture with mostly concrete finishes, might be overkill with phanbrutal above, but I like that there will still be nuanced differences to play with.

**phanconsole**

A command centre needs buttons to beep and switches to boop, this keyword is all about screens and buttons.

**phancorridor**

images of spaceship corridors and facilities to provide a more futuristic interior design.

**phancyborg**

phancyborg is an image selection of cyborgs with some or all of a human face uncovered.

**phandraw**

a selection focused on drawn cyberpunk artwork with bright neon colors and defined linework

**phandroid**

this is where I pay most homage to nousrs robodiffusion, using only cyborgs with their faces concealed or just plain humanoid robots

**phandustrial**

futuristic ndustrial imagery of pipes wires and messes of cables.

**phanfashion**

trying to get that urbanwear hoodie look but with some variations.

**phanfem**

a series of cyberpunk women

**phanglitch**

Glitch art I had reblogged on the blog with a cyberpunk feel. Quite colorful.

**phangrunge**

Dilapidated dens for the scum of the city. Hopefully will add a good dose of urban decay to your prompt.

**phanlogo**

Sleek graphic design, typography and logos.

**phanmachine**

Built with unclear subject matter, phanmachine focuses on the details of futuristic shiny machinery in hopes of it coming out as a style or texture that can be applied in prompts.

**phanmecha**

The three cyborg keywords are sleek and humanoid, phanmecha focuses more on creating unique robot bodytypes.

**phanmilitary**

Future soldiers, man and machine. Likely to attach a gun to your prompt's character.

**phanneon**

Bright neon lights taking over the scene, this feature is what annoyed me with a lot of cyberpunk prompts in ai models. Overall I have it pretty isolated to this keyword, if you want those futuristic glowies.

**phanrooms**

Totally seperate to the rest of the theming, phanrooms is trained on backrooms and liminal space imagery. Which like cyberpunk is of high visual interest to me, and something the base model can sometimes struggle with.

**phansterile**

This is like cyberpunk cleancore, lots of white, very clean, clinical theming.

**phantex**

I don't know why latex outfits are cyberpunk but they just are, these images were selected for the accessorising on top of just the latex outfits.

**phanture**

Abstract textures that were cyberpunk enough for me to put on my blog.

|

motmono/ppo-LunarLander-v2

|

motmono

| 2022-10-27T20:39:35Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-10-27T20:39:09Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 272.74 +/- 15.00

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

andrewzhang505/sf2-lunar-lander

|

andrewzhang505

| 2022-10-27T19:51:07Z | 2 | 0 |

sample-factory

|

[

"sample-factory",

"tensorboard",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-10-27T19:50:47Z |

---

library_name: sample-factory

tags:

- deep-reinforcement-learning

- reinforcement-learning

- sample-factory

model-index:

- name: APPO

results:

- metrics:

- type: mean_reward

value: 126.58 +/- 137.36

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLanderContinuous-v2

type: LunarLanderContinuous-v2

---

A(n) **APPO** model trained on the **LunarLanderContinuous-v2** environment.

This model was trained using Sample Factory 2.0: https://github.com/alex-petrenko/sample-factory

|

Aitor/testpyramidsrnd

|

Aitor

| 2022-10-27T19:45:32Z | 1 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] |

reinforcement-learning

| 2022-10-27T19:45:24Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser:**.

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: Aitor/testpyramidsrnd

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

PraveenKishore/Reinforce-CartPole-v1

|

PraveenKishore

| 2022-10-27T18:59:10Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-10-27T18:50:31Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 94.10 +/- 36.62

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

Eleusinian/haladas

|

Eleusinian

| 2022-10-27T18:07:35Z | 0 | 0 | null |

[

"license:unknown",

"region:us"

] | null | 2022-10-27T18:00:03Z |

---

license: unknown

---

<div style='display: flex; flex-wrap: wrap; column-gap: 0.75rem;'>

<img src='https://s3.amazonaws.com/moonup/production/uploads/1666893412370-noauth.jpeg' width='400' height='400'>

<img src='https://s3.amazonaws.com/moonup/production/uploads/1666893411703-noauth.jpeg' width='400' height='400'>

<img src='https://s3.amazonaws.com/moonup/production/uploads/1666893411826-noauth.jpeg' width='400' height='400'>

<img src='https://s3.amazonaws.com/moonup/production/uploads/1666893411866-noauth.jpeg' width='400' height='400'>

</div>

|

vict0rsch/climateGAN

|

vict0rsch

| 2022-10-27T17:49:52Z | 0 | 2 | null |

[

"Climate Change",

"GAN",

"Domain Adaptation",

"en",

"license:gpl-3.0",

"region:us"

] | null | 2022-10-24T13:17:28Z |

---

language:

- en

tags:

- Climate Change

- GAN

- Domain Adaptation

license: gpl-3.0

title: ClimateGAN

emoji: 🌎

colorFrom: blue

colorTo: green

sdk: gradio

sdk_version: 3.6

app_file: app.py

inference: true

pinned: true

---

# ClimateGAN: Raising Awareness about Climate Change by Generating Images of Floods

This repository contains the code used to train the model presented in our **[paper](https://openreview.net/forum?id=EZNOb_uNpJk)**.

It is not simply a presentation repository but the code we have used over the past 30 months to come to our final architecture. As such, you will find many scripts, classes, blocks and options which we actively use for our own development purposes but are not directly relevant to reproduce results or use pretrained weights.

If you use this code, data or pre-trained weights, please cite our ICLR 2022 paper:

```

@inproceedings{schmidt2022climategan,

title = {Climate{GAN}: Raising Climate Change Awareness by Generating Images of Floods},

author = {Victor Schmidt and Alexandra Luccioni and M{\'e}lisande Teng and Tianyu Zhang and Alexia Reynaud and Sunand Raghupathi and Gautier Cosne and Adrien Juraver and Vahe Vardanyan and Alex Hern{\'a}ndez-Garc{\'\i}a and Yoshua Bengio},

booktitle = {International Conference on Learning Representations},

year = {2022},

url = {https://openreview.net/forum?id=EZNOb_uNpJk}

}

```

## Using pre-trained weights from this Huggingface Space and Stable Diffusion In-painting

<p align="center">

<strong>Huggingface ClimateGAN Space:</strong>

<a href="https://huggingface.co/spaces/vict0rsch/climateGAN" target="_blank">

<img src="https://huggingface.co/vict0rsch/climateGAN/resolve/main/images/hf-cg.png">

</a>

</p>

1. Download code and model

```bash

git lfs install

git clone https://huggingface.co/vict0rsch/climateGAN

git lfs pull # optional if you don't have the weights

```

2. Install requirements

```

pip install requirements.txt

```

3. **Enable Stable Diffusion Inpainting** by visiting the model's card: https://huggingface.co/runwayml/stable-diffusion-inpainting **and** running `$ huggingface-cli login`

4. Run `$ python climategan_wrapper.py help` for usage instructions on how to infer on a folder's images.

5. Run `$ python app.py` to see the Gradio app.

1. To use Google Street View you'll need an API key and set the `GMAPS_API_KEY` environment variable.

2. To use Stable Diffusion if you can't run `$ huggingface-cli login` (on a Huggingface Space for instance) set the `HF_AUTH_TOKEN` env variable to a [Huggingface authorization token](https://huggingface.co/settings/tokens)

3. To change the UI without model overhead, set the `CG_DEV_MODE` environment variable to `true`.

For a more fine-grained control on ClimateGAN's inferences, refer to `apply_events.py` (does not support Stable Diffusion painter)

**Note:** you don't have control on the prompt by design because I disabled the safety checker. Fork this space/repo and do it yourself if you really need to change the prompt. At least [open a discussion](https://huggingface.co/spaces/vict0rsch/climateGAN/discussions).

## Using pre-trained weights from source

In the paper, we present ClimateGAN as a solution to produce images of floods. It can actually do **more**:

* reusing the segmentation map, we are able to isolate the sky, turn it red and in a few more steps create an image resembling the consequences of a wildfire on a neighboring area, similarly to the [California wildfires](https://www.google.com/search?q=california+wildfires+red+sky&source=lnms&tbm=isch&sa=X&ved=2ahUKEwisws-hx7zxAhXxyYUKHQyKBUwQ_AUoAXoECAEQBA&biw=1680&bih=917&dpr=2).

* reusing the depth map, we can simulate the consequences of a smog event on an image, scaling the intensity of the filter by the distance of an object to the camera, as per [HazeRD](http://www2.ece.rochester.edu/~gsharma/papers/Zhang_ICIP2017_HazeRD.pdf)

In this section we'll explain how to produce the `Painted Input` along with the Smog and Wildfire outputs of a pre-trained ClimateGAN model.

### Installation

This repository and associated model have been developed using Python 3.8.2 and **Pytorch 1.7.0**.

```bash

$ git clone git@github.com:cc-ai/climategan.git

$ cd climategan

$ pip install -r requirements-3.8.2.txt # or `requirements-any.txt` for other Python versions (not tested but expected to be fine)

```

Our pipeline uses [comet.ml](https://comet.ml) to log images. You don't *have* to use their services but we recommend you do as images can be uploaded on your workspace instead of being written to disk.

If you want to use Comet, make sure you have the [appropriate configuration in place (API key and workspace at least)](https://www.comet.ml/docs/python-sdk/advanced/#non-interactive-setup)

### Inference

1. Download and unzip the weights [from this link](https://drive.google.com/u/0/uc?id=18OCUIy7JQ2Ow_-cC5xn_hhDn-Bp45N1K&export=download) (checkout [`gdown`](https://github.com/wkentaro/gdown) for a commandline interface) and put them in `config/`

```

$ pip install gdown

$ mkdir config

$ cd config

$ gdown https://drive.google.com/u/0/uc?id=18OCUIy7JQ2Ow_-cC5xn_hhDn-Bp45N1K

$ unzip release-github-v1.zip

$ cd ..

```

2. Run from the repo's root:

1. With `comet`:

```bash

python apply_events.py --batch_size 4 --half --images_paths path/to/a/folder --resume_path config/model/masker --upload

```

2. Without `comet` (and shortened args compared to the previous example):

```bash

python apply_events.py -b 4 --half -i path/to/a/folder -r config/model/masker --output_path path/to/a/folder

```

The `apply_events.py` script has many options, for instance to use a different output size than the default systematic `640 x 640` pixels, look at the code or `python apply_events.py --help`.

## Training from scratch

ClimateGAN is split in two main components: the Masker producing a binary mask of where water should go and the Painter generating water within this mask given an initial image's context.

### Configuration

The code is structured to use `shared/trainer/defaults.yaml` as default configuration. There are 2 ways of overriding those for your purposes (without altering that file):

1. By providing an alternative configuration as command line argument `config=path/to/config.yaml`

1. The code will first load `shared/trainer/defaults.yaml`

2. *then* update the resulting dictionary with values read in the provided `config` argument.

3. The folder `config/` is NOT tracked by git so you would typically put them there

2. By overwriting specific arguments from the command-line like `python train.py data.loaders.batch_size=8`

### Data

#### Masker

##### Real Images

Because of copyrights issues we are not able to share the real images scrapped from the internet. You would have to do that yourself. In the `yaml` config file, the code expects a key pointing to a `json` file like `data.files.<train or val>.r: <path/to/a/json/file>`. This `json` file should be a list of dictionaries with tasks as keys and files as values. Example:

```json

[

{

"x": "path/to/a/real/image",

"s": "path/to/a/segmentation_map",

"d": "path/to/a/depth_map"

},

...

]

```

Following the [ADVENT](https://github.com/valeoai/ADVENT) procedure, only `x` should be required. We use `s` and `d` inferred from pre-trained models (DeepLab v3+ and MiDAS) to use those pseudo-labels in the first epochs of training (see `pseudo:` in the config file)

##### Simulated Images

We share snapshots of the Virtual World we created in the [Mila-Simulated-Flood dataset](). You can download and unzip one water-level and then produce json files similar to that of the real data, with an additional key `"m": "path/to/a/ground_truth_sim_mask"`. Lastly, edit the config file: `data.files.<train or val>.s: <path/to/a/json/file>`

#### Painter

The painter expects input images and binary masks to train using the [GauGAN](https://github.com/NVlabs/SPADE) training procedure. Unfortunately we cannot share openly the collected data, but similarly as for the Masker's real data you would point to the data using a `json` file as:

```json

[

{

"x": "path/to/a/real/image",

"m": "path/to/a/water_mask",

},

...

]

```

And put those files as values to `data.files.<train or val>.rf: <path/to/a/json/file>` in the configuration.

## Coding conventions

* Tasks

* `x` is an input image, in [-1, 1]

* `s` is a segmentation target with `long` classes

* `d` is a depth map target in R, may be actually `log(depth)` or `1/depth`

* `m` is a binary mask with 1s where water is/should be

* Domains

* `r` is the *real* domain for the masker. Input images are real pictures of urban/suburban/rural areas

* `s` is the *simulated* domain for the masker. Input images are taken from our Unity world

* `rf` is the *real flooded* domain for the painter. Training images are pairs `(x, m)` of flooded scenes for which the water should be reconstructed, in the validation data input images are not flooded and we provide a manually labeled mask `m`

* `kitti` is a special `s` domain to pre-train the masker on [Virtual Kitti 2](https://europe.naverlabs.com/research/computer-vision/proxy-virtual-worlds-vkitti-2/)

* it alters the `trainer.loaders` dict to select relevant data sources from `trainer.all_loaders` in `trainer.switch_data()`. The rest of the code is identical.

* Flow

* This describes the call stack for the trainers standard training procedure

* `train()`

* `run_epoch()`

* `update_G()`

* `zero_grad(G)`

* `get_G_loss()`

* `get_masker_loss()`

* `masker_m_loss()` -> masking loss

* `masker_s_loss()` -> segmentation loss

* `masker_d_loss()` -> depth estimation loss

* `get_painter_loss()` -> painter's loss

* `g_loss.backward()`

* `g_opt_step()`

* `update_D()`

* `zero_grad(D)`

* `get_D_loss()`

* painter's disc losses

* `masker_m_loss()` -> masking AdvEnt disc loss

* `masker_s_loss()` -> segmentation AdvEnt disc loss

* `d_loss.backward()`

* `d_opt_step()`

* `update_learning_rates()` -> update learning rates according to schedules defined in `opts.gen.opt` and `opts.dis.opt`

* `run_validation()`

* compute val losses

* `eval_images()` -> compute metrics

* `log_comet_images()` -> compute and upload inferences

* `save()`

|

Houryy/Houry

|

Houryy

| 2022-10-27T16:27:08Z | 0 | 0 | null |

[

"license:bigscience-openrail-m",

"region:us"

] | null | 2022-10-27T16:27:08Z |

---

license: bigscience-openrail-m

---

|

hagerty7/recyclable-materials-classification

|

hagerty7

| 2022-10-27T15:54:32Z | 42 | 0 |

transformers

|

[

"transformers",

"pytorch",

"vit",

"image-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-10-24T15:10:05Z |

ViT for Recyclable Material Classification

|

mgb-dx-meetup/distilbert-multilingual-finetuned-sentiment

|

mgb-dx-meetup

| 2022-10-27T15:43:10Z | 100 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"text-classification",

"unk",

"dataset:lewtun/autotrain-data-mgb-product-reviews-mbert",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-10-27T15:34:22Z |

---

tags:

- autotrain

- text-classification

language:

- unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- lewtun/autotrain-data-mgb-product-reviews-mbert

co2_eq_emissions:

emissions: 5.523107849339405

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1904564767

- CO2 Emissions (in grams): 5.5231

## Validation Metrics

- Loss: 1.135

- Accuracy: 0.514

- Macro F1: 0.504

- Micro F1: 0.514

- Weighted F1: 0.505

- Macro Precision: 0.506

- Micro Precision: 0.514

- Weighted Precision: 0.507

- Macro Recall: 0.513

- Micro Recall: 0.514

- Weighted Recall: 0.514

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/lewtun/autotrain-mgb-product-reviews-mbert-1904564767

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("lewtun/autotrain-mgb-product-reviews-mbert-1904564767", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("lewtun/autotrain-mgb-product-reviews-mbert-1904564767", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

mgb-dx-meetup/xlm-roberta-finetuned-sentiment

|

mgb-dx-meetup

| 2022-10-27T15:37:04Z | 102 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"text-classification",

"unk",

"dataset:lewtun/autotrain-data-mgb-product-reviews-xlm-r",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-10-27T15:17:01Z |

---

tags:

- autotrain

- text-classification

language:

- unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- lewtun/autotrain-data-mgb-product-reviews-xlm-r

co2_eq_emissions:

emissions: 19.116414139555882

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1904264758

- CO2 Emissions (in grams): 19.1164

## Validation Metrics

- Loss: 1.021

- Accuracy: 0.563

- Macro F1: 0.555

- Micro F1: 0.563

- Weighted F1: 0.556

- Macro Precision: 0.555

- Micro Precision: 0.563

- Weighted Precision: 0.556

- Macro Recall: 0.562

- Micro Recall: 0.563

- Weighted Recall: 0.563

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/lewtun/autotrain-mgb-product-reviews-xlm-r-1904264758

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("lewtun/autotrain-mgb-product-reviews-xlm-r-1904264758", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("lewtun/autotrain-mgb-product-reviews-xlm-r-1904264758", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

Sennodipoi/LayoutLMv3-FUNSD-ft

|

Sennodipoi

| 2022-10-27T15:29:16Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"layoutlmv3",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-23T08:14:07Z |

LayoutLMv3 fine-tuned on the FUNSD dataset. Code and results are available at the official GitHub repository of my [Master Degree thesis ](https://github.com/AleRosae/thesis-layoutlm).

Results obtained using seqeval in strict mode:

| | Precision | Recall | F1-score | Variance (F1) |

|--------------|-----------|--------|----------|---------------|

| Answer | 0.90 | 0.91 | 0.90 | 3e-5 |

| Header | 0.61 | 0.66 | 0.63 | 4e-4 |

| Question | 0.88 | 0.87 | 0.88 | 1e-4 |

| Micro avg | 0.87 | 0.88 | 0.87 | 3e-5 |

| Macro avg | 0.79 | 0.82 | 0.80 | 3e-5 |

| Weighted avg | 0.87 | 0.88 | 0.87 | 3e-5 |

|

Sennodipoi/LayoutLMv1-FUNSD-ft

|

Sennodipoi

| 2022-10-27T15:27:32Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"layoutlm",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-23T08:10:54Z |

LayoutLMv1 fine-tuned on the FUNSD dataset. Code and results are available at the official GitHub repository of my [Master Degree thesis ](https://github.com/AleRosae/thesis-layoutlm).

Results obtained using seqeval in strict mode:

| | Precision | Recall | F1-score | Variance (F1) |

|--------------|-----------|--------|----------|---------------|

| ANSWER | 0.80 | 0.78 | 0.80 | 1e-4 |

| HEADER | 0.62 | 0.47 | 0.53 | 2e-4 |

| QUESTION | 0.85 | 0.71 | 0.83 | 3e-5 |

| Micro avg | 0.83 | 0.77 | 0.81 | 1e-4 |

| Macro avg | 0.77 | 0.56 | 0.72 | 3e-5 |

| Weighted avg | 0.83 | 0.78 | 0.80 | 1e-4 |

|

Sennodipoi/LayoutLMv3-kleisterNDA

|

Sennodipoi

| 2022-10-27T15:26:00Z | 5 | 1 |

transformers

|

[

"transformers",

"pytorch",

"layoutlmv3",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-24T15:26:45Z |

LayoutLMv3 fine-tuned on the Kleister-NDA dataset. Code (including pre-processing) and results are available at the official GitHub repository of my [Master Degree thesis ](https://github.com/AleRosae/thesis-layoutlm).

Results obtained with seqeval in strict mode:

| | Precision | Recall | F1-score | Variance (F1) |

|----------------|-----------|--------|----------|---------------|

| EFFECTIVE_DATE | 0.92 | 0.99 | 0.95 | 5e-5 |

| JURISDICTION | 0.87 | 0.88 | 0.88 | 8e-6 |

| PARTY | 0.92 | 0.99 | 0.95 | 5e-5 |

| TERM | 1 | 1 | 1 | 0 |

| Micro avg | 0.91 | 0.96 | 0.94 | 2e-5 |

| Macro avg | 0.92 | 0.96 | 0.94 | 3e-7 |

| Weighted avg | 0.91 | 0.96 | 0.94 | 2e-5 |

Since I used the same segmentation strategy of the original paper i.e. using the labels to create segments, the scores are not directly comparable with the other LayoutLM versions.

|

Sennodipoi/LayoutLMv1-kleisterNDA

|

Sennodipoi

| 2022-10-27T15:18:42Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"layoutlm",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-08-24T07:33:25Z |

LayoutLMv1 fine-tuned on the Kleister-NDA dataset. Code (including pre-processing) and results are available at the official GitHub repository of my [Master Degree thesis ](https://github.com/AleRosae/thesis-layoutlm)

Results obtained with seqeval in strict mode:

| | Precision | Recall| F1-score | Variance (F1) |

|--------------------------|--------------------|-----------------|-------------------|------------------------|

| EFFECTIVE\_DATE | 0.87 | 0.51 | 0.64 | 2e-6 |

| JURISDICTION | 0.75 | 0.84 | 0.80 | 4e-7 |

| PARTY | 0.84 | 0.71 | 0.77 | 9e-6 |

| TERM | 0.69 | 0.51 | 0.58 | 1e-3 |

| Micro avg | 0.81 | 0.72 | 0.77 | 2e-6 |

| Macro avg | 0.79 | 0.65 | 0.70 | 9e-5 |

| Weighted avg | 0.82 | 0.73 | 0.76 | 3e-6 |

|

alanakbik/test-push-public

|

alanakbik

| 2022-10-27T15:10:07Z | 3 | 0 |

flair

|

[

"flair",

"pytorch",

"token-classification",

"sequence-tagger-model",

"region:us"

] |

token-classification

| 2022-10-27T15:07:07Z |

---

tags:

- flair

- token-classification

- sequence-tagger-model

---

### Demo: How to use in Flair

Requires:

- **[Flair](https://github.com/flairNLP/flair/)** (`pip install flair`)

```python

from flair.data import Sentence

from flair.models import SequenceTagger

# load tagger

tagger = SequenceTagger.load("alanakbik/test-push-public")

# make example sentence

sentence = Sentence("On September 1st George won 1 dollar while watching Game of Thrones.")

# predict NER tags

tagger.predict(sentence)

# print sentence

print(sentence)

# print predicted NER spans

print('The following NER tags are found:')

# iterate over entities and print

for entity in sentence.get_spans('ner'):

print(entity)

```

|

yubol/bert-finetuned-ner-30

|

yubol

| 2022-10-27T15:03:09Z | 14 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-10-27T13:19:19Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0453

- Precision: 0.9275

- Recall: 0.9492

- F1: 0.9382

- Accuracy: 0.9934

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 407 | 0.0539 | 0.8283 | 0.8758 | 0.8514 | 0.9866 |

| 0.1524 | 2.0 | 814 | 0.0333 | 0.8931 | 0.9123 | 0.9026 | 0.9915 |

| 0.0381 | 3.0 | 1221 | 0.0345 | 0.8835 | 0.9280 | 0.9052 | 0.9906 |

| 0.0179 | 4.0 | 1628 | 0.0351 | 0.8890 | 0.9361 | 0.9119 | 0.9909 |

| 0.0089 | 5.0 | 2035 | 0.0310 | 0.9102 | 0.9372 | 0.9235 | 0.9924 |

| 0.0089 | 6.0 | 2442 | 0.0344 | 0.9198 | 0.9383 | 0.9289 | 0.9922 |

| 0.0057 | 7.0 | 2849 | 0.0331 | 0.9144 | 0.9448 | 0.9294 | 0.9931 |

| 0.0039 | 8.0 | 3256 | 0.0340 | 0.9144 | 0.9481 | 0.9309 | 0.9928 |

| 0.0027 | 9.0 | 3663 | 0.0423 | 0.9032 | 0.9481 | 0.9251 | 0.9921 |

| 0.0018 | 10.0 | 4070 | 0.0373 | 0.9047 | 0.9507 | 0.9271 | 0.9923 |

| 0.0018 | 11.0 | 4477 | 0.0448 | 0.8932 | 0.9474 | 0.9195 | 0.9910 |

| 0.0014 | 12.0 | 4884 | 0.0380 | 0.9079 | 0.9474 | 0.9272 | 0.9928 |

| 0.0015 | 13.0 | 5291 | 0.0360 | 0.9231 | 0.9474 | 0.9351 | 0.9936 |

| 0.0013 | 14.0 | 5698 | 0.0378 | 0.9243 | 0.9456 | 0.9348 | 0.9935 |

| 0.0013 | 15.0 | 6105 | 0.0414 | 0.9197 | 0.9496 | 0.9344 | 0.9930 |

| 0.0009 | 16.0 | 6512 | 0.0405 | 0.9202 | 0.9478 | 0.9338 | 0.9929 |

| 0.0009 | 17.0 | 6919 | 0.0385 | 0.9305 | 0.9441 | 0.9373 | 0.9933 |

| 0.0006 | 18.0 | 7326 | 0.0407 | 0.9285 | 0.9437 | 0.9360 | 0.9934 |

| 0.0009 | 19.0 | 7733 | 0.0428 | 0.9203 | 0.9488 | 0.9343 | 0.9929 |

| 0.0006 | 20.0 | 8140 | 0.0455 | 0.9232 | 0.9536 | 0.9382 | 0.9928 |

| 0.0004 | 21.0 | 8547 | 0.0462 | 0.9261 | 0.9529 | 0.9393 | 0.9930 |

| 0.0004 | 22.0 | 8954 | 0.0423 | 0.9359 | 0.9492 | 0.9425 | 0.9940 |

| 0.0005 | 23.0 | 9361 | 0.0446 | 0.9180 | 0.9529 | 0.9351 | 0.9931 |

| 0.0005 | 24.0 | 9768 | 0.0430 | 0.9361 | 0.9467 | 0.9413 | 0.9938 |

| 0.0002 | 25.0 | 10175 | 0.0436 | 0.9322 | 0.9496 | 0.9408 | 0.9935 |

| 0.0002 | 26.0 | 10582 | 0.0440 | 0.9275 | 0.9492 | 0.9382 | 0.9935 |

| 0.0002 | 27.0 | 10989 | 0.0450 | 0.9272 | 0.9488 | 0.9379 | 0.9932 |

| 0.0002 | 28.0 | 11396 | 0.0445 | 0.9304 | 0.9470 | 0.9386 | 0.9935 |

| 0.0003 | 29.0 | 11803 | 0.0449 | 0.9278 | 0.9481 | 0.9378 | 0.9934 |

| 0.0001 | 30.0 | 12210 | 0.0453 | 0.9275 | 0.9492 | 0.9382 | 0.9934 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

pig4431/sst2_bert_3epoch

|

pig4431

| 2022-10-27T15:01:53Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-10-27T14:55:30Z |

---

tags:

- generated_from_trainer

model-index:

- name: sst2_bert_3epoch

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sst2_bert_3epoch

This model was trained from scratch on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

Shri3/q-FrozenLake-v1-4x4-noSlippery

|

Shri3

| 2022-10-27T14:33:14Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-10-27T14:07:26Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="Shri3/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

huggingtweets/tykesinties

|

huggingtweets

| 2022-10-27T14:31:37Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-10-25T19:33:52Z |

---

language: en

thumbnail: http://www.huggingtweets.com/tykesinties/1666881093237/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/917201427583438848/X-zHDjYL_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">RegressCo H.R.</div>

<div style="text-align: center; font-size: 14px;">@tykesinties</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from RegressCo H.R..

| Data | RegressCo H.R. |

| --- | --- |

| Tweets downloaded | 1844 |

| Retweets | 215 |

| Short tweets | 27 |

| Tweets kept | 1602 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2pqqtat7/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @tykesinties's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1vqh1gov) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1vqh1gov/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/tykesinties')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

yeahrmek/arxiv-math-lean

|

yeahrmek

| 2022-10-27T14:05:48Z | 0 | 0 | null |

[

"region:us"

] | null | 2022-10-27T12:23:41Z |

This is a BPE tokenizer based on "Salesforce/codegen-350M-mono".

The tokenizer has been trained to treat spaces like parts of the tokens (a bit like sentencepiece)

so a word will be encoded differently whether it is at the beginning of the sentence (without space) or not.

We used ArXiv subset of The Pile dataset and proof steps from [lean-step-public](https://github.com/jesse-michael-han/lean-step-public) datasets to train the tokenizer.

|

OWG/imagegpt-small

|

OWG

| 2022-10-27T13:10:17Z | 0 | 0 | null |

[

"onnx",

"vision",

"dataset:imagenet-21k",

"license:apache-2.0",

"region:us"

] | null | 2022-10-27T11:52:39Z |

---

license: apache-2.0

tags:

- vision

datasets:

- imagenet-21k

---

# ImageGPT (small-sized model)

ImageGPT (iGPT) model pre-trained on ImageNet ILSVRC 2012 (14 million images, 21,843 classes) at resolution 32x32. It was introduced in the paper [Generative Pretraining from Pixels](https://cdn.openai.com/papers/Generative_Pretraining_from_Pixels_V2.pdf) by Chen et al. and first released in [this repository](https://github.com/openai/image-gpt). See also the official [blog post](https://openai.com/blog/image-gpt/).

## Model description

The ImageGPT (iGPT) is a transformer decoder model (GPT-like) pretrained on a large collection of images in a self-supervised fashion, namely ImageNet-21k, at a resolution of 32x32 pixels.

The goal for the model is simply to predict the next pixel value, given the previous ones.

By pre-training the model, it learns an inner representation of images that can then be used to:

- extract features useful for downstream tasks: one can either use ImageGPT to produce fixed image features, in order to train a linear model (like a sklearn logistic regression model or SVM). This is also referred to as "linear probing".

- perform (un)conditional image generation.