| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

gcmsrc/xlm-roberta-base-finetuned-panx-it

|

gcmsrc

| 2022-09-20T09:19:59Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-20T09:17:52Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-it

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.it

metrics:

- name: F1

type: f1

value: 0.8207236842105264

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-it

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the PAN-X.it configuration of the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2571

- F1: 0.8207

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
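The `lr_scheduler_type: linear` setting can be illustrated with a small plain-Python sketch. It assumes the usual linear schedule (optional linear warmup, then linear decay to zero) and takes the total of 210 optimizer steps from the last row of the training results table; the function name `linear_lr` and its parameters are hypothetical and are not part of the Trainer API.

```python
def linear_lr(step, base_lr, total_steps, warmup_steps=0):
    """Learning rate at `step` under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Values from this card: learning_rate 5e-05, 3 epochs of 70 steps each, no warmup listed.
for step in (0, 70, 140, 210):
    print(step, linear_lr(step, base_lr=5e-05, total_steps=210))
```

With no warmup, the rate falls in a straight line from the configured value at step 0 to zero at the final step.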

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.8262 | 1.0 | 70 | 0.3182 | 0.7502 |

| 0.2785 | 2.0 | 140 | 0.2685 | 0.7966 |

| 0.1816 | 3.0 | 210 | 0.2571 | 0.8207 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1+cu102

- Datasets 1.16.1

- Tokenizers 0.10.3

|

gcmsrc/xlm-roberta-base-finetuned-panx-de-fr

|

gcmsrc

| 2022-09-20T09:11:56Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-19T16:28:48Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unspecified dataset (the card metadata lists none).

It achieves the following results on the evaluation set:

- Loss: 0.1642

- F1: 0.8589

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2886 | 1.0 | 715 | 0.1804 | 0.8293 |

| 0.1458 | 2.0 | 1430 | 0.1574 | 0.8494 |

| 0.0931 | 3.0 | 2145 | 0.1642 | 0.8589 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1+cu102

- Datasets 1.16.1

- Tokenizers 0.10.3

|

qunaieer/distilbert-base-uncased-finetuned-emotion

|

qunaieer

| 2022-09-20T08:23:41Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-20T08:13:34Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.926

- name: F1

type: f1

value: 0.9259893400415584

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2248

- Accuracy: 0.926

- F1: 0.9260

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8323 | 1.0 | 250 | 0.3238 | 0.908 | 0.9053 |

| 0.2571 | 2.0 | 500 | 0.2248 | 0.926 | 0.9260 |

### Framework versions

- Transformers 4.13.0

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

khave/anirec-model-v1

|

khave

| 2022-09-20T08:16:05Z | 1 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"distilbert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-09-20T08:11:59Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# khave/anirec-model-v1

This is a [sentence-transformers](https://www.SBERT.net) model. It maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('khave/anirec-model-v1')

embeddings = model.encode(sentences)

print(embeddings)

```
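For the semantic search use case mentioned above, embeddings are typically compared with cosine similarity. The sketch below is a minimal, dependency-free illustration on toy vectors; the helper name `cosine_similarity` and the example vectors are assumptions made for illustration. In practice one would pass rows of the `embeddings` array returned by `model.encode`.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_u * norm_v)


# Toy 3-dimensional "embeddings" (real ones from this model have 768 dimensions).
query = [1.0, 0.0, 1.0]
corpus = {"doc_a": [1.0, 0.0, 1.0], "doc_b": [0.0, 1.0, 0.0]}

# Rank corpus entries from most to least similar to the query.
ranked = sorted(corpus, key=lambda name: cosine_similarity(query, corpus[name]), reverse=True)
print(ranked)
```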

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model as follows: first pass your input through the transformer model, then apply the appropriate pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0] #First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('khave/anirec-model-v1')

model = AutoModel.from_pretrained('khave/anirec-model-v1')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
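To make the masking step in `mean_pooling` concrete, here is a plain-Python sketch of the same computation for a single sentence. It is an illustration under simplifying assumptions (nested lists instead of tensors, and a hypothetical helper name `masked_mean`), not the code the library runs.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0 (padding)."""
    dim = len(token_embeddings[0])
    totals = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        for i in range(dim):
            totals[i] += vector[i] * keep
    # Same guard against division by zero as torch.clamp(..., min=1e-9).
    count = max(sum(attention_mask), 1e-9)
    return [t / count for t in totals]


# Two real tokens followed by one padding token; the padding vector is ignored.
tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

Without the mask, the padding vector would pull the average toward `[9.0, 9.0]`, which is why the attention mask is passed to the pooling function.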

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 100000 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.SequentialSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`gpl.toolkit.loss.MarginDistillationLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 100000,

"warmup_steps": 1000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 350, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

MiguelCosta/distilbert-1-finetuned-cisco

|

MiguelCosta

| 2022-09-20T08:02:41Z | 74 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"fill-mask",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-20T07:35:16Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: MiguelCosta/distilbert-1-finetuned-cisco

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# MiguelCosta/distilbert-1-finetuned-cisco

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.2723

- Validation Loss: 2.4284

- Epoch: 39

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -964, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 4.4357 | 4.3213 | 0 |

| 4.1763 | 3.9111 | 1 |

| 3.8803 | 3.6751 | 2 |

| 3.7135 | 3.5458 | 3 |

| 3.5861 | 3.4489 | 4 |

| 3.5176 | 3.4323 | 5 |

| 3.4022 | 3.3658 | 6 |

| 3.3259 | 3.2113 | 7 |

| 3.2499 | 3.0623 | 8 |

| 3.2129 | 3.0298 | 9 |

| 3.1177 | 2.9181 | 10 |

| 3.0144 | 2.9550 | 11 |

| 2.9502 | 2.8758 | 12 |

| 2.9074 | 2.8674 | 13 |

| 2.8922 | 2.7877 | 14 |

| 2.8333 | 2.8283 | 15 |

| 2.7982 | 2.7717 | 16 |

| 2.7453 | 2.7578 | 17 |

| 2.6611 | 2.5425 | 18 |

| 2.6330 | 2.6145 | 19 |

| 2.5642 | 2.5415 | 20 |

| 2.5352 | 2.5437 | 21 |

| 2.4939 | 2.4214 | 22 |

| 2.4287 | 2.4882 | 23 |

| 2.4142 | 2.5091 | 24 |

| 2.3676 | 2.3997 | 25 |

| 2.3121 | 2.4515 | 26 |

| 2.3085 | 2.2349 | 27 |

| 2.2839 | 2.3205 | 28 |

| 2.3248 | 2.3273 | 29 |

| 2.2763 | 2.2583 | 30 |

| 2.2710 | 2.3896 | 31 |

| 2.2950 | 2.3224 | 32 |

| 2.3026 | 2.3910 | 33 |

| 2.3116 | 2.3255 | 34 |

| 2.2640 | 2.3186 | 35 |

| 2.2958 | 2.3332 | 36 |

| 2.3256 | 2.3646 | 37 |

| 2.2831 | 2.3751 | 38 |

| 2.2723 | 2.4284 | 39 |
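Because this is a fill-mask model, the losses in the table can also be read as perplexities, a common way to report language modeling quality. The sketch below assumes the reported loss is a mean cross-entropy in nats, which the card does not state explicitly; the function name `perplexity` is hypothetical.

```python
import math


def perplexity(cross_entropy_loss):
    """Perplexity corresponding to a mean cross-entropy loss measured in nats."""
    return math.exp(cross_entropy_loss)


# Validation losses from the first and last rows of the table above.
for epoch, loss in ((0, 4.3213), (39, 2.4284)):
    print(f"epoch {epoch}: perplexity ~ {perplexity(loss):.1f}")
```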

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

michael20at/testpyramidsrnd

|

michael20at

| 2022-09-20T07:59:27Z | 8 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] |

reinforcement-learning

| 2022-09-20T07:59:19Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: michael20at/testpyramidsrnd

3. Step 2: Select your `*.nn` / `*.onnx` file

4. Click on Watch the agent play 👀

|

jonas/sdg_classifier_osdg

|

jonas

| 2022-09-20T06:46:22Z | 134 | 7 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"en",

"dataset:jonas/osdg_sdg_data_processed",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-05-24T11:49:08Z |

---

language: en

widget:

- text: "Ending all forms of discrimination against women and girls is not only a basic human right, but it also crucial to accelerating sustainable development. It has been proven time and again, that empowering women and girls has a multiplier effect, and helps drive up economic growth and development across the board.

Since 2000, UNDP, together with our UN partners and the rest of the global community, has made gender equality central to our work. We have seen remarkable progress since then. More girls are now in school compared to 15 years ago, and most regions have reached gender parity in primary education. Women now make up to 41 percent of paid workers outside of agriculture, compared to 35 percent in 1990."

datasets:

- jonas/osdg_sdg_data_processed

co2_eq_emissions: 0.0653263174784986

---

# About

Machine learning model for classifying text according to the first 15 of the 17 United Nations Sustainable Development Goals. Note that the model was trained on fairly short paragraphs (around 100 words) and performs best on inputs of similar length.

Data comes from the amazing https://osdg.ai/ community!

* An improved version of the model (fine-tuned RoBERTa) is available here: https://huggingface.co/jonas/roberta-base-finetuned-sdg

# Model Training Specifics

- Problem type: Multi-class Classification

- Model ID: 900229515

- CO2 Emissions (in grams): 0.0653263174784986

## Validation Metrics

- Loss: 0.3644874095916748

- Accuracy: 0.8972544579677328

- Macro F1: 0.8500873710954522

- Micro F1: 0.8972544579677328

- Weighted F1: 0.8937529692986061

- Macro Precision: 0.8694369727467804

- Micro Precision: 0.8972544579677328

- Weighted Precision: 0.8946984684977016

- Macro Recall: 0.8405065997404059

- Micro Recall: 0.8972544579677328

- Weighted Recall: 0.8972544579677328
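In the metrics above, micro F1, micro precision, micro recall and accuracy all share the same value, which is expected for single-label multi-class classification. The sketch below shows the difference between macro and micro averaging on a toy example; the function name `f1_scores` and the labels are hypothetical and are not taken from the model's evaluation data.

```python
from collections import Counter


def f1_scores(y_true, y_pred):
    """Return (macro_f1, micro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Macro: average the per-class F1 scores, so every class counts equally.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro: pool the counts over all classes, so every example counts equally.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return macro, micro


y_true = [0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 2]
macro, micro = f1_scores(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(macro, micro, accuracy)
```

Each misclassification adds one false positive and one false negative to the pooled counts, so micro F1 reduces to accuracy. Macro F1 weights rare classes as heavily as common ones, which is why it can be lower.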

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/jonas/autotrain-osdg-sdg-classifier-900229515

```

Or use the Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("jonas/sdg_classifier_osdg", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("jonas/sdg_classifier_osdg", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

MiguelCosta/distilbert-finetuned-cisco

|

MiguelCosta

| 2022-09-20T05:49:33Z | 65 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"fill-mask",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-17T07:33:44Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: MiguelCosta/distilbert-finetuned-cisco

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# MiguelCosta/distilbert-finetuned-cisco

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 4.4181

- Validation Loss: 4.2370

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -964, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 4.4181 | 4.2370 | 0 |

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

wormed/DialoGPT-small-denai

|

wormed

| 2022-09-20T03:53:22Z | 0 | 0 | null |

[

"conversational",

"region:us"

] | null | 2022-09-20T03:42:39Z |

---

tags:

- conversational

---

|

sd-concepts-library/bloo

|

sd-concepts-library

| 2022-09-20T03:24:28Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-20T03:24:23Z |

---

license: mit

---

### Bloo on Stable Diffusion

This is the `<owl-guy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/cumbia-peruana

|

sd-concepts-library

| 2022-09-20T03:14:35Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-20T03:14:28Z |

---

license: mit

---

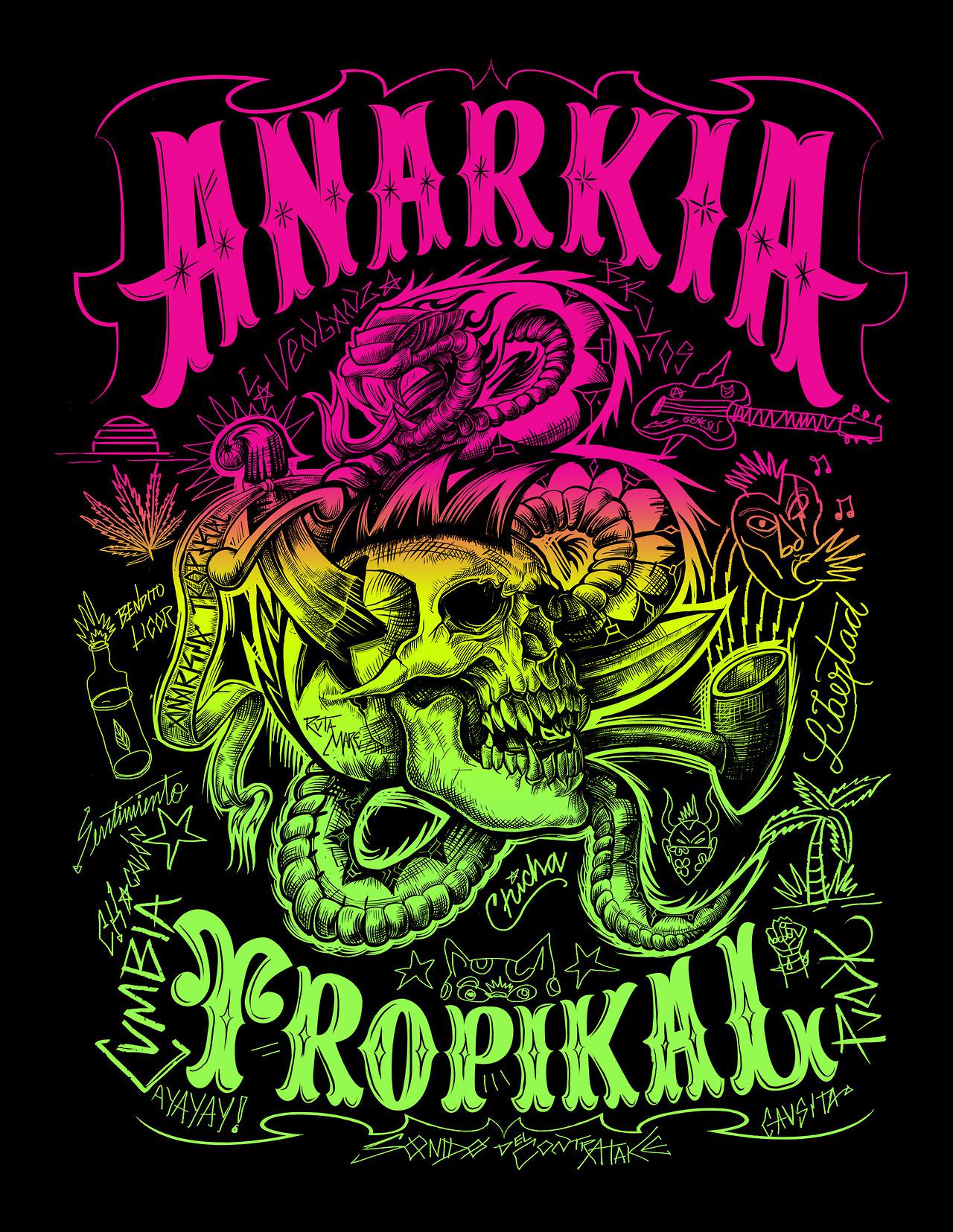

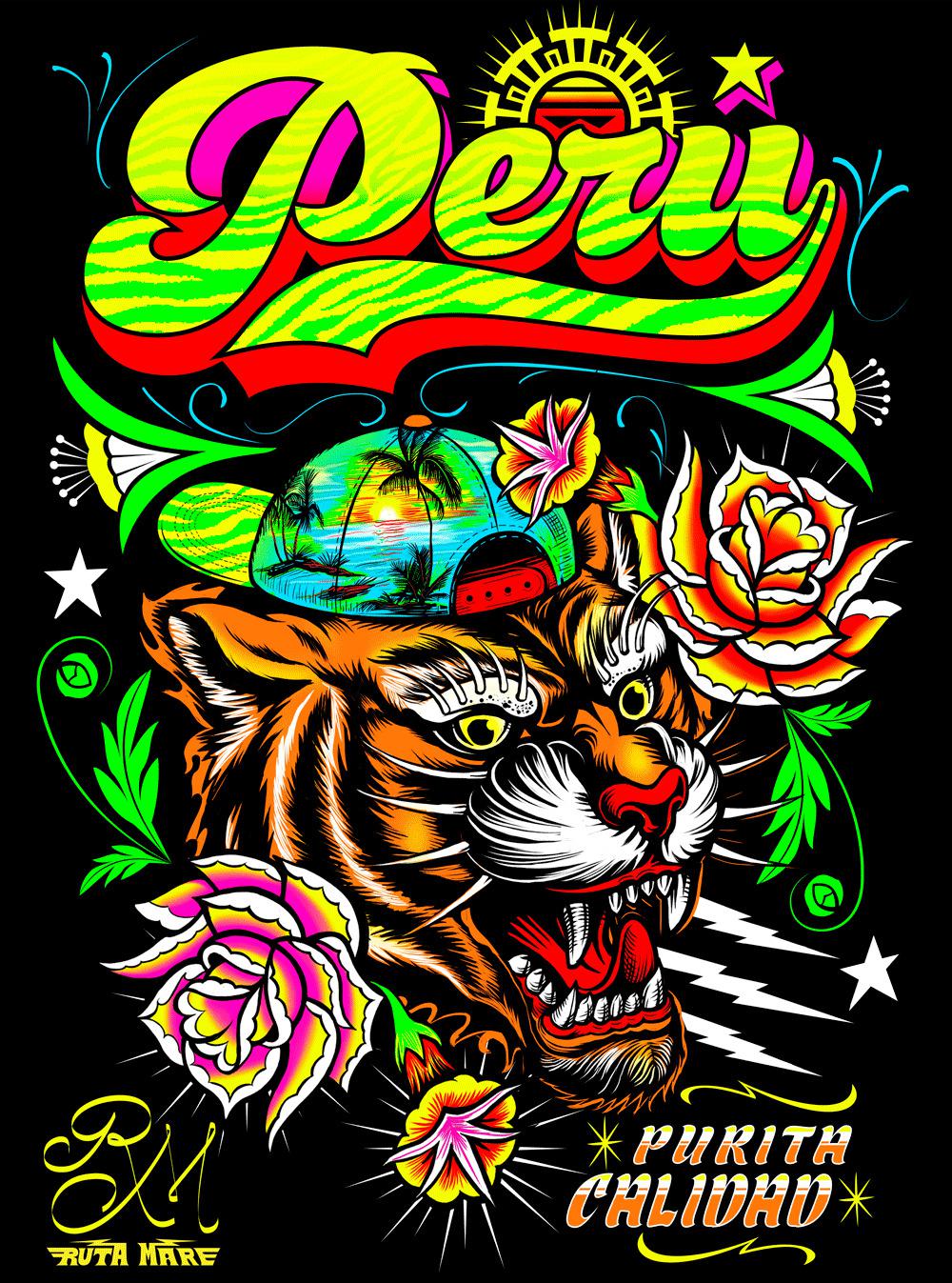

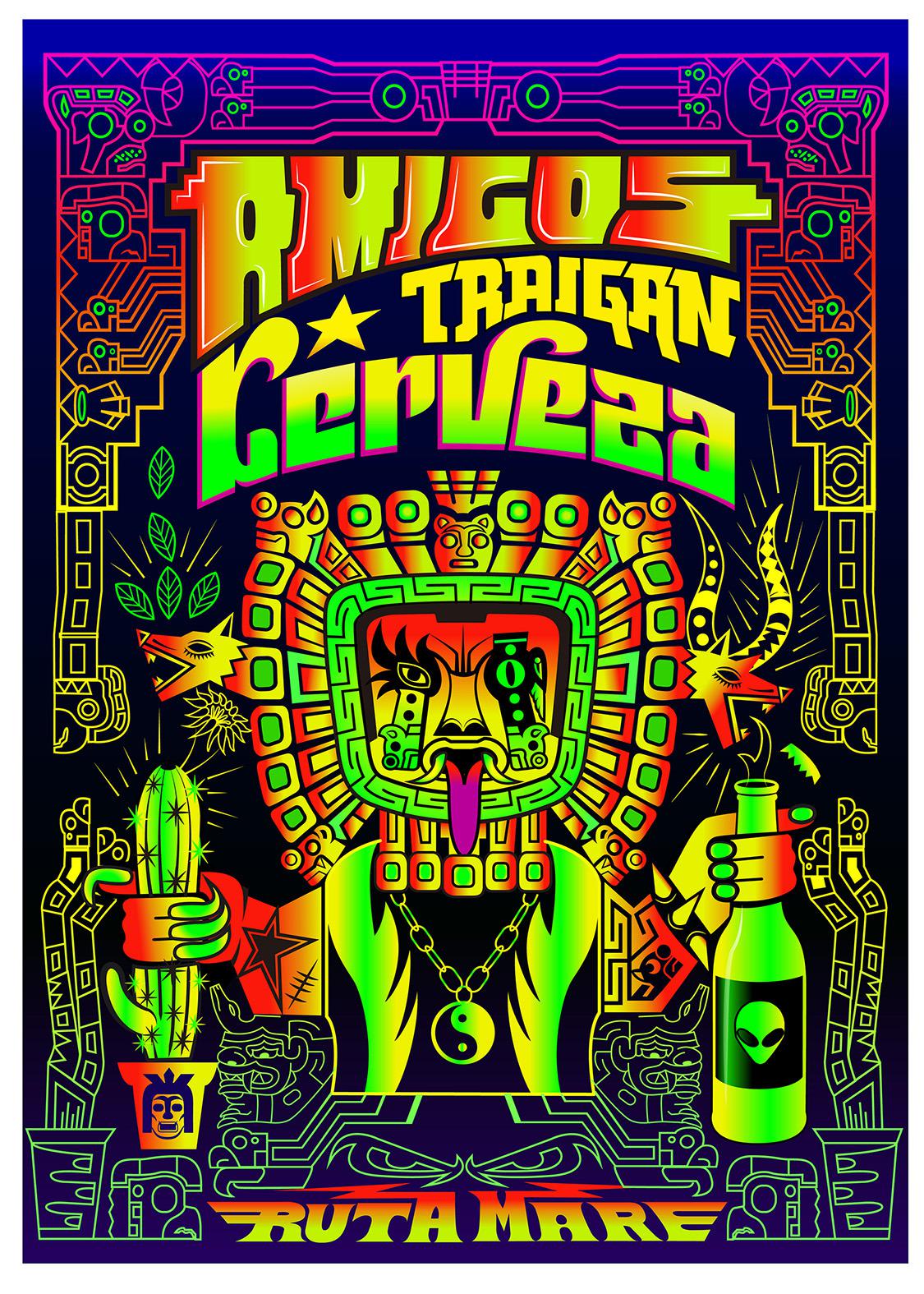

### cumbia peruana on Stable Diffusion

This is the `<cumbia-peru>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

rram12/q-Taxi-v3

|

rram12

| 2022-09-20T02:39:05Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-20T02:15:13Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

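import gym

# load_from_hub and evaluate_agent are helper functions that this snippet does not
# import or define; they must be available before running it.
model = load_from_hub(repo_id="rram12/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])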

```
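The snippet above calls `load_from_hub` and `evaluate_agent` without defining them. The following is one possible sketch of such helpers, not necessarily the author's implementation. It assumes the repository stores a pickled dict with the keys used above (`env_id`, `max_steps`, `n_eval_episodes`, `qtable`, `eval_seed`), that `eval_seed` is either empty or holds one seed per episode, and that the environment follows the Gym `reset`/`step` interface. `hf_hub_download` comes from the `huggingface_hub` package; `greedy_action` is a hypothetical helper name.

```python
import pickle
import statistics


def load_from_hub(repo_id, filename):
    """Download a pickled model dict from the Hugging Face Hub and load it."""
    # Imported here so the evaluation helper below works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    path = hf_hub_download(repo_id=repo_id, filename=filename)
    with open(path, "rb") as f:
        # Unpickling can execute arbitrary code; only load files from sources you trust.
        return pickle.load(f)


def greedy_action(qtable, state):
    """Index of the highest-valued action for `state`."""
    row = qtable[state]
    return max(range(len(row)), key=lambda action: row[action])


def evaluate_agent(env, max_steps, n_eval_episodes, qtable, seed=None):
    """Run greedy episodes and return (mean_reward, std_reward)."""
    episode_rewards = []
    for episode in range(n_eval_episodes):
        out = env.reset(seed=seed[episode]) if seed else env.reset()
        # Newer Gym versions return (observation, info); older ones return the observation.
        state = out[0] if isinstance(out, tuple) else out
        total = 0.0
        for _ in range(max_steps):
            result = env.step(greedy_action(qtable, state))
            state, reward = result[0], result[1]
            total += reward
            # Covers both (obs, reward, done, info) and
            # (obs, reward, terminated, truncated, info).
            if any(result[2:-1]):
                break
        episode_rewards.append(total)
    return statistics.mean(episode_rewards), statistics.pstdev(episode_rewards)
```

The agent acts greedily during evaluation because exploration is only useful while the Q-table is still being learned.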

|

rram12/q-FrozenLake-v1-4x4-noSlippery

|

rram12

| 2022-09-20T02:26:17Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-20T02:26:11Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

import gym

# load_from_hub and evaluate_agent are helper functions that this snippet does not
# import or define; they must be available before running it.
model = load_from_hub(repo_id="rram12/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

weirdguitarist/wav2vec2-base-stac-local

|

weirdguitarist

| 2022-09-20T01:58:36Z | 19 | 0 |

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-09-13T10:27:39Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-base-stac-local

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-stac-local

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on an unspecified dataset (the card metadata lists none).

It achieves the following results on the evaluation set:

- Loss: 1.9746

- Wer: 0.7828

- Cer: 0.3202
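Wer and Cer above are the word and character error rates. As a rough illustration of how such scores are commonly computed (edit distance divided by reference length), here is a dependency-free sketch. The function names and example strings are hypothetical, the reference is assumed to be non-empty, and the card's numbers were presumably produced by an evaluation library rather than by this code.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences."""
    previous = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        current = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            current.append(min(
                previous[j] + 1,         # deletion of a reference item
                current[j - 1] + 1,      # insertion of a hypothesis item
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edit distance over the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edit distance over reference length."""
    return edit_distance(reference, hypothesis) / len(reference)


print(wer("the cat sat", "the dog sat"))
print(cer("the cat sat", "the dog sat"))
```

A single wrong word costs a full unit of WER but only a few characters of CER, which is consistent with the card reporting a much higher Wer than Cer.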

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|

| 2.0603 | 1.0 | 2369 | 2.1282 | 0.9517 | 0.5485 |

| 1.6155 | 2.0 | 4738 | 1.6196 | 0.9060 | 0.4565 |

| 1.3462 | 3.0 | 7107 | 1.4331 | 0.8379 | 0.3983 |

| 1.1819 | 4.0 | 9476 | 1.3872 | 0.8233 | 0.3717 |

| 1.0189 | 5.0 | 11845 | 1.4066 | 0.8328 | 0.3660 |

| 0.9026 | 6.0 | 14214 | 1.3502 | 0.8198 | 0.3508 |

| 0.777 | 7.0 | 16583 | 1.3016 | 0.7922 | 0.3433 |

| 0.7109 | 8.0 | 18952 | 1.2662 | 0.8302 | 0.3510 |

| 0.6766 | 9.0 | 21321 | 1.4321 | 0.8103 | 0.3368 |

| 0.6078 | 10.0 | 23690 | 1.3592 | 0.7871 | 0.3360 |

| 0.5958 | 11.0 | 26059 | 1.4389 | 0.7819 | 0.3397 |

| 0.5094 | 12.0 | 28428 | 1.3391 | 0.8017 | 0.3239 |

| 0.4567 | 13.0 | 30797 | 1.4718 | 0.8026 | 0.3347 |

| 0.4448 | 14.0 | 33166 | 1.7450 | 0.8043 | 0.3424 |

| 0.3976 | 15.0 | 35535 | 1.4581 | 0.7888 | 0.3283 |

| 0.3449 | 16.0 | 37904 | 1.5688 | 0.8078 | 0.3397 |

| 0.3046 | 17.0 | 40273 | 1.8630 | 0.8060 | 0.3448 |

| 0.2983 | 18.0 | 42642 | 1.8400 | 0.8190 | 0.3425 |

| 0.2728 | 19.0 | 45011 | 1.6726 | 0.8034 | 0.3280 |

| 0.2579 | 20.0 | 47380 | 1.6661 | 0.8138 | 0.3249 |

| 0.2169 | 21.0 | 49749 | 1.7389 | 0.8138 | 0.3277 |

| 0.2498 | 22.0 | 52118 | 1.7205 | 0.7948 | 0.3207 |

| 0.1831 | 23.0 | 54487 | 1.8641 | 0.8103 | 0.3229 |

| 0.1927 | 24.0 | 56856 | 1.8724 | 0.7784 | 0.3251 |

| 0.1649 | 25.0 | 59225 | 1.9187 | 0.7974 | 0.3277 |

| 0.1594 | 26.0 | 61594 | 1.9022 | 0.7828 | 0.3220 |

| 0.1338 | 27.0 | 63963 | 1.9303 | 0.7862 | 0.3212 |

| 0.1441 | 28.0 | 66332 | 1.9528 | 0.7845 | 0.3207 |

| 0.129 | 29.0 | 68701 | 1.9676 | 0.7819 | 0.3212 |

| 0.1169 | 30.0 | 71070 | 1.9746 | 0.7828 | 0.3202 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.8.1+cu102

- Datasets 1.18.3

- Tokenizers 0.12.1

|

huggingtweets/markiplier-mrbeast-xqc

|

huggingtweets

| 2022-09-20T00:43:59Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-20T00:43:52Z |

---

language: en

thumbnail: https://github.com/borisdayma/huggingtweets/blob/master/img/logo.png?raw=true

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/994592419705274369/RLplF55e_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1571030673078591490/TqoPeGER_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1511102924310544387/j6E29xq6_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">MrBeast & xQc & Mark</div>

<div style="text-align: center; font-size: 14px;">@markiplier-mrbeast-xqc</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from MrBeast & xQc & Mark.

| Data | MrBeast | xQc | Mark |

| --- | --- | --- | --- |

| Tweets downloaded | 3248 | 3241 | 3226 |

| Retweets | 119 | 116 | 306 |

| Short tweets | 725 | 410 | 392 |

| Tweets kept | 2404 | 2715 | 2528 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3p1p4x3v/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @markiplier-mrbeast-xqc's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/13fbl2ac) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/13fbl2ac/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/markiplier-mrbeast-xqc')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[borisdayma/huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

sd-concepts-library/wojaks-now

|

sd-concepts-library

| 2022-09-20T00:19:17Z | 0 | 4 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-20T00:19:10Z |

---

license: mit

---

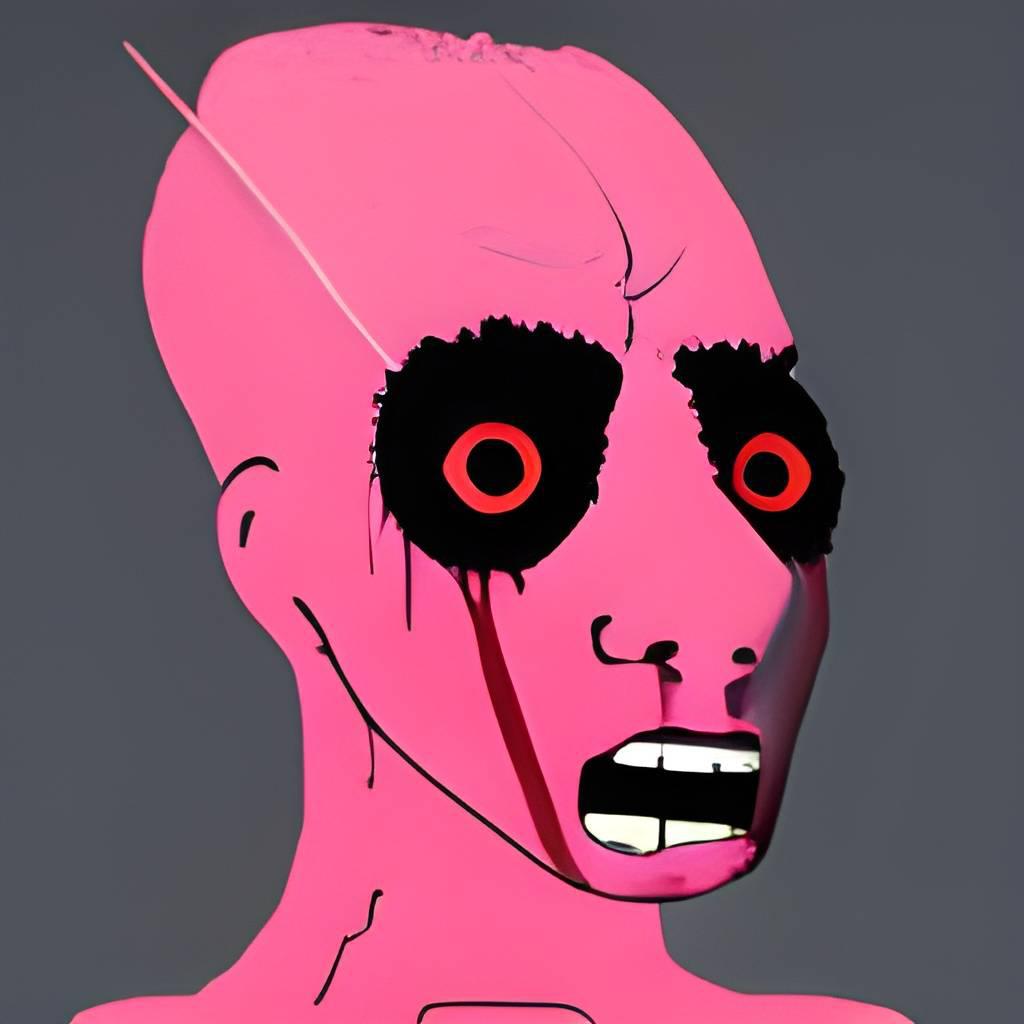

### wojaks-now on Stable Diffusion

This is the `<red-wojak>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/all-rings-albuns

|

sd-concepts-library

| 2022-09-19T23:53:52Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-19T23:53:38Z |

---

license: mit

---

### all rings albuns on Stable Diffusion

This is the `<rings-all-albuns>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

SandraB/mt5-small-mlsum_training_sample

|

SandraB

| 2022-09-19T23:36:24Z | 111 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"summarization",

"generated_from_trainer",

"dataset:mlsum",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-09-19T13:17:26Z |

---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

datasets:

- mlsum

metrics:

- rouge

model-index:

- name: mt5-small-mlsum_training_sample

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: mlsum

type: mlsum

config: de

split: train

args: de

metrics:

- name: Rouge1

type: rouge

value: 28.2078

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-mlsum_training_sample

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the mlsum dataset.

It achieves the following results on the evaluation set:

- Loss: 1.9727

- Rouge1: 28.2078

- Rouge2: 19.0712

- Rougel: 26.2267

- Rougelsum: 26.9462

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|

| 1.3193 | 1.0 | 6875 | 2.1352 | 25.8941 | 17.4672 | 24.2858 | 24.924 |

| 1.2413 | 2.0 | 13750 | 2.0528 | 26.6221 | 18.1166 | 24.8233 | 25.5111 |

| 1.1844 | 3.0 | 20625 | 1.9783 | 27.0518 | 18.3457 | 25.2288 | 25.8919 |

| 1.0403 | 4.0 | 27500 | 1.9487 | 27.8154 | 18.9701 | 25.9435 | 26.6578 |

| 0.9582 | 5.0 | 34375 | 1.9374 | 27.6863 | 18.7723 | 25.7667 | 26.4694 |

| 0.8992 | 6.0 | 41250 | 1.9353 | 27.8959 | 18.919 | 26.0434 | 26.7262 |

| 0.8109 | 7.0 | 48125 | 1.9492 | 28.0644 | 18.8873 | 26.0628 | 26.757 |

| 0.7705 | 8.0 | 55000 | 1.9727 | 28.2078 | 19.0712 | 26.2267 | 26.9462 |
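The table can be read programmatically to see where validation loss and ROUGE-1 peak. The following is a minimal sketch: the rows are copied from the table above, and the example count assumes a single device with no gradient accumulation, which the card does not state.

```python
# Rows copied from the training results table:
# (epoch, step, validation_loss, rouge1)
rows = [
    (1, 6875, 2.1352, 25.8941),
    (2, 13750, 2.0528, 26.6221),
    (3, 20625, 1.9783, 27.0518),
    (4, 27500, 1.9487, 27.8154),
    (5, 34375, 1.9374, 27.6863),
    (6, 41250, 1.9353, 27.8959),
    (7, 48125, 1.9492, 28.0644),
    (8, 55000, 1.9727, 28.2078),
]

# Epoch with the lowest validation loss and epoch with the highest ROUGE-1.
best_loss_epoch = min(rows, key=lambda r: r[2])[0]
best_rouge1_epoch = max(rows, key=lambda r: r[3])[0]

# Approximate number of training examples seen per epoch.
# Assumption: one device, no gradient accumulation, so one step = one batch.
steps_per_epoch = rows[0][1]
train_batch_size = 8
approx_train_examples = steps_per_epoch * train_batch_size

print(best_loss_epoch, best_rouge1_epoch, approx_train_examples)
```

Validation loss bottoms out two epochs before ROUGE-1 peaks, so which checkpoint counts as "best" depends on the metric used for selection.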

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Isaacp/xlm-roberta-base-finetuned-panx-de-fr

|

Isaacp

| 2022-09-19T23:18:16Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-15T21:55:15Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unspecified dataset (the auto-generated card records the dataset as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.1637

- F1: 0.8599

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
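To illustrate what `lr_scheduler_type: linear` means for these settings, here is a minimal sketch of a linear decay schedule. It assumes no warmup (the card lists none) and uses the 2145 total steps reported in this card's training results; `linear_lr` is a hypothetical helper written for illustration, not a function from the Transformers library.

```python
def linear_lr(step, total_steps, base_lr=5e-05, warmup_steps=0):
    """Learning rate at a given step under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


total_steps = 2145  # 3 epochs x 715 steps, as reported in the training results
for step in (0, 715, 1430, 2145):
    print(step, linear_lr(step, total_steps))
```

Under these assumptions the learning rate starts at 5e-05, falls to two thirds of that after the first epoch, and reaches zero at the final step.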

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2897 | 1.0 | 715 | 0.1759 | 0.8369 |

| 0.1462 | 2.0 | 1430 | 0.1587 | 0.8506 |

| 0.0931 | 3.0 | 2145 | 0.1637 | 0.8599 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

sd-concepts-library/kawaii-colors

|

sd-concepts-library

| 2022-09-19T23:08:01Z | 0 | 26 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T20:07:40Z |

---

license: mit

---

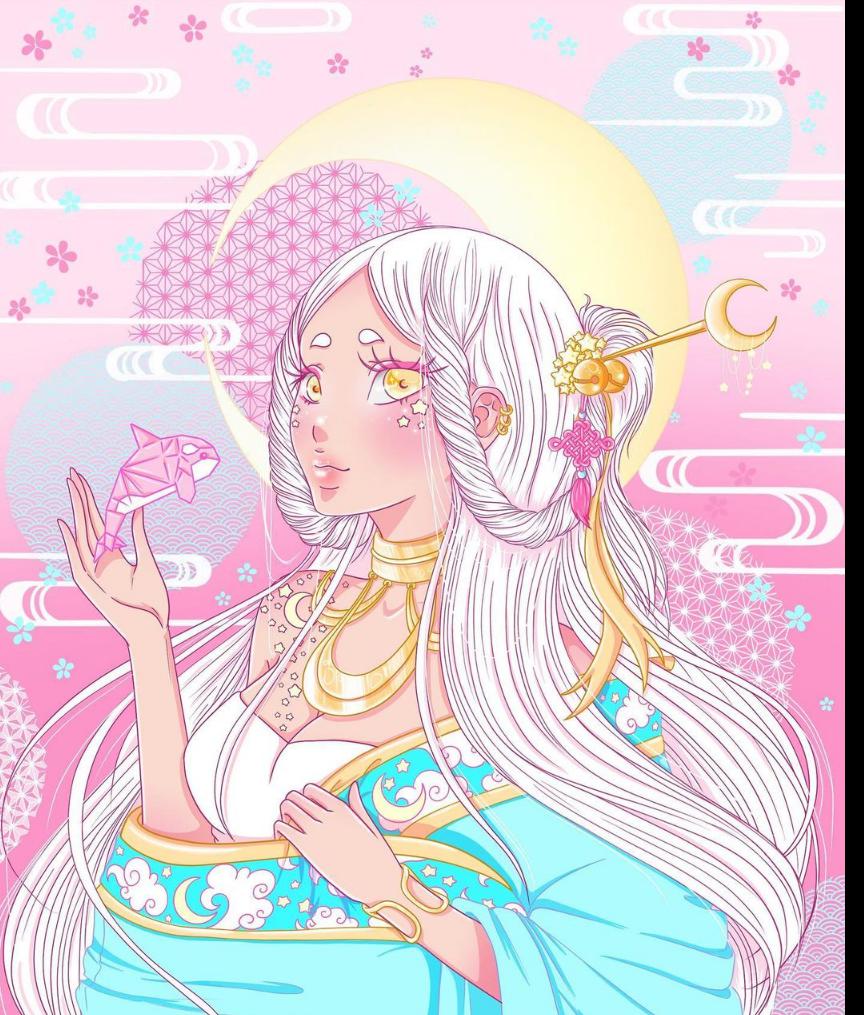

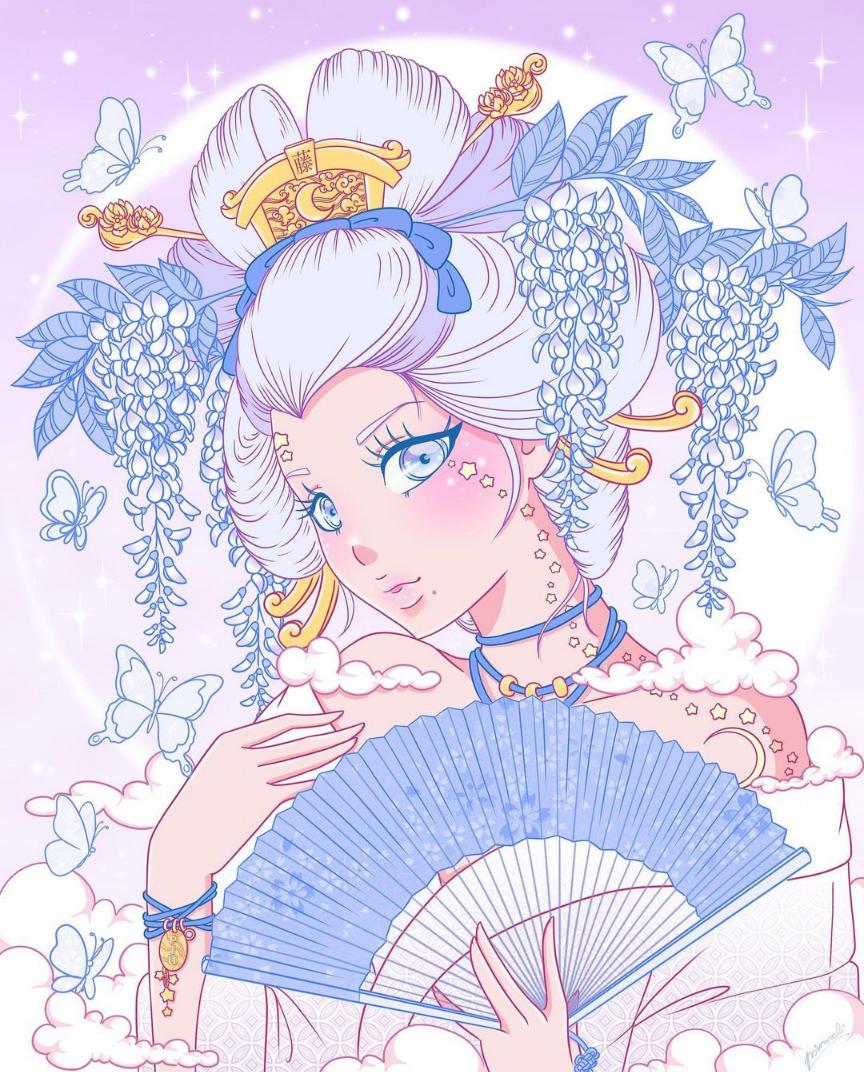

### Kawaii Colors on Stable Diffusion

This is the `<kawaii-colors-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

research-backup/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated

|

research-backup

| 2022-09-19T21:47:15Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"feature-extraction",

"dataset:relbert/semeval2012_relational_similarity",

"model-index",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-09-17T11:45:40Z |

---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.8450793650793651

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6176470588235294

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6261127596439169

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.7498610339077265

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.886

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.618421052631579

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6203703703703703

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9199939731806539

- name: F1 (macro)

type: f1_macro

value: 0.9158483158560947

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8457746478873239

- name: F1 (macro)

type: f1_macro

value: 0.6760195209742395

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6684723726977249

- name: F1 (macro)

type: f1_macro

value: 0.65910797043685

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.959379564582319

- name: F1 (macro)

type: f1_macro

value: 0.8779321856206035

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9031651519899718

- name: F1 (macro)

type: f1_macro

value: 0.9015700872047177

---

# relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.6176470588235294

- Accuracy on SAT: 0.6261127596439169

- Accuracy on BATS: 0.7498610339077265

- Accuracy on U2: 0.618421052631579

- Accuracy on U4: 0.6203703703703703

- Accuracy on Google: 0.886

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9199939731806539

- Micro F1 score on CogALexV: 0.8457746478873239

- Micro F1 score on EVALution: 0.6684723726977249

- Micro F1 score on K&H+N: 0.959379564582319

- Micro F1 score on ROOT09: 0.9031651519899718

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.8450793650793651

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated")

vector = model.get_embedding(['Tokyo', 'Japan']) # shape of (1024, )

```
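Relation embeddings are typically compared with cosine similarity. The sketch below uses small made-up vectors in place of the 1024-dimensional output of `model.get_embedding`, so it runs without downloading the model; the vector values and variable names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy 3-dimensional stand-ins for relation embeddings (hypothetical values).
# In practice each would come from model.get_embedding([head, tail]).
tokyo_japan = [0.9, 0.1, 0.2]
paris_france = [0.8, 0.2, 0.1]
apple_fruit = [0.1, 0.9, 0.3]

same_relation = cosine_similarity(tokyo_japan, paris_france)
different_relation = cosine_similarity(tokyo_japan, apple_fruit)
print(same_relation > different_relation)
```

With real embeddings, pairs that share a relation (such as capital-of) would be expected to score higher than unrelated pairs, although the margin depends on the model.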

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: average_no_mask

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: I wasn’t aware of this relationship, but I just read in the encyclopedia that <obj> is <subj>’s <mask>

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 29

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

research-backup/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated

|

research-backup

| 2022-09-19T21:39:01Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"feature-extraction",

"dataset:relbert/semeval2012_relational_similarity",

"model-index",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-09-16T13:22:04Z |

---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.8476587301587302

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5962566844919787

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5964391691394659

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.7559755419677598

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.87

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5043859649122807

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5902777777777778

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9135151423836071

- name: F1 (macro)

type: f1_macro

value: 0.9077476621792441

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8568075117370892

- name: F1 (macro)

type: f1_macro

value: 0.6862949146842514

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6793066088840737

- name: F1 (macro)

type: f1_macro

value: 0.6733689760415943

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9559713431174793

- name: F1 (macro)

type: f1_macro

value: 0.8691131481598299

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8934503290504543

- name: F1 (macro)

type: f1_macro

value: 0.8925413349776822

---

# relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.5962566844919787

- Accuracy on SAT: 0.5964391691394659

- Accuracy on BATS: 0.7559755419677598

- Accuracy on U2: 0.5043859649122807

- Accuracy on U4: 0.5902777777777778

- Accuracy on Google: 0.87

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9135151423836071

- Micro F1 score on CogALexV: 0.8568075117370892

- Micro F1 score on EVALution: 0.6793066088840737

- Micro F1 score on K&H+N: 0.9559713431174793

- Micro F1 score on ROOT09: 0.8934503290504543

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.8476587301587302
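The card reports both micro and macro F1 for lexical relation classification, and the two can differ noticeably on imbalanced label sets (compare the CogALexV scores in the metadata). The following is a minimal sketch of how the two averages are computed; the labels and predictions are made up, and whether the reported scores were produced exactly this way is not stated in the card.

```python
from collections import Counter


def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Micro: pool counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro


# Hypothetical imbalanced example: 8 "random" pairs and 2 "hypernym" pairs.
y_true = ["random"] * 8 + ["hypernym"] * 2
y_pred = ["random"] * 8 + ["random", "hypernym"]
micro, macro = f1_scores(y_true, y_pred)
print(round(micro, 4), round(macro, 4))
```

A single error on the rare class barely moves the micro average but pulls the macro average down, which is the usual reason the macro score trails the micro score.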

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated")

vector = model.get_embedding(['Tokyo', 'Japan']) # shape of (1024, )

```

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: average_no_mask

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: Today, I finally discovered the relation between <subj> and <obj> : <obj> is <subj>'s <mask>

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 29

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-b-nce-conceptnet-validated/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

research-backup/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated

|

research-backup

| 2022-09-19T21:35:10Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"feature-extraction",

"dataset:relbert/semeval2012_relational_similarity",

"model-index",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-09-16T05:55:39Z |

---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.9175

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6657754010695187

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.658753709198813

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.783212896053363

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.922

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6008771929824561

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6481481481481481

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9198433026970017

- name: F1 (macro)

type: f1_macro

value: 0.9160770870840453

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8568075117370892

- name: F1 (macro)

type: f1_macro

value: 0.6976908408325354

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.685807150595883

- name: F1 (macro)

type: f1_macro

value: 0.6809745362689802

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9570842317590597

- name: F1 (macro)

type: f1_macro

value: 0.8743204606688812

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9059855844562832

- name: F1 (macro)

type: f1_macro

value: 0.9055132716987447

---

# relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.6657754010695187

- Accuracy on SAT: 0.658753709198813

- Accuracy on BATS: 0.783212896053363

- Accuracy on U2: 0.6008771929824561

- Accuracy on U4: 0.6481481481481481

- Accuracy on Google: 0.922

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9198433026970017

- Micro F1 score on CogALexV: 0.8568075117370892

- Micro F1 score on EVALution: 0.685807150595883

- Micro F1 score on K&H+N: 0.9570842317590597

- Micro F1 score on ROOT09: 0.9059855844562832

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.9175

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated")

vector = model.get_embedding(['Tokyo', 'Japan']) # shape of (1024, )

```
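One common way to use such embeddings on analogy questions is to pick the candidate pair whose embedding is closest to the query pair's embedding. The sketch below shows that selection step with toy vectors; `solve_analogy` and the vector values are hypothetical, and the card does not describe the exact evaluation procedure.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def solve_analogy(query_vec, candidate_vecs):
    """Return the index of the candidate most similar to the query."""
    scores = [cosine(query_vec, c) for c in candidate_vecs]
    return max(range(len(scores)), key=scores.__getitem__)


# Toy stand-ins for relation embeddings (hypothetical values).
# With the real model, each vector would be model.get_embedding([head, tail]).
query = [1.0, 0.0, 0.5]
candidates = [
    [0.0, 1.0, 0.0],
    [0.9, 0.1, 0.4],
    [-1.0, 0.0, 0.2],
]
print(solve_analogy(query, candidates))
```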

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: average_no_mask

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: Today, I finally discovered the relation between <subj> and <obj> : <subj> is the <mask> of <obj>

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 27

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-a-nce-conceptnet-validated/raw/main/trainer_config.json).
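The `loss_function: nce_logout` and `temperature_nce_constant: 0.05` settings point to a noise-contrastive objective. The sketch below is a generic InfoNCE-style loss over similarity scores, shown only to illustrate the role of the temperature; it is not the RelBERT implementation, and the exact `nce_logout` variant is defined in the RelBERT repository and may differ.

```python
import math


def info_nce(pos_sim, neg_sims, temperature=0.05):
    """Generic InfoNCE loss: -log softmax of the positive among all candidates."""
    logits = [pos_sim / temperature] + [s / temperature for s in neg_sims]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - logits[0]


# Hypothetical similarity scores between an anchor pair and other pairs.
easy = info_nce(pos_sim=0.9, neg_sims=[0.1, 0.2])  # positive clearly closest
hard = info_nce(pos_sim=0.3, neg_sims=[0.1, 0.2])  # positive barely closest
print(easy < hard)
```

A small temperature such as 0.05 sharpens the softmax, so even modest similarity gaps between the positive and the negatives translate into large differences in the loss.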

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

research-backup/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated

|

research-backup

| 2022-09-19T21:27:36Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"feature-extraction",

"dataset:relbert/semeval2012_relational_similarity",

"model-index",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-09-15T14:31:09Z |

---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.8137698412698413

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6898395721925134

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6884272997032641

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.8293496386881601

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.958

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6666666666666666

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6597222222222222

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9187886093114359

- name: F1 (macro)

type: f1_macro

value: 0.9155322599832632

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.865962441314554

- name: F1 (macro)

type: f1_macro

value: 0.7168264001292298

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6879739978331527

- name: F1 (macro)

type: f1_macro

value: 0.6688500009556503

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.955762676497183

- name: F1 (macro)

type: f1_macro

value: 0.8742975353162309

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9169539329363836

- name: F1 (macro)

type: f1_macro

value: 0.9152963472472981

---

# relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated/raw/main/analogy.json)):
    - Accuracy on SAT (full): 0.6898395721925134
    - Accuracy on SAT: 0.6884272997032641
    - Accuracy on BATS: 0.8293496386881601
    - Accuracy on U2: 0.6666666666666666
    - Accuracy on U4: 0.6597222222222222
    - Accuracy on Google: 0.958
- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated/raw/main/classification.json)):
    - Micro F1 score on BLESS: 0.9187886093114359
    - Micro F1 score on CogALexV: 0.865962441314554
    - Micro F1 score on EVALution: 0.6879739978331527
    - Micro F1 score on K&H+N: 0.955762676497183
    - Micro F1 score on ROOT09: 0.9169539329363836
- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated/raw/main/relation_mapping.json)):
    - Accuracy on Relation Mapping: 0.8137698412698413
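The metadata of this card reports both a micro-averaged and a macro-averaged F1 for each classification dataset, and the two can differ noticeably (for K&H+N, roughly 0.956 against 0.874). The following is a minimal sketch of how the two averages are commonly defined for single-label predictions. It is not taken from the RelBERT evaluation code, and the label names and predictions are made up for illustration.

```python
from collections import Counter


def f1_scores(gold, pred):
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(gold) | set(pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[g] += 1  # gold class g was missed

    def f1(true_pos, false_pos, false_neg):
        denom = 2 * true_pos + false_pos + false_neg
        return 2 * true_pos / denom if denom else 0.0

    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro


# Toy labels (hypothetical, not drawn from any benchmark).
gold = ["hyper", "hyper", "hyper", "hyper", "mero", "random"]
pred = ["hyper", "hyper", "hyper", "hyper", "random", "random"]
micro_f1, macro_f1 = f1_scores(gold, pred)
print(micro_f1, macro_f1)
```

Micro F1 pools all decisions, so frequent classes dominate the score. Macro F1 averages per-class scores, so a rare class that is always missed pulls it down.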

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip:

```shell
pip install relbert
```

Then load the model and compute a relation embedding as below.

```python
from relbert import RelBERT

# Load the fine-tuned RelBERT checkpoint.
model = RelBERT("relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated")
# Embed the relation between a word pair.
vector = model.get_embedding(['Tokyo', 'Japan'])  # shape of (1024, )
```
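Relation embeddings such as `vector` are typically compared with cosine similarity, for example to choose the candidate pair in an analogy question whose relation is closest to the query pair. The sketch below illustrates that idea under stated assumptions: the card does not specify the scoring rule used for the analogy benchmarks, `pick_candidate` is a hypothetical helper, and the 3-dimensional vectors are toy stand-ins for the 1024-dimensional embeddings returned by `get_embedding`.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pick_candidate(query_vec, candidate_vecs):
    """Return the index of the candidate most similar to the query, plus all scores."""
    scores = [cosine_similarity(query_vec, c) for c in candidate_vecs]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy vectors (hypothetical); in practice each would come from
# model.get_embedding([head, tail]) for the query and candidate pairs.
query = [0.9, 0.1, 0.0]
candidates = [
    [0.0, 1.0, 0.0],
    [0.8, 0.2, 0.1],
    [-0.9, 0.0, 0.1],
]
best_index, scores = pick_candidate(query, candidates)
print(best_index, scores)
```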

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: average

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: I wasn’t aware of this relationship, but I just read in the encyclopedia that <subj> is the <mask> of <obj>

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 26

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration is available in the [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-average-prompt-d-nce-conceptnet-validated/raw/main/trainer_config.json).
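The `template` hyperparameter above is a prompt with `<subj>` and `<obj>` placeholders and a `<mask>` slot. A minimal sketch of how a word pair could be rendered into that prompt is shown below. `fill_template` is a hypothetical helper written for illustration, not a function of the relbert library, and the library's own handling of the template may differ.

```python
# Prompt copied from the `template` hyperparameter of this card.
TEMPLATE = (
    "I wasn’t aware of this relationship, but I just read in the "
    "encyclopedia that <subj> is the <mask> of <obj>"
)


def fill_template(template, subj, obj, mask_token="<mask>"):
    """Substitute a word pair into the prompt (hypothetical helper).

    mask_token defaults to "<mask>", the mask token of RoBERTa tokenizers.
    """
    return (
        template.replace("<subj>", subj)
        .replace("<obj>", obj)
        .replace("<mask>", mask_token)
    )


prompt = fill_template(TEMPLATE, "Tokyo", "Japan")
print(prompt)
```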

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```
@inproceedings{ushio-etal-2021-distilling-relation-embeddings,
    title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",
    author = "Ushio, Asahi and
      Schockaert, Steven and
      Camacho-Collados, Jose",
    booktitle = "EMNLP 2021",
    year = "2021",
    address = "Online",
    publisher = "Association for Computational Linguistics",
}
```

|

research-backup/roberta-large-semeval2012-average-prompt-c-nce-conceptnet-validated

|

research-backup

| 2022-09-19T21:23:56Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"feature-extraction",

"dataset:relbert/semeval2012_relational_similarity",

"model-index",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-09-15T07:04:30Z |

---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-average-prompt-c-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.8667460317460317

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5935828877005348

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5964391691394659

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions