| code (string, lengths 2.5k to 6.36M) | kind (string, 2 classes) | parsed_code (string, lengths 0 to 404k) | quality_prob (float64, 0 to 0.98) | learning_prob (float64, 0.03 to 1) |
|---|---|---|---|---|

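# Concise Implementation of Linear Regression

As deep learning frameworks have matured, developing deep learning applications has become increasingly convenient. In practice, we can usually implement the same model with much less code than in the previous section. In this section, we show how to use the Keras API recommended by TensorFlow 2.0 to train a linear regression model more conveniently.

## Generating the Dataset

We generate the same dataset as in the previous section, where `features` holds the training features and `labels` holds the labels.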

```

import tensorflow as tf

num_inputs = 2

num_examples = 1000

true_w = [2, -3.4]

true_b = 4.2

features = tf.random.normal(shape=(num_examples, num_inputs), stddev=1)

labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b

labels += tf.random.normal(labels.shape, stddev=0.01)

```

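Although TensorFlow 2.0 can fit a linear regression directly without us splitting the dataset into batches by hand, it is still worth learning how to read the data in minibatches.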

```

from tensorflow import data as tfdata

batch_size = 10
# Combine the features and labels of the training data
dataset = tfdata.Dataset.from_tensor_slices((features, labels))
# Read minibatches in random order
dataset = dataset.shuffle(buffer_size=num_examples)
dataset = dataset.batch(batch_size)
data_iter = iter(dataset)

# Print only the first minibatch
for X, y in data_iter:
    print(X, y)
    break

```
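To make the shuffle-then-batch behaviour concrete without TensorFlow, here is a minimal pure-Python sketch. The helper name `minibatches` and the toy data are hypothetical; the function only mirrors the idea of `shuffle` followed by `batch` and is not how `tf.data` is implemented.

```python
import random


def minibatches(features, labels, batch_size, seed=None):
    """Yield (X, y) minibatches in random order, visiting every example once."""
    indices = list(range(len(features)))
    random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        batch = indices[start:start + batch_size]
        yield [features[i] for i in batch], [labels[i] for i in batch]


# Toy data: 25 examples with 2 features each (the values are arbitrary).
features = [[float(i), float(-i)] for i in range(25)]
labels = [float(2 * i) for i in range(25)]

batch_sizes = [len(X) for X, y in minibatches(features, labels, batch_size=10, seed=0)]
print(batch_sizes)  # the last batch is smaller because 25 is not a multiple of 10
```

As with `dataset.batch(batch_size)`, the final batch is simply shorter when the number of examples is not divisible by the batch size.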

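To define the model we use Keras, which is the recommended way to define networks in TensorFlow 2.0.

We first define a model variable `model`, which is a `Sequential` instance.

In Keras, a `Sequential` instance can be seen as a container that chains layers together.

When constructing the model, we add layers to this container one after another.

Given input data, each layer in the container computes its output in turn and passes it on as the input of the next layer.

An important point is that in Keras we do not need to specify the input shape of each layer.

Because this is linear regression, the input layer is fully connected to the output layer, so we define a single layer.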

```

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow import initializers as init

model = keras.Sequential()

model.add(layers.Dense(1, kernel_initializer=init.RandomNormal(stddev=0.01)))

```

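Defining the loss function and the optimizer: the loss is MSE, and the optimizer is SGD (stochastic gradient descent).

In Keras, once the model is defined, calling `compile()` configures its loss function and optimization method. To define the loss you only pass the `loss` argument; Keras provides a variety of loss functions, and we use its squared loss `mse` directly. Likewise, we do not need to implement minibatch stochastic gradient descent ourselves: we only pass the `optimizer` argument. Keras provides various optimization algorithms, and here we use minibatch SGD with a learning rate of 0.03, `tf.keras.optimizers.SGD(0.03)`. The code below creates the loss and optimizer objects directly, because the training loop that follows applies them by hand.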

```

from tensorflow import losses

loss = losses.MeanSquaredError()

from tensorflow.keras import optimizers

trainer = optimizers.SGD(learning_rate=0.03)

loss_history = []

```

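When training a model with Keras, we can iterate over the data by calling the `fit` function of the `model` instance. `fit` only needs the inputs x, the outputs y, the number of passes over the data (epochs), and the number of examples per gradient update (`batch_size`). Here we use 3 epochs and a batch size of 10. The code below writes the equivalent loop explicitly with `tf.GradientTape`, iterating over the batched `dataset` defined earlier.

With Keras `fit`, you do not even need to split the dataset into batches yourself.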

```

num_epochs = 3
for epoch in range(1, num_epochs + 1):
    for (batch, (X, y)) in enumerate(dataset):
        # Record the forward pass so the gradient of the loss can be computed
        with tf.GradientTape() as tape:
            l = loss(model(X, training=True), y)

        loss_history.append(l.numpy().mean())
        grads = tape.gradient(l, model.trainable_variables)
        trainer.apply_gradients(zip(grads, model.trainable_variables))

    # Report the loss on the full dataset at the end of each epoch
    l = loss(model(features), labels)
    print('epoch %d, loss: %f' % (epoch, l.numpy().mean()))

```
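For readers who want to see what one pass of this loop computes, the following is a minimal sketch of minibatch SGD on the mean squared error, written in plain Python. The function names `make_data` and `sgd_linear_regression` are hypothetical, the parameters start at zero rather than at a small random value, and the gradients are derived by hand instead of by `tf.GradientTape`; it illustrates the update rule and is not the Keras implementation.

```python
import random


def make_data(num_examples, true_w, true_b, noise_std=0.01, seed=0):
    """Generate features ~ N(0, 1) and labels = w . x + b + small Gaussian noise."""
    rng = random.Random(seed)
    features, labels = [], []
    for _ in range(num_examples):
        x = [rng.gauss(0.0, 1.0) for _ in true_w]
        y = sum(w * xi for w, xi in zip(true_w, x)) + true_b
        features.append(x)
        labels.append(y + rng.gauss(0.0, noise_std))
    return features, labels


def sgd_linear_regression(features, labels, lr=0.03, batch_size=10, num_epochs=3, seed=0):
    """Fit w and b by minibatch SGD on the mean squared error."""
    rng = random.Random(seed)
    w = [0.0] * len(features[0])
    b = 0.0
    indices = list(range(len(features)))
    for _ in range(num_epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = [0.0] * len(w)
            grad_b = 0.0
            for i in batch:
                # Prediction error for example i
                err = sum(wj * xj for wj, xj in zip(w, features[i])) + b - labels[i]
                # d/dw of mean((pred - y)^2) is the mean of 2 * err * x
                for j, xj in enumerate(features[i]):
                    grad_w[j] += 2.0 * err * xj / len(batch)
                grad_b += 2.0 * err / len(batch)
            w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
            b -= lr * grad_b
    return w, b


features, labels = make_data(1000, [2.0, -3.4], 4.2)
w, b = sgd_linear_regression(features, labels)
print(w, b)
```

With the same learning rate, batch size, and number of epochs as above, the learned `w` and `b` should land close to the true values `[2, -3.4]` and `4.2`.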

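Next we compare the learned model parameters with the true ones. The model's `get_weights()` method returns its weight (`weight`) and bias (`bias`). The learned parameters are very close to the true ones.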

```

true_w, model.get_weights()[0]

true_b, model.get_weights()[1]

loss_history

```

|

github_jupyter

|

import tensorflow as tf

num_inputs = 2

num_examples = 1000

true_w = [2, -3.4]

true_b = 4.2

features = tf.random.normal(shape=(num_examples, num_inputs), stddev=1)

labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b

labels += tf.random.normal(labels.shape, stddev=0.01)

from tensorflow import data as tfdata

batch_size = 10

# Combine the features and labels of the training data
dataset = tfdata.Dataset.from_tensor_slices((features, labels))
# Read minibatches in random order
dataset = dataset.shuffle(buffer_size=num_examples)
dataset = dataset.batch(batch_size)
data_iter = iter(dataset)
for X, y in data_iter:
    print(X, y)
    break

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow import initializers as init

model = keras.Sequential()

model.add(layers.Dense(1, kernel_initializer=init.RandomNormal(stddev=0.01)))

from tensorflow import losses

loss = losses.MeanSquaredError()

from tensorflow.keras import optimizers

trainer = optimizers.SGD(learning_rate=0.03)

loss_history = []

num_epochs = 3

for epoch in range(1, num_epochs + 1):
    for (batch, (X, y)) in enumerate(dataset):
        with tf.GradientTape() as tape:
            l = loss(model(X, training=True), y)
        loss_history.append(l.numpy().mean())
        grads = tape.gradient(l, model.trainable_variables)
        trainer.apply_gradients(zip(grads, model.trainable_variables))
    l = loss(model(features), labels)
    print('epoch %d, loss: %f' % (epoch, l.numpy().mean()))

true_w, model.get_weights()[0]

true_b, model.get_weights()[1]

loss_history

| 0.664758 | 0.962953 |

```

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_theme(style="darkgrid")

dataframe = pd.read_csv('PODs.csv', delimiter=';', header=0, index_col=0)

dataframe=dataframe.astype(float)

ax = plt.gca()

dataframe.plot(kind='line', y='CU MEMORY (MiBytes)', label='vCU', ax=ax)

dataframe.plot(kind='line', y='DU MEMORY (MiBytes)', label='vDU', ax=ax)

dataframe.plot(kind='line', y='RU MEMORY (MiBytes)', label='vRU', ax=ax)

#dataframe.plot(kind='line', y='K8S MEMORY (MiBytes)', label='K8S', ax=ax)

dataframe.plot(kind='line', y='OPERATOR MEMORY (MiBytes)', label='PlaceRAN Orchestrator', ax=ax)

# Mark the events t0..t7: an italic label at (x, y) plus a dotted vertical line at x.
# A raw f-string avoids the invalid "\i" escape of the original literals.
events = [(0, 94), (70, 94), (130, 147), (185, 1396), (245, 1580), (300, 1685), (360, 1688), (415, 1688)]
for i, (x, y) in enumerate(events):
    ax.annotate(rf'$\it{{t{i}}}$', xy=(x, y), xytext=(15, 15), textcoords='offset points',
                arrowprops=dict(arrowstyle='->', color='black'), fontsize=10)
    plt.axvline(x=x, linestyle="dotted", color='black', alpha=0.3)

plt.xlabel("Time (s)")

plt.ylabel("MEMORY (MiBytes)")

plt.legend()

plt.savefig('out/MEM_PODS.pdf', bbox_inches='tight')

plt.savefig('out/MEM_PODS.png', dpi=300, bbox_inches='tight')

```

|

github_jupyter

|

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_theme(style="darkgrid")

dataframe = pd.read_csv('PODs.csv', delimiter=';', header=0, index_col=0)

dataframe=dataframe.astype(float)

ax = plt.gca()

dataframe.plot(kind='line', y='CU MEMORY (MiBytes)', label='vCU', ax=ax)

dataframe.plot(kind='line', y='DU MEMORY (MiBytes)', label='vDU', ax=ax)

dataframe.plot(kind='line', y='RU MEMORY (MiBytes)', label='vRU', ax=ax)

#dataframe.plot(kind='line', y='K8S MEMORY (MiBytes)', label='K8S', ax=ax)

dataframe.plot(kind='line', y='OPERATOR MEMORY (MiBytes)', label='PlaceRAN Orchestrator', ax=ax)

# Mark the events t0..t7: an italic label at (x, y) plus a dotted vertical line at x.
events = [(0, 94), (70, 94), (130, 147), (185, 1396), (245, 1580), (300, 1685), (360, 1688), (415, 1688)]
for i, (x, y) in enumerate(events):
    ax.annotate(rf'$\it{{t{i}}}$', xy=(x, y), xytext=(15, 15), textcoords='offset points',
                arrowprops=dict(arrowstyle='->', color='black'), fontsize=10)
    plt.axvline(x=x, linestyle="dotted", color='black', alpha=0.3)

plt.xlabel("Time (s)")

plt.ylabel("MEMORY (MiBytes)")

plt.legend()

plt.savefig('out/MEM_PODS.pdf', bbox_inches='tight')

plt.savefig('out/MEM_PODS.png', dpi=300, bbox_inches='tight')

| 0.503662 | 0.410018 |

# Components

gdsfactory provides a generic, customizable component library in `gf.components`.

## Basic shapes

### Rectangle

To create a simple rectangle, there are two functions:

``gf.components.rectangle()`` can create a basic rectangle:

```

import gdsfactory as gf

gf.components.rectangle(size=(4.5, 2), layer=(1, 0))

```

``gf.components.bbox()`` can also create a rectangle based on a bounding box.

This is useful if you want to create a rectangle which exactly surrounds a piece of existing geometry.

For example, if we have an arc geometry and we want to define a box around it, we can use ``gf.components.bbox()``:

```

D = gf.Component()

arc = D << gf.components.bend_circular(radius=10, width=0.5, angle=90, layer=(1, 0))

arc.rotate(90)

# Draw a rectangle around the arc we created by using the arc's bounding box

rect = D << gf.components.bbox(bbox=arc.bbox, layer=(0, 0))

D

```
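The idea behind a bounding-box rectangle can be sketched without gdsfactory: take the minimum and maximum coordinates of the geometry and use them as the rectangle's corners. The helper names and the sample points below are hypothetical and only illustrate the computation; they are not the gdsfactory implementation.

```python
def bounding_box(points):
    """Return ((xmin, ymin), (xmax, ymax)) for a list of (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys)), (max(xs), max(ys))


def bbox_to_rectangle(bbox):
    """Return the four corners of the rectangle spanned by a bounding box."""
    (xmin, ymin), (xmax, ymax) = bbox
    return [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]


# A few points sampled along a curved shape (arbitrary values).
arc_points = [(0.0, 0.0), (3.0, 1.0), (7.0, 4.0), (10.0, 10.0)]
bbox = bounding_box(arc_points)
rect = bbox_to_rectangle(bbox)
print(bbox)
print(rect)
```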

### Cross

The ``gf.components.cross()`` function creates a cross structure:

```

gf.components.cross(length=10, width=0.5, layer=(1, 0))

```

### Ellipse

The ``gf.components.ellipse()`` function creates an ellipse by defining the major and minor radii:

```

gf.components.ellipse(radii=(10, 5), angle_resolution=2.5, layer=(1, 0))

```

### Circle

The ``gf.components.circle()`` function creates a circle:

```

gf.components.circle(radius=10, angle_resolution=2.5, layer=(1, 0))

```

### Ring

The ``gf.components.ring()`` function creates a ring. The radius refers to the center radius of the ring structure (halfway between the inner and outer radius).

```

gf.components.ring(radius=5, width=0.5, angle_resolution=2.5, layer=(1, 0))

gf.components.ring_single(

width=0.5, gap=0.2, radius=10, length_x=4, length_y=2, layer=(1, 0)

)

import gdsfactory as gf

gf.components.ring_double(

width=0.5, gap=0.2, radius=10, length_x=4, length_y=2, layer=(1, 0)

)

gf.components.ring_double(

width=0.5,

gap=0.2,

radius=10,

length_x=4,

length_y=2,

layer=(1, 0),

bend=gf.components.bend_circular,

)

```

### Bend circular

The ``gf.components.bend_circular()`` function creates an arc. The radius refers to the center radius of the arc (halfway between the inner and outer radius).

```

gf.components.bend_circular(radius=2.0, width=0.5, angle=90, npoints=720, layer=(1, 0))

```

### Bend euler

The ``gf.components.bend_euler()`` function creates an adiabatic bend in which the bend radius changes gradually. Euler bends have lower loss than circular bends.

```

gf.components.bend_euler(radius=2.0, width=0.5, angle=90, npoints=720, layer=(1, 0))

```

### Tapers

`gf.components.taper()` is defined by setting its length and its start and end widths. It has two ports, ``1`` and ``2``, on either end, allowing you to easily connect it to other structures.

```

gf.components.taper(length=10, width1=6, width2=4, port=None, layer=(1, 0))

```
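Geometrically, a taper is a trapezoid whose width changes linearly along its length. The sketch below assumes the taper runs along the x axis and is symmetric about it; the helper names are hypothetical and this is not the gdsfactory implementation.

```python
def taper_polygon(length, width1, width2):
    """Return the four corners of a taper that is symmetric about the x axis."""
    return [
        (0.0, -width1 / 2),
        (length, -width2 / 2),
        (length, width2 / 2),
        (0.0, width1 / 2),
    ]


def taper_width_at(x, length, width1, width2):
    """Width of the taper at position x, interpolated linearly between the ends."""
    return width1 + (width2 - width1) * x / length


corners = taper_polygon(length=10, width1=6, width2=4)
print(corners)
print(taper_width_at(5, length=10, width1=6, width2=4))
```

Under these assumptions the two ports would sit at the centres of the left and right edges, at `(0, 0)` and `(length, 0)`.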

`gf.components.ramp()` is a structure similar to `taper()`, except that it is asymmetric. It also has two ports, ``1`` and ``2``, on either end.

```

gf.components.ramp(length=10, width1=4, width2=8, layer=(1, 0))

```

### Common compound shapes

The `gf.components.L()` function creates an "L" shape with ports on either end named ``1`` and ``2``.

```

gf.components.L(width=7, size=(10, 20), layer=(1, 0))

```

The `gf.components.C()` function creates a "C" shape with ports on either end named ``1`` and ``2``.

```

gf.components.C(width=7, size=(10, 20), layer=(1, 0))

```

## Text

Gdsfactory has an implementation of the DEPLOF font with the majority of English ASCII characters represented (thanks to phidl).

```

gf.components.text(

text="Hello world!\nMultiline text\nLeft-justified",

size=10,

justify="left",

layer=(1, 0),

)

# `justify` should be either 'left', 'center', or 'right'

```

## Grid / packer / align / distribute

### Grid

The ``gf.components.grid()`` function can take a list (or 2D array) of objects and arrange them along a grid. This is often useful for making parameter sweeps. If the `separation` argument is true, the grid is arranged such that the elements are guaranteed not to touch, with a `spacing` distance between them. If `separation` is false, elements are spaced evenly along a grid. The `align_x`/`align_y` arguments specify intra-row/intra-column alignment. The `edge_x`/`edge_y` arguments specify inter-row/inter-column alignment (unused if `separation = True`).

```

import gdsfactory as gf

components_list = []

for width1 in [1, 6, 9]:

for width2 in [1, 2, 4, 8]:

D = gf.components.taper(length=10, width1=width1, width2=width2, layer=(1, 0))

components_list.append(D)

c = gf.grid(

components_list,

spacing=(5, 1),

separation=True,

shape=(3, 4),

align_x="x",

align_y="y",

edge_x="x",

edge_y="ymax",

)

c

```

### Packer

The ``gf.pack()`` function packs geometries together into rectangular bins. If a ``max_size`` is specified, the function will create as many bins as is necessary to pack all the geometries and then return a list of the filled-bin Devices.

Here we generate several random shapes then pack them together automatically. We allow the bin to be as large as needed to fit all the Devices by specifying ``max_size = (None, None)``. By setting ``aspect_ratio = (2,1)``, we specify the rectangular bin it tries to pack them into should be twice as wide as it is tall:

```

import numpy as np

import gdsfactory as gf

np.random.seed(5)

D_list = [gf.components.rectangle(size=(i, i)) for i in range(1, 10)]

D_packed_list = gf.pack(

D_list, # Must be a list or tuple of Devices

spacing=1.25, # Minimum distance between adjacent shapes

aspect_ratio=(2, 1), # (width, height) ratio of the rectangular bin

max_size=(None, None), # Limits the size into which the shapes will be packed

density=1.05, # Values closer to 1 pack tighter but require more computation

sort_by_area=True, # Pre-sorts the shapes by area

)

D = D_packed_list[0] # Only one bin was created, so we plot that

D

```

Say we need to pack many shapes into multiple 500x500 unit die. If we set ``max_size = (500,500)`` the shapes will be packed into as many 500x500 unit die as required to fit them all:

```

np.random.seed(1)

D_list = [

gf.components.ellipse(radii=tuple(np.random.rand(2) * n + 2)) for n in range(120)

]

D_packed_list = gf.pack(

D_list, # Must be a list or tuple of Devices

spacing=4, # Minimum distance between adjacent shapes

aspect_ratio=(1, 1), # Shape of the box

max_size=(500, 500), # Limits the size into which the shapes will be packed

density=1.05, # Values closer to 1 pack tighter but require more computation

sort_by_area=True, # Pre-sorts the shapes by area

)

# Put all packed bins into a single device and spread them out with distribute()

F = gf.Component()

[F.add_ref(D) for D in D_packed_list]

F.distribute(elements="all", direction="x", spacing=100, separation=True)

F

```

Note that the packing problem is an NP-complete problem, so ``gf.pack()`` may be slow if there are more than a few hundred Devices to pack (in that case, try pre-packing a few dozen at a time and then packing the resulting bins). Packing requires the ``rectpack`` Python package.
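To give a feel for what a packer has to do, here is a deliberately simple "shelf" heuristic in plain Python: rectangles are sorted by area and placed left to right, starting a new row whenever the current one is full. This is a swapped-in illustration, not the algorithm used by ``rectpack``; the function name `shelf_pack` and the bin width of 20 are assumptions.

```python
def shelf_pack(sizes, bin_width, spacing=0.0):
    """Place (width, height) rectangles on shelves inside a bin of fixed width.

    Returns the lower-left corner of each rectangle (in input order)
    and the total height that was used.
    """
    # Largest area first, mirroring the idea of sort_by_area=True
    order = sorted(range(len(sizes)), key=lambda i: sizes[i][0] * sizes[i][1], reverse=True)
    positions = [None] * len(sizes)
    x = y = shelf_height = 0.0
    for i in order:
        w, h = sizes[i]
        if w > bin_width:
            raise ValueError("rectangle is wider than the bin")
        if x > 0 and x + w > bin_width:
            # The current shelf is full: start a new one above it
            y += shelf_height + spacing
            x = shelf_height = 0.0
        positions[i] = (x, y)
        x += w + spacing
        shelf_height = max(shelf_height, h)
    return positions, y + shelf_height


sizes = [(i, i) for i in range(1, 10)]  # squares from 1x1 to 9x9
positions, total_height = shelf_pack(sizes, bin_width=20, spacing=1.25)
for size, pos in zip(sizes, positions):
    print(size, pos)
print("height used:", total_height)
```

A heuristic like this runs quickly but usually wastes more area than a dedicated packer, which is the trade-off behind the speed note above.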

### Distribute

The ``distribute()`` function allows you to space out elements within a Device evenly in the x or y direction. It is meant to duplicate the distribute functionality present in Inkscape / Adobe Illustrator:

Say we start out with a few random-sized rectangles we want to space out:

```

c = gf.Component()

# Create different-sized rectangles and add them to c

[

c.add_ref(

gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20], layer=(0, 0))

).move([n, n * 4])

for n in [0, 2, 3, 1, 2]

]

c

```

Oftentimes, we want to guarantee some distance between the objects. By setting ``separation = True`` we move each object such that there is ``spacing`` distance between them:

```

D = gf.Component()

# Create different-sized rectangles and add them to D

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

# Distribute all the rectangles in D along the x-direction with a separation of 5

D.distribute(

elements="all", # either 'all' or a list of objects

direction="x", # 'x' or 'y'

spacing=5,

separation=True,

)

D

```

Alternatively, we can spread them out on a fixed grid by setting ``separation = False``. Here we align the left edge (``edge = 'xmin'``) of each object along a grid spacing of 100:

```

D = gf.Component()

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(

elements="all", direction="x", spacing=100, separation=False, edge="xmin"

) # edge must be either 'xmin' (left), 'xmax' (right), or 'x' (center)

D

```

The alignment can be done along the right edge as well by setting ``edge = 'xmax'``, or along the center by setting ``edge = 'x'`` like in the following:

```

D = gf.Component()

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move(

(n - 10, n * 4)

)

for n in [0, 2, 3, 1, 2]

]

D.distribute(

elements="all", direction="x", spacing=100, separation=False, edge="x"

) # edge must be either 'xmin' (left), 'xmax' (right), or 'x' (center)

D

```
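The two modes can be sketched in one dimension, treating each element as an interval `(xmin, xmax)`. This is a hypothetical model of the behaviour described above, assuming the first element stays where it is and the others are placed relative to it; the real `distribute()` may choose a different anchor.

```python
def distribute(intervals, spacing, separation=True, edge="xmin"):
    """Shift 1D intervals (xmin, xmax) along x and return the new intervals."""

    def reference(lo, hi):
        # The point of each element that is placed on the grid
        return {"xmin": lo, "xmax": hi, "x": (lo + hi) / 2}[edge]

    result = []
    if separation:
        # Leave a gap of `spacing` between neighbouring elements
        cursor = intervals[0][0]
        for lo, hi in intervals:
            width = hi - lo
            result.append((cursor, cursor + width))
            cursor += width + spacing
    else:
        # Put the chosen edge of element k on a grid with pitch `spacing`
        first = reference(*intervals[0])
        for k, (lo, hi) in enumerate(intervals):
            shift = first + k * spacing - reference(lo, hi)
            result.append((lo + shift, hi + shift))
    return result


# x extents of the rectangles used above: size n * 15 + 20, moved by n
intervals = [(n, n + n * 15 + 20) for n in [0, 2, 3, 1, 2]]
separated = distribute(intervals, spacing=5, separation=True)
on_grid = distribute(intervals, spacing=100, separation=False, edge="xmin")
print(separated)
print(on_grid)
```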

### Align

The ``align()`` function allows you to align elements within a Device horizontally or vertically. It is meant to duplicate the alignment functionality present in Inkscape / Adobe Illustrator:

Say we ``distribute()`` a few objects, but they're all misaligned:

```

D = gf.Component()

# Create different-sized rectangles and add them to D then distribute them

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(elements="all", direction="x", spacing=5, separation=True)

D

```

We can use the ``align()`` function to align their top edges (``alignment = 'ymax'``):

```

D = gf.Component()

# Create different-sized rectangles and add them to D then distribute them

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(elements="all", direction="x", spacing=5, separation=True)

# Align top edges

D.align(elements="all", alignment="ymax")

D

```

Or we can align their centers (``alignment = 'y'``):

```

D = gf.Component()

# Create different-sized rectangles and add them to D then distribute them

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(elements="all", direction="x", spacing=5, separation=True)

# Align centers

D.align(elements="all", alignment="y")

D

```

The valid alignment options are ``'xmin', 'x', 'xmax', 'ymin', 'y', and 'ymax'``.
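In one dimension, aligning amounts to shifting every element so that a chosen reference point (bottom edge, center, or top edge) lands on a common target. The sketch below is hypothetical: it assumes the target is taken from the bounding box of the whole group, and it models elements as `(ymin, ymax)` intervals rather than real references.

```python
def align(intervals, alignment="ymax"):
    """Shift 1D intervals (ymin, ymax) so that they share an edge or a center."""
    group_min = min(lo for lo, _ in intervals)
    group_max = max(hi for _, hi in intervals)
    result = []
    for lo, hi in intervals:
        if alignment == "ymin":
            shift = group_min - lo
        elif alignment == "ymax":
            shift = group_max - hi
        elif alignment == "y":
            shift = (group_min + group_max) / 2 - (lo + hi) / 2
        else:
            raise ValueError("alignment must be 'ymin', 'y' or 'ymax'")
        result.append((lo + shift, hi + shift))
    return result


# y extents of the rectangles used above: size n * 15 + 20, moved by n * 4
intervals = [(n * 4, n * 4 + n * 15 + 20) for n in [0, 2, 3, 1, 2]]
top_aligned = align(intervals, "ymax")
centered = align(intervals, "y")
print(top_aligned)
print(centered)
```

The same logic applies to `'xmin'`, `'x'`, and `'xmax'` with the x extents of each element.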

## Boolean / outline / offset / invert

There are several common boolean-type operations available in the geometry library. These include typical boolean operations (and/or/not/xor), offsetting (expanding/shrinking polygons), outlining, and inverting.

### Boolean

The ``gf.geometry.boolean()`` function can perform AND/OR/NOT/XOR operations, and will return a new geometry with the result of that operation.

Speedup note: The ``num_divisions`` argument can be used to divide up the geometry into multiple rectangular regions and process each region sequentially (which is more computationally efficient). If you have a large geometry that takes a long time to process, try using ``num_divisions = [10,10]`` to optimize the operation.

```

import gdsfactory as gf

E = gf.components.ellipse(radii=(10, 5), layer=(1, 0))

R = gf.components.rectangle(size=[15, 5], layer=(2, 0))

C = gf.geometry.boolean(

A=E, B=R, operation="not", precision=1e-6, num_divisions=[1, 1], layer=(0, 0)

)

# Other operations include 'and', 'or', 'xor', or equivalently 'A-B', 'B-A', 'A+B'

# Plot the originals and the result

D = gf.Component()

D.add_ref(E)

D.add_ref(R).movey(-1.5)

D.add_ref(C).movex(30)

D

```

To learn how booleans work you can try all the different operations `not`, `and`, `or`, `xor`

```

import gdsfactory as gf

operation = "not"

operation = "and"

operation = "or"

operation = "xor"

r1 = (8, 8)

r2 = (11, 4)

r1 = (80, 80)

r2 = (110, 40)

angle_resolution = 0.1

c1 = gf.components.ellipse(radii=r1, layer=(1, 0), angle_resolution=angle_resolution)

c2 = gf.components.ellipse(radii=r2, layer=(1, 0), angle_resolution=angle_resolution)

%time

c3 = gf.geometry.boolean_klayout(

c1, c2, operation=operation, layer3=(1, 0)

) # klayout booleans

c3

%time

c4 = gf.geometry.boolean(c1, c2, operation=operation)

c4

```
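If the four operations are hard to keep apart, it can help to think of each shape as a set of grid cells: the boolean operations are then ordinary set operations. The sketch below rasterizes two ellipses with the radii `(8, 8)` and `(11, 4)` used above onto an integer grid. The helper names are hypothetical and the rasterization is only an analogy; the library works on polygons, not on cells.

```python
def rasterize_ellipse(rx, ry):
    """Return the set of integer grid points that lie inside the ellipse."""
    return {
        (x, y)
        for x in range(-rx, rx + 1)
        for y in range(-ry, ry + 1)
        if (x / rx) ** 2 + (y / ry) ** 2 <= 1.0
    }


def boolean(a, b, operation):
    """Apply a boolean operation to two sets of grid cells."""
    operations = {
        "and": a & b,  # cells in both shapes
        "or": a | b,   # cells in either shape
        "not": a - b,  # cells in A but not in B (A-B)
        "xor": a ^ b,  # cells in exactly one of the shapes
    }
    return operations[operation]


A = rasterize_ellipse(8, 8)
B = rasterize_ellipse(11, 4)
counts = {op: len(boolean(A, B, op)) for op in ("not", "and", "or", "xor")}
print(counts)
```

The counts satisfy the usual identities, for example the number of cells in `or` equals the number in `and` plus the number in `xor`.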

### Offset

The ``offset()`` function takes the polygons of the input geometry, combines them together, and expands/contracts them. The function returns polygons on a single layer -- it does not respect layers.

Speedup note: The ``num_divisions`` argument can be used to divide up the geometry into multiple rectangular regions and process each region sequentially (which is more computationally efficient). If you have a large geometry that takes a long time to process, try using ``num_divisions = [10,10]`` to optimize the operation.

```

import gdsfactory as gf

# Create `T`, an ellipse and rectangle which will be offset (expanded / contracted)

T = gf.Component("ellipse_and_rectangle")

e = T << gf.components.ellipse(radii=(10, 5), layer=(1, 0))

r = T << gf.components.rectangle(size=[15, 5], layer=(2, 0))

r.move([3, -2.5])

Texpanded = gf.geometry.offset(

T, distance=2, join_first=True, precision=1e-6, num_divisions=[1, 1], layer=(0, 0)

)

Texpanded.name = "expanded"

Tshrink = gf.geometry.offset(

T,

distance=-1.5,

join_first=True,

precision=1e-6,

num_divisions=[1, 1],

layer=(0, 0),

)

Tshrink.name = "shrink"

# Plot the original geometry, the expanded, and the shrunk versions

D = gf.Component("top")

t1 = D.add_ref(T)

t2 = D.add_ref(Texpanded)

t3 = D.add_ref(Tshrink)

D.distribute([t1, t2, t3], direction="x", spacing=5)

D

```

### Outline

The ``outline()`` function takes the polygons of the input geometry then performs an offset and "not" boolean operation to create an outline. The function returns polygons on a single layer -- it does not respect layers.

Speedup note: The ``num_divisions`` argument can be used to divide up the geometry into multiple rectangular regions and process each region sequentially (which is more computationally efficient). If you have a large geometry that takes a long time to process, try using ``num_divisions = [10,10]`` to optimize the operation.

```

import gdsfactory as gf

# Create a blank device and add two shapes

X = gf.Component()

X.add_ref(gf.components.cross(length=25, width=1, layer=(1, 0)))

X.add_ref(gf.components.ellipse(radii=[10, 5], layer=(2, 0)))

O = gf.geometry.outline(X, distance=1.5, precision=1e-6, layer=(0, 0))

# Plot the original geometry and the result

D = gf.Component()

D.add_ref(X)

D.add_ref(O).movex(30)

D

```
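The "offset, then NOT" recipe can be sketched on a grid of cells: grow the shape by a number of cells, then remove the original cells. This is an analogy with hypothetical helper names; the grid version grows the shape with square corners, whereas a polygon offset may round or mitre them.

```python
def offset(cells, distance):
    """Grow a set of grid cells by `distance` cells in every direction."""
    return {
        (x + dx, y + dy)
        for x, y in cells
        for dx in range(-distance, distance + 1)
        for dy in range(-distance, distance + 1)
    }


def outline(cells, distance):
    """Offset the shape, then subtract the original (boolean 'not')."""
    return offset(cells, distance) - cells


shape = {(x, y) for x in range(4) for y in range(2)}  # a 4 x 2 block of cells
ring = outline(shape, 1)

# Draw the result: '#' is the original shape, 'o' is the outline
for y in range(2, -2, -1):
    print("".join("#" if (x, y) in shape else "o" if (x, y) in ring else "." for x in range(-1, 5)))
```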

The ``open_ports`` argument opens holes in the outlined geometry at each Port location.

- If not False, holes will be cut in the outline such that the Ports are not covered.

- If True, the holes will have the same width as the Ports.

- If a float, the holes will be widened by that value.

- If a float equal to the outline ``distance``, the outline will be flush with the port (useful for positive-tone processes).

```

D = gf.components.L(width=7, size=(10, 20), layer=(1, 0))

D

# Outline the geometry and open a hole at each port

N = gf.geometry.outline(D, distance=5, open_ports=False) # No holes

N

O = gf.geometry.outline(

D, distance=5, open_ports=True

) # Hole is the same width as the port

O

P = gf.geometry.outline(

D, distance=5, open_ports=2.9

) # Change the hole size by entering a float

P

Q = gf.geometry.outline(

D, distance=5, open_ports=5

) # Creates a flush opening (open_ports equal to distance)

Q

```

### Invert

The ``gf.geometry.invert()`` function creates an inverted version of the input geometry. The function creates a rectangle around the geometry (with extra padding of distance ``border``), then subtracts all polygons from all layers from that rectangle, resulting in an inverted version of the geometry.

Speedup note: The ``num_divisions`` argument can be used to divide up the geometry into multiple rectangular regions and process each region sequentially (which is more computationally efficient). If you have a large geometry that takes a long time to process, try using ``num_divisions = [10,10]`` to optimize the operation.

```

import gdsfactory as gf

E = gf.components.ellipse(radii=(10, 5))

D = gf.geometry.invert(E, border=0.5, precision=1e-6, layer=(0, 0))

D

```

### Union

The ``union()`` function is a "join" function, and is functionally identical to the "OR" operation of ``gf.geometry.boolean()``. The one difference is that it can perform this operation layer-wise, so each layer can be individually combined.

```

import gdsfactory as gf

D = gf.Component()

e0 = D << gf.components.ellipse(layer=(1, 0))

e1 = D << gf.components.ellipse(layer=(2, 0))

e2 = D << gf.components.ellipse(layer=(3, 0))

e3 = D << gf.components.ellipse(layer=(4, 0))

e4 = D << gf.components.ellipse(layer=(5, 0))

e5 = D << gf.components.ellipse(layer=(6, 0))

e1.rotate(15 * 1)

e2.rotate(15 * 2)

e3.rotate(15 * 3)

e4.rotate(15 * 4)

e5.rotate(15 * 5)

D

# There are two ways to union: take all polygons, regardless of

# layer, and join them together (in this case on layer (0, 0)) like so:

D_joined = gf.geometry.union(D, by_layer=False, layer=(0, 0))

D_joined

# Or we can perform the union operation by layer

D_joined_by_layer = gf.geometry.union(D, by_layer=True)

D_joined_by_layer

```

### XOR / diff

The ``xor_diff()`` function can be used to compare two geometries and identify where they are different. Specifically, it performs a layer-wise XOR operation. If two geometries are identical, the result will be an empty Device. If they are not identical, any areas not shared by the two geometries will remain.

```

import gdsfactory as gf

A = gf.Component()

A.add_ref(gf.components.ellipse(radii=[10, 5], layer=(1, 0)))

A.add_ref(gf.components.text("A")).move([3, 0])

B = gf.Component()

B.add_ref(gf.components.ellipse(radii=[11, 4], layer=(1, 0))).movex(4)

B.add_ref(gf.components.text("B")).move([3.2, 0])

X = gf.geometry.xor_diff(A=A, B=B, precision=1e-6)

# Plot the original geometry and the result

# Upper left: A / Upper right: B

# Lower left: A and B / Lower right: A xor B "diff" comparison

D = gf.Component()

D.add_ref(A).move([-15, 25])

D.add_ref(B).move([15, 25])

D.add_ref(A).movex(-15)

D.add_ref(B).movex(-15)

D.add_ref(X).movex(15)

D

```
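A layer-wise XOR can be sketched by representing each geometry as a mapping from layer to a set of grid cells and applying the set XOR per layer. The function name matches the one above only for readability; the data model here is a hypothetical stand-in, not the gdsfactory implementation.

```python
def xor_diff(a, b):
    """Return, per layer, the cells that belong to exactly one of the two geometries."""
    result = {}
    for layer in set(a) | set(b):
        diff = a.get(layer, set()) ^ b.get(layer, set())
        if diff:
            result[layer] = diff
    return result


# Two toy geometries: they agree on layer (1, 0) and differ on layer (2, 0)
A = {(1, 0): {(0, 0), (1, 0)}, (2, 0): {(5, 5)}}
B = {(1, 0): {(0, 0), (1, 0)}, (2, 0): {(5, 5), (6, 5)}}

print(xor_diff(A, A))  # identical geometries give an empty result
print(xor_diff(A, B))  # only the cell that differs remains
```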

## Lithography structures

### Step-resolution

The `gf.components.litho_steps()` function creates a lithographic test structure that is useful for measuring the resolution of photoresist or electron-beam resists. It provides both positive-tone and negative-tone resolution tests.

```

D = gf.components.litho_steps(

line_widths=[1, 2, 4, 8, 16], line_spacing=10, height=100, layer=(1, 0)

)

D

```

### Calipers (inter-layer alignment)

The `gf.components.litho_calipers()` function is used to detect offsets in multilayer fabrication. It creates two sets of notches on different layers. When a fabrication error/offset occurs, it is easy to detect how large the offset is because the two center notches are no longer aligned.

```

D = gf.components.litho_calipers(

notch_size=[1, 5],

notch_spacing=2,

num_notches=7,

offset_per_notch=0.1,

row_spacing=0,

layer1=(1, 0),

layer2=(2, 0),

)

D

```
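The calipers work like a vernier scale. As a rough sketch, assume that notch `i` on the second layer is drawn displaced by `i * offset_per_notch` relative to its partner on the first layer; a fabrication shift then moves the pair that lines up away from the center. The helper names and the sign convention below are assumptions made for illustration.

```python
def aligned_notch(misalignment, offset_per_notch=0.1, num_notches=7):
    """Index of the notch pair that lines up best for a given layer-to-layer shift."""
    return min(
        range(-num_notches, num_notches + 1),
        key=lambda i: abs(i * offset_per_notch + misalignment),
    )


def estimated_misalignment(notch_index, offset_per_notch=0.1):
    """Read the shift back from the index of the aligned notch pair."""
    return -notch_index * offset_per_notch


for shift in (0.0, 0.3, -0.2):
    index = aligned_notch(shift)
    print(shift, index, round(estimated_misalignment(index), 3))
```

The measurement range under these assumptions is `num_notches * offset_per_notch` in either direction, with a resolution of `offset_per_notch`.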

## Paths / straights

See the **Path tutorial** for more details -- this is just an enumeration of the available built-in Path functions

### Circular arc

```

P = gf.path.arc(radius=10, angle=135, npoints=720)

gf.plot(P)

```

### Straight

```

import gdsfactory as gf

P = gf.path.straight(length=5, npoints=100)

gf.plot(P)

```

### Euler curve

Also known as a straight-to-bend, clothoid, racetrack, or track transition, this Path tapers adiabatically from straight to curved. It is often used to minimize losses in photonic waveguides. If `p < 1.0`, it creates a "partial euler" curve as described in Vogelbacher et al. https://dx.doi.org/10.1364/oe.27.031394. If the `use_eff` argument is false, `radius` corresponds to the minimum radius of curvature of the bend. If `use_eff` is true, `radius` corresponds to the "effective" radius of the bend: the curve is scaled such that the endpoints match an arc with parameters `radius` and `angle`.

```

P = gf.path.euler(radius=3, angle=90, p=1.0, use_eff=False, npoints=720)

gf.plot(P)

P

```
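To see what "the bend radius changes gradually" means numerically, the sketch below integrates a full Euler bend (the `p = 1.0`, `use_eff=False` case) whose curvature rises linearly from zero to `1 / radius_min` at the midpoint and then falls back to zero. The function `euler_bend` is a hypothetical stand-alone illustration using simple midpoint integration, not the code behind `gf.path.euler`.

```python
import math


def euler_bend(radius_min, angle_deg, npoints=720):
    """Integrate a full Euler bend whose tightest radius is `radius_min`.

    Returns the list of (x, y) points and the final heading in radians.
    """
    alpha = math.radians(angle_deg)
    half_length = alpha * radius_min  # each half turns the heading by alpha / 2
    total_length = 2 * half_length
    ds = total_length / (npoints - 1)
    x = y = heading = 0.0
    points = [(x, y)]
    for i in range(npoints - 1):
        s_mid = (i + 0.5) * ds
        # Curvature ramps linearly from 0 up to 1 / radius_min, then back down to 0
        curvature = min(s_mid, total_length - s_mid) / (radius_min * half_length)
        heading_mid = heading + 0.5 * curvature * ds
        x += math.cos(heading_mid) * ds
        y += math.sin(heading_mid) * ds
        heading += curvature * ds
        points.append((x, y))
    return points, heading


points, final_heading = euler_bend(radius_min=3, angle_deg=90)
print(len(points), round(math.degrees(final_heading), 2))
print(points[-1])
```

Because the curvature starts and ends at zero, the bend joins a straight section without a jump in curvature, which is the property that motivates using it instead of a circular arc.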

### Smooth path from waypoints

```

import numpy as np

import gdsfactory as gf

points = np.array([(20, 10), (40, 10), (20, 40), (50, 40), (50, 20), (70, 20)])

P = gf.path.smooth(

points=points,

radius=2,

bend=gf.path.euler,

use_eff=False,

)

gf.plot(P)

```

### Delay spiral

```

gf.components.spiral()

```

## Importing GDS files

`gf.import_gds()` allows you to easily import external GDSII files. It imports a single cell from the external GDS file and converts it into a gdsfactory component.

```

D = gf.components.ellipse()

D.write_gds("myoutput.gds")

D = gf.import_gds(gdspath="myoutput.gds", cellname=None, flatten=False)

D

```

## LayerSet

The `LayerSet` class allows you to predefine a collection of layers and specify their properties including: gds layer/datatype, name, and color. It also comes with a handy preview function called `gf.layers.preview_layerset()`

```

import gdsfactory as gf

lys = gf.layers.LayerSet()

lys.add_layer("p", color="lightblue", gds_layer=1, gds_datatype=0)

lys.add_layer("p+", color="blue", gds_layer=2, gds_datatype=0)

lys.add_layer("p++", color="darkblue", gds_layer=3, gds_datatype=0)

lys.add_layer("n", color="lightgreen", gds_layer=4, gds_datatype=0)

lys.add_layer("n+", color="green", gds_layer=4, gds_datatype=98)

lys.add_layer("n++", color="darkgreen", gds_layer=5, gds_datatype=99)

D = gf.layers.preview_layerset(lys, size=100, spacing=100)

D

```

## Useful contact pads / connectors

These functions are common shapes with ports, often used to make contact pads

```

D = gf.components.compass(size=(4, 2), layer=(1, 0))

D

import phidl.geometry as pg
from phidl import quickplot as plot

D = pg.compass_multi(size=(4, 2), ports={"N": 3, "S": 4}, layer=(1, 0))
plot(D) # quickplot the geometry

D = pg.flagpole(
    size=(50, 25), stub_size=(4, 8), shape="p", taper_type="fillet", layer=(1, 0)
)
# taper_type should be None, 'fillet', or 'straight'
plot(D) # quickplot the geometry

D = pg.straight(size=(4, 2), layer=(1, 0))
plot(D) # quickplot the geometry

D = pg.connector(midpoint=(0, 0), width=1, orientation=0)
plot(D) # quickplot the geometry

D = pg.tee(size=(8, 4), stub_size=(1, 2), taper_type="fillet", layer=(1, 0))
# taper_type should be None, 'fillet', or 'straight'
plot(D)

```

## Chip / die template

```

import gdsfactory as gf

D = gf.components.die(
    size=(10000, 5000),  # Size of die
    street_width=100,  # Width of corner marks for die-sawing
    street_length=1000,  # Length of corner marks for die-sawing
    die_name="chip99",  # Label text
    text_size=500,  # Label text size
    text_location="SW",  # Label text compass location e.g. 'S', 'SE', 'SW'
    layer=(2, 0),
    bbox_layer=(3, 0),
)
D

```

## Optimal superconducting curves

The following structures are meant to reduce "current crowding" in superconducting thin-film structures (such as superconducting nanowires). They are the result of conformal mapping equations derived in Clem, J. & Berggren, K. "[Geometry-dependent critical currents in superconducting nanocircuits](http://dx.doi.org/10.1103/PhysRevB.84.174510)." Phys. Rev. B 84, 1–27 (2011).

```

import phidl.geometry as pg
from phidl import quickplot as plot

D = pg.optimal_hairpin(
    width=0.2, pitch=0.6, length=10, turn_ratio=4, num_pts=50, layer=0
)
plot(D)  # quickplot the geometry

D = pg.optimal_step(
    start_width=10,
    end_width=22,
    num_pts=50,
    width_tol=1e-3,
    anticrowding_factor=1.2,
    symmetric=False,
    layer=0,
)
plot(D)  # quickplot the geometry

D = pg.optimal_90deg(width=100.0, num_pts=15, length_adjust=1, layer=0)
plot(D)  # quickplot the geometry

D = pg.snspd(
    wire_width=0.2,
    wire_pitch=0.6,
    size=(10, 8),
    num_squares=None,
    turn_ratio=4,
    terminals_same_side=False,
    layer=0,
)
plot(D)  # quickplot the geometry

D = pg.snspd_expanded(
    wire_width=0.3,
    wire_pitch=0.6,
    size=(10, 8),
    num_squares=None,
    connector_width=1,
    connector_symmetric=False,
    turn_ratio=4,
    terminals_same_side=False,
    layer=0,
)
plot(D)  # quickplot the geometry

```

## Copying and extracting geometry

```

E = gf.Component()

E.add_ref(gf.components.ellipse(layer=(1, 0)))

D = E.extract(layers=[(1, 0)])

D

import gdsfactory as gf

X = gf.components.ellipse(layer=(2, 0))

c = X.copy()

c

```

|

github_jupyter

|

import gdsfactory as gf

gf.components.rectangle(size=(4.5, 2), layer=(1, 0))

D = gf.Component()

arc = D << gf.components.bend_circular(radius=10, width=0.5, angle=90, layer=(1, 0))

arc.rotate(90)

# Draw a rectangle around the arc we created by using the arc's bounding box

rect = D << gf.components.bbox(bbox=arc.bbox, layer=(0, 0))

D

gf.components.cross(length=10, width=0.5, layer=(1, 0))

gf.components.ellipse(radii=(10, 5), angle_resolution=2.5, layer=(1, 0))

gf.components.circle(radius=10, angle_resolution=2.5, layer=(1, 0))

gf.components.ring(radius=5, width=0.5, angle_resolution=2.5, layer=(1, 0))

gf.components.ring_single(

width=0.5, gap=0.2, radius=10, length_x=4, length_y=2, layer=(1, 0)

)

import gdsfactory as gf

gf.components.ring_double(

width=0.5, gap=0.2, radius=10, length_x=4, length_y=2, layer=(1, 0)

)

gf.components.ring_double(

width=0.5,

gap=0.2,

radius=10,

length_x=4,

length_y=2,

layer=(1, 0),

bend=gf.components.bend_circular,

)

gf.components.bend_circular(radius=2.0, width=0.5, angle=90, npoints=720, layer=(1, 0))

gf.components.bend_euler(radius=2.0, width=0.5, angle=90, npoints=720, layer=(1, 0))

gf.components.taper(length=10, width1=6, width2=4, port=None, layer=(1, 0))

gf.components.ramp(length=10, width1=4, width2=8, layer=(1, 0))

gf.components.L(width=7, size=(10, 20), layer=(1, 0))

gf.components.C(width=7, size=(10, 20), layer=(1, 0))

gf.components.text(

text="Hello world!\nMultiline text\nLeft-justified",

size=10,

justify="left",

layer=(1, 0),

)

# `justify` should be either 'left', 'center', or 'right'

import gdsfactory as gf

components_list = []

for width1 in [1, 6, 9]:

for width2 in [1, 2, 4, 8]:

D = gf.components.taper(length=10, width1=width1, width2=width2, layer=(1, 0))

components_list.append(D)

c = gf.grid(

components_list,

spacing=(5, 1),

separation=True,

shape=(3, 4),

align_x="x",

align_y="y",

edge_x="x",

edge_y="ymax",

)

c

import numpy as np

import gdsfactory as gf

np.random.seed(5)

D_list = [gf.components.rectangle(size=(i, i)) for i in range(1, 10)]

D_packed_list = gf.pack(

D_list, # Must be a list or tuple of Devices

spacing=1.25, # Minimum distance between adjacent shapes

aspect_ratio=(2, 1), # (width, height) ratio of the rectangular bin

max_size=(None, None), # Limits the size into which the shapes will be packed

density=1.05, # Values closer to 1 pack tighter but require more computation

sort_by_area=True, # Pre-sorts the shapes by area

)

D = D_packed_list[0] # Only one bin was created, so we plot that

D

np.random.seed(1)

D_list = [

gf.components.ellipse(radii=tuple(np.random.rand(2) * n + 2)) for n in range(120)

]

D_packed_list = gf.pack(

D_list, # Must be a list or tuple of Devices

spacing=4, # Minimum distance between adjacent shapes

aspect_ratio=(1, 1), # Shape of the box

max_size=(500, 500), # Limits the size into which the shapes will be packed

density=1.05, # Values closer to 1 pack tighter but require more computation

sort_by_area=True, # Pre-sorts the shapes by area

)

# Put all packed bins into a single device and spread them out with distribute()

F = gf.Component()

[F.add_ref(D) for D in D_packed_list]

F.distribute(elements="all", direction="x", spacing=100, separation=True)

F

c = gf.Component()

# Create different-sized rectangles and add them to c

[

c.add_ref(

gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20], layer=(0, 0))

).move([n, n * 4])

for n in [0, 2, 3, 1, 2]

]

c

D = gf.Component()

# Create different-sized rectangles and add them to D

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

# Distribute all the rectangles in D along the x-direction with a separation of 5

D.distribute(

elements="all", # either 'all' or a list of objects

direction="x", # 'x' or 'y'

spacing=5,

separation=True,

)

D

D = gf.Component()

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(

elements="all", direction="x", spacing=100, separation=False, edge="xmin"

) # edge must be either 'xmin' (left), 'xmax' (right), or 'x' (center)

D

D = gf.Component()

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move(

(n - 10, n * 4)

)

for n in [0, 2, 3, 1, 2]

]

D.distribute(

elements="all", direction="x", spacing=100, separation=False, edge="x"

) # edge must be either 'xmin' (left), 'xmax' (right), or 'x' (center)

D

D = gf.Component()

# Create different-sized rectangles and add them to D then distribute them

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(elements="all", direction="x", spacing=5, separation=True)

D

D = gf.Component()

# Create different-sized rectangles and add them to D then distribute them

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(elements="all", direction="x", spacing=5, separation=True)

# Align top edges

D.align(elements="all", alignment="ymax")

D

D = gf.Component()

# Create different-sized rectangles and add them to D then distribute them

[

D.add_ref(gf.components.rectangle(size=[n * 15 + 20, n * 15 + 20])).move((n, n * 4))

for n in [0, 2, 3, 1, 2]

]

D.distribute(elements="all", direction="x", spacing=5, separation=True)

# Align the vertical centers (alignment="y")

D.align(elements="all", alignment="y")

D

import gdsfactory as gf

E = gf.components.ellipse(radii=(10, 5), layer=(1, 0))

R = gf.components.rectangle(size=[15, 5], layer=(2, 0))

C = gf.geometry.boolean(

A=E, B=R, operation="not", precision=1e-6, num_divisions=[1, 1], layer=(0, 0)

)

# Other operations include 'and', 'or', 'xor', or equivalently 'A-B', 'B-A', 'A+B'

# Plot the originals and the result

D = gf.Component()

D.add_ref(E)

D.add_ref(R).movey(-1.5)

D.add_ref(C).movex(30)

D

import gdsfactory as gf

operation = "not"

operation = "and"

operation = "or"

operation = "xor"

r1 = (8, 8)

r2 = (11, 4)

r1 = (80, 80)

r2 = (110, 40)

angle_resolution = 0.1

c1 = gf.components.ellipse(radii=r1, layer=(1, 0), angle_resolution=angle_resolution)

c2 = gf.components.ellipse(radii=r2, layer=(1, 0), angle_resolution=angle_resolution)

%time

c3 = gf.geometry.boolean_klayout(

c1, c2, operation=operation, layer3=(1, 0)

) # klayout booleans

c3

%time

c4 = gf.geometry.boolean(c1, c2, operation=operation)

c4

import gdsfactory as gf

# Create `T`, an ellipse and rectangle which will be offset (expanded / contracted)

T = gf.Component("ellipse_and_rectangle")

e = T << gf.components.ellipse(radii=(10, 5), layer=(1, 0))

r = T << gf.components.rectangle(size=[15, 5], layer=(2, 0))

r.move([3, -2.5])

Texpanded = gf.geometry.offset(

T, distance=2, join_first=True, precision=1e-6, num_divisions=[1, 1], layer=(0, 0)

)

Texpanded.name = "expanded"

Tshrink = gf.geometry.offset(

T,

distance=-1.5,

join_first=True,

precision=1e-6,

num_divisions=[1, 1],

layer=(0, 0),

)

Tshrink.name = "shrink"

# Plot the original geometry, the expanded, and the shrunk versions

D = gf.Component("top")

t1 = D.add_ref(T)

t2 = D.add_ref(Texpanded)

t3 = D.add_ref(Tshrink)

D.distribute([t1, t2, t3], direction="x", spacing=5)

D

import gdsfactory as gf

# Create a blank device and add two shapes

X = gf.Component()

X.add_ref(gf.components.cross(length=25, width=1, layer=(1, 0)))

X.add_ref(gf.components.ellipse(radii=[10, 5], layer=(2, 0)))

O = gf.geometry.outline(X, distance=1.5, precision=1e-6, layer=(0, 0))

# Plot the original geometry and the result

D = gf.Component()

D.add_ref(X)

D.add_ref(O).movex(30)

D

D = gf.components.L(width=7, size=(10, 20), layer=(1, 0))

D

# Outline the geometry and open a hole at each port

N = gf.geometry.outline(D, distance=5, open_ports=False) # No holes

N

O = gf.geometry.outline(

D, distance=5, open_ports=True

) # Hole is the same width as the port

O

P = gf.geometry.outline(

D, distance=5, open_ports=2.9

) # Change the hole size by entering a float

P

Q = gf.geometry.outline(

D, distance=5, open_ports=5

) # Creates flush opening (open_ports > distance)

Q

import gdsfactory as gf

E = gf.components.ellipse(radii=(10, 5))

D = gf.geometry.invert(E, border=0.5, precision=1e-6, layer=(0, 0))

D

import gdsfactory as gf

D = gf.Component()

e0 = D << gf.components.ellipse(layer=(1, 0))

e1 = D << gf.components.ellipse(layer=(2, 0))

e2 = D << gf.components.ellipse(layer=(3, 0))

e3 = D << gf.components.ellipse(layer=(4, 0))

e4 = D << gf.components.ellipse(layer=(5, 0))

e5 = D << gf.components.ellipse(layer=(6, 0))

e1.rotate(15 * 1)

e2.rotate(15 * 2)

e3.rotate(15 * 3)

e4.rotate(15 * 4)

e5.rotate(15 * 5)

D

# There are two ways to take a union: join all polygons, regardless of

# layer, into a single result (in this case on layer (0, 0)), like so:

D_joined = gf.geometry.union(D, by_layer=False, layer=(0, 0))

D_joined

# Or we can perform the union operation layer by layer

D_joined_by_layer = gf.geometry.union(D, by_layer=True)

D_joined_by_layer

import gdsfactory as gf

A = gf.Component()

A.add_ref(gf.components.ellipse(radii=[10, 5], layer=(1, 0)))

A.add_ref(gf.components.text("A")).move([3, 0])

B = gf.Component()

B.add_ref(gf.components.ellipse(radii=[11, 4], layer=(1, 0))).movex(4)

B.add_ref(gf.components.text("B")).move([3.2, 0])

X = gf.geometry.xor_diff(A=A, B=B, precision=1e-6)

# Plot the original geometry and the result

# Upper left: A / Upper right: B

# Lower left: A and B / Lower right: A xor B "diff" comparison

D = gf.Component()

D.add_ref(A).move([-15, 25])

D.add_ref(B).move([15, 25])

D.add_ref(A).movex(-15)

D.add_ref(B).movex(-15)

D.add_ref(X).movex(15)

D

D = gf.components.litho_steps(

line_widths=[1, 2, 4, 8, 16], line_spacing=10, height=100, layer=(1, 0)

)

D

D = gf.components.litho_calipers(

notch_size=[1, 5],

notch_spacing=2,

num_notches=7,

offset_per_notch=0.1,

row_spacing=0,

layer1=(1, 0),

layer2=(2, 0),

)

D

P = gf.path.arc(radius=10, angle=135, npoints=720)

gf.plot(P)

import gdsfactory as gf

P = gf.path.straight(length=5, npoints=100)

gf.plot(P)

P = gf.path.euler(radius=3, angle=90, p=1.0, use_eff=False, npoints=720)

gf.plot(P)

P

import numpy as np

import gdsfactory as gf

points = np.array([(20, 10), (40, 10), (20, 40), (50, 40), (50, 20), (70, 20)])

P = gf.path.smooth(

points=points,

radius=2,

bend=gf.path.euler,

use_eff=False,

)

gf.plot(P)

gf.components.spiral()

D = gf.components.ellipse()

D.write_gds("myoutput.gds")

D = gf.import_gds(gdspath="myoutput.gds", cellname=None, flatten=False)

D

import gdsfactory as gf

lys = gf.layers.LayerSet()

lys.add_layer("p", color="lightblue", gds_layer=1, gds_datatype=0)

lys.add_layer("p+", color="blue", gds_layer=2, gds_datatype=0)

lys.add_layer("p++", color="darkblue", gds_layer=3, gds_datatype=0)

lys.add_layer("n", color="lightgreen", gds_layer=4, gds_datatype=0)

lys.add_layer("n+", color="green", gds_layer=4, gds_datatype=98)

lys.add_layer("n++", color="darkgreen", gds_layer=5, gds_datatype=99)

D = gf.layers.preview_layerset(lys, size=100, spacing=100)

D

D = gf.components.compass(size=(4, 2), layer=(1, 0))

D

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.compass_multi(size=(4, 2), ports={"N": 3, "S": 4}, layer=(1, 0))

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.flagpole(

size=(50, 25), stub_size=(4, 8), shape="p", taper_type="fillet", layer=(1, 0)

)

# taper_type should be None, 'fillet', or 'straight'

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.straight(size=(4, 2), layer=(1, 0))

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.connector(midpoint=(0, 0), width=1, orientation=0)

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.tee(size=(8, 4), stub_size=(1, 2), taper_type="fillet", layer=(1, 0))

# taper_type should be None, 'fillet', or 'straight'

plot(D)

import gdsfactory as gf

D = gf.components.die(

size=(10000, 5000), # Size of die

street_width=100, # Width of corner marks for die-sawing

street_length=1000, # Length of corner marks for die-sawing

die_name="chip99", # Label text

text_size=500, # Label text size

text_location="SW", # Label text compass location e.g. 'S', 'SE', 'SW'

layer=(2,0),

bbox_layer=(3,0),

)

D

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.optimal_hairpin(

width=0.2, pitch=0.6, length=10, turn_ratio=4, num_pts=50, layer=0

)

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.optimal_step(

start_width=10,

end_width=22,

num_pts=50,

width_tol=1e-3,

anticrowding_factor=1.2,

symmetric=False,

layer=0,

)

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.optimal_90deg(width=100.0, num_pts=15, length_adjust=1, layer=0)

plot(D) # quickplot the geometry

import phidl.geometry as pg

from phidl import quickplot as plot

D = pg.snspd(

wire_width=0.2,

wire_pitch=0.6,

size=(10, 8),

num_squares=None,

turn_ratio=4,

terminals_same_side=False,

layer=0,

)

plot(D) # quickplot the geometry

D = pg.snspd_expanded(

wire_width=0.3,

wire_pitch=0.6,

size=(10, 8),

num_squares=None,

connector_width=1,

connector_symmetric=False,

turn_ratio=4,

terminals_same_side=False,

layer=0,

)

plot(D) # quickplot the geometry

E = gf.Component()

E.add_ref(gf.components.ellipse(layer=(1, 0)))

D = E.extract(layers=[(1, 0)])

D

import gdsfactory as gf

X = gf.components.ellipse(layer=(2, 0))

c = X.copy()

c

| 0.743913 | 0.960842 |

```

# Note: this example uses the TensorFlow 1.x graph API (placeholders and sessions).
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('Data/fashion', one_hot=True)

n_classes = 10
input_size = 784

x = tf.placeholder(tf.float32, shape=[None, input_size])
y = tf.placeholder(tf.float32, shape=[None, n_classes])


def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


# First convolutional layer: 7x7 kernels, 1 -> 100 channels
W_conv1 = weight_variable([7, 7, 1, 100])
b_conv1 = bias_variable([100])
x_image = tf.reshape(x, [-1, 28, 28, 1])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)

# Second convolutional layer: 4x4 kernels, 100 -> 150 channels
W_conv2 = weight_variable([4, 4, 100, 150])
b_conv2 = bias_variable([150])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)

# Third convolutional layer: 4x4 kernels, 150 -> 250 channels
W_conv3 = weight_variable([4, 4, 150, 250])
b_conv3 = bias_variable([250])
h_conv3 = tf.nn.relu(conv2d(h_pool2, W_conv3) + b_conv3)
h_pool3 = max_pool_2x2(h_conv3)

# Fully connected layer on the flattened 4x4x250 feature map
W_fc1 = weight_variable([4 * 4 * 250, 300])
b_fc1 = bias_variable([300])
h_pool3_flat = tf.reshape(h_pool3, [-1, 4 * 4 * 250])
h_fc1 = tf.nn.relu(tf.matmul(h_pool3_flat, W_fc1) + b_fc1)

# Dropout; keep_prob is the probability of keeping each unit
keep_prob = tf.placeholder(tf.float32)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# Output layer (logits)
W_fc2 = weight_variable([300, n_classes])
b_fc2 = bias_variable([n_classes])
y_pred = tf.matmul(h_fc1_drop, W_fc2) + b_fc2

with tf.name_scope('cross_entropy'):
    diff = tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=y_pred)
    with tf.name_scope('total'):
        cross_entropy = tf.reduce_mean(diff)
tf.summary.scalar('cross_entropy', cross_entropy)

learning_rate = 0.001
train_step = tf.train.AdamOptimizer(learning_rate).minimize(cross_entropy)

with tf.name_scope('accuracy'):
    correct_prediction = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
tf.summary.scalar('accuracy', accuracy)

sess = tf.InteractiveSession()

# Write training and validation summaries to separate TensorBoard log directories
log_dir = 'tensorboard-example'
merged = tf.summary.merge_all()
train_writer = tf.summary.FileWriter(log_dir + '/train', sess.graph)
val_writer = tf.summary.FileWriter(log_dir + '/val')

n_steps = 1000
batch_size = 128
dropout = 0.25  # fed to keep_prob below, so each unit is kept with probability 0.25
evaluate_every = 10

tf.global_variables_initializer().run()

for i in range(n_steps):
    x_batch, y_batch = mnist.train.next_batch(batch_size)
    summary, _, train_acc = sess.run([merged, train_step, accuracy], feed_dict={x: x_batch, y: y_batch, keep_prob: dropout})
    train_writer.add_summary(summary, i)

    if i % evaluate_every == 0:
        # Evaluate on the test set with dropout disabled (keep_prob = 1.0)
        summary, val_acc = sess.run([merged, accuracy], feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})
        val_writer.add_summary(summary, i)
        print('Step {:04.0f}: train_acc: {:.4f}; val_acc {:.4f}'.format(i, train_acc, val_acc))

train_writer.close()
val_writer.close()

```
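
The fully connected layer above flattens the last feature map to `4 * 4 * 250` values. The short sketch below works through that arithmetic in plain Python. It relies on the rule that a `'SAME'`-padded operation with stride `s` produces `ceil(size / s)` outputs along each spatial axis; the helper name `same_pool_output` is an assumption made for this illustration and is not part of TensorFlow.

```python
import math


def same_pool_output(size, stride=2):
    """Spatial size after a strided op with 'SAME' padding: ceil(size / stride)."""
    return math.ceil(size / stride)


size = 28  # side length of the input image after reshaping to [-1, 28, 28, 1]
sizes = [size]
for _ in range(3):  # three conv + pool stages
    # The stride-1 'SAME' convolution keeps the spatial size; only the pooling shrinks it.
    size = same_pool_output(size)
    sizes.append(size)

channels = 250  # output channels of the third convolution
flat_features = size * size * channels

print(sizes)          # [28, 14, 7, 4]
print(flat_features)  # 4000
```

The odd size 7 rounds up to 4 rather than down to 3, which is why the flattened size is `4 * 4 * 250` and not `3 * 3 * 250`.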

|

github_jupyter

|

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('Data/fashion', one_hot=True)

n_classes = 10

input_size = 784

x = tf.placeholder(tf.float32, shape=[None, input_size])

y = tf.placeholder(tf.float32, shape=[None, n_classes])

def weight_variable(shape):

initial = tf.truncated_normal(shape, stddev=0.1)

return tf.Variable(initial)

def bias_variable(shape):

initial = tf.constant(0.1, shape=shape)

return tf.Variable(initial)

def conv2d(x, W):

return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):

return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

W_conv1 = weight_variable([7, 7, 1, 100])

b_conv1 = bias_variable([100])

x_image = tf.reshape(x, [-1,28,28,1])

h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

h_pool1 = max_pool_2x2(h_conv1)

W_conv2 = weight_variable([4, 4, 100, 150])

b_conv2 = bias_variable([150])

h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

h_pool2 = max_pool_2x2(h_conv2)

W_conv3 = weight_variable([4, 4, 150, 250])

b_conv3 = bias_variable([250])

h_conv3 = tf.nn.relu(conv2d(h_pool2, W_conv3) + b_conv3)

h_pool3 = max_pool_2x2(h_conv3)

W_fc1 = weight_variable([4 * 4 * 250, 300])

b_fc1 = bias_variable([300])

h_pool3_flat = tf.reshape(h_pool3, [-1, 4*4*250])

h_fc1 = tf.nn.relu(tf.matmul(h_pool3_flat, W_fc1) + b_fc1)

keep_prob = tf.placeholder(tf.float32)

h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

W_fc2 = weight_variable([300, n_classes])

b_fc2 = bias_variable([n_classes])

y_pred = tf.matmul(h_fc1_drop, W_fc2) + b_fc2

with tf.name_scope('cross_entropy'):

diff = tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=y_pred)

with tf.name_scope('total'):

cross_entropy = tf.reduce_mean(diff)

tf.summary.scalar('cross_entropy', cross_entropy)

learning_rate = 0.001

train_step = tf.train.AdamOptimizer(learning_rate).minimize(cross_entropy)

with tf.name_scope('accuracy'):

correct_prediction = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

tf.summary.scalar('accuracy', accuracy)

sess = tf.InteractiveSession()

log_dir = 'tensorboard-example'

merged = tf.summary.merge_all()

train_writer = tf.summary.FileWriter(log_dir + '/train', sess.graph)

val_writer = tf.summary.FileWriter(log_dir + '/val')

n_steps = 1000

batch_size = 128

dropout = 0.25

evaluate_every = 10

tf.global_variables_initializer().run()

for i in range(n_steps):

x_batch, y_batch = mnist.train.next_batch(batch_size)

summary, _, train_acc = sess.run([merged, train_step, accuracy], feed_dict={x: x_batch, y: y_batch, keep_prob: dropout})

train_writer.add_summary(summary, i)

if i % evaluate_every == 0:

summary, val_acc = sess.run([merged, accuracy], feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})

val_writer.add_summary(summary, i)

print('Step {:04.0f}: train_acc: {:.4f}; val_acc {:.4f}'.format(i, train_acc, val_acc))

train_writer.close()

val_writer.close()

| 0.752286 | 0.790773 |

```

%matplotlib inline
import matplotlib
import pandas
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
from scipy.spatial.distance import cdist, pdist

cluster_data = pandas.read_csv('cluster_data.csv')
# Drop the first column and keep the remaining feature columns
sub_cluster_data = cluster_data.drop(cluster_data.columns[[0]], axis=1)
#sub_cluster_data

# scatter_matrix lives in pandas.plotting (pandas.tools.plotting has been removed)
pandas.plotting.scatter_matrix(sub_cluster_data, alpha=0.2, figsize=(12,12), diagonal='kde')

## K-means clustering
# Use silhouette scoring to identify the ideal number of clusters
from sklearn.metrics import silhouette_score

s = []
N_clusters = range(2,15)
for n_clusters in N_clusters:
    kmeans = KMeans(n_clusters=n_clusters)
    # .values replaces the removed DataFrame.as_matrix()
    kmeans.fit(sub_cluster_data.values)
    # Calculate the silhouette score for the current number of clusters
    s.append(silhouette_score(sub_cluster_data.values, kmeans.labels_, metric='euclidean'))

# Plot the results and highlight k = N_clusters[kIdx]
kIdx = 5
plt.plot(N_clusters,s)
plt.plot(N_clusters[kIdx],s[kIdx], marker='o', markersize=12,
         markeredgewidth=2, markeredgecolor='r', markerfacecolor='None')
plt.ylabel("Mean Silhouette Coeff.")
plt.xlabel("k")
plt.title("Mean Silhouette Coefficient vs k clusters")
plt.grid()

# Confirm the choice of number of clusters using the elbow method
# Taken from:
## http://datascience.stackexchange.com/questions/6508/k-means-incoherent-behaviour-choosing-k-with-elbow-method-bic-variance-explain
K = range(2,15)
KM = [KMeans(n_clusters=k).fit(sub_cluster_data.values) for k in K]
centroids = [k.cluster_centers_ for k in KM]

# Distance from every point to every centroid, for each value of k
D_k = [cdist(sub_cluster_data, cent, 'euclidean') for cent in centroids]
cIdx = [np.argmin(D,axis=1) for D in D_k]
dist = [np.min(D,axis=1) for D in D_k]
avgWithinSS = [sum(d)/sub_cluster_data.shape[0] for d in dist]

# Total within-cluster sum of squares
wcss = [sum(d**2) for d in dist]
tss = sum(pdist(sub_cluster_data)**2)/sub_cluster_data.shape[0]
bss = tss-wcss

kIdx = 6

# elbow curve
fig = plt.figure()
ax = fig.add_subplot(111)
ax.plot(K, avgWithinSS, 'b*-')
ax.plot(K[kIdx], avgWithinSS[kIdx], marker='o', markersize=12,
        markeredgewidth=2, markeredgecolor='r', markerfacecolor='None')
plt.grid(True)
plt.xlabel('Number of clusters')
plt.ylabel('Average within-cluster sum of squares')
plt.title('Elbow for KMeans clustering')

fig = plt.figure()
ax = fig.add_subplot(111)
ax.plot(K, bss/tss*100, 'b*-')
ax.plot(K[kIdx], bss[kIdx]/tss*100, marker='o', markersize=12,
        markeredgewidth=2, markeredgecolor='r', markerfacecolor='None')
plt.grid(True)
plt.xlabel('Number of clusters')
plt.ylabel('Percentage of variance explained')
plt.title('Elbow for KMeans clustering')

# Re-do K-means clustering using optimum values
np.random.seed(5)
optimum_k = 8
kmeans = KMeans(n_clusters=optimum_k)
kmeans.fit(sub_cluster_data.values)
labels = kmeans.labels_
centroids = kmeans.cluster_centers_

# Use PCA to identify the key dimensionality in the data
from sklearn.decomposition import PCA

pca = PCA(n_components=5)
pca.fit(sub_cluster_data)
pca.explained_variance_ratio_

# The first two principal components explain ~91% of the variation, so could simplify to 2-d
# Transform the raw data and the identified centroids on to a 2-d plane
np.random.seed(5)
pca = PCA(n_components=2)
pca.fit(sub_cluster_data)
points_pc = pca.transform(sub_cluster_data)
centroids_pc = pca.transform(centroids)

# Plot the points coloured by cluster, with each centroid annotated by its label
fig = plt.figure(figsize=(12,12))
ax = fig.add_subplot(111)
ax.scatter(points_pc[:,0],points_pc[:,1], c=labels, alpha=0.75)
ax.scatter(centroids_pc[:,0],centroids_pc[:,1],marker="+",s=200,linewidths=3,c="k")
for label, x, y in zip(range(optimum_k), centroids_pc[:,0],centroids_pc[:,1]):
    plt.annotate(
        label,
        xy = (x, y), xytext = (-20, 20),
        textcoords = 'offset points', ha = 'right', va = 'bottom',
        bbox = dict(boxstyle = 'round,pad=0.5', fc = 'yellow', alpha = 0.75),
        arrowprops = dict(arrowstyle = '->', connectionstyle = 'arc3,rad=0'))
plt.grid(True)
plt.xlabel('PC 1')
plt.ylabel('PC 2')
plt.title('Clusters transformed on to principal components')
plt.show()

np.set_printoptions(precision=3)
print(centroids)

grouped = sub_cluster_data.groupby(labels)
grouped.size()

# Plot, for every cluster and dimension, a histogram of deviations from the cluster mean
i=0
fig = plt.figure(figsize=(12,12))
for group in range(optimum_k):
    for dim in list(sub_cluster_data.columns.values):
        i+=1
        ax = fig.add_subplot(optimum_k,5,i)
        ax.hist(grouped.get_group(group)[dim].values - grouped.get_group(group)[dim].mean(), 15)
        ax.set_ylim([0,20])
        plt.grid(True)
        plt.title(str(group) + dim, {'verticalalignment':'top'})
plt.show()

grouped.std()

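# Draw 100 synthetic points per cluster from a Gaussian centred on each centroid.
# A covariance matrix holds variances on its diagonal, so the per-dimension
# standard deviations are squared here.
new_points = list()
labels2 = list()
for group in range(optimum_k):
    new_points.append(np.random.multivariate_normal(centroids[group,:],
                                                    np.diag(grouped.std().values[group,:] ** 2),
                                                    100))
    labels2.append(group * np.ones(100))

new_points = pandas.DataFrame(np.vstack(new_points), columns=['a','b','c','d','e'])
# One label per synthetic point, in the same order as the rows of new_points
labels2 = np.concatenate(labels2)

# Inspect the mean, covariance and samples for a single cluster
group=1
centroids[group,:]
np.diag(grouped.std().values[group,:] ** 2)
np.random.multivariate_normal(centroids[group,:],
                              np.diag(grouped.std().values[group,:] ** 2),
                              100)

pandas.plotting.scatter_matrix(new_points, alpha=0.2, figsize=(12,12), diagonal='kde')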

# Re-do K-means clustering on the synthetic points
np.random.seed(5)
optimum_k = 8
kmeans2 = KMeans(n_clusters=optimum_k)
kmeans2.fit(new_points)
labels2 = kmeans2.labels_
centroids2 = kmeans2.cluster_centers_

# Transform the synthetic data and the identified centroids on to a 2-d plane
np.random.seed(5)
pca2 = PCA(n_components=2)
pca2.fit(new_points)
# Use the PCA fitted on the synthetic points (pca2), not the earlier pca
points_pc2 = pca2.transform(new_points)
centroids_pc2 = pca2.transform(centroids2)

# Plot
fig = plt.figure(figsize=(12,12))
ax = fig.add_subplot(111)
ax.scatter(points_pc2[:,0],points_pc2[:,1], c=labels2, alpha=0.75)
ax.scatter(centroids_pc2[:,0],centroids_pc2[:,1],marker="+",s=200,linewidths=3,c="k")
for label, x, y in zip(range(optimum_k), centroids_pc2[:,0],centroids_pc2[:,1]):
    plt.annotate(
        label,
        xy = (x, y), xytext = (-20, 20),
        textcoords = 'offset points', ha = 'right', va = 'bottom',
        bbox = dict(boxstyle = 'round,pad=0.5', fc = 'yellow', alpha = 0.5),
        arrowprops = dict(arrowstyle = '->', connectionstyle = 'arc3,rad=0'))
plt.grid(True)
plt.xlabel('PC 1')
plt.ylabel('PC 2')
plt.title('Clusters transformed on to principal components')
plt.show()

```
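
The notebook above picks the number of clusters from the mean silhouette coefficient. As a rough illustration of what that score measures, the sketch below computes it by hand for four one-dimensional points. The function `mean_silhouette` is a hypothetical helper written for this example, not scikit-learn's implementation; it assumes at least two clusters and scores singleton clusters as 0.

```python
def mean_silhouette(points, labels):
    """Mean silhouette coefficient for 1-D points (pure Python, O(n^2))."""
    scores = []
    for i, (p, lab) in enumerate(zip(points, labels)):
        # a: mean distance to the other members of the same cluster
        same = [abs(p - q) for j, (q, l) in enumerate(zip(points, labels))
                if l == lab and j != i]
        if not same:
            scores.append(0.0)  # convention: a singleton cluster scores 0
            continue
        a = sum(same) / len(same)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(abs(p - q) for q, l in zip(points, labels) if l == other)
            / sum(1 for l in labels if l == other)
            for other in set(labels) if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


points = [0.0, 1.0, 10.0, 11.0]
good = mean_silhouette(points, [0, 0, 1, 1])  # neighbours grouped together
bad = mean_silhouette(points, [0, 1, 0, 1])   # neighbours split apart

print(round(good, 4), round(bad, 4))
```

A labelling that groups nearby points scores close to 1, while one that splits them scores below 0, which is the behaviour the silhouette plot relies on when comparing values of `k`.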

|

github_jupyter

|

%matplotlib inline

import matplotlib

import pandas

import numpy as np

import matplotlib.pyplot as plt

from sklearn.cluster import KMeans

from scipy.spatial.distance import cdist, pdist

cluster_data = pandas.read_csv('cluster_data.csv')

sub_cluster_data = cluster_data.drop(cluster_data.columns[[0]], axis=1)

#sub_cluster_data

pandas.tools.plotting.scatter_matrix(sub_cluster_data, alpha=0.2, figsize=(12,12), diagonal='kde')

## K-means clustering

# Use Silhouette Scoring to identify the ideal number of clusters

from sklearn.metrics import silhouette_score

s = []

N_clusters = range(2,15)

for n_clusters in N_clusters:

kmeans = KMeans(n_clusters=n_clusters)

kmeans.fit(sub_cluster_data.as_matrix())

# Calculate S. score for current number of clusters

s.append(silhouette_score(sub_cluster_data.as_matrix(), kmeans.labels_, metric='euclidean'))

# Plot the results

kIdx = 5

plt.plot(N_clusters,s)

plt.plot(N_clusters[kIdx],s[kIdx], marker='o', markersize=12,

markeredgewidth=2, markeredgecolor='r', markerfacecolor='None')

plt.ylabel("Mean Silhouette Coeff.")

plt.xlabel("k")

plt.title("Mean Silhouette Coefficient vs k clusters")

plt.grid()

# Confirm the choice of number of clusters using the Elbow method

# Taken from:

## http://datascience.stackexchange.com/questions/6508/k-means-incoherent-behaviour-choosing-k-with-elbow-method-bic-variance-explain

K = range(2,15)

KM = [KMeans(n_clusters=k).fit(sub_cluster_data.as_matrix()) for k in K]

centroids = [k.cluster_centers_ for k in KM]

D_k = [cdist(sub_cluster_data, cent, 'euclidean') for cent in centroids]

cIdx = [np.argmin(D,axis=1) for D in D_k]

dist = [np.min(D,axis=1) for D in D_k]

avgWithinSS = [sum(d)/sub_cluster_data.shape[0] for d in dist]

# Total with-in sum of square

wcss = [sum(d**2) for d in dist]

tss = sum(pdist(sub_cluster_data)**2)/sub_cluster_data.shape[0]

bss = tss-wcss

kIdx = 6

# elbow curve

fig = plt.figure()

ax = fig.add_subplot(111)

ax.plot(K, avgWithinSS, 'b*-')

ax.plot(K[kIdx], avgWithinSS[kIdx], marker='o', markersize=12,

markeredgewidth=2, markeredgecolor='r', markerfacecolor='None')

plt.grid(True)

plt.xlabel('Number of clusters')

plt.ylabel('Average within-cluster sum of squares')

plt.title('Elbow for KMeans clustering')

fig = plt.figure()

ax = fig.add_subplot(111)

ax.plot(K, bss/tss*100, 'b*-')

ax.plot(K[kIdx], bss[kIdx]/tss*100, marker='o', markersize=12,

markeredgewidth=2, markeredgecolor='r', markerfacecolor='None')

plt.grid(True)

plt.xlabel('Number of clusters')

plt.ylabel('Percentage of variance explained')

plt.title('Elbow for KMeans clustering')

# Re-do K-means clustering using optimum values

np.random.seed(5)

optimum_k = 8

kmeans = KMeans(n_clusters=optimum_k)

kmeans.fit(sub_cluster_data.as_matrix())

labels = kmeans.labels_

centroids = kmeans.cluster_centers_

# Use PCA to identify the key dimensionality in the data

from sklearn.decomposition import PCA

pca = PCA(n_components=5)

pca.fit(sub_cluster_data)

pca.explained_variance_ratio_

# The first two principal components explain ~91% of the variation, so could simplify to 2-d

# Transform the raw data and the identified centroids on to a 2-d plane

np.random.seed(5)

pca = PCA(n_components=2)

pca.fit(sub_cluster_data)

points_pc = pca.transform(sub_cluster_data)

centroids_pc = pca.transform(centroids)

# Plot

fig = plt.figure(figsize=(12,12))

ax = fig.add_subplot(111)

ax.scatter(points_pc[:,0],points_pc[:,1], c=labels, alpha=0.75)

ax.scatter(centroids_pc[:,0],centroids_pc[:,1],marker="+",s=200,linewidths=3,c="k")

for label, x, y in zip(range(optimum_k), centroids_pc[:,0],centroids_pc[:,1]):

    plt.annotate(

        label,

        xy = (x, y), xytext = (-20, 20),

        textcoords = 'offset points', ha = 'right', va = 'bottom',

        bbox = dict(boxstyle = 'round,pad=0.5', fc = 'yellow', alpha = 0.75),

        arrowprops = dict(arrowstyle = '->', connectionstyle = 'arc3,rad=0'))

plt.grid(True)

plt.xlabel('PC 1')

plt.ylabel('PC 2')

plt.title('Clusters transformed on to principal components')

plt.show()

np.set_printoptions(precision=3)

print(centroids)

grouped = sub_cluster_data.groupby(labels)

grouped.size()

# Plot

i=0

fig = plt.figure(figsize=(12,12))

for group in range(optimum_k):

    for dim in list(sub_cluster_data.columns.values):

        i+=1

        ax = fig.add_subplot(optimum_k,5,i)

        ax.hist(grouped.get_group(group)[dim].values - grouped.get_group(group)[dim].mean(), 15)

        ax.set_ylim([0,20])

        plt.grid(True)

        plt.title(str(group) + dim, {'verticalalignment':'top'})

plt.show()

grouped.std()

new_points = list()

labels2 = list()

for group in range(optimum_k):

    new_points.append(np.random.multivariate_normal(centroids[group,:],

                                                    grouped.std().values[group,:] * np.eye(5),

                                                    100))

    labels2.append(group * np.ones(100))

new_points = pandas.DataFrame(np.vstack(new_points), columns=['a','b','c','d','e'])

labels2 = np.vstack(labels2)

group=1

centroids[group,:]

grouped.std().values[group,:] * np.eye(5)

np.random.multivariate_normal(centroids[group,:],

grouped.std().values[group,:] * np.eye(5),

100)

# scatter_matrix lives in pandas.plotting (the old pandas.tools.plotting path was removed)
pandas.plotting.scatter_matrix(new_points, alpha=0.2, figsize=(12,12), diagonal='kde')

# Re-do K-means clustering using optimum values

np.random.seed(5)

optimum_k = 8

kmeans2 = KMeans(n_clusters=optimum_k)

kmeans2.fit(new_points)

labels2 = kmeans2.labels_

centroids2 = kmeans2.cluster_centers_

# Transform the raw data and the identified centroids on to a 2-d plane

np.random.seed(5)

pca2 = PCA(n_components=2)

pca2.fit(new_points)

# Use pca2, which was fitted on new_points just above
points_pc2 = pca2.transform(new_points)

centroids_pc2 = pca2.transform(centroids2)

# Plot

fig = plt.figure(figsize=(12,12))

ax = fig.add_subplot(111)

ax.scatter(points_pc2[:,0],points_pc2[:,1], c=labels2, alpha=0.75)

ax.scatter(centroids_pc2[:,0],centroids_pc2[:,1],marker="+",s=200,linewidths=3,c="k")

for label, x, y in zip(range(optimum_k), centroids_pc2[:,0],centroids_pc2[:,1]):

    plt.annotate(

        label,

        xy = (x, y), xytext = (-20, 20),

        textcoords = 'offset points', ha = 'right', va = 'bottom',

        bbox = dict(boxstyle = 'round,pad=0.5', fc = 'yellow', alpha = 0.5),

        arrowprops = dict(arrowstyle = '->', connectionstyle = 'arc3,rad=0'))

plt.grid(True)

plt.xlabel('PC 1')

plt.ylabel('PC 2')

plt.title('Clusters transformed on to principal components')

plt.show()

| 0.814717 | 0.650134 |

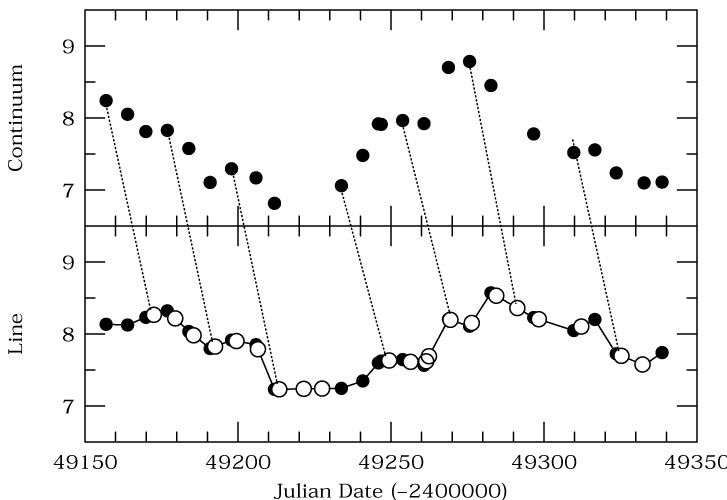

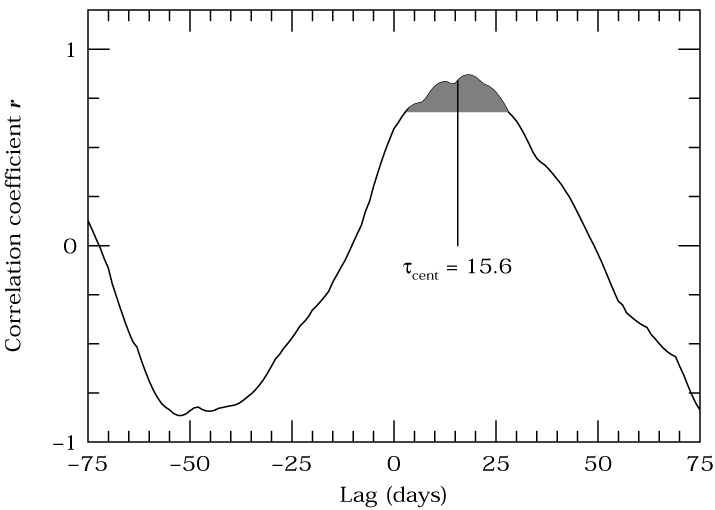

## Analysis of Stochastic Processes ($\S$ 10.5)

If a system is always variable, but the variability is not (infinitely) predictable, then we have a [**stochastic**](https://en.wikipedia.org/wiki/Stochastic) process. Contrary to what you might expect, such processes can still be characterized.

Last time we saw that, even with so little data that we couldn't see the periodicity, it was still possible to apply machine learning to find the period.

In the same way, we can find "structure" in otherwise random data. For example, the following light curves taken from [Moreno et al. (2019)](https://iopscience.iop.org/article/10.1088/1538-3873/ab1597) may all be random, but I think we can agree that they are random in very different ways.

Depending on what one is trying to do, we can use a number of different tools to characterize the data, including Auto-Covariance Functions (ACVF), Auto-Correlation Functions (ACF), Power Spectral Densities (PSD), and Structure Functions (SF). In fact, these are all slightly different ways to describe the same information.
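To make the "same information" point concrete, here is a minimal sketch that estimates the ACVF, the ACF, and a squared structure function for an evenly sampled series. The function names (`acvf`, `acf`, `sf_squared`) are hypothetical helpers, not from the book or any library, and the structure function is taken here to be the mean squared difference at a given lag, which is only one of several conventions in use. Under the assumption of a stationary process, $SF^2(k) \approx 2\,[ACVF(0) - ACVF(k)]$.

```python
import numpy as np

def acvf(y, max_lag):
    """Biased sample auto-covariance at lags 0..max_lag (evenly sampled data assumed)."""
    y = np.asarray(y, dtype=float)
    d = y - y.mean()
    n = len(d)
    return np.array([np.dot(d[:n - k], d[k:]) / n for k in range(max_lag + 1)])

def acf(y, max_lag):
    """Auto-correlation: the ACVF normalized by its zero-lag value (the variance)."""
    c = acvf(y, max_lag)
    return c / c[0]

def sf_squared(y, max_lag):
    """Mean squared difference between points separated by each lag 0..max_lag."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    return np.array([np.mean((y[k:] - y[:n - k]) ** 2) for k in range(max_lag + 1)])

# Illustration on white noise (an assumed test signal, not data from the lecture)
rng = np.random.default_rng(0)
noise = rng.normal(size=20000)

c = acvf(noise, 5)
sf2 = sf_squared(noise, 5)

print("ACF:            ", acf(noise, 5))
print("SF^2:           ", sf2)
print("2*(C(0) - C(k)):", 2 * (c[0] - c))
```

For white noise the ACF should be close to zero at every non-zero lag, and the last two printed rows should roughly agree, which is the sense in which these statistics carry the same information.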

But let's start by talking about auto-regressive (AR) and moving-average (MA) processes as building blocks of stochastic data.

## Autoregressive Models

For processes like these that are not periodic, but that nevertheless "retain memory" of previous states, we can use [autoregressive models](https://en.wikipedia.org/wiki/Autoregressive_model).

For linear regression, we are predicting the dependent variable from the independent variable: $$y = mx+b.$$ For auto-regression, the dependent and independent variables are the same, and we are predicting a future value of $y$ based on one or more past values of $y$: $$y_i = \phi_i y_{i-1} + \ldots,$$

where $\phi_i$ is the "lag coefficient".

A random walk is an example of such a process; every new value is given by the preceding value plus some noise:

$$y_i = y_{i-1} + \epsilon_i.$$

That is, $\phi_i=1$. If $\phi_i>1$ then it is known as a geometric random walk, which is typical of the stock market (a largely random process that nevertheless increases with time [on long time scales]). (So, when you interview for a quant position on Wall Street, you tell them that you are an expert in using autoregressive geometric random walks to model stochastic processes.)
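As a quick illustration of the random walk $y_i = y_{i-1} + \epsilon_i$, the sketch below builds one by accumulating Gaussian noise. The helper name `simulate_random_walk`, the Gaussian noise model, and the choice to start the walk at the first noise draw are all assumptions made for this example.

```python
import numpy as np

def simulate_random_walk(n_steps, sigma=1.0, seed=0):
    """Return y with y_0 = eps_0 and y_i = y_{i-1} + eps_i, eps_i ~ N(0, sigma^2)."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=n_steps)
    # A cumulative sum is exactly the recursion y_i = y_{i-1} + eps_i
    return np.cumsum(eps)

walk = simulate_random_walk(1000)
increments = np.diff(walk)  # recovers eps_1, eps_2, ...

print("first values of the walk:", walk[:5])
print("std of the increments:   ", increments.std())
```

The walk itself wanders arbitrarily far from its starting point, while its increments are just the input noise, so their standard deviation should be close to `sigma`.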

In the random walk case above, each new value depends only on the immediately preceding value. But we can generalize this to include $p$ values:

$$y_i = \sum_{j=1}^pa_jy_{i-j} + \epsilon_i$$

We refer to this as an [**autoregressive (AR)**](https://en.wikipedia.org/wiki/Autoregressive_model) process of order $p$: $AR(p)$. For a random walk, we have $p=1$, and $a_1=1$ (where now I'm using $a$ instead of $\phi$ to match the notation of the book).
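The $AR(p)$ recursion can be sketched directly in NumPy. This is a minimal illustration rather than a reference implementation: the function name `simulate_ar`, the zero initial conditions, and the Gaussian noise are assumptions for the example.

```python
import numpy as np

def simulate_ar(coeffs, n_steps, sigma=1.0, seed=0):
    """Simulate y_i = sum_{j=1}^p a_j * y_{i-j} + eps_i with zero initial conditions."""
    rng = np.random.default_rng(seed)
    a = np.asarray(coeffs, dtype=float)
    p = len(a)
    eps = rng.normal(0.0, sigma, size=n_steps)
    y = np.zeros(n_steps + p)  # the first p entries are the (zero) starting values
    for i in range(p, n_steps + p):
        # y[i - p:i][::-1] is (y_{i-1}, ..., y_{i-p}), matching (a_1, ..., a_p)
        y[i] = a @ y[i - p:i][::-1] + eps[i - p]
    return y[p:]

ar1_walk = simulate_ar([1.0], 500)        # AR(1) with a_1 = 1: a random walk
ar1_noise = simulate_ar([0.0], 500)       # AR(1) with a_1 = 0: pure noise
ar2 = simulate_ar([0.5, -0.3], 500)       # an AR(2) example with assumed coefficients

print(ar1_walk[:3], ar1_noise[:3], ar2[:3])
```

Setting `coeffs=[1.0]` reproduces the random walk, and `coeffs=[0.0]` returns the noise itself, which are the two limiting cases of $AR(1)$.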

If the data are drawn from a "stationary" process (one where it doesn't matter what region of the light curve you sample, so long as it is representative; the stock market is *not* an example of a stationary process), the $a_j$ satisfy certain conditions. For $AR(1)$, for instance, stationarity requires $|a_1| < 1$.

One thing that we might do then is ask whether a system is more consistent with $a_1=0$ or $a_1=1$ (noise vs. a random walk).
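One simple way to ask that question is to estimate $a_1$ by least squares, regressing each value on its predecessor. The sketch below assumes evenly sampled, zero-mean data and an $AR(1)$ model with no intercept; `estimate_a1` is a hypothetical helper, and a real analysis would also need an uncertainty on the estimate.

```python
import numpy as np

def estimate_a1(y):
    """Least-squares estimate of a_1 in y_i = a_1 * y_{i-1} + eps_i (no intercept)."""
    y = np.asarray(y, dtype=float)
    prev, curr = y[:-1], y[1:]
    # Minimizing sum (curr - a_1 * prev)^2 over a_1 gives this closed form
    return np.dot(prev, curr) / np.dot(prev, prev)

# Two assumed test signals built from the same noise draws
rng = np.random.default_rng(42)
eps = rng.normal(size=5000)
white_noise = eps              # a_1 = 0
random_walk = np.cumsum(eps)   # a_1 = 1

print("white noise a_1 estimate:", estimate_a1(white_noise))
print("random walk a_1 estimate:", estimate_a1(random_walk))
```

The first estimate should come out near 0 and the second near 1, so the fitted lag coefficient separates the two cases.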