prompt

stringlengths 501

4.98M

| target

stringclasses 1

value | chunk_prompt

bool 1

class | kind

stringclasses 2

values | prob

float64 0.2

0.97

⌀ | path

stringlengths 10

394

⌀ | quality_prob

float64 0.4

0.99

⌀ | learning_prob

float64 0.15

1

⌀ | filename

stringlengths 4

221

⌀ |

|---|---|---|---|---|---|---|---|---|

```

# Putting the initialisation at the top now!

import veneer

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

v = veneer.Veneer(port=9876)

```

# Session 6 - Model Setup and Reconfiguration

This session covers functionality in Veneer and veneer-py for making larger changes to model setup, including structural changes.

Using this functionality, it is possible to:

* Create (and remove) nodes and links

* Change model algorithms, such as changing links from Straight Through Routing to Storage Routing

* Assign input time series to model variables

* Query and modify parameters across similar nodes/links/catchments/functional-units

## Overview

- (This is a Big topic)

- Strengths and limitations of configuring from outside

- +ve repeatability

- +ve clarity around common elements - e.g. do one thing everywhere, parameterised by spatial data

- -ve feedback - need to query the system to find out what you need to do vs a GUI that displays it

- Obvious and compelling use cases

- Catchments: Applying a constituent model everywhere and assigning parameters using spatial data

- Catchments: Climate data

- How it works:

- The Python <-> IronPython bridge

- What’s happening under the hood

- Layers of helper functions

- How to discover parameters

- Harder examples (not fully worked)

- Creating and configuring a storage from scratch

- Extending the system

## Which Model?

**Note:** This session uses `ExampleProject/RiverModel2.rsproj`. You are welcome to work with your own model instead, however you will need to change the notebook text at certain points to reflect the names of nodes, links and functions in your model file.

## Warning: Big Topic

This is a big topic and the material in this session will only touch on some of the possibilities.

Furthermore, its an evolving area - so while there is general purpose functionality that is quite stable, making the functionality easy to use for particular tasks is a case by case basis that has been tackled on an as-needed basis. **There are lots of gaps!**

## Motivations, Strengths and Limitations of Scripting configuration

There are various motivations for the type of automation of Source model setup described here. Some of these motivations are more practical to achieve than others!

### Automatically build a model from scratch, using an executable 'recipe'

Could you build a complete Source model from scratch using a script?

In theory, yes you could. However it is not practical at this point in time using Veneer. (Though the idea of building a catchments-style model is more foreseeable than building a complex river model).

For some people, building a model from script would be desirable as it would have some similarities to configuring models in text files as was done with the previous generation of river models. A script would be more powerful though, because it has the ability to bring in adhoc data sources (GIS layers, CSV files, etc) to define the model structure. The scripting approach presented here wouldn't be the most convenient way to describe a model node-by-node, link-by-link - it would be quite cumbersome. However it would be possible to build a domain-specific language for describing models that makes use of the Python scripting.

### Automate bulk changes to a model

Most of the practical examples to date have involved applying some change across a model (whether that model is a catchments-style geographic model or a schematic style network). Examples include:

* **Apply a new constituent generation model:** A new generation model was being tested and needed to be applied to every catchment in the model. Some of the parameters would subsequently be calibrated (using PEST), but others needed to be derived from spatial data.

* **Add and configure nodes for point source inputs:** A series of point sources needed to be represented in the models. This involved adding inflow nodes for each point source, connecting those inflows to the most appropriate (and available) downstream node and computing and configuring time series inputs for the inflows.

* **Bulk rename nodes and links based on a CSV file:** A complex model needed a large number of nodes and links renamed to introduce naming conventions that would allow automatic post-processing and visualisation. A CSV was created with old node/link names (extracted from Source using veneer-py). A second column in the CSV was then populated (by hand) with new node/link names. This CSV file was read into Python and used to apply new names to affected nodes/links.

### Change multiple models in a consistent way

* **Testing a plugin in multiple catchments:** A new plugin model was being tested across multiple catchments models, including calibration. A notebook was written to apply the plugin to a running Source model, parameterise the plugin and configure PEST. This notebook was then applied to each distinct Source model in turn.

### Change a model without making the changes permanent

There are several reasons for making changes to the Source model without wanting the changes to be permanently saved in the model.

1. Testing an alternative setup, such as a different set of routing parameters. Automating the application of new parameters means you can test, and then re-test at a later date, without needing manual rework.

2. Maintaining a single point-of-truth for a core model that needs to support different purposes and users.

3. Persistence not available. In the earlier examples of testing new plugin models, the automated application of model setup allowed sophisticated testing, including calibration by PEST, to take place before the plugin was stable enough to be persisted using the Source data management system.

## Example - Switching routing methods and configuring

This example uses the earlier `RiverModel.rsproj` example file although it will work with other models.

Here, we will convert all links to use Storage Routing except for links that lead to a water user.

**Note:** To work through this example (and the others that follow), you will need to ensure the 'Allow Scripts' option is enabled in the Web Server Monitoring window.

### The `v.model` namepsace

Most of our work in this session will involve the `v.model` namespace. This namespace contains functionality that provides query and modification of the model structure. Everything in `v.model` relies on the 'Allow Scripts' option.

As with other parts of veneer-py (and Python packages in general), you can use `<tab>` completion to explore the available functions and the `help()` function (or the `?` suffix) to get help.

### Finding the current routing type

We can use `v.model.link.routing.get_models()` to find the routing models used on each link

```

existing_models = v.model.link.routing.get_models()

existing_models

```

**Note:**

* The `get_models()` functions is available in various places through the `v.model` namespace. For example, `v.model.catchments.runoff.get_models()` queries the rainfall runoff models in subcatchments (actually in functional units). There are other such methods, available in multiple places, including:

* `set_models`

* `get_param_values`

* `set_param_values`

* These functions are all *bulk* functions - that is they operate across *all* matching elements (all nodes, all links, etc).

* Each of these functions accept parameters to restrict the search, such as only including links with certain names. These query parameters differ in different contexts (ie between runoff models and routing models), but they are consistent between the functions in a given context. Confused?

* For example, in link routing you can look for links of certain names and you can do this with the different methods:

```python

v.model.link.routing.get_models(links='Default Link #3')

v.model.link.routing.set_models('RiverSystem.Flow.LaggedFlowRoutingWrapper',links='Default Link #3')

```

Whereas, with runoff models, you can restrict by catchment or by fus:

```python

v.model.catchment.runoff.get_models(fus='Grazing')

v.model.catchment.runoff.set_models('MyFancyRunoffModel',fus='Grazing')

```

* You can find out what query parameters are available by looking at the help, one level up:

```python

help(v.model.link.routing)

```

* The call to `get_models()` return a list of model names. Two observations about this:

1. The model name is the fully qualified class name as used internally in Source. This is a common pattern through the `v.model` namespace - it uses the terminology within Source. There are, however, help functions for finding what you need. For example:

```python

v.model.find_model_type('gr4')

v.model.find_parameters('RiverSystem.Flow.LaggedFlowRoutingWrapper')

```

2. Returning a list doesn't tell you which link has which model - so how are you going to determine which ones should be Storage Routing and which should stay as Straight Through? In general the `get_` functions return lists (although there is a `by_name` option being implemented) and the `set_` functions accept lists (unless you provide a single value in which case it is applied uniformly). It is up to you to interpret the lists returned by `get_*` and to provide `set_*` with a list in the right order. The way to get it right is to separately query for the _names_ of the relevant elements (nodes/links/catchments) and order accordingly. This will be demonstrated!

### Identifying which link should stay as StraightThroughRouting

We can ask for the names of each link in order to establish which ones should be Storage Routing and which should stay as Straight Through

```

link_names_order = v.model.link.routing.names()

link_names_order

```

OK - that gives us the names - but it doesn't help directly. We could look at the model in Source to

work out which one is connected to the Water User - but that's cheating!

More generally, we can ask Veneer for the network and perform a topological query

```

network = v.network()

```

Now that we've got the network, we want all the water users.

Now, the information we've been returned regarding the network is in GeoJSON format and is intended for use in visualisation. It doesn't explicitly say 'this is a water user' at any point, but it does tell us indirectly tell us this by telling us about the icon in use:

```

network['features']._unique_values('icon')

```

So, we can find all the water users in the network, by finding all the network features with `'/resources/WaterUserNodeModel'` as their icon!

```

water_users = network['features'].find_by_icon('/resources/WaterUserNodeModel')

water_users

```

Now, we can query the network for links upstream of each water user.

We'll loop over the `water_users` list (just one in the sample model)

```

links_upstream_of_water_users=[]

for water_user in water_users:

links_upstream_of_water_users += network.upstream_links(water_user)

links_upstream_of_water_users

```

Just one link (to be expected) in the sample model. Its the name we care about though:

```

names_of_water_user_links = [link['properties']['name'] for link in links_upstream_of_water_users]

names_of_water_user_links

```

To recap, we now have:

* `existing_models` - A list of routing models used on links

* `link_names_order` - The name of each link, in the same order as for `existing_models`

* `names_of_water_user_links` - The names of links immediately upstream of water users. These links need to stay as Straight Through Routing

We're ultimately going to call

```python

v.model.link.routing.set_models(new_models,fromList=True)

```

so we need to construct `new_models`, which will be a list of model names to assign to links, with the right mix and order of storage routing and straight through. We'll want `new_models` to be the same length as `existing_models` so there is one entry per link. (There are cases where you my use `set_models` or `set_param_values` with shorter lists. You'll get R-style 'recycling' of values, but its more useful in catchments where you're iterating over catchments AND functional units)

The entries in `new_models` need to be strings - those long, fully qualified class names from the Source world. We can find them using `v.model.find_model_type`

```

v.model.find_model_type('StorageRo')

v.model.find_model_type('StraightThrough')

```

We can construct our list using a list comprehension, this time with a bit of extra conditional logic thrown in

```

new_models = ['RiverSystem.Flow.StraightThroughRouting' if link_name in names_of_water_user_links

else 'RiverSystem.Flow.StorageRouting'

for link_name in link_names_order]

new_models

```

This is a more complex list comprehension than we've used before. It goes like this, reading from the end:

* Iterate over all the link names. This will be the right number of elements - and it tells us which link we're dealling with

```python

for link_name in link_names_order]

```

* If the current `link_name` is present in the list of links upstream of water users, use straight through routing

```python

['RiverSystem.Flow.StraightThroughRouting' if link_name in names_of_water_user_links

```

* Otherwise use storage routing

```python

else 'RiverSystem.Flow.StorageRouting'

```

All that's left is to apply this to the model

```

v.model.link.routing.set_models(new_models,fromList=True)

```

**Notes:**

* The Source Applications draws links with different line styles based on their routing types - but it might not redraw until you prompt it - eg by resizing the window

* The `fromList` parameter tells the `set_models` function that you want the list to be applied one element at a time.

Now that you have Storage Routing used in most links, you can start to parameterise the links from the script.

To do so, you could use an input set, as per the previous session. To change parameters via input sets, you would first need to know the wording to use in the input set commands - and at this stage you need to find that wording in the Source user interface.

Alternatively, you can set the parameters directly using `v.model.link.routing.set_param_values`, which expects the variable name as used internally by Source. You can query for the parameter names for a particular model, using `v.model.find_parameters(model_type)` and, if that doesn't work `v.model.find_properties(model_type)`.

We'll start by using `find_parameters`:

```

v.model.find_parameters('RiverSystem.Flow.StorageRouting')

```

The function `v.model.find_parameters`, accepts a model type (actually, you can give it a list of model types) and it returns a list of parameters.

This list is determined by the internal code of Source - a parameter will only be returned if it has a `[Parameter]` tag in the C\# code.

From the list above, we see some parameters that we expect to see, but not all of the parameters for a Storage Routing reach. For example, the list of parameters doesn't seem to say how we'd switch from Generic to Piecewise routing mode. This is because the model property in question (`IsGeneric`) doesn't have a `[Property]` attribute.

We can find a list of all fields and properties of the model using `v.model.find_properties`. It's a lot more information, but it can be helpful:

```

v.model.find_properties('RiverSystem.Flow.StorageRouting')

```

Lets apply an initial parameter set to every Storage Routing link by setting:

* `RoutingConstant` to 86400, and

* `RoutingPower` to 1

We will call `set_param_values`

```

help(v.model.link.routing.set_param_values)

v.model.link.routing.set_param_values('RoutingConstant',86400.0)

v.model.link.routing.set_param_values('RoutingPower',1.0)

```

You can check in the Source user interface to see that the parameters have been applied

### Setting parameters as a function of other values

Often, you will want to calculate model parameters based on some other information, either within the model or from some external data source.

The `set_param_values` can accept a list of values, where each item in the list is applied, in turn, to the corresponding models - in much the same way that we used the known link order to set the routing type.

The list of values can be computed in your Python script based on any available information. A common use case is to compute catchment or functional unit parameters based on spatial data.

We will demonstrate the list functionality here with a contrived example!

We will set a different value of `RoutingPower` for each link. We will compute a different value of `RoutingPower` from 1.0 down to >0, based on the number of storage routing links

```

number_of_links = len(new_models) - len(names_of_water_user_links)

power_vals = np.arange(1.0,0.0,-1.0/number_of_links)

power_vals

v.model.link.routing.set_param_values('RoutingPower',power_vals,fromList=True)

```

If you open the Feature Table for storage routing, you'll now see these values propagated.

The `fromList` option has another characteristic that can be useful - particularly for catchments models with multiple functional units: value recycling.

If you provide a list with few values than are required, the system will start again from the start of the list.

So, for example, the following code will assign the three values: `[0.5,0.75,1.0]`

```

v.model.link.routing.set_param_values('RoutingPower',[0.5,0.75,1.0],fromList=True)

```

Check the Feature Table to see the effect.

**Note:** You can run these scripts with the Feature Table open and the model will be updated - but the feature table won't reflect the new values until you Cancel the feature table and reopen it.

## How it Works

As mentioned, everything under `v.model` works by sending an IronPython script to Source to be run within the Source software itself.

IronPython is a native, .NET, version of Python and hence can access all the classes and objects that make up Source.

When you call a function witnin `v.model`, veneer-py is *generating* an IronPython script for Source.

To this point, we haven't seen what these IronPython scripts look like - they are hidden from view. We can see the scripts that get sent to Source by setting the option `veneer.general.PRINT_SCRIPTS=True`

```

veneer.general.PRINT_SCRIPTS=True

v.model.link.routing.get_models(links=['Default Link #3','Default Link #4'])

veneer.general.PRINT_SCRIPTS=False

```

*Writing these IronPython scripts from scratch requires an understanding of the internal data structures of Source. The functions under `v.model` are designed to shield you from these details.*

That said, if you have an idea of the data structures, you may wish to try writing IronPython scripts, OR, try working with some of the lower-level functionality offered in `v.model`.

Most of the `v.model` functions that we've used, are ultimately based upon two, low level thems:

* `v.model.get` and

* `v.model.set`

Both `get` and `set` expect a query to perform on a Source scenario object. Structuring this query is where an understanding of Source data structures comes in.

For example, the following query will return the number of nodes in the network. (We'll use the PRINT_SCRIPTS option to show how the query translates to a script):

```

veneer.general.PRINT_SCRIPTS=True

num_nodes = v.model.get('scenario.Network.Nodes.Count()')

num_nodes

```

The follow example returns the names of each node in the network. The `.*` notation tells veneer-py to generate a loop over every element in a collection

```

node_names = v.model.get('scenario.Network.Nodes.*Name')

node_names

```

You can see from the script output that veneer-py has generated a Python for loop to iterate over the nodes:

```python

for i_0 in scenario.Network.Nodes:

```

There are other characteristics in there, such as ignoring exceptions - this is a common default used in `v.model` to silently skip nodes/links/catchments/etc that don't have a particular property.

The same query approach can work for `set`, which can set a particular property (on one or more objects) to a particular value (which can be the same value everywhere, or drawn from a list)

```

# Generate a new name for each node (based on num_nodes)

names = ['New Name %d'%i for i in range(num_nodes)]

names

v.model.set('scenario.Network.Nodes.*Name',names,fromList=True,literal=True)

```

If you look at the Source model now (you may need to trigger a redraw by resizing the window), all the nodes have been renamed.

(Lets reset the names - note how we saved `node_names` earlier on!)

```

v.model.set('scenario.Network.Nodes.*Name',node_names,fromList=True,literal=True)

```

**Note:** The `literal=True` option is currently necessary setting text properties using `v.model.set`. This tells the IronPython generator to wrap the strings in quotes in the final script. Otherwise, IronPython would be looking for symbols (eg classes) with the same names

The examples of `v.model.get` and `v.model.set` illustrate some of the low level functionality for manipulating the source model.

The earlier, high-level, functions (eg `v.model.link.routing.set_param_values`) take care of computing the query string for you, including context dependent code such as searching for links of a particular name, or nodes of a particular type. They then call the lower level functions, which takes care of generating the actual IronPython script.

The `v.model` namespace is gradually expanding with new capabilities and functions - but at their essence, most new functions provide a high level wrapper, around `v.model.get` and `v.model.set` for some new area of the Source data structures. So, for example, you could envisage a `v.model.resource_assessment` which provides high level wrappers around resource assessment functionality.

### Exploring the system

Writing the high level wrappers (as with writing the query strings for `v.model.get/set`) requires an understanding of the internal data structures of Source. You can get this from the C# code for Source, or, to a degree, from a help function `v.model.sourceHelp`.

Lets say you want to discover how to change the description of the scenario (say, to automatically add a note about the changes made by your script)

Start, by asking for help on `'scenario'` and explore from there

```

veneer.general.PRINT_SCRIPTS=False

v.model.sourceHelp('scenario')

```

This tells you everything that is available on a Source scenario. It's a lot, but `Description` looks promising:

```

existing_description = v.model.get('scenario.Description')

existing_description

```

OK. It looks like there is no description in the existing scenario. Lets set one

```

v.model.set('scenario.Description','Model modified by script',literal=True)

```

## Harder examples

Lets look at a simple model building example.

We will test out different routing parameters, by setting up a scenario with several parallel networks. Each network will consist of an Inflow Node and a Gauge Node, joined by a Storage Routing link.

The inflows will all use the same time series of flows, so the only difference will be the routing parameters.

To proceed,

1. Start a new copy of Source (in the following code, I've assumed that you're leaving the existing copy open)

2. Create a new schematic model - but don't add any nodes or links

3. Open Tools|Web Server Monitoring

4. Once Veneer has started, make a note of what port number it is using - it will probably be 9877 if you've left the other copy of Source open.

5. Make sure you tick the 'Allow Scripts' option

Now, create a new veneer client (creatively called `v2` here)

```

v2 = veneer.Veneer(port=9877)

```

And check that the network has nothing in it at the moment

```

v2.network()

```

We can create nodes with `v.model.node.create`

```

help(v2.model.node.create)

```

There are also functions to create different node types:

```

help(v2.model.node.new_gauge)

```

First, we'll do a bit of a test run. Ultimately, we'll want to create a number of such networks - and the nodes will definitely need unique names then

```

loc = [10,10]

v2.model.node.new_inflow('The Inflow',schematic_location=loc,location=loc)

loc = [20,10]

v2.model.node.new_gauge('The Gauge',schematic_location=loc,location=loc)

```

**Note:** At this stage (and after some frustration) we can't set the location of the node on the schematic. We can set the 'geographic' location - which doesn't have to be true geographic coordinates, so that's what we'll do here.

Creating a link can be done with `v2.model.link.create`

```

help(v2.model.link.create)

v2.model.link.create('The Inflow','The Gauge','The Link')

```

Now, lets look at the information from `v2.network()` to see that it's all there. (We should also see the model in the geographic view)

```

v2.network().as_dataframe()

```

Now, after all that, we'll delete everything we've created and then recreate it all in a loop to give us parallel networks

```

v2.model.node.remove('The Inflow')

v2.model.node.remove('The Gauge')

```

So, now we can create (and delete) nodes and links, lets create multiple parallel networks, to test out our flow routing parameters. We'll create 20, because we can!

```

num_networks=20

for i in range(1,num_networks+1): # Loop from 1 to 20

veneer.log('Creating network %d'%i)

x = i

loc_inflow = [i,10]

loc_gauge = [i,0]

name_inflow = 'Inflow %d'%i

name_gauge = 'Gauge %d'%i

v2.model.node.new_inflow(name_inflow,location=loc_inflow,schematic_location=loc_inflow)

v2.model.node.new_gauge(name_gauge,location=loc_gauge,schematic_location=loc_gauge)

# Create the link

name_link = 'Link %d'%i

v2.model.link.create(name_inflow,name_gauge,name_link)

# Set the routing type to storage routing (we *could* do this at the end, outside the loop)

v2.model.link.routing.set_models('RiverSystem.Flow.StorageRouting',links=name_link)

```

We'll use one of the flow files from the earlier model to drive each of our inflow nodes. We need to know where that data is. Here, I'm assuming its in the `ExampleProject` directory within the same directory as this notebook. We'll need the absolute path for Source, and the Python `os` package helps with this type of filesystem operation

```

import os

os.path.exists('ExampleProject/Fish_G_flow.csv')

absolute_path = os.path.abspath('ExampleProject/Fish_G_flow.csv')

absolute_path

```

We can use `v.model.node.assign_time_series` to attach a time series of inflows to the inflow node. We could have done this in the for loop, one node at a time, but, like `set_param_values` we can assign time series to multiple nodes at once.

One thing that we do need to know is the parameter that we're assigning the time series to (because, after all, this could be any type of node - veneer-py doesn't know at this stage). We can find the model type, then check `v.model.find_parameters` and, if that doesn't work, `v.model.find_inputs`:

```

v2.model.node.get_models(nodes='Inflow 1')

v2.model.find_parameters('RiverSystem.Nodes.Inflow.InjectedFlow')

v2.model.find_inputs('RiverSystem.Nodes.Inflow.InjectedFlow')

```

So `'Flow'` it is!

```

v2.model.node.assign_time_series('Flow',absolute_path,'Inflows')

```

Almost there.

Now, lets set a range of storage routing parameters (much like we did before)

```

power_vals = np.arange(1.0,0.0,-1.0/num_networks)

power_vals

```

And assign those to the links

```

v2.model.link.routing.set_param_values('RoutingConstant',86400.0)

v2.model.link.routing.set_param_values('RoutingPower',power_vals,fromList=True)

```

Now, configure recording

```

v2.configure_recording(disable=[{}],enable=[{'RecordingVariable':'Downstream Flow Volume'}])

```

And one last thing - work out the time period for the run from the inflow time series

```

inflow_ts = pd.read_csv(absolute_path,index_col=0)

start,end=inflow_ts.index[[0,-1]]

start,end

```

That looks a bit much. Lets run for a year

```

v2.run_model(start='01/01/1999',end='31/12/1999')

```

Now, we can retrieve some results. Because we used a naming convention for all the nodes, its possible to grab relevant results using those conventions

```

upstream = v2.retrieve_multiple_time_series(criteria={'RecordingVariable':'Downstream Flow Volume','NetworkElement':'Inflow.*'})

downstream = v2.retrieve_multiple_time_series(criteria={'RecordingVariable':'Downstream Flow Volume','NetworkElement':'Gauge.*'})

downstream[['Gauge 1:Downstream Flow Volume','Gauge 20:Downstream Flow Volume']].plot(figsize=(10,10))

```

If you'd like to change and rerun this example, the following code block can be used to delete all the existing nodes. (Or, just start a new project in Source)

```

#nodes = v2.network()['features'].find_by_feature_type('node')._all_values('name')

#for n in nodes:

# v2.model.node.remove(n)

```

## Conclusion

This session has looked at structural modifications of Source using Veneer, veneer-py and the use of IronPython scripts that run within Source.

Writing IronPython scripts requires a knowledge of internal Source data structures, but there is a growing collection of helper functions, under the `v.model` namespace to assist.

| true |

code

| 0.696346 | null | null | null | null |

|

# [Hashformers](https://github.com/ruanchaves/hashformers)

Hashformers is a framework for hashtag segmentation with transformers. For more information, please check the [GitHub repository](https://github.com/ruanchaves/hashformers).

# Installation

The steps below will install the hashformers framework on Google Colab.

Make sure you are on GPU mode.

```

!nvidia-smi

```

Here we install `mxnet-cu110`, which is compatible with Google Colab.

If installing in another environment, replace it by the mxnet package compatible with your CUDA version.

```

%%capture

!pip install mxnet-cu110

!pip install hashformers

```

# Segmenting hashtags

Visit the [HuggingFace Model Hub](https://huggingface.co/models) and choose any GPT-2 and a BERT models for the WordSegmenter class.

The GPT-2 model should be informed as `segmenter_model_name_or_path` and the BERT model as `reranker_model_name_or_path`.

Here we choose `distilgpt2` and `distilbert-base-uncased`.

```

%%capture

from hashformers import TransformerWordSegmenter as WordSegmenter

ws = WordSegmenter(

segmenter_model_name_or_path="distilgpt2",

reranker_model_name_or_path="distilbert-base-uncased"

)

```

Now we can simply segment lists of hashtags with the default settings and look at the segmentations.

```

hashtag_list = [

"#myoldphonesucks",

"#latinosinthedeepsouth",

"#weneedanationalpark"

]

segmentations = ws.segment(hashtag_list)

print(*segmentations, sep='\n')

```

Remember that any pair of BERT and GPT-2 models will work. This means you can use **hashformers** to segment hashtags in any language, not just English.

```

%%capture

from hashformers import TransformerWordSegmenter as WordSegmenter

portuguese_ws = WordSegmenter(

segmenter_model_name_or_path="pierreguillou/gpt2-small-portuguese",

reranker_model_name_or_path="neuralmind/bert-base-portuguese-cased"

)

hashtag_list = [

"#benficamemes",

"#mouraria",

"#CristianoRonaldo"

]

segmentations = portuguese_ws.segment(hashtag_list)

print(*segmentations, sep='\n')

```

# Advanced usage

## Speeding up

If you want to investigate the speed-accuracy trade-off, here are a few things that can be done to improve the speed of the segmentations:

* Turn off the reranker model by passing `use_reranker = False` to the `ws.segment` method.

* Adjust the `segmenter_gpu_batch_size` (default: `1` ) and the `reranker_gpu_batch_size` (default: `2000`) parameters in the `WordSegmenter` initialization.

* Decrease the beamsearch parameters `topk` (default: `20`) and `steps` (default: `13`) when calling the `ws.segment` method.

```

%%capture

from hashformers import TransformerWordSegmenter as WordSegmenter

ws = WordSegmenter(

segmenter_model_name_or_path="distilgpt2",

reranker_model_name_or_path="distilbert-base-uncased",

segmenter_gpu_batch_size=1,

reranker_gpu_batch_size=2000

)

%%timeit

hashtag_list = [

"#myoldphonesucks",

"#latinosinthedeepsouth",

"#weneedanationalpark"

]

segmentations = ws.segment(hashtag_list)

%%timeit

hashtag_list = [

"#myoldphonesucks",

"#latinosinthedeepsouth",

"#weneedanationalpark"

]

segmentations = ws.segment(

hashtag_list,

topk=5,

steps=5,

use_reranker=False

)

```

## Getting the ranks

If you pass `return_ranks == True` to the `ws.segment` method, you will receive a dictionary with the ranks generated by the segmenter and the reranker, the dataframe utilized by the ensemble and the final segmentations. A segmentation will rank higher if its score value is **lower** than the other segmentation scores.

Rank outputs are useful if you want to combine the segmenter rank and the reranker rank in ways which are more sophisticated than what is done by the basic ensembler that comes by default with **hashformers**.

For instance, you may want to take two or more ranks ( also called "runs" ), convert them to the trec format and combine them through a rank fusion technique on the [trectools library](https://github.com/joaopalotti/trectools).

```

hashtag_list = [

"#myoldphonesucks",

"#latinosinthedeepsouth",

"#weneedanationalpark"

]

ranks = ws.segment(

hashtag_list,

use_reranker=True,

return_ranks=True

)

# Segmenter rank

ranks.segmenter_rank

# Reranker rank

ranks.reranker_rank

```

## Evaluation

The `evaluate_df` function can evaluate the accuracy, precision and recall of our segmentations. It uses exactly the same evaluation method as previous authors in the field of hashtag segmentation ( Çelebi et al., [BOUN Hashtag Segmentor](https://tabilab.cmpe.boun.edu.tr/projects/hashtag_segmentation/) ).

We have to pass a dataframe with fields for the gold segmentations ( a `gold_field` ) and your candidate segmentations ( a `segmentation_field` ).

The relationship between gold and candidate segmentations does not have to be one-to-one. If we pass more than one candidate segmentation for a single hashtag, `evaluate_df` will measure what is the upper boundary that can be achieved on our ranks ( e.g. Acc@10, Recall@10 ).

### Minimal example

```

# Let's measure the actual performance of the segmenter:

# we will evaluate only the top-1.

import pandas as pd

from hashformers.experiments.evaluation import evaluate_df

gold_segmentations = {

"myoldphonesucks" : "my old phone sucks",

"latinosinthedeepsouth": "latinos in the deep south",

"weneedanationalpark": "we need a national park"

}

gold_df = pd.DataFrame(gold_segmentations.items(),

columns=["characters", "gold"])

segmenter_top_1 = ranks.segmenter_rank.groupby('characters').head(1)

eval_df = pd.merge(gold_df, segmenter_top_1, on="characters")

eval_df

evaluate_df(

eval_df,

gold_field="gold",

segmentation_field="segmentation"

)

```

### Benchmarking

Here we evaluate a `distilgpt2` model on 1000 hashtags.

We collect our hashtags from 10 word segmentation datasets by taking the first 100 hashtags from each dataset.

```

%%capture

!pip install datasets

%%capture

from hashformers.experiments.evaluation import evaluate_df

import pandas as pd

from hashformers import TransformerWordSegmenter

from datasets import load_dataset

user = "ruanchaves"

dataset_names = [

"boun",

"stan_small",

"stan_large",

"dev_stanford",

"test_stanford",

"snap",

"hashset_distant",

"hashset_manual",

"hashset_distant_sampled",

"nru_hse"

]

dataset_names = [ f"{user}/{dataset}" for dataset in dataset_names ]

ws = TransformerWordSegmenter(

segmenter_model_name_or_path="distilgpt2",

reranker_model_name_or_path=None

)

def generate_experiments(datasets, splits, samples=100):

for dataset_name in datasets:

for split in splits:

try:

dataset = load_dataset(dataset_name, split=f"{split}[0:{samples}]")

yield {

"dataset": dataset,

"split": split,

"name": dataset_name

}

except:

continue

benchmark = []

for experiment in generate_experiments(dataset_names, ["train", "validation", "test"], samples=100):

hashtags = experiment['dataset']['hashtag']

annotations = experiment['dataset']['segmentation']

segmentations = ws.segment(hashtags, use_reranker=False, return_ranks=False)

eval_df = [{

"gold": gold,

"hashtags": hashtag,

"segmentation": segmentation

} for gold, hashtag, segmentation in zip(annotations, hashtags, segmentations)]

eval_df = pd.DataFrame(eval_df)

eval_results = evaluate_df(

eval_df,

gold_field="gold",

segmentation_field="segmentation"

)

eval_results.update({

"name": experiment["name"],

"split": experiment["split"]

})

benchmark.append(eval_results)

benchmark_df = pd.DataFrame(benchmark)

benchmark_df["name"] = benchmark_df["name"].apply(lambda x: x[(len(user) + 1):])

benchmark_df = benchmark_df.set_index(["name", "split"])

benchmark_df = benchmark_df.round(3)

benchmark_df

benchmark_df.agg(['mean', 'std']).round(3)

```

| true |

code

| 0.518973 | null | null | null | null |

|

A quick look at GAMA bulge and disk colours in multi-band GALAPAGOS fits versus single-band GALAPAGOS and SIGMA fits.

Pretty plots at the bottom.

```

%matplotlib inline

from matplotlib import pyplot as plt

# better-looking plots

plt.rcParams['font.family'] = 'serif'

plt.rcParams['figure.figsize'] = (10.0*1.3, 8*1.3)

plt.rcParams['font.size'] = 18*1.3

import pandas

#from galapagos_to_pandas import galapagos_to_pandas

## convert the GALAPAGOS data

#galapagos_to_pandas()

## convert the SIGMA data

#galapagos_to_pandas('/home/ppzsb1/projects/gama/qc/raw/StructureCat_SersicExp.fits',

# '/home/ppzsb1/quickdata/StructureCat_SersicExp.h5')

## read in GALAPAGOS data

## no attempt has been made to select only reliable bulges and discs

store = pandas.HDFStore('/home/ppzsb1/quickdata/GAMA_9_all_combined_gama_only_bd6.h5')

data = store['data'].set_index('CATAID')

print len(data)

## read in SIGMA data - this is the raw sersic+exponential catalogue

## no attempt has been made here to select true two-component systems

store = pandas.HDFStore('/home/ppzsb1/quickdata/StructureCat_SersicExp.h5')

sigma = store['data'].set_index('CATAID')

print len(sigma)

## get overlap between the catalogue objects

data = data.join(sigma, how='inner', rsuffix='_SIGMA')

len(data)

## restrict to bright objects

data = data[data['MAG_GALFIT'] < 18.0]

len(data)

## band information

allbands = list('ugrizYJHK')

#band_wl = pandas.Series([3543,4770,6231,7625,9134,10395,12483,16313,22010], index=allbands)

normband = 'K'

bands = list('ugrizYJH')

band_labels = ['${}$'.format(i) for i in bands]

band_wl = pandas.Series([3543,4770,6231,7625,9134,10395,12483,16313], index=bands)

#normband = 'Z'

#bands = list('ugriYJHK')

#band_wl = numpy.array([3543,4770,6231,7625,10395,12483,16313,22010])

## extract magnitudes and use consistent column labels

mags_b = data[['MAG_GALFIT_BAND_B_{}'.format(b.upper()) for b in allbands]]

mags_d = data[['MAG_GALFIT_BAND_D_{}'.format(b.upper()) for b in allbands]]

mags_b_single = data[['SINGLE_MAG_GALFIT_B_{}'.format(b.upper()) for b in allbands]]

mags_d_single = data[['SINGLE_MAG_GALFIT_D_{}'.format(b.upper()) for b in allbands]]

mags_b_sigma = data[['GALMAG_01_{}'.format(b) for b in allbands]]

mags_d_sigma = data[['GALMAG_02_{}'.format(b) for b in allbands]]

mags_b.columns = mags_d.columns = allbands

mags_b_single.columns = mags_d_single.columns = allbands

mags_b_sigma.columns = mags_d_sigma.columns = allbands

## normalise SEDs and select only objects for which all magnitudes are sensible

def get_normsed(mags, bands, normband):

normsed = mags[bands]

normsed = normsed.sub(mags[normband], axis='index')

good = ((normsed > -50) & (normsed < 50)).T.all()

good &= ((mags[normband] > -50) & (mags[normband] < 50))

return normsed, good

## get normalised SEDs

normsed_b, good_b = get_normsed(mags_b, bands, normband)

normsed_b_single, good_b_single = get_normsed(mags_b_single, bands, normband)

normsed_b_sigma, good_b_sigma = get_normsed(mags_b_sigma, bands, normband)

normsed_d, good_d = get_normsed(mags_d, bands, normband)

normsed_d_single, good_d_single = get_normsed(mags_d_single, bands, normband)

normsed_d_sigma, good_d_sigma = get_normsed(mags_d_sigma, bands, normband)

print len(normsed_d)

## restrict sample to set of object that are good in all three catalogues

good_b &= good_b_single & good_b_sigma

good_d &= good_d_single & good_d_sigma

normsed_b_single = normsed_b_single[good_b]

normsed_d_single = normsed_d_single[good_d]

normsed_b_sigma = normsed_b_sigma[good_b]

normsed_d_sigma = normsed_d_sigma[good_d]

normsed_b = normsed_b[good_b]

normsed_d = normsed_d[good_d]

print len(normsed_d)

## overlay all SEDS

def plot_labels(i, label):

if i == 1:

plt.title('bulges')

if i == 2:

plt.title('discs')

if i == 3:

plt.ylabel('mag offset from $K$-band')

if i % 2 == 0:

plt.ylabel(label)

fig = plt.figure(figsize=(12,8))

def plot(d, label):

if not hasattr(plot, "plotnum"):

plot.plotnum = 0

plot.plotnum += 1

ax = plt.subplot(3, 2, plot.plotnum)

d.T.plot(ax=ax, x=band_wl, ylim=(5,-2), legend=False, color='r', alpha=0.2)

ax.xaxis.set_ticks(band_wl)

ax.xaxis.set_ticklabels(bands)

plot_labels(plot.plotnum, label)

plt.axis(ymin=8, ymax=-5)

plot(normsed_b, 'GALA multi')

plot(normsed_d, 'GALA multi')

plot(normsed_b_single, 'GALA single')

plot(normsed_d_single, 'GALA single')

plot(normsed_b_sigma, 'SIGMA')

plot(normsed_d_sigma, 'SIGMA')

plt.subplots_adjust(wspace=0.25, hspace=0.25)

## produce boxplots

fig = plt.figure(figsize=(12,8))

def boxplot(d, label):

if not hasattr(boxplot, "plotnum"):

boxplot.plotnum = 0

boxplot.plotnum += 1

plt.subplot(3, 2, boxplot.plotnum)

d.boxplot(sym='b.')

plot_labels(boxplot.plotnum, label)

plt.axis(ymin=8, ymax=-5)

boxplot(normsed_b, 'GALA multi')

boxplot(normsed_d, 'GALA multi')

boxplot(normsed_b_single, 'GALA single')

boxplot(normsed_d_single, 'GALA single')

boxplot(normsed_b_sigma, 'SIGMA')

boxplot(normsed_d_sigma, 'SIGMA')

plt.subplots_adjust(wspace=0.25, hspace=0.25)

## functions to produce nice asymmetric violin plots

## clip tails of the distributions to produce neater violins

from scipy.stats import scoreatpercentile

def clip(x, p=1):

y = []

for xi in x:

p_lo = scoreatpercentile(xi, p)

p_hi = scoreatpercentile(xi, 100-p)

y.append(xi.clip(p_lo, p_hi))

return y

## fancy legend text, which mimics the appearance of the violin plots

import matplotlib.patheffects as PathEffects

def outlined_text(x, y, text, color='k', rotation=0):

## \u2009 is a hairspace

## DejaVu Serif is specified as the default serif fonts on my system don't have this character

plt.text(x, y, u'\u2009'.join(text), color='white', alpha=0.5,

fontname='DejaVu Serif', rotation=rotation,

path_effects=[PathEffects.withStroke(linewidth=2.5, foreground=color, alpha=1.0)])

## produce asymmetric violin plots

from statsmodels.graphics.boxplots import violinplot

def bdviolinplot(bm, bs, dm, ds, mtext='', stext=''):

wl = np.log10(band_wl)*10

vw = 0.5

vlw = 1.5

p = violinplot(clip(bm.T.values), labels=band_labels, positions=wl,

side='left', show_boxplot=False,

plot_opts={'violin_width':vw, 'violin_fc':'red',

'violin_ec':'darkred', 'violin_lw':vlw})

p = violinplot(clip(bs.T.values), ax=plt.gca(), labels=band_labels,

positions=wl, side='right', show_boxplot=False,

plot_opts={'violin_width':vw, 'violin_fc':'red',

'violin_ec':'darkred', 'violin_lw':vlw})

p = violinplot(clip(dm.T.values), ax=plt.gca(), labels=band_labels,

positions=wl, side='left', show_boxplot=False,

plot_opts={'violin_width':vw, 'violin_fc':'blue',

'violin_ec':'darkblue', 'violin_lw':vlw})

p = violinplot(clip(ds.T.values), ax=plt.gca(), labels=band_labels,

positions=wl, side='right', show_boxplot=False,

plot_opts={'violin_width':vw, 'violin_fc':'blue',

'violin_ec':'darkblue', 'violin_lw':vlw})

## overlay median trends

plt.plot(wl, bm.median(), color='r', lw=2)

plt.plot(wl, bs.median(), color='r', ls='--', lw=2)

plt.plot(wl, dm.median(), color='b', lw=2)

plt.plot(wl, ds.median(), color='b', ls='--', lw=2)

## tidy up

plt.axis(ymin=8, ymax=-5)

plt.ylabel('mag offset from $K$-band')

plt.text(38.5, 7.3, '{} galaxies'.format(len(bm)))

## legend

x, y = (41.0, 6.9)

outlined_text(x, y, 'discs', 'darkblue')

outlined_text(x, y+0.75, 'bulges', 'darkred')

x, y = (x-0.35, 2.2)

outlined_text(x, y, 'multi-band', '0.1', rotation=90)

outlined_text(x+0.4, y, 'single-band', '0.1', rotation=90)

outlined_text(x-0.3, y, mtext, '0.3', rotation=90)

outlined_text(x+0.7, y, stext, '0.3', rotation=90)

bdviolinplot(normsed_b, normsed_b_single, normsed_d, normsed_d_single,

'GALAPAGOS', 'GALAPAGOS')

```

The figure is an asymmetric violin plot, which compares the distribution of disc and bulge SEDs with one another and between multi- and single-band fitting approaches. For the multi-band fits, all the images were fit simultaneously, constrained to the same structural parameters, but with magnitude free to vary. For the single-band fits, each image was fit completely independently. All the fits were performed with GALAPAGOS and GALFITM, which allows a simple, fair comparison. However, as SIGMA contains logic to retry fits which do not meet physical expectations, it is likely to perform somewhat differently. The same sample used is ~400 galaxies with r < 18 mag and 0.025 < redshift < 0.06 for which none of the fits crashed (more sophisticated cleaning could certainly be done). The SEDs are normalised to the K-band magnitude.

The disc data are shown in blue, while the bulge data are shown in red. The shape of each side of a violin represents the distribution of magnitude offset for that band. The left-side of each violin presents the multi-band fit results, while the right-sides present the single-band results. The medians of each distribution are also plotted in their corresponding colour, with solid lines for multi-band and dashed lines for single-band results.

The single-band results do not distinguish very much between the SEDs of bulge and disc components, as can be seen from the coincidence between the dashed lines and the fact that the right-sides of the red and blue violins mostly overlap.

In constrast, the multi-band results show a significant difference in the SEDs of bulges and discs, in terms of both medians, and overall distributions. Note that there is no colour difference between the components in the initial parameters. The colour difference simply arises from the improved decomposition afforded by the multi-band approach.

```

bdviolinplot(normsed_b, normsed_b_sigma, normsed_d, normsed_d_sigma,

'GALAPAGOS', 'SIGMA')

```

The figure is the same as above, but now compares the GALAPAGOS multi-band fit results to single-band fits using SIGMA. The SIGMA results show less scatter the the GALAPAGS single-band fits, but there is still very little differentiation between the SEDs of bulges and discs.

| true |

code

| 0.577734 | null | null | null | null |

|

# Creating your own dataset from Google Images

*by: Francisco Ingham and Jeremy Howard. Inspired by [Adrian Rosebrock](https://www.pyimagesearch.com/2017/12/04/how-to-create-a-deep-learning-dataset-using-google-images/)*

In this tutorial we will see how to easily create an image dataset through Google Images. **Note**: You will have to repeat these steps for any new category you want to Google (e.g once for dogs and once for cats).

```

from fastai.vision.all import *

from nbdev.showdoc import *

```

## Get a list of URLs

### Search and scroll

Go to [Google Images](http://images.google.com) and search for the images you are interested in. The more specific you are in your Google Search, the better the results and the less manual pruning you will have to do.

Scroll down until you've seen all the images you want to download, or until you see a button that says 'Show more results'. All the images you scrolled past are now available to download. To get more, click on the button, and continue scrolling. The maximum number of images Google Images shows is 700.

It is a good idea to put things you want to exclude into the search query, for instance if you are searching for the Eurasian wolf, "canis lupus lupus", it might be a good idea to exclude other variants:

"canis lupus lupus" -dog -arctos -familiaris -baileyi -occidentalis

You can also limit your results to show only photos by clicking on Tools and selecting Photos from the Type dropdown.

### Download into file

Now you must run some Javascript code in your browser which will save the URLs of all the images you want for you dataset.

Press <kbd>Ctrl</kbd><kbd>Shift</kbd><kbd>J</kbd> in Windows/Linux and <kbd>Cmd</kbd><kbd>Opt</kbd><kbd>J</kbd> in Mac, and a small window the javascript 'Console' will appear. That is where you will paste the JavaScript commands.

You will need to get the urls of each of the images. Before running the following commands, you may want to disable ad blocking extensions (uBlock, AdBlockPlus etc.) in Chrome. Otherwise the window.open() command doesn't work. Then you can run the following commands:

```javascript

urls = Array.from(document.querySelectorAll('.rg_di .rg_meta')).map(el=>JSON.parse(el.textContent).ou);

window.open('data:text/csv;charset=utf-8,' + escape(urls.join('\n')));

```

### Create directory and upload urls file into your server

Choose an appropriate name for your labeled images. You can run these steps multiple times to create different labels.

```

path = Config().data/'bears'

path.mkdir(parents=True, exist_ok=True)

path.ls()

```

Finally, upload your urls file. You just need to press 'Upload' in your working directory and select your file, then click 'Upload' for each of the displayed files.

## Download images

Now you will need to download your images from their respective urls.

fast.ai has a function that allows you to do just that. You just have to specify the urls filename as well as the destination folder and this function will download and save all images that can be opened. If they have some problem in being opened, they will not be saved.

Let's download our images! Notice you can choose a maximum number of images to be downloaded. In this case we will not download all the urls.

```

classes = ['teddy','grizzly','black']

for c in classes:

print(c)

file = f'urls_{c}.csv'

download_images(path/c, path/file, max_pics=200)

# If you have problems download, try with `max_workers=0` to see exceptions:

#download_images(path/file, dest, max_pics=20, max_workers=0)

```

Then we can remove any images that can't be opened:

```

for c in classes:

print(c)

verify_images(path/c, delete=True, max_size=500)

```

## View data

```

np.random.seed(42)

dls = ImageDataLoaders.from_folder(path, train=".", valid_pct=0.2, item_tfms=RandomResizedCrop(460, min_scale=0.75),

bs=64, batch_tfms=[*aug_transforms(size=224, max_warp=0), Normalize.from_stats(*imagenet_stats)])

# If you already cleaned your data, run this cell instead of the one before

# np.random.seed(42)

# dls = ImageDataLoaders.from_csv(path, folder=".", valid_pct=0.2, csv_labels='cleaned.csv',

# item_tfms=RandomResizedCrop(460, min_scale=0.75), bs=64,

# batch_tfms=[*aug_transforms(size=224, max_warp=0), Normalize.from_stats(*imagenet_stats)])

```

Good! Let's take a look at some of our pictures then.

```

dls.vocab

dls.show_batch(rows=3, figsize=(7,8))

dls.vocab, dls.c, len(dls.train_ds), len(dls.valid_ds)

```

## Train model

```

learn = cnn_learner(dls, resnet34, metrics=error_rate)

learn.fit_one_cycle(4)

learn.save('stage-1')

learn.unfreeze()

```

If the plot is not showing try to give a start and end learning rate:

`learn.lr_find(start_lr=1e-5, end_lr=1e-1)`

```

learn.lr_find()

learn.load('stage-1')

learn.fit_one_cycle(2, lr_max=slice(3e-5,3e-4))

learn.save('stage-2')

```

## Interpretation

```

learn.load('stage-2');

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

```

## Putting your model in production

First thing first, let's export the content of our `Learner` object for production:

```

learn.export()

```

This will create a file named 'export.pkl' in the directory where we were working that contains everything we need to deploy our model (the model, the weights but also some metadata like the classes or the transforms/normalization used).

You probably want to use CPU for inference, except at massive scale (and you almost certainly don't need to train in real-time). If you don't have a GPU that happens automatically. You can test your model on CPU like so:

```

defaults.device = torch.device('cpu')

img = Image.open(path/'black'/'00000021.jpg')

img

```

We create our `Learner` in production environment like this, just make sure that `path` contains the file 'export.pkl' from before.

```

learn = torch.load(path/'export.pkl')

pred_class,pred_idx,outputs = learn.predict(path/'black'/'00000021.jpg')

pred_class

```

So you might create a route something like this ([thanks](https://github.com/simonw/cougar-or-not) to Simon Willison for the structure of this code):

```python

@app.route("/classify-url", methods=["GET"])

async def classify_url(request):

bytes = await get_bytes(request.query_params["url"])

img = PILImage.create(bytes)

_,_,probs = learner.predict(img)

return JSONResponse({

"predictions": sorted(

zip(cat_learner.dls.vocab, map(float, probs)),

key=lambda p: p[1],

reverse=True

)

})

```

(This example is for the [Starlette](https://www.starlette.io/) web app toolkit.)

## Things that can go wrong

- Most of the time things will train fine with the defaults

- There's not much you really need to tune (despite what you've heard!)

- Most likely are

- Learning rate

- Number of epochs

### Learning rate (LR) too high

```

learn = cnn_learner(dls, resnet34, metrics=error_rate)

learn.fit_one_cycle(1, lr_max=0.5)

```

### Learning rate (LR) too low

```

learn = cnn_learner(dls, resnet34, metrics=error_rate)

```

Previously we had this result:

```

Total time: 00:57

epoch train_loss valid_loss error_rate

1 1.030236 0.179226 0.028369 (00:14)

2 0.561508 0.055464 0.014184 (00:13)

3 0.396103 0.053801 0.014184 (00:13)

4 0.316883 0.050197 0.021277 (00:15)

```

```

learn.fit_one_cycle(5, lr_max=1e-5)

learn.recorder.plot_loss()

```

As well as taking a really long time, it's getting too many looks at each image, so may overfit.

### Too few epochs

```

learn = cnn_learner(dls, resnet34, metrics=error_rate, pretrained=False)

learn.fit_one_cycle(1)

```

### Too many epochs

```

from fastai.basics import *

from fastai.callback.all import *

from fastai.vision.all import *

from nbdev.showdoc import *

path = Config().data/'bears'

np.random.seed(42)

dls = ImageDataLoaders.from_folder(path, train=".", valid_pct=0.8, item_tfms=RandomResizedCrop(460, min_scale=0.75),

bs=32, batch_tfms=[AffineCoordTfm(size=224), Normalize.from_stats(*imagenet_stats)])

learn = cnn_learner(dls, resnet50, metrics=error_rate, config=cnn_config(ps=0))

learn.unfreeze()

learn.fit_one_cycle(40, slice(1e-6,1e-4), wd=0)

```

| true |

code

| 0.522202 | null | null | null | null |

|

# NLP Feature Engineering

## Feature Creation

```

# Read in the text data

import pandas as pd

data = pd.read_csv("./data/SMSSpamCollection.tsv", sep='\t')

data.columns = ['label', 'body_text']

```

### Create feature for text message length

```

data['body_len'] = data['body_text'].apply(lambda x: len(x) - x.count(" "))

data.head()

```

### Create feature for % of text that is punctuation

```

import string

# Create a function to count punctuation

def count_punct(text):

count = sum([1 for char in text if char in string.punctuation])

return round(count/(len(text) - text.count(" ")), 3)*100

# Create a column for the % of punctuation in each body text

data['punct%'] = data['body_text'].apply(lambda x: count_punct(x))

data.head()

```

## Evaluate Created Features

```

# Import the dependencies

from matplotlib import pyplot

import numpy as np

%matplotlib inline

# Create a plot that demonstrates the length of the message for 'ham' and 'spam'

bins = np.linspace(0, 200, 40)

pyplot.hist(data[data['label']=='spam']['body_len'], bins, alpha=0.5, normed=True, label='spam')

pyplot.hist(data[data['label']=='ham']['body_len'], bins, alpha=0.5, normed=True, label='ham')

pyplot.legend(loc='upper left')

pyplot.show()

# Create a plot that demonstrates the punctuation % for 'ham' and 'spam'

bins = np.linspace(0, 50, 40)

pyplot.hist(data[data['label']=='spam']['punct%'], bins, alpha=0.5, normed=True, label='spam')

pyplot.hist(data[data['label']=='ham']['punct%'], bins, alpha=0.5, normed=True, label='ham')

pyplot.legend(loc='upper right')

pyplot.show()

```

## Transformation

### Plot the two new features

```

bins = np.linspace(0, 200, 40)

pyplot.hist(data['body_len'], bins)

pyplot.title("Body Length Distribution")

pyplot.show()

bins = np.linspace(0, 50, 40)

pyplot.hist(data['punct%'], bins)

pyplot.title("Punctuation % Distribution")

pyplot.show()

```

### Transform the punctuation % feature

### Box-Cox Power Transformation

**Base Form**: $$ y^x $$

| X | Base Form | Transformation |

|------|--------------------------|--------------------------|

| -2 | $$ y ^ {-2} $$ | $$ \frac{1}{y^2} $$ |

| -1 | $$ y ^ {-1} $$ | $$ \frac{1}{y} $$ |

| -0.5 | $$ y ^ {\frac{-1}{2}} $$ | $$ \frac{1}{\sqrt{y}} $$ |

| 0 | $$ y^{0} $$ | $$ log(y) $$ |

| 0.5 | $$ y ^ {\frac{1}{2}} $$ | $$ \sqrt{y} $$ |

| 1 | $$ y^{1} $$ | $$ y $$ |

| 2 | $$ y^{2} $$ | $$ y^2 $$ |

**Process**

1. Determine what range of exponents to test

2. Apply each transformation to each value of your chosen feature

3. Use some criteria to determine which of the transformations yield the best distribution

| true |

code

| 0.473049 | null | null | null | null |

|

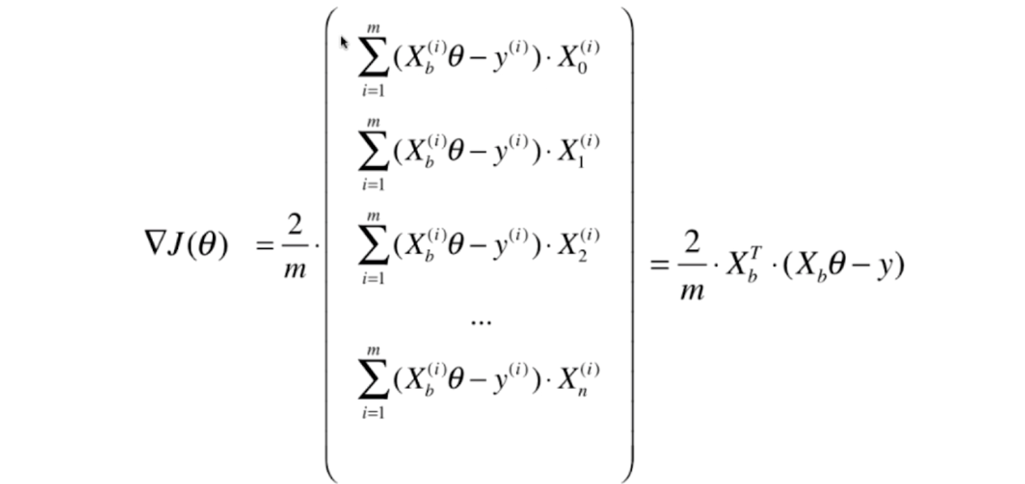

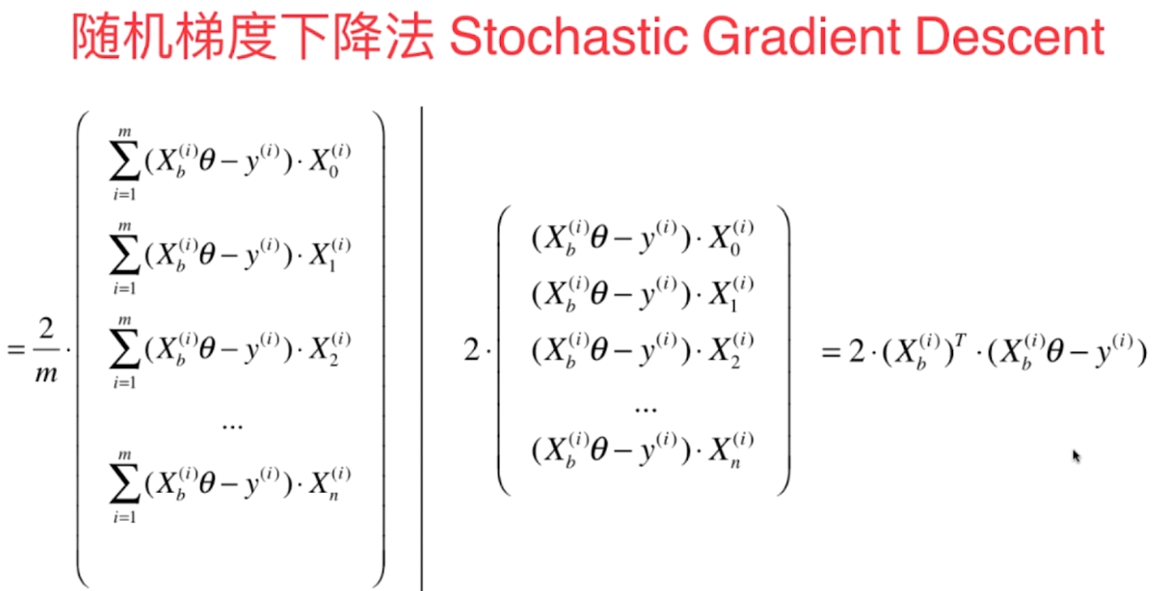

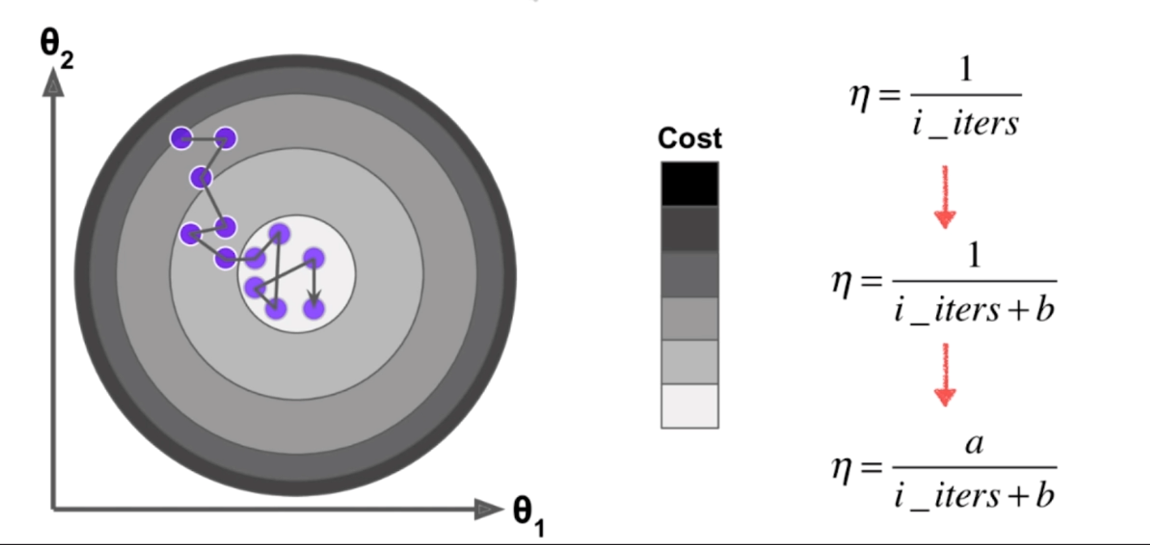

# Stochastic Gradient Descent

- 上节梯度下降法如图所示

[](https://imgchr.com/i/8mATJK)

- 我们每次都把所有的梯度算出来,称为**批量梯度下降法**

- 但是这样在样本容量很大时,也是比较耗时的,解决方法是**随机梯度下降法**

[](https://imgchr.com/i/8mALsH)

- 我们随机的取一个 $i$ ,然后用这个 $i$ 得到一个向量,然后向这个方向搜索迭代

[](https://imgchr.com/i/8mAHzD)

- 在随机梯度下降法中,我们不能保证寻找的方向就是损失函数减小的方向

- 更不能保证时减小的最快的方向

- 我们希望 $\eta$ 随着迭代次数增大越来越小,于是 $\eta$ 就有右边表示形式

- 其中 a 和 b 是两个超参数

### 1. 批量梯度下降算法

```

import numpy as np

import matplotlib.pyplot as plt

m = 100000

x = np.random.normal(size = m)

X = x.reshape(-1, 1)

y = 4. * x + 3. + np.random.normal(0, 3, size=m)

def J(theta, X_b, y):

try:

return np.sum((y - X_b.dot(theta))**2) / len(y)

except:

return float('inf')

def dJ(theta, X_b, y):

return X_b.T.dot(X_b.dot(theta) - y) * 2. / len(y)

def gradient_descent(X_b, y, initial_theta, eta, n_iters = 1e4, epsilon=1e-8):

theta = initial_theta

i_iter = 0

while i_iter < n_iters:

gradient = dJ(theta, X_b, y)

last_theta = theta

theta = theta - eta * gradient

if np.abs(J(theta, X_b, y) - J(last_theta, X_b, y)) < epsilon:

break

i_iter += 1

return theta

X_b = np.hstack([np.ones([len(X), 1]), X])

initial_theta = np.zeros(X_b.shape[1])

eta = 0.01

theta = gradient_descent(X_b, y, initial_theta, eta)

theta

```

### 2. 随机梯度下降法

```

# 传入具体的某一行

def dJ(theta, X_b_i, y_i):

return X_b_i.T.dot(X_b_i.dot(theta) - y_i) * 2.

def sgd(X_b, y, initial_theta, n_iters):

t0 = 5

t1 = 50

def learning_rate(cur_iter):

return t0 / (cur_iter + t1)

theta = initial_theta

for cur_iter in range(n_iters):

# 随机取一个 i

rand_i = np.random.randint(len(X_b))

gradient = dJ(theta, X_b[rand_i], y[rand_i])

# 迭代 theta

theta = theta - learning_rate(cur_iter) * gradient

return theta

X_b = np.hstack([np.ones([len(X), 1]), X])

initial_theta = np.zeros(X_b.shape[1])

theta = sgd(X_b, y, initial_theta, n_iters=len(X_b)//3)

# 可以看出,我们只使用了三分之一的样本,就达到了很好的效果

theta

# theta 值和批量梯度下降算法几乎一致

```

### 3. 使用我们自己的SGD

```

from LR.LinearRegression import LinearRegression

lin_reg = LinearRegression()

lin_reg.fit_sgd(X, y, n_iters=2)

lin_reg.coef_

lin_reg.intercept_

```

#### 使用真实数据

```

from sklearn import datasets

boston = datasets.load_boston()

X = boston.data

y = boston.target

X = X[y < 50.0]

y = y[y < 50.0]

from LR.model_selection import train_test_split

# 数据集分割

X_train, X_test, y_train, y_test = train_test_split(X, y, seed=333)

# 归一化处理

from sklearn.preprocessing import StandardScaler

standardScaler = StandardScaler()

standardScaler.fit(X_train)

X_train_standard = standardScaler.transform(X_train)

X_test_standard = standardScaler.transform(X_test)

lin_reg2 = LinearRegression()

%time lin_reg2.fit_sgd(X_train_standard, y_train)

lin_reg2.score(X_test_standard, y_test)

```

### 4. scikit-learn中的SGD

```

from sklearn.linear_model import SGDRegressor

sgd_reg = SGDRegressor()

%time sgd_reg.fit(X_train_standard, y_train)

sgd_reg.score(X_test_standard, y_test)

SGDRegressor?

sgd_reg = SGDRegressor(n_iter=100)

%time sgd_reg.fit(X_train_standard, y_train)

sgd_reg.score(X_test_standard, y_test)

```

| true |

code

| 0.53522 | null | null | null | null |

|

# Siamese Convolutional Neural Network

```

from model import siamese_CNN

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import pickle

import numpy as np

from pandas import DataFrame

import tensorflow as tf

import keras.backend as K

# model imports

from keras.models import Sequential, Model, Input

from keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

from keras.layers import Dropout, BatchNormalization

from keras.layers import Lambda, concatenate

from keras.initializers import RandomNormal

from tensorflow.keras.regularizers import l2

from keras.optimizers import Adam, RMSprop

from keras.callbacks import EarlyStopping

# plotting

from tensorflow.keras.utils import plot_model

import pydotplus as pydot

import matplotlib.pyplot as plt

%matplotlib inline

```

## Setting up datasets

```

def load_pickle(file):

with open(file, 'rb') as f:

return pickle.load(f)

def load_dataset(i):

print("\nLoading dataset...", end="")

data = load_pickle(PATHS[i][0]) # training data

pairs = load_pickle(PATHS[i][1]) # pairs of data

pairs = [pairs[0], pairs[1]]

targets = load_pickle(PATHS[i][2]) # targets of the data

print("dataset {0} loaded successfully!\n".format(PATHS.index(PATHS[i])))

return data, pairs, targets

def data_shapes():

print("\nNumber of classes : ", data.shape[0])

print("Original signatures : ", len(data[0][0]))

print("Forged signatures : ", len(data[0][1]))

print("Image shape : ", data[0][0][0].shape)

print("Total number of pairs : ", pairs[0].shape[0])

print("Number of pairs for each class : ", pairs[0].shape[0]//data.shape[0])

print("Targets shape : ", targets.shape)

print()

def plot_13(id1, id2, id3):

fig, ax = plt.subplots(1, 3, sharex=True, sharey=True, figsize=(8,8))

ax[0].imshow(pairs[0][id1])

ax[1].imshow(pairs[1][id2])

ax[2].imshow(pairs[1][id3])

# subplot titles

ax[0].set_title('Anchor image of class {0}'.format(id1//42))

ax[1].set_title('Target: {0}'.format(targets[id2]))

ax[2].set_title('Target: {0}'.format(targets[id3]))

fig.tight_layout()

```

## Setting up models

```

def contrastive_loss(y_true, y_pred):

"""Contrastive loss.

if y = true and d = pred,

d(y,d) = mean(y * d^2 + (1-y) * (max(margin-d, 0))^2)

Args:

y_true : true values.

y_pred : predicted values.

Returns:

contrastive loss

"""

margin = 1

return K.mean(y_true * K.square(y_pred) + (1 - y_true) * K.square(K.maximum(margin - y_pred, 0)))

def model_setup(verbose=False):

rms = RMSprop(lr=1e-4, rho=0.9, epsilon=1e-08)

model = siamese_CNN((224, 224, 1))

model.compile(optimizer=rms, loss=contrastive_loss)

if verbose:

model.summary()

tf.keras.utils.plot_model(

model,

show_shapes=True,

show_layer_names=True,

to_file="resources\\model_plot.png"

)

return model

```

## Training

```

def model_training(model, weights_name):

print("\nStarting training!\n")

# hyperparameters

EPOCHS = 100 # number of epochs

BS = 32 # batch size

# callbacks

callbacks = [EarlyStopping(monitor='val_loss', patience=3, verbose=1,)]

history = model.fit(

pairs, targets,

batch_size=BS,

epochs=EPOCHS,

verbose=1,

callbacks=callbacks,

validation_split=0.25,

)

ALL_HISTORY.append(history)

print("\nSaving weight for model...", end="")

siamese_net.save_weights('weights\\{0}.h5'.format(weights_name))

print("saved successfully!")

```

## Evaluation

```

def compute_accuracy_roc(predictions, labels):

"""Compute ROC accuracyand threshold.

Also, plot FAR-FRR curves and P-R curves for input data.

Args:

predictions -- np.array : array of predictions.

labels -- np.array : true labels (0 or 1).

plot_far_frr -- bool : plots curves of True.

Returns:

max_acc -- float : maximum accuracy of model.

best_thresh --float : best threshold for the model.

"""

dmax = np.max(predictions)

dmin = np.min(predictions)

nsame = np.sum(labels == 1) #similar

ndiff = np.sum(labels == 0) #different

step = 0.01

max_acc = 0

best_thresh = -1

frr_plot = []

far_plot = []

pr_plot = []

re_plot = []

ds = []

for d in np.arange(dmin, dmax+step, step):

idx1 = predictions.ravel() <= d # guessed genuine

idx2 = predictions.ravel() > d # guessed forged

tp = float(np.sum(labels[idx1] == 1))

tn = float(np.sum(labels[idx2] == 0))

fp = float(np.sum(labels[idx1] == 0))

fn = float(np.sum(labels[idx2] == 1))

tpr = float(np.sum(labels[idx1] == 1)) / nsame

tnr = float(np.sum(labels[idx2] == 0)) / ndiff

acc = 0.5 * (tpr + tnr)

pr = tp / (tp + fp)

re = tp / (tp + fn)

if (acc > max_acc):

max_acc, best_thresh = acc, d

far = fp / (fp + tn)

frr = fn / (fn + tp)

frr_plot.append(frr)

pr_plot.append(pr)

re_plot.append(re)

far_plot.append(far)

ds.append(d)

plot_metrics = [ds, far_plot, frr_plot, pr_plot, re_plot]

return max_acc, best_thresh, plot_metrics

def model_evaluation(model):

print("\nEvaluating model...", end="")

pred = model.predict(pairs)

acc, thresh, plot_metrics = compute_accuracy_roc(pred, targets)

print("evaluation finished!\n")

ACCURACIES.append(acc)

THRESHOLDS.append(thresh)

PLOTS.append(plot_metrics)

```

## Visualizing models

```

def visualize_history():

losses = ['loss', 'val_loss']

accs = ['accuracy', 'val_accuracy']

fig, ax = plt.subplots(3, 2, sharex=True, sharey=True, figsize=(8,8))

for i in range(3):

for x, y in zip(losses, accs):

ax[i,0].plot(ALL_HISTORY[i].history[x])

ax[i,0].set_title('Losses')

ax[i,1].plot(ALL_HISTORY[i].history[y])

ax[i,1].set_title('Accuracies')

ax[i,0].legend(losses)

ax[i,1].legend(accs)

plt.grid(True)

plt.tight_layout()

def evaluation_plots(metrics):

ds = metrics[0]

far_plot = metrics[1]

frr_plot = metrics[2]

pr_plot = metrics[3]

re_plot = metrics[4]

fig = plt.figure(figsize=(15,6))

# error rate

ax = fig.add_subplot(121)

ax.plot(ds, far_plot, color='red')

ax.plot(ds, frr_plot, color='blue')

ax.set_title('Error rate')

ax.legend(['FAR', 'FRR'])

ax.set(xlabel = 'Thresholds', ylabel='Error rate')

# precision-recall curve

ax1 = fig.add_subplot(122)

ax1.plot(ds, pr_plot, color='green')

ax1.plot(ds, re_plot, color='magenta')

ax1.set_title('P-R curve')

ax1.legend(['Precision', 'Recall'])

ax.set(xlabel = 'Thresholds', ylabel='Error rate')

plt.show()

```

## Everything put together

```

# paths to datasets

PATHS = [

[

'data\\pickle-files\\cedar_pairs1_train.pickle',

'data\\pickle-files\\cedar_pairs1_pairs.pickle',

'data\\pickle-files\\cedar_pairs1_targets.pickle'

],

[

"data\\pickle-files\\bengali_pairs1_pairs.pickle"

'data\\pickle-files\\bengali_pairs1_train.pickle',

'data\\pickle-files\\bengali_pairs1_targets.pickle'

],

[

'data\\pickle-files\\hindi_pairs1_train.pickle',

'data\\pickle-files\\hindi_pairs1_pairs.pickle',

'data\\pickle-files\\hindi_pairs1_targets.pickle'

]

]

# for kaggle

# PATHS = [

# [

# '../usr/lib/preprocess/cedar_pairs1_train.pickle',

# '../usr/lib/preprocess/cedar_pairs1_pairs.pickle',

# '../usr/lib/preprocess/cedar_pairs1_targets.pickle'

# ],

# [

# '../usr/lib/preprocess/bengali_pairs1_train.pickle',

# '../usr/lib/preprocess/bengali_pairs1_pairs.pickle',

# '../usr/lib/preprocess/bengali_pairs1_targets.pickle'

# ],

# [

# '../usr/lib/preprocess/hindi_pairs1_train.pickle',

# '../usr/lib/preprocess/hindi_pairs1_pairs.pickle',

# '../usr/lib/preprocess/hindi_pairs1_targets.pickle'

# ]

# ]

# evaluation

ALL_HISTORY = []

ACCURACIES = []

THRESHOLDS = []

PLOTS = []

for i in range(3):

data, pairs, targets = load_dataset(i)

data_shapes()

for bs in range(0, 3*42, 42):

plot_13(0+bs, 20+bs, 41+bs)

print()

if i == 0:

siamese_net = model_setup(True)

model_training(siamese_net, 'siamese_cedar')

elif i == 1:

siamese_net = model_setup()

model_training(siamese_net, 'siamese_bengali')

elif i == 2:

siamese_net = model_setup()

model_training(siamese_net, 'siamese_hindi')

model_evaluation(siamese_net)

del data

del pairs

del targets

visualize_history()

df = DataFrame.from_dict({'Accuracies': ACCURACIES,

'Thresholds': THRESHOLDS})

df.index = ['Cedar', 'BhSig260 Bengali', 'BhSig260 Hindi']

df

for met in PLOTS:

evaluation_plots(met)

```

| true |

code

| 0.740479 | null | null | null | null |

|

# Example 10 A: Inverted Pendulum with Wall

```

import numpy as np

import scipy.linalg as spa

import pypolycontain as pp

import pydrake.solvers.mathematicalprogram as MP

import pydrake.solvers.gurobi as Gurobi_drake

# use Gurobi solver

global gurobi_solver, license

gurobi_solver=Gurobi_drake.GurobiSolver()

license = gurobi_solver.AcquireLicense()

import pypolycontain as pp

import pypolycontain.pwa_control as pwa

import matplotlib.pyplot as plt

```

## Dynamcis and matrices

The system is constrained to $|\theta| \le 0.12$, $|\dot{\theta}| \le 1$, $|u| \le 4$, and the wall is situated at $\theta=0.1$. The problem is to identify a set of states $\mathcal{X} \in \mathbb{R}^2$ and the associated control law $\mu: [-0.12,0.12] \times [-1,1] \rightarrow [-4,4]$ such that all states in $\mathcal{X}$ are steered toward origin in finite time, while respecting the constraints. It is desired that $\mathcal{X}$ is as large as possible. The dynamical system is described as a hybrid system with two modes associated with ``contact-free" and ``contact". The piecewise affine dynamics is given as:

\begin{equation*}

A_1=

\left(

\begin{array}{cc}

1 & 0.01 \\

0.1 & 1

\end{array}

\right),

A_2=

\left(

\begin{array}{cc}

1 & 0.01 \\

-9.9 & 1

\end{array}

\right),

\end{equation*}

\begin{equation*}

B_1=B_2=

\left(

\begin{array}{c}

0 \\ 0.01

\end{array}

\right),

c_1=

\left(

\begin{array}{c}

0 \\ 0

\end{array}

\right) ,

c_2=

\left(

\begin{array}{c}

0 \\ 1

\end{array}

\right),

\end{equation*}

where mode 1 and 2 correspond to contact-free $\theta \le 0.1$ and contact dynamics $\theta >0.1$, respectively.

```

A=np.array([[1,0.01],[0.1,1]])

B=np.array([0,0.01]).reshape(2,1)

c=np.array([0,0]).reshape(2,1)

C=pp.unitbox(N=3).H_polytope

C.h=np.array([0.1,1,4,0.1,1,4]).reshape(6,1)

S1=pwa.affine_system(A,B,c,name='free',XU=C)

# X=pp.zonotope(G=np.array([[0.1,0],[0,1]]))

# U=pp.zonotope(G=np.ones((1,1))*4)

# W=pp.zonotope(G=np.array([[0.1,0],[0,1]]))

# Omega=rci_old(A, B, X, U , W, q=5,eta=0.001)

import pickle

(H,h)=pickle.load(open('example_inverted_pendulum_H.pkl','rb'))

Omega=pp.H_polytope(H, h)

A=np.array([[1,0.01],[-9.9,1]])

B=np.array([0,0.01]).reshape(2,1)

c=np.array([0,1]).reshape(2,1)

C=pp.unitbox(N=3).H_polytope

C.h=np.array([0.12,1,4,-0.1,1,4]).reshape(6,1)