modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-13 00:37:47

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-13 00:35:18

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

sd-concepts-library/fish

|

sd-concepts-library

| 2022-09-18T06:57:04Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-18T06:56:57Z |

---

license: mit

---

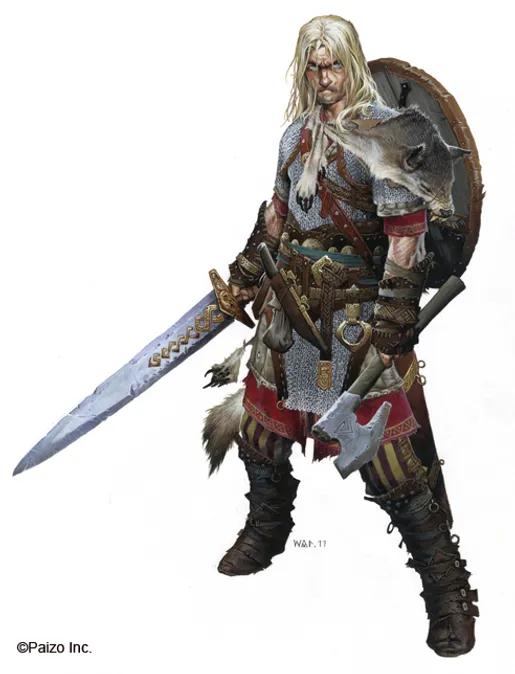

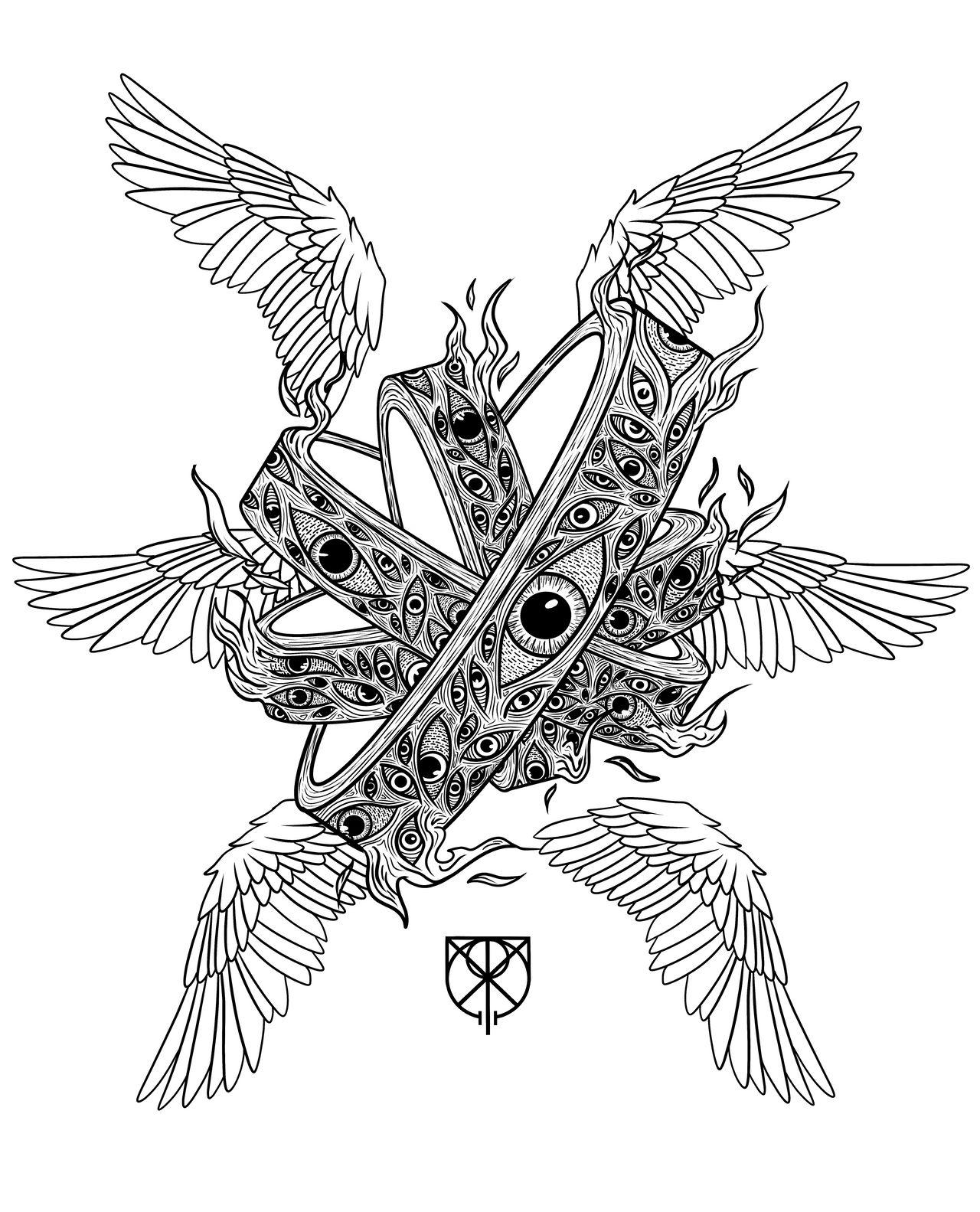

### fish on Stable Diffusion

This is the `<fish>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/dsmuses

|

sd-concepts-library

| 2022-09-18T06:37:28Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-18T06:37:17Z |

---

license: mit

---

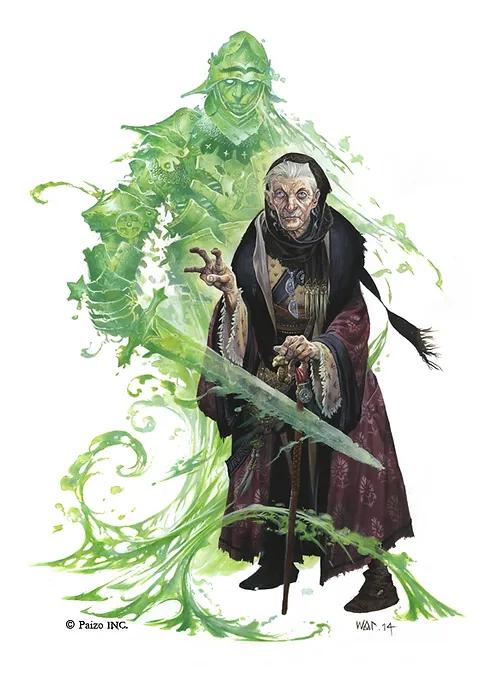

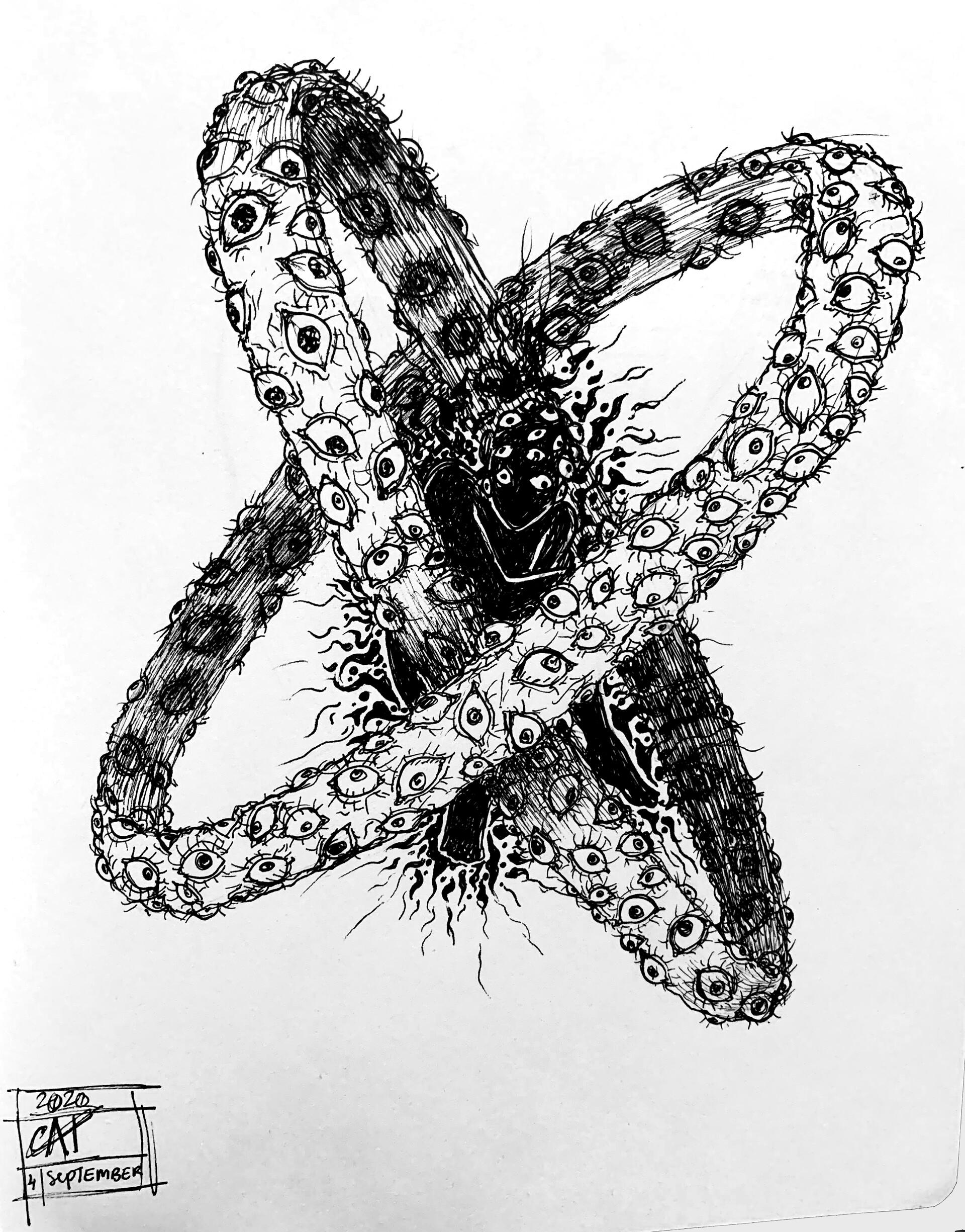

### DSmuses on Stable Diffusion

This is the `<DSmuses>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

roupenminassian/swin-tiny-patch4-window7-224-finetuned-eurosat

|

roupenminassian

| 2022-09-18T06:29:15Z | 221 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-09-18T05:56:58Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-tiny-patch4-window7-224-finetuned-eurosat

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.587248322147651

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-finetuned-eurosat

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6712

- Accuracy: 0.5872

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6811 | 1.0 | 21 | 0.6773 | 0.5604 |

| 0.667 | 2.0 | 42 | 0.6743 | 0.5805 |

| 0.6521 | 3.0 | 63 | 0.6712 | 0.5872 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/threestooges

|

sd-concepts-library

| 2022-09-18T05:40:11Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-18T05:40:07Z |

---

license: mit

---

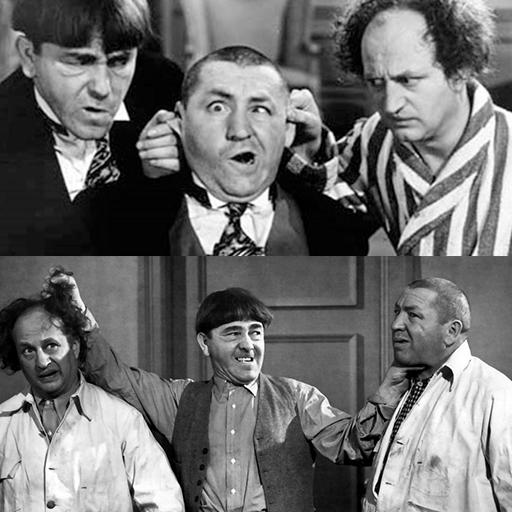

### threestooges on Stable Diffusion

This is the `<threestooges>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

gogin333/model

|

gogin333

| 2022-09-18T04:49:52Z | 0 | 0 | null |

[

"region:us"

] | null | 2022-09-18T04:47:39Z |

летучий глаз с монолизай

|

rosskrasner/testcatdog

|

rosskrasner

| 2022-09-18T03:56:03Z | 0 | 0 |

fastai

|

[

"fastai",

"region:us"

] | null | 2022-09-14T03:29:28Z |

---

tags:

- fastai

---

# Amazing!

🥳 Congratulations on hosting your fastai model on the Hugging Face Hub!

# Some next steps

1. Fill out this model card with more information (see the template below and the [documentation here](https://huggingface.co/docs/hub/model-repos))!

2. Create a demo in Gradio or Streamlit using 🤗 Spaces ([documentation here](https://huggingface.co/docs/hub/spaces)).

3. Join the fastai community on the [Fastai Discord](https://discord.com/invite/YKrxeNn)!

Greetings fellow fastlearner 🤝! Don't forget to delete this content from your model card.

---

# Model card

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

|

tkuye/binary-skills-classifier

|

tkuye

| 2022-09-17T23:11:29Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-17T20:42:34Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: binary-skills-classifier

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# binary-skills-classifier

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1373

- Accuracy: 0.9702

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.098 | 1.0 | 1557 | 0.0917 | 0.9663 |

| 0.0678 | 2.0 | 3114 | 0.0982 | 0.9712 |

| 0.0344 | 3.0 | 4671 | 0.1140 | 0.9712 |

| 0.0239 | 4.0 | 6228 | 0.1373 | 0.9702 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

reinoudbosch/pegasus-samsum

|

reinoudbosch

| 2022-09-17T23:03:24Z | 99 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"dataset:samsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-17T22:26:31Z |

---

tags:

- generated_from_trainer

datasets:

- samsum

model-index:

- name: pegasus-samsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-samsum

This model is a fine-tuned version of [google/pegasus-cnn_dailymail](https://huggingface.co/google/pegasus-cnn_dailymail) on the samsum dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4814

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.7052 | 0.54 | 500 | 1.4814 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.11.0

- Datasets 2.0.0

- Tokenizers 0.11.0

|

sd-concepts-library/cgdonny1

|

sd-concepts-library

| 2022-09-17T22:24:07Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T22:24:00Z |

---

license: mit

---

### cgdonny1 on Stable Diffusion

This is the `<donny1>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

anechaev/Reinforce-U5Pixelcopter

|

anechaev

| 2022-09-17T22:11:25Z | 0 | 0 | null |

[

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-17T22:11:15Z |

---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-U5Pixelcopter

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 17.10 +/- 15.09

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

sd-concepts-library/r-crumb-style

|

sd-concepts-library

| 2022-09-17T21:15:16Z | 0 | 5 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T21:15:11Z |

---

license: mit

---

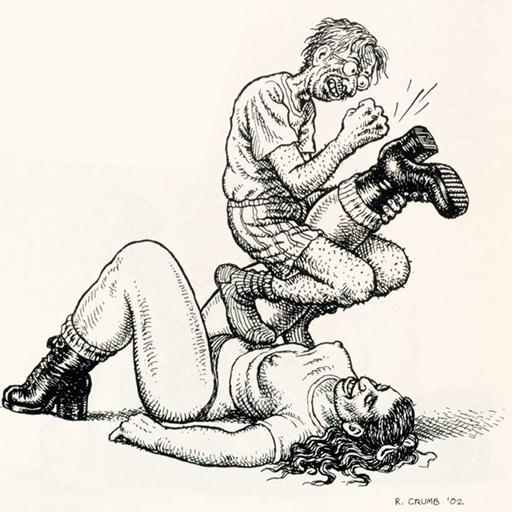

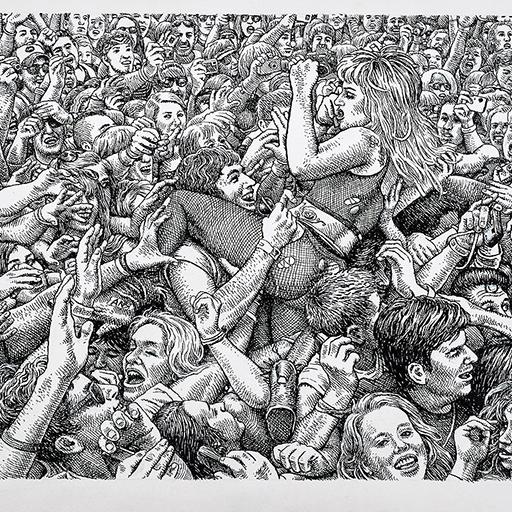

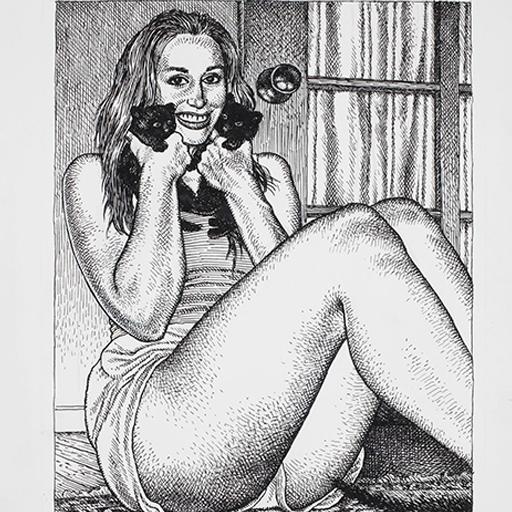

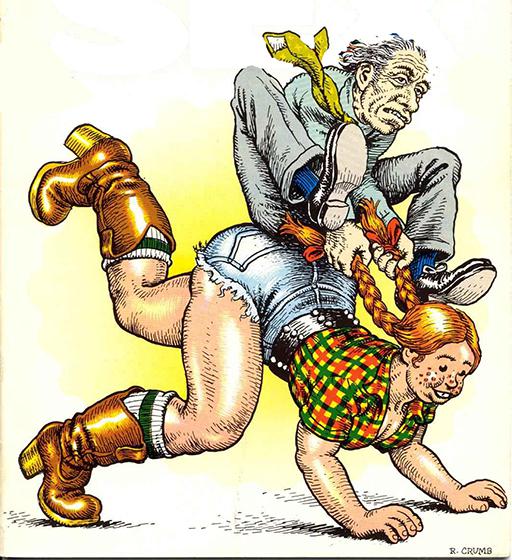

### r crumb style on Stable Diffusion

This is the `<rcrumb>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/3d-female-cyborgs

|

sd-concepts-library

| 2022-09-17T20:15:59Z | 0 | 39 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T20:15:45Z |

---

license: mit

---

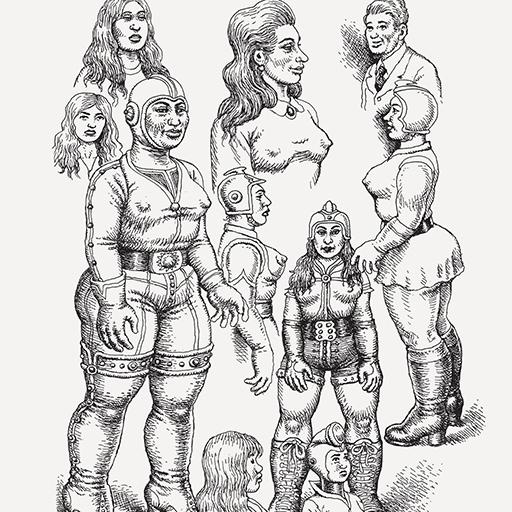

### 3d Female Cyborgs on Stable Diffusion

This is the `<A female cyborg>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

tkuye/skills-classifier

|

tkuye

| 2022-09-17T19:16:20Z | 117 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-17T17:56:54Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: skills-classifier

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# skills-classifier

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3051

- Accuracy: 0.9242

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 312 | 0.2713 | 0.9058 |

| 0.361 | 2.0 | 624 | 0.2539 | 0.9182 |

| 0.361 | 3.0 | 936 | 0.2802 | 0.9238 |

| 0.1532 | 4.0 | 1248 | 0.3058 | 0.9202 |

| 0.0899 | 5.0 | 1560 | 0.3051 | 0.9242 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/wish-artist-stile

|

sd-concepts-library

| 2022-09-17T19:03:21Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T19:03:15Z |

---

license: mit

---

### Wish artist stile on Stable Diffusion

This is the `<wish-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Tritkoman/Kvenfinnishtranslator

|

Tritkoman

| 2022-09-17T18:38:22Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"translation",

"en",

"fi",

"dataset:Tritkoman/autotrain-data-wnkeknrr",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

translation

| 2022-09-17T18:36:53Z |

---

tags:

- autotrain

- translation

language:

- en

- fi

datasets:

- Tritkoman/autotrain-data-wnkeknrr

co2_eq_emissions:

emissions: 0.007023045912239053

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1495654541

- CO2 Emissions (in grams): 0.0070

## Validation Metrics

- Loss: 2.873

- SacreBLEU: 22.653

- Gen len: 7.114

|

dumitrescustefan/gpt-neo-romanian-780m

|

dumitrescustefan

| 2022-09-17T18:24:19Z | 260 | 12 |

transformers

|

[

"transformers",

"pytorch",

"gpt_neo",

"text-generation",

"romanian",

"text generation",

"causal lm",

"gpt-neo",

"ro",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-08-29T15:31:26Z |

---

language:

- ro

license: mit # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses

tags:

- romanian

- text generation

- causal lm

- gpt-neo

---

# GPT-Neo Romanian 780M

This model is a GPT-Neo transformer decoder model designed using EleutherAI's replication of the GPT-3 architecture.

It was trained on a thoroughly cleaned corpus of Romanian text of about 40GB composed of Oscar, Opus, Wikipedia, literature and various other bits and pieces of text, joined together and deduplicated. It was trained for about a month, totaling 1.5M steps on a v3-32 TPU machine.

### Authors:

* Dumitrescu Stefan

* Mihai Ilie

### Evaluation

Evaluation to be added soon, also on [https://github.com/dumitrescustefan/Romanian-Transformers](https://github.com/dumitrescustefan/Romanian-Transformers)

### Acknowledgements

Thanks [TPU Research Cloud](https://sites.research.google/trc/about/) for the TPUv3 machine needed to train this model!

|

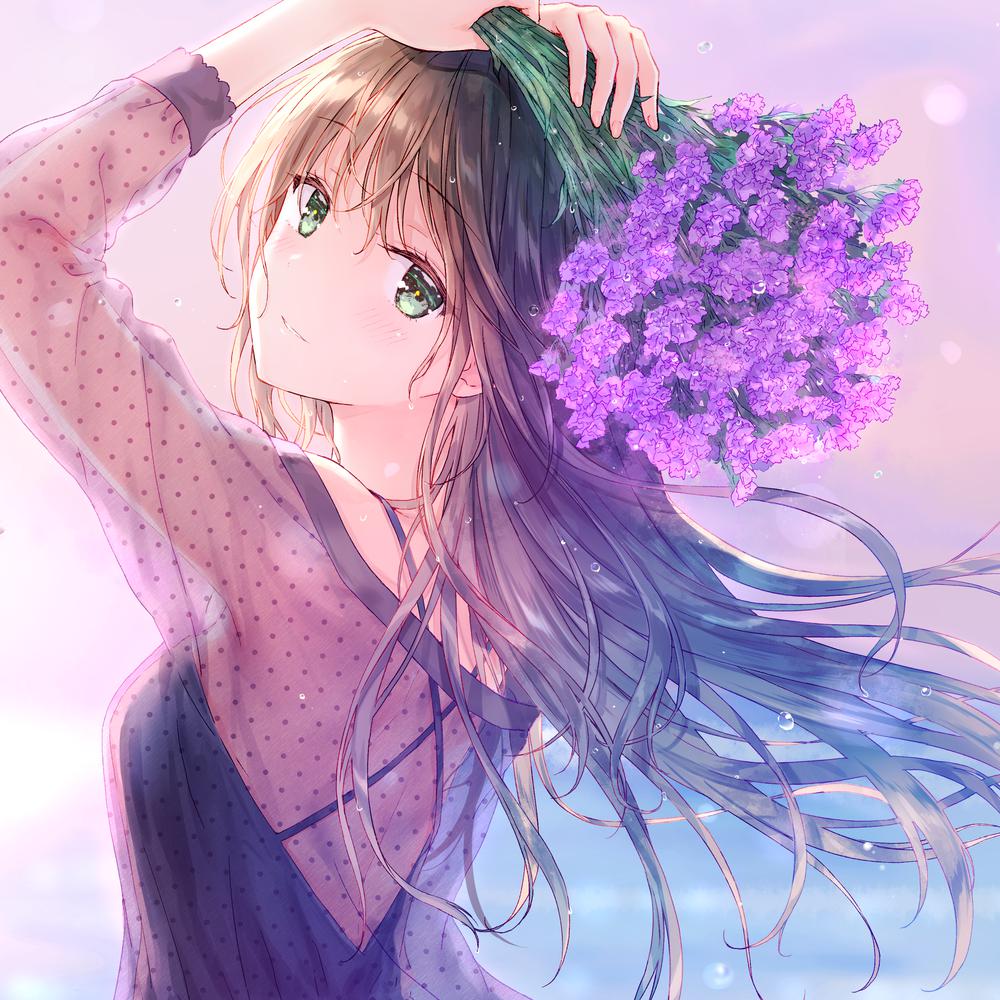

sd-concepts-library/hiten-style-nao

|

sd-concepts-library

| 2022-09-17T17:52:12Z | 0 | 26 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T17:43:38Z |

---

license: mit

---

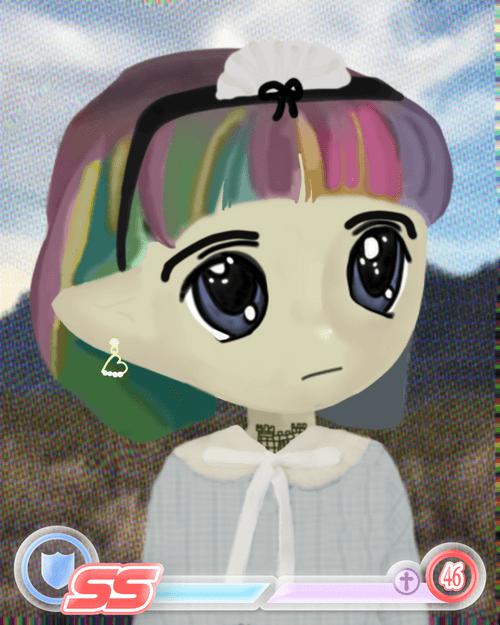

### NOTE: USED WAIFU DIFFUSION

<https://huggingface.co/hakurei/waifu-diffusion>

### hiten-style-nao on Stable Diffusion

Artist: <https://www.pixiv.net/en/users/490219>

This is the `<hiten-style-nao>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

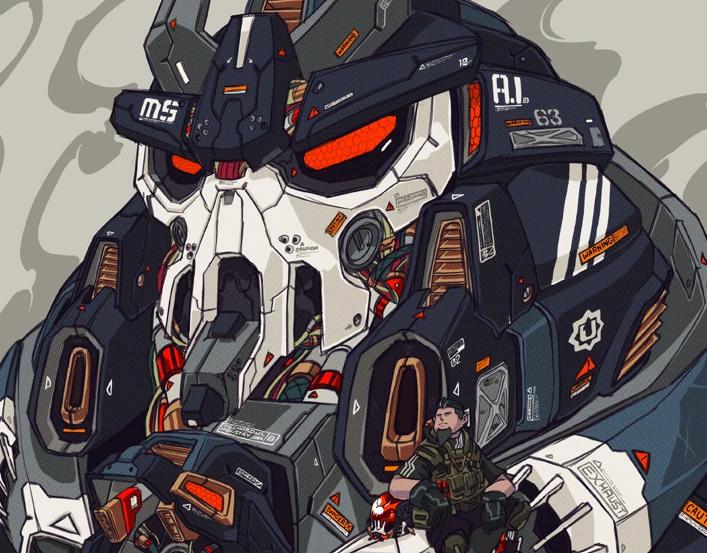

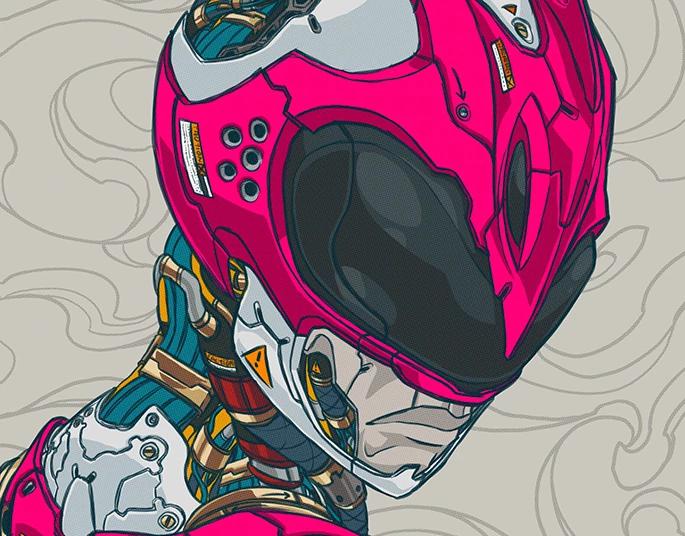

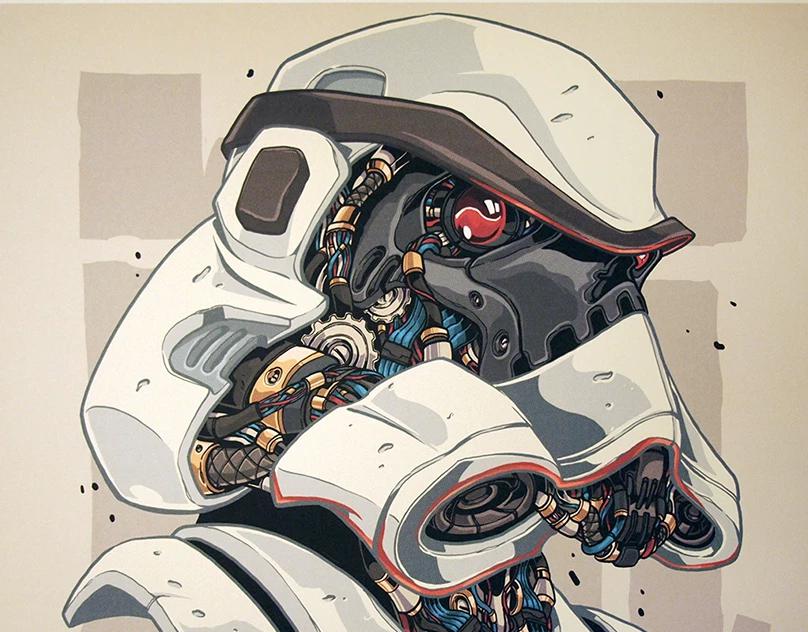

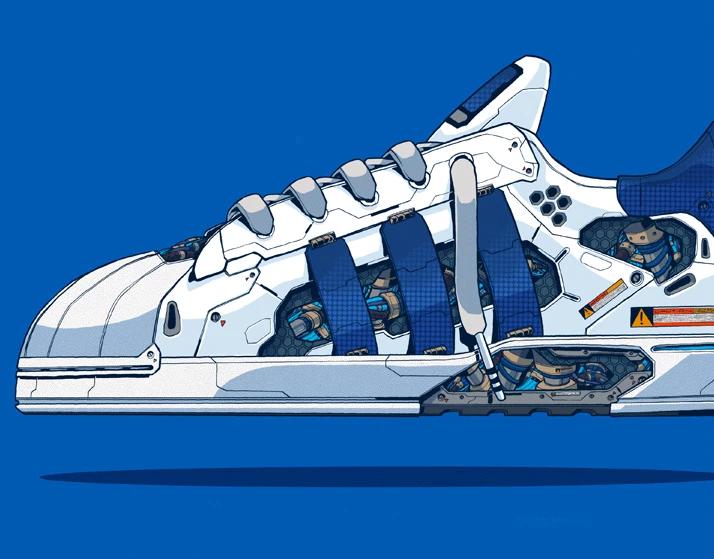

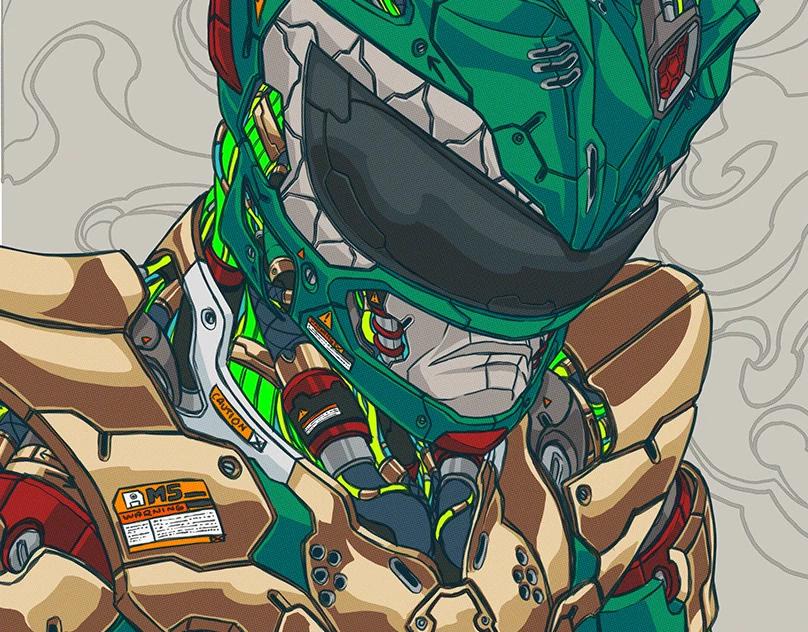

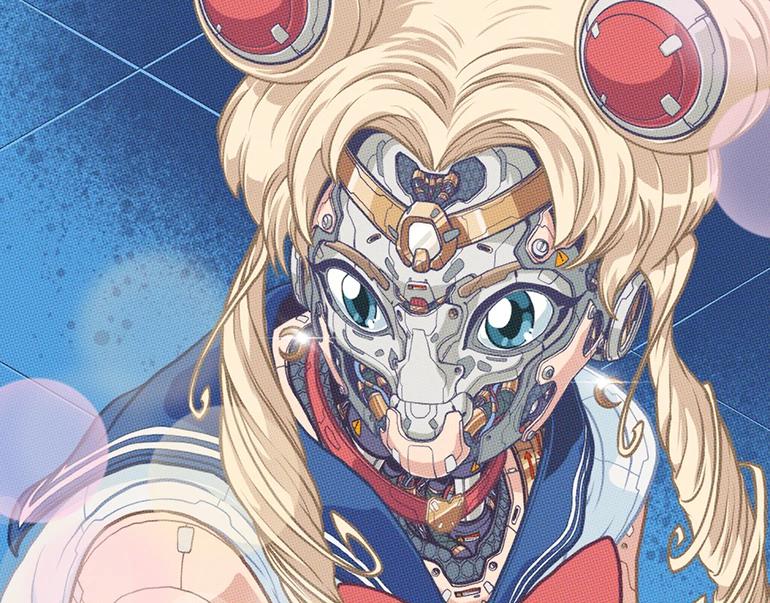

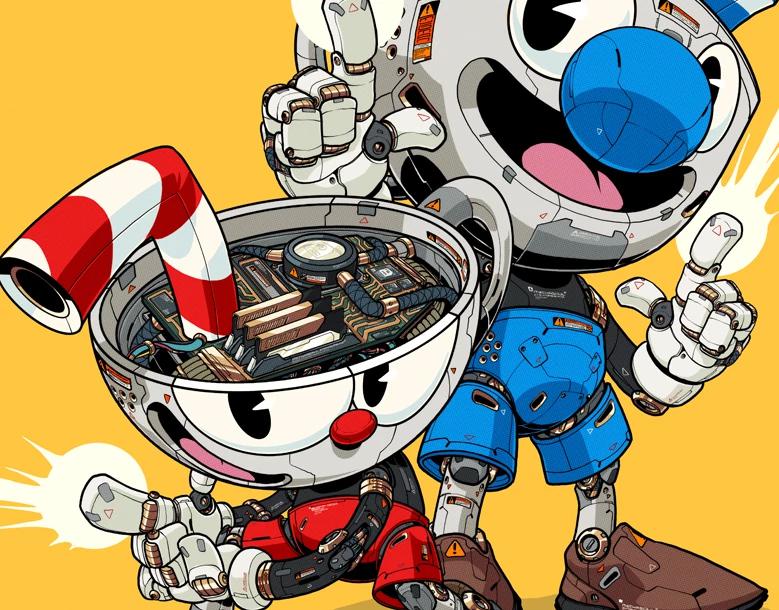

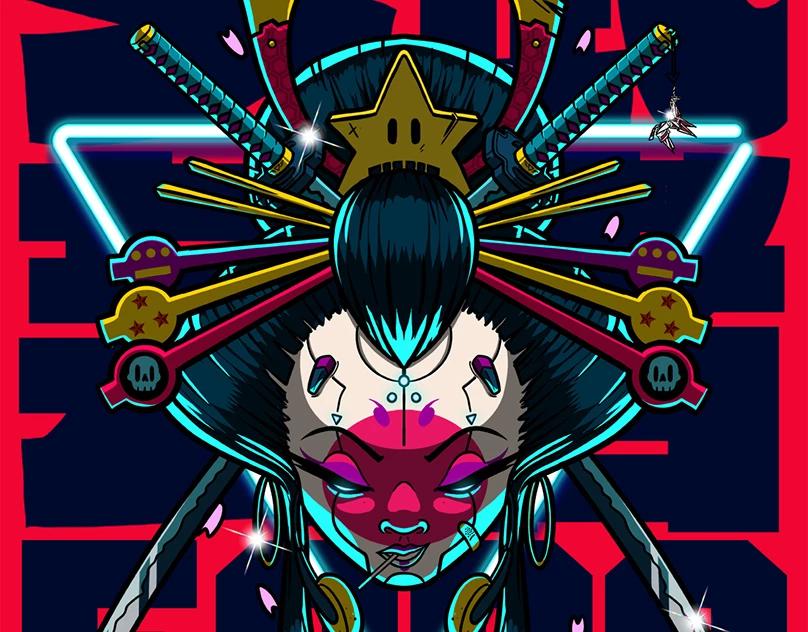

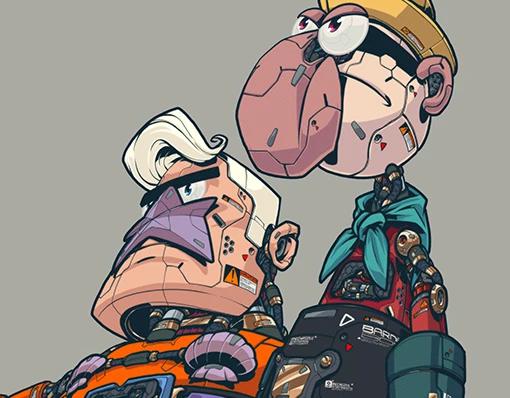

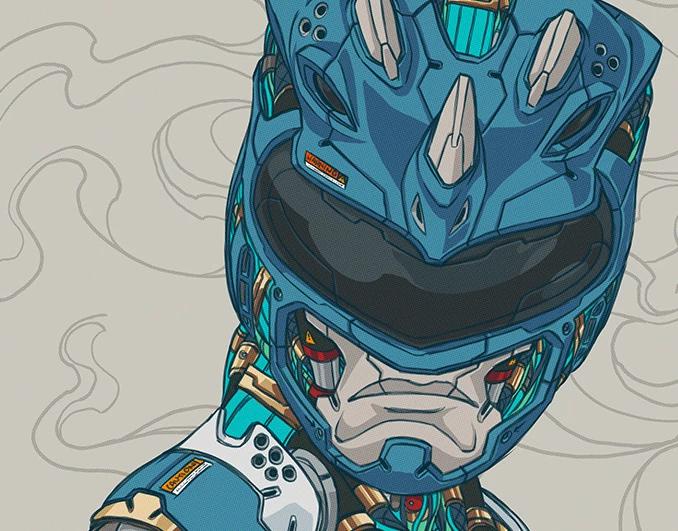

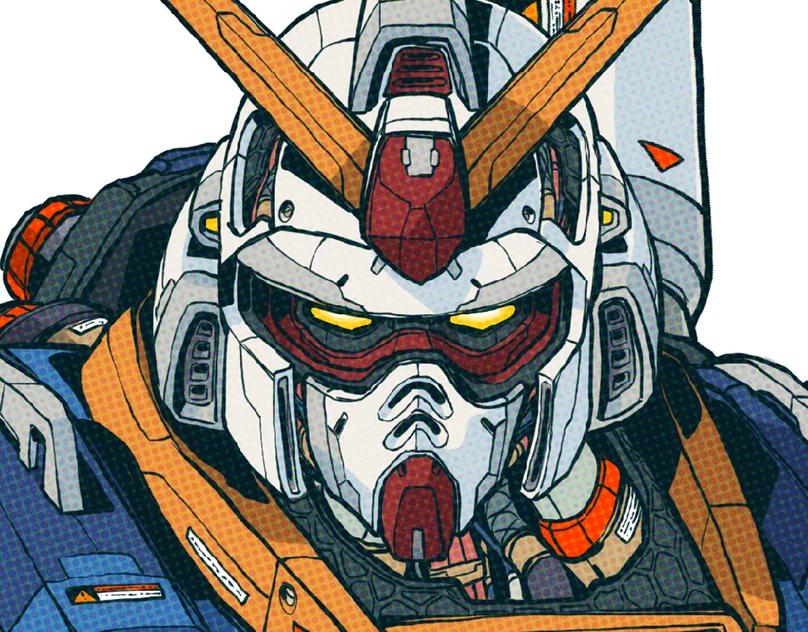

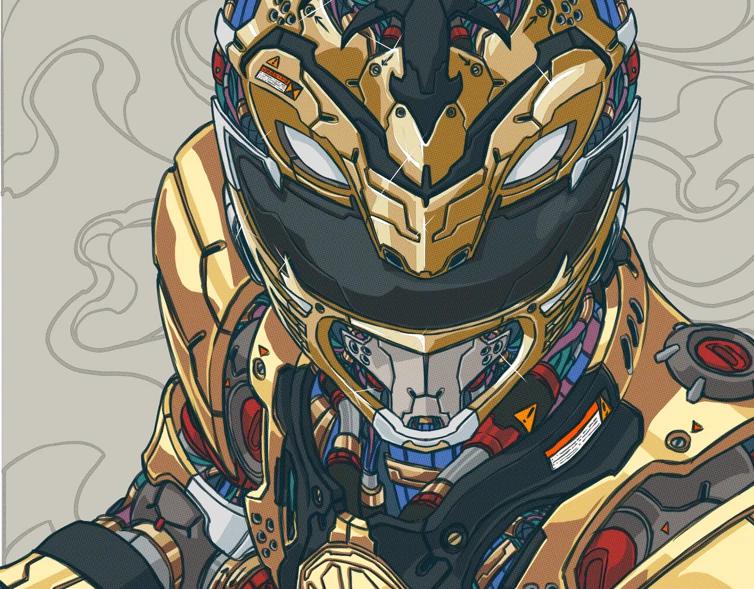

sd-concepts-library/mechasoulall

|

sd-concepts-library

| 2022-09-17T17:44:02Z | 0 | 21 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T17:43:55Z |

---

license: mit

---

### mechasoulall on Stable Diffusion

This is the `<mechasoulall>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/durer-style

|

sd-concepts-library

| 2022-09-17T16:36:56Z | 0 | 7 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T16:36:49Z |

---

license: mit

---

### durer style on Stable Diffusion

This is the `<drr-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/led-toy

|

sd-concepts-library

| 2022-09-17T16:33:57Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T16:33:50Z |

---

license: mit

---

### led-toy on Stable Diffusion

This is the `<led-toy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/she-hulk-law-art

|

sd-concepts-library

| 2022-09-17T16:10:47Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T16:10:35Z |

---

license: mit

---

### She-Hulk Law Art on Stable Diffusion

This is the `<shehulk-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

theojolliffe/pegasus-model-3-x25

|

theojolliffe

| 2022-09-17T15:48:03Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-17T14:27:08Z |

---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: pegasus-model-3-x25

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-model-3-x25

This model is a fine-tuned version of [theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback](https://huggingface.co/theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5668

- Rouge1: 61.9972

- Rouge2: 48.1531

- Rougel: 48.845

- Rougelsum: 59.5019

- Gen Len: 123.0814

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:------:|:---------:|:--------:|

| 1.144 | 1.0 | 883 | 0.5668 | 61.9972 | 48.1531 | 48.845 | 59.5019 | 123.0814 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Tritkoman/Interlinguetranslator

|

Tritkoman

| 2022-09-17T15:45:24Z | 94 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"translation",

"en",

"es",

"dataset:Tritkoman/autotrain-data-akakka",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

translation

| 2022-09-17T15:07:31Z |

---

tags:

- autotrain

- translation

language:

- en

- es

datasets:

- Tritkoman/autotrain-data-akakka

co2_eq_emissions:

emissions: 0.26170356193686023

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1492154444

- CO2 Emissions (in grams): 0.2617

## Validation Metrics

- Loss: 0.770

- SacreBLEU: 62.097

- Gen len: 8.635

|

matemato/q-Taxi-v3

|

matemato

| 2022-09-17T15:11:44Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-17T15:11:35Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.54 +/- 2.70

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="matemato/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

Eksperymenty/Pong-PLE-v0

|

Eksperymenty

| 2022-09-17T14:44:18Z | 0 | 0 | null |

[

"Pong-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-17T14:44:08Z |

---

tags:

- Pong-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Pong-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pong-PLE-v0

type: Pong-PLE-v0

metrics:

- type: mean_reward

value: -16.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pong-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pong-PLE-v0** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

DeividasM/finetuning-sentiment-model-3000-samples

|

DeividasM

| 2022-09-17T13:05:46Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-17T12:51:33Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: train

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.8766666666666667

- name: F1

type: f1

value: 0.877887788778878

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3275

- Accuracy: 0.8767

- F1: 0.8779

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

jayanta/swin-base-patch4-window7-224-20epochs-finetuned-memes

|

jayanta

| 2022-09-17T13:02:25Z | 216 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-09-17T12:07:58Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-base-patch4-window7-224-20epochs-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.847758887171561

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.8504084378729573

name: F1

- type: precision

value: 0.8519647060733512

name: Precision

- type: recall

value: 0.8523956723338485

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-base-patch4-window7-224-20epochs-finetuned-memes

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7090

- Accuracy: 0.8478

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.0238 | 0.99 | 40 | 0.9636 | 0.6445 |

| 0.777 | 1.99 | 80 | 0.6591 | 0.7666 |

| 0.4763 | 2.99 | 120 | 0.5381 | 0.8130 |

| 0.3215 | 3.99 | 160 | 0.5244 | 0.8253 |

| 0.2179 | 4.99 | 200 | 0.5123 | 0.8238 |

| 0.1868 | 5.99 | 240 | 0.5052 | 0.8308 |

| 0.154 | 6.99 | 280 | 0.5444 | 0.8338 |

| 0.1166 | 7.99 | 320 | 0.6318 | 0.8238 |

| 0.1099 | 8.99 | 360 | 0.5656 | 0.8338 |

| 0.0925 | 9.99 | 400 | 0.6057 | 0.8338 |

| 0.0779 | 10.99 | 440 | 0.5942 | 0.8393 |

| 0.0629 | 11.99 | 480 | 0.6112 | 0.8400 |

| 0.0742 | 12.99 | 520 | 0.6588 | 0.8331 |

| 0.0752 | 13.99 | 560 | 0.6143 | 0.8408 |

| 0.0577 | 14.99 | 600 | 0.6450 | 0.8516 |

| 0.0589 | 15.99 | 640 | 0.6787 | 0.8400 |

| 0.0555 | 16.99 | 680 | 0.6641 | 0.8454 |

| 0.052 | 17.99 | 720 | 0.7213 | 0.8524 |

| 0.0589 | 18.99 | 760 | 0.6917 | 0.8470 |

| 0.0506 | 19.99 | 800 | 0.7090 | 0.8478 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

test1234678/distilbert-base-uncased-distilled-clinc

|

test1234678

| 2022-09-17T12:34:43Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:clinc_oos",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-17T07:24:42Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- clinc_oos

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-distilled-clinc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: clinc_oos

type: clinc_oos

config: plus

split: train

args: plus

metrics:

- name: Accuracy

type: accuracy

value: 0.9461290322580646

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-distilled-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2712

- Accuracy: 0.9461

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.2629 | 1.0 | 318 | 1.6048 | 0.7368 |

| 1.2437 | 2.0 | 636 | 0.8148 | 0.8565 |

| 0.6604 | 3.0 | 954 | 0.4768 | 0.9161 |

| 0.4054 | 4.0 | 1272 | 0.3548 | 0.9352 |

| 0.2987 | 5.0 | 1590 | 0.3084 | 0.9419 |

| 0.2549 | 6.0 | 1908 | 0.2909 | 0.9435 |

| 0.232 | 7.0 | 2226 | 0.2804 | 0.9458 |

| 0.221 | 8.0 | 2544 | 0.2749 | 0.9458 |

| 0.2145 | 9.0 | 2862 | 0.2722 | 0.9468 |

| 0.2112 | 10.0 | 3180 | 0.2712 | 0.9461 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.10.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Shamus/NLLB-600m-vie_Latn-to-eng_Latn

|

Shamus

| 2022-09-17T11:54:50Z | 107 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"m2m_100",

"text2text-generation",

"generated_from_trainer",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-17T03:28:00Z |

---

license: cc-by-nc-4.0

tags:

- generated_from_trainer

metrics:

- bleu

model-index:

- name: NLLB-600m-vie_Latn-to-eng_Latn

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# NLLB-600m-vie_Latn-to-eng_Latn

This model is a fine-tuned version of [facebook/nllb-200-distilled-600M](https://huggingface.co/facebook/nllb-200-distilled-600M) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1189

- Bleu: 36.6767

- Gen Len: 47.504

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 3

- eval_batch_size: 3

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 24

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|

| 1.9294 | 2.24 | 1000 | 1.5970 | 23.6201 | 48.1 |

| 1.4 | 4.47 | 2000 | 1.3216 | 28.9526 | 45.156 |

| 1.2071 | 6.71 | 3000 | 1.2245 | 32.5538 | 46.576 |

| 1.0893 | 8.95 | 4000 | 1.1720 | 34.265 | 46.052 |

| 1.0064 | 11.19 | 5000 | 1.1497 | 34.9249 | 46.508 |

| 0.9562 | 13.42 | 6000 | 1.1331 | 36.4619 | 47.244 |

| 0.9183 | 15.66 | 7000 | 1.1247 | 36.4723 | 47.26 |

| 0.8858 | 17.9 | 8000 | 1.1198 | 36.7058 | 47.376 |

| 0.8651 | 20.13 | 9000 | 1.1201 | 36.7897 | 47.496 |

| 0.8546 | 22.37 | 10000 | 1.1189 | 36.6767 | 47.504 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/uzumaki

|

sd-concepts-library

| 2022-09-17T11:40:47Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T11:40:41Z |

---

license: mit

---

### UZUMAKI on Stable Diffusion

This is the `<NARUTO>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

pnr-svc/distilbert-turkish-ner

|

pnr-svc

| 2022-09-17T11:09:26Z | 104 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"token-classification",

"generated_from_trainer",

"dataset:ner-tr",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-17T10:53:29Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- ner-tr

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilbert-turkish-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: ner-tr

type: ner-tr

config: NERTR

split: train

args: NERTR

metrics:

- name: Precision

type: precision

value: 1.0

- name: Recall

type: recall

value: 1.0

- name: F1

type: f1

value: 1.0

- name: Accuracy

type: accuracy

value: 1.0

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-turkish-ner

This model is a fine-tuned version of [dbmdz/distilbert-base-turkish-cased](https://huggingface.co/dbmdz/distilbert-base-turkish-cased) on the ner-tr dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0013

- Precision: 1.0

- Recall: 1.0

- F1: 1.0

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:---:|:--------:|

| 0.5744 | 1.0 | 529 | 0.0058 | 1.0 | 1.0 | 1.0 | 1.0 |

| 0.0094 | 2.0 | 1058 | 0.0017 | 1.0 | 1.0 | 1.0 | 1.0 |

| 0.0047 | 3.0 | 1587 | 0.0013 | 1.0 | 1.0 | 1.0 | 1.0 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

LanYiU/distilbert-base-uncased-finetuned-imdb

|

LanYiU

| 2022-09-17T11:04:50Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"fill-mask",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-17T10:55:23Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: distilbert-base-uncased-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 2.4738

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- distributed_type: tpu

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.7 | 1.0 | 157 | 2.4988 |

| 2.5821 | 2.0 | 314 | 2.4242 |

| 2.541 | 3.0 | 471 | 2.4371 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.9.0+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Eksperymenty/Reinforce-CartPole-v1

|

Eksperymenty

| 2022-09-17T10:09:00Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-17T10:07:54Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 445.10 +/- 56.96

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

Gxl/MINI

|

Gxl

| 2022-09-17T08:24:39Z | 0 | 0 | null |

[

"license:afl-3.0",

"region:us"

] | null | 2022-09-07T11:45:56Z |

---

license: afl-3.0

---

11

# 1

23

3224

342

## 324

432455

23445

455

#### 32424

34442

|

sd-concepts-library/ouroboros

|

sd-concepts-library

| 2022-09-17T02:34:14Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-17T02:34:09Z |

---

license: mit

---

### Ouroboros on Stable Diffusion

This is the `<ouroboros>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/dtv-pkmn

|

sd-concepts-library

| 2022-09-17T01:25:50Z | 0 | 5 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-13T23:08:57Z |

---

license: mit

---

### dtv-pkmn on Stable Diffusion

This is the `<dtv-pkm2>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

`"hyperdetailed fantasy (monster) (dragon-like) character on top of a rock in the style of <dtv-pkm2> . extremely detailed, amazing artwork with depth and realistic CINEMATIC lighting, matte painting"`

Here is the new concept you will be able to use as a `style`:

|

g30rv17ys/ddpm-geeve-dme-1000-128

|

g30rv17ys

| 2022-09-16T22:45:49Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:imagefolder",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-16T20:29:37Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: imagefolder

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-geeve-dme-1000-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `imagefolder` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/geevegeorge/ddpm-geeve-dme-1000-128/tensorboard?#scalars)

|

g30rv17ys/ddpm-geeve-cnv-1000-128

|

g30rv17ys

| 2022-09-16T22:44:56Z | 1 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:imagefolder",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-16T20:19:10Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: imagefolder

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-geeve-cnv-1000-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `imagefolder` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/geevegeorge/ddpm-geeve-cnv-1000-128/tensorboard?#scalars)

|

sd-concepts-library/jamie-hewlett-style

|

sd-concepts-library

| 2022-09-16T22:32:42Z | 0 | 14 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-16T22:32:38Z |

---

license: mit

---

### Jamie Hewlett Style on Stable Diffusion

This is the `<hewlett>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

rhiga/a2c-AntBulletEnv-v0

|

rhiga

| 2022-09-16T22:26:26Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-16T22:25:06Z |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- metrics:

- type: mean_reward

value: 1742.04 +/- 217.69

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of a **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

matemato/q-FrozenLake-v1-4x4-noSlippery

|

matemato

| 2022-09-16T22:04:18Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-16T22:04:10Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="matemato/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

sd-concepts-library/lugal-ki-en

|

sd-concepts-library

| 2022-09-16T19:32:47Z | 0 | 14 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-16T05:58:43Z |

---

title: Lugal Ki EN

emoji: 🪐

colorFrom: gray

colorTo: red

sdk: gradio

sdk_version: 3.3

app_file: app.py

pinned: false

license: mit

---

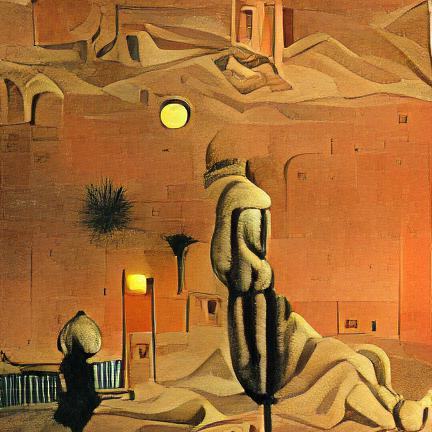

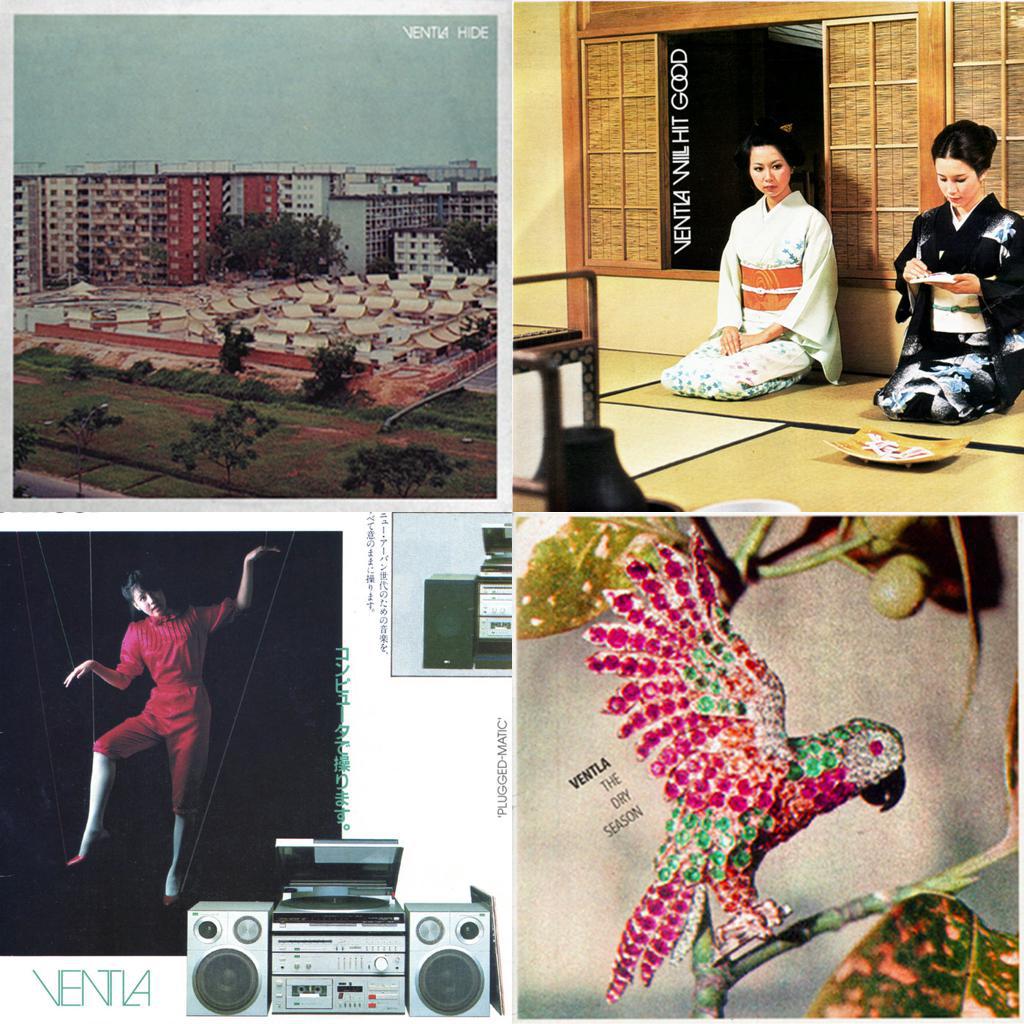

### Lugal ki en on Stable Diffusion

This is the `<lugal-ki-en>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/harmless-ai-house-style-1

|

sd-concepts-library

| 2022-09-16T19:21:04Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-16T19:20:03Z |

---

license: mit

---

### Harmless ai house style 1 on Stable Diffusion

This is the `<bee-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

,+The+computer+is+the+enemy+of+transhumanity,+detailed,+beautiful+masterpiece,+unreal+engine,+4k-0.024599999999999973.png)

,+The+computer+is+the+enemy+of+transhumanity,+detailed,+beautiful+masterpiece,+unreal+engine,+4k-0.02-3024.png)

|

sanchit-gandhi/wav2vec2-ctc-earnings22-baseline-5-gram

|

sanchit-gandhi

| 2022-09-16T18:50:03Z | 70 | 0 |

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-09-16T18:34:22Z |

Unrolled PT and FX weights of https://huggingface.co/sanchit-gandhi/flax-wav2vec2-ctc-earnings22-baseline/tree/main

|

MayaGalvez/bert-base-multilingual-cased-finetuned-pos

|

MayaGalvez

| 2022-09-16T18:35:53Z | 104 | 1 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-16T18:16:35Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-multilingual-cased-finetuned-pos

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-multilingual-cased-finetuned-pos

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1736

- Precision: 0.9499

- Recall: 0.9504

- F1: 0.9501

- Accuracy: 0.9551

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.7663 | 0.27 | 200 | 0.2047 | 0.9318 | 0.9312 | 0.9315 | 0.9388 |

| 0.5539 | 0.53 | 400 | 0.1815 | 0.9381 | 0.9404 | 0.9392 | 0.9460 |

| 0.5222 | 0.8 | 600 | 0.1787 | 0.9400 | 0.9424 | 0.9412 | 0.9468 |

| 0.5084 | 1.07 | 800 | 0.1591 | 0.9470 | 0.9463 | 0.9467 | 0.9519 |

| 0.4703 | 1.33 | 1000 | 0.1622 | 0.9456 | 0.9458 | 0.9457 | 0.9510 |

| 0.5005 | 1.6 | 1200 | 0.1666 | 0.9470 | 0.9464 | 0.9467 | 0.9519 |

| 0.4677 | 1.87 | 1400 | 0.1583 | 0.9483 | 0.9483 | 0.9483 | 0.9532 |

| 0.4704 | 2.13 | 1600 | 0.1635 | 0.9472 | 0.9475 | 0.9473 | 0.9528 |

| 0.4639 | 2.4 | 1800 | 0.1569 | 0.9475 | 0.9488 | 0.9482 | 0.9536 |

| 0.4627 | 2.67 | 2000 | 0.1605 | 0.9474 | 0.9478 | 0.9476 | 0.9527 |

| 0.4608 | 2.93 | 2200 | 0.1535 | 0.9485 | 0.9495 | 0.9490 | 0.9538 |

| 0.4306 | 3.2 | 2400 | 0.1646 | 0.9489 | 0.9487 | 0.9488 | 0.9536 |

| 0.4583 | 3.47 | 2600 | 0.1642 | 0.9488 | 0.9495 | 0.9491 | 0.9539 |

| 0.453 | 3.73 | 2800 | 0.1646 | 0.9498 | 0.9505 | 0.9501 | 0.9554 |

| 0.4347 | 4.0 | 3000 | 0.1629 | 0.9494 | 0.9504 | 0.9499 | 0.9552 |

| 0.4425 | 4.27 | 3200 | 0.1738 | 0.9495 | 0.9502 | 0.9498 | 0.9550 |

| 0.4335 | 4.53 | 3400 | 0.1733 | 0.9499 | 0.9506 | 0.9503 | 0.9550 |

| 0.4306 | 4.8 | 3600 | 0.1736 | 0.9499 | 0.9504 | 0.9501 | 0.9551 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

wyu1/FiD-NQ

|

wyu1

| 2022-09-16T16:34:33Z | 47 | 1 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"license:cc-by-4.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | null | 2022-08-18T22:15:17Z |

---

license: cc-by-4.0

---

# FiD model trained on NQ

-- This is the model checkpoint of FiD [2], based on the T5 large (with 770M parameters) and trained on the natural question (NQ) dataset [1].

-- Hyperparameters: 8 x 40GB A100 GPUs; batch size 8; AdamW; LR 3e-5; 50000 steps

References:

[1] Natural Questions: A Benchmark for Question Answering Research. TACL 2019.

[2] Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. EACL 2021.

## Model performance

We evaluate it on the NQ dataset, the EM score is 51.3 (0.1 lower than original performance reported in the paper).

<a href="https://huggingface.co/exbert/?model=bert-base-uncased">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a>

|

shamr9/autotrain-firsttransformersproject-1478954182

|

shamr9

| 2022-09-16T15:46:18Z | 1 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"summarization",

"ar",

"dataset:shamr9/autotrain-data-firsttransformersproject",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-09-16T05:53:23Z |

---

tags:

- autotrain

- summarization

language:

- ar

widget:

- text: "I love AutoTrain 🤗"

datasets:

- shamr9/autotrain-data-firsttransformersproject

co2_eq_emissions:

emissions: 5.113476145275885

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 1478954182

- CO2 Emissions (in grams): 5.1135

## Validation Metrics

- Loss: 0.534

- Rouge1: 4.247

- Rouge2: 0.522

- RougeL: 4.260

- RougeLsum: 4.241

- Gen Len: 18.928

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/shamr9/autotrain-firsttransformersproject-1478954182

```

|

sd-concepts-library/diaosu-toy

|

sd-concepts-library

| 2022-09-16T14:53:35Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-16T14:53:28Z |

---

license: mit

---

### diaosu toy on Stable Diffusion

This is the `<diaosu-toy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

bibekitani123/finetuning-sentiment-model-3000-samples

|

bibekitani123

| 2022-09-16T14:46:45Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-15T21:05:05Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: train

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.8666666666666667

- name: F1

type: f1

value: 0.8684210526315789

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3132

- Accuracy: 0.8667

- F1: 0.8684

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.22.0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

pyronear/rexnet1_5x

|

pyronear

| 2022-09-16T12:47:25Z | 64 | 0 |

transformers

|

[

"transformers",

"pytorch",

"onnx",

"image-classification",

"dataset:pyronear/openfire",

"arxiv:2007.00992",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-07-17T20:30:57Z |

---

license: apache-2.0

tags:

- image-classification

- pytorch

- onnx

datasets:

- pyronear/openfire

---

# ReXNet-1.5x model

Pretrained on a dataset for wildfire binary classification (soon to be shared). The ReXNet architecture was introduced in [this paper](https://arxiv.org/pdf/2007.00992.pdf).

## Model description

The core idea of the author is to add a customized Squeeze-Excitation layer in the residual blocks that will prevent channel redundancy.

## Installation

### Prerequisites

Python 3.6 (or higher) and [pip](https://pip.pypa.io/en/stable/)/[conda](https://docs.conda.io/en/latest/miniconda.html) are required to install PyroVision.

### Latest stable release

You can install the last stable release of the package using [pypi](https://pypi.org/project/pyrovision/) as follows:

```shell

pip install pyrovision

```

or using [conda](https://anaconda.org/pyronear/pyrovision):

```shell

conda install -c pyronear pyrovision

```

### Developer mode

Alternatively, if you wish to use the latest features of the project that haven't made their way to a release yet, you can install the package from source *(install [Git](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) first)*:

```shell

git clone https://github.com/pyronear/pyro-vision.git

pip install -e pyro-vision/.

```

## Usage instructions

```python

from PIL import Image

from torchvision.transforms import Compose, ConvertImageDtype, Normalize, PILToTensor, Resize

from torchvision.transforms.functional import InterpolationMode

from pyrovision.models import model_from_hf_hub

model = model_from_hf_hub("pyronear/rexnet1_5x").eval()

img = Image.open(path_to_an_image).convert("RGB")

# Preprocessing

config = model.default_cfg

transform = Compose([

Resize(config['input_shape'][1:], interpolation=InterpolationMode.BILINEAR),

PILToTensor(),

ConvertImageDtype(torch.float32),

Normalize(config['mean'], config['std'])

])

input_tensor = transform(img).unsqueeze(0)

# Inference

with torch.inference_mode():

output = model(input_tensor)

probs = output.squeeze(0).softmax(dim=0)

```

## Citation

Original paper

```bibtex

@article{DBLP:journals/corr/abs-2007-00992,

author = {Dongyoon Han and

Sangdoo Yun and

Byeongho Heo and

Young Joon Yoo},

title = {ReXNet: Diminishing Representational Bottleneck on Convolutional Neural

Network},

journal = {CoRR},

volume = {abs/2007.00992},

year = {2020},

url = {https://arxiv.org/abs/2007.00992},

eprinttype = {arXiv},

eprint = {2007.00992},

timestamp = {Mon, 06 Jul 2020 15:26:01 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2007-00992.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

Source of this implementation

```bibtex

@software{Fernandez_Holocron_2020,

author = {Fernandez, François-Guillaume},

month = {5},

title = {{Holocron}},

url = {https://github.com/frgfm/Holocron},

year = {2020}

}

```

|

pyronear/rexnet1_3x

|

pyronear

| 2022-09-16T12:46:31Z | 65 | 1 |

transformers

|

[

"transformers",

"pytorch",

"onnx",

"image-classification",

"dataset:pyronear/openfire",

"arxiv:2007.00992",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-07-17T20:30:22Z |

---

license: apache-2.0

tags:

- image-classification

- pytorch

- onnx

datasets:

- pyronear/openfire

---

# ReXNet-1.3x model

Pretrained on a dataset for wildfire binary classification (soon to be shared). The ReXNet architecture was introduced in [this paper](https://arxiv.org/pdf/2007.00992.pdf).

## Model description

The core idea of the author is to add a customized Squeeze-Excitation layer in the residual blocks that will prevent channel redundancy.

## Installation

### Prerequisites

Python 3.6 (or higher) and [pip](https://pip.pypa.io/en/stable/)/[conda](https://docs.conda.io/en/latest/miniconda.html) are required to install PyroVision.

### Latest stable release

You can install the last stable release of the package using [pypi](https://pypi.org/project/pyrovision/) as follows:

```shell

pip install pyrovision

```

or using [conda](https://anaconda.org/pyronear/pyrovision):

```shell

conda install -c pyronear pyrovision

```

### Developer mode

Alternatively, if you wish to use the latest features of the project that haven't made their way to a release yet, you can install the package from source *(install [Git](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) first)*:

```shell

git clone https://github.com/pyronear/pyro-vision.git

pip install -e pyro-vision/.

```

## Usage instructions

```python

from PIL import Image

from torchvision.transforms import Compose, ConvertImageDtype, Normalize, PILToTensor, Resize

from torchvision.transforms.functional import InterpolationMode

from pyrovision.models import model_from_hf_hub

model = model_from_hf_hub("pyronear/rexnet1_3x").eval()

img = Image.open(path_to_an_image).convert("RGB")

# Preprocessing

config = model.default_cfg

transform = Compose([

Resize(config['input_shape'][1:], interpolation=InterpolationMode.BILINEAR),

PILToTensor(),

ConvertImageDtype(torch.float32),

Normalize(config['mean'], config['std'])

])

input_tensor = transform(img).unsqueeze(0)

# Inference

with torch.inference_mode():

output = model(input_tensor)

probs = output.squeeze(0).softmax(dim=0)

```

## Citation

Original paper

```bibtex

@article{DBLP:journals/corr/abs-2007-00992,

author = {Dongyoon Han and

Sangdoo Yun and

Byeongho Heo and

Young Joon Yoo},

title = {ReXNet: Diminishing Representational Bottleneck on Convolutional Neural

Network},

journal = {CoRR},

volume = {abs/2007.00992},

year = {2020},

url = {https://arxiv.org/abs/2007.00992},

eprinttype = {arXiv},

eprint = {2007.00992},

timestamp = {Mon, 06 Jul 2020 15:26:01 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2007-00992.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

Source of this implementation

```bibtex

@software{Fernandez_Holocron_2020,

author = {Fernandez, François-Guillaume},

month = {5},

title = {{Holocron}},

url = {https://github.com/frgfm/Holocron},

year = {2020}

}

```

|

test1234678/distilbert-base-uncased-finetuned-clinc

|

test1234678

| 2022-09-16T12:22:33Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",