modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-13 00:37:47

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 555

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-13 00:35:18

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

M-CLIP/Swedish-2M

|

M-CLIP

| 2022-09-15T10:46:07Z | 154 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"bert",

"feature-extraction",

"sv",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-03-02T23:29:04Z |

---

language: sv

---

<br />

<p align="center">

<h1 align="center">Swe-CLIP 2M</h1>

<p align="center">

<a href="https://github.com/FreddeFrallan/Multilingual-CLIP/tree/main/Model%20Cards/Swe-CLIP%202M">Github Model Card</a>

</p>

</p>

## Usage

To use this model along with the original CLIP vision encoder you need to download the code and additional linear weights from the [Multilingual-CLIP Github](https://github.com/FreddeFrallan/Multilingual-CLIP).

Once this is done, you can load and use the model with the following code

```python

from src import multilingual_clip

model = multilingual_clip.load_model('Swe-CLIP-500k')

embeddings = model(['Älgen är skogens konung!', 'Alla isbjörnar är vänsterhänta'])

print(embeddings.shape)

# Yields: torch.Size([2, 640])

```

<!-- ABOUT THE PROJECT -->

## About

A [KB/Bert-Swedish-Cased](https://huggingface.co/KB/bert-base-swedish-cased) tuned to match the embedding space of the CLIP text encoder which accompanies the Res50x4 vision encoder. <br>

Training data pairs was generated by sampling 2 Million sentences from the combined descriptions of [GCC](https://ai.google.com/research/ConceptualCaptions/) + [MSCOCO](https://cocodataset.org/#home) + [VizWiz](https://vizwiz.org/tasks-and-datasets/image-captioning/), and translating them into Swedish.

All translation was done using the [Huggingface Opus Model](https://huggingface.co/Helsinki-NLP/opus-mt-en-sv), which seemingly procudes higher quality translations than relying on the [AWS translate service](https://aws.amazon.com/translate/).

|

M-CLIP/XLM-Roberta-Large-Vit-B-16Plus

|

M-CLIP

| 2022-09-15T10:45:56Z | 57,956 | 27 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"multilingual",

"af",

"sq",

"am",

"ar",

"az",

"bn",

"bs",

"bg",

"ca",

"zh",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fr",

"de",

"el",

"hi",

"hu",

"is",

"id",

"it",

"ja",

"mk",

"ml",

"mr",

"pl",

"pt",

"ro",

"ru",

"sr",

"sl",

"es",

"sw",

"sv",

"tl",

"te",

"tr",

"tk",

"uk",

"ur",

"ug",

"uz",

"vi",

"xh",

"endpoints_compatible",

"region:us"

] | null | 2022-05-30T21:33:14Z |

---

language:

- multilingual

- af

- sq

- am

- ar

- az

- bn

- bs

- bg

- ca

- zh

- hr

- cs

- da

- nl

- en

- et

- fr

- de

- el

- hi

- hu

- is

- id

- it

- ja

- mk

- ml

- mr

- pl

- pt

- ro

- ru

- sr

- sl

- es

- sw

- sv

- tl

- te

- tr

- tk

- uk

- ur

- ug

- uz

- vi

- xh

---

## Multilingual-clip: XLM-Roberta-Large-Vit-B-16Plus

Multilingual-CLIP extends OpenAI's English text encoders to multiple other languages. This model *only* contains the multilingual text encoder. The corresponding image model `Vit-B-16Plus` can be retrieved via instructions found on `mlfoundations` [open_clip repository on Github](https://github.com/mlfoundations/open_clip). We provide a usage example below.

## Requirements

To use both the multilingual text encoder and corresponding image encoder, we need to install the packages [`multilingual-clip`](https://github.com/FreddeFrallan/Multilingual-CLIP) and [`open_clip_torch`](https://github.com/mlfoundations/open_clip).

```

pip install multilingual-clip

pip install open_clip_torch

```

## Usage

Extracting embeddings from the text encoder can be done in the following way:

```python

from multilingual_clip import pt_multilingual_clip

import transformers

texts = [

'Three blind horses listening to Mozart.',

'Älgen är skogens konung!',

'Wie leben Eisbären in der Antarktis?',

'Вы знали, что все белые медведи левши?'

]

model_name = 'M-CLIP/XLM-Roberta-Large-Vit-B-16Plus'

# Load Model & Tokenizer

model = pt_multilingual_clip.MultilingualCLIP.from_pretrained(model_name)

tokenizer = transformers.AutoTokenizer.from_pretrained(model_name)

embeddings = model.forward(texts, tokenizer)

print("Text features shape:", embeddings.shape)

```

Extracting embeddings from the corresponding image encoder:

```python

import torch

import open_clip

import requests

from PIL import Image

device = "cuda" if torch.cuda.is_available() else "cpu"

model, _, preprocess = open_clip.create_model_and_transforms('ViT-B-16-plus-240', pretrained="laion400m_e32")

model.to(device)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

image = preprocess(image).unsqueeze(0).to(device)

with torch.no_grad():

image_features = model.encode_image(image)

print("Image features shape:", image_features.shape)

```

## Evaluation results

None of the M-CLIP models have been extensivly evaluated, but testing them on Txt2Img retrieval on the humanly translated MS-COCO dataset, we see the following **R@10** results:

| Name | En | De | Es | Fr | Zh | It | Pl | Ko | Ru | Tr | Jp |

| ----------------------------------|:-----: |:-----: |:-----: |:-----: | :-----: |:-----: |:-----: |:-----: |:-----: |:-----: |:-----: |

| [OpenAI CLIP Vit-B/32](https://github.com/openai/CLIP)| 90.3 | - | - | - | - | - | - | - | - | - | - |

| [OpenAI CLIP Vit-L/14](https://github.com/openai/CLIP)| 91.8 | - | - | - | - | - | - | - | - | - | - |

| [OpenCLIP ViT-B-16+-](https://github.com/openai/CLIP)| 94.3 | - | - | - | - | - | - | - | - | - | - |

| [LABSE Vit-L/14](https://huggingface.co/M-CLIP/LABSE-Vit-L-14)| 91.6 | 89.6 | 89.5 | 89.9 | 88.9 | 90.1 | 89.8 | 80.8 | 85.5 | 89.8 | 73.9 |

| [XLM-R Large Vit-B/32](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-B-32)| 91.8 | 88.7 | 89.1 | 89.4 | 89.3 | 89.8| 91.4 | 82.1 | 86.1 | 88.8 | 81.0 |

| [XLM-R Vit-L/14](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-L-14)| 92.4 | 90.6 | 91.0 | 90.0 | 89.7 | 91.1 | 91.3 | 85.2 | 85.8 | 90.3 | 81.9 |

| [XLM-R Large Vit-B/16+](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-B-16Plus)| **95.0** | **93.0** | **93.6** | **93.1** | **94.0** | **93.1** | **94.4** | **89.0** | **90.0** | **93.0** | **84.2** |

## Training/Model details

Further details about the model training and data can be found in the [model card](https://github.com/FreddeFrallan/Multilingual-CLIP/blob/main/larger_mclip.md).

|

M-CLIP/XLM-Roberta-Large-Vit-B-32

|

M-CLIP

| 2022-09-15T10:45:49Z | 13,427 | 15 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"M-CLIP",

"multilingual",

"af",

"sq",

"am",

"ar",

"az",

"bn",

"bs",

"bg",

"ca",

"zh",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fr",

"de",

"el",

"hi",

"hu",

"is",

"id",

"it",

"ja",

"mk",

"ml",

"mr",

"pl",

"pt",

"ro",

"ru",

"sr",

"sl",

"es",

"sw",

"sv",

"tl",

"te",

"tr",

"tk",

"uk",

"ur",

"ug",

"uz",

"vi",

"xh",

"endpoints_compatible",

"region:us"

] | null | 2022-05-31T09:50:54Z |

---

language:

- multilingual

- af

- sq

- am

- ar

- az

- bn

- bs

- bg

- ca

- zh

- hr

- cs

- da

- nl

- en

- et

- fr

- de

- el

- hi

- hu

- is

- id

- it

- ja

- mk

- ml

- mr

- pl

- pt

- ro

- ru

- sr

- sl

- es

- sw

- sv

- tl

- te

- tr

- tk

- uk

- ur

- ug

- uz

- vi

- xh

---

## Multilingual-clip: XLM-Roberta-Large-Vit-B-32

Multilingual-CLIP extends OpenAI's English text encoders to multiple other languages. This model *only* contains the multilingual text encoder. The corresponding image model `ViT-B-32` can be retrieved via instructions found on OpenAI's [CLIP repository on Github](https://github.com/openai/CLIP). We provide a usage example below.

## Requirements

To use both the multilingual text encoder and corresponding image encoder, we need to install the packages [`multilingual-clip`](https://github.com/FreddeFrallan/Multilingual-CLIP) and [`clip`](https://github.com/openai/CLIP).

```

pip install multilingual-clip

pip install git+https://github.com/openai/CLIP.git

```

## Usage

Extracting embeddings from the text encoder can be done in the following way:

```python

from multilingual_clip import pt_multilingual_clip

import transformers

texts = [

'Three blind horses listening to Mozart.',

'Älgen är skogens konung!',

'Wie leben Eisbären in der Antarktis?',

'Вы знали, что все белые медведи левши?'

]

model_name = 'M-CLIP/XLM-Roberta-Large-Vit-B-32'

# Load Model & Tokenizer

model = pt_multilingual_clip.MultilingualCLIP.from_pretrained(model_name)

tokenizer = transformers.AutoTokenizer.from_pretrained(model_name)

embeddings = model.forward(texts, tokenizer)

print("Text features shape:", embeddings.shape)

```

Extracting embeddings from the corresponding image encoder:

```python

import torch

import clip

import requests

from PIL import Image

device = "cuda" if torch.cuda.is_available() else "cpu"

model, preprocess = clip.load("ViT-B/32", device=device)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

image = preprocess(image).unsqueeze(0).to(device)

with torch.no_grad():

image_features = model.encode_image(image)

print("Image features shape:", image_features.shape)

```

## Evaluation results

None of the M-CLIP models have been extensivly evaluated, but testing them on Txt2Img retrieval on the humanly translated MS-COCO dataset, we see the following **R@10** results:

| Name | En | De | Es | Fr | Zh | It | Pl | Ko | Ru | Tr | Jp |

| ----------------------------------|:-----: |:-----: |:-----: |:-----: | :-----: |:-----: |:-----: |:-----: |:-----: |:-----: |:-----: |

| [OpenAI CLIP Vit-B/32](https://github.com/openai/CLIP)| 90.3 | - | - | - | - | - | - | - | - | - | - |

| [OpenAI CLIP Vit-L/14](https://github.com/openai/CLIP)| 91.8 | - | - | - | - | - | - | - | - | - | - |

| [OpenCLIP ViT-B-16+-](https://github.com/openai/CLIP)| 94.3 | - | - | - | - | - | - | - | - | - | - |

| [LABSE Vit-L/14](https://huggingface.co/M-CLIP/LABSE-Vit-L-14)| 91.6 | 89.6 | 89.5 | 89.9 | 88.9 | 90.1 | 89.8 | 80.8 | 85.5 | 89.8 | 73.9 |

| [XLM-R Large Vit-B/32](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-B-32)| 91.8 | 88.7 | 89.1 | 89.4 | 89.3 | 89.8| 91.4 | 82.1 | 86.1 | 88.8 | 81.0 |

| [XLM-R Vit-L/14](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-L-14)| 92.4 | 90.6 | 91.0 | 90.0 | 89.7 | 91.1 | 91.3 | 85.2 | 85.8 | 90.3 | 81.9 |

| [XLM-R Large Vit-B/16+](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-B-16Plus)| **95.0** | **93.0** | **93.6** | **93.1** | **94.0** | **93.1** | **94.4** | **89.0** | **90.0** | **93.0** | **84.2** |

## Training/Model details

Further details about the model training and data can be found in the [model card](https://github.com/FreddeFrallan/Multilingual-CLIP/blob/main/larger_mclip.md).

|

M-CLIP/XLM-Roberta-Large-Vit-L-14

|

M-CLIP

| 2022-09-15T10:44:59Z | 27,133 | 14 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"multilingual",

"af",

"sq",

"am",

"ar",

"az",

"bn",

"bs",

"bg",

"ca",

"zh",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fr",

"de",

"el",

"hi",

"hu",

"is",

"id",

"it",

"ja",

"mk",

"ml",

"mr",

"pl",

"pt",

"ro",

"ru",

"sr",

"sl",

"es",

"sw",

"sv",

"tl",

"te",

"tr",

"tk",

"uk",

"ur",

"ug",

"uz",

"vi",

"xh",

"endpoints_compatible",

"region:us"

] | null | 2022-05-30T14:35:41Z |

---

language:

- multilingual

- af

- sq

- am

- ar

- az

- bn

- bs

- bg

- ca

- zh

- hr

- cs

- da

- nl

- en

- et

- fr

- de

- el

- hi

- hu

- is

- id

- it

- ja

- mk

- ml

- mr

- pl

- pt

- ro

- ru

- sr

- sl

- es

- sw

- sv

- tl

- te

- tr

- tk

- uk

- ur

- ug

- uz

- vi

- xh

---

## Multilingual-clip: XLM-Roberta-Large-Vit-L-14

Multilingual-CLIP extends OpenAI's English text encoders to multiple other languages. This model *only* contains the multilingual text encoder. The corresponding image model `ViT-L-14` can be retrieved via instructions found on OpenAI's [CLIP repository on Github](https://github.com/openai/CLIP). We provide a usage example below.

## Requirements

To use both the multilingual text encoder and corresponding image encoder, we need to install the packages [`multilingual-clip`](https://github.com/FreddeFrallan/Multilingual-CLIP) and [`clip`](https://github.com/openai/CLIP).

```

pip install multilingual-clip

pip install git+https://github.com/openai/CLIP.git

```

## Usage

Extracting embeddings from the text encoder can be done in the following way:

```python

from multilingual_clip import pt_multilingual_clip

import transformers

texts = [

'Three blind horses listening to Mozart.',

'Älgen är skogens konung!',

'Wie leben Eisbären in der Antarktis?',

'Вы знали, что все белые медведи левши?'

]

model_name = 'M-CLIP/XLM-Roberta-Large-Vit-L-14'

# Load Model & Tokenizer

model = pt_multilingual_clip.MultilingualCLIP.from_pretrained(model_name)

tokenizer = transformers.AutoTokenizer.from_pretrained(model_name)

embeddings = model.forward(texts, tokenizer)

print("Text features shape:", embeddings.shape)

```

Extracting embeddings from the corresponding image encoder:

```python

import torch

import clip

import requests

from PIL import Image

device = "cuda" if torch.cuda.is_available() else "cpu"

model, preprocess = clip.load("ViT-L/14", device=device)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

image = preprocess(image).unsqueeze(0).to(device)

with torch.no_grad():

image_features = model.encode_image(image)

print("Image features shape:", image_features.shape)

```

## Evaluation results

None of the M-CLIP models have been extensivly evaluated, but testing them on Txt2Img retrieval on the humanly translated MS-COCO dataset, we see the following **R@10** results:

| Name | En | De | Es | Fr | Zh | It | Pl | Ko | Ru | Tr | Jp |

| ----------------------------------|:-----: |:-----: |:-----: |:-----: | :-----: |:-----: |:-----: |:-----: |:-----: |:-----: |:-----: |

| [OpenAI CLIP Vit-B/32](https://github.com/openai/CLIP)| 90.3 | - | - | - | - | - | - | - | - | - | - |

| [OpenAI CLIP Vit-L/14](https://github.com/openai/CLIP)| 91.8 | - | - | - | - | - | - | - | - | - | - |

| [OpenCLIP ViT-B-16+-](https://github.com/openai/CLIP)| 94.3 | - | - | - | - | - | - | - | - | - | - |

| [LABSE Vit-L/14](https://huggingface.co/M-CLIP/LABSE-Vit-L-14)| 91.6 | 89.6 | 89.5 | 89.9 | 88.9 | 90.1 | 89.8 | 80.8 | 85.5 | 89.8 | 73.9 |

| [XLM-R Large Vit-B/32](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-B-32)| 91.8 | 88.7 | 89.1 | 89.4 | 89.3 | 89.8| 91.4 | 82.1 | 86.1 | 88.8 | 81.0 |

| [XLM-R Vit-L/14](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-L-14)| 92.4 | 90.6 | 91.0 | 90.0 | 89.7 | 91.1 | 91.3 | 85.2 | 85.8 | 90.3 | 81.9 |

| [XLM-R Large Vit-B/16+](https://huggingface.co/M-CLIP/XLM-Roberta-Large-Vit-B-16Plus)| **95.0** | **93.0** | **93.6** | **93.1** | **94.0** | **93.1** | **94.4** | **89.0** | **90.0** | **93.0** | **84.2** |

## Training/Model details

Further details about the model training and data can be found in the [model card](https://github.com/FreddeFrallan/Multilingual-CLIP/blob/main/larger_mclip.md).

|

mpapucci/it5-age-classification-tag-it

|

mpapucci

| 2022-09-15T10:31:04Z | 111 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"T5",

"Text Classification",

"it",

"dataset:TAG-IT",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-01T20:24:09Z |

---

language:

- it

tags:

- T5

- Text Classification

datasets:

- TAG-IT

---

Write an italian sentence with the prefix "Classifica Età: " to get an age classification of the sentence.

The dataset used for the task is: [TAG-IT](https://sites.google.com/view/tag-it-2020/).

The model is a fine tuned version of [IT5-base](https://huggingface.co/gsarti/it5-base) of Sarti and Nissim.

|

sd-concepts-library/cow-uwu

|

sd-concepts-library

| 2022-09-15T09:36:06Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T09:36:02Z |

---

license: mit

---

### cow uwu on Stable Diffusion

This is the `<cow-uwu>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

oeg/esT5s-small

|

oeg

| 2022-09-15T09:31:00Z | 0 | 0 | null |

[

"license:cc-by-nc-4.0",

"region:us"

] | null | 2022-09-15T08:50:26Z |

---

license: cc-by-nc-4.0

---

This is the small version (274MB) of the summarization model for the Spanish language presented in the SEMANTiCS 2022 conference (paper entitled "esT5s: A Spanish Model for Text Summarization"). This model was created in less than 1 hour (using a single GPU, specifically an NVIDIA v100 16GB) from the multilingual T5 model using the XL-Sum dataset. It achieves a ROUGE-1 value of 22.21 (mT5 achieves 26.21 after a 96h training using 4 GPUs), ROUGE-2 5.28 (mT5 achieves 8.74), and ROUGE-l 17.44 (mT5 achieves 21.06).

|

sd-concepts-library/renalla

|

sd-concepts-library

| 2022-09-15T09:23:43Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T09:23:40Z |

---

license: mit

---

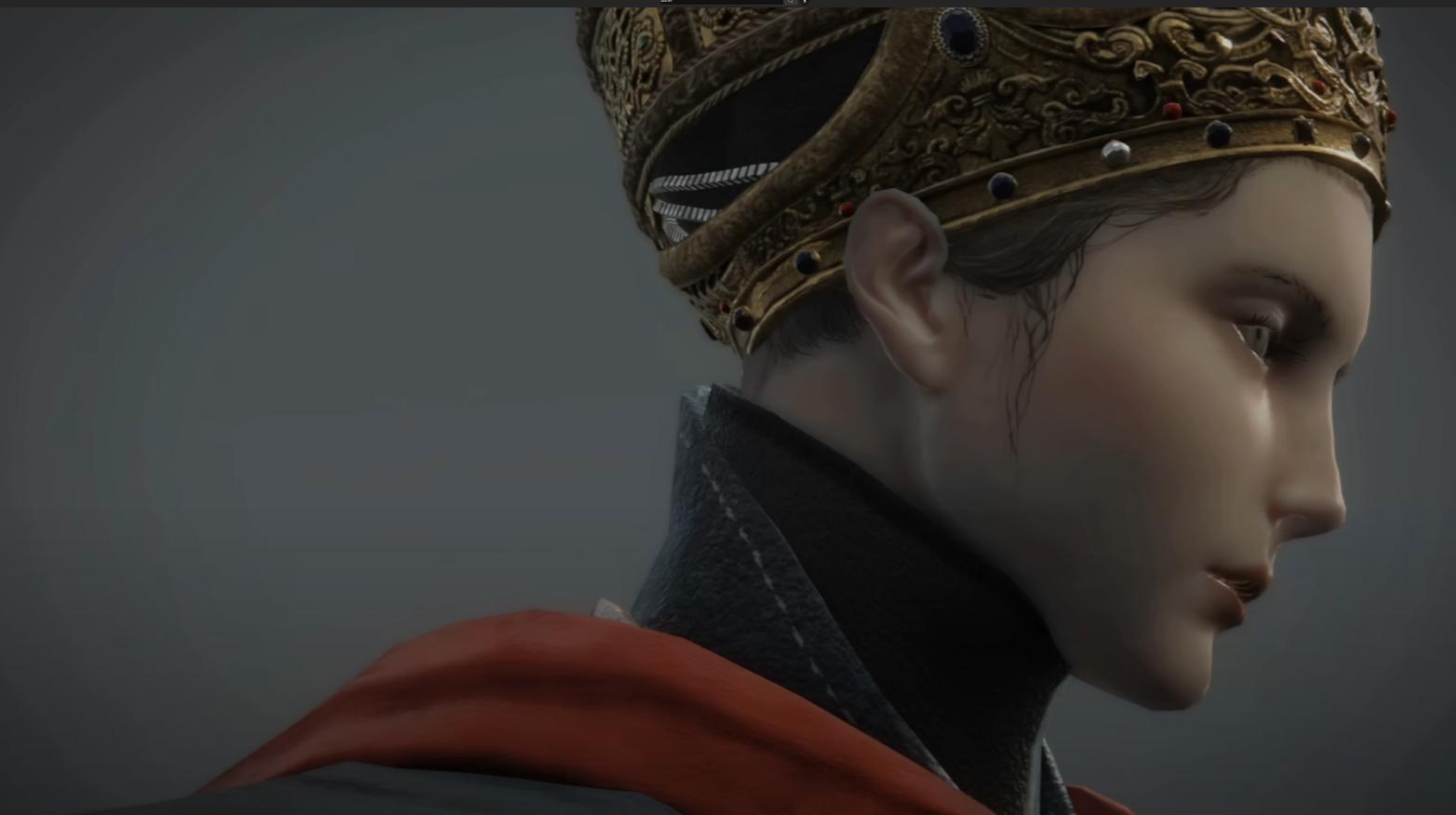

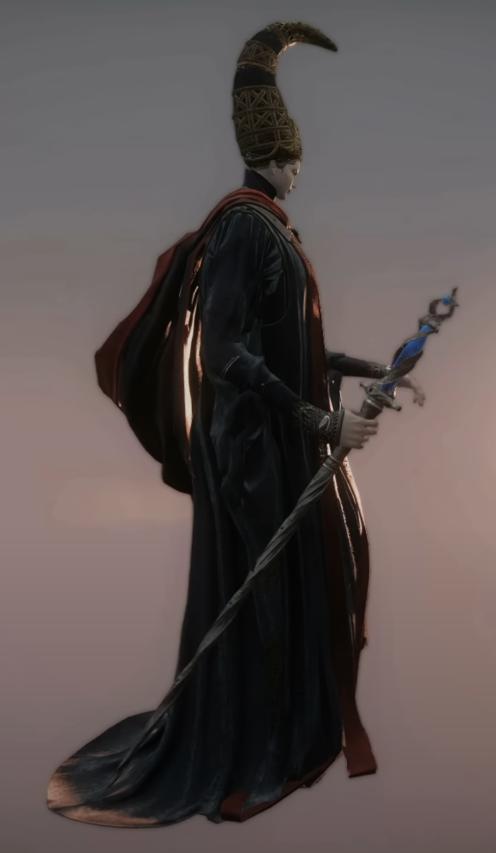

### Renalla on Stable Diffusion

This is the `Renalla` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

yandex/RuLeanALBERT

|

yandex

| 2022-09-15T09:16:42Z | 36 | 32 |

transformers

|

[

"transformers",

"lean_albert",

"fill-mask",

"ru",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-15T06:41:21Z |

---

language: ru

license: apache-2.0

---

RuLeanALBERT is a pretrained masked language model for the Russian language using a memory-efficient architecture.

Read more about the model in [this blog post](https://habr.com/ru/company/yandex/blog/688234/) (in Russian).

See its implementation, as well as the pretraining and finetuning code, at [https://github.com/yandex-research/RuLeanALBERT](https://github.com/yandex-research/RuLeanALBERT).

|

sd-concepts-library/style-of-marc-allante

|

sd-concepts-library

| 2022-09-15T07:48:41Z | 0 | 47 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-11T01:25:10Z |

---

license: mit

---

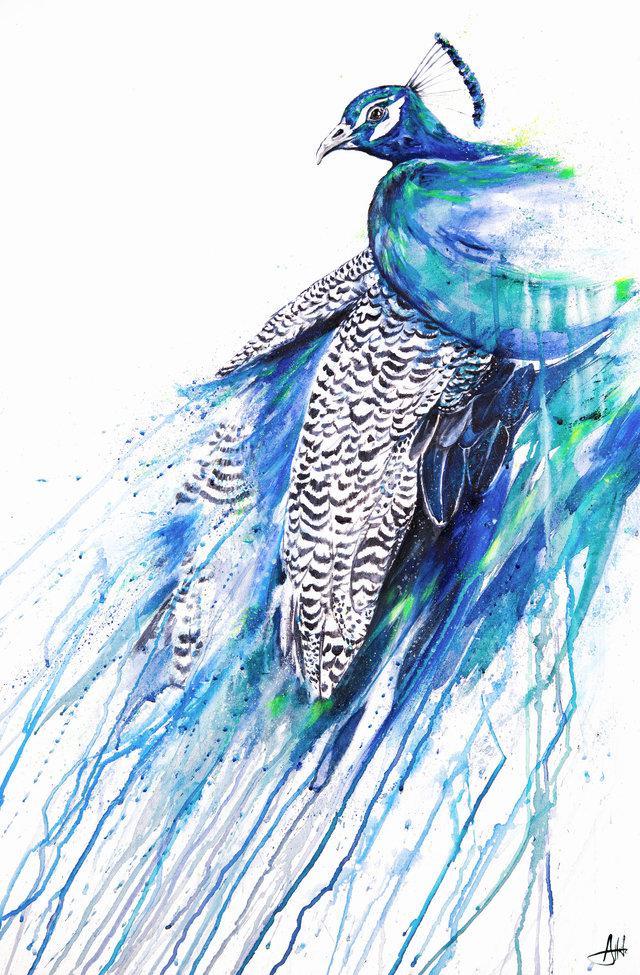

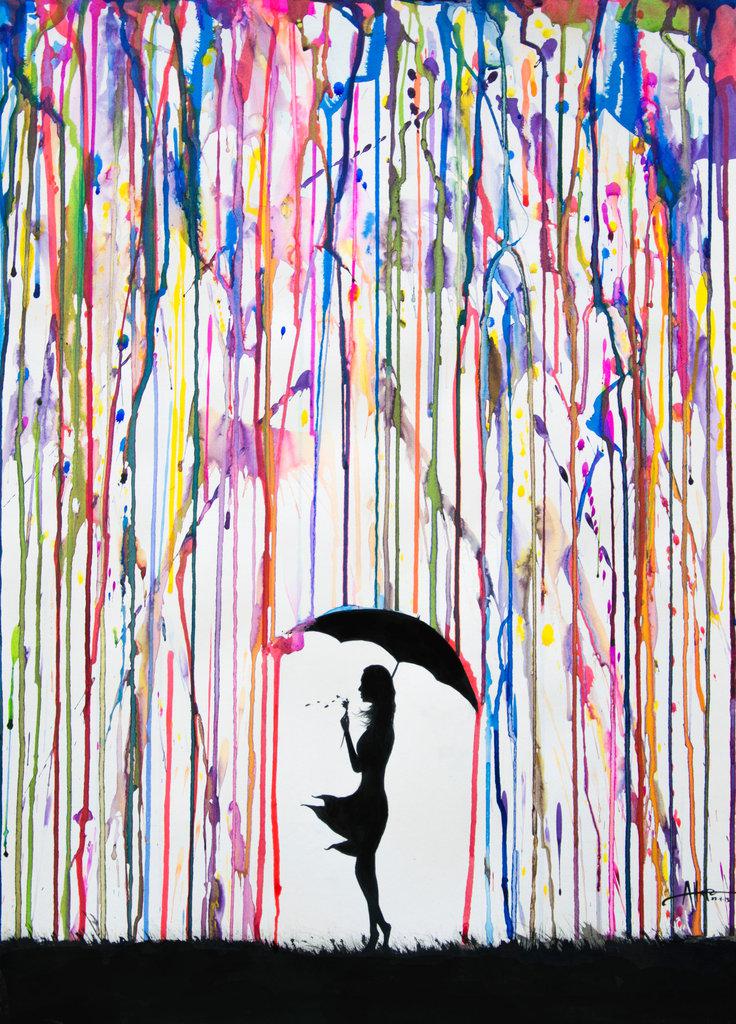

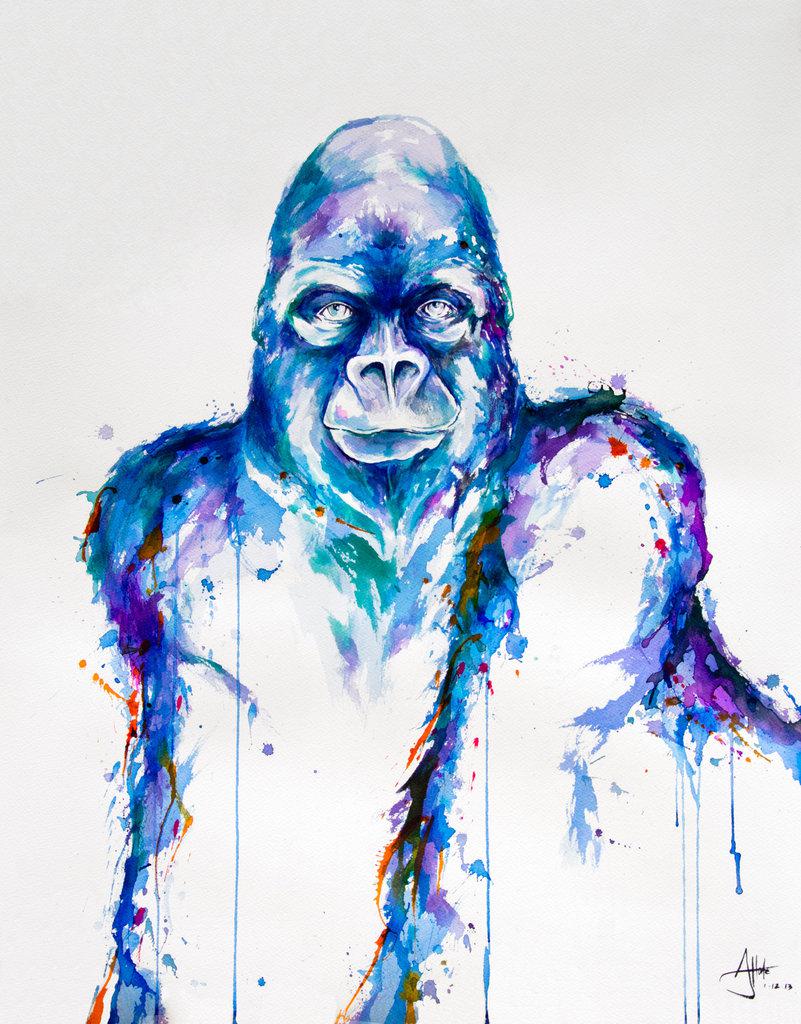

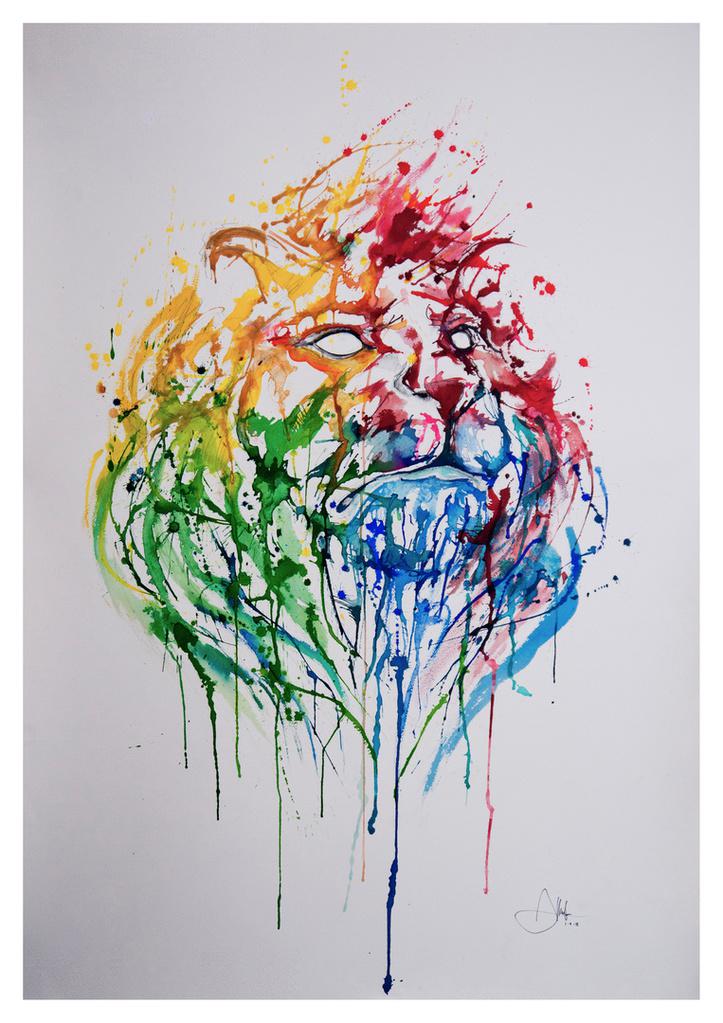

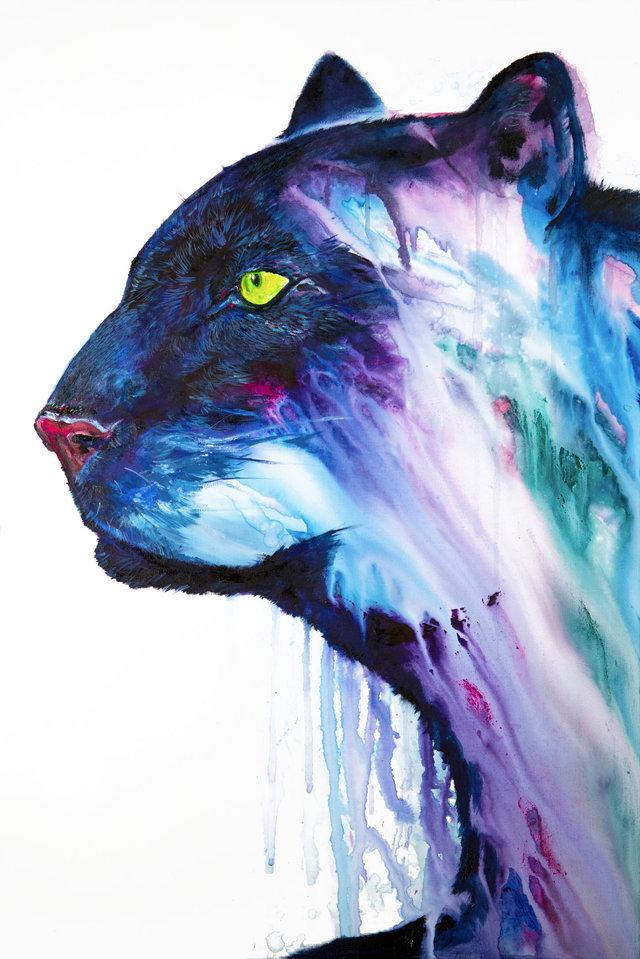

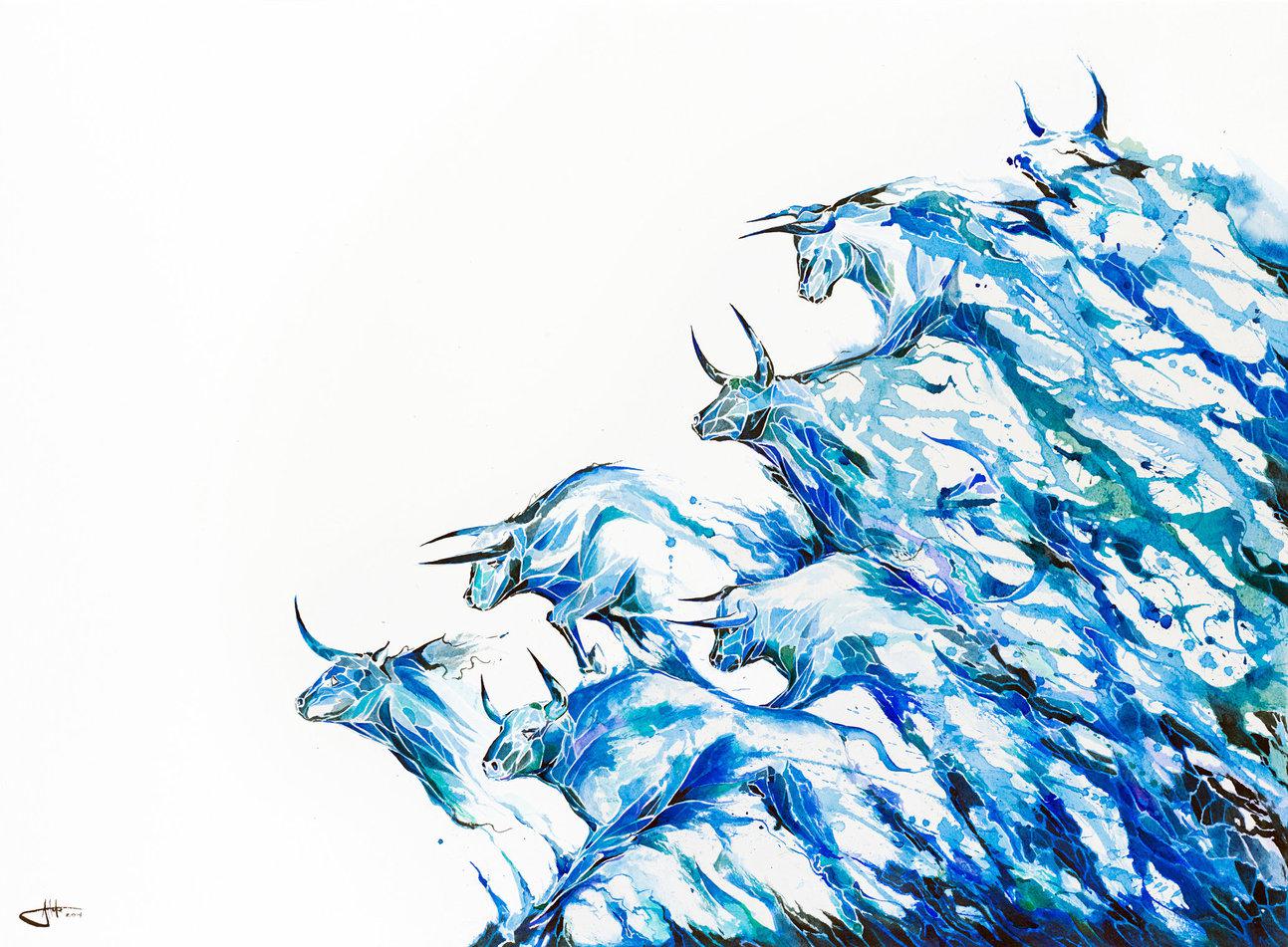

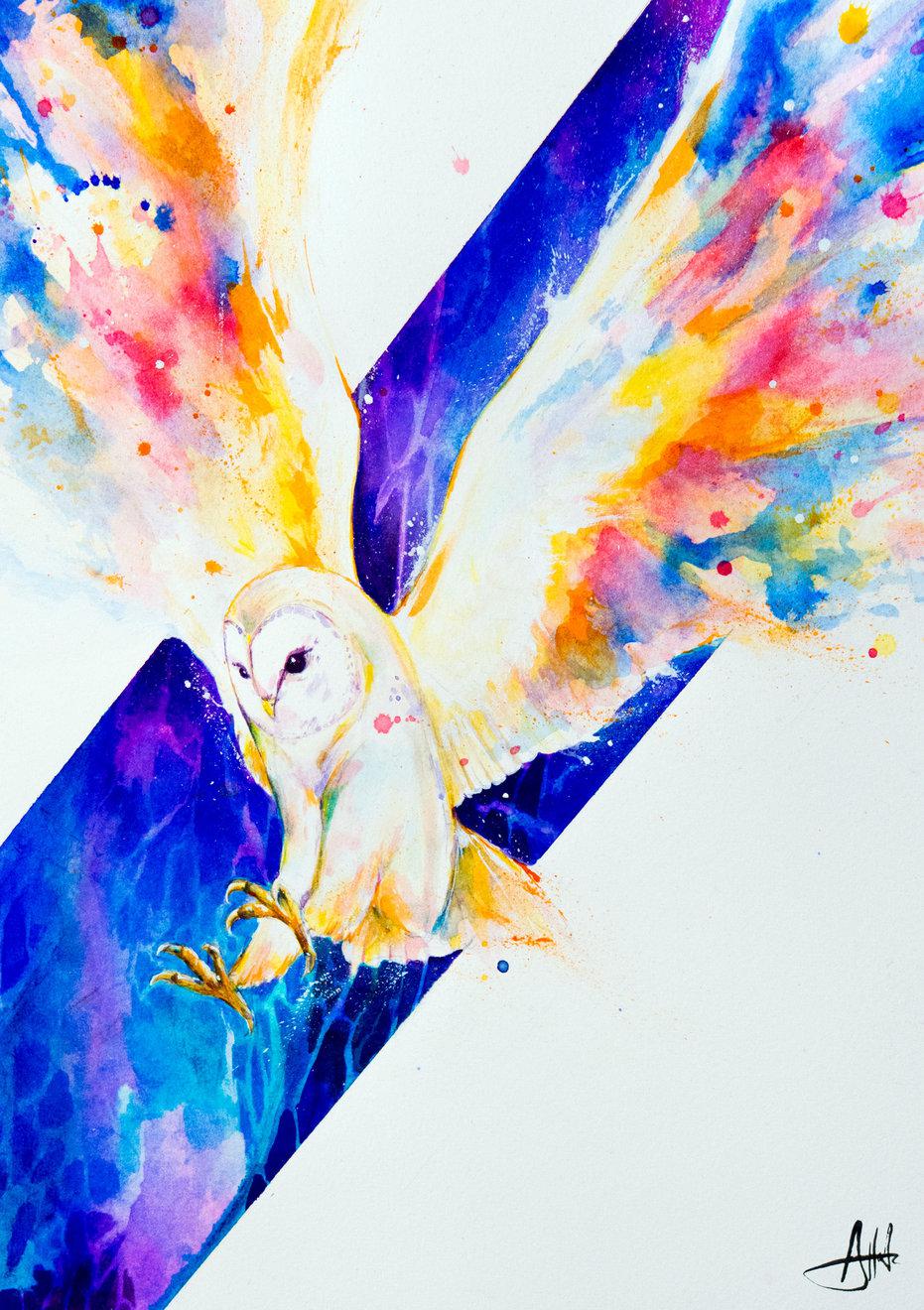

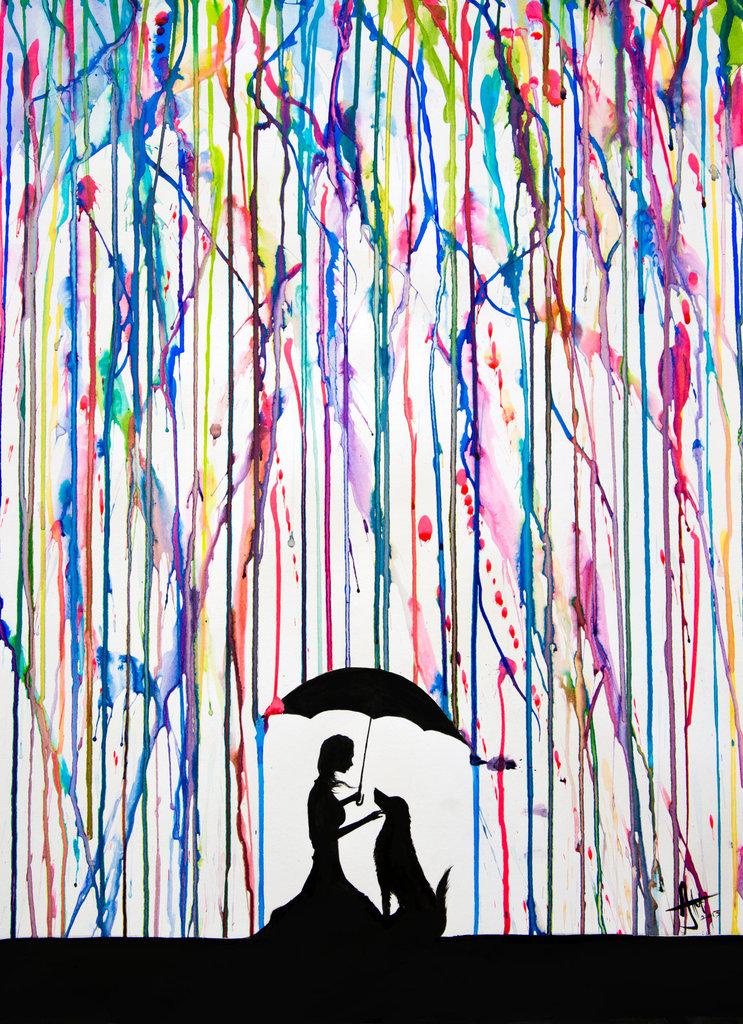

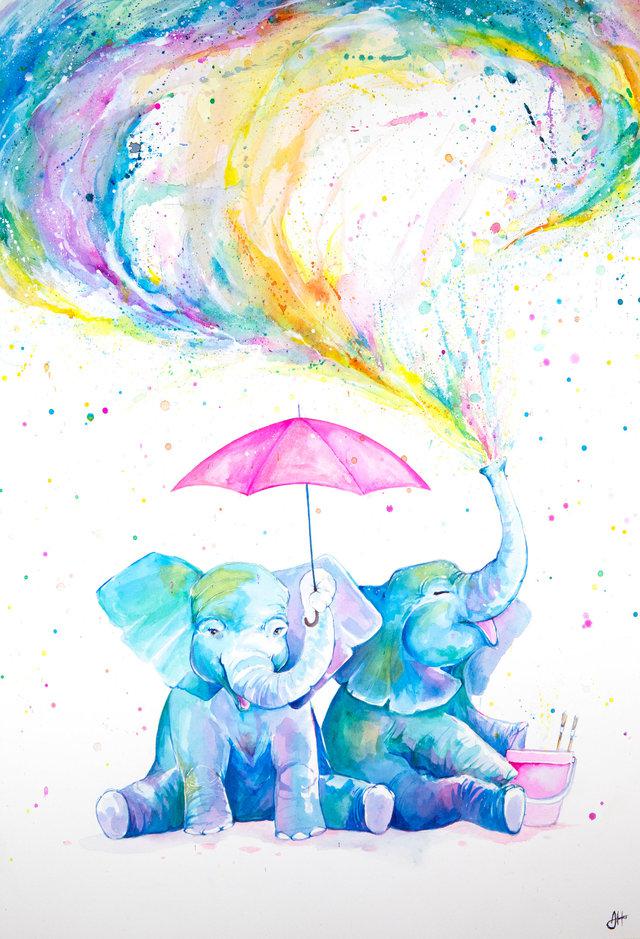

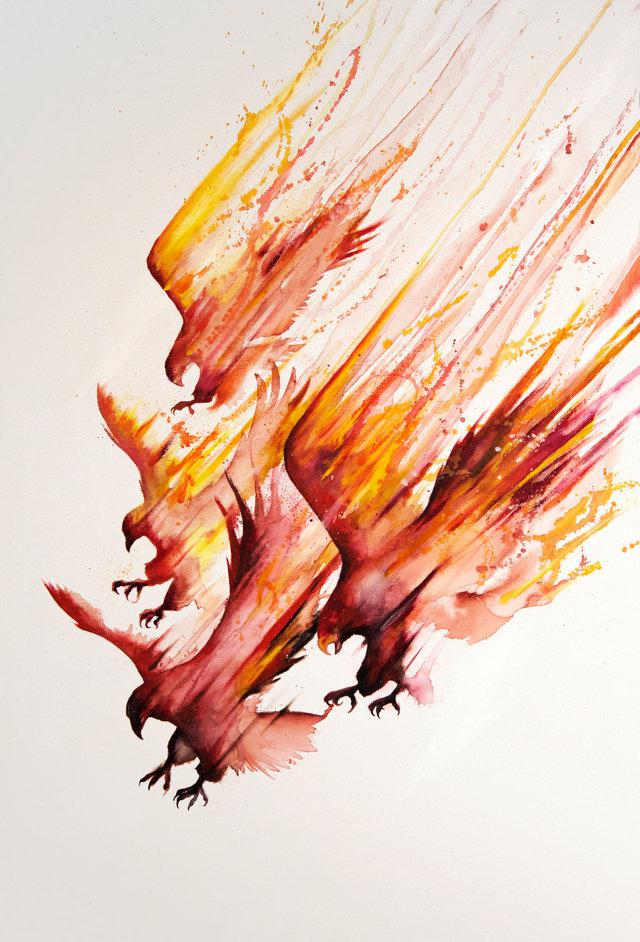

### Style-of-Marc-Allante on Stable Diffusion

This is the `<Marc_Allante>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

racheltong/wav2vec2-large-xlsr-chinese

|

racheltong

| 2022-09-15T07:36:59Z | 109 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-09-15T05:46:49Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xlsr-chinese

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xlsr-chinese

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 3.3216

- Cer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 15

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Cer |

|:-------------:|:-----:|:----:|:---------------:|:---:|

| 16.1908 | 7.83 | 400 | 3.3216 | 1.0 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

lxj616/stable-diffusion-cn-poster

|

lxj616

| 2022-09-15T06:41:48Z | 0 | 9 | null |

[

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2022-09-15T05:31:45Z |

---

license: bigscience-bloom-rail-1.0

---

## Stable Diffusion With Chinese Characteristics

A finetuned stable diffusion on early chinese posters of farmers and workers

The meme text in sample are added manually afterwards

This prompt generate posters as original

```

a drawing of people in style of chinese propaganda poster

```

<img src="https://huggingface.co/lxj616/stable-diffusion-cn-poster/resolve/main/sample1.jpg" width=50% height=50%>

You may add some creativity to the prompt

```

a drawing of batman/superman in style of chinese propaganda poster

```

<img src="https://huggingface.co/lxj616/stable-diffusion-cn-poster/resolve/main/sample2.jpg" width=50% height=50%>

## License

See LICENSE.TXT from original stable-diffusion model repo

## Technical Details

On a single RTX 3090 Ti, 24G VRAM, 3 hours, 40 images of chinese posters, based on sd-v1.4

[Finetune stable diffusion under 24gb vram in hours](https://lxj616.github.io/jekyll/update/2022/09/12/finetune-stable-diffusion-under-24gb-vram-in-hours.html)

|

sd-concepts-library/ttte

|

sd-concepts-library

| 2022-09-15T06:33:45Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T06:33:31Z |

---

license: mit

---

### TTTE on Stable Diffusion

This is the `<ttte-2>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

slplab/wav2vec2_xlsr50k_english_phoneme

|

slplab

| 2022-09-15T05:10:10Z | 38 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-08-29T15:42:18Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2_xlsr50k_english_phoneme

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2_xlsr50k_english_phoneme

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on [the TIMIT dataset](https://catalog.ldc.upenn.edu/LDC93s1).

It achieves the following results on the evaluation set:

- Loss: 0.5783

- Cer: 0.1178

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 50

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Cer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 4.8403 | 6.94 | 500 | 1.1345 | 0.4657 |

| 0.5795 | 13.88 | 1000 | 0.3579 | 0.1169 |

| 0.3567 | 20.83 | 1500 | 0.3866 | 0.1174 |

| 0.2717 | 27.77 | 2000 | 0.4219 | 0.1169 |

| 0.2135 | 34.72 | 2500 | 0.4861 | 0.1199 |

| 0.1664 | 41.66 | 3000 | 0.5490 | 0.1179 |

| 0.1375 | 48.61 | 3500 | 0.5783 | 0.1178 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.12.1

- Datasets 1.13.3

- Tokenizers 0.12.1

|

sd-concepts-library/babushork

|

sd-concepts-library

| 2022-09-15T04:29:38Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T04:29:27Z |

---

license: mit

---

### babushork on Stable Diffusion

This is the `<babushork>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

xusysh/Reinforce-test-1

|

xusysh

| 2022-09-15T03:12:25Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-15T03:10:22Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-test-1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 462.70 +/- 92.29

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

sd-concepts-library/agm-style-nao

|

sd-concepts-library

| 2022-09-15T02:22:22Z | 0 | 35 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T02:13:10Z |

---

license: mit

---

### NOTE: USED WAIFU DIFFUSION

<https://huggingface.co/hakurei/waifu-diffusion>

### agm-style on Stable Diffusion

Artist: <https://www.pixiv.net/en/users/20670939>

This is the `<agm-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/uma-meme-style

|

sd-concepts-library

| 2022-09-15T02:16:00Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-15T02:15:36Z |

---

license: mit

---

### uma-meme-style on Stable Diffusion

This is the `<uma-meme-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

rebolforces/a2c-AntBulletEnv-v0

|

rebolforces

| 2022-09-15T00:55:27Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-14T20:35:00Z |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- metrics:

- type: mean_reward

value: 2124.31 +/- 153.87

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of a **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Padomin/t5-base-TEDxJP-10front-1body-10rear

|

Padomin

| 2022-09-15T00:29:58Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-14T06:57:42Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-10front-1body-10rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-10front-1body-10rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4366

- Wer: 0.1693

- Mer: 0.1636

- Wil: 0.2493

- Wip: 0.7507

- Hits: 55904

- Substitutions: 6304

- Deletions: 2379

- Insertions: 2249

- Cer: 0.1332

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 40

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.6166 | 1.0 | 1457 | 0.4595 | 0.2096 | 0.1979 | 0.2878 | 0.7122 | 54866 | 6757 | 2964 | 3819 | 0.1793 |

| 0.4985 | 2.0 | 2914 | 0.4190 | 0.1769 | 0.1710 | 0.2587 | 0.7413 | 55401 | 6467 | 2719 | 2241 | 0.1417 |

| 0.4787 | 3.0 | 4371 | 0.4130 | 0.1728 | 0.1670 | 0.2534 | 0.7466 | 55677 | 6357 | 2553 | 2249 | 0.1368 |

| 0.4299 | 4.0 | 5828 | 0.4085 | 0.1726 | 0.1665 | 0.2530 | 0.7470 | 55799 | 6381 | 2407 | 2357 | 0.1348 |

| 0.3855 | 5.0 | 7285 | 0.4130 | 0.1702 | 0.1644 | 0.2501 | 0.7499 | 55887 | 6309 | 2391 | 2292 | 0.1336 |

| 0.3109 | 6.0 | 8742 | 0.4182 | 0.1732 | 0.1668 | 0.2525 | 0.7475 | 55893 | 6317 | 2377 | 2494 | 0.1450 |

| 0.3027 | 7.0 | 10199 | 0.4256 | 0.1691 | 0.1633 | 0.2486 | 0.7514 | 55949 | 6273 | 2365 | 2283 | 0.1325 |

| 0.2729 | 8.0 | 11656 | 0.4252 | 0.1709 | 0.1649 | 0.2503 | 0.7497 | 55909 | 6283 | 2395 | 2362 | 0.1375 |

| 0.2531 | 9.0 | 13113 | 0.4329 | 0.1696 | 0.1639 | 0.2499 | 0.7501 | 55870 | 6322 | 2395 | 2235 | 0.1334 |

| 0.2388 | 10.0 | 14570 | 0.4366 | 0.1693 | 0.1636 | 0.2493 | 0.7507 | 55904 | 6304 | 2379 | 2249 | 0.1332 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

mlaricheva/roberta-psych

|

mlaricheva

| 2022-09-14T23:37:07Z | 170 | 1 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"arxiv:1907.11692",

"arxiv:2208.06525",

"doi:10.57967/hf/1497",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-09T22:44:25Z |

# roberta-psych

---

language: en

---

This is a [RoBERTa](https://arxiv.org/pdf/1907.11692.pdf) model pretrained on Alexander Street Database of Counselling and Psychotherapy Transcripts (see more about database and its content [here](https://alexanderstreet.com/products/counseling-and-psychotherapy-transcripts-series)).

Further information about training, parameters and evaluation is available in our paper:

Laricheva, M., Zhang, C., Liu, Y., Chen, G., Tracey, T., Young, R., & Carenini, G. (2022). [Automated Utterance Labeling of Conversations Using Natural Language Processing.](https://arxiv.org/abs/2208.06525) arXiv preprint arXiv:2208.06525

---

license: cc-by-nc-sa-2.0

---

|

Padomin/t5-base-TEDxJP-9front-1body-9rear

|

Padomin

| 2022-09-14T23:16:48Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:te_dx_jp",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-09-14T06:57:11Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- te_dx_jp

model-index:

- name: t5-base-TEDxJP-9front-1body-9rear

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-TEDxJP-9front-1body-9rear

This model is a fine-tuned version of [sonoisa/t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese) on the te_dx_jp dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4361

- Wer: 0.1687

- Mer: 0.1630

- Wil: 0.2486

- Wip: 0.7514

- Hits: 55941

- Substitutions: 6292

- Deletions: 2354

- Insertions: 2252

- Cer: 0.1338

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 40

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Mer | Wil | Wip | Hits | Substitutions | Deletions | Insertions | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:------:|:-----:|:-------------:|:---------:|:----------:|:------:|

| 0.6124 | 1.0 | 1457 | 0.4613 | 0.2407 | 0.2209 | 0.3091 | 0.6909 | 54843 | 6758 | 2986 | 5804 | 0.2153 |

| 0.4968 | 2.0 | 2914 | 0.4171 | 0.1777 | 0.1716 | 0.2580 | 0.7420 | 55404 | 6354 | 2829 | 2293 | 0.1402 |

| 0.4817 | 3.0 | 4371 | 0.4129 | 0.1731 | 0.1673 | 0.2534 | 0.7466 | 55636 | 6332 | 2619 | 2227 | 0.1349 |

| 0.4257 | 4.0 | 5828 | 0.4089 | 0.1722 | 0.1659 | 0.2520 | 0.7480 | 55904 | 6346 | 2337 | 2437 | 0.1361 |

| 0.3831 | 5.0 | 7285 | 0.4144 | 0.1705 | 0.1646 | 0.2508 | 0.7492 | 55868 | 6343 | 2376 | 2290 | 0.1358 |

| 0.3057 | 6.0 | 8742 | 0.4198 | 0.1690 | 0.1632 | 0.2492 | 0.7508 | 55972 | 6333 | 2282 | 2298 | 0.1350 |

| 0.2919 | 7.0 | 10199 | 0.4220 | 0.1693 | 0.1635 | 0.2492 | 0.7508 | 55936 | 6310 | 2341 | 2281 | 0.1337 |

| 0.2712 | 8.0 | 11656 | 0.4252 | 0.1688 | 0.1632 | 0.2487 | 0.7513 | 55905 | 6286 | 2396 | 2218 | 0.1348 |

| 0.2504 | 9.0 | 13113 | 0.4332 | 0.1685 | 0.1629 | 0.2482 | 0.7518 | 55931 | 6270 | 2386 | 2226 | 0.1331 |

| 0.2446 | 10.0 | 14570 | 0.4361 | 0.1687 | 0.1630 | 0.2486 | 0.7514 | 55941 | 6292 | 2354 | 2252 | 0.1338 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

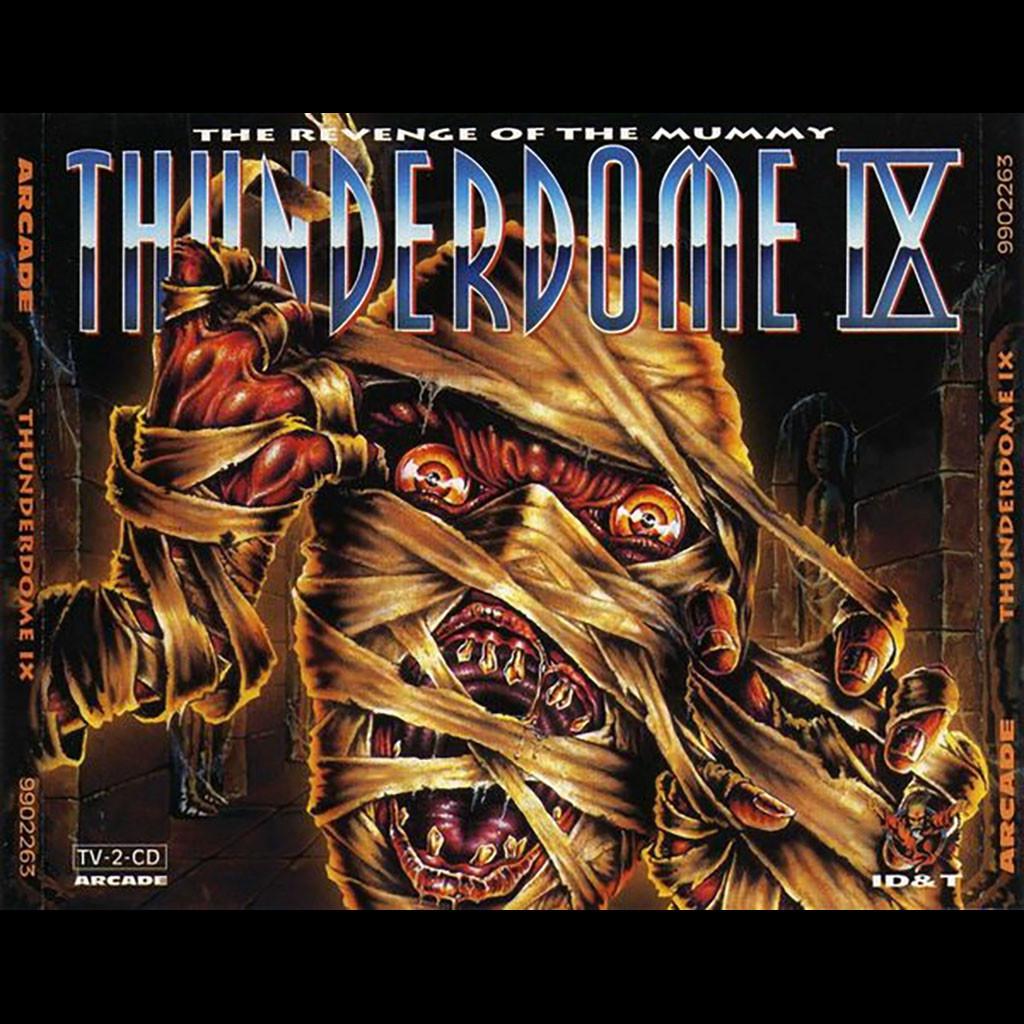

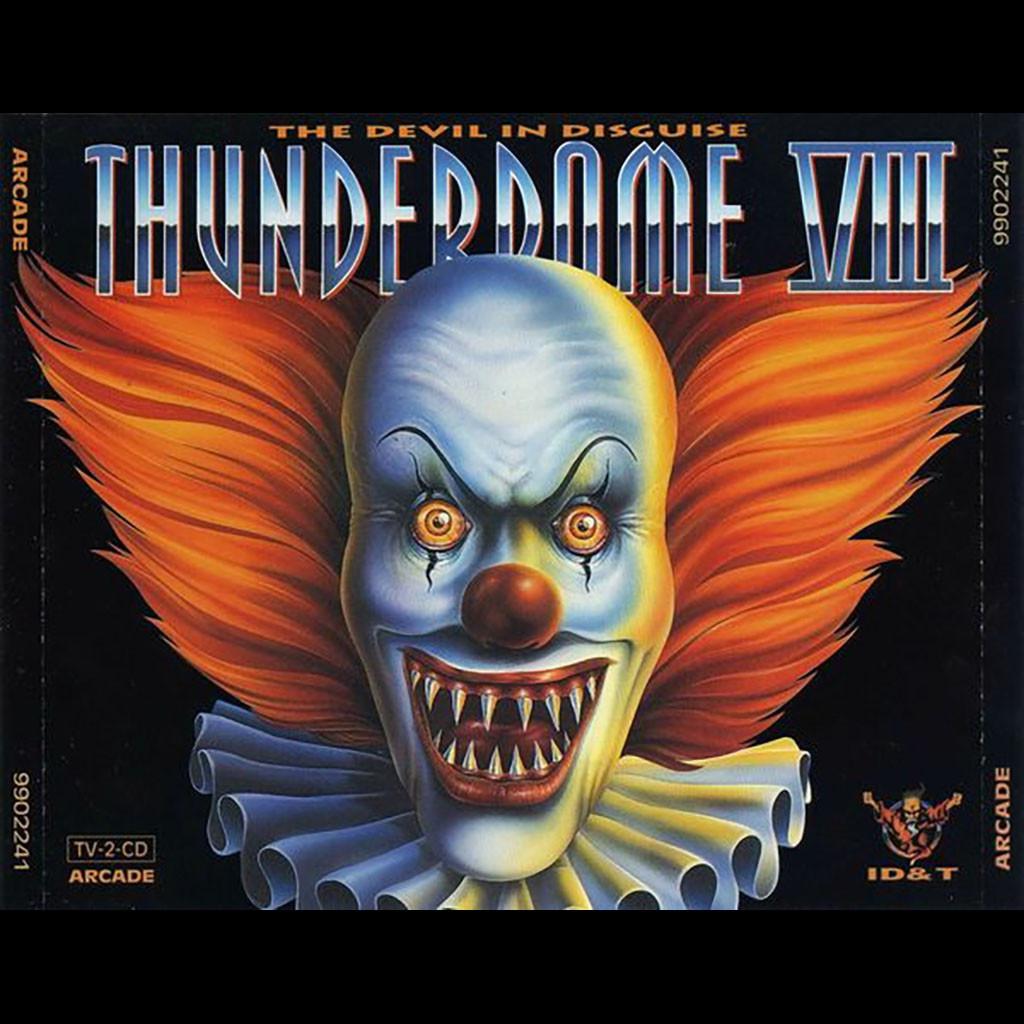

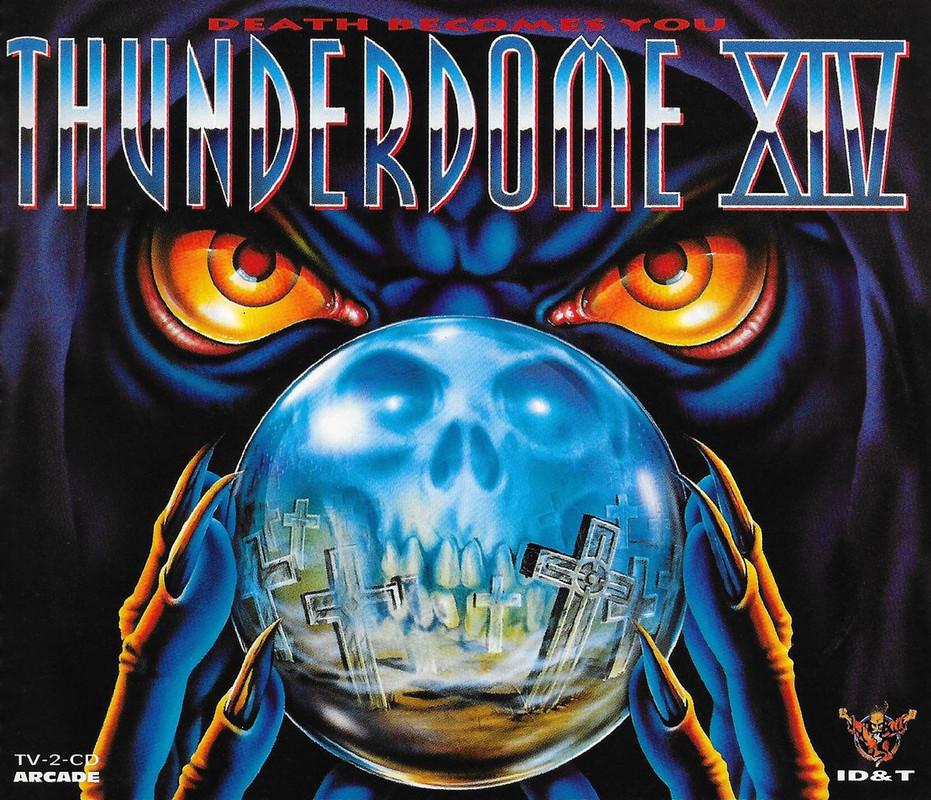

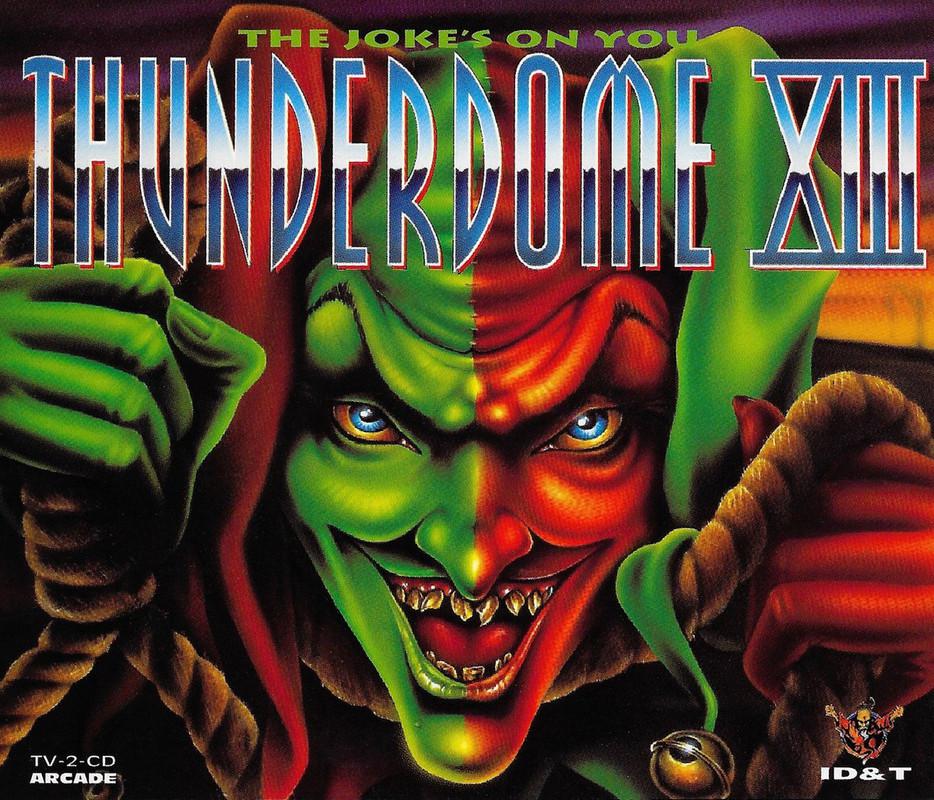

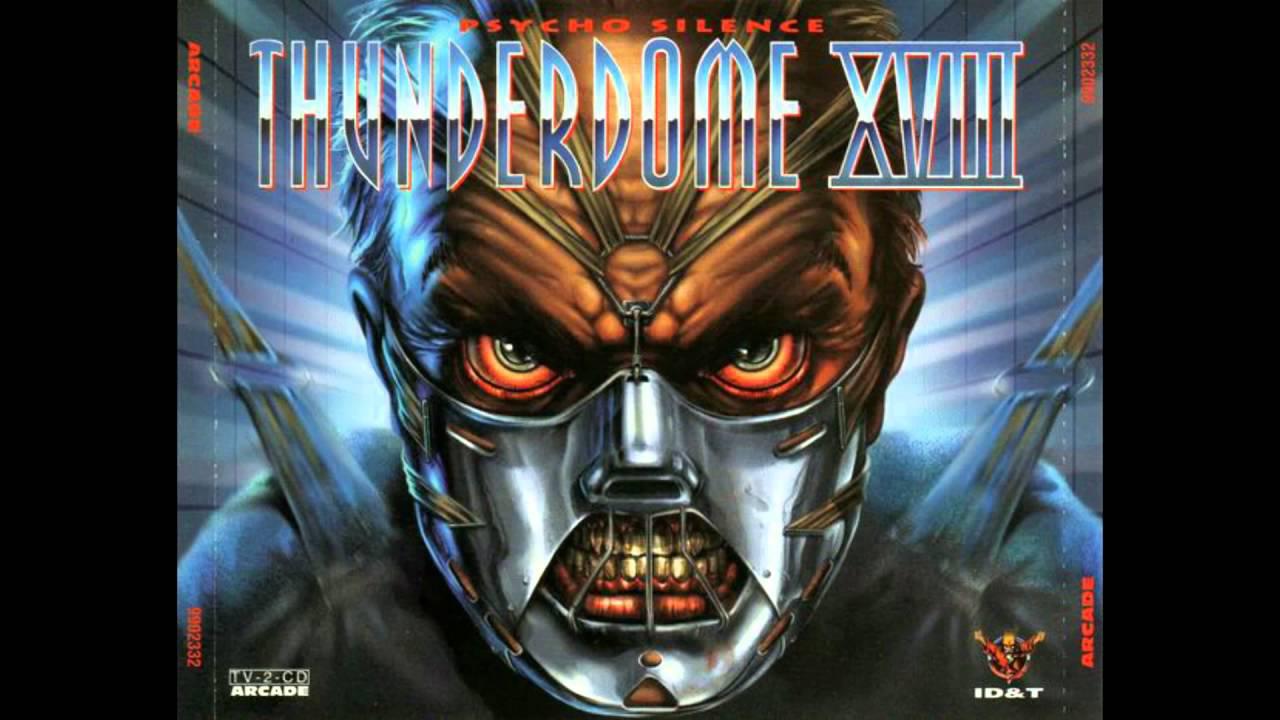

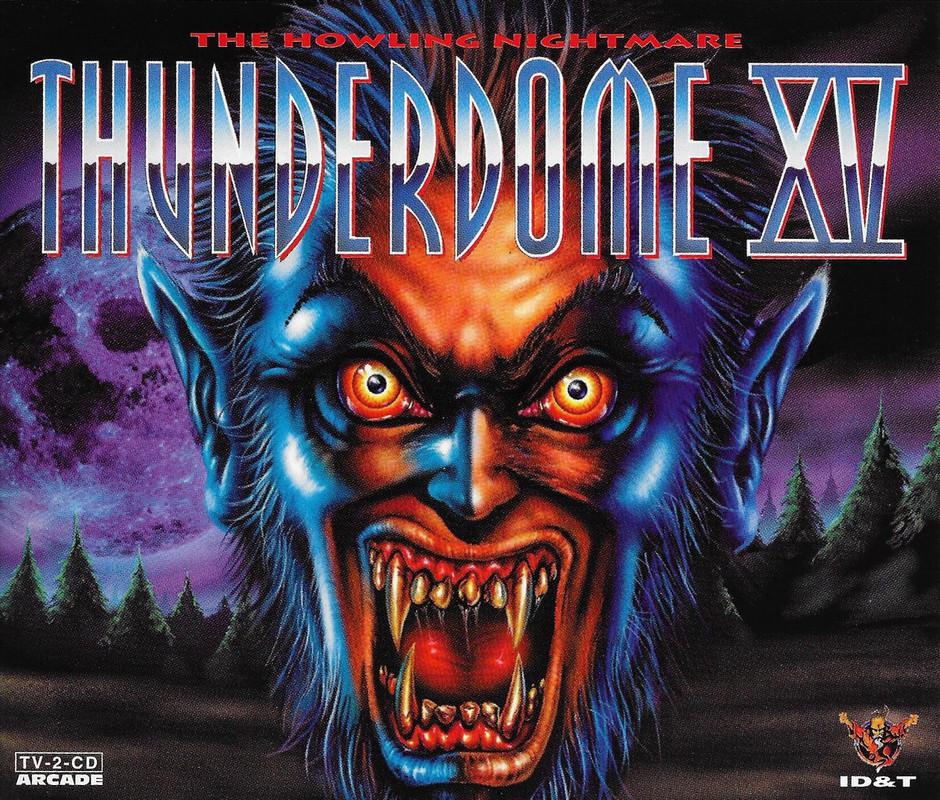

sd-concepts-library/thunderdome-cover

|

sd-concepts-library

| 2022-09-14T23:12:38Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T23:12:33Z |

---

license: mit

---

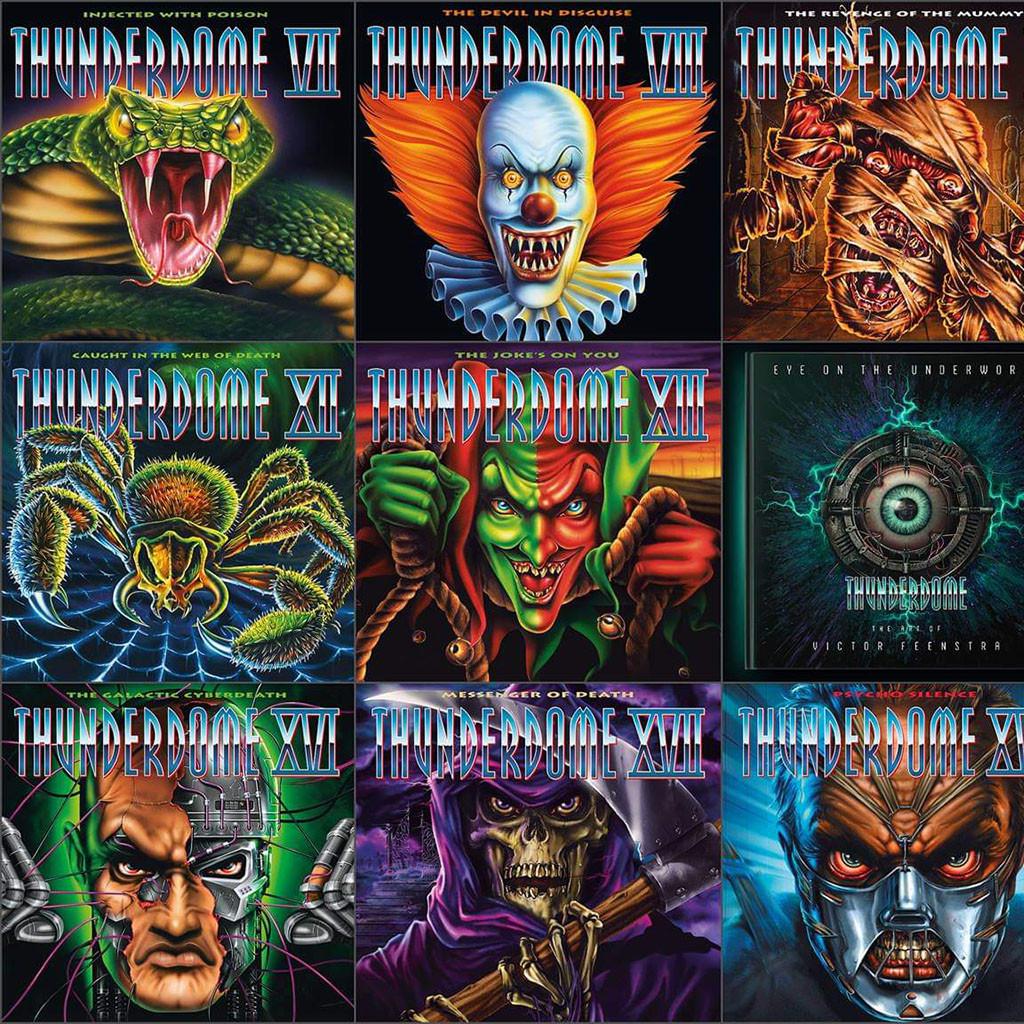

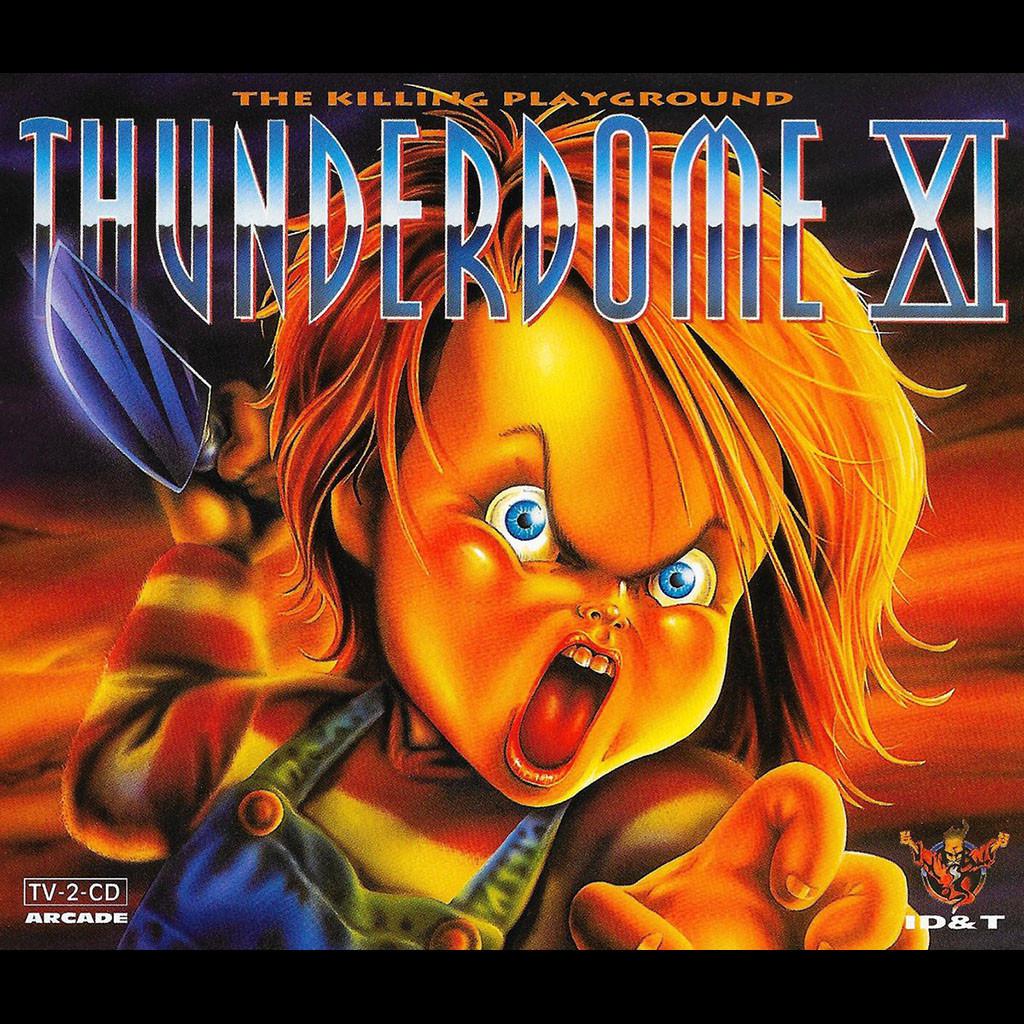

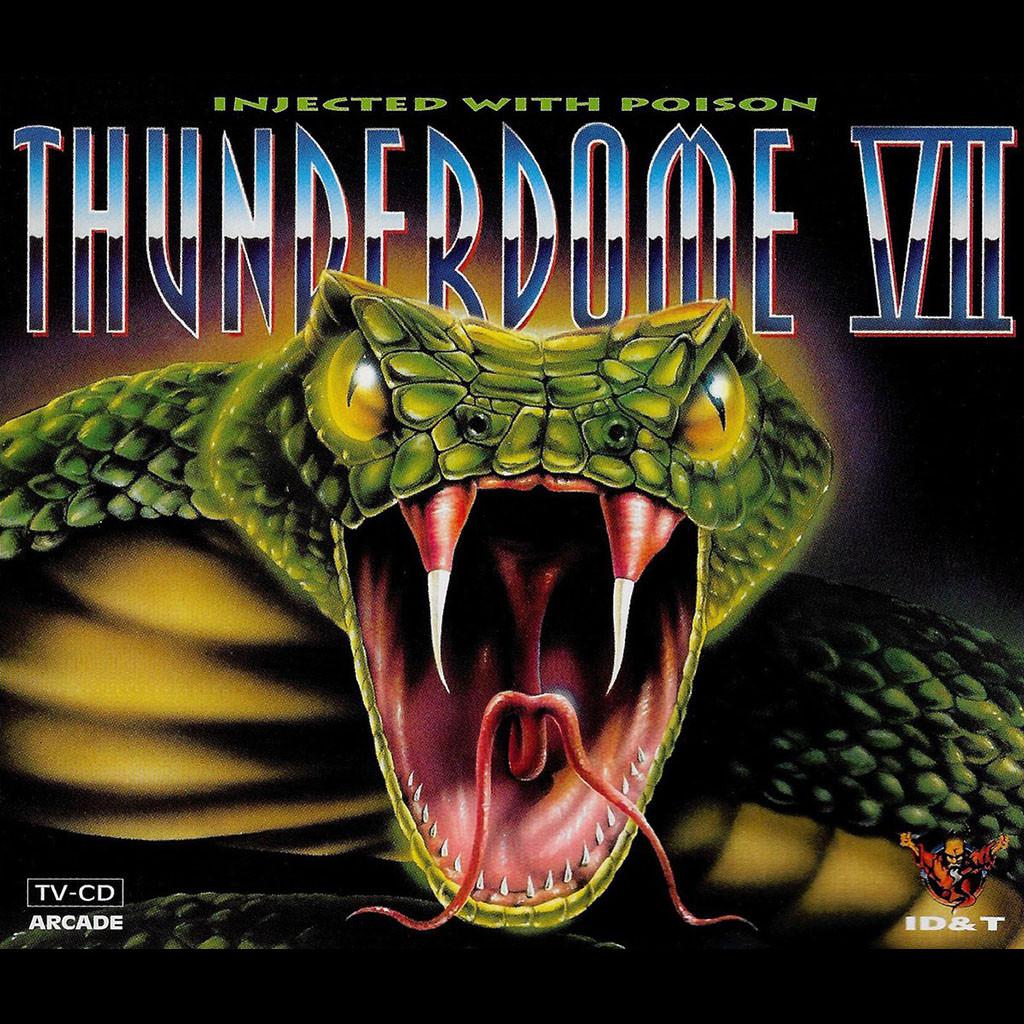

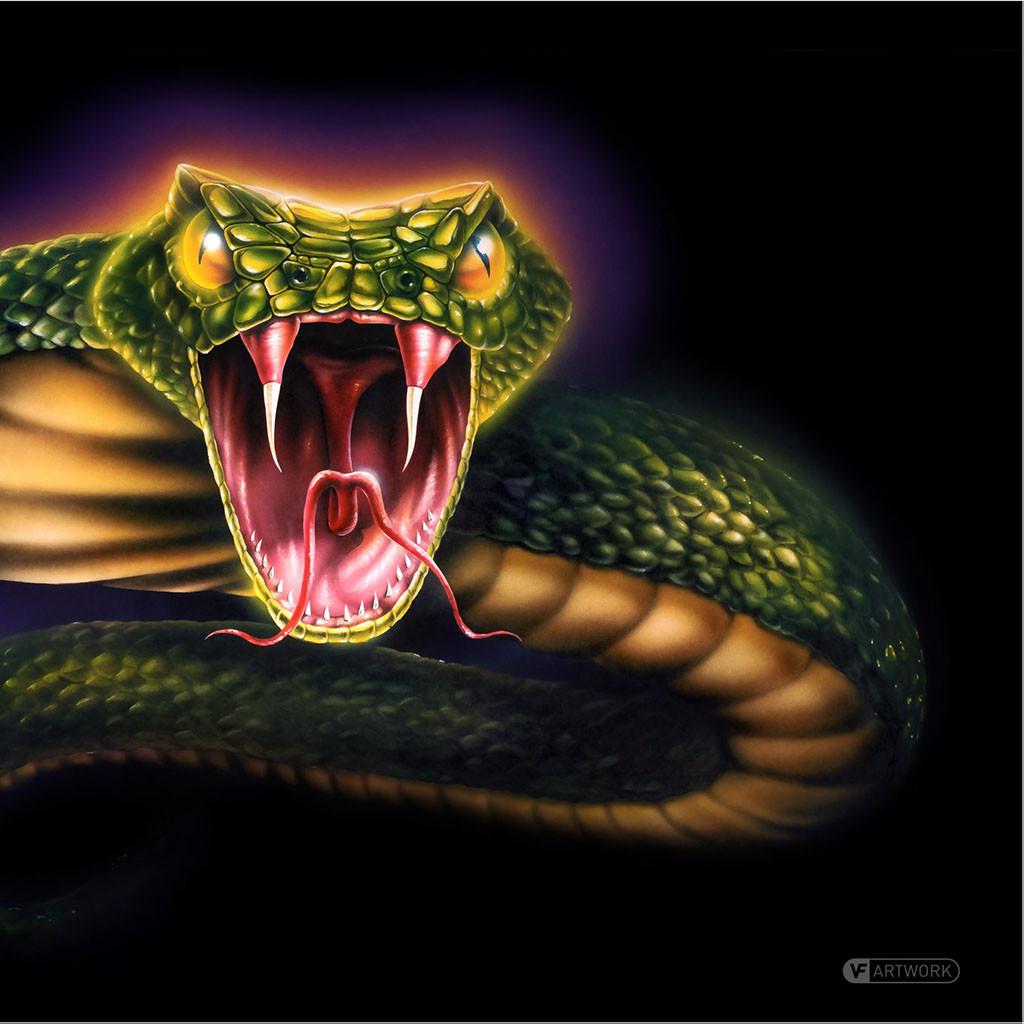

### thunderdome-cover on Stable Diffusion

This is the `<thunderdome-cover>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/uma-meme

|

sd-concepts-library

| 2022-09-14T23:08:43Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T23:08:20Z |

---

license: mit

---

### uma-meme on Stable Diffusion

This is the `<uma-object-full>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/mayor-richard-irvin

|

sd-concepts-library

| 2022-09-14T23:07:45Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T23:07:34Z |

---

license: mit

---

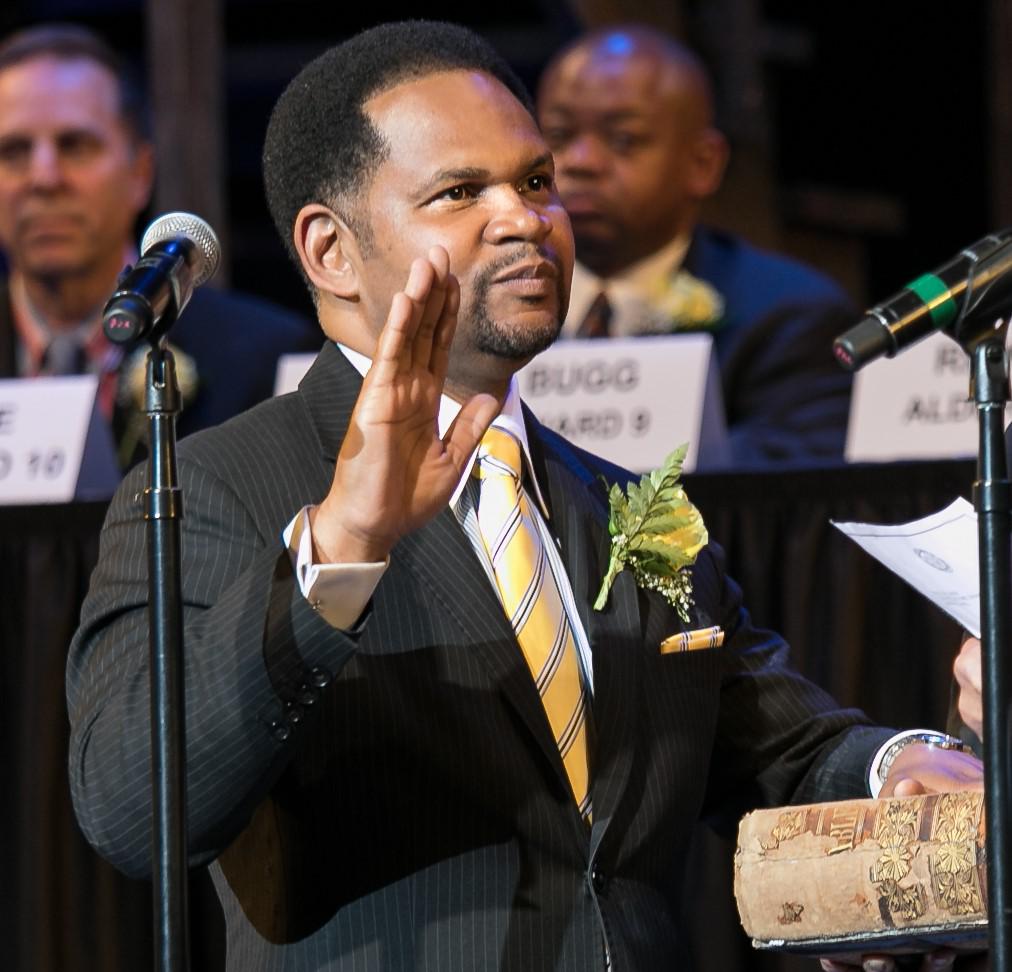

### mayor-richard-irvin on Stable Diffusion

This is the `<Richard_Irvin>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/buddha-statue

|

sd-concepts-library

| 2022-09-14T22:23:55Z | 0 | 4 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T22:23:48Z |

---

license: mit

---

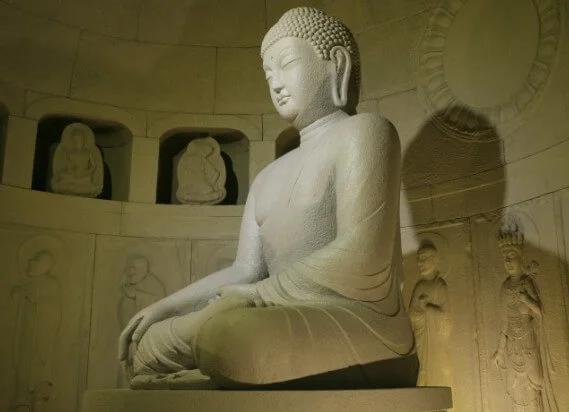

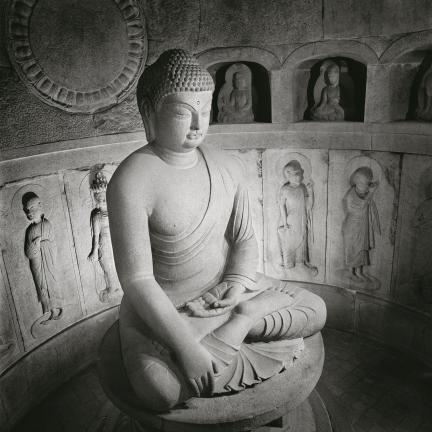

### Buddha statue on Stable Diffusion

This is the `<buddha-statue>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/retro-girl

|

sd-concepts-library

| 2022-09-14T21:34:09Z | 0 | 9 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T21:33:58Z |

---

license: mit

---

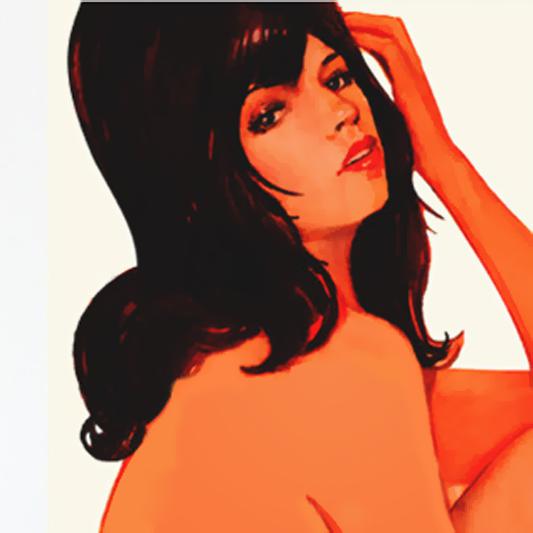

### Retro-Girl on Stable Diffusion

This is the `<retro-girl>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

michael20at/q-Taxi-v3

|

michael20at

| 2022-09-14T21:20:43Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-14T21:16:38Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

sd-concepts-library/collage3-hubcity

|

sd-concepts-library

| 2022-09-14T20:42:35Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T05:37:48Z |

---

license: mit

---

### Collage3-HubCity on Stable Diffusion

This is the `<C3Hub>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

DamianCummins/distilbert-base-uncased-finetuned-ner

|

DamianCummins

| 2022-09-14T20:03:13Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"tensorboard",

"distilbert",

"token-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-14T17:54:02Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: DamianCummins/distilbert-base-uncased-finetuned-ner

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# DamianCummins/distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0556

- Validation Loss: 0.0608

- Train Precision: 0.9196

- Train Recall: 0.9304

- Train F1: 0.9250

- Train Accuracy: 0.9820

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 2631, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Precision | Train Recall | Train F1 | Train Accuracy | Epoch |

|:----------:|:---------------:|:---------------:|:------------:|:--------:|:--------------:|:-----:|

| 0.0556 | 0.0608 | 0.9196 | 0.9304 | 0.9250 | 0.9820 | 0 |

### Framework versions

- Transformers 4.21.3

- TensorFlow 2.9.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

michael20at/q-FrozenLake-v1-4x4-noSlippery

|

michael20at

| 2022-09-14T20:03:08Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-14T20:03:02Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="michael20at/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

sd-concepts-library/rektguy

|

sd-concepts-library

| 2022-09-14T19:39:29Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T19:39:24Z |

---

license: mit

---

### rektguy on Stable Diffusion

This is the `<rektguy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

matemato/ppo-LunarLander-v2

|

matemato

| 2022-09-14T18:58:12Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-14T18:57:33Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 191.85 +/- 23.17

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

sd-concepts-library/joe-whiteford-art-style

|

sd-concepts-library

| 2022-09-14T18:43:02Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T18:42:46Z |

---

license: mit

---

### Joe Whiteford Art Style on Stable Diffusion

This is the `<joe-whiteford-artstyle>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/my-mug

|

sd-concepts-library

| 2022-09-14T17:53:46Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T17:53:40Z |

---

license: mit

---

### My mug on Stable Diffusion

This is the `<my-mug>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

rvidaurre/ddpm-butterflies-128

|

rvidaurre

| 2022-09-14T17:21:07Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-14T16:06:53Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/rvidaurre/ddpm-butterflies-128/tensorboard?#scalars)

|

sd-concepts-library/sterling-archer

|

sd-concepts-library

| 2022-09-14T17:00:25Z | 0 | 13 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T17:00:11Z |

---

license: mit

---

### Sterling-Archer on Stable Diffusion

This is the `<archer-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

VietAI/vit5-base-vietnews-summarization

|

VietAI

| 2022-09-14T16:46:02Z | 543 | 7 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"summarization",

"vi",

"dataset:cc100",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-09-07T02:47:53Z |

---

language: vi

datasets:

- cc100

tags:

- summarization

license: mit

widget:

- text: "VietAI là tổ chức phi lợi nhuận với sứ mệnh ươm mầm tài năng về trí tuệ nhân tạo và xây dựng một cộng đồng các chuyên gia trong lĩnh vực trí tuệ nhân tạo đẳng cấp quốc tế tại Việt Nam."

---

# ViT5-Base Finetuned on `vietnews` Abstractive Summarization (No prefix needed)

State-of-the-art pretrained Transformer-based encoder-decoder model for Vietnamese.

[](https://paperswithcode.com/sota/abstractive-text-summarization-on-vietnews?p=vit5-pretrained-text-to-text-transformer-for)

## How to use

For more details, do check out [our Github repo](https://github.com/vietai/ViT5) and [eval script](https://github.com/vietai/ViT5/blob/main/eval/Eval_vietnews_sum.ipynb).

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("VietAI/vit5-base-vietnews-summarization")

model = AutoModelForSeq2SeqLM.from_pretrained("VietAI/vit5-base-vietnews-summarization")

model.cuda()

sentence = "VietAI là tổ chức phi lợi nhuận với sứ mệnh ươm mầm tài năng về trí tuệ nhân tạo và xây dựng một cộng đồng các chuyên gia trong lĩnh vực trí tuệ nhân tạo đẳng cấp quốc tế tại Việt Nam."

sentence = sentence + "</s>"

encoding = tokenizer(sentence, return_tensors="pt")

input_ids, attention_masks = encoding["input_ids"].to("cuda"), encoding["attention_mask"].to("cuda")

outputs = model.generate(

input_ids=input_ids, attention_mask=attention_masks,

max_length=256,

early_stopping=True

)

for output in outputs:

line = tokenizer.decode(output, skip_special_tokens=True, clean_up_tokenization_spaces=True)

print(line)

```

## Citation

```

@inproceedings{phan-etal-2022-vit5,

title = "{V}i{T}5: Pretrained Text-to-Text Transformer for {V}ietnamese Language Generation",

author = "Phan, Long and Tran, Hieu and Nguyen, Hieu and Trinh, Trieu H.",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop",

year = "2022",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-srw.18",

pages = "136--142",

}

```

|

jcastanyo/Reinforce-CP-v0

|

jcastanyo

| 2022-09-14T16:19:16Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-09-14T16:18:12Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CP-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 62.30 +/- 30.18

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

sd-concepts-library/neon-pastel

|

sd-concepts-library

| 2022-09-14T15:55:45Z | 0 | 6 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T15:47:29Z |

---

license: mit

---

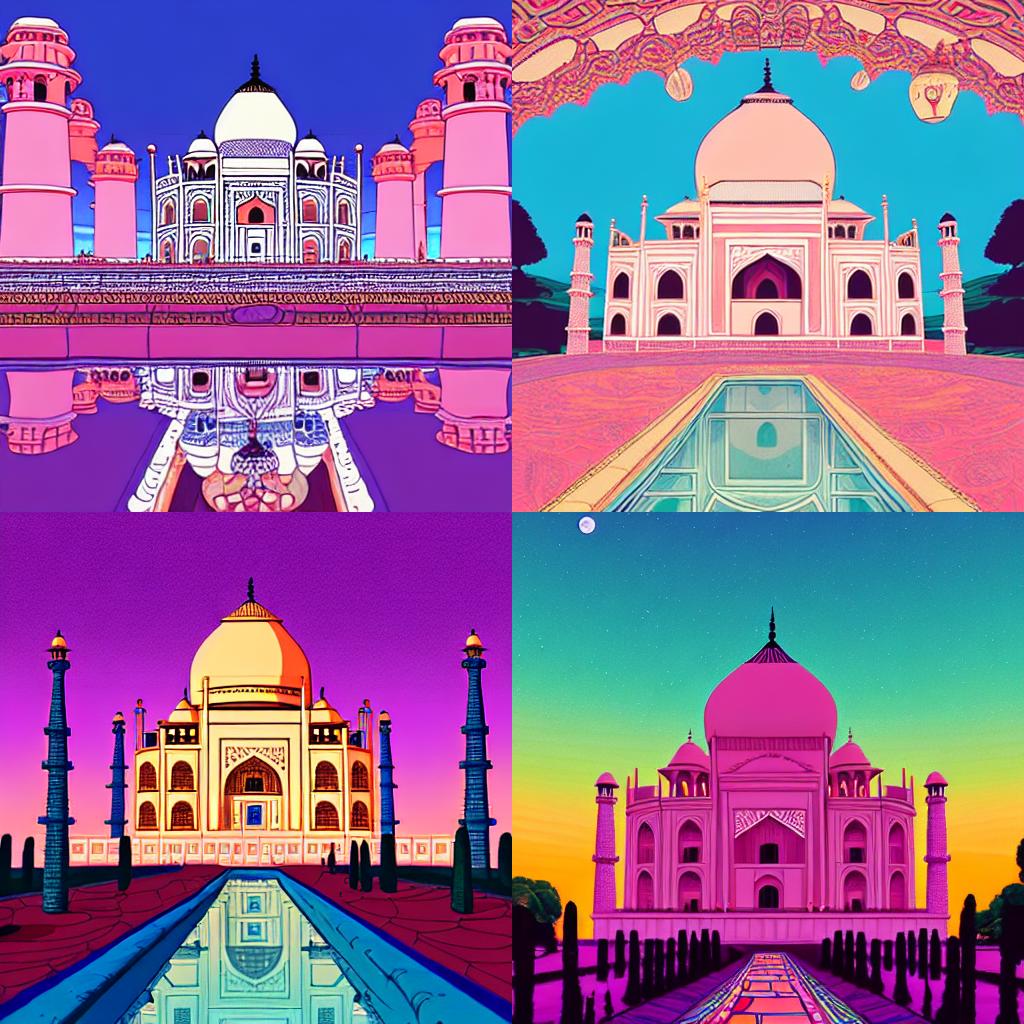

### Neon Pastel on Stable Diffusion

This is the `<neon-pastel>` style taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here are some of the outputs from this model:

Prompt: the taj mahal in `<neon-pastel>` style

Prompt: portrait of barack obama in `<neon-pastel>` style

Prompt: a beautiful beach landscape in `<neon-pastel>` style

|

theunnecessarythings/ddpm-ema-flowers-64

|

theunnecessarythings

| 2022-09-14T15:54:23Z | 2 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/flowers-102-categories",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-14T14:15:13Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/flowers-102-categories

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-ema-flowers-64

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/flowers-102-categories` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(0.95, 0.999), weight_decay=1e-06 and epsilon=1e-08

- lr_scheduler: cosine

- lr_warmup_steps: 500

- ema_inv_gamma: 1.0

- ema_inv_gamma: 0.75

- ema_inv_gamma: 0.9999

- mixed_precision: no

### Training results

📈 [TensorBoard logs](https://huggingface.co/sreerajr000/ddpm-ema-flowers-64/tensorboard?#scalars)

|

sd-concepts-library/tb303

|

sd-concepts-library

| 2022-09-14T15:26:31Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-14T15:26:17Z |

---

license: mit

---

### TB303 on Stable Diffusion

This is the `<"tb303>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

MayaGalvez/bert-base-multilingual-cased-finetuned-multilingual-nli

|

MayaGalvez

| 2022-09-14T15:24:58Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-14T13:25:22Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: bert-base-multilingual-cased-finetuned-multilingual-nli_newdata_oneepoch

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-multilingual-cased-finetuned-multilingual-nli_newdata_oneepoch

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7647

- Accuracy: 0.6853

- Precision: 0.6932

- Recall: 0.6853

- F1: 0.6847

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.9394 | 0.04 | 500 | 0.9044 | 0.592 | 0.5985 | 0.592 | 0.5917 |

| 0.8603 | 0.08 | 1000 | 0.9159 | 0.579 | 0.6210 | 0.579 | 0.5739 |

| 0.8293 | 0.11 | 1500 | 0.8520 | 0.6214 | 0.6278 | 0.6214 | 0.6215 |

| 0.8042 | 0.15 | 2000 | 0.8085 | 0.6418 | 0.6439 | 0.6418 | 0.6414 |

| 0.7945 | 0.19 | 2500 | 0.8251 | 0.6319 | 0.6575 | 0.6319 | 0.6262 |

| 0.7768 | 0.23 | 3000 | 0.8298 | 0.6383 | 0.6556 | 0.6383 | 0.6365 |

| 0.753 | 0.27 | 3500 | 0.8225 | 0.6464 | 0.6684 | 0.6464 | 0.6436 |

| 0.754 | 0.3 | 4000 | 0.7979 | 0.6529 | 0.6750 | 0.6529 | 0.6523 |

| 0.7466 | 0.34 | 4500 | 0.7644 | 0.6718 | 0.6727 | 0.6718 | 0.6713 |

| 0.7331 | 0.38 | 5000 | 0.7861 | 0.6591 | 0.6757 | 0.6591 | 0.6581 |

| 0.72 | 0.42 | 5500 | 0.7972 | 0.6595 | 0.6815 | 0.6595 | 0.6582 |

| 0.7103 | 0.46 | 6000 | 0.7652 | 0.6702 | 0.6728 | 0.6702 | 0.6688 |

| 0.7103 | 0.49 | 6500 | 0.7732 | 0.6684 | 0.6796 | 0.6684 | 0.6670 |

| 0.7023 | 0.53 | 7000 | 0.7921 | 0.6657 | 0.6834 | 0.6657 | 0.6663 |

| 0.6827 | 0.57 | 7500 | 0.7672 | 0.6733 | 0.6824 | 0.6733 | 0.6726 |

| 0.6826 | 0.61 | 8000 | 0.7665 | 0.6755 | 0.6789 | 0.6755 | 0.6747 |

| 0.6705 | 0.65 | 8500 | 0.7659 | 0.6755 | 0.6815 | 0.6755 | 0.6748 |

| 0.662 | 0.68 | 9000 | 0.7738 | 0.6767 | 0.6833 | 0.6767 | 0.6757 |

| 0.6556 | 0.72 | 9500 | 0.7623 | 0.6805 | 0.6906 | 0.6805 | 0.6799 |

| 0.6462 | 0.76 | 10000 | 0.7863 | 0.6719 | 0.6849 | 0.6719 | 0.6701 |

| 0.6405 | 0.8 | 10500 | 0.7523 | 0.681 | 0.6845 | 0.681 | 0.6805 |

| 0.6407 | 0.84 | 11000 | 0.7661 | 0.6807 | 0.6856 | 0.6807 | 0.6801 |

| 0.6341 | 0.87 | 11500 | 0.7672 | 0.6787 | 0.6904 | 0.6787 | 0.6770 |

| 0.6292 | 0.91 | 12000 | 0.7742 | 0.682 | 0.6922 | 0.682 | 0.6803 |

| 0.6238 | 0.95 | 12500 | 0.7584 | 0.6855 | 0.6926 | 0.6855 | 0.6850 |

| 0.6201 | 0.99 | 13000 | 0.7647 | 0.6853 | 0.6932 | 0.6853 | 0.6847 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

JAS100/bert-finetuned-ner

|

JAS100

| 2022-09-14T14:28:29Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-14T14:10:54Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: train

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9330024813895782

- name: Recall

type: recall

value: 0.9491753618310333

- name: F1

type: f1

value: 0.9410194377242012

- name: Accuracy

type: accuracy

value: 0.9865926885265203

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0620

- Precision: 0.9330

- Recall: 0.9492

- F1: 0.9410

- Accuracy: 0.9866

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0852 | 1.0 | 1756 | 0.0722 | 0.9149 | 0.9295 | 0.9221 | 0.9814 |

| 0.0353 | 2.0 | 3512 | 0.0593 | 0.9223 | 0.9492 | 0.9356 | 0.9863 |

| 0.018 | 3.0 | 5268 | 0.0620 | 0.9330 | 0.9492 | 0.9410 | 0.9866 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Abdulmateen/bert-finetuned-ner

|

Abdulmateen

| 2022-09-14T14:26:48Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"token-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-13T05:49:10Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: Abdulmateen/bert-finetuned-ner

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Abdulmateen/bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1865

- Validation Loss: 0.1351

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 2634, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.4679 | 0.2717 | 0 |

| 0.2578 | 0.1703 | 1 |

| 0.1865 | 0.1351 | 2 |

### Framework versions

- Transformers 4.21.3

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

BlinkDL/rwkv-3-pile-1b5

|

BlinkDL

| 2022-09-14T13:54:48Z | 0 | 7 | null |

[

"pytorch",

"text-generation",

"causal-lm",

"rwkv",

"en",

"license:apache-2.0",

"region:us"

] |

text-generation

| 2022-06-23T11:44:36Z |

---

language:

- en

tags:

- pytorch

- text-generation

- causal-lm

- rwkv

license: apache-2.0

datasets:

- The Pile

---

# RWKV-3 1.5B

## Model Description